Cosine Prompt-Based Class Incremental Semantic Segmentation for Point Clouds

Abstract

1. Introduction

- We present a cosine prompt-based class-incremental learning approach for 3D semantic segmentation that balances old and new knowledge through prompt expansion, pseudo-label generation, and an LwF loss, forming an end-to-end, rehearsal-free framework.

- To accommodate new feature representations, we design a cosine prompt module. This module incorporates learnable prompts dedicated to new classes into a shared prompt pool while freezing the prompts associated with old classes, facilitating stable and discriminative feature learning.

- Extensive comparative experiments against other methods on the S3DIS and ScanNet v2 datasets demonstrate the superior performance of our proposed approach. Furthermore, we conduct in-depth ablation studies to evaluate the contribution of each component.

2. Related Work

2.1. Class Incremental Learning

2.2. Class Incremental Semantic Segmentation

2.3. Class Incremental Learning on Point Cloud

3. Methodology

3.1. Problem Formulation

- Base learning phase: Train the feature extractor and classifier on the base dataset.

- Incremental learning phase (Step i):

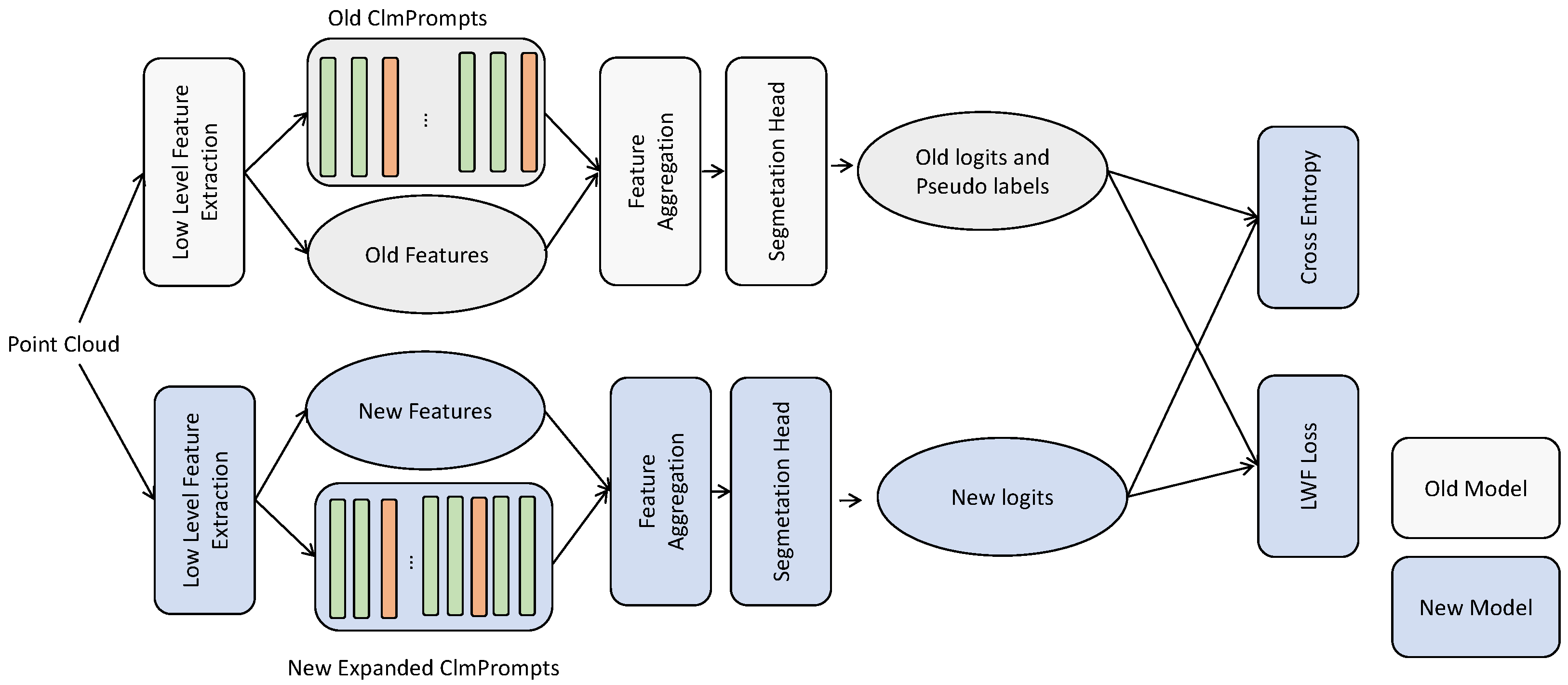

3.2. Framework Overview

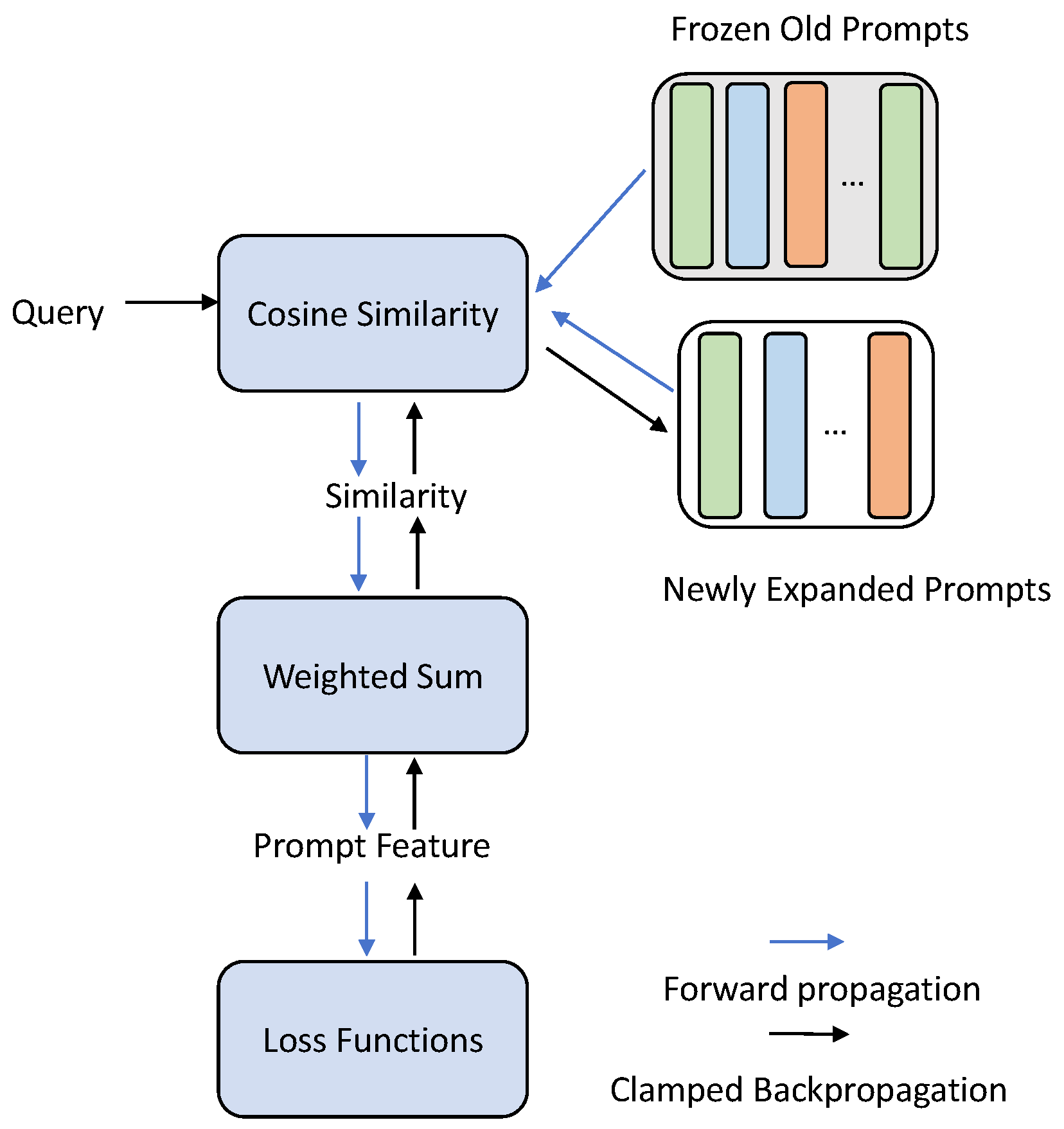

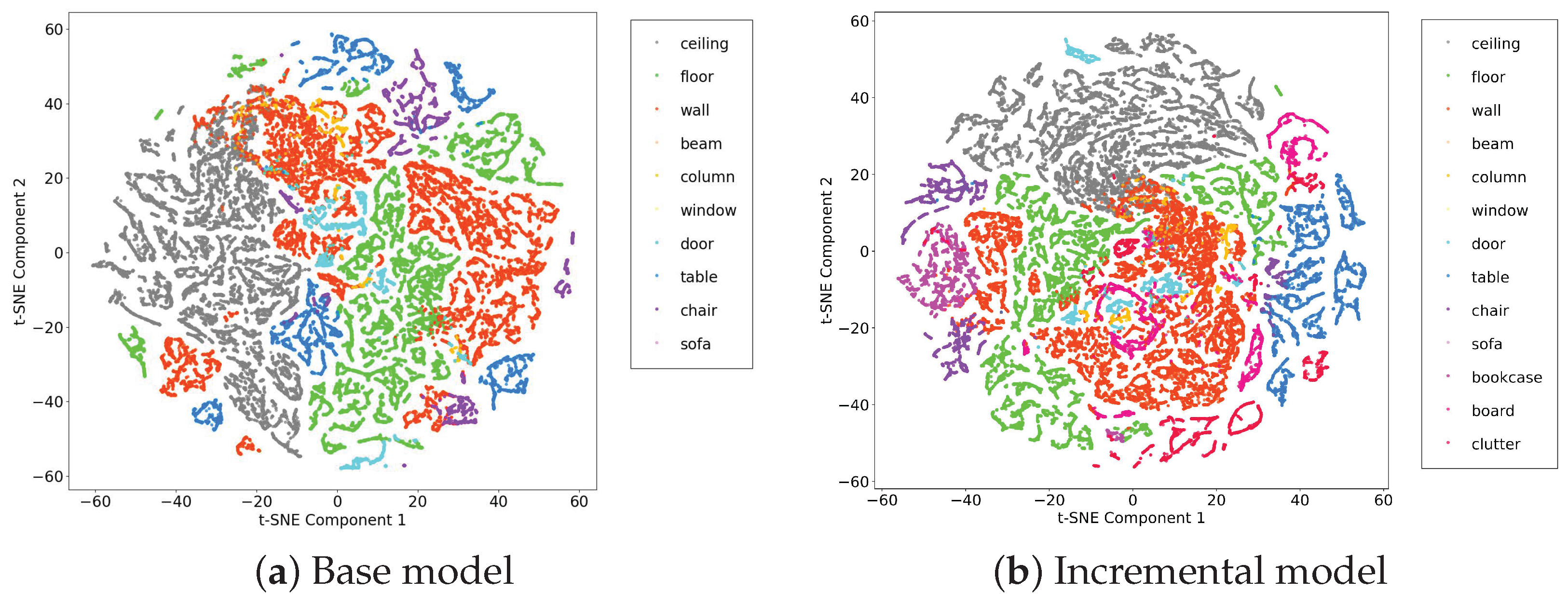

3.3. Cosine Prompt Learning
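The prompt expansion described in the Introduction (new-class prompts added to a shared pool, old-class prompts frozen) can be sketched as follows. This is a minimal illustration, not the authors' implementation: `PromptPool`, `expand`, `scores`, and `sgd_step` are hypothetical names, prompts are assumed to be plain vectors with one prompt per class, and matching is assumed to use cosine similarity between a point feature and each prompt.

```python
import math
from dataclasses import dataclass, field


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0


@dataclass
class PromptPool:
    """Hypothetical shared pool: one prompt vector per class (assumption)."""
    prompts: list = field(default_factory=list)    # prompt vectors
    trainable: list = field(default_factory=list)  # True if still updated

    def expand(self, new_prompts):
        """Freeze every existing prompt, then append prompts for new classes."""
        self.trainable = [False] * len(self.prompts)
        for p in new_prompts:
            self.prompts.append(list(p))
            self.trainable.append(True)

    def scores(self, feature):
        """Cosine similarity of one point feature to every prompt."""
        return [cosine(feature, p) for p in self.prompts]

    def sgd_step(self, grads, lr=0.1):
        """Apply gradients only to trainable (new-class) prompts."""
        for p, g, t in zip(self.prompts, grads, self.trainable):
            if t:
                for k in range(len(p)):
                    p[k] -= lr * g[k]


pool = PromptPool()
pool.expand([[1.0, 0.0], [0.0, 1.0]])   # base step: two classes
pool.expand([[1.0, 1.0]])               # incremental step: one new class
old_before = [list(p) for p in pool.prompts[:2]]
pool.sgd_step([[0.5, 0.5]] * 3)         # same dummy gradient for all prompts
print(pool.trainable)                   # [False, False, True]
print(pool.scores([2.0, 0.0]))          # similarity to each of the 3 prompts
```

Because cosine similarity ignores vector magnitude, the scores for frozen and newly added prompts stay within the same bounded range, which is one plausible reason to prefer it when the pool grows across steps.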

3.4. Pseudo-Label Generation
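One common form of pseudo-label generation in class-incremental segmentation is sketched below: points that carry no new-class label in the current step receive the frozen old model's prediction when that prediction is confident. Everything specific here is an assumption made for illustration (the function name `generate_pseudo_labels`, background id 0, the 0.7 threshold, and the use of a fixed confidence threshold at all); the paper's actual rule may differ.

```python
def generate_pseudo_labels(labels, old_probs, background=0, threshold=0.7):
    """Replace background labels with confident old-model predictions.

    labels:    per-point labels for the current step (new classes or background)
    old_probs: per-point probability lists from the frozen old model,
               indexed by old class id (index 0 is background here)
    """
    merged = []
    for y, probs in zip(labels, old_probs):
        if y != background:
            merged.append(y)              # keep ground truth for new classes
            continue
        best = max(range(len(probs)), key=probs.__getitem__)
        if best != background and probs[best] >= threshold:
            merged.append(best)           # confident old-class pseudo-label
        else:
            merged.append(background)     # stay background when unsure
    return merged


labels = [0, 0, 5, 0]
old_probs = [
    [0.1, 0.8, 0.1],    # confident old class 1
    [0.4, 0.35, 0.25],  # background is the argmax
    [0.2, 0.7, 0.1],    # point has a new-class label, left untouched
    [0.3, 0.1, 0.6],    # old class 2, but below the threshold
]
print(generate_pseudo_labels(labels, old_probs))  # [1, 0, 5, 0]
```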

3.5. LwF Loss
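For reference, the distillation term of Learning without Forgetting [7] can be sketched as a cross-entropy between temperature-softened outputs of the frozen old model and the current model, restricted to the old classes. The function names and the choice to pass raw logits are assumptions of this sketch, and the temperature of 2.0 is an assumed default; how the paper weights or adapts this term for point clouds is not shown.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with a temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def lwf_loss(old_logits, new_logits, temperature=2.0):
    """Cross-entropy between softened old-model and new-model outputs.

    Only the first len(old_logits) entries of new_logits (the old classes)
    take part; logits for newly added classes are ignored by this term.
    """
    n_old = len(old_logits)
    target = softmax(old_logits, temperature)
    pred = softmax(new_logits[:n_old], temperature)
    return -sum(t * math.log(p) for t, p in zip(target, pred))


old = [2.0, 0.5, -1.0]             # frozen old model, three old classes
same = [2.0, 0.5, -1.0, 0.3]       # new model agrees on the old classes
drift = [-1.0, 0.5, 2.0, 0.3]      # new model has drifted on the old classes
print(lwf_loss(old, same) < lwf_loss(old, drift))  # True
```

The loss is smallest when the new model reproduces the old model's distribution over old classes, so minimizing it alongside the segmentation loss penalizes drift without storing old samples.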

3.6. Training Process

4. Experiments

4.1. Experimental Setup

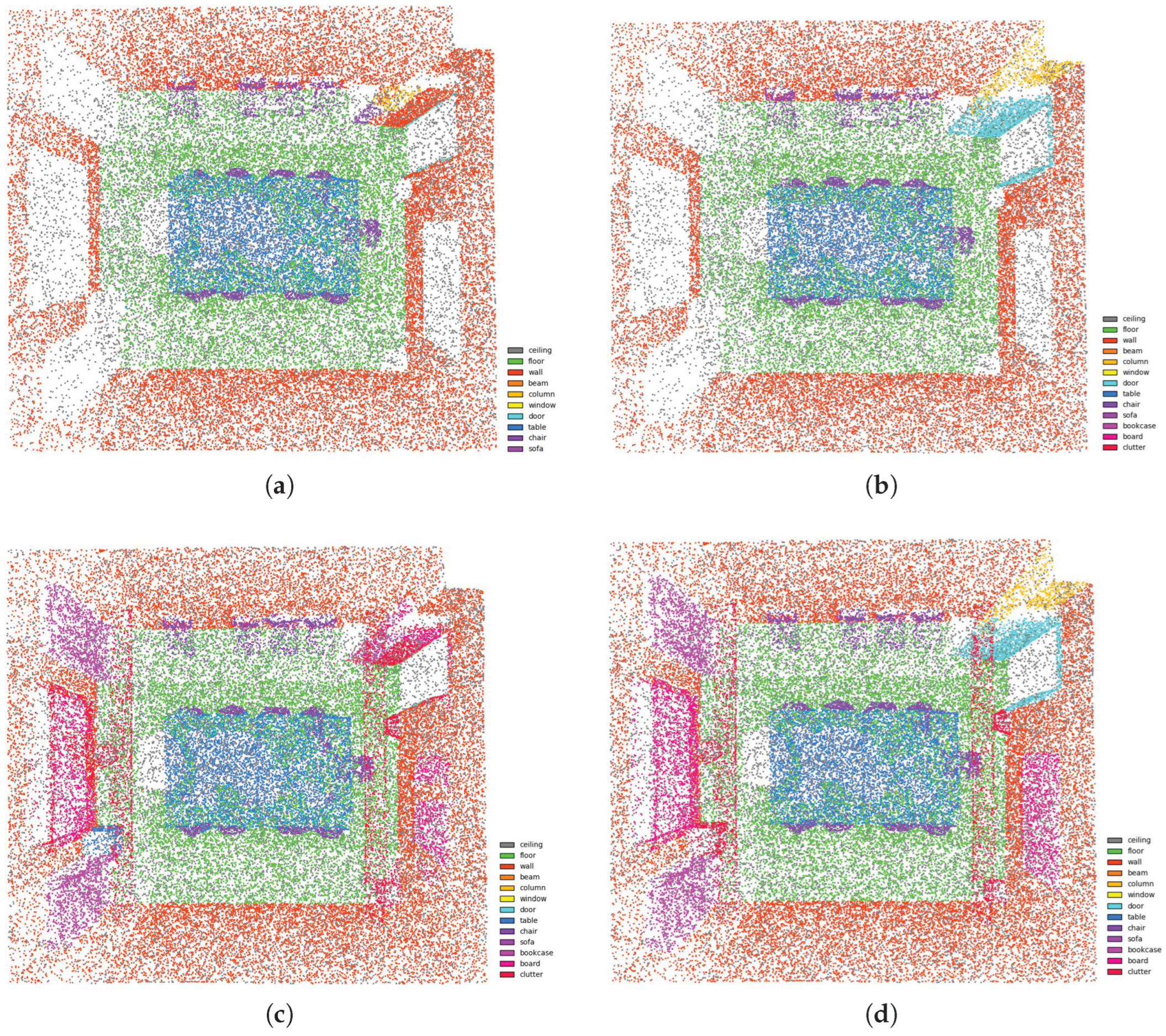

4.2. Comparison Experiments

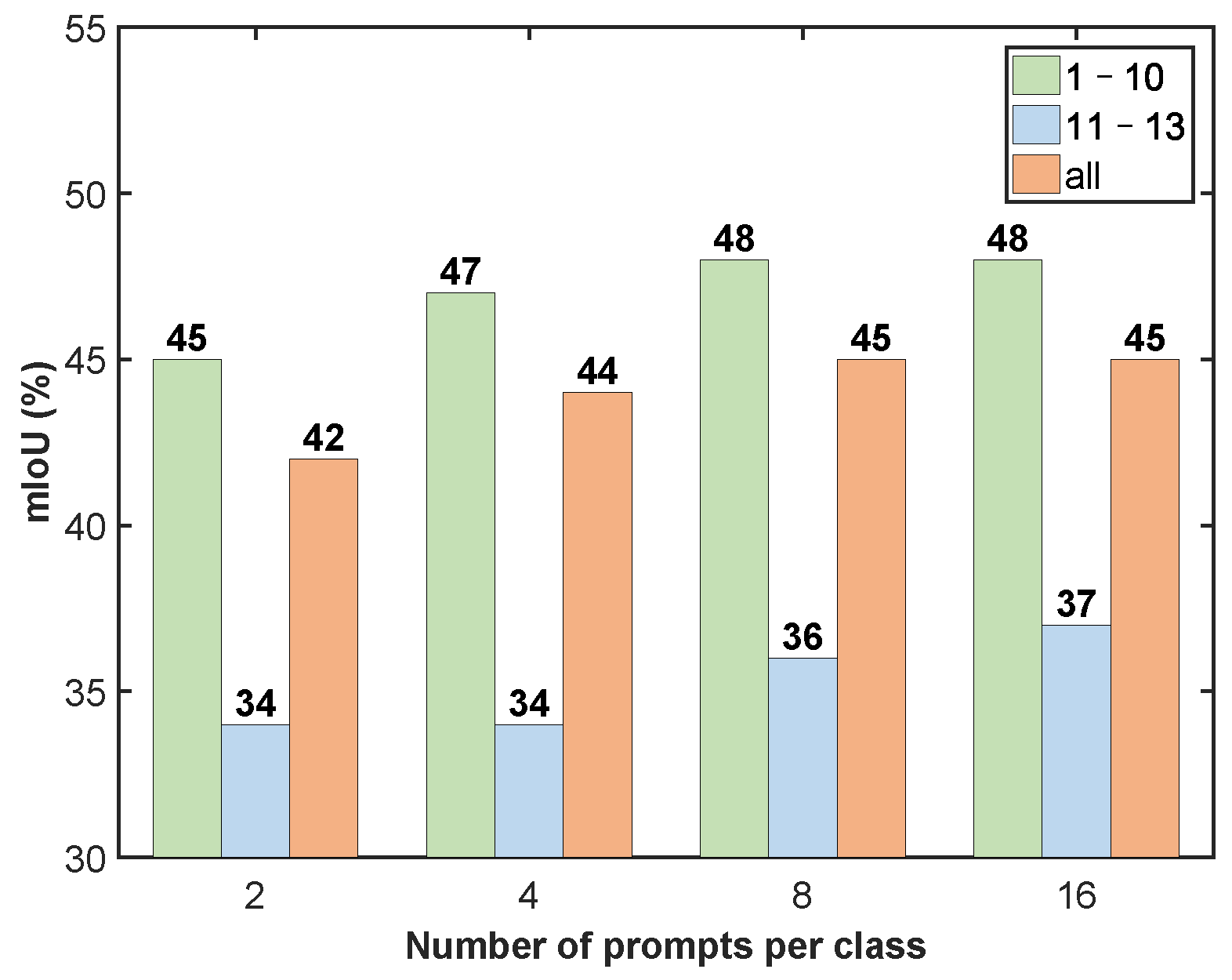

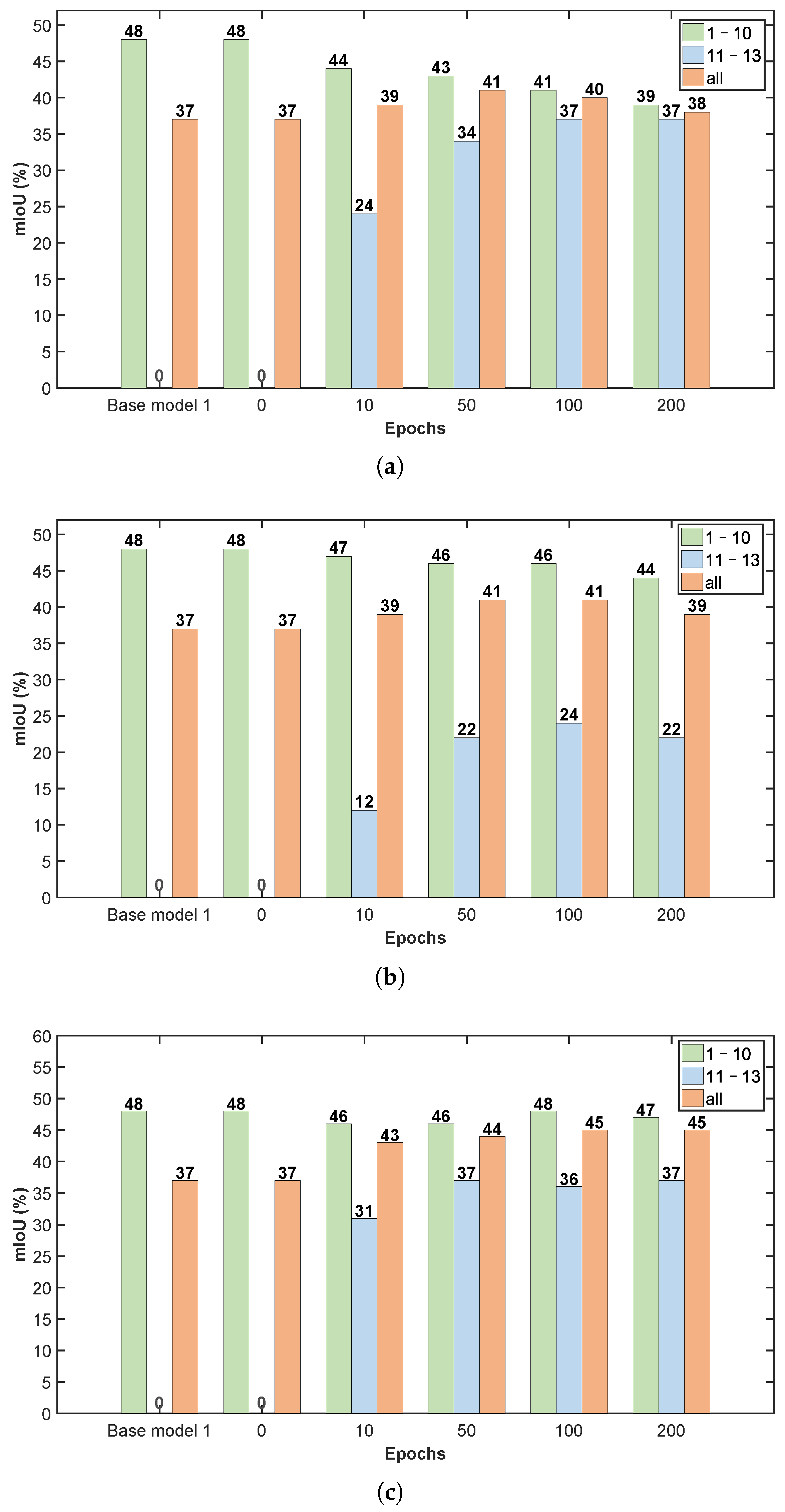

4.3. Ablation Studies

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Sarker, S.; Sarker, P.; Stone, G.; Gorman, R.; Tavakkoli, A.; Bebis, G.; Sattarvand, J. A comprehensive overview of deep learning techniques for 3D point cloud classification and semantic segmentation. Mach. Vis. Appl. 2024, 35, 67.

2. Wang, L.; Zhang, X.; Su, H.; Zhu, J. A comprehensive survey of continual learning: Theory, method and application. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 5362–5383.

3. Zhou, D.W.; Wang, Q.W.; Qi, Z.H.; Ye, H.J.; Zhan, D.C.; Liu, Z. Class-incremental learning: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 9851–9873.

4. Rebuffi, S.A.; Kolesnikov, A.; Sperl, G.; Lampert, C.H. icarl: Incremental classifier and representation learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2001–2010.

5. Douillard, A.; Cord, M.; Ollion, C.; Robert, T.; Valle, E. Podnet: Pooled outputs distillation for small-tasks incremental learning. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 86–102.

6. Gao, R.; Liu, W. Ddgr: Continual learning with deep diffusion-based generative replay. In Proceedings of the International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023; PMLR: Cambridge, MA, USA, 2023; pp. 10744–10763.

7. Li, Z.; Hoiem, D. Learning without forgetting. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 2935–2947.

8. Lee, K.; Lee, K.; Shin, J.; Lee, H. Overcoming catastrophic forgetting with unlabeled data in the wild. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 312–321.

9. Gao, Z.; Han, S.; Zhang, X.; Xu, K.; Zhou, D.; Mao, X.; Dou, Y.; Wang, H. Maintaining fairness in logit-based knowledge distillation for class-incremental learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2025; Volume 39, pp. 16763–16771.

10. Kirkpatrick, J.; Pascanu, R.; Rabinowitz, N.; Veness, J.; Desjardins, G.; Rusu, A.A.; Milan, K.; Quan, J.; Ramalho, T.; Grabska-Barwinska, A.; et al. Overcoming catastrophic forgetting in neural networks. Proc. Natl. Acad. Sci. USA 2017, 114, 3521–3526.

11. Benzing, F. Unifying importance based regularisation methods for continual learning. In Proceedings of the International Conference on Artificial Intelligence and Statistics, Virtual, 28–30 March 2022; PMLR: Cambridge, MA, USA, 2022; pp. 2372–2396.

12. Yan, S.; Xie, J.; He, X. Der: Dynamically expandable representation for class incremental learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 3014–3023.

13. Douillard, A.; Ramé, A.; Couairon, G.; Cord, M. Dytox: Transformers for continual learning with dynamic token expansion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 9285–9295.

14. Wang, Z.; Zhang, Z.; Ebrahimi, S.; Sun, R.; Zhang, H.; Lee, C.Y.; Ren, X.; Su, G.; Perot, V.; Dy, J.; et al. Dualprompt: Complementary prompting for rehearsal-free continual learning. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 631–648.

15. Dong, J.; Cong, Y.; Sun, G.; Ma, B.; Wang, L. I3dol: Incremental 3d object learning without catastrophic forgetting. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021; Volume 35, pp. 6066–6074.

16. Zamorski, M.; Stypułkowski, M.; Karanowski, K.; Trzciński, T.; Zięba, M. Continual learning on 3d point clouds with random compressed rehearsal. Comput. Vis. Image Underst. 2023, 228, 103621.

17. Liu, Y.; Cong, Y.; Sun, G.; Zhang, T.; Dong, J.; Liu, H. L3DOC: Lifelong 3D object classification. IEEE Trans. Image Process. 2021, 30, 7486–7498.

18. Yang, Y.; Zhong, L.; Zhuang, H. ReFu: Recursive Fusion for Exemplar-Free 3D Class-Incremental Learning. In Proceedings of the 2025 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Tucson, AZ, USA, 26 February–6 March 2025; pp. 3396–3405.

19. Chowdhury, T.; Cheraghian, A.; Ramasinghe, S.; Ahmadi, S.; Saberi, M.; Rahman, S. Few-shot class-incremental learning for 3d point cloud objects. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 204–220.

20. Yang, Y.; Hayat, M.; Jin, Z.; Ren, C.; Lei, Y. Geometry and uncertainty-aware 3d point cloud class-incremental semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 21759–21768.

21. Boudjoghra, M.E.A.; Lahoud, J.; Cholakkal, H.; Anwer, R.M.; Khan, S.; Khan, F.S. Continual Learning and Unknown Object Discovery in 3D Scenes via Self-distillation. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 416–431.

22. Su, Y.; Chen, S.; Wang, Y.G. Balanced residual distillation learning for 3D point cloud class-incremental semantic segmentation. Expert Syst. Appl. 2025, 269, 126399.

23. Cermelli, F.; Mancini, M.; Bulo, S.R.; Ricci, E.; Caputo, B. Modeling the background for incremental learning in semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9233–9242.

24. Douillard, A.; Chen, Y.; Dapogny, A.; Cord, M. Plop: Learning without forgetting for continual semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 4040–4050.

25. Lin, Z.; Wang, Z.; Zhang, Y. Continual semantic segmentation via structure preserving and projected feature alignment. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 345–361.

26. Zhang, C.B.; Xiao, J.W.; Liu, X.; Chen, Y.C.; Cheng, M.M. Representation compensation networks for continual semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 7053–7064.

27. Xiao, J.W.; Zhang, C.B.; Feng, J.; Liu, X.; van de Weijer, J.; Cheng, M.M. Endpoints weight fusion for class incremental semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7204–7213.

28. Armeni, I.; Sener, O.; Zamir, A.R.; Jiang, H.; Brilakis, I.; Fischer, M.; Savarese, S. 3d semantic parsing of large-scale indoor spaces. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1534–1543.

29. Dai, A.; Chang, A.X.; Savva, M.; Halber, M.; Funkhouser, T.; Nießner, M. ScanNet: Richly-annotated 3D Reconstructions of Indoor Scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

| Methods | 1–8 | 9–13 | All | 1–10 | 11–13 | All | 1–12 | 13 | All |
|---|---|---|---|---|---|---|---|---|---|
| FT | 36.06 | 39.81 | 37.50 | 41.21 | 36.69 | 40.17 | 41.04 | 25.36 | 39.84 |
| LwF [7] | 50.88 | 20.01 | 39.01 | 46.04 | 24.13 | 40.99 | 44.55 | 24.37 | 42.99 |
| 3DPC [20] | 48.94 | 39.56 | 45.33 | 45.15 | 45.33 | 45.19 | 44.08 | 35.69 | 43.43 |
| BalDis [22] | 50.68 | 40.62 | 46.81 | 49.20 | 44.12 | 47.26 | 46.94 | 38.35 | 46.28 |
| CosPrompt | 49.99 | 37.21 | 45.07 | 47.93 | 36.44 | 45.28 | 46.84 | 25.50 | 45.20 |
| Joint | 53.37 | 42.73 | 49.28 | 51.79 | 40.90 | 49.28 | 50.00 | 40.60 | 49.28 |
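In the CosPrompt row of the 13-class table, each "All" value appears consistent with a class-count-weighted mean of the old-class and new-class columns that precede it. The short check below recomputes it from the reported numbers; the helper name `weighted_all` is hypothetical, and the weighting rule is an inference from the figures rather than a stated definition.

```python
def weighted_all(miou_old, miou_new, n_old, n_new):
    """Class-count-weighted mean of old-class and new-class mIoU."""
    return (miou_old * n_old + miou_new * n_new) / (n_old + n_new)


# CosPrompt row: (old mIoU, new mIoU, #old classes, #new classes, reported All)
cosprompt = [
    (49.99, 37.21, 8, 5, 45.07),
    (47.93, 36.44, 10, 3, 45.28),
    (46.84, 25.50, 12, 1, 45.20),
]
for old, new, n_old, n_new, reported in cosprompt:
    value = weighted_all(old, new, n_old, n_new)
    print(f"{n_old}-{n_new}: computed {value:.2f}, reported {reported:.2f}")
```

For example, the 8-5 split gives (49.99 × 8 + 37.21 × 5) / 13 ≈ 45.07.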

| Methods | 1–15 | 16–20 | All | 1–17 | 18–20 | All | 1–19 | 20 | All |
|---|---|---|---|---|---|---|---|---|---|
| FT | 9.88 | 13.47 | 10.41 | 8.67 | 10.17 | 8.90 | 8.26 | 10.67 | 8.85 |
| LwF [7] | 30.07 | 9.07 | 24.81 | 25.71 | 10.70 | 23.46 | 24.48 | 10.14 | 23.76 |
| 3DPC [20] | 34.16 | 13.43 | 28.98 | 28.38 | 14.31 | 26.27 | 25.74 | 12.62 | 25.08 |
| BalDis [22] | 33.82 | 15.30 | 29.19 | 31.40 | 15.63 | 29.04 | 30.02 | 15.57 | 29.30 |
| CosPrompt | 40.71 | 10.45 | 33.15 | 35.46 | 11.59 | 31.88 | 33.60 | 10.52 | 32.45 |
| Joint | 42.42 | 15.63 | 35.72 | 38.82 | 18.13 | 35.72 | 36.78 | 15.63 | 35.72 |

| CosPrompt | PLG | LWF | 1–10 | 11–13 | All |

|---|---|---|---|---|---|

| √ | √ | 45.16 | 38.54 | 43.63 | |

| √ | √ | 41.26 | 39.01 | 40.74 | |

| √ | √ | 47.06 | 37.50 | 44.85 |

| Method | Total Params | Trainable Params | GPU Mem. | FLOPs | Incremental Training Time | Inference Time | Frozen Backbone |
|---|---|---|---|---|---|---|---|
| 3DPC [20] | 0.41 M | 0.41 M | 14.87 GB | 2.61 GFLOPs | 5.52 h | 2.29 ms | No |
| CosPrompt | 21.9 M | 4.10 M | 10.09 GB | 9.83 GFLOPs | 2.00 h | 6.42 ms | Yes |

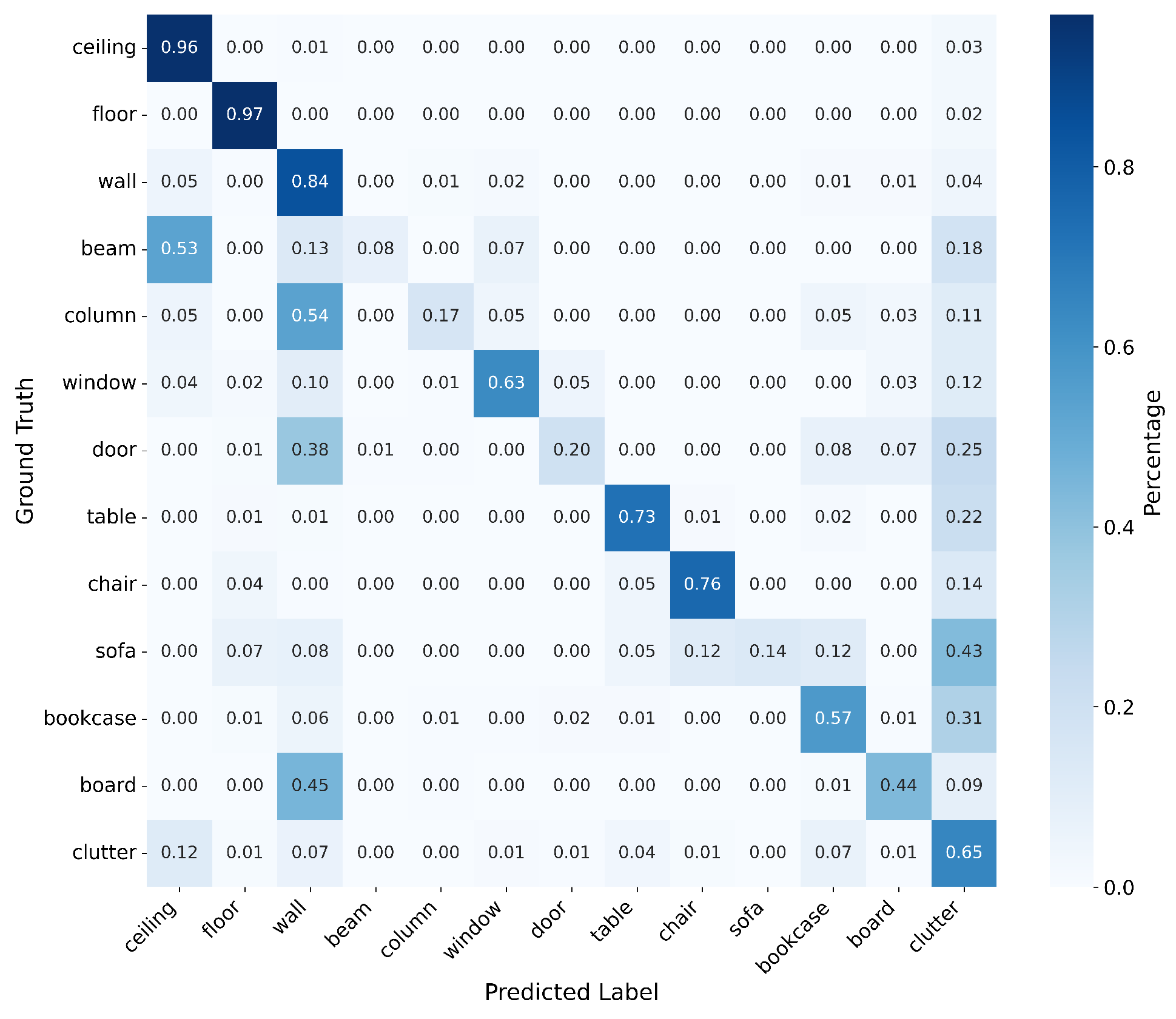

| Class | Ceil. | Floor | Wall | Beam | Col. | Win. | Door | Table | Chair | Sofa | Book. | Board | Clut. |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Density (points/m²) | 1.5 | 13.2 | 23.2 | 0.03 | 1.3 | 3.3 | 2.4 | 3.4 | 1.7 | 0.1 | 8.4 | 1.0 | 7.1 |
| mIoU (%) | 84.6 | 94.0 | 71.8 | 1.2 | 14.0 | 51.4 | 17.4 | 63.3 | 68.7 | 12.9 | 49.5 | 25.3 | 34.5 |
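Averaging the per-class values in this table reproduces, to within rounding, the CosPrompt figures reported for the 1–10 / 11–13 split in the 13-class comparison table (47.93, 36.44, 45.28), which suggests the per-class results belong to that setting. The check below is a sketch under that assumption; the class order is taken from the table header, and the variable names are hypothetical.

```python
# Per-class values in table order: Ceil., Floor, Wall, Beam, Col., Win., Door,
# Table, Chair, Sofa, Book., Board, Clut.
iou = [84.6, 94.0, 71.8, 1.2, 14.0, 51.4, 17.4,
       63.3, 68.7, 12.9, 49.5, 25.3, 34.5]


def mean(values):
    """Arithmetic mean of a non-empty list."""
    return sum(values) / len(values)


old_classes = mean(iou[:10])   # classes 1-10
new_classes = mean(iou[10:])   # classes 11-13
all_classes = mean(iou)        # all 13 classes

print(f"1-10: {old_classes:.2f}  11-13: {new_classes:.2f}  All: {all_classes:.2f}")
```

The low-density classes (Beam at 0.03 and Sofa at 0.1 points/m²) are also among the lowest-scoring ones, so they pull the old-class average down noticeably.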


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Guo, L.; Li, H.; Pang, M.; Liu, K.; Han, X.; Xiong, F. Cosine Prompt-Based Class Incremental Semantic Segmentation for Point Clouds. Algorithms 2025, 18, 648. https://doi.org/10.3390/a18100648
