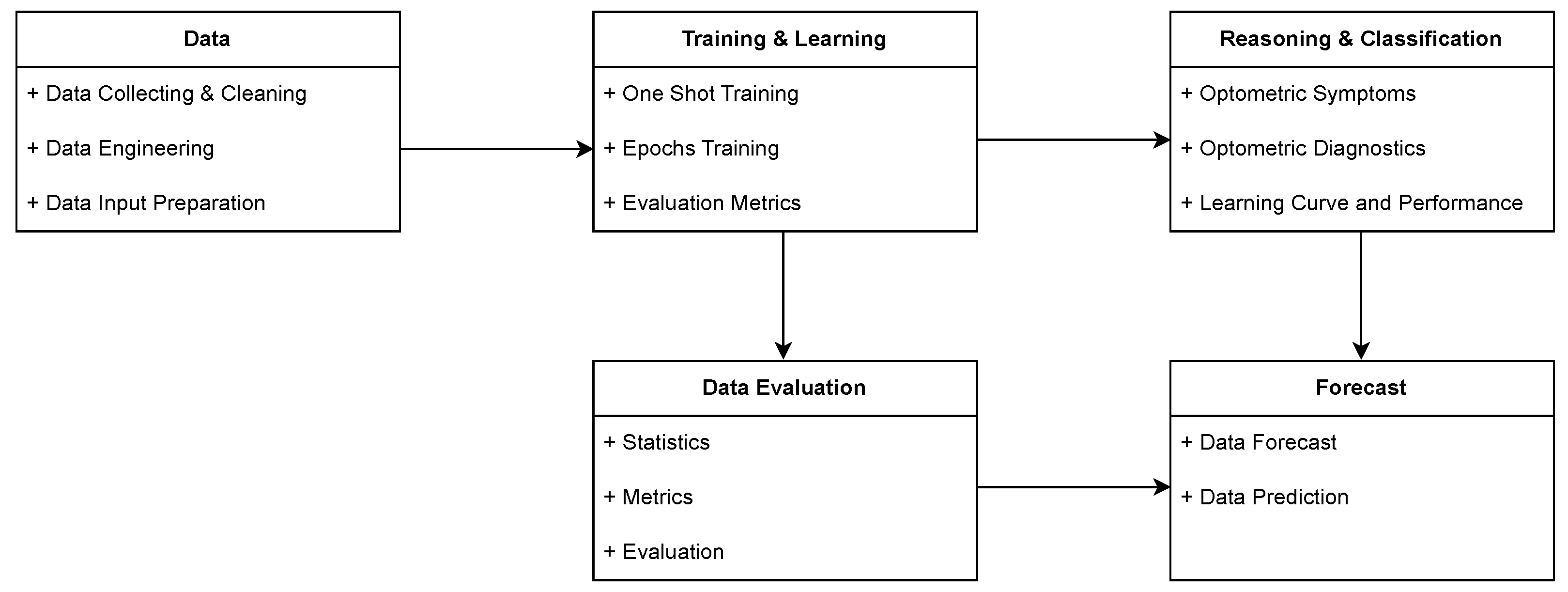

The initial stage of the process encompasses data collection, cleaning, engineering, and input preparation to ensure high-quality input for ML and AI models. Optometry-related academic articles published between 2000 and 2023 were retrieved from the Web of Science database. To refine the dataset, all non-essential metadata, such as DOI, webpage links, and author information, were removed. Subsequently, the dataset underwent preprocessing to optimize its structure for ML algorithms. This step ensured that the raw optometric data was adequately processed and formatted to enhance its suitability for subsequent ML and AI analyses.

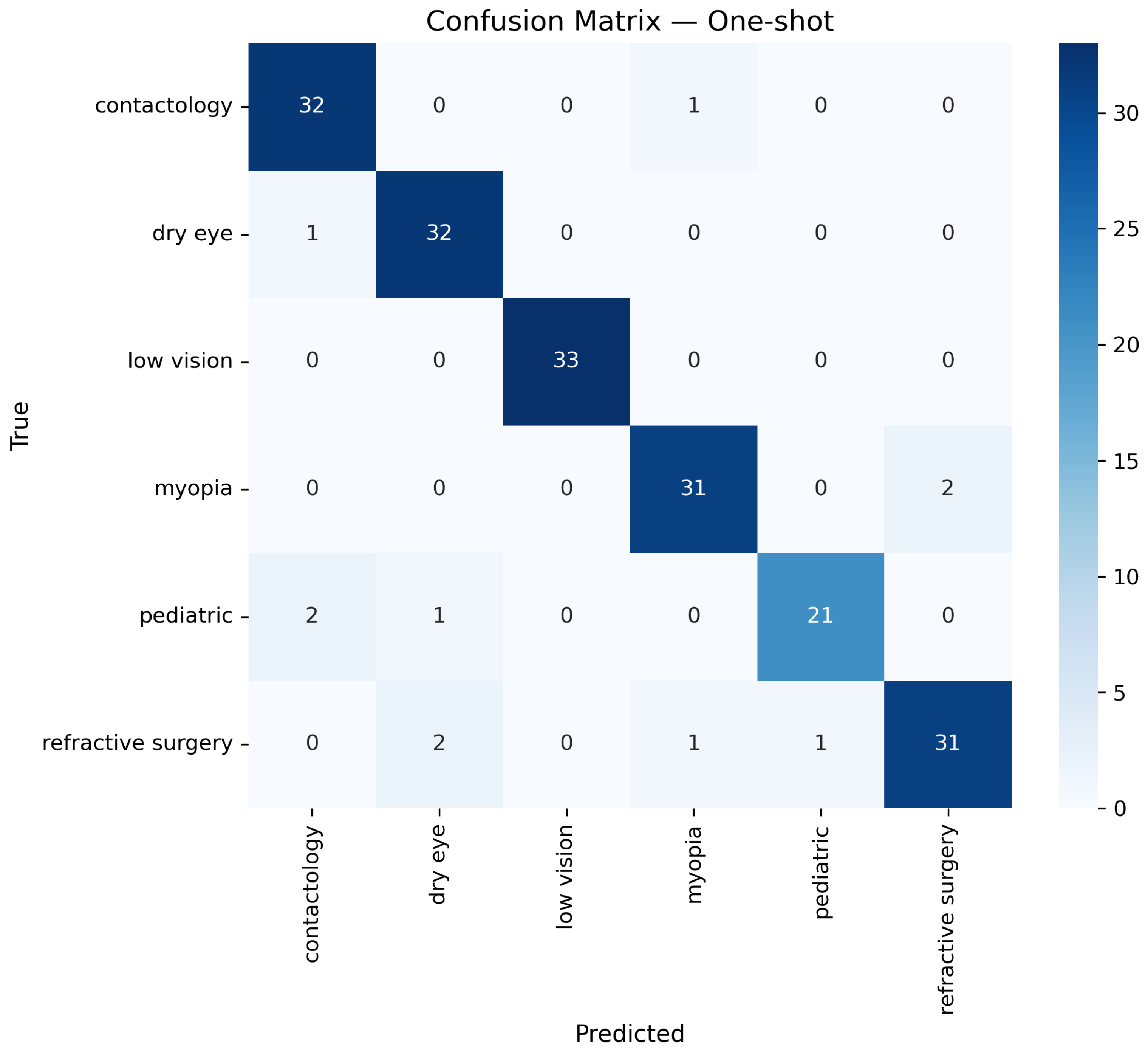

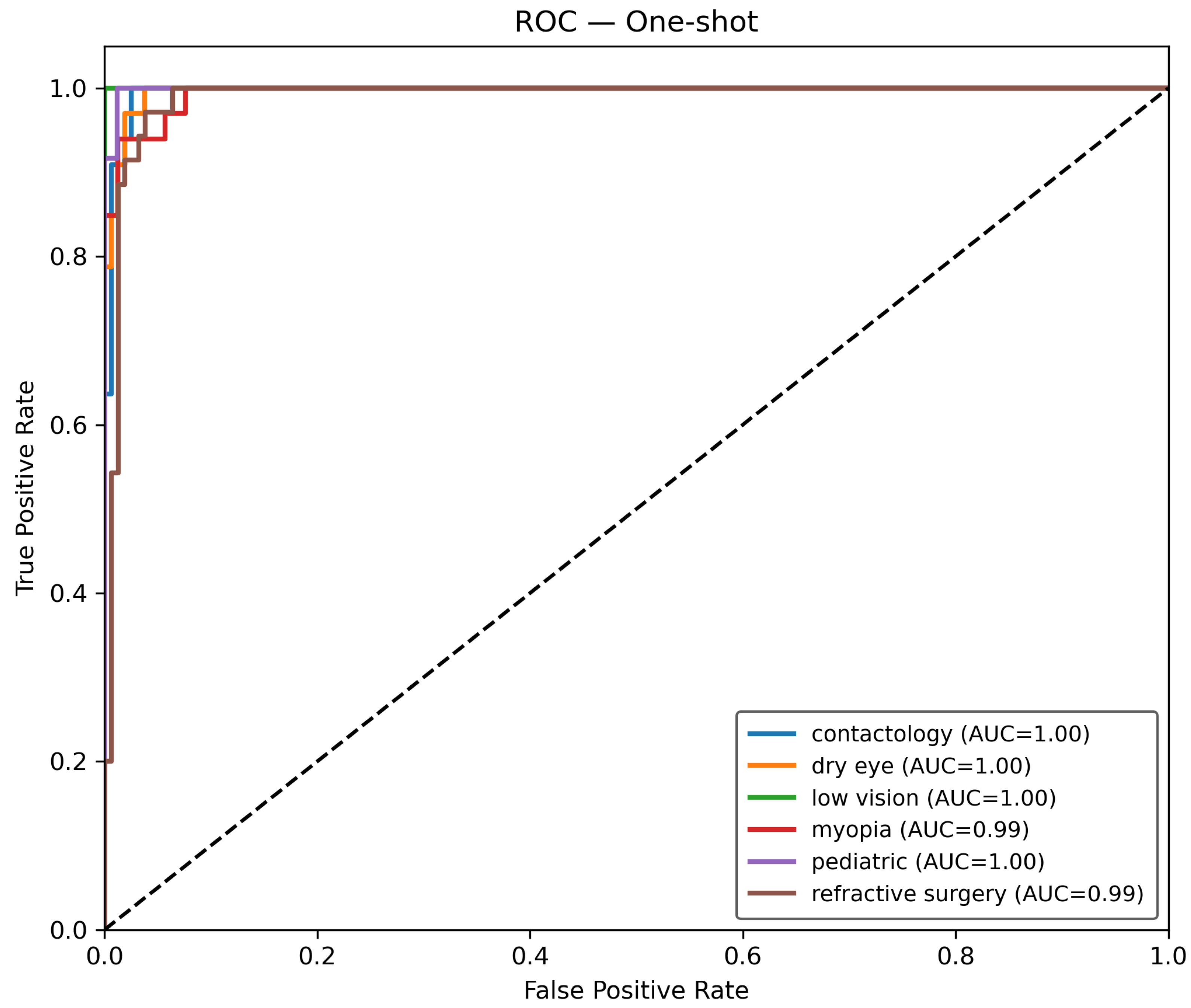

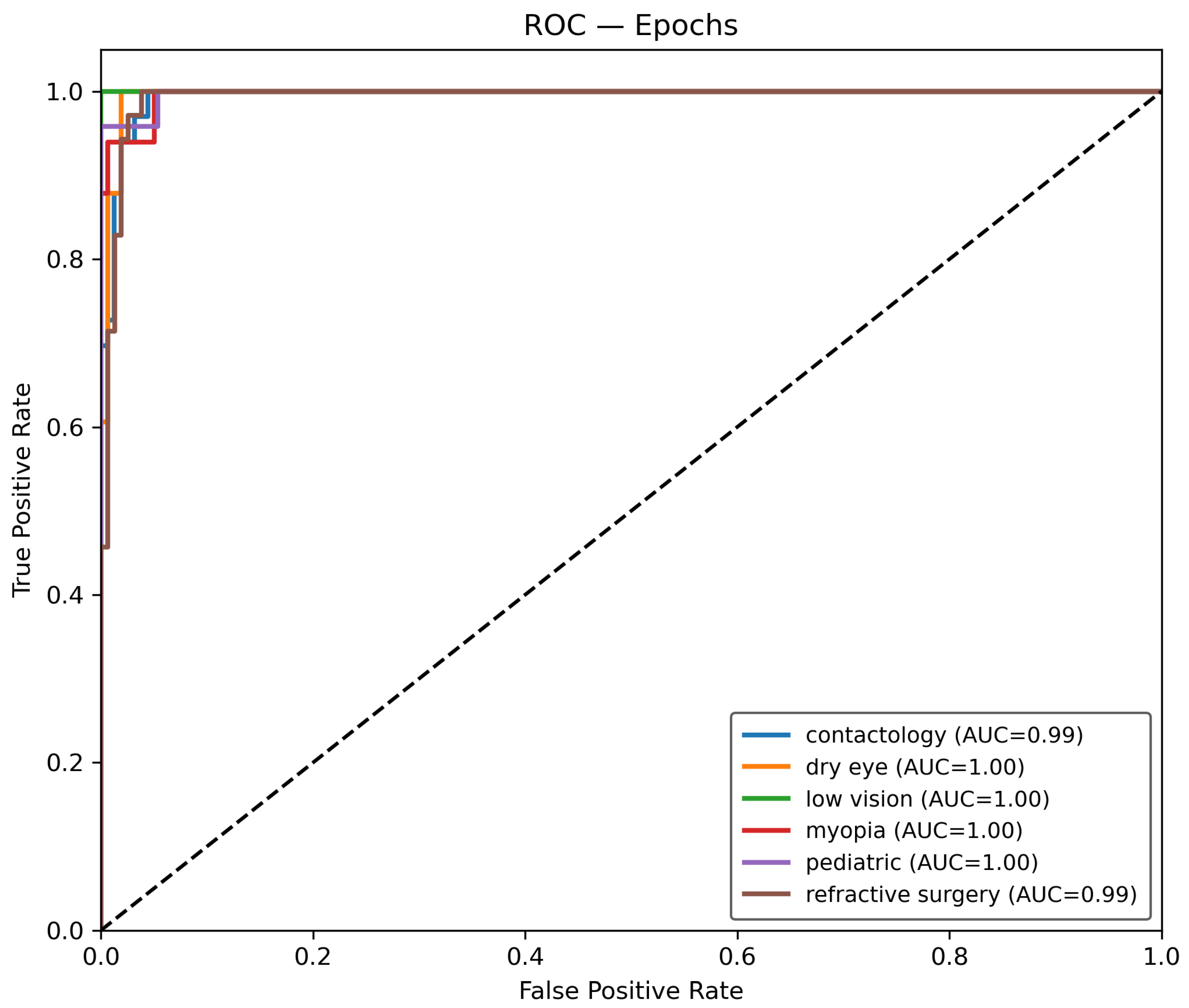

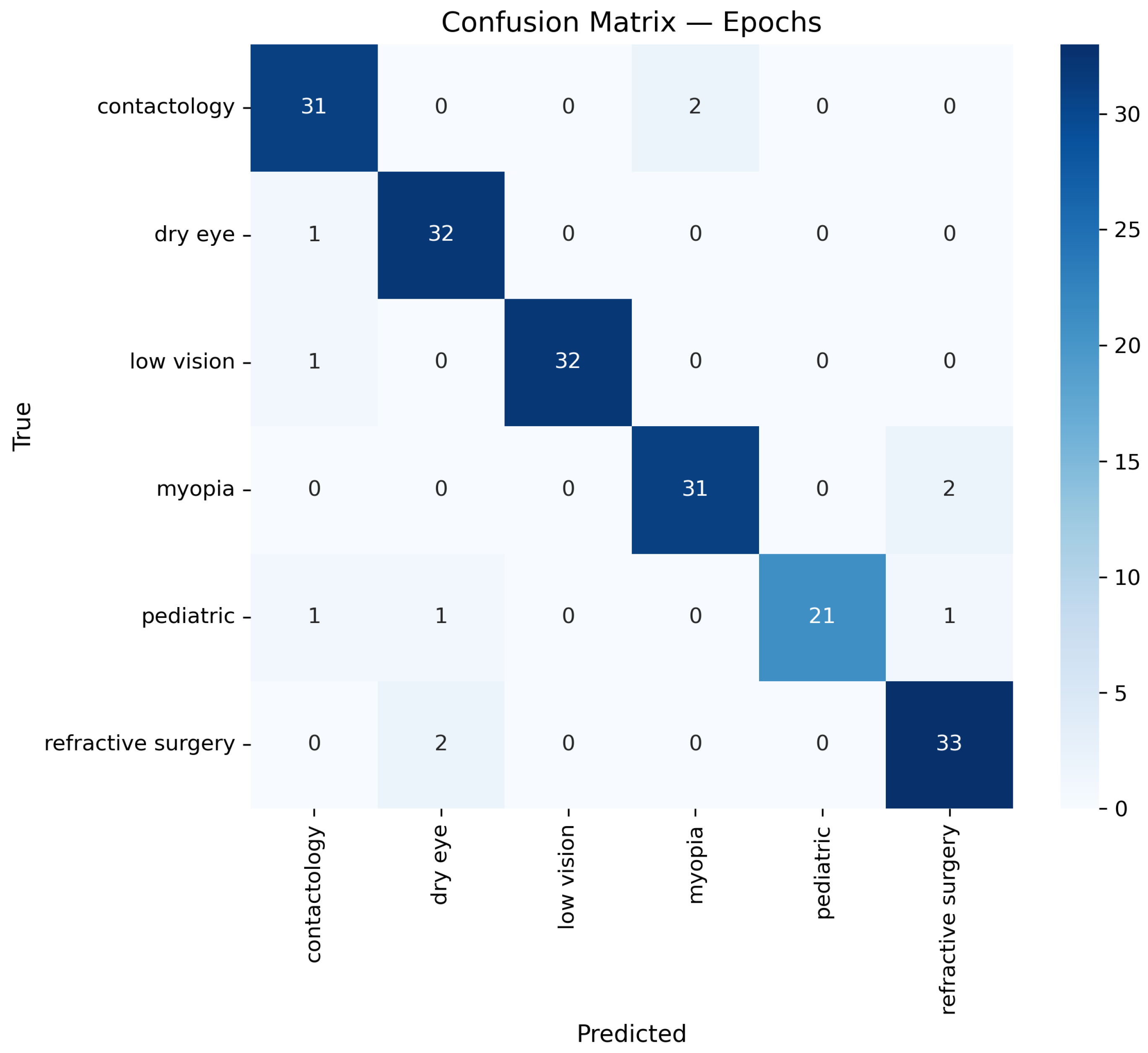

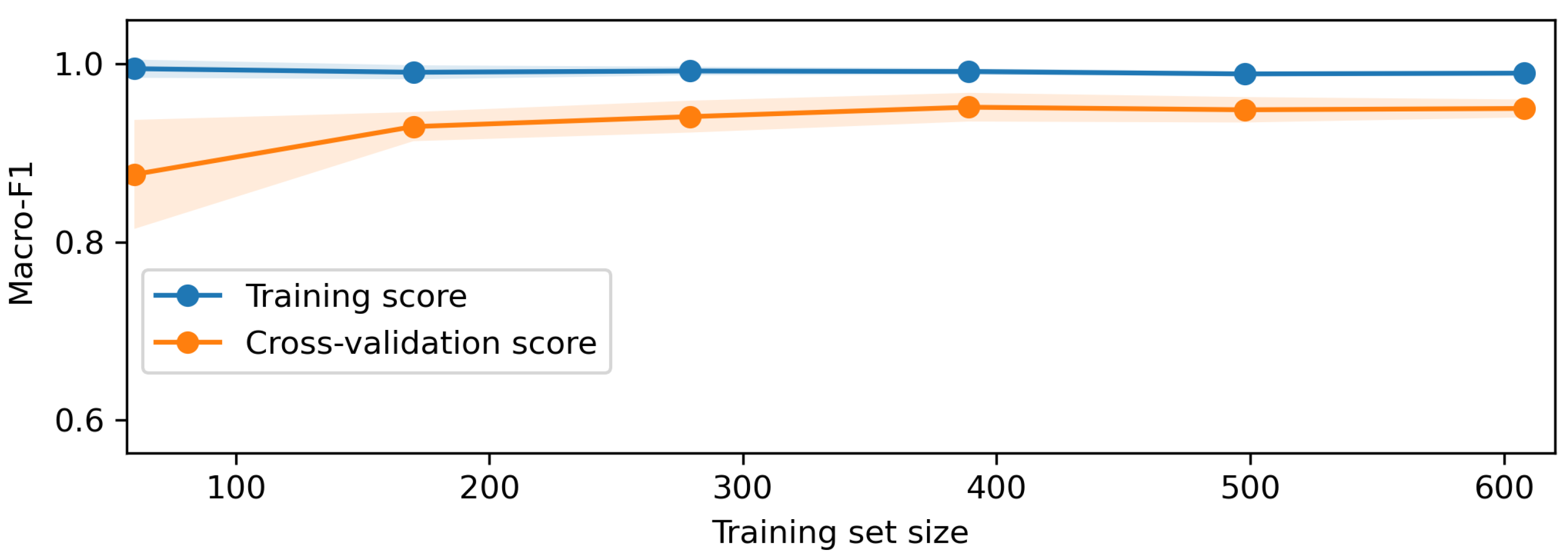

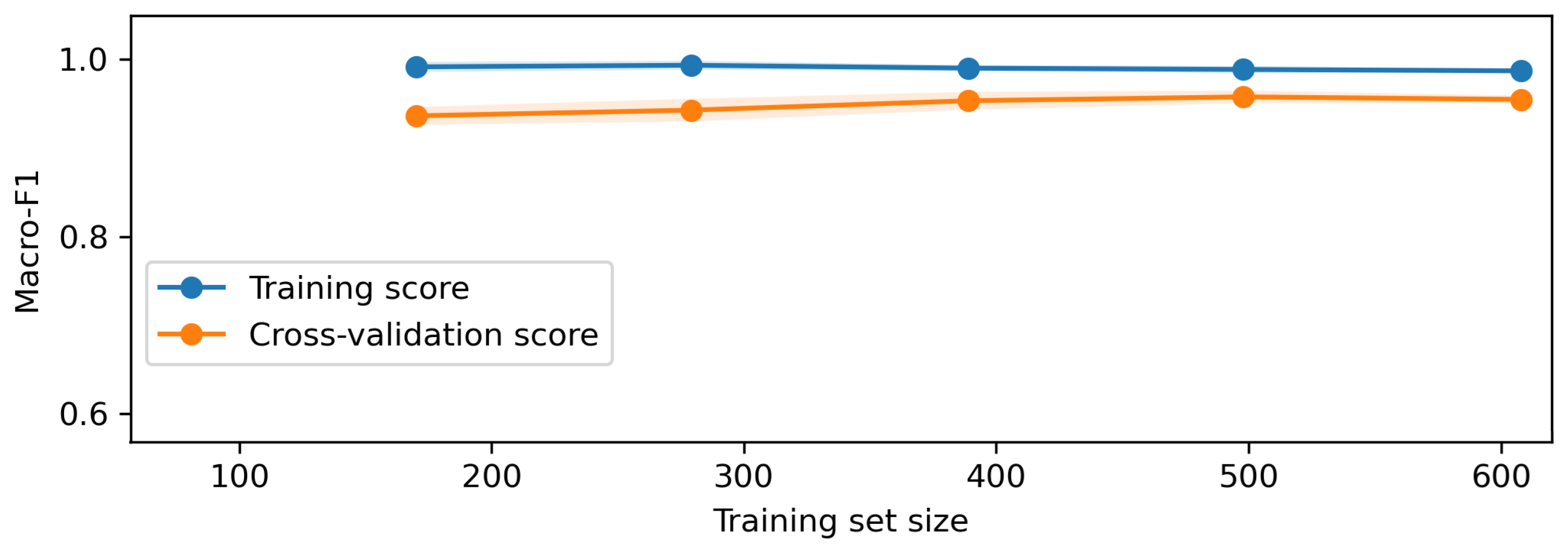

Once the data is prepared, it is used in the O-RF ML workflow, which comprises one-shot training and epoch-based training. One-shot training refers to models trained with minimal supervised labeled data, whereas epoch-based training involves iterative learning over multiple cycles, allowing models to refine their weights and optimize performance. After both training approaches, evaluation metrics are computed to assess the reliability and validity of the models: classification accuracy, precision, recall, F1-score, and AUC-ROC.

The AI block focuses on optometric data recognition, diagnostic inference, and learning curve assessment. Optometric data extraction involves recognizing features from the raw data using NLP capabilities. Diagnostic inference leverages the trained AI models to provide automated assessments. The learning curve and performance analysis provide an overview of the model’s capacity to perform the diagnosis tasks.

The data science module underpins the entire framework, integrating statistical methodologies, metric analysis, and evaluation techniques. These quantitative methods ensure that AI models remain interpretable and statistically valid, fostering transparency and trust for data forecasts and diagnoses.

2.2. O-RF Mathematical Formulation

The O-RF model underwent both one-shot and epoch training. To this end, O-RF must be trained to identify and diagnose each type of data within the entire dataset. A supervised training method was therefore chosen, in which data is labeled in accordance with its characteristics. The number of data items classified under each label is presented in Table 2.

With the dataset and the training data available, the next step is the mathematical formulation that transforms the raw text data of Table 1 and Table 2 into a numerical feature representation using the Term Frequency-Inverse Document Frequency (TF-IDF) method. This process is essential for converting unstructured textual data into a structured format suitable for machine learning models.

For any given document $D$ and a term $t_j$, let $f(t_j, D)$ denote the frequency of term $t_j$ in document $D$. The normalized term frequency is defined as:

$$\mathrm{TF}(t_j, D) = \frac{f(t_j, D)}{\sum_{k=1}^{d} f(t_k, D)} \tag{1}$$

In Equation (1), the numerator $f(t_j, D)$ represents the raw count of term $t_j$ in document $D$, and the denominator sums the counts of all $d$ terms in $D$, thus normalizing the term frequency relative to the document length. The inverse document frequency quantifies the importance of the term $t_j$ across the entire corpus $\mathcal{C}$ consisting of $N$ documents.

$$\mathrm{IDF}(t_j, \mathcal{C}) = \log\left(\frac{N}{1 + n_j}\right) \tag{2}$$

In Equation (2), $N$ is the total number of documents, and $n_j$ denotes the number of documents in which term $t_j$ appears. The addition of 1 in the denominator prevents division by zero, ensuring numerical stability.

The TF-IDF weight for term $t_j$ in document $D$ is the product of the normalized term frequency and the inverse document frequency.

$$w_j(D) = \mathrm{TF}(t_j, D) \cdot \mathrm{IDF}(t_j, \mathcal{C}) \tag{3}$$

Equation (3) combines local term frequency with global inverse document frequency, resulting in a weight $w_j(D)$ that reflects both the importance of $t_j$ in $D$ and its rarity across the corpus.

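With the TF-IDF weights computed, each document $D$ can be represented as a $d$-dimensional feature vector.

$$\mathbf{x}(D) = \left[ w_1(D), w_2(D), \ldots, w_d(D) \right]^{\top} \in \mathbb{R}^{d} \tag{4}$$

In Equation (4), $\mathbf{x}(D)$ encapsulates the TF-IDF weights for all $d$ terms in the vocabulary, where each $w_j(D)$ is defined in (3).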

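The overall transformation from raw text to a numerical feature vector is encapsulated by the mapping function $T$.

$$T : D \mapsto \mathbf{x}(D) = \left[ \mathrm{TF}(t_1, D) \cdot \mathrm{IDF}(t_1, \mathcal{C}), \ldots, \mathrm{TF}(t_d, D) \cdot \mathrm{IDF}(t_d, \mathcal{C}) \right]^{\top} \tag{5}$$

Equation (5) represents the complete process for converting a document $D$ into its corresponding TF-IDF feature vector, where the computation for each term $t_j$ is performed as described in the previous steps.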
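The TF-IDF mapping described above can be sketched in a few lines of standard-library Python. This is a minimal illustration, not the O-RF implementation: the function names, the toy corpus, and the whitespace-free pre-tokenized input are all assumptions made for the example.

```python
import math
from collections import Counter


def tf(term, doc_tokens):
    """Normalized term frequency: count of `term` over the total token count."""
    counts = Counter(doc_tokens)
    total = sum(counts.values())
    return counts[term] / total if total else 0.0


def idf(term, corpus):
    """Inverse document frequency log(N / (1 + n_t)), n_t = docs containing `term`."""
    n_docs = len(corpus)
    n_t = sum(1 for doc in corpus if term in doc)
    return math.log(n_docs / (1 + n_t))


def tfidf_vector(doc_tokens, corpus, vocabulary):
    """Map one tokenized document to its d-dimensional TF-IDF weight vector."""
    return [tf(t, doc_tokens) * idf(t, corpus) for t in vocabulary]


# Hypothetical toy corpus: each document is already a list of tokens.
corpus = [
    ["myopia", "control", "lens"],
    ["retina", "imaging", "lens"],
    ["myopia", "progression", "children"],
    ["cornea", "topography"],
]
vocabulary = sorted({t for doc in corpus for t in doc})
vec = tfidf_vector(corpus[0], corpus, vocabulary)
```

Terms absent from a document receive weight 0, so `vec` is sparse for realistic vocabularies. Note that with the `1 +` smoothing a term present in every document gets a slightly negative IDF, since `N / (1 + N) < 1`.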

Despite a careful data labeling process designed to avoid class imbalance, an automated procedure was created for O-RF to handle any imbalance detected in the data. This procedure can be described mathematically. Let $X_{min} = \{x_1, x_2, \ldots, x_{n_{min}}\}$ be the set of samples belonging to the minority class, where $n_{min}$ is the number of minority samples. To address class imbalance, the Synthetic Minority Oversampling Technique (SMOTE) is employed to generate synthetic samples by interpolating between existing minority samples and their nearest neighbors in the feature space.

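For each minority sample $x_i$, the $k$ nearest neighbors $\mathcal{N}_k(x_i)$ are identified, typically using the Euclidean distance. Then, for each selected neighbor $x_{nn} \in \mathcal{N}_k(x_i)$, a synthetic sample $x_{new}$ is generated using Equation (6).

$$x_{new} = x_i + \lambda \left( x_{nn} - x_i \right) \tag{6}$$

In Equation (6), $\lambda$ is a random variable drawn from a uniform distribution $U(0, 1)$. This ensures that the synthetic sample $x_{new}$ lies on the line segment between $x_i$ and $x_{nn}$. If an oversampling ratio $r$ is defined (with, for instance, $r = 1$ implying equal numbers of minority and majority class samples), the number of synthetic samples $N_{syn}$ required to achieve the desired balance is given by Equation (7).

$$N_{syn} = r \, n_{maj} - n_{min} \tag{7}$$

Equation (7) determines the total number of new synthetic samples to be generated so that the final minority class count becomes $r \, n_{maj}$, where $n_{maj}$ is the number of majority class samples and $n_{min}$ is the number of minority class samples.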

In summary, the procedure generates synthetic samples via interpolation as shown in (6), ensuring that new samples reside within the local neighborhood of existing minority instances, and adjusts the overall class distribution according to the desired oversampling ratio defined in (7). This mathematical framework allows the model to learn from a more balanced dataset if a data imbalance is detected, thus mitigating biases introduced by the original class imbalance.
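The interpolation and sample-count steps of SMOTE can be sketched as follows. This is a simplified illustration under stated assumptions, not the O-RF code: the function names, the default `k`, the seed handling, and the choice to pick the base sample uniformly at random are all hypothetical, and the minority set is assumed to contain at least two samples.

```python
import math
import random


def k_nearest(i, points, k):
    """Indices of the k points closest to points[i] (Euclidean distance)."""
    others = [j for j in range(len(points)) if j != i]
    others.sort(key=lambda j: math.dist(points[i], points[j]))
    return others[:k]


def smote(minority, n_majority, ratio=1.0, k=2, seed=0):
    """Generate synthetic minority samples until the minority class
    count reaches ratio * n_majority."""
    rng = random.Random(seed)
    n_syn = max(0, round(ratio * n_majority) - len(minority))
    synthetic = []
    for _ in range(n_syn):
        i = rng.randrange(len(minority))           # base minority sample
        j = rng.choice(k_nearest(i, minority, k))  # one of its k neighbors
        lam = rng.random()                         # lambda drawn from U(0, 1)
        x_i, x_nn = minority[i], minority[j]
        # Interpolate: the new point lies on the segment between x_i and x_nn.
        synthetic.append([a + lam * (b - a) for a, b in zip(x_i, x_nn)])
    return synthetic


# Hypothetical 2-D minority samples and a majority class of 8 samples.
minority = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
new_samples = smote(minority, n_majority=8)  # 8 - 3 = 5 synthetic points
```

Because every synthetic point is a convex combination of two existing minority samples, it cannot fall outside the region already spanned by the minority class.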

The O-RF is derived from the original RF and relies on training exclusively with optometry academic articles. For the O-RF model, let $\mathcal{D} = \{(\mathbf{x}_i, y_i)\}_{i=1}^{n}$ denote the training dataset, where $\mathbf{x}_i \in \mathbb{R}^{d}$ is the feature vector and $y_i$ is the class label of the $i$-th sample. The O-RF is an ensemble method that aggregates predictions from $T$ decision trees to improve the classification.

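For each tree $t$ ($t = 1, \ldots, T$), a bootstrap sample $\mathcal{D}_t$ is drawn from $\mathcal{D}$ with replacement. At each node of tree $t$, a random subset of features is selected from the full set of $d$ features. This subset is given by Equation (8), where $m$ is a hyperparameter such that $m \le d$.

$$S \subseteq \{1, 2, \ldots, d\}, \quad |S| = m \tag{8}$$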
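The two sources of randomness in the forest, bootstrap sampling of the data and random feature selection at each node, can be illustrated with a short sketch. The function names and the use of an explicit `random.Random` instance are assumptions made for this example.

```python
import random


def bootstrap_sample(dataset, rng):
    """Draw len(dataset) items from `dataset` with replacement."""
    return [rng.choice(dataset) for _ in dataset]


def random_feature_subset(d, m, rng):
    """Choose m distinct feature indices out of d, without replacement."""
    if not 1 <= m <= d:
        raise ValueError("m must satisfy 1 <= m <= d")
    return sorted(rng.sample(range(d), m))


rng = random.Random(42)
data = list(range(10))                          # stand-in for (x_i, y_i) pairs
sample_t = bootstrap_sample(data, rng)          # may contain repeated items
features = random_feature_subset(9, 3, rng)     # 3 of 9 feature indices
```

A common heuristic for classification is to take `m` close to the square root of `d`, although the value used for O-RF is a tuned hyperparameter.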

At each node, the optimal split is determined by selecting, from the random feature subset, the feature $j$ and a threshold $\tau$ that minimize an impurity measure $I(\cdot)$. The Gini impurity is chosen because it measures how often a randomly chosen element of a region would be incorrectly labeled if it were labeled randomly according to the class distribution in that region. The Gini impurity is defined for a region $R$ as Equation (9).

$$G(R) = 1 - \sum_{c} p_c(R)^2 \tag{9}$$

In Equation (9), $p_c(R)$ is the proportion of samples in $R$ that belong to class $c$. The optimal split $(j^*, \tau^*)$ is obtained by solving Equation (10),

$$(j^*, \tau^*) = \arg\min_{(j, \tau)} \left[ \frac{|R_L|}{|R|} G(R_L) + \frac{|R_R|}{|R|} G(R_R) \right] \tag{10}$$

where $R_L$ and $R_R$ are the two regions created by splitting $R$ with the candidate $(j, \tau)$, and $|R|$ denotes the number of samples in region $R$.
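A brute-force version of the split search makes the weighted Gini criterion concrete. This is a sketch under assumptions: the function names are hypothetical, candidate thresholds are taken from the observed feature values, and samples with a feature value less than or equal to the threshold go to the left region.

```python
from collections import Counter


def gini(labels):
    """Gini impurity 1 - sum_c p_c^2 of the labels in a region."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_split(X, y, feature_indices):
    """Return (score, feature, threshold) minimizing the weighted Gini impurity."""
    best = None
    n = len(y)
    for j in feature_indices:
        for tau in sorted({row[j] for row in X}):
            left = [label for row, label in zip(X, y) if row[j] <= tau]
            right = [label for row, label in zip(X, y) if row[j] > tau]
            if not left or not right:
                continue  # a split must produce two non-empty regions
            score = len(left) / n * gini(left) + len(right) / n * gini(right)
            if best is None or score < best[0]:
                best = (score, j, tau)
    return best


# Hypothetical toy data: feature 0 separates the two classes perfectly.
X = [[0.1, 5.0], [0.2, 1.0], [0.8, 4.0], [0.9, 2.0]]
y = ["a", "a", "b", "b"]
score, feature, threshold = best_split(X, y, feature_indices=[0, 1])
```

Production implementations avoid the repeated scans by sorting each feature once and updating class counts incrementally, but the selected split is the same.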

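Each decision tree $h_t$ partitions the feature space into disjoint regions $\{R_{t,\ell}\}_{\ell=1}^{L_t}$, where each region $R_{t,\ell}$ is associated with a class label $c_{t,\ell}$. The prediction of tree $t$ for a new sample $\mathbf{x}$ is calculated per Equation (11),

$$h_t(\mathbf{x}) = \sum_{\ell=1}^{L_t} c_{t,\ell} \, \mathbb{1}\left( \mathbf{x} \in R_{t,\ell} \right) \tag{11}$$

where $\mathbb{1}(\cdot)$ is the indicator function that equals 1 if $\mathbf{x}$ belongs to region $R_{t,\ell}$ and 0 otherwise.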

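The final prediction $\hat{y}$ of the O-RF is obtained by aggregating the predictions of all $T$ trees via majority voting using Equation (12), where mode denotes the most frequently occurring class among the individual tree predictions.

$$\hat{y}(\mathbf{x}) = \mathrm{mode}\left\{ h_1(\mathbf{x}), h_2(\mathbf{x}), \ldots, h_T(\mathbf{x}) \right\} \tag{12}$$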
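Majority voting is simple enough to show directly. In this sketch each tree is represented by a plain Python callable, which is an assumption made to keep the example self-contained; the stump thresholds and class names are hypothetical.

```python
from collections import Counter


def forest_predict(trees, x):
    """Return the most frequent class among the individual tree predictions."""
    votes = Counter(tree(x) for tree in trees)
    # On ties, most_common keeps the class that was voted for first.
    return votes.most_common(1)[0][0]


# Three hypothetical decision stumps acting on a 2-feature sample.
trees = [
    lambda x: "clinical" if x[0] > 0.5 else "optics",
    lambda x: "clinical" if x[1] > 0.3 else "optics",
    lambda x: "optics",
]
label = forest_predict(trees, [0.9, 0.7])  # two of three trees vote "clinical"
```

With an odd number of trees and two classes a tie cannot occur; with more classes the tie-breaking rule is a design decision worth stating explicitly.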

The choice of hyperparameters is a key aspect of every ML model, since it strongly influences model tuning and outcome. Let the hyperparameter configuration be denoted as per Equation (13),

$$\theta = (\theta_1, \theta_2, \ldots, \theta_H) \in \Theta = \Theta_1 \times \Theta_2 \times \cdots \times \Theta_H \tag{13}$$

where $\Theta$ represents the discrete search space formed by the Cartesian product of the possible values for each hyperparameter.

The goal of grid search is to identify the optimal configuration $\theta^*$ that maximizes the performance of the model, as measured by a cross-validation metric. This is mathematically formulated in Equation (14),

$$\theta^* = \arg\max_{\theta \in \Theta} \mathrm{CV}(\theta) \tag{14}$$

where $\mathrm{CV}(\theta)$ denotes the cross-validation score obtained for configuration $\theta$. In a $K$-fold cross-validation framework, the cross-validation score is calculated as the average performance across all the $K$ folds per Equation (15),

$$\mathrm{CV}(\theta) = \frac{1}{K} \sum_{i=1}^{K} M_i(\theta) \tag{15}$$

where $M_i(\theta)$ is the performance metric measured on the $i$-th fold using the hyperparameter configuration $\theta$. The optimized hyperparameters for the O-RF model are presented in Table 3.
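The grid search procedure amounts to an exhaustive loop over the Cartesian product of candidate values. The sketch below is illustrative only: the hyperparameter names `n_estimators` and `max_depth`, their candidate values, and the `toy_cv_score` function (a stand-in for actually training and scoring a model on each fold) are assumptions, not the values used for O-RF.

```python
from itertools import product


def mean_fold_score(fold_scores):
    """Average of the K per-fold metric values."""
    return sum(fold_scores) / len(fold_scores)


def grid_search(param_grid, cv_score):
    """Evaluate every configuration in the grid and keep the best-scoring one."""
    names = list(param_grid)
    best_config, best_score = None, float("-inf")
    for values in product(*(param_grid[name] for name in names)):
        config = dict(zip(names, values))
        score = cv_score(config)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score


def toy_cv_score(config):
    """Hypothetical stand-in for K = 3 fold training and evaluation."""
    base = (0.9
            - abs(config["n_estimators"] - 200) / 1000
            - abs(config["max_depth"] - 10) / 100)
    return mean_fold_score([base - 0.01, base, base + 0.01])


grid = {"n_estimators": [100, 200, 300], "max_depth": [5, 10, 20]}
best_config, best_score = grid_search(grid, toy_cv_score)
```

The cost grows multiplicatively with each added hyperparameter (here 3 × 3 = 9 configurations, each evaluated on every fold), which is the main practical limit of grid search.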

The last step is to compute the performance metrics for the O-RF model. To assess all the relevant metrics, let $N$ be the total number of samples in the test set. For each sample $i$, let $y_i$ denote the true label and $\hat{y}_i$ the predicted label. The first performance metric, calculated per Equation (16), is accuracy, which measures the proportion of correctly classified samples, that is, how often the model is correct.

$$\mathrm{Accuracy} = \frac{1}{N} \sum_{i=1}^{N} \mathbb{1}\left( \hat{y}_i = y_i \right) \tag{16}$$

In Equation (16), $\mathbb{1}(\cdot)$ is a binary indicator function, which is 1 when the condition is true and 0 otherwise.

Another important indicator in the evaluation of ML models is precision. The precision metric, given in Equation (17), is the proportion of predicted positive instances that are actually positive, and therefore reflects how well the model avoids false positive predictions,

$$\mathrm{Precision}_c = \frac{TP_c}{TP_c + FP_c} \tag{17}$$

where for any specific class $c$, $TP_c$ is the number of true positives, $FP_c$ is the number of false positives, and $FN_c$ is the number of false negatives.

Recall, also known as sensitivity or true positive rate, is another important classification metric that emphasizes the model’s ability to identify all relevant instances. Recall, given by Equation (18), measures the proportion of actual positive cases correctly identified by the model.

$$\mathrm{Recall}_c = \frac{TP_c}{TP_c + FN_c} \tag{18}$$

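The F1-score for class $c$, calculated by Equation (19), represents the harmonic mean of precision and recall.

$$F1_c = 2 \cdot \frac{\mathrm{Precision}_c \cdot \mathrm{Recall}_c}{\mathrm{Precision}_c + \mathrm{Recall}_c} \tag{19}$$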
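Accuracy and the per-class precision, recall, and F1-score can be computed directly from the true and predicted labels. The following sketch uses hypothetical function names and toy labels; returning 0.0 when a denominator is zero is a convention chosen for this example.

```python
def accuracy(y_true, y_pred):
    """Proportion of samples whose predicted label equals the true label."""
    return sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true)


def per_class_counts(y_true, y_pred, c):
    """True positives, false positives, and false negatives for class c."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == c and p == c)
    fp = sum(1 for t, p in pairs if t != c and p == c)
    fn = sum(1 for t, p in pairs if t == c and p != c)
    return tp, fp, fn


def precision_recall_f1(y_true, y_pred, c):
    """Precision, recall, and F1-score for class c (0.0 on empty denominators)."""
    tp, fp, fn = per_class_counts(y_true, y_pred, c)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Hypothetical labels for a three-class problem.
y_true = ["a", "a", "a", "b", "b", "c"]
y_pred = ["a", "a", "b", "b", "c", "c"]
acc = accuracy(y_true, y_pred)                    # 4 of 6 correct
p_a, r_a, f1_a = precision_recall_f1(y_true, y_pred, "a")
```

For class `"a"` there are 2 true positives, 0 false positives, and 1 false negative, so precision is 1, recall is 2/3, and the F1-score is 0.8.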

The Receiver Operating Characteristic (ROC) curve is defined by the true positive rate (TPR) given by Equation (20), and the false positive rate (FPR) given by Equation (21) for various threshold values $t$. For class $c$, these are computed as:

$$\mathrm{TPR}_c(t) = \frac{TP_c(t)}{TP_c(t) + FN_c(t)} \tag{20}$$

$$\mathrm{FPR}_c(t) = \frac{FP_c(t)}{FP_c(t) + TN_c(t)} \tag{21}$$

where $TN_c(t)$ is the number of true negatives for class $c$ at threshold $t$. The Area Under the Curve (AUC) for class $c$ is then given by Equation (22),

$$\mathrm{AUC}_c = \int_{0}^{1} \mathrm{TPR}_c \, d\left( \mathrm{FPR}_c \right) \tag{22}$$

which represents the probability that a randomly chosen positive instance is ranked higher than a randomly chosen negative instance.
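The ROC curve and its area can be approximated numerically by sweeping the threshold over the observed scores and applying the trapezoidal rule. This sketch treats one class in a one-vs-rest fashion (label 1 for class $c$, 0 otherwise); the function names and the toy scores are assumptions.

```python
def roc_points(scores, labels):
    """(FPR, TPR) pairs, one per distinct threshold, from strictest to loosest."""
    pos = sum(labels)
    neg = len(labels) - pos
    points = [(0.0, 0.0)]
    for t in sorted(set(scores), reverse=True):
        # A sample is predicted positive when its score reaches the threshold.
        tp = sum(1 for s, l in zip(scores, labels) if s >= t and l == 1)
        fp = sum(1 for s, l in zip(scores, labels) if s >= t and l == 0)
        points.append((fp / neg, tp / pos))
    return points


def auc(points):
    """Area under a piecewise-linear ROC curve (trapezoidal rule)."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


# Hypothetical classifier scores for class c and one-vs-rest labels.
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
labels = [1, 1, 0, 1, 0, 0]
area = auc(roc_points(scores, labels))
```

In this toy example 8 of the 9 positive-negative pairs are ordered correctly, and the trapezoidal area equals 8/9, which matches the ranking interpretation of the AUC.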

Another crucial performance metric is specificity, also known as the true negative rate (TNR). Specificity measures the model’s ability to correctly identify negative instances, ensuring that the model minimizes false positives. This metric is defined in Equation (23).

$$\mathrm{Specificity}_c = \frac{TN_c}{TN_c + FP_c} \tag{23}$$

A high specificity value indicates that the model is effective in recognizing negative instances while reducing the likelihood of false positives.

2.3. Forecast

The ARIMA model is a classical time series forecasting method that captures temporal dependencies in the data through autoregressive (AR) and moving average (MA) components, combined with differencing to induce stationarity. An ARIMA model is denoted as ARIMA($p, d, q$), where $p$ is the order of the autoregressive part, $d$ is the degree of differencing, and $q$ is the order of the moving average part.

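The model is written as Equation (24).

$$\left( 1 - \sum_{i=1}^{p} \phi_i B^i \right) (1 - B)^d \, y_t = \left( 1 + \sum_{j=1}^{q} \theta_j B^j \right) \varepsilon_t \tag{24}$$

In Equation (24), $y_t$ denotes the observed time series at time $t$, $B$ is the backshift operator defined by $B y_t = y_{t-1}$, $\phi_i$ are the autoregressive coefficients, $\theta_j$ are the moving average coefficients, and $\varepsilon_t$ is a white noise error term.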

Once the model is fitted to the historical data, the $h$-step-ahead forecast is computed as the conditional expectation using Equation (25),

$$\hat{y}_{T+h \mid T} = \mathbb{E}\left[ y_{T+h} \mid \mathcal{F}_T \right] \tag{25}$$

where $T$ is the last observed time point and $\mathcal{F}_T$ represents the information set up to time $T$. The term $y_t$ corresponds to $\hat{y}$ from Equation (12), connecting the ML to the forecast model.
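To make the conditional-expectation forecast concrete, the sketch below handles the special case ARIMA(1, 1, 0) with a zero-mean differenced series and an AR coefficient that is assumed to be already estimated. The function name, the coefficient value, and the toy series (for instance yearly article counts in one category) are hypothetical; a full ARIMA fit would normally be delegated to a statistics library.

```python
def forecast_arima_110(series, phi, horizon):
    """h-step-ahead forecasts for ARIMA(1, 1, 0) with a known coefficient phi.

    With w_t = y_t - y_{t-1} and w_t = phi * w_{t-1} + eps_t, the expected
    future difference is E[w_{T+k} | F_T] = phi**k * w_T. Forecasts are
    obtained by adding the expected differences to the last observation.
    """
    if len(series) < 2:
        raise ValueError("need at least two observations")
    last = series[-1]
    diff = series[-1] - series[-2]   # w_T, the last observed difference
    forecasts = []
    for _ in range(horizon):
        diff = phi * diff            # expected next difference
        last = last + diff           # undo the differencing
        forecasts.append(last)
    return forecasts


# Hypothetical yearly counts and an assumed coefficient phi = 0.5.
history = [10, 12, 16]
predicted = forecast_arima_110(history, phi=0.5, horizon=3)
```

Because the future noise terms have zero expectation, the forecast increments shrink geometrically with `phi`, and for `|phi| < 1` the forecast path levels off as the horizon grows.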

With this connection, the ARIMA model examines the time-series data to predict future trends by leveraging historical patterns. This approach aids in understanding how specific categories change over time and in forecasting future developments in optometry. To facilitate this transformation, dimensionality reduction techniques can be employed to process the embeddings, extracting the most relevant features that highlight key data trends. This quantification step is essential for effectively capturing the core aspects of the research focus within the high-dimensional space of O-RF embeddings.