This section describes the successive processing phases carried out by a minimalist Transformer module to produce a multipoint forecast of a univariate data series. The relevant Python code can be found in [19]. The processing stages are showcased here on a tiny time series obtained by querying Google Trends [20] on the term “restaurant”. The series shows a trend (at the time of writing, it is holiday season in Italy and interest in dining out is increasing) as well as seasonality, due to increased interest on weekends. Only 35 data points are considered, 7 of which are kept for validation and 28 are used for training. The series is the following:

3.1. Encoding

The objective of the encoding component (i.e., the encoder) in a Transformer architecture is to process an input sequence and convert the raw input into a contextually enriched representation that captures both local and global dependencies. This representation can then be used effectively by the rest of the model.

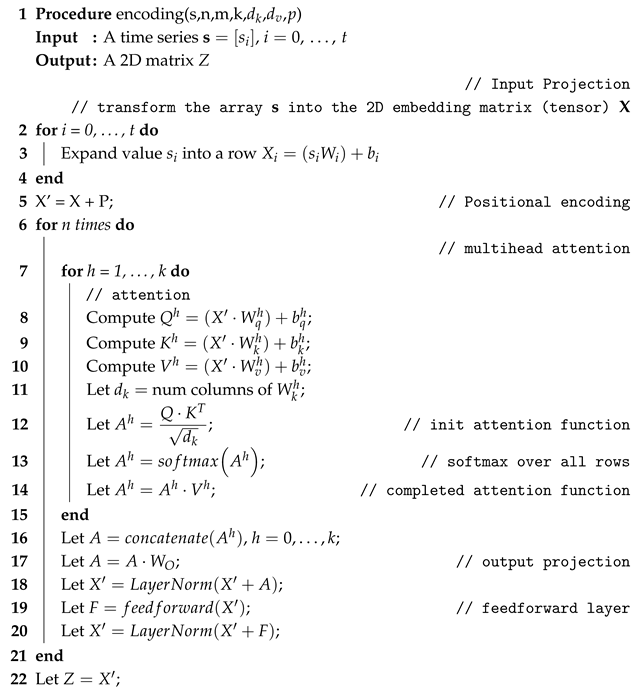

The general structure of the encoding module is represented by the pseudocode in Algorithm 1 and sketched in Figure 2. All intermediate numerical results obtained for the restaurant series case study are reported in the text, or in Appendix A when they are too big to be presented inline. This enables the interested reader to immediately grasp the dimensionalities and values involved in this tiny example, and possibly to follow the corresponding evolution of a run of the code provided in [19].

In the case of the running example, the following input parameter values are used: $n = 7$, $m = 4$, $k = 2$, $d_k = d_v = 2$, $p = 16$.

The individual steps are detailed below.

| Algorithm 1: The encoding module |

The input consists of the data series to be forecast, which in this case is an array, and of six control hyperparameters.

For the restaurant time series, a straightforward preprocessing step has been applied: min-max normalization of the raw data. Subsequently, each sequence was constructed using a sliding window of length $n$ over the series.
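The preprocessing just described can be sketched in a few lines of plain Python. This is only an illustration: the values in `raw` are hypothetical (they are not the Google Trends data), the function names are invented for this example, and how each window is paired with its forecast targets is left to the code in [19].

```python
def min_max_normalize(series):
    """Rescale a list of numbers to the [0, 1] interval."""
    lo, hi = min(series), max(series)
    if hi == lo:  # constant series: avoid a division by zero
        return [0.0 for _ in series]
    return [(x - lo) / (hi - lo) for x in series]


def sliding_windows(series, n):
    """Return every contiguous window of length n, in temporal order."""
    return [series[i:i + n] for i in range(len(series) - n + 1)]


# Hypothetical values, NOT the actual restaurant series.
raw = [50, 52, 55, 60, 70, 90, 100, 54, 56, 58]
normalized = min_max_normalize(raw)
windows = sliding_windows(normalized, n=7)
print(len(windows), len(windows[0]))  # 4 7
```

A series of length T yields T - n + 1 windows, so the ten hypothetical points above give four windows of seven values each.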

Single scalar values for each single timestep may not be expressive enough to capture contextual information. Each data point is projected into a higher-dimensional space where nonlinear dependencies between features can be easier to identify.

In the minimalist Transformer model proposed in this study, the projections, also known as embeddings, were obtained by multiplying each datapoint by a shared real-valued vector, initialized as a random Gaussian vector, and by adding a bias array. These values can later be learned. In this way, a matrix $X$ is computed, which contains, for each original timestep, its $m$-dimensional representation.

In the restaurant series, the projections were based on vectors of 4 values, obtaining a set of matrices, each with $n = 7$ rows and $m = 4$ columns, leaving 4 parameters to be learned for the projection vector and another 4 for the bias array. Each matrix $X$ represents a window over the input time series; the first of these matrices is presented in Appendix A as Equation (A1). The learned arrays are also shown in Appendix A, as Equation (A2).

The basic attention mechanism, which will be implemented in Lines 12–14, is insensitive to the permutation of the input values [2] and is in itself unusable for modeling data series: the attention only depends on the set of elements, not on their order. To inject ordering information, a positional vector is added to each input embedding. This is obtained by means of a matrix $P \in \mathbb{R}^{n \times m}$, which yields the transformed input:

$$X \leftarrow X + P$$

where $n$ denotes the sequence length and $m$ denotes the embedded dimension.

The matrix P can be either static, remaining fixed during the training phase, or learnable, changing at each step to better adapt to the task at hand. It can also be either absolute, depending solely on the element’s position, or relative, in which case the element itself influences the values of the matrix’s rows.

In the proposed minimalist architecture, matrix $P$ is an absolute, learnable positional encoding, which in the restaurant use case consists of 7 rows and 4 columns. This equates to a further 28 parameters that need to be learned. The matrix $P$ used in the running example is presented in Appendix A as Equation (A3).
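The embedding and positional steps can be illustrated with the following minimal sketch, written in plain Python. The names `w`, `b` and `embed`, the initial values, and the sample window are assumptions made for this example; in the model the values of the projection vector, the bias array and $P$ are learned.

```python
import random

random.seed(0)
n, m = 7, 4  # window length and embedding size of the running example

# Hypothetical initial values; in the model these are learned parameters.
w = [random.gauss(0.0, 1.0) for _ in range(m)]  # shared projection vector
b = [0.0] * m                                   # bias array
P = [[random.gauss(0.0, 0.1) for _ in range(m)] for _ in range(n)]  # positional rows


def embed(window, w, b, P):
    """Map n scalars to an n x m matrix and add the positional rows."""
    return [[x * w[j] + b[j] + P[i][j] for j in range(len(w))]
            for i, x in enumerate(window)]


window = [0.0, 0.04, 0.1, 0.2, 0.4, 0.8, 1.0]  # hypothetical normalized window
X = embed(window, w, b, P)
learnable = len(w) + len(b) + n * m
print(len(X), len(X[0]), learnable)  # 7 4 36
```

The count printed at the end matches the text: 4 values for the projection vector, 4 for the bias array, and 28 for the positional matrix.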

The main loop of the algorithm repeats a block of code called an Encoding Block n times. In it, matrix X is first passed to a module implementing Multihead Attention (Lines 7–15). The outputs of the heads are then concatenated and projected to keep the size consistent (Lines 16–17). Next, a residual connection with a normalization is applied (Line 18). The output is then passed through a small feedforward network, after which another residual connection and normalization are applied (Lines 19–20). It is important to note that each iteration uses its own set of parameters for the projections, attention weights, and feedforward layers; parameters are not shared between iterations. All these steps are detailed in the following.

This loop iterates a basic attention mechanism that dynamically weighs the contribution of each past point in the series when computing the representation of those under scrutiny. It computes a set of attention scores relating the “query” vector of the current point and “key” vectors of all sequence values. In the sample application, a query comes from the embedding of the last known observation, while the keys correspond to those of all past observations. The resulting attention weights determine which points matter most and the forecast is then given by a weighted sum of the corresponding “value” vectors.

Queries (the elements currently focused on), keys (descriptors of the corresponding values), and values (the actual information) are all represented as matrices. Typically, queries and keys share the same inner dimension $d_k$, while values may have a different dimension $d_v$. At each iteration, the corresponding set of query, key, and value is referred to as a head. The matrices and the bias identified for the two heads used in the running example are reported in Appendix A in Equations (A4) and (A5).

Line 8: generates the query matrix $Q$ by projecting the input embeddings into vectors that represent what is being looked for in the past. This is achieved by using a dynamic parameter matrix and a bias array, both of which are learnt.

Line 9: generates the key matrix $K$ by projecting the input embeddings into vectors that represent the information content of each corresponding embedding. This is achieved by using a dynamic parameter matrix and a bias array, both of which are learnt.

Line 10: generates the value matrix $V$ by projecting the input embeddings into vectors that represent the relevant information of each available embedding. This is achieved by using a dynamic parameter matrix and a bias array, both of which are learnt.

Line 11: initializes the scaling factor.

Line 12: implements the first part of the attention function, in this case using the Scaled Dot-Product Attention [2]. The similarity between sequence elements is computed from $Q$ and $K$ through a dot product. The key matrix is transposed so that the operation compares each feature of every query with the corresponding feature of every key. This initial matrix $A$ will contain similarity values $a_{ij}$ between every query vector $i$ and every key vector $j$.

Line 13: transforms the similarity values into ‘probability’ scores. The softmax operator is applied to all elements over every row: $a_{ij} \leftarrow e^{a_{ij}} / \sum_{j'} e^{a_{ij'}}$. In this way, for every query vector, normalized weights of similarity towards key vectors are obtained.

Line 14: completes the computation of the attention function by implementing a weighted sum of the value vectors, $A V$, thereby aggregating information from the relevant timesteps.

Line 16: Lines 7–15 are repeated $k$ times, each time obtaining an attention matrix $A_h$, $h = 1, \dots, k$. This line concatenates all these matrices into a single bigger one, $A = [A_1, \dots, A_k]$. The matrix $A$ obtained for the running example is reported in Appendix A as Equation (A6).

Line 17: since concatenation may modify the dimensionality, a final output projection is applied to ensure that the original size is kept.

In the running example, we set $d_k = d_v = 2$. This produced 6 matrices, all of size $4 \times 2$, totaling 48 more parameters to learn. The output projection matrix $W_O$ is, instead, of size $4 \times 4$. Matrix $W_O$ for the running example is reported in Equation (A7) of Appendix A.
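Lines 7–17 can be summarized by the following dependency-free sketch. It is not the code of [19]: the helper names, the random weights and the zero biases are assumptions chosen only to reproduce the dimensions of the running example ($n = 7$, $m = 4$, $k = 2$, $d_k = d_v = 2$), and the bias of the output projection is omitted.

```python
import math
import random


def matmul(A, B):
    """Plain-Python product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def add_bias(M, bias):
    """Add the same bias array to every row of M."""
    return [[v + c for v, c in zip(row, bias)] for row in M]


def softmax_rows(M):
    """Row-wise softmax, shifted by the row maximum for numerical stability."""
    out = []
    for row in M:
        peak = max(row)
        exps = [math.exp(v - peak) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def attention_head(X, Wq, bq, Wk, bk, Wv, bv):
    """One head: project, score, normalize, aggregate (Lines 8-14)."""
    Q = add_bias(matmul(X, Wq), bq)
    K = add_bias(matmul(X, Wk), bk)
    V = add_bias(matmul(X, Wv), bv)
    scale = math.sqrt(len(Wq[0]))             # sqrt(d_k)
    K_t = [list(col) for col in zip(*K)]      # transpose of K
    scores = [[s / scale for s in row] for row in matmul(Q, K_t)]
    weights = softmax_rows(scores)            # each row sums to 1
    return matmul(weights, V), weights


def rand_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
n, m, k, d_k, d_v = 7, 4, 2, 2, 2
X = rand_matrix(n, m, rng)                    # stand-in for the embedded window

head_outputs = []
for _ in range(k):                            # each head has its own parameters
    Wq, Wk, Wv = rand_matrix(m, d_k, rng), rand_matrix(m, d_k, rng), rand_matrix(m, d_v, rng)
    out, weights = attention_head(X, Wq, [0.0] * d_k, Wk, [0.0] * d_k, Wv, [0.0] * d_v)
    head_outputs.append(out)

# Line 16: place the k head outputs side by side, giving an n x (k * d_v) matrix.
A = [[v for out in head_outputs for v in out[i]] for i in range(n)]
# Line 17: output projection back to the embedding size m.
W_o = rand_matrix(k * d_v, m, rng)
projected = matmul(A, W_o)
print(len(A), len(A[0]), len(projected), len(projected[0]))  # 7 4 7 4
```

With $k \cdot d_v = m$, as in the running example, the concatenated matrix already has $m$ columns, so the output projection is a square $4 \times 4$ matrix.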

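Lines 18, 20: (Add and Norm)

This block tries to stabilize training by normalizing activations while keeping a residual path. It is implemented by a layer function, $\mathrm{LayerNorm}$, which operates independently for each row $i$ (i.e., for each embedding $x_i$), computing the mean $\mu_i$ and the variance $\sigma_i^2$ of its features, and updating the features of row $i$ as $\gamma \odot (x_i - \mu_i) / \sqrt{\sigma_i^2 + \epsilon} + \beta$, where $\gamma$ and $\beta$ are learnable parameters and $\epsilon$ is a small constant added for numerical stability.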
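A minimal sketch of the Add and Norm step follows. The input values are hypothetical, the names `gamma`, `beta` and `eps` are chosen for this example, and the value `eps = 1e-5` is an assumption (it is a common default, not a value taken from the paper).

```python
import math


def layer_norm(row, gamma, beta, eps=1e-5):
    """Standardize one embedding, then rescale and shift it."""
    mean = sum(row) / len(row)
    var = sum((v - mean) ** 2 for v in row) / len(row)  # biased variance
    return [g * (v - mean) / math.sqrt(var + eps) + b
            for v, g, b in zip(row, gamma, beta)]


def add_and_norm(X, sublayer_out, gamma, beta):
    """Residual connection followed by row-wise normalization."""
    return [layer_norm([x + s for x, s in zip(x_row, s_row)], gamma, beta)
            for x_row, s_row in zip(X, sublayer_out)]


# Hypothetical embeddings and sublayer outputs (two rows, m = 4).
X = [[1.0, 2.0, 3.0, 4.0], [0.5, 0.5, 1.5, 1.5]]
S = [[0.1, -0.1, 0.2, -0.2], [0.0, 0.3, -0.3, 0.0]]
gamma, beta = [1.0] * 4, [0.0] * 4  # identity scale and zero shift
Y = add_and_norm(X, S, gamma, beta)
```

With `gamma` set to ones and `beta` to zeros, every output row has a mean of approximately zero and a variance of approximately one; the learned values then rescale and shift each feature.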

In the running example, having set $m = 4$ and having two different LayerNorm blocks, 16 parameters were set to be learned. For the first layer:

While for the second layer:

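The final processing step of the minimalist Transformer architecture implements a two-layer network with a ReLU activation function between the two layers:

$$\mathrm{FFN}(X) = \mathrm{ReLU}(X W_1 + b_1)\, W_2 + b_2$$

where $W_1 \in \mathbb{R}^{m \times p}$, $b_1 \in \mathbb{R}^{p}$, $W_2 \in \mathbb{R}^{p \times m}$, and $b_2 \in \mathbb{R}^{m}$.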

The ReLU function is applied element-wise. The intermediate dimension p is typically chosen to be larger than m to improve model capacity and generalization.

In the model, we set $p = 16$; the resulting number of parameters is also shown in Figure 2. The values learned by the sample architecture are reported in Appendix A in Equations (A8) and (A9).
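The feedforward step can be sketched as follows, with $m = 4$ and $p = 16$ as in the running example. The weights are random placeholders, and the presence of a bias array in both layers is an assumption of this sketch; under that assumption the block holds 148 parameters.

```python
import random


def matmul(A, B):
    """Plain-Python product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def feed_forward(X, W1, b1, W2, b2):
    """Two linear layers with an element-wise ReLU in between."""
    hidden = [[max(0.0, v + c) for v, c in zip(row, b1)] for row in matmul(X, W1)]
    return [[v + c for v, c in zip(row, b2)] for row in matmul(hidden, W2)]


rng = random.Random(0)
n, m, p = 7, 4, 16
W1 = [[rng.gauss(0.0, 1.0) for _ in range(p)] for _ in range(m)]  # m x p
b1 = [0.0] * p
W2 = [[rng.gauss(0.0, 1.0) for _ in range(m)] for _ in range(p)]  # p x m
b2 = [0.0] * m
X = [[rng.gauss(0.0, 1.0) for _ in range(m)] for _ in range(n)]   # stand-in input

out = feed_forward(X, W1, b1, W2, b2)
n_params = m * p + p + p * m + m  # assumes both layers carry a bias
print(len(out), len(out[0]), n_params)  # 7 4 148
```

The network is applied to each row independently, so the output keeps the $n \times m$ shape of its input.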

The full output of the encoder contains the encoded representation of the input timesteps. To distinguish it from here on, it will be denoted as $Z$.

3.2. Decoding

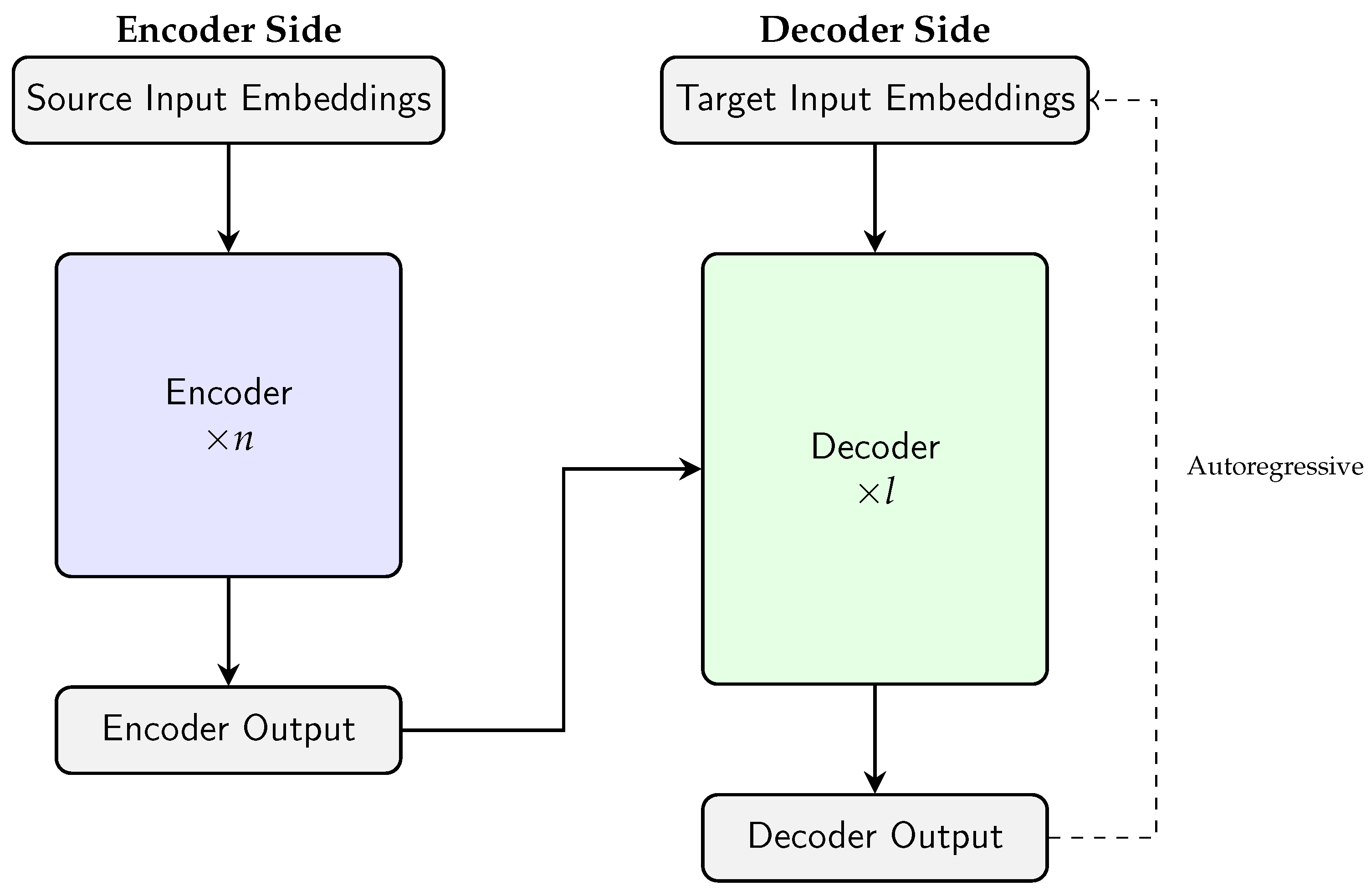

The objective of the decoding component (i.e., the decoder) in a Transformer architecture is to generate an output sequence step by step. This is achieved by using the encoded representation of the input and the output data generated by the encoding component.

The general structure of the decoding module is represented by the pseudocode in Algorithm 2 and in Figure 3. The individual steps are detailed below.

The decoder takes the encoder output as its main input (Line 1) and iteratively updates a sequence represented by matrix $Y$, where row $i$ holds the $i$-th output embedding, except for the first row, which holds a special token. Initially, the matrix is zero-initialized (Line 2). The first row is set as the <CLS> token (Line 3), a learnable embedding used to kick-start the generation process. The sequence is then updated autoregressively, i.e., one timestep at a time, with each new element depending on the previously generated ones.

The learned <CLS> token for the restaurant series is the following vector:

Just as in the encoding phase, the main loop of the algorithm updates the matrix $Y$ for l iterations (Lines 5–24). The block of code implementing each iteration is called a Decoding Block. In the block, matrix $Y$ is first processed by the Multihead Masked Self-Attention, which enables the decoder to attend to previous positions in the output sequence while preventing it from attending to future positions, thereby enforcing autoregressive generation. Next, $Y$ interacts with the encoder output through a Multihead Cross-Attention module, which enables the model to selectively focus on relevant parts of the input sequence (as encoded by the encoder) when generating each output token. This is the key mechanism that connects the encoder and decoder. Finally, the output is passed through some postprocessing steps to produce the final result of the single iteration.

The general procedure outlined above is explained in more detail below.

The Masked Self-Attention mechanism works in the same way as a Multihead Attention function, but it ensures that the generation of each successive sequence value can only consider the present or the past, never the future. This is achieved using a look-ahead mask that prevents feedback in time. The following operations are performed inside each loop (i.e., each head).

Line 7: generates the query, key, and value matrices, projecting the already-generated embeddings into semantic vectors. This projection is achieved by using dynamic parameter matrices and a dynamic bias array, both of which must be learnt.

Line 8: computes the first part of the Scaled Dot-Product Attention function.

Line 9: masks out any similarity value computed between a query vector from a timestep and a key vector coming from a future timestep, typically by setting it to $-\infty$. During the subsequent softmax step, the corresponding weight becomes 0, thus avoiding any dependency on the future.

Line 10: computes the last part of the attention function.

After the loop of Lines 7–10 is completed, the different output matrices must be aggregated together.

Line 12: the matrices are concatenated by row, obtaining the complete A matrix.

Line 13: the matrix A is multiplied by an output projection matrix, which is a learnable parameter.

The operations above are referred to as ‘Self-Attention’ because the projections $Q$, $K$, and $V$ all come from the same input, $Y$. In the example architecture, we set the number of heads for the self-attention layers to .
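The effect of the look-ahead mask can be seen in the small sketch below. The function names are invented for this example, and setting masked scores to negative infinity is the usual way of implementing the mask, assumed here rather than taken from [19]. All scores are set to zero so that the resulting weights are easy to read.

```python
import math


def causal_mask(scores):
    """Replace scores of future keys (column j > row i) with -infinity."""
    return [[s if j <= i else -math.inf for j, s in enumerate(row)]
            for i, row in enumerate(scores)]


def softmax_rows(M):
    """Row-wise softmax; exp(-inf) evaluates to 0, so masked entries vanish."""
    out = []
    for row in M:
        peak = max(row)  # always finite: the diagonal is never masked
        exps = [math.exp(v - peak) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


scores = [[0.0, 0.0, 0.0] for _ in range(3)]  # hypothetical similarity values
weights = softmax_rows(causal_mask(scores))
for row in weights:
    print(row)
```

The first row places all its weight on the first position, the second row splits it evenly between the first two positions, and only the last row can attend to all three.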

Each operation in the decoding stage is followed by a residual connection and a normalization step. The LayerNorm function works row-wise on the embeddings, standardizing them with learnable scaling and shifting parameters ($\gamma$ and $\beta$).

The second Attention step in the decoding phase links the partially generated output with the encoder’s representation of the input. Specifically, the decoder’s current output is projected into queries, while the encoder’s output provides the keys and values. Similar to Multihead Attention, this process involves a loop (Lines 15–18) and an aggregation step at the end (Lines 19–20).

Line 16: generates the query, key, and value matrices, projecting the decoder’s current output into queries and the encoder’s output into keys and values. Each projection uses a learnable parameter matrix to transform the embeddings into semantic vectors.

Line 17: computes the attention function, using the already computed matrices.

The above operations are repeated once per head, yielding one attention output per head.

Line 19: all the heads’ outputs are concatenated by rows into a single matrix.

Line 20: the attention matrix is multiplied by an output projection matrix, which is a learnable parameter.

The name Cross-Attention is due to the fact that the output of the encoding phase, $Z$, and the current output of the decoder are combined, crossing the two matrices together. In the example, we set the number of heads of the Cross-Attention layer to .
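The following single-head sketch shows how the two matrices are crossed: queries are computed from the decoder state $Y$, while keys and values are computed from the encoder output $Z$. The weights are random placeholders, bias arrays are omitted for brevity, and the choice of three already generated rows in $Y$ is arbitrary.

```python
import math
import random


def matmul(A, B):
    """Plain-Python product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def softmax_rows(M):
    """Row-wise softmax, shifted by the row maximum for numerical stability."""
    out = []
    for row in M:
        peak = max(row)
        exps = [math.exp(v - peak) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def cross_attention_head(Y, Z, Wq, Wk, Wv):
    """Queries from the decoder state Y; keys and values from the encoder output Z."""
    Q, K, V = matmul(Y, Wq), matmul(Z, Wk), matmul(Z, Wv)
    scale = math.sqrt(len(Wq[0]))
    K_t = [list(col) for col in zip(*K)]
    weights = softmax_rows([[s / scale for s in row] for row in matmul(Q, K_t)])
    return matmul(weights, V), weights


def rand_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
n, m, d = 7, 4, 2
Z = rand_matrix(n, m, rng)  # stand-in for the encoder output
Y = rand_matrix(3, m, rng)  # stand-in for three decoder rows
out, weights = cross_attention_head(
    Y, Z, rand_matrix(m, d, rng), rand_matrix(m, d, rng), rand_matrix(m, d, rng))
print(len(out), len(out[0]), len(weights), len(weights[0]))  # 3 2 3 7
```

Each decoder row receives one weight per encoded input timestep, which is why no look-ahead mask is needed here: the whole input window is already known.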

The decoder applies a position-wise two-layer feedforward network with ReLU activation, as was done in the encoder (Line 22):

$$\mathrm{FFN}(Y) = \mathrm{ReLU}(Y W_1 + b_1)\, W_2 + b_2$$

At the end of the decoding loop, matrix $Y$ is obtained. Before mapping the $j$-th embedding back into its scalar prediction, it is passed through a final feedforward network and regularized (Line 25):

For enhanced regularization purposes, a scale value and a bias value are determined through two learnable matrices, based upon the mean across the timesteps of the encoded input series $Z$:

where $\sigma$ represents the sigmoid function.

If we are at the i-th iteration of the outer output cycle (Lines 4–27), the output matrix contains in position i the predicted embedding for timestep i.

| Algorithm 2: The decoding module |

3.3. Learning

The encoding and decoding processes described in Section 3.1 and Section 3.2 are actually included in an overarching loop that implements learning by adjusting all parameters (e.g., weights of projection matrices, attention heads, feed-forward layers, etc.) to minimize a loss function over the training data. This is typically achieved through stochastic gradient descent (SGD)-based optimization, with the gradients computed by backpropagation.

The process is the standard neural learning process, flowing through three main phases:

- a forward pass, where input sequences (time series points) are embedded into vectors and flow through layers of multi-head self-attention and feed-forward networks to obtain a forecasted value;

- loss computation, where the loss function measures how far the predictions are from the ground truth. In the case of forecasting data series, the most commonly used loss functions are Mean Squared Error (MSE) or Root Mean Squared Error (RMSE), Mean Absolute Error (MAE), and Huber loss, which combines the robustness of MAE with the smoothness of MSE;

- a backward pass, where gradients of the loss with respect to the parameters are computed via backpropagation and parameters are updated using an optimization algorithm (usually a variant of gradient descent).
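For reference, the loss functions named in the second phase can be written in a few lines of plain Python. The sample values are hypothetical, and the Huber threshold `delta = 1.0` is a common default assumed for this illustration.

```python
import math


def mse(y_true, y_pred):
    """Mean Squared Error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root Mean Squared Error."""
    return math.sqrt(mse(y_true, y_pred))


def mae(y_true, y_pred):
    """Mean Absolute Error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def huber(y_true, y_pred, delta=1.0):
    """Quadratic for errors up to delta, linear beyond it."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        err = abs(t - p)
        total += 0.5 * err ** 2 if err <= delta else delta * (err - 0.5 * delta)
    return total / len(y_true)


# Hypothetical targets and forecasts; the last forecast is a large outlier.
y_true = [0.2, 0.4, 0.6, 0.8]
y_pred = [0.1, 0.4, 0.9, 2.8]
print(mse(y_true, y_pred), mae(y_true, y_pred), huber(y_true, y_pred))
```

On these values the single outlier dominates the MSE (about 1.025), while the MAE (about 0.6) and the Huber loss (about 0.39) are affected much less.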

The backward pass implements the actual learning process by reversing the computation flow that was used to make the forecast, as presented in Figure 2. A Transformer’s computation graph has many layers, including an embedding layer, multiple self-attention blocks (each with its own query, key, value, and output projections), feed-forward networks, layer norms, residual connections, etc. During backpropagation, the chain rule of calculus is applied to each layer, as with standard MLPs, and the gradient flows backwards through each operation. This means that the attention projection matrices, feed-forward weights, embedding matrices, and LayerNorm parameters all receive gradient-based updates depending on the value of the loss function.

Learning is a computationally intensive phase based on standard gradient descent optimizers, and it greatly benefits from GPU-optimized code. In the minimalist architecture, the backward computations have been delegated to the relevant PyTorch classes [21].

The two embedding matrices are initialized so that they represent exactly the same projection. Specifically, one is initialized by drawing each of its elements from a uniform distribution, and the other is then set accordingly, since the bias arrays are zero-initialized. During training, both are free to change without any constraint.

The training technique is as follows. The input sequence of fixed length n is given to the model. The element predicted at the i-th timestep is compared with the corresponding ground truth element, and the loss between the two is computed. The employed loss is the MSE. The backpropagation step, which updates the internal parameters of the model, follows. Then, with probability p, the matrix $Y$ is filled not with the predicted element but with the actual one. This probability is gradually decreased during the training phase so that the model relies more and more on its own predictions, as it will during inference.
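The choice between the predicted and the actual element can be sketched as follows. The linear decay schedule, the function names and the numeric values are assumptions made for this illustration; the text only states that the probability is gradually decreased.

```python
import random


def teacher_forcing_probability(epoch, n_epochs, p_start=1.0, p_end=0.0):
    """Linearly decay the probability of feeding the ground truth."""
    frac = epoch / max(1, n_epochs - 1)
    return p_start + (p_end - p_start) * frac


def next_decoder_input(predicted, actual, p, rng):
    """Return the actual element with probability p, else the prediction."""
    return actual if rng.random() < p else predicted


rng = random.Random(0)
n_epochs = 5
for epoch in range(n_epochs):
    p = teacher_forcing_probability(epoch, n_epochs)
    # Hypothetical values: 0.42 is the model output, 0.50 the ground truth.
    fed = next_decoder_input(predicted=0.42, actual=0.50, p=p, rng=rng)
    print(epoch, round(p, 2), fed)
```

In the first epoch the ground truth is always fed to the decoder; in the last one only the model's own predictions are used, which matches the conditions met during inference.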

In the case of the running example, the total number of parameters to learn in the Transformer model was 737.