Abstract

As global cyber threats escalate, Distributed Denial of Service (DDoS) attacks have become one of the most disruptive and prevalent forms of network attack. Traditional DDoS detection systems struggle with the unpredictable nature, diverse protocols, and coupled behavioral patterns of attack traffic. To address this issue, this paper proposes a DDoS detection approach that uses the Transformer architecture to model both temporal dependencies and behavioral patterns, significantly improving detection accuracy. The global attention mechanism of the Transformer captures long-range temporal correlations in network traffic, and its ability to process multiple traffic features simultaneously enables it to identify nonlinear interactions among them. By reconstructing the CIC-DDoS2019 dataset, we strengthen the representation of attack behaviors, enabling the model to capture dynamic attack patterns and subtle traffic anomalies. The key contribution of this work is the application of Transformer-based self-attention to model DDoS attack traffic accurately, particularly complex and dynamic attack patterns. Experimental results show that the proposed method achieves 99.9% accuracy, with 100% precision, recall, and F1 score, demonstrating its potential for high-precision, low-false-alarm automated DDoS detection. This study provides a new solution for real-time DDoS detection with significant practical implications for cybersecurity systems.

1. Introduction

With the continuous development of Internet infrastructure, Distributed Denial of Service (DDoS) attacks have become one of the most common and harmful threats in cyberspace security. In a typical DDoS attack, the attacker controls a large number of geographically distributed devices and uses them to send massive volumes of forged requests or malformed protocol packets to a target server. This exhausts the server's bandwidth, system resources, or computing power and prevents legitimate requests from receiving normal responses, seriously disrupting the normal operation of enterprises and the operational efficiency of critical infrastructure such as ports.

In recent years, DDoS attacks have grown more complex, diverse, automated, and stealthy, which reduces the effectiveness of traditional detection methods. Wang (2024) analyzes the Cybersecurity Forecast 2024 Report, which states that the global cybersecurity situation continues to deteriorate: the number of cybersecurity incidents worldwide has increased 11-fold over the past 8 years, and DDoS attacks account for 50.2% of the total [1]. The rise in DDoS attacks, especially during events such as the Russia–Ukraine conflict, has also raised concerns about their impact on critical infrastructure such as ports [2].

DDoS attacks are not only highly sudden but also often evade traditional detection through protocol mixing, attack-source randomization, and similar techniques. Traditional rule- or threshold-based detection methods rely on explicit traffic characteristics and manually set parameters; they offer good interpretability and are simple to deploy [3,4]. However, increasingly diverse and low-profile DDoS traffic places higher demands on detection systems in terms of accuracy, feature-modeling capability, and behavioral discrimination. For this reason, a growing number of researchers use machine learning and deep learning models to extract attack features automatically from complex network traffic and detect DDoS attacks efficiently.

Among these intelligent methods, machine learning models based on statistical features achieved significant results in early DDoS detection research thanks to their simple modeling and stable performance. As traffic dimensionality and behavioral complexity grow, however, stronger feature modeling and adaptability have become the focus of research. Deep learning methods, by contrast, automatically extract more complex patterns through end-to-end training; in particular, models with strong sequence-modeling capabilities (e.g., LSTM and Transformer) show great potential for modeling the temporal behavior of DDoS attacks. The Transformer, an architecture that has emerged in recent years, combines a global attention mechanism with a high degree of parallelism, which makes it well suited to capturing long-range dependencies and sudden traffic changes; it has therefore become a new research hotspot in DDoS detection.

The central problem is how to account for the evolving characteristics of modern DDoS attacks when building detection models with high accuracy and good generalization. In this paper, we propose a DDoS attack detection method based on the Transformer architecture. We reconstruct a dataset containing multiple attack types and fully exploit the time-series features and hidden attack patterns in network traffic, with the aim of improving the modeling of complex temporal correlations and latent attack behaviors and thereby achieving high-precision, low-false-alarm automated detection of diverse DDoS attacks.

2. Related Work

In recent years, a large body of research has addressed DDoS attack detection, ranging from traditional machine learning methods to deep learning architectures. Early approaches relied mainly on traditional machine learning, classifying traffic by modeling statistical features of network flows [5]. These methods typically start from feature extraction, computing hand-designed features such as packet length, flow duration, port-number distribution, and traffic directionality, and then classify the traffic with models such as Support Vector Machines (SVMs) [6] and Decision Trees (DTs) [7]. For example, Ivanova et al. (2022) used SVMs to classify multiple types of DDoS attacks, addressing the loss of classification accuracy caused by fuzzy boundaries between attack types and improving both detection accuracy and robustness [8]. Farhana et al. (2023) proposed a DDoS detection method based on Boruta feature selection and random forest classification; by selecting the most discriminative traffic features and training an ensemble model, it achieves strong true-positive identification while maintaining a low false-alarm rate [9]. Although detection methods based on feature engineering and traditional machine learning perform well in static scenarios and have improved the accuracy and efficiency of attack identification, they generally depend on hand-designed features and a priori rules, and their adaptability to changing network environments and novel attack patterns remains an open research problem.

More critically, Hassan (2024) pointed out that adopting “feature independence” or a “static view of traffic” as a modeling premise makes it difficult to capture the dynamic evolution of DDoS attacks [10]. As research has deepened, scholars have increasingly recognized that DDoS attack traffic exhibits significant temporal dependence and feature coupling. Mittal et al. (2023) noted that temporal behaviors such as sudden surges, continuous connection attempts, and periodic activity are common during attacks [11]; Wang et al. (2024) further showed that in SDN environments multiple traffic features (e.g., packet-length entropy and port statistics) tend to change abruptly and simultaneously during DDoS attacks, suggesting that univariate or static models are insufficient to characterize attack behavior [12]. These findings have driven the adoption of temporal modeling strategies, and recurrent neural networks such as LSTM and GRU are widely used to model attack behavior [13,14]. However, recurrent structures still leave room for improvement: their ability to capture long-range dependencies is limited, and their sequential computation makes training inefficient on large-scale traffic.

To address the limited capacity of recurrent models for deep temporal modeling and their inefficient inference in high-concurrency scenarios, Xi (2024) pointed out that the Transformer architecture, which strengthens temporal-dependency modeling and cross-feature interaction through a global attention mechanism, can significantly improve the detection of complex network attacks [15]. On the one hand, DDoS traffic often contains many redundant packets, repeated protocol fields, and high-dimensional feature interactions, which make traditional models susceptible to local noise; the Transformer, in contrast, can model feature sequences across multiple dimensions in parallel, which helps retain key discriminative information in complex contexts [16,17]. On the other hand, anomalous behaviors in DDoS attacks are often scattered nonlinearly across different positions on the time axis, and the Transformer's ability to relate any two positions directly and to assign attention weights dynamically gives it an advantage in identifying such sparse but critical attack signals [18,19]. Therefore, compared with traditional methods that rely on manually designed features, Transformer models can adaptively mine critical traffic patterns in an end-to-end manner and show distinctive advantages against multi-type, highly dynamic DDoS attacks, which has made them a major focus of current research.

At the same time, optimizing the structure and semantics of existing data to build datasets better suited to complex detection tasks has become key to improving the generality and evaluation reliability of DDoS detection models. Early datasets such as KDD’99 and NSL-KDD played a seminal role in intrusion detection, providing an extensive training and validation base for traditional machine learning methods [20]. However, as attack patterns grow more complex and network environments evolve dynamically, researchers need detection datasets with higher behavioral reproducibility and feature diversity to support model design and evaluation. A new generation of high-quality datasets, such as CICIDS2017 and CIC-DDoS2019, has therefore been constructed; thanks to their realistic background traffic, multi-class attack behaviors, and rich feature structure, they are widely used in more complex intrusion detection research [21]. Although these datasets are of high quality, using the raw data directly can still cause problems such as structural inconsistency, label noise, or a poor match with the task, so targeted preprocessing and reconstruction have become common practice. For example, Akgun (2022) eliminated zero and missing values, removed duplicates, and applied sliding-window segmentation to organize CIC-DDoS2019 data into structurally canonical time slices, which provided a stable input format for a CNN-LSTM model [22]. Becerra Suarez et al. (2024) also systematically reconstructed the CIC-DDoS2019 dataset through anomalous-sample rejection, feature selection, normalization, and sliding-window construction [23].
Their experiments show that this preprocessing not only improves the quality of feature representations but also significantly enhances the detection performance and stability of multiple machine learning models. Structural reconstruction of existing high-quality datasets, targeting key factors such as the distribution of attack types, feature organization, and temporal ordering, has thus become an important means of improving the accuracy and generalization of DDoS detection models.

In summary, traditional detection methods based on static features show clear limitations when facing the diversity, suddenness, and sequential nature of modern DDoS attacks, while high-quality datasets provide the foundation for research based on deep models. Building on this foundation, the Transformer, with its ability to jointly model complex inter-feature relationships and temporal structure, has shown strong performance and potential in DDoS detection and is becoming an important direction in current research. Table 1 compares representative methods in terms of their approaches, datasets, and the challenges they face.

Table 1.

Comparison of DDoS detection methods.

3. Data Preprocessing and Feature Construction

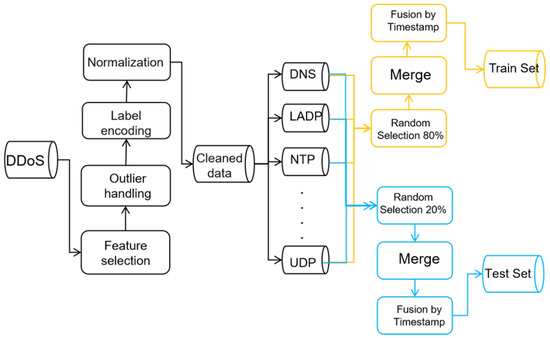

Preprocessing and feature construction form a crucial foundation for improving the model's DDoS detection performance. We chose the CIC-DDoS2019 dataset for our experiments because of its diverse attack types and well-designed experimental setup, which allow a more comprehensive validation of the model's detection ability in complex network environments. This section describes the preprocessing and feature-construction process for this dataset in detail, as shown in Figure 1, laying the foundation for subsequent model training and performance evaluation.

Figure 1.

DDoS data processing and feature construction.

The CIC-DDoS2019 dataset is built on a hierarchical experimental framework that systematically simulates a variety of typical DDoS attack scenarios in real network environments. It contains traffic from 13 types of DDoS attacks (NTP, DNS, LDAP, MSSQL, NetBIOS, SNMP, SSDP, UDP, UDPLag, WebDDoS, Syn, TFTP, and Portmap), covering reflection-based, resource-exhaustion, and application-layer attack mechanisms, as shown in Table 2.

Table 2.

DDoS attack-type coverage table.

The CIC-DDoS2019 dataset describes each flow with 87 attributes, which can be grouped into nine classes:

1. Base Identity class;
2. Access Traffic Statistics class;
3. Access Packet Length Statistics class;
4. Rate and Ratio class;
5. Timing (IAT) class;
6. TCP Flag class;
7. Message Header Length-related class;
8. Bulk and Subflow-related class;
9. Other statistical attributes.

The Base Identity class contains eight attributes, which uniquely identify the communicating endpoints, protocol type, and occurrence time of each network flow; they provide the basic information for temporal characterization and traffic analysis, as shown in Table 3.

Table 3.

List of Base Identity class fields.

The Access Traffic Statistics class contains five attributes, which measure macroscopic characteristics of a communication such as the number, direction, and duration of packets, reflecting the intensity and pattern of the network flow, as shown in Table 4.

Table 4.

List of Access Traffic Statistics class fields.

The Access Packet Length Statistics class contains 13 attributes, which describe the distribution, fluctuation, and directional differences in the lengths of forward and backward packets in a flow, so as to identify abnormal packet-length patterns and data-transmission behaviors in DDoS attacks, as shown in Table 5.

Table 5.

List of characterization fields for the Access Packet Length Statistics class.

The Rate and Ratio class contains eight attributes, which reflect a flow's transmission intensity per unit time and the proportion between forward and backward traffic, capturing key behaviors such as abnormal rates and traffic skew in DDoS attacks, as shown in Table 6.

Table 6.

List of Rate and Ratio class fields.

The Timing (IAT) class contains 22 attributes, which capture the inter-arrival times of forward and backward packets as well as the time distribution of the flow's active and idle periods, so as to identify the abnormal sending rhythms and bursty behavior of DDoS attacks, as shown in Table 7.

Table 7.

List of Timing (IAT) class fields.

The remaining classes cover TCP flag analysis, message header lengths, bulk and subflow behavior, and other statistical attributes; their fields are listed in Table 8, Table 9, Table 10 and Table 11.

Table 8.

List of TCP Flag class fields.

Table 9.

List of Message Header Length-related class fields.

Table 10.

List of Bulk and Subflow-related classes.

Table 11.

List of statistical feature classes.

To enhance the model’s ability to perceive DDoS behavioral patterns, this paper constructs a multidimensional feature set from the raw network traffic data. Nine attributes, namely ‘Unnamed: 0’, ‘Timestamp’, ‘Flow ID’, ‘Source IP’, ‘Destination IP’, ‘SimillarHTTP’, ‘Fwd Header Length.1’, ‘Inbound’, and ‘Protocol’, were eliminated, and 78 representative structured features were retained for modeling. Each removal was deliberate. ‘Unnamed: 0’, ‘Flow ID’, ‘Source IP’, and ‘Destination IP’ are identifiers without behavioral meaning, and ‘SimillarHTTP’, ‘Fwd Header Length.1’, and ‘Inbound’ were found to be either redundant or weakly correlated with DDoS patterns. The ‘Protocol’ and ‘Timestamp’ attributes, although intuitively useful, were excluded for specific reasons. ‘Protocol’ is highly redundant, because differences between protocol types are already reflected in the traffic statistics (e.g., packet-length distributions and flow rates); including it did not improve detection accuracy but increased model complexity. ‘Timestamp’ is unnecessary because temporal dependencies are modeled explicitly by the sliding-window mechanism, which encodes the order and evolution of flows within each window without relying on absolute time values; including it would likely introduce redundancy and noise. After these nine attributes are removed, the remaining 78 features focus on dimensions directly relevant to DDoS detection, including flow duration, packet-length statistics, upstream and downstream transmission rates, control-flag counts (e.g., SYN, PSH, ACK), directional indicators, and inter-arrival times (IAT), which capture the essential differences between benign and attack traffic. Some of the core features are shown in Table 12.
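The column elimination described above can be sketched as a simple filter over the CSV header. This is a minimal illustration, not the authors' code: it assumes the header is available as a list of strings, that names may carry stray leading spaces (so they are stripped), and that the class column is called `Label`; `select_feature_columns` and `label_column` are hypothetical names.

```python
# The nine columns removed in the text; "SimillarHTTP" keeps the dataset's own spelling.
DROPPED_COLUMNS = {
    "Unnamed: 0", "Timestamp", "Flow ID", "Source IP", "Destination IP",
    "SimillarHTTP", "Fwd Header Length.1", "Inbound", "Protocol",
}


def select_feature_columns(header, label_column="Label"):
    """Return retained feature names in their original order.

    `label_column` is a hypothetical name for the class column; it is excluded
    because it is the training target, not an input feature.
    """
    kept = []
    for name in header:
        clean = name.strip()  # assumption: headers may contain leading spaces
        if clean in DROPPED_COLUMNS or clean == label_column:
            continue
        kept.append(clean)
    return kept
```

For example, a header `["Unnamed: 0", " Flow ID", " Flow Duration", " Protocol", " Total Fwd Packets", " Timestamp", " Label"]` reduces to `["Flow Duration", "Total Fwd Packets"]`.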

Table 12.

Examples of some of the original feature fields.

To mitigate the impact of missing values in the DDoS attack data on model training, a null-value filtering strategy was introduced. Each network flow record is first checked for missing fields: complete records are retained in the cleaned dataset, and records with any missing value are discarded. The final dataset therefore consists only of complete records, guaranteeing feature integrity and data consistency during the training phase.
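A minimal sketch of the null-value filter, assuming each flow record is a dictionary of field values. What counts as "missing" is not specified in the text; here `None`, empty strings, and float NaN are treated as missing, which is an assumption.

```python
import math


def is_missing(value):
    """Assumed definition of 'missing': None, empty/blank string, or float NaN."""
    if value is None:
        return True
    if isinstance(value, str) and value.strip() == "":
        return True
    if isinstance(value, float) and math.isnan(value):
        return True
    return False


def drop_incomplete_records(records):
    """Keep only records in which every field is present."""
    return [r for r in records if not any(is_missing(v) for v in r.values())]
```

Note that a legitimate zero (for example a flow with `0.0` in some field) is kept; only absent values cause a record to be discarded.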

Because DDoS attacks and normal traffic differ markedly in their feature values and behavioral patterns, the traffic labels were binary-encoded, with DDoS attacks marked as 1 and normal traffic as 0. This simple encoding lets the model separate attack traffic from normal traffic directly in the classification task, which benefits both detection accuracy and computational efficiency.
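The binary label encoding amounts to a one-line mapping. This sketch assumes normal traffic carries the class string `BENIGN` (the name used for the benign class elsewhere in this paper) and that every other class string denotes an attack; `encode_label` is a hypothetical helper name.

```python
def encode_label(label, benign_name="BENIGN"):
    """Map a traffic-class string to the binary target: 0 = normal, 1 = DDoS.

    Assumption: the benign class is named "BENIGN"; any other class is an attack.
    """
    return 0 if label.strip().upper() == benign_name else 1
```

Applied to a column of class names, `[encode_label(c) for c in ["BENIGN", "Syn", "NTP"]]` gives `[0, 1, 1]`.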

Because DDoS attack traffic differs significantly from normal traffic in characteristics such as packet rate, length distribution, and TCP control-flag counts, feature normalization was applied during preprocessing to remove the effect of scale differences between features on model training. This improves the model’s ability to learn from features with high variability. The normalization uses the following standard-deviation-based (z-score) formula:

$$x' = \frac{x - \mu}{\sigma}$$

where

- $x$: denotes the current collected flow feature, and $x'$ its normalized value;

- $\mu$ and $\sigma$: represent the mean and standard deviation computed from normal flow data, respectively.

This normalization maps DDoS attack traffic onto the same feature scale as normal traffic, reducing the impact of scale differences between features on classifier training and inference accuracy.
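The z-score formula with benign-only statistics can be sketched in NumPy as follows. The handling of constant features (a standard deviation of zero is replaced by 1 so the division is defined) is an assumption the text does not address, as are the helper names `fit_benign_stats` and `standardize`.

```python
import numpy as np


def fit_benign_stats(X, y, eps=1e-8):
    """Per-feature mean and standard deviation computed from normal (label 0) rows only."""
    benign = X[y == 0]
    mu = benign.mean(axis=0)
    sigma = benign.std(axis=0)
    # Assumption: constant features (std below eps) get sigma = 1 to avoid division by zero.
    sigma = np.where(sigma < eps, 1.0, sigma)
    return mu, sigma


def standardize(X, mu, sigma):
    """Apply x' = (x - mu) / sigma column-wise."""
    return (X - mu) / sigma
```

In a setup like this one would typically fit `mu` and `sigma` on the training split only and reuse them for the test split, so that test data does not leak into the normalization statistics.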

To ensure effective training and a fair test evaluation, the dataset was split with a strategy that combines class-balanced sampling with preservation of temporal order. For each traffic class (BENIGN, NTP, DNS, LDAP, MSSQL, NetBIOS, SNMP, SSDP, UDP, UDPLag, WebDDoS, Syn, Portmap, and TFTP), 80% of the samples were randomly selected for the training set and the remaining 20% were used for testing, so that the class distributions of the training and test phases remain consistent. Moreover, all samples were sorted by timestamp to preserve the natural temporal structure of the traffic, so that random sampling does not disrupt the temporal characteristics of attack behavior. This more closely reflects how DDoS attacks unfold in practice and allows the model to learn the temporal evolution of attack patterns.
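One way to realize "per-class 80/20 random split, then timestamp ordering" is sketched below. It is an interpretation, not the authors' code: records are assumed to be dictionaries with hypothetical keys `label` and `timestamp`, the random seed is arbitrary, and the sort is applied within each split after sampling.

```python
import random
from collections import defaultdict


def stratified_time_ordered_split(records, train_frac=0.8, seed=0):
    """Split every class train_frac / (1 - train_frac) at random, then sort each split by time.

    Assumption: each record is a dict with hypothetical keys "label" and "timestamp".
    """
    by_class = defaultdict(list)
    for r in records:
        by_class[r["label"]].append(r)

    rng = random.Random(seed)
    train, test = [], []
    for label in sorted(by_class):          # deterministic class order
        group = list(by_class[label])
        rng.shuffle(group)                  # random selection within the class
        cut = int(round(len(group) * train_frac))
        train.extend(group[:cut])
        test.extend(group[cut:])

    # Restore chronological order inside each split.
    train.sort(key=lambda r: r["timestamp"])
    test.sort(key=lambda r: r["timestamp"])
    return train, test
```

Splitting per class keeps rare attack types represented in both splits, which a single global random split does not guarantee.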

Through these systematic preprocessing operations (feature selection, missing-value handling, label encoding, and z-score normalization), the scale and distribution of the data were effectively unified. This significantly enhanced the model’s ability to characterize the diverse behaviors of DDoS attacks and laid a solid foundation for stable training and efficient detection.

4. Construction of DDoS Attack Detection System Based on Transformer Modeling

Compared with normal access traffic, DDoS attacks are characterized by suddenness in their temporal distribution. Normal network traffic typically has a smooth and predictable temporal distribution, whereas DDoS attacks show anomalies in the time dimension, such as sudden traffic surges and irregular access frequencies, that create abnormal traffic patterns. In addition, different types of DDoS attacks differ significantly in the statistical attributes of their network flows because of differences in attack vectors, protocol exploitation, and control strategies. For example, total packet length, forward/backward packet ratio, flag distribution, inter-packet time interval, and active/idle periods all exhibit structural patterns that differ from those of normal traffic. The mechanism that generates this anomalous traffic makes it non-smooth and non-independent across several feature dimensions.

For DDoS traffic sequences with high burstiness, high feature redundancy, and uneven distributions, the Transformer relies on self-attention to model the nonlinear interactions between temporal dynamics and multiple features. In traditional sequence models, information propagates step by step along the time axis, whereas the Transformer’s global attention mechanism establishes a direct connection between any two time steps and thus captures long-range dependencies effectively.

When features such as Flow Duration, Fwd IAT Std, PSH Flag Count, and Packet Length Variance show strong anomalies within a time window during a DDoS attack, the Transformer can automatically focus on the critical time points and feature combinations and extract the underlying behavioral patterns of the attack. The multi-head attention mechanism further enhances the model’s ability to characterize DDoS behavior: different attention heads capture, in parallel and from different feature subspaces, fine-grained interaction patterns of network traffic along the time, direction, and protocol dimensions, which helps distinguish subtle differences between attack types.

At the same time, because its non-recurrent structure has no inherent notion of order, the Transformer uses positional encoding to perceive temporal order. This enables the model to capture temporal mutations, interval anomalies, and cyclical fluctuations in the fast, bursty behavior of DDoS traffic and improves the accuracy of identifying anomalous patterns.

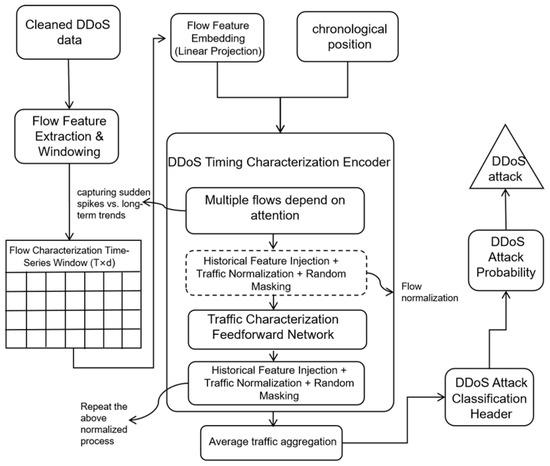

Therefore, given the suddenness of DDoS attacks in the time dimension and their variability in the feature dimension, Transformer-based detection can not only model their complex behavioral patterns effectively but also meet the need for efficient inference under high-concurrency, large-scale network traffic. Based on these considerations, this paper proposes a DDoS attack detection method based on the Transformer model, which aims to fully exploit the temporal features and latent multidimensional dependencies in network traffic and improve the detection of complex and diverse DDoS attacks. The overall architecture of the system is shown in Figure 2.

Figure 2.

Architecture of DDoS detection system based on Transformer model.

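In this model, the preprocessed DDoS attack traffic data is divided into multiple time windows. Each time window contains $T$ time steps, and each time step contains $F$ features, so the raw traffic data in a window is represented as a matrix:

$$\mathbf{X} = \begin{bmatrix} x_{1,1} & x_{1,2} & \cdots & x_{1,F} \\ x_{2,1} & x_{2,2} & \cdots & x_{2,F} \\ \vdots & \vdots & \ddots & \vdots \\ x_{T,1} & x_{T,2} & \cdots & x_{T,F} \end{bmatrix} \in \mathbb{R}^{T \times F}$$

where $x_{t,i}$ denotes the $i$-th feature at the $t$-th time step, and each row $\mathbf{x}_t \in \mathbb{R}^{F}$ contains the DDoS traffic features at that moment.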
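A minimal NumPy sketch of cutting a time-ordered flow matrix into $T \times F$ windows. The stride and the window-labelling rule are not given in the text; here the stride is a parameter and each window takes the label of its last flow, both assumptions, and `make_windows` is a hypothetical name.

```python
import numpy as np


def make_windows(features, labels, window_len, stride=1):
    """Cut an (N, F) time-ordered flow matrix into (num_windows, T, F) windows.

    Assumption: a window is labelled with the label of its last flow.
    """
    n = features.shape[0]
    if n < window_len:
        raise ValueError("not enough flows for a single window")
    starts = range(0, n - window_len + 1, stride)
    X = np.stack([features[s:s + window_len] for s in starts])
    y = np.array([labels[s + window_len - 1] for s in starts])
    return X, y
```

With 10 flows, `window_len=4`, and `stride=2`, this yields 4 windows of shape `(4, F)` starting at flows 0, 2, 4, and 6.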

To enhance the expressiveness of the raw DDoS traffic features and provide richer representations for subsequent time-series modeling, we first expand the dimensionality of the feature vector at each time step before feeding the data into the Transformer. The feature vector $\mathbf{x}_t$ at each time step is mapped to dimension $D$ by a linear projection, giving the feature representation $\mathbf{h}_t$:

$$\mathbf{h}_t = \mathbf{W}_e \mathbf{x}_t + \mathbf{b}_e$$

where $\mathbf{W}_e \in \mathbb{R}^{D \times F}$ is the weight matrix and $\mathbf{b}_e \in \mathbb{R}^{D}$ is the bias term.

Next, so that the Transformer can recognize the order of time steps in the DDoS sequence, positional encoding is added to the feature vector of each time step. For each time step $t$, the positional-encoding vector $\mathbf{p}_t \in \mathbb{R}^{D}$ is added to the projected feature vector $\mathbf{h}_t$ to obtain the final input representation $\mathbf{z}_t$:

$$\mathbf{z}_t = \mathbf{h}_t + \mathbf{p}_t$$

This lets the Transformer perceive the position of each time step, which helps it interpret temporal dependencies correctly when processing DDoS traffic sequences and avoids losing order information.
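The projection $\mathbf{h}_t = \mathbf{W}_e \mathbf{x}_t + \mathbf{b}_e$ followed by $\mathbf{z}_t = \mathbf{h}_t + \mathbf{p}_t$ can be sketched for a whole window at once. The paper does not state which positional encoding it uses; this sketch assumes the sinusoidal encoding of Vaswani et al. (2017) with an even $D$, and the function names are hypothetical.

```python
import numpy as np


def sinusoidal_positional_encoding(T, D):
    """Sine/cosine positional encoding (assumed variant); D is assumed even."""
    pos = np.arange(T)[:, None]                 # (T, 1)
    i = np.arange(0, D, 2)[None, :]             # (1, D/2) even dimension indices
    angles = pos / np.power(10000.0, i / D)     # (T, D/2)
    pe = np.zeros((T, D))
    pe[:, 0::2] = np.sin(angles)
    pe[:, 1::2] = np.cos(angles)
    return pe


def embed_window(X, W_e, b_e):
    """X: (T, F), W_e: (D, F), b_e: (D,). Returns Z with rows z_t = W_e x_t + b_e + p_t."""
    H = X @ W_e.T + b_e                         # (T, D), one projected row per time step
    return H + sinusoidal_positional_encoding(*H.shape)
```

Because $\mathbf{p}_t$ depends only on the position $t$, two identical flows at different positions in the window receive different input vectors, which is what lets the attention layers distinguish their order.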

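All input sequences are then fed into the Transformer encoder, which consists of multiple stacked layers, each containing a multi-head self-attention mechanism and a feed-forward neural network. The multi-head self-attention mechanism computes the relationships between different time steps in the input sequence. For the input of each layer, linear transformations produce the query, key, and value matrices of each attention head $j = 1, \dots, h$:

$$\mathbf{Q}_j = \mathbf{Z}^{(l)} \mathbf{W}_j^{Q}, \quad \mathbf{K}_j = \mathbf{Z}^{(l)} \mathbf{W}_j^{K}, \quad \mathbf{V}_j = \mathbf{Z}^{(l)} \mathbf{W}_j^{V}$$

where $\mathbf{Z}^{(l)} \in \mathbb{R}^{T \times D}$ denotes the input features of layer $l$ (for the first layer, its rows are the vectors $\mathbf{z}_t$), and $\mathbf{W}_j^{Q}, \mathbf{W}_j^{K}, \mathbf{W}_j^{V} \in \mathbb{R}^{D \times d_k}$ are the weight matrices for the query, key, and value, respectively.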

Next, so that the Transformer can focus on the critical time steps of the DDoS traffic sequence and better capture attack timing patterns, the model computes the dot products between queries and keys, scales them, and normalizes them with a softmax to obtain the attention weight matrix:

$$\mathbf{A}_j = \operatorname{softmax}\!\left(\frac{\mathbf{Q}_j \mathbf{K}_j^{\top}}{\sqrt{d_k}}\right)$$

where $\sqrt{d_k}$ is the scaling factor. Without it, the magnitude of the dot products grows with the key dimension $d_k$ and pushes the softmax into saturated regions; scaling keeps the attention distribution from being dominated by uneven feature magnitudes in DDoS traffic and makes the computation of attention weights more stable. The attention weight matrix is then used to form a weighted average of the value matrix, producing the output of the current attention head, which captures key signature patterns in DDoS traffic:

$$\mathrm{head}_j = \mathbf{A}_j \mathbf{V}_j$$

Because network traffic in DDoS detection has complex temporal characteristics and patterns, the outputs of the $h$ attention heads are concatenated and then linearly transformed to capture this information comprehensively, giving the final attention output:

$$\mathrm{MultiHead}(\mathbf{Z}^{(l)}) = \mathrm{Concat}(\mathrm{head}_1, \dots, \mathrm{head}_h)\, \mathbf{W}^{O}$$

where $\mathbf{W}^{O} \in \mathbb{R}^{h d_k \times D}$ is the output projection matrix.

This mechanism lets the model analyze the dynamic features of DDoS attack traffic from multiple perspectives: each attention head can focus on different time steps or feature dimensions, which improves the recognition of diverse attack behaviors and the overall detection performance of the model.
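The attention computations above (per-head projections, scaled dot-product weights, weighted sum of values, concatenation, and output projection) can be sketched in NumPy for a single window. As an implementation assumption, the per-head matrices $\mathbf{W}_j^{Q}, \mathbf{W}_j^{K}, \mathbf{W}_j^{V}$ are packed as column slices of one $D \times D$ matrix each, so $d_k = D / h$; the function names are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)     # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def multi_head_self_attention(Z, W_q, W_k, W_v, W_o, num_heads):
    """Z: (T, D); W_q, W_k, W_v, W_o: (D, D). Returns (output (T, D), weights (h, T, T))."""
    T, D = Z.shape
    d_k = D // num_heads                        # assumption: D divisible by num_heads

    def split(M):
        # (T, D) -> (h, T, d_k): head j uses columns j*d_k .. (j+1)*d_k - 1
        return M.reshape(T, num_heads, d_k).transpose(1, 0, 2)

    Q, K, V = split(Z @ W_q), split(Z @ W_k), split(Z @ W_v)
    A = softmax(Q @ K.transpose(0, 2, 1) / np.sqrt(d_k))   # A_j, shape (h, T, T)
    heads = A @ V                                          # head_j = A_j V_j
    concat = heads.transpose(1, 0, 2).reshape(T, D)        # Concat(head_1, ..., head_h)
    return concat @ W_o, A
```

Each row of every $\mathbf{A}_j$ sums to 1, so row $t$ is a distribution over the time steps that time step $t$ attends to; with `num_heads=1` the function reduces to a single scaled dot-product attention followed by $\mathbf{W}^{O}$.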

The fused multi-head attention output is then passed to a feed-forward neural network (FFN) to strengthen the model’s expressive and nonlinear modeling capacity for DDoS attack features. The FFN consists of two fully connected layers with a ReLU activation between them:

$$\mathrm{FFN}(\mathbf{x}) = \mathrm{ReLU}(\mathbf{x}\mathbf{W}_1 + \mathbf{b}_1)\,\mathbf{W}_2 + \mathbf{b}_2$$

where $\mathbf{W}_1 \in \mathbb{R}^{D \times d_{ff}}$, $\mathbf{b}_1 \in \mathbb{R}^{d_{ff}}$, $\mathbf{W}_2 \in \mathbb{R}^{d_{ff} \times D}$, and $\mathbf{b}_2 \in \mathbb{R}^{D}$, with $d_{ff} > D$. The first fully connected layer maps the attention output to a higher-dimensional space, improving the model’s ability to express complex feature combinations, and the second maps it back to the original dimension to keep the network structure consistent. With this two-layer structure, the model further extracts the complex interaction patterns implied in DDoS traffic, improving its ability to identify abnormal behavior and its overall detection accuracy.

After multi-head attention and the feed-forward network, the encoded features of DDoS traffic may still be locally perturbed by the suddenness of the attack and the unstable packet structure, leading to uneven feature propagation. To ensure that the model learns these complex attack behaviors stably in a deep structure, this paper adds residual connections and layer normalization to each encoder layer. Adding each sublayer’s input to its output and normalizing the sum mitigates vanishing or exploding gradients on DDoS traffic data and ensures the stable propagation of higher-order features:

$$\tilde{\mathbf{Z}}^{(l)} = \mathrm{LayerNorm}\big(\mathbf{Z}^{(l)} + \mathrm{MultiHead}(\mathbf{Z}^{(l)})\big)$$

$$\mathbf{Z}^{(l+1)} = \mathrm{LayerNorm}\big(\tilde{\mathbf{Z}}^{(l)} + \mathrm{FFN}(\tilde{\mathbf{Z}}^{(l)})\big)$$
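The feed-forward sublayer and the residual-plus-normalization wrapper can be sketched in NumPy. Two simplifications are assumptions of this sketch: LayerNorm omits its learnable gain and bias, and the sublayer is passed in as a callable, so the same `add_and_norm` wraps both the attention and the FFN sublayers.

```python
import numpy as np


def layer_norm(x, eps=1e-5):
    """Normalize each row to zero mean and unit variance (learnable gain/bias omitted)."""
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def feed_forward(x, W1, b1, W2, b2):
    """FFN(x) = ReLU(x W1 + b1) W2 + b2: expand to d_ff, then project back to D."""
    return np.maximum(0.0, x @ W1 + b1) @ W2 + b2


def add_and_norm(x, sublayer):
    """Post-LN residual block: LayerNorm(x + Sublayer(x))."""
    return layer_norm(x + sublayer(x))
```

For example, `add_and_norm(Z, lambda z: feed_forward(z, W1, b1, W2, b2))` computes the second equation above for one encoder layer; the residual path lets gradients reach earlier layers without passing through the FFN weights.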

With the gradual stacking of Transformer encoder layers, the model continuously performs multilevel feature modeling and attack behavior representation of DDoS traffic sequences in both time and feature dimensions. The output of each time step not only contains local features (e.g., instantaneous traffic, packet length, PSH flags, etc.) at the current moment, but also incorporates temporal correlation information in the context, thus reflecting the evolution of the attack behavior more comprehensively.

To aggregate the dynamic features of the entire traffic segment into a unified basis for discrimination, the model average-pools the outputs of all time steps of the last encoder layer to obtain the global feature representation $\mathbf{g}$:

$$\mathbf{g} = \frac{1}{T} \sum_{t=1}^{T} \mathbf{o}_t$$

where $\mathbf{o}_t \in \mathbb{R}^{D}$ is the output of the last encoder layer at time step $t$. This vector summarizes the statistical and temporal characteristics of the DDoS traffic in the whole time window. It is fed into the fully connected layer, which maps it from the high-dimensional feature space to the attack-discrimination space and produces the prediction score $\mathbf{s}$:

$$\mathbf{s} = f_{\mathrm{FC}}(\mathbf{g}) \in \mathbb{R}^{2}$$

where $f_{\mathrm{FC}}$ denotes the fully connected classification head.

Finally, the score is normalized by the Softmax function to output the probability that the current traffic sequence belongs to a DDoS attack:

This probability value serves as the result of the model’s discrimination of the current traffic sequence, reflecting its likelihood of being recognized as a DDoS attack.
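For reference, the pooling, scoring, and normalization steps above can be written as below. The notation is ours and hypothetical. $\mathbf{h}^{(L)}_t$ is the output of the last ($L$-th) encoder layer at time step $t$, and $T$ is the number of time steps in the window. $\mathbf{W}_1, \mathbf{b}_1, \mathbf{W}_2, \mathbf{b}_2$ are the weights of the two fully connected layers, and $\phi$ is their activation, which the text does not specify.

```latex
\begin{aligned}
\mathbf{z} &= \frac{1}{T}\sum_{t=1}^{T} \mathbf{h}^{(L)}_t \\
\mathbf{s} &= \mathbf{W}_2\,\phi\!\left(\mathbf{W}_1 \mathbf{z} + \mathbf{b}_1\right) + \mathbf{b}_2 \\
p(y = c \mid \mathbf{X}) &= \frac{\exp(s_c)}{\exp(s_0) + \exp(s_1)}, \qquad c \in \{0, 1\}
\end{aligned}
```

With only two classes, the DDoS probability reduces to a logistic function of the score difference:

$$p(y = 1 \mid \mathbf{X}) = \frac{1}{1 + \exp\left(-(s_1 - s_0)\right)}.$$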

5. Results and Evaluation

To systematically evaluate the effectiveness of the proposed Transformer-based DDoS attack detection model, we conduct experiments on the CIC-DDoS2019 dataset. The model runs in an Ubuntu 24.04 virtual machine hosted on the VMware ESXi 8.0.2 virtualization platform. The configuration includes Intel Xeon Gold 5318H processors (70 cores in total) and 350 GB of RAM. The hardware information is shown in Table 13.

Table 13.

Configuration details.

In this environment, we train the proposed Transformer model using the PyTorch (version 2.6.0) framework. Each input feature vector has 77 dimensions, and a linear projection first embeds it into a 128-dimensional representation space. The embeddings are then fed into a two-layer Transformer encoder built on multi-head self-attention (MHSA) for temporal modeling. Average pooling aggregates the encoder outputs into a global representation, and a two-layer fully connected network performs the final binary classification.
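The architecture described above can be sketched with standard PyTorch modules. The following values are assumptions, not taken from the text: 8 attention heads, a feed-forward width of 256, a hidden width of 64 in the classification head, a learnable positional embedding, dropout of 0.1, and a window of 16 flow records in the usage example. The text also does not specify how flow records are grouped into sequences.

```python
import torch
from torch import nn


class DDoSTransformer(nn.Module):
    """Sketch: linear embedding (77 -> 128), 2-layer Transformer encoder,
    mean pooling over time steps, and a 2-layer fully connected head."""

    def __init__(self, n_features=77, d_model=128, n_heads=8, d_ff=256,
                 n_layers=2, hidden=64, n_classes=2, max_len=64, dropout=0.1):
        super().__init__()
        self.embed = nn.Linear(n_features, d_model)  # 77 -> 128
        # Learnable positional embedding (assumed; the text only mentions
        # a positional encoding strategy). Supports up to max_len steps.
        self.pos = nn.Parameter(torch.zeros(1, max_len, d_model))
        layer = nn.TransformerEncoderLayer(
            d_model=d_model, nhead=n_heads, dim_feedforward=d_ff,
            dropout=dropout, batch_first=True)  # post-norm by default
        self.encoder = nn.TransformerEncoder(layer, num_layers=n_layers)
        self.head = nn.Sequential(
            nn.Linear(d_model, hidden), nn.ReLU(), nn.Linear(hidden, n_classes))

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # x: (batch, seq_len, n_features) -> logits: (batch, n_classes)
        h = self.embed(x) + self.pos[:, : x.size(1)]
        h = self.encoder(h)   # (batch, seq_len, d_model)
        z = h.mean(dim=1)     # average pooling over time steps
        return self.head(z)   # raw scores; softmax is applied at inference


torch.manual_seed(0)
model = DDoSTransformer()
x = torch.randn(4, 16, 77)  # 4 hypothetical windows of 16 flow records each
probs = torch.softmax(model(x), dim=-1)
print(probs.shape)  # torch.Size([4, 2])
```

The forward pass returns raw scores rather than probabilities. During training, `nn.CrossEntropyLoss` applies the log-softmax internally, so softmax is only needed at inference time.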

During training, the dataset is split into 80% for training and 20% for testing. The model is trained with the cross-entropy loss function and the Adam optimizer. The initial learning rate is set to , and the batch size is 128. The maximum number of training epochs is 20. To improve training efficiency and mitigate overfitting, we introduce an early stopping mechanism. If the model shows no significant improvement in its performance metrics for three consecutive epochs, training stops automatically and the current parameters are kept as the final model. Training proceeds by mini-batch iteration. The training loss, validation loss, and validation accuracy are recorded after each epoch as a basis for the subsequent performance evaluation and analysis.

The pseudo-code of the training procedure is given in Algorithm 1.

Algorithm 1. Training procedure for the Transformer-based DDoS detection model.
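The training procedure can be sketched in PyTorch as below. The sketch is model-agnostic and assumes the following, none of which the text specifies. The held-out loss is the monitored "performance indicator". A "significant" improvement means a decrease larger than a hypothetical `min_delta`. The 20% held-out split also serves as the validation data. Because the text does not give the learning rate, `lr` is a required argument, and the value in the usage example is illustrative only.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset, random_split


def train(model, features, labels, lr, batch_size=128, max_epochs=20,
          patience=3, min_delta=1e-4, seed=0):
    """Sketch of the described procedure: 80/20 split, cross-entropy loss,
    Adam, mini-batch training, and early stopping with patience 3."""
    dataset = TensorDataset(features, labels)
    n_train = int(0.8 * len(dataset))
    gen = torch.Generator().manual_seed(seed)
    train_set, held_out = random_split(
        dataset, [n_train, len(dataset) - n_train], generator=gen)
    train_loader = DataLoader(train_set, batch_size=batch_size,
                              shuffle=True, generator=gen)
    eval_loader = DataLoader(held_out, batch_size=batch_size)

    criterion = nn.CrossEntropyLoss()
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)
    best_loss, stale_epochs, history = float("inf"), 0, []

    for epoch in range(1, max_epochs + 1):
        model.train()
        train_loss = 0.0
        for xb, yb in train_loader:  # mini-batch iteration
            optimizer.zero_grad()
            loss = criterion(model(xb), yb)
            loss.backward()
            optimizer.step()
            train_loss += loss.item() * len(yb)

        model.eval()
        val_loss, correct = 0.0, 0
        with torch.no_grad():
            for xb, yb in eval_loader:
                logits = model(xb)
                val_loss += criterion(logits, yb).item() * len(yb)
                correct += (logits.argmax(dim=1) == yb).sum().item()
        record = {"epoch": epoch,
                  "train_loss": train_loss / len(train_set),
                  "val_loss": val_loss / len(held_out),
                  "val_acc": correct / len(held_out)}
        history.append(record)

        # Early stopping: count epochs whose held-out loss does not improve by
        # more than min_delta; stop after `patience` such epochs in a row and
        # keep the current (not the best) weights, as the text describes.
        if best_loss - record["val_loss"] > min_delta:
            best_loss, stale_epochs = record["val_loss"], 0
        else:
            stale_epochs += 1
            if stale_epochs >= patience:
                break
    return history


# Usage on synthetic data (77 features, binary labels); lr is illustrative only.
torch.manual_seed(0)
x = torch.randn(500, 77)
y = (x[:, 0] > 0).long()
toy_model = nn.Sequential(nn.Linear(77, 32), nn.ReLU(), nn.Linear(32, 2))
history = train(toy_model, x, y, lr=1e-3)
print(len(history), history[-1])
```

In this sketch, the same held-out split drives early stopping and reports accuracy. Carving a separate validation split out of the training 80% would keep the test data untouched by model selection.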

To systematically evaluate the model’s classification performance on the DDoS attack identification task, we use four evaluation criteria: accuracy, precision, recall, and F1 score.

- Accuracy measures the overall correctness of the model in distinguishing DDoS attacks from normal traffic. It reflects the model’s ability to classify attack and non-attack traffic in a large-scale traffic environment: $\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$

- Precision measures the proportion of samples classified as DDoS attacks that are actually attacks, reflecting the model’s ability to limit false positives: $\mathrm{Precision} = \frac{TP}{TP + FP}$

- Recall measures the proportion of all actual DDoS attack traffic that the model successfully identifies. It reflects the model’s ability to limit missed detections and its coverage of attack behavior: $\mathrm{Recall} = \frac{TP}{TP + FN}$

- F1 score is the harmonic mean of precision and recall. It jointly evaluates the correctness and completeness of the model’s DDoS attack recognition: $F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$

In these metrics, the following counts are used:

- TP (true positives): the number of DDoS attack samples correctly identified by the model.
- TN (true negatives): the number of samples correctly identified as normal traffic.
- FP (false positives): the number of normal traffic samples misclassified as attacks.
- FN (false negatives): the number of attack samples the model fails to identify.

Taken together, the metrics computed from these counts reflect the model’s effectiveness in DDoS attack detection from complementary perspectives.
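These four formulas can be computed directly from the confusion-matrix counts. In the sketch below, the attack class is treated as positive. Returning 0.0 when a denominator is zero is a convention chosen here, not something the text specifies. The example counts are hypothetical.

```python
def binary_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Accuracy, precision, recall and F1 with the attack class as positive."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical counts, for illustration only.
metrics = binary_metrics(tp=45, tn=40, fp=5, fn=10)
print(metrics)
```

For these counts, accuracy is 85/100 = 0.85, precision is 45/50 = 0.90, recall is 45/55 ≈ 0.818, and F1 equals 2TP/(2TP + FP + FN) = 90/105 ≈ 0.857.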

Using the above training configuration and evaluation criteria, we test the proposed DDoS detection model on the CIC-DDoS2019 dataset and analyze its performance quantitatively. The experimental results are shown in Table 14. The model achieves very high detection accuracy and stability on the binary classification task. On the test samples, it reaches an overall accuracy of 99.99%, and precision, recall, and F1 score of 100% after rounding. This indicates near-complete coverage of attack traffic with very few false positives. At the category level, the model achieves 97.23% precision, 99.64% recall, and a 98.42% F1 score for normal traffic (category 0). For DDoS attack traffic (category 1), it achieves 100% precision and recall after rounding, capturing virtually all attack samples.
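Per-category figures such as those above come from a 2×2 confusion matrix. When the attack class dominates, averaging across categories can hide weaker normal-class scores. The text does not state which averaging produced the overall figures. The sketch below therefore computes per-class scores together with both macro (unweighted) and support-weighted averages. The matrices are hypothetical and are not the paper’s counts.

```python
def per_class_report(cm):
    """Per-class precision/recall/F1 plus macro and support-weighted averages.

    cm[i][j] is the number of samples whose true class is i and predicted class is j.
    """
    n = len(cm)
    supports = [sum(row) for row in cm]
    report = {}
    for k in range(n):
        predicted_k = sum(cm[i][k] for i in range(n))
        precision = cm[k][k] / predicted_k if predicted_k else 0.0
        recall = cm[k][k] / supports[k] if supports[k] else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        report[k] = {"precision": precision, "recall": recall,
                     "f1": f1, "support": supports[k]}
    total = sum(supports)
    for avg in ("macro", "weighted"):
        weights = ([1 / n] * n if avg == "macro"
                   else [s / total for s in supports])
        report[avg] = {m: sum(w * report[k][m] for k, w in enumerate(weights))
                       for m in ("precision", "recall", "f1")}
    return report


# Hypothetical, heavily imbalanced counts: class 0 = normal, class 1 = attack.
imbalanced = [[990, 10], [2, 99000]]
rep = per_class_report(imbalanced)
print(round(rep[0]["precision"], 4), round(rep["weighted"]["precision"], 4))
```

With a large attack majority, the weighted averages land much closer to the class-1 scores than to the class-0 scores. Reporting per-class values alongside any average therefore makes the normal-traffic performance visible.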

Table 14.

Classification performance of the Transformer-based model on the CIC-DDoS2019 test set.

Comparison with the Naive Bayes Model:

To further verify the overall advantages of the proposed Transformer-based DDoS detection model, we select the Naïve Bayes (NB) model as a baseline. We compare the two models along several dimensions: classification accuracy, stability, and key performance metrics. Owing to its simple structure and efficient inference, Naive Bayes is widely used in traditional network traffic classification, and it represents the basic capability of classical probabilistic modeling for DDoS detection.
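The text does not state which Naive Bayes variant was used. Gaussian Naive Bayes is a common choice for continuous flow statistics, and scikit-learn provides it as `sklearn.naive_bayes.GaussianNB`. The minimal NumPy sketch below shows where the feature-independence assumption enters. The class score sums one-dimensional Gaussian log-likelihoods over features, so it ignores any coupling between them. The variance-smoothing rule mirrors scikit-learn’s default approach, and the toy data is synthetic.

```python
import numpy as np


class GaussianNaiveBayes:
    """Gaussian NB: features are assumed conditionally independent given the
    class, each following a one-dimensional normal distribution."""

    def __init__(self, var_smoothing: float = 1e-9):
        self.var_smoothing = var_smoothing

    def fit(self, X: np.ndarray, y: np.ndarray) -> "GaussianNaiveBayes":
        self.classes_ = np.unique(y)
        eps = self.var_smoothing * X.var(axis=0).max()  # avoids zero variances
        self.means_ = np.array([X[y == c].mean(axis=0) for c in self.classes_])
        self.vars_ = np.array([X[y == c].var(axis=0) + eps for c in self.classes_])
        self.log_priors_ = np.log([np.mean(y == c) for c in self.classes_])
        return self

    def log_joint(self, X: np.ndarray) -> np.ndarray:
        # log p(c) + sum_j log N(x_j | mu_cj, var_cj); summing over features j
        # is exactly where the independence assumption is applied.
        diff = X[:, None, :] - self.means_[None, :, :]            # (n, C, d)
        log_lik = -0.5 * (np.log(2 * np.pi * self.vars_)[None]
                          + diff ** 2 / self.vars_[None]).sum(axis=2)
        return log_lik + self.log_priors_                         # (n, C)

    def predict(self, X: np.ndarray) -> np.ndarray:
        return self.classes_[np.argmax(self.log_joint(X), axis=1)]


# Synthetic two-class data with 5 features per sample.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 1.0, (200, 5)), rng.normal(3.0, 1.0, (200, 5))])
y = np.array([0] * 200 + [1] * 200)
nb = GaussianNaiveBayes().fit(X, y)
accuracy = (nb.predict(X) == y).mean()
print(accuracy)
```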

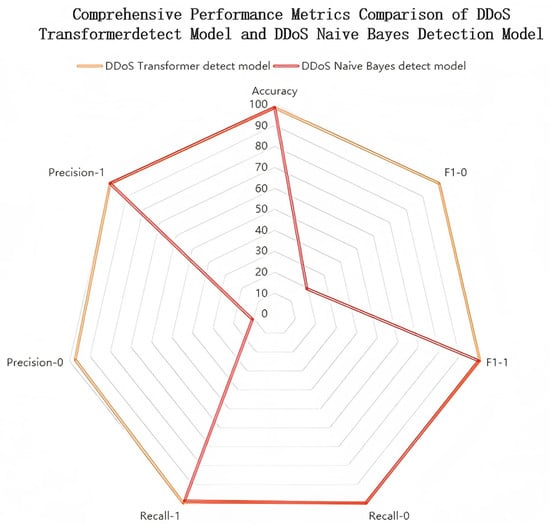

We first construct a radar chart covering classification accuracy, recognition coverage, and stability of results. The chart depicts each model’s per-category detection performance from several perspectives, and we use it to compare the proposed Transformer model with the Naive Bayes model. The comparison is shown in Figure 3, which reveals clear differences between the two models across the metrics. The Transformer model’s profile is evenly balanced, indicating consistent classification. In the attack-traffic dimensions in particular, it forms a complete, closed boundary, highlighting its very high reliability in critical attack-identification scenarios. In contrast, the Naive Bayes profile is clearly asymmetric. It still performs well on the attack category (category 1), but its detection of the normal category (category 0) is markedly weaker, especially in accuracy and F1 score. This reveals a serious false-alarm problem and an imbalance in per-category recognition.

Figure 3.

Radar chart comparing the combined performance metrics of the DDoS Transformer detection model and the DDoS Naive Bayes detection model.

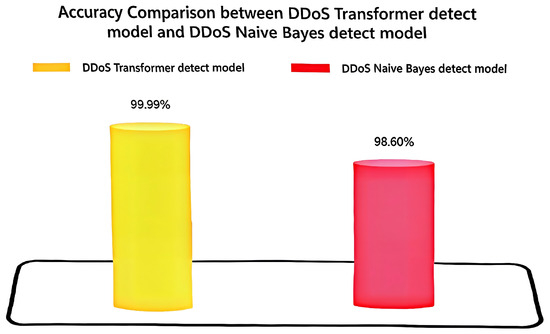

Comparison of Accuracy Metrics:

Building on the radar-chart analysis of multidimensional performance, we further extract the overall classification accuracy of the two models on the complete test set. We plot these accuracies as bar charts to compare the models’ overall discriminative ability in large-scale traffic identification more directly. As shown in Figure 4, the Transformer model achieves 99.99% accuracy on the test samples, close to the theoretical upper limit. This indicates strong generalization and anomaly-identification ability on highly complex, dynamically changing network traffic. Under the same data conditions, the Naive Bayes model reaches 98.60% accuracy. Although this is a strong result, it still falls short of the Transformer. The gap suggests that Naive Bayes’s strong assumption of conditional feature independence is poorly suited to the highly coupled, multidimensional features of DDoS attack traffic.

Figure 4.

Comparison of the accuracy of the DDoS Transformer detection model and the DDoS Naive Bayes detection model.

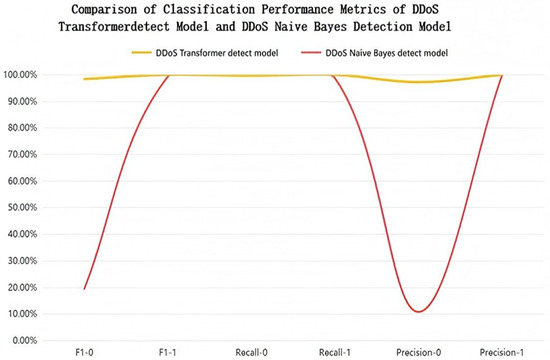

Comparison of Classification Performance Metrics:

After the overall accuracy assessment, we further analyze how the Transformer and Naive Bayes models differ in per-category discrimination on the DDoS attack detection task. We focus on how well each model distinguishes normal traffic from attack traffic. To this end, three key classification metrics (precision, recall, and F1 score) are computed separately for each of the two traffic categories. The comparison is shown in Figure 5.

Figure 5.

Comparison of the classification performance metrics of the DDoS Transformer detection model and the DDoS Naive Bayes detection model.

As the figure shows, the Transformer model performs consistently on both normal and attack traffic. Its precision, recall, and F1 score are closely aligned, and its curves are nearly flat. For attack traffic (category 1), all three metrics reach 100% after rounding, with only a negligible number of missed detections or false alarms. On normal traffic (category 0), the metrics also remain above 97%. Together, these results indicate that the model strikes a good balance between anomaly detection and false-alarm control.

In contrast, the Naive Bayes model approaches the Transformer in recall on attack traffic, but its precision and F1 score on normal traffic drop markedly, producing a clear downward trend in its curves. This indicates that although the model can capture attack behavior, it struggles to recognize normal traffic and tends to misclassify legitimate traffic as abnormal, resulting in a higher false-alarm rate.

Overall, the visual comparison shows that the Transformer model outperforms Naive Bayes in overall accuracy, balance of per-category recognition, and false-alarm control. Naive Bayes has some ability to detect attack traffic, but it produces more false alarms on normal traffic, and its classification performance fluctuates noticeably. The Transformer model benefits from its global modeling of high-dimensional features and its stable temporal representation. As a result, it yields clearer classification boundaries and a more consistent distribution of metrics, reflecting stronger feature adaptation and more stable discrimination.

6. Discussion

In this paper, we propose a DDoS attack detection model based on the Transformer architecture. It addresses the complexity of modern DDoS attacks in both traffic dimensions and behavioral patterns. By structurally reconstructing and semantically enhancing the CIC-DDoS2019 dataset, we create a sample collection with greater temporal continuity that is better suited to the task. This enhanced dataset supports the dynamic modeling and accurate identification of attack behaviors with deep learning models. Building on it, we design and implement a temporal modeling module based on multi-head attention and a positional encoding strategy. This module enables the model to jointly capture temporal dependencies and the evolving patterns of network attacks. The experimental results show that the proposed model achieves excellent accuracy, precision, recall, and F1 score, clearly outperforming the traditional machine learning baseline (Naive Bayes). The model also shows good generalization ability and practical potential.

However, there are several potential future directions to extend this research. First, to address the computational complexity of the Transformer in large-scale traffic processing, structural optimization strategies, such as sparse attention and segmented-window attention, can be introduced to enhance computational efficiency. Second, combining Graph Neural Networks (GNNs) or cross-traffic interaction modeling mechanisms can further improve the model’s ability to capture potential correlations among multi-source attack behaviors. Third, the introduction of self-supervised learning or few-shot learning mechanisms can enhance the model’s transferability and robustness in low-label environments. Furthermore, constructing multimodal and highly dynamic DDoS attack datasets from the data construction perspective would provide more solid experimental support for evaluating model generalizability and scalability.

On the other hand, future work must also address some ethical considerations. As the proposed model processes network traffic data, privacy concerns related to sensitive user data must be carefully managed. Ensuring that traffic data used for training does not contain personally identifiable information (PII) and that ethical data handling practices are implemented will be crucial for deploying this model in real-world environments. In addition, maintaining transparency about the model’s decision-making process and mitigating any potential biases in the detection process are also important areas that deserve attention.

In summary, the Transformer-based DDoS attack detection method proposed in this paper has achieved promising results in modeling temporal dependencies and behavioral patterns, thus improving detection accuracy and robustness. This work lays a solid theoretical foundation and provides a feasible path for future research in intelligent network attack detection mechanisms, while also highlighting the need for future considerations of computational efficiency and ethical data usage.

Author Contributions

Conceptualization, Y.L.; methodology, Y.L.; software, Y.L.; validation, Y.L., X.D. and A.Y.; formal analysis, X.D. and A.Y.; investigation, Y.L. and A.Y.; resources, Y.L.; data curation, X.D.; writing—original draft preparation, Y.L., X.D. and A.Y.; writing—review and editing, X.D. and A.Y.; visualization, X.D.; supervision, J.G.; project administration, Y.L. and J.G.; funding acquisition, A.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research is supported by the National Key R&D Program of China (No. 2023YFE0113200), the National Natural Science Foundation of China (No. 72203029), and the Youth Foundation Project of Humanities and Social Sciences Research of the Ministry of Education (No. 22YJCZH210). The APC was funded by the National Key R&D Program of China (No. 2023YFE0113200).

Data Availability Statement

Data are available upon request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Wang, Y.; Wang, X.; Ni, Q.; Yu, W.; Huang, M. BCDM: An Early-Stage DDoS Incident Monitoring Mechanism Based on Binary-CNN in IPv6 Network. IEEE Trans. Netw. Serv. Manag. 2024, 21, 5873–5887. [Google Scholar] [CrossRef]

- Senarak, C. Port cyberattacks from 2011 to 2023: A literature review and discussion of selected cases. Marit. Econ. Logist. 2024, 26, 105–130. [Google Scholar] [CrossRef]

- Tsobdjou, L.D.; Pierre, S.; Quintero, A. An online entropy-based DDoS flooding attack detection system with dynamic threshold. IEEE Trans. Netw. Serv. Manag. 2022, 19, 1679–1689. [Google Scholar] [CrossRef]

- Hossain, M.A.; Islam, M.S. Enhancing DDoS attack detection with hybrid feature selection and ensemble-based classifier: A promising solution for robust cybersecurity. Meas. Sens. 2024, 32, 101037. [Google Scholar] [CrossRef]

- Abiramasundari, S.; Ramaswamy, V. Distributed denial-of-service (DDOS) attack detection using supervised machine learning algorithms. Sci. Rep. 2025, 15, 13098. [Google Scholar] [CrossRef] [PubMed]

- Yu, S.; Zhang, J.; Liu, J.; Zhang, X.; Li, Y.; Xu, T. A cooperative DDoS attack detection scheme based on entropy and ensemble learning in SDN. EURASIP J. Wirel. Commun. Netw. 2021, 2021, 90. [Google Scholar] [CrossRef]

- Bouke, M.A.; Abdullah, A.; ALshatebi, S.H.; Abdullah, M.T.; El Atigh, H. An intelligent DDoS attack detection tree-based model using Gini index feature selection method. Microprocess. Microsystems 2023, 98, 104823. [Google Scholar] [CrossRef]

- Ivanova, V.; Tashev, T.; Draganov, I. DDoS attacks classification using SVM. WSEAS Trans. Inf. Sci. Appl. 2022, 19, 1–11. [Google Scholar] [CrossRef]

- Farhana, N.; Firdaus, A.; Darmawan, M.F.; Ab Razak, M.F. Evaluation of Boruta algorithm in DDoS detection. Egypt. Inform. J. 2023, 24, 27–42. [Google Scholar] [CrossRef]

- Hassan, A.I.; El Reheem, E.A.; Guirguis, S.K. An entropy and machine learning based approach for DDoS attacks detection in software defined networks. Sci. Rep. 2024, 14, 18159. [Google Scholar] [CrossRef]

- Mittal, M.; Kumar, K.; Behal, S. Deep learning approaches for detecting DDoS attacks: A systematic review. Soft Comput. 2023, 27, 13039–13075. [Google Scholar] [CrossRef]

- Wang, K.; Fu, Y.; Duan, X.; Liu, T. Detection and mitigation of DDoS attacks based on multi-dimensional characteristics in SDN. Sci. Rep. 2024, 14, 16421. [Google Scholar] [CrossRef]

- Yu, Y.; Zeng, X.; Xue, X.; Ma, J. LSTM-based intrusion detection system for VANETs: A time series classification approach to false message detection. IEEE Trans. Intell. Transp. Syst. 2022, 23, 23906–23918. [Google Scholar] [CrossRef]

- ALMahadin, G.; Aoudni, Y.; Shabaz, M.; Agrawal, A.V.; Yasmin, G.; Alomari, E.S.; Al-Khafaji, H.M.R.; Dansana, D.; Maaliw, R.R. VANET network traffic anomaly detection using GRU-based deep learning model. IEEE Trans. Consum. Electron. 2023, 70, 4548–4555. [Google Scholar] [CrossRef]

- Xi, C.; Wang, H.; Wang, X. A novel multi-scale network intrusion detection model with transformer. Sci. Rep. 2024, 14, 23239. [Google Scholar] [CrossRef] [PubMed]

- Wang, B.; Jiang, Y.; Liao, Y.; Li, Z. DDoS-MSCT: A DDoS Attack Detection Method Based on Multiscale Convolution and Transformer. IET Inf. Secur. 2024, 2024, 1056705. [Google Scholar] [CrossRef]

- Ali, M.; Saleem, Y.; Hina, S.; Shah, G.A. DDoSViT: IoT DDoS attack detection for fortifying firmware Over-The-Air (OTA) updates using vision transformer. Internet Things 2025, 30, 101527. [Google Scholar] [CrossRef]

- Barut, O.; Luo, Y.; Li, P.; Zhang, T. R1dit: Privacy-preserving malware traffic classification with attention-based neural networks. IEEE Trans. Netw. Serv. Manag. 2022, 20, 2071–2085. [Google Scholar] [CrossRef]

- Harshdeep, K.; Sumalatha, K.; Mathur, R. DeepTransIDS: Transformer-Based Deep learning Model for Detecting DDoS Attacks on 5G NIDD. Results Eng. 2025, 26, 104826. [Google Scholar] [CrossRef]

- Devarakonda, A.; Sharma, N.; Saha, P.; Ramya, S. Network intrusion detection: A comparative study of four classifiers using the NSL-KDD and KDD’99 datasets. In Proceedings of the Journal of Physics: Conference Series; IOP Publishing: Bristol, UK, 2022; Volume 2161, p. 012043. [Google Scholar]

- Aktar, S.; Nur, A.Y. Towards DDoS attack detection using deep learning approach. Comput. Secur. 2023, 129, 103251. [Google Scholar] [CrossRef]

- Akgun, D.; Hizal, S.; Cavusoglu, U. A new DDoS attacks intrusion detection model based on deep learning for cybersecurity. Comput. Secur. 2022, 118, 102748. [Google Scholar] [CrossRef]

- Becerra-Suarez, F.L.; Fernández-Roman, I.; Forero, M.G. Improvement of distributed denial of service attack detection through machine learning and data processing. Mathematics 2024, 12, 1294. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).