Abstract

Smart cities are an emerging technology that is receiving new ethical attention due to recent advancements in artificial intelligence. This paper provides an overview of smart city ethics while simultaneously performing novel theorization about the definition of smart cities and the complicated relationship between (smart) cities, ethics, and politics. We respond to these ethical issues by providing an innovative representation of the agent-deed-consequence (ADC) model in symbolic terms through deontic logic. The ADC model operationalizes human moral intuitions underpinning virtue ethics, deontology, and utilitarianism. With the ADC model made symbolically representable, human moral intuitions can be built into the algorithms that govern autonomous vehicles, social robots in healthcare settings, and smart city projects. Once the paper has introduced the ADC model and its symbolic representation through deontic logic, it demonstrates the ADC model’s promise for algorithmic ethical decision-making in four dimensions of smart city ethics, using examples relating to public safety and waste management. We particularly emphasize ADC-enhanced ethical decision-making in (economic and social) sustainability by advancing an understanding of smart cities and human-AI teams (HAIT) as group agents. The ADC model has significant merit in algorithmic ethical decision-making, especially through its elucidation in deontic logic. Algorithmic ethical decision-making, if structured by the ADC model, successfully addresses a significant portion of the perennial questions in smart city ethics, and smart cities built with the ADC model may in fact be a significant step toward resolving important social dilemmas of our time.

1. Introduction: Smart Cities and Smart City Ethics

Imagine you are walking down a city sidewalk when suddenly a SWAT team descends upon you. The SWAT team was deployed because your behavior has triggered the sensors embedded within every aspect of your urban life. If you have just committed a crime, you may be dismayed at the prospect of jail time. If you were acting in accordance with the law, you would want an explanation for why you have been detained. Smart cities, once a vision of the future, are now actual phenomena, and SWAT teams are sometimes deployed in part due to smart city technologies, such as ShotSpotter. When decisions about public safety are made by automated systems, city denizens will want those algorithmic decisions about ethics to both reflect human moral intuitions and be explainable. As of yet, there is no approach to algorithmic decision-making in smart cities that behaves as humans would. There have been attempts at algorithmic ethical decision-making in other domains, perhaps most notably with autonomous vehicles (AVs). These attempts have predominantly been “unitheory” (focusing on one ethical theory) approaches, and these, as well as other attempts at pluralist algorithmic ethical decision-making, have been shown to be insufficient (see Section 3). Our approach, using the agent-deed-consequence (ADC) model, is a pluralist approach with proof of concept already shown through empirical work in the context of AVs. In the context of smart cities, algorithmic ethical decision-making is most frequently critiqued for issues having to do with prejudicial bias, transparency, explainability, and the black box problem [1,2,3]. As we will show, the ADC model’s ability to symbolically represent human moral intuitions, gathered from user populations, and then develop algorithms to govern new technologies in accordance with those moral intuitions, is a significant step toward resolving these ethical issues. 
Here we move beyond the pre-existing literature about applying the ADC model to algorithmic ethical decision-making by representing the model in deontic logic, advancing further toward actually building emerging technologies with the ADC model. We have selected the ADC model for application in smart cities over other normative or applied ethical models due to the ADC model’s proof of concept in other domains and its empirical validation through prior research.

The smart city is an ambiguous concept that attempts to capture a wide variety of real-world technological and administrative practices that have emerged in typically urban, local-level governance since the start of the 21st century [4]. Clarity on the ethical considerations of smart cities in some way depends on clarity about what exactly it is one means when one refers to the smart city [5] (pp. 1186–1187).

Engineered approaches to the technological enhancement of cities have long existed (e.g., Roman aqueducts); in order for the label of smart city to retain some difference from other cities, we restrict its application to those cities whose enhancements are enabled by information and communication technologies (ICTs). Even scholars who seek to problematize both the label “smart” and the emphasis on ICTs in defining what “smart” is begin from the assumption that the smart city as a concept is intimately connected to the Internet and other attendant ICTs. Hollands is one of the most clear-eyed critics of the smart city, yet even in that account the first of six characteristics of the smart city reads as follows: “utilization of networked infrastructure to improve economic and political efficiency and enable social, cultural, and urban development” [4] (p. 308). We do not seek to minimize critiques of smart city definitions that overemphasize the importance of ICTs in making a city “smart.” Indeed, we concur with Caragliu et al., who write in their survey of smart cities in Europe, “the availability and quality of the ICT infrastructures is not the only definition of a smart or intelligent city” [6] (p. 67). In fact, the authors suggest that this focus on communication infrastructure is a “bias” that “reflects the time period when the smart city label gained interest… The stress on the Internet as ‘the’ smart city signifier no longer suffices” [6] (pp. 69–70).

To lessen the emphasis on ICTs in the definition of a smart city, one might simply characterize the difference between “city” and “smart city” as similar to the difference between “phone” and “smartphone,” where the necessary difference is computing ability (especially with regard to Internet functionality), but additional differences exist through broader technological advancements that have occurred since the start of the 21st century (including, but not limited to, global positioning systems, motion sensing technology, the ubiquity of cameras, the ability to download external applications, etc.). A similar distinction based on necessary difference can be drawn between any piece of technology to which “smart” is now affixed (e.g., televisions, refrigerators) and their predecessors.

Other commentators seek to problematize the application of “smart” to technological advancements, arguing that such a rhetorical move serves as a Trojan Horse for techno-solutionism and techno-optimism [4,6,7,8,9]. This criticism largely parallels recent scholars who question the “intelligence” of artificial intelligence [10]. These criticisms often highlight the centrality of humans for successful deployment of emerging technologies, with Sanfilippo and Frischmann arguing that “it is not the city that is smart. Rather, smartness is better understood and evaluated in terms of affordances supposedly smart tools provide people” [11] (p. 296). One particularly appealing definition of the smart city can be found in [6], where the authors write that “we believe a city to be smart when investments in human and social capital and traditional (transport) and modern (ICT) communication infrastructure fuel sustainable economic growth and a high quality of life, with a wise management of natural resources, through participatory governance” (p. 70).

Although this semantic debate is crucial for making clear the stakes of smart cities and other emerging technologies in terms of their impact on humanity, there can be no denying that smart cities, as an umbrella concept covering many disparate phenomena, utilize technologies developed since the turn of the 21st century. As such, ethical questions regarding the deployment of smart cities and smart city technologies often map directly onto ethical questions in the context of emerging technologies more generally [12,13,14,15,16,17,18,19]. Questions regarding prejudice, transparency, privacy, and autonomy apply in smart city ethics just as they do in data ethics and the ethics of artificial intelligence. Ziosi et al. provide a substantial review of the ethical considerations surrounding smart cities. The authors find four dimensions that are consistent within the literature on smart city ethics. These dimensions are “network infrastructure,” “post-political governance,” “social inclusion,” and “sustainability” [5].

In terms of network infrastructure, Ziosi et al. focus on concerns having to do with “control, surveillance, data privacy, and security” [5] (p. 1188). In other words, this dimension of smart city ethics largely parallels the perennial concerns of data ethics in the era of “Big Tech.” By post-political governance, Ziosi et al. have in mind the new blend of “market mechanisms,” “privatization,” and “technology” with traditional, public sector governance in urban management [5] (p. 1191). In the authors’ view, the obfuscation of the public/private divide is heightened in the post-political smart city, although one might turn to the pre-existing literature on urban polycentric governance for further clarity on this concern [20]. Social inclusion refers to ethical considerations surrounding “citizen participation and inclusion” and “inequality and discrimination” in smart city development, deployment, and access to ostensible smart city benefits [5] (p. 1192). Finally, sustainability attempts to capture the deceptively intuitively clear ethical issues having to do with environmentally conscious urbanism. The authors are careful to indicate that sustainability must include other realms, such as the social and the economic, but they proceed to focus their attention on the environment as “an element to protect and as a strategic component for the future” [5] (p. 1193).

Again, these themes can largely be mapped on to ethical considerations for other emerging technologies. Privacy, surveillance, and data rights have been at the forefront of the fields of data ethics, computer ethics, and technology ethics for more than a decade. Concerns regarding post-political governance could be traced to the origins of neoliberalism, as networked technologies have enabled private actors to have more influence in the lives of citizens, particularly with the rise of “Big Tech.” Social inclusion, while perhaps newer to the scene, has been an item of interest in emerging technology ethics since the Arab Spring, when wide swathes of people used the relatively new (at the time) technology of social media to organize large-scale public, political demonstrations. Environmental sustainability has seen a recent uptick in technology ethics literature thanks to the 2020s boom of artificial intelligence, where some have raised concerns about the resources needed to build and power artificial intelligence applications in the context of the ever-worsening climate change crisis.

However, ethical questions in smart cities differ slightly from these domains because smart city ethics are, from the start, always political, due to the political valence of the city as a government entity and location for public discourse and economic functions. By political, we mean that there are no straightforward solutions to the problems posed by or supposedly solved for by smart cities. Instead, a wide range of stakeholders must converge upon a decision according to some (implicitly or explicitly) agreed upon decision-making strategy [21,22,23,24,25,26,27]. In this decision-making process, conflicts between various stakeholders are either resolved or not, subject to compromise, differential power relations, or the discovery of shared interests. Echoing the work of Rittel and Webber, we ought to consider the planning issues (and their associated ethical dimensions) of smart cities as “wicked problems” where “as distinguished from problems in the natural sciences, which are definable and separable and may have solutions that are findable, the problems of governmental planning—especially those of social or policy planning—are ill-defined; and they rely upon elusive political judgment for resolution” [28] (p. 160). Even the most straightforward problem in a (smart) city is accompanied by deeply complex interactional dynamics between governmental entities, corporations, universities, citizens, and so on that must somehow be adjudicated [29].

Consider the decision by a city to invest financial resources to add smart technologies to its waste removal facilities. Even taking an imaginative leap of faith in this scenario, where we can picture that this decision by the city simply and substantially benefits the entire city’s functioning and populace, by making waste removal more efficient, economical, and environmentally friendly, the city’s administrators and populace must still reckon with significant challenges in terms of externalities and spillover effects. While in a toy example we can imagine that smart city waste removal facilities really do unproblematically benefit the whole of the city, that benefit will likely not reach all stakeholders equally, and similarly the burdens associated with the improvement will also be distributed in uneven ways. Some stakeholders may be displeased by heightened investment in pre-existing waste facilities that are close to their homes; they might instead desire that new facilities be built elsewhere in town. Other stakeholders may resent the impacts of a graduated income tax, such that they pay “more” than others for these improvements that really do (in our simplistic thought experiment) benefit the whole. Even in the impossibly simple case presented here, one begins to see challenges that come with smart city ethics as compared to the ethics of other emerging technologies. Cities are fundamentally an exercise in living together despite the fact that cities hold the most diversity of background and belief in human social life. Although all other emerging technologies are irreducibly social phenomena as well, the scale and complexity of urban social life make smart city ethics, arguably, a qualitatively different arena for ethical theory and application. There is already some success in using emerging technologies to support ethical decision-making in smart cities, even with our preliminary example of complex land-use decisions. 
This is positively indicated by He et al. [30], although that project, which uses the combination of a visual model and a language model to enable urban function identification (e.g., building type and land-use categorizations), deals more with ethics at the macro-level (economic policy and planning), whereas our intervention is in algorithmic ethical decision-making in smart cities at the micro-level (daily interactions).

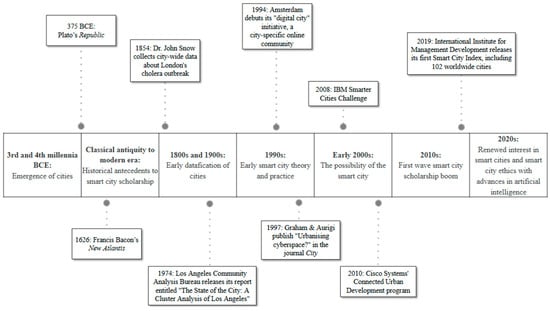

The potentially privileged space of the city in terms of ethics and politics may actually militate against the idea that smart city ethics is a new object of study. Although we have just presented an argument for considering smart city ethics as fundamentally different than the ethics of other emerging technologies, one might reply by suggesting that, while the preceding considerations are true, the ethical quandaries related to smart cities are not fundamentally different from the ethical quandaries that have occupied cities since at least Plato’s Republic (for a pre-history and history of smart cities, see Figure 1). As Ziosi et al. write, “a review on the ethical aspects of smart cities should consider that some of the challenges it identifies might simply be longstanding issues relating to urban development, rather than novel problems” [5] (p. 1188). In other words, it may be that ethical quandaries in smart cities have more to do with ethical quandaries in cities simpliciter and the presence of emerging technologies is merely a new development on ancient problems of human social life.

Figure 1. Pre-history and history of smart cities.

Regardless of one’s position on the novelty of ethical challenges in smart cities, if decisions about those ethical challenges will be algorithmically supported, smart city citizens will want those decisions to align with their moral intuitions. In pursuit of this end, in Section 2 we introduce the extensive literature on the agent-deed-consequence (ADC) model and its applications in emerging technologies to the realm of smart cities for the first time. First, we describe the ADC model, which considers major ethical theories simultaneously and makes those theories symbolically representable, thus rendering an overall judgment of a situation that combines that situation’s major elements. We show how the ADC model would characterize police response to a terrorist attack using the tools of deontic logic, which is a logical system concerned with ethical obligations, permissions, and prohibitions. This sets the stage for future work regarding the ADC model’s relation to deontic logic, and it also demonstrates the potential for the ADC model to be built directly into the algorithms that control emerging technologies. In Section 3, we describe pre-existing efforts to apply the ADC model to emerging technologies, including autonomous vehicles (AVs), social robots in healthcare settings, and human-AI teaming (HAIT) more generally. Not only does this section demonstrate the fecundity of the ADC model in real-world socio-technical issues, but it also begins an argument in favor of human control of and responsibility for new technologies. In Section 4, we consider how ADC-driven algorithmic decision-making would resolve frequently discussed ethical challenges in smart cities. We use the example of a car-based terrorist attack to show how the ADC would respond to ethical challenges associated with network infrastructure. 
In the category of post-political governance, we argue that the ADC mitigates the possibility of smart city initiatives that would exclude the city’s populace and simultaneously reduces partisan squabbling. In ethical challenges associated with social inclusion, the ADC makes transparent those elements of algorithmic decision-making that are problematic when represented as objective. Our considerations about how the ADC model can respond to smart city challenges provide justification for human-centered approaches to emerging technologies, and this claim is supported by prior work on the ADC model in the context of HAIT. In Section 5, we apply the findings of the ADC model in HAIT to smart cities, suggesting that smart cities should be built as human-centered human-AI teams. This conception of smart cities would enable those smart cities to function as group agents, where the city itself has capacities irreducible to the human and non-human parts that constitute it. When understanding smart cities as group agents, we gain clarity about ethical issues such as responsibility, especially in terms of deontic logic. We close the final section by considering waste management technologies in smart cities, demonstrating the ADC model’s salience in sustainability issues and in bolstering social collaboration toward complex challenges.

2. The Agent-Deed-Consequence (ADC) Model

This paper applies Dubljević and Racine’s ADC model to smart city ethics, providing empirical justification for human-centered smart city development and deployment. The ADC model operationalizes the moral intuitions underpinning virtue ethics, deontology, and utilitarianism. Rather than assuming the primacy of ethical approaches that focus on the agent (e.g., virtue ethics), the deed (e.g., deontology), or the consequence (e.g., utilitarianism), the ADC model explains moral decision-making by considering all three types of ethical approach simultaneously. Each of the components of human moral judgment, which underlie the traditional ethical theories that largely focus on one element in relative isolation from the others, can be assigned a positive or negative intuitive evaluation before the three components are then combined into an overall positive or negative judgment of the moral acceptability of an action [31].

For each sub-component of morality, the ADC model provides numerical representations for aspects that are meritorious or supererogatory (+2), obligatory (+1), indifferent (0), potentially excusable (−1), and forbidden or reprehensible (−2). These numerical representations can be applied to each part of the ADC model, meaning that ethical situations can be assessed according to all three ethical components (A for agent, D for deed, and C for consequence) as well as numerical representations of deontic relations (ranging from +2 to −2), resulting in representations for any given act using a combination of alphanumeric characters.

Specific contextual aspects are also taken into consideration. For example, if a police officer is attempting to prevent a terrorist attack, this is evaluated as obligatory (+1), whereas this would be supererogatory (+2) in the case of a civilian [32,33]. Armed with this insight, many different morally salient situations can be numerically represented as a way of clarifying the flexibility and stability of human moral intuition. By way of illustration, if a decorated police officer (corresponding to low-stakes virtue, or A + 1) tries to apprehend a shoplifter (corresponding to low-stakes duty, or D + 1) and causes the death of an innocent bystander (corresponding to high-stakes reprehensible outcome, or C − 2), this could be seen as morally bad. Symbolically, this can be represented as ¬⊙{[p cstit S]/ADC}, expressing that there does not exist a conditional obligation to bring about this situation, given the values of A, D, and C [34,35]. A related, stronger claim, ⊙¬{[p cstit S]/ADC}, expressing that there is a conditional obligation not to bring about this situation, given the values of A, D, and C, is not settled because the unweighted morality (M) of the situation is not sufficiently negative (here, 1 + 1 − 2 = 0). By contrast, if a shoplifter (A − 1) tries to prevent a terrorist attack (D + 2) and succeeds in preventing loss of life and damage to property (C + 1), this would be seen as morally good (⊙{[p cstit S]/ADC}), but not quite as good as if a dedicated police officer (A + 1) tried to prevent a terrorist attack (D + 1) and succeeded in preventing loss of life and property and in apprehending a terrorist (C + 2). The latter can be symbolically represented as ⊙{[p cstit S]/ADC} & M(S)/T → ⊙[p cstit S], expressing the idea that the morality of S is settled as “right.”
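The three scenarios above can be encoded in a few lines. The following is a minimal sketch, not the authors' implementation: the class name `ADCScore`, the `SCALE` mapping, and the reading of the unweighted morality as the plain sum A + D + C are assumptions made for illustration.

```python
from dataclasses import dataclass

# Deontic scale from the text: +2 (supererogatory) down to -2 (forbidden).
SCALE = {
    2: "meritorious or supererogatory",
    1: "obligatory",
    0: "indifferent",
    -1: "potentially excusable",
    -2: "forbidden or reprehensible",
}


@dataclass(frozen=True)
class ADCScore:
    """Hypothetical container for one agent/deed/consequence evaluation."""

    agent: int
    deed: int
    consequence: int

    def __post_init__(self):
        # Reject values that fall outside the five-point scale.
        for name in ("agent", "deed", "consequence"):
            value = getattr(self, name)
            if value not in SCALE:
                raise ValueError(f"{name} must be one of {sorted(SCALE)}, got {value}")

    def unweighted(self) -> int:
        # Assumption: unweighted M is the plain sum of the three components.
        return self.agent + self.deed + self.consequence


# The three scenarios described in the paragraph above.
officer_bystander = ADCScore(agent=1, deed=1, consequence=-2)
shoplifter_prevents_attack = ADCScore(agent=-1, deed=2, consequence=1)
officer_prevents_attack = ADCScore(agent=1, deed=1, consequence=2)

print(officer_bystander.unweighted())           # 0
print(shoplifter_prevents_attack.unweighted())  # 2
print(officer_prevents_attack.unweighted())     # 4
```

Under this reading, the ordering of the sums (0 < 2 < 4) matches the ordering of the intuitive judgments in the text: not sufficiently negative, morally good, and settled as right.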

The overall judgment is computed with the additional formula M = (A × WA) + (D × WD) + (C × WC) [36] (Tables 4 and 5), where the weight (W) of A, D, and C can at first be postulated in a Bayesian fashion during design [37]. Bayesian weights are assigned to data or models to reflect their relative importance or reliability within a Bayesian framework, allowing for a more nuanced combination of information than simple averaging. This weighting can be determined by the amount of noise in data or by incorporating prior knowledge about model performance. Unlike traditional methods, Bayesian weighting does not discard data but instead adjusts the influence of each data point or model, leading to more accurate and stable results. In prior work, Cecchini and Dubljević [36] postulated that in situations dealing with hazardous materials, ADC-based algorithms could be programmed to be very cautious (WA = 0.8/1), while prompt to protect the materials from others (WC = 0.2/0.5), possibly at the cost of breaking some rules (WD = 0.5), considering the potential long-term damage. These weights are then updated based on empirical research using validated measures in representative samples of the population [38]. The ADC model thus has great value in explaining and representing how humans actually make moral judgments in the real world and in real time. By combining the ADC model with systems of deontic or imperative logic, complex ethical scenarios can be symbolically represented and then input into algorithms; thus, the ADC model has extraordinary potential for developing the capacity for ethical judgment in artificially intelligent systems (see also Table 1). It is possible that the ADC model could be integrated with reinforcement learning in order to better support ethical decision-making, given the dynamism of urban systems. More likely, however, is a hybrid or neurosymbolic approach. In future work, we will investigate the ADC model’s merit for building pluralism into deontic logic. 
Deontic logic will need to be simplified for machinic understanding, but this simplification actually supports the ADC model’s push toward explainability in algorithmic decision-making.
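The weighted formula M = (A × WA) + (D × WD) + (C × WC) can be sketched as a small function. This is an illustrative sketch only: the function name `weighted_morality` and the example weights (0.8, 0.5, 0.2) are hypothetical values chosen for demonstration, not the calibrated weights from the cited work, and the Bayesian updating step is not modeled here.

```python
def weighted_morality(a, d, c, w_a=1.0, w_d=1.0, w_c=1.0):
    """Return M = (A * WA) + (D * WD) + (C * WC).

    a, d, c are component scores on the -2..+2 scale described in the text;
    w_a, w_d, w_c are weights (hypothetical defaults of 1.0, i.e. unweighted).
    """
    for name, value in (("a", a), ("d", d), ("c", c)):
        if not -2 <= value <= 2:
            raise ValueError(f"{name} must lie in [-2, 2], got {value}")
    return a * w_a + d * w_d + c * w_c


# With unit weights the formula reduces to the plain sum of the components.
unweighted = weighted_morality(1, 1, 2)

# Hypothetical weights: the agent component dominates, the consequence
# component is discounted. These numbers are assumptions for illustration.
weighted = weighted_morality(1, 1, 2, w_a=0.8, w_d=0.5, w_c=0.2)

print(unweighted)          # 4.0
print(round(weighted, 2))  # 1.7
```

The same scenario can therefore land at different points on the M scale depending on the weights, which is why the weights themselves need empirical justification.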

Table 1. Symbolic representation of the ADC model and deontic operators.

3. The ADC Model and Human-AI Teaming (HAIT)

Recently, the ADC model has been applied in the context of human-AI teaming (HAIT). In contrast to other attempts at algorithmic ethical decision-making, the ADC model explores the possibility of AI-empowered moral decision-making, rather than AI-assisted moral decision-making, such as in the case of Meier et al.’s “proof of concept” for algorithmic ethical decision-making in the clinic as represented by the Medical Ethics Advisor named METHAD [32,39]. The ADC model provides the ability to computationally assess moral judgments, without bias toward specific ethical frameworks, and then build those computational judgments directly into AI systems. Of course, moral judgments are complex and context sensitive. Additional problems for actual implementation abound. While the “proof of concept” for the algorithmic frugality of the ADC model is clearly promising, several issues signal the need to temper enthusiasm and indicate that much hard work is yet to be done: the lack of traditional truth values and the need to replace them with a different “test of adequacy” [40] or soundness of a calculus [41]; incompleteness [42]; the need to adequately combine deontic logic operators with those of necessity and possibility [43] and with temporal logic in order to deal with updating circumstances [34]; and incompatible demands [44]. The computational and formalistic approach taken by the ADC model allows for ethical AI based on data taken from multiple stakeholder groups. In this way, the ADC model provides the basis for ethical judgment by AI in ways that align with human moral intuitions across cultural differences and problem spaces, expressing Boole’s idea that the validity of analysis does not depend on symbol interpretation, but solely on pure calculus of rules and formulas combined with a fixed interpretation [45].

The encouraging possibilities of building algorithmically ethical emerging technologies by way of the ADC model are already evident through the model’s application in the context of autonomous vehicles (AVs). Dubljević shows how the ADC model successfully sidesteps the traditional paradigm of autonomous vehicle ethics, where the scholarly and technical discussion typically centers on the relative benefits and disadvantages of “selfish” or “utilitarian” AV behavior [46] (p. 2462). Instead, just as we saw above, the ADC model allows technologists and ethicists to build empirically justified human moral intuitions, found from cutting-edge empirical research, directly into the algorithmic decision-making of autonomous vehicles. Thus, the ADC model enables autonomous vehicles to act in ways that more closely align with human moral decision-making than algorithms that are built on single component ethical theories (those that focus only on the agent, deed, or consequence in relative isolation) or with algorithms built on datasets from particular cultural backgrounds or problem spaces. Further, ethical research on autonomous vehicles typically focuses far too much on simplistic, trolley-like scenarios, where the moral ambiguity of the problem space is lost when compared with the ethical quandaries of the real world. Dubljević shows that in a terrorist attack scenario, AVs built on the ADC model would outperform AVs built on both selfish and utilitarian algorithms [46] (p. 2467). Further, AVs built on traditional ethical theories might actually make the overall situation worse due to their inflexible approach to algorithmic ethics [46] (p. 2467).

Our contention is that the ADC model not only provides a path toward building autonomous vehicles with human-like ethics embedded into their algorithms but also the potential for more ethical HAIT in general. Pflanzer et al. argue that human-AI teaming may require greater ethical attention than situations where AI works in isolation or with other AI agents, as joint human-AI teams can be deployed in a wider variety of problem spaces and thus are subject to a wider variety of ethical issues [36] (p. 917). Joint human-AI systems are often placed in more ambiguous ethical arenas with more high-stakes outcomes. In these scenarios, it is a necessity that AI team members act in alignment with human moral intuitions. This is a necessity not only so that AI team members make ethical decisions as part of the team’s functioning but also so that the human elements of the joint human-AI team come to trust their AI teammates, thus leading to greater levels of task-success and overall ethical action [36] (pp. 917–919).

The fecundity of the ADC model in the context of HAIT largely maps onto the same promise pointed to in Dubljević’s paper on the ADC in the context of AVs [46]. However, HAIT comes with some new ethical challenges that may be less present in the context of AVs. In general, the issue is that “humans are not very comfortable with viewing intelligent machines along ethical boundaries” [36] (p. 926). However, instances of human-AI teaming have the benefit of including a human in the algorithmic loop. As Pflanzer et al. write, “a human proxy that regulates the ethical framework used in such applications will be accountable for justifying the weights utilized in the ADC algorithm and explaining why a particular ethical framework was selected, a solution that satisfies the notion that even if responsibility is delegated to AI, it always remains somewhat human” [36] (p. 929). The ADC model can provide the basis for ethical action that algorithmically aligns with human moral intuitions, but humans may be skeptical of AI systems in spite of this promising academic and technical possibility. While this skepticism challenges the potential of robustly team-like human-AI teams, inclusion of human elements within algorithmic ethical decision-making may resolve some of the ethical challenges associated with embedding artificially intelligent systems in society, including but not limited to autonomous vehicles, social robots in healthcare settings, and smart cities.

4. The ADC Model and Smart City Ethics

As Ziosi et al. indicate, ethical considerations in the context of smart cities can be grouped into four dimensions: “network infrastructure,” “post-political governance,” “social inclusion,” and “sustainability” [5]. Our task in this section is to show that the ADC model, particularly its application in HAIT, can resolve the ethical quandaries that emerge in each of these four dimensions. Given the comprehensive nature of Ziosi et al.’s review, the ADC model’s success in these scenarios will indicate the utility of building the algorithmic decision-making of smart cities in alignment with human moral intuitions as made possible by the ADC model and its resultant research program.

In terms of Ziosi et al.’s identification of ethical issues in “network infrastructure” [5], let us see how the ADC model fares in the context of surveillance, privacy, and security. Consider Dubljević’s example of the ADC model as algorithmically driven ethical decision-making during a car-based terrorist attack (A − 2; D − 2; C − 2) [46]. In such a case, each of the three main values assessed in the ADC model would provide a very clear ethical valuation of using smart city surveillance technologies to track the car qua terrorist. Symbolically, this can be represented as ⊙¬{[p cstit S]/ADC}, expressing that there is a conditional obligation not to bring about this situation, given that the values of A, D, and C are settled as sufficiently negative (M = −6, regardless of the weighting). Thus, the ADC model would justify surveillance, assuming that the target population’s moral intuitions, built into the algorithmic decision-maker, align with our everyday intuitions. In fact, it would have been an ethically wrong omission not to use surveillance to prevent a terrorist attack on the civilian population. However, many other cases of algorithmically catalyzed surveillance operations would provide a more mixed picture within the ADC model. In cases not involving straightforwardly harmful, criminal behavior (e.g., misdemeanors, such as jaywalking, vs. felonies, such as murder), the ADC model would recommend the input of skilled human decision-makers before automated processes of smart city surveillance begin. Symbolically, this can be represented as ¬⊙{[p cstit S]/ADC}, expressing that there is no conditional obligation to bring about this situation, given the values of A, D, and C. Unlike human decision-makers, AI decision systems cannot fall back on intuitive plausibility and must consult others before deriving imperatives and obligations, in order to prevent what is referred to as “deontic explosion” in the literature [47,48,49,50,51]. 
Since AI systems lack common sense, they will not be able to differentiate conditional obligations that are inferred from those that are explicitly given in disjunctive or conjunctive premises and obligations. In the disjunctive case (⊙[p cstit S] → ⊙{[p cstit S] ⊻ [p cstit Q]} → ⊙[p cstit Q]), if it is conditionally obligatory for the smart city to engage in surveillance to track the current location of the terrorist, then logically, it is conditionally obligatory to either engage in surveillance to track the current location or issue a call to a SWAT team to the current location, which would imply that it may be conditionally obligatory to call the SWAT team to the current location, and so on. In the conjunctive case (⊙[p cstit S&Q] → ⊙[p cstit Q]), if it is conditionally obligatory to engage in surveillance to track the current location and issue a call to a SWAT team to the correct location, this would imply that it may be conditionally obligatory to call the SWAT team to the location without setting up surveillance to check if the location is correct. Therefore, checking with human decision-makers in cases of ambiguity, that is, when the weighted values of ADC are not in the extremes (M ≥ +4 ∨ M ≤ −4), is warranted. In other words, systems must be built that can handle derivative obligations while keeping the original intention. This strategy of hybrid decision-making allows for both the prudent allocation of human labor resources and the ADC model’s signature flexibility, and it responds to the main competing desiderata of smart city ethics in terms of surveillance technologies. The ADC-empowered smart city would quickly adjust for clear-cut cases of moral harm while simultaneously keeping humans at the center of more challenging ethical cases.
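
The hybrid strategy described above can be illustrated with a minimal Python sketch. It is not an implementation from the ADC literature: the integer scale of −2 to +2 for A, D, and C, the unweighted sum used for M, and all names (ADC, Verdict, route) are assumptions introduced here for illustration. Only the cut-off of ±4 and the three example valuations come from the text.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    OBLIGATORY = "conditional obligation to bring about S"
    FORBIDDEN = "conditional obligation not to bring about S"
    HUMAN_REVIEW = "no automatic obligation; defer to human decision-makers"


@dataclass(frozen=True)
class ADC:
    agent: int        # A, assumed to lie on an integer scale from -2 to +2
    deed: int         # D, same assumed scale
    consequence: int  # C, same assumed scale

    def __post_init__(self) -> None:
        # Reject values outside the assumed scale
        for value in (self.agent, self.deed, self.consequence):
            if not -2 <= value <= 2:
                raise ValueError("ADC values are assumed to lie in -2..+2")

    def score(self) -> int:
        # Unweighted sum M = A + D + C; equal weights are an assumption
        return self.agent + self.deed + self.consequence


def route(values: ADC, cutoff: int = 4) -> Verdict:
    """Automate only the clear-cut cases; send everything else to a human."""
    m = values.score()
    if m >= cutoff:
        return Verdict.OBLIGATORY
    if m <= -cutoff:
        return Verdict.FORBIDDEN
    return Verdict.HUMAN_REVIEW


if __name__ == "__main__":
    print(route(ADC(-2, -2, -2)).name)  # terrorist-attack example, M = -6
    print(route(ADC(0, -2, 0)).name)    # ambiguous example, M = -2
```

In this sketch the terrorist-attack valuation is routed to an automatic verdict, while a mixed valuation such as (A0, D − 2, C0) is handed to human decision-makers, which mirrors the division of labor proposed in the text.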

In terms of “post-political governance,” Ziosi et al. note that many authors share concerns that while automation may “allow local governments to run more efficiently,” automation also has the potential to threaten participatory governance through traditional public administration channels [5] (p. 1192). The ADC model avoids these charges to a greater extent than other algorithmic management strategies, as the ADC model builds in from the start the moral intuitions of its target populations. Further, the lessons of the ADC model’s application in HAIT show the value of human decision-making even when processes can be fully automated. So, a data-driven approach based on the ADC model could be seen as a way to reduce partisan squabbling of stakeholders in the urban environment. If the formulaic representation of the decision procedure is available in advance, prior to individual conflicts arising, it could be considered as a binding resolution and a new opportunity to decrease politicization. While this would not completely resolve all conflicts about values, establishing cut-off points in a rational way, beyond emotionally driven post hoc recriminations, increases the rationality of the decision procedure. It is important to note that any ADC-based algorithm would merely serve as decision support, not the final decision-maker. Ultimately, elected officials make final decisions, and they may make them based on partisan interests. However, the information about algorithmic recommendations would be public knowledge, and we posit this may be enough to curb “squabbling.” We assume that any cut-off point for algorithmic decision-making in terms of the values of A, D and C would be neither too low nor too high. Ambiguity in one or more subcomponents (e.g., A0, D − 2, C0) could lead to artificially inflated values and overly paternalistic intervention. 
Similarly, setting algorithmic parameters to complete extremes (e.g., A − 2, D − 2, C − 2) would render them practically useless. We posit that to infer automatic assignment of conditional obligation for the smart city, at least one of the subcomponents needs to have an extreme value (e.g., M ≥ +4 {[A + 1, D + 1, C + 2]…} ∨ M ≤ −4 {[A − 1, D − 2, C − 1]…}).
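
The relationship between the cut-off and the extreme-value condition can be checked by enumeration. The sketch below assumes, hypothetically, that A, D, and C each take integer values from −2 to +2 and that M is their unweighted sum; the function names are ours. It lists every valuation that reaches the cut-off and tests whether each one contains a subcomponent at the extreme on the same side as M.

```python
from itertools import product

SCALE = range(-2, 3)  # assumed integer scale for A, D and C: -2..+2
CUTOFF = 4            # cut-off from the text: M >= +4 or M <= -4


def moral_score(a: int, d: int, c: int) -> int:
    """Unweighted sum M = A + D + C (equal weights are an assumption)."""
    return a + d + c


def has_matching_extreme(a: int, d: int, c: int) -> bool:
    """True if some subcomponent sits at the extreme on the same side as M."""
    m = moral_score(a, d, c)
    extreme = max(SCALE) if m > 0 else min(SCALE)
    return extreme in (a, d, c)


def clear_cut_cases() -> list[tuple[int, int, int]]:
    """All (A, D, C) valuations whose score reaches the cut-off."""
    return [t for t in product(SCALE, repeat=3)
            if abs(moral_score(*t)) >= CUTOFF]


if __name__ == "__main__":
    cases = clear_cut_cases()
    print(all(has_matching_extreme(*t) for t in cases))
```

Under these assumptions, every valuation that reaches the cut-off already contains a matching extreme subcomponent, because three values of magnitude at most 1 cannot sum to more than 3 in magnitude. With unequal weights this need not hold, so the extreme-value condition would then have to be checked separately.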

A similar story can be told about the third dimension identified by Ziosi et al., social inclusion [5]. Yigitcanlar et al. and O’Neil note the tendency of algorithmic decision-making to sustain and intensify pre-existing social challenges in the realms of race and economic class [52,53,54]. These concerns should not be minimized; however, the ADC model may prove to be better equipped at avoiding prejudicial algorithmic outcomes due to its broad inclusion of cultural differences in human moral intuitions as well as its flexible, multivariable approach to ethical evaluation (as opposed to traditional ethical theories that are too simplistic in capturing the wide variety of human ethical judgment in real time). Given these matters of fact about how the ADC model is built on the moral intuitions of user populations, the model’s application in smart cities is well-positioned to be generalizable across different cultural contexts. Unlike naive approaches to the problem of algorithmic fairness, which claim that predictions and decisions are entirely objective and “unburdened by value judgments” [5] (p. 1193), the ADC model provides transparency of values in the sub-components of morality that provide the raw input for computation and the intuitive validity of specific judgments (as noted above, Bayesian postulating and updating of weights can be used to train and fine-tune the system, and periodic surveying of the smart city population may prove beneficial and a possible solution to the value alignment problem [55]). We will address the ADC model’s applicability in the fourth dimension of Ziosi et al.’s analysis, “sustainability” [5], in depth in a later section, but our claim is that the ADC model’s application in human-AI teaming points to the potential for smart cities with humans at the center to function as “group agents” [56]. 
Group agents, as a human-built “technology” for expanding the range and strength of human capabilities and capacities, can be used to increase the likelihood of humans choosing to cooperate and accomplish successful collaborative actions. We contend that using smart city technologies and the ADC model to create large-scale group agents can enable humanity to work toward resolving the social and economic dimensions of sustainability [57,58,59].

Evaluating the ADC model in the context of Ziosi et al.’s four dimensions highlights precisely what Pflanzer et al. found in applying the ADC model to human-AI teaming: the centrality of human knowledge, decision-making, and responsibility in the development and deployment of ethical algorithms [5,36]. A central tenet of the application of the ADC model in emerging technologies is human input. The ADC model does not seek to create ethical frameworks to guide emerging technologies from the armchair; instead, the ADC model uses empirical findings about human moral intuitions to build algorithms that support ethical action. Humans are “in the loop” because data from humans’ moral intuitions is precisely the training data for the algorithmically driven ethical emerging technologies. Further, as Coin and Dubljević argue, it is not enough to merely include some arbitrary set of humans as the training set for these new technologies [32]. In order for algorithmically driven ethical machines to align with human moral intuitions, technologies must align with the granular, context- and culture-sensitive variations in human moral intuitions across diverse problem spaces and cultures. In this way, the ADC model’s application to smart city ethics necessarily includes human (moral) knowledge and decision-making, successfully avoiding many traditional smart city ethical quandaries as identified by Ziosi et al. and the smart city ethics literature reviewed therein [5]. Similarly, the ADC model’s application in HAIT contexts addresses the need for human responsibility in cases of supposedly ethical algorithmic action.

The above considerations provide empirical justification for a prevailing trend in both smart city ethics and artificial intelligence ethics: human-centeredness [11,60,61]. Proponents of human-centered emerging technologies claim that these technologies must be developed and deployed in ways that prioritize human flourishing. Additionally, the human-centered camp often contends that emerging technologies must retain skilled human decision making in order to achieve human flourishing in domains affected by artificial intelligence and other 21st century technologies.

Schmager et al. define human-centered AI (HCAI) as “focuse[d] on understanding purposes, human values, and desired AI properties in the creation of AI systems by applying Human Centered Design practices. HCAI seeks to augment human capabilities while maintaining human control over AI systems” [61] (p. 6). In HCAI’s initial formulation, human-centeredness was posited as a method for addressing systems complexity [61] (p. 21). Given our description of the particularly challenging ethical standing of cities, we might suggest that the complex system of smart city ethics requires a human-centered perspective. Schmager et al. specifically discuss algorithmic decision-making and HCAI, ultimately advancing a position enabled by the ADC model’s application in smart city ethics [61]. The authors write that “capabilities can be enhanced by ‘Decision-Support Systems’ where algorithmic systems are leveraged to provide assistance to human actors… An appropriate allocation of function between users and technology… should be based on several factors including the relative competence of technology and humans in terms of reliability, flexibility of response, and user well-being” [61] (pp. 8–9). In the context of ethical decision-making, Schmager et al.’s review of HCAI indicates the necessity of “stakeholder participation,” “iterative processes,” and “identifying social values, deciding on a moral deliberation approach, and linking values to formal system requirements and concrete functionalities,” all of which are enabled and supported by the ADC approach to algorithmic ethics [61] (pp. 10–19). Value sensitive design is quite similar to human-centered AI and one of its intellectual forebears. Sanfilippo and Frischmann’s approach to human-centered smart city development takes many of its cues from Batya Friedman’s (and her collaborators’) work on value sensitive design, and this research may too be fruitfully combined with the ADC model. For more, see [62,63,64].

The research program inaugurated by the ADC model and its resultant application in autonomous vehicles, social robotics, and more general cases of human-AI teaming not only supports the normative claims made by human-centered technology proponents, it also indicates a way to create ethical algorithms that are human-centered in a wide range of emerging technologies.

5. Smart Cities as Joint Human-AI Group Agents

Given the above, applying the ADC model to smart city ethics advances an understanding of human-centered smart city ethics while simultaneously providing the toolkit for building smart cities where algorithmic decision-making aligns with human moral intuitions. The ADC model’s intervention in the human-AI teaming literature suggests additional reasons for human-centeredness, particularly with regard to responsibility. Thus, we suggest that smart cities too should function as human-AI teams with human inclusion both at the stage of algorithmic development (i.e., through the ADC model) and at the stage of real-world deployment (i.e., through human-AI teaming). As such, we might think of smart cities that are built along the lines recommended here as “group agents” [56], where the smart city has properties that are realized only at the group level rather than as a mere aggregation of the properties at the individual level. This approach could be helpful in establishing conditional obligations for the AI systems deployed in smart cities. In the formula ⊙¬{[p cstit S]/ADC}, it is the group agent that has the obligation to prevent a certain situation, say a terrorist attack. But not every member of the group is in a position to help [65]. Here it can be said that ◇[p cstit S] is a logical prerequisite of both ⊙{[p cstit S]/ADC} and ⊙¬{[p cstit S]/ADC}, and knowledge of the ADC values of the particular situation S is needed to ascertain whether an obligation, conditional or otherwise, is settled as “right.” This means that most individuals would be excused from an obligation and that algorithmic systems may have an advantage in assigning responsibility for seeing to it that a certain situation is resolved in a particular way.
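
The prerequisite relation described above can be written out explicitly. The following is a hedged restatement in LaTeX, not a formula taken from the cited sources: it reads “logical prerequisite” as plain implication and ◇ as possibility, and it uses \Diamond from the amssymb package.

```latex
\begin{align*}
\odot\{[p\ \mathit{cstit}\ S]/ADC\} &\rightarrow \Diamond[p\ \mathit{cstit}\ S] \\
\odot\neg\{[p\ \mathit{cstit}\ S]/ADC\} &\rightarrow \Diamond[p\ \mathit{cstit}\ S] \\
\neg\Diamond[p\ \mathit{cstit}\ S] &\rightarrow
  \neg\odot\{[p\ \mathit{cstit}\ S]/ADC\} \wedge \neg\odot\neg\{[p\ \mathit{cstit}\ S]/ADC\}
\end{align*}
```

The third line follows from the first two by contraposition and corresponds to the observation that an individual who cannot see to it that S holds is excused from either obligation.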

Paradigmatic group agents include a married couple, a sports team, a limited liability corporation (LLC), a university, the modern State, and so on. These group agents have been created by humans for human purposes. Each of these group agents is a technology for expanding the range and strength of human capabilities. For example, a sports team can play games that individuals cannot; an enduring club or professional team can perform maneuvers that a pick-up team cannot. As Hindriks writes, corporate groups “have properties or powers that the individuals involved do not have” [66] (p. 129). As Pflanzer et al. write, “intelligent machines can be used to extend human performance through HAIT, and methods of enhancing teamwork between humans and artificial intelligence (AI) systems are being thoroughly researched. Yet with added capabilities often come added responsibilities” [36] (p. 917). In other words, Pflanzer et al. argue that human-AI teams in practice satisfy two important criteria for group agency: group-level properties not instantiated at the individual level and explanatory (and thus normative) weight regarding a full accounting of our social world. Understanding human-AI teams as group agents can help us understand both the enhanced and added capabilities that emerge when humans and AI work robustly together as one unit and the ascription of responsibility to joint human and non-human systems. Human-centered AI provides a similar response to questions of ascription of responsibility in human-AI teams. Dignum and Dignum argue that HCAI systems are socio-technical systems with responsibility found in the social component of any given system [67]. Group agency is studied by social ontology, a branch of philosophy that deals with the metaphysics of the social world. As Epstein characterizes it, “social ontology is the study of the nature of the social world. ‘What is X?’ social ontology asks, where ‘X’ stands for any social thing whatever” [68] (p. 1). Social ontology examines in great detail what additional capabilities can be created through joint agency, e.g., the contemporary corporation’s ability to withstand membership changes and yet remain the same firm. Social ontology also reckons with the changes in responsibility that come with such group formation, e.g., corporate responsibility and proxy agency [69], where we might sometimes best ascribe responsibility to the firm as a whole (the former) or sometimes to an individual member who holds a major decision-making role and thus a higher burden of blame (the latter). There are many types of group agents and many different arguments for their (non)existence. For our purposes here, it suffices to say that human-AI teams might function in some ways that can be explained through the conceptual resources of group agency. If that is true, and if it is true that smart cities can/should be built as human-AI teams, then perhaps there is also a link between smart cities and group agents.

This may be a contested claim among social ontologists. However, there are four reasons that smart cities might be suitable candidates for group agency status even in traditional social ontology. First, Chwe argues that the common knowledge condition is instantiated among a group as large as a Super Bowl audience, even though those individuals involved are spread across the globe [70]. Pettit writes that “recent work on the conditions that might lead us to ascribe such joint attitudes, and to posit collective subjects, has stressed the fact that we usually expect a complex web of mutual awareness on the part of individuals involved” [71]. This mutual awareness is of a kind with the common knowledge condition and is identified throughout the literature on collective intentionality [72,73,74,75]. A Super Bowl audience is a different entity than a smart city, but this claim does lend credence to the scalability of common knowledge through technology. Willis and Aurigi [76] imagine an energy use dashboard that allows neighbors to compare their energy usage with each other, not only satisfying the common knowledge condition but also lending support to our claims about smart cities qua group agents as a way to respond to sustainability challenges. In general, our contention is that smart city communicative technologies are a prime candidate for facilitating the exchange of information required for group collaboration. Second, Huebner [77] and Mulgan [78] argue for the scalability of minds, specifically group minds, and this also occurs through technological means. Mindedness ascriptions significantly lessen the barrier to entry for group agency status, as group minds sidestep some metaphysical issues having to do with the “problem of collective intentionality.” Huebner’s paradigmatic example of a macro-scale group mind is CERN, which he argues does share intentions collectively. 
Third, Bratman [79] and Shapiro [80] posit the existence of very large group agents (with collective intentionality) without any reference to group minds. Both operate on the “mesh, not match” model of group agency stemming from Bratman’s earlier work, and these arguments support the idea that smart cities can become group agents through institutional design. Fourth, emerging literature on collective intelligence, such as from Olszowski [81], supports the possibility of collective decision-making procedures regarding urban governance. Olszowski examines four collective intelligence participatory governance platforms that support not only collective decision-making among city populaces but also, in his view, the emergence of group-level cognitive capacity. These four arguments about scalability—of common knowledge, of minds and intentionality, of group agents, and decision-making—all point to the potential of smart cities to function as group agents even in the most restrictive sense. An additional issue is ascribing responsibility for any harm done by group agent decisions. While we do not have the space to offer a lengthy theory on this particular issue, we posit that interests of actual humans (e.g., citizens) take precedence over those of artificial agents (e.g., corporations). In a democracy, elected representatives (e.g., mayor or members of an executive council) take responsibility for decisions of group agents, including smart cities, in their charge.

We contend that smart cities, if built in accordance with the ADC model, could function as human-AI teams and thus as group agents in some sense. The ADC model allows for some ethical decision-making to be automated by smart city and AI technologies, thereby increasing the capabilities of the city as a whole, including its government, businesses, universities (i.e., the “triple helix model” [82]), its populace, and its technological infrastructure. As we posited above, such ethical automation in clear-cut cases would enable skilled human decision-makers to focus their attention on more complicated issues. This alone is a substantial increase in city-wide ethical capacity, and it very well could lead to emergent properties that would be irreducible to the mere sum of the city’s parts. This is perhaps especially true in the context of smart city ethics, where complex value judgments in a political community involve a near-infinite number of variables. Despite this emphasis on the possibilities afforded by algorithmic ethical decision-making, Pflanzer et al. conclude that such decision-making by AI may actually require membership in a human-AI team, as the “agency of AI cannot be assumed… for AI to be held morally accountable for its actions, then it must be designed to be autonomous for that task scenario, and that agency must be understood by testers, operators of, and passive users and spectators of the AI” [36] (p. 927). That is, if smart cities supported by artificial intelligence mechanisms were to in fact meet the criteria for robust ethical judgments (by way of the ADC model), those smart cities would necessarily be a collaborative effort between humans and non-humans to the extent that the group would in fact be best understood as an agent in itself. The ethical decision-making of AI applications with skilled human input satisfies the definition of human-AI teaming given by Pflanzer et al. [36] (p. 929), and using the ADC model to create this binding of human and machine is a fruitful way to resolve many of the complaints that are frequently lodged about algorithmic decision-making.

Our claim is that smart cities built on the ADC model can use algorithmic ethics to extend the capabilities of cities as a whole, thereby rendering the smart city as a group agent with group-level properties irreducible to its individual parts. This claim is given support by understanding smart cities as joint human-AI teams. Earlier, we posited that such a view provides some clarity about how the ADC model can respond to the fourth dimension of smart city ethics identified by Ziosi et al., sustainability [5]. In particular, we contend that using smart city technologies and the ADC model to create large-scale group agents can enable humanity to work toward resolving the social and economic dimensions of sustainability [54,55,56]. Primarily, we “frame” [83,84] climate change as a “social dilemma,” defined as “a collective action or coordination problem, a possible conflict between the ends of individual behavior (individual welfare) and the performance of a group of people, acting as a social system (social welfare)” [85] (p. 160). By focusing on sustainability as a social dilemma, we are from the start focusing on the two dimensions of sustainability left unexamined by Ziosi et al., the social and the economic [5]. Much more work has been done on the environmental dimension of sustainability than on the social and economic dimensions of sustainability, and this is particularly true in the case of smart city literature. Rather than focusing on highly contested claims about how environmentally friendly smart cities actually are or could be, our view is that smart cities show great promise in resolving the social dilemmas associated with climate change. Humanity has developed many possible responses to climate change issues, as evidenced by the debates between “green energy” and “geoengineering” [86,87,88]. Our concern here is the ability of humans to come to an agreement on strategy and tactics and then act in accordance with that agreement. 
Smart cities, rendered group agents by way of the ADC model and lessons from human-AI teaming, would seem to solve this social dilemma by encouraging behavior toward social welfare rather than individual welfare. While no individual has the ability to resolve sustainability issues, group agents such as smart cities have an exponentially increased ability and responsibility [89]. A notion of group ability can be introduced through a combination of ordinary historical possibility together with a concept of group action. If we use “g” to denote a group protagonist, then ◇[g cstit S] is the logical prerequisite of ⊙{[g cstit S]/ADC} and ⊙¬{[g cstit S]/ADC}, and an absolute obligation can be derived from a conditional obligation together with the necessity of its antecedent condition [90]. For related perspectives from social philosophy and environmental ethics, please consult [91,92,93,94]. In other words, building smart cities such that they function as human-AI teams, and thus group agents, provides a valuable option for enhancing the ethically loaded decision-making of humans vis-à-vis sustainability and related issues. (As noted elsewhere, human-centered AI is an ADC model-supported framework for developing human-AI teams and potentially smart cities that function as group agents. In contrast to the individualistic paradigm of HCAI, Dignum and Dignum have suggested “humanity-centered AI,” which prioritizes societal or community well-being (i.e., resolving social dilemmas) [67].) This is potentially true for two reasons. First, ethical algorithmic decision-making, as argued for above, allows for the resolution of clear-cut ethical quandaries by automated means, enabling more efficient resource allocation elsewhere (e.g., allowing skilled human decision-makers to focus on more complex or ethically challenging situations). Second, integration into a group agent (no matter the type), supports the shift toward other-oriented thoughts and actions. 
In other words, group agent formation comes with a whole host of normative obligations that condition one’s individual thoughts and actions. In the case of the economic and social dimensions of sustainability, we contend that such other-oriented behavior may lead to significant progress on the social dilemma(s) of climate change.
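
The derivation of an absolute obligation mentioned in the discussion of group ability can be displayed as an inference rule. The LaTeX sketch below is offered under an assumption: it reads “the necessity of its antecedent condition” as □ADC, that is, the ADC values of the situation being settled. This reading is ours and should be checked against [90]. The rule layout uses \frac from standard LaTeX math mode and \Box from amssymb.

```latex
\[
\frac{\odot\{[g\ \mathit{cstit}\ S]/ADC\} \qquad \Box\, ADC}
     {\odot[g\ \mathit{cstit}\ S]}
\]
```

Read from top to bottom: if the group agent g has a conditional obligation to see to it that S given the ADC values, and those values are settled, then g has the corresponding unconditional obligation. As with the conditional forms, this presupposes ◇[g cstit S].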

The ADC model provides clarity about the potential for algorithmic decision-making to support this shift in smart cities from individual or sub-group benefitting actions toward resolving the social and economic dimensions of sustainability. Consider our earlier example of the always political nature of smart city ethics: the decision by a city to invest financial resources to add smart technologies to its waste removal facilities. Waste disposal is an issue of sustainability, encompassing all three dimensions of sustainability as identified by Bibri [57,58,59]. Smart city technologies show significant potential in alleviating the technological and social dimensions of this problem of environmental sustainability. The TrashTrack initiative, undertaken in collaboration with MIT’s SENSEable City Lab, was an attempt to apply the abilities of a sentient city, where smart city technologies are ubiquitous and pervasive [95,96], to waste management. The goal was to “understand the ‘removal-chain’ as we do the ‘supply-chain’, and where we can use this knowledge to not only build more efficient and sustainable infrastructures but to promote behavioral change” [97]. The project attached hundreds of small, GPS-enabled “smart” tags to “different types of trash so that these items [could] be followed through the city’s waste management system, revealing the final journey of our everyday objects in a series of real time visualizations” [97]. TrashTrack focused specifically on recycling, asking “how much greenhouse gas is created and how much energy is wasted in the process,” as well as if recycling actually happens as cities and other groups promise [98].

In contrast to the TrashTrack initiative, Pijpers offers an incisive critique of Rotterdam’s often invasive and otherwise questionable attempts to combat illegal waste disposal through an extensive system of surveillance cameras [99]. Pijpers argues that waste management surveillance systems fail to see the forest (e.g., the breakdown of civil society) for the trees (data). Pijpers’ argument centers on both the shortcomings of human ethical decision-making and the importance of humans with intimate, local knowledge [99]. On the one hand, Rotterdam’s citizens are chosen to be followed by cameras (and the officials who control them) after exhibiting “unusual behavior,” which is left open to the interpretation (and perhaps exploitation) of the officials in charge of surveillance [99] (p. 7). On the other hand, Pijpers posits that placing a human at the center of the deployment of these smart city technologies in waste management may be cause for optimism. Of one element of his ethnographic study, he writes that “though Amy is a public servant and not a grass-roots activist, there is a potential opening of political contestation [against the surveillance system] in being emplaced—with a human body as interface—in the ecology of the neighborhood, if this moment of communication could make possible a culture of care” [99] (p. 11).

Although the TrashTrack project represents the great promise of smart city technologies in creating more environmentally friendly urban systems (inclusive of the people who call those urban systems home), the lessons from the city of Rotterdam greatly complicate that rosy picture. However, the ADC model works well to resolve these issues. As we have noted above, the ADC model can remove some of the burden of ethical decision-making from human actors in clear-cut cases. In the case of the invasive surveillance and arbitrary tracking techniques critiqued by Pijpers, the introduction of ethical algorithms can provide some much-needed objectivity to waste management surveillance practices that are marred by prejudice. At the same time, the ADC model serves as a method for representing human moral intuitions by building into the algorithm the ethical frameworks of user populations, and it recommends delegation of more complicated ethical evaluations to human decision-makers. In this way, applying the ADC model to smart city waste management systems responds to both desiderata that emerge from Pijpers’ analysis, providing the basis for optimism in the potential of smart city technologies to respond to ethical quandaries surrounding sustainability. (The tracking of trash as a supply chain is crucial for knowledge of the ADC values of the particular situation S and is needed to ascertain if an obligation, conditional or otherwise, is settled as “right,” so symbolically this could be represented as ⊙{[g cstit S]/ADC}. However, surveillance of all “unusual behavior” is not necessary for solving the problem at hand and is actually detrimental to establishing group agency at the level of the smart city, and so ⊙¬{[p cstit R]/ADC}.)

To summarize, in this final section we have provided an argument for understanding smart cities as group agents based on the ADC model and its uptake in human-AI teaming. Further evidence for this view would be the presence of irreducibly group-level attributes, and we have shown, through an analysis of smart city waste management technologies that are built in accordance with the ADC model, that smart cities can respond to the ethical quandaries of sustainability in ways that outstrip the current capabilities of our social world. As such, we can draw a number of important conclusions. First, the ADC model shows promise in all four dimensions of smart city ethics as identified by Ziosi et al. [5]. Second, smart cities built in accordance with the ADC model and its use in human-AI teaming may qualify as group agents, thus making a significant step toward resolving social dilemmas such as climate change. Third, in smart city development and deployment, human-centeredness ought to be a core design principle. Human-centeredness can be achieved by the ADC model, and the human-AI teaming literature, along with our analysis of smart city waste management technologies, provides justification for this view.

6. Conclusions

To recapitulate our larger argument, we began by providing an overview of smart city ethics while simultaneously performing novel theorization about the definition of smart cities and the complicated relationship between (smart) cities, ethics, and politics. Next, we introduced the ADC model and its symbolic representation through deontic logic. The ADC model, when formalized symbolically, allows for its application in the context of human-AI teaming and in specific use cases such as autonomous vehicles and social robotics in healthcare settings. However, the ADC model’s promise for algorithmic ethical decision-making had yet to be demonstrated in smart cities. Thus, we examined the ADC model’s potential in four dimensions of smart city ethics, with particular emphasis on (economic and social) sustainability, advancing an understanding of smart cities and human-AI teams as group agents. The results of our analysis are as follows. First, we have shown the ADC model’s merit in algorithmic ethical decision-making. Second, we have shown that algorithmic ethical decision-making, if structured by the ADC model, successfully addresses a significant portion of the perennial questions in smart city ethics. Finally, we have shown that smart cities built with the ADC model may in fact be a significant step toward resolving important social dilemmas of our time. This paper’s goal is to provide a proof of concept, and its current limitations indicate lines for further research, e.g., deploying the ADC model’s representation in deontic logic in algorithmically governed emerging technologies.

Author Contributions

D.S. and V.D. contributed equally to this article. D.S. Investigation, conceptualization, writing—original draft, visualization and analysis. V.D. Conceptualization, methodology, project administration, analysis and writing—review and editing. All authors have read and agreed to the published version of the manuscript.

Funding

The research reported in this article was partially funded by a CAREER award from the National Science Foundation (#2043612; awarded to V.D.). All claims expressed in this article are solely those of the authors and are neither representative of nor unduly influenced by the NSF or any other entity.

Data Availability Statement

There is no data associated with this work.

Acknowledgments

The authors would like to thank Shay Logan, Shawn Standefer, and the members of the Neurocomputational Ethics Research Group at North Carolina State University (Dario Cecchini, Shaun Respess, Ashley Beatty, Michael Pflanzer, Sean Reeves, Julian Wilson, Ishita Pai Raikar, Menitha Akula, Savannah Beck, Sumedha Somayajula, Lena Sall, J.T. Lee, and Katie Farrell) for useful feedback on an early draft of this paper. D.S. would like to thank Georg Theiner for his help in conceptualizing smart cities as group agents.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Brauneis, R.; Goodman, E.P. Algorithmic Transparency for the Smart City. Yale J. Law Tech. 2018, 20, 103.

- Lim, H.S.M.; Taeihagh, A. Algorithmic Decision-Making in AVs: Understanding Ethical and Technical Concerns for Smart Cities. Sustainability 2019, 11, 5791.

- Zambonelli, F.; Salim, F.; Loke, S.W.; De Meuter, W.; Kanhere, S. Algorithmic Governance in Smart Cities: The Conundrum and the Potential of Pervasive Computing Solutions. IEEE Technol. Soc. Mag. 2018, 37, 80–87.

- Hollands, R.G. Will the Real Smart City Please Stand Up?: Intelligent, Progressive or Entrepreneurial? City 2008, 12, 303–320.

- Ziosi, M.; Hewitt, B.; Juneja, P.; Taddeo, M.; Floridi, L. Smart Cities: Reviewing the Debate About Their Ethical Implications. AI Soc. 2024, 39, 1185–1200.

- Caragliu, A.; Del Bo, C.; Nijkamp, P. Smart Cities in Europe. In Creating Smart-er Cities; Routledge: New York, NY, USA, 2013.

- Haarstad, H. Constructing the Sustainable City: Examining the Role of Sustainability in the ‘Smart City’ Discourse. J. Environ. Policy Plan. 2017, 19, 423–437.

- Karvonen, A.; Cugurullo, F.; Caprotti, F. Introduction: Situating Smart Cities. In Inside Smart Cities: Place, Politics, and Urban Innovation; Routledge: New York, NY, USA, 2019.

- Allwinkle, S.; Cruickshank, P. Creating Smart-er Cities: An Overview. In Creating Smart-er Cities; Routledge: New York, NY, USA, 2013.

- Narayanan, A.; Kapoor, S. AI as Normal Technology. Knight First Amendment Institute at Columbia University. Available online: http://knightcolumbia.org/content/ai-as-normal-technology (accessed on 31 May 2025).

- Sanfilippo, M.R.; Frischmann, B. Slow-Governance in Smart Cities: An Empirical Study of Smart Intersection Implementation in Four US College Towns. Internet Policy Rev. 2023, 12.

- Waheeb, R. Using the Ethics of Artificial Intelligence in a Virtuous and Smart City. SSRN J. 2023.

- Sanchez, T.W.; Brenman, M.; Ye, X. The Ethical Concerns of Artificial Intelligence in Urban Planning. J. Am. Plan. Assoc. 2025, 91, 294–307.

- Juvenile Ehwi, R.; Holmes, H.; Maslova, S.; Burgess, G. The Ethical Underpinnings of Smart City Governance: Decision-Making in the Smart Cambridge Programme, UK. Urban Stud. 2022, 59, 2968–2984.

- Kitchin, R. The Ethics of Smart Cities and Urban Science. Phil. Trans. R. Soc. 2016, 374, 20160115.

- Zwitter, A.; Helbing, D. Ethics of Smart Cities and Smart Societies. Ethics Inf. Technol. 2024, 26, 69.

- Lomeli, B.; Grajeda, M.; Gutierrez, S. Smart Cities and the Ethical Dilemma. Young Sci. Philos. Bord. 2024, 1, 4.

- Ryan, M.; Gregory, A. Ethics of Using Smart City AI and Big Data: The Case of Four Large European Cities. ORBIT J. 2019, 2, 1–36.

- Goodman, E.P. Smart City Ethics: How “Smart” Challenges Democratic Governance. In The Oxford Handbook of Ethics of AI; Dubber, M.D., Pasquale, F., Das, S., Eds.; Oxford University Press: Oxford, UK, 2020; pp. 823–839.

- Ostrom, V.; Tiebout, C.M.; Warren, R. The Organization of Government in Metropolitan Areas: A Theoretical Inquiry. Am. Polit. Sci. Rev. 1961, 55, 831–842.

- Rawls, J. Justice as Fairness: A Restatement; Kelly, E., Ed.; Harvard University Press: Cambridge, MA, USA, 2001.

- Rawls, J. Lectures on the History of Political Philosophy; Freeman, S.R., Ed.; Belknap Press of Harvard University Press: Cambridge, MA, USA, 2007.

- Meehan, J. (Ed.) Feminists Read Habermas: Gendering the Subject of Discourse; Routledge Library Editions: Feminist Theory; Routledge: London, UK, 1995.

- Nussbaum, M.C. Ethical Choices in Long-Term Care: What Does Justice Require? WHO: Geneva, Switzerland, 2002.

- Nussbaum, M.C. Beyond the Social Contract: Toward Global Justice. In Tanner Lectures on Human Values; University of Utah Press: Salt Lake City, UT, USA, 2004; Volume 24.

- Habermas, J. The Inclusion of the Other: Studies in Political Theory; Cronin, C., De Greiff, P., Eds.; Studies in Contemporary German Social Thought; MIT Press: Cambridge, MA, USA, 2000.

- Habermas, J. Between Facts and Norms: Contributions to a Discourse Theory of Law and Democracy; Rehg, W., Translator; Studies in Contemporary German Social Thought; MIT Press: Cambridge, MA, USA, 2001.

- Rittel, H.W.J.; Webber, M.M. Dilemmas in a General Theory of Planning. Policy Sci. 1973, 4, 155–169.

- Colding, J.; Barthel, S.; Sörqvist, P. Wicked Problems of Smart Cities. Smart Cities 2019, 2, 512–521.

- He, D.; Liu, X.; Shi, Q.; Zheng, Y. Visual-Language Reasoning Segmentation (LARSE) of Function-Level Building Footprint across Yangtze River Economic Belt of China. Sustain. Cities Soc. 2025, 127, 106439.

- Dubljević, V.; Racine, E. The ADC of Moral Judgment: Opening the Black Box of Moral Intuitions with Heuristics About Agents, Deeds, and Consequences. AJOB Neurosci. 2014, 5, 3–20.

- Coin, A.; Dubljević, V. Using Algorithms to Make Ethical Judgements: METHAD vs. the ADC Model. Am. J. Bioeth. 2022, 22, 41–43.

- Hilpinen, R.; McNamara, P. Deontic Logic: A Historical Survey and Introduction. In Handbook of Deontic Logic and Normative Systems; Gabbay, D.M., Horty, J., Eds.; College Publications: London, UK, 2013.

- Horty, J. Agency and Deontic Logic; Oxford University Press: Oxford, UK; New York, NY, USA, 2001.

- Xu, M. Axioms for Deliberative Stit. J. Philos. Log. 1998, 27, 505–552.

- Pflanzer, M.; Traylor, Z.; Lyons, J.B.; Dubljević, V.; Nam, C.S. Ethics in Human–AI Teaming: Principles and Perspectives. AI Ethics 2023, 3, 917–935.

- Cecchini, D.; Dubljević, V. Moral Complexity in Traffic: Advancing the ADC Model for Automated Driving Systems. Sci. Eng. Ethics 2025, 31, 5.

- Dubljević, V.; Cacace, S.; Desmarais, S.L. Surveying Ethics: A Measurement Model of Preference for Precepts Implied in Moral Theories (PPIMT). Rev. Philos. Psychol. 2022, 13, 197–214.

- Meier, L.J.; Hein, A.; Diepold, K.; Buyx, A. Algorithms for Ethical Decision-Making in the Clinic: A Proof of Concept. Am. J. Bioeth. 2022, 22, 4–20.

- Weinberger, O. Bemerkungen zur Grundlegung der Theorie des Juristischen Denkens. Jahrb. Rechtssoziol. Rechtstheor. 1972, 2, 134–161.

- Chellas, B.F. The Logical Form of Imperatives; Perry Lane Press: South Burlington, VT, USA, 1969.

- Priest, G. In Contradiction: A Study of the Transconsistent; Oxford University Press: Oxford, UK, 2006.

- Prior, A.N. Formal Logic; Clarendon Press: Oxford, UK, 1955.

- van Fraassen, B. Values and the Heart’s Command. J. Philos. 1973, 70, 5–19.

- Boole, G. The Mathematical Analysis of Logic; Macmillan: New York, NY, USA, 1847.

- Dubljević, V. Toward Implementing the ADC Model of Moral Judgment in Autonomous Vehicles. Sci. Eng. Ethics 2020, 26, 2461–2472.

- Williams, B.A.O. Imperative Inference. Analysis 1963, 23, 30–36.

- Weingartner, P.; Schurz, G. Paradoxes Solved by Simple Relevance Criteria. Log. Anal. 1986, 29, 3–40.

- Weinberger, O. The Logic of Norms Founded on Descriptive Language. Ratio Juris 1991, 4, 284–307.

- Weingartner, P. Alternative Logics: Do Sciences Need Them? Springer: Berlin/Heidelberg, Germany, 2004.

- Hansen, J. Imperative Logic and Its Problems. In Handbook of Deontic Logic and Normative Systems; Gabbay, D.M., Horty, J., Eds.; College Publications: Marshalls Creek, PA, USA, 2013; pp. 137–192.

- Yigitcanlar, T.; Butler, L.; Windle, E.; Desouza, K.C.; Mehmood, R.; Corchado, J.M. Can Building “Artificially Intelligent Cities” Safeguard Humanity from Natural Disasters, Pandemics, and Other Catastrophes? An Urban Scholar’s Perspective. Sensors 2020, 20, 2988.

- Yigitcanlar, T.; Desouza, K.; Butler, L.; Roozkhosh, F. Contributions and Risks of Artificial Intelligence (AI) in Building Smarter Cities: Insights from a Systematic Review of the Literature. Energies 2020, 13, 1473.

- O’Neil, C. Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy, 1st ed.; B/D/W/Y Broadway Books: New York, NY, USA, 2017.

- Christian, B. The Alignment Problem: Machine Learning and Human Values; W. W. Norton & Company: New York, NY, USA, 2020.

- Shussett, D. Group Agency. In Encyclopedia of the Philosophy of Law and Social Philosophy; Sellers, M., Kirste, S., Eds.; Springer: Dordrecht, The Netherlands, 2024; pp. 1–9.

- Bibri, S.E. Smart Sustainable Cities of the Future; The Urban Book Series; Springer International Publishing: Cham, Switzerland, 2018.

- Bibri, S.E. Advances in the Leading Paradigms of Urbanism and Their Amalgamation: Compact Cities, Eco–Cities, and Data–Driven Smart Cities; Advances in Science, Technology & Innovation; Springer International Publishing: Cham, Switzerland, 2020.

- Bibri, S.E. Compact Urbanism and the Synergic Potential of Its Integration with Data-Driven Smart Urbanism: An Extensive Interdisciplinary Literature Review. Land Use Policy 2020, 97, 104703.

- Shneiderman, B. Human-Centered AI; Oxford Scholarship Online; Oxford University Press: Oxford, UK, 2022.

- Schmager, S.; Pappas, I.O.; Vassilakopoulou, P. Understanding Human-Centred AI: A Review of Its Defining Elements and a Research Agenda. Behav. Inf. Technol. 2025, 44, 3771–3810.

- Friedman, B.; Hendry, D.G. Value Sensitive Design: Shaping Technology with Moral Imagination; The MIT Press: Cambridge, MA, USA, 2019.

- Friedman, B.; Hendry, D.G.; Borning, A. A Survey of Value Sensitive Design Methods. FNT Hum.–Comput. Interact. 2017, 11, 63–125.