1. Background

Artificial intelligence (AI) is a rapidly advancing technology now used across multiple fields. Among the latest advancements in this domain is Google Gemini, a multimodal AI model developed by Google and released in December 2023 [1]. Gemini directly competes with OpenAI’s ChatGPT-3.5 and ChatGPT-4 models [1] and has demonstrated strong performance across a range of cognitive tasks, including text analysis, programming assistance, logical reasoning, reading comprehension, and mathematical problem-solving [2]. Google Gemini has also proven useful in educational settings, providing outputs that can be tailored to an individual’s specific learning needs and level of understanding [3]. However, Google Gemini’s potential for medical education remains understudied, particularly in generating NBME-standard pharmacology questions, a gap this study addresses.

Much of the existing literature on LLMs in medical education has focused on OpenAI’s GPT models, which have demonstrated strong performance in standardized exams and clinical reasoning tasks. Google’s Gemini, though transformer-based like GPT, has been optimized for multimodal integration, longer context handling, and efficiency in reasoning tasks. While GPT is known for producing coherent narratives and high factual recall, Gemini has shown promise in structured prompt handling and multimodal contexts. These differences underscore the importance of evaluating Gemini independently rather than assuming parity with GPT.

The National Board of Medical Examiners (NBME) is the organization responsible for overseeing the United States Medical Licensing Examination (USMLE), a multi-step exam series required for medical licensure in the United States. The NBME develops board examinations administered throughout medical school, including Step 1, which is typically taken prior to clinical rotations [4]. The USMLE Step 1 consists of seven sections of up to 40 questions each, administered over an eight-hour testing period [4]. Its primary objective is to assess medical students’ proficiency in the foundational sciences, including pathology, physiology, pharmacology, biochemistry and nutrition, microbiology, immunology, anatomy, embryology, histology, cell biology, behavioral sciences, and genetics [5]. Pharmacology accounts for approximately 15–22% of the Step 1 exam content [5].

A previous study assessed the performance of Chat Generative Pre-Trained Transformer (ChatGPT)-3.5 in generating NBME-style pharmacology questions. The study found that ChatGPT-3.5 generated questions that aligned with the NBME guidelines but lacked the complexity and difficulty required for USMLE Step exams [6]. However, since Google Gemini’s release in December 2023, there has been limited data on its ability to generate and answer NBME questions [7]. Therefore, the present study focused on assessing Google Gemini’s ability to generate pharmacology questions that adhere to the NBME Item-Writing Guide [8].

Artificial intelligence (AI) models have demonstrated significant potential to advance scientific research, healthcare delivery, and medical education [9]. In particular, ChatGPT’s ability to pass the USMLE Step 1 exam highlights its promise as a supportive tool for medical students [10,11]. Several studies have identified ChatGPT as a freely accessible educational resource; however, concerns remain regarding its tendency to generate factually inaccurate information and to produce oversimplified NBME-style questions [6]. This raises the question of whether its emerging competitor, Google Gemini, can offer similar or improved educational utility.

Google Gemini has quickly gained widespread recognition due to its accessibility and versatility across various fields. Unlike many other AI models, Google Gemini does not require a subscription, making it a cost-effective tool for users. This affordability is particularly advantageous for students and educators, especially in low-income countries where access to expensive educational resources can be a significant barrier. By providing a free platform for generating educational content, Google Gemini has the potential to bridge gaps in medical education by offering students the opportunity to practice and improve their knowledge through high-quality, NBME-style questions without incurring additional costs. This makes it a valuable tool not only for individual learners but also for educational institutions seeking to enhance their examination resources [12].

However, a pertinent concern arises regarding the accuracy of the medical information that AI can provide within these clinical vignettes [9]. If Google Gemini can reliably generate questions that are medically accurate and adhere to the NBME Item-Writing Guide, it could serve as a valuable and accessible resource for medical students.

Given that previous research has primarily focused on ChatGPT-3.5’s efficacy in answering NBME questions correctly as well as generating NBME questions, this study aims to expand the scope by evaluating Google Gemini’s capabilities to generate NBME-style pharmacology questions that meet the NBME guidelines for question writing [6]. Further, our study’s objective is to provide feedback to enhance Google Gemini’s capabilities as a tool for medical education.

While ChatGPT has been widely studied, there is a significant gap in the literature regarding Gemini’s ability to generate board-standard assessment items. To our knowledge, this is the first structured evaluation of Google Gemini’s pharmacology question-writing quality using NBME criteria.

2. Methods

2.1. Part 1: Development of NBME-Style Pharmacology Multiple Choice Questions Utilizing Google Gemini ‘Prompt Design Strategies’

To develop prompts that lead Google Gemini to generate high-quality clinical vignettes, we adopted the following procedure:

The selection of organ systems and related drugs was designed to ensure a comprehensive evaluation across a wide range of pharmacological areas. Each drug was chosen to represent a specific organ system, allowing for a balanced assessment across common medical conditions and treatments. To ensure coverage of high-yield USMLE Step 1 pharmacology topics, we selected 10 medications from the following organ systems, with their given drug in parentheses: hematology/lymphatics (warfarin), neurology (norepinephrine), cardiovascular (metoprolol), respiratory (albuterol), renal (lisinopril), gastrointestinal (bisacodyl), endocrine (metformin), reproductive (norethindrone), musculoskeletal (cyclobenzaprine), and behavioral medicine (trazodone).

Following the selection of organ systems and corresponding medications, we applied prompt engineering techniques to generate NBME-style questions aligned with established item-writing standards. This process incorporated the “prompt design strategies” recommended by Google AI [13]. To formulate the most effective prompt for generating high-quality questions, we implemented the following steps:

First, a pharmacology expert created 12 NBME-style questions as a reference. Information technology (IT) experts then constructed an initial prompt modeled on the pharmacology expert’s questions using Google Gemini’s prompt design strategies. The following prompt design strategies were applied in developing the Google Gemini prompt: providing clear and specific instructions, specifying any constraints, defining the format of the response, and trialing different phrasing [13]. The prompts were then refined to better align with the NBME standards. At each refinement step, the generated questions were given to pharmacology experts, who evaluated their medical accuracy and clinical relevance. The pharmacology experts further fine-tuned these questions in accordance with the NBME Item-Writing Guide.

Following 12 iterations, the pharmacology experts reviewed the quality of the generated questions, and the refined prompt was established as the standard template for use with Google Gemini. The standard prompt is as follows, with brackets marking the variables that were filled in for each question (the topic and the medication name): ‘Can you provide an expert-level sample NBME question about the [mechanism of action, indication, OR side effect] of [drug] and furnish the correct answer along with a detailed explanation?’ Due to the probabilistic nature of large language models, the same prompt may yield different outputs across multiple runs. This variability was noted but not quantified in the current study design.

Ten medications representing high-yield USMLE organ systems (e.g., warfarin for hematology, metoprolol for cardiovascular) were selected to ensure breadth while maintaining feasibility for expert review. For each drug, one topic—mechanism of action, indication, or side effect—was randomly assigned and used to guide question generation and subsequent evaluation.
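The template and the random topic assignment described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the prompt wording and the drug list come from the text, while the function name `build_prompts`, the fixed seed, and the use of Python's `random` module are hypothetical choices, not the procedure the authors actually ran.

```python
import random

# Prompt wording quoted from the study; {topic} and {drug} are the bracketed variables.
PROMPT_TEMPLATE = (
    "Can you provide an expert-level sample NBME question about the "
    "{topic} of {drug} and furnish the correct answer along with a "
    "detailed explanation?"
)

TOPICS = ("mechanism of action", "indication", "side effect")

# Organ system -> representative drug, as listed in the Methods.
DRUGS = {
    "hematology/lymphatics": "warfarin",
    "neurology": "norepinephrine",
    "cardiovascular": "metoprolol",
    "respiratory": "albuterol",
    "renal": "lisinopril",
    "gastrointestinal": "bisacodyl",
    "endocrine": "metformin",
    "reproductive": "norethindrone",
    "musculoskeletal": "cyclobenzaprine",
    "behavioral medicine": "trazodone",
}


def build_prompts(seed=0):
    """Assign one random topic per drug and fill in the template.

    The seed is an assumption added here so the assignment is repeatable.
    """
    rng = random.Random(seed)
    prompts = {}
    for system, drug in DRUGS.items():
        topic = rng.choice(TOPICS)
        prompts[system] = PROMPT_TEMPLATE.format(topic=topic, drug=drug)
    return prompts


prompts = build_prompts()
print(prompts["endocrine"])
```

Fixing the seed only makes the topic assignment repeatable; it does not remove the run-to-run variability of the model's output noted above.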

The multiple-choice questions and explanations generated by Google Gemini were evaluated using a grading system adapted from the NBME Item-Writing Guide [14]. This guide offers comprehensive recommendations for constructing USMLE-style questions that effectively assess both foundational medical knowledge and clinical reasoning. Based on these standards, we adapted the following 16 evaluation criteria:

1. Give the correct answer with a medically accurate explanation.
2. The question stem avoids describing the class of the drug in conjunction with the name of the drug. Violations of this criterion typically involved explicit drug-class disclosure (e.g., Appendix A’s warfarin example).
3. The question applies foundational medical knowledge, rather than basic recall.
4. The clinical vignette is in complete sentence form.
5. The question can be answered without looking at the multiple-choice options, referred to as the “cover-the-option” rule.
6. Avoid long or complex multiple-choice options.
7. Avoid using frequency terms within the clinical vignette, such as “often” or “usually”; use “most likely” or “best indicated” instead.
8. Avoid “none of the above” in the answer choices.
9. Avoid nonparallel, inconsistent answer choices; all choices should follow the same format and structure.
10. The clinical vignette avoids negatively phrased lead-ins, such as “except”.
11. The clinical vignette avoids grammatical cues that can lead to the correct answer, such as ending the question stem with “an”, which rules out answer choices that begin with consonants.
12. Avoid grouped or collectively exhaustive answer choices. For instance, the answer choices are “a decrease in X”, “an increase in X”, and “no change in X”.
13. Avoid absolute terms, such as “always” and “never”, in the answer choices.
14. Avoid having the correct answer choice stand out, for example when one answer choice is longer and more in-depth than the others.
15. Avoid repeated words or phrases in the clinical vignette that clue the correct answer choice.
16. Create a balanced distribution of key terms in the answer choices, so that a test-taker cannot succeed by choosing the option that has the most in common with the others and avoiding the least similar ones [14].
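A few of these criteria concern surface wording and could, in principle, be pre-screened automatically before expert review. The sketch below is one such illustration, not part of the study's method: the function `lint_item`, the word lists, and the sample item are hypothetical, and only the criteria that reduce to simple text checks (7, 8, 10, 11, and 13) are covered. Criteria that require medical judgment, such as accuracy or clinical integration, are outside its reach.

```python
import re

FREQUENCY_TERMS = ("often", "usually")   # Criterion 7
NEGATIVE_LEAD_INS = ("except",)          # Criterion 10
ABSOLUTE_TERMS = ("always", "never")     # Criterion 13


def _has_word(text, words):
    """True if any of the words occurs as a whole word in the text."""
    lowered = text.lower()
    return any(re.search(rf"\b{re.escape(w)}\b", lowered) for w in words)


def lint_item(stem, options):
    """Return the rubric criterion numbers flagged by simple text checks."""
    flagged = set()
    if _has_word(stem, FREQUENCY_TERMS):
        flagged.add(7)
    if any("none of the above" in o.lower() for o in options):
        flagged.add(8)
    if _has_word(stem, NEGATIVE_LEAD_INS):
        flagged.add(10)
    # Criterion 11: a stem whose last word is "a" or "an" cues the answer.
    if re.search(r"\b(a|an)\s*[:?]?\s*$", stem.strip().lower()):
        flagged.add(11)
    if any(_has_word(o, ABSOLUTE_TERMS) for o in options):
        flagged.add(13)
    return flagged


# Hypothetical flawed item, written for this sketch only.
flawed_stem = (
    "A 60-year-old man usually takes his medication at night. "
    "All of the following are adverse effects except:"
)
flawed_options = ["Bleeding", "None of the above", "It always causes cough"]
print(sorted(lint_item(flawed_stem, flawed_options)))
```

A clean result from such a screen says nothing about the remaining criteria, so it would complement expert grading rather than replace it.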

2.2. Part 2: Evaluating Google Gemini-Generated Clinical Vignette and Answer Explanations

To evaluate the quality of the Google Gemini-generated questions and answers, the following steps were followed. The evaluation process was completed by a panel of two pharmacology experts with experience writing NBME-style pharmacology questions as part of the pharmacology curriculum at their respective universities. Both experts had over five years of NBME question-writing experience; a small, focused panel was chosen to allow detailed qualitative feedback alongside quantitative scoring. As part of the preliminary grading process, two randomly selected Google Gemini-generated pharmacology questions were first evaluated. The pharmacology experts individually graded the questions based on the 16 criteria and provided either a 0 or 1 for fulfillment of each criterion. This yields a per-item score ranging from 0 to 16.

Each of the 16 rubric criteria was scored using a binary (0/1) system. This approach was chosen to ensure clarity in applying the NBME rubric, reduce ambiguity in rater judgments, and facilitate inter-rater agreement. The binary method simplified consensus for nuanced criteria and supported the reproducibility of expert ratings. A higher score reflects that the Google Gemini-generated questions closely follow the NBME Item-Writing Guide. Following this preliminary scoring, the experts convened to reconcile any discrepancies and to discuss the rationale behind each score, thereby calibrating their evaluations for consistency. After this calibration phase, the experts independently assessed the remaining questions and provided both quantitative scores and qualitative feedback for improvement. In addition to the overall score per question, agreement between experts was also tracked for each individual criterion. For example, we calculated the agreement rate on Criterion 5 (“cover-the-option” rule) by recording how often both raters marked the same score (0 or 1) across the 10 questions. This allowed us to assess whether there was high consistency in recognizing pseudo-vignette questions, which do not require clinical reasoning to answer.
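The binary scoring and the per-criterion agreement rate described above amount to simple counting. The following sketch shows that arithmetic under stated assumptions: the function names and the three-question rating matrices are hypothetical illustrations, not the study's data.

```python
N_CRITERIA = 16


def item_score(ratings):
    """Sum of 16 binary ratings for one question (0 to 16)."""
    if len(ratings) != N_CRITERIA or any(r not in (0, 1) for r in ratings):
        raise ValueError("expected 16 ratings, each 0 or 1")
    return sum(ratings)


def criterion_agreement(rater_a, rater_b, criterion):
    """Fraction of questions on which both raters gave the same score.

    `criterion` is 1-based, matching the rubric numbering.
    """
    idx = criterion - 1
    matches = sum(a[idx] == b[idx] for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)


# Hypothetical ratings for three questions (one row per question).
rater_a = [
    [1] * 16,
    [1] * 4 + [0] + [1] * 11,   # fails Criterion 5
    [1] * 16,
]
rater_b = [
    [1] * 16,
    [1] * 4 + [0] + [1] * 11,   # fails Criterion 5
    [1] * 13 + [0] + [1] * 2,   # fails Criterion 14
]

print([item_score(q) for q in rater_a])
print([item_score(q) for q in rater_b])
print(criterion_agreement(rater_a, rater_b, 5))
print(criterion_agreement(rater_a, rater_b, 14))
```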

Finally, the pharmacology experts were asked to estimate the difficulty and complexity of each question. The panel was asked to rate the difficulty of each Google Gemini-generated question on a Likert scale using the following question: “On a scale from very easy to very difficult, how would you rate the difficulty of this question for students?” The responses then ranged from 1 to 5, with 1 being very easy and 5 being very difficult. For complexity, they answered: “On a scale from very simple to very complex, how would you rate the complexity of this question for students?” (1 = very simple; 5 = very complex).

3. Results

To assess the inter-rater reliability of the scores between the two pharmacology experts, Cohen’s kappa coefficient was calculated. The coefficient was 0.81, indicating strong agreement between the two experts beyond what would be expected by chance. The experts’ ratings for the generated questions were then averaged.
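For readers unfamiliar with the statistic, Cohen's kappa compares the observed agreement p_o with the agreement p_e expected by chance from each rater's label frequencies: kappa = (p_o - p_e) / (1 - p_e). The sketch below implements that formula directly. The ratings in it are hypothetical, and the text does not state which set of ratings entered the reported coefficient, so this shows the general calculation and does not reproduce the 0.81 value.

```python
def cohens_kappa(a, b):
    """Cohen's kappa for two raters' labels over the same items."""
    if len(a) != len(b) or not a:
        raise ValueError("both raters must label the same non-empty item set")
    n = len(a)
    # Observed agreement: share of items with identical labels.
    p_o = sum(x == y for x, y in zip(a, b)) / n
    # Chance agreement: product of the raters' marginal label frequencies.
    labels = set(a) | set(b)
    p_e = sum((a.count(label) / n) * (b.count(label) / n) for label in labels)
    if p_e == 1.0:
        # Both raters used a single identical label; treated here as perfect
        # agreement (an assumption, since kappa is undefined in this case).
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Hypothetical binary ratings for ten items.
expert_1 = [1, 1, 1, 1, 0, 0, 1, 1, 0, 1]
expert_2 = [1, 1, 1, 1, 0, 0, 1, 0, 0, 1]
print(round(cohens_kappa(expert_1, expert_2), 3))
```

In this toy example the raters agree on 9 of 10 items (p_o = 0.90) and chance agreement is 0.7 × 0.6 + 0.3 × 0.4 = 0.54, so kappa is 0.36 / 0.46, or about 0.78.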

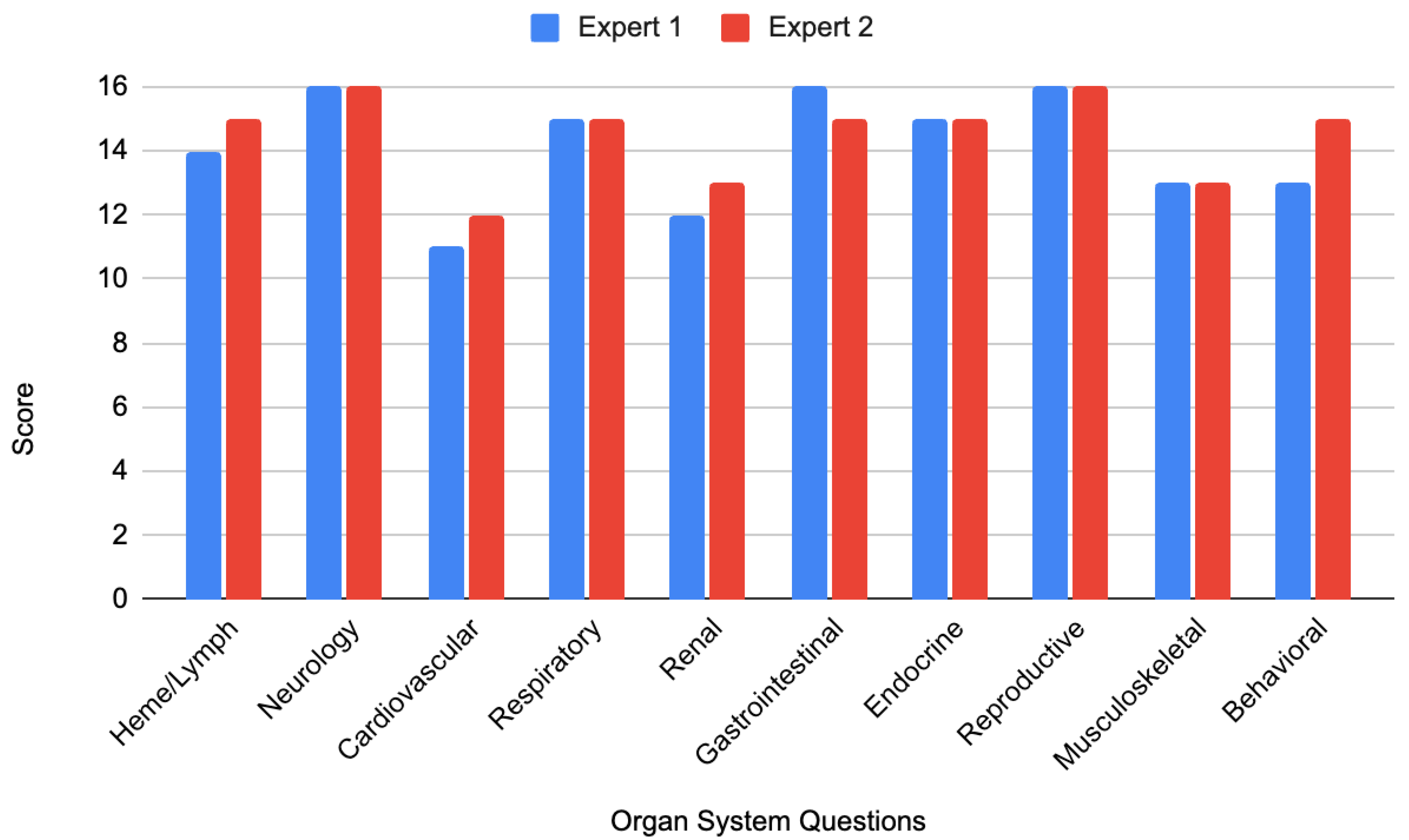

Expert 1 assigned an average score of 14.1 out of 16 (88.1%), while Expert 2 reported an average of 14.5 out of 16 (90.6%). Despite a high mean score (14.3/16, 89.3%), 20% of questions failed to integrate clinical reasoning (e.g., the metformin ‘pseudo vignette’ in Appendix B). The largest scoring discrepancy occurred for the question related to the behavioral system, which received a score of 13/16 from Expert 1 and 15/16 from Expert 2. This difference arose primarily from disagreement over whether the stem’s wording provided an inadvertent cue, affecting Criterion 14 scoring. Detailed scores by organ system are presented in Figure 1.

Next, researchers averaged the expert ratings for each organ system. The average score for each organ system was as follows: hematology/lymphatics 14.5/16, neurology 16/16, reproductive 16/16, cardiovascular 11.5/16, renal 12.5/16, gastrointestinal 15.5/16, musculoskeletal 13/16, and behavioral 14/16.
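The averaging behind these figures is plain arithmetic, sketched below with the only per-expert pair of item scores reported in the text (the behavioral question, 13/16 and 15/16) and the two per-expert averages. The helper names `mean_score` and `as_percent` are hypothetical.

```python
MAX_SCORE = 16


def mean_score(expert_1, expert_2):
    """Average of the two experts' scores for one question or organ system."""
    return (expert_1 + expert_2) / 2


def as_percent(score):
    """Express a score out of 16 as a percentage."""
    return 100 * score / MAX_SCORE


# Behavioral question: 13/16 from Expert 1 and 15/16 from Expert 2.
print(mean_score(13, 15))

# Per-expert averages reported in the Results.
print(round(as_percent(14.1), 1), round(as_percent(14.5), 1))
```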

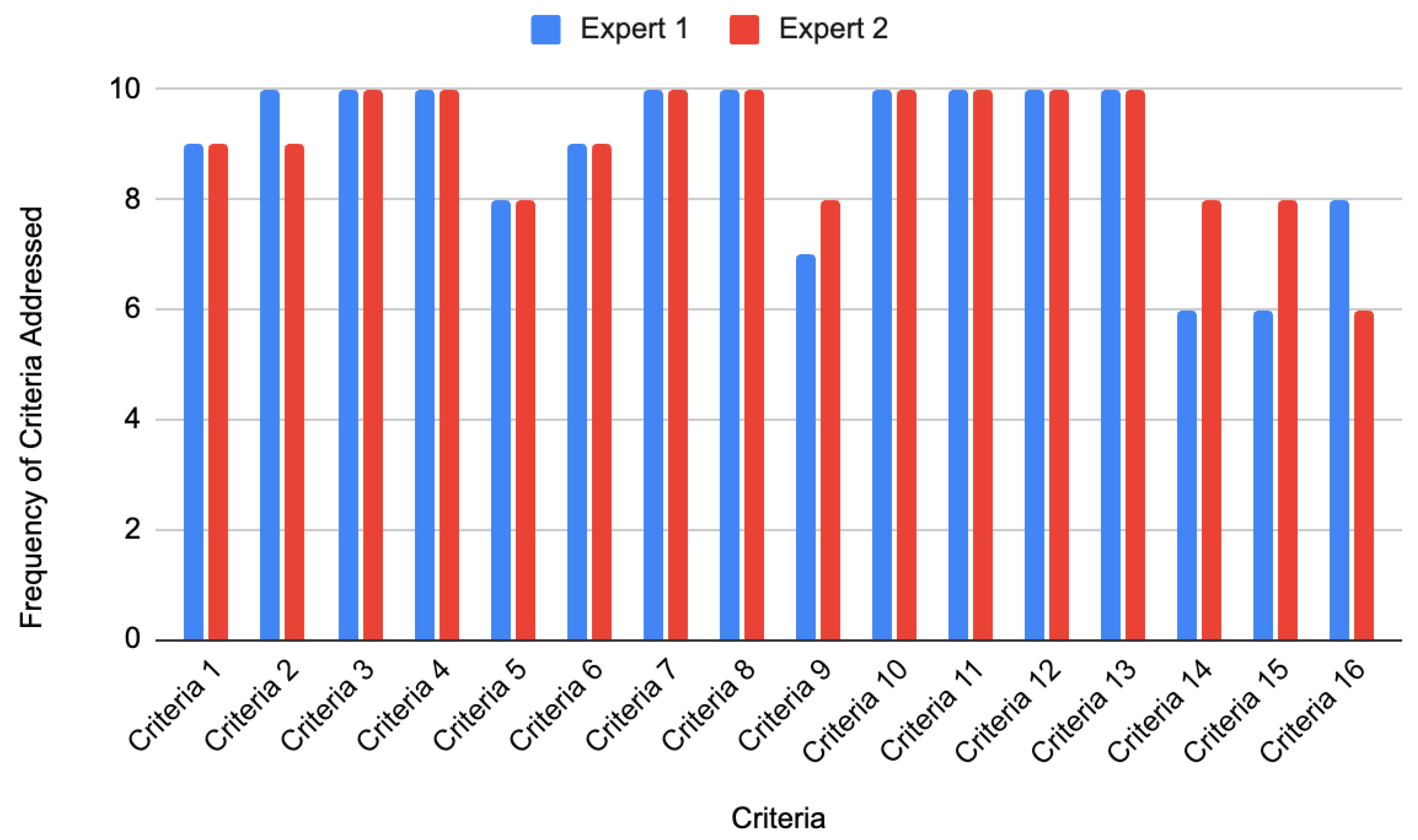

Researchers also analyzed how frequently Google Gemini met each of the 16 NBME criteria across all questions. These results are illustrated in Figure 2.

According to Expert 1, Google Gemini fully met the following criteria in all 10 generated questions: Criteria 2–4, 7–8, and 10–13. Criterion 1 (medical accuracy) and Criterion 6 (clarity of answer choices) were fulfilled in 9 out of 10 questions. Critically, a medical inaccuracy (hallucination) invalidated 1 of 10 questions (10%), rendering it unsuitable for educational use without expert verification. Criterion 5 (cover-the-option rule) failed in 2/10 questions, primarily due to vignettes lacking diagnostic ambiguity (e.g., Appendix B’s metformin question). These ‘pseudo vignettes’ did not require clinical integration for resolution. Criteria 9 and 11 were fulfilled in 7 out of 10 questions. Finally, Criteria 14 (distinctiveness of correct answer) and 15 (avoidance of repeated cues) were satisfied in 6 out of 10 questions. These results are presented in Figure 2. For Criterion 5, both experts gave a score of “1” (criterion met) to 8 out of the 10 questions. Importantly, their scoring for all 10 questions was identical, reflecting a 100% agreement rate for this criterion. This suggests strong consistency in identifying pseudo-vignettes and reinforces the reliability of the evaluation. The detailed agreement table is provided in Appendix B.

Additionally, according to Expert 2, Google Gemini fulfilled Criteria 3–4, 7–8, 10, 12, and 13 in all 10 questions. Criteria 1–2 and 6 were fulfilled in 9 out of the 10 questions. Criteria 5, 9, 14, and 15 were fulfilled in 8 out of the 10 questions. Lastly, Criterion 16 was fulfilled in 6 out of the 10 questions. These results can be seen in Figure 2.

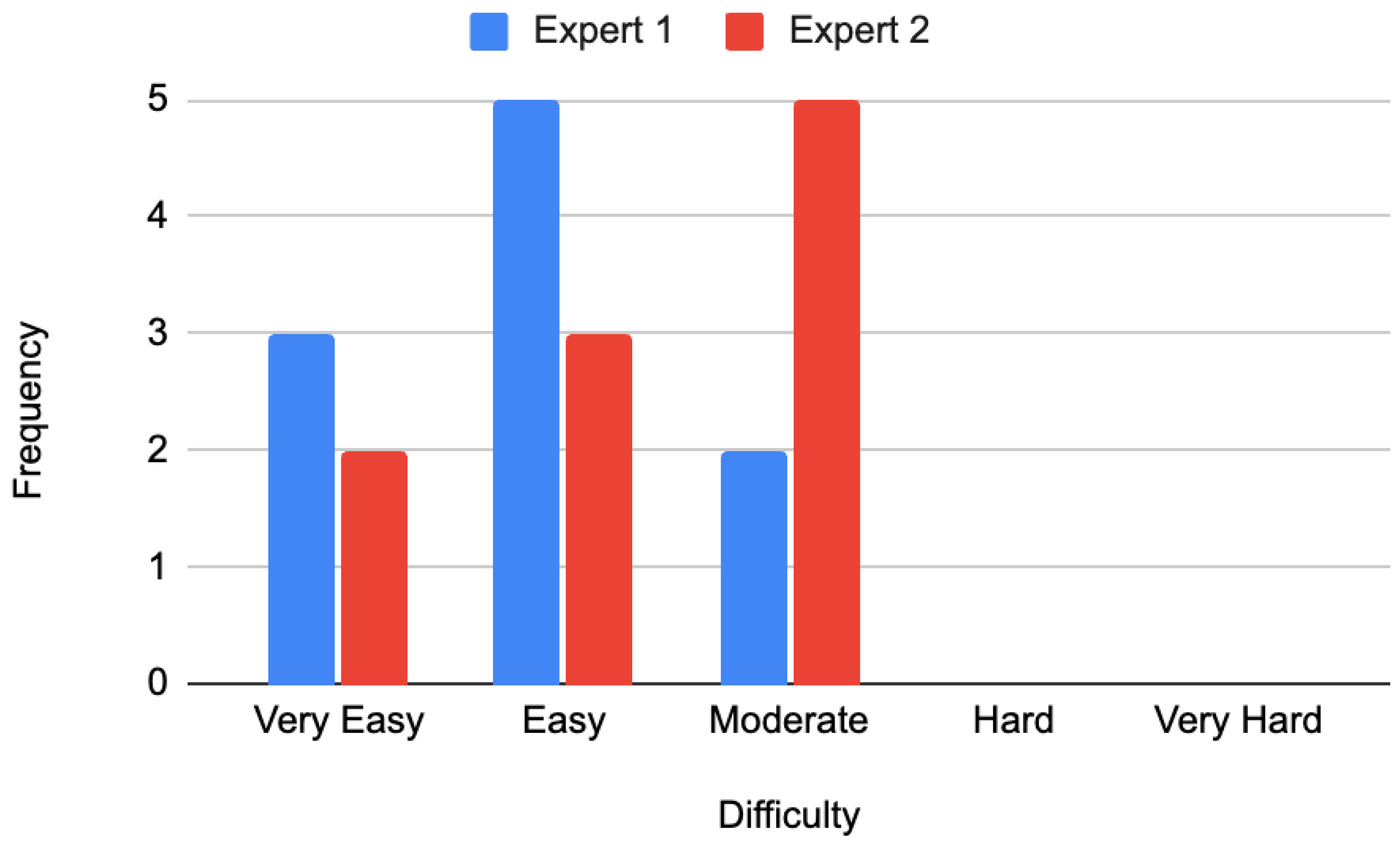

Regarding perceived difficulty, Expert 1 rated three questions as very easy, five as easy, and two as moderately difficult, with none rated as difficult or very difficult. Expert 2 rated two questions as very easy, three as easy, and five as moderately difficult. Similarly, none were rated as difficult or very difficult. The distribution of difficulty ratings is illustrated in Figure 3.

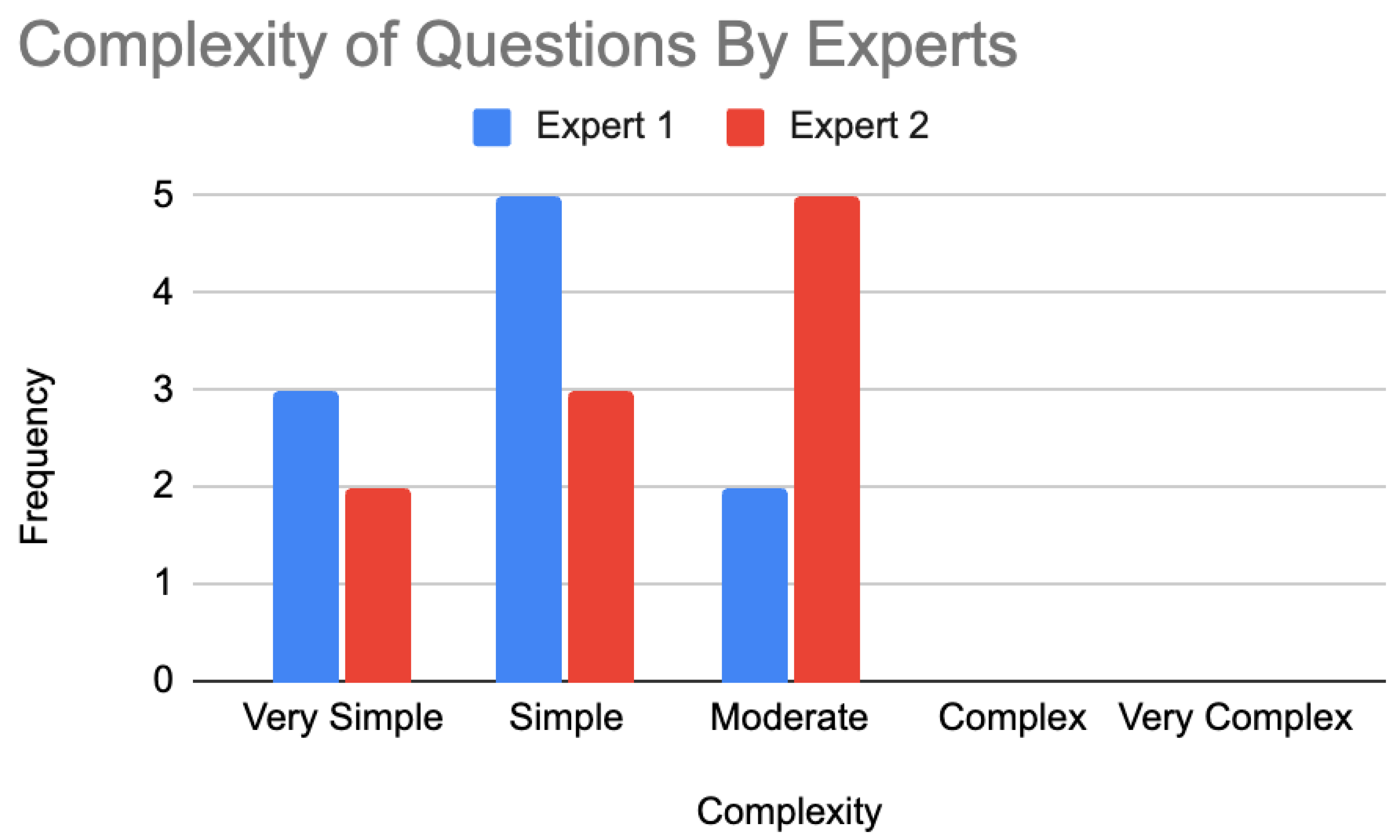

In terms of the complexity of the Google Gemini-generated questions, Expert 1 found three questions very simple, five questions simple, two questions moderate, and no questions complex or very complex. Expert 2 found two questions very simple, three questions simple, five questions moderate, and no questions complex or very complex. The evaluation of the complexity of these questions is presented in Figure 4.

4. Discussion

Given the average score of 14.3 out of 16 (89.3%) across the two experts, Google Gemini shows strong capability as a tool for generating NBME-standard questions. This performance surpasses ChatGPT-3.5’s reported average of 12.1/16 [6] on identical pharmacology metrics, though both models exhibit similar limitations in clinical reasoning depth (pseudo vignettes: Gemini 20% vs. ChatGPT-3.5 35%). Google Gemini fulfilled Criteria 3, 4, 7, 8, and 10–13, showing that it can generate questions that meet the grammatical and structural requirements of the NBME. This structural reliability means that, with improved clinical reasoning depth, Gemini’s output could serve as a useful first draft for educators preparing USMLE-style materials. However, improvement is needed in constructing answer choices and in avoiding cues that allow certain answer choices to be eliminated immediately.

According to both experts, Criterion 1, which assesses the medical accuracy of the pharmacology question, was met in 9 out of the 10 (90%) generated questions. Although the requirement was fulfilled 90 percent of the time, a question is immediately invalidated if it contains medically inaccurate information. For example, when asked to generate a question regarding albuterol and its mechanism of action, Google Gemini stated that albuterol is a phosphodiesterase enzyme inhibitor, leading to increased cAMP levels. Although albuterol does increase cAMP levels downstream, it does so through beta-2 receptor agonism, not through phosphodiesterase inhibition, which is the mechanism of theophylline [15]. Albuterol and theophylline are both medications used to treat conditions such as asthma [16]. Given the importance of medically accurate information as a source of learning, it is crucial that Google Gemini provides questions that correctly distinguish between similar medications so that students can differentiate these concepts in a clinical setting.

Previous research has demonstrated that AI models are prone to “hallucinations”, instances where the model generates factually incorrect information, particularly when differentiating between closely related concepts [17,18]. In the earlier example, Google Gemini correctly noted that albuterol increases cAMP levels but incorrectly attributed this effect to phosphodiesterase inhibition, a mechanism associated with theophylline. Although only one out of ten generated questions contained a medical inaccuracy, the use of AI-generated material in educational settings demands 100% factual accuracy.

The isolated error in the mechanism of action of albuterol highlights a critical concern: even infrequent factual inaccuracies undermine the reliability of AI-generated assessment items. In educational settings, particularly high-stakes examinations, 100% factual accuracy is essential; therefore, mechanisms for independent fact-checking are needed before deployment.

Furthermore, because large language models like Gemini produce probabilistic outputs, the same prompt may yield different results on repeated runs. This inherent variability raises concerns about reproducibility—an essential consideration for any educational tool intended for assessment. A question generated accurately once may be flawed in another instance using the same input. Future evaluations should assess the consistency and quality of multiple outputs per prompt to better understand and mitigate this variation.

To reduce the likelihood of such hallucinations, Google Cloud recommends a technique known as regularization, which involves supplying relevant domain-specific input to better constrain the model’s outputs [18]. In this context, regularization could involve prompting Google Gemini with example questions that clearly distinguish albuterol as a beta-2 receptor agonist and theophylline as a phosphodiesterase inhibitor. Providing such guided examples may help the model generate more accurate content and avoid conflating pharmacological mechanisms.
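One way to picture such guided prompting is to prepend the distinguishing facts to the standard prompt, as in the sketch below. In practice this is in-prompt guidance, similar to few-shot prompting. The two facts come from the text; the wrapper wording, the names `GUIDED_FACTS` and `build_guided_prompt`, and the choice to supply short facts in place of full example questions are assumptions made for illustration. Whether this reduces hallucinations was not tested in the study.

```python
# Standard prompt wording from the Methods, with the two variables as placeholders.
BASE_PROMPT = (
    "Can you provide an expert-level sample NBME question about the "
    "{topic} of {drug} and furnish the correct answer along with a "
    "detailed explanation?"
)

# Distinguishing facts stated in the Discussion.
GUIDED_FACTS = [
    "Albuterol is a beta-2 receptor agonist.",
    "Theophylline is a phosphodiesterase inhibitor.",
]


def build_guided_prompt(topic, drug, facts):
    """Prepend reference facts to the standard prompt (hypothetical wrapper)."""
    lines = ["Use the following reference facts and do not contradict them:"]
    lines += [f"- {fact}" for fact in facts]
    lines.append("")  # blank line between the guidance and the request
    lines.append(BASE_PROMPT.format(topic=topic, drug=drug))
    return "\n".join(lines)


guided_prompt = build_guided_prompt("mechanism of action", "albuterol", GUIDED_FACTS)
print(guided_prompt)
```

Full example questions written by a pharmacology expert could be substituted for the short facts without changing the structure.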

Criterion 2 evaluated whether Google Gemini can avoid describing the class of the drug in conjunction with the name of the drug. Expert 1 stated that Criterion 2 was met in all 10 questions, but Expert 2 stated that it was not met in the question on the indication of bisacodyl. The indication of bisacodyl for constipation was described in the question stem, which cued the test-taker to look for the answer choice describing immediate relief of constipation. Providing the drug class within the question stem gives the medical student a strong cue to look for matching characteristics of that drug class among the answer choices, eliminating the need to apply foundational medical knowledge. Ensuring that Google Gemini keeps reasonable ambiguity in the question stem regarding the class of the drug would strengthen its capability to generate strong NBME-standard pharmacology questions for medical students. For instance, a well-constructed question might state that a patient is taking warfarin without explicitly identifying it as a vitamin K antagonist. The student would then need to rely on their pharmacological knowledge to select the correct mechanism of action, such as “inhibition of vitamin K epoxide reductase.” In contrast, explicitly stating the drug class in the stem would provide a direct cue, undermining the item’s ability to test foundational understanding (see the example in Appendix A).

Criterion 5 gauged Google Gemini’s ability to generate questions that can be answered without referencing the multiple-choice options, often referred to as the “cover-the-option” rule. This criterion ensures that the question stem provides enough clinical and contextual information for the test-taker to formulate an answer independently, thereby assessing their ability to apply foundational knowledge rather than relying on pattern recognition. This closely aligns with Criterion 3, which evaluates whether the question requires the application of foundational medical knowledge instead of simple factual recall. According to both experts, Criterion 5 was met in 8 out of the 10 questions. For instance, when prompted to generate a question on the mechanism of action of metformin, Google Gemini created a vignette involving a 42-year-old woman with type 2 diabetes. However, the question merely asked for the drug’s mechanism of action without requiring the student to use any information from the vignette. Such a question exemplifies a “pseudo vignette”: a superficial clinical context that does not influence how the question is answered. This design fails to leverage clinical reasoning and instead tests rote memorization. Effective NBME-style questions should require test-takers to synthesize the clinical presentation with their knowledge of pharmacology to arrive at the correct answer. Examples that demonstrate how questions either meet or fail to meet this criterion are presented in Appendix B.

Criterion 6 assesses whether Google Gemini avoids generating long or overly complex answer choices. This standard was met in 9 out of 10 questions according to both experts. Criterion 14 evaluates whether the correct answer choice is conspicuously different from the distractors—often due to excessive length or specificity. This criterion was met in 6 out of 10 questions by Expert 1 and 8 out of 10 by Expert 2.

Longer or more detailed answer choices can inadvertently serve as cues, drawing disproportionate attention and undermining the question’s ability to assess actual knowledge. For example, in a question on the indication of lisinopril, Google Gemini generated five options: four listing a single indication and one listing two. The extended length and additional content of that answer may cue students toward it, particularly if they recognize one indication but not the other—thus compromising the question’s discriminative validity.

To better align with NBME standards, Google Gemini should ensure that all answer choices are similar in length and limited to a single concept. This improves fairness and better evaluates a student’s understanding of pharmacological principles.

Criterion 9 assesses Google Gemini’s ability to avoid nonparallel, inconsistent answer choices. Answer choices should follow a consistent theme centered on the clinical vignette and the pharmacological concept being assessed. For example, when asked to generate an NBME-standard question on the indications of metoprolol therapy, Google Gemini provided a clinical vignette of a 55-year-old woman with a history of hypertension and chronic heart failure presenting with dyspnea and fatigue. The question centers on a patient with a cardiovascular condition (heart failure), but the answer choices were relief of migraine headaches, treatment of acute allergic rhinitis, management of chronic obstructive pulmonary disease, control of hypertension and improvement of heart failure symptoms, and eradication of an H. pylori infection. Of these, control of hypertension and improvement of heart failure symptoms is the only choice that concerns a cardiovascular condition. Because the other choices address conditions of other organ systems, the correct answer can be singled out by any student who identifies the cardiovascular problem in the vignette. If all of the answer choices had been cardiovascular indications, the question would properly assess whether the student knows metoprolol’s indications for hypertension and heart failure.
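The correct option in this metoprolol example also stands out on purely mechanical grounds: it is the longest choice and the only one that joins two indications. The sketch below flags such options using the answer choices quoted above. The function `standout_options`, the 1.5× median-length threshold, and the "and" check are hypothetical heuristics chosen for illustration. They do not detect the topical mismatch across organ systems, which still requires expert judgment.

```python
from statistics import median

# Answer choices as reported for the metoprolol question.
METOPROLOL_OPTIONS = [
    "relief of migraine headaches",
    "treatment of acute allergic rhinitis",
    "management of chronic obstructive pulmonary disease",
    "control of hypertension and improvement of heart failure symptoms",
    "eradication of an H. pylori infection",
]


def standout_options(options, ratio=1.5):
    """Flag options that are much longer than typical or join two concepts.

    An option is flagged if its word count is at least `ratio` times the
    median word count, or if it contains the conjunction "and".
    """
    counts = [len(option.split()) for option in options]
    typical = median(counts)
    flagged = []
    for option, count in zip(options, counts):
        too_long = count >= ratio * typical
        two_concepts = " and " in f" {option.lower()} "
        if too_long or two_concepts:
            flagged.append(option)
    return flagged


print(standout_options(METOPROLOL_OPTIONS))
```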

Criterion 15 evaluates whether Google Gemini avoids repeating key words or phrases from the clinical vignette in the correct answer choice, which can inadvertently cue the test-taker. The inclusion of such cues can undermine the question’s ability to assess true medical knowledge by encouraging pattern recognition over conceptual reasoning.

For example, in a question on the indication of cyclobenzaprine, the vignette described a 50-year-old man with muscle spasms following heavy lifting and noted that he was prescribed cyclobenzaprine. The question then asked, “Cyclobenzaprine is most appropriate for which of the following therapeutic purposes?” with the correct answer being “relaxation of skeletal muscle spasms associated with acute low back pain.” The repetition of “muscle spasms” in both the stem and the answer choice provides a clear verbal cue, potentially allowing the student to select the correct option without applying deeper pharmacological reasoning.

To generate valid NBME-style questions, it is essential that Google Gemini avoids duplicating key terms between the vignette and the correct answer choice. This would help ensure that students are assessed on their understanding of pharmacological concepts rather than their ability to identify linguistic patterns.
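Overlap of this kind can be screened with a simple comparison of content words, as sketched below. The answer text is the one quoted above, while the vignette string is a paraphrase of the description in the text, not the verbatim generated item. The function names and the small stopword list are hypothetical.

```python
import re

# Minimal stopword list for this sketch; a fuller list would be needed in practice.
STOPWORDS = {"the", "and", "with", "for", "has", "after", "was", "are", "who"}


def content_words(text):
    """Lowercased alphabetic words of length 3+ that are not stopwords."""
    words = re.findall(r"[a-z]+", text.lower())
    return {w for w in words if len(w) > 2 and w not in STOPWORDS}


def shared_terms(vignette, answer):
    """Content words that appear in both the vignette and the answer choice."""
    return content_words(vignette) & content_words(answer)


# Paraphrase of the vignette described in the text (not the verbatim item).
vignette = (
    "A 50-year-old man has muscle spasms after heavy lifting "
    "and is prescribed cyclobenzaprine."
)
correct_answer = (
    "relaxation of skeletal muscle spasms associated with acute low back pain"
)

print(sorted(shared_terms(vignette, correct_answer)))
```

A non-empty result for the correct answer, combined with empty results for the distractors, would suggest the kind of verbal cue that Criterion 15 is meant to prevent.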

To illustrate the range in question quality, Appendix B presents examples of both a high-scoring and a low-scoring question generated by Google Gemini. These examples include evaluator notes and highlight common issues such as pseudo vignettes, cueing, and imbalanced answer choices. Providing these examples enhances transparency and offers practical insight into how the 16-item NBME-based rubric was applied.

Criterion 16 evaluates whether the number of key terms used in each answer choice is balanced. Maintaining a consistent distribution of clinically relevant terms across all options ensures that no single choice stands out, thereby preserving the integrity of the assessment.

For example, in a question on the indications of lisinopril, the vignette referenced a patient with a history of hypertension and heart failure—conditions for which lisinopril is commonly prescribed. However, only one of the five answer choices included these specific key terms, while the others did not. This discrepancy may cue the test-taker to the correct answer without requiring full comprehension of the drug’s indications.

To prevent this type of unintentional cueing, Google Gemini must ensure that key terms derived from the clinical vignette are either evenly distributed across all answer choices or excluded entirely. This helps maintain a level playing field and ensures that questions assess medical knowledge rather than test-taking strategy.

Overall, none of the Google Gemini-generated questions exceeded a moderate difficulty rating on the Likert scale (Figure 3). As the criteria discussed above show, some generated questions solely evaluated basic recall or provided immediate cues to the correct answer choice. Questions that require students to integrate medical knowledge with the provided clinical vignette would be at a more appropriate difficulty level [19,20]. Additionally, such more difficult questions are more effective at assessing the clinical reasoning skills that medical students will need in their future medical practice [21]. While binary scoring was sufficient for this pilot study, non-binary scales such as Likert ratings could capture additional nuance in future evaluations, particularly regarding dimensions like clinical integration and difficulty. Google Gemini’s limitations in embedding this level of reasoning, as well as its tendency to provide cues within the question stem or answer choices, contributed to the overall perception that the questions were less challenging than appropriate for high-stakes assessments like the USMLE Step 1.

5. Real-World Applicability

Despite its current limitations, Google Gemini demonstrates several promising applications in real-world medical education. It could serve as a first-step content generation tool for educators, helping to draft NBME-style questions that can later be refined, validated, and approved by trained faculty. Additionally, Gemini offers an accessible and cost-effective resource for medical students, particularly in low-resource settings where commercial board preparation materials may be unaffordable or unavailable.

However, due to issues such as occasional medical inaccuracies, the use of pseudo vignettes, and cueing in answer choices, Gemini-generated questions are not yet suitable for use as stand-alone assessment items. Effective integration of AI tools like Gemini into medical curricula would require faculty oversight, iterative validation, and refinement to ensure educational integrity and alignment with NBME standards.

To further illustrate these strengths and limitations, Appendix B provides side-by-side examples of both high-scoring and low-scoring Gemini-generated questions. These annotated examples include expert evaluator comments highlighting key features such as vignette quality, cueing issues, and clinical reasoning depth. Incorporating these examples enhances transparency and offers practical guidance for educators, instructional designers, and researchers aiming to evaluate or improve AI-generated assessment tools. Until such shortcomings are addressed, Gemini-generated content should be used with faculty supervision and not as a standalone substitute for validated board-preparation resources.

6. Conclusions

Based on the average score of 14.3 out of 16 between the two experts, Google Gemini demonstrates moderate proficiency in generating structurally sound questions that align with the NBME guidelines. However, beyond the face value of the high average score, Gemini’s questions lack the clinical reasoning depth and distractor quality of established resources like UWorld®, with 20% being non-diagnostic pseudo vignettes.

Google Gemini received 100% fulfillment in criteria 3, 4, 7, 8, and 10–13, showcasing its ability to provide structurally and grammatically sound questions according to the NBME guidelines. However, it struggled significantly with the answer choices. On one occasion, Google Gemini explained the drug class within the question stem, which immediately cued the single answer choice that described that same drug class and reduced the need to apply medical knowledge. Leaving the drug class unstated in both the question stem and the answer choices would avoid unnecessary cues that guide the medical student to the correct answer.
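As an illustration of how per-criterion fulfillment rates and a mean total score can be derived from binary rubric ratings, the following minimal Python sketch may be useful. It is not the procedure used in this study; the function names and the toy ratings (3 questions scored on 4 criteria) are hypothetical and chosen only to keep the example short.

```python
from statistics import mean


def criterion_fulfillment(scores):
    """Return the fraction of questions that met each rubric criterion.

    `scores` is a list with one entry per question; each entry is a list
    of 0/1 ratings, one per criterion (hypothetical data layout).
    """
    n_questions = len(scores)
    n_criteria = len(scores[0])
    return [sum(q[c] for q in scores) / n_questions for c in range(n_criteria)]


def mean_total(scores):
    """Return the mean number of criteria met per question."""
    return mean(sum(q) for q in scores)


# Hypothetical toy ratings: 3 questions x 4 criteria (not study data).
toy_scores = [
    [1, 1, 1, 0],
    [1, 0, 1, 1],
    [1, 1, 1, 1],
]

rates = criterion_fulfillment(toy_scores)
average_total = mean_total(toy_scores)
print(rates, average_total)
```

With a full 16-criterion rubric, the same functions would report which criteria reach 100% fulfillment and the mean total out of 16.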

On multiple occasions, Google Gemini failed to integrate the clinical vignette meaningfully into the question structure, resulting in what are commonly referred to as “pseudo vignettes.” In such cases, students could answer the question through simple factual recall without engaging in clinical reasoning or synthesizing information from the vignette. This diminishes the question’s educational value and misaligns with USMLE Step 1 priorities, where >60% of questions require clinical reasoning integration [5].

For Google Gemini to serve as an effective tool in medical education, it must generate questions that require students to interpret the clinical scenario and apply relevant pharmacological principles. Strengthening this integration would enhance the tool’s utility in preparing students for exams such as the USMLE Step 1 and support deeper clinical learning.

In addition to the pseudo vignettes, Google Gemini’s NBME-standard pharmacology questions fell short in the formatting of their answer choices. In some cases, answer choices were excessively long, which could give away the correct option. Google Gemini also occasionally failed to maintain parallelism or a balance of key terms across answer choices, unintentionally highlighting the correct one. These consistent cues led to easier questions that could be answered through pattern recognition rather than the application of medical knowledge. Answer choices that are balanced in length and contain a comparable number of key concepts are essential for questions of reasonable difficulty that properly assess the medical student’s knowledge.
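The length and key-term cues described above lend themselves to simple automated screening before expert review. The sketch below is one possible heuristic, not a validated instrument: the function names, the 1.5x length threshold, and the example answer choices are all assumptions made for illustration.

```python
def length_cue(choices, correct_index, ratio=1.5):
    """Return True if the correct option is much longer than the distractors.

    Length is measured in whitespace-separated words; `ratio` is an
    arbitrary, hypothetical threshold.
    """
    lengths = [len(choice.split()) for choice in choices]
    distractors = [n for i, n in enumerate(lengths) if i != correct_index]
    mean_distractor = sum(distractors) / len(distractors)
    return lengths[correct_index] > ratio * mean_distractor


def key_term_overlap(stem_terms, choices):
    """Count how many key terms from the stem each choice repeats."""
    return [
        sum(term.lower() in choice.lower() for term in stem_terms)
        for choice in choices
    ]


# Hypothetical answer choices; the first option is the keyed answer.
unbalanced = [
    "Blocks beta-1 adrenergic receptors in the heart, reducing heart rate and contractility",
    "Inhibits ACE",
    "Blocks calcium channels",
    "Activates alpha-2 receptors",
]
balanced = [
    "Blocks beta-1 receptors",
    "Inhibits ACE enzyme",
    "Blocks calcium channels",
    "Activates alpha-2 receptors",
]

unbalanced_flag = length_cue(unbalanced, correct_index=0)
balanced_flag = length_cue(balanced, correct_index=0)
overlap = key_term_overlap(["beta"], unbalanced)
print(unbalanced_flag, balanced_flag, overlap)
```

A flag from such a check would only mark an item for closer inspection; judging whether a cue actually compromises the question remains a task for expert reviewers.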

In summary, Google Gemini currently falls short of producing pharmacology questions that consistently meet NBME standards when compared to established board preparation resources. This limitation is primarily due to its insufficient integration of clinical vignettes and the frequent inclusion of cues that allow test-takers to identify the correct answer without applying clinical reasoning or foundational knowledge.

To serve as a reliable educational tool, Google Gemini must generate questions that demand the synthesis of clinical information with pharmacological knowledge. With targeted improvements in vignette integration, distractor construction, and factual reliability, Google Gemini could evolve into a robust adjunct tool for pharmacology education and USMLE preparation—provided expert oversight remains integral. Future evaluations should include larger item sets across multiple medical disciplines to establish Gemini’s broader applicability and reliability. Beyond expert-led assessments, future work should develop objective and scalable evaluation methods, including hybrid human–AI rubric scoring, blinded consensus evaluations, and validation through medical student performance. These approaches would strengthen reproducibility and external validity, ensuring greater confidence in the educational applicability of LLM-generated NBME-style questions.

7. Limitations and Future Direction

Artificial intelligence is a rapidly evolving technology that continuously improves its capabilities and addresses prior shortcomings. This progress must be considered when interpreting the findings of this study, especially as newer iterations of Google Gemini may have improved since data collection and manuscript preparation.

First, while our sample size was limited to 10 questions, this choice was intentional to prioritize depth over breadth in this pilot study. Each item underwent a detailed 16-criterion NBME rubric evaluation with binary scoring, calibration, and qualitative analysis by two experts, a process that was resource-intensive but ensured high inter-rater reliability (Cohen’s Kappa = 0.81). This design provided a rigorous proof-of-concept benchmark but is not intended as a definitive measure of Gemini’s performance. Future research will scale this framework to larger datasets (e.g., 100+ questions) using hybrid methodologies that combine automated checks for formatting and structural criteria with expert review for nuanced aspects like clinical reasoning and medical accuracy.

Second, the probabilistic nature of large language models means that identical prompts may yield different outputs across runs, raising concerns about reproducibility. In this study, one output was evaluated per drug-topic pair, which provided a snapshot of Gemini’s capabilities but not a measure of stability. Future work should systematically generate multiple outputs per prompt to quantify output stability, identify recurring patterns of hallucination (e.g., consistent misattribution of drug mechanisms), and assess practical reliability. For educational use, consistency across repeated generations may be as important as producing occasional high-quality items.

Third, expert evaluation may not reflect student performance; trials comparing Gemini-generated questions with UWorld® are warranted.

Although a standardized prompt was developed through iterative refinement and used consistently across all drug scenarios, Gemini’s probabilistic nature means that the quality, accuracy, or complexity of its generated questions may vary upon repetition. This raises concerns about reproducibility. Future studies should explore generating multiple outputs per drug to assess output stability and variation in quality.
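One way such output stability could be quantified is to score several generations per prompt with the same rubric and summarize the spread of the totals. The following is a minimal sketch under that assumption; the function name, the drug labels, and the rubric totals are hypothetical and do not come from this study.

```python
from statistics import mean, pstdev


def stability_summary(totals_by_prompt):
    """Summarize rubric totals across repeated generations of each prompt.

    `totals_by_prompt` maps a prompt label to the list of rubric totals
    obtained from repeated runs (hypothetical data layout).
    """
    return {
        prompt: {
            "mean": mean(totals),
            "sd": pstdev(totals),  # population SD across the observed runs
            "range": max(totals) - min(totals),
        }
        for prompt, totals in totals_by_prompt.items()
    }


# Hypothetical rubric totals (out of 16) for three runs per prompt.
toy_runs = {
    "drug_A": [14, 15, 13],
    "drug_B": [16, 16, 16],
}

summary = stability_summary(toy_runs)
print(summary)
```

A small standard deviation and range would suggest that a prompt yields consistently scored items, whereas a wide spread would indicate that a single evaluated output is not representative.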

Although calibration sessions and strong inter-rater agreement (Cohen’s Kappa = 0.81) support the reliability of our scoring, this design does not fully eliminate individual subjectivity—particularly for nuanced criteria such as pseudo-vignettes, cueing, or balance of key terms. Future studies could mitigate this limitation by employing larger reviewer panels, blinded consensus assessments, or structured group scoring methods (e.g., Delphi rounds). Such approaches would reduce individual variability, provide more representative consensus ratings, and strengthen the generalizability of the findings.

The evaluation process relied on two expert pharmacologists with experience in NBME-style question writing. While a calibration session was conducted to enhance consistency, applying the 16-item rubric still involves subjective interpretation, particularly for nuanced criteria such as identifying pseudo vignettes, assessing cueing, or determining the balance of key terms. Even though the inter-rater reliability was high (Cohen’s Kappa = 0.81), subtle biases may still have influenced the scoring. Future research should consider using larger reviewer panels, blinded consensus evaluations, or structured group scoring to reduce individual variability.
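For readers unfamiliar with the statistic, Cohen’s Kappa compares the observed agreement between two raters with the agreement expected by chance, computed as (p_o - p_e) / (1 - p_e). The sketch below implements this standard formula for binary ratings; the function name and the two rating vectors are hypothetical and are not the ratings collected in this study.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Compute Cohen's kappa for two equal-length lists of categorical ratings."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Ratings must be non-empty and of equal length.")
    n = len(rater_a)

    # Observed agreement: proportion of items rated identically.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Chance agreement: product of each rater's marginal proportions per label.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    labels = counts_a.keys() | counts_b.keys()
    p_e = sum(counts_a[label] * counts_b[label] for label in labels) / (n * n)

    if p_e == 1.0:
        # Both raters used one identical label throughout; kappa is undefined.
        return float("nan")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical binary ratings (1 = criterion met) from two raters.
ratings_a = [1, 1, 0, 1, 0, 1, 1, 0, 1, 1]
ratings_b = [1, 1, 0, 1, 1, 1, 1, 0, 1, 1]

kappa = cohens_kappa(ratings_a, ratings_b)
perfect = cohens_kappa([1, 0, 1, 0], [1, 0, 1, 0])
print(round(kappa, 3), perfect)
```

Because kappa corrects for chance agreement, it can be noticeably lower than raw percent agreement when one rating category dominates, which is common with binary rubrics where most criteria are met.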

This study also has a limited disciplinary scope, focusing exclusively on pharmacology questions. While this narrow focus allowed for in-depth expert analysis using a rigorous NBME rubric, it does not reflect Gemini’s broader potential. Future work should expand evaluation across multiple NBME domains (e.g., pathology, physiology, microbiology) and incorporate multimodal elements such as laboratory data and imaging, which are common in board exams. Advances in multi-task and multimodal learning frameworks may provide inspiration for broadening the evaluation scope.

Because of this pharmacology-only focus, the study does not evaluate Google Gemini’s performance in generating NBME-style questions in other core areas of medical education, such as pathology, physiology, microbiology, or biochemistry. Additionally, the generated vignettes did not incorporate more complex clinical elements like laboratory values or imaging findings, which are commonly seen in actual board exams. Broadening the scope to include these factors would provide a more comprehensive understanding of the model’s utility.

Another limitation is that the study did not evaluate real-world performance or educational impact. The generated questions were reviewed by experts but were not tested with students, so the effectiveness of Gemini-generated content in improving student learning, identifying knowledge gaps, or preparing for actual exams remains unknown. Future research should incorporate medical student participants and compare performance on Gemini-generated questions against established commercial question banks such as UWorld®.

Despite these limitations, this study provides a foundational framework for evaluating AI-generated assessment content and highlights key areas where further improvement is needed. As AI continues to evolve, ongoing research will be essential to ensure these tools can reliably support medical education without compromising accuracy or educational rigor.