Abstract

This paper explores and evaluates various machine learning methods with different text features for determining the authorship of texts, using the Azerbaijani language as an example. We consider artificial neural networks, convolutional neural networks, random forests, and support vector machines. These techniques are used with different text features: word length, sentence length, combined word length and sentence length, n-grams, and word frequencies. The models were trained and tested on the works of many famous Azerbaijani writers. The results of computer experiments comparing the various techniques and text features were analyzed, and the cases in which particular text features gave better results were identified.

Keywords:

authorship recognition of literary works; authorship attribution; author identification; text feature engineering; machine learning

MSC:

68Q32; 68T20

1. Introduction

Authorship analysis is widely used to identify the author of an anonymous or disputed literary work, especially in copyright disputes; to verify the authorship of suicide letters; to determine whether an anonymous message or statement was written by a known terrorist; to identify the authors of malicious computer programs, such as computer viruses and malware; and to identify the authors of certain Internet texts, such as e-mails, blog posts, and texts on Internet forum pages [1,2].

One of the important classes of problems in the authorship analysis of texts is authorship recognition of texts. Studies in the direction of text authorship recognition differ in the types of the text features, i.e., stylistic characteristics of the authors in the texts, genre and size characteristics of texts, the language, authorship identification approach used, etc. Among the scientific studies carried out on the identification of the author of texts, several works can be found related to the identification of the author of texts in newspapers [3,4,5,6,7,8,9,10] and the identification of the author of literary works [11,12,13,14,15].

Machine learning methods and models are widely used in text author recognition. Among these methods and models are support vector machine [4,5,6,11,16,17,18], naive Bayes [7,8,16], random forest [5,6,8,19], k-nearest neighbors [5,6,7], and artificial neural network [15,20,21]. The recognition of the author of the text can be considered in the example of texts in different languages, e.g., Arabic, Chinese, Dutch, English, German, Greek, Russian, Spanish, Turkish, and Ukrainian [3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,22,23,24,25,26]. Different types of text features, for example, frequencies of selected words or frequencies of some tags/signs that replace words (e.g., part-of-speech tags) and frequencies of their n-grams [3,4,6,8,14,16,22,23,27], frequencies of character n-grams [5,7,9,10,12,17,24,25,28], and frequencies of word lengths [13], can be used in author identification.

In the present study, different machine learning methods and models were compared when used with different feature groups, each consisting of different types of text features, for recognizing the authorship of texts. The comparison is based on the results of computer experiments on large and small literary works of several Azerbaijani writers.

The rest of this article is structured as follows: In Section 2, the considered authorship recognition problem is discussed. In Section 3, various text feature types used in the study are given. In Section 4, feature selection procedures used in the study are described. In Section 5, feature groups used in the study are depicted. In Section 6, the dataset and characteristics of the recognition methods are given. In Section 7, analysis and discussion of the results of computer experiments are carried out. Finally, we draw our conclusions in Section 8 and provide several ideas for future work.

2. Purpose of the Study

There are certain categories of problems in the direction of authorship analysis of texts, namely:

- authorship verification of texts—determination of whether a given text was written by a certain person.

- authorship attribution of texts, author recognition of texts, or authorship identification—determination of the author of a given text from predetermined, suspected candidate authors.

- plagiarism detection—detection of similarities between two given texts.

- author profiling—detection of author’s characteristics or building author profile consisting of some characteristics—age, gender, education level, etc. based on the given text.

- detection of stylistic inconsistencies within a text—detection of text parts that do not correspond to the general writing style of the text that is written by more than one author.

The purpose of the study is to solve the problem of determining the authors of texts: the author of a given text has to be determined from among candidate authors who are known a priori. It is possible that the author of the text is not among these candidates. In the considered research study, there is a set of texts written by the candidate authors. The author of a text is determined using computer models that compare the stylistic quantitative characteristics of the given text with the stylistic quantitative characteristics of the texts of the candidate authors.

This evaluation can be performed with the help of machine learning methods. Each machine learning method can be used with different sets of text features. In the present study, frequencies of word lengths, frequencies of sentence lengths, frequencies of character n-grams, statistical characteristics of character n-gram frequencies in the text, and frequencies of selected words were used, where a word length, a sentence length, and a character n-gram refer to the number of letters in a word, the number of words in a sentence, and a given combination of given characters, respectively. In addition to the frequencies of the selected words in the text whose author is to be determined, the frequencies of these words in the candidate authors’ texts in the training set were also used as text features in some feature groups. The generated feature sets were used with artificial neural network, support vector machine, and random forest models. In addition, a character n-gram frequency feature group was also used with a convolutional neural network in the form of a two-dimensional matrix.

A comparative analysis of different feature sets with different machine learning methods and models was performed based on the results of computer experiments conducted on the example of works of literary fiction in the Azerbaijani language by eleven Azerbaijani authors.

The results of the conducted research study on feature types, feature selection procedures, and machine learning techniques can be used for author recognition in many other languages.

3. Types of Text Features

Below, it is assumed that the author of a given text is one of a certain limited number of candidate authors, and some other texts written by these candidate authors are known in advance. Let us adopt the following notation.

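Consider the set of given candidate authors $A = \{a_1, a_2, \dots, a_L\}$, where $L$ is the number of candidate authors, and let us denote the set of texts of author $a_l$ by $T_l = \{t_{l,1}, t_{l,2}, \dots, t_{l,m_l}\}$, where $m_l$ is the number of texts of the author $a_l$, $l = 1, 2, \dots, L$.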

The set of all texts with known authors, $T$, is divided into two non-intersecting subsets, $T = T^{train} \cup T^{test}$, where $T^{train}$ is the training set and $T^{test}$ is the test set.

Text feature groups consist of the following text characteristics, which are considered by many researchers to be important features and have a distinctive character among authors.

3.1. Sentence Length Frequency

By sentence length, we mean the number of words in a sentence [29]. The frequency of sentences of a certain length in a given text is calculated by dividing the number of sentences of that length by the total number of sentences in the text [30].

3.2. Word Length Frequency

By word length, we mean the number of letters in a word [13]. The frequency of words of a given length in a given text is calculated by dividing the number of words of that length by the total number of words in the text.
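The two length-based definitions in Sections 3.1 and 3.2 can be illustrated with a minimal Python sketch. The sentence splitter (sentences end at `.`, `!` or `?`) and the tokenizer (a word is a maximal run of letters) are assumptions made for illustration only; the function names are hypothetical and are not taken from the study.

```python
import re
from collections import Counter


def split_sentences(text):
    # Assumption: a sentence ends at '.', '!' or '?'.
    parts = re.split(r"[.!?]+", text)
    return [p.strip() for p in parts if p.strip()]


def tokenize(text):
    # Assumption: a word is a maximal run of letters. The pattern is
    # Unicode-aware, so Azerbaijani letters such as 'ə' are kept.
    return re.findall(r"[^\W\d_]+", text)


def sentence_length_frequencies(text):
    # Sentence length = number of words in the sentence.
    lengths = [len(tokenize(s)) for s in split_sentences(text)]
    lengths = [n for n in lengths if n > 0]
    total = len(lengths)
    counts = Counter(lengths)
    return {n: c / total for n, c in counts.items()} if total else {}


def word_length_frequencies(text):
    # Word length = number of letters in the word.
    words = tokenize(text)
    total = len(words)
    counts = Counter(len(w) for w in words)
    return {n: c / total for n, c in counts.items()} if total else {}
```

Each function returns a mapping from a length to its relative frequency in the text, so the values of one mapping sum to 1 for a non-empty text.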

3.3. Character n-Gram Frequency

In the scientific literature, a character n-gram is considered to mean any given combination of the given characters [12,17,24,25].

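Let us denote the character alphabet set, i.e., the set of all character 1-grams from which the characters in any character n-gram are selected, as follows:

$$C = \{c_1, c_2, \dots, c_m\},$$

where $m$ is the number of characters in the alphabet.

Hereafter in the article, the notation $\mathbf{i} = (i_1, i_2, \dots, i_n)$ will be used for an $n$-dimensional multi-index. Here, each of the indices $i_1, i_2, \dots, i_n$ can take a value in the set $\{1, 2, \dots, m\}$, hence $\mathbf{i} \in \{1, 2, \dots, m\}^n$.

From here on, we use the terms n-gram and character n-gram interchangeably. An n-gram with an arbitrary multi-index $\mathbf{i}$ whose characters are chosen from the character alphabet set $C$ will be written as $g_{\mathbf{i}} = c_{i_1} c_{i_2} \dots c_{i_n}$, where $\mathbf{i} \in \{1, 2, \dots, m\}^n$.

Let us denote the set of all character n-grams as follows:

$$G_n = \{\, g_{\mathbf{i}} : \mathbf{i} \in \{1, 2, \dots, m\}^n \,\}.$$

The frequency of an arbitrary character n-gram $g_{\mathbf{i}}$ with $n$ characters in a given text is calculated by dividing the number of times that $g_{\mathbf{i}}$ occurs by the total number of character n-grams in the text.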
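As a rough illustration of this definition, the following Python sketch counts character n-grams with a sliding window. It assumes that only windows made up entirely of alphabet characters count as n-grams, so spaces and punctuation break an n-gram; this handling, and the function name, are assumptions for illustration.

```python
from collections import Counter


def char_ngram_frequencies(text, n, alphabet):
    # Assumption: a window counts as a character n-gram only if all of
    # its characters belong to the given character alphabet set.
    allowed = set(alphabet)
    counts = Counter()
    for i in range(len(text) - n + 1):
        gram = text[i:i + n]
        if all(ch in allowed for ch in gram):
            counts[gram] += 1
    total = sum(counts.values())
    # Frequency = occurrences of the n-gram / total number of n-grams.
    return {g: c / total for g, c in counts.items()} if total else {}
```

N-grams that never occur in the text are simply absent from the returned mapping and can be treated as having frequency 0.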

3.4. Variance of Character n-Gram Frequencies

Statistical characteristics (e.g., variances) of character n-gram frequencies in the separate parts of an arbitrary given text can be used as text features.

If we calculate the frequencies of an arbitrary character n-gram in the separate parts of a given text and find the variance [30] of these frequencies, this variance shows how evenly the character n-gram occurs in the separate parts of the text (at the beginning, in the middle, and at the end), i.e., the stability of the character n-gram in the text. In other words, if the variance of the frequencies of a character n-gram calculated in this way is small, this n-gram can be considered stable in that text. Similarly, if we calculate the frequency of an arbitrary character n-gram in each of the texts of a certain candidate author in the training set and find the variance of these frequencies, a small value of this variance indicates that the character n-gram characterizes the texts of that author well. Alternatively, suppose we combine the known texts of each candidate author into a single text per candidate author (for L authors, we obtain L texts). If the variance of an arbitrary character n-gram in one of these texts is small and its variances in the other texts are large, this character n-gram characterizes one candidate author better than the others, so it can be used effectively in the considered author recognition problem. The variance values, which indicate how character n-grams are distributed in a certain text, i.e., the stability of the character n-grams, are therefore informative for author recognition. Hence, we considered that, along with the frequencies of character n-grams in a given text, their variances in the text can be used as text features as well. In other words, by dividing the given text into a certain number of separate, non-intersecting parts and calculating the frequencies of an arbitrary character n-gram in these parts, the variance of these frequencies can be used as one of the features of that text.

To calculate the values of the statistical characteristics of any character n-gram in a given text for an arbitrary n, the text must first be divided into a certain number of non-intersecting parts of almost equal size, the frequencies of that character n-gram in each of these parts must be found, and the variance of these frequencies must be calculated.
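The three steps just described (split the text, compute the frequency in each part, take the variance) can be sketched in Python as follows. The number of parts, splitting by character position, and the use of the population variance are assumptions for illustration; the study may use other choices.

```python
from statistics import pvariance


def split_into_parts(text, parts):
    # Split the text into non-overlapping parts of almost equal size.
    size, extra = divmod(len(text), parts)
    out, start = [], 0
    for k in range(parts):
        end = start + size + (1 if k < extra else 0)
        out.append(text[start:end])
        start = end
    return out


def ngram_frequency(text, gram):
    # Occurrences of `gram` divided by the number of n-gram windows.
    n = len(gram)
    total = len(text) - n + 1
    if total <= 0:
        return 0.0
    hits = sum(1 for i in range(total) if text[i:i + n] == gram)
    return hits / total


def ngram_frequency_variance(text, gram, parts=3):
    # Assumption: population variance over the per-part frequencies.
    freqs = [ngram_frequency(p, gram) for p in split_into_parts(text, parts)]
    return pvariance(freqs)
```

A value close to 0 means the n-gram occurs at a similar rate in every part of the text, which is the sense of stability used above.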

3.5. Word Frequency

Word frequencies in texts are important features that can be related to authors [3,4,14,22]. The frequency of a particular word in a given text is calculated by dividing the number of times that word occurs by the total number of words in the text.

The results of our research (see Section 7) show that recognition efficiency improves if the frequency of an arbitrary word in a given text is used among the text features together with the frequencies of that word in the unified texts of the candidate authors. By the unified text of a candidate author, we mean the text obtained by merging the author’s texts in the training set. In addition to the frequencies of a given word in the unified texts of the candidate authors, the frequency of this word in the unified text obtained by merging all the texts in the training set can be used among the text features. The rules for calculating the frequencies of an arbitrary word in the unified texts of the candidate authors and in the text obtained by merging all the texts of the training set are described below.

The total usage frequency of an arbitrary word by any author is obtained by dividing the total number of occurrences of that word in the author’s texts in the training set by the total number of words in the author’s texts in the training set. The total frequency of an arbitrary word in all texts in the training set is calculated by dividing the total number of occurrences of that word in the texts in the training set by the total number of words in the texts in the training set.
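The following Python sketch illustrates these frequency rules for a single word. It assumes the training set is given as a mapping from an author name to a list of already tokenized texts; this data layout, the function names, and the ordering of the resulting features are hypothetical.

```python
def word_frequency(words, word):
    # Frequency of `word` in one tokenized text.
    return words.count(word) / len(words) if words else 0.0


def unified_frequencies(train, word):
    # train: dict mapping author -> list of tokenized texts (assumption).
    per_author = {}
    all_hits = all_total = 0
    for author, texts in train.items():
        hits = sum(t.count(word) for t in texts)
        total = sum(len(t) for t in texts)
        # Total usage frequency of the word by this author.
        per_author[author] = hits / total if total else 0.0
        all_hits += hits
        all_total += total
    # Total frequency of the word in the whole training set.
    overall = all_hits / all_total if all_total else 0.0
    return per_author, overall


def word_features(text_words, train, word):
    # Features for one word: its frequency in the given text, in each
    # author's unified text (authors in sorted order), and overall.
    per_author, overall = unified_frequencies(train, word)
    by_author = [per_author[a] for a in sorted(per_author)]
    return [word_frequency(text_words, word)] + by_author + [overall]
```

For L candidate authors, this yields L + 2 values per selected word.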

4. Feature Selection Procedures

Various feature selection procedures have been proposed [1,2,6,16,31]. Here, two different feature selection procedures for word selection and two for selecting character n-grams whose frequencies will be used as text features are proposed, as described below. Recall that these feature selection procedures are performed on the training set texts whose authors are known.

4.1. Procedure for Selecting Frequently Used Words by Authors (Procedure 1)

When this procedure is used to select the words whose frequencies serve as text features in author recognition, the total frequencies of the words used by each of the authors are taken into account separately. This is how the first feature selection procedure differs from the second (see Section 4.2): in procedure 2, word selection is based on the total number of times the words are used in the texts of the training set, without considering the authors of the texts, whereas in procedure 1, the most, average, or least used words of each author are selected, and the words from the authors’ lexicons are then combined into a final set of words.

The determination of the most or average or least used words in the authors’ lexicons is based on the total usage frequencies of the words in the author’s , texts, here , where is the set of texts in the training set, is the set of the author’s texts in the dataset , and is the number of texts of in dataset T. Let us denote the total usage frequency of an arbitrary word in author’s texts by , where is the set of all words in the training set , and .

In order to select the most or average or least used words by an author from among the elements of the word set , at first, has to be sorted according to the descending order of the usage frequency of the words by the author , where . Let us denote the set of words arranged according to the descending order of usage frequency of the words by the author by . For given consider the set of most frequently used words of author as and the final set of most frequently used words of authors as , where

The most frequently used words of authors should be selected in such a way that authors’ lexicons do not intersect, i.e., .

It is possible that the most used words of a certain author , although not included in the set of most used words of , are also used a lot by another author , where . These kinds of words may not have a discriminatory character between authors and . Therefore, in the author recognition problem considered in the study, in addition to the most frequently used words in the authors’ lexicons, the selection of words that are used on average was also used. These words are neither at the beginning nor at the end of a sorted list , where . This is analogous to the way described above, where the most frequently used words of authors are selected, except the following Formula (4) has to be used instead of the aforementioned (2):

where indicates an integer obtained as a result of rounding down an arbitrary positive number. For example, , .

Formula (4) was obtained by only changing the indices of words, i.e., j, in the set of words in Formula (2), where . This is an analogous operation not only for the most or average used words but also the words in other percentiles, e.g., those with a frequency higher than 75% can be selected.
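Procedure 1 can be sketched in Python under several stated assumptions. Disjoint lexicons are enforced here by skipping words already selected for an earlier author, which makes the result depend on the author order. The "middle" window is taken as k consecutive words centered in the sorted list, with the start index rounded down, and ties are broken alphabetically. None of these details, nor the function name, is confirmed by the text above.

```python
from collections import Counter


def select_words_per_author(train, k, mode="high"):
    # train: dict mapping author -> list of tokenized texts (assumption).
    vocab = sorted({w for texts in train.values() for t in texts for w in t})
    selected, taken = [], set()
    for author in sorted(train):
        counts = Counter(w for t in train[author] for w in t)
        # Sort the whole vocabulary by this author's usage, descending.
        # Counts and frequencies give the same order for one author.
        ranked = sorted(vocab, key=lambda w: (-counts[w], w))
        # Assumption: keep lexicons disjoint by skipping taken words.
        ranked = [w for w in ranked if w not in taken]
        if mode == "middle":
            # Assumption: centered window, start index rounded down.
            start = max((len(ranked) - k) // 2, 0)
        else:
            start = 0
        picked = ranked[start:start + k]
        selected.extend(picked)
        taken.update(picked)
    return selected
```

With L authors and k words per author, the final set contains at most L × k words; it can be smaller when an author's remaining list is shorter than k.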

4.2. Procedure for Selecting Frequently Used Words in the Training Set (Procedure 2)

When this procedure is used to select the words whose frequencies serve as text features in author recognition, the total frequencies of the words in the training set are taken as a basis, without taking the authors into account.

Let us denote the total usage frequency of an arbitrary word in by , where is the set of all words in the training set , and . When using this procedure, the set of all the words in the texts is at first sorted according to the decreasing order of the total usage frequency in the training set :

where .

The set of most frequently used given words in the training set is as follows:

In the description of the first feature selection procedure (see Section 4.1), we noted that some of the most frequently used words of one author may be used a lot by another author, and in this case, these words may not be discriminative between these authors. Therefore, in the first feature selection procedure, along with the most frequently used words by the authors, words used as average were also selected. A similar situation may arise when using feature selection procedure 2. It is possible that some words, which are generally the most used words in the training set, are commonly used by more than one author; thus, they may not have a discriminatory character. Therefore, in addition to selecting the most used words in the training set, the selection of words that are used on average, i.e., are neither at the beginning nor at the end of , is also employed. The set of these words is as follows:
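As an informal illustration of Procedure 2, the Python sketch below ranks all training-set words by total usage and then takes either the first k words or k words from the middle of the ranking. The centered window used for the middle selection and the alphabetical tie-breaking are assumptions; the function names are hypothetical.

```python
from collections import Counter


def rank_by_total_frequency(train_texts):
    # train_texts: list of tokenized texts, authors ignored.
    counts = Counter(w for t in train_texts for w in t)
    # Descending total usage; ties broken alphabetically (assumption).
    return sorted(counts, key=lambda w: (-counts[w], w))


def top_k(ranked, k):
    # The k most frequently used items in the training set.
    return ranked[:k]


def middle_k(ranked, k):
    # Assumption: k items centered in the ranking, i.e. neither at the
    # beginning nor at the end, with the start index rounded down.
    start = max((len(ranked) - k) // 2, 0)
    return ranked[start:start + k]
```

The same ranking and slicing would apply unchanged to character n-grams instead of words, since the functions only need countable items.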

4.3. Procedure for Selecting Frequently Used Character n-Grams in the Training Set (Procedure 3)

During the selection of character n-grams (the frequencies of which are to be used as text features in author recognition), with the help of this procedure, the total frequencies of character n-grams in the training set are taken as a basis without taking into account the authors. During the execution of this procedure, the set of all character n-grams have to be sorted according to the decreasing order of the total usage frequency in the training set. The characters in these character n-grams, each with characters, are selected from the character alphabet set . Let us denote this obtained sorted set by . Consider the set of most or average or least frequently used character n-grams used most or average or least in the training set as .

We will denote the total usage frequency of a certain character n-gram in the texts in the training set by .

The set of all character n-grams obtained by sorting the character n-grams in the order of decreasing total usage frequency in the training set is as follows:

is the multi-index of a given arbitrary character n-gram numbered with in the set of character n-grams , where .

The set of most used character n-grams in the training set is as follows:

Unlike feature selection procedure 2, feature selection procedure 3 is used to select character n-grams rather than words. In the description of the second feature selection procedure, it was noted that the most used words in the training set may not be discriminative among authors; therefore, words with average usage in those texts also need to be selected. The same applies to the third feature selection procedure, which is used to select character n-grams.

The set of character n-grams that are used on average, which means they are neither at the beginning nor at the end of in the texts in the training set, is as follows:

4.4. Procedure for Selecting Character n-Grams with Different Characterizing Degrees of Authors (Procedure 4)

In solving author recognition problems, text features should be used such that the values of these features are similar in the texts of one author and different in the texts of different authors. In other words, characterizing degrees of authors per feature, the values of which are used in author recognition, have to differ from each other. This feature selection procedure was proposed for the selection of character n-grams for which the characterizing degrees of the authors differ.

During the execution of this procedure, the degrees to which a given n-gram does not characterize each author (in the sense defined below) are determined first, and their complements, the characterizing degrees, are derived from them. Then, an indicator of how much the characterizing degrees of the authors differ from each other for that n-gram is calculated in one of the ways given below. In the study, different metrics were used to calculate this indicator.

We consider the degree of not characterizing a given author per an arbitrary n-gram as the average difference of characterizing degrees (mean of the pairwise differences of these frequencies) of the corresponding frequencies in the texts of author as:

where are the frequencies of n-gram in the texts of author numbered with accordingly, . Considering that for the frequency of an arbitrary in a given text is true, it is clear that . Then, the characterizing degree of an author per a n-gram is , where .

For simplicity, the characterizing degrees of the authors per a n-gram is sorted in decreasing order: where .

The difference of characterizing degrees of the authors for an arbitrary character n-gram can be defined as one of the following:

As the difference of characterizing degrees per an n-gram can be used.

Sorted set of character n-grams ordered in the decreasing order of the difference indicators of characterizing degrees of authors per character n-grams in is as follows:

is the multi-index of a given arbitrary character n-gram numbered with in , where .

The set of character n-grams with high discrimination indicator—characterizing degree difference among authors in the training set—is as follows:
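The idea behind Procedure 4 can be sketched in Python as follows. The degree of not characterizing an author is taken as the mean absolute pairwise difference of the n-gram's frequencies in that author's texts, and the characterizing degree as its complement. The exact form of the difference indicator is an assumption here: it is computed as the mean absolute pairwise difference of the sorted characterizing degrees, optionally restricted to the best-characterized authors. All names are hypothetical.

```python
from itertools import combinations


def mean_pairwise_abs_diff(values):
    # Mean of |x - y| over all unordered pairs; 0 if fewer than 2 values.
    pairs = list(combinations(values, 2))
    if not pairs:
        return 0.0
    return sum(abs(x - y) for x, y in pairs) / len(pairs)


def characterizing_degree(freqs_in_author_texts):
    # Complement of the degree of not characterizing the author.
    return 1.0 - mean_pairwise_abs_diff(freqs_in_author_texts)


def discrimination_indicator(freqs_by_author, top=None):
    # freqs_by_author: author -> frequencies of one n-gram in that
    # author's training texts. Degrees are sorted in decreasing order.
    degrees = sorted(
        (characterizing_degree(f) for f in freqs_by_author.values()),
        reverse=True,
    )
    if top is not None:
        # Assumption: keep only the `top` best-characterized authors.
        degrees = degrees[:top]
    return mean_pairwise_abs_diff(degrees)
```

Calling `discrimination_indicator` with `top=2`, `top=3`, or `top=None` gives three variants that use the two best-characterized authors, the three best-characterized authors, or all authors; n-grams would then be ranked by the chosen indicator and the highest-ranked ones kept.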

5. Characteristics of Feature Groups

Using the value of a single feature of a particular text, e.g., the frequency of 5-word sentences or the frequency of the character 2-gram “ab”, is not sufficient to identify the authors of texts reliably. Therefore, for an arbitrary text, the values of more than one feature are used, collected in a specific feature group consisting of different features; see [1,25]. The characteristics of the feature groups used in this study are detailed below.

5.1. Sentence Length Frequency and Word Length Frequency Types of Feature Groups

Only two feature groups consisting of features related to the sentence length frequency type were used. These feature groups differ according to the sentence lengths to be used and the number of these different sentence lengths, where sentence length means the number of words in a sentence. In one such feature group, there are frequencies of sentences with lengths of 5, 6, …, 14. We will denote this feature group by the name SL10 in the study, where SL stands for “sentence length”, and 10 indicates the number of features in the feature group. Naming the groups of features used in the study with such short expressions is for the reader to understand the differences in those groups and also for use in the results of computer experiments if needed. Another feature group, SL25, consists of frequencies of sentences with lengths of 5, 6, …, 29.

The number of feature groups consisting of only word length type features is also two. These feature groups differ according to the word lengths to be used and the number of these different word lengths, where word length means the number of letters in a word. In one such feature group, there are frequencies of words with lengths of 3, 4, …, 7. We will denote this feature group by the name WL5, where WL stands for “word length”, and 5 indicates the number of features in the group. Another feature group, WL10, consists of frequencies of words with lengths of 3, 4, …, 12.

A mixed feature group consisting of features belonging to the types of sentence length frequency and word length frequency was also used. The values of the features in this feature group are the frequencies of sentences with lengths 5, 6, …, 14 and the frequencies of words with lengths 3, 4, …, 12. Let us denote this feature group by SL10&WL10, where the “&” symbol is used in the names of mixed feature groups.

In the study, the number of feature groups used in the considered author recognition problem, which contains features of sentence length frequency and word length frequency types, is 5:

sentence length frequency: {number of feature groups} = 2;

word length frequency: {number of feature groups} = 2;

mixed groups of sentence length frequencies and word length frequencies: {number of feature groups} = 1.
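The five length-based feature groups can be written down as fixed ranges of lengths. The Python sketch below builds the SL10&WL10 feature vector from two frequency mappings (length to frequency); the mapping format and the function names are assumptions for illustration.

```python
# Lengths covered by each feature group, as listed in the text.
SL10 = range(5, 15)   # sentence lengths 5, 6, ..., 14
SL25 = range(5, 30)   # sentence lengths 5, 6, ..., 29
WL5 = range(3, 8)     # word lengths 3, 4, ..., 7
WL10 = range(3, 13)   # word lengths 3, 4, ..., 12


def length_feature_vector(freqs, lengths):
    # freqs: dict mapping a length to its frequency in the text;
    # lengths that do not occur in the text get frequency 0.
    return [freqs.get(n, 0.0) for n in lengths]


def sl10_wl10(sentence_freqs, word_freqs):
    # Mixed group: SL10 features followed by WL10 features.
    return (length_feature_vector(sentence_freqs, SL10)
            + length_feature_vector(word_freqs, WL10))
```

The resulting vector has 20 entries, 10 for sentence lengths and 10 for word lengths.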

5.2. Character n-Gram Frequency and Variance of Character n-Gram Frequencies Types of Feature Groups

In the study, the character alphabet set, i.e., the set containing the characters of the character n-grams for a given n in the author recognition problem considered in the example of Azerbaijani writers, consists of the 32 letters of the alphabet of the Azerbaijani language. The character alphabet set is clearly the set of character 1-grams, each with 1 character. In the study, the frequencies of character 1-grams (unigrams, n = 1) and the frequencies of character 2-grams (bigrams, n = 2) were used in the feature groups consisting of character n-gram frequency type features.

A feature group consisting of the frequencies of all unigrams in the character alphabet set was used. We will denote this feature group by Ch1g32 in the study, where “Ch1g” is for “character 1-grams”, and 32 represents the number of features in that group.

Different subsets of the set of all character bigrams were used to create feature groups. Recall that the characters in these bigrams are selected from the character alphabet set. These feature groups differ from each other according to the feature selection procedure used and the variety and number of bigrams whose values are used in these feature groups. In the study, feature selection procedures 3 and 4 are used to select character bigrams from the set of all character bigrams. For procedure 3 (the procedure for selecting frequently used character n-grams in the training set) and procedure 4 (the procedure for selecting character n-grams with different characterizing degrees of authors), see Formulas (8)–(10) and (11)–(16), respectively. A total of 26 and 21 different feature groups were created with the help of feature selection procedures 3 and 4, respectively. Two of the twenty-six feature groups created with the third feature selection procedure each consist of the frequencies of 100 bigrams selected directly from the set of all character bigrams: the 100 most used and the 100 average used bigrams in the training set, selected from the 1024 character bigrams in that set. We will denote these feature groups as Ch2g_p3high100 and Ch2g_p3middle100, respectively. Here, “Ch2g” stands for “character 2-grams”; “p3” indicates that the third feature selection procedure is used; and 100 indicates the number of features in each of the groups.

The other 24 feature groups were selected from the bigrams whose frequencies were used in these two feature groups, Ch2g_p3high100 and Ch2g_p3middle100. From the 100 high-frequency bigrams in the training set, the first and the last 5, 10, …, 25, 50 bigrams in the list of bigrams ordered by descending frequency in the training set are selected. We denote these groups by Ch2g_p3high100first5, …, Ch2g_p3high100first50 and Ch2g_p3high100last5, …, Ch2g_p3high100last50. From the 100 middle-frequency (neither high nor low) bigrams in the training set, the first and the last 5, 10, …, 25, 50 bigrams in the list of bigrams ordered by descending frequency in the training set are also selected. We denote them by Ch2g_p3middle100first5, …, Ch2g_p3middle100first50 and Ch2g_p3middle100last5, …, Ch2g_p3middle100last50.
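The naming scheme above can be made concrete with a short Python sketch that derives the first and last subsets from a list of 100 bigrams already sorted by descending training-set frequency. The function name and the placeholder bigram lists in the usage note are hypothetical; the subset sizes 5, 10, 15, 20, 25, 50 are read from the "5, 10, …, 25, 50" pattern in the text.

```python
def subset_groups(prefix, ranked100, sizes=(5, 10, 15, 20, 25, 50)):
    # ranked100: 100 bigrams sorted by descending frequency.
    # Returns a mapping from feature group name to its list of bigrams.
    groups = {prefix: list(ranked100)}
    for s in sizes:
        groups[f"{prefix}first{s}"] = list(ranked100[:s])
        groups[f"{prefix}last{s}"] = list(ranked100[-s:])
    return groups
```

Applying the function to the two 100-bigram lists, for example `subset_groups("Ch2g_p3high100", high)` and `subset_groups("Ch2g_p3middle100", middle)`, gives 13 groups each, i.e., the 26 groups of procedure 3.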

With the help of the fourth feature selection procedure, 21 feature groups consisting of the frequencies of character bigrams selected from the set of all character bigrams were created. During the calculation of the difference indicator of the characterizing degrees of authors (see Formulas (12)–(14)), the two best-characterized authors were taken into account in seven of these groups, the three best-characterized authors in another seven groups, and all the authors in the remaining seven groups. We will denote these variants of the fourth feature selection procedure by 4.1, 4.2, and 4.3 in feature group names. Feature groups consisting of the frequencies of the 5, 10, …, 25, 50, 100 character bigrams with the highest indicators under each of the aforementioned metrics were created. Let us denote them as Ch2g_p4.1high5, …, Ch2g_p4.1high50; Ch2g_p4.2high5, …, Ch2g_p4.2high50; Ch2g_p4.3high5, …, Ch2g_p4.3high50, respectively.

Character unigrams and character bigrams selected with the help of feature selection procedure 3 are also used to create feature groups consisting of variances of character n-gram frequencies. These groups are analogous to Ch1g32; Ch2g_p3high100, Ch2g_p3middle100; Ch2g_p3high100first5, …, Ch2g_p3high100first50; Ch2g_p3high100last5, …, Ch2g_p3high100last50; Ch2g_p3middle100first5, …, Ch2g_p3middle100first50; Ch2g_p3middle100last5, …, Ch2g_p3middle100last50, but variances of character n-gram frequencies are employed in them instead of the character n-gram frequencies themselves. We use the “ChngVar” prefix instead of “Chng” in the names of these groups, where “Var” stands for “variance”.

Mixed feature groups consisting of features of character n-gram frequency and character n-gram frequency variance types were also used. In one of these mixed feature groups, the character n-gram frequencies and variances of character n-gram frequencies for all unigrams were used, i.e., a group Ch1g&Ch1gVar64 was created consisting of all features in the Ch1g32 and Ch1gVar32 groups. In the other four mixed feature groups, character n-gram frequencies and variances of character n-gram frequencies for the most frequently used 5, 10, 15, 20 character bigrams in the training set were used. We denote these groups by Ch2g&Ch2gVar_p3high100first10, …, Ch2g&Ch2gVar_p3high100first40.

In this study, the number of feature groups used in the considered author recognition problem, which contains the features of character n-gram frequency and variance of character n-gram frequency types, is 79:

1-grams: {number of feature types} × {number of n-gram subsets} = 2 × 1 = 2;

2-grams selected with the help of the third feature selection procedure: {number of feature types} × ({number of multi-gram n-gram sets} × (1 + {number of selection criteria of few-gram n-gram subsets} × {number of few-gram n-gram subsets})) = 2 × (2 × (1 + 2 × 6)) = 52;

2-grams selected with the help of the fourth feature selection procedure: {number of feature types} × {number of metrics} × {number of n-gram subsets} = 1 × 3 × 7 = 21;

mixed groups of character n-gram frequencies and variances of character n-gram frequencies: {number of n-gram subsets} = 4.
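The count of 79 can be re-derived from the four items listed above. The short Python check below only reproduces that arithmetic; the variable names are hypothetical.

```python
feature_types = 2  # frequency and variance of frequency

# 1-grams: one subset (all unigrams) per feature type.
unigram_groups = feature_types * 1

# Procedure 3: two 100-bigram sets, each with the full set plus
# 2 selection criteria (first, last) x 6 subset sizes.
procedure3_groups = feature_types * (2 * (1 + 2 * 6))

# Procedure 4: frequency features only, 3 metrics x 7 subset sizes.
procedure4_groups = 1 * 3 * 7

# Mixed frequency-and-variance groups, as counted in the list.
mixed_groups = 4

total_groups = (unigram_groups + procedure3_groups
                + procedure4_groups + mixed_groups)
print(total_groups)
```

The partial counts are 2, 52, 21, and 4, which add up to the 79 feature groups stated in the text.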

5.3. Word Frequency Type of Feature Groups

In the considered author recognition problem, different feature groups consisting of features related to the type of word frequency were used.

These feature groups differ from each other according to the variety and number of words used in these groups, as well as the frequency types, i.e., in addition to the frequencies of the words in the given text, the features of the given text may also include the frequencies of these words in candidate authors’ unified texts and the total usage frequencies in the training set (see Section 3.5).

We divide these feature groups into several types. Note that this division concerns the type of feature group, not the type of feature: all of these groups consist of features of the word frequency type. We introduce feature group types only for word frequency groups because many different approaches were used to select the words, and the resulting groups differ considerably from each other. The characteristics of each of the feature group types of the word frequency type used in the study are as follows:

1. With the help of feature selection procedure 1, the procedure for selecting words frequently used by authors (see Formulas (1)–(4)), the words most frequently used by each author in his or her own texts in the training set are determined separately for each author. Using procedure 1, all the words in the training set are sorted L times, where L is the number of authors, each time in decreasing order of total usage frequency by the corresponding author, which gives L sets of words. Then, an almost equal number of words is selected from each of the word sets. Finally, the selected words are collected into the final set of words selected with feature selection procedure 1. In the study, the 15, 25, 45, and 50 high-frequency and middle-frequency words of authors are used as feature groups. We denote these feature groups by the names W_p1high15, …, W_p1high50, W_p1middle15, …, W_p1middle50, where “W” stands for “word”, “p1” indicates that the first feature selection procedure is used, “high” and “middle” indicate that the most frequently and middle frequently used words of the authors are selected, and 15, …, 50 indicate the numbers of features in these groups.

2. Words that are rarely used in everyday life can be more effective in author recognition, in terms of discriminating between texts of different authors, than words that are often used in daily life. Taking this into account, from among the words most used by the authors individually, the frequencies of which were used in groups W_p1high15, …, W_p1high50, we manually selected the words that we assume are used less often in everyday life and formed feature groups consisting of the frequencies of these words. In other words, we removed words that are often used in everyday life from among the words selected with the help of the first feature selection procedure. The numbers of words in such groups are 3, 15, 25, 40, and 45, and we denote these groups by the names W_p1highMAN3, …, W_p1highMAN45, respectively, where “MAN” stands for “manual”, which means that after the words most used by the authors were selected with feature selection procedure 1, some of these words were manually selected from this word set.

3. Using the second feature selection procedure, the procedure for selecting frequently used words in the training set (see Formulas (5)–(7)), the 5, 10, …, 25, 50 most frequently and middle frequently occurring words in the training set, without taking the authors of the texts into account, were selected and used in feature groups. Let us denote these feature groups as W_p2high5, …, W_p2high50, W_p2middle5, …, W_p2middle50.

4. In author recognition problems, text features should be used that represent the stylistic characteristics of an author in any of his or her texts [1]. Clearly, if the text features in a given author recognition problem effectively reflect the stylistic characteristics of an author, the features of the texts of that author must remain invariant with respect to the topics of the texts.

In this study, an author recognition problem is considered in the example of Azerbaijani writers. There are two types of parts of speech in the Azerbaijani language: main and auxiliary. Based on the morphology of the Azerbaijani language, words related to auxiliary parts of speech, e.g., conjunctions, do not have lexical meaning, so they do not seriously affect the topic of a text. These words are function words that do not possess a meaning but have a function in sentences. For function words, see, e.g., [1]. So, the frequency of words related to auxiliary parts of speech can be more effective as text features than the frequency of words related to the main parts of speech because they can more adequately reflect the author’s stylistics since they are independent of the text’s topic.

For this type of word frequency feature group, feature selection procedure 2 is used, but the word set W from which words are selected contains only the words of auxiliary parts of speech in the training set instead of all the words in the training set. In this study, the 5, 10, …, 25, 50 highest-frequency and middle-frequency words of auxiliary parts of speech are used for feature group creation. Let us denote them by W_p2highAUX5, …, W_p2highAUX50, W_p2middleAUX5, …, W_p2middleAUX50, where “AUX” stands for “auxiliary”, which means that the words whose frequencies are used in the feature groups belong only to the auxiliary parts of speech.

5. In the word frequency feature groups described above, only the frequencies of the selected words in the given text, i.e., the text whose features are being extracted, were used. In this type of word frequency group, the features of an arbitrary text include, for each selected word, the frequency of that word in the given text, its total frequencies in the unified texts of the authors in the training set, and its total frequency in all the texts in the training set without taking authors into account.

As is clear from the description above, the main difference between this type of word frequency group and the previous types lies not in the words selected but in the text(s) from which the frequencies are calculated. In the study, the total frequencies obtained from the texts in the training set were added to the word frequencies in a given text for the word sets used in the groups W_p2high50, W_p1highMAN3, W_p1highMAN15, W_p1highMAN25, W_p1highMAN40, W_p1highMAN45. Thus, the groups W&WA&WT_p2high50, W&WA&WT_p1highMAN3, …, W&WA&WT_p1highMAN45 were created, where “W”, “WA”, and “WT” denote the frequencies of words in the given text, the total usage frequencies of words by authors, and the total usage frequencies of words in the training set, respectively.
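The composition of a W&WA&WT feature vector can be sketched as follows. This is only an illustration under stated assumptions: the frequency of a word is taken to be its number of occurrences divided by the number of tokens (the actual definition is given in Section 3.5), and the function names and the toy texts are hypothetical.

```python
from collections import Counter

def relative_frequencies(tokens, words):
    """Relative frequency of each selected word in a token list (assumed definition)."""
    counts = Counter(tokens)
    n = len(tokens) or 1
    return [counts[w] / n for w in words]

def w_wa_wt_features(text_tokens, train_texts, words):
    """Build the W, WA, and WT parts; train_texts is a list of (author, tokens) pairs."""
    # W: frequencies of the selected words in the given text
    w = relative_frequencies(text_tokens, words)
    # WA: frequencies in each author's unified (concatenated) training text
    unified = {}
    for author, tokens in train_texts:
        unified.setdefault(author, []).extend(tokens)
    wa = []
    for author in sorted(unified):
        wa.extend(relative_frequencies(unified[author], words))
    # WT: frequencies over the whole training set, ignoring authorship
    all_tokens = [t for _, tokens in train_texts for t in tokens]
    wt = relative_frequencies(all_tokens, words)
    return w + wa + wt

train = [("A", "ki və ki".split()), ("B", "və və bu".split())]
features = w_wa_wt_features("ki bu bu və".split(), train, ["ki", "və"])
print(len(features))
```

With two selected words and two authors, the sketch yields 2 + 2 × 2 + 2 = 8 features for a text.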

In the study, the number of feature groups used in the considered author recognition problem that contain features related to the word frequency type is 43:

- W_p1 feature groups—feature groups that used procedure 1 for word selection: {number of word selection criteria} × {number of word sets} = 2 × 4 = 8;

- W_p1MAN feature groups—manually intervened feature groups that used procedure 1 for word selection: {number of word selection criteria} × {number of word sets} = 1 × 5 = 5;

- W_p2 feature groups—feature groups that used procedure 2 for word selection: {number of word selection criteria} × {number of word sets} = 2 × 6 = 12;

- W_p2AUX feature groups—feature groups that used procedure 2 to select words from among words belonging to some chosen parts of speech, i.e., auxiliary parts of speech: {number of word selection criteria} × {number of word sets} = 2 × 6 = 12;

- W&WA&WT feature groups—along with the frequencies of the words in the given text, the total frequencies in the training set are used: {number of feature groups of type 3} + {number of feature groups of type 2} = 1 + 5 = 6.

The calculation of the frequency of an arbitrary word in any given text in the training set, the total frequencies of that word in the authors’ texts in the training set, and the total frequency in all texts in the training set are outlined in Section 3.5.

6. Recognition and Dataset

The machine learning methods and text sets, e.g., the training sets or test sets used in the study, are outlined below.

6.1. Description of Training and Test Sets

The dataset used in the author recognition problem considered in the study was prepared by the authors of the study by collecting large and small literary fiction works of 11 Azerbaijani writers. This dataset consists of 28 large and 123 small works, for a total of 151 works of those 11 authors (Table 1). Let us call this Dataset-0. The number of large works per author varies between 1 and 5, and the number of small works varies between 0 and 46. In order to increase the number of observations, i.e., texts with known authorship, available for purposes such as the parametric identification of mathematical models and feature selection, each of the large literary works in Dataset-0 was divided into 10 almost equal parts, and another dataset was created from the small works and those parts of the large works. Let us call this Dataset-1. In Dataset-1, the number of texts per author varies between 25 and 56. Since there are 123 small works in Dataset-0, and each of the 28 large works in Dataset-0 was divided into 10 parts, the total number of observations in Dataset-1 is 123 + 28 × 10 = 403. By splitting Dataset-1 in a proportion of approximately 80% to 20%, a training set of 325 texts and a test set of 78 texts with known authorship were created (Table 2).
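The construction of Dataset-1 can be illustrated with a short sketch. It assumes that a large work is split by token count into contiguous parts; the study does not state the unit of division here, and the helper name and placeholder tokens are hypothetical.

```python
def split_into_parts(tokens, n_parts=10):
    """Split a token list into n_parts contiguous, almost equal parts."""
    base, extra = divmod(len(tokens), n_parts)
    parts, start = [], 0
    for i in range(n_parts):
        size = base + (1 if i < extra else 0)  # the first `extra` parts get one more token
        parts.append(tokens[start:start + size])
        start += size
    return parts

large_work = [f"w{i}" for i in range(1234)]    # placeholder tokens of one large work
parts = split_into_parts(large_work)
sizes = [len(p) for p in parts]

n_small, n_large = 123, 28
dataset1_size = n_small + n_large * 10         # small works plus parts of large works
print(sizes, dataset1_size)
```

The part sizes differ by at most one token, and the resulting number of observations matches the sizes of the training and test sets taken together, 325 + 78.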

Table 1.

Datasets used in the study.

Table 2.

Training and test sets used in the study.

The training set was used in the parametric identification of mathematical models and in feature selection. The training and test sets are non-intersecting subsets of Dataset-1 that together make up the whole of Dataset-1. The author recognition approaches used in the study, each consisting of a mathematical model, a text feature group, and so on, were evaluated based on the recognition accuracies obtained on the test set, which is a subset of Dataset-1, and on Dataset-0, the set of the large and small literary works themselves.

6.2. Recognition Models

Support vector machine (SVM) and random forest (RF) methods, as well as multilayer feedforward artificial neural network (ANN) models of machine learning, were used in the considered author recognition problem.

The ANNs used in the study differ from each other in the number of neurons in their layers and in the values of the synapses (weights) and biases of these neurons. In the ANNs used for solving the considered author recognition problem, the number of neurons in the input layer (the first layer) is equal to the number of elements of the text feature vector, i.e., the number of features in the feature group, and the number of neurons in the output layer (the last layer) is the number of authors and is therefore the same for all ANNs used in the study. Intermediate layers, i.e., layers other than the input and output layers, are called hidden layers. All ANNs used in the study are four-layered; in other words, the number of hidden layers is two. The number of neurons in the hidden layers depends on the number of neurons in the input layer and varies from 3 to 10, and the total number of parameters (synapses and biases) in the ANNs varies from 271 to 325.
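The relation between layer sizes and the total number of parameters can be made concrete with a small sketch. The configuration below (50 input features and two hidden layers of 4 neurons each) is a hypothetical example chosen for illustration, not a configuration reported in the study.

```python
def dense_param_count(layer_sizes):
    """Number of weights plus biases in a fully connected feedforward network."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

n_authors = 11
# Hypothetical four-layer network: input, two hidden layers, output.
example = dense_param_count([50, 4, 4, n_authors])
print(example)  # (50*4 + 4) + (4*4 + 4) + (4*11 + 11) = 279
```

Because the first term grows with the number of input features, keeping the parameter total in a narrow range requires fewer hidden neurons for larger feature groups.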

In the ANNs, SoftMax was used as the activation function for the last layer, and the rectified linear unit (ReLU) was used for the other layers. In the parametric identification of the ANNs, the Adam optimization method [32] was used. This method should not be confused with the Adams method; Adam is a finite-dimensional unconstrained optimization method. As the stopping criterion, “EarlyStopping” was used [33], which stops optimization when the value of the objective function no longer improves. In case the “EarlyStopping” condition is not satisfied, an additional stopping condition is used: optimization stops when the number of epochs exceeds a sufficiently large number. By an epoch, we mean one pass over all the observations in the training set; for example, training for 10 epochs means that each of the observations in the training set was used 10 times in training. The upper limit for the number of epochs was taken as 200 in the study.
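The interplay of the two stopping conditions can be sketched in plain Python. The sketch does not train a network: the list `losses` stands in for the objective value observed after each epoch, and the `patience` value is an assumption, since the study does not report one. In Keras, comparable behavior is usually obtained with the `EarlyStopping` callback and the `epochs` argument of `fit`.

```python
def epochs_until_stop(losses, patience=5, max_epochs=200):
    """Return how many epochs run before either stopping condition triggers."""
    best = float("inf")
    epochs_without_improvement = 0
    epochs_run = 0
    for loss in losses[:max_epochs]:          # hard cap on the number of epochs
        epochs_run += 1
        if loss < best:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break                         # early stopping: no recent improvement
    return epochs_run

improving_then_flat = [1.0, 0.8, 0.7] + [0.7] * 50
stopped_at = epochs_until_stop(improving_then_flat, patience=5)

always_improving = [1.0 / (i + 1) for i in range(500)]
capped_at = epochs_until_stop(always_improving)
print(stopped_at, capped_at)
```

In the first sequence, the objective stops improving after the third epoch, so training ends five epochs later; in the second, it keeps improving and the epoch limit applies.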

The radial basis function was used as a kernel in SVM. The number of trees—estimators used in RF—is 100, and the maximum depth of trees is 5.
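These settings map directly onto Scikit-Learn estimators. The following minimal sketch uses synthetic data in place of a real feature group; the data, the random seeds, and all parameters other than the kernel, the number of trees, and the maximum depth are assumptions left at library defaults.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.svm import SVC

rng = np.random.default_rng(0)
n_authors, n_features = 11, 25
# Synthetic stand-in for a feature group: 20 texts per author.
y_train = np.repeat(np.arange(n_authors), 20)
X_train = rng.random((y_train.size, n_features)) + 0.1 * y_train[:, None]

svm = SVC(kernel="rbf")                                    # radial basis function kernel
rf = RandomForestClassifier(n_estimators=100, max_depth=5, random_state=0)
svm.fit(X_train, y_train)
rf.fit(X_train, y_train)

svm_pred = svm.predict(X_train[:3])
rf_pred = rf.predict(X_train[:3])
print(svm_pred, rf_pred)
```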

The frequencies of the 100 most frequently used character bigrams in the texts in the training set were also used with a CNN [33], arranged in the form of a 10 × 10 matrix. The other machine learning methods and models were tested with nearly all feature groups. The architecture of the CNN consists of three convolution layers with 4, 4, and 1 filters of dimensions 3 × 3, 3 × 3, and 2 × 2 and with padding choices “same”, “valid”, and “valid”, respectively, one subsampling layer after each of these convolution layers, and two fully connected layers with 4 and 11 neurons, respectively. Average pooling was used in the subsampling layers.

During parametric identification, the observations in the training set were presented in a mixed order; in other words, the observations in the training set were “shuffled” [33]. Since the numbers of observations belonging to different classes, i.e., authors, in the training set differ from each other, the observations in the training set were given different class weights during training. The weight of each observation is determined by the number of observations in its class, i.e., the number of training texts by the author of that observation.
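One common way to derive such weights is to make them inversely proportional to class size. The sketch below uses this “balanced” heuristic as an assumption; the study does not state its exact weighting formula, and the author labels and counts are made up for illustration.

```python
from collections import Counter

def balanced_class_weights(labels):
    """Weight each class inversely to its number of observations."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {c: n_samples / (n_classes * k) for c, k in counts.items()}

labels = ["A"] * 56 + ["B"] * 25 + ["C"] * 40   # hypothetical per-author text counts
weights = balanced_class_weights(labels)
sample_weights = [weights[c] for c in labels]   # one weight per observation
print(weights)
```

With this choice, authors with fewer training texts receive larger weights, and the weights sum to the number of observations.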

Excluding the character bigrams selected using feature selection procedure 4, all feature groups were used with ANN, SVM, and RF. Frequencies of character bigrams selected using feature selection procedure 4 were used with SVM and RF.

7. Analysis and Discussion of Results of Computer Experiments

The Python programming language and related libraries were used. Specifically, the Scikit-Learn library was used to work with SVM and RF, and the Keras library in the TensorFlow framework was used to work with ANN [34,35].

By recognition accuracy on a given set of texts whose authors are known, we mean the ratio of the number of texts whose author is correctly identified to the number of all texts in the set [33]. The sets used are as follows: Dataset-0, which consists of the large and small literary works of the authors; Dataset-1, which consists of the small works and the separate parts of each of the large works in Dataset-0; and the training and test sets obtained by splitting Dataset-1. The recognition accuracies obtained by different machine learning methods with different feature groups on the training set, on the test set, and on Dataset-0 (the set of the original large and small literary works themselves) are given in the tables and figures below. Sometimes, recognition accuracies with a certain confidence percentage on the test set, for example, 50%, 75%, 95%, and 99%, are also given, where the confidence percentage means that texts whose author is recognized with a confidence below that percentage are counted as not correctly recognized. Note that in the figures and tables provided in this study, we denote Dataset-0 by the word “Works” for simplicity and immediate understanding.
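The two accuracy notions defined above can be written down as a short sketch. It assumes that the confidence of a recognition is the probability the model assigns to the predicted author; the function name and the toy values are illustrative.

```python
def recognition_accuracy(true_authors, predicted_authors,
                         confidences=None, min_confidence=0.0):
    """Share of texts recognized correctly and with sufficient confidence."""
    correct = 0
    for i, (t, p) in enumerate(zip(true_authors, predicted_authors)):
        confident = confidences is None or confidences[i] >= min_confidence
        if t == p and confident:
            correct += 1
    return correct / len(true_authors)

y_true = ["A", "B", "B", "C"]
y_pred = ["A", "B", "C", "C"]
conf = [0.90, 0.60, 0.80, 0.99]

plain = recognition_accuracy(y_true, y_pred)              # 3 of 4 texts correct
at_75 = recognition_accuracy(y_true, y_pred, conf, 0.75)  # second text is below 75%
print(plain, at_75)
```

Accuracy with a confidence percentage can therefore never exceed the plain accuracy on the same set.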

7.1. Analysis of the Recognition Effectiveness of Character n-Gram Frequency and Variance of Character n-Gram Frequencies

In the study, the maximum recognition accuracies obtained using different methods and machine learning models with character n-gram frequency and variance of character n-gram frequency types of features on Dataset-0 are given in Table 3. For only groups of character bigram frequencies, Table 3 takes into account both feature selection procedures 3 and 4. From the recognition accuracies given in this table, it can be seen that character bigrams—2-grams—are more effective than character unigrams—1-grams—in many cases. Variance of character n-gram frequency type feature groups did not outperform character n-gram frequency type feature groups in recognition. Mixed feature groups with features belonging to both of these two types did not show a more effective result in recognition than feature groups consisting of features belonging to the character n-gram frequency type. The highest recognition accuracy was obtained in one of the bigram frequency groups among all the feature groups that belong to all the feature types using character n-grams.

Table 3.

Recognition accuracies (%) obtained by character n-gram frequencies and variances of character n-gram frequencies.

In the study, two feature selection procedures for selecting character n-grams were used—a procedure for selecting frequently used character n-grams in the training set and a procedure for selecting character n-grams with different characterizing degrees of authors. For feature selection procedures 3 and 4, see Formulas (8)–(10) and (11)–(16), respectively.

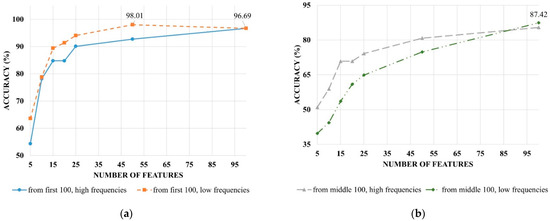

Figure 1 and Figure 2 show the maximum recognition accuracies obtained on Dataset-0 by various machine learning methods and models with bigram frequencies and variances of bigram frequencies, respectively, for bigrams selected with feature selection procedure 3. Charts (a) and (b) in each of the figures show the results for bigrams selected from the first 100 and the middle 100 bigrams, respectively, in the list of character bigrams sorted in descending order of total frequency in the training set. Each of the charts in Figure 1 and Figure 2 contains two graphs. One of these graphs shows the results obtained with the 5, 10, …, 25, 50, 100 highest-frequency bigrams among the 100 bigrams in question, and the other graph shows the results obtained with the 5, 10, …, 25, 50, 100 lowest-frequency bigrams among them.

Figure 1.

Recognition accuracies obtained with 2-gram frequencies in feature selection procedure 3: (a) frequencies of first bigrams in the descending order of total frequency in the training set; (b) frequencies of middle bigrams in the descending order of total frequency in the training set.

Figure 2.

Recognition accuracies obtained with variances of 2-gram frequencies in feature selection procedure 3: (a) variances of frequencies of first bigrams in the descending order of total frequency in the training set; (b) variances of frequencies of middle bigrams in the descending order of total frequency in the training set.

From the graphs in Figure 1, it can be seen that the frequencies of the most frequently used bigrams in the training set give higher recognition accuracy than the frequencies of the middle frequently used bigrams in the training set. The second conclusion drawn from this figure is that among the first 100 bigrams in descending order of frequency in the training set, those with lower frequencies are more effective than those with higher frequencies. This suggests that the character n-grams whose frequencies are to be used in feature groups should be selected from among those that are neither the most used nor the least used in the texts of the training set. The conclusions drawn from Figure 1 about the recognition accuracies obtained with feature groups of the character bigram frequency type also hold for the recognition accuracies obtained with feature groups of the character bigram frequency variance type in Figure 2. The character bigrams used to obtain the results are the same for the corresponding feature groups of these two feature types in Figure 1 and Figure 2.
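The way the “first” and “last” subsets are taken from the first or middle 100 bigrams can be sketched as follows. This is an illustration under assumptions rather than procedure 3 itself (see Formulas (8)–(10)): bigrams are counted over raw characters, and the middle block is taken to be centered in the ranked list. The function names are hypothetical.

```python
from collections import Counter

def ranked_bigrams(texts):
    """Character bigrams of the given texts, most frequent first."""
    counts = Counter()
    for text in texts:
        counts.update(text[i:i + 2] for i in range(len(text) - 1))
    return [bigram for bigram, _ in counts.most_common()]

def select_subset(ranked, block="high", block_size=100, criterion="first", k=5):
    """Pick k bigrams from the first or middle block of a ranked bigram list."""
    if block == "high":
        start = 0
    else:  # "middle": block centered in the ranked list (assumed definition)
        start = max((len(ranked) - block_size) // 2, 0)
    chosen = ranked[start:start + block_size]
    return chosen[:k] if criterion == "first" else chosen[-k:]

ranked = [f"b{i}" for i in range(300)]          # placeholder ranking, most frequent first
high_first5 = select_subset(ranked, "high", criterion="first", k=5)
high_last5 = select_subset(ranked, "high", criterion="last", k=5)
middle_first5 = select_subset(ranked, "middle", criterion="first", k=5)
print(high_first5, high_last5, middle_first5)
```

Under these assumptions, the three selections correspond to groups named like Ch2g_p3high100first5, Ch2g_p3high100last5, and Ch2g_p3middle100first5.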

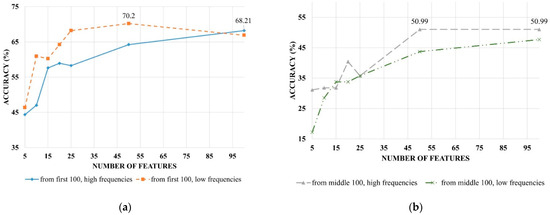

The maximum recognition accuracies obtained on Dataset-0 with frequencies of bigrams selected using feature selection procedure 4 (the procedure for selecting character n-grams with different characterizing degrees of authors) are shown in Figure 3; here, only SVM and RF were used. Each chart in Figure 3 contains two graphs. Chart (a) shows the results obtained using variants 4.1 and 4.2 of feature selection procedure 4, and chart (b) shows the results obtained using variants 4.2 and 4.3. For a detailed description of the feature groups using variants 4.1, 4.2, and 4.3, see Section 5.2. For variants 4.1–4.3 of the fourth feature selection procedure, see Formulas (12)–(14). To calculate the difference indicator of the characterizing degrees of authors, these variants use the characterizing degrees of the two best-characterized, the three best-characterized, and all authors, respectively.

Figure 3.

Recognition accuracies obtained with 2-gram frequencies using feature selection procedure 4: (a) 4.1 and 4.2 variants of the feature selection procedure (see Formulas (12) and (13)); (b) 4.2 and 4.3 variants of the feature selection procedure (see Formulas (13) and (14)).

It can be seen from Figure 3 that as the number of best-characterized authors taken into account when calculating the difference indicator of characterizing degrees increases, in many cases, more accurate recognition results are obtained. Despite this, the procedure for selecting character n-grams with different characterizing degrees of authors—feature selection procedure 4—did not outperform the procedure for selecting frequently used character n-grams in the training set—feature selection procedure 3.

7.2. Analysis of the Recognition Effectiveness of Word Frequency

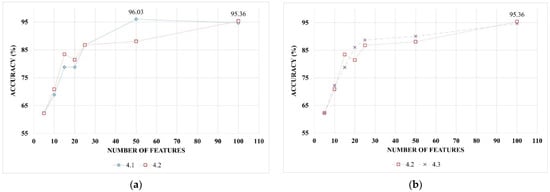

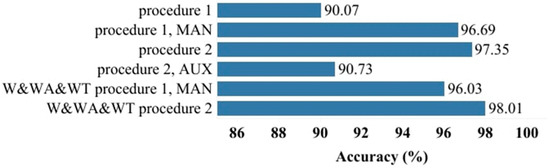

The maximum recognition accuracies on Dataset-0 obtained with word frequencies are shown in Figure 4: from left to right, the first column shows the word selection approaches, and the second column shows the recognition accuracies. It should be noted that in the study, two feature selection procedures were used to select words whose frequencies will be used in word frequency feature groups. For the calculation of word frequencies and for word frequency feature groups, see Section 3.5 and Section 5.3, respectively. In the author recognition problem under consideration using one of these procedures, the most frequently and middle frequently used words in the lexicons of the candidate authors were selected. Using another procedure, the most frequently and middle frequently used words in the training set were selected without taking into account the author differences. For feature selection procedures 1 and 2, see Formulas (1)–(4) and (5)–(7), respectively.

Figure 4.

Recognition accuracies obtained with word frequencies (%).

It can be seen from Figure 4 that the frequencies of words selected using only procedure 2 turned out to be more effective in recognition than the frequencies of words selected using only procedure 1. Recognition accuracy increased when some words, such as words used in everyday life and names of characters in literary works, were manually removed from among the words most used by authors, i.e., those selected using feature selection procedure 1 (see groups “procedure 1” and “procedure 1, MAN” in Figure 4). Recognition accuracy decreased when the most common words in the training set, i.e., those selected using feature selection procedure 2, were selected not from all words but only from words belonging to auxiliary parts of speech, which do not have lexical meaning (see groups “procedure 2” and “procedure 2, AUX” in Figure 4). When the words are selected using feature selection procedure 1, i.e., the most frequently used words are selected separately for each author, and the total usage frequencies of the words in each candidate author’s texts and in the training set are used in recognition in addition to the usage frequencies of the words in a given text, recognition effectiveness decreases (see groups “procedure 1, MAN” and “W&WA&WT procedure 1, MAN” in Figure 4). This may be due to the fact that the frequencies in the authors’ texts have already been taken into account when selecting words using procedure 1. In contrast, when the total usage frequencies in the candidate authors’ texts and the overall frequency in the training set are used in addition to the frequencies of the words most frequently used in the training set without taking author differences into account, recognition accuracy increases (see groups “procedure 2” and “W&WA&WT, procedure 2” in Figure 4).

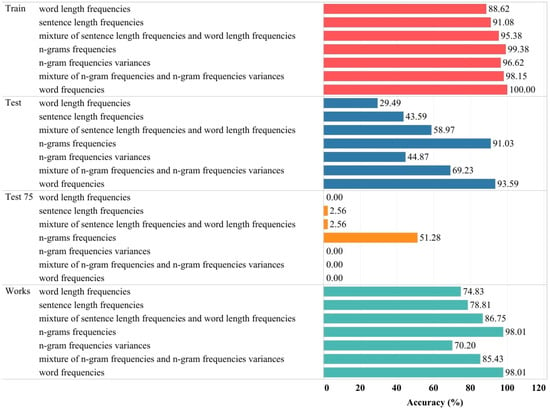

7.3. Comparative Analysis of Different Types of Features

Figure 5 shows the recognition results obtained with different feature types: from left to right, the first column shows the text set on which accuracy is calculated, the second shows feature types, and the third column shows the recognition accuracies. From Figure 5, it can be seen that features belonging to the character n-gram frequency type and features belonging to the word frequency type were more effective in recognition than features belonging to other feature types. Mixed feature groups, in which features belonging to character n-gram frequency and character n-gram frequency variance types were used together, did not improve the recognition accuracy obtained with feature groups consisting of features belonging to only one of these types. Mixed feature groups that shared features belonging to the sentence length frequency and word length frequency types resulted in higher recognition accuracy than feature groups consisting of features belonging to only one of these types, i.e., using sentence length frequencies and word length frequencies together in a mixed feature group was more effective in recognition.

Figure 5.

Maximum recognition accuracies obtained using different types of features.

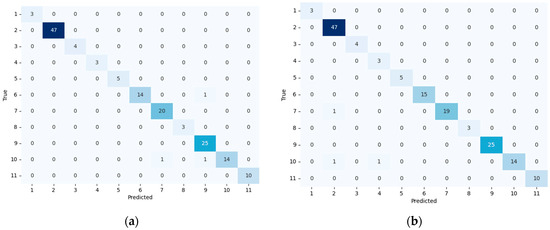

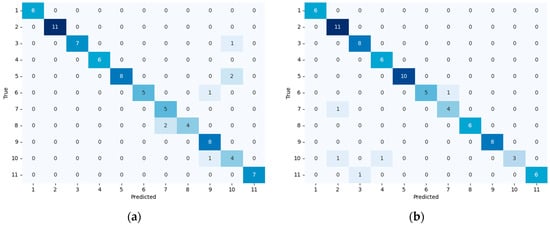

Table 4 shows the comprehensive recognition results, on different text sets and with different confidence percentages, of the two feature groups with the highest recognition accuracy on Dataset-0 in the author recognition problem considered in the study. One of these two feature groups contains character n-gram frequencies, and the other contains word frequencies. It is clear from the results in the table that these two feature groups yielded the same accuracy on Dataset-0 (see “Works” in Table 4), but recognition appears to be performed with more confidence with character n-gram frequencies (e.g., see “Test 75”, the accuracy on the test set with 75% confidence). These two cases differ not only in the recognition accuracies obtained with certain degrees of confidence but also in the recognition indicators for different author candidates. This can be seen from the confusion matrices [33] of these two cases given in Figure 6 and Figure 7, which were obtained on Dataset-0 and the test set, respectively. It can also be seen from the precision and recall values on Dataset-0 for different author candidates given for these two cases in Table 5 and Table 6. The overall precision, recall, F1 score, and Cohen’s kappa [36] values obtained on different text sets for those two cases are given in Table 7. It should be noted that the values of precision, recall, and F1 score in Table 7 are the averages of the per-class precision, recall, and F1 score indicators for author candidates, weighted according to the number of texts of those author candidates in the text set.

Table 4.

Maximum recognition accuracies of two best-performing feature groups (%).

Figure 6.

Confusion matrices obtained with two best-performing feature groups on the literary works: (a) n-gram frequencies; (b) word frequencies.

Figure 7.

Confusion matrices obtained with two best-performing feature groups on the test set: (a) n-gram frequencies; (b) word frequencies.

Table 5.

Recognition results per different authors with n-gram frequencies on literary works.

Table 6.

Recognition results per different authors with word frequencies on literary works.

Table 7.

Overall recognition results.
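Overall indicators of the kind reported in Table 7 can be computed with Scikit-Learn as sketched below. The labels are toy values, not results from the study; the weighted averaging follows the description of Table 7, with per-class values weighted by the number of texts of each author.

```python
from sklearn.metrics import cohen_kappa_score, precision_recall_fscore_support

# Toy example with three author candidates and two texts each.
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 0, 1, 2, 2, 2]

# Per-class indicators averaged with weights equal to the class sizes.
precision, recall, f1, _ = precision_recall_fscore_support(
    y_true, y_pred, average="weighted", zero_division=0
)
kappa = cohen_kappa_score(y_true, y_pred)
print(precision, recall, f1, kappa)
```

With this weighting, the overall recall coincides with the recognition accuracy on the same set, here 5/6.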

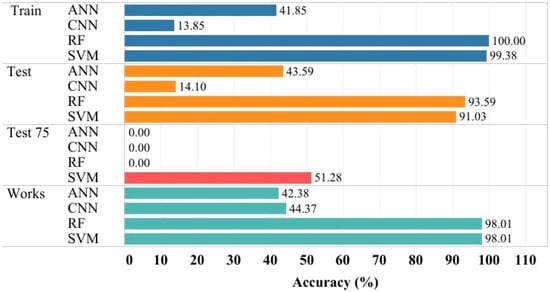

Figure 8 shows the maximum recognition accuracies obtained by various machine learning methods and models: from left to right, the first column shows text sets (the bases on which recognition accuracies are calculated), the second column shows machine learning methods and models, and the third column shows recognition accuracies. From this figure, it can be seen that higher recognition accuracies were obtained using SVM and RF than using ANN. From the recognition accuracies obtained with 75% confidence on the test set, it is clear that SVM recognizes with greater confidence than RF.

Figure 8.

Maximum recognition accuracies obtained by different methods of machine learning.

In the study, the results of the comparative analysis of using different text feature types with different machine learning techniques were obtained on the example of a dataset consisting of texts in the Azerbaijani language. Nevertheless, the approaches used in the study and the methodology of the conducted experiments can be used in the recognition of text authors in other languages as well.

The models we have built depend strongly not only on linguistic features but also on the region (geographical location) of residence of the text authors. This was pointed out by Gore, R.J. et al., whose research is related to the dependence of the characteristics of the authors’ texts on the region of their residence [37].

At the same time, we believe that the geographical factor is stable over time for the authors of texts, and the movement of authors geographically does not significantly affect the stylistic “fingerprint” of authors.

8. Conclusions

A comparative analysis of the effectiveness of different machine learning classification methods with different text feature groups, consisting of different feature types and their mixtures, was carried out using literary works in the Azerbaijani language by several famous Azerbaijani writers.

Among the feature types, more accurate results in terms of recognition accuracy were achieved with character 2-gram frequencies and selected word frequencies. On the literary works considered, the classification methods of support vector machine and random forest with selected word frequencies had the same accuracy, but the support vector machine with character 2-gram frequencies showed more reliable results in identifying authors with higher confidence.

Since different machine learning methods with various groups of features of different types yield comparable recognition effectiveness, for future work, we plan to consider using different group decision-making methods to combine the decisions of different author recognition approaches. This is intended to increase the reliability of the author recognition computer system to be developed.

Author Contributions

Conceptualization, E.P. and R.A.; methodology, E.P. and R.A.; software, R.A.; validation, R.A.; formal analysis, E.P.; investigation, E.P. and R.A.; resources, R.A.; data curation, R.A.; writing—original draft preparation, E.P. and R.A.; writing—review and editing, E.P. and R.A.; visualization, R.A.; supervision, E.P.; project administration, E.P.; funding acquisition, E.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research was carried out under the Erasmus+ 2022-2024 program in KA171 for learning mobility for students and staff in higher education between EU Member States and third countries [Agreement number: 2022-1-EL01-KA171-HED-000074817] between the University of Thessaly (G VOLOS01) and the Institute of Control Systems of Azerbaijan National Academy of Sciences (E10291793).

Data Availability Statement

The datasets presented in this article are not readily available because the data are part of an ongoing study and the development of software for authorship attribution of texts in the Azerbaijani language.

Acknowledgments

The authors would like to thank the anonymous reviewers for their valuable comments.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Stamatatos, E. A survey of modern authorship attribution methods. J. Am. Soc. Inf. Sci. Technol. 2009, 60, 538–556.

- He, X.; Lashkari, A.H.; Vombatkere, N.; Sharma, D.P. Authorship Attribution Methods, Challenges, and Future Research Directions: A Comprehensive Survey. Information 2024, 15, 131.

- Mosteller, F.; Wallace, D.L. Inference in an authorship problem: A comparative study of discrimination methods applied to the authorship of the disputed Federalist Papers. J. Am. Stat. Assoc. 1963, 58, 275–309.

- Diederich, J.; Kindermann, J.; Leopold, E.; Paass, G. Authorship attribution with support vector machines. Appl. Intell. 2003, 19, 109–123.

- Doghan, S.; Diri, B. A new N-gram based classification (Ng-ind) for Turkish documents: Author, genre and gender. Turk. Inform. Found. J. Comput. Sci. Eng. 2010, 3, 11–19. (In Turkish)

- Yasdi, M.; Diri, B. Author recognition by Abstract Feature Extraction. In Proceedings of the 20th Signal Processing and Communications Applications Conference (IEEE Xplore), Mugla, Turkey, 18–20 April 2012; pp. 1–4. (In Turkish)

- Bilgin, M. A new method proposal to increase the classification success of Turkish texts. Uludağ Univ. Fac. Eng. J. 2019, 24, 125–136. (In Turkish)

- Erdoghan, I.; Gullu, M.; Polat, H. Developing an End-to-End Author Recognition Application with Machine Learning Algorithms. El-Cezerî J. Sci. Eng. 2022, 9, 1303–1314. (In Turkish)

- Graovac, J. Text categorization using n-gram based language independent technique. Intell. Data Anal. 2014, 18, 677–695.

- Halvani, O.; Winter, C.; Pflug, A. Authorship verification for different languages, genres and topics. In Proceedings of the 3rd Annual DFRWS Europe, Lausanne, Switzerland, 29–31 March 2016; pp. 33–43.

- Fedotova, A.; Romanov, A.; Kurtukova, A.; Shelupanov, A. Digital Authorship Attribution in Russian-Language Fanfiction and Classical Literature. Algorithms 2023, 16, 13.

- Kešelj, V.; Peng, F.; Cercone, N.; Thomas, C. N-gram-based author profiles for authorship attribution. In Proceedings of the PACLING—The Conference Pacific Association for Computational Linguistics (PACLING), Halifax, NS, Canada, 22–25 August 2003; pp. 255–264.

- Mendenhall, T.C. A Mechanical Solution of a Literary Problem. Pop. Sci. Mon. 1901, 60, 97–105.

- Zhao, Y.; Zobel, J. Searching with style: Authorship attribution in classic literature. In Proceedings of the Thirtieth Australasian Conference on Computer Science, Ballarat, Australia, 30 January–2 February 2007; pp. 59–68.

- Anisimov, A.V.; Porkhun, E.V.; Taranukha, V.Y. Algorithm for construction of parametric vectors for solution of classification problems by a feed-forward neural network. Cybern. Syst. Anal. 2007, 43, 161–170.

- Howedi, F.; Mohd, M. Text classification for authorship attribution using Naive Bayes classifier with limited training data. Comput. Eng. Intell. Syst. 2014, 5, 48–56.

- Ayda-zade, K.; Talibov, S. Analysis of The Methods for The Authorship Identification of the Text in the Azerbaijani Language. Probl. Inf. Technol. 2017, 8, 14–23.

- Marchenko, O.; Anisimov, A.; Nykonenko, A.; Rossada, T.; Melnikov, E. Authorship attribution system. In Natural Language Processing and Information Systems, Proceedings of the 22nd International Conference on Applications of Natural Language to Information Systems, Liège, Belgium, 21–23 June 2017; Springer International Publishing: Berlin/Heidelberg, Germany, 2018; pp. 227–231.

- Mouratidis, D.; Kermanidis, K.L. Ensemble and Deep Learning for Language-Independent Automatic Selection of Parallel Data. Algorithms 2019, 12, 26.

- Borisov, E.S. Using artificial neural networks for classification of black-and-white images. Cybern. Syst. Anal. 2008, 44, 304–307.

- Aida-zade, K.R.; Rustamov, S.S.; Clements, M.A.; Mustafayev, E.E. Adaptive neuro-fuzzy inference system for classification of texts. In Recent Developments and the New Direction in Soft-Computing Foundations and Applications, 1st ed.; Zadeh, L., Yager, R., Shahbazova, S., Reformat, M., Kreinovich, V., Eds.; Studies in Fuzziness and Soft Computing; Springer: Cham, Switzerland, 2018; pp. 63–70.

- Dabagh, R.M. Authorship attribution and statistical text analysis. Adv. Methodol. Stat. 2007, 4, 149–163.

- Orucu, F.; Dalkilich, G. Author identification Using N-grams and SVM. In Proceedings of the ISCSE—The 1st International Symposium on Computing in Science & Engineering, Izmir, Turkey, 3–5 June 2010; pp. 3–5.

- Stamatatos, E. Author identification using imbalanced and limited training texts. In Proceedings of the DEXA 2007—The 18th International Workshop on Database and Expert Systems Applications (IEEE), Regensburg, Germany, 3–7 September 2007; pp. 237–241.

- Stamatatos, E. Ensemble-based author identification using character n-grams. In Proceedings of the 3rd International Workshop on Text-Based Information Retrieval, Riva del Garda, Italy, 29 August 2006; pp. 41–46.

- Lupei, M.; Mitsa, A.; Repariuk, V.; Sharkan, V. Identification of authorship of Ukrainian-language texts of journalistic style using neural networks. East.-Eur. J. Enterp. Technol. 2020, 1, 30–36.

- Ulianovska, Y.; Firsov, O.; Kostenko, V.; Pryadka, O. Study of the process of identifying the authorship of texts written in natural language. Technol. Audit. Prod. Reserves 2024, 2, 32–37.

- Silva, K.; Can, B.; Blain, F.; Sarwar, R.; Ugolini, L.; Mitkov, R. Authorship attribution of late 19th century novels using GAN-BERT. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 4: Student Research Workshop), Toronto, ON, Canada, 10–12 July 2023; pp. 310–320.

- Yule, G.U. On sentence-length as a statistical characteristic of style in prose: With application to two cases of disputed authorship. Biometrika 1939, 30, 363–390.

- Wilcox, R.R. Fundamentals of Modern Statistical Methods: Substantially Improving Power and Accuracy, 2nd ed.; Springer: New York, NY, USA, 2010; 249p.

- Ayedh, A.; Tan, G.; Alwesabi, K.; Rajeh, H. The Effect of Preprocessing on Arabic Document Categorization. Algorithms 2016, 9, 27.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Géron, A. Hands-on Machine Learning with Scikit-Learn, Keras, and TensorFlow, 2nd ed.; O’Reilly Media: Sebastopol, CA, USA, 2019; 510p.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Chollet, F. Keras. 2015. Available online: https://github.com/fchollet/keras (accessed on 27 April 2024).

- Vujović, Ž. Classification model evaluation metrics. Int. J. Adv. Comput. Sci. Appl. 2021, 12, 599–606.

- Gore, R.J.; Diallo, S.; Padilla, J. You are what you tweet: Connecting the geographic variation in America’s obesity rate to twitter content. PLoS ONE 2015, 10, 9.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).