Ensembling Supervised and Unsupervised Machine Learning Algorithms for Detecting Distributed Denial of Service Attacks

Abstract

1. Introduction

1.1. Study Motivation

1.2. Contributions

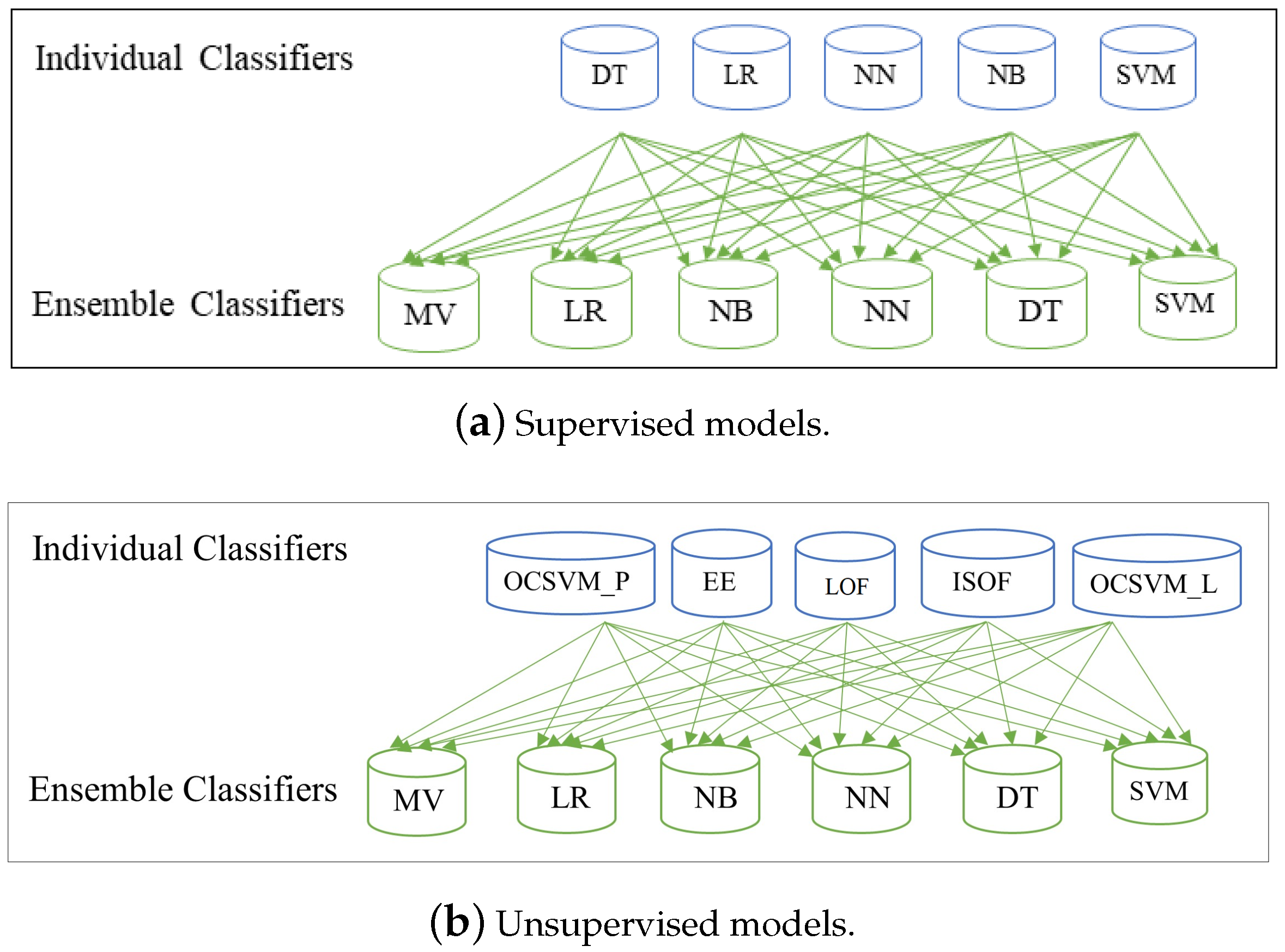

- Five standalone supervised and five unsupervised classifiers were used, and their performance in detecting DDoS attacks was recorded.

- The classification results of individual supervised models were combined to create six ensemble models using (i) majority voting, (ii) logistic regression, (iii) naïve Bayes, (iv) neural network, (v) decision tree, and (vi) support vector machine.

- Similarly, the classification results of individual unsupervised models were combined to create six ensemble models.

- Comparative analyses demonstrated that, for both the supervised and unsupervised cases, the ensemble models outperformed the corresponding individual models.

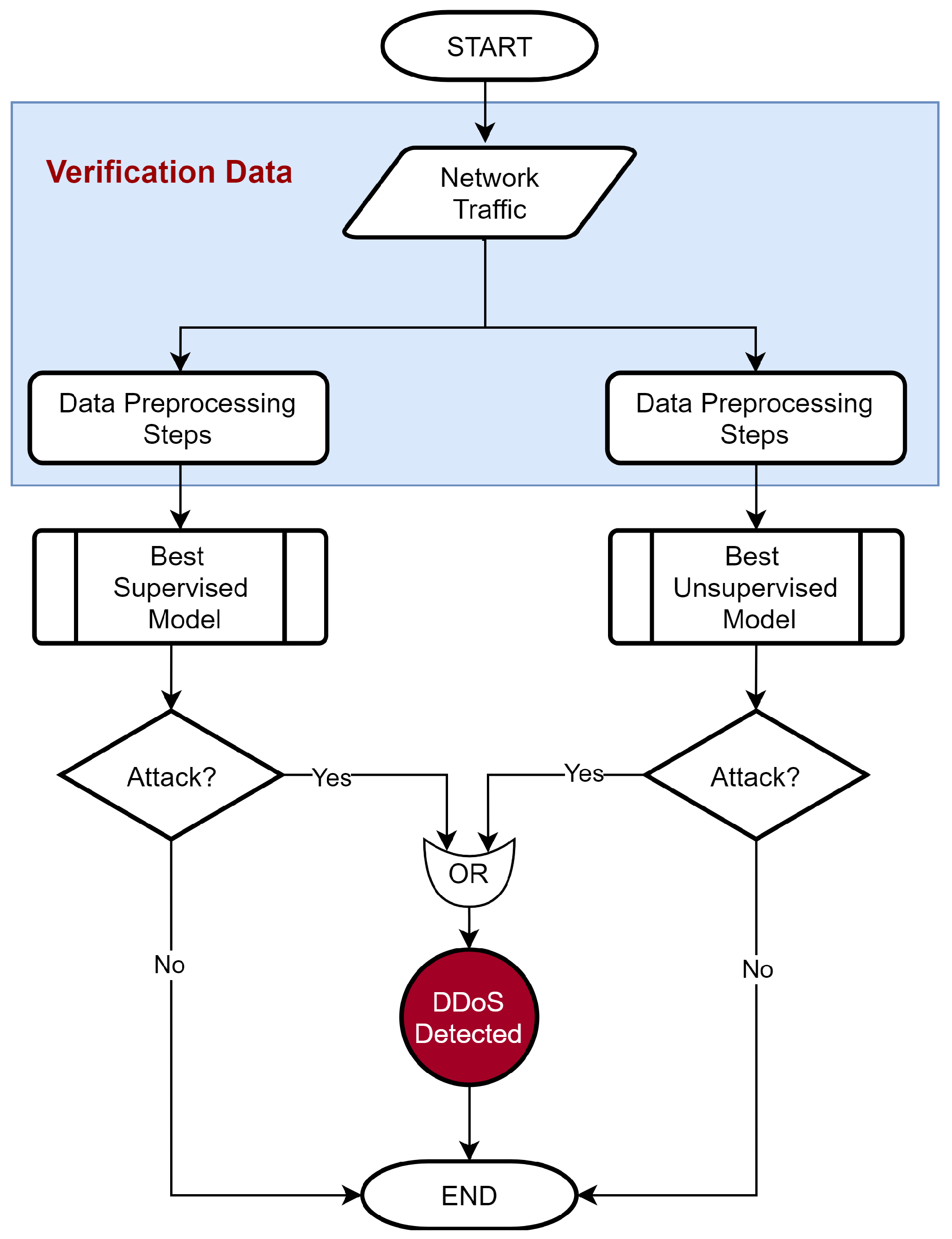

- These supervised and unsupervised ensemble frameworks were then combined, enabling the detection of both seen and unseen DDoS attacks with higher accuracy.

- Three well-known IDS benchmark datasets were used for experimentation and to validate the scheme. The detailed performance evaluation results on these datasets demonstrated the robustness and effectiveness of the scheme.

- The scheme was further verified using a verification dataset with ground-truth labels.
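The majority-voting combiner named in the second contribution can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code: it assumes each base model emits binary labels (1 = DDoS, 0 = benign) and breaks ties toward DDoS, a choice that never matters with five voters; `majority_vote` is a hypothetical name.

```python
from typing import List, Sequence


def majority_vote(predictions: Sequence[Sequence[int]]) -> List[int]:
    """Combine binary labels (1 = DDoS, 0 = benign) from several base models.

    predictions[m][i] is model m's label for instance i. An instance is
    labelled DDoS when at least half of the models flag it (ties go to DDoS,
    an assumption of this sketch; with five models a tie cannot occur).
    """
    n_models = len(predictions)
    n_instances = len(predictions[0])
    if any(len(p) != n_instances for p in predictions):
        raise ValueError("all models must label the same number of instances")
    combined = []
    for i in range(n_instances):
        votes = sum(p[i] for p in predictions)
        combined.append(1 if 2 * votes >= n_models else 0)
    return combined


# Five hypothetical base models voting on four instances.
preds = [
    [1, 0, 1, 0],
    [1, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 1, 1],
    [1, 0, 0, 0],
]
print(majority_vote(preds))  # [1, 0, 1, 0]
```

The other five combiners replace this fixed rule with a trained second-level classifier that takes the base models' labels as its input features.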

1.3. Organization

2. Related Work

3. Threat Model

- The DDoS traffic originates from many different compromised hosts or botnets.

- The attacks can be caused by IP spoofing or flooding requests.

- The attacks can target different OSI layers of the victim network.

- Either high-volume traffic (including DNS amplification) or low-volume traffic can cause a DDoS attack.

- The attackers can initiate various attack vectors, including Back Attack, Land Attack, Ping of Death, Smurf Attack, HTTP Flood, Heartbleed, etc. (more information about these DDoS attacks can be found in Balaban [29]).

4. Machine Learning Algorithms

4.1. Supervised Learning Algorithms

- Logistic regression;

- Support vector machine;

- Naïve Bayes with Gaussian function;

- Decision tree;

- Neural networks.
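The five supervised learners correspond directly to scikit-learn estimators. The sketch below is a minimal example under stated assumptions. It uses library-default hyperparameters, adds standard scaling in front of the scale-sensitive models, and raises the iteration limits for LR and the MLP. All of these are choices made for this example, not the paper's tuned settings. A synthetic `make_classification` dataset stands in for the IDS features, and `build_supervised_models` is a hypothetical helper.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier


def build_supervised_models(random_state: int = 0) -> dict:
    """Return the five supervised base classifiers listed in Section 4.1.

    Hyperparameters are scikit-learn defaults (plus scaling and larger
    iteration limits); the paper's actual settings are not reproduced here.
    """
    return {
        "LR": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
        "SVM": make_pipeline(StandardScaler(), SVC()),
        "NB": GaussianNB(),  # Gaussian likelihood per feature
        "DT": DecisionTreeClassifier(random_state=random_state),
        "NN": make_pipeline(
            StandardScaler(), MLPClassifier(max_iter=500, random_state=random_state)
        ),
    }


# Synthetic stand-in for a labelled IDS dataset (1 = DDoS, 0 = benign).
X, y = make_classification(n_samples=600, n_features=13, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

scores = {}
for name, model in build_supervised_models().items():
    model.fit(X_train, y_train)
    scores[name] = model.score(X_test, y_test)  # accuracy on the held-out split
print(scores)
```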

4.2. Unsupervised Learning Algorithms

- One-class SVM with linear kernel;

- One-class SVM with polynomial kernel;

- Isolation forests;

- Elliptic envelope;

- Local outlier factor.
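The five unsupervised detectors are available in scikit-learn as outlier or novelty detectors. The sketch below is a minimal example that makes three assumptions. First, `nu` and `contamination` are set to 0.01 to mirror the roughly 1% DDoS share of the unsupervised training sets. Second, all other settings are library defaults, not the paper's values. Third, the data are synthetic Gaussian clusters, not real traffic. scikit-learn detectors return +1 for inliers and -1 for outliers, so the code maps -1 to the DDoS label. `build_unsupervised_models` and `to_ddos_labels` are hypothetical helpers.

```python
import numpy as np
from sklearn.covariance import EllipticEnvelope
from sklearn.ensemble import IsolationForest
from sklearn.neighbors import LocalOutlierFactor
from sklearn.svm import OneClassSVM


def build_unsupervised_models(contamination: float = 0.01, random_state: int = 0) -> dict:
    """Return the five anomaly detectors listed in Section 4.2 (assumed settings)."""
    return {
        "OCSVM_L": OneClassSVM(kernel="linear", nu=contamination),
        "OCSVM_P": OneClassSVM(kernel="poly", nu=contamination),
        "ISOF": IsolationForest(contamination=contamination, random_state=random_state),
        "EE": EllipticEnvelope(contamination=contamination, random_state=random_state),
        # novelty=True lets LOF label unseen test points through predict().
        "LOF": LocalOutlierFactor(novelty=True, contamination=contamination),
    }


def to_ddos_labels(raw: np.ndarray) -> np.ndarray:
    """Map scikit-learn's +1 (inlier) / -1 (outlier) to 0 (benign) / 1 (DDoS)."""
    return (raw == -1).astype(int)


rng = np.random.default_rng(0)
benign = rng.normal(0.0, 1.0, size=(990, 5))
ddos = rng.normal(6.0, 1.0, size=(10, 5))  # synthetic, well-separated attacks
X_train = np.vstack([benign, ddos])        # about 99% benign, 1% DDoS

X_test = np.vstack([rng.normal(0.0, 1.0, size=(50, 5)),
                    rng.normal(6.0, 1.0, size=(50, 5))])
y_test = np.array([0] * 50 + [1] * 50)

predictions = {}
for name, model in build_unsupervised_models().items():
    model.fit(X_train)  # labels are never used for fitting
    predictions[name] = to_ddos_labels(model.predict(X_test))
print({name: float((pred == y_test).mean()) for name, pred in predictions.items()})
```

Because the detectors never see labels, their quality depends heavily on how clean the benign training pool is and on the chosen contamination level.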

4.3. Ensemble Learning Method
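The Ens_LR, Ens_NB, Ens_NN, Ens_DT, and Ens_SVM models feed the base models' classification results to a second-level classifier, which is stacked generalization. The sketch below expresses that idea with scikit-learn's `StackingClassifier` under several assumptions:

- only four of the five base models are used (the neural network is dropped to keep the example fast);
- hard labels are passed to the meta-model (`stack_method="predict"`);
- the labels are produced out-of-fold with `cv=5`;
- the data are synthetic.

The paper's own training protocol may differ. `build_stacked_ensemble` is a hypothetical helper.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier


def build_stacked_ensemble(meta_model) -> StackingClassifier:
    """Stack four base classifiers under a second-level (meta) classifier.

    stack_method="predict" passes the base models' hard 0/1 labels, not
    probabilities, to the meta-model; cv=5 produces those labels out-of-fold,
    so the meta-model never sees a base model's label for a row that model
    was trained on. Both choices are assumptions of this sketch.
    """
    base_models = [
        ("lr", LogisticRegression(max_iter=1000)),
        ("nb", GaussianNB()),
        ("dt", DecisionTreeClassifier(random_state=0)),
        ("svm", SVC()),
    ]
    return StackingClassifier(
        estimators=base_models,
        final_estimator=meta_model,
        stack_method="predict",
        cv=5,
    )


# Synthetic stand-in for a labelled IDS dataset (1 = DDoS, 0 = benign).
X, y = make_classification(n_samples=600, n_features=13, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

results = {}
for name, meta in [("Ens_LR", LogisticRegression()),
                   ("Ens_DT", DecisionTreeClassifier(random_state=0))]:
    ensemble = build_stacked_ensemble(meta).fit(X_train, y_train)
    results[name] = ensemble.score(X_test, y_test)  # test-set accuracy
print(results)
```

Swapping the `meta_model` argument is all that distinguishes one stacked ensemble from another in this sketch.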

5. Proposed Method

5.1. Data Collection

5.2. Data Preprocessing

5.3. Feature Selection

5.4. Data Classification

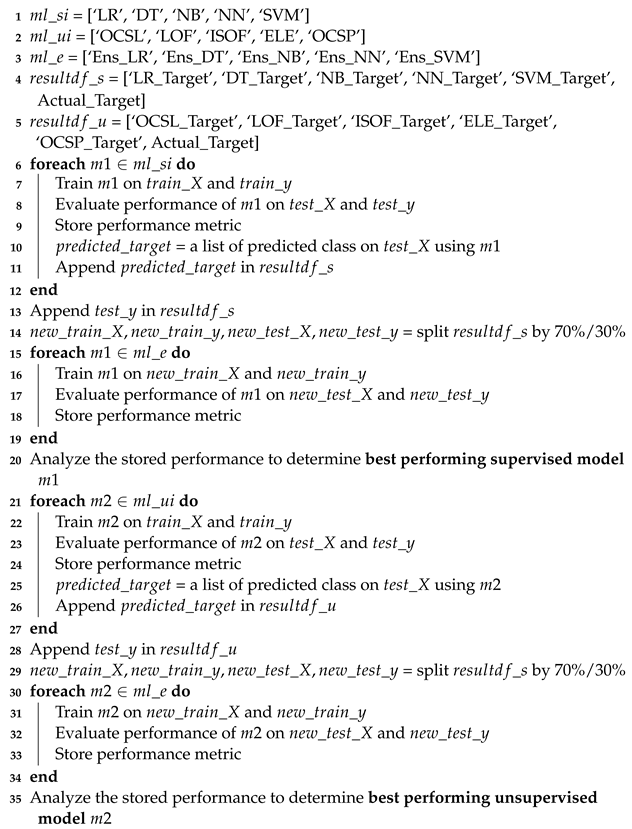

The Ensemble Algorithm

5.5. DDoS Detection

| Algorithm 1: Combined ensemble (supervised and unsupervised) classification |
|---|
| Input: train features, train target, test features, test target |
| Output: , best-performing supervised and unsupervised models |
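Algorithm 1 fuses the supervised and unsupervised ensembles, and the verification results report an "OR'ed" combination. The sketch below shows the simplest such fusion, a plain logical OR over the two ensembles' final 0/1 labels (1 = DDoS). This is an illustrative reading only. This section does not spell out the exact rule behind the reported "OR'ed" results. `or_combine` is a hypothetical name.

```python
from typing import List, Sequence


def or_combine(supervised: Sequence[int], unsupervised: Sequence[int]) -> List[int]:
    """Flag an instance as DDoS (1) if either ensemble flags it.

    The supervised ensemble covers attack patterns seen during training; the
    unsupervised ensemble, trained mostly on benign traffic, can flag
    deviations the supervised model has never seen.
    """
    if len(supervised) != len(unsupervised):
        raise ValueError("both ensembles must label the same instances")
    return [int(s or u) for s, u in zip(supervised, unsupervised)]


sup = [1, 0, 0, 1, 0]
unsup = [1, 1, 0, 0, 0]
print(or_combine(sup, unsup))  # [1, 1, 0, 1, 0]
```

A plain OR keeps every alarm from either side. Relative to either ensemble alone, it can therefore only add detections, but it can also only add false positives.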

6. Experiments

6.1. Software and Hardware Preliminaries

6.2. Datasets

6.2.1. NSL-KDD Dataset

6.2.2. UNSW-NB15 Dataset

6.2.3. CICIDS2017 Dataset

6.3. Data Preprocessing

6.4. Feature Selection

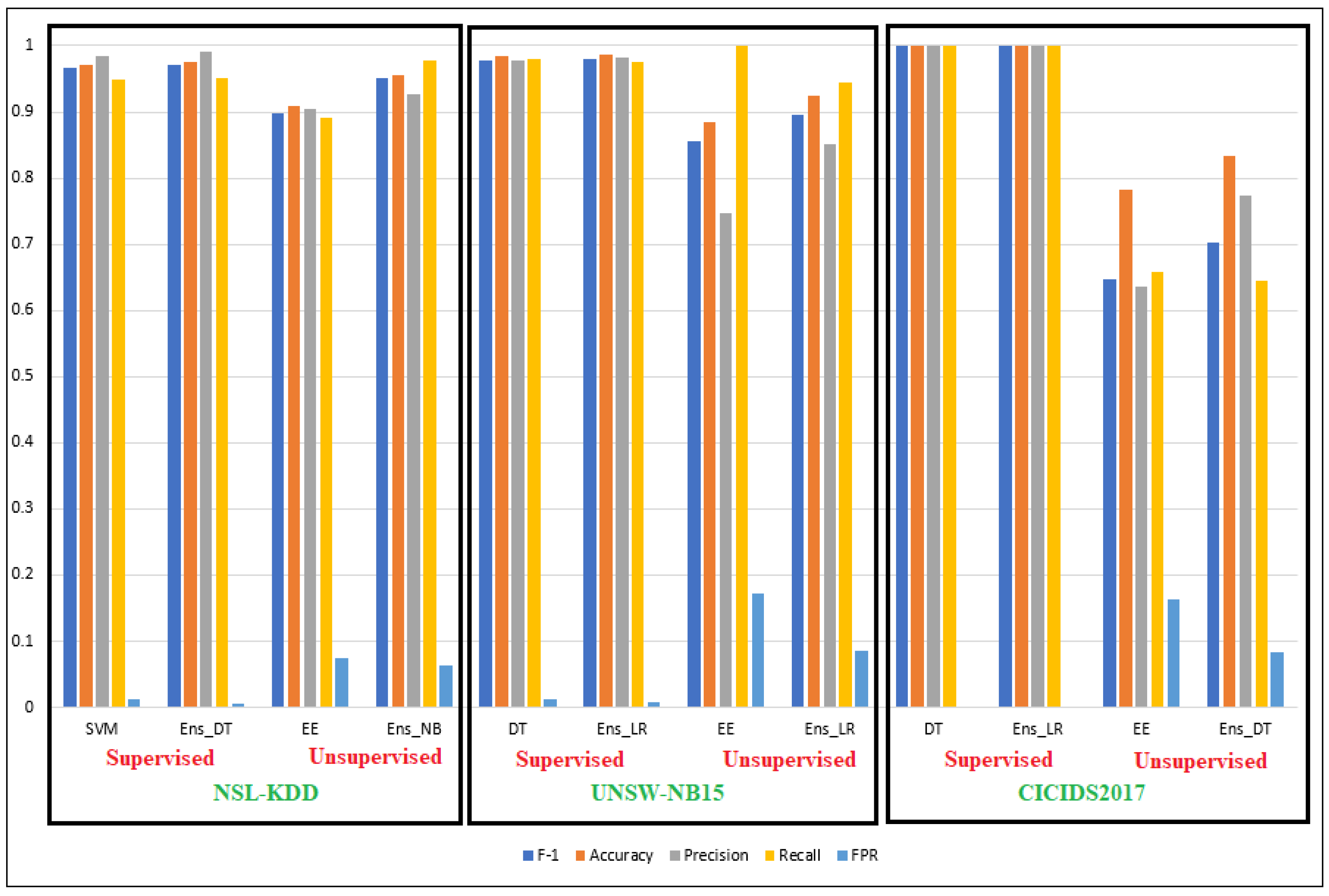

6.5. Model Selection

7. Results and Discussion

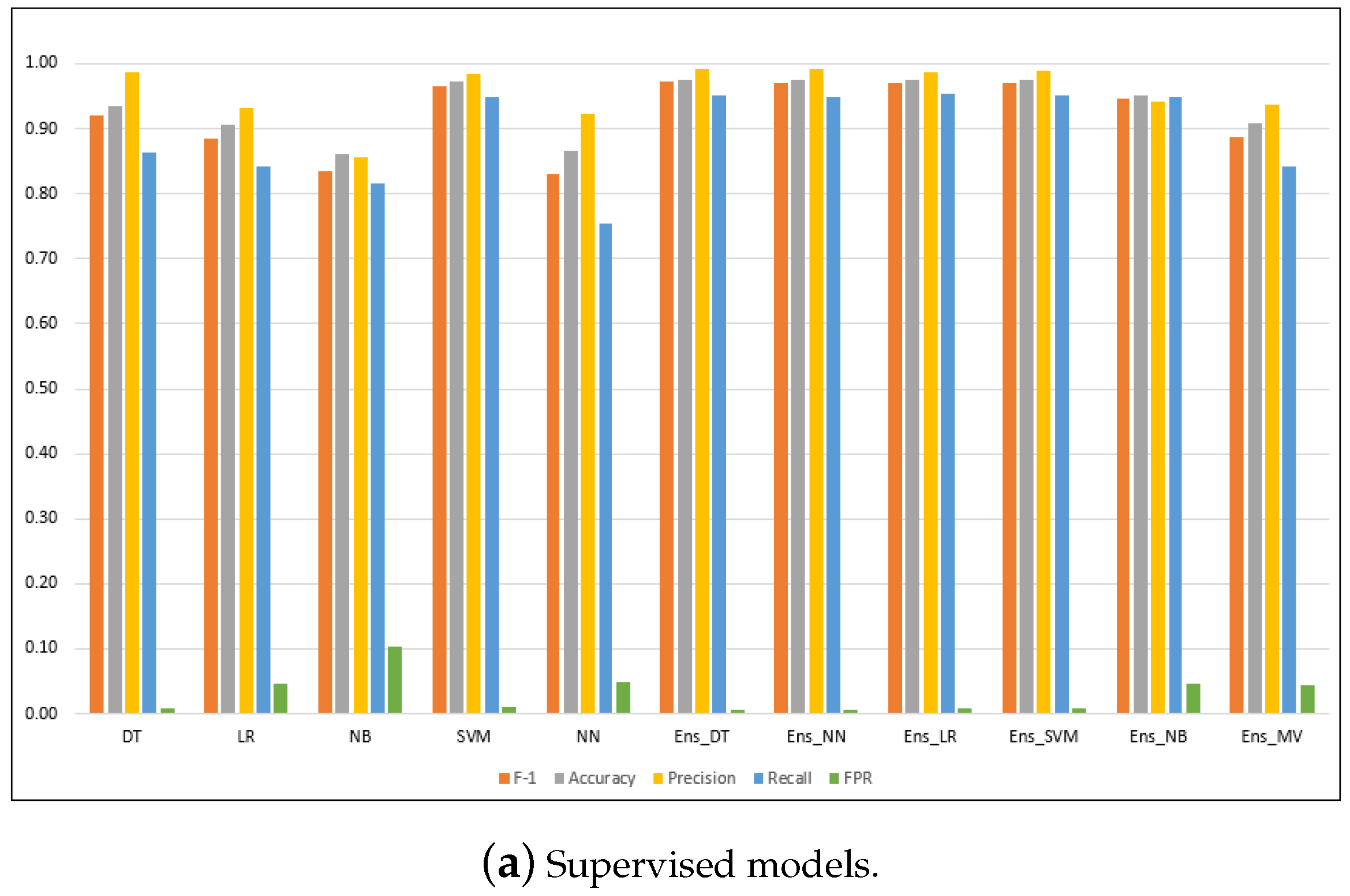

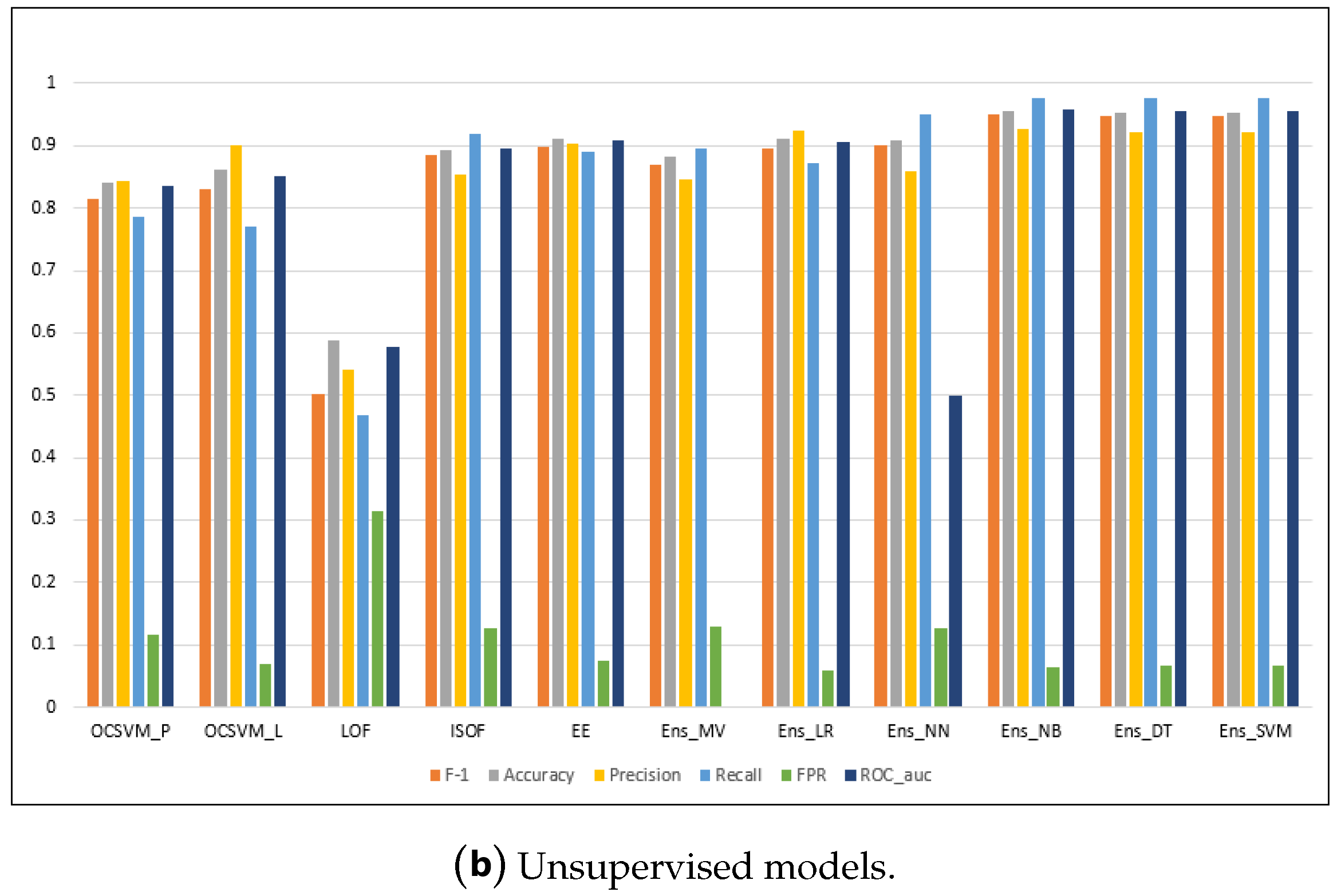

7.1. Evaluation Metrics

- Accuracy, $\frac{TP + TN}{TP + TN + FP + FN}$: the proportion of all data instances, DDoS and benign alike, that the model classifies correctly.

- Precision, $\frac{TP}{TP + FP}$: the proportion of instances flagged as DDoS that are actually DDoS attacks, i.e., how often an alarm raised by the model is correct.

- False-positive rate (FPR), $\frac{FP}{FP + TN}$: how often the model raises a false alarm by identifying a normal data instance as a DDoS attack.

- Recall, $\frac{TP}{TP + FN}$: the proportion of actual DDoS attacks that the model identifies correctly. Recall is also known as the true-positive rate, sensitivity, or DDoS detection rate.

- F1 score, $\frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$: the harmonic mean of precision and recall.
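These definitions can be checked against the reported verification results. The sketch below treats DDoS as the positive class and returns 0.0 when a denominator is empty; that zero-division convention is chosen here and is not taken from the paper. `metrics_from_counts` is a hypothetical helper. The example counts are the NSL-KDD supervised-ensemble (Ens_DT) row of the verification results table: TP = 861, FP = 1, TN = 99, FN = 39.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    accuracy: float
    precision: float
    recall: float
    fpr: float
    f1: float


def metrics_from_counts(tp: int, fp: int, tn: int, fn: int) -> Metrics:
    """Compute the Section 7.1 metrics from confusion-matrix counts (DDoS = positive)."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    fpr = fp / (fp + tn) if fp + tn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return Metrics(accuracy, precision, recall, fpr, f1)


m = metrics_from_counts(tp=861, fp=1, tn=99, fn=39)
print({name: round(value, 3) for name, value in vars(m).items()})
```

Rounded to three decimals, the output reproduces the tabulated values: accuracy 0.960, precision 0.999, recall 0.957, FPR 0.010, and F1 0.977.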

7.2. Discussion of Results

8. Conclusions

Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Detailed Results

| Models | F1 Score | Accuracy | Precision | Recall | FPR | ROC AUC | Elapsed Time (s) |
|---|---|---|---|---|---|---|---|
| LR | 0.885 | 0.905 | 0.933 | 0.842 | 0.046 | 0.950 | 4.489 |
| NB | 0.836 | 0.861 | 0.857 | 0.815 | 0.104 | 0.935 | 0.566 |
| NN | 0.830 | 0.866 | 0.923 | 0.754 | 0.048 | 0.909 | 7.317 |
| DT | 0.920 | 0.935 | 0.986 | 0.863 | 0.009 | 0.927 | 1.032 |
| SVM | 0.967 | 0.972 | 0.984 | 0.949 | 0.012 | 0.959 | 468.648 |
| Ens_MV | 0.888 | 0.907 | 0.938 | 0.843 | 0.043 | N/A | 470.748 |
| Ens_LR | 0.970 | 0.974 | 0.988 | 0.953 | 0.009 | 0.975 | 468.800 |
| Ens_NB | 0.946 | 0.952 | 0.942 | 0.950 | 0.046 | 0.969 | 468.683 |
| Ens_NN | 0.970 | 0.974 | 0.992 | 0.949 | 0.006 | 0.972 | 468.880 |
| Ens_DT | 0.971 | 0.975 | 0.991 | 0.952 | 0.006 | 0.977 | 468.684 |
| Ens_SVM | 0.970 | 0.974 | 0.989 | 0.952 | 0.009 | 0.974 | 470.438 |

| Models | F1 Score | Accuracy | Precision | Recall | FPR | ROC AUC | Elapsed Time (s) |
|---|---|---|---|---|---|---|---|
| OCSVM_P | 0.814 | 0.841 | 0.843 | 0.787 | 0.116 | 0.835 | 148.683 |
| OCSVM_L | 0.831 | 0.861 | 0.900 | 0.771 | 0.068 | 0.852 | 171.334 |
| LOF | 0.502 | 0.589 | 0.540 | 0.468 | 0.315 | 0.576 | 728.318 |
| ISOF | 0.885 | 0.894 | 0.853 | 0.919 | 0.125 | 0.897 | 24.529 |
| EE | 0.897 | 0.910 | 0.904 | 0.891 | 0.075 | 0.908 | 49.590 |
| Ens_MV | 0.870 | 0.882 | 0.846 | 0.896 | 0.129 | N/A | 1124.507 |
| Ens_LR | 0.896 | 0.910 | 0.923 | 0.871 | 0.059 | 0.906 | 1122.519 |
| Ens_NN | 0.902 | 0.907 | 0.859 | 0.950 | 0.127 | 0.912 | 1122.669 |
| Ens_NB | 0.951 | 0.955 | 0.926 | 0.978 | 0.063 | 0.957 | 1122.498 |
| Ens_DT | 0.949 | 0.953 | 0.921 | 0.978 | 0.068 | 0.955 | 1122.536 |
| Ens_SVM | 0.949 | 0.953 | 0.921 | 0.978 | 0.068 | 0.955 | 1122.518 |

| Models | F1 Score | Accuracy | Precision | Recall | FPR | ROC AUC | Elapsed Time (s) |
|---|---|---|---|---|---|---|---|
| LR | 0.946 | 0.964 | 0.963 | 0.930 | 0.019 | 0.956 | 1.24 |
| NB | 0.800 | 0.837 | 0.684 | 0.964 | 0.227 | 0.868 | 0.19 |
| NN | 0.831 | 0.900 | 0.974 | 0.724 | 0.010 | 0.870 | 1.29 |
| DT | 0.978 | 0.985 | 0.977 | 0.979 | 0.012 | 0.984 | 0.28 |
| SVM | 0.969 | 0.979 | 0.958 | 0.981 | 0.022 | 0.980 | 23.04 |
| Ens_MV | 0.972 | 0.981 | 0.964 | 0.981 | 0.019 | N/A | 28.25 |
| Ens_LR | 0.979 | 0.986 | 0.982 | 0.976 | 0.009 | 0.983 | 26.29 |
| Ens_NB | 0.971 | 0.980 | 0.961 | 0.982 | 0.021 | 0.981 | 26.25 |
| Ens_NN | 0.978 | 0.985 | 0.964 | 0.993 | 0.019 | 0.987 | 26.66 |
| Ens_DT | 0.978 | 0.985 | 0.961 | 0.996 | 0.021 | 0.987 | 26.26 |
| Ens_SVM | 0.978 | 0.985 | 0.961 | 0.996 | 0.021 | 0.988 | 26.70 |

| Models | F1 Score | Accuracy | Precision | Recall | FPR | ROC AUC | Elapsed Time (s) |
|---|---|---|---|---|---|---|---|
| OCSVM_P | 0.561 | 0.608 | 0.451 | 0.742 | 0.461 | 0.641 | 35.58 |
| OCSVM_L | 0.565 | 0.741 | 0.654 | 0.498 | 0.135 | 0.682 | 15.69 |
| LOF | 0.499 | 0.514 | 0.383 | 0.716 | 0.590 | 0.563 | 11.83 |
| ISOF | 0.596 | 0.541 | 0.424 | 1.000 | 0.693 | 0.653 | 246.25 |
| EE | 0.855 | 0.885 | 0.747 | 1.000 | 0.173 | 0.913 | 3.890 |
| Ens_MV | 0.624 | 0.676 | 0.514 | 0.795 | 0.385 | N/A | 315.4 |
| Ens_LR | 0.896 | 0.925 | 0.851 | 0.945 | 0.085 | 0.930 | 313.6 |
| Ens_NB | 0.855 | 0.884 | 0.746 | 1.000 | 0.176 | 0.912 | 313.6 |
| Ens_NN | 0.841 | 0.875 | 0.744 | 0.967 | 0.172 | 0.897 | 313.5 |
| Ens_DT | 0.896 | 0.925 | 0.851 | 0.945 | 0.085 | 0.930 | 313.6 |
| Ens_SVM | 0.896 | 0.925 | 0.851 | 0.945 | 0.085 | 0.930 | 315.6 |

| Models | F1 Score | Accuracy | Precision | Recall | FPR | ROC AUC | Elapsed Time (s) |
|---|---|---|---|---|---|---|---|
| LR | 0.929 | 0.956 | 0.903 | 0.957 | 0.045 | 0.990 | 64.146 |
| NB | 0.753 | 0.872 | 0.903 | 0.646 | 0.030 | 0.963 | 15.689 |
| NN | 0.964 | 0.977 | 0.936 | 0.993 | 0.030 | 0.997 | 126.845 |
| DT | 0.999 | 0.999 | 0.999 | 0.999 | 0.001 | 0.999 | 60.469 |
| SVM | 0.966 | 0.979 | 0.947 | 0.987 | 0.024 | 0.997 | 112,330.711 |
| Ens_MV | 0.970 | 0.981 | 0.951 | 0.989 | 0.022 | N/A | 112,600.650 |
| Ens_LR | 0.999 | 0.999 | 0.999 | 0.999 | 0.001 | 1.000 | 112,599.961 |
| Ens_NB | 0.970 | 0.981 | 0.952 | 0.988 | 0.022 | 0.999 | 112,598.241 |
| Ens_NN | 0.999 | 0.999 | 0.999 | 0.999 | 0.001 | 1.000 | 112,601.044 |
| Ens_DT | 0.999 | 0.999 | 0.999 | 0.999 | 0.001 | 1.000 | 112,598.194 |
| Ens_SVM | 0.999 | 0.999 | 0.999 | 0.999 | 0.001 | 0.999 | 112,610.225 |

| Models | F1 Score | Accuracy | Precision | Recall | FPR | ROC AUC | Elapsed Time (s) |
|---|---|---|---|---|---|---|---|
| OCSVM_P | 0.145989 | 0.393467 | 0.127333 | 0.17105 | 0.509807 | 0.330621 | 27,085.11 |
| OCSVM_L | 0.242523 | 0.618004 | 0.303909 | 0.201769 | 0.200981 | 0.500394 | 15,592.11 |
| LOF | 0.355704 | 0.477056 | 0.283843 | 0.476289 | 0.52261 | 0.476839 | 1056.684 |
| ISOF | 0.640336 | 0.779639 | 0.633589 | 0.647229 | 0.162778 | 0.742225 | 114.8583 |
| EE | 0.647782 | 0.782862 | 0.637112 | 0.658815 | 0.163191 | 0.747812 | 179.6919 |
| Ens_MV | 0.466815 | 0.688627 | 0.485242 | 0.449736 | 0.207482 | N/A | 44,033.58 |
| Ens_LR | 0.701033 | 0.831363 | 0.765918 | 0.646284 | 0.087061 | 0.779612 | 44,029.45 |
| Ens_NB | 0.659011 | 0.793603 | 0.666235 | 0.651942 | 0.143957 | 0.753992 | 44,028.93 |
| Ens_NN | 0.703398 | 0.83355 | 0.773203 | 0.645153 | 0.08341 | 0.780871 | 44,039.26 |
| Ens_DT | 0.703398 | 0.83355 | 0.773203 | 0.645153 | 0.08341 | 0.780871 | 44,028.88 |
| Ens_SVM | 0.703398 | 0.83355 | 0.773203 | 0.645153 | 0.08341 | 0.780871 | 46,415.09 |

References

1. Calem, R.E. New York’s Panix Service is Crippled by Hacker Attack. The New York Times, 14 September 1996; pp. 1–3.

2. Famous DDoS Attacks: The Largest DDoS Attacks of All Time. Cloudflare 2020. Available online: https://www.cloudflare.com/learning/ddos/famous-ddos-attacks/ (accessed on 14 February 2024).

3. Aburomman, A.A.; Reaz, M.B.I. A survey of intrusion detection systems based on ensemble and hybrid classifiers. Comput. Secur. 2017, 65, 135–152.

4. Gogoi, P.; Bhattacharyya, D.; Borah, B.; Kalita, J.K. A survey of outlier detection methods in network anomaly identification. Comput. J. 2011, 54, 570–588.

5. Dietterich, T.G. Ensemble methods in machine learning. In International Workshop on Multiple Classifier Systems; Springer: Berlin/Heidelberg, Germany, 2000; pp. 1–15.

6. Das, S.; Venugopal, D.; Shiva, S. A Holistic Approach for Detecting DDoS Attacks by Using Ensemble Unsupervised Machine Learning. In Proceedings of the Future of Information and Communication Conference, San Francisco, CA, USA, 5–6 March 2020; pp. 721–738.

7. Das, S.; Mahfouz, A.M.; Venugopal, D.; Shiva, S. DDoS Intrusion Detection Through Machine Learning Ensemble. In Proceedings of the 2019 IEEE 19th International Conference on Software Quality, Reliability and Security Companion (QRS-C), Sofia, Bulgaria, 22–26 July 2019; pp. 471–477.

8. Ashrafuzzaman, M.; Das, S.; Chakhchoukh, Y.; Shiva, S.; Sheldon, F.T. Detecting stealthy false data injection attacks in the smart grid using ensemble-based machine learning. Comput. Secur. 2020, 97, 101994.

9. Belavagi, M.C.; Muniyal, B. Performance evaluation of supervised machine learning algorithms for intrusion detection. Procedia Comput. Sci. 2016, 89, 117–123.

10. Ashfaq, R.A.R.; Wang, X.Z.; Huang, J.Z.; Abbas, H.; He, Y.L. Fuzziness based semi-supervised learning approach for intrusion detection system. Inf. Sci. 2017, 378, 484–497.

11. MeeraGandhi, G. Machine learning approach for attack prediction and classification using supervised learning algorithms. Int. J. Comput. Sci. Commun. 2010, 1, 11465–11484.

12. Lippmann, R.; Haines, J.W.; Fried, D.J.; Korba, J.; Das, K. The 1999 DARPA off-line intrusion detection evaluation. Comput. Netw. 2000, 34, 579–595.

13. Perez, D.; Astor, M.A.; Abreu, D.P.; Scalise, E. Intrusion detection in computer networks using hybrid machine learning techniques. In Proceedings of the 2017 XLIII Latin American Computer Conference (CLEI), Cordoba, Argentina, 4–8 September 2017; pp. 1–10.

14. Villalobos, J.J.; Rodero, I.; Parashar, M. An unsupervised approach for online detection and mitigation of high-rate DDoS attacks based on an in-memory distributed graph using streaming data and analytics. In Proceedings of the Fourth IEEE/ACM International Conference on Big Data Computing, Applications and Technologies, Austin, TX, USA, 5–8 December 2017; pp. 103–112.

15. Jabez, J.; Muthukumar, B. Intrusion detection system (IDS): Anomaly detection using outlier detection approach. Procedia Comput. Sci. 2015, 48, 338–346.

16. Bindra, N.; Sood, M. Detecting DDoS attacks using machine learning techniques and contemporary intrusion detection dataset. Autom. Control. Comput. Sci. 2019, 53, 419–428.

17. Lima Filho, F.S.d.; Silveira, F.A.; de Medeiros Brito Junior, A.; Vargas-Solar, G.; Silveira, L.F. Smart detection: An online approach for DoS/DDoS attack detection using machine learning. Secur. Commun. Netw. 2019, 2019.

18. Idhammad, M.; Afdel, K.; Belouch, M. Semi-supervised machine learning approach for DDoS detection. Appl. Intell. 2018, 48, 3193–3208.

19. Suresh, M.; Anitha, R. Evaluating machine learning algorithms for detecting DDoS attacks. In Proceedings of the International Conference on Network Security and Applications, Chennai, India, 15–17 July 2011; pp. 441–452.

20. Usha, G.; Narang, M.; Kumar, A. Detection and Classification of Distributed DoS Attacks Using Machine Learning. In Computer Networks and Inventive Communication Technologies; Springer: Berlin/Heidelberg, Germany, 2021; pp. 985–1000.

21. Zhang, N.; Jaafar, F.; Malik, Y. Low-rate DoS attack detection using PSD based entropy and machine learning. In Proceedings of the 2019 6th IEEE International Conference on Cyber Security and Cloud Computing (CSCloud)/2019 5th IEEE International Conference on Edge Computing and Scalable Cloud (EdgeCom), Paris, France, 21–23 June 2019; pp. 59–62.

22. Yuan, X.; Li, C.; Li, X. DeepDefense: Identifying DDoS attack via deep learning. In Proceedings of the 2017 IEEE International Conference on Smart Computing (SMARTCOMP), Hong Kong, China, 29–31 May 2017; pp. 1–8.

23. Hou, J.; Fu, P.; Cao, Z.; Xu, A. Machine learning based DDoS detection through netflow analysis. In Proceedings of the MILCOM 2018-2018 IEEE Military Communications Conference (MILCOM), Los Angeles, CA, USA, 29–31 October 2018; pp. 1–6.

24. Wolpert, D.H. Stacked generalization. Neural Netw. 1992, 5, 241–259.

25. Smyth, P.; Wolpert, D. Stacked density estimation. In Proceedings of the Advances in neural information processing systems, Denver, CO, USA, 30 November–5 December 1998; pp. 668–674.

26. Hosseini, S.; Azizi, M. The hybrid technique for DDoS detection with supervised learning algorithms. Comput. Netw. 2019, 158, 35–45.

27. Ao, X.; Luo, P.; Ma, X.; Zhuang, F.; He, Q.; Shi, Z.; Shen, Z. Combining supervised and unsupervised models via unconstrained probabilistic embedding. Inf. Sci. 2014, 257, 101–114.

28. Mittal, M.; Kumar, K.; Behal, S. Deep learning approaches for detecting DDoS attacks: A systematic review. Soft Comput. 2023, 27, 13039–13075.

29. Balaban, D. Are you Ready for These 26 Different Types of DDoS Attacks? Secur. Mag. 2020. Available online: https://www.securitymagazine.com/articles/92327-are-you-ready-for-these-26-different-types-of-ddos-attacks (accessed on 14 February 2024).

30. Hastie, T.; Tibshirani, R.; Friedman, J. The Elements of Statistical Learning: Data Mining, Inference, and Prediction, 2nd ed.; Springer: New York, NY, USA, 2008.

31. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

32. Tavallaee, M.; Bagheri, E.; Lu, W.; Ghorbani, A.A. A detailed analysis of the KDD CUP 99 data set. In Proceedings of the 2009 IEEE Symposium on Computational Intelligence for Security and Defense Applications, Ottawa, ON, Canada, 8–10 July 2009; pp. 1–6.

33. Moustafa, N.; Slay, J. UNSW-NB15: A comprehensive data set for network intrusion detection systems. In Proceedings of the 2015 Military Communications and Information Systems Conference (MilCIS), Canberra, ACT, Australia, 10–12 November 2015; pp. 1–6.

34. Sharafaldin, I.; Lashkari, A.H.; Ghorbani, A.A. Toward generating a new intrusion detection dataset and intrusion traffic characterization. In Proceedings of the 4th International Conference on Information Systems Security and Privacy (ICISSP 2018), Funchal, Madeira, Portugal, 22–24 January 2018; pp. 108–116.

35. Das, S.; Venugopal, D.; Shiva, S.; Sheldon, F.T. Empirical evaluation of the ensemble framework for feature selection in DDoS attack. In Proceedings of the 2020 7th IEEE International Conference on Cyber Security and Cloud Computing (CSCloud)/2020 6th IEEE International Conference on Edge Computing and Scalable Cloud (EdgeCom), New York, NY, USA, 1–3 August 2020; pp. 56–61.

36. Sokolova, M.; Lapalme, G. A systematic analysis of performance measures for classification tasks. Inf. Process. Manag. 2009, 45, 427–437.

| Dataset | Class | Training: Total | Training: Benign | Training: DDoS | Testing: Total | Testing: Benign | Testing: DDoS | Verification: Total | Verification: Benign | Verification: DDoS |
|---|---|---|---|---|---|---|---|---|---|---|
| NSL-KDD | Sup | 113,268 | 67,341 (59.45%) | 45,927 (40.55%) | 16,164 | 9608 (59.44%) | 6556 (40.56%) | 1000 | 900 | 100 |
| | Unsup | 68,014 | 67,341 (99%) | 673 (1%) | | | | | | |
| UNSW-NB15 | Sup | 25,265 | 13,000 (51.4%) | 12,265 (48.6%) | 12,090 | 8000 (66.17%) | 4090 (33.83%) | 1000 | 900 | 100 |
| | Unsup | 23,230 | 23,000 (99%) | 230 (1%) | | | | | | |
| CICIDS2017 | Sup | 641,974 | 375,101 (58.59%) | 265,873 (41.41%) | 179,549 | 125,728 (70%) | 53,821 (30%) | 1000 | 900 | 100 |
| | Unsup | 379,861 | 376,101 (99%) | 3760 (1%) | | | | | | |
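The unsupervised rows of the table pair the full benign training pool with a DDoS sample of about 1%. The sketch below shows one way to build such a training set. It assumes the DDoS records are drawn uniformly at random and that the sample size is 1% of the benign count, truncated to an integer. This assumption reproduces the NSL-KDD (673) and UNSW-NB15 (230) counts, but the paper's exact sampling procedure is not given here. `build_unsupervised_train` is a hypothetical helper.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def build_unsupervised_train(
    benign: Sequence[T], ddos: Sequence[T], ddos_ratio: float = 0.01, seed: int = 0
) -> Tuple[List[T], List[int]]:
    """Keep every benign record and add a random DDoS sample of ~1% of the benign count.

    Returns shuffled records plus labels (0 benign, 1 DDoS). The labels are kept
    only for later evaluation; the unsupervised detectors never see them.
    """
    rng = random.Random(seed)
    n_ddos = min(len(ddos), int(len(benign) * ddos_ratio))
    sampled = rng.sample(list(ddos), n_ddos)
    rows = [(x, 0) for x in benign] + [(x, 1) for x in sampled]
    rng.shuffle(rows)
    records = [x for x, _ in rows]
    labels = [y for _, y in rows]
    return records, labels


# Integer IDs stand in for the 67,341 benign and 45,927 DDoS NSL-KDD training records.
records, labels = build_unsupervised_train(range(67341), range(45927))
print(len(records), sum(labels))
```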

| Feature Set | Algorithm Used | Feature Count | Features |
|---|---|---|---|
| FS-1 | Degree of dependency and dependency ratio | 24 | 2, 3, 4, 5, 7, 8, 10, 13, 23, 24, 25, 26, 27, 28, 29, 30, 33, 34, 35, 36, 38, 39, 40, 41 |
| FS-2 | FS-3 ∩ FS-4 ∩ FS-5 ∩ FS-6 | 13 | 3, 4, 29, 33, 34, 12, 39, 5, 30, 38, 25, 23, 6 |
| FS-3 | Information gain | 14 | 5, 3, 6, 4, 30, 29, 33, 34, 35, 38, 12, 39, 25, 23 |
| FS-4 | Gain ratio | 14 | 12, 26, 4, 25, 39, 6, 30, 38, 5, 29, 3, 37, 34, 33 |
| FS-5 | Chi-squared | 14 | 5, 3, 6, 4, 29, 30, 33, 34, 35, 12, 23, 38, 25, 39 |
| FS-6 | ReliefF | 14 | 3, 29, 4, 32, 38, 33, 39, 12, 36, 23, 26, 34, 40, 31 |
| FS-7 | Mutual information gain | 12 | 23, 5, 3, 6, 32, 24, 12, 2, 37, 36, 8, 31 |
| FS-8 | Domain knowledge | 16 | 2, 4, 10, 14, 17, 19, 21, 24, 25, 26, 27, 30, 31, 34, 35, 39 |
| FS-9 | Gain ratio | 35 | 9, 26, 25, 4, 12, 39, 30, 38, 6, 29, 5, 37, 11, 3, 22, 35, 34, 14, 33, 23, 8, 10, 31, 27, 28, 32, 1, 36, 2, 41, 40, 17, 13, 16, 19 |
| FS-10 | Information gain | 19 | 3, 4, 5, 6, 12, 23, 24, 25, 26, 29, 30, 32, 33, 34, 35, 36, 37, 38, 39 |
| FS-11 | Genetic algorithm | 15 | 4, 5, 6, 8, 10, 12, 17, 23, 26, 29, 30, 32, 37, 38, 39 |
| FS-12 | Full set | 41 | All features |
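Read as a strict set intersection, FS-3 ∩ FS-4 ∩ FS-5 ∩ FS-6 contains 8 features, whereas the FS-2 row lists 13. The 13 listed features are exactly those chosen by at least three of the four selectors (information gain, gain ratio, chi-squared, ReliefF). The Python sketch below checks both readings using the feature indices copied from the table. `selected_by_at_least` is a hypothetical helper, and this section does not state which rule was actually applied.

```python
from collections import Counter
from typing import Set

# Feature indices copied from the FS-2 to FS-6 rows of the feature-set table.
fs3 = {5, 3, 6, 4, 30, 29, 33, 34, 35, 38, 12, 39, 25, 23}   # information gain
fs4 = {12, 26, 4, 25, 39, 6, 30, 38, 5, 29, 3, 37, 34, 33}   # gain ratio
fs5 = {5, 3, 6, 4, 29, 30, 33, 34, 35, 12, 23, 38, 25, 39}   # chi-squared
fs6 = {3, 29, 4, 32, 38, 33, 39, 12, 36, 23, 26, 34, 40, 31}  # ReliefF
fs2_listed = {3, 4, 29, 33, 34, 12, 39, 5, 30, 38, 25, 23, 6}


def selected_by_at_least(k: int, *feature_sets: Set[int]) -> Set[int]:
    """Return the features chosen by at least k of the given selectors."""
    votes = Counter(f for s in feature_sets for f in s)
    return {f for f, n in votes.items() if n >= k}


strict = fs3 & fs4 & fs5 & fs6                     # picked by all four selectors
majority = selected_by_at_least(3, fs3, fs4, fs5, fs6)
print(sorted(strict))           # [3, 4, 12, 29, 33, 34, 38, 39]
print(majority == fs2_listed)   # True
```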

| | Predicted Normal | Predicted Attack |
|---|---|---|
| Actual Normal | TN | FP |
| Actual Attack | FN | TP |
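The four cells of the matrix can be tallied from paired lists of true and predicted labels. The pure-Python sketch below assumes DDoS is encoded as 1 and benign as 0; `confusion_counts` is a hypothetical helper. For binary 0/1 labels, scikit-learn's `confusion_matrix(y_true, y_pred).ravel()` returns the same four numbers in the order TN, FP, FN, TP.

```python
from typing import Dict, Sequence


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, int]:
    """Tally TN, FP, FN, TP with DDoS (1) as positive and benign (0) as negative."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    counts = {"TN": 0, "FP": 0, "FN": 0, "TP": 0}
    for actual, predicted in zip(y_true, y_pred):
        if actual == 1:
            counts["TP" if predicted == 1 else "FN"] += 1
        else:
            counts["FP" if predicted == 1 else "TN"] += 1
    return counts


print(confusion_counts([0, 0, 1, 1, 1], [0, 1, 1, 0, 1]))
# {'TN': 1, 'FP': 1, 'FN': 1, 'TP': 2}
```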

| Dataset | Learning Type | Classifier Category | Model | F1 Score | Accuracy | Precision | Recall | FPR | ROC AUC | Elapsed Time (s) |
|---|---|---|---|---|---|---|---|---|---|---|
| NSL-KDD | Supervised | Individual | SVM | 0.967 | 0.972 | 0.984 | 0.949 | 0.012 | 0.959 | 468.64 |
| | | Ensemble | Ens_DT | 0.971 | 0.975 | 0.991 | 0.952 | 0.006 | 0.977 | 468.68 |
| | Unsupervised | Individual | EE | 0.897 | 0.910 | 0.904 | 0.891 | 0.075 | 0.908 | 49.59 |
| | | Ensemble | Ens_NB | 0.951 | 0.955 | 0.926 | 0.978 | 0.063 | 0.957 | 1122.49 |
| UNSW-NB15 | Supervised | Individual | DT | 0.978 | 0.985 | 0.977 | 0.979 | 0.012 | 0.984 | 0.282 |
| | | Ensemble | Ens_LR | 0.979 | 0.986 | 0.982 | 0.976 | 0.009 | 0.983 | 26.29 |
| | Unsupervised | Individual | EE | 0.855 | 0.885 | 0.747 | 1.000 | 0.173 | 0.913 | 3.890 |
| | | Ensemble | Ens_LR | 0.896 | 0.925 | 0.851 | 0.945 | 0.085 | 0.930 | 313.56 |
| CICIDS2017 | Supervised | Individual | DT | 0.999 | 0.999 | 0.999 | 0.999 | 0.001 | 0.999 | 60.46 |
| | | Ensemble | Ens_LR | 0.999 | 0.999 | 0.999 | 0.999 | 0.001 | 1.000 | 112,599.96 |
| | Unsupervised | Individual | EE | 0.648 | 0.783 | 0.637 | 0.659 | 0.163 | 0.748 | 203.87 |
| | | Ensemble | Ens_DT | 0.703 | 0.834 | 0.773 | 0.645 | 0.083 | 0.781 | 54,780.97 |

| Dataset | Method | Best Model | Instances: DDoS | Instances: Benign | TP | FP | TN | FN | F1 Score | Accuracy | Precision | Recall | FPR |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| NSL-KDD | Sup En | Ens_DT | 900 | 100 | 861 | 1 | 99 | 39 | 0.977 | 0.960 | 0.999 | 0.957 | 0.010 |
| | Unsup En | Ens_NB | | | 859 | 5 | 95 | 41 | 0.974 | 0.954 | 0.994 | 0.954 | 0.050 |
| | OR’ed | N/A | | | 861 | 2 | 98 | 39 | 0.977 | 0.959 | 0.998 | 0.957 | 0.020 |
| UNSW-NB15 | Sup En | Ens_LR | 900 | 100 | 863 | 2 | 98 | 37 | 0.978 | 0.961 | 0.998 | 0.959 | 0.020 |
| | Unsup En | Ens_LR | | | 739 | 7 | 93 | 161 | 0.898 | 0.832 | 0.991 | 0.821 | 0.070 |
| | OR’ed | N/A | | | 863 | 4 | 96 | 37 | 0.977 | 0.959 | 0.995 | 0.959 | 0.040 |
| CICIDS2017 | Sup En | Ens_LR | 900 | 100 | 892 | 1 | 99 | 8 | 0.995 | 0.991 | 0.999 | 0.991 | 0.010 |
| | Unsup En | Ens_DT | | | 723 | 9 | 91 | 177 | 0.886 | 0.814 | 0.988 | 0.803 | 0.090 |
| | OR’ed | N/A | | | 892 | 5 | 95 | 8 | 0.993 | 0.987 | 0.994 | 0.991 | 0.050 |

| Methodology | Ensemble Mechanism | Dataset | Accuracy | Precision | Recall | FPR |
|---|---|---|---|---|---|---|
| Ensemble [6] | Adaptive Voting | NSL-KDD | 85.20% | - | - | - |
| Ensemble [7] | Bagging | NSL-KDD | 84.25% | - | - | - |
| Ensemble [8] | DAREnsemble | NSL-KDD | 78.80% | - | - | - |
| Supervised [16] | RF | CICIDS2017 | 96.13% | - | - | - |
| Ensemble [23] | Adaboosting | CICIDS2017 | 99% | - | - | 0.50% |
| Semisupervised [18] | Extra-Trees | UNSW-NB15 | 93.71% | - | - | 0.46% |
| Our Work | Supervised and Unsupervised | NSL-KDD | 97.50% | 99.10% | 95.20% | 0.60% |
| Our Work | Supervised and Unsupervised | UNSW-NB15 | 98.60% | 98.20% | 97.60% | 0.90% |
| Our Work | Supervised and Unsupervised | CICIDS2017 | 99.90% | 99.90% | 99.90% | 0.10% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Das, S.; Ashrafuzzaman, M.; Sheldon, F.T.; Shiva, S. Ensembling Supervised and Unsupervised Machine Learning Algorithms for Detecting Distributed Denial of Service Attacks. Algorithms 2024, 17, 99. https://doi.org/10.3390/a17030099
