Abstract

The paper is devoted to the theoretical and numerical analysis of a two-step method, constructed as a modification of Polyak’s heavy ball method with the inclusion of an additional momentum parameter. For the quadratic case, the convergence conditions are obtained with the use of the first Lyapunov method. For the non-quadratic case of sufficiently smooth strongly convex functions, these conditions guarantee local convergence. An approach to finding optimal parameter values based on the solution of a constrained optimization problem is proposed. The effect of the additional parameter on the convergence rate is analyzed. With the use of an ordinary differential equation equivalent to the method, the damping effect of this parameter on the oscillations typical of the non-monotonic convergence of the heavy ball method is demonstrated. In numerical examples for non-quadratic convex and non-convex test functions and machine learning problems (regularized smoothed elastic net regression, logistic regression, and recurrent neural network training), the positive influence of the additional parameter on the convergence process is demonstrated.

1. Introduction

Many problems in machine learning [1], optimal control [2], applied linear algebra [3], system identification [4], and other applications lead to unconstrained convex optimization problems. The theory of convex optimization is well developed [5,6,7], but there remain methods that can be further analyzed or improved. A typical example of an improvement of the standard gradient descent method is the heavy ball method (HBM), proposed by B.T. Polyak in [7,8], which is based on the inclusion of a momentum term. The local convergence of this method for twice continuously differentiable, l-strongly convex functions with Lipschitz gradient was proved in [7]. Recently, Ghadimi et al. [9] formulated the conditions of global linear convergence. Aujol et al. [10] analyzed the dynamical system associated with the HBM in order to obtain optimal convergence rates for convex functions with some additional properties, such as quasi-strong and strong convexity.

In the last few decades, extended modifications of the HBM have been developed, and interesting results on their behavior have been obtained. Bhaya and Kaszkurewicz [11] demonstrated that the HBM for minimization of quadratic functions can be considered a stationary version of the conjugate gradient method. Recently, Goujaud et al. [12] proposed an adaptive modification of the HBM with Polyak stepsizes and demonstrated that this method can be considered a variant of the conjugate gradient method for quadratic problems, having many advantages, such as finite-time convergence and instant optimality. Danilova et al. [13] demonstrated the non-monotonic convergence of the HBM and analyzed the peak effect for ill-conditioned problems. To damp this effect, an averaged HBM was constructed in [14]. Global and local convergence of the momentum method for semialgebraic functions with locally Lipschitz gradients was demonstrated in [15]. Wang et al. [16] used the theory of PID controllers for the construction of momentum methods for deep neural network training. A quasi-hyperbolic momentum method with two parameters (the momentum and an additional parameter that performs a sort of interpolation between gradient descent and the HBM) was presented in [17]. A complete analysis of such algorithms for deterministic and stochastic cases was performed in [18], where the influence of parameters on stability and convergence rate was analyzed. Sutskever et al. [19] proposed a stochastic version of Nesterov’s method, where the momentum was included bilinearly with the step. An improved accelerated momentum method for stochastic optimization was presented in [20].

In [21], the authors investigated the ravine method and momentum methods from the perspective of dynamical systems. A high-resolution differential equation describing these methods was proposed, and the damping effect of the additional term driven by the Hessian was demonstrated. Similar results for Hessian damping were obtained in [22] for the proximal methods. A continuous system with damping for primal-dual convex problems was constructed in [23]. Alecsa et al. [24] investigated a perturbed heavy ball system with a vanishing damping term that contained a Tikhonov regularization term. It was demonstrated that the presence of a regularization term led to a strong convergence of the descent trajectories in the case of smooth functions. An analysis of momentum methods from the standpoint of Hamiltonian dynamical systems was presented in [25].

Yan et al. [26] proposed a modification of the HBM with an additional parameter and an additional internal stage. In [27], a method with three momentum parameters (the so-called triple momentum method) was presented. This method has been classified as the fastest known globally convergent first-order method. In [28], the integral quadratic constraint method used in robust control theory was applied to the construction of first-order methods. A method with two momentum parameters was introduced. In [29], this scheme was analyzed for a strongly convex function with a Lipschitz gradient, and the range of the possible convergence rate was presented.

Despite the results obtained for the different momentum methods mentioned above, the roles of the parameters in computational schemes with momentum are still not well understood. As noted by several investigators, understanding the role of momentum remains important for practical problems. For example, in [19], the authors demonstrated that momentum is critical for good performance in deep learning problems. However, for another modification of the HBM, Ma and Yarats [17] demonstrated that momentum in practice can have a minor effect, which is insufficient for acceleration of convergence. Therefore, additional theoretical analysis of methods with momentum remains relevant.

The presented paper is devoted to the analysis of a method with two momentum parameters, as proposed in [28]. For l-strongly convex, L-smooth functions, this method was analyzed in [29], where global convergence for a special choice of parameters was proven. In the presented paper, we focus on the case of strongly convex quadratic functions, in order to obtain the inequalities for parameters that guarantee global convergence, to obtain optimal values of the parameters, and to understand the effect of an additional momentum parameter on the convergence rate. Convergence conditions are obtained, and corresponding theorems are formulated. The constrained optimization problem for obtaining optimal parameters is stated. As demonstrated in numerical experiments, in the quadratic case, the inclusion of an additional parameter does not improve the convergence rate. The role of this parameter is demonstrated with the use of the ordinary differential equation (ODE), which is equivalent to the method. This parameter provides an additional damping effect on the oscillations that are typical for the HBM because of its non-monotonic convergence, and can be useful in practice. In the numerical experiments for non-quadratic functions, it is demonstrated that this parameter also provides damping of the oscillations and leads to faster convergence to the minimum in comparison with the standard HBM. Additionally, the effect of this parameter is demonstrated for the non-convex function that arises in recurrent neural network training.

The paper has the following structure: Section 2 is devoted to the theoretical analysis of the method as applied to strongly convex quadratic functions. The effect of an additional momentum parameter is analyzed. The results of the numerical experiments for non-quadratic, strongly convex, and non-convex functions are presented in Section 3. Some concluding remarks are made in Section 4.

2. Analysis of Two-Step Method

Let the scalar function from be considered. We try to find its minimizer . So the unconstrained minimization problem is stated:

The gradient descent method (GD) for numerical solution of (1) is written as

where is a step. If we additionally propose that , the optimal step and convergence rate for (2) are presented as in [7]

where is the condition number and can be associated with the minimum and maximum eigenvalues of a Hessian of .

Polyak’s heavy ball method is presented as in [7,8]

where is the momentum. The optimal values in the case of strongly convex quadratic function are written as in [7]

Lessard et al. [28] proposed the following method with an additional momentum parameter:

As can be seen, for the case of , method (4) leads to (3). In [29], the global convergence of this method for with the convergence rate is demonstrated for the following specific choice of parameters:
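The relation between the three iterations can be illustrated with a minimal Python sketch. It assumes that method (4) has the form given by Lessard et al. [28], x_{k+1} = x_k - h*grad f(x_k + beta2*(x_k - x_{k-1})) + beta1*(x_k - x_{k-1}); the names `two_momentum`, `beta1`, `beta2`, the diagonal test quadratic, and all numerical values are illustrative assumptions, not notation or data taken from the paper.

```python
import math


def two_momentum(grad, x0, h, beta1, beta2, iters):
    """Assumed form of method (4); beta2 = 0 gives the HBM, beta1 = beta2 = 0 gives GD."""
    x_prev = list(x0)
    x = list(x0)  # the first step starts with zero momentum
    for _ in range(iters):
        d = [a - b for a, b in zip(x, x_prev)]          # x_k - x_{k-1}
        y = [a + beta2 * di for a, di in zip(x, d)]     # point where the gradient is taken
        g = grad(y)
        x_next = [a - h * gi + beta1 * di for a, gi, di in zip(x, g, d)]
        x_prev, x = x, x_next
    return x


def heavy_ball(grad, x0, h, beta, iters):
    return two_momentum(grad, x0, h, beta, 0.0, iters)


def gradient_descent(grad, x0, h, iters):
    return two_momentum(grad, x0, h, 0.0, 0.0, iters)


def norm(v):
    return math.sqrt(sum(vi * vi for vi in v))


# Hypothetical test problem: f(x) = 0.5 * sum(lam_i * x_i^2), minimizer at the origin.
LAMS = [1.0, 10.0, 100.0]


def quad_grad(x):
    return [lam * xi for lam, xi in zip(LAMS, x)]


l, L = min(LAMS), max(LAMS)
x0 = [1.0, 1.0, 1.0]

# Classical optimal parameters for a strongly convex quadratic (Polyak [7]).
h_gd = 2.0 / (l + L)
h_hb = 4.0 / (math.sqrt(L) + math.sqrt(l)) ** 2
beta_hb = ((math.sqrt(L) - math.sqrt(l)) / (math.sqrt(L) + math.sqrt(l))) ** 2

x_gd = gradient_descent(quad_grad, x0, h_gd, 200)
x_hb = heavy_ball(quad_grad, x0, h_hb, beta_hb, 200)
# Illustrative (not optimal) parameters with a nonzero second momentum.
x_tm = two_momentum(quad_grad, x0, 0.02, 0.6, 0.1, 200)
```

Under these assumptions, the run with the HBM parameters ends much closer to the minimizer than the GD run after the same number of iterations. Note that reusing the HBM-optimal step with a nonzero `beta2` is not safe in general, which is why the third run uses a smaller step.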

In the theoretical part of the presented paper, we try to analyze the influence of parameter on the convergence of method (4) for the case of a quadratic function, written as

where , A is a positive definite and symmetric matrix with eigenvalues . The gradient of this function is computed as , and is treated as the solution of the linear system . The obtained results can be considered as the results of the local convergence of method (4), applied to , because in the neighborhood of from this class can be presented as (5) with . This approach for obtaining local convergence conditions and optimal parameters values is widely used in literature [7,18].

Method (4), when applied to (5), leads to the following difference system:

where E is the identity matrix.

2.1. Convergence Conditions

The following theorem on the convergence of an iterative method, as presented by (6), can be formulated

Theorem 1.

Proof of Theorem 1.

- (1)

- Let the new variable be introduced. Then, method (6) can be rewritten as a single-step method:

  where matrix T is written as

  This method converges if, and only if, (spectral radius of matrix T) is strictly less than unity [3].

  Matrix A can be represented by the spectral decomposition , where is the diagonal matrix of eigenvalues of A, S is a matrix of eigenvectors, and . The following transformation of T can be introduced: , where

  Matrix has the same eigenvalues as matrix T.

  Let us demonstrate that has the same spectrum as the following matrix:

  where are matrices, which are presented as

  Matrix is presented as

  where , , , . The determinant of this matrix is computed by the following rule [30]:

  where , .

  The determinant of the block-diagonal matrix is written as

  and, as can be seen, it is equal to . So, both matrices have the same eigenvalues , and these eigenvalues are computed as eigenvalues of matrices .

- (2)

- According to the result presented above, the analysis of eigenvalues of T leads to the analysis of roots of an algebraic equation:

  In order to guarantee convergence, parameters should be chosen in a way which guarantees that . For obtaining these conditions, we perform conformal mapping of the interior of the unit circle to the set with use of the following function:

  The conditions on the coefficients of (10) that guarantee roots are provided by the Routh–Hurwitz criterion [30,31]. The Hurwitz matrix for (10) is written as

  The conditions of the Routh–Hurwitz criterion lead to two inequalities:

  Inequality (11) is valid , according to the ranges of values of stated in the conditions of the theorem. Inequality (12) is rewritten as

  and it is valid for values of h chosen from Inequality (7). Thus, condition (7) guarantees that , and as a consequence that under the stated conditions. This leads to the convergence of (6) for any .

□
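The reduction used in the proof can be checked numerically with a short Python sketch. It assumes that method (4) has the form x_{k+1} = x_k - h*grad f(x_k + beta2*(x_k - x_{k-1})) + beta1*(x_k - x_{k-1}), so that each eigenvalue lam of A contributes the quadratic z^2 - b*z + c = 0 with b = 1 + beta1 - h*lam*(1 + beta2) and c = beta1 - h*lam*beta2. The function names and sampling ranges are hypothetical, and the three inequalities below are the standard Routh–Hurwitz conditions for a real quadratic after the mapping z = (w + 1)/(w - 1); that they coincide term by term with (11) and (12) is an assumption.

```python
import cmath
import random


def coefficients(h, beta1, beta2, lam):
    """Coefficients (b, c) of z^2 - b*z + c = 0 for one eigenvalue lam (assumed form of (4))."""
    b = 1.0 + beta1 - h * lam * (1.0 + beta2)
    c = beta1 - h * lam * beta2
    return b, c


def max_root_modulus(h, beta1, beta2, lam):
    """Largest modulus of the two roots, computed directly from the quadratic formula."""
    b, c = coefficients(h, beta1, beta2, lam)
    s = cmath.sqrt(b * b - 4.0 * c)
    return max(abs((b + s) / 2.0), abs((b - s) / 2.0))


def hurwitz_stable(h, beta1, beta2, lam):
    """Stability test without computing roots.

    z = (w + 1)/(w - 1) maps |z| < 1 onto Re w < 0 and turns the quadratic into
    (1 - b + c) w^2 + 2 (1 - c) w + (1 + b + c) = 0. For a real quadratic, all roots
    lie in Re w < 0 exactly when the three coefficients are positive.
    """
    b, c = coefficients(h, beta1, beta2, lam)
    return (1.0 - b + c > 0.0) and (1.0 - c > 0.0) and (1.0 + b + c > 0.0)


def count_mismatches(n_samples=2000, seed=0):
    """Compare both stability tests on random parameter values."""
    rng = random.Random(seed)
    mismatches = 0
    for _ in range(n_samples):
        h = rng.uniform(0.0, 0.05)
        beta1 = rng.uniform(-0.5, 1.5)
        beta2 = rng.uniform(-0.5, 1.5)
        lam = rng.uniform(1.0, 100.0)
        by_roots = max_root_modulus(h, beta1, beta2, lam) < 1.0
        if by_roots != hurwitz_stable(h, beta1, beta2, lam):
            mismatches += 1
    return mismatches


mismatches = count_mismatches()
```

In this form, the condition 1 - b + c > 0 reduces to h*lam > 0, and 1 + b + c > 0 reduces to h < 2*(1 + beta1)/(lam*(1 + 2*beta2)), which for beta2 = 0 is the familiar HBM step restriction.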

2.2. Analysis of Convergence Rate

Let us analyze the convergence rate of method (6). At first, let us obtain the expression for the spectral radius of matrix T. Let and the spectral radius be presented as the function , where . The expression for r can be obtained with the use of an expression for the roots of (8):

where , , and is written as

If is considered a function of , the following theorem on its extremal property can be formulated:

Theorem 2.

The maximum value of as a function of takes place for or .

Proof of Theorem 2.

- (1)

- Let us obtain the expression for . For in the case of , the following inequality takes place: and if , and in this case . If , we obtain that and . So, if and , we have that .

  If and , we have that , and for we have that if , then . If , then and . So if and , we obtain that .

  For , it is easy to see that . The case of and case are trivial to analyze. Thus, it is demonstrated that

- (2)

- Let us analyze the behavior of for . The expression for D is written as

  So, the non-negative values of D are associated with the solutions of the following inequality:

  The corresponding discriminant is equal to . As can be seen, solutions to this inequality exist, when

  The opposite inequality guarantees that it is valid for all . For analysis of the general situation of the sign of D this restriction is too strict, so we consider the case of condition (14).

  The case of leads to the investigation of function . Condition leads to the restriction , which is valid for . It should be noted that this condition correlates with (7) for values of , under condition . The derivative of is written as

  and for , it is strictly negative, so decreases on the considered interval and its maximum is equal to .

  For , we obtain that . The case corresponds to the interval , where r decreases, and case corresponds to , where r increases. So, the maximum of r in this situation is realized in point or .

  For , two situations should be considered. For , the behavior of function should be analyzed. Its derivative is written as

  and according to , we can see that if , will be negative, so decreases. Let us determine where this inequality is valid:

  so

  Function is strictly positive, when

  As can be seen, this inequality is valid when condition (14) is realized on values of . So, in the interval , function decreases.

  Positive values of can be realized when the following inequality is valid:

  which leads to . According to , this leads to the evident inequality , which takes place for under condition (15).

  Let us demonstrate that (16) correlates with (14): Inequality (16) leads to , which leads to the following inequality:

  which is equivalent to

  which is equivalent to (14).

  It is easy to see that

  so, when (corresponds to ), r decreases when and increases when and its maximum is realized in the boundary point.

  The case leads to the analysis of function on the interval, defined by inequality (see case )

  The first derivative of is written as

  According to , we obtain that if (this takes place when ), this derivative is strictly positive. According to (17), it is valid for the interval defined by (18), so and, as a consequence, function r increases in the case of corresponding to (18) and its maximum takes place in the right boundary point , if intervals and (18) have an intersection.

  Thus, for all values of D, we can see that r reaches its maximum value at the boundaries of interval .

□

Notation 1.

Formulated theorems for the case of function (5) provide the conditions that guarantee global convergence [7]:

where .

If the non-quadratic is considered, then these conditions provide a local convergence (see Theorem 1 from subsection 2.1.2 in [7]). Any sufficiently smooth function in the neighborhood of can be presented as

and according to the following property:

we can see that if ∃ , , then for method (4) the following inequality is obtained :

Notation 2.

Theorem 2 provides an approach to obtain optimal parameters with the solution of the following problem for obtaining an optimal convergence rate:

where Σ is defined as:

Similar minimax problems arise in the theory of the standard HBM (3) [7] and multiparametric method in [18].

2.3. Optimal Parameters

In this subsection, we discuss the solution of minimization problems (19) and (20) and the following problem, which is stated in order to analyze the effect of parameter :

where

So in (21) is treated as an external parameter, which can be varied. In our computations, problem (21) is solved using the following approach: in the first stage, we obtain three ’good’ initial points in by random search, and in the second stage, we apply the Nelder–Mead method in order to obtain the optimal point more precisely than in the first stage. For computations at any value of , we use random points in and the accuracy for the Nelder–Mead method. The use of a large number of random points provides the possibility of obtaining the initial points in the small neighborhood of the optimal point, and the points obtained with the Nelder–Mead method do not leave . This approach to solving the problem is very simple to implement and eliminates the need to use methods of constrained optimization. All computations were performed with codes implemented in Matlab 2021a.
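The first (random search) stage of this procedure can be sketched in Python as follows. This is only an illustration under several assumptions: method (4) is taken in the form x_{k+1} = x_k - h*grad f(x_k + beta2*(x_k - x_{k-1})) + beta1*(x_k - x_{k-1}); following Theorem 2, the worst-case rate is evaluated only at the two boundary eigenvalues l and L; the admissible set is replaced by a simple box together with the requirement that the rate be below one; and the Nelder–Mead refinement stage is omitted. The function names, the sampling box, and the sample count are hypothetical.

```python
import cmath
import math
import random


def rate(h, beta1, beta2, lam):
    """Largest root modulus of z^2 - b*z + c = 0 for one eigenvalue lam (assumed form of (4))."""
    b = 1.0 + beta1 - h * lam * (1.0 + beta2)
    c = beta1 - h * lam * beta2
    s = cmath.sqrt(b * b - 4.0 * c)
    return max(abs((b + s) / 2.0), abs((b - s) / 2.0))


def worst_case_rate(h, beta1, beta2, l, L):
    """Objective of the minimax problem, using the boundary eigenvalues only (Theorem 2)."""
    return max(rate(h, beta1, beta2, l), rate(h, beta1, beta2, L))


def random_search(l, L, n_samples, seed=0, beta2_max=0.5):
    """Sample (h, beta1, beta2) in a box and keep the convergent point with the best rate."""
    rng = random.Random(seed)
    best_value, best_point = float("inf"), None
    for _ in range(n_samples):
        h = rng.uniform(0.0, 4.0 / L)
        beta1 = rng.uniform(0.0, 1.0)
        beta2 = rng.uniform(0.0, beta2_max)
        value = worst_case_rate(h, beta1, beta2, l, L)
        if value < 1.0 and value < best_value:  # reject non-convergent points
            best_value, best_point = value, (h, beta1, beta2)
    return best_value, best_point


l, L = 1.0, 10.0
best_rate, best_params = random_search(l, L, 20000)

# Reference value: the optimal HBM rate for the same condition number.
rho_hb = (math.sqrt(L) - math.sqrt(l)) / (math.sqrt(L) + math.sqrt(l))
h_hb = 4.0 / (math.sqrt(L) + math.sqrt(l)) ** 2
beta_hb = rho_hb ** 2
```

The point returned by `random_search` would then serve as a starting point for a local derivative-free refinement; comparing `best_rate` with `rho_hb` gives a quick check of how close the sampled parameters come to the HBM optimum.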

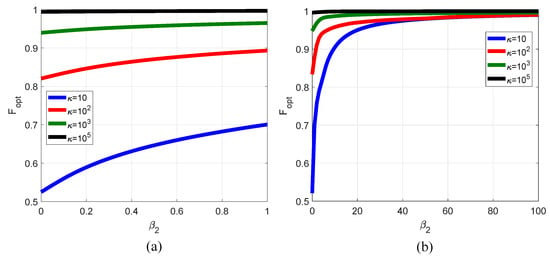

In Figure 1, the plots of optimal values of F are presented for the cases of interval (Figure 1a) and (Figure 1b) for four values of : 10, , , and . As can be seen, for both intervals and all considered values of , the minimum value of is attained at . The value of becomes smaller at smaller values of . The last feature is also mentioned for the multi-parametric method of [18].

Figure 1.

Plots of the dependence of optimal values of F on the value of : (a) ; (b) .

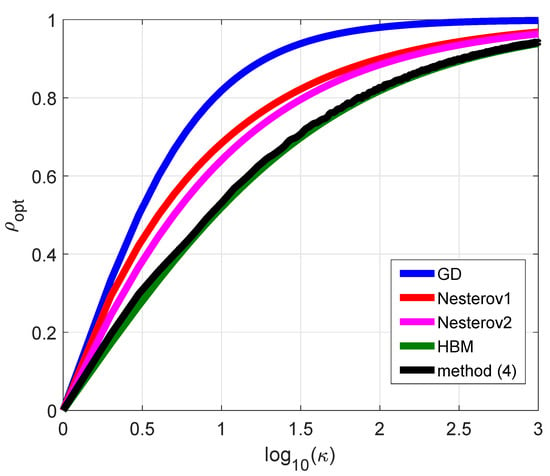

In addition, we try to compare the optimal convergence rate as a function of for method (4) with the optimal rates for the GD method (2), the HBM (3), and the following Nesterov methods:

- (1)

- Nesterov’s accelerated gradient method for (Nesterov1) [6,28]:

- (2)

- Nesterov’s accelerated gradient method for a strongly convex quadratic function (Nesterov2) [28]:

The numerical solution to problem (19) is realized using the same method as for problem (21), but for the Nelder–Mead method, four ’good’ points are obtained with a random search. The interval on is bounded by 0.5, according to the behavior illustrated in Figure 1.

Plots of are presented in Figure 2. As can be seen, the minimum values of took place for methods (3) and (4), and they were very close. So, from the results of the computations, the following conclusion can be drawn: for the quadratic function , parameter does not provide an additional acceleration effect in comparison with the standard HBM (3).

Figure 2.

Plots of the dependence of the optimal convergence rate on logarithm of .

2.4. Equivalent ODE

For an additional analysis of the influence of on the convergence of (4), we consider an approach based on the ODE, which is constructed as a continuous analogue of the iterative method. At present, this approach is widely used for the analysis of optimization methods [7,21,22,23,24,32,33].

Let method (4) for quadratic function , where , , be considered. This function can be treated as a quadratic approximation of the arbitrarily smooth function, which has its minimum zero value in point . Application of (4) leads to the following difference equation:

Let us introduce function , where t is defined as , so and , . Equation (22) can be rewritten as

Let the new parameters , be introduced: , and the following new variable is considered:

So, (23) is rewritten as

For , we find that (24) is rewritten as and with the use of , we obtain the following second-order ODE:

The case of HBM corresponds to [7] and the ODE describes the dynamics of a material point with unit mass under a force with a potential represented by and under a resistive force with coefficient . Thus, if , we have the following mechanical meaning of : this presents an additional damping effect on the solution of the ODE (25) and, as a consequence, on the behavior of method (4). With the use of proper values of , we can realize the damping of oscillations related to the non-monotonic convergence of the method. This is typical for the case of [13]. In Section 3, this will also be illustrated for the minimization of non-quadratic convex and non-convex functions.
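The damping effect can also be observed directly on the scalar difference equation. The following Python sketch iterates the HBM and a two-momentum variant on the hypothetical quadratic f(x) = a*x^2/2, assuming method (4) has the form x_{k+1} = x_k - h*f'(x_k + beta2*(x_k - x_{k-1})) + beta1*(x_k - x_{k-1}). The values a = 1, h = 0.1, beta1 = 0.9, beta2 = 0.5 and the helper names are illustrative assumptions, not values from the paper.

```python
def iterate_1d(a, h, beta1, beta2, x0, iters):
    """Trajectory of the assumed form of method (4) for f(x) = a*x^2/2, starting at rest."""
    xs = [x0, x0]
    for _ in range(iters):
        x_prev, x = xs[-2], xs[-1]
        y = x + beta2 * (x - x_prev)                 # point where the gradient is taken
        xs.append(x - h * a * y + beta1 * (x - x_prev))
    return xs


def tail_envelope(xs, start):
    """Largest |x_k| over the tail k >= start, used as a measure of remaining oscillation."""
    return max(abs(v) for v in xs[start:])


def sign_changes(xs):
    """Number of times the trajectory crosses the minimizer x = 0."""
    return sum(1 for u, v in zip(xs, xs[1:]) if u * v < 0.0)


a, h, beta1 = 1.0, 0.1, 0.9
traj_hb = iterate_1d(a, h, beta1, 0.0, 1.0, 100)   # HBM: beta2 = 0
traj_tm = iterate_1d(a, h, beta1, 0.5, 1.0, 100)   # additional momentum parameter

env_hb = tail_envelope(traj_hb, 50)
env_tm = tail_envelope(traj_tm, 50)
```

For these values both characteristic equations have complex roots, with modulus sqrt(beta1) for the HBM and sqrt(beta1 - h*a*beta2) for the two-momentum variant, so the oscillation amplitude decays faster when `beta2` is positive.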

3. Numerical Experiments and Discussion

In this section, we tried to apply method (4) to the minimization of non-quadratic functions that arise in test problems for optimization solvers and in machine learning. The main purpose of these numerical experiments was to demonstrate the effect of on the convergence of method (4) in comparison with the standard HBM (3). The initial point for all test examples (except the RNN) was chosen as a fixed (not random) point, for better illustration of the convergence process. It was chosen far from the minimum points, but not so far that the method had a large number of iterations.

In the numerical examples, method (4) was compared only with the HBM (3): since (4) is treated as an improvement of the HBM, this comparison is sufficient to demonstrate the practical effect of such an improvement.

3.1. Rosenbrock Function

Let the 2D Rosenbrock function be considered:

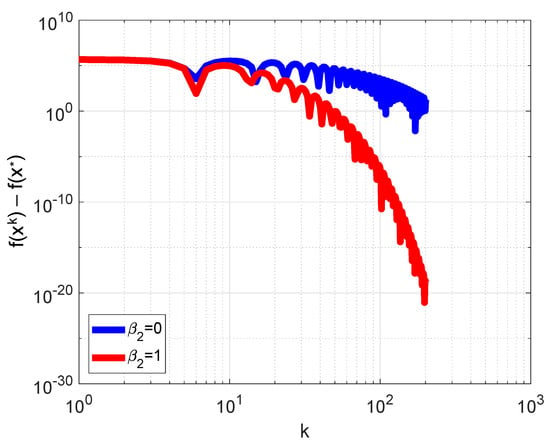

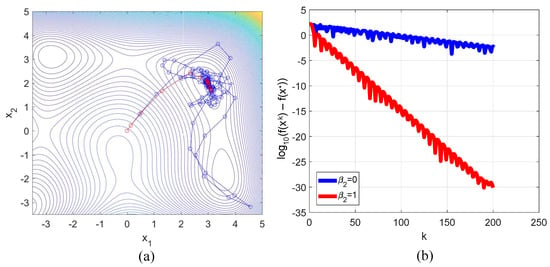

This function has a minimum at the point . For the numerical simulation, we used the following values: , , , . The descent trajectories for the methods (3) and (4) are presented in Figure 3a. The plots of the dependence of the logarithm of error, computed as on the iteration number are presented in Figure 3b. From both figures, it can be seen that the inclusion of led to the damping of oscillations typical for the HBM, and, as a consequence, to a faster entry of the trajectory in the neighborhood of the minimum point.

Figure 3.

Plots of the descent trajectories (a) and dependence of the error logarithm on iteration number (b) for the minimization of the 2D Rosenbrock function. Blue line corresponds to the HBM, red line—to method (4).

The Rosenbrock function considered in this example can be classified as a ravine function, so the traditional gradient methods (without the application of the ravine method) converge slowly to the minimum point and need many iterations. As can be seen from Figure 3b, both methods converged to the neighborhood of the minimum point with good accuracy, but method (4) converged faster owing to the damping of the oscillations.
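An experiment of this kind can be set up with a few lines of Python. The sketch below assumes the standard 2D Rosenbrock function f(x, y) = (1 - x)^2 + 100*(y - x^2)^2 with minimizer (1, 1), and method (4) in the form x_{k+1} = x_k - h*grad f(x_k + beta2*(x_k - x_{k-1})) + beta1*(x_k - x_{k-1}). The initial point, step, and momentum values are placeholders chosen for illustration and are not the values used in the paper.

```python
import math


def rosenbrock(p):
    x, y = p
    r = y - x * x
    return (1.0 - x) * (1.0 - x) + 100.0 * r * r


def rosenbrock_grad(p):
    x, y = p
    r = y - x * x
    return [-400.0 * x * r - 2.0 * (1.0 - x), 200.0 * r]


def run(grad, x0, h, beta1, beta2, iters, x_star):
    """Run the assumed form of method (4) and record the distance to x_star at every iterate."""
    x_prev, x = list(x0), list(x0)
    errors = [math.hypot(x[0] - x_star[0], x[1] - x_star[1])]
    for _ in range(iters):
        d = [a - b for a, b in zip(x, x_prev)]
        y = [a + beta2 * di for a, di in zip(x, d)]
        g = grad(y)
        x_prev, x = x, [a - h * gi + beta1 * di for a, gi, di in zip(x, g, d)]
        errors.append(math.hypot(x[0] - x_star[0], x[1] - x_star[1]))
    return x, errors


X_STAR = [1.0, 1.0]
X0 = [-1.2, 1.0]          # placeholder initial point
H, BETA1 = 2e-4, 0.9      # placeholder step and momentum

x_hb, err_hb = run(rosenbrock_grad, X0, H, BETA1, 0.0, 5000, X_STAR)   # HBM
x_tm, err_tm = run(rosenbrock_grad, X0, H, BETA1, 0.5, 5000, X_STAR)   # with beta2 = 0.5
```

The two error histories `err_hb` and `err_tm` can then be plotted against the iteration number on a logarithmic scale to compare the oscillations of the two methods for a given parameter choice.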

3.2. Himmelblau Function

For the minimization of the non-convex Himmelblau function

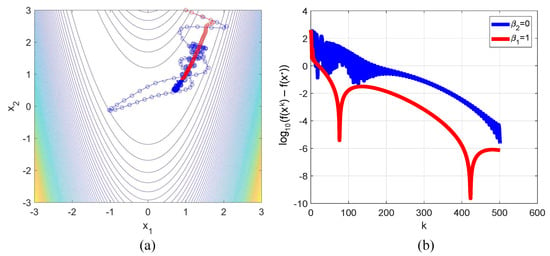

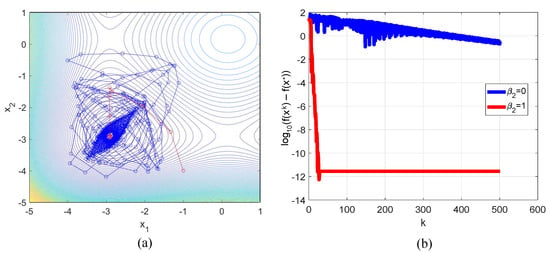

which has four local minima, the following parameters were used: , , . For the initial point both methods converged to the local minimum . The trajectories are presented in Figure 4a, and the plots of the error logarithm are presented in Figure 4b. As can be seen, the damping effect realized with the proper choice led to a faster convergence in comparison with the standard HBM.

Figure 4.

Plots of the descent trajectories (a) and dependence of the error logarithm on iteration number (b) for the minimization of the Himmelblau function. Blue line corresponds to the HBM, red line—to method (4).

3.3. Styblinski–Tang Function

Let the following non-convex function be considered:

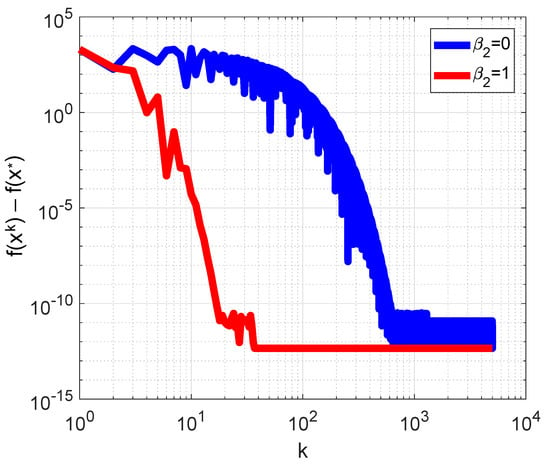

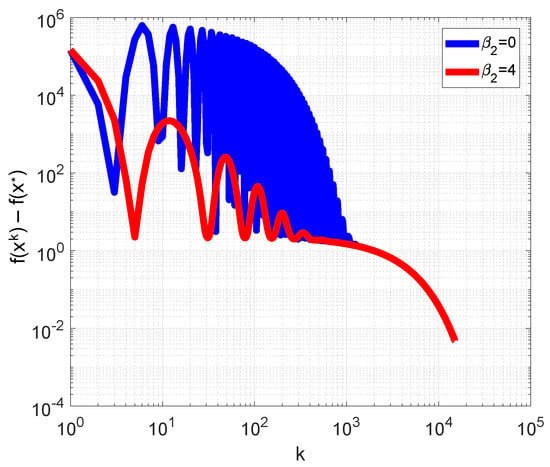

which has a local minimum at and . For the case of , we used , , , . The trajectories for both methods are presented in Figure 5a and plots of the logarithms of error are presented in Figure 5b. As can be seen, for this situation, parameter had a positive influence on the convergence. For , we used the initial vector and the parameters , , . Plots of the dependence of error on iteration number in log–log scale are presented in Figure 6. As can be seen, method (4) for converged to faster than the HBM.

Figure 5.

Plots of the descent trajectories (a) and dependence of the error logarithm on iteration number (b) for the minimization of the Styblinski–Tang function. Blue line corresponds to the HBM, red line—to method (4).

Figure 6.

Plots of the dependence of error on iteration number for minimization of Styblinski–Tang function for in log–log axes. Blue line corresponds to the HBM, red line—to method (4).

3.4. Zakharov Function

This convex function is presented as

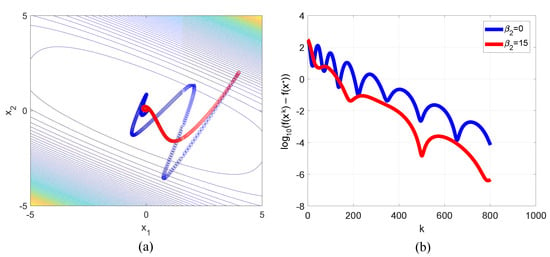

It has a unique minimum point . For , we chose as and performed computations with the following parameter values: , , . The trajectories are presented in Figure 7a and plots of the error logarithm dependence on the iteration number are presented in Figure 7b. As can be seen, the selected value of led to a damping of oscillations typical for the HBM and led to a faster entry of the trajectory into the neighborhood of . For , computations were performed for , selected as the vector of ones, , , . Plots of the dependence of error on the iteration number in log–log axes are presented in Figure 8. As can be seen, the value of led to the damping of oscillations, as in the 2D case.

Figure 7.

Plots of the descent trajectories (a) and the dependence of the error logarithm on the iteration number (b) for the minimization of the Zakharov function. Blue line corresponds to the HBM, red line—to method (4).

Figure 8.

Plots of the dependence of the error on iteration number for the minimization of the Zakharov function for in log–log axes. Blue line corresponds to the HBM, red line—to method (4).

3.5. Non-Convex Function in Multidimensional Space

Let the following function be considered:

This function has a unique minimum point . We performed computations with chosen as a vector of ones and for , , . Plots of the error’s dependence on the iteration number in log–log axes are presented in Figure 9. As in the previous examples, the inclusion of led to a faster convergence in comparison with the standard HBM.

3.6. Smoothed Elastic Net Regularization

The following function that arises in machine learning was considered [34]:

where , is the vector of values, is a matrix of features, , are the regularization parameters, function , is the smooth approximation of -norm (so-called pseudo-Huber function [35]):

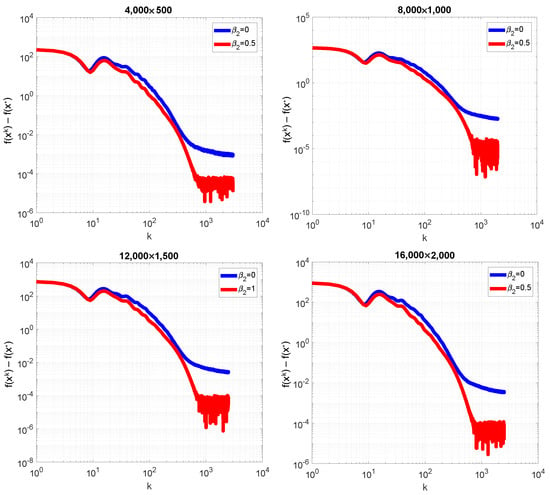

As mentioned in [34,35] , where , . Datasets, represented by A and b at various values of m and d, were simulated using the function randn() in Matlab: matrix A was simulated as a random matrix from the Gaussian distribution normalized by , and b was simulated as a random vector from the same distribution. Computations were performed with the following parameter values: , . Steps h and were computed as optimal values for the quadratic case, and was chosen equal to 0.5. The condition number for all model datasets was approximately equal to . The error was computed as , where was the benchmark solution, obtained by method (4) for iterations. For all cases, was chosen as a vector of ones. In Figure 10, the plots of the dependence of error on the iteration number are presented in log–log axes. As can be seen, the presence of led to an improvement in convergence.

Figure 10.

Plots of the dependence of the error on the iteration number for the regression problem with smoothed elastic net regularization in log–log axes for different model datasets. Blue line corresponds to the HBM, red line—to method (4).

3.7. Logistic Regression

For the binary classification, the following convex function related to the model of logistic regression is widely used:

where represents the rows of matrix , and , . Matrix and vector y represent the training dataset.

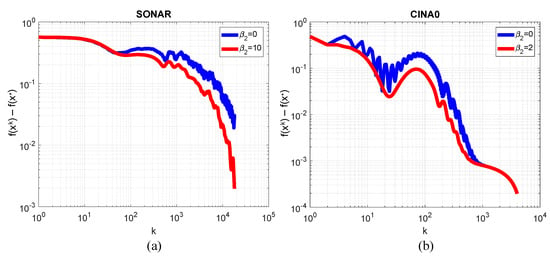

For the computations, we used two datasets: SONAR (, ) and CINA0 (, ). The first was used for a comparison of different methods in [36]. The second is a well-known test dataset, which can be downloaded from https://www.causality.inf.ethz.ch/challenge.php?page=datasets (accessed on 14 March 2024). The error was computed as . For the SONAR dataset, the values , , and were used, and a benchmark solution was obtained with method (4) in the case of iterations. For CINA0, the following parameters were used: , , and a benchmark solution was obtained for iterations of method (4). For both datasets, was chosen as a vector of zeroes.

In Figure 11, plots of the dependence of error on the iteration number in log–log axes are presented. As can be seen, adding led to the damping of oscillations typical for the standard HBM.

Figure 11.

Plots of the dependence of error on the iteration number for the logistic regression problem in log–log axes for datasets SONAR (a) and CINA0 (b). Blue line corresponds to the HBM, red line—to method (4).

3.8. Recurrent Neural Network

Let us consider the model recurrent neural network (RNN) used for the analysis of phrase tone. For details of its architecture and realization, see https://python-scripts.com/recurrent-neural-network (accessed on 16 March 2024). This RNN was realized using the following recurrent relations:

where M is the number of words of vocabulary in the phrase; is a vector, which represents the s-th word in the phrase; is a vector used for iterations in the hidden layer; y is the output vector; ,, are the matrices of weights; and and are the vectors of biases. The vector of probabilities of the ’good’ or ’bad’ tone of the phrase was computed as . The training dataset consisted of 67 phrases from the vocabulary, with 19 unique words. The following dimensions of vectors were used: , , the dimension of h was chosen as 64 (the maximum number of words from vocabulary in the phrase; this number can be varied).

As a result of forward propagation, we obtained a 2D vector of probabilities for the phrase tone, computed with the use of the softmax function. The loss function used for the training of this RNN was computed as

where X is a matrix of vectors , which represents the phrase with M words; is the label of the phrase represented by X; is the probability of the phrase tone; is a proper component of a cross-entropy function

and is a vector of parameters of RNN. The objective function is written as

where is the size of the training dataset (number of phrases). With all considered dimensions, we minimized the function of variables.

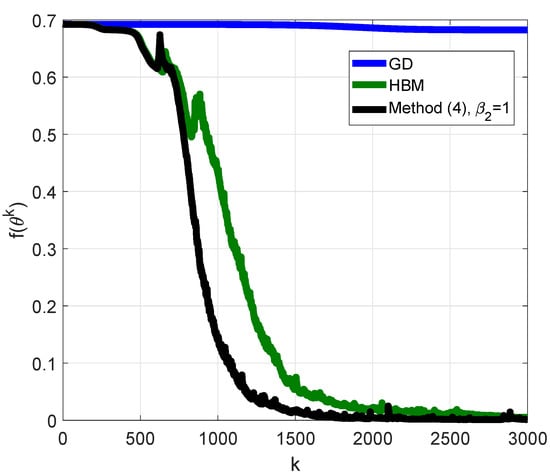

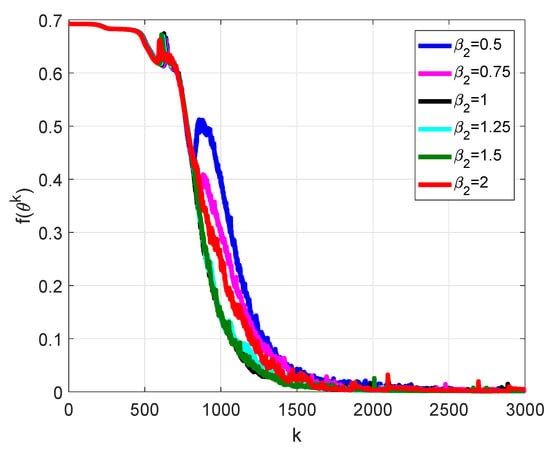

For minimization, we applied deterministic methods, as was considered in the theoretical part of the presented paper and despite the use of stochastic methods in most works on the training of neural networks. The computations were performed with , and we tried to vary the value of in order to analyze its effect on the convergence. We realized a numerical experiment for 250 random initializations of weights and biases and performed computations for epochs. In Figure 12, the plots of the dependence of the objective function value on the epoch number averaged at all random initializations are presented for the standard GD (2), HBM (3), and method (4) in the case of . As can be seen, methods with momentum led to a faster convergence in comparison with the standard GD, as mentioned by many authors (e.g., see [19]), and the presence of led to a faster convergence to the minimum in practice. In Figure 13, the plots obtained for different values of are presented. As can be seen, the value of had an effect on the convergence of method (4).

Figure 12.

Plots of the dependence of the objective function value on the epoch number for the problem of RNN training.

Figure 13.

Plots of the dependence of the objective function value on epoch number for the problem of RNN training for method (4) at different values of .

4. Conclusions

In the presented paper, we tried to perform an analysis of the properties of method (4) in theory and practice. Despite the results of the investigations presented in [28,29], this method requires further analysis, so we tried to realize this in the presented paper.

The following new results were obtained:

- It was demonstrated that, in the case of the quadratic function, method (4) can be easily investigated using the first Lyapunov method. As a result of its application, the convergence conditions presented in Theorem 1 were obtained. Such conditions led to the conditions for the HBM (3) in the case of (see [7]). For functions from , such conditions can be treated as the conditions of local convergence.

- In contrast to the HBM, the optimal parameters for method (4) can only be obtained numerically, by solving the 3D constrained problems (19) and (20). As demonstrated, in the quadratic case the optimal value of the additional parameter was equal to zero, so method (4) did not provide additional acceleration over the standard HBM.

- The ‘mechanical’ role of the additional parameter was demonstrated by considering the ODE (25), which is equivalent to (4) in the 1D case. This ODE describes the descent process in the neighborhood of the minimum point. As can be seen from (25), the presence of this parameter provides additional damping of the oscillations associated with the non-monotone convergence of the HBM [13].

- In numerical examples from different applications, it was demonstrated that a proper choice of the additional parameter reduces the oscillation amplitudes typical of the HBM.
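The first Lyapunov method mentioned in the list above amounts to checking that all eigenvalues of the linearized iteration lie inside the unit circle. The following is a minimal sketch for the 1D quadratic f(x) = lam * x^2 / 2. The heavy ball case (`gamma = 0`) is standard. The `gamma` term is an assumption: it uses one common three-parameter generalization of the heavy ball update, which may differ from method (4), and the names `h`, `beta`, `gamma`, and `lam` are hypothetical labels introduced here.

```python
import numpy as np


def spectral_radius(h, beta, lam, gamma=0.0):
    """Spectral radius of the two-step iteration for f(x) = lam * x**2 / 2.

    ASSUMPTION (not taken from the paper): the update is
        x_{k+1} = x_k + beta*(x_k - x_{k-1}) - h*f'(x_k + gamma*(x_k - x_{k-1})),
    which is the heavy ball method when gamma = 0.
    """
    a = 1.0 + beta - h * lam * (1.0 + gamma)  # coefficient of x_k
    b = -(beta - h * lam * gamma)             # coefficient of x_{k-1}
    # State (x_k, x_{k-1}) is mapped to (a*x_k + b*x_{k-1}, x_k).
    companion = np.array([[a, b], [1.0, 0.0]])
    return float(np.max(np.abs(np.linalg.eigvals(companion))))


def converges(h, beta, eigs, gamma=0.0):
    """True if the iteration contracts for every Hessian eigenvalue in eigs."""
    return all(spectral_radius(h, beta, lam, gamma) < 1.0 for lam in eigs)


print(spectral_radius(1.0, 0.25, 1.0))      # heavy ball, complex roots
print(converges(1.0, 0.25, [1.0, 2.0]))     # inside the stability region
print(converges(3.0, 0.0, [1.0]))           # gradient descent with too large a step
```

For a quadratic function in several variables, the same check is applied to each eigenvalue of the Hessian, which is why `converges` takes a list of eigenvalues. When the roots of the heavy ball characteristic polynomial are complex, the spectral radius equals the square root of the momentum parameter.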

The following remarks on future investigations can be made:

- In this paper, a local convergence analysis was presented. For , global convergence for a specific choice of the parameters was demonstrated in [29]. It is imperative to obtain the general conditions for the parameters that guarantee global convergence. As is known for the HBM (e.g., see [28]), the convergence conditions obtained for strongly convex quadratic functions can lead to a lack of global convergence for .

- Method (4) was analyzed for the case of constant values of and . However, as is known [18], it is effective to use methods with adaptive momentum, whose value depends on k, in order to improve the convergence. Thus, the extension of method (4) to the case of adaptive parameters is a promising direction for future research.

- In this paper, all methods were considered in their deterministic formulations. However, in modern problems, especially those arising in machine learning, stochastic gradient methods are used because of the size of the datasets. Therefore, the extension of method (4) and its modifications to stochastic optimization is a topic for future investigation, especially for applications in machine learning.

Author Contributions

Conceptualization, G.V.K.; methodology, G.V.K.; software, G.V.K. and V.Y.S.; validation, G.V.K. and V.Y.S.; formal analysis, G.V.K.; investigation, G.V.K. and V.Y.S.; writing—original draft preparation, G.V.K.; writing—review and editing, G.V.K. and V.Y.S.; visualization, G.V.K. and V.Y.S.; supervision, G.V.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data are included in the article.

Acknowledgments

The authors wish to thank the anonymous reviewers for their useful comments and discussions.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Bishop, C. Pattern Recognition and Machine Learning; Springer: Berlin, Germany, 2006.

2. Leonard, D.; van Long, N.; Ngo, V.L. Optimal Control Theory and Static Optimization in Economics; Cambridge University Press: Cambridge, UK, 1992.

3. Saad, Y. Iterative Methods for Sparse Linear Systems; SIAM: Philadelphia, PA, USA, 2003.

4. Ljung, L. System Identification: Theory for the User; Prentice Hall PTR: Hoboken, NJ, USA, 1999.

5. Boyd, S.; Vandenberghe, L. Convex Optimization; Cambridge University Press: Cambridge, UK, 2004.

6. Nesterov, Y. Introductory Lectures on Convex Optimization: A Basic Course; Springer: Berlin, Germany, 2004.

7. Polyak, B. Introduction to Optimization; Optimization Software Inc.: New York, NY, USA, 1987.

8. Polyak, B. Some methods of speeding up the convergence of iteration methods. USSR Comput. Math. Math. Phys. 1964, 4, 1–17.

9. Ghadimi, E.; Feyzmahdavian, H.R.; Johansson, M. Global convergence of the heavy-ball method for convex optimization. In Proceedings of the 2015 European Control Conference (ECC), Linz, Austria, 15–17 July 2015; pp. 310–315.

10. Aujol, J.-F.; Dossal, C.; Rondepierre, A. Convergence rates of the heavy ball method for quasi-strongly convex optimization. SIAM J. Optim. 2022, 32, 1817–1842.

11. Bhaya, A.; Kaszkurewicz, E. Steepest descent with momentum for quadratic functions is a version of the conjugate gradient method. Neural Netw. 2004, 17, 65–71.

12. Goujaud, B.; Taylor, A.; Dieuleveut, A. Quadratic minimization: From conjugate gradients to an adaptive heavy-ball method with Polyak step-sizes. In Proceedings of the OPT 2022: Optimization for Machine Learning (NeurIPS 2022 Workshop), New Orleans, LA, USA, 3 December 2022.

13. Danilova, M.; Kulakova, A.; Polyak, B. Non-monotone behavior of the heavy ball method. In Difference Equations and Discrete Dynamical Systems with Applications. ICDEA 2018. Springer Proceedings in Mathematics and Statistics; Bohner, M., Siegmund, S., Simon Hilscher, R., Stehlik, P., Eds.; Springer: Berlin, Germany, 2020; pp. 213–230.

14. Danilova, M.; Malinovskiy, G. Averaged heavy-ball method. Comput. Res. Model. 2022, 14, 277–308.

15. Josz, C.; Lai, L.; Li, X. Convergence of the momentum method for semialgebraic functions with locally Lipschitz gradients. SIAM J. Optim. 2023, 33, 3012–3037.

16. Wang, H.; Luo, Y.; An, W.; Sun, Q.; Xu, J.; Zhang, L. PID controller-based stochastic optimization acceleration for deep neural networks. IEEE Trans. Neural Netw. Learn. Syst. 2020, 31, 5079–5091.

17. Ma, J.; Yarats, D. Quasi-hyperbolic momentum and Adam for deep learning. In Proceedings of the ICLR 2019: International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019.

18. Gitman, I.; Lang, H.; Zhang, P.; Xiao, L. Understanding the role of momentum in stochastic gradient methods. In Proceedings of the NeurIPS 2019: Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019.

19. Sutskever, I.; Martens, J.; Dahl, G.; Hinton, G. On the importance of initialization and momentum in deep learning. In Proceedings of the International Conference on Machine Learning, PMLR, Atlanta, GA, USA, 17–19 June 2013; Volume 28, pp. 1139–1147.

20. Kidambi, R.; Netrapalli, P.; Jain, P.; Kakade, S. On the insufficiency of existing momentum schemes for Stochastic Optimization. In Proceedings of the NeurIPS 2018: Neural Information Processing Systems, Montreal, QC, Canada, 2–8 December 2018.

21. Attouch, H.; Fadili, J. From the ravine method to the Nesterov method and vice versa: A dynamical system perspective. SIAM J. Optim. 2022, 32, 2074–2101.

22. Attouch, H.; Laszlo, S.C. Newton-like inertial dynamics and proximal algorithms governed by maximally monotone operators. SIAM J. Optim. 2020, 30, 3252–3283.

23. He, X.; Hu, R.; Fang, Y.P. Convergence rates of inertial primal-dual dynamical methods for separable convex optimization problems. SIAM J. Control Optim. 2020, 59, 3278–3301.

24. Alecsa, C.D.; Laszlo, S.C. Tikhonov regularization of a perturbed heavy ball system with vanishing damping. SIAM J. Optim. 2021, 31, 2921–2954.

25. Diakonikolas, J.; Jordan, M.I. Generalized momentum-based methods: A Hamiltonian perspective. SIAM J. Optim. 2021, 31, 915–944.

26. Yan, Y.; Yang, T.; Li, Z.; Lin, Q.; Yang, Y. A unified analysis of stochastic momentum methods for deep learning. In Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence (IJCAI-18), Stockholm, Sweden, 13–19 July 2018.

27. Van Scoy, B.; Freeman, R.; Lynch, K. The fastest known globally convergent first-order method for minimizing strongly convex functions. IEEE Control Syst. Lett. 2018, 2, 49–54.

28. Lessard, L.; Recht, B.; Packard, A. Analysis and design of optimization algorithms via integral quadratic constraints. SIAM J. Optim. 2016, 26, 57–95.

29. Cyrus, S.; Hu, B.; Van Scoy, B.; Lessard, L. A robust accelerated optimization algorithm for strongly convex functions. In Proceedings of the 2018 Annual American Control Conference (ACC), Milwaukee, WI, USA, 27–29 June 2018.

30. Gantmacher, F.R. The Theory of Matrices; Chelsea Publishing Company: New York, NY, USA, 1984.

31. Gopal, M. Control Systems: Principles and Design; McGraw Hill: New York, NY, USA, 2002.

32. Su, W.; Boyd, S.; Candès, E.J. A differential equation for modeling Nesterov’s accelerated gradient method: Theory and insights. J. Mach. Learn. Res. 2016, 17, 1–43.

33. Luo, H.; Chen, L. From differential equation solvers to accelerated first-order methods for convex optimization. Math. Program. 2022, 195, 735–781.

34. Eftekhari, A.; Vandereycken, B.; Vilmart, G.; Zygalakis, K.C. Explicit stabilised gradient descent for faster strongly convex optimisation. BIT Numer. Math. 2021, 61, 119–139.

35. Fountoulakis, K.; Gondzio, J. A second-order method for strongly convex ℓ1-regularization problems. Math. Program. 2016, 156, 189–219.

36. Scieur, D.; d’Aspremont, A.; Bach, F. Regularized nonlinear acceleration. Math. Program. 2020, 179, 47–83.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).