Abstract

This paper analyses the solution of a specific quadratic sub-problem, along with its possible applications, within both constrained and unconstrained Nonlinear Programming frameworks. We give evidence that this sub-problem may appear in a number of Linesearch Based Methods (LBM) schemes and that, to some extent, it reveals a close analogy with the solution of trust-region sub-problems. Namely, we refer to a two-dimensional structured quadratic problem including five linear inequality constraints. Finally, we detail how to compute an exact global solution of this two-dimensional quadratic sub-problem by exploiting first-order Karush-Kuhn-Tucker (KKT) conditions.

1. Introduction

There are plenty of real problems where the minimization of a twice continuously differentiable functional is sought, possibly subject to several linear and nonlinear constraints. Such problems are widely detailed in authoritative textbooks, e.g., [1,2,3]. They typically require the solution of a sequence of simple sub-problems with the following pattern:

where , , , , and . Furthermore, represents a model of the smooth function at the current iterate and the feasible set represents a linearization of the constraints.

As is well known, affine and quadratic polynomials based on Taylor’s expansion are often adopted to represent the models , but valid alternatives also include least squares approximations, Radial Basis Functions, metamodels based on Splines, B-Splines, Kriging, etc. [4,5]. We remark that the advantage of solving the sequence of sub-problems (1) in place of the original nonlinearly constrained problem, within a suitable convergence framework, essentially relies on their simplicity. In particular, in this paper we focus on the role and the properties of problem (2) below, which represents a special sub-case of the more general problem (1). More specifically, we consider the case where in (1) the feasible set includes only a finite number of inequalities and the function is a quadratic functional; i.e., dropping the dependence on k, we focus on the sub-problem

where , , , are given n-real search directions, and , . Despite its apparently specific structure, a number of real applications may benefit from the solution of (2), as partly described in Section 4 (see also [6] for a general perspective and [7] for a more recent similar viewpoint within neural network frameworks).

As an example of the versatility of the structure of (2), both in trust-region methods (TRMs) and LBMs, we briefly consider how it may be successfully embedded within the framework of Truncated Newton methods (TNMs; see Table 1).

Table 1.

A standard framework for linesearch-based TNMs for large-scale problems. The alternative of possibly using negative curvature directions allows for convergence to stationary limit points which fulfill second-order necessary optimality conditions.

where (see also [8,9,10,11,12,13,14])

- represents an approximate Newton-type direction, at the current feasible point ;

- represents a negative curvature direction for the nonlinear function , at the current feasible point ;

- represents the exact/approximate Hessian matrix of at ;

- represents the exact/approximate gradient vector of at ;

- and are steplengths along the directions (i.e., following the taxonomy of Table 1, we have and ), with and . The constraint potentially plays a multi-purpose role, modeling for instance the gradient-related property for the search direction at , i.e.,

The availability of an (exact) global solution for (2) may also suggest some alternatives to Table 1: selecting a TRM or an LBM framework, or combining the two approaches. In particular, the scheme in Table 2 represents an immediate acceleration of linesearch-based TNMs with respect to Table 1, in case only the global convergence of to stationary limit points is sought. Note that selecting negative values for and positive ones for allows us to possibly perform the following:

- reverse the directions and ;

- use (2) to simulate a dogleg-like [3] procedure for TRMs, also within nonconvex LBM frameworks.

As a further alternative to Table 1 and Table 2 that exploits the exact global solution of (2), we have the scheme in Table 3. There we suitably combine the strategies used in TRMs and LBMs to ensure global convergence. In particular, TRMs require a sufficient reduction of the model in order to force a sufficient decrease in the objective function. As a result, they do not need any linesearch procedure, possibly implying a reduced computational burden with respect to LBMs. Conversely, LBMs easily compute an effective search direction, but they need to perform a linesearch procedure, because they do not include any direct function reduction mechanism based on the local quadratic model. In Table 3, if the test is fulfilled, there is no need to perform a linesearch procedure, since the global convergence for is preserved by the trust-region framework. We also remark that, in Table 3, both and must be computed, regardless of the outcome of the test .

Finally, the scheme (2) can be further exploited in a TNM framework based on a linesearch procedure. The aim is to ensure the global convergence of the sequence to stationary limit points satisfying the second-order necessary optimality conditions (namely, those stationary points where the Hessian matrix is positive semidefinite). The resulting scheme is proposed in Table 4 and requires no additional comments. The above examples give an overview of the basic contexts where the solution of the sub-problem (2) may be sought. Hence, a specific study of its solution may provide a useful tool for practitioners working in Nonlinear Programming. We remark that, in both Section 3 and Section 6, the reader may find additional guidelines on possible alternatives and extensions to the use of global solutions of (2).

Table 4.

A framework of linesearch-based approaches within TNMs for large-scale problems: solving the sub-problem (2) successfully allows for the convergence of the sequence to limit points satisfying second-order necessary optimality conditions. Differences with respect to Table 1, Table 2 and Table 3 are quite evident.

We also highlight that our perspective differs both from SQP (Sequential Quadratic Programming) methods (see, for example, the seminal paper [15]) and from approaches in the literature where LBMs and TRMs have been combined. Indeed, the basic structure of SQP methods (see also [16,17]) involves inner and outer iterations. At each outer iteration, the pair of primal-dual variables is computed and a problem similar to (2) is addressed. On the contrary, we do not intend to propose a novel global convergence framework for Nonlinear Programming. Rather, we point out the generality of the scheme (2) within a number of cases from the literature. Furthermore, we specifically focus on the exact solution of the quadratic sub-problem (2).

On the other hand, in the seminal paper [18] and in the more recent ones [19,20,21], linesearch and trust-region techniques are integrated in a unified framework. Conversely, our point of view merely intends to bridge the gap between them.

The structure of the present paper is as follows. In Section 2, we describe the conditions ensuring the feasibility of our problem. In Section 3, we present the basic motivations for our analysis and outcomes. Section 4 reports relevant remarks, highlighting how general our proposal can be. Section 5 includes the Karush-Kuhn-Tucker conditions associated with problem (2), along with precise guidelines to find a global minimum for it. Finally, Section 6 provides some conclusions and suggestions for future work.

As regards the symbols adopted in the paper, $\|x\|_1$, $\|x\|_2$ and $\|x\|_\infty$ are, respectively, used to indicate the 1-norm, the 2-norm, and the ∞-norm of the vector or the real matrix x. Given the n-real vectors x and y, we indicate their standard inner product with $x^T y$. Given the matrix $A$, we indicate by $A^{+}$ its Moore-Penrose pseudoinverse matrix, i.e., the unique matrix such that $AA^{+}A = A$, $A^{+}AA^{+} = A^{+}$, $(AA^{+})^T = AA^{+}$, $(A^{+}A)^T = A^{+}A$. With $A \succeq 0$ ($A \succ 0$), we indicate a positive semidefinite (positive definite) matrix A.

2. Feasibility Issues for Our Quadratic Problem

Here, we consider some feasibility issues for the linear inequality constrained quadratic problem (2). Clearly, (2) involves only the two real unknowns and . Moreover, as regards the existence of solutions for (2), we have the following result.

Lemma 1

- Cond. I: and .
- Cond. II: and ; moreover,
  - if , then
  - if , then
- Cond. III: and ; moreover,
  - if , then
  - if , then
- Cond. IV: , , , moreover,
  - if and , then
  - if and , then .
- Cond. V: , , , moreover,
  - if and , then
  - if and , then .

Proof of Lemma 1.

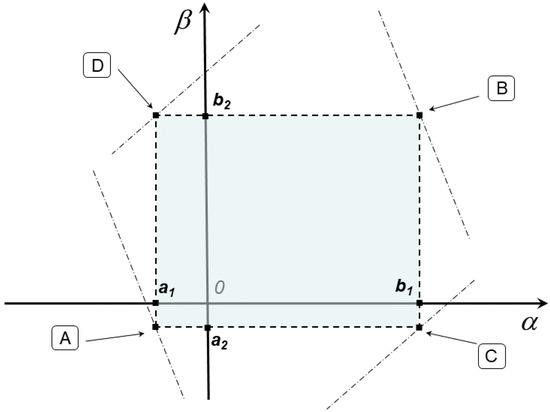

For the sake of simplicity, we refer to Figure 1. The objective function in (2) is continuous, so that the existence of solutions follows from the compactness and nonemptiness of the feasible region. In this regard, the compactness is a consequence of assuming finite. Furthermore, it is not difficult to realize that the feasible set of (2) is nonempty as long as at least one among the five conditions, Cond. I–Cond. V, is fulfilled, where the dashed-dotted line in Figure 1 represents the line associated with the last inequality constraint in (2). In particular, Cond. IV refers to the corner points A and B of Figure 1, while Cond. V refers to the vertices C and D. □

3. On the Use of Quadratic Sub-Problems Within TRMs and LBMs, in Large-Scale Optimization

Here, we detail a possible motivation for our proposal, aimed at reducing the gap between two renowned classes of optimization methods, namely TRMs and LBMs. We are indeed persuaded that such a viewpoint may suggest a number of possible enhancements for both classes of methods.

In this regard, observe that a TRM for large-scale problems is an iterative procedure that generates the sequence of n-real iterates , and at each step k seeks the solution of the trust-region sub-problem

where is the current iterate, represents the exact/approximate Hessian matrix , and represents the radius of the trust region, i.e., of the compact subset where the model is trusted (for an exhaustive description of TRMs for Nonlinear Programming, the reader can refer to [2]). A number of variants of (4) can be introduced when n is large, including iterative updating strategies for both and . Several approximate or refined schemes for its solution are also available in the literature.

A distinguishing feature of TRMs, with respect to LBMs, is that at iteration k the methods in the first class attempt to determine the stepsize and the search direction at once, so that , where indeed approximately/exactly solves (4). Conversely, in LBMs, the computations of and are carried out separately, as detailed later in this paper. In particular (see also [3]), the effective computation of in TRMs attempts to comply with the following requirements:

- can be computed by either an exact (small- and medium-scale problems) or an approximate (large-scale problems) procedure;

- In order to prove the global convergence of the sequence to stationary limit points satisfying either first- or second-order necessary optimality conditions, is required to provide a sufficient reduction of the quadratic model , i.e., the difference is asked to satisfy a condition like ()

- can be computed by an approximate procedure, e.g., by adopting a Cauchy step or using the Steihaug conjugate gradient method (see [22,23]), regardless of the signature of the Hessian approximation. Then, the approximate solution of (4) is merely sought on a linear manifold of dimension one or at most two, rather than on the entire subset ;

- Depending on a number of additional assumptions, TRMs can prove to be globally convergent to either a simple stationary limit point, or to a point which satisfies second-order necessary optimality conditions [2];

- Finding the exact/accurate solution of the sub-problem (4) is in general quite a cumbersome task in large-scale problems, representing a difficult goal that is often (when possible) skipped.
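The Cauchy step mentioned in the list above has a classical closed form (see, e.g., [3]). The NumPy sketch below computes it for the model g^T s + 0.5 s^T B s subject to ||s||_2 <= delta. The names `cauchy_point`, `g`, `B` and `delta` are ours; they stand for the gradient, the Hessian approximation and the trust-region radius. This is an illustration only, not the implementation used in the cited works.

```python
import numpy as np


def cauchy_point(g, B, delta):
    """Cauchy step for min g^T s + 0.5 s^T B s subject to ||s||_2 <= delta."""
    g = np.asarray(g, dtype=float)
    B = np.asarray(B, dtype=float)
    gnorm = np.linalg.norm(g)
    if gnorm == 0.0:
        return np.zeros_like(g)          # zero gradient: no descent along -g
    curv = g @ B @ g                     # curvature of the model along -g
    if curv <= 0.0:
        tau = 1.0                        # nonpositive curvature: step to the boundary
    else:
        tau = min(gnorm**3 / (delta * curv), 1.0)
    return -tau * (delta / gnorm) * g
```

With B = I and a large radius, the step is the exact model minimizer along -g. For instance, `cauchy_point([1.0, 0.0], np.eye(2), 10.0)` gives (-1, 0). Whatever the signature of B, only the single direction -g is explored.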

On the other hand, to some extent, LBMs represent the counterpart of TRMs. Indeed, to yield the next iterate , they perform the computation of the steplength and the direction as separate tasks. Furthermore, unlike for TRMs, the novel iterate in LBMs can be also obtained by adopting the more general update

with and now being two search directions summarizing different information on the function , and and being stepsizes. In particular:

- when (or for any k), then represents a Newton-type direction, being typically computed by approximately solving Newton’s equation at the current iterate . Then, an Armijo-type linesearch procedure is applied along to compute , provided that is gradient-related (see e.g., [3]) at ;

- when , then represents a Newton-type direction again, while is typically a negative curvature direction for at , which approximates an eigenvector associated with the smallest (i.e., most negative) eigenvalue of . The vector plays an essential role when the convergence of LBMs to stationary points satisfying the second-order necessary optimality conditions needs to be proved. In the latter case, the steplengths and are often computed at once (as in curvilinear linesearch procedures; see [24]), or by pursuing independent tasks (see, for example, [25]). We highlight that in (5), when both and , we may experience difficulties related to properly scaling the two search directions.
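For small dense problems, a negative curvature direction of the kind described above can be obtained from an exact eigendecomposition. The sketch below uses `numpy.linalg.eigh`, which returns eigenvalues in ascending order. Large-scale methods instead approximate such a direction iteratively (e.g., [12]). The helper name `negative_curvature_direction` is hypothetical. Orienting the direction so that its inner product with the gradient is nonpositive is a common convention that we assume here.

```python
import numpy as np


def negative_curvature_direction(H, g):
    """Unit vector d with d^T H d < 0 and g^T d <= 0, or None if H is positive semidefinite."""
    H = np.asarray(H, dtype=float)
    g = np.asarray(g, dtype=float)
    eigvals, eigvecs = np.linalg.eigh(H)   # eigenvalues in ascending order
    if eigvals[0] >= 0.0:
        return None                        # no negative curvature available
    d = eigvecs[:, 0]                      # eigenvector of the smallest (most negative) eigenvalue
    if g @ d > 0.0:
        d = -d                             # orient d as a non-ascent direction
    return d
```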

As a general class of efficient algorithms within LBMs for large-scale problems, we find Truncated Newton methods (TNMs) coupled with a linesearch procedure (see Table 1). Similarly to general TRMs, they are evidently based on possibly computing and after exploiting the second-order Taylor expansion of at . However, a couple of drawbacks arise when applying linesearch-based TNMs, namely:

- Unlike trust-region based TNMs, at iterate , linesearch-based TNMs search for a stationary point of a quadratic polynomial model of (i.e., they solve Newton’s equation) on . Hence, the quadratic expansion is not trusted on a more reliable compact subset (trust region) of , and the search direction might perform poorly when the iterates in the sequence are far from a stationary limit point . More specifically, note that in case , solving Newton’s equation and the trust-region sub-problem for any yields the same solutions. Conversely, when is indefinite, Newton’s equation provides a saddle point for , which might be interpreted as a solution to a trust-region sub-problem (the interested reader may consider the paper [26] for some extensions). Furthermore, from this perspective, we remark that in LBMs, solving (2) where , , and , is to a large extent equivalent to computing the Cauchy step when solving (4). Indeed, in the latter case, the trust-region constraint in (4) can, in principle, be equivalently replaced by the compact feasible set (box constraints) in (2), after setting . On the other hand, in case , and setting in (2) and , along with , then, with similar reasoning, the solution to (2) closely resembles the application of the dogleg method when solving (4). Finally, since the coefficients in (2) may take negative values, we may reverse the directions and when solving (2). Thus, following the idea behind (3), the scheme (2) suggests that, in case is also indefinite, (2) easily generalizes the proposals in [27]. In fact, following (3), we are able to exactly compute a global minimum for (2), regardless of the signature of Q, so that the resulting direction is gradient-related at .

- As in (5), the search directions and might be suitably combined in a curvilinear framework (see, for example, [24]). However, to our knowledge, the selection of and in the literature is seldom performed with a joint procedure to separately assess and , i.e., and are rarely chosen as independent parameters. Hence, in the literature of linesearch-based TNMs, the linesearch procedure that starts from and yields explores a one-dimensional manifold (regular curve), rather than considering as a two-dimensional manifold with independent real coefficients and .
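For comparison with the dogleg analogy mentioned in the first item, here is a sketch of the classical dogleg step for (4) (see, e.g., [3]). It assumes that the Hessian approximation `B` is positive definite. Like the solution of (2), the dogleg step lies in a two-dimensional subspace, spanned by the gradient and the Newton step. The function and variable names are illustrative.

```python
import numpy as np


def dogleg_step(g, B, delta):
    """Dogleg approximation of min g^T s + 0.5 s^T B s s.t. ||s||_2 <= delta (B assumed SPD)."""
    g = np.asarray(g, dtype=float)
    B = np.asarray(B, dtype=float)
    p_newton = -np.linalg.solve(B, g)               # full Newton step
    if np.linalg.norm(p_newton) <= delta:
        return p_newton
    p_cauchy = -(g @ g) / (g @ B @ g) * g           # unconstrained minimizer along -g
    norm_pc = np.linalg.norm(p_cauchy)
    if norm_pc >= delta:
        return delta * p_cauchy / norm_pc           # truncated steepest-descent step
    # Move from p_cauchy towards p_newton until ||p_cauchy + tau * w|| = delta.
    w = p_newton - p_cauchy
    a, b, c = w @ w, p_cauchy @ w, p_cauchy @ p_cauchy - delta**2
    tau = (-b + np.sqrt(b * b - a * c)) / a
    return p_cauchy + tau * w
```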

In this regard, using (2) within LBMs tends to partially compensate for the drawbacks in the last two items, in light of the great success that TRMs have achieved in the last decade. In particular, using (2) within linesearch-based TNMs, our aim is that of developing a simple tool which could possibly carry out the following:

- Adaptively updates the parameters , , , in (2), when the iterate changes, following the rationale behind the update of in (4), and retaining the strong convergence properties of TRMs. This fact is of remarkable interest, since in (2) the information associated with the search directions and is suitably trusted in a compact subset of (namely, the box constraints , );

- Exactly computes a cheap global minimum for (2), so that the vector is then provided to a standard linesearch procedure such as the Armijo rule, to ensure that the global convergence of the sequence to stationary (limit) points is preserved;

- Allows for the convergence of subsequences of the iterates to stationary limit points, where either first- or second-order necessary optimality conditions are fulfilled;

- Preserves generality within a wide range of optimization frameworks, as reported in the next Section 4;

- Combines the effects of and , avoiding the drawbacks related to a possibly different scaling of these directions. We recall that, since and are generated by different methods, the comparison of their performances may be biased by the methods that generated them.
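Once the two coefficients have been taken from a global solution of (2), the combined vector is passed to a standard Armijo rule. A minimal backtracking sketch follows. The name `armijo_step`, the sufficient-decrease constant `gamma = 1e-4` and the halving factor are common textbook choices assumed here; the paper does not prescribe them.

```python
import numpy as np


def armijo_step(f, grad_f, x, s, gamma=1e-4, shrink=0.5, max_iter=50):
    """Backtracking Armijo rule along a descent direction s; returns the accepted steplength."""
    fx = f(x)
    slope = grad_f(x) @ s                            # directional derivative along s
    if slope >= 0.0:
        raise ValueError("s is not a descent direction at x")
    t = 1.0
    for _ in range(max_iter):
        if f(x + t * s) <= fx + gamma * t * slope:   # sufficient decrease test
            return t
        t *= shrink
    raise RuntimeError("Armijo condition not met within max_iter reductions")
```

For instance, take f(x) = 0.5 x^T x, x = (2, 0), p = (-1, 0), d = (0, 1), and hypothetical coefficients alpha = 10 and beta = 0. The combined direction s = alpha*p + beta*d is then halved twice, and the rule accepts t = 0.25.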

4. How General Is the Model (2) in Nonlinear Programming Frameworks?

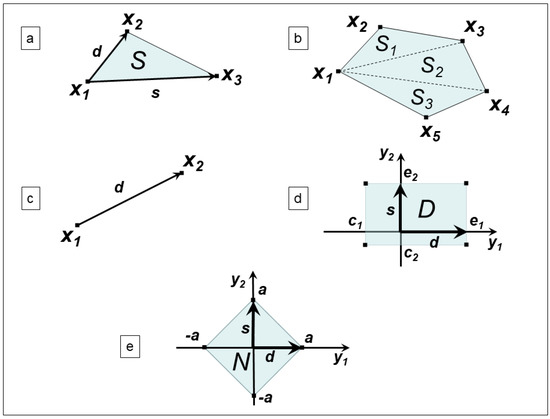

This section is devoted to reporting a number of real constrained optimization schemes from Nonlinear Programming whose formulation is encompassed in (2). For some of the following schemes (see Figure 2), more than one reformulation within the framework (2) is possible.

Figure 2.

Examples where the structure of the feasible set in (2) is helpful: case (a) is treated in Section 4.1, case (b) is treated in Section 4.2, case (c) is treated in Section 4.3, case (d) is treated in Section 4.4 and case (e) is treated in Section 4.5.

4.1. Minimization over a Bounded Simplex

We consider the problem of minimizing a quadratic functional over the simplex , such that

where . Figure 2a reports an example of a simplex. In this regard, by simply setting in (2)

- , ,

- ,

- , , , ,

- ,
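A minimal sketch of this reformulation follows. It assumes a triangle with vertices v0, v1, v2, parametrized as x = v0 + alpha (v1 - v0) + beta (v2 - v0), so that x lies in the triangle if and only if 0 <= alpha, beta <= 1 and alpha + beta <= 1. The offset v0 is our addition, to place a triangle anywhere in R^n. It only modifies the linear term and adds a constant. The function name and return convention are illustrative.

```python
import numpy as np


def reduce_on_triangle(Q, c, v0, v1, v2):
    """Write q(x) = 0.5 x^T Q x + c^T x on x = v0 + alpha*(v1 - v0) + beta*(v2 - v0).

    Returns (H, h, q0) with q(x) = 0.5 z^T H z + h^T z + q0 for z = (alpha, beta); Q symmetric.
    """
    Q, c = np.asarray(Q, dtype=float), np.asarray(c, dtype=float)
    v0 = np.asarray(v0, dtype=float)
    P = np.column_stack([np.asarray(v1, float) - v0, np.asarray(v2, float) - v0])  # n x 2
    QP = Q @ P                          # columns are Q p and Q d
    H = P.T @ QP                        # 2 x 2 reduced Hessian
    h = P.T @ (Q @ v0 + c)              # the offset only shifts the linear term
    q0 = 0.5 * v0 @ Q @ v0 + c @ v0     # constant term q(v0)
    return H, h, q0
```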

4.2. Minimization over a Bounded Polygon

We consider the problem of minimizing a quadratic functional over a polygon , described by a finite number m of vertices (observe that the points in the polygon P must belong to a hyperplane , with , , , so that for any ), i.e.,

where . Figure 2b reports an example of a polygon with . In this regard, the problem (7) can be split into the solution of the sub-problems

where

which are of the form (6). Thus, solving the problem (7) corresponds to solving a sequence of instances of the problem (2).
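Suppose P is convex and its vertices are listed in boundary order (an assumption we make here). One simple way to obtain triangular sub-problems is then a fan triangulation from the first vertex, sketched below. It yields m - 2 triangles, each of which can be handled as in Section 4.1. The global minimum over P is the smallest of the triangle minima. The helpers `fan_triangulation` and `triangle_area` are hypothetical; the area function only serves to check that the pieces cover P.

```python
import numpy as np


def fan_triangulation(vertices):
    """Split a convex polygon (vertices in boundary order, any dimension) into m - 2 triangles."""
    V = [np.asarray(v, dtype=float) for v in vertices]
    return [(V[0], V[i], V[i + 1]) for i in range(1, len(V) - 1)]


def triangle_area(v0, v1, v2):
    """Area of a triangle in R^n via the Gram determinant of its edge vectors."""
    u, w = v1 - v0, v2 - v0
    return 0.5 * np.sqrt(max((u @ u) * (w @ w) - (u @ w) ** 2, 0.0))
```

For a unit square lying in the plane x3 = 1 of R^3, the fan gives two triangles whose areas sum to 1.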

4.3. Minimization over a Bounded Segment

We consider the problem of minimizing a quadratic functional over a segment , i.e.,

where . Figure 2c reports an example of a segment. In this regard, by simply setting in (2)

- , ,

- ,

- , , , ,

- ,
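Since a segment is one-dimensional, the corresponding instance of (2) reduces to minimizing a univariate quadratic on [0, 1]. The sketch below assumes that the segment is parametrized as x = v0 + t (v1 - v0) with t in [0, 1] (our notation), and that the objective is 0.5 x^T Q x + c^T x with Q symmetric. When the curvature along the segment is nonpositive, the minimum lies at an endpoint, which the code checks explicitly.

```python
import numpy as np


def minimize_on_segment(Q, c, v0, v1):
    """Global minimizer of 0.5 x^T Q x + c^T x over the segment [v0, v1] (Q symmetric)."""
    Q, c = np.asarray(Q, dtype=float), np.asarray(c, dtype=float)
    v0, v1 = np.asarray(v0, dtype=float), np.asarray(v1, dtype=float)
    u = v1 - v0
    a = u @ Q @ u                        # curvature along the segment
    b = u @ (Q @ v0 + c)                 # slope at t = 0
    if a > 0.0:
        t = min(max(-b / a, 0.0), 1.0)   # clipped minimizer of a convex parabola
    else:
        t = 0.0 if 0.5 * a + b >= 0.0 else 1.0   # concave or linear: best endpoint
    return v0 + t * u, t
```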

4.4. Minimization over a Bounded Box in

We consider the problem of minimizing a quadratic functional over a box domain , i.e.,

where . Figure 2d reports an example of a box domain. In this regard, by simply setting in (2)

- ,

- , , , ,

- ,

- ,

- , , , ,

- .

4.5. Minimization Including a 1-Norm Inequality Constraint in

We consider the problem of minimizing a quadratic functional subject to the 1-norm inequality constraint , with , i.e.,

which is . Figure 2e reports an example of such a constraint. In this regard, it suffices to recast (11) as in (8), where

- , , , , ,

so that four instances of the problem (2) need to be solved.
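Assume, as suggested by Figure 2e, that x lies in R^2. Each quadrant of the 1-norm ball is then a triangle of the type treated in Section 4.1. The sketch below builds one pair of directions per quadrant, with 0 <= alpha, beta <= r and alpha + beta <= r. This parametrization is our own and may differ from the paper's settings. The sketch then checks by sampling that the four pieces cover exactly the 1-norm ball. The global minimum over the ball is the smallest of the four sub-problem minima.

```python
import numpy as np


def l1_ball_instances():
    """One (p, d) pair per quadrant of R^2; x = alpha*p + beta*d with alpha, beta >= 0."""
    signs = [(1.0, 1.0), (-1.0, 1.0), (-1.0, -1.0), (1.0, -1.0)]
    return [(np.array([s1, 0.0]), np.array([0.0, s2])) for s1, s2 in signs]


def in_some_instance(x, r, tol=1e-12):
    """True if x is feasible for at least one instance: 0 <= alpha, beta <= r, alpha + beta <= r."""
    for p, d in l1_ball_instances():
        alpha, beta = x @ p, x @ d       # p, d are orthonormal: coefficients are projections
        in_box = -tol <= alpha <= r + tol and -tol <= beta <= r + tol
        if in_box and alpha + beta <= r + tol:
            return True
    return False
```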

4.6. Minimization Including an ∞-Norm Inequality Constraint in

We consider the problem of minimizing a quadratic functional subject to the ∞-norm inequality constraint , with , i.e.,

which is . In this regard, we obtain results similar to those in Section 4.5. Indeed, by simply setting in (2)

- ,

- , , , ,

- ,

4.7. Minimization Including a 2-Norm Inequality Constraint in

We consider the problem of minimizing a quadratic functional in subject to the 2-norm inequality constraint , with , i.e.,

In this regard, it suffices to observe that the solution of (2) provides both

- a LOWER bound to the solution of (13), by simply setting in (2)
  - ,
  - , , , ,
  - ,
- an UPPER bound to the solution of (13), as long as we follow the indications in Section 4.5, i.e., we recast and solve (11) as in (8), where
  - , , , , ,

so that four instances of the problem (2) need to be solved.
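The two bounds above rest on the nesting of the balls of equal radius r in R^2: {||x||_1 <= r} is contained in {||x||_2 <= r}, which is contained in {||x||_∞ <= r}. Minimizing over the larger ∞-norm ball therefore gives a lower bound, and minimizing over the smaller 1-norm ball gives an upper bound. The brute-force grid sketch below is only a sanity check of this ordering, not a substitute for solving (2). The name `grid_bounds` and the grid size are illustrative assumptions.

```python
import numpy as np


def grid_bounds(Q, c, r, n=401):
    """Grid minima of 0.5 x^T Q x + c^T x over the inf-, 2- and 1-norm balls of radius r in R^2."""
    t = np.linspace(-r, r, n)
    X = np.stack(np.meshgrid(t, t), axis=-1).reshape(-1, 2)   # grid on the box [-r, r]^2
    vals = 0.5 * np.einsum("ij,jk,ik->i", X, Q, X) + X @ c
    lower = vals.min()                                         # ||x||_inf <= r (whole grid)
    target = vals[np.linalg.norm(X, axis=1) <= r].min()        # ||x||_2 <= r
    upper = vals[np.abs(X).sum(axis=1) <= r].min()             # ||x||_1 <= r
    return lower, target, upper
```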

5. KKT Conditions and the Fast Solution of Problem (2)

Replacing the expression of the vector x in (2) within the objective function, we easily obtain the equivalent problem

where

Observe that transforming (2) into (14) only requires the computation of two additional matrix-vector products (i.e., and ), along with six inner products. The problem (14) is a quadratic problem with linear constraints only. Hence the first-order Fritz-John conditions hold with a nonzero multiplier for the objective function, i.e., they reduce to KKT conditions without requiring additional constraint qualifications. Thus, after considering its Lagrangian function

we have the next set of equalities/inequalities representing the associated KKT conditions:

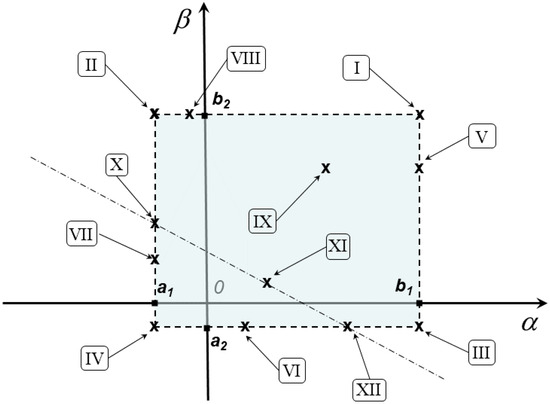
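To make the transformation of (2) into (14) concrete, the sketch below computes the reduced coefficients. It assumes that the objective of (2) reads 0.5 x^T Q x + c^T x with x = alpha p + beta d. The names H and h, and the function name, are ours; the symbols of (14) are not reproduced. Only the two products Qp and Qd involve the n x n matrix; everything else is an inner product of n-vectors.

```python
import numpy as np


def reduce_to_2d(Q, c, p, d):
    """Coefficients of 0.5 z^T H z + h^T z for x = alpha*p + beta*d, z = (alpha, beta); Q symmetric."""
    Q, c = np.asarray(Q, dtype=float), np.asarray(c, dtype=float)
    p, d = np.asarray(p, dtype=float), np.asarray(d, dtype=float)
    Qp, Qd = Q @ p, Q @ d               # the two matrix-vector products
    H = np.array([[p @ Qp, p @ Qd],
                  [p @ Qd, d @ Qd]])    # symmetry of Q gives d^T Q p = p^T Q d
    h = np.array([c @ p, c @ d])
    return H, h
```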

The remaining part of the present section is devoted to analyzing all the possible solutions of (15), with the aim of computing a global minimum for (2). In this regard, the analysis of the solutions of (15) can be reduced to the cases (I)–(XII) in Figure 3.

Figure 3.

Overview of possible solutions (I)–(XII) for KKT conditions in (15).

Observing that in (15) the multipliers and must fulfill nonnegativity conditions, it is not difficult to realize that computing all the KKT points satisfying (15) can turn out to be a burdensome task. It includes a number of sub-cases depending on the possible combinations of signs for the parameters , , , , , and . Conversely, a global minimizer for (2) can be equivalently found by analyzing all the possible solutions of (15) only in terms of and , without computing the multipliers as well. Hence, we limit our analysis to the computation of and in the cases (I)–(XII) of Figure 3, where

- Cases (I), (II), (III), (IV) are associated with possible solutions in the vertices of the box constraints;

- Cases (V), (VI), (VII), (VIII) are associated with possible solutions on the edges of the box constraints;

- Case (IX) represents a possible feasible unconstrained minimizer for the objective function in (2);

- Cases (X), (XI), (XII) are associated with possible solutions where the last inequality constraint in (14) is active.

Then, in Lemma 2, we will provide a simple theoretical result which justifies our simplification, with respect to computing all the KKT points. In this regard, we preliminarily set and consider the next cases from Figure 3, with being the sequence of tentative solution points of (14):

- Case (I): We set , . If , then set
- Case (II): We set , . If , then set
- Case (III): We set , . If , then set
- Case (IV): We set , . If , then set
- Case (V): We set and possibly compute the solution of the equation so that:
  - if , then set
  - if , then there is no solution for Case (V);
  - if , then set as any value satisfying , and compute as in (20);
- Case (VI): We set and possibly compute the solution of the equation so that:
  - if , then set
  - if , then there is no solution for Case (VI);
  - if , then set as any value satisfying , and compute as in (21);
- Case (VII): We set and possibly compute the solution of the equation so that:
  - if , then set
  - if , then there is no solution for Case (VII);
  - if , then set as any value satisfying , and compute as in (22);
- Case (VIII): We set and possibly compute the solution of the equation so that:
  - if , then set
  - if , then there is no solution for Case (VIII);
  - if , then set as any value satisfying , and compute as in (23);
- Case (IX): If , we compute the solution of the linear system; otherwise, in case , there is no solution for Case (IX); otherwise, in case , we have three sub-cases:
  - : then, recalling that we are in the sub-case where equations and yield the same information, we exploit equation and we set . Thus, from the bounds and the last inequality in (14), we obtain which yield the next three cases:
    - : admitting three other sub-cases, namely
      - , so that we set
      - , so that we set
      - , so that we set
    - : admitting no solution for Case (IX) as long as the condition holds. Conversely, in case , we have the three cases:
      - , so that we set
      - , so that we set
      - , so that we set
    - : corresponding to the three cases:
      - , so that we set
      - , so that we set
      - , so that we set
  - : then, recalling that we are in the sub-case where equations and yield the same information, with , we exploit equation with . Therefore, we have which yield the next two cases:
    - This case implies that the objective function is constant (i.e., ), so that we set
    - : admitting no solution for Case (IX)
  - : then, recalling that we are again in the sub-case where equations and yield the same information, we exploit equation and we set . Thus, from the bounds and the last inequality in (14), we obtain which yield the next three cases:
    - : admitting three other cases, namely
      - , so that we set
      - , so that is always fulfilled and we set
      - , so that we set
    - : admitting no solution for Case (IX) as long as the condition holds. Conversely, in case , we have the three cases:
      - , so that we set
      - , so that we set
      - , so that we set
    - : corresponding to the three cases:
      - , so that we set
      - , so that we set
      - , so that we set

  Thus, overall, for Case (IX), if , we set along with; otherwise, if , there is no solution for Case (IX);
- Case (X): We set with , and we distinguish among three cases:
  - if , then set ;
  - if , then there is no solution for Case (X);
  - if , then set

  Set with
- Case (XI): We distinguish among the next four cases:
  - if , then set , ; otherwise, there is no solution for Case (XI);
  - if , then and we analyze three sub-cases:
    - If , then set
    - If , then set ;
    - If , then set
  - if , then set , ; if ( OR ), then there is no solution for Case (XI);
  - if , then and we analyze three sub-cases:
    - If , then set
    - If , then set ;
    - If , then set

  Set and ; if , then set; otherwise, there is no solution for Case (XI);
- Case (XII): We set with , and we distinguish among three cases:
  - if , then set ;
  - if , then there is no solution for Case (XII);
  - if , then set

  Set with

The next lemma justifies the role of the last analysis for the computation of possible solutions of (14).

Lemma 2.

Proof of Lemma 2.

The existence of a global minimum and the corresponding value for (14) is ensured by Lemma 1. Moreover, each global minimum of (14) naturally fulfills KKT conditions, so that each global minimum must belong to the sequence . Now, assume by contradiction that there exists a point , with , but is not a global minimum. This yields the contradictory fact that . □
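The argument of Lemma 2 can be sketched generically: collect candidate points from every face of the feasible set of (14), then keep the one with the lowest objective value, without computing multipliers. The code below is an active-set enumeration of our own, not a transcription of Cases (I)–(XII). It assumes that (14) can be written as minimizing 0.5 z^T H z + g^T z over z = (alpha, beta), subject to lo <= z <= hi and a^T z <= b. Here H, g, lo, hi, a, b are our notation, and the feasible set is assumed nonempty (Lemma 1). For each subset of at most two constraints treated as equalities, the code solves the KKT system with `numpy.linalg.lstsq`, so that singular (indefinite or rank-deficient) cases are handled uniformly. Inconsistent systems and infeasible points are discarded. A global minimizer always lies among these face-wise stationary points (possibly at a vertex when the restricted Hessian is singular).

```python
import itertools

import numpy as np


def _stationary_on_face(H, g, E, e):
    """Stationary point of 0.5 z^T H z + g^T z on the affine set {z : E z = e}, or None."""
    m = E.shape[0]
    K = np.zeros((2 + m, 2 + m))                 # KKT matrix [[H, E^T], [E, 0]]
    K[:2, :2] = H
    K[:2, 2:] = E.T
    K[2:, :2] = E
    rhs = np.concatenate([-g, e])
    sol, *_ = np.linalg.lstsq(K, rhs, rcond=None)
    if not np.allclose(K @ sol, rhs, atol=1e-9):
        return None                              # inconsistent system: no stationary point here
    return sol[:2]


def solve_2d_qp(H, g, lo, hi, a, b, tol=1e-9):
    """Global minimizer of 0.5 z^T H z + g^T z s.t. lo <= z <= hi and a^T z <= b, z in R^2."""
    H, g = np.asarray(H, dtype=float), np.asarray(g, dtype=float)
    # The five linear inequalities written as A z <= r.
    A = np.array([[-1.0, 0.0], [1.0, 0.0], [0.0, -1.0], [0.0, 1.0], list(a)], dtype=float)
    r = np.array([-lo[0], hi[0], -lo[1], hi[1], b], dtype=float)
    best_z, best_val = None, np.inf
    # k = 0: interior candidate; k = 1: edges; k = 2: vertices.
    for k in range(3):
        for S in itertools.combinations(range(5), k):
            idx = list(S)
            z = _stationary_on_face(H, g, A[idx], r[idx])
            if z is None or np.any(A @ z > r + tol):
                continue                         # no candidate, or candidate is infeasible
            val = 0.5 * z @ H @ z + g @ z
            if val < best_val:
                best_z, best_val = z, val
    return best_z, best_val
```

For instance, with H = I, g = (-2, -2), the unit box and the constraint alpha + beta <= 1, the unconstrained minimizer (2, 2) is infeasible. The minimum is then found on the last constraint, at (0.5, 0.5), with value -1.75.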

6. Conclusions and Future Work

We have considered a very relevant issue within Nonlinear Programming, namely the solution of a specific constrained quadratic problem, whose exact global solution can be easily computed after analyzing the first-order KKT conditions associated with it. We also highlighted that our proposal may, to a large extent, suggest guidelines for the design of novel LBMs by drawing inspiration from TRMs. This observation may help to devise algorithms which guarantee global convergence to stationary limit points satisfying either first- or second-order necessary optimality conditions. In particular, we can summarize the following promising lines of research, for methods that, on large-scale problems, iteratively generate the sequences of points

which are , , and search directions at the current iterate :

- Developing novel iterative LBMs (e.g., linesearch-based TNMs), where the search direction (e.g., a Newton-type direction) is possibly combined with another direction (e.g., the steepest descent at , a negative curvature direction at , etc.) through the use of (14). Then, comparing the efficiency of the novel methods with more standard linesearch-based approaches from the literature could give indications of the reliability of the ideas in this paper;

- Developing novel hybrid methods where the rationale behind alternating trust-region and linesearch-based techniques is exploited. In particular, the iterative scheme (respectively, ) might be considered, where the search directions and , along with the steplengths and (respectively, and ), are alternately computed by solving

- A trust-region sub-problem like (4), so that a sufficient reduction in the quadratic model is ensured;

- A sub-problem like (14), so that the solution is a promising gradient-related direction to be used within a linesearch procedure.

in order to preserve the global convergence to stationary points satisfying either first- or second-order necessary optimality conditions;

- Specifically comparing the use of dogleg methods (within TRMs) with the application of (14) coupled with a linesearch technique. This issue is tricky, since dogleg methods are applied to trust-region sub-problems like (4), which include a general quadratic constraint (i.e., the trust-region constraint), while in (14) all the constraints are linear, so that the exact global solution of (14) is easily computed. Moreover, this comparison might also shed light on whether an efficient linesearch procedure applied to a (coarsely computed) gradient-related search direction should be privileged over a precise computation of the search direction in LBMs followed by an inexpensive linesearch procedure. In other words, it is at present unclear whether coupling a coarse computation of the vectors and with an accurate linesearch procedure would be preferable to coupling the accurately computed vectors and with a cheaper linesearch procedure;

- Introducing nonmonotone stabilization techniques (see e.g., [28]) combining nonmonotonicity with any of the above ideas, for both TRMs and LBMs.

Author Contributions

G.F., C.P. and M.R. have equally contributed to this paper. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data are contained within the article.

Acknowledgments

Giovanni Fasano and Massimo Roma thank INδAM (Istituto Nazionale di Alta Matematica) for the support they received.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Ben-Tal, A.; Nemirovski, A. Lectures on Modern Convex Optimization: Analysis, Algorithms, and Engineering Applications. In MPS-SIAM Series on Optimization; SIAM: Philadelphia, PA, USA, 2001.

- Conn, A.R.; Gould, N.I.M.; Toint, P.L. Trust-region methods. In MPS-SIAM Series on Optimization; SIAM: Philadelphia, PA, USA, 2000.

- Nocedal, J.; Wright, S.J. Numerical Optimization, 2nd ed.; Springer: New York, NY, USA, 2006.

- Micchelli, C.A. Interpolation of scattered data: Distance matrices and conditionally positive definite functions. Constr. Approx. 1986, 2, 11–22.

- Myers, D.E. Kriging, cokriging, radial basis functions and the role of positive definiteness. Comput. Math. Appl. 1992, 24, 139–148.

- Conn, A.R.; Gould, N.I.M.; Sartenaer, A.; Toint, P.L. On Iterated-Subspace Minimization Methods for Nonlinear Optimization. In Proceedings on Linear and Nonlinear Conjugate Gradient-Related Methods; Adams, L., Nazareth, L., Eds.; SIAM: Philadelphia, PA, USA, 1996; pp. 50–78.

- Shea, B.; Schmidt, M. Why line search when you can plane search? SO-friendly neural networks allow per-iteration optimization of learning and momentum rates for every layer. arXiv 2024, arXiv:2406.17954.

- Caliciotti, A.; Fasano, G.; Nash, S.; Roma, M. An adaptive truncation criterion for Newton-Krylov methods in large scale nonconvex optimization. Oper. Res. Lett. 2018, 46, 7–12.

- McCormick, G.P. A modification of Armijo’s step-size rule for negative curvature. Math. Program. 1977, 13, 111–115.

- Moré, J.J.; Sorensen, D.C. On the use of directions of negative curvature in a modified Newton method. Math. Program. 1979, 16, 1–20.

- Nash, S.G. A survey of truncated-Newton methods. J. Comput. Appl. Math. 2000, 124, 45–59.

- Fasano, G.; Roma, M. Iterative computation of negative curvature directions in large scale optimization. Comput. Optim. Appl. 2007, 38, 81–104.

- De Leone, R.; Fasano, G.; Roma, M.; Sergeyev, Y.D. Iterative Grossone-Based Computation of Negative Curvature Directions in Large-Scale Optimization. J. Optim. Theory Appl. 2020, 186, 554–589.

- Curtis, F.E.; Robinson, D.P. Exploiting negative curvature in deterministic and stochastic optimization. Math. Program. 2019, 176, 69–94.

- Gill, P.E.; Wong, E. Sequential Quadratic Programming Methods. In Mixed Integer Nonlinear Programming; Lee, J., Leyffer, S., Eds.; The IMA Volumes in Mathematics and Its Applications; Springer: New York, NY, USA, 2012; Volume 154.

- Fletcher, R.; Gould, N.I.M.; Leyffer, S.; Toint, P.L.; Wächter, A. Global convergence of a trust-region SQP-filter algorithm for general nonlinear programming. SIAM J. Optim. 2002, 13, 635–659.

- Wang, J.; Petra, C.G. A Sequential Quadratic Programming Algorithm for Nonsmooth Problems with Upper-C² Objective. SIAM J. Optim. 2023, 33, 2379–2405.

- Nocedal, J.; Yuan, Y. Combining trust-region and line-search techniques. In Advances in Nonlinear Programming; Yuan, Y., Ed.; Kluwer: Boston, MA, USA, 1998; pp. 157–175.

- Tong, X.; Zhou, S. Combining Trust Region and Line Search Methods for Equality Constrained Optimization. Numer. Funct. Anal. Optim. 2006, 24, 143–162.

- Waltz, R.A.; Morales, J.L.; Nocedal, J.; Orban, D. An interior algorithm for nonlinear optimization that combines line search and trust region steps. Math. Program. 2006, 107, 391–408.

- Pei, Y.; Zhu, D. A trust-region algorithm combining line search filter technique for nonlinear constrained optimization. Int. J. Comput. Math. 2014, 91, 1817–1839.

- Dembo, R.S.; Eisenstat, S.C.; Steihaug, T. Inexact Newton methods. SIAM J. Numer. Anal. 1982, 19, 400–408.

- Steihaug, T. The Conjugate Gradient method and Trust Regions in large scale optimization. SIAM J. Numer. Anal. 1983, 20, 626–637.

- Lucidi, S.; Rochetich, F.; Roma, M. Curvilinear stabilization techniques for truncated Newton methods in large scale unconstrained optimization. SIAM J. Optim. 1998, 8, 916–939.

- Gould, N.I.M.; Lucidi, S.; Roma, M.; Toint, P.L. Solving the trust-region subproblem using the Lanczos method. SIAM J. Optim. 1999, 9, 504–525.

- De Leone, R.; Fasano, G.; Sergeyev, Y.D. Planar methods and Grossone for the Conjugate Gradient breakdown in Nonlinear Programming. Comput. Optim. Appl. 2018, 71, 73–93.

- Grippo, L.; Lampariello, F.; Lucidi, S. A truncated Newton method with nonmonotone linesearch for unconstrained optimization. J. Optim. Theory Appl. 1989, 60, 401–419.

- Grippo, L.; Lampariello, F.; Lucidi, S. A class of nonmonotone stabilization methods in unconstrained optimization. Numer. Math. 1991, 59, 779–805.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).