Discrete versus Continuous Algorithms in Dynamics of Affective Decision Making

Abstract

1. Introduction

2. Affective Decision Making by Individuals

3. Discrete Dynamics in Affective Decision Making

4. Two Groups with Binary Choice

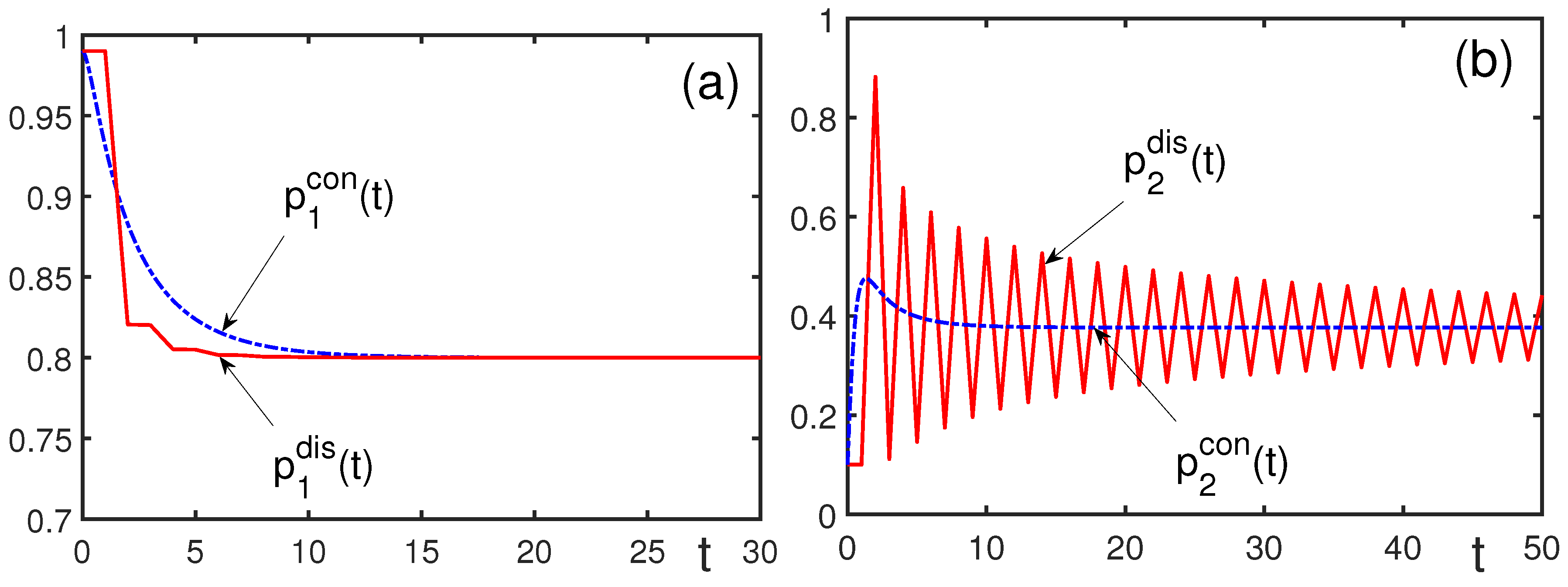

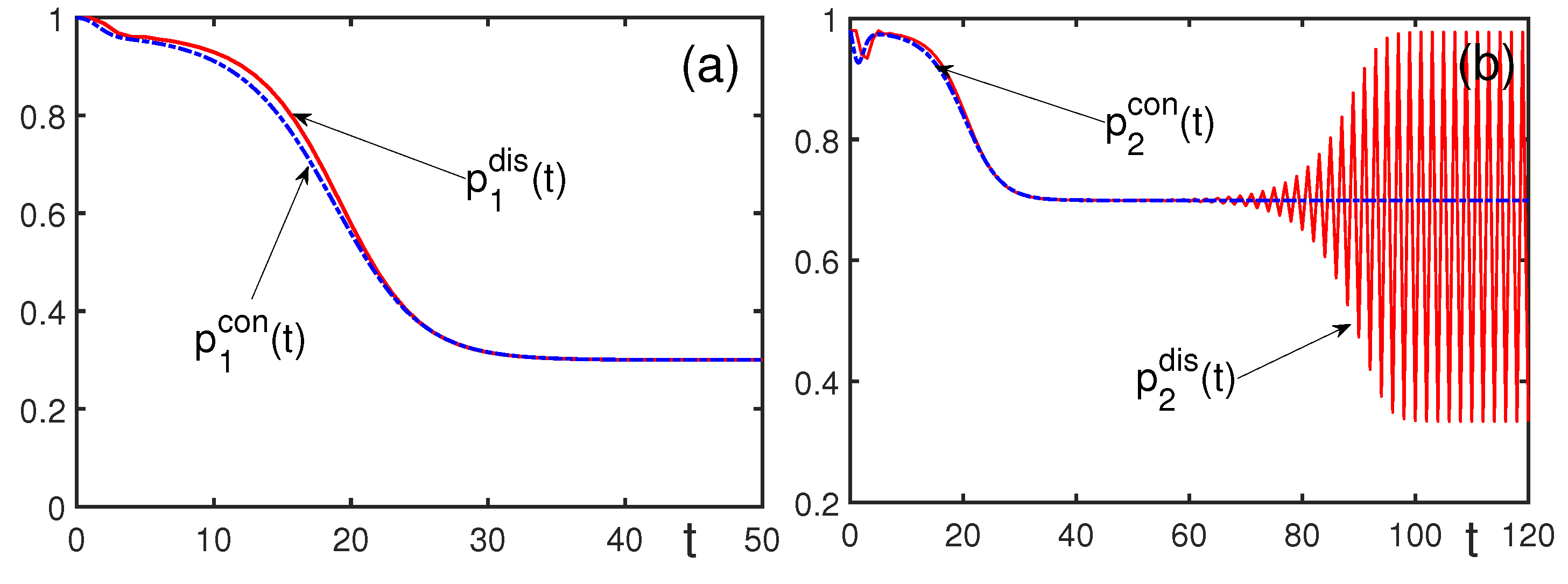

5. Continuous Dynamics of Affective Decision Making

6. Comparison of Discrete versus Continuous Algorithms

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yukalov, V.I.; Yukalova, E.P. Discrete versus Continuous Algorithms in Dynamics of Affective Decision Making. Algorithms 2023, 16, 416. https://doi.org/10.3390/a16090416
