Abstract

In this paper, we present a new third-order family of iterative methods to compute the multiple roots of nonlinear equations when the multiplicity is known in advance. There is a plethora of third-order point-to-point methods available in the literature, but our methods are based on a geometric derivation and converge to the required zero even when the derivative becomes zero or close to zero in the vicinity of the required zero. We use an exponential fitted curve and tangency conditions to develop our schemes. The well-known Chebyshev, Halley, super-Halley and Chebyshev–Halley methods are special members of our schemes for m = 1. Complex dynamics techniques allow us to see the relation between the members of the family of iterative schemes and the width of the basins of attraction of the simple and multiple roots on quadratic polynomials. Several applied problems are considered in order to demonstrate the performance of our methods and to compare them with existing ones. Based on the numerical results, we deduce that our methods perform better than the earlier methods, even in the case of multiple roots of high multiplicity.

1. Introduction

Finding the solutions of nonlinear models that often arise in engineering, chemistry, economics and applied science is one of the most fascinating and difficult problems in numerical analysis. In general, such solutions cannot be located exactly, so we focus on iterative methods. One of the most celebrated and popular methods, with second-order convergence, is the Newton–Raphson method, which is given by

$$x_{n+1} = x_n - \frac{f(x_n)}{f'(x_n)}, \qquad n = 0, 1, 2, \ldots \tag{1}$$

However, it has two major issues: first, (1) fails if the first derivative is zero or close to zero in the vicinity of the required zero of f; second, it loses its second order of convergence in the case of multiple roots. To overcome the second problem, Schröder [1,2] suggested a second-order modification of Newton’s method for multiple roots when the multiplicity m of the required zero is known in advance, which is defined as

$$x_{n+1} = x_n - m\,\frac{f(x_n)}{f'(x_n)}. \tag{2}$$

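The loss of quadratic convergence of (1) at a multiple root, and its recovery by Schröder’s modification, can be seen in a few lines. The Python sketch below is an illustration under our own assumptions: the test function f(x) = (x − 1)² eˣ (a double zero at ξ = 1), the starting point x₀ = 2 and the helper names are hypothetical and are not taken from the experiments of this paper.

```python
import math

def f(x):
    # hypothetical test function with a double zero at x = 1
    return (x - 1.0) ** 2 * math.exp(x)

def df(x):
    # f'(x) = (x - 1)(x + 1) e^x
    return (x - 1.0) * (x + 1.0) * math.exp(x)

def iterate(step, x0, n_steps):
    """Return [x0, x1, ...]; stop early if f'(x_k) == 0 (the step is undefined there)."""
    xs = [x0]
    for _ in range(n_steps):
        x = xs[-1]
        d = df(x)
        if d == 0.0:
            break
        xs.append(step(x, f(x), d))
    return xs

def newton_step(x, fx, dfx):
    return x - fx / dfx            # Newton-Raphson, scheme (1)

def schroeder_step(x, fx, dfx, m=2):
    return x - m * fx / dfx        # Schroeder's modification with known multiplicity m

newton = iterate(newton_step, 2.0, 6)
schroeder = iterate(schroeder_step, 2.0, 6)
for k, (xn, xs) in enumerate(zip(newton, schroeder)):
    print(f"k={k}: |x_k - 1| Newton = {abs(xn - 1):.2e}, Schroeder = {abs(xs - 1):.2e}")
```

Newton’s errors shrink by a factor close to (m − 1)/m = 1/2 per step (linear convergence), whereas Schröder’s errors are roughly squared at each step.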
Later, several higher-order modifications of scheme (2) were suggested and analyzed. The third-order Chebyshev method for multiple zeros, suggested in [3,4], is defined as
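
$$x_{n+1} = x_n - \frac{m(3-m)}{2}\,\frac{f(x_n)}{f'(x_n)} - \frac{m^2}{2}\,\frac{f(x_n)^2 f''(x_n)}{f'(x_n)^3}. \tag{3}$$
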
We denote this method by , for the computational comparison. A detailed local convergence analysis of this method for polynomial zeros is presented in [5], and other variants of Chebyshev’s scheme for multiple zeros can be found in [6].

In addition, Obreshkov [3] and, later, Hansen and Patrick [7] proposed the following modification of Halley’s method for multiple zeros:

where and , which is denoted by . Theorem 4.4 of [8] gives exact bounds of the convergence domain, together with error estimates and an estimate of the asymptotic error constant, for Halley’s method for multiple roots.

Ostrowski [9] presented the following method for multiple roots:

$$x_{n+1} = x_n - \frac{\sqrt{m}\, f(x_n)}{\sqrt{f'(x_n)^2 - f(x_n)\, f''(x_n)}}. \tag{5}$$

Method (5) is denoted by in the computational work.

Further, Osada [10] suggested the following well-known third-order method for multiple roots:
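
$$x_{n+1} = x_n - \frac{m(m+1)}{2}\,\frac{f(x_n)}{f'(x_n)} + \frac{(m-1)^2}{2}\,\frac{f'(x_n)}{f''(x_n)}. \tag{6}$$
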
For computational issues, we denote method (6) by .

Chun and Neta in [11] proposed another third-order method, which is defined by

Expression (7) is denoted by , for the sake of computational comparison. For a detailed historical survey about these methods we refer to [12].

However, schemes (2)–(7) still suffer from the first problem mentioned above: the first-order derivative must be neither zero nor close to zero at points near the required root. More information about these and other one-point schemes for simple and multiple roots can be found in the texts of Traub [4], Amat and Busquier [13], Ortega and Rheinboldt [14], Ostrowski [15] and Petković et al. [16], among others.
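For reproducing comparisons of this kind, the following Python sketch implements one step of four classical third-order methods for multiple roots, written in the forms commonly found in the literature: Chebyshev’s method in Traub’s form, the Halley-type method of Obreshkov and Hansen–Patrick, Ostrowski’s square-root method and Osada’s method. We assume that these forms agree with (3)–(6); the function names and the test problem f(x) = (x − 1)² eˣ with m = 2 are our own. Each step contains f′(x_n) in a denominator, so at a point where f′(x_n) = 0 it raises ZeroDivisionError, which is the failure mode discussed above.

```python
import math

# One step of each method: x = x_n, f0 = f(x_n), f1 = f'(x_n), f2 = f''(x_n), m = multiplicity.

def chebyshev_multiple(x, f0, f1, f2, m):
    # x - m(3-m)/2 * f/f' - m^2/2 * f^2 f'' / f'^3
    u = f0 / f1
    return x - 0.5 * m * (3 - m) * u - 0.5 * m * m * u * u * f2 / f1

def halley_multiple(x, f0, f1, f2, m):
    # x - f / ((m+1)/(2m) f' - f f'' / (2 f'))
    return x - f0 / ((m + 1) / (2 * m) * f1 - f0 * f2 / (2 * f1))

def ostrowski_multiple(x, f0, f1, f2, m):
    # x - sqrt(m) f / sqrt(f'^2 - f f''), rewritten with u = f/f' to keep the sign of the step;
    # assumes 1 - f f''/f'^2 > 0, which holds near a root of multiplicity m
    u = f0 / f1
    return x - math.sqrt(m) * u / math.sqrt(1.0 - f0 * f2 / (f1 * f1))

def osada(x, f0, f1, f2, m):
    # x - m(m+1)/2 * f/f' + (m-1)^2/2 * f'/f''
    return x - 0.5 * m * (m + 1) * f0 / f1 + 0.5 * (m - 1) ** 2 * f1 / f2

def solve(step, f, df, d2f, x0, m, tol=1e-13, max_iter=50):
    """Iterate `step` from x0; return (approximate root, iterations used)."""
    x = x0
    for k in range(1, max_iter + 1):
        f0 = f(x)
        if f0 == 0.0:
            return x, k - 1
        x_new = step(x, f0, df(x), d2f(x), m)
        if abs(x_new - x) < tol:
            return x_new, k
        x = x_new
    return x, max_iter

# Hypothetical test problem: double zero at x = 1.
f = lambda x: (x - 1.0) ** 2 * math.exp(x)
df = lambda x: (x - 1.0) * (x + 1.0) * math.exp(x)
d2f = lambda x: (x * x + 2.0 * x - 1.0) * math.exp(x)

methods = [chebyshev_multiple, halley_multiple, ostrowski_multiple, osada]
for method in methods:
    root, its = solve(method, f, df, d2f, 1.5, m=2)
    print(f"{method.__name__:20s} root = {root:.15f} after {its} iterations")

try:
    solve(halley_multiple, f, df, d2f, -1.0, m=2)   # f'(-1) = 0 although f(-1) != 0
except ZeroDivisionError:
    print("failure: f'(x0) = 0 at x0 = -1")
```

As a quick sanity check, each of these steps lands exactly on the root for a pure power such as (x − 1)³ with m = 3.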

To overcome these problems, we propose a new third-order family of iterative methods in the next section. An attractive feature of our scheme is that the Chebyshev, Halley and super-Halley methods are special cases of it when m = 1, while the members of the family also work for multiple roots and in failure cases (f'(x_n) = 0 at some x_n different from the root). The development and the order of convergence of our scheme are presented in Section 2. The stability of the family is analyzed by means of complex discrete dynamics in Section 3. In Section 4, we consider several real-life problems to check the effectiveness of our methods and to compare them with existing ones. We finish in Section 5 with some conclusions.

2. Construction of Higher-Order Scheme

The proposed iterative scheme is derived by fitting a function of the following form:

where . By adopting the following tangency conditions at

we obtain the values of the disposable parameters and , which are given by

Therefore, at an estimation of the root

and it follows from (13) that the iterative expression that estimates the root is

This is a one-parameter family of modified Newton’s methods. In order to obtain quadratic convergence, the quantity in the denominator should be as large as possible in magnitude. For and , it reduces to the classical Newton method.
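To make the geometric construction concrete, the Python sketch below works through one possible fitting function, y(x) = e^{p(x − x_n)} (A(x − x_n) + B)^m. This form is our illustrative assumption and is not claimed to coincide with the fitting function used above. Imposing y(x_n) = f(x_n) and y′(x_n) = f′(x_n) gives B = f(x_n)^{1/m} and A = (f′(x_n) − p f(x_n))/(m B^{m−1}), so the zero of the fitted curve is x_n − B/A = x_n − m f(x_n)/(f′(x_n) − p f(x_n)). This step stays finite when f′(x_n) = 0 as long as p f(x_n) ≠ 0, and it reduces to Newton’s method for p = 0 and m = 1. The test function (x − 1)²(x + 2), whose derivative vanishes at x = −1, and the value p = 0.5 are hypothetical choices.

```python
import math

def fitted_curve(x, xn, p, A, B, m):
    # assumed fitting function y(x) = exp(p (x - xn)) * (A (x - xn) + B)**m
    return math.exp(p * (x - xn)) * (A * (x - xn) + B) ** m

def tangency_parameters(fx, dfx, p, m):
    """Solve y(xn) = f(xn) and y'(xn) = f'(xn) for A, B (assumes f(xn) > 0 so B is real)."""
    B = fx ** (1.0 / m)
    A = (dfx - p * fx) / (m * B ** (m - 1))
    return A, B

def fitted_step(xn, fx, dfx, p, m):
    """Zero of the fitted curve: xn - B/A = xn - m f / (f' - p f)."""
    return xn - m * fx / (dfx - p * fx)

# Hypothetical test function: double zero at x = 1, and f'(-1) = 0 although f(-1) = 4.
f = lambda x: (x - 1.0) ** 2 * (x + 2.0)
df = lambda x: 3.0 * (x - 1.0) * (x + 1.0)

xn, p, m = -1.0, 0.5, 2
A, B = tangency_parameters(f(xn), df(xn), p, m)
x_next = fitted_step(xn, f(xn), df(xn), p, m)
print("f'(xn) =", df(xn), " next iterate =", x_next)
print("fitted curve at xn:", fitted_curve(xn, xn, p, A, B, m), " vs f(xn) =", f(xn))
print("fitted curve at next iterate:", fitted_curve(x_next, xn, p, A, B, m))
```

Here the derivative vanishes at x_n, yet the step is well defined; a Newton or Schröder step from the same point would divide by zero. The sketch only illustrates the tangency construction and makes no claim about the convergence of the resulting iteration.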

So, the general iterative expression of our parametric class of iterative procedures is

where is a parameter and

This scheme does not fail if f'(x_n) is very small or zero in the vicinity of the root.

For particular values of in (16), one can obtain

1. For , we have

2. For , we obtain

3. For , it yields

4. For , we get

5.

It can be checked that, for and , Formulas (15) and (17)–(20) reduce to the classical Newton, Chebyshev, Halley, super-Halley and Chebyshev–Halley methods, respectively. In general, the parameter is chosen so as to give a larger value to the denominator. Further, new families of multipoint iterative methods free from second derivatives can be derived by discretizing the second-order derivative involved in family (14).
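For comparison with the m = 1 reductions mentioned above, the classical Chebyshev–Halley family for simple roots is often written as x_{n+1} = x_n − (1 + L_n/(2(1 − β L_n))) f(x_n)/f′(x_n), with L_n = f(x_n) f″(x_n)/f′(x_n)², where β = 0, 1/2 and 1 give Chebyshev, Halley and super-Halley, respectively. The Python sketch below uses this textbook parametrization; the symbol β is ours and need not coincide with the parameter of (16), and the test equation x² − 2 = 0 is a hypothetical example.

```python
import math

def chebyshev_halley_step(x, f, df, d2f, beta):
    """One step of the classical Chebyshev-Halley family for simple roots."""
    f0, f1 = f(x), df(x)
    L = f0 * d2f(x) / (f1 * f1)          # degree of logarithmic convexity L_f(x)
    return x - (1.0 + 0.5 * L / (1.0 - beta * L)) * f0 / f1

# Hypothetical test equation x^2 - 2 = 0 with simple root sqrt(2).
f = lambda x: x * x - 2.0
df = lambda x: 2.0 * x
d2f = lambda x: 2.0

results = {}
for name, beta in [("Chebyshev", 0.0), ("Halley", 0.5), ("super-Halley", 1.0)]:
    x, errors = 1.0, []
    for _ in range(4):
        x = chebyshev_halley_step(x, f, df, d2f, beta)
        errors.append(abs(x - math.sqrt(2.0)))
    results[name] = (x, errors)
    print(f"{name:12s} errors: " + ", ".join(f"{e:.1e}" for e in errors))
```

All three members reach machine precision within a few steps, and the number of correct digits roughly triples per iteration, as expected for third-order methods.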

In the next result, we prove that scheme (16) attains third-order convergence for all .

Theorem 1.

Let us suppose that ξ is a multiple zero of multiplicity m of the function f, and that f is analytic in a neighborhood of the required zero ξ. Then, scheme (16) has third-order convergence and satisfies the error equation

Proof.

Let be a multiple zero of and , be the asymptotic error constants. Expanding , and about by Taylor’s series, we obtain

and

respectively.

Expression (26) demonstrates the third-order of convergence for all and . □

3. Stability Analysis

In the previous section, we designed a modification of the Chebyshev–Halley scheme that is able to find multiple roots of nonlinear equations while keeping the third order of convergence of the original methods. Our aim in this section is to check the role of the parameter of the new class of iterative methods in the stability of the resulting schemes. Are these methods also capable of finding simple roots? How far from the root can the initial estimate be while still ensuring convergence? Do these situations differ depending on the value of ? That is, is the stability different for the members of the parametric family?

To carry out this analysis, we apply our proposed schemes to the nonlinear function , with as a multiple root of multiplicity m and a simple root at . In all cases, , although qualitatively similar results are found for other values of . This is the simplest nonlinear function containing two roots, one simple and one of multiplicity m. Although the results cannot be directly extrapolated to an arbitrary nonlinear function, the analysis of several different nonlinear problems in the numerical section confirms them.

First, we introduce some dynamical concepts used in this paper (see, for instance, [17]). Let R: Ĉ → Ĉ be a rational function, where Ĉ is the Riemann sphere. The orbit of a point z_0 ∈ Ĉ is given by

$$\{z_0, R(z_0), R^2(z_0), \ldots, R^k(z_0), \ldots\},$$

where R^k denotes the k-th composition of the map R with itself.

We analyze the map R by classifying the starting points according to the asymptotic behavior of their orbits. In these terms, a point z* ∈ Ĉ is called a fixed point of R if R(z*) = z*; it is a periodic point of period q > 1 if R^q(z*) = z* and R^k(z*) ≠ z* for 1 ≤ k < q.

On the other hand, a point z_C is called a critical point of R if R'(z_C) = 0. The asymptotic behavior of the critical points is key to analyzing the stability of the method: by a classical result of Fatou and Julia (see, for instance, [18]), each immediate basin of attraction contains at least one critical point; that is, the connected component of the basin of attraction that contains the attractor also contains a critical point.

Moreover, a fixed point z* of R is said to be attracting if |R'(z*)| < 1, superattracting if |R'(z*)| = 0, repulsive if |R'(z*)| > 1 and parabolic if |R'(z*)| = 1.

When R also depends on one or several parameters, R'(z*) is not a scalar but a function of these parameters. Then, |R'(z*)| is called the stability function of the fixed point, and it gives the character of the fixed point in terms of the parameter values.
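Since this classification only involves |R′(z*)|, it is easy to automate. The Python sketch below is a minimal illustration under our own assumptions: it approximates R′(z*) by a central finite difference, uses a tolerance to decide the borderline cases |R′(z*)| = 0 and |R′(z*)| = 1 (so a point with a very small nonzero multiplier would be reported as superattracting), and applies the test to some hypothetical maps: Newton’s map (z² + 1)/(2z) for z² − 1 at z* = 1, z² at z* = 1, and z + z² and z/2 at z* = 0. Fixed points at ∞ would require a change of variable and are not handled.

```python
def derivative(R, z, h=1e-6):
    """Central finite-difference approximation of R'(z) for a holomorphic map R."""
    return (R(z + h) - R(z - h)) / (2 * h)

def classify_fixed_point(R, z, tol=1e-6):
    """Classify a finite fixed point z of R from the modulus of R'(z)."""
    if abs(R(z) - z) > tol:
        raise ValueError("z is not a fixed point of R")
    s = abs(derivative(R, z))
    if s < tol:
        return "superattracting"
    if abs(s - 1.0) < tol:
        return "parabolic"
    return "attracting" if s < 1.0 else "repulsive"

# Hypothetical maps used only to exercise the four cases.
newton_map = lambda z: (z * z + 1) / (2 * z)   # Newton's method for z^2 - 1
examples = [
    ("Newton map for z^2 - 1 at z* = 1", newton_map, 1 + 0j),
    ("z^2 at z* = 1", lambda z: z * z, 1 + 0j),
    ("z + z^2 at z* = 0", lambda z: z + z * z, 0j),
    ("z/2 at z* = 0", lambda z: 0.5 * z, 0j),
]
labels = {}
for name, R, z in examples:
    labels[name] = classify_fixed_point(R, z)
    print(f"{name}: {labels[name]}")
```

For a parametric operator, the same `derivative` call evaluated over a grid of parameter values gives a numerical picture of the stability function.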

Let us also remark that fixed points that do not correspond to the roots of the polynomial are called strange fixed points, and critical points that do not correspond to the roots are called free critical points.

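The basin of attraction of an attractor z̄ is defined as:

$$\mathcal{A}(\bar{z}) = \{ z_0 \in \hat{\mathbb{C}} : R^k(z_0) \to \bar{z},\ k \to \infty \}.$$
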
The Fatou set of the rational function R, F(R), is the set of points whose orbits tend to an attractor (fixed point or periodic point). Its complement in Ĉ is the Julia set, J(R). Therefore, the basin of attraction of any attracting fixed point belongs to the Fatou set, and the boundary of the basin of attraction belongs to the Julia set.

3.1. Fixed Points and Stability

When the general class (20) is applied to the polynomial with multiplicity , a rational function depending on the free complex parameter is obtained, which we denote by . This rational operator is

where . In order to bound the set of initial estimates that converge to the multiple root, a Möbius transformation h is applied, giving the rational function conjugate to M by h, which we denote by ;

where . It sends the multiple root to 0, the simple one to ∞, and the divergence of the original scheme to 1. Operators M and have the same qualitative stability properties.
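One Möbius map with these properties is h(z) = (z − a)/(z − b), where a denotes the multiple root and b the simple root (our notation). Its inverse is h⁻¹(w) = (a − b w)/(1 − w), and the conjugate operator is h ∘ M ∘ h⁻¹. The Python sketch below checks these mappings numerically for a = 1, b = −1. As M it uses Schröder’s operator (2) for p(z) = (z − a)^m (z − b), not a member of the proposed family, because its conjugate has the closed form w²/(m + (1 − m)w), which is independent of a and b and can be verified by hand. The operator of the new family would be handled in the same way.

```python
def mobius(a, b):
    """h(z) = (z - a)/(z - b): a -> 0, b -> infinity, infinity -> 1; plus its inverse."""
    h = lambda z: (z - a) / (z - b)
    h_inv = lambda w: (a - b * w) / (1 - w)
    return h, h_inv

def schroeder_operator(a, b, m):
    """Schroeder's map z - m p/p' for p(z) = (z - a)**m (z - b), using p'/p = m/(z-a) + 1/(z-b)."""
    return lambda z: z - m / (m / (z - a) + 1 / (z - b))

def conjugate(M, h, h_inv):
    """Conjugate operator O = h o M o h^{-1}."""
    return lambda w: h(M(h_inv(w)))

a, b, m = 1.0 + 0j, -1.0 + 0j, 2
h, h_inv = mobius(a, b)
O = conjugate(schroeder_operator(a, b, m), h, h_inv)

print("h(a) =", h(a))                       # multiple root -> 0
print("h(1e12) =", h(1e12))                 # points near infinity -> close to 1
print("|h(b + 1e-9)| =", abs(h(b + 1e-9)))  # points near the simple root -> large
w = 0.3 + 0.4j
print("O(w) =", O(w), " closed form:", w * w / (m + (1 - m) * w))
```

For m = 1 the closed form reduces to w², the well-known conjugate of Newton’s method on a quadratic polynomial.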

By solving the equation , the fixed points of the operator are obtained. Two of them, z = 0 and z = ∞, come from the roots of the polynomial; the rest of them, if they exist, are strange fixed points. The asymptotic behavior of all the fixed points (coming from the multiple or the simple root, strange or not) plays a key role in the stability of the iterative methods involved, since convergence to fixed points different from the roots is an important drawback for an iterative method; we therefore proceed with this analysis.

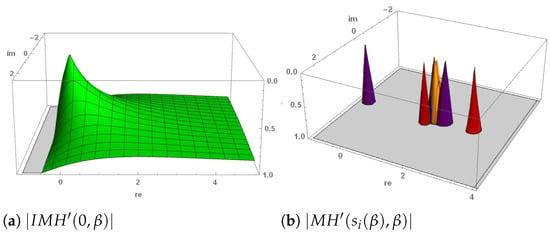

To analyze the stability of the fixed points of , we calculate its first derivative and evaluate it at every fixed point. Let us recall that the absolute value of this derivative at a fixed point is the stability function of that fixed point, and it gives information about its asymptotic behavior. In general, the stability of the fixed points other than the multiple root depends on the value of the parameter. From this analysis, the following result can be stated.

Theorem 2.

Rational function has seven fixed points:

- (a)

- Coming from the roots of , is always superattracting and is a fixed point only if ; moreover, is attracting only if . It is parabolic if and repulsive in other cases.

- (b)

- is a parabolic strange fixed point, for any .

- (c)

- There also exist four strange fixed points, denoted by , , corresponding to the roots of the fourth-degree polynomial . These points can be attracting in small regions of the complex plane.

Proof.

By solving equation , it is found that,

So, , (double) and the roots of polynomial are fixed points of . To check if (coming from the simple root of ) is a fixed point, the Inverse MH (IMH) operator

is defined. Therefore, it can be checked that and then, is a fixed point of .

Now, the stability of these fixed points must be analyzed. It is straightforward to check that , so is superattracting; moreover, , which yields the parabolic character of (the divergence of the original operator, before the Möbius map). To check the stability of the fixed point coming from the simple root of , we again use the inverse operator and obtain

This allows us to conclude that the simple root of () is superattracting if , attracting if , and that it is not a fixed point of if . Regarding the remaining strange fixed points, , , they can be attracting or even superattracting in some small areas of the complex plane, where their stability functions satisfy for any . □

In Figure 1, the stability functions of the fixed points are presented. The color code is as follows: is attracting for values of in orange regions, is attracting where the parameter belongs to red areas; blue corresponds to values of where is attracting, and those where is attracting are presented in purple. In all cases, the vertices of the cones correspond to the values of where the related strange fixed point is superattracting.

Figure 1.

Stability functions of and strange fixed points of MH operator.

Thus, we have proven that the multiple root is always a superattracting fixed point, whereas the simple root can be repulsive (if ), parabolic (for ) or attracting elsewhere. Moreover, there exist strange fixed points that can be attracting in small areas close to .

Let us remark that, if the aim is to converge only to the multiple root, values of ensure that the basins of attraction of strange fixed points are avoided. However, if both the simple and the multiple roots are sought, then the best values of the parameter are (where the simple root is also superattracting, like the multiple one) or , where all the strange fixed points are repulsive.

3.2. Dynamical Planes

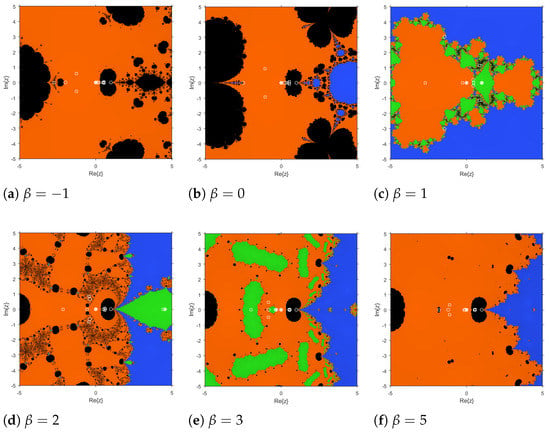

Each value of the parameter corresponds to a member of the class of iterative methods. When is fixed, the iterative process can be visualized in a dynamical plane, obtained by iterating the chosen member of the family with each point of the complex plane as the initial estimate. In this section, we have used a rectangular mesh of , that is, we have considered a partition of the real and imaginary intervals into 800 subintervals. The nodes of this rectangular mesh are the starting points of the iterative processes. We represent in blue those points whose orbit converges to infinity and in orange the points converging to , corresponding to the multiple root of (with a tolerance of ). Other colors (green, red, etc.) are used for those points whose orbit converges to one of the strange fixed points, marked with a white star in the figures if they are attracting and with a white circle if they are repulsive or parabolic. Moreover, a mesh point appears in black if it reaches the maximum number of 200 iterations without converging to any of the fixed points. The routines used appear in [19].
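The procedure just described can be sketched as follows. This minimal Python version is our own simplification, not the routines of [19]: it uses a coarse 41 × 41 mesh instead of the 800-subinterval partition used here, an assumed tolerance of 10⁻³, an escape radius standing in for convergence to ∞, and, as the operator, the conjugate w²/(2 − w) of Schröder’s method with m = 2 (a hypothetical example, not a member of the proposed family). Each node is labelled with the index of the attracting fixed point it reaches, with "inf" if it escapes, or with None (black in the figures) if it reaches 200 iterations without converging.

```python
from collections import Counter

def dynamical_plane(O, attractors, re_range, im_range, n, tol=1e-3, max_iter=200, escape=1e8):
    """Label each node of an n x n mesh by the fate of its orbit under O.

    Labels: index into `attractors` if the orbit gets within `tol` of that point,
    "inf" if it escapes (|w| > escape or a pole is hit), None if it does neither
    within max_iter iterations (drawn in black in the figures).
    """
    (x0, x1), (y0, y1) = re_range, im_range
    plane = []
    for j in range(n):                      # rows: imaginary part
        y = y0 + (y1 - y0) * j / (n - 1)
        row = []
        for i in range(n):                  # columns: real part
            w = complex(x0 + (x1 - x0) * i / (n - 1), y)
            label = None
            for _ in range(max_iter):
                try:
                    w = O(w)
                except ZeroDivisionError:   # w was a pole of O, so O(w) is infinity
                    label = "inf"
                    break
                if abs(w) > escape:
                    label = "inf"
                    break
                hit = [k for k, z in enumerate(attractors) if abs(w - z) < tol]
                if hit:
                    label = hit[0]
                    break
            row.append(label)
        plane.append(row)
    return plane

# Hypothetical example: conjugate of Schroeder's operator for m = 2, superattracting at w = 0.
O = lambda w: w * w / (2 - w)
plane = dynamical_plane(O, [0j], (-2.5, 2.5), (-2.5, 2.5), n=41)
print(Counter(label for row in plane for label in row))
```

Mapping the labels to colors (orange for index 0, blue for "inf", black for None) and plotting `plane` as an image reproduces the kind of picture shown in the figures.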

We plot the dynamical planes related to the rational function , for . These planes are shown in Figure 2. In Figure 2a, the orange area is the basin of attraction of (multiple root), and black points correspond to the attracting area of the parabolic fixed point and to the basin of a periodic orbit of period 2. For and (Figure 2b and 2f, respectively), the basin of attraction of the simple root () appears in blue, and the attracting area of the parabolic fixed point appears in black. In Figure 2c–e, the basin of attraction of a strange fixed point appears in green.

Figure 2.

Dynamical planes corresponding to operator.

The information deduced from these planes confirms the theoretical results of Theorem 2 regarding the stability of the strange fixed points. The parabolic character of is the main drawback of the methods, as it lies in the Julia set. However, in practice, rounding errors will drive an initial estimate in the basin of towards another, attracting, fixed point.

4. Numerical Results

Here, we check the effectiveness of our newly proposed iterative methods. We employ some members of our family, specifically scheme (18) for , , and (19) for , , , denoted by , , , , and , respectively, to solve the nonlinear equations of Examples 1–5.

We compare our methods with existing schemes of the same order, namely (3), (4), (5), (6) and (7), denoted by , , , and , respectively. In Table 1, Table 2 and Table 3, we display the absolute residual errors , the number of iterations needed to attain the desired accuracy, and the absolute errors . Further, it is known that the formula [20]
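
$$\rho \approx \frac{\ln\left|(x_{k+1}-\xi)/(x_k-\xi)\right|}{\ln\left|(x_k-\xi)/(x_{k-1}-\xi)\right|}$$
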
gives the computational order of convergence (COC). This estimate requires the exact zero of the nonlinear function to be solved, but in many practical situations the exact solution is not available. Therefore, Cordero and Torregrosa [21] suggested the following estimate of the order of convergence, known as the approximated computational order of convergence (ACOC):

$$\rho \approx \frac{\ln\left|\hat{e}_{k+1}/\hat{e}_k\right|}{\ln\left|\hat{e}_k/\hat{e}_{k-1}\right|},$$

where ê_k = x_k − x_{k−1}, so the exact zero is not needed. In the tables, *, and F stand for convergence to an undesired root, divergence and failure, respectively. Moreover, means "does not exist".
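Both estimates are straightforward to compute from a stored sequence of iterates. The Python sketch below uses the standard decimal module with 500 significant digits (a stand-in for the 1000-digit Mathematica arithmetic used in this section) and, as test data, iterates of the Halley-type method for multiple roots in its usual form x − f/((m + 1)f′/(2m) − f f″/(2f′)), applied to the hypothetical function f(x) = (x − 1)² eˣ with m = 2. For a third-order method, both estimates should be close to 3.

```python
from decimal import Decimal, getcontext

getcontext().prec = 500   # significant digits used in every Decimal operation

def coc(xs, root):
    """COC from the last three iterates; requires the exact root."""
    e = [abs(x - root) for x in xs[-3:]]
    return (e[2] / e[1]).ln() / (e[1] / e[0]).ln()

def acoc(xs):
    """ACOC from the last four iterates, using e_k = x_k - x_{k-1} instead of the root."""
    d = [abs(xs[k] - xs[k - 1]) for k in range(len(xs) - 3, len(xs))]
    return (d[2] / d[1]).ln() / (d[1] / d[0]).ln()

# Hypothetical test data: f(x) = (x - 1)^2 e^x, double root at x = 1.
def f(x):
    return (x - 1) ** 2 * x.exp()

def df(x):
    return (x - 1) * (x + 1) * x.exp()

def d2f(x):
    return (x * x + 2 * x - 1) * x.exp()

def halley_multiple(x, m=2):
    # usual form of Halley's method for multiple roots with known multiplicity m
    f0, f1, f2 = f(x), df(x), d2f(x)
    return x - f0 / (Decimal(m + 1) / (2 * m) * f1 - f0 * f2 / (2 * f1))

xs = [Decimal("1.5")]
for _ in range(5):
    xs.append(halley_multiple(xs[-1]))

print("COC  =", float(coc(xs, Decimal(1))))
print("ACOC =", float(acoc(xs)))
```

High precision matters here: after five cubically convergent steps the error is far below double-precision resolution, so the logarithms of error ratios would be meaningless in ordinary floating point.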

Table 1.

Comparison of distinct iterative functions based on absolute residual errors and absolute difference between two consecutive iterations. * stands for convergence to undesired root.

Table 2.

Comparison of distinct iterative methods based on the number of iterations. * stands for convergence to undesired root.

Table 3.

Comparison of distinct iterative methods based on the computational order of convergence. * stands for convergence to undesired root.

In the numerical experiments, carried out with the programming language Mathematica (version 9), all computations were done with 1000 digits of mantissa, which minimizes round-off errors. In the tables, denotes . The configuration of the computer used is given below:

- Processor: Intel(R) Core(TM) i7-4790 CPU @ 3.60 GHz

- RAM: 8.00 GB

- System type: 64-bit operating system, x64-based processor.

Now, we explore the performance of our proposed methods, in comparison with known ones, on some chemical and engineering problems that can be found in [22,23,24,25], among others.

Example 1.

Van der Waals equation of state (Case of failure):

describes the behavior of a real gas by introducing into the ideal gas equation two parameters, and , specific to each gas. To calculate the volume V of the gas, we need to solve the preceding expression in terms of the remaining constants:

By choosing particular values of and , we can easily obtain the values of n, P and T. Then, it yields

Function has three zeros; among them, is a multiple zero of multiplicity and is a simple zero. At the initial guess , which is quite close to the desired zero, all the known methods fail because the derivative vanishes at this initial point, whereas our methods work even when the derivative is zero or close to zero. Hence, our methods outperform the existing one-point third-order methods on this problem. The results can be found in Table 1, Table 2 and Table 3.
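The failure mechanism can be checked on a cubic of this type. The Python sketch below assumes, for illustration only, the cubic f(x) = x³ − 5.22x² + 9.0825x − 5.2675 = (x − 1.75)²(x − 1.72), a form frequently used for this Van der Waals example; the coefficients are our assumption, since the expression is not reproduced here. Its derivative f′(x) = 3x² − 10.44x + 9.0825 = 3(x − 1.75)(x − 1.73) vanishes at x = 1.73, between the simple zero 1.72 and the double zero 1.75, so Schröder’s correction m f(x₀)/f′(x₀) blows up there.

```python
def f(x):
    # assumed Van der Waals test cubic, equal to (x - 1.75)**2 * (x - 1.72)
    return x**3 - 5.22 * x**2 + 9.0825 * x - 5.2675

def df(x):
    # f'(x) = 3 (x - 1.75)(x - 1.73)
    return 3 * x**2 - 10.44 * x + 9.0825

m, x0 = 2, 1.73
print(f"f(x0) = {f(x0):.6e}, f'(x0) = {df(x0):.3e}")
for r in (1.72, 1.75):
    print(f"f({r}) = {f(r):.1e}")

# Schroeder's correction m f(x0)/f'(x0): either a division by zero or a huge,
# meaningless jump, depending on how the rounding error in f'(x0) falls.
d = df(x0)
correction = m * f(x0) / d if d != 0.0 else float("inf")
print("Schroeder correction:", correction)
```

In floating point, f′(1.73) is either exactly zero or a rounding residue of order 10⁻¹⁵, so the correction is either undefined or many orders of magnitude larger than the distance 0.02 to the double zero.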

Example 2.

Planck’s radiation problem (Case of failure and divergence):

Consider Planck’s radiation equation, which determines the spectral density of the electromagnetic radiation emitted by a black body at a given temperature and in thermal equilibrium, as

where T, y, k, h and c denote the absolute temperature of the black body, the wavelength of the radiation, the Boltzmann constant, Planck’s constant and the speed of light in the medium (vacuum), respectively. To evaluate the wavelength y that results in the maximum energy density , we set . Then, we obtain the following equation:

Further, the nonlinear equation is reformulated by setting as follows:

The exact zero of multiplicity is , and with this solution one can easily find the wavelength y from the relation . Here, the derivative is zero at , so methods such as , , , and fail to work. With another initial guess, , we found that the derivative is not zero but very close to zero. In this case, methods , and diverge from the required root, whereas our method is able to converge. Methods and also converge to the required root, but they are slower than our method , since they need 12 and 6 iterations, respectively, compared with only 5 iterations for our method. The computational results can be found in Table 1, Table 2 and Table 3.
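With the substitution x = hc/(ykT), the condition for the maximum reduces to e^{−x} = 1 − x/5, whose nonzero root is x ≈ 4.965114 (the constant in Wien’s displacement law). The Python sketch below assumes the multiple-root test function f(x) = (e^{−x} − 1 + x/5)^M with a hypothetical M = 4; the reformulated expression used in the experiments is not reproduced here, so this choice is only illustrative. Its derivative M(e^{−x} − 1 + x/5)^{M−1}(1/5 − e^{−x}) vanishes at x = ln 5 ≈ 1.609, which is not a root, so any one-point method with f′(x₀) in a denominator breaks down if started there.

```python
import math

M = 4   # hypothetical multiplicity used to build a multiple-root test problem

def g(x):
    # Planck maximum condition after the substitution x = hc/(ykT): e^{-x} = 1 - x/5
    return math.exp(-x) - 1.0 + x / 5.0

def f(x):
    return g(x) ** M

def df(x):
    # f'(x) = M g(x)^(M-1) g'(x), with g'(x) = 1/5 - e^{-x}
    return M * g(x) ** (M - 1) * (0.2 - math.exp(-x))

x_bad = math.log(5.0)   # g'(x_bad) = 0 although g(x_bad) != 0
print(f"x = ln 5 = {x_bad:.6f}: f(x) = {f(x_bad):.3e}, f'(x) = {df(x_bad):.3e}")

# Schroeder's method (2) with the known multiplicity M, started away from ln 5
x = 5.0
for _ in range(6):
    d = df(x)
    if d == 0.0:
        break
    x -= M * f(x) / d
print(f"root = {x:.12f}")
```

For this particular f, Schröder’s step M f/f′ coincides with a Newton step on g, which is why it converges quickly from x₀ = 5 but is undefined at x₀ = ln 5.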

Example 3.

Jumping and oscillating problem with an infinite number of roots:

Here, we choose the following function for the computational results:

The function has an infinite number of zeros, but our desired zero is with multiplicity five. With the help of this example, we aim to show that the convergence of an iterative method is not always guaranteed, even if we choose an initial point close to the root. For example, methods and with are unsuitable because of numerical instability, which is due to the large value of the correction factor; they converge to and , respectively, far away from the required zero. On the other hand, Osada’s method diverges for the starting point . Therefore, care must be taken to ensure that the root obtained is the desired one. Our methods do not present such problems. The details of the computational results can be found in Table 1, Table 2 and Table 3.

Example 4.

Convergence to the undesired root problem:

We consider an academic problem, given as follows:

Expression (31) has an infinite number of roots of multiplicity three, our desired root being . It can be seen that , , and converge to the undesired zero after a finite number of iterations when . On the other hand, with the initial guess , methods , , and consume 1280 iterations, as compared to 6. The computational outcomes are given in Table 1, Table 2 and Table 3.

Example 5.

Finally, we consider an academic problem with a high multiplicity, , defined by

Expression (32) has a zero of multiplicity 100. We show the computational results for this function in Table 1, Table 2 and Table 3. From the numerical results, we conclude that our methods and need the same number of iterations to reach the desired accuracy, but they have smaller residual errors and smaller differences between two consecutive iterations than the other existing methods.

5. Concluding Remarks

In this paper, we have proposed a new family of point-to-point third-order iterative methods that works for multiple roots (), where the multiplicity of the root is known in advance; moreover, the methods converge to the required root even in cases of failure, divergence or oscillation, while retaining third-order convergence. In addition, some of the most popular third-order methods, namely Chebyshev, Halley and super-Halley, are special cases of our scheme when m = 1. Our scheme is based on a geometric derivation rather than on interpolation, weight functions or other analytic approaches. Complex dynamics has been used to check the stability of the class of iterative methods. Finally, based on the numerical results, we conclude that our methods perform better not only in cases of failure, divergence and oscillation but also in the convergent cases. In the future, we will work on multipoint variants of our family (16) obtained by approximating the second-order derivatives.

Author Contributions

Conceptualization, V.K. and R.B.; software, M.R.; validation, A.C. and J.R.T.; formal analysis, V.K.; investigation, R.B. and M.R.; writing—original draft preparation, M.R.; writing—review and editing, A.C. and J.R.T.; supervision, V.K. and R.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Not applicable.

Acknowledgments

The authors would like to thank the anonymous reviewers for their comments and suggestions, as they have improved the final version of this manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Schröder, E. Über unendlich viele Algorithmen zur Auflösung der Gleichungen. Math. Ann. 1870, 2, 317–365.

- Proinov, P.D.; Ivanov, S.I. Convergence of Schröder’s method for polynomial zeros of unknown multiplicity. C. R. Acad. Bulg. Sci. 2013, 66, 1073–1080.

- Obreshkov, N. On the numerical solution of equations. Annuaire Univ. Sofia Fac. Sci. Phys. Math. 1963, 56, 73–83. (In Bulgarian)

- Traub, J.F. Iterative Methods for the Solution of Equations; Prentice-Hall Series in Automatic Computation: Englewood Cliffs, NJ, USA, 1964.

- Ivanov, S.I. On the convergence of Chebyshev’s method for multiple polynomial zeros. Results. Math. 2016, 69, 93–103.

- Neta, B. New third order nonlinear solvers for multiple roots. Appl. Math. Comput. 2008, 202, 162–170.

- Hansen, T.; Patrick, M. A family of root finding methods. Numer. Math. 1977, 27, 257–269.

- Ivanov, S.I. A general approach to the study of the convergence of Picard iteration with an application to Halley’s method for multiple zeros of analytic functions. J. Math. Anal. Appl. 2022, 513, 126238.

- Ostrowski, A.M. Solution of Equations in Euclidean and Banach Spaces; Academic Press: New York, NY, USA, 1973.

- Osada, N. An optimal multiple root-finding method of order three. J. Comput. Appl. Math. 1994, 51, 131–133.

- Chun, C.; Neta, B. A third-order modification of Newton’s method for multiple roots. Appl. Math. Comput. 2009, 211, 474–479.

- Ivanov, S.I. Unified Convergence Analysis of Chebyshev-Halley Methods for Multiple Polynomial Zeros. Mathematics 2022, 10, 135.

- Amat, S.; Busquier, S. Advances in Iterative Methods for Nonlinear Equations; Springer: Cham, Switzerland, 2016.

- Ortega, J.M.; Rheinboldt, W.C. Iterative Solution of Nonlinear Equations in Several Variables; Academic Press: New York, NY, USA, 1970.

- Ostrowski, A.M. Solution of Equations and Systems of Equations; Prentice-Hall: Englewood Cliffs, NJ, USA, 1964.

- Petković, M.; Neta, B.; Petković, L.; Džunić, J. Multipoint Methods for Solving Nonlinear Equations; Academic Press: Cambridge, MA, USA, 2012.

- Blanchard, P. Complex Analytic Dynamics on the Riemann Sphere. Bull. AMS 1984, 11, 85–141.

- Beardon, A.F. Iteration of rational functions. In Graduate Texts in Mathematics; Springer: New York, NY, USA, 1991.

- Chicharro, F.I.; Cordero, A.; Torregrosa, J.R. Drawing Dynamical and Parameters Planes of Iterative Families and Methods. Sci. World J. 2013, 2013, 780153.

- Weerakoon, S.; Fernando, T.G.I. A variant of Newton’s method with accelerated third-order convergence. Appl. Math. Lett. 2000, 13, 87–93.

- Cordero, A.; Torregrosa, J.R. Variants of Newton’s method using fifth-order quadrature formulas. Appl. Math. Comput. 2007, 190, 686–698.

- Constantinides, A.; Mostoufi, N. Numerical Methods for Chemical Engineers with MATLAB Applications; Prentice Hall PTR: Upper Saddle River, NJ, USA, 1999.

- Douglas, J.M. Process Dynamics and Control; Prentice Hall: Englewood Cliffs, NJ, USA, 1972; Volume 2.

- Shacham, M. An improved memory method for the solution of a nonlinear equation. Chem. Eng. Sci. 1989, 44, 1495–1501.

- Balaji, G.V.; Seader, J.D. Application of interval Newton’s method to chemical engineering problems. Rel. Comput. 1995, 1, 215–223.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).