Automatic Generation of Literary Sentences in French

Abstract

1. Introduction

2. Related Work

3. The MegaLitefr Corpus

4. A Literary ATG Model

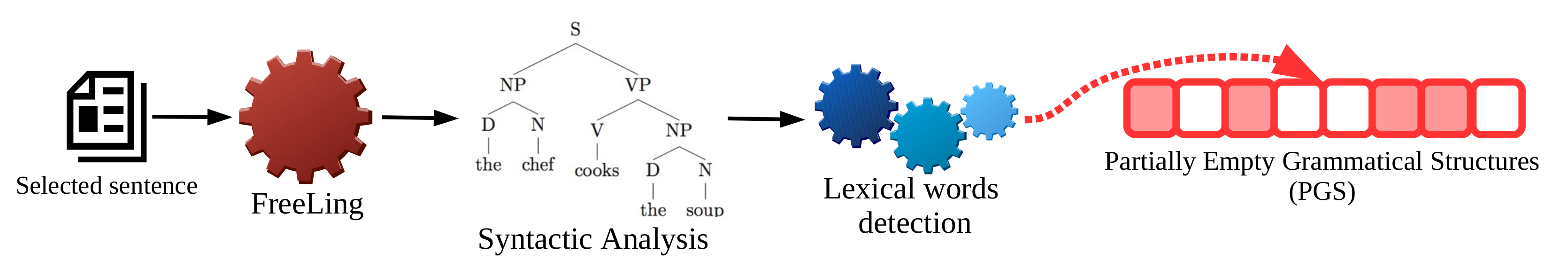

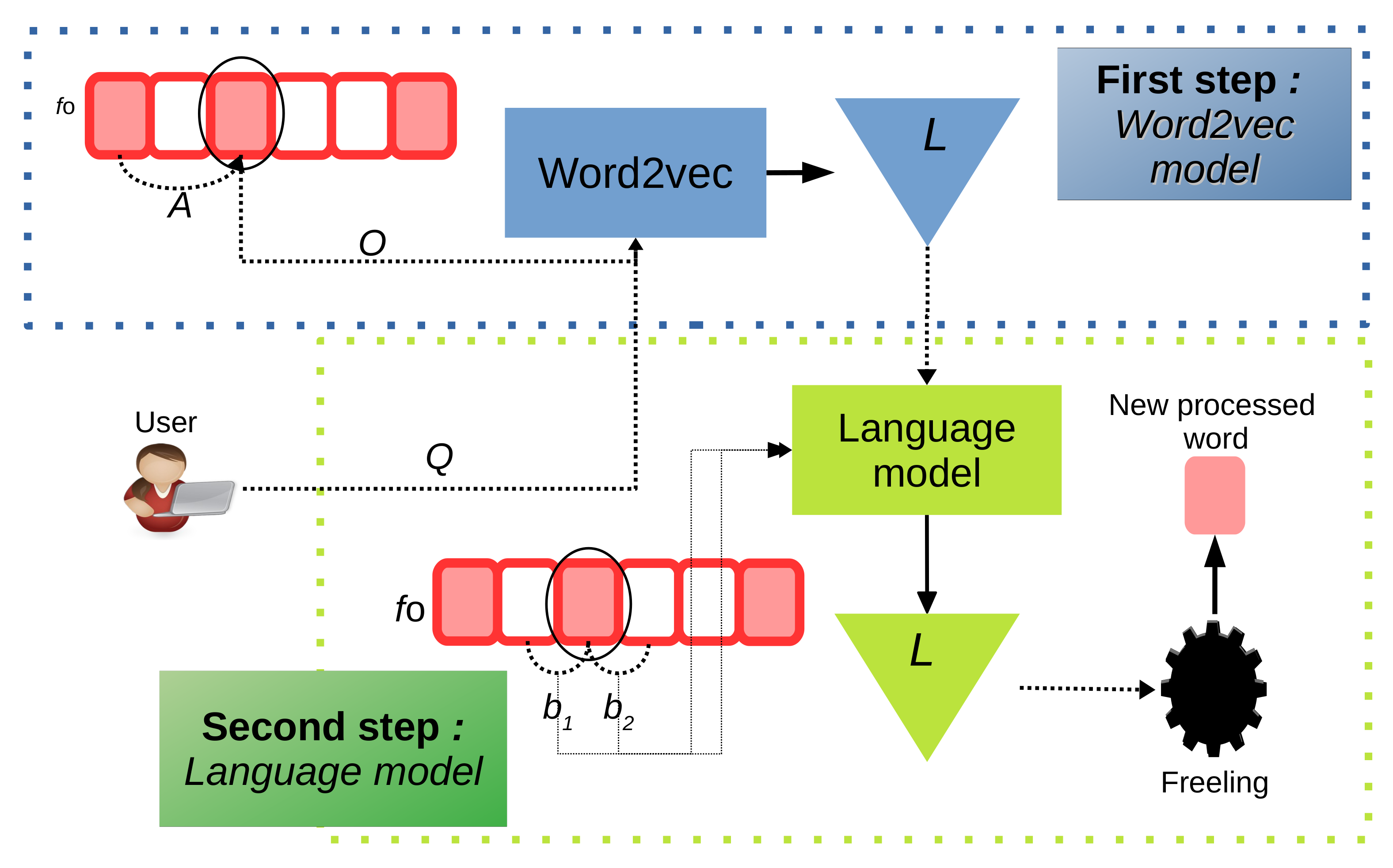

- The first step generates Partially empty Grammatical Structures (PGSs) from the words of a chosen sentence. For this purpose, we implemented a procedure based on the canned-text method, which is efficient for parsing in ATG tasks [29].

- In the second step, each tag (morpho-syntactic label) in the PGS produced by the first step is replaced by a new word. This word is selected through a semantic analysis performed with a Word2vec-based procedure [7].
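The two steps can be illustrated with a minimal Python sketch. As the name PGS suggests, it assumes that function words are kept verbatim while lexical words become empty tag slots. Several elements are hypothetical: the EAGLES-style tag prefixes (`NC`, `VM`, `AQ`, `RG`), the hand-written tags of the input sentence, and the helpers `build_pgs` and `fill_pgs`. The toy `choose` function only stands in for the Word2vec-based selection.

```python
from typing import Callable, List, Tuple

# Assumed EAGLES-style prefixes for lexical categories (noun, main verb,
# qualifying adjective, general adverb); every other tag is kept as a fixed word.
LEXICAL_PREFIXES = ("NC", "VM", "AQ", "RG")


def build_pgs(tagged: List[Tuple[str, str]]) -> List[Tuple[str, bool]]:
    """Step 1 (canned text): keep function words, turn lexical words into slots.

    Each PGS element is (text, is_slot); for a slot, text holds the POS tag.
    """
    return [(tag, True) if tag.startswith(LEXICAL_PREFIXES) else (word, False)
            for word, tag in tagged]


def fill_pgs(pgs: List[Tuple[str, bool]], choose: Callable[[str], str]) -> List[str]:
    """Step 2: replace every slot with the word returned by choose(tag)."""
    return [choose(text) if is_slot else text for text, is_slot in pgs]


# Hypothetical pre-tagged sentence (tags written by hand for illustration).
tagged = [("Il", "PP"), ("n'", "RN"), ("y", "PP"), ("a", "VA"), ("pas", "RN"),
          ("de", "SP"), ("sympathie", "NC"), ("sans", "SP"), ("émoi", "NC"),
          (".", "Fp")]

pgs = build_pgs(tagged)

# Toy stand-in for the semantic choice: hand out nouns in a fixed order.
replacements = iter(["liaison", "ordre"])
words = fill_pgs(pgs, lambda tag: next(replacements))
print(" ".join(words))
```

Joining tokens with spaces is a simplification. A complete system would also restore French elision and punctuation spacing.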

4.1. Canned-Text-Based Procedure

- Sentences must convey a specific, clear message that can be understood without prior context.

- The length N of each sentence must be in the interval .

- Sentences must have three or more lexical words.
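The two mechanical criteria, sentence length and number of lexical words, could be checked automatically as in the sketch below. The first criterion (a clear message without prior context) calls for human judgment and is not modelled. The sketch rests on several assumptions:

- Sentences arrive as (word, tag) pairs with EAGLES-style tags.
- Punctuation (tags starting with `F`) does not count towards N.
- The helper `meets_criteria` is hypothetical.
- The bounds passed in the usage example are placeholders, not the interval used in the paper.

```python
from typing import List, Tuple

# Assumed EAGLES-style prefixes for lexical categories (noun, main verb,
# qualifying adjective, general adverb).
LEXICAL_PREFIXES = ("NC", "VM", "AQ", "RG")


def meets_criteria(tagged: List[Tuple[str, str]], min_len: int, max_len: int) -> bool:
    """True if the sentence length N lies in [min_len, max_len] and the
    sentence contains at least three lexical words."""
    # Punctuation tags start with "F" in EAGLES-style tagsets; excluding them
    # from N is an assumption, not something stated in the text.
    words = [(w, t) for w, t in tagged if not t.startswith("F")]
    n = len(words)
    lexical = sum(1 for _, t in words if t.startswith(LEXICAL_PREFIXES))
    return min_len <= n <= max_len and lexical >= 3


# Hypothetical tagging of one of the generated example sentences.
sentence = [("En", "SP"), ("douleur", "NC"), (",", "Fc"), ("la", "DA"),
            ("première", "AO"), ("mélancolie", "NC"), ("est", "VS"),
            ("la", "DA"), ("plus", "RG"), ("grande", "AQ"), (".", "Fp")]

# Placeholder bounds for illustration only.
print(meets_criteria(sentence, 5, 15))
print(meets_criteria([("Il", "PP"), ("pleut", "VM"), (".", "Fp")], 1, 15))
```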

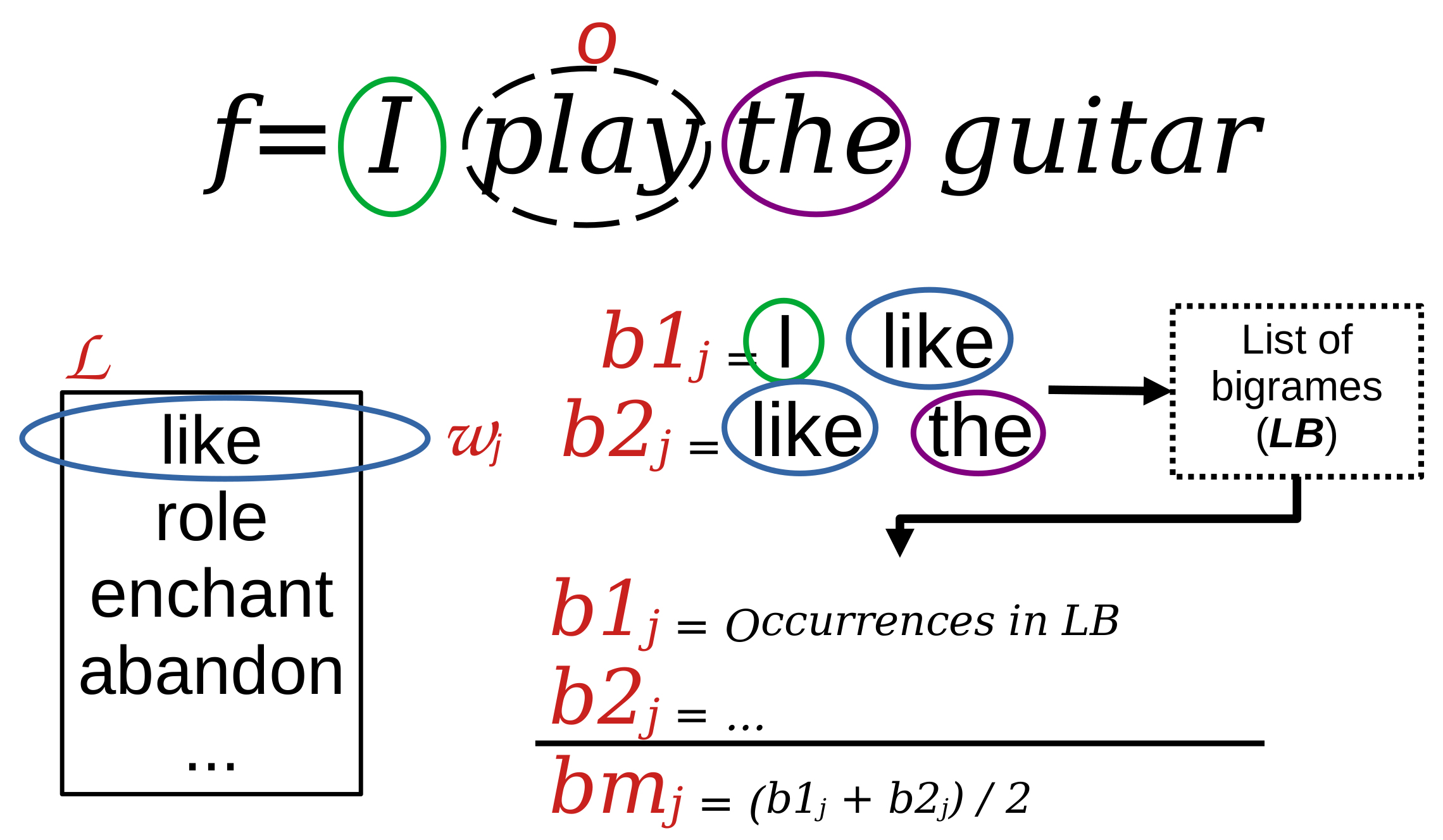

4.2. Choice of Vocabulary to Generate the New Phrase

- Q represents the context specified by the user;

- o is the original word in f, whose POS tag is to be replaced to generate the new phrase;

- A is the word preceding o in f.
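The sketch below shows one way Q, o and A might interact. It is a hypothetical selection rule, not the authors' exact formula:

1. Candidates are assumed to be pre-filtered to o's POS tag.
2. Only candidates forming a bigram (A, w) attested in the corpus are kept.
3. The surviving candidate whose embedding is closest to Q by cosine similarity is returned.

The helper `choose_word`, the toy three-dimensional vectors and the bigram counts are invented for illustration. In practice they would come from the trained Word2vec model and from corpus statistics.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def choose_word(q: str, o: str, a: str, candidates: List[str],
                vectors: Dict[str, Vector],
                bigrams: Dict[Tuple[str, str], int]) -> str:
    """Hypothetical rule: keep candidates (assumed to share o's POS tag) that
    differ from o, have an embedding, and follow A in an attested bigram;
    return the one most similar to the context Q (assumed to have an
    embedding), or o itself if no candidate qualifies."""
    pool = [w for w in candidates
            if w != o and w in vectors and bigrams.get((a, w), 0) > 0]
    if not pool:
        return o
    return max(pool, key=lambda w: cosine(vectors[w], vectors[q]))


# Toy data, invented for illustration.
vectors = {
    "amour": [1.0, 0.0, 0.0],
    "passion": [0.9, 0.1, 0.0],
    "tendresse": [0.8, 0.2, 0.0],
    "peur": [0.0, 1.0, 0.0],
}
bigrams = {("de", "passion"): 5, ("de", "peur"): 3}  # ("de", "tendresse") unseen

print(choose_word("amour", "affection", "de",
                  ["passion", "tendresse", "peur"], vectors, bigrams))
```

Falling back to the original word keeps the sentence well-formed when no candidate passes the bigram filter. This is a design choice of the sketch.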

Bigram Analysis

5. Experiments

- The number of training epochs (passes over MegaLitefr), iterations = 10;

- The minimum number of times a word must occur in MegaLitefr to be included in the model vocabulary, minimum count = 3;

- The dimension of the embedding vectors, vector size = 100;

- The maximum distance between a target word and the context words considered during training, window size = 5.
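The plain-Python sketch below makes the role of `minimum count` and `window size` concrete. It builds a vocabulary and the (target, context) pairs a Word2vec model would train on. The section does not say which Word2vec implementation was used. If it was gensim 4.x, the same settings would be passed as `Word2Vec(sentences, vector_size=100, window=5, min_count=3, epochs=10)`, but that is an assumption about tooling.

For simplicity the window here is fixed. By default, the original word2vec tool and gensim instead sample a smaller effective window for each target word. The helpers `build_vocab` and `context_pairs` and the toy corpus are hypothetical.

```python
from collections import Counter
from typing import List, Set, Tuple

# Hyperparameters reported in the text.
MIN_COUNT = 3  # minimum number of occurrences to enter the vocabulary
WINDOW = 5     # maximum distance between a target word and a context word


def build_vocab(sentences: List[List[str]], min_count: int) -> Set[str]:
    """Keep only the words that occur at least min_count times in the corpus."""
    counts = Counter(w for s in sentences for w in s)
    return {w for w, c in counts.items() if c >= min_count}


def context_pairs(sentence: List[str], vocab: Set[str], window: int) -> List[Tuple[str, str]]:
    """All (target, context) pairs whose distance is at most `window`,
    computed after discarding out-of-vocabulary words."""
    words = [w for w in sentence if w in vocab]
    pairs = []
    for i, target in enumerate(words):
        lo, hi = max(0, i - window), min(len(words), i + window + 1)
        pairs.extend((target, words[j]) for j in range(lo, hi) if j != i)
    return pairs


corpus = [["la", "passion", "est", "forte"],
          ["la", "passion", "est", "belle"],
          ["la", "passion", "est", "grande"]]

vocab = build_vocab(corpus, MIN_COUNT)  # "forte", "belle", "grande" occur once
print(sorted(vocab))
print(context_pairs(corpus[0], vocab, WINDOW))
```

Rare words filtered out by `min_count` therefore receive no vector. They also do not occupy a position inside any context window.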

- Il n’y a pas de passion sans impulsif. (There is no passion without impulse.)

- Il n’y a pas d’affection sans estime. (There is no affection without esteem.)

- Il n’y a ni aimante ni mélancolie en sérénité. (There is neither love nor melancholy in serenity.)

- Il n’y a ni fraternelle ni inquiétude en anxiété. (There is no brotherhood or concern in anxiety.)

- En solitude, la première tendresse est la plus forte. (In solitude, the first tenderness is the strongest.)

- Il n’y a pas de confusion sans amour. (There is no confusion without love.)

- Il n’y a pas de liaison sans ordre. (There is no connection without order.)

- En douleur, la première mélancolie est la plus grande. (In pain, the first melancholy is the greatest.)

- En union, la première sollicitude est la plus belle. (In union, the first concern is the most beautiful.)

- Il n’y a ni fraternelle ni faiblesse en impuissance. (There is no brotherhood or weakness in powerlessness.)

- Il n’y a pas de sympathie sans émoi. (There is no sympathy without emotion.)

- Il n’y a ni amie ni honte en peur. (There is no friend or shame in fear.)

Evaluation Protocol and Results

6. Conclusions

Future Works

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Sharples, M. How We Write: Writing as Creative Design; Routledge: London, UK, 1996.

2. Sridhara, G.; Hill, E.; Muppaneni, D.; Pollock, L.; Vijay-Shanker, K. Towards automatically generating summary comments for Java methods. In Proceedings of the IEEE/ACM International Conference on Automated Software Engineering, Antwerp, Belgium, 20–24 September 2010; Association for Computing Machinery: New York, NY, USA, 2010; pp. 43–52.

3. Mikolov, T.; Zweig, G. Context dependent recurrent neural network language model. In Proceedings of the 2012 IEEE Spoken Language Technology Workshop (SLT), Miami, FL, USA, 2–5 December 2012; IEEE: New York, NY, USA, 2012; pp. 234–239.

4. Boden, M.A. The Creative Mind: Myths and Mechanisms; Routledge: London, UK, 2004.

5. De Carvalho, L.A.V.; Mendes, D.Q.; Wedemann, R.S. Creativity and Delusions: The dopaminergic modulation of cortical maps. In Proceedings of the 2003 International Conference on Computational Science (ICCS 2003), St. Petersburg, Russia, 2–4 June 2003; Sloot, P.M.A., Abramson, D., Bogdanov, A.V., Dongarra, J.J., Zomaya, A.Y., Gorbachev, Y.E., Eds.; Springer: Berlin/Heidelberg, Germany, 2003; Volume 2657, pp. 511–520.

6. Moreno-Jiménez, L.G.; Torres-Moreno, J.M.; Wedemann, R.S.; SanJuan, E. Generación automática de frases literarias. Linguamática 2020, 12, 15–30.

7. Mikolov, T.; Yih, W.t.; Zweig, G. Linguistic regularities in continuous space word representations. In Proceedings of the 2013 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL-HLT 2013), Atlanta, GA, USA, 9–14 June 2013; Association for Computational Linguistics: Stroudsburg, PA, USA, 2013; pp. 746–751.

8. Ormazabal, A.; Artetxe, M.; Agirrezabal, M.; Soroa, A.; Agirre, E. PoeLM: A meter- and rhyme-controllable language model for unsupervised poetry generation. arXiv 2022, arXiv:2205.12206.

9. Ta, H.T.; Rahman, A.B.S.; Majumder, N.; Hussain, A.; Najjar, L.; Howard, N.; Poria, S.; Gelbukh, A. WikiDes: A Wikipedia-based dataset for generating short descriptions from paragraphs. Inf. Fusion 2023, 90, 265–282.

10. Bena, B.; Kalita, J. Introducing aspects of creativity in automatic poetry generation. In Proceedings of the 16th International Conference on Natural Language Processing, Alicante, Spain, 28–30 June 2019; NLP Association of India, International Institute of Information Technology: Hyderabad, India, 2019; pp. 26–35.

11. Ji, Z.; Lee, N.; Frieske, R.; Yu, T.; Su, D.; Xu, Y.; Ishii, E.; Bang, Y.; Madotto, A.; Fung, P. Survey of hallucination in natural language generation. ACM Comput. Surv. 2022, 1–36.

12. Szymanski, G.; Ciota, Z. Hidden Markov models suitable for text generation. In Proceedings of the WSEAS International Conference on Signal, Speech and Image Processing (WSEAS ICOSSIP 2002), Budapest, Hungary, 10–12 December 2002; Mastorakis, N., Kluev, V., Koruga, D., Eds.; WSEAS Press: Athens, Greece, 2002; pp. 3081–3084.

13. Molins, P.; Lapalme, G. JSrealB: A bilingual text realizer for Web programming. In Proceedings of the 15th European Workshop on Natural Language Generation (ENLG), Brighton, UK, September 2015; Association for Computational Linguistics: Stroudsburg, PA, USA, 2015; pp. 109–111.

14. Van de Cruys, T. Automatic poetry generation from prosaic text. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 2471–2480.

15. Clark, E.; Ji, Y.; Smith, N.A. Neural text generation in stories using entity representations as context. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL-HLT 2018), New Orleans, LA, USA, 1–6 June 2018; Association for Computational Linguistics: Stroudsburg, PA, USA, 2018; Volume 1, pp. 2250–2260.

16. Fan, A.; Lewis, M.; Dauphin, Y. Hierarchical neural story generation. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics, Melbourne, Australia, 15–20 July 2018; Association for Computational Linguistics: Stroudsburg, PA, USA, 2018; Volume 1, pp. 889–898.

17. Lin, C.Y. ROUGE: A package for automatic evaluation of summaries. In Text Summarization Branches Out; Association for Computational Linguistics: Barcelona, Spain, 2004; pp. 74–81.

18. Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. BLEU: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics (ACL’02), Philadelphia, PA, USA, 7–12 July 2002; pp. 311–318.

19. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; Association for Computational Linguistics: Stroudsburg, PA, USA, 2019; Volume 1 (Long and Short Papers), pp. 4171–4186.

20. Oliveira, H.G. A survey on intelligent poetry generation: Languages, features, techniques, reutilisation and evaluation. In Proceedings of the 10th International Conference on Natural Language Generation (CNLG), Santiago de Compostela, Spain, 4–7 September 2017; Association for Computational Linguistics: Stroudsburg, PA, USA, 2017; pp. 11–20.

21. Oliveira, H.G.; Cardoso, A. Poetry generation with PoeTryMe. In Computational Creativity Research: Towards Creative Machines; Atlantis Press: Paris, France, 2015; Volume 7, pp. 243–266.

22. Agirrezabal, M.; Arrieta, B.; Astigarraga, A.; Hulden, M. POS-tag based poetry generation with WordNet. In Proceedings of the 14th European Workshop on Natural Language Generation (ENLG), Sofia, Bulgaria, 8–9 August 2013; Association for Computational Linguistics: Stroudsburg, PA, USA, 2013; pp. 162–166.

23. Zhang, X.; Lapata, M. Chinese poetry generation with recurrent neural networks. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 24–29 October 2014; Association for Computational Linguistics: Stroudsburg, PA, USA, 2014; pp. 670–680.

24. Moreno-Jiménez, L.G.; Torres-Moreno, J.M. MegaLite-2: An extended bilingual comparative literary corpus. In Intelligent Computing; Arai, K., Ed.; Springer: Cham, Switzerland, 2022; pp. 1014–1029.

25. Moreno-Jiménez, L.G.; Torres-Moreno, J.M.; Wedemann, R.S. Literary natural language generation with psychological traits. In Natural Language Processing and Information Systems (NLDB 2020); Métais, E., Meziane, F., Horacek, H., Cimiano, P., Eds.; Springer: Cham, Switzerland, 2020; Volume 12089.

26. Moreno-Jiménez, L.G.; Torres-Moreno, J.M.; Wedemann, R.S. Generación automática de frases literarias: Un experimento preliminar. Proces. Del Leng. Nat. 2020, 65, 29–36.

27. Moreno-Jiménez, L.G.; Torres-Moreno, J.M.; Wedemann, R.S. A preliminary study for literary rhyme generation based on neuronal representation, semantics and shallow parsing. In Proceedings of the XIII Brazilian Symposium in Information and Human Language Technology and Collocated Events (STIL 2021), Online, 29 November–3 December 2021; Ruiz, E.E.S., Torrent, T.T., Eds.; Sociedade Brasileira de Computação: Rio de Janeiro, Brazil, 2021; pp. 190–198.

28. Morgado, I.; Moreno-Jiménez, L.G.; Torres-Moreno, J.M.; Wedemann, R.S. MegaLitePT: A corpus of literature in Portuguese for NLP. In Proceedings of the 11th Brazilian Conference on Intelligent Systems, Part II (BRACIS 2022), Campinas, Brazil, 28 November–1 December 2022; LNAI, Xavier-Junior, J.C., Rios, R.A., Eds.; Springer International Publishing: Cham, Switzerland, 2022; Volume 13654, pp. 251–265.

29. Van Deemter, K.; Theune, M.; Krahmer, E. Real versus template-based natural language generation: A false opposition? Comput. Linguist. 2005, 31, 15–24.

30. Padró, L.; Stanilovsky, E. FreeLing 3.0: Towards wider multilinguality. In Proceedings of the Eighth International Conference on Language Resources and Evaluation (LREC’12), Istanbul, Turkey, 23–25 May 2012; European Language Resources Association (ELRA): Luxembourg, 2012; pp. 2473–2479.

31. Drozd, A.; Gladkova, A.; Matsuoka, S. Word embeddings, analogies, and machine learning: Beyond king - man + woman = queen. In Proceedings of the 26th International Conference on Computational Linguistics: Technical Papers (COLING 2016), Osaka, Japan, 11–16 December 2016; The COLING 2016 Organizing Committee: Osaka, Japan, 2016; pp. 3519–3530.

32. Kiddon, C.; Zettlemoyer, L.; Choi, Y. Globally coherent text generation with neural checklist models. In Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing (EMNLP’16), Austin, TX, USA, 1–5 November 2016; Association for Computational Linguistics: Stroudsburg, PA, USA, 2016; pp. 329–339.

33. Turing, A.M. Computing machinery and intelligence. In Parsing the Turing Test: Philosophical and Methodological Issues in the Quest for the Thinking Computer; Epstein, R., Roberts, G., Beber, G., Eds.; Springer: Dordrecht, The Netherlands, 2009; pp. 23–65.

34. Torres-Moreno, J.M.; Molina, A.; Sierra, G. La energía textual como medida de distancia en agrupamiento de definiciones. In Proceedings of the 10th International Conference on Statistical Analysis of Textual Data (JADT 2010), Rome, Italy, 6–11 June 2010; pp. 215–226.

| | Documents | Phrases | Words | Characters | Authors |
|---|---|---|---|---|---|
| MegaLitefr | 2690 | 10 M | 182 M | 1081 M | 620 |
| Mean per document | - | 3.6 K | 67.9 K | 401 K | - |

| | Plays | Poems | Narratives |
|---|---|---|---|
| MegaLitefr | 97 (3.61%) | 55 (2.04%) | 2538 (94.35%) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Moreno-Jiménez, L.-G.; Torres-Moreno, J.-M.; Wedemann, R.S. Automatic Generation of Literary Sentences in French. Algorithms 2023, 16, 142. https://doi.org/10.3390/a16030142

Chicago/Turabian style: Moreno-Jiménez, Luis-Gil, Juan-Manuel Torres-Moreno, and Roseli Suzi Wedemann. 2023. "Automatic Generation of Literary Sentences in French." Algorithms 16, no. 3: 142. https://doi.org/10.3390/a16030142