Abstract

Hyperparameters in machine learning (ML) have received a fair amount of attention, and hyperparameter tuning has come to be regarded as an important step in the ML pipeline. However, just how useful is said tuning? While smaller-scale experiments have been previously conducted, herein we carry out a large-scale investigation, specifically one involving 26 ML algorithms, 250 datasets (regression and both binary and multinomial classification), 6 score metrics, and 28,857,600 algorithm runs. Analyzing the results we conclude that for many ML algorithms, we should not expect considerable gains from hyperparameter tuning on average; however, there may be some datasets for which default hyperparameters perform poorly, especially for some algorithms. By defining a single hp_score value, which combines an algorithm’s accumulated statistics, we are able to rank the 26 ML algorithms from those expected to gain the most from hyperparameter tuning to those expected to gain the least. We believe such a study shall serve ML practitioners at large.

1. Introduction

In machine learning (ML), a hyperparameter is a parameter whose value is given by the user and used to control the learning process. This is in contrast to other parameters, whose values are obtained algorithmically via training.

Hyperparameter tuning, or optimization, is often costly and software packages invariably provide hyperparameter defaults. Practitioners will often tune these—either manually or through some automated process—to gain better performance. They may resort to previously reported “good” values or perform some hyperparameter-tuning experiments.

In recent years, there has been increased interest in software that performs automated hyperparameter tuning, such as Hyperopt [1] and Optuna [2]. The latter, for example, is a state-of-the-art hyperparameter tuner which formulates the hyperparameter optimization problem as a process of minimizing or maximizing an objective function that takes a set of hyperparameters as an input and returns its (validation) score. It also provides pruning, i.e., automatic early stopping of unpromising trials. Moreover, our experience has shown it to be fairly easy to set up, and indeed we used it successfully in our research [3,4].

A number of recent works, which we shall review, have tried to assess the importance of hyperparameters through experimentation. We propose herein to examine the issue of hyperparameter tuning through a significantly more extensive empirical study than has been performed to date, involving multitudinous algorithms, datasets, metrics, and hyperparameters. Our aim is to assess just how much of a performance gain can be had per algorithm by employing a performant tuning method.

2. Previous Work

There has been a fair amount of work on hyperparameters and it is beyond this paper’s scope to provide a detailed review. For that, we refer the reader to the recent comprehensive review: “Hyperparameter Optimization: Foundations, Algorithms, Best Practices and Open Challenges” [5].

Interestingly, Ref. [5] wrote that “we would like to tune as few HPs [hyperparameters] as possible. If no prior knowledge from earlier experiments or expert knowledge exists, it is common practice to leave other HPs at their software default values⋯”.

Ref. [5] also noted that “more sophisticated HPO [hyperparameter optimization] approaches in particular are not as widely used as they could (or should) be in practice” (the paper does not include an empirical study). We shall use a sophisticated HPO approach herein.

We present below only recent papers that are directly relevant to ours, “ancestors” of the current study, as it were.

A major work, Ref. [6], formalized the problem of hyperparameter tuning from a statistical point of view, defined data-based defaults, and suggested general measures quantifying the tunability of hyperparameters. The overall tunability of an ML algorithm or that of a specific hyperparameter was essentially defined by comparing the gain attained through tuning with some baseline performance, usually attained when using default hyperparameters. They also conducted an empirical study involving 38 binary classification datasets from OpenML, and six ML algorithms: elastic net, decision tree, k-nearest neighbors, support vector machine, random forest, and xgboost. Tuning was performed through a random search. They found that some algorithms benefited from tuning more than others, with elastic net and support vector machine showing the largest improvement and random forest showing the smallest.

Ref. [7] presented a methodology to determine the importance of tuning a hyperparameter based on a non-inferiority test and tuning risk, i.e., the performance loss that is incurred when a hyperparameter is not tuned, but set to a default value. They performed an empirical study involving 59 datasets from OpenML and two ML algorithms: support vector machine and random forest. Tuning was performed through random search. Their results showed that leaving particular hyperparameters at their default value is noninferior to tuning these hyperparameters. In some cases, leaving the hyperparameter at its default value even outperformed tuning it.

Finally, Ref. [8] recently presented results and insights pertaining to the black-box optimization (BBO) challenge at NeurIPS 2020. Analyzing the performance of 65 submitted entries, they concluded that “Bayesian optimization is superior to random search for machine learning hyperparameter tuning”, which is indeed the paper’s title. Random search, in turn, is usually better than grid search (e.g., [9]). We shall use Bayesian optimization herein.

The Current Study

After examining these recent studies, we made the following decisions regarding the experiments that we shall carry out herein:

- Consider significantly more algorithms;

- Consider significantly more datasets;

- Consider Bayesian optimization, rather than weaker-performing random search or grid search.

3. Experimental Setup

Our setup involves numerous runs across a plethora of algorithms and datasets, comparing tuned and untuned performance over six distinct metrics. Below, we detail the following setup components:

- Datasets;

- Algorithms;

- Metrics;

- Hyperparameter tuning;

- Overall flow.

3.1. Datasets

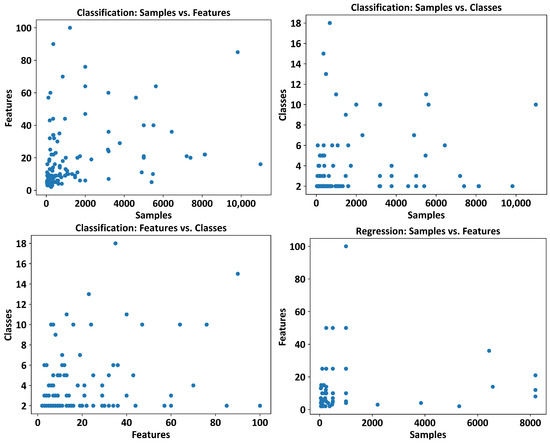

We used the recently introduced PMLB repository [10], which includes 166 classification datasets and 122 regression datasets. As we were interested in performing numerous runs, we retained the 144 classification datasets with number of samples ≤ 10,992 and number of features ≤ 100, and the 106 regression datasets with number of samples ≤ 8192 and number of features ≤ 100. Figure 1 presents a summary of dataset characteristics. Note that classification problems are both binary and multinomial.

Figure 1. Characteristics of the 144 classification datasets and 106 regression datasets used in our study.

3.2. Algorithms

We investigated 26 ML algorithms—13 classifiers and 13 regressors—using the following software packages: scikit-learn [11], xgboost [12], and lightgbm [13]. The algorithms are listed in Table 1, along with the hyperparameter ranges or sets used in the hyperparameter search (described below).

Table 1. Value ranges or sets used by Optuna for hyperparameter tuning. For ease of reference, we use the function names of the respective software packages: scikit-learn, xgboost, and lightgbm. Values sampled from a range in the log domain are marked as ‘log’; otherwise, sampling is linear (uniform).

3.3. Metrics

We used three separate metrics for classification problems:

- Accuracy: the fraction of correct predictions ($\in [0, 1]$).

- Balanced accuracy: an accuracy score that takes into account class imbalances, essentially the accuracy score with class-balanced sample weights [14] ($\in [0, 1]$).

- F1 score: the harmonic mean of precision and recall; in the multi-class case, this is the weighted average of the per-class F1 scores ($\in [0, 1]$).

We used three separate metrics for regression problems:

- R2 score: the $R^2$ (coefficient of determination) regression score function ($\in (-\infty, 1]$).

- Adjusted R2 score: a modified version of the R2 score that adjusts for the number of predictors in a regression model. It is defined as $1 - (1 - R^2)\frac{n - 1}{n - p - 1}$, with $R^2$ being the R2 score, $n$ being the number of samples, and $p$ being the number of features ($\in (-\infty, 1]$).

- Complement RMSE: the complement of the root mean squared error (RMSE), defined as $1 - \mathrm{RMSE}$ ($\in (-\infty, 1]$). This has the same range as the previous two metrics.
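The two less common regression metrics are easy to compute by hand. The following is a minimal sketch in plain Python, assuming the standard adjusted-R2 formula, 1 − (1 − R2)(n − 1)/(n − p − 1), and taking complement RMSE to be 1 − RMSE. The function names are illustrative and are not taken from the study's code.

```python
import math


def adjusted_r2(r2: float, n_samples: int, n_features: int) -> float:
    """Adjusted R2: penalizes the R2 score for the number of predictors."""
    if n_samples - n_features - 1 <= 0:
        raise ValueError("adjusted R2 requires n_samples > n_features + 1")
    return 1.0 - (1.0 - r2) * (n_samples - 1) / (n_samples - n_features - 1)


def complement_rmse(y_true, y_pred) -> float:
    """1 - RMSE: higher is better, and a perfect fit scores exactly 1."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    mse = sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)
    return 1.0 - math.sqrt(mse)


# With 101 samples and 10 features, an R2 of 0.9 is adjusted slightly downward.
print(adjusted_r2(0.9, n_samples=101, n_features=10))
# A perfect prediction yields the maximum complement RMSE of 1.
print(complement_rmse([1.0, 2.0, 3.0], [1.0, 2.0, 3.0]))
```

Because RMSE is unbounded above, complement RMSE is unbounded below, which is what gives it the same range as the R2-type scores.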

3.4. Hyperparameter Tuning

For hyperparameter tuning, we used Optuna, a state-of-the-art automatic hyperparameter optimization software framework [2]. Optuna offers a define-by-run-style user API, through which the search space can be constructed dynamically, together with efficient sampling and pruning algorithms. Moreover, our experience has shown it to be fairly easy to set up. Optuna formulates the hyperparameter optimization problem as a process of minimizing or maximizing an objective function that takes a set of hyperparameters as an input and returns its (validation) score. We used the default tree-structured Parzen estimator (TPE) Bayesian sampling algorithm. Optuna also provides pruning, i.e., the automatic early stopping of unpromising trials [2].

3.5. Overall Flow

Algorithm 1 presents the top-level flow of the experimental setup. For each combination of algorithm and dataset, we perform 30 replicate runs. Each replicate separately assesses model performance over the respective three classification or regression metrics. A replicate begins by splitting the dataset into training and test sets, and scaling them. Then, for each metric:

- Optuna is run over the training set for 50 trials to tune the model’s hyperparameters, the best model is retained, and the best model’s test-set metric score is computed.

- Fifty models are evaluated over the training set with default hyperparameters, the best model is retained, and the best model’s test-set metric score is computed. Strictly speaking, a few algorithms (decision tree, KNN, Bayesian) are essentially deterministic, so repeated default runs should yield the same model. For consistency, we still performed the 50 default-hyperparameter trials. Further, our examination of the respective implementations revealed possible sources of randomness. For example, when max_features < n_features, the decision tree algorithm selects max_features features at random. Although the default is max_features = n_features, we took no chances of there being some hidden randomness deep within the code.

Each model is evaluated through five-fold cross-validation. At the end of each replicate, the percent improvement of Optuna’s best model over the default’s best model is computed on the test set.
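The exact formula for percent improvement is not spelled out in the text. A common choice, assumed in the sketch below, is the difference between the tuned and default test scores relative to the magnitude of the default score. The function name `percent_improvement` is illustrative.

```python
def percent_improvement(tuned_score: float, default_score: float) -> float:
    """Percent gain of the tuned model's test score over the default model's.

    Assumed definition: 100 * (tuned - default) / |default|. The absolute
    value keeps the sign meaningful when the default score is negative,
    which can happen for the R2-type metrics.
    """
    if default_score == 0:
        raise ZeroDivisionError("undefined for a default score of exactly 0")
    return 100.0 * (tuned_score - default_score) / abs(default_score)


# A well-performing default leaves little room for improvement (roughly 2.2%).
print(percent_improvement(0.92, 0.90))
# A poor default turns a moderate absolute gain into a huge percentage (roughly 1100%).
print(percent_improvement(0.60, 0.05))
```

Under this definition the measure is unbounded, and a default score close to zero can produce very large values.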

| Algorithm 1 Experimental setup (per algorithm and dataset) |
| --- |
| # 'metric1', 'metric2', 'metric3' are, respectively:<br># · For classification: accuracy, balanced accuracy, F1<br># · For regression: R2, adjusted R2, complement RMSE<br># eval_score is 5-fold cross-validation score |

4. Results

A total of 96,192 replicates were performed, each comprising 300 algorithm runs (3 metrics × 50 Optuna trials plus 3 metrics × 50 default trials), with the final tally thus being 28,857,600 algorithm runs. Note that for each run, we called the fit method of the respective algorithm five times during five-fold cross-validation, i.e., the learning algorithm was executed five times. Table 2 presents our results.
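The tally can be reproduced with a few lines of arithmetic. The constant names below are illustrative; the last figure, the total number of fit calls, is not stated in the text but follows from the five fits per run.

```python
N_REPLICATES = 96_192
N_METRICS = 3
N_TRIALS = 50  # per metric, for each arm
N_ARMS = 2     # Optuna-tuned and default
N_FOLDS = 5    # five-fold cross-validation, one fit per fold

runs_per_replicate = N_METRICS * N_TRIALS * N_ARMS
total_runs = N_REPLICATES * runs_per_replicate
total_fits = total_runs * N_FOLDS

print(runs_per_replicate)  # 300
print(total_runs)          # 28857600
print(total_fits)          # 144288000
```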

Table 2. Compendium of final results over 26 ML algorithms, 250 datasets, 96,192 replicates, and 28,857,600 algorithm runs. A table row presents results of a single ML algorithm, showing a summary of all replicates and datasets. A table cell summarizes the results of an algorithm–metric pair. Cell values show median and mean(std), where median: median over all replicates and datasets of Optuna’s percent improvement over default; mean(std): mean (with standard deviation) over all replicates and datasets of Optuna’s percent improvement over default. The total number of replicates for which these statistics were computed is also shown. Acc: accuracy score; Bal: balanced accuracy score; F1: F1 score; R2: R2 score; Adj R2: adjusted R2 score; C-RMSE: complement RMSE; Reps: total number of replicates †.

Table 2 shows several interesting points. First, regressors are somewhat more susceptible to hyperparameter tuning than classifiers, i.e., there is more to be gained by tuning relative to the default hyperparameters.

For most classifiers and, to a lesser extent, regressors, the median value shows little to be gained from tuning, yet the mean value along with the standard deviation suggests that for some algorithms there is a wide range in terms of tuning effectiveness. Indeed, by examining the collected raw experimental results, we noted occasional “low-hanging fruit” cases: the default hyperparameters yielded very poor performance on some datasets, leaving room for considerable improvement through tuning.
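The gap between median and mean is easy to reproduce with toy numbers. The values below are purely illustrative and are not taken from the study: tuning barely matters on most datasets, but the default fails badly on two of them. Using the sample standard deviation here is also an assumption.

```python
from statistics import mean, median, stdev

# Hypothetical percent improvements of one algorithm over ten datasets.
improvements = [0.0, 0.1, -0.2, 0.3, 0.0, 0.5, -0.1, 0.2, 250.0, 900.0]

print(f"median = {median(improvements):.2f}")
print(f"mean   = {mean(improvements):.2f}")
print(f"std    = {stdev(improvements):.2f}")
```

The median stays close to zero, while the two outliers pull the mean above 100 and push the standard deviation higher still, which is the pattern described above.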

It would seem useful to define a “bottom-line” measure—a summary score, as it were, which essentially summarizes an entire table row, i.e., an ML algorithm’s sensitivity to hyperparameter tuning. We believe any such measure would be inherently arbitrary to some extent; that said, we nonetheless put forward the following definition of hp_score:

- The 13 algorithms and 9 measures of Table 2 are considered (separately for classifiers and regressors) as a dataset with 13 samples and the following 9 features: metric1_median, metric2_median, metric3_median, metric1_mean, metric2_mean, metric3_mean, metric1_std, metric2_std, metric3_std.

- Scikit-learn’s RobustScaler is applied, which scales features using statistics that are robust to outliers: “This Scaler removes the median and scales the data according to the quantile range (defaults to IQR: Interquartile Range). The IQR is the range between the 1st quartile (25th quantile) and the 3rd quartile (75th quantile). Centering and scaling happen independently on each feature…” [14].

- The hp_score of an algorithm is then simply the mean of its nine scaled features.
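The three steps above can be sketched in a few lines. This is a dependency-free approximation rather than the study's code: `robust_scale` is a hypothetical helper that centers each feature on its median and divides by its interquartile range, which should mirror the default behavior of scikit-learn's RobustScaler, and the toy table uses three made-up features per algorithm instead of the nine from Table 2.

```python
from statistics import mean, median, quantiles


def robust_scale(column):
    """Center a feature on its median and divide by its IQR (25th to 75th percentile)."""
    q1, _, q3 = quantiles(column, n=4, method="inclusive")
    iqr = (q3 - q1) or 1.0  # leave constant features unscaled instead of dividing by zero
    med = median(column)
    return [(x - med) / iqr for x in column]


def hp_scores(table):
    """Map {algorithm: [feature values]} to {algorithm: mean of its scaled features}."""
    names = list(table)
    columns = list(zip(*(table[name] for name in names)))  # one tuple per feature
    scaled_columns = [robust_scale(col) for col in columns]
    return {
        name: mean(col[i] for col in scaled_columns)
        for i, name in enumerate(names)
    }


# Hypothetical values, e.g. one median, one mean, and one std of percent improvement.
toy = {
    "algo_a": [0.0, 0.5, 2.0],
    "algo_b": [0.1, 1.0, 4.0],
    "algo_c": [0.2, 3.0, 9.0],
    "algo_d": [1.5, 20.0, 60.0],
}
scores = hp_scores(toy)
for name, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {score:+.2f}")
```

Scaling is done per feature, across algorithms, so each feature contributes on a comparable scale before the per-algorithm mean is taken. In practice one would call RobustScaler directly on the 13 × 9 array.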

This hp_score is unbounded because improvements or impairments can be arbitrarily high or low. A higher value means that the algorithm is expected to gain more from hyperparameter tuning, while a lower value means that the algorithm is expected to gain less from hyperparameter tuning (on average).

Table 3 presents the hp_scores of all 26 algorithms, sorted from highest to lowest per algorithm category (classifier or regressor). While simple and inherently imperfect, hp_score nonetheless seems to summarize the trends observable in Table 2 fairly well.

Table 3. The hp_score of each ML algorithm, computed from the values in Table 2. A higher value means that the algorithm is expected to gain more from hyperparameter tuning, while a lower value means that the algorithm is expected to gain less from hyperparameter tuning.

5. Discussion

The main takeaway from Table 2 and Table 3 is as follows. For most ML algorithms, we should not expect huge gains from hyperparameter tuning on average; however, there may be some datasets for which default hyperparameters perform poorly, especially for some algorithms. In particular, those algorithms at the bottom of the lists in Table 3 would likely not benefit greatly from a significant investment in hyperparameter tuning. Some algorithms are robust to hyperparameter selection, while others are somewhat less robust.

Perhaps the main limitation of this work (as in others involving hyperparameter experimentation) pertains to the somewhat subjective choice of value ranges (Table 1). This is inherently unavoidable in empirical research such as this. While this limitation cannot be completely overcome, it can be offset given that the code is publicly available at https://github.com/moshesipper (accessed on 1 September 2022), so that we and others may extend the experiment and add further findings. Indeed, we hope this to be the case.

Table 3 can be used in practice by an ML practitioner to:

- Decide how much to invest in hyperparameter tuning of a particular algorithm;

- Select algorithms that require less tuning to hopefully save time—as well as energy [15].

6. Concluding Remarks

We performed a large-scale experiment of hyperparameter-tuning effectiveness, across multiple ML algorithms and datasets. We found that for many ML algorithms, we should not expect considerable gains from hyperparameter tuning on average; however, there may be some datasets for which default hyperparameters perform poorly, especially for some algorithms. By defining a single hp_score value, which combines an algorithm’s accumulated statistics, we were able to rank the 26 ML algorithms from those expected to gain the most from hyperparameter tuning to those expected to gain the least. We believe such a study may serve ML practitioners at large, in several ways, as noted above.

There are many avenues for future work:

- Algorithms may be added to the study.

- Datasets may be added to the study.

- Hyperparameters that have not been considered herein may be added.

- Specific components of the setup may be varied (e.g., the metrics and the scaler of Algorithm 1).

- Additional summary scores, like the hp_score, may be devised.

- For algorithms at the top of the lists in Table 3, we may inquire as to whether particular hyperparameters are the root cause of their hyperparameter sensitivity; further, we may seek out better defaults. For example, Ref. [16] recently focused on hyperparameter tuning for KernelRidge, which is at the top of the regressor list in Table 3. Ref. [6] discussed the tunability of a specific hyperparameter, though they noted the problem of hyperparameter dependency.

Given the findings herein, it seems that, more often than not, hyperparameter tuning will not provide huge gains over the default hyperparameters of the respective software packages examined. A modicum of tuning would seem to be advisable, though other factors will likely play a stronger role in final model performance, including, to name a few, the quality of raw data, the solidity of data preprocessing, and the choice of ML algorithm (curiously, the latter can be considered a tunable hyperparameter [3]).

Funding

This research received no external funding.

Data Availability Statement

Not applicable.

Acknowledgments

I thank Raz Lapid for helpful comments.

Conflicts of Interest

The author declares no conflict of interest.

References

1. Bergstra, J.; Yamins, D.; Cox, D.D. Hyperopt: A python library for optimizing the hyperparameters of machine learning algorithms. In Proceedings of the 12th Python in Science Conference, Austin, TX, USA, 11–17 July 2013; Volume 13, p. 20.

2. Akiba, T.; Sano, S.; Yanase, T.; Ohta, T.; Koyama, M. Optuna: A Next-Generation Hyperparameter Optimization Framework. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 2623–2631.

3. Sipper, M.; Moore, J.H. AddGBoost: A gradient boosting-style algorithm based on strong learners. Mach. Learn. Appl. 2022, 7, 100243.

4. Sipper, M. Neural networks with à la carte selection of activation functions. SN Comput. Sci. 2021, 2, 1–9.

5. Bischl, B.; Binder, M.; Lang, M.; Pielok, T.; Richter, J.; Coors, S.; Thomas, J.; Ullmann, T.; Becker, M.; Boulesteix, A.L.; et al. Hyperparameter Optimization: Foundations, Algorithms, Best Practices and Open Challenges. arXiv 2021, arXiv:2107.05847.

6. Probst, P.; Boulesteix, A.L.; Bischl, B. Tunability: Importance of Hyperparameters of Machine Learning Algorithms. J. Mach. Learn. Res. 2019, 20, 1–32.

7. Weerts, H.J.P.; Mueller, A.C.; Vanschoren, J. Importance of Tuning Hyperparameters of Machine Learning Algorithms. arXiv 2020, arXiv:2007.07588.

8. Turner, R.; Eriksson, D.; McCourt, M.; Kiili, J.; Laaksonen, E.; Xu, Z.; Guyon, I. Bayesian Optimization is Superior to Random Search for Machine Learning Hyperparameter Tuning: Analysis of the Black-Box Optimization Challenge 2020. In Proceedings of the NeurIPS 2020 Competition and Demonstration Track, Virtual Event/Vancouver, BC, Canada, 6–12 December 2020; Volume 133, pp. 3–26.

9. Bergstra, J.; Bengio, Y. Random Search for Hyper-Parameter Optimization. J. Mach. Learn. Res. 2012, 13, 281–305.

10. Romano, J.D.; Le, T.T.; La Cava, W.; Gregg, J.T.; Goldberg, D.J.; Chakraborty, P.; Ray, N.L.; Himmelstein, D.; Fu, W.; Moore, J.H. PMLB v1.0: An open source dataset collection for benchmarking machine learning methods. arXiv 2021, arXiv:2012.00058v2.

11. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

12. Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; ACM: New York, NY, USA, 2016; pp. 785–794.

13. Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Ye, Q.; Liu, T.Y. LightGBM: A highly efficient gradient boosting decision tree. Adv. Neural Inf. Process. Syst. 2017, 30, 3146–3154.

14. Scikit-Learn: Machine Learning in Python. 2022. Available online: https://scikit-learn.org/ (accessed on 22 June 2022).

15. García-Martín, E.; Rodrigues, C.F.; Riley, G.; Grahn, H. Estimation of energy consumption in machine learning. J. Parallel Distrib. Comput. 2019, 134, 75–88.

16. Stuke, A.; Rinke, P.; Todorović, M. Efficient hyperparameter tuning for kernel ridge regression with Bayesian optimization. Mach. Learn. Sci. Technol. 2021, 2, 035022.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).