Abstract

We address the problem of defending predictive models, such as machine learning classifiers (Defender models), against membership inference attacks, in both the black-box and white-box settings, when the trainer and the trained model are publicly released. The Defender aims to optimize a dual objective: utility and privacy. Privacy is evaluated with the membership prediction error of a so-called “Leave-Two-Unlabeled” (LTU) Attacker, which has access to all of the Defender and Reserved data except for the membership label of one sample from each, giving the strongest possible attack scenario. We prove that, under certain conditions, even a “naïve” LTU Attacker can achieve lower bounds on privacy loss with simple attack strategies, leading to concrete necessary conditions to protect privacy, including preventing over-fitting and adding some amount of randomness. This attack is straightforward to implement against any model trainer, and we demonstrate its performance against MemGuard. However, we also show that such a naïve LTU Attacker can fail to attack the privacy of models known in the literature to be vulnerable, demonstrating that knowledge must be complemented with strong attack strategies to turn the LTU Attacker into a powerful means of evaluating privacy. The LTU Attacker can incorporate any existing attack strategy to compute individual privacy scores for each training sample. Our experiments on the QMNIST, CIFAR-10, and Location-30 datasets validate our theoretical results and confirm the roles of over-fitting prevention and randomness in protecting algorithms against privacy attacks.

1. Introduction

Large companies are increasingly reluctant to let any information out, for fear of privacy attacks and possible ensuing lawsuits. Even government agencies and academic institutions, whose charter is to disseminate data and results publicly, must be careful. Hence, there is a great need for simple and provably effective protocols to protect data while ensuring that some utility can be derived from them. Though critical sensitive data must never leave the source organization (Source), whether a company, a government agency, or an academic institution, an authorized researcher (Defender) may gain access to them within a secured environment to analyze them and produce models (Product). The Source may wish to release the Product, provided that desired levels of utility and privacy are met. We consider the most complete release of model information, including the Defender trainer with all its settings, and the trained model. This enables “white-box attacks” from potential attackers [1]. We devise an evaluation apparatus to help the Source decide whether or not to release the Product (Figure 1). The setting considered is that of the “membership inference attack”, in which an attacker seeks to uncover whether given samples, distributed similarly to the Defender training dataset, belong to that dataset or not [2]. The apparatus includes an Evaluator and an LTU Attacker. The Evaluator performs a hold-out leave-two-unlabeled (LTU) evaluation, giving the LTU Attacker access to extensive information: all the Defender and Reserved data, except for the membership label of one sample from each. The contributions of our paper include this new evaluation apparatus. Its soundness is backed by initial theoretical analyses and by preliminary experimental results. These indicate that even naïve attacks can defeat privacy protections within this framework, and yet some Defender models can protect data privacy while retaining utility under such extreme attack conditions.

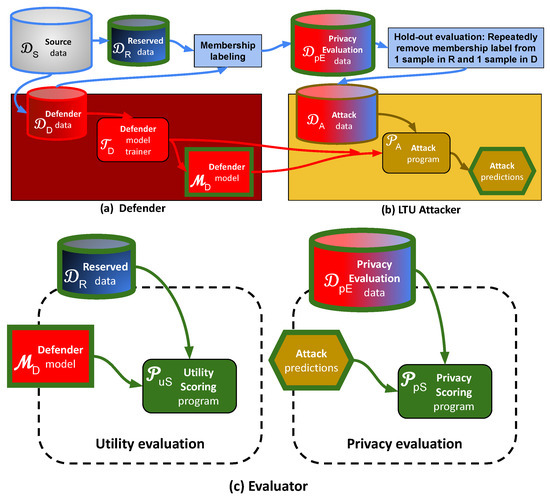

Figure 1.

Methodology flow chart. (a) Defender: Source data are divided into Defender data, used to train the model under attack (Defender model), and Reserved data, used to evaluate that model. The Defender model trainer creates a model optimizing a utility objective, while being as resilient as possible to attacks. (b) LTU Attacker: the evaluation apparatus includes an LTU Attacker and an Evaluator. It performs a hold-out evaluation leaving two unlabeled examples (LTU) by repeatedly providing the LTU Attacker with ALL of the Defender and Reserved data samples, together with their membership origin, hiding only the membership label of 2 samples. The LTU Attacker must turn in the membership label (Defender data or Reserved data) of these 2 samples (Attack predictions). (c) Evaluator: the Evaluator computes two scores: the LTU Attacker prediction error (Privacy metric) and the Defender model classification performance (Utility metric).

2. Related Work

Membership inference attacks (MIA) have been extensively studied in recent years. Ref. [3] developed a privacy framework called “Membership Privacy”, establishing a family of related privacy definitions. Ref. [2] explored the first MIA scenario, in which an attacker has black-box query access to a classification model f and can obtain the prediction vector for a data record x given as input. Ref. [4] proposed a metric inspired by Differential Privacy to measure the privacy risk of each training record, based on the impact it has on the learning algorithm. Ref. [5] derives a tighter bound on the membership inference attack accuracy against differentially private models. Similarly, Ref. [6] incorporates a fine-grained analysis into the systematic evaluation of privacy risk; its Bayesian metric is defined as the posterior probability that a given input sample is from the training set after observing the target model’s behavior on that sample. Ref. [7] explores a more realistic scenario with skewed priors, where only a small fraction of the samples belong to the training set, and its attack strategy focuses on selecting the best inference thresholds. In contrast, our LTU Attacker is focused on a worst-case analysis rather than on the most practical scenarios.

Ref. [8] studied the connection between overfitting and membership inference, showing that overfitting is a sufficient condition to guarantee the success of the adversary. Ref. [9] continued exploring MIAs in the black-box setting, considering different scenarios according to the prior knowledge the adversary has about the training data: black-box, grey-box, and white-box. Recent work has also addressed membership inference attacks against generative models [10,11,12]. This paper focuses on attacks on discriminative models in an “all-knowledgeable” scenario, both from the point of view of the model and of the data.

Several frameworks have been proposed to mitigate attacks, among which Differential Privacy (DP) [13] has become a reference method. Work in [14,15] shows how to implement this technique in deep learning. Using DP to protect against attacks comes at the cost of decreased model utility. Regularization approaches have been investigated in an effort to increase model robustness against privacy attacks while retaining most of the utility. One of them inspired our idea of defending against attacks in an adversarial manner: domain-adversarial training [16], introduced in the context of domain adaptation. Ref. [17] later used this technique to defend against MIA. Ref. [18] helped bridge the gap between membership inference and domain adaptation.

Most of the literature addressing MIA considers a black-box scenario, where the adversary only has access to the model through an API and has very little knowledge about the training data. Closest to the scenario considered in this paper, the work of [1] analyzes attackers having all information about the neural network under attack, including inner layer outputs, allowing them to exploit privacy vulnerabilities of the SGD algorithm. However, contrary to the LTU Attacker we introduce, the authors’ adversary executes the attack in an unsupervised way, without access to the membership labels of any data sample. Additionally, the work of [19] considers a similar scenario, giving an attacker two datasets differing by one sample, but focuses on DP-SGD and gives the attacker access to intermediate computations performed during training. We assume that the attacker has no access to the trainer or model during training. Bayes optimal strategies have been examined in [20], showing that, under some assumptions, the optimal inference depends only on the loss. Recent work in [21] also aims to design the best possible adversary, defined in terms of the Bayes Optimal Classifier, to estimate the privacy leakage of a model.

3. Problem Statement and Methodology

We consider the scenario in which an owner of a data Source wants to create a predictive model trained on some of those data, but needs to ensure that privacy is preserved. In particular, we focus on privacy from membership inference. The data owner entrusts an agent called Defender with creating such a model, giving him access to a random sample $\mathcal{D}$ (Defender dataset). We denote by $\mathcal{M}$ the trained model (Defender model) and by $\mathcal{T}$ the algorithm used to train it (Defender trainer). The data owner wishes to release $\mathcal{M}$, and eventually $\mathcal{T}$, provided that certain standards of privacy and utility of $\mathcal{M}$ and $\mathcal{T}$ are met. To evaluate such utility and privacy, the data owner reserves a dataset $\mathcal{R}$, disjoint from $\mathcal{D}$, and gives both $\mathcal{D}$ and $\mathcal{R}$ to a trustworthy Evaluator agent. The Evaluator tags the samples with dataset “membership labels”: Defender or Reserved. Then, the Evaluator performs repeated rounds, each consisting of randomly selecting one Defender sample $d$ and one Reserved sample $r$, and giving an LTU Attacker an almost perfect attack dataset $\mathcal{D} \cup \mathcal{R}$, from which only the membership labels of the two selected samples are removed. The two unlabeled samples are referred to as $u_1$ and $u_2$, each being equally likely to be from the Defender dataset. We refer to this procedure as “Leave Two Unlabeled” (LTU); see Figure 1. The LTU Attacker also has access to the Defender trainer $\mathcal{T}$ (with all its hyper-parameter settings) and to the trained Defender model $\mathcal{M}$. This is the worst-case scenario in terms of attacker knowledge and access, because the only other information that could be used to attack membership would be the membership labels themselves. The attacker is tasked with correctly predicting which of the two unlabeled samples belongs to $\mathcal{D}$ (independently for each LTU round, forgetting everything at the end of a round).
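The LTU rounds described above can be sketched as a simple evaluation loop. This is a minimal illustration, not the authors' implementation: the function name `ltu_accuracy` and the attacker interface (a callable that receives the labeled Defender and Reserved samples plus the two unlabeled samples, and returns the one it predicts to be the Defender sample) are hypothetical, and the Defender model and trainer are assumed to be captured inside the attacker callable.

```python
import random
from typing import Callable, Sequence

# Hypothetical attacker interface: (labeled Defender samples, labeled Reserved
# samples, u1, u2) -> the unlabeled sample predicted to be the Defender one.
# The Defender model and trainer are assumed to be captured by the callable.
Attacker = Callable[[list, list, object, object], object]


def ltu_accuracy(defender: Sequence, reserved: Sequence,
                 attacker: Attacker, n_rounds: int, seed: int = 0) -> float:
    """Run n_rounds independent Leave-Two-Unlabeled rounds and return the
    fraction of rounds in which the attacker picks the Defender sample."""
    rng = random.Random(seed)
    correct = 0
    for _ in range(n_rounds):
        i = rng.randrange(len(defender))
        j = rng.randrange(len(reserved))
        d, r = defender[i], reserved[j]
        # Labeled attack data: all samples except the two selected ones.
        labeled_d = [x for k, x in enumerate(defender) if k != i]
        labeled_r = [x for k, x in enumerate(reserved) if k != j]
        # The two unlabeled samples are presented in random order.
        u1, u2 = (d, r) if rng.random() < 0.5 else (r, d)
        # Each call is an independent round; the attacker should keep no state.
        correct += attacker(labeled_d, labeled_r, u1, u2) == d
    return correct / n_rounds


# Toy integer "samples": an oracle that knows the Defender data is always
# right, while always answering u1 amounts to a coin flip.
defender_data = list(range(0, 50))
reserved_data = list(range(100, 150))
oracle_acc = ltu_accuracy(defender_data, reserved_data,
                          lambda ld, lr, u1, u2: u1 if u1 < 100 else u2, 2000)
naive_acc = ltu_accuracy(defender_data, reserved_data,
                         lambda ld, lr, u1, u2: u1, 2000)
```

Presenting the pair in random order is what makes a constant answer worth only about 50% accuracy, which is the reference point of the privacy score.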

We use the LTU membership classification accuracy $A_{LTU}$ from N independent LTU rounds (as defined above) to define a global privacy score as:

$$\text{Privacy} = \min\{2(1 - A_{LTU}),\ 1\} \pm 2\sqrt{\frac{A_{LTU}(1 - A_{LTU})}{N}} \tag{1}$$

where the error bar is an estimator of the standard error of the mean (approximating the Binomial law with the Normal law; see, e.g., [22]). The weaker the performance of the LTU Attacker ($A_{LTU} \approx 0.5$ for random guessing), the larger Privacy, and the better $\mathcal{M}$ should be protected from attacks. We can also determine an individual membership inference privacy score for any sample $d \in \mathcal{D}$ by using that sample in all N rounds and drawing only $r$ at random (similarly, an individual non-membership inference privacy score for any sample $r \in \mathcal{R}$ is obtained by using that sample in all N rounds and drawing only $d$ at random). See the example in Appendix C.
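A minimal sketch of the global privacy score follows, assuming Equation (1) has the form $\text{Privacy} = \min\{2(1 - A_{LTU}), 1\} \pm 2\sqrt{A_{LTU}(1 - A_{LTU})/N}$, where $A_{LTU}$ is the LTU accuracy over N rounds. The function name `privacy_score` is hypothetical.

```python
import math


def privacy_score(accuracy: float, n_rounds: int) -> tuple[float, float]:
    """Return (Privacy, error bar) from the LTU accuracy over n_rounds rounds,
    assuming Privacy = min(2 * (1 - A), 1) +/- 2 * sqrt(A * (1 - A) / N)."""
    privacy = min(2.0 * (1.0 - accuracy), 1.0)
    # Normal approximation of the Binomial standard error of A; the factor 2
    # comes from Privacy being 2 * (1 - A) in the non-saturated regime.
    error_bar = 2.0 * math.sqrt(accuracy * (1.0 - accuracy) / n_rounds)
    return privacy, error_bar


random_guess = privacy_score(0.5, 100)     # attacker no better than chance
perfect_attack = privacy_score(1.0, 100)   # attacker always right
```

Capping at 1 means an attacker that does worse than chance does not earn the Defender a score above full privacy.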

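The Evaluator also uses $\mathcal{R}$ to evaluate the utility of the Defender model $\mathcal{M}$. We focus on multi-class classification for c classes and measure utility with the classification accuracy $A_{\mathcal{M}}$ of $\mathcal{M}$ on $\mathcal{R}$, defining utility as:

$$\text{Utility} = \max\left\{0,\ \frac{c\,A_{\mathcal{M}} - 1}{c - 1}\right\} \tag{2}$$

so that random guessing (accuracy $1/c$) yields zero utility and perfect accuracy yields a utility of one.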

Although the LTU Attacker is all-knowledgeable, we still need to endow it with an algorithm to make membership predictions. In Figure 2, we propose a taxonomy of LTU Attackers. In each LTU round, let $u_1$ and $u_2$ be the samples that were deprived of their labels. The taxonomy has two branches:

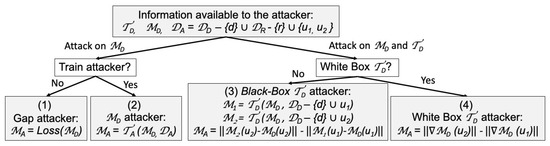

Figure 2.

Taxonomy of LTU Attackers. Top: any LTU Attacker has available the Defender trainer $\mathcal{T}$, the trained Defender model $\mathcal{M}$, and attack data including (almost) all the Defender data $\mathcal{D}$ and Reserved data $\mathcal{R}$. However, it may use only part of this available knowledge to conduct attacks. r and d are two labeled examples belonging to $\mathcal{R}$ and $\mathcal{D}$, respectively, and $u_1$ and $u_2$ are two unlabeled examples, one from $\mathcal{D}$ and one from $\mathcal{R}$ (ordered randomly). Bottom Left: the attacker targets only the trained Defender model $\mathcal{M}$. Bottom Right: the attacker targets both $\mathcal{M}$ and its trainer $\mathcal{T}$.

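- Attack on $\mathcal{M}$ alone: (1) Simply use a generalization Gap-attacker, which classifies $u_1$ as belonging to $\mathcal{D}$ if the loss of $\mathcal{M}$ on $u_1$ is smaller than that on $u_2$ (works well if $\mathcal{M}$ overfits $\mathcal{D}$); (2) Train an $\mathcal{M}$-attacker $\mathcal{A}_{\mathcal{M}}$ to predict membership, using as input any internal state or the output of $\mathcal{M}$, and using the labeled attack data as training data. Then use $\mathcal{A}_{\mathcal{M}}$ to predict the labels of $u_1$ and $u_2$.

- Attack on $\mathcal{M}$ and $\mathcal{T}$: Depending on whether or not the Defender trainer $\mathcal{T}$ is a white box from which gradients can be computed, define $\mathcal{A}_{\mathcal{M},\mathcal{T}}$ by: (3) Training two mock Defender models $\mathcal{M}_1$ and $\mathcal{M}_2$ with the trainer $\mathcal{T}$, one using the labeled Defender data together with $u_1$ and the other using the labeled Defender data together with $u_2$. If $\mathcal{T}$ is deterministic and independent of sample ordering, either $\mathcal{M}_1$ or $\mathcal{M}_2$ should be identical to $\mathcal{M}$; otherwise, one of them should be “closer” to $\mathcal{M}$. The sample corresponding to the model closest to $\mathcal{M}$ is classified as being a member of $\mathcal{D}$. (4) Performing one gradient learning step with either $u_1$ or $u_2$ using $\mathcal{T}$, starting from the trained model $\mathcal{M}$, and comparing the gradient norms.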
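Attack (4) can be sketched for the simplest white-box case. This is a hypothetical illustration, assuming a binary logistic-regression Defender model with weight vector `w` trained with the cross-entropy loss; the function names are ours, and the experiments in this paper use deep networks instead. Since one gradient step of size η starting from $\mathcal{M}$ moves the parameters by η times the gradient, comparing the step lengths amounts to comparing gradient norms.

```python
import numpy as np


def logistic_grad(w: np.ndarray, x: np.ndarray, y: int) -> np.ndarray:
    """Gradient w.r.t. w of the cross-entropy loss of a logistic model on one
    sample (x, y), with y in {0, 1}."""
    p = 1.0 / (1.0 + np.exp(-(x @ w)))
    return (p - y) * x


def gradient_norm_attack(w: np.ndarray, u1, u2) -> int:
    """Return 0 if u1, or 1 if u2, is predicted to be the Defender sample:
    the sample with the smaller gradient norm at the trained weights (i.e.,
    the one the model already fits better) is predicted to be the member."""
    g1 = np.linalg.norm(logistic_grad(w, *u1))
    g2 = np.linalg.norm(logistic_grad(w, *u2))
    return 0 if g1 <= g2 else 1  # ties go to u1 in this sketch


# A well-fit sample (label 1, high score) vs. a poorly fit one (label 0).
w = np.array([2.0, -1.0])
fit_sample = (np.array([3.0, 0.0]), 1)
misfit_sample = (np.array([3.0, 0.0]), 0)
pred = gradient_norm_attack(w, fit_sample, misfit_sample)
```

Like the Gap-attacker (1), this rule exploits the fact that training samples tend to sit closer to a minimum of the loss, but it reads that information from the gradients rather than from the loss value itself.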

A variety of Defender strategies might be considered:

- Applying over-fitting prevention (regularization) to $\mathcal{T}$;

- Applying Differential Privacy algorithms to $\mathcal{T}$;

- Training in a semi-supervised way (with transfer learning) or using synthetic data (generated with a simulator trained with a subset of $\mathcal{D}$);

- Modifying $\mathcal{T}$ to optimize both utility and privacy.

4. Theoretical Analysis of Naïve Attackers

We present several theorems outlining weaknesses of the Defender that are particularly easy to exploit by a black-box LTU Attacker, without training a sophisticated attack model (we refer to such attackers as “naïve”). First, we prove, in the context of the LTU procedure, theorems related to an already known result connecting privacy and over-fitting: Defender trainers that overfit the Defender data lend themselves to easy attacks [8]. The attacker can simply exploit the loss function of the Defender, which is expected to be larger on Reserved data than on Defender data. The last theorem concerns deterministic trainers $\mathcal{T}$: we show that the LTU Attacker can defeat them with 100% accuracy under mild assumptions. Thus, Defenders must introduce some randomness into their training algorithm to be robust against such attacks [23].

Throughout this analysis, we use the fact that our LTU methodology simplifies the work of the LTU Attacker, since it is always presented with pairs of samples of which exactly one is in the Defender data. This can give it very simple attack strategies. For example, for any real-valued function $f(x)$, with $x \in \mathcal{D} \cup \mathcal{R}$, let r be drawn uniformly from $\mathcal{R}$ and d be drawn uniformly from $\mathcal{D}$, and define:

$$p_R = \Pr[f(r) > f(d)], \qquad p_D = \Pr[f(d) > f(r)]$$

Thus, $p_R$ is the probability that the discriminant function f “favors” Reserved data, while $p_D$ is the probability with which it favors the Defender data. $p_R > p_D$ occurs if, for a larger number of random pairs, the value of f is larger for the Reserved sample than for the Defender sample. If the probability of a tie is zero, then $p_R + p_D = 1$.

Theorem 1.

If there is any function f for which $p_R > p_D$, an LTU Attacker exploiting that function can achieve an accuracy $A_{LTU} = \frac{1}{2} + \frac{1}{2}(p_R - p_D) > \frac{1}{2}$.

Proof.

A simple attack strategy would be to predict that the unlabeled sample with the smaller value of $f$ belongs to the Defender data, with ties (i.e., when $f(u_1) = f(u_2)$) decided by tossing a fair coin. This strategy gives a correct prediction when $f(r) > f(d)$, which occurs with probability $p_R$, and is correct half of the time when $f(r) = f(d)$, which occurs with probability $1 - p_R - p_D$. This gives a classification accuracy:

$$A_{LTU} = p_R + \frac{1}{2}(1 - p_R - p_D) = \frac{1}{2} + \frac{1}{2}(p_R - p_D)$$

□

This is similar to the threshold adversary of [8], except that the LTU Attacker does not need to know the exact conditional distributions, since it can discriminate pairwise. The most obvious candidate function f is the loss function used to train $\mathcal{M}$ (we call this a naïve attacker), but the LTU Attacker can mine the information available to it to potentially find more discriminative functions, or multiple functions to bag, and use its labeled attack data to compute very good estimates of $p_R$ and $p_D$. We can verify that an LTU Attacker using a function f that makes perfect membership predictions (e.g., having knowledge of the entire Defender dataset and using the nearest neighbor method) would get $A_{LTU} = 1$ if there are no ties. Indeed, in that case, $p_R = 1$ and $p_D = 0$.
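The pairwise strategy of Theorem 1, together with the estimation of $p_R$ and $p_D$, can be checked numerically. This simulation is only an illustration: the exponential distributions of f values are invented for the example and do not come from any Defender model, and the function names are hypothetical. With these distributions the Defender values are stochastically smaller, so the measured attack accuracy should agree with $\frac{1}{2} + \frac{1}{2}(p_R - p_D)$ up to sampling noise.

```python
import numpy as np


def estimate_pr_pd(f_reserved: np.ndarray, f_defender: np.ndarray):
    """Empirical p_R = P[f(r) > f(d)] and p_D = P[f(d) > f(r)] over all pairs."""
    diff = f_reserved[:, None] - f_defender[None, :]
    return float(np.mean(diff > 0)), float(np.mean(diff < 0))


def pairwise_attack(f_u1: float, f_u2: float, rng: np.random.Generator) -> int:
    """Theorem 1 strategy: predict that the sample with the smaller f is the
    Defender sample (0 for u1, 1 for u2); ties are broken by a fair coin."""
    if f_u1 < f_u2:
        return 0
    if f_u2 < f_u1:
        return 1
    return int(rng.integers(2))


rng = np.random.default_rng(0)
# Synthetic values of f, e.g. per-sample losses (illustrative only).
f_d = rng.exponential(0.5, size=2000)  # Defender: smaller on average
f_r = rng.exponential(1.0, size=2000)  # Reserved
p_r, p_d = estimate_pr_pd(f_r, f_d)

n_rounds, correct = 20000, 0
for _ in range(n_rounds):
    d, r = rng.choice(f_d), rng.choice(f_r)
    swap = rng.random() < 0.5  # random presentation order
    u1, u2 = (r, d) if swap else (d, r)
    correct += pairwise_attack(u1, u2, rng) == int(swap)
accuracy = correct / n_rounds
predicted = 0.5 + 0.5 * (p_r - p_d)
```

For these two exponential distributions, $p_R$ is close to 2/3, so the attacker reaches roughly 2/3 accuracy without knowing either distribution explicitly.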

In our second theorem, we show that the LTU Attacker can attain a lower bound on accuracy, connected to overfitting, analogous to that of the bounded loss function (BLF) adversary of [8].

Theorem 2.

If the loss function $\ell(x)$ used to train the Defender model is bounded for all x, without loss of generality $0 \le \ell(x) \le 1$ (since loss functions can always be rescaled), and if $e_R$, the expected value of the loss function on the Reserved data, is larger than $e_D$, the expected value of the loss function on the Defender data, then a lower bound on the accuracy of the LTU Attacker is given by the following function of the generalization error gap $e_R - e_D$:

$$A_{LTU} \geq \frac{1}{2} + \frac{e_R - e_D}{2}$$

Proof.

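If the order of the pair $(u_1, u_2)$ is random and the loss function satisfies $0 \le \ell(x) \le 1$, then the LTU Attacker could predict $u_1 \in \mathcal{D}$ with probability $\frac{1 + \ell(u_2) - \ell(u_1)}{2}$, by drawing $z \sim \mathcal{U}(0, 1)$ and predicting $u_1 \in \mathcal{D}$ if $z < \frac{1 + \ell(u_2) - \ell(u_1)}{2}$, and $u_2 \in \mathcal{D}$ otherwise. This gives the desired lower bound, derived in more detail in Appendix A:

$$A_{LTU} \geq \mathbb{E}_{d,r}\left[\frac{1 + \ell(r) - \ell(d)}{2}\right] = \frac{1}{2} + \frac{e_R - e_D}{2}$$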

□

This is only a lower bound on the accuracy of the attacker, connected to the main difficulty in machine learning: overfitting of the loss function. Other attack strategies may be more accurate. Moreover, neither of the attack strategies in Theorems 1 and 2 dominates the other, as shown in Appendix B. The strategy in Theorem 1 is more widely applicable, since it does not require the function f to be bounded.
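The randomized pairwise rule below is one way to realize the bound of Theorem 2; it is our reading of the strategy in the proof, not the authors' code. Whichever order the pair is presented in, the rule is correct with probability $\frac{1 + \ell(r) - \ell(d)}{2}$, so its expected accuracy equals $\frac{1}{2} + \frac{e_R - e_D}{2}$. The Beta-distributed losses and the function name are invented for the illustration.

```python
import numpy as np


def randomized_loss_attack(loss_u1: float, loss_u2: float,
                           rng: np.random.Generator) -> int:
    """For losses in [0, 1], predict u1 (return 0) as the Defender sample with
    probability (1 + loss(u2) - loss(u1)) / 2, and u2 (return 1) otherwise."""
    p_u1 = 0.5 * (1.0 + loss_u2 - loss_u1)
    return 0 if rng.random() < p_u1 else 1


rng = np.random.default_rng(1)
# Synthetic bounded losses (illustrative only): Beta-distributed in [0, 1].
loss_d = rng.beta(2, 5, size=5000)  # Defender losses, mean 2/7
loss_r = rng.beta(3, 4, size=5000)  # Reserved losses, mean 3/7
e_d, e_r = loss_d.mean(), loss_r.mean()

n_rounds, correct = 30000, 0
for _ in range(n_rounds):
    d, r = rng.choice(loss_d), rng.choice(loss_r)
    swap = rng.random() < 0.5  # random presentation order
    u1, u2 = (r, d) if swap else (d, r)
    correct += randomized_loss_attack(u1, u2, rng) == int(swap)
accuracy = correct / n_rounds
bound = 0.5 + 0.5 * (e_r - e_d)
```

Because this particular rule attains the bound exactly in expectation, the simulated accuracy should match `bound` up to sampling noise; a better attacker can only do better.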

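In the special case when the loss function $\ell$ used to train the Defender model is the 0–1 loss, and that loss is also used to attack (i.e., $f = \ell$), the strategies in Theorems 1 and 2 are different but have the same accuracy. Writing $e_R = \Pr_{u \sim \mathcal{R}}[\ell(u) = 1]$ and $e_D = \Pr_{u \sim \mathcal{D}}[\ell(u) = 1]$, with r and d drawn independently:

$$\begin{aligned}
p_R &= \Pr[\ell(r) = 1]\,\Pr[\ell(d) = 0] = e_R\,(1 - e_D),\\
p_D &= \Pr[\ell(d) = 1]\,\Pr[\ell(r) = 0] = e_D\,(1 - e_R),\\
A_{LTU} &= \frac{1}{2} + \frac{1}{2}(p_R - p_D) = \frac{1}{2} + \frac{1}{2}(e_R - e_D).
\end{aligned}$$

Note that u is a dummy variable. The first line of the derivation is due to the fact that the only way the loss on the Reserved set can be greater than the loss on the Defender set is if the loss on the Reserved set is 1, which has probability $e_R$, and the loss on the Defender set is 0, which has probability $1 - e_D$. The second line is derived similarly.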
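The 0–1 loss identity can also be checked with exact rational arithmetic. In this sketch (the function name is hypothetical), r and d are drawn independently and $e_R$, $e_D$ are the error rates on Reserved and Defender data; since $e_R(1 - e_D) - e_D(1 - e_R) = e_R - e_D$, both strategies reach the same accuracy.

```python
from fractions import Fraction


def zero_one_accuracies(e_r: Fraction, e_d: Fraction):
    """Accuracies of the Theorem 1 and Theorem 2 strategies when the 0-1 loss
    is used both for training and for attacking (f = loss)."""
    p_r = e_r * (1 - e_d)  # loss(r) = 1 and loss(d) = 0
    p_d = e_d * (1 - e_r)  # loss(d) = 1 and loss(r) = 0
    acc_thm1 = Fraction(1, 2) + (p_r - p_d) / 2
    acc_thm2 = Fraction(1, 2) + (e_r - e_d) / 2
    return acc_thm1, acc_thm2


# Exact comparison of the two accuracies on a grid of error rates.
grid = [Fraction(k, 20) for k in range(21)]
all_equal = all(a == b for a, b in (zero_one_accuracies(er, ed)
                                    for er in grid for ed in grid))
```

Using `Fraction` instead of floats makes the equality test exact rather than approximate.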

Theorem 3.

If the Defender trainer is deterministic, invariant to the order of the training data, and injective, then the LTU Attacker has an optimal attack strategy, which achieves perfect accuracy.

Proof.

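The proof uses the fact that the LTU Attacker knows all of the Defender dataset except one sample, and knows that the missing sample is either $u_1$ or $u_2$. Therefore, the attack strategy is to create two models, one trained on $u_1$ combined with the rest of the Defender dataset, and the other trained on $u_2$ combined with the rest of the Defender dataset. Since the Defender trainer is deterministic and order-invariant, one of those two models will match the Defender model and, by injectivity, the other will not, revealing which unlabeled sample belonged in the Defender dataset.

Formally, denote the subset of labeled “Defender” samples as $\mathcal{D} - \{d\}$, and the two membership-unlabeled samples as $u_1$ and $u_2$. The attacker can use the Defender trainer $\mathcal{T}$ with the same hyper-parameters on $(\mathcal{D} - \{d\}) \cup \{u_1\}$ to produce model $\mathcal{M}_1$ and on $(\mathcal{D} - \{d\}) \cup \{u_2\}$ to produce model $\mathcal{M}_2$.

By definition of the LTU Attacker, the missing sample d is either $u_1$ or $u_2$, and $u_1 \neq u_2$, so the two training sets $(\mathcal{D} - \{d\}) \cup \{u_1\}$ and $(\mathcal{D} - \{d\}) \cup \{u_2\}$ differ. There are two possible cases. If $d = u_1$, then $(\mathcal{D} - \{d\}) \cup \{u_1\} = \mathcal{D}$, so that $\mathcal{M}_1 = \mathcal{T}(\mathcal{D}) = \mathcal{M}$, since $\mathcal{T}$ is deterministic and invariant to the order of the training data. However, $(\mathcal{D} - \{d\}) \cup \{u_2\} \neq \mathcal{D}$, since $u_2 = r \notin \mathcal{D}$, so $\mathcal{M}_2 \neq \mathcal{M}$, since $\mathcal{T}$ is also injective. Therefore, the LTU Attacker can know, with no uncertainty, that $u_1$ has membership label “Defender” and $u_2$ has membership label “Reserved”. The other case, $d = u_2$, follows by a symmetric argument. □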
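The retraining attack of Theorem 3 (method (3) in Figure 2) can be sketched with a trainer that satisfies its hypotheses. Here closed-form ridge regression is a hypothetical stand-in for the Defender trainer: it is deterministic and order-invariant, and adding a different sample generically changes its solution. Because floating-point sums depend slightly on sample order, the sketch selects the closest mock model rather than testing exact equality. All names and the synthetic data are ours.

```python
import numpy as np


def ridge_trainer(X: np.ndarray, y: np.ndarray, lam: float = 1.0) -> np.ndarray:
    """Deterministic, order-invariant trainer: closed-form ridge regression."""
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)


def retrain_attack(trainer, model, X_rest, y_rest, u1, u2) -> int:
    """Retrain on the labeled Defender data plus each unlabeled sample and
    return 0 (u1) or 1 (u2) for the sample whose mock model is closest to
    the released Defender model."""
    distances = []
    for x_u, y_u in (u1, u2):
        mock = trainer(np.vstack([X_rest, x_u]), np.append(y_rest, y_u))
        distances.append(np.linalg.norm(mock - model))
    return int(np.argmin(distances))


rng = np.random.default_rng(0)
w_true = rng.normal(size=5)
X_def, X_res = rng.normal(size=(200, 5)), rng.normal(size=(100, 5))
y_def = X_def @ w_true + 0.1 * rng.normal(size=200)
y_res = X_res @ w_true + 0.1 * rng.normal(size=100)
model = ridge_trainer(X_def, y_def)  # released Defender model

correct, n_rounds = 0, 50
for _ in range(n_rounds):
    i, j = rng.integers(200), rng.integers(100)
    X_rest, y_rest = np.delete(X_def, i, axis=0), np.delete(y_def, i)
    d, r = (X_def[i], y_def[i]), (X_res[j], y_res[j])
    swap = rng.random() < 0.5  # random presentation order
    u1, u2 = (r, d) if swap else (d, r)
    correct += retrain_attack(ridge_trainer, model, X_rest, y_rest, u1, u2) == int(swap)
accuracy = correct / n_rounds
```

In this toy setting the attack identifies the Defender sample in every round, illustrating why a deterministic trainer offers no protection against an LTU Attacker.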

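Under the hypotheses above, the LTU Attacker achieves the optimal Bayesian classifier using:

$$\Pr\big[u_1 \in \mathcal{D}\big] = \begin{cases} 1, & \text{if } \mathcal{T}\big((\mathcal{D} - \{d\}) \cup \{u_1\}\big) = \mathcal{M},\\ 0, & \text{otherwise,} \end{cases} \qquad \Pr\big[u_2 \in \mathcal{D}\big] = 1 - \Pr\big[u_1 \in \mathcal{D}\big]$$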

5. Data and Experimental Setting

We use three datasets in our experiments: CIFAR-10 [24], QMNIST [25], and Location-30 [2]. CIFAR-10 is an object classification dataset with 10 different classes, well known as a benchmark for membership inference attacks [2,11,26]. QMNIST [25] is a handwritten digit recognition dataset, preprocessed similarly to the well-known MNIST [27], but including the whole original NIST Special Database 19 data (https://www.nist.gov/srd/nist-special-database-19 accessed on 4 May 2022) (402,953 images). QMNIST includes metadata that MNIST lacks, including writer IDs and writer origin (high-school students or Census Bureau employees), which could be used in future studies of attribute or property inference attacks; these metadata are not used in this work. Location-30 [2] was created from “check-ins” on the Foursquare social network. It is a sample of 5010 users with 446 binary features, grouped into 30 clusters that serve as class labels.

To speed up our experiments, we preprocessed the data using a backbone neural network pretrained on another dataset, and used the representation of its penultimate layer. For QMNIST, we used VGG19 [28] pretrained on ImageNet [29]. For CIFAR-10, we relied on EfficientNetV2 [30] pretrained on ImageNet-21k and fine-tuned on CIFAR-100.

The data were then split as follows: The 402,953 QMNIST images were shuffled, then separated into 200,000 samples for Defender data and 202,953 for Reserved data. The CIFAR-10 data were also shuffled and split evenly (30,000/30,000 approximately).
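The authors' code for creating the splits is in their repository; the following is only an independent sketch of a shuffled Defender/Reserved index split, with a hypothetical function name and seed.

```python
import numpy as np


def split_defender_reserved(n_samples: int, n_defender: int, seed: int = 0):
    """Shuffle sample indices and split them into disjoint Defender and
    Reserved index sets (the first n_defender shuffled indices go to Defender)."""
    rng = np.random.default_rng(seed)
    perm = rng.permutation(n_samples)
    return perm[:n_defender], perm[n_defender:]


# QMNIST sizes from the text: 402,953 images -> 200,000 Defender / 202,953 Reserved.
defender_idx, reserved_idx = split_defender_reserved(402_953, 200_000)
```

Splitting a single permutation guarantees that the two sets are disjoint and together cover all samples.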

6. Results

6.1. Black-Box Attacker

For our first set of experiments, we trained various algorithms of the scikit-learn library (accessed on 4 May 2022) as Defender models and evaluated their utility and privacy based on two subsets of data: (1) 1600 random examples from the Defender (training) data and (2) 1600 random examples from the Reserved data (used to evaluate utility and privacy). We performed independent LTU rounds and then computed Privacy from the LTU membership classification accuracy through Equation (1). The utility of the model was obtained with Equation (2). We used a black-box LTU Attacker (number (3) in Figure 2). The results shown in Table 1 are averaged over 3 trials (code is available at https://github.com/JiangnanH/ppml-workshop/blob/master/generate_table_v1.py accessed on 4 May 2022). The first few lines (shaded in gray) are deterministic methods, whose trainer yields the same model regardless of random seeds and sample order. For these lines, consistent with Theorem 3, Privacy is zero in all columns. (Results may vary depending on which scikit-learn output method is used: predict_proba(), decision_function(), density_function(), or predict(). To obtain zero Privacy, consistent with the theory, the method predict() should be avoided.) The algorithms use default scikit-learn hyper-parameter values.

In the first two result columns, the Defender trainers are forced to be deterministic by seeding all random number generators. In the first column, the sample order is fixed to the order used by the Defender trainer, while in the second column it is not. Privacy in the first column is near zero, consistent with the theory. In the second column, this is also verified for methods that are independent of sample order. The third result column corresponds to varying the random seed; hence, algorithms that include some level of randomness have an increased level of privacy.

Table 1.

Utility and privacy on QMNIST and CIFAR-10 of different scikit-learn models with three levels of randomness: original sample order + fixed random seed (no randomness); random sample order + fixed random seed; random sample order + random seed. The Defender data and Reserved data both have 1600 examples. All numbers shown in the table have at least two significant digits (standard error lower than 0.004). For model implementations, we use scikit-learn (version 0.24.2) with default values. Shaded in gray: fully deterministic models with Privacy $= 0$.

The results of Table 1 show that there is no difference between columns 2 and 3, suggesting that the randomness associated with altering the order of the training samples is by itself enough to make the attack strategy fail. These results also expose a limitation of black-box attacks: example-based methods (e.g., SVC), which store examples in the model, obviously violate privacy. However, this is not detected by a black-box LTU Attacker if they are properly regularized and/or involve some degree of randomness. White-box attackers solve this problem.

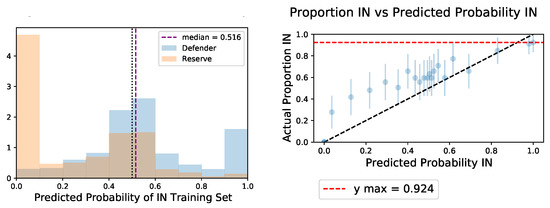

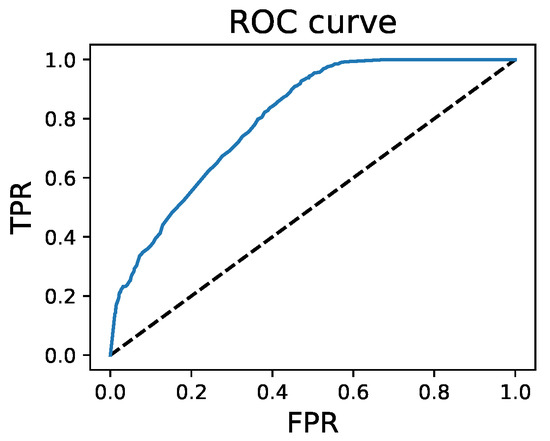

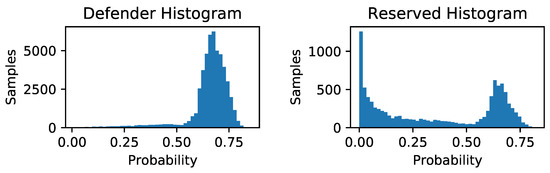

In our next set of experiments, we attack a neural network classifier trained on Location-30 and defended with MemGuard [31]. We again use a “naïve” black-box attack (method (3) in Figure 2). This time, our attack program uses the Defender model trainer to train many candidate models on random splits of half of the data; IN predictions are then made on the half used for training, and OUT predictions on the other half. These are used to create, for each sample, distributions of the predicted logit of the true class when the sample was IN or OUT of the training set. From these, we apply Bayes' theorem to infer membership from the predictions of the Defender model. We find that even this straightforward black-box attack in the LTU framework can predict the membership of some samples with high confidence, as can be seen in Figure 3. The AUROC of the membership predictions was 0.8, and the TPR was much higher than the FPR in the low-FPR regime (Figure 4), indicating that the membership of some samples can be inferred with very low error.
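The Bayes step described above can be sketched per sample. This illustration rests on an assumption of ours: the IN and OUT logit distributions are modelled as Gaussians fitted to the candidate-model predictions (the text does not specify the density model), and a prior of 1/2 matches the LTU setting, in which each unlabeled sample is equally likely to be a member. The function name and the synthetic logits are hypothetical.

```python
import numpy as np
from statistics import NormalDist


def membership_posterior(logit_obs: float, logits_in, logits_out,
                         prior_in: float = 0.5) -> float:
    """P(IN | observed true-class logit) by Bayes' theorem, with Gaussian
    likelihoods fitted to the logits collected when the sample was IN or
    OUT of the candidate models' training halves."""
    eps = 1e-6  # keeps the standard deviations strictly positive
    dist_in = NormalDist(float(np.mean(logits_in)), float(np.std(logits_in)) + eps)
    dist_out = NormalDist(float(np.mean(logits_out)), float(np.std(logits_out)) + eps)
    joint_in = dist_in.pdf(logit_obs) * prior_in
    joint_out = dist_out.pdf(logit_obs) * (1.0 - prior_in)
    total = joint_in + joint_out
    return prior_in if total == 0.0 else joint_in / total


rng = np.random.default_rng(0)
logits_in = rng.normal(5.0, 1.0, size=32)   # synthetic IN logits
logits_out = rng.normal(0.0, 1.0, size=32)  # synthetic OUT logits
p_high = membership_posterior(5.0, logits_in, logits_out)
p_low = membership_posterior(0.0, logits_in, logits_out)
```

Samples whose IN and OUT distributions are well separated receive posteriors close to 0 or 1, which is the kind of high-confidence prediction visible in Figure 3.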

Figure 3.

Left: Histograms of predicted probabilities of membership IN the Defender set, for the Defender set and the Reserved set, each of size 1000. Right: Scatter plot comparing the actual proportions of being IN the Defender set with the predicted probabilities of membership IN the Defender set, for groups of 40 samples with similar predictions (with error bars for proportions). Many Defender samples are correctly predicted to be members with high confidence.

Figure 4.

The ROC curve for the membership predictions from a model trained on Location-30 and defended by MemGuard. The AUROC was 0.8, and the TPR was much higher than the FPR in the low FPR regime.

6.2. White-Box Attacker

We implemented a white-box attacker based on gradient calculations (method (4) in Figure 2). We evaluated the effect of the proposed attack on QMNIST with two types of Defender models: a deep neural network (DNN) trained either with supervised learning or with unsupervised domain adaptation (UDA) [32] (code is available at https://github.com/JiangnanH/ppml-workshop#white-box-attacker accessed on 4 May 2022).

For supervised learning, we used ResNet50 [33], pre-trained on ImageNet [29], as the backbone neural network. We then retrained all its layers on the Defender set of QMNIST. The results are reported in Table 2, line “Supervised”. With the very large Defender dataset used for training (200,000 examples), regardless of variations of the regularization hyper-parameters, we could not make ResNet50 overfit. Consequently, both utility and privacy are good.

Table 2.

Utility and privacy of DNN ResNet50 Defender models trained on QMNIST.

In an effort to improve Privacy further, we used unsupervised domain adaptation (UDA). To that end, we used as the source domain a synthetic dataset similar to MNIST, called Large Fake MNIST [34]. Large Fake MNIST has 50,000 white-on-black images for each digit, which results in 500,000 images in total. The target domain is the Defender set of QMNIST. The chosen UDA method is DSAN [32,35], which optimizes the neural network with the sum of a cross-entropy loss (classification loss) and a local MMD loss (transfer loss) [35]. We tried three variants of attacks on this UDA model. The simplest was the most effective: attacking the model as if it had been trained with supervised learning. Unfortunately, UDA did not yield improved performance. We attribute this to the fact that the supervised model under attack performs well on this dataset and already has a very good level of privacy.

7. Discussion and Further Work

Although an LTU Attacker is all-knowledgeable, it must make efficient use of the available information to be powerful. We proposed a taxonomy based on the information available or used (Figure 2). The most powerful Attackers use both the trained Defender model $\mathcal{M}$ and its trainer $\mathcal{T}$.

When the Defender trainer is a black box, as in our first set of experiments on scikit-learn algorithms, we see clear limitations of the LTU Attacker, including the fact that it is not possible to diagnose whether the algorithm is example-based.

Unfortunately, white-box attacks cannot be conducted in a generic way, but must be tailored to the trainer (e.g., gradient descent algorithms for MLPs). In contrast, black-box methods can attack $\mathcal{M}$ (and $\mathcal{T}$) regardless of their mechanism. Still, we obtain necessary conditions for privacy protection by analyzing black-box methods. Both theoretical and empirical results using black-box attackers (on a broad range of algorithms of the scikit-learn library on the QMNIST and CIFAR-10 data) indicate that Defender algorithms are vulnerable to an LTU Attacker if they overfit the Defender training data or if they are deterministic. Additionally, the degree of stochasticity of the algorithm must be sufficient to obtain a desired level of privacy.

We explored white-box attacks on neural networks trained with gradient descent. In our experiments on the large QMNIST dataset (200,000 training examples), deep CNNs such as ResNet seem to exhibit both good utility and good privacy in their “native form”, according to our white-box attacker. We were pleasantly surprised by our white-box attack results but, in light of the fact that other authors found similar networks vulnerable to attack [1], we conducted the following sanity check. We repeated the same supervised learning experiment after changing a fraction of the class labels (each to another, randomly chosen class label) in both the Defender set and the Reserved set. Then we induced the neural network to overfit the Defender set. Although the training accuracy (on Defender data) was still nearly perfect, we observed a loss of test accuracy (on Reserved data). According to Theorem 2, this should result in a loss of privacy. This allowed us to verify that our white-box attacker correctly detected a loss of privacy: indeed, we obtained a privacy of 0.55.
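The label-corruption step of this sanity check can be sketched as follows. The corrupted fraction used in the experiment is not given here, so `fraction` is a free parameter, and the function name and seed are hypothetical. Adding a random offset in $\{1, \ldots, c-1\}$ modulo c guarantees that every corrupted label changes, and picks the new class uniformly among the other $c - 1$ classes.

```python
import numpy as np


def corrupt_labels(y: np.ndarray, n_classes: int, fraction: float, seed: int = 0):
    """Return (noisy labels, corrupted indices): a given fraction of labels is
    replaced by a different class chosen uniformly at random."""
    rng = np.random.default_rng(seed)
    y_noisy = y.copy()
    n_flip = int(round(fraction * len(y)))
    idx = rng.choice(len(y), size=n_flip, replace=False)
    # An offset in {1, ..., c-1} modulo c always yields a different class.
    offsets = rng.integers(1, n_classes, size=n_flip)
    y_noisy[idx] = (y[idx] + offsets) % n_classes
    return y_noisy, idx


y = np.arange(1000) % 10  # toy labels for 10 classes
y_noisy, flipped = corrupt_labels(y, n_classes=10, fraction=0.2)
```

Applying the same corruption to both the Defender and Reserved sets keeps them identically distributed, so any gap the attacker exploits comes from overfitting rather than from a distribution shift.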

We are in the process of conducting comparison experiments between our white-box attacker and that of [1]. However, their method does not easily lend itself to use within the LTU framework, because it requires training a neural network for each LTU round (i.e., on each attack dataset). We are considering using only one data split, with one part of the data used to train the attacker and the rest of the data used for privacy evaluation. However, we can still use the pairwise testing of the LTU methodology, i.e., the Evaluator queries the attacker with pairs of samples, one from the Defender data and the other from the Reserved data. In Appendix C, we show with an example that this results in increased attacker accuracy.

In Appendix C, we use the same example to illustrate how we can visualize the privacy protection of individuals. Further work includes comparing this approach with [6].

Further work also includes testing the LTU Attacker on a wider variety of datasets and algorithms, varying the number of training examples, training new white-box attack variants to possibly increase the power of the attacker, and testing various means of improving the robustness of algorithms against attacks by the LTU Attacker. We are also in the process of designing a competition on membership inference attacks.

8. Conclusions

In summary, we presented an apparatus for evaluating the robustness of machine learning models (Defenders) against membership inference attacks, involving an “all-knowledgeable” LTU Attacker. This attacker has access to the trained model of the Defender, its learning algorithm (trainer), all the Defender data used for training minus the membership label of one sample, and all the similarly distributed non-training Reserved data (used for evaluation) minus the membership label of one sample. The Evaluator repeats this Leave-Two-Unlabeled (LTU) procedure for many sample pairs to compute the efficacy of the Attacker, whose charter is to predict the membership of the unlabeled samples (training or non-training data). The LTU framework helped us analyze privacy vulnerabilities both theoretically and experimentally.

The main conclusions of this paper are that a number of conditions are necessary for a Defender to protect privacy:

- Avoid storing examples (a weakness of example-based methods, such as Nearest Neighbors);

- Ensure that $p_R = p_D$ for all $f$, following Theorem 1 ($p_R$ is the probability that the discriminant function $f$ “favors” the Reserved data, while $p_D$ is the probability with which it favors the Defender data);

- Ensure that $e_R = e_D$, following Theorem 2 ($e_R$ is the expected value of the loss on the Reserved data and $e_D$ its expected value on the Defender data);

- Include some randomness in the Defender trainer algorithm, following Theorem 3.

Author Contributions

Conceptualization, All; Methodology, All; Software, J.P., R.M.-G., J.H. and H.S.; Validation, J.P., R.M.-G., J.H. and H.S.; Formal analysis, J.P.; Investigation, J.P., R.M.-G., J.H. and H.S.; Resources, I.G.; Data curation, J.P., R.M.-G., J.H. and H.S.; Writing—original draft preparation, All; Writing—review and editing, J.P., R.M.-G., H.S., I.G. and W.-W.T.; Visualization, J.P. and I.G.; Supervision, I.G. and W.-W.T.; Project administration, I.G. and W.-W.T.; Funding acquisition, I.G. and W.-W.T.; All authors have read and agreed to the published version of the manuscript.

Funding

This work is funded in part by the ANR (Agence Nationale de la Recherche, National Agency for Research) under AI chair of excellence HUMANIA, grant number ANR-19-CHIA-0022.

Data Availability Statement

The QMNIST dataset can be obtained at https://github.com/facebookresearch/qmnist (accessed on 4 May 2022). The CIFAR-10 dataset can be obtained at https://www.cs.toronto.edu/~kriz/cifar.html (accessed on 4 May 2022). Our code used to create the training/test splits of the data is available at https://github.com/JiangnanH/ppml-workshop (accessed on 4 May 2022).

Acknowledgments

We are grateful to our colleagues Kristin Bennett and Jennifer He for stimulating discussion.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Derivation of the Proof of Theorem 2

If the loss function $\ell(x)$ used to train the Defender model is bounded for all $x$, without loss of generality $0 \le \ell(x) \le 1$ (since loss functions can always be rescaled), and if $e_R$, the expected value of the loss function on the Reserved data, is larger than $e_D$, the expected value of the loss function on the Defender data, then a lower bound on the expected accuracy of the LTU Attacker is given by the following function of the generalization error $e_R - e_D$:

$$\mathbb{E}[\mathrm{Acc}_{\mathrm{LTU}}] \ge \frac{1}{2} + \frac{e_R - e_D}{2}.$$

Proof.

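If the order of the pair is random and the loss function is bounded in $[0, 1]$, then the LTU Attacker can predict the membership of the pair correctly with probability $\frac{1}{2} + \frac{\ell(r) - \ell(d)}{2}$, where $d$ and $r$ denote the unlabeled Defender and Reserved samples. Writing $(x_1, x_2)$ for the two unlabeled samples in the order received, the attacker draws $z \sim \mathcal{U}(0, 1)$ and predicts that $x_1$ is the Reserved sample if $z < \frac{1}{2} + \frac{\ell(x_1) - \ell(x_2)}{2}$, and that $x_1$ is the Defender sample otherwise. If $x_1 = r$, the prediction is correct with probability $\frac{1}{2} + \frac{\ell(r) - \ell(d)}{2}$; if $x_1 = d$, it is correct when $z \ge \frac{1}{2} + \frac{\ell(d) - \ell(r)}{2}$, which happens with the same probability. Since $0 \le \ell \le 1$, the threshold lies in $[0, 1]$, so these are valid probabilities. This gives the desired lower bound on the expected accuracy, derived as follows:

When $r$ is drawn uniformly from the Reserved data, then:

$$\mathbb{E}_r[\ell(r)] = e_R.$$

Similarly, when $d$ is drawn uniformly from the Defender data, then:

$$\mathbb{E}_d[\ell(d)] = e_D.$$

Substituting in these expected values gives:

$$\mathbb{E}[\mathrm{Acc}] = \frac{1}{2} + \frac{\mathbb{E}_r[\ell(r)] - \mathbb{E}_d[\ell(d)]}{2} = \frac{1}{2} + \frac{e_R - e_D}{2},$$

and since the LTU Attacker can do at least as well as this particular strategy, $\mathbb{E}[\mathrm{Acc}_{\mathrm{LTU}}] \ge \frac{1}{2} + \frac{e_R - e_D}{2}$.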

□
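The strategy used in the proof can be checked numerically. The sketch below is our own illustration (the function names are hypothetical): it computes, with exact rational arithmetic, the expected accuracy of the randomized loss-based strategy over all Defender/Reserved pairs and both presentation orders, and compares it with $\frac{1}{2} + \frac{e_R - e_D}{2}$, where $e_R$ and $e_D$ are the mean losses on the Reserved and Defender samples. It assumes losses already rescaled to $[0, 1]$ and samples drawn uniformly from each set.

```python
from fractions import Fraction
from itertools import product
from typing import Sequence


def randomized_strategy_accuracy(defender_losses: Sequence[Fraction],
                                 reserved_losses: Sequence[Fraction]) -> Fraction:
    """Exact expected accuracy of the randomized loss-based strategy.

    For the unlabeled pair (x1, x2), in the order received, the attacker draws
    z ~ U(0, 1) and predicts "x1 is Reserved" iff z < 1/2 + (loss(x1) - loss(x2)) / 2.
    The Defender and Reserved samples are drawn uniformly and the order is random.
    """
    if any(not 0 <= v <= 1 for v in (*defender_losses, *reserved_losses)):
        raise ValueError("losses must be rescaled to [0, 1]")
    half = Fraction(1, 2)
    total = Fraction(0)
    for ld, lr in product(defender_losses, reserved_losses):
        # Order (r, d): correct iff z < 1/2 + (lr - ld) / 2.
        correct_r_first = half + (lr - ld) / 2
        # Order (d, r): correct iff z >= 1/2 + (ld - lr) / 2.
        correct_d_first = 1 - (half + (ld - lr) / 2)
        total += (correct_r_first + correct_d_first) / 2  # each order has prob. 1/2
    return total / (len(defender_losses) * len(reserved_losses))


def theorem2_bound(defender_losses: Sequence[Fraction],
                   reserved_losses: Sequence[Fraction]) -> Fraction:
    """1/2 + (e_R - e_D) / 2, with e_R and e_D the mean Reserved and Defender losses."""
    e_d = Fraction(sum(defender_losses)) / len(defender_losses)
    e_r = Fraction(sum(reserved_losses)) / len(reserved_losses)
    return Fraction(1, 2) + (e_r - e_d) / 2


d = [Fraction(0), Fraction(1, 2)]          # illustrative Defender losses
r = [Fraction(3, 10), Fraction(2, 5)]      # illustrative Reserved losses
print(randomized_strategy_accuracy(d, r), theorem2_bound(d, r))  # 11/20 11/20
```

Both functions return 11/20 (0.55) on these illustrative losses: this particular strategy attains the bound exactly, so any stronger attack strategy can only raise the LTU Attacker's accuracy above it.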

Appendix B. Non-Dominance of Either Strategy in Theorem 1 or Theorem 2

Here we present two simple examples to show that neither of the strategies in Theorems 1 and 2 dominates the other.

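For example 1, assume that for a Defender sample $d$ the loss function takes the two values 0 or 0.5 with equal probability, and that for a Reserved sample $r$ the loss function takes the two values 0.3 or 0.4 with equal probability. Then $e_R = 0.35 > e_D = 0.25$, so that the strategy of Theorem 2 achieves an expected accuracy of at least $\frac{1}{2} + \frac{e_R - e_D}{2} = 0.55$. However, $p_R = \Pr[\ell(r) > \ell(d)] = 0.5$ and $p_D = \Pr[\ell(d) > \ell(r)] = 0.5$, so $p_R = p_D$ and the strategy of Theorem 1 with $f = \ell$ gains nothing over random guessing.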
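The comparison in example 1 can be reproduced with the sketch below, an illustration under stated assumptions rather than the authors' code. Defender and Reserved losses are modeled as independent discrete distributions given as {loss value: probability} dictionaries; the Theorem 1 strategy is instantiated with $f = \ell$, predicting that the higher-loss sample is the Reserved one and breaking ties at random (accuracy $p_R + (1 - p_R - p_D)/2$); and the randomized strategy from the proof of Theorem 2 is summarized by $\frac{1}{2} + \frac{e_R - e_D}{2}$. Example 1 is encoded with the values 0 and 0.5 on the Defender side and 0.3 and 0.4 on the Reserved side.

```python
from fractions import Fraction as F
from itertools import product
from typing import Dict, Tuple

Pmf = Dict[F, F]  # loss value -> probability (losses assumed independent)


def compare_strategies(defender: Pmf, reserved: Pmf) -> Tuple[F, F, F, F]:
    """Return (p_R, p_D, loss-comparison accuracy, randomized-strategy accuracy)."""
    p_r = p_d = F(0)
    for (ld, pd), (lr, pr) in product(defender.items(), reserved.items()):
        if lr > ld:
            p_r += pd * pr  # the Reserved sample has the higher loss
        elif ld > lr:
            p_d += pd * pr  # the Defender sample has the higher loss
    # f = loss: call the higher-loss sample Reserved, flip a coin on ties.
    comparison_acc = p_r + (1 - p_r - p_d) / 2
    # Randomized strategy from the proof of Theorem 2 (losses in [0, 1]).
    e_d = sum(v * p for v, p in defender.items())
    e_r = sum(v * p for v, p in reserved.items())
    randomized_acc = F(1, 2) + (e_r - e_d) / 2
    return p_r, p_d, comparison_acc, randomized_acc


# Example 1, assuming the Defender losses are {0, 0.5} and the Reserved losses {0.3, 0.4}.
defender = {F(0): F(1, 2), F(1, 2): F(1, 2)}
reserved = {F(3, 10): F(1, 2), F(2, 5): F(1, 2)}
print(compare_strategies(defender, reserved))
```

On these values the loss comparison yields $p_R = p_D = 1/2$ and an accuracy of 1/2, whereas the randomized strategy reaches 11/20 = 0.55. Independence also holds for Table A1, whose entries are the products of their row and column sums, so the same function applies once each axis of that table is assigned to the Defender or Reserved losses.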

For example 2, the joint probability mass function below can be used to show that the attack strategy of Theorem 1 outperforms that of Theorem 2.

Table A1.

Example joint PMF of the bounded loss function for a Defender sample and a Reserved sample. The attack strategy in Theorem 1 outperforms the attack strategy in Theorem 2 on these data.


| | 0 | 1/2 | 1 | Row Sum |
|---|---|---|---|---|
| | 0.24 | 0.24 | 0.12 | 0.6 |
| | 0.12 | 0.12 | 0.06 | 0.3 |
| | 0.04 | 0.04 | 0.02 | 0.1 |
| Column Sum | 0.4 | 0.4 | 0.2 | |

Appendix C. LTU Global and Individual Privacy Scores

The following small example illustrates that the pairwise prediction accuracy in the LTU methodology is not a function of the accuracy, false positive rate, or false negative rate of predictions made on individual samples.

Consider a discriminant function trained to predict the probability that a sample is in the Reserved set (with individual predictions made using a threshold of 0.5), and, for simplicity, Defender and Reserved sets with three samples each, such that:

If c is either , , or , then in all three cases the overall accuracy for individual sample predictions is , and the false positive rate and false negative rate are both . However, in the LTU methodology, the LTU Attacker would obtain 9 different pairs to predict, and for those values of c, its accuracy would be , , or , respectively.
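The following sketch illustrates the same phenomenon with hypothetical scores of our own choosing. Scores are predicted probabilities of belonging to the Reserved set; a sample is predicted Reserved when its score is at least 0.5, and Reserved is treated as the positive class. The hypothetical Defender scores are 0.1, 0.2 and c, and the hypothetical Reserved scores 0.6, 0.7 and 0.4.

```python
from fractions import Fraction
from itertools import product
from typing import Sequence, Tuple


def individual_metrics(defender: Sequence[float], reserved: Sequence[float],
                       threshold: float = 0.5) -> Tuple[Fraction, Fraction, Fraction]:
    """Accuracy, FPR and FNR of per-sample predictions (Reserved = positive class)."""
    fp = sum(s >= threshold for s in defender)  # Defender samples predicted Reserved
    fn = sum(s < threshold for s in reserved)   # Reserved samples predicted Defender
    n_d, n_r = len(defender), len(reserved)
    accuracy = Fraction(n_d - fp + n_r - fn, n_d + n_r)
    return accuracy, Fraction(fp, n_d), Fraction(fn, n_r)


def ltu_pairwise_accuracy(defender: Sequence[float], reserved: Sequence[float]) -> Fraction:
    """Fraction of (Defender, Reserved) pairs in which the Reserved sample scores higher.

    This is the accuracy of an LTU Attacker that calls the higher-scoring sample of
    each pair Reserved; ties are counted as a coin flip (1/2).
    """
    correct = Fraction(0)
    for d, r in product(defender, reserved):
        if r > d:
            correct += 1
        elif r == d:
            correct += Fraction(1, 2)
    return correct / (len(defender) * len(reserved))


reserved_scores = [0.6, 0.7, 0.4]  # hypothetical Reserved scores
for c in (0.55, 0.65, 0.9):
    defender_scores = [0.1, 0.2, c]  # hypothetical Defender scores
    print(c, individual_metrics(defender_scores, reserved_scores),
          ltu_pairwise_accuracy(defender_scores, reserved_scores))
```

For c = 0.55, 0.65 and 0.9, the individual predictions keep an accuracy of 2/3 and false positive and false negative rates of 1/3, while the pairwise LTU accuracy drops from 8/9 to 7/9 to 2/3: only the pairwise view reflects how the Defender sample with score c is ranked relative to the Reserved samples.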

We used the ML Privacy Meter Python library of Shokri et al. (https://github.com/privacytrustlab/ml_privacy_meter, accessed on 4 May 2022) to run their attack against AlexNet, which achieved an attack accuracy of 74.9%. Their attack model predicts the probability that each sample was in the Defender dataset. The histograms of the predictions over the Defender and Reserved datasets follow:

Figure A1.

Histogram of predicted probabilities.

Using their attack model predictions in the LTU methodology, so that the attacker is always shown pairs of points of which exactly one comes from the Defender dataset, increased the overall attack accuracy from 74.9% to 81.3%.

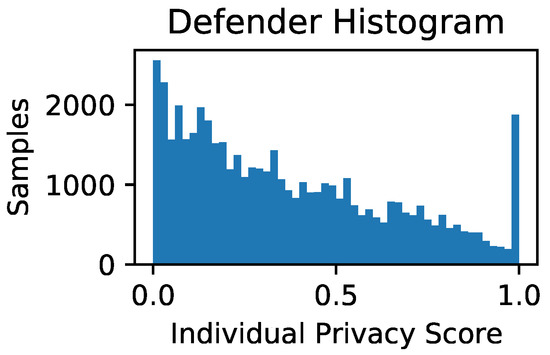

Furthermore, by evaluating the accuracy of the attacker on each Defender sample individually (against all Reserved samples), we computed easy-to-interpret individual privacy scores for each sample in the Defender dataset:

Figure A2.

Histogram of individual privacy scores for each sample in the Defender dataset.
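A sketch of how per-sample membership probabilities, such as those output by an attack model, can be turned into the pairwise LTU accuracy and into per-sample scores is given below. It assumes that in each pair the attacker calls the sample with the higher membership probability the Defender sample, with ties counted as 1/2, and it takes the accuracy of the attacker on a Defender sample against all Reserved samples as that sample's score. The function name and the example probabilities are hypothetical, and this is not the ML Privacy Meter API.

```python
from bisect import bisect_left, bisect_right
from typing import List, Sequence, Tuple


def ltu_scores_from_membership_probs(
        defender_probs: Sequence[float],
        reserved_probs: Sequence[float]) -> Tuple[float, List[float]]:
    """Pairwise LTU accuracy and per-Defender-sample scores.

    In each (Defender, Reserved) pair the attacker calls the sample with the
    higher predicted membership probability the Defender sample; ties are
    counted as a coin flip (1/2).
    """
    ranked = sorted(reserved_probs)
    n_r = len(ranked)
    per_sample = []
    for p in defender_probs:
        below = bisect_left(ranked, p)          # Reserved samples scored lower: correct
        ties = bisect_right(ranked, p) - below  # equal scores: coin flip
        per_sample.append((below + 0.5 * ties) / n_r)
    # Each Defender sample is paired with every Reserved sample, so the mean of the
    # per-sample scores is the fraction of correctly resolved pairs.
    overall = sum(per_sample) / len(per_sample)
    return overall, per_sample


# Hypothetical attack-model outputs (probability of membership in the Defender data).
overall, scores = ltu_scores_from_membership_probs([0.9, 0.5, 0.2], [0.1, 0.5, 0.3])
print(overall, scores)
```

A Defender sample whose score is close to 1 is ranked above nearly every Reserved sample and is therefore poorly protected. Sorting the Reserved probabilities once and using binary search keeps the cost at O((n_D + n_R) log n_R) instead of comparing all pairs explicitly.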

References

- Nasr, M.; Shokri, R.; Houmansadr, A. Comprehensive privacy analysis of deep learning: Passive and active white-box inference attacks against centralized and federated learning. In Proceedings of the 2019 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 19–23 May 2019; pp. 739–753.

- Shokri, R.; Stronati, M.; Song, C.; Shmatikov, V. Membership inference attacks against machine learning models. In Proceedings of the 2017 IEEE Symposium on Security and Privacy (SP), San Jose, CA, USA, 22–24 May 2017; pp. 3–18.

- Li, N.; Qardaji, W.; Su, D.; Wu, Y.; Yang, W. Membership privacy: A unifying framework for privacy definitions. In Proceedings of the 2013 ACM SIGSAC Conference on Computer & Communications Security, Berlin, Germany, 4–8 November 2013; pp. 889–900.

- Long, Y.; Bindschaedler, V.; Gunter, C.A. Towards measuring membership privacy. arXiv 2017, arXiv:1712.09136.

- Thudi, A.; Shumailov, I.; Boenisch, F.; Papernot, N. Bounding Membership Inference. arXiv 2022, arXiv:2202.12232.

- Song, L.; Mittal, P. Systematic evaluation of privacy risks of machine learning models. In Proceedings of the 30th USENIX Security Symposium (USENIX Security 21), Virtual Event, 11–13 August 2021; pp. 2615–2632.

- Jayaraman, B.; Wang, L.; Knipmeyer, K.; Gu, Q.; Evans, D. Revisiting membership inference under realistic assumptions. arXiv 2020, arXiv:2005.10881.

- Yeom, S.; Giacomelli, I.; Fredrikson, M.; Jha, S. Privacy risk in machine learning: Analyzing the connection to overfitting. In Proceedings of the 2018 IEEE 31st Computer Security Foundations Symposium (CSF), Oxford, UK, 9–12 July 2018; pp. 268–282.

- Truex, S.; Liu, L.; Gursoy, M.E.; Yu, L.; Wei, W. Demystifying membership inference attacks in machine learning as a service. IEEE Trans. Serv. Comput. 2019, 14, 2073–2089.

- Hayes, J.; Melis, L.; Danezis, G.; Cristofaro, E.D. LOGAN: Membership Inference Attacks Against Generative Models. arXiv 2018, arXiv:1705.07663.

- Hilprecht, B.; Härterich, M.; Bernau, D. Reconstruction and Membership Inference Attacks against Generative Models. arXiv 2019, arXiv:1906.03006.

- Chen, D.; Yu, N.; Zhang, Y.; Fritz, M. GAN-Leaks: A taxonomy of membership inference attacks against generative models. In Proceedings of the 2020 ACM SIGSAC Conference on Computer and Communications Security, Virtual Event, 9–13 November 2020; pp. 343–362.

- Dwork, C.; McSherry, F.; Nissim, K.; Smith, A. Calibrating noise to sensitivity in private data analysis. In Theory of Cryptography Conference; Springer: Berlin, Germany, 2006; pp. 265–284.

- Abadi, M.; Chu, A.; Goodfellow, I.; McMahan, H.B.; Mironov, I.; Talwar, K.; Zhang, L. Deep learning with differential privacy. In Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security, Vienna, Austria, 24–28 October 2016; pp. 308–318.

- Xie, L.; Lin, K.; Wang, S.; Wang, F.; Zhou, J. Differentially private generative adversarial network. arXiv 2018, arXiv:1802.06739.

- Ganin, Y.; Ustinova, E.; Ajakan, H.; Germain, P.; Larochelle, H.; Laviolette, F.; Marchand, M.; Lempitsky, V. Domain-adversarial training of neural networks. J. Mach. Learn. Res. 2016, 17, 2030–2096.

- Nasr, M.; Shokri, R.; Houmansadr, A. Machine learning with membership privacy using adversarial regularization. In Proceedings of the 2018 ACM SIGSAC Conference on Computer and Communications Security, Toronto, ON, Canada, 15–19 October 2018; pp. 634–646.

- Huang, H.; Luo, W.; Zeng, G.; Weng, J.; Zhang, Y.; Yang, A. DAMIA: Leveraging Domain Adaptation as a Defense against Membership Inference Attacks. IEEE Trans. Dependable Secur. Comput. 2021.

- Nasr, M.; Song, S.; Thakurta, A.; Papernot, N.; Carlini, N. Adversary Instantiation: Lower Bounds for Differentially Private Machine Learning. In Proceedings of the 2021 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 24–27 May 2021.

- Sablayrolles, A.; Douze, M.; Schmid, C.; Ollivier, Y.; Jégou, H. White-box vs black-box: Bayes optimal strategies for membership inference. In Proceedings of the International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019; pp. 5558–5567.

- Liu, X.; Xu, Y.; Tople, S.; Mukherjee, S.; Ferres, J.L. MACE: A flexible framework for membership privacy estimation in generative models. arXiv 2020, arXiv:2009.05683.

- Guyon, I.; Makhoul, J.; Schwartz, R.; Vapnik, V. What size test set gives good error rate estimates? IEEE Trans. Pattern Anal. Mach. Intell. 1998, 20, 52–64.

- Dwork, C.; McSherry, F.; Nissim, K.; Smith, A. Calibrating Noise to Sensitivity in Private Data Analysis. J. Priv. Confidentiality 2017, 7, 17–51.

- Krizhevsky, A. Learning Multiple Layers of Features from Tiny Images; Technical Report TR-2009; University of Toronto: Toronto, ON, Canada, 2009.

- Yadav, C.; Bottou, L. Cold Case: The Lost MNIST Digits. In Advances in Neural Information Processing Systems 32; Curran Associates, Inc.: Red Hook, NY, USA, 2019.

- Rahman, M.A.; Rahman, T.; Laganière, R.; Mohammed, N.; Wang, Y. Membership Inference Attack against Differentially Private Deep Learning Model. Trans. Data Priv. 2018, 11, 61–79.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Tan, M.; Le, Q.V. EfficientNetV2: Smaller models and faster training. arXiv 2021, arXiv:2104.00298.

- Jia, J.; Salem, A.; Backes, M.; Zhang, Y.; Gong, N.Z. MemGuard: Defending against Black-Box Membership Inference Attacks via Adversarial Examples; CCS’19; Association for Computing Machinery: New York, NY, USA, 2019; pp. 259–274.

- Wang, J.; Hou, W. DeepDA: Deep Domain Adaptation Toolkit. Available online: https://github.com/jindongwang/transferlearning/tree/master/code/DeepDA (accessed on 4 May 2022).

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Sun, H.; Tu, W.W.; Guyon, I.M. OmniPrint: A Configurable Printed Character Synthesizer. arXiv 2022, arXiv:2201.06648.

- Zhu, Y.; Zhuang, F.; Wang, J.; Ke, G.; Chen, J.; Bian, J.; Xiong, H.; He, Q. Deep Subdomain Adaptation Network for Image Classification. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 1713–1722.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).