Abstract

The finite-difference method is widely used in seismic wave numerical simulation, imaging, and waveform inversion. In the finite-difference method, the finite difference operator is used to replace the differential operator approximately, which can be obtained by truncating the spatial convolution series. The properties of the truncated window function, such as the main and side lobes of the window function’s amplitude response, determine the accuracy of finite-difference, which subsequently affects the seismic imaging and inversion results significantly. Although numerical dispersion is inevitable in this process, it can be suppressed more effectively by using higher precision finite-difference operators. In this paper, we use the krill herd algorithm, in contrast with the standard PSO and CDPSO (a variant of PSO), to optimize the finite-difference operator. Numerical simulation results verify that the krill herd algorithm has good performance in improving the precision of the differential operator.

1. Introduction

Seismic wave numerical modeling is a widely used method to simulate the propagation of seismic waves in a known geological landscape. So far, it has been used in seismic exploration and seismology, e.g., earthquake disaster prediction [1], seismic performance assessment of roads, buildings, bridges, and other materials [2,3], seismic oceanography [4], ship detection, and identification [5].

The wave equation is of fundamental importance for describing the propagation of seismic waves [6]. The methods of seismic wave numerical modeling based on the wave equation include the finite-difference (FD) method [7], the finite element method [8,9], and the pseudo spectrum method [10]. The FD method, first presented in 1968 [7], simulates the propagation of seismic waves in various media of the earth and thus can help predicate what happens during the propagation process [11]. Compared to other techniques, the FD method is more efficient and easily implemented [6].

Generally, the wave equation of seismic wave propagation is a partial differential equation. The FD method transforms the continuous problem into the discrete problem, and one can obtain the approximate solution of the original wave equation by solving the difference equation. As the pseudospectral method is considered as the highest order finite-difference scheme [12], we shall calculate the finite-difference operator by truncating the convolution series of the pseudospectral method with the window function. However, the discretization of wave equation inevitably introduces numerical dispersion, which deteriorates the accuracy of seismic wave simulation. The window function method and optimization method are two essential ways to suppress numerical dispersion.

The size of the window function grid plays an influential role in the modeling precision. Although the refined difference grids offer better performance, sometimes, coarse difference grids are preferably used to reduce the computational cost. For this reason, choosing a suitable window function is demanding in practice. The traditional window functions include Hanning [13], Gaussian [14], Kaiser [15], and so on. In the past decades, some new window functions were also reported in the literature. Wang et al. [16] proposed a Chebyshev auto-convolution combined window. Zheng et al. [17] developed a cosine modulated Chebyshev window. Wang and Hong [18] constructed a new window function by combining the cosine function with the original window function.

Optimizing the difference operator is another strategy. In this category, the Newton method, the least squares method, and the swarm intelligence algorithm are frequently used. For example, Zhang and Yao [19] applied the simulated annealing algorithm to search for the operator that best satisfies the constraints. Z.Y. Wang et al. [20] used the improved particle swarm optimization (PSO) algorithm to optimize the finite-difference operator. Ren et al. [21] developed the least-squares method to optimize the staggered-grid finite-difference operator.

Both the window function and optimization method aim to find the optimal window function coefficients. The difference is that window functions are directly designed parameters, while optimization methods generate the optimal coefficients through the iteration. In this paper, we use the nature-inspired optimization (NIO) algorithms to find the fittest finite-difference coefficients. The NIO algorithms are widely used to solve the optimal solution problem in continuous and discrete space. In this paper, we implement the krill herd algorithm (KH), which is a recently proposed NIO algorithm, the PSO, and the center–decenter PSO, which is a variant of PSO. We derive the finite-difference operator through sinc function interpolation and Taylor series expansions. We define the objective function as the error function of the first and second derivatives. Compared with Wang’s Chebyshev auto-convolution combined window, all the NIO algorithms show outstanding performances in seismic wave numerical simulation. Among them, KH exhibits greater stability at most orders.

The rest of the paper is structured as follows. Section 2 introduces the finite-difference method and deduces the objective function of the optimization method. The seismic numerical modeling and the three NIO algorithms are also introduced in this section. Section 3 presents the numerical simulations. Section 4 concludes the paper.

2. Methods

2.1. Finite-Difference Method

We start with a brief introduction of the FD method. Based on studies of Chu and Stoffa [22] and Bai et al. [23], we describe the derivation process of FD operators as follows. First, the FD operators can be derived by the sinc interpolator. According to Nyquist’s theorem, a continuous signal with limited bandwidth can be reconstructed by interpolating the normalized sinc function. Roughly speaking, the FD method represents waveforms with discrete samples.

where is the sampling interval. Thus, is the sampling frequency, and is the infinite uniformly spaced discrete sequence of .

The first and second derivatives of f are derived from Equation (1). The derivation can be simplified by letting (see Appendix A.1).

We use a window function with length to truncate the derivative function Equations (2) and (3). N is an even number, which is related to the accuracy. Windowing is a weighting process that truncates the function of infinite length to [, ]. The FD operators can be calculated as:

Chu and Stoffa [22] pointed out that in the conventional FD operator is a binomial constant coefficient, and they referred to the conventional as a binomial window. One method to obtain a better FD operator is to design a more suitable function, which requires more control over the parameters. For the optimization algorithms, we need to set an objective function. Consider the coefficients of the function Equations (4) and (5) as a whole:

where

and are the finite-difference coefficients for the first and second derivative, respectively. They are related by .

The FD weights can also be obtained through the Taylor series expansions (Thongyoy and Boonyasiriwat [24]). It reflects the relation between finite-difference operators and the pseudospectral method. The finite-difference operator is formulated as (see Appendix A.2):

where , and are defined similarly as before. , , . Hence, for ,

Performing the Fourier transform on Equations (7) and (8), the data can be transformed from the conventional spatial and time domains to the frequency-wavenumber domain, which is also known as the F-k domain (see Appendix A.3). We obtain the first derivative in the F-k domain:

and the second derivative:

where is the wavenumber corresponding to the spatial derivative and /, which is the variable in the F-k domain.

The truncation error of the first derivative and second derivative can be expressed as:

The FD method replaces the differential in the wave equation with difference, namely, the difference form of the derivative obtained above. To minimize the numerical dispersion introduced by the replacement, we shall find the optimal coefficients and that minimize the truncation errors and . The optimization problem of seismic wave simulation has been transformed into a coefficient optimization problem. Since or can be derived from each other, we attempt to obtain the optimal using the NIO algorithms. Zhang and Yao [19] expressed the objective function of the optimization method as follows:

where is the max wavenumber the FD can reach, and is the error limitation. We list the frequently used symbols as in Table 1.

Table 1.

List of symbols.

2.2. Modeling

We use the elastic wave equation to simulate the seismic wave propagation. The earthquake hypocenter function is given as follows:

where is the dominant frequency, and is the propagation cycle. We set Hz.

The max iteration n is calculated by:

where is the simulation duration and is the discrete time interval. Let s and be ms; then, .

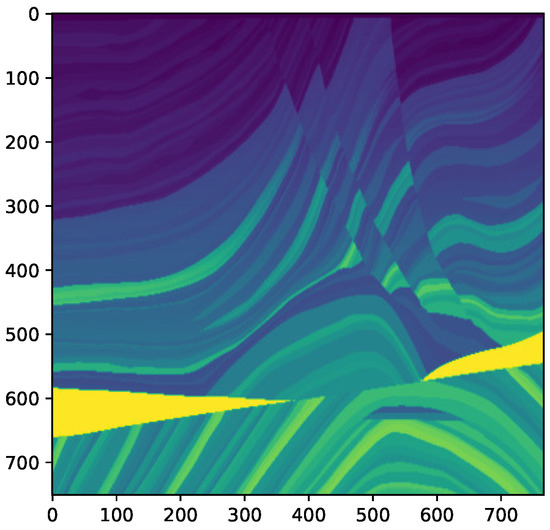

We use the homogeneous medium model and the more complex Marmousi velocity model [25] as the basic model of propagation geology. The Marmousi velocity model is an anisotropic media with very large velocity variations in the horizontal and vertical directions. The grid size of the homogeneous medium model is , and that of Marmousi is , as shown in Figure 1. Different media are distinguished by different colors. The position of the earthquake hypocenter is at the center and that of the receiver is at 50 grids right to the center. The grid spacing is set to 10 m.

Figure 1.

The landscape of the seismic wave propagation. Different colors in the model represent different media which impact the speed of wave propagation.

2.3. Three Optimization Algorithms

We optimize the finite-difference operator by minimizing the difference between the optimizing difference operator and the differential operator. A smaller truncation error means lower numerical dispersion. Next, we briefly introduce the three NIO algorithms: PSO, CDPSO, and KH.

2.3.1. Particle Swarm Optimization

PSO is a well-reputed nature-inspired algorithm proposed by Kennedy and Eberhart [26], which mimics bird flocking or fish schooling. In the PSO algorithm, each agent has two attributes: the position and the velocity. The new position of the agent is updated by the velocity. Each agent has a current fittest position . represents the global fittest position of all agents. The maximum velocity method is used as the update rule [27]. Specifically, the position is updated as follows:

where is the current position of the agents at the ith iteration, and is the velocity at the ith iteration that is given as:

where w is the inertia weight coefficient; and are the acceleration constants of individual and society; and are random numbers between 0 and 1.

2.3.2. Center–Decenter PSO

CDPSO, proposed by Wang [23], is a variant of PSO that has the advantage of avoiding premature convergence and local trap in PSO. It combines two learning strategies: centralized learning and decentralized learning. The agent switches its strategy to update the velocity.

In the centralized learning strategy, the velocity of the ith iteration is updated as follows, and the meaning of the same coefficients is the same as that of PSO.

where L is a random number, which represents the number of partial optimal solutions.

The velocity in decentralized learning is updated as follows:

where is the serial number of the particle, and P is the number of agents in the range of all possible solutions.

2.3.3. Krill Herd Algorithm

The Krill herd (KH) algorithm was proposed in 2012 by Gandomi and Alavi [28]. KH is inspired by the herding behavior of the krills. The position of the ith krill individuals is influenced by three main factors: movement induced by the presence of other individuals , foraging activity , and random diffusion , respectively:

The individual motion is induced by the neighbors within a certain range and the position of the elite individual. The formula for induced speed N is given as follows:

where is the inertia weight, is the direction of the induced, is the influence of neighbors, and is the influence of individuals with optimal fitness values.

The foraging motion is relevant to the location of food and previous experience. The foraging speed F is formulated as follows:

where w is the inertia weight; is the direction of the forage.

The physical diffusion is the random movement behavior of krill populations. The diffusion speed D is as follows:

where is a random value.

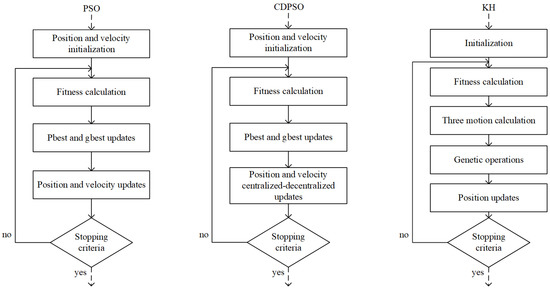

We give the flow charts of the above-mentioned algorithms in Figure 2. PSO and CDPSO have similar processes. The difference between them is that CDPSO alters the updating strategy of the velocity.

Figure 2.

The flow charts of PSO, CDPSO, and KH.

3. Numerical Simulation

3.1. Simulation Setup

The parameters regarding the seismic wave landscape have been given previously. We let population = 30, iteration = 500 for PSO and KH, population = 100, and iteration = 1500 for CDPSO. The coefficients of PSO and CDPSO are assigned as: , , and the velocity is between and .

We use the first-order derivative truncation error in Equation (12) as the objective. The value of E is corresponding to the value of . We denote this one–one relation as . To make the image of coefficient convergence more demonstrative, the above error value is used to calculate the number of agents that meet the requirement of the accuracy. Given the accuracy , the cost function value (CFV) is determined as follows:

where is the cutoff wavenumber, corresponding to the upper limit of . As and the sampling interval is set to , . , then the is an increasing sequence 0, 0.001, ⋯, .

We choose the order N as with for the following tests of the three algorithms.

3.2. Coefficients Convergence

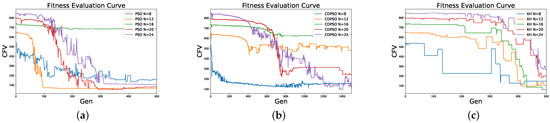

To test the performance of the algorithms, we use CFV in Equation (26) to evaluate the fitness of the solution. The fitness convergence curves of the three NIO algorithms are illustrated in Figure 3. The closer CFV value is to 0, the better the precision of the algorithm is.

Figure 3.

The convergence curves of PSO (a), CDPSO (b), and KH (c).

As illustrated in Figure 3, the KH algorithm with 500 generations shows better accuracy than the CDPSO algorithm with 1500 generations. When the order , the KH algorithm is slightly better than the PSO algorithm. However, when , the KH algorithm converges to a good optimum, while PSO and CDPSO do not converge.

We give the first finite-difference coefficients optimized by the three algorithms in Table 2, Table 3 and Table 4.

Table 2.

The finite-difference coefficients for the first derivative optimized by PSO.

Table 3.

The finite-difference coefficients for the first derivative optimized by CDPSO.

Table 4.

The finite-difference coefficients for the first derivative optimized by KH.

3.3. Stability

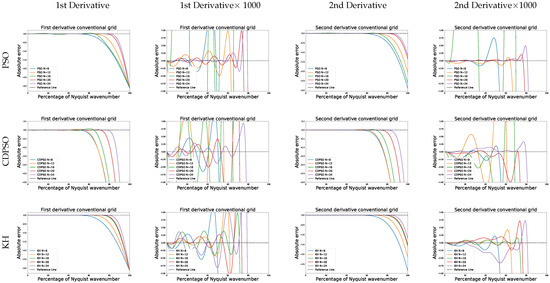

We calculate second coefficients by using the formula mentioned in Section 2.1: (), . Then, we use the absolute error (Equation (12)) and (Equation (13)) to evaluate the stability of the optimized difference operators. The absolute error is illustrated in Figure 4.

Figure 4.

The first and second derivative accuracy of PSO, CDPSO, and KH. The horizontal and vertical coordinates are the percentage of Nyquist wavenumber and absolute error , respectively.

The curve is flat from the beginning. It decreases after the wavenumber reaches about 60. To make it easier to observe, the figure is magnified a thousand times (columns 2 and 4). The closer the curve is to 0, the higher the error stability is. It is observed that the curve fluctuates up and down at 0 and extends abruptly to negative infinity at a certain point. PSO performs well at and 24 but not very well at N and 16. CDPSO is good at , but the overall fluctuation is large. The second derivative error of KH is between plus and minus 0.00075. The KH algorithm shows better stability than PSO and CDPSO. When the percentage of Nyquist wavenumber is less than 40 in the first derivative and less than 50 in the second derivative, the KH algorithm shows excellent stability.

3.4. Model Test

We choose the finite-difference coefficients of the second derivative for wave field simulation. Take Wang’s [16] data as a baseline, which are denoted as ‘source’. They proposed a Chebyshev auto-convolution combined window. The four finite-difference coefficients of the second derivative are illustrated in the Table 5.

Table 5.

Finite-difference coefficients of the second derivative optimized by PSO, CDPSO, and KH ().

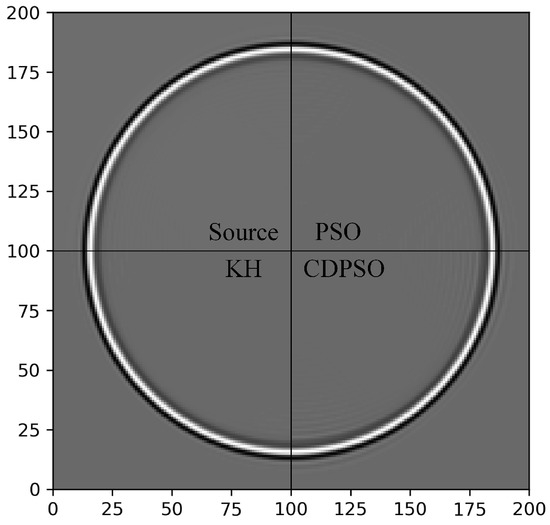

Take the wave field simulation of the homogeneous medium first. With the earthquake hypocenter obtained from Equation (15), we choose the snapshot at 150 ms, as shown in Figure 5. Since the homogeneous medium is isotropic, the entire wave field can be known by looking at the graph in only one direction. Therefore, we stitched the four figures together to facilitate the comparison. The operator with less waviness suppressed the numerical dispersion better, and the parameters calculated are better, too. It is observed that PSO is quite similar to the source, while KH is the best to have less wave. KH has significantly suppressed numerical dispersion.

Figure 5.

Impulse response of CDPSO, KH, PSO, and source in homogeneous medium at 150 ms.

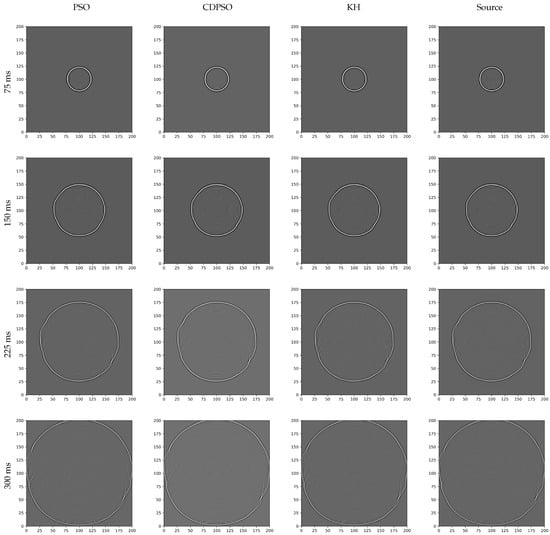

We take the simulation on the Marmousi model shown in Figure 1. Figure 6 gives four wave field snapshots taken at 75 ms intervals.

Figure 6.

The Chebyshev auto-convolution windows of CDPSO, KH, and PSO.

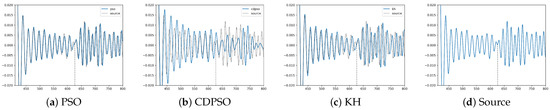

Due to the complexity of the model and the small difference between the optimized parameters, the obtained wave field information is mixed and difficult to distinguish. It is not obvious which one is better. Therefore, we make the single traces figure from the receiver to show the differences. A portion of the trace after the arrival of the main waver is captured and shown in Figure 7. To see the differences more clearly, we zoomed in on the waveform after the arrival of the main frequency. CDPSO has a better suppression of numerical dispersion in the later stages, although the suppression is not as good as the source data in the early stage. The overall trend of PSO is the same as source data, while KH is slightly different from the source in the late stage. It is observed from the wave of about 625 that both KH and PSO have a better suppression effect than the source. KH is much better than CDPSO and slightly better than PSO. The differences caused by parameter optimization become less noticeable as the complexity of the model increases.

Figure 7.

The trace of PSO, CDPSO, KH, and source within 300 ms.

In a nutshell, the KH shows the best performance among the three algorithms.

4. Conclusions

The finite-difference method uses the difference formulas to replace the derivatives approximately. We introduced the process of obtaining the FD operator and set the error as the optimization objective. We used the NIO algorithms to optimize the first and second derivative coefficients. We compared the performance of the three optimization algorithms (PSO, CDPSO, and KH) in terms of convergence and stability, and we compared them with Chebyshev auto-convolution combined window in a model test. Compared with the Chebyshev window, KH and PSO have better performance. Despite the fact that we did not perform further tuning operation, the NIO algorithms still have a good performance in spectral suppression. KH is the most stable algorithm among the three, which performs well at all N orders. It shows promising prospect in optimizing a finite-difference operator in seismic wave numerical modeling.

Author Contributions

Conceptualization, H.L. and Y.F.; methodology, H.L. and Y.F.; software, Z.H.; validation, H.L., Y.F. and Z.H.; formal analysis, Y.F., Z.H. and Q.W.; investigation, H.L., Y.F., Z.H. and M.Z.; resources, H.L., Z.H and Q.W.; data curation, Z.H.; writing—original draft preparation, Y.F., Z.H. and M.Z.; writing—review and editing, H.L., Y.F. and M.Z.; visualization, Z.H.; supervision, H.L.; project administration, H.L.; funding acquisition, H.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Key R&D Program of China (No. 2021YFB3802200).

Data Availability Statement

Data is contained within the article.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. FD Operator

Appendix A.1. The First and Second Derivatives of f

While , . The derivatives can be simplified as:

Appendix A.2. The FD Operator through Taylor’s Expansion

According to Wisart and Chaiwoot [24], the finite-difference formulas for the derivatives can be obtained from Taylor’s expansion.

is a differentiable point on f. is the sampling interval, is the approximate error. Next, we calculate the normal derivative formula.

where are constant coefficients. For the calculation derivative, the constant A should be 0. For first derivative:

where are the finite-difference coefficients for the first derivative. Let ; then, we can get .

For the second derivative

where are the finite-difference coefficients for the second derivative. Let ; then, we can get .

Then, the normal derivative formula is summarized as

where , N is the order, and are the FD coefficients. The higher the N, the more approximate the result.

Appendix A.3. Fourier Transform

The Fourier transform in the wavenumber domain is

where is the wavenumber, and i is the imaginary number.

Hence,

The second derivative is

Hence,

References

- Favorskaya, A.; Petrov, I.; Golubev, V.; Khokhlov, N. Numerical simulation of earthquakes impact on facilities by grid-characteristic method. Matem. Mod. 2017, 112, 1206–1215. [Google Scholar] [CrossRef]

- Sarchi, L.; Varum, H.; Monteiro, R.; Silveira, D. Seismic behavior of two Portuguese adobe buildings: Part II—Numerical modeling and fragility assessment. Int. J. Archit. Herit. 2018, 12, 936–950. [Google Scholar] [CrossRef]

- Chen, X.; Fu, J.; Xue, F.; Wang, X. Comparative Numerical Research on the Seismic Behavior of RC Frames Using Normal and High-Strength Reinforcement. Int. J. Civ. Eng. 2017, 15, 531–547. [Google Scholar] [CrossRef]

- Chen, J.; Tong, S.; Han, T.; Song, H.; Pinheiro, L.; Xu, H.; Azevedo, L.; Duan, M.; Liu, B. Modelling and detection of submarine bubble plumes using seismic oceanography. J. Mar. Syst. 2020, 209, 103375. [Google Scholar] [CrossRef]

- Lu, Z.; Zhang, Z.; Gu, J. Modeling of low-frequency seismic waves in a shallow sea using the staggered grid difference method. Chin. J. Oceanol. Limnol. 2017, 35, 1010–1017. [Google Scholar] [CrossRef]

- Yao, G.; Wu, D.; Debens, H.A. Adaptive finite difference for seismic wavefield modelling in acoustic media. Sci. Rep. 2016, 6, 30302. [Google Scholar] [CrossRef]

- Alterman, Z.; Karal, F. Propagation of elastic waves in layered media by Finite Difference methods. Bull. Seismol. Soc. Am. 1968, 58, 367–398. [Google Scholar]

- Lysmer, J.; Drake, L.A. A Finite Element Method for Seismology. Methods Comput. Phys. Adv. Res. Appl. 1972, 11, 181–216. [Google Scholar]

- SU, B.; Li, H.; Liu, S.; Yang, D. Modified symplectic scheme with finite element method for seismic wavefield modeling. Chin. J. Geophys. 2019, 62, 1440–1452. [Google Scholar]

- Jiang, X.; Adeli, H. Pseudospectra, MUSIC, and dynamic wavelet neural network for damage detection of highrise buildings. Int. J. Numer. Methods Eng. 2007, 71, 606–629. [Google Scholar] [CrossRef]

- Kalyani, V.K.; Sinha, A.; Pallavika; Chakraborty, S.K.; Mahanti, N.C. Finite difference modeling of seismic wave propagation in monoclinic media. Acta Geophys. 2008, 56, 1074–1089. [Google Scholar] [CrossRef]

- Fornberg, B. The pseudospectral method; comparisons with finite differences for the elastic wave equation. Geophysics 1987, 52, 483–501. [Google Scholar] [CrossRef]

- Zhou, B.; Greenhalgh, S. Seismic scalar wave equation modeling by a convolutional differentiator. Bull. Seismol. Soc. Am. 1992, 82, 289–303. [Google Scholar]

- Igel, H.; Mora, P.; Riollet, B. Anisotropic wave propagation through finite-difference grids. Geophysics 1995, 60, 1203–1216. [Google Scholar] [CrossRef]

- Hicks, G.J. Arbitrary source and receiver positioning in finite-difference schemes using Kaiser windowed sinc functions. Geophysics 2002, 67, 156–165. [Google Scholar] [CrossRef]

- Wang, Z.; Liu, H.; Tang, X.D.; Wang, Y. Optimized Finite-Difference Operators Based on Chebyshev Auto-Convolution Combined Window Function. Chin. J. Geophys. 2015, 58, 628–642. [Google Scholar]

- Zheng, W.Q.; Meng, X.H.; Liu, J.H.; Wang, J.H. High precision elastic wave equation forward modeling based on cosine modulated Chebyshev window function. Chin. J. Geophys. 2016, 59, 2650–2662. [Google Scholar]

- Wang, J.; Hong, L. Stable optimization of finite-difference operators for seismic wave modeling. Stud. Geophys. Geod. 2020, 64, 452. [Google Scholar] [CrossRef]

- Zhang, J.H.; Yao, Z.X. Optimized finite-difference operator for broadband seismic wave modeling. Geophysics 2012, 78, A13–A18. [Google Scholar] [CrossRef] [Green Version]

- Wang, Z.Y.; Bai, W.L.; Liu, H. An optimized finite-difference scheme based on the improved PSO algorithm for wave propagation. In SEG Technical Program Expanded Abstracts 2019; Society of Exploration Geophysicists: Houston, TX, USA, 2019. [Google Scholar]

- Ren, Y.J.; Huang, J.P.; Peng, Y.; Liu, M.L.; Chao, C.; Yang, M.W. Optimized staggered-grid finite-difference operators using window functions. Appl. Geophys. 2018, 15, 253–260. [Google Scholar] [CrossRef]

- Chu, C.; Stoffa, P. Determination of finite-difference weights using scaled binomial windows. Geophysics 2012, 77, W17–W26. [Google Scholar] [CrossRef]

- Bai, W.; Wang, Z.; Liu, H.; Yu, D.; Chen, C.; Zhu, M. Optimisation of the finite-difference scheme based on an improved PSO algorithm for elastic modelling. Explor. Geophys. 2020, 52, 419–430. [Google Scholar] [CrossRef]

- Thongyoy, W.; Boonyasiriwat, C. Least-Squares Finite Difference Operator. 2015. Available online: https://mcsc.sc.mahidol.ac.th/publications/reports/2015/2015-01.pdf (accessed on 8 April 2022).

- Lailly, P.; Rocca, F.; Versteeg, R. The Marmousi experience: Synthesis. In Proceedings of the EAEG Workshop-Practical Aspects of Seismic Data Inversion, Copenhagen, Denmark, 28 May–1 June 1990. [Google Scholar] [CrossRef]

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95—International Conference on Neural Networks, Perth, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948. [Google Scholar]

- Eberhart, R.; Kennedy, J. A new optimizer using particle swarm theory. In Proceedings of the Sixth International Symposium on Micro Machine and Human Science, MHS’95, Nagoya, Japan, 4–6 October 1995; pp. 39–43. [Google Scholar]

- Gandomi, A.H.; Alavi, A.H. Krill herd: A new bio-inspired optimization algorithm. Commun. Nonlinear Sci. Numer. Simul. 2012, 17, 4831–4845. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).