Abstract

At present, unsupervised visual representation learning for point cloud models is mainly based on generative methods, but generative methods pay too much attention to the details of each point and thus neglect the learning of semantic information. Therefore, this paper proposes a discriminative method, contrastive learning of three-dimensional point cloud visual representations, which can effectively learn the visual representation of point cloud models. A self-attention point cloud capsule network is designed as the backbone network to effectively extract the features of point cloud data. Compressing the digital capsule layer eliminates the class dependence of the features and improves both the generalization ability of the model and the capacity of the feature queue to store features. To exploit the equivariance of the capsule network, a Jaccard loss function is constructed, which helps the network distinguish the features of positive and negative samples and thereby improves the performance of contrastive learning. The model is pre-trained on the ShapeNetCore data set, and the pre-trained model is used for classification and segmentation tasks. The classification accuracy on the ModelNet40 data set is 0.1% higher than that of the best unsupervised method, PointCapsNet, and the classification accuracy exceeds 80% when only 10% of the labeled data is used. The mIoU of part segmentation on the ShapeNet data set is 1.2% higher than that of the best comparison method, MulUnsupervised. These classification and segmentation results show that the proposed method achieves good accuracy. The alignment and uniformity of its features are better than those of the generative method PointCapsNet, which shows that the method learns the visual representation of three-dimensional point cloud models more effectively.

1. Introduction

A point cloud is a sparse, unordered set of points defined in a coordinate space and sampled from the surfaces of objects to capture their spatial semantic information [1]. Point clouds are obtained with 3D sensors (such as LiDAR scanners and RGB-D cameras) and are used in human–machine interaction [2], autonomous driving [3], and robotics [4], so they have high practical value.

Manually labeling point cloud targets is very expensive, whereas unsupervised visual representation learning can learn effective visual representations of 3D point cloud targets without label information. Therefore, unsupervised visual representation learning of point clouds [5] has become a research hotspot.

Unsupervised visual representation learning of point clouds is mainly based on generative methods. Architectures such as autoencoders and generative adversarial networks have succeeded in learning visual representations of point cloud targets and in generating realistic samples from complex underlying distributions. Yang et al. proposed FoldingNet [6], an end-to-end deep autoencoder that operates directly on unordered point clouds. It introduces a new decoding operation called folding, which achieves a low reconstruction error even for objects with fine structures, and the authors show theoretically that folding is universal for point cloud reconstruction. Achlioptas et al. proposed LatentGAN [7]: an autoencoder is first trained to learn a latent representation, a generative model, l-GAN, is then trained in the fixed latent space, and shape editing is realized through simple algebraic operations in that space, such as semantic part editing, shape analogies, shape interpolation, and shape completion. This latent GAN is easier to train than a GAN operating on raw point clouds and achieves better reconstruction and better coverage of the data distribution.

However, generative point cloud methods are trained by minimizing the difference between the reconstructed point cloud and the original point cloud, so they pay more attention to the details of each point than to abstract semantic information [8]. Point-based analysis also usually assumes that the points are independent of each other, which reduces the ability to model correlations or complex structures.

Discriminative methods in unsupervised representation learning learn visual representations with objective functions similar to those of supervised learning. Compared with generative methods, discriminative methods focus more on the feature space than on the details of each point. They generally train the network on one or more custom pretext tasks [9], whose inputs and labels both come from unlabeled data sets; a pretext task can provide strong supervision signals for semantic information and thus promote its learning. Doersch et al. [5] extracted random pairs of patches from each image and trained a discriminative model to predict their relative positions in the image; experiments show that the representation learned by this within-image context prediction task does capture the visual similarity between images. Komodakis et al. [10] used recognizing the two-dimensional rotation applied to an input image as a pretext task to learn semantic information from images, and showed that the regions attended to by this method coincide with those in the attention maps of a supervised method. Doersch et al. [11] jointly trained a very deep ResNet-101 network on four different pretext tasks. They also explored lasso regularization to encourage the network to factorize the information in its representation, and harmonized the network inputs to learn a more unified representation. The results show that deeper networks perform better and that performance improves even with a naïve multi-head architecture.

As a discriminative method, contrastive learning in the latent space [12] can effectively learn semantic similarity and addresses the neglect of abstract semantic information by generative methods [8]. Contrastive learning has recently shown great promise and achieved unprecedented results in two-dimensional visual representation learning. Hinton et al. proposed the contrastive learning method SimCLR [8]: on ImageNet, a linear classifier trained on its ResNet-50 representations reaches a top-1 accuracy of 76.5%, close to that of a supervised ResNet-50, and it even surpasses some supervised methods on some data sets. However, SimCLR relies on very large batches and deeper networks, which consumes substantial computing resources. Kaiming He et al. proposed the contrastive learning method MoCo [13], which treats contrastive learning as dictionary look-up. It builds a dynamic dictionary with a queue and a moving-average encoder, which makes it possible to construct a large and consistent dictionary while training with small batches, and this has promoted the development of contrastive learning. Xie et al. [14] proposed PointContrast, which performs contrastive learning on a large three-dimensional point cloud scene data set and achieves the best segmentation and classification results on six different benchmarks covering indoor and outdoor, real and synthetic data; their experiments show that the learned representations generalize across domains.

In the field of two-dimensional images, ResNet [15] is mainly used as the backbone network of contrastive learning frameworks for feature extraction. Because point clouds are unordered, the ordering of the points does not affect the properties of an object, so a network that extracts point cloud features must be a symmetric function of its input points; the commonly used ResNet is therefore not suitable for direct application to point clouds. Qi et al. proposed PointNet [16], which uses a symmetric function to handle the disorder of point clouds. Subsequently, Qi et al. proposed PointNet++ [17], which extracts local features of fine geometric structures from point neighborhoods, something PointNet cannot do. PointNet++ has led to the wide adoption of deep learning methods built on it in three-dimensional point cloud processing, and most unsupervised visual representation learning frameworks for point clouds, such as LatentGAN [7], 3DAAE [18], and SO-Net [19], also use networks similar to PointNet++ as the backbone.

However, the scalar features used by PointNet++ do not contain the spatial arrangement information in point cloud data and do not consider the geometric relationships between parts [20], which are very important for interpreting and describing three-dimensional shapes. The capsule network proposed by Hinton et al. [21] replaces the scalar features of CNNs with vector features. Through dynamic routing, the vector features preserve feature information in different dimensions and can retain the geometric relationships between the components of an image. However, dynamic routing in the capsule network impairs robustness to affine transformations of the input [22]. Since the contrastive learning framework generates positive and negative sample pairs from its input through affine transformations, dynamic routing in the capsule network degrades the performance of contrastive learning.

To solve the above problems, this paper proposes a contrastive learning method for three-dimensional point cloud visual representation. Combining the ideas of PointNet++ and the capsule network, a self-attention point cloud capsule network is designed as the backbone of the contrastive learning framework. The FM (factorization machines) routing algorithm [23] replaces the traditional dynamic routing algorithm, so that the network can capture the geometric relationships between components and shows better learning and generalization capabilities. Because FM routing is non-iterative, it also speeds up the computation of the network. A self-attention mechanism [24] is introduced to correlate the capsules that are input to the FM routing algorithm, which, combined with FM routing, improves the network's ability to learn three-dimensional point cloud representations. Compressing the digital capsule layer output by the backbone network increases the number of capsules the queue can store and makes the compressed capsules pay more attention to the transformation operations applied to the sample, which improves the generalization ability of the model. To exploit the equivariance of the capsule network, this paper proposes a Jaccard contrast loss that uses the Jaccard similarity coefficient to describe the similarity between features, which helps the model distinguish positive from negative samples and improves the performance of contrastive learning.

2. Materials and Methods

Inspired by contrastive learning methods for two-dimensional images, this paper proposes a contrastive learning method for learning the visual representation of three-dimensional point cloud models. As a discriminative method, it learns representations by using a contrastive loss to maximize the agreement between the features of different augmented views of the same data example. Compared with mainstream generative methods, it pays more attention to the semantic information of point clouds than to the details of each point. This section has two parts: the first describes the design of the three-dimensional point cloud momentum contrast learning framework in detail, and the second describes the design of a backbone network suited to the characteristics of three-dimensional point cloud data.

2.1. Three-Dimensional Point Cloud Momentum Contrast Learning Framework

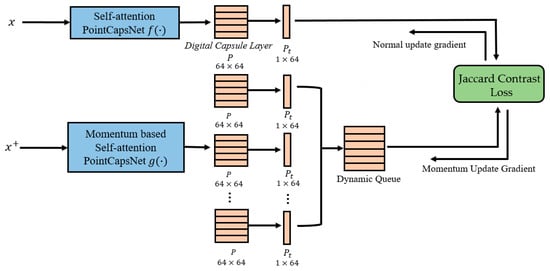

The three-dimensional point cloud momentum contrast learning framework is shown in Figure 1. The framework consists of an upper and a lower branch: a self-attention point cloud capsule network, f, and a momentum-based self-attention point cloud capsule network, g. Both are self-attention point cloud capsule networks with the same structure but different parameters; their design is introduced in Section 2.2. The input of f is a point cloud, and the input of g is a positive sample of that point cloud obtained with the three hybrid data augmentation methods. To keep the features produced by g consistent across iterations, the parameters of g are updated with momentum. To reuse the samples of past mini-batches, a first-in, first-out dynamic feature queue stores the features of positive and negative samples. In each training iteration, the features of the positive samples of the current mini-batch are added to the queue, and as training continues, the earliest features are removed on a first-in, first-out basis. The digital capsule layer output by the backbone network is compressed, which increases the number of features the queue can store and makes the features focus more on point cloud transformation operations. Finally, to account for the equivariance of the capsule network, the Jaccard coefficient is used to measure the similarity between capsules, and the resulting Jaccard contrast loss serves as the loss function of contrastive learning.

Figure 1.

Contrastive learning framework.

2.1.1. Momentum Contrast Learning Algorithm

Algorithm 1 provides pseudocode for the momentum contrast learning algorithm. First, the self-attention point cloud capsule networks f and g are initialized. A mini-batch of point clouds is loaded, and multiple hybrid data augmentation methods (Section 2.1.2) are used to generate positive sample pairs. The positive samples are fed into networks f and g, their output features are compressed (Section 2.1.4), and gradient back-propagation through g is stopped. Then, the Jaccard contrast loss (Section 2.1.5) is computed between the current features and the features stored in the queue, and contrastive learning is carried out by minimizing this loss. The parameters of f are updated with Adam, and the parameters of g are updated with momentum (Section 2.1.3). Finally, the queue is updated: the current features are enqueued and the earliest features are dequeued.

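Algorithm 1 Pseudocode of Momentum Contrast Learning in a PyTorch-like style

```python
# f, g: self-attention point cloud capsule networks (g is the momentum copy of f)
# queue: collections.deque(maxlen=...) of past minibatches of compressed features,
#        assumed pre-filled (e.g. with random features) so that it is never empty
# m: momentum, t: temperature
# MulAug, compress, Jaccard, loader, optimizer (Adam over f.parameters()): defined elsewhere
import torch
import torch.nn.functional as F

g.load_state_dict(f.state_dict())                # initialize g with the parameters of f
for p in g.parameters():
    p.requires_grad = False                      # g is never updated by gradients

for x in loader:                                 # load a minibatch x with N samples
    # use multiple hybrid data augmentation methods to generate positive sample pairs
    x_f = MulAug(x)
    x_g = MulAug(x)

    s_f = compress(f(x_f))                       # compressed digital capsule layer, [N, D]
    with torch.no_grad():                        # no gradient flows into the momentum branch
        s_g = compress(g(x_g))                   # [N, D]

    # Jaccard contrast loss: Jaccard coefficients replace dot products in an InfoNCE loss
    negs = torch.cat(list(queue), dim=0)         # [K, D] features stored in the queue
    J_pos = Jaccard(s_f, s_g)                    # [N], Jaccard reduces the last dimension
    J_neg = Jaccard(s_f.unsqueeze(1), negs.unsqueeze(0))  # [N, K] via broadcasting
    logits = torch.cat([J_pos.unsqueeze(1), J_neg], dim=1) / t
    labels = torch.zeros(logits.size(0), dtype=torch.long, device=logits.device)
    loss = F.cross_entropy(logits, labels)       # -log(exp(J_pos/t) / sum of exp(J/t))

    # Adam update of f
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

    # momentum update of g: g.params = m * g.params + (1 - m) * f.params
    with torch.no_grad():
        for p_g, p_f in zip(g.parameters(), f.parameters()):
            p_g.mul_(m).add_(p_f, alpha=1 - m)

    # update queue: enqueue the current minibatch; deque(maxlen) drops the earliest one
    queue.append(s_g)
```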

2.1.2. Input Pre-Processing

Data augmentation is applied to each point cloud to generate positive and negative sample pairs as the input of the contrastive learning framework. Contrastive learning learns semantic similarity by increasing the feature similarity of positive pairs and decreasing the feature similarity of negative pairs.

This paper uses three hybrid data augmentation methods: rotation, random translation of the point cloud, and random scaling of the point cloud. Experiments verified that combining multiple data augmentation methods significantly improves the linear evaluation of the contrastive learning model. The random rotation rotates each shape about its upward direction. The random translation adds a translation offset to the elements of the point cloud tensor. The random scaling multiplies the elements of the point cloud tensor by a scaling factor. A view obtained by augmenting a point cloud in this way is a positive sample of that point cloud, and views obtained by augmenting other point clouds in the same way are its negative samples.
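The three augmentations can be sketched in plain Python as below. This is a minimal illustration, not the authors' code: `rotate_up`, `translate`, `scale`, and `mul_aug` are hypothetical names (the last mirrors `MulAug` in Algorithm 1), the y axis is assumed to be the up axis, one random offset per axis is assumed for the whole cloud, and the order rotate, translate, scale is also an assumption. The sampling ranges are those listed in Section 3.1.

```python
import math
import random


def rotate_up(points, angle_deg):
    """Rotate every point about the y (assumed up) axis by angle_deg degrees."""
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    return [(c * x + s * z, y, -s * x + c * z) for x, y, z in points]


def translate(points, offset):
    """Add the same (dx, dy, dz) offset to every point."""
    dx, dy, dz = offset
    return [(x + dx, y + dy, z + dz) for x, y, z in points]


def scale(points, factor):
    """Multiply every coordinate by one isotropic scaling factor."""
    return [(factor * x, factor * y, factor * z) for x, y, z in points]


def mul_aug(points, rng):
    """Hypothetical MulAug: random rotation, translation and scaling, combined."""
    angle = rng.choice([60, 120, 180, 240, 300])               # rotation angles (Section 3.1)
    offset = tuple(rng.uniform(-0.1, 0.1) for _ in range(3))   # translation range [-0.1, 0.1]
    factor = rng.uniform(0.8, 1.2)                             # scaling range [0.8, 1.2]
    return scale(translate(rotate_up(points, angle), offset), factor)


rng = random.Random(0)
cloud = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
view_a = mul_aug(cloud, rng)   # two independently augmented views of the same cloud
view_b = mul_aug(cloud, rng)   # form a positive pair; views of other clouds are negatives
```

Rotating only about the up axis keeps the shapes upright, which matches the description of the rotation above.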

2.1.3. Momentum Update Parameters

To reduce the demand on computing resources, a dynamic feature queue is defined to store the features output by the momentum self-attention point cloud capsule network, so that small batches of samples can be used as input. In each iteration, a mini-batch of positive samples, obtained from the point clouds with the three hybrid data augmentation methods, is input to the backbone network to obtain positive sample features, which are then added to the queue. When the queue is full, the earliest features are removed on a first-in, first-out basis.
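A minimal sketch of such a first-in, first-out queue uses Python's `collections.deque` with `maxlen`, which discards the oldest entry automatically once the queue is full. `FeatureQueue` is a hypothetical helper; following the comment in Algorithm 1, capacity is counted in minibatches here, and plain numbers stand in for feature vectors.

```python
from collections import deque


class FeatureQueue:
    """First-in, first-out store of past minibatch features (hypothetical helper)."""

    def __init__(self, max_batches):
        # deque(maxlen=...) silently drops the oldest entry once it is full
        self._batches = deque(maxlen=max_batches)

    def enqueue(self, batch_features):
        self._batches.append(list(batch_features))

    def negatives(self):
        """All stored features, oldest first, flattened into one list."""
        return [feat for batch in self._batches for feat in batch]


queue = FeatureQueue(max_batches=3)
for step in range(5):
    queue.enqueue([step * 10, step * 10 + 1])   # two "features" per minibatch
print(queue.negatives())  # -> [20, 21, 30, 31, 40, 41]: minibatches 0 and 1 were dropped
```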

If g were updated by gradient back-propagation like f, its parameters would change rapidly, and the features it produces in the current iteration would be inconsistent with the features produced in earlier iterations that are still stored in the dynamic queue. To solve this problem, the parameters of g are updated with momentum, as in Formula (1):

$$\theta_g \leftarrow m\,\theta_g + (1 - m)\,\theta_f \qquad (1)$$

where $\theta_f$ denotes the parameters of the self-attention point cloud capsule network f in the upper half of the framework in Figure 1, $\theta_g$ denotes the parameters of g, and $m \in [0, 1)$ is the momentum parameter. When $m = 0$, $\theta_g = \theta_f$; when $m$ is close to 1, g is updated very slowly. Experimentally, $m$ is generally set to 0.998. The momentum update slows the change of the parameters of g, while the parameters of f are updated normally, which solves the problem of inconsistent features.
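The momentum rule can be illustrated element by element on plain lists of parameters. `momentum_update` is a hypothetical helper; in a real network the same rule would be applied to every parameter tensor of g. The loop shows how slowly g follows a fixed f when m = 0.998: after k updates, its distance to f has shrunk by a factor of m^k.

```python
def momentum_update(theta_g, theta_f, m=0.998):
    """Return m * theta_g + (1 - m) * theta_f, applied element by element."""
    if not 0.0 <= m < 1.0:
        raise ValueError("momentum m must lie in [0, 1)")
    return [m * g + (1.0 - m) * f for g, f in zip(theta_g, theta_f)]


theta_f = [1.0]          # parameters of f, held fixed here for illustration
theta_g = [0.0]          # parameters of g
for _ in range(1000):
    theta_g = momentum_update(theta_g, theta_f)
# theta_g[0] is now 1 - 0.998 ** 1000, roughly 0.865: g moves toward f only gradually
```

With m = 0 the function simply copies the parameters of f, matching the limiting case described above.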

2.1.4. Digital Capsule Layer Vectorization

The digital capsule layer $V$ obtained by the self-attention point cloud capsule network is class-related and contains the activity vectors of all classes. Let $C$ denote the number of class capsules in the last layer and $D$ the dimension of each capsule, so that $V \in \mathbb{R}^{C \times D}$, and let $\hat{V}$ denote the masked digital capsule layer that keeps only the capsule of the class with the highest predicted probability, as shown in the following formulas:

$$\hat{v}_{ij} = \begin{cases} v_{ij}, & i = c \\ 0, & i \neq c \end{cases} \qquad (2)$$

$$c = \arg\max_{i \in \{1, \dots, C\}} p_i \qquad (3)$$

where $v_{ij}$ is the $j$-th position of the $i$-th capsule, $p_i$ is the predicted probability of class $i$, and $c$ is the label of the capsule with the highest predicted probability in the digital capsule layer $V$. According to Formula (2), only the $c$-th digital capsule has non-zero values, and all other digital capsules are zero.

The capsule network obtains class information from the distribution of specific dimensions and indirectly passes it to the digital capsule layer, which makes the digital capsule layer class-dependent. This paper uses digital capsule layer vectorization to remove this class dependence and uses only the active capsule, that of the class with the highest predicted probability, as the final feature for training. Thus, in every instance, the learned transformation parameters all come from the same joint distribution, and the entity encapsulated by any given instance in contrastive learning is the same regardless of the point cloud label. In addition, this process makes the activity vector pay more attention to transformation operations on the data set, such as rotating, scaling, and moving the point cloud, rather than to local changes in the point cloud components, which improves the generalization ability of contrastive learning.

In addition, because the digital capsule layer is large, inputting it directly into the feature queue would make the similarity computation expensive, and the size of the feature queue is limited. Compressing the digital capsule layer therefore reduces the computational complexity and increases the number of features stored in the queue, which is equivalent to increasing the number of samples in each training step and improves the performance of contrastive learning.
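The masking and compression steps can be sketched as follows. This is a minimal illustration under stated assumptions: capsules are plain lists, the predicted class probabilities are given as a separate list, and the hypothetical `compress` keeps only the active capsule, so each queue entry holds D values instead of C × D (C class capsules of dimension D).

```python
def mask_digit_capsules(capsules, probs):
    """Zero every capsule except that of the most probable class (the masking step)."""
    c = max(range(len(probs)), key=lambda i: probs[i])   # label of the most probable class
    masked = [[0.0] * len(cap) for cap in capsules]
    masked[c] = list(capsules[c])
    return masked, c


def compress(capsules, probs):
    """Hypothetical compress(): keep only the active capsule as the stored feature."""
    masked, c = mask_digit_capsules(capsules, probs)
    return masked[c]


digit_caps = [[1.0, 2.0], [3.0, 4.0], [0.5, 1.0]]   # C = 3 class capsules, D = 2
probs = [0.1, 0.7, 0.2]
feature = compress(digit_caps, probs)               # [3.0, 4.0]: D values instead of C * D
```

With C classes, a queue of fixed memory can therefore hold roughly C times as many features as it could if the full layer were stored.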

2.1.5. Jaccard Contrast Loss

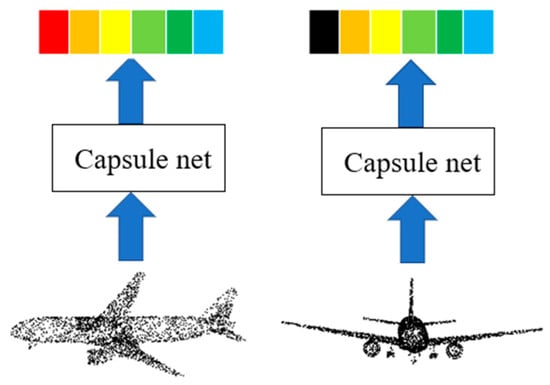

The equivariance of the capsule network makes the output features pay more attention to the transformation operations applied to the sample [21], and a given dimension of a feature vector may represent a specific attribute of the sample. As shown in Figure 2, when positive samples that differ by a rotation are input to the capsule network, the resulting feature vectors are roughly the same, and only the value of the first dimension, which represents the rotation attribute, may differ. This paper uses the Jaccard coefficient to measure the similarity of two capsules. The Jaccard coefficient compares the similarity and difference between finite sample sets: the larger its value, the more similar the sets. Compared with the conventional dot product between two vectors, it better reflects the equivariance of the capsule network.

Figure 2.

The equivariance of the capsule network.

Given two n-dimensional capsule vectors A and B, the Jaccard coefficient is as set out in Formula (4):

where $A_i$ and $B_i$ are the $i$-th elements of the capsule vectors A and B, respectively. Based on the Jaccard coefficient, this paper proposes the Jaccard contrast loss as the loss function of the network, as set out in Formula (5):

$$\mathcal{L}_q = -\log \frac{\exp\left(J(q, k_{+})/\tau\right)}{\exp\left(J(q, k_{+})/\tau\right) + \sum_{i=1}^{K} \exp\left(J(q, k_i)/\tau\right)} \qquad (5)$$

where $\tau$ is the temperature hyperparameter that controls the local separation and global uniformity of the feature distribution [25], $K$ is the number of features stored in the queue, $q$ is the feature output by network f in each iteration, $k_{+}$ is the feature of the positive sample of $q$, $k_i$ is the $i$-th feature in the queue, and $J(\cdot, \cdot)$ is the Jaccard coefficient of Formula (4).

Using the Jaccard contrast loss as an unsupervised objective function pulls the feature obtained by f toward its positive sample and pushes it away from all the negative sample features in the feature queue, thereby training the backbone networks f and g. Compared with the traditional contrastive loss, the Jaccard contrast loss pays more attention to the similarity and difference of features, so it helps the network distinguish between positive and negative samples.
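A minimal sketch of the Jaccard contrast loss for a single query feature is given below. Because the text does not spell out Formula (4), the sketch assumes the extended Jaccard (Tanimoto) coefficient for real-valued vectors, A·B / (|A|² + |B|² − A·B), which equals 1 for identical vectors; the loss takes the InfoNCE form with the Jaccard coefficient in place of the dot product, one positive, K negatives, and τ = 0.07 as in Section 3.1. The function names are hypothetical.

```python
import math


def jaccard(a, b):
    """Extended (Tanimoto) Jaccard coefficient of two real vectors; 1.0 when a == b."""
    dot = sum(x * y for x, y in zip(a, b))
    denom = sum(x * x for x in a) + sum(y * y for y in b) - dot
    return dot / denom if denom else 1.0   # denom is 0 only when both vectors are zero


def jaccard_contrast_loss(q, k_pos, negatives, tau=0.07):
    """InfoNCE-style loss with the Jaccard coefficient in place of the dot product."""
    logits = [jaccard(q, k_pos) / tau] + [jaccard(q, k) / tau for k in negatives]
    top = max(logits)                                  # log-sum-exp with max subtraction
    log_sum = top + math.log(sum(math.exp(z - top) for z in logits))
    return log_sum - logits[0]                         # -log(exp(l_pos) / sum(exp(l)))


q = [1.0, 0.0]
negatives = [[0.0, 1.0], [-1.0, 0.0]]                  # features taken from the queue
loss_close = jaccard_contrast_loss(q, [1.0, 0.0], negatives)  # positive identical to q
loss_far = jaccard_contrast_loss(q, [0.0, 1.0], negatives)    # positive orthogonal to q
```

The loss is small when the positive pair is more similar to the query than every queued negative, and it grows as the positive becomes as dissimilar as the negatives.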

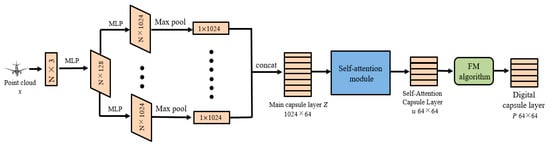

2.2. Self-Attention Point Cloud Capsule Network

To account for the spatial arrangement of point clouds while preventing dynamic routing in the capsule network from impairing robustness to affine transformations, a self-attention point cloud capsule network is designed. As shown in Figure 3, the N × 3 point cloud is input to an MLP for feature extraction, which yields N × 128 features. To diversify the learning of the capsule network, these features are sent to 64 independent convolutional layers with different weights, and the output of each convolutional layer is max-pooled to a size of 1024. The pooled features are then concatenated to form the main capsule layer z. To avoid the problems of dynamic routing, this paper uses FM routing as the routing algorithm of the capsule network, and a self-attention mechanism introduces correlations between the capsules that are input to the routing algorithm.
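The construction of the main capsule layer can be sketched as follows, reading the text as L independent layers (64 in the paper) that each map every per-point feature (F = 128 in the paper) to P channels (1024 in the paper) before max pooling over the points. How the pooled vectors are arranged into capsules is an assumption here: they are stacked so that each of the P capsules has L dimensions. Sizes are kept tiny, weights are random, and `primary_capsules` is a hypothetical name. Because max pooling is a symmetric function, the result does not depend on the order of the points.

```python
import random


def linear(point_feat, weight):
    """Per-point linear map (a 1x1 convolution): F inputs -> len(weight) outputs."""
    return [sum(w * x for w, x in zip(row, point_feat)) for row in weight]


def primary_capsules(point_feats, weights):
    """point_feats: N x F features; weights: L matrices of shape P x F.

    Each independent layer is applied to every point and max-pooled over the N points,
    giving one P-vector per layer; stacking the L vectors yields P capsules of dimension L.
    """
    pooled = []
    for weight in weights:                                   # L layers, different weights
        outputs = [linear(p, weight) for p in point_feats]   # N x P
        pooled.append([max(col) for col in zip(*outputs)])   # max over points -> P values
    return [list(cap) for cap in zip(*pooled)]               # L x P -> P x L


rng = random.Random(0)
N, F, L, P = 6, 4, 3, 5                      # tiny stand-ins for N, 128, 64, 1024
feats = [[rng.uniform(-1, 1) for _ in range(F)] for _ in range(N)]
weights = [[[rng.uniform(-1, 1) for _ in range(F)] for _ in range(P)] for _ in range(L)]
caps = primary_capsules(feats, weights)      # P capsules, each of dimension L
```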

Figure 3.

Self-attention point cloud capsule network.

2.2.1. Self-Attention Module

Through the self-attention mechanism, any two capsules in the self-attention capsule layer exchange information, so that richer global information can be learned. Three weight matrices are initialized, and multiplying the main capsule layer z by each of them yields three feature spaces (acting as query, key, and value), as follows:

The dot product of two capsules expresses their similarity. Multiplying two of the feature spaces (the query and the key) and applying softmax yields the self-attention weight matrix, as shown in Formula (9):

This paper uses the dot-product attention function, which is faster and more space-efficient than additive attention, which computes the compatibility function with a feed-forward network that has a single hidden layer. Finally, the third feature space (the value) is multiplied by the self-attention weight matrix and transposed to obtain the self-attention capsule layer, as shown in Formula (10):

The self-attention mechanism effectively extracts specific information from different capsules to form the self-attention capsule layer. Compared with feeding the main capsule layer directly into the FM routing layer, using the self-attention capsule layer as its input lowers the dimensionality of each capsule and introduces specific correlations between the capsules, which strengthens the relevance of the capsules in the digital capsule layer and is more conducive to learning semantic similarity.
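The self-attention step can be sketched with plain lists. This minimal version assumes one row per capsule, three learned matrices producing the query, key, and value feature spaces, and unscaled dot-product scores followed by a row-wise softmax; with this row layout the transpose mentioned for Formula (10) is not needed. The helper names are hypothetical.

```python
import math


def matmul(a, b):
    """Plain-list matrix product of an n x k matrix a and a k x m matrix b."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax_rows(scores):
    """Row-wise softmax; each output row sums to 1."""
    out = []
    for row in scores:
        top = max(row)                                  # subtract the max for stability
        exps = [math.exp(v - top) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def capsule_self_attention(z, w_q, w_k, w_v):
    """Dot-product self-attention across capsules; z has one row per capsule."""
    q, k, v = matmul(z, w_q), matmul(z, w_k), matmul(z, w_v)   # three feature spaces
    k_t = [list(col) for col in zip(*k)]                      # transpose of k
    attn = softmax_rows(matmul(q, k_t))                       # n x n attention weights
    return matmul(attn, v), attn                              # attended capsules, weights


identity = [[1.0, 0.0], [0.0, 1.0]]
z = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]                      # three 2-D capsules
out, attn = capsule_self_attention(z, identity, identity, identity)
```

Each output capsule is a convex combination of all value vectors, which is how every capsule comes to carry information from every other capsule.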

2.2.2. FM Routing Algorithm

With the self-attention capsule layer as the input of the FM routing layer, the FM routing algorithm does not need to iteratively compute the parent capsules and their agreement with the prediction vectors, so it is more efficient than the traditional dynamic routing and EM routing algorithms. Algorithm 2 lists the steps of the FM routing algorithm.

For each capsule in the self-attention capsule layer, a prediction vector is computed. Given the set of prediction vectors, the consistency between capsules is measured through pairwise interactions between capsules in the same layer. The pairwise product is shown in Formula (11):

where the sum of the elements of the pairwise product gives the magnitude of the consistency, while the vector itself carries the direction and posture of the capsule. The pairwise interactions between all capsules in the self-attention capsule layer for a digital capsule in the output layer can be expressed as:

where n represents the total number of prediction vectors. Then, the capsule is defined as:

The summation in Formula (12) may cause gradient explosion, so the prediction vector is divided by a scaling factor to obtain Formula (14):
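Why factorization-machine-style interactions need no iteration can be illustrated with the identity used in factorization machines: the sum of element-wise products over all pairs of vectors equals half of the squared sum minus the sum of squares, which takes linear rather than quadratic time. Whether FM routing evaluates exactly this quantity is an assumption here, the scaling step above is omitted, and the function names are hypothetical. Since the pairwise sum has n(n − 1)/2 terms, its magnitude can grow quickly with n, which is in line with the need for scaling described above.

```python
def pairwise_sum_bruteforce(vectors):
    """Sum over all pairs i < k of the element-wise product u_i * u_k: O(n^2) pairs."""
    dim = len(vectors[0])
    total = [0.0] * dim
    for i in range(len(vectors)):
        for k in range(i + 1, len(vectors)):
            for d in range(dim):
                total[d] += vectors[i][d] * vectors[k][d]
    return total


def pairwise_sum_fm(vectors):
    """Same quantity in O(n) via the factorization-machine identity, per dimension:
    sum_{i<k} u_i * u_k = ((sum_i u_i)^2 - sum_i u_i^2) / 2."""
    dim = len(vectors[0])
    s = [sum(v[d] for v in vectors) for d in range(dim)]
    sq = [sum(v[d] * v[d] for v in vectors) for d in range(dim)]
    return [(s[d] * s[d] - sq[d]) / 2.0 for d in range(dim)]


predictions = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]   # three 2-D prediction vectors
print(pairwise_sum_fm(predictions))  # -> [23.0, 44.0], same as the brute-force pair sum
```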

The orientation of the posture vector determines the entity’s posture, direction, size, rotation, and other attributes. The posture vector is defined as:

The activation of each output capsule determines which class is activated and how strongly. Combining all output capsules yields the digital capsule layer, which is used as the feature for training the backbone network with the contrastive loss.

| Algorithm 2 FM Routing Algorithm |

| Input: Prediction vector |

| Output: |

| 1: |

| 2: |

| 3: |

| 4: |

3. Experiment and Results

3.1. Experimental Environment

This paper uses three data augmentation methods, rotation, random translation of the point cloud, and random scaling of the point cloud, to generate positive and negative sample pairs. The rotation angle is set to {60°, 120°, 180°, 240°, 300°}, the random translation distance is drawn from [−0.1, 0.1], and the scaling factor is drawn from [0.8, 1.2].

To speed up convergence and prevent vanishing gradients, batch normalization and the ReLU activation function are applied at each MLP layer. The model is implemented in PyTorch on an Nvidia 2080 Ti server equipped with two graphics cards with 8 GB of video memory each. Because GPU memory is limited, 2048 points are randomly sampled from each point cloud. Pre-training uses the Adam optimizer, which has small memory requirements, with a learning rate of 0.001, a batch size of 8, and 100 training epochs. The maximum length of the feature queue is 640, so it can store up to 640 features of batch size. Across repeated experiments, the network performs best when the momentum update parameter is 0.998 and the temperature coefficient in the Jaccard contrast loss is 0.07.

The pre-training data set is ShapeNetCore, which covers 55 categories and contains about 57,000 three-dimensional models. With this setting, one training epoch on ShapeNetCore takes about 41 min, so 100 epochs take about 68 h. After pre-training, the weights of the contrastive learning network are frozen, and the model is transferred to two downstream tasks: shape classification and part segmentation.

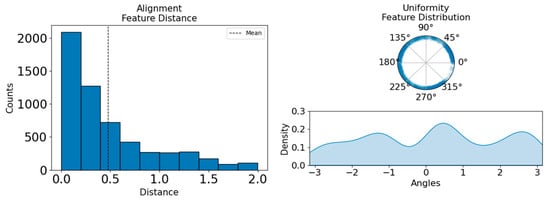

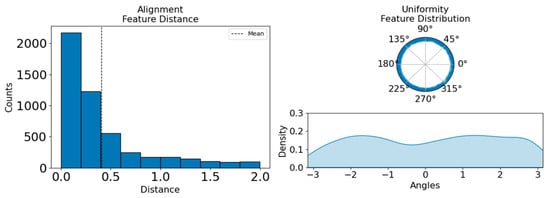

3.2. Performance of Representation Space

Tongzhou Wang et al. [26] proposed that alignment and uniformity are the key properties that allow contrastive learning to learn effective image representations. Alignment measures how close the features of positive pairs are, and uniformity measures how uniformly the features are distributed on the unit hypersphere. This paper uses these two properties to evaluate the performance of the representation space.
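For reference, Wang et al. [26] quantify these two properties with an alignment loss, the mean ‖u − v‖^α between L2-normalized features u, v of positive pairs, and a uniformity loss, the logarithm of the mean Gaussian potential exp(−t‖u − v‖²) over all pairs of normalized features, with α = 2 and t = 2 as defaults. The sketch below computes both on plain lists (lower is better for both); the helper names are hypothetical, and since this paper visualizes the two properties rather than reporting these numbers, the snippet only illustrates the definitions.

```python
import math


def normalize(v):
    """Project a feature onto the unit hypersphere."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def align_loss(pairs, alpha=2):
    """Mean ||u - v||^alpha over normalized positive pairs (lower = better aligned)."""
    total = 0.0
    for a, b in pairs:
        a, b = normalize(a), normalize(b)
        total += math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b))) ** alpha
    return total / len(pairs)


def uniform_loss(feats, t=2):
    """log of mean exp(-t * ||u - v||^2) over distinct pairs (lower = more uniform)."""
    feats = [normalize(f) for f in feats]
    terms = [
        math.exp(-t * sum((x - y) ** 2 for x, y in zip(feats[i], feats[j])))
        for i in range(len(feats))
        for j in range(i + 1, len(feats))
    ]
    return math.log(sum(terms) / len(terms))


spread = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]     # evenly spread features
clustered = [[1.0, 0.0], [1.0, 0.1], [1.0, -0.1], [1.0, 0.05]]  # collapsed features
```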

This paper visualizes features of ModelNet40 to verify the alignment and uniformity of the features of this data set. ModelNet40 contains 13,843 models in 40 categories, which are divided into 9843 training samples and 3991 test samples. Positive sample pairs from the ModelNet40 validation set are generated with the three hybrid data augmentation methods of this paper and, once contrastive learning training is complete, fed into the network to compute their features. The alignment of the features is visualized through the distances between the features of positive pairs. The uniformity of the features is visualized by plotting the feature distribution on the unit circle with Gaussian kernel density estimation, and by computing the angle of every point (x, y) on the unit circle as arctan2(y, x) and plotting the distribution of these angles on the circle with von Mises-Fisher kernel density estimation.
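The angular part of this visualization can be sketched as follows: each 2-D unit feature is mapped to its angle with `math.atan2(y, x)`, and a density on the circle is estimated with a von Mises kernel (the two-dimensional case of the von Mises-Fisher distribution). The concentration κ = 8 is an illustrative assumption, the helper names are hypothetical, and the Bessel function I0 is computed from its power series because Python's `math` module does not provide it.

```python
import math


def bessel_i0(kappa, terms=50):
    """Modified Bessel function I0 via its series sum_k (kappa/2)^(2k) / (k!)^2."""
    total, term = 0.0, 1.0
    for k in range(terms):
        total += term
        term *= (kappa / 2.0) ** 2 / ((k + 1) ** 2)
    return total


def feature_angles(points_2d):
    """Angle of each 2-D unit feature (x, y) on the circle, via atan2(y, x)."""
    return [math.atan2(y, x) for x, y in points_2d]


def vmf_kde(theta, angles, kappa=8.0):
    """von Mises kernel density estimate at angle theta (integrates to 1 over the circle)."""
    norm = 2.0 * math.pi * bessel_i0(kappa)
    return sum(math.exp(kappa * math.cos(theta - a)) for a in angles) / (len(angles) * norm)


angles = feature_angles([(1.0, 0.0), (0.0, 1.0), (-1.0, 0.0)])
density_at_zero = vmf_kde(0.0, angles)   # evaluate on a grid of angles to draw the plot
```

A uniform feature distribution gives an almost flat density curve, whereas collapsed features give sharp peaks.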

By visualizing alignment and uniformity, the representation spaces learned by the generative method PointCapsNet [20] and by the method in this paper are compared, as shown in Figure 4 and Figure 5. The method in this paper brings the features of positive pairs as close together as possible, and its feature distribution on the unit circle is very uniform. Since both the alignment and the uniformity of its features are better than those of the generative method PointCapsNet, our method learns a better three-dimensional representation than PointCapsNet.

Figure 4.

The generative method, PointCapsNet.

Figure 5.

The method described in this article.

3.3. Classification Performance

The quality of unsupervised visual representation learning is reflected in the performance on downstream tasks. The pre-trained model is applied to a classification task and evaluated by its classification accuracy. The digital capsule layer of the self-attention capsule network is flattened into a one-dimensional feature vector as the input of a linear SVM classifier, and the SVM classifier is trained on ModelNet40.

To evaluate the classification performance of the proposed method, it is compared with the commonly used unsupervised learning methods VConv-DAE [27], LatentGAN [7], FoldingNet [6], and PointCapsNet [20], as well as with the classical supervised methods PointNet [16] and PointNet++ [17]. The results in Table 1 show that the classification performance of the proposed method on ModelNet40 is better than that of the commonly used unsupervised methods and close to that of classical supervised learning.

Table 1.

ModelNet40 classification accuracy.

Unsupervised pre-training is not guaranteed to outperform a model trained directly with supervision. To verify the effectiveness of the proposed method, we also train the linear SVM classifier on ModelNet40 using features from the backbone network without contrastive pre-training. Table 1 shows that contrastive pre-training increases the classification accuracy of the backbone network by 3.3%, indicating that the proposed contrastive learning effectively improves the quality of the visual representation.

The same procedure is used to train an SVM on a different data set, ShapeNetParts, which contains 16,881 models in 16 categories, divided into 12,149 training samples and 2874 test samples; only 20% of the training samples are used for training. Table 2 shows that the model achieves the highest shape classification accuracy on ShapeNetParts among the compared methods, 2.6% higher than the best comparison method, MulUnsupervised [1]. This suggests that the visual representations obtained by contrastive learning remain effective on smaller data sets and generalize well to new tasks.

Table 2.

ShapeNetParts classification accuracy of 20% of the training data.

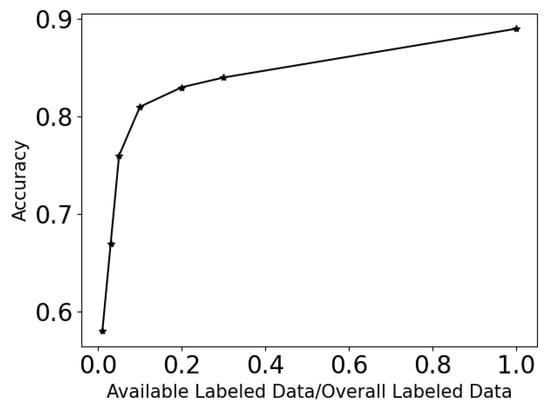

A common approach to unsupervised representation learning for classification is to pre-train on a large amount of unlabeled data and then transfer to a task with a small amount of labeled data. Our experiments closely follow this setting: the unlabeled ShapeNet data set used for contrastive learning is relatively large, with about 57,000 samples, whereas the labeled ModelNet data set used for transfer learning is relatively small, with about 13,800 samples. Because manually labeled data are usually difficult to obtain, we test how much the performance of the model degrades as the amount of labeled data decreases. The contrastive learning network is again trained on ShapeNet; then only a% of the ModelNet40 training data is used to train the linear SVM, where a ∈ {1, 2, 5, 10, 20, 30}. Evaluation still uses the full ModelNet40 test set.
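The label-budget protocol can be sketched as drawing a random a% subset of the training indices. This sketch assumes uniform sampling without replacement and a fixed seed; the paper does not state whether the subset is stratified by class, and `subsample_labeled` is a hypothetical helper name.

```python
import numpy as np

def subsample_labeled(num_train, percent, seed=0):
    # Indices of a random `percent`% subset of the training set
    # (uniform sampling without replacement; at least one sample is kept).
    rng = np.random.default_rng(seed)
    k = max(1, int(round(num_train * percent / 100.0)))
    return np.sort(rng.choice(num_train, size=k, replace=False))

# Hypothetical usage with the 9843 ModelNet40 training samples:
# for a in (1, 2, 5, 10, 20, 30):
#     idx = subsample_labeled(9843, a)
#     ...train the linear SVM on features[idx], labels[idx]; test on the full test set
```

At 1% the subset holds roughly 98 shapes for 40 classes, so some classes may be missing entirely; a class-stratified draw is a reasonable alternative if every class must be represented.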

The results of this experiment are shown in Figure 6. With only 1% of the labeled samples, the classification accuracy is still above 50%. With 10% of the labeled data, the classification accuracy exceeds 80%, which is close to most of the unsupervised learning methods in Table 1. This suggests that the features produced by the capsule network are largely linearly separable, so only a small amount of labeled data is needed to train the SVM, and the model still performs well with little labeled data.

Figure 6.

SVM classification accuracy and the number of labeled samples.

3.4. Part Segmentation Performance

Part segmentation is a fine-grained, point-by-point classification task whose goal is to predict the part category label of each point in a given shape. The data set used in this article is ShapeNetParts, which contains 16,881 objects in 16 categories, divided into 12,149 training objects, 2874 test objects, and 1858 validation objects. Each object consists of 2 to 6 parts, and there are 50 different part categories in total across all categories.

This article uses mIoU as the evaluation criterion for part segmentation. For each shape of category A, the IoU between the ground-truth and predicted points is computed for every part type in category A, and these IoUs are averaged to obtain the mIoU of the shape. The mIoU of a category is then the average of the mIoU of all shapes in that category.
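The two-level averaging described above can be sketched as follows. The handling of a part type that appears in neither the prediction nor the ground truth is not specified here; the sketch assumes the convention used in PointNet's evaluation [16], which counts such a part's IoU as 1. The function names are hypothetical.

```python
import numpy as np

def shape_miou(pred, gt, part_ids):
    # pred, gt: per-point part labels of one shape; part_ids: all part types of its category.
    ious = []
    for p in part_ids:
        inter = np.sum((pred == p) & (gt == p))
        union = np.sum((pred == p) | (gt == p))
        # Assumed convention: a part absent from both prediction and ground truth has IoU = 1.
        ious.append(1.0 if union == 0 else inter / union)
    return float(np.mean(ious))

def category_miou(shapes, part_ids):
    # shapes: list of (pred, gt) label arrays for all shapes of one category.
    return float(np.mean([shape_miou(p, g, part_ids) for p, g in shapes]))
```

For example, with prediction [0, 0, 1, 1], ground truth [0, 1, 1, 1], and part types {0, 1, 2}, the per-part IoUs are 1/2, 2/3, and 1, giving a shape mIoU of 13/18.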

The network is pre-trained on ShapeNetCore with the proposed method. We then randomly sample 1% and 5% of the ShapeNetParts training samples, replicate the digital capsule layer of the self-attention capsule network 32 times to obtain 2048 per-point features, train a four-layer MLP (2048, 4096, 1024, and 50) on the feature of each point, and evaluate on the test data. Figure 7 shows the visualized results of part segmentation.
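A forward-pass sketch of this segmentation head. It assumes that each capsule vector is repeated 32 times (for example, 64 capsules × 32 copies = 2048 per-point features), that the numbers 2048, 4096, 1024, and 50 are the output widths of the four MLP layers, and that hidden layers use ReLU; these readings, the He-style initialization, and the helper names are assumptions. Training with a per-point cross-entropy loss is omitted.

```python
import numpy as np

def replicate_capsules(caps, copies=32):
    # caps: (num_capsules, capsule_dim) -> (num_capsules * copies, capsule_dim);
    # each capsule vector is repeated `copies` times (assumed layout).
    return np.repeat(caps, copies, axis=0)

def init_mlp(in_dim, widths=(2048, 4096, 1024, 50), seed=0):
    # Weights and biases of a shared per-point MLP.
    rng = np.random.default_rng(seed)
    params, d = [], in_dim
    for w in widths:
        W = rng.normal(0.0, np.sqrt(2.0 / d), size=(d, w)).astype(np.float32)
        params.append((W, np.zeros(w, dtype=np.float32)))
        d = w
    return params

def mlp_logits(point_feats, params):
    # Apply the same MLP to every point; ReLU on hidden layers, raw part logits at the end.
    h = point_feats
    for i, (W, b) in enumerate(params):
        h = h @ W + b
        if i < len(params) - 1:
            h = np.maximum(h, 0.0)
    return h
```

Taking `argmax` over the last axis of the logits gives one of the 50 part labels for each of the 2048 points.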

Figure 7.

The visualized results of part segmentation.

To evaluate the segmentation performance of the proposed method, we compare it with the unsupervised methods SO-Net [19], PointCapsNet [20], and MulUnsupervised [1]. Table 3 shows that when 1% and 5% of the training data are used for part segmentation, the model outperforms the best comparison method, indicating that it still segments parts well with a small amount of labeled data. Table 4 compares the part segmentation results of this model with those of supervised learning models. The mIoU achieved by this model is 2.2% lower than that of the classic method PointNet and 6.2% lower than that of the best supervised model, narrowing the gap with supervised models.

Table 3.

Part segmentation performance results on 1% and 5% of the training data.

Table 4.

The segmentation results of the model and the supervised learning method.

3.5. Comparative Experiment

Factors that may affect the performance of the method include the data augmentation strategy, the contrastive loss, and the routing algorithm. We analyze each of them through comparative experiments and also compare the computational complexity of the proposed method with that of other unsupervised methods.

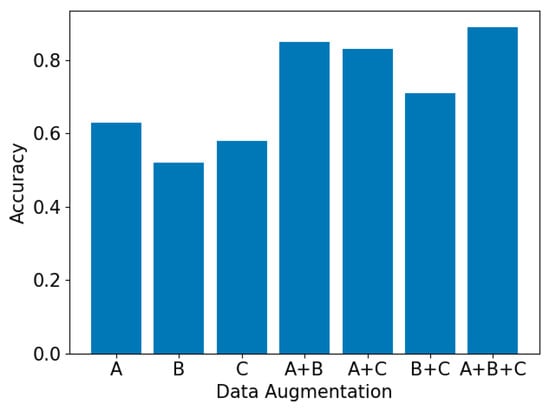

3.5.1. Data Augmentation Methods

To study the influence of data augmentation on the results, three data augmentation methods (random rotation, random scaling, and random movement) were applied to the input point cloud separately and in combination for training. Figure 8 shows the impact of the data augmentation methods on the classification accuracy on ModelNet40, where A is random rotation, B is random scaling, C is random movement, A + B is the combination of random rotation and random scaling, A + C is the combination of random rotation and random movement, B + C is the combination of random scaling and random movement, and A + B + C is the combination of all three operations.
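The three augmentations and their combinations can be sketched as below. The rotation axis (vertical z axis), the scaling range, and the maximum shift are assumed values, not the paper's settings, and the function names are hypothetical. A positive pair is formed by applying the same combination twice with independent random draws.

```python
import numpy as np

def random_rotation(points, rng):
    # A: rotate about the vertical (z) axis by a uniform random angle (assumed axis).
    theta = rng.uniform(0.0, 2.0 * np.pi)
    c, s = np.cos(theta), np.sin(theta)
    R = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
    return points @ R.T

def random_scaling(points, rng, low=0.8, high=1.25):
    # B: per-axis random scaling (assumed range).
    return points * rng.uniform(low, high, size=(1, 3))

def random_translation(points, rng, max_shift=0.1):
    # C: random movement, i.e. one random offset added to every point (assumed range).
    return points + rng.uniform(-max_shift, max_shift, size=(1, 3))

AUGS = {"A": random_rotation, "B": random_scaling, "C": random_translation}

def augment(points, combo, rng):
    # combo such as "A + B + C" applies the named operations in sequence.
    for key in combo.split("+"):
        points = AUGS[key.strip()](points, rng)
    return points

def positive_pair(points, combo, rng):
    # Two independently augmented views of the same point cloud.
    return augment(points, combo, rng), augment(points, combo, rng)
```

Scaling and movement alone leave the overall spatial layout of the cloud largely intact, whereas rotation changes point coordinates substantially, which is consistent with the observation that rotation-based combinations perform best.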

Figure 8.

Multiple data augmentation methods.

As shown in Figure 8, random rotation works best when only a single data augmentation operation is used. When random rotation is combined with either of the other two operations, the accuracy exceeds 80%, whereas the combination of random scaling and random movement reaches only about 70%. Combining all three data augmentation methods improves the classification accuracy slightly further, indicating that training with multiple data augmentation methods is better than training with a single one. The classification accuracy obtained with only random scaling or only random movement is low because the original and transformed point clouds have similar spatial distributions. When random rotation is combined with other transformations, it is easier for the neural network to distinguish positive and negative samples. Therefore, to learn generalizable features, it is important to combine random rotation with other data augmentation methods.

3.5.2. Contrastive Loss

To evaluate the Jaccard contrastive loss proposed in this paper, we compare it with the contrastive losses used in MoCo [13] and SimCLR [8]. The MoCo loss uses the vector dot product to measure the similarity between capsules, whereas the SimCLR loss uses cosine similarity. We replace the contrastive loss of our model with each of these two losses, train each variant separately, and then use each trained model to compute the average distance between positive-pair features and to perform the classification task. The experimental setup is the same as in Section 3.2 and Section 3.3.
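The two baseline losses can be sketched as an InfoNCE loss whose similarity is either a raw dot product (the MoCo variant as characterized above) or cosine similarity (SimCLR). This is a simplified, one-directional sketch with in-batch negatives and an assumed temperature τ = 0.07: MoCo's momentum encoder and negative queue and SimCLR's symmetric 2N-sample formulation are omitted, and the Jaccard loss is not shown. The name `info_nce` is hypothetical.

```python
import numpy as np

def info_nce(q, k, sim="cosine", tau=0.07):
    # q, k: (n, d) features of two augmented views. Row i of k is the positive
    # for row i of q; the other rows of k serve as negatives.
    if sim == "cosine":
        q = q / np.linalg.norm(q, axis=1, keepdims=True)
        k = k / np.linalg.norm(k, axis=1, keepdims=True)
    elif sim != "dot":
        raise ValueError("sim must be 'cosine' or 'dot'")
    logits = (q @ k.T) / tau                       # (n, n) similarity matrix
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_prob)))     # cross-entropy with positives on the diagonal
```

With cosine similarity the scores are bounded, so the loss depends only on feature directions; with a raw dot product, feature norms also influence the scores.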

Table 5 shows the impact of the different contrastive losses on the average distance between positive samples and on classification accuracy. Compared with the loss functions of MoCo and SimCLR, the proposed Jaccard contrastive loss reduces the distance between positive-pair features, improves the ability of contrastive learning to distinguish positive and negative sample features, and increases the classification accuracy by 1.4%. This indicates that the Jaccard contrastive loss is better suited to the backbone network and improves the performance of contrastive learning.

Table 5.

Contrastive loss.

3.5.3. Routing Algorithm

To evaluate the FM routing algorithm used in this paper, we compare it with other commonly used routing algorithms. Keeping the original contrastive learning architecture, the model is trained on ShapeNetCore for 100 epochs with each routing algorithm (dynamic routing with three iterations, EM routing with three iterations, and FM routing). The trained models are then evaluated on ModelNet40 classification using the method described in Section 3.3.
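For reference, the iterative baseline can be sketched as the dynamic routing-by-agreement procedure of Sabour et al. [21] with three iterations. Each iteration recomputes coupling coefficients over all input-output capsule pairs, which is the repeated cost that a non-iterative scheme such as FM routing avoids. This is a generic NumPy sketch with hypothetical names, not the implementation used in these experiments; EM routing is not shown.

```python
import numpy as np

def squash(s, axis=-1, eps=1e-9):
    # Shrink vector length into [0, 1) while preserving direction.
    sq = np.sum(s ** 2, axis=axis, keepdims=True)
    return (sq / (1.0 + sq)) * s / np.sqrt(sq + eps)

def dynamic_routing(u_hat, iterations=3):
    # u_hat: (num_in, num_out, dim) prediction vectors from lower-level capsules.
    num_in, num_out, _ = u_hat.shape
    b = np.zeros((num_in, num_out))                  # routing logits
    for _ in range(iterations):
        c = np.exp(b - b.max(axis=1, keepdims=True))
        c /= c.sum(axis=1, keepdims=True)            # coupling coefficients: softmax over outputs
        s = np.einsum("io,iod->od", c, u_hat)        # weighted sum for each output capsule
        v = squash(s)                                # (num_out, dim) output capsules
        b = b + np.einsum("iod,od->io", u_hat, v)    # increase logits where predictions agree
    return v
```

The per-iteration cost is proportional to num_in × num_out × dim, so three iterations roughly triple the routing cost of a single pass.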

Table 6 shows the impact of the different routing methods on training time and classification accuracy. In terms of time, training with the FM routing algorithm takes 41 min per epoch, 12 min less than the best comparison method, indicating that its computational complexity is lower than that of dynamic routing and EM routing. In terms of classification accuracy, FM routing also achieves the best result of the three methods, indicating that it enables the model to learn better visual representations.

Table 6.

Routing algorithms.

3.5.4. Computational Complexity

In addition to its good performance on standard data sets, the proposed algorithm has advantages in the number of model parameters and in training speed, because it uses a non-iterative routing algorithm and compresses the digital capsule layer. Table 7 compares the number of model parameters and the training time of the proposed algorithm with those of two unsupervised point cloud learning methods. The tests were conducted under the same experimental setting with a batch size of four, and the time to train one epoch on the ShapeNet data set was measured.

Table 7.

Computational complexity.

The comparison in Table 7 shows that, compared with the best comparison method, PointCapsNet, the proposed method reduces the number of parameters by 28.3% and the training time by 17.8%. The algorithm thus effectively reduces both the parameter count and the computational cost, which is advantageous in practical applications. Owing to its fewer parameters and lower computation, the proposed algorithm can process a batch of 10 point cloud models at once while making full use of 8 GB of GPU memory, which demonstrates its efficiency.

4. Discussion

Our method shows promising results for unsupervised learning, and a few open questions are worth discussing. We hope to further improve contrastive learning with larger data sets and more computing resources. Most studies on contrastive learning do not treat data augmentation as a design focus; a backbone network designed around point cloud data augmentation may better distinguish positive and negative samples. We hope that our method will be useful to other studies involving contrastive learning.

5. Conclusions

The study of unsupervised visual representation learning for point clouds has important practical significance because manually labeling point cloud targets is expensive. The contrastive learning method for three-dimensional point cloud visual representations proposed in this paper is effective for classification with few labeled samples: using only 10% of the labeled ModelNet40 data, the classification accuracy exceeds 80%. As a discriminative method, it learns features with better alignment and uniformity than the mainstream generative method PointCapsNet. To suit the characteristics of point cloud data, a self-attention point cloud capsule network is designed as the backbone, in which an FM routing algorithm replaces traditional dynamic routing and a self-attention mechanism improves the network's ability to learn visual representations. Experiments show that the model achieves good results on classification and segmentation tasks, indicating that it can effectively learn the visual representation of three-dimensional point clouds.

In the two-dimensional image field, contrastive learning is usually performed on the ImageNet data set, but no three-dimensional point cloud data set of comparable scale exists, which makes it difficult for contrastive learning on point clouds to reach the level achieved on two-dimensional images. To further improve contrastive learning for three-dimensional point clouds, we will explore cross-domain contrastive learning on three-dimensional scene segmentation data sets.

Author Contributions

Conceptualization, J.Z. and F.Z.; methodology, F.Z.; software, F.Z.; validation, F.Z. and Z.C.; formal analysis, J.Z.; investigation, J.Z.; resources, F.Z.; data curation, F.Z.; writing—original draft preparation, F.Z.; writing—review and editing, F.Z.; visualization, F.Z.; supervision, J.Z.; project administration, J.Z.; funding acquisition, J.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the National Natural Science Foundation of China (No. 62071260, No. 62006131) and the Natural Science Foundation of Zhejiang Province (LZ22F020001, LQ21F020009).

Data Availability Statement

Not applicable, the study does not report any data.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Hassani, K.; Haley, M. Unsupervised multi-task feature learning on point clouds. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; pp. 8160–8171.

- Ge, L.; Cai, Y.; Weng, J.; Yuan, J. Hand pointnet: 3d hand pose estimation using point sets. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8417–8426.

- Qi, C.R.; Liu, W.; Wu, C.; Su, H.; Guibas, L.J. Frustum pointnets for 3d object detection from rgb-d data. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 918–927.

- Rusu, R.B.; Marton, Z.C.; Blodow, N.; Dolha, M.; Beetz, M. Towards 3D point cloud based object maps for household environments. Robot. Auton. Syst. 2008, 56, 927–941.

- Doersch, C.; Gupta, A.; Efros, A.A. Unsupervised visual representation learning by context prediction. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1422–1430.

- Yang, Y.; Feng, C.; Shen, Y.; Tian, D. Foldingnet: Point cloud auto-encoder via deep grid deformation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 206–215.

- Achlioptas, P.; Diamanti, O.; Mitliagkas, I.; Guibas, L. Learning representations and generative models for 3d point clouds. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 40–49.

- Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A simple framework for contrastive learning of visual representations. In Proceedings of the International Conference on Machine Learning, Virtual Event, 13–18 July 2020; pp. 1597–1607.

- Jing, L.; Tian, Y. Self-supervised visual feature learning with deep neural networks: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 4037–4058.

- Komodakis, N.; Gidaris, S. Unsupervised representation learning by predicting image rotations. In Proceedings of the International Conference on Learning Representations (ICLR), Vancouver, BC, Canada, 30 April–3 May 2018.

- Doersch, C.; Zisserman, A. Multi-task self-supervised visual learning. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2051–2060.

- Mukherjee, S.; Asnani, H.; Lin, E.; Kannan, S. Clustergan: Latent space clustering in generative adversarial networks. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 4610–4617.

- He, K.; Fan, H.; Wu, Y.; Xie, S.; Girshick, R. Momentum contrast for unsupervised visual representation learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9729–9738.

- Xie, S.; Gu, J.; Guo, D.; Qi, C.R.; Guibas, L.; Litany, O. Pointcontrast: Unsupervised pre-training for 3d point cloud understanding. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 574–591.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2016; pp. 770–778.

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. Pointnet: Deep learning on point sets for 3d classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. Pointnet++: Deep hierarchical feature learning on point sets in a metric space. arXiv 2017, arXiv:1706.02413.

- Zamorski, M.; Zięba, M.; Klukowski, P.; Nowak, R.; Kurach, K.; Stokowiec, W.; Trzciński, T. Adversarial autoencoders for compact representations of 3D point clouds. Comput. Vis. Image Underst. 2020, 193, 102921.

- Li, J.; Chen, B.M.; Lee, G.H. So-net: Self-organizing network for point cloud analysis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 9397–9406.

- Zhao, Y.; Birdal, T.; Deng, H.; Tombari, F. 3D point capsule networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1009–1018.

- Sabour, S.; Frosst, N.; Hinton, G.E. Dynamic routing between capsules. arXiv 2017, arXiv:1710.09829.

- Gu, J.; Tresp, V.; Hu, H. Capsule network is not more robust than convolutional network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 14309–14317.

- Zhao, L.; Wang, X.; Huang, L. An efficient agreement mechanism in CapsNets by pairwise product. arXiv 2020, arXiv:2004.00272.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. arXiv 2017, arXiv:1706.03762.

- Wang, F.; Liu, H. Understanding the behaviour of contrastive loss. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 2495–2504.

- Wang, T.; Isola, P. Understanding contrastive representation learning through alignment and uniformity on the hypersphere. In Proceedings of the International Conference on Machine Learning, Virtual Event, 13–18 July 2020; pp. 9929–9939.

- Sharma, A.; Grau, O.; Fritz, M. Vconv-dae: Deep volumetric shape learning without object labels. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 236–250.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).