Whispered Speech Conversion Based on the Inversion of Mel Frequency Cepstral Coefficient Features

Abstract

1. Introduction

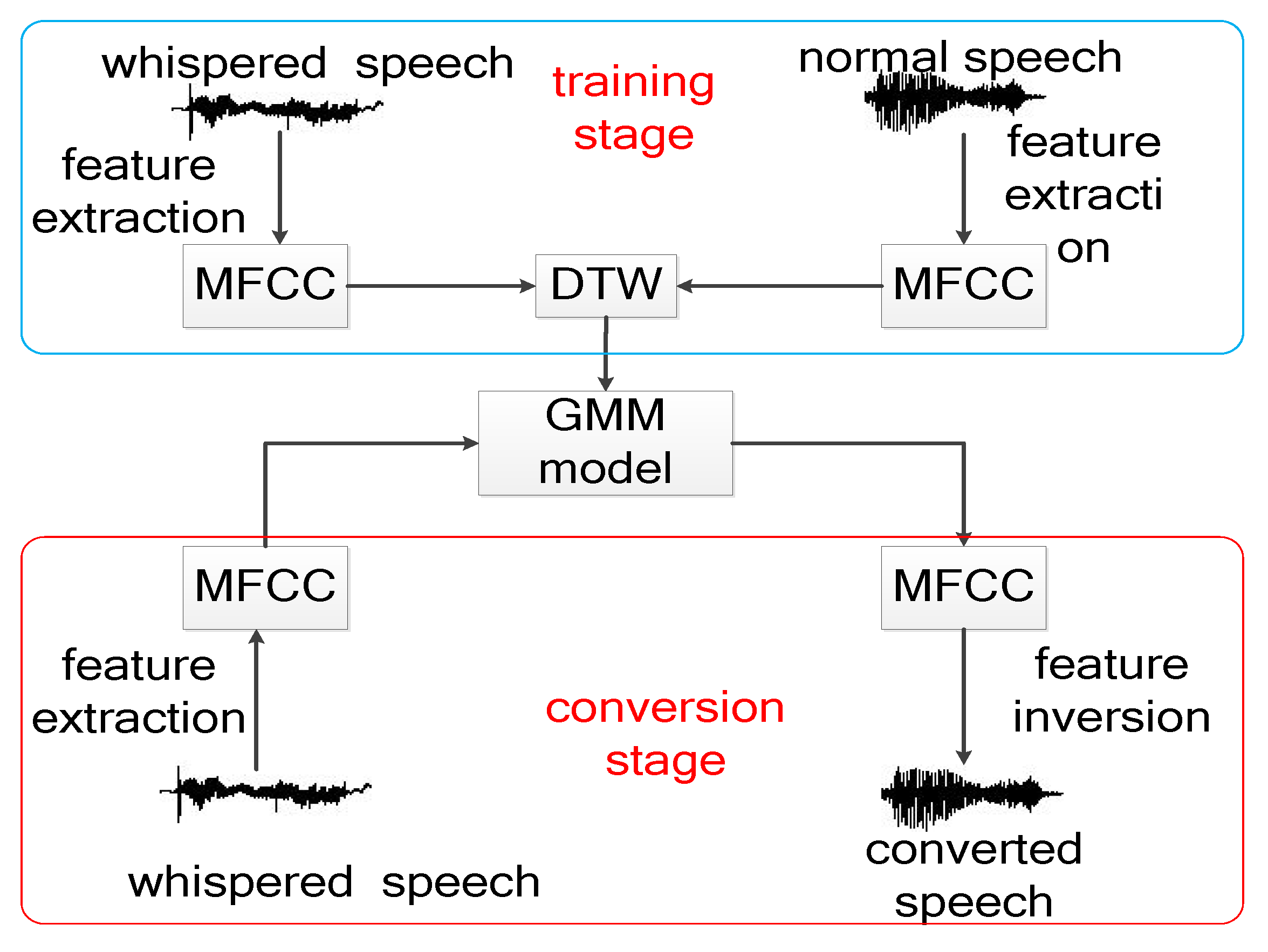

2. Whispered Speech Conversion Process

2.1. GMM-Based Whispered Speech Conversion Model
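GMM-based conversion in the tradition of Toda et al. (see References) commonly trains a joint-density GMM on time-aligned whisper/normal feature pairs z = [x; y]. It then converts each whispered frame x with the conditional-mean (MMSE) mapping ŷ = Σ_m P(m|x) [μ_y^m + Σ_yx^m (Σ_xx^m)^-1 (x − μ_x^m)]. The sketch below implements only that mapping, under stated assumptions: the GMM parameters (`weights`, `means`, `covs`) are taken as already fitted (for example by EM), and the function names are hypothetical. The paper's model may differ, for instance by using the maximum-likelihood trajectory estimation of Toda et al. instead of frame-wise MMSE.

```python
import numpy as np


def gaussian_logpdf(x, mean, cov):
    """Log density of a multivariate normal evaluated at each row of x."""
    d = mean.shape[0]
    diff = x - mean
    L = np.linalg.cholesky(cov)
    z = np.linalg.solve(L, diff.T)                  # whiten: L z = diff^T
    maha = np.sum(z ** 2, axis=0)                   # squared Mahalanobis distance per row
    log_det = 2.0 * np.sum(np.log(np.diag(L)))
    return -0.5 * (d * np.log(2.0 * np.pi) + log_det + maha)


def gmm_convert(x, weights, means, covs, dx):
    """Frame-wise MMSE conversion with a joint-density GMM over z = [x; y].

    x: (T, dx) source features; means: (M, dx + dy); covs: (M, dx + dy, dx + dy).
    Returns (T, dy) converted features (hypothetical helper, assumed parameters).
    """
    n_mix = len(weights)
    log_post = np.empty((x.shape[0], n_mix))
    cond_means = []
    for m in range(n_mix):
        mu_x, mu_y = means[m][:dx], means[m][dx:]
        S_xx, S_yx = covs[m][:dx, :dx], covs[m][dx:, :dx]
        log_post[:, m] = np.log(weights[m]) + gaussian_logpdf(x, mu_x, S_xx)
        # E[y | x, m] = mu_y + S_yx S_xx^-1 (x - mu_x), computed for all frames at once
        cond_means.append(mu_y + np.linalg.solve(S_xx, (x - mu_x).T).T @ S_yx.T)
    log_post -= log_post.max(axis=1, keepdims=True)  # stabilise before exponentiating
    post = np.exp(log_post)
    post /= post.sum(axis=1, keepdims=True)          # P(m | x) for each frame
    return sum(post[:, [m]] * cond_means[m] for m in range(n_mix))


# Toy usage: a single 2-D joint component, where the mapping reduces to linear regression.
weights = np.array([1.0])
means = np.array([[0.0, 1.0]])
covs = np.array([[[2.0, 1.0], [1.0, 3.0]]])
print(gmm_convert(np.array([[2.0]]), weights, means, covs, dx=1))  # [[2.]]
```

With one component the posterior is 1 and the result is plain linear regression; with several components, frames are softly assigned, so the overall mapping is piecewise-linear in feature space.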

2.2. Inversion of Speech Features
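Inverting MFCCs undoes the analysis steps in reverse order. The inverse DCT recovers log mel-band energies, and exponentiation recovers the energies themselves. The linear energy spectrum Y must then be estimated from the few-bands-from-many-bins mel projection, which is underdetermined. The sketch below makes several assumptions: MFCCs are an orthonormal DCT-II of the natural log of triangular mel filterbank energies of a power spectrum, with no liftering or pre-emphasis, and all helper names are hypothetical. For the last step it uses plain non-negative least squares solved with Lee-Seung multiplicative updates. This is a simplified stand-in, not necessarily the estimator the authors use.

```python
import numpy as np


def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(n_mels, n_fft, fs):
    """Triangular filters equally spaced on the mel scale, shape (n_mels, n_fft // 2 + 1)."""
    freqs = np.linspace(0.0, fs / 2.0, n_fft // 2 + 1)
    edges = mel_to_hz(np.linspace(0.0, hz_to_mel(fs / 2.0), n_mels + 2))
    F = np.zeros((n_mels, len(freqs)))
    for j in range(n_mels):
        lo, mid, hi = edges[j], edges[j + 1], edges[j + 2]
        F[j] = np.maximum(0.0, np.minimum((freqs - lo) / (mid - lo), (hi - freqs) / (hi - mid)))
    return F


def dct_matrix(n):
    """Orthonormal DCT-II matrix D, so that D.T is its inverse."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    D = np.sqrt(2.0 / n) * np.cos(np.pi * k * (2 * i + 1) / (2 * n))
    D[0] /= np.sqrt(2.0)
    return D


def mfcc(P, F, D, n_ceps, eps=1e-10):
    """MFCCs of one power spectrum P: DCT of log mel energies, truncated to n_ceps (assumed definition)."""
    return (D @ np.log(F @ P + eps))[:n_ceps]


def energy_spectrum_from_mfcc(c, F, D, n_iter=500, eps=1e-10):
    """Estimate a non-negative energy spectrum Y whose mel energies match the MFCCs c."""
    log_mel = D[: len(c)].T @ c             # inverse DCT; dropped high-order coefficients read as 0
    m = np.exp(log_mel)                      # target mel-band energies
    Y = np.ones(F.shape[1])                  # any positive start works for multiplicative updates
    for _ in range(n_iter):
        # Lee-Seung multiplicative update for min ||F Y - m||^2 subject to Y >= 0
        Y *= (F.T @ m) / (F.T @ (F @ Y) + eps)
    return Y


fs, n_fft, n_mels = 8000, 256, 24
F = mel_filterbank(n_mels, n_fft, fs)
D = dct_matrix(n_mels)
freqs = np.linspace(0.0, fs / 2.0, n_fft // 2 + 1)
P_true = np.exp(-freqs / 1000.0) + 0.01      # a smooth, made-up power spectrum for illustration
c = mfcc(P_true, F, D, n_ceps=n_mels)        # keep every coefficient so the DCT step is exact
Y = energy_spectrum_from_mfcc(c, F, D)
```

Y reproduces the mel energies but not the fine spectral detail inside each band, which MFCCs discard. Truncating to fewer coefficients (for example 13) additionally smooths the log mel envelope before the non-negative fit.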

- Estimation of the energy spectrum Y

- Phase spectrum estimation

| Algorithm 1 LSE-ISTFTM | |
|---|---|
| 1: | Input: Amplitude spectrum, ; Number of iterations, M |
| 2: | Output: Estimated speech frame, |
| 3: | Initialize to white noise |
| 4: | While , do |
| 5: | |
| 6: | |
| 7: | |
| 8: | |
| 9: | End while |
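Algorithm 1 appears to follow Griffin and Lim's least-squares error estimation from a modified STFT magnitude. It starts from white noise, then repeats M times: analyse the current estimate, keep its phase, impose the target amplitude spectrum, and resynthesise by least-squares overlap-add. The NumPy sketch below follows that loop on a whole signal rather than a single frame. The Hamming window, hop size, test signal and function names are assumptions for illustration, not values from the paper.

```python
import numpy as np


def stft(x, win, hop):
    """One-sided STFT: window frames of len(win) samples taken every `hop` samples, then rFFT."""
    n_fft = len(win)
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack([x[i * hop:i * hop + n_fft] * win for i in range(n_frames)])
    return np.fft.rfft(frames, axis=1)


def istft_ls(X, win, hop, length):
    """Least-squares signal estimate from a (possibly inconsistent) STFT, after Griffin and Lim:
    x(n) = sum_m w(n - mS) y_m(n) / sum_m w(n - mS)^2."""
    n_fft = len(win)
    frames = np.fft.irfft(X, n=n_fft, axis=1)
    num = np.zeros(length)
    den = np.zeros(length)
    for i, frame in enumerate(frames):
        start = i * hop
        num[start:start + n_fft] += win * frame
        den[start:start + n_fft] += win ** 2
    return num / np.maximum(den, 1e-12)


def lse_istftm(Y_mag, win, hop, length, M=100, seed=0):
    """Estimate a signal whose STFT magnitude approximates Y_mag using M iterations."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal(length)                  # initialize with white noise
    for _ in range(M):
        X = stft(x, win, hop)                        # analyse the current estimate
        X_hat = Y_mag * np.exp(1j * np.angle(X))     # keep its phase, impose the target amplitude
        x = istft_ls(X_hat, win, hop, length)        # least-squares overlap-add resynthesis
    return x


n_fft, hop = 256, 64
win = np.hamming(n_fft)
length = 59 * hop + n_fft                            # exactly 60 frames
t = np.arange(length) / 8000.0
x_true = np.sin(2 * np.pi * 440 * t) + 0.5 * np.sin(2 * np.pi * 1000 * t)
Y = np.abs(stft(x_true, win, hop))                   # stand-in for an amplitude spectrum from MFCC inversion
x_est = lse_istftm(Y, win, hop, length, M=100)
```

Griffin and Lim show that the distance between the estimate's STFT magnitude and the target does not increase from one iteration to the next, so M trades runtime against spectral consistency. Samples near the signal edges, covered by a single tapered frame, can be amplified by the normalization, which is one reason practical systems pad the signal.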

3. Simulation Experiment

3.1. Experimental Conditions

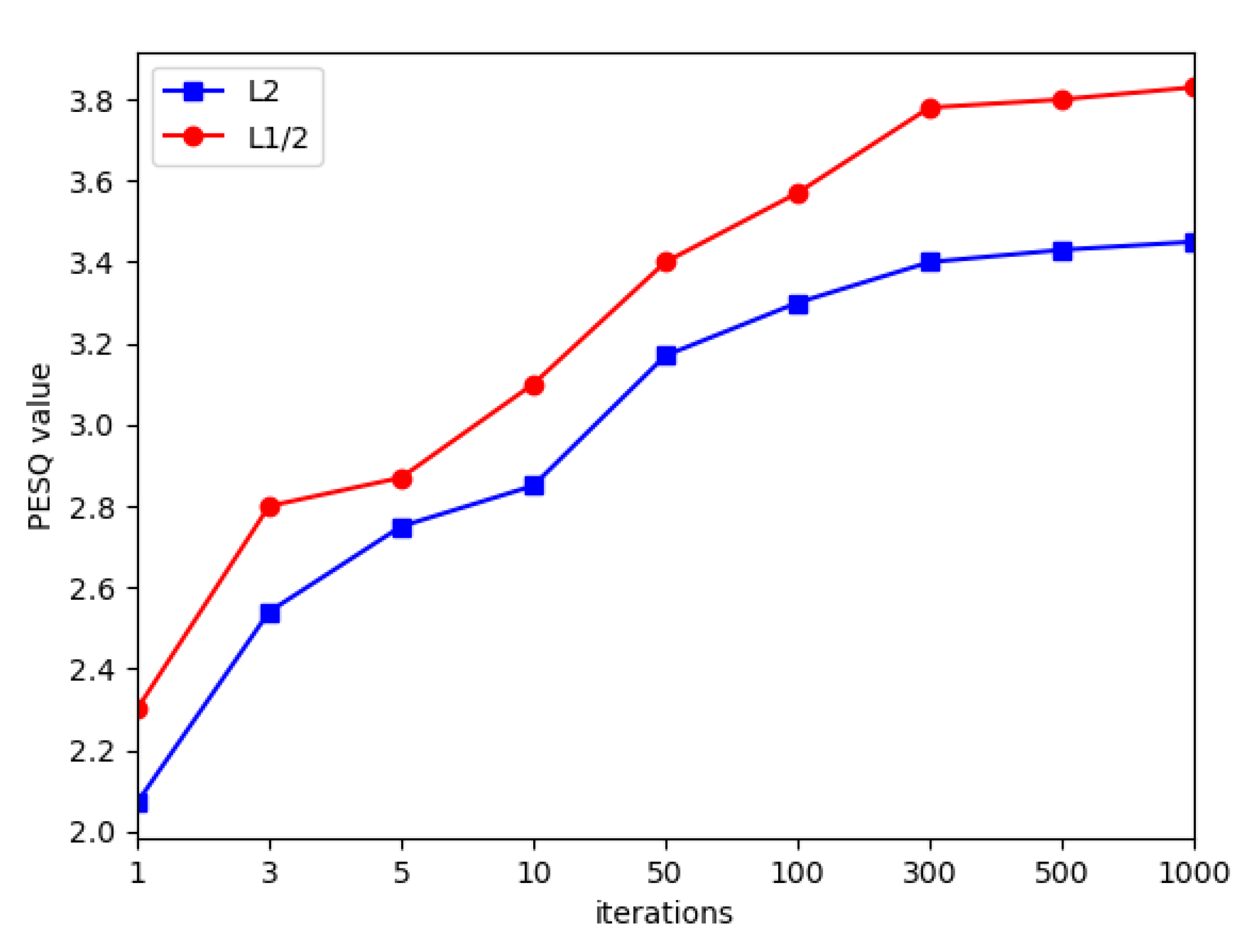

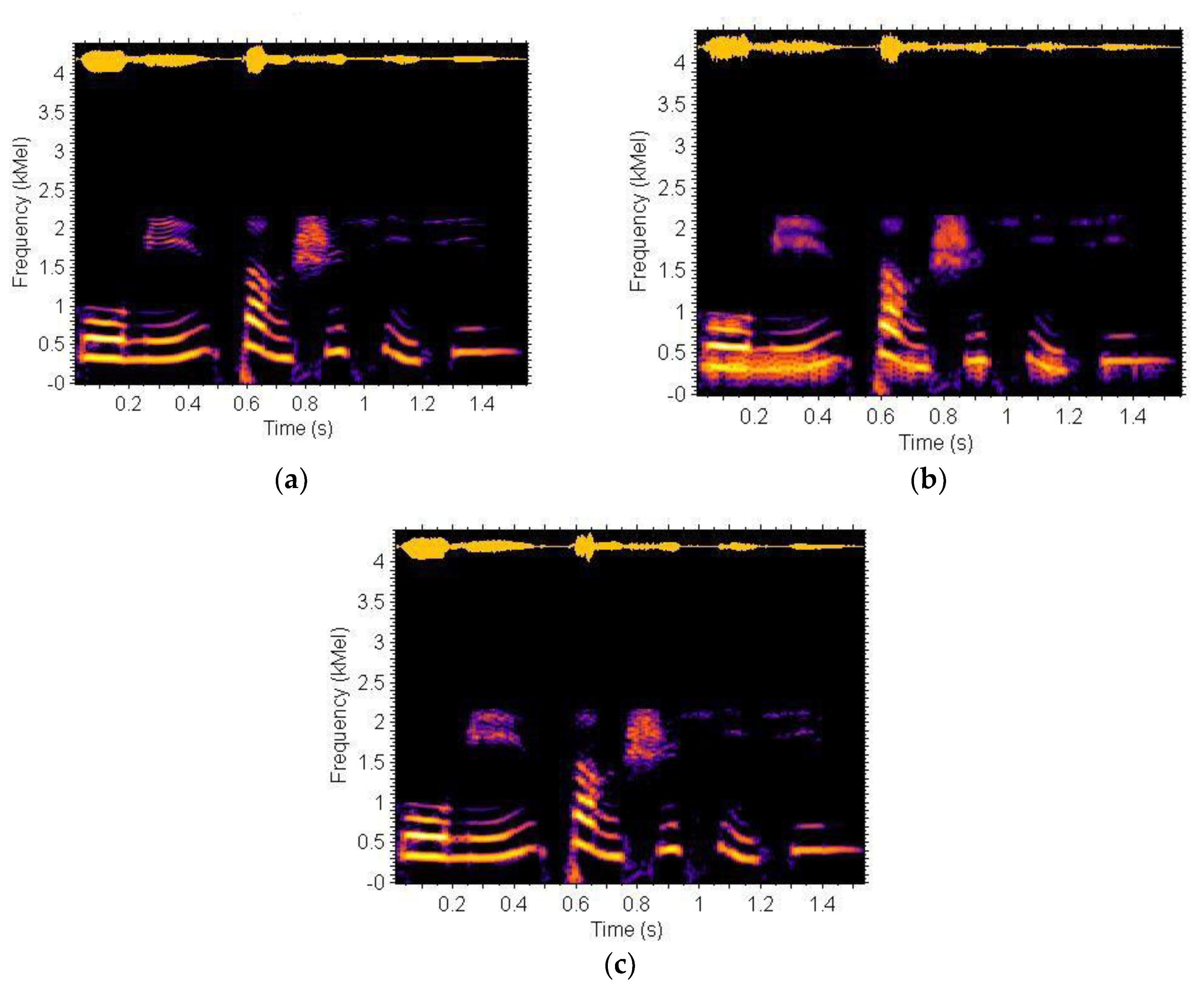

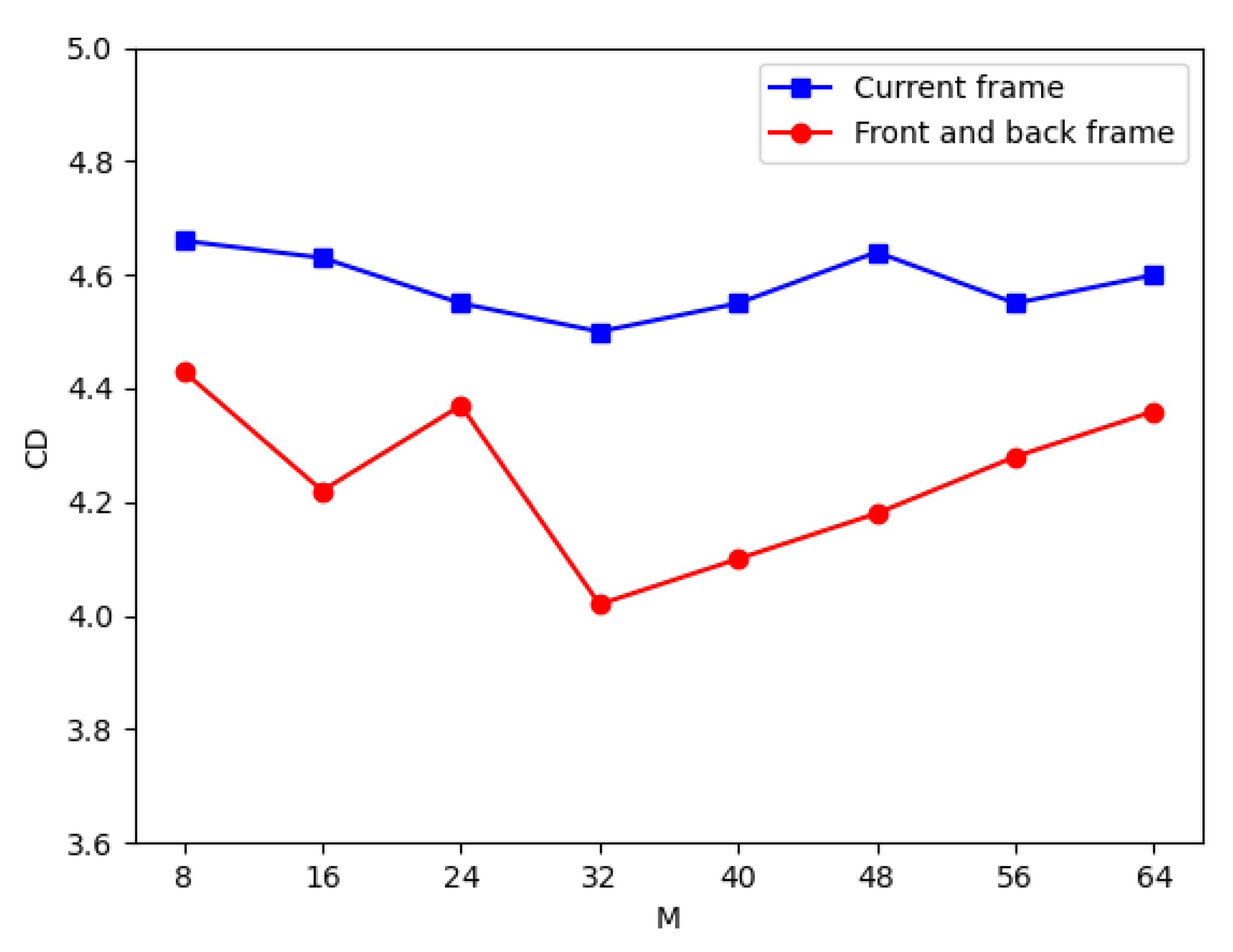

3.2. Experimental Results

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Grozdić, Đ.T.; Jovičić, S.T. Whispered speech recognition using deep denoising autoencoder and inverse filtering. IEEE/ACM Trans. Audio Speech Lang. Process. 2017, 25, 2313–2322.

- Deng, J.; Frühholz, S.; Zhang, Z.; Schuller, B. Recognizing emotions from whispered speech based on acoustic feature transfer learning. IEEE Access 2017, 5, 5235–5246.

- Wang, J.C.; Chin, Y.H.; Hsieh, W.C.; Lin, C.-H.; Chen, Y.-R.; Siahaan, E. Speaker identification with whispered speech for the access control system. IEEE Trans. Autom. Sci. Eng. 2015, 12, 1191–1199.

- Kelly, F.; Hansen, J.H.L. Analysis and calibration of Lombard effect and whisper for speaker recognition. IEEE/ACM Trans. Audio Speech Lang. Process. 2021, 29, 927–942.

- Raeesy, Z.; Gillespie, K.; Ma, C.; Drugman, T.; Gu, J.; Maas, R.; Rastrow, A.; Hoffmeister, B. LSTM-based whisper detection. In Proceedings of the 2018 IEEE Spoken Language Technology Workshop (SLT), Athens, Greece, 18–21 December 2018; pp. 139–144.

- Galić, J.; Popović, B.; Pavlović, D.Š. Whispered speech recognition using hidden Markov models and support vector machines. Acta Polytech. Hung. 2018, 15, 11–29.

- Deng, J.; Xu, X.; Zhang, Z.; Frühholz, S.; Schuller, B. Exploitation of phase-based features for whispered speech emotion recognition. IEEE Access 2016, 4, 4299–4309.

- Sardar, V.M.; Shirbahadurkar, S.D. Timbre features for speaker identification of whispering speech: Selection of optimal audio descriptors. Int. J. Comput. Appl. 2021, 43, 1047–1053.

- Houle, N.; Levi, S.V. Acoustic differences between voiced and whispered speech in gender diverse speakers. J. Acoust. Soc. Am. 2020, 148, 4002–4013.

- Nakamura, K.; Toda, T.; Saruwatari, H.; Shikano, K. Speaking-aid systems using GMM-based voice conversion for electrolaryngeal speech. Speech Commun. 2012, 54, 134–146.

- Lian, H.; Hu, Y.; Yu, W.; Zhou, J.; Zheng, W. Whisper to normal speech conversion using sequence-to-sequence mapping model with auditory attention. IEEE Access 2019, 7, 130495–130504.

- Huang, C.; Tao, X.Y.; Tao, L.; Zhou, J.; Bin Wang, H. Reconstruction of whisper in Chinese by modified MELP. In Proceedings of the 2012 7th International Conference on Computer Science & Education (ICCSE), Melbourne, VIC, Australia, 14–17 July 2012; pp. 349–353.

- Li, J.; McLoughlin, I.V.; Song, Y. Reconstruction of pitch for whisper-to-speech conversion of Chinese. In Proceedings of the 9th International Symposium on Chinese Spoken Language Processing, Singapore, 12–14 September 2014; pp. 206–210.

- Jovičić, S.T.; Šarić, Z. Acoustic analysis of consonants in whispered speech. J. Voice 2008, 22, 263–274.

- Perrotin, O.; McLoughlin, I.V. Glottal flow synthesis for whisper-to-speech conversion. IEEE/ACM Trans. Audio Speech Lang. Process. 2020, 28, 889–900.

- Sharifzadeh, H.R.; McLoughlin, I.V.; Ahmadi, F. Regeneration of Speech in Speech-Loss Patients. In Proceedings of the 13th International Conference on Biomedical Engineering, Singapore, 12–14 September 2014; Springer: Berlin/Heidelberg, Germany, 2009; pp. 1065–1068.

- Fan, X.; Lu, J.; Xu, B.L. Study on the conversion of Chinese whispered speech into normal speech. Audio Eng. 2005, 12, 44–47.

- Li, X.L.; Ding, H.; Xu, B.L. Phonological segmentation of whispered speech based on the entropy function. Acta Acustica 2005, 1, 69–75.

- Toda, T.; Black, A.W.; Tokuda, K. Voice Conversion Based on Maximum-Likelihood Estimation of Spectral Parameter Trajectory. IEEE Trans. Audio Speech Lang. Process. 2007, 15, 2222–2235.

- Janke, M.; Wand, M.; Heistermann, T.; Schultz, T.; Prahallad, K. Fundamental frequency generation for whisper-to-audible speech conversion. In Proceedings of the 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014; pp. 2579–2583.

- Grimaldi, M.; Cummins, F. Speaker identification using instantaneous frequencies. IEEE Trans. Audio Speech Lang. Process. 2008, 16, 1097–1111.

- Toda, T.; Nakagiri, M.; Shikano, K. Statistical voice conversion techniques for body-conducted unvoiced speech enhancement. IEEE Trans. Audio Speech Lang. Process. 2012, 20, 2505–2517.

- Li, J.; McLoughlin, I.V.; Dai, L.R.; Ling, Z. Whisper-to-speech conversion using restricted Boltzmann machine arrays. Electron. Lett. 2014, 50, 1781–1782.

- Chen, X.; Yu, Y.; Zhao, H. F0 prediction from linear predictive cepstral coefficient. In Proceedings of the 2014 Sixth International Conference on Wireless Communications and Signal Processing (WCSP), Hefei, China, 23–25 October 2014; pp. 1–5.

- Boucheron, L.E.; De Leon, P.L.; Sandoval, S. Low bit-rate speech coding through quantization of mel-frequency cepstral coefficients. IEEE Trans. Audio Speech Lang. Process. 2011, 20, 610–619.

- Wenbin, J.; Rendong, Y.; Peilin, L. Speech reconstruction for MFCC-based low bit-rate speech coding. In Proceedings of the 2014 IEEE International Conference on Multimedia and Expo Workshops (ICMEW), Chengdu, China, 14–18 July 2014; pp. 1–6.

- Borde, P.; Varpe, A.; Manza, R.; Yannawar, P. Recognition of isolated words using Zernike and MFCC features for audio visual speech recognition. Int. J. Speech Technol. 2015, 18, 167–175.

- Xu, Z.; Chang, X.; Xu, F.; Zhang, H. L1/2 regularization: A thresholding representation theory and a fast solver. IEEE Trans. Neural Netw. Learn. Syst. 2012, 23, 1013–1027.

- Liu, J.X.; Wang, D.; Gao, Y.L.; Zheng, C.-H.; Xu, Y.; Yu, J. Regularized non-negative matrix factorization for identifying differentially expressed genes and clustering samples: A survey. IEEE/ACM Trans. Comput. Biol. Bioinform. 2017, 15, 974–987.

- Parchami, M.; Zhu, W.P.; Champagne, B.; Plourde, E. Recent developments in speech enhancement in the short-time Fourier transform domain. IEEE Circuits Syst. Mag. 2016, 16, 45–77.

- Yang, M.; Qiu, F.; Mo, F. A linear prediction algorithm in low bit rate speech coding improved by multi-band excitation model. Acta Acust. 2001, 26, 329–334.

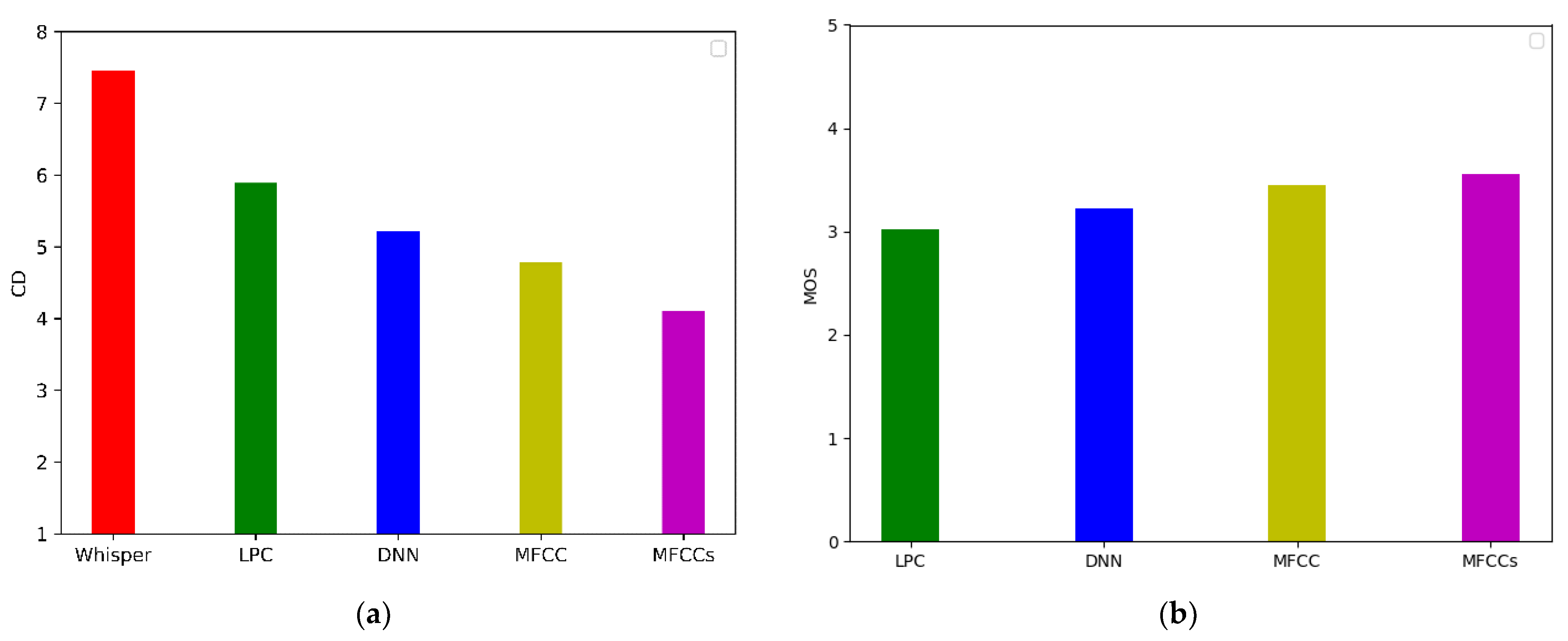

| Conversion Method | Linear Predictive Coefficient (LPC) | DNN | MFCC | MFCCs |
|---|---|---|---|---|
| Mean MOS | 3.02 | 3.22 | 3.45 | 3.56 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhu, Q.; Wang, Z.; Dou, Y.; Zhou, J. Whispered Speech Conversion Based on the Inversion of Mel Frequency Cepstral Coefficient Features. Algorithms 2022, 15, 68. https://doi.org/10.3390/a15020068
