Application of the Reed-Solomon Algorithm as a Remote Sensing Data Fusion Tool for Land Use Studies

Abstract

1. Introduction

1.1. Radiometric and Spectral Resolution

1.2. Image Data Fusion

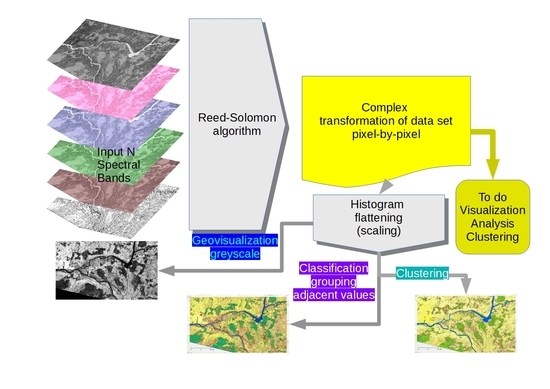

2. Methods and Materials

2.1. Reed-Solomon Codes (RS Algorithm)

2.1.1. Experience and Reinvention of the Algorithm

2.1.2. Deeper Insight into the Reed-Solomon Algorithm

2.1.3. Application of the Reed-Solomon Algorithm for Remote Sensing Data Fusion

Algorithm 1 Reed-Solomon Pixel-Level Algorithm for Remote Sensing Data Fusion

input a = 256 // integer, number of possible values at the given radiometric resolution (2^8 for 8-bit data)
input k = 9 // integer, number of spectral bands (raster layers)
input x(row, col, i) // integer, value of layer i in the stack of raster layers at the given row and column
output PX // integer, fused (final) output pixel value
intermediate ppx[k] // list of k integers
PX = 0
for i in range(k):
    ppx[i] = x(row, col, i) // read the pixel values of the raster stack at the given row and column
for i in range(k):
    PX = PX + ppx[i] * a**i // i counts from 0, so band i + 1 is weighted by a^i
print PX
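As a hedged illustration, Algorithm 1 can be sketched in Python as below. The function names `rs_fuse_pixel` and `rs_fuse_stack` are hypothetical, and the raster stack is assumed to be a nested list indexed as `stack[band][row][col]` rather than any particular GIS file format. Python's arbitrary-precision integers are used because nine 8-bit bands need up to 72 bits, more than a 64-bit integer can hold.

```python
from typing import List, Sequence


def rs_fuse_pixel(values: Sequence[int], a: int = 256) -> int:
    """Fuse the k band values of one pixel into a single integer (Algorithm 1).

    values[i] is band i + 1 and is weighted by a**i, so band 1 is the
    least significant "digit" of the resulting base-a number.
    """
    px = 0
    for i, v in enumerate(values):
        if not 0 <= v < a:
            raise ValueError(f"band {i + 1} value {v} is outside 0..{a - 1}")
        px += v * a**i
    return px


def rs_fuse_stack(stack: Sequence[Sequence[Sequence[int]]], a: int = 256) -> List[List[int]]:
    """Apply rs_fuse_pixel to every (row, col) of a k-band raster stack.

    Assumed (hypothetical) layout: stack[band][row][col], all bands equal in size.
    """
    k = len(stack)
    rows, cols = len(stack[0]), len(stack[0][0])
    return [
        [rs_fuse_pixel([stack[i][r][c] for i in range(k)], a) for c in range(cols)]
        for r in range(rows)
    ]


if __name__ == "__main__":
    # Band values 1..9 taken from the worked example in the reconstruction table.
    pixel = [150, 75, 56, 188, 173, 204, 109, 210, 36]
    print(rs_fuse_pixel(pixel))  # 679245787220529400726, i.e. about 6.79246e20
```

With a = 256 and k = 9, every fused value lies between 0 and 256**9 − 1, and distinct band combinations always give distinct fused values.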

2.2. Empirical Verification Case Study of Neighborhood of Legionowo Area

3. Results

Visualizations

4. Remarks

5. Discussion

Algorithm 2 Reed-Solomon pixel-level reverse algorithm for remote sensing data fusion

input a = 256 // integer, number of possible values at the given radiometric resolution, used as the divisor
input k = 9 // integer, number of spectral bands (raster layers)
input PX // integer, fused pixel value
output ppx[k] // integers, reconstructed original band values
for i in range(k):
    ppx[i] = 0 // reset the reconstructed values for the stack of raster layers
for i in range(k):
    ppx[i] = PX mod a // remainder: reconstructed original pixel value of layer i
    PX = floor(PX / a) // quotient: dividend for the next raster layer
for i in range(k):
    print ppx[i]
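A matching sketch of Algorithm 2 follows, again with a hypothetical function name. Python's built-in `divmod` returns the quotient and the remainder in one step: the remainder is the recovered band value and the quotient becomes the dividend for the next band, which is why band 1 is recovered first.

```python
from typing import List


def rs_unfuse_pixel(px: int, k: int = 9, a: int = 256) -> List[int]:
    """Recover the k original band values from a fused pixel value (Algorithm 2)."""
    if not 0 <= px < a**k:
        raise ValueError(f"fused value {px} is outside 0..{a**k - 1}")
    values = []
    for _ in range(k):
        px, remainder = divmod(px, a)  # quotient -> dividend for the next band
        values.append(remainder)       # remainder -> reconstructed band value
    return values


if __name__ == "__main__":
    # Fused value of the worked example (band values 150, 75, ..., 36).
    print(rs_unfuse_pixel(679245787220529400726))
    # [150, 75, 56, 188, 173, 204, 109, 210, 36]
```

Because every band value is smaller than a, the base-a decomposition is unique, so the reverse step returns the original values exactly, provided the fused integer itself was stored without rounding.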

6. Conclusions

Funding

Conflicts of Interest


| Grid property | Value |
|---|---|
| File name | LE07_ABCDEFGHI.grd |
| Size | 939 rows × 1641 columns |
| EPSG | 32634 |
| Projection (PROJ_DESC) | UTM Zone 34/WGS84/meters |
| Pixel width | 30 m |
| Pixel height | 30 m |
| Grid data maximum | 4.43776518668 × 10²¹ (4,437,765,163,592,664,000,000) |
| Grid data minimum | 0 |
| Grid no-data value | 1.70141 × 10³⁸ |
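The grid maximum above approaches the largest value that nine 8-bit bands can produce. The sketch below, using only Python's built-in integers and floats, checks that bound and shows why a fused value must be kept as an exact integer for Algorithm 2 to work: such values need up to 72 bits, more than a 64-bit integer, while an IEEE 754 double keeps only 53 significant bits. It illustrates numeric limits only and is not a description of the storage format actually used for the grid above.

```python
A, K = 256, 9  # values per band and number of bands, as in the study

max_fused = A**K - 1            # all nine bands at 255
print(max_fused)                # 4722366482869645213695
print(max_fused.bit_length())   # 72
print(max_fused > 2**64 - 1)    # True: exceeds an unsigned 64-bit integer

# Fused value of the worked example; rounding it through a double loses the low bands.
px = 679245787220529400726
px_via_float = int(float(px))
print(px_via_float == px)       # False
print(px_via_float % A, px % A) # band 1 is no longer recovered as 150 from the rounded value
```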

Input: a = 256, k = 9; divisor = a.

| Spectral band no. | Input pixel value x (random) | Dividend | Reconstruction from outcome (modulo division) |
|---|---|---|---|
| 1 | 150 | 6.79246 × 10²⁰ | 150 |
| 2 | 75 | 2.65330 × 10¹⁸ | 75 |
| 3 | 56 | 1.03645 × 10¹⁶ | 56 |
| 4 | 188 | 4.04862 × 10¹³ | 188 |
| 5 | 173 | 1.58149 × 10¹¹ | 173 |
| 6 | 204 | 617,770,444 | 204 |
| 7 | 109 | 2,413,165 | 109 |
| 8 | 210 | 9426 | 210 |
| 9 | 36 | 36 | 36 |

RS algorithm outcome pixel value: 6.79246 × 10²⁰
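The worked example can be reproduced with a short sketch built around a hypothetical helper, `dividend_trace`. For each band it records the current dividend and its remainder modulo a, then passes the integer quotient on to the next band, which yields the dividend and remainder columns of the table above (large dividends are printed in scientific notation, as in the table).

```python
from typing import List, Tuple


def dividend_trace(px: int, k: int = 9, a: int = 256) -> List[Tuple[int, int, int]]:
    """Return (band, dividend, remainder) for each band, band 1 first."""
    rows = []
    for band in range(1, k + 1):
        rows.append((band, px, px % a))
        px //= a  # the integer quotient is the dividend for the next band
    return rows


if __name__ == "__main__":
    fused = 679245787220529400726  # RS algorithm outcome pixel value of the example
    for band, dividend, remainder in dividend_trace(fused):
        print(f"{band} | {dividend:.5e} | {remainder}")
```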

© 2020 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Werner, P.A. Application of the Reed-Solomon Algorithm as a Remote Sensing Data Fusion Tool for Land Use Studies. Algorithms 2020, 13, 188. https://doi.org/10.3390/a13080188
