Abstract

In this study, a new modification of the meta-heuristic approach called Co-Operation of Biology-Related Algorithms (COBRA) is proposed. The COBRA approach considered here is based on a fuzzy logic controller and is used for solving real-parameter optimization problems. Its basic idea is the cooperative work of six well-known biology-inspired algorithms, referred to as components. However, it was established that the search efficiency of COBRA depends on its ability to keep the balance between exploitation and exploration when solving optimization problems. The new modification of the COBRA approach is based on a different method for generating potential solutions. This method keeps a historical memory of successful positions found by individuals to lead them in different directions and thereby improve the exploitation and exploration capabilities. The proposed technique was applied to the COBRA components and to its basic steps. The newly proposed meta-heuristic, as well as other modifications of the COBRA approach and its components, were evaluated on three sets of benchmark problems. The experimental results obtained by all algorithms with the same computational effort are presented and compared. It was concluded that the proposed modification outperformed the other algorithms used in the comparison, which demonstrates its usefulness and workability.

1. Introduction

Many real-world problems can be formulated as optimization problems with properties such as many local optima, non-separability and asymmetry. These problems arise in various scientific fields, such as engineering and related areas. To solve them, researchers have proposed different methods in recent years, including heuristic optimization methods and their modifications [1,2]. Random search-based and nature-inspired algorithms are often faster and more efficient than traditional methods (Newton’s method, the bisection method, the Hooke-Jeeves method, etc.) when solving high-dimensional, complex, multi-modal optimization problems (see, for example, [3]). However, they also have difficulty keeping the balance between exploration (searching completely new areas of the search space) and exploitation (searching regions of the search space close to previously visited points) [4,5,6].

Biology-inspired (population-based) algorithms such as Particle Swarm Optimization (PSO) [7], Ant Colony Optimization (ACO) [8], the Artificial Bee Colony (ABC) [9], the Whale Optimization Algorithm (WOA) [10], the Grey Wolf Optimizer (GWO) [11], the Artificial Algae Algorithm (AAA) [12], Moth-Flame Optimization (MFO) [13] and others are among the most popular and frequently used heuristic optimization methods. These algorithms imitate the behavior of a group of animals (individual or social) or some of their features. Thus, optimization consists of generating a set of random solutions (also called individuals) and leading them towards the optimal solution of a given problem.

Biology-inspired algorithms have found a variety of applications to real-world problems from different areas due to their high efficiency (see, for example, [14,15,16]). These algorithms have gained popularity among researchers because they can be applied to various optimization problems regardless of the properties of the objective function. Nevertheless, according to the No Free Lunch (NFL) theorem, there is no universal method for solving all optimization problems [17]. Therefore, researchers keep proposing new optimization algorithms and improving existing ones so that a wider range of optimization problems can be solved efficiently.

One way to modify currently existing techniques consists of developing collective meta-heuristics, which use the advantages of several techniques at the same time and can therefore be more efficient [18,19,20]. In [21], the meta-heuristic approach COBRA (Co-Operation of Biology-Related Algorithms), based on the parallel functioning of several populations, was proposed for solving unconstrained real-valued optimization problems. Its main idea is the simultaneous work of several biology-inspired algorithms with similar schemes, which compete and cooperate with each other.

The original version of COBRA consisted of six popular biology-inspired component algorithms, namely the Particle Swarm Optimization Algorithm (PSO) [7], the Cuckoo Search Algorithm (CSA) [22], the Firefly Algorithm (FFA) [23], the Bat Algorithm (BA) [24], the Fish School Search Algorithm (FSS) [25] and, finally, the Wolf Pack Search Algorithm (WPS) [26]. However, various other heuristics (for example, the ones mentioned above) can also be used as component algorithms in COBRA, and previously conducted experiments demonstrated that even the already chosen biology-inspired algorithms can be combined in different ways [27].

Later, fuzzy logic-based controllers [28] were proposed for the automated selection of the biology-inspired algorithms to be included in the ensemble from a predefined set, and of the number of individuals in each population [29]. The idea of using fuzzy controllers for parameter adaptation of heuristics was previously explored by other researchers, for instance in [30,31], and its usefulness was established. The fuzzy-controlled COBRA modification was named COBRA-f, and its efficiency was demonstrated in [32].

The COBRA-f approach, like the original COBRA algorithm, was developed for continuous optimization [29]. Despite its effectiveness compared to the mentioned biology-inspired algorithms (that is, its components), the COBRA-f meta-heuristic still needs to address the problem of balancing exploitation and exploration [33]. As noted before, a variety of ideas have been proposed to find the exploration-exploitation balance in population-based biology-related algorithms, including methods of parameter adaptation [34,35,36], island models [37,38], population size control [39,40], and many others. One of the most valuable ideas, proposed for the Differential Evolution (DE) [41] algorithm in [42], is to use an external archive of potentially good solutions, which has a limited size and is updated during the optimization process. This idea is similar to the one used in multi-objective optimizers such as SPEA or SPEA2 [43], where an external archive of non-dominated solutions is maintained.

The main idea of the archive is to save promising solutions that may carry valuable information about the search space and its potentially good areas, thereby recording the algorithm’s successful search history [43]. This information could be used by any biology-related optimization heuristic, for instance [44,45]. In this study, the idea of applying a success history-based archive of potentially good solutions is implemented for the COBRA-f algorithm.

This paper is an extended version of our paper published in the proceedings of the 8th International Workshop on Mathematical Models and their Applications (IWMMA 2019) (Krasnoyarsk, Russian Federation, 18–21 November 2019) [46]. The algorithm introduced in [46] was additionally tested on two more sets of benchmark functions. Moreover, the changes in population size were observed while solving various benchmark problems with 10 and 30 variables. In addition, several modifications are discussed in this study, and the number of compared algorithms has been increased.

Therefore, in this paper, the original COBRA meta-heuristic and its version COBRA-f are presented first, followed by a description of the newly proposed method for the fuzzy-controlled COBRA. The next section presents and discusses the experimental results obtained by the original COBRA algorithm, COBRA-f with the fuzzy controller and the proposed approach, as well as the results obtained by COBRA’s components with and without the external archive. The conclusions are given in the last section.

2. Co-Operation of Biology-Related Algorithms (COBRA)

Five biology-inspired optimization methods, namely Particle Swarm Optimization (PSO) [7], Wolf Pack Search (WPS) [26], the Firefly Algorithm (FFA) [23], the Cuckoo Search Algorithm (CSA) [22] and the Bat Algorithm (BA) [24], were used as the basis for the meta-heuristic approach called Co-Operation of Biology-Related Algorithms, or COBRA [21]. These algorithms are referred to as the “component algorithms” of the COBRA approach. It should be noted that the number of component algorithms can be changed (increased or decreased), and this affects the workability of the COBRA meta-heuristic, as was shown in [27].

All of the mentioned population-based algorithms have their advantages and disadvantages. The possibility of exploiting the advantages of all of them simultaneously while solving a given optimization problem motivated the development of a new, potentially better, cooperative approach. Moreover, experimental results show that it is hard to determine which algorithm should be used for a given problem; a cooperative meta-heuristic removes the need to choose one of the mentioned biology-inspired algorithms [21].

The optimization process of the COBRA approach starts by generating one population for each biology-inspired component algorithm, that is, five populations (or six, after the Fish School Search (FSS) [25] algorithm was added to the collective). After that, all populations are executed in parallel, cooperating with each other.

All listed component algorithms are population-based heuristics, and thus, for each of them the population size or number of individuals (potential solutions) should be chosen beforehand, and this number does not change during the optimization process. However, the COBRA approach is a self-tuning meta-heuristic. Thus, first the minimum and maximum numbers of individuals throughout all populations are defined, and then the initial sizes of populations. Then the population size for each component algorithm changes automatically during the optimization process.

The number of individuals in the population of each component depends on the fitness values of these individuals; that is, the population size can increase or decrease during the optimization process. If the fitness value of the whole population did not improve during a given number of iterations, then the size of each population is increased; conversely, if it improved constantly during a given number of iterations, then the size of each population is decreased. Moreover, a population can grow by accepting individuals removed from other populations if its average fitness value is better than the average fitness value of all other populations. Thus, the “winner algorithm” is the algorithm whose population has the best average fitness value at a given step.
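The size-adaptation rule above can be sketched as follows. This is a minimal illustration under stated assumptions, not the original COBRA implementation: the function name `adapt_population_sizes`, the fixed `step` of one individual, and the rule that each losing population hands exactly `step` individuals to the winner are hypothetical choices made for the example.

```python
from typing import List, Sequence, Tuple


def adapt_population_sizes(
    sizes: Sequence[int],
    avg_fitness: Sequence[float],
    improved: Sequence[bool],
    step: int = 1,
    min_size: int = 0,
) -> Tuple[List[int], int]:
    """One COBRA-style size-adaptation step (minimization, hypothetical helper).

    sizes       -- current population size of each component
    avg_fitness -- average fitness of each population
    improved    -- for each iteration of the last period, whether the
                   fitness of the whole population improved
    Returns the new sizes and the index of the "winner" component.
    """
    new_sizes = list(sizes)
    if not any(improved):
        # stagnation: every population grows
        new_sizes = [s + step for s in new_sizes]
    elif all(improved):
        # steady improvement: every population shrinks (not below min_size)
        new_sizes = [max(min_size, s - step) for s in new_sizes]

    # the winner has the best (lowest) average fitness
    winner = min(range(len(new_sizes)), key=lambda k: avg_fitness[k])
    for k in range(len(new_sizes)):
        if k != winner and new_sizes[k] - step >= min_size:
            # assumption: each losing population gives `step` individuals to the winner
            new_sizes[k] -= step
            new_sizes[winner] += step
    return new_sizes, winner
```

For example, with three populations of 10 individuals, no improvement during the period and the second population having the best average fitness, all populations first grow to 11 and then the winner collects one individual from each of the others, giving `[10, 13, 10]`.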

The original COBRA algorithm additionally has a migration operator, which allows “communication” between the populations in the ensemble. More specifically, populations exchange individuals so that some of the worst individuals of each population are replaced by the best individuals of other populations. Thus, the group performance of all algorithms can be improved.
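A minimal sketch of the migration operator, assuming each population is a list of `(fitness, position)` pairs for a minimization problem. The name `migrate` and the parameter `n_migrants` (how many of the worst individuals are replaced) are hypothetical; a snapshot of all populations is taken first so that every population receives migrants from the pre-migration state.

```python
from typing import List, Tuple

Individual = Tuple[float, List[float]]  # (fitness, position)


def migrate(populations: List[List[Individual]], n_migrants: int = 1) -> List[List[Individual]]:
    """Replace the n_migrants worst individuals of each population with the
    best individuals of the other populations (minimization)."""
    # snapshot: each population sorted from best to worst before any exchange
    ranked = [sorted(pop, key=lambda ind: ind[0]) for pop in populations]
    result = []
    for k, pop in enumerate(ranked):
        donors = [ind for j, other in enumerate(ranked) if j != k for ind in other]
        best_donors = sorted(donors, key=lambda ind: ind[0])[:n_migrants]
        m = min(len(best_donors), len(pop))
        survivors = pop[: len(pop) - m]  # drop the m worst individuals
        # copy positions so populations do not share mutable lists
        result.append(survivors + [(f, list(x)) for f, x in best_donors[:m]])
    return result
```

Population sizes are unchanged by this step; only the worst members are overwritten, which matches the description of migration as an exchange rather than a resizing.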

The performance of the COBRA meta-heuristic approach was evaluated on a set of various benchmark and real-world problems and the experiments showed that COBRA works successfully and is reliable on different optimization problems [21]. Moreover, the simulations showed that COBRA outperforms its component algorithms when the dimension grows or when complicated problems are solved, and therefore, it should be used instead of them [21].

3. Fuzzy-Controlled COBRA

As was mentioned in the previous section, the original COBRA approach has six similar biology-inspired component algorithms, which mimic the collective behavior of their corresponding animal groups, thereby allowing the global optima of real-valued functions to be found. Performance analysis showed that all of them are sufficiently effective for solving optimization problems, and their workability has been established [21,47].

However, various other algorithms can be used as components of COBRA, and previously conducted experiments demonstrated that even the already chosen biology-inspired algorithms can be combined in different ways. For example, in [27] five different combinations of the population-based heuristics for the COBRA algorithm were presented, and their efficiency was examined on test problems from the CEC 2013 competition [48]. It was established that three of these combinations showed the best results, depending on the number of variables [27].

The problem of choosing the component algorithms and their population sizes was addressed by controllers based on fuzzy logic [28]. The fuzzy controller implements a more flexible parameter tuning algorithm than the original approach used in COBRA [29]. It operates with special fuzzification, inference and defuzzification schemes [28], which allow real-valued outputs to be generated. In [29], the component algorithms are rated with success values, which are used as the fuzzy controller inputs, while the amounts of population size change are its outputs.

The fuzzy logic controller used in this study had 7 inputs, namely the 6 success rates of the component algorithms and the success rate of the whole population, and 6 outputs, namely the number of individuals to add to or remove from each component algorithm. The success of each component was determined as the best fitness value achieved by that component, in accordance with the research presented in [29]. The 7th input variable was determined as the ratio of the number of steps during which the best-found fitness value (found by all algorithms together) was improved to the adaptation period, which was a parameter.
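The 7th input can be computed as in the sketch below, assuming the best-found fitness value is recorded once per step, starting one step before the adaptation period begins. The function name and this bookkeeping convention are assumptions for illustration; the normalization of the six component success values is not specified here, so the sketch covers only the 7th input.

```python
from typing import Sequence


def overall_success_rate(best_values: Sequence[float], adaptation_period: int) -> float:
    """Share of steps in the adaptation period that improved the best-found
    fitness (minimization). best_values holds adaptation_period + 1 entries:
    the value before the period and the value after each step."""
    if adaptation_period <= 0 or len(best_values) != adaptation_period + 1:
        raise ValueError("expected adaptation_period + 1 recorded values")
    # count the steps where the best-found value strictly decreased
    improvements = sum(1 for prev, cur in zip(best_values, best_values[1:]) if cur < prev)
    return improvements / adaptation_period
```

For instance, the history `[10.0, 9.0, 9.0, 7.0, 7.0]` over a period of 4 steps contains two improving steps, so the 7th input equals 0.5.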

To obtain the output values, the Mamdani fuzzy inference procedure was used, and the rules had the following form:

$$R_q: \text{IF } x_1 \text{ is } A_{q1} \text{ and } \ldots \text{ and } x_n \text{ is } A_{qn} \text{ THEN } y_1 \text{ is } B_{q1} \text{ and } \ldots \text{ and } y_m \text{ is } B_{qm},$$

where $R_q$ is the q-th fuzzy rule, $x = (x_1, \ldots, x_n)$ is the set of input values in n-dimensional space ($n = 7$ in this case), $y = (y_1, \ldots, y_m)$ is the set of outputs ($m = 6$), $A_{qi}$ is the fuzzy set for the i-th input variable, and $B_{qj}$ is the fuzzy set for the j-th output variable. The rule base consisted of 21 fuzzy rules structured as follows: each group of three rules described the case when one of the components achieved better fitness values than the others (with six components, 18 rules in total); the last 3 rules used the total success rate of all components (input 7) to decide whether solutions should be added to or removed from all components, thus regulating the amount of available computational resources [29]. Part of the rule base is presented in Table 1.

Table 1.

Part of the rule base.

Fixed triangular fuzzy terms were used for the input variables. In addition to the three classical terms, a fourth fuzzy term, “larger than 0”, was added, together with the “Don’t Care” (DC) condition. The fourth term and the DC condition are needed to simplify the rules and decrease their number [29].

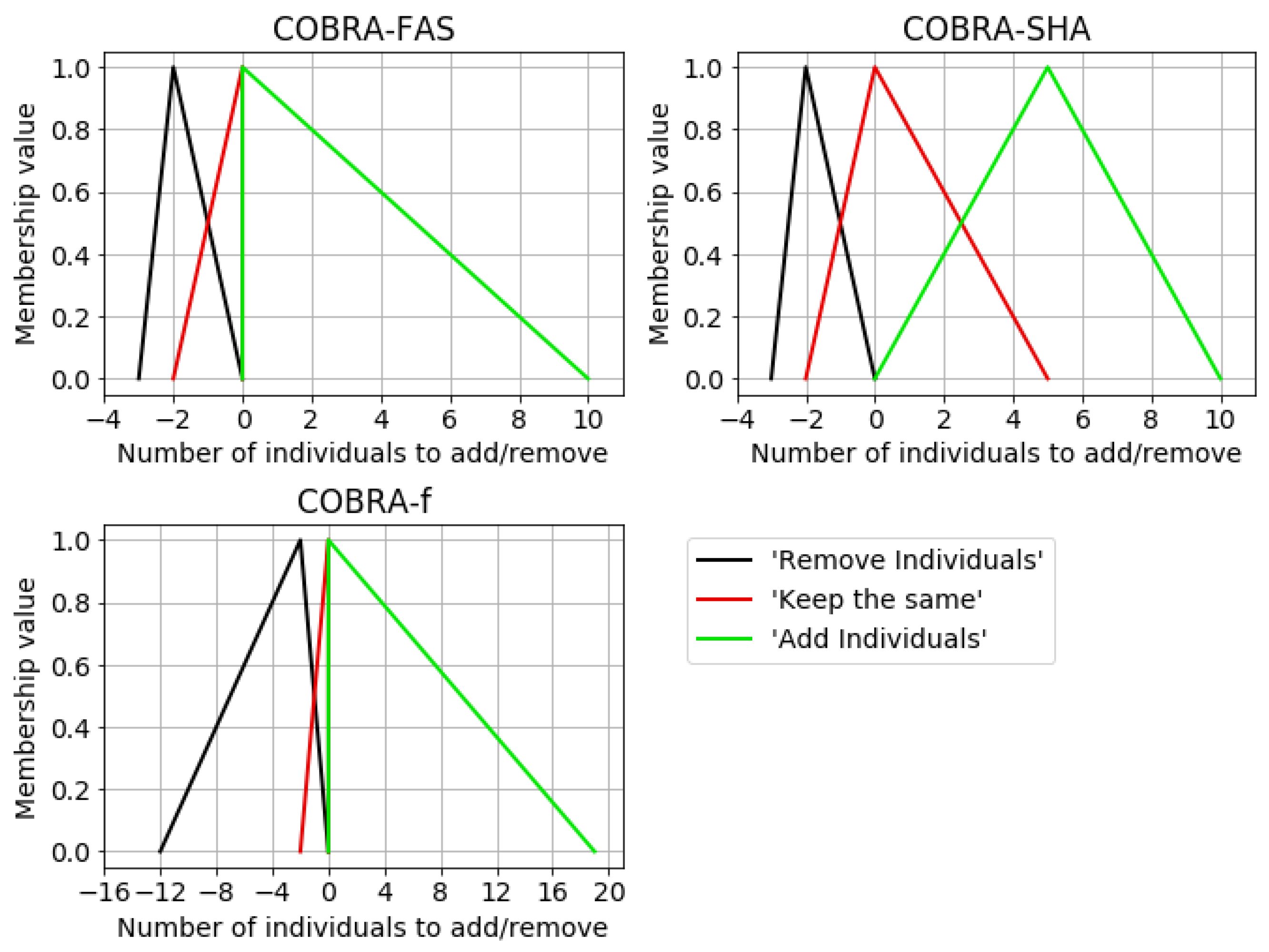

The output variables were also described by 3 triangular fuzzy terms. These terms were symmetrical, and their positions were determined by 2 pairs of values, which encoded the left and right positions of the central term together with the middle positions of the left and right terms, and the minimal and maximal values of the side terms. These values were optimized with the PSO heuristic [7], and the resulting parameter values were taken from [29]. Defuzzification was performed by computing the center of mass of the shape obtained after fuzzy inference.
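To make the inference and defuzzification steps concrete, here is a small sketch of single-output Mamdani inference with triangular terms: min for rule activation, max for aggregation, and a centroid computed on a uniform grid. It is a generic illustration, not the controller from [29]: the term parameters, the grid resolution, and the use of `None` to stand for the “Don’t Care” condition are assumptions.

```python
from typing import List, Optional, Sequence, Tuple

Term = Tuple[float, float, float]  # (left foot, peak, right foot)


def tri(x: float, a: float, b: float, c: float) -> float:
    """Triangular membership degree of x for the term (a, b, c)."""
    if x == b:
        return 1.0
    if x <= a or x >= c:
        return 0.0
    if x < b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


def mamdani_centroid(
    inputs: Sequence[float],
    rules: List[Tuple[List[Optional[Term]], Term]],
    y_min: float,
    y_max: float,
    n_samples: int = 201,
) -> float:
    """Single-output Mamdani inference followed by centroid defuzzification."""
    # rule activation: min over antecedents; None acts as "Don't Care"
    activations = []
    for antecedents, consequent in rules:
        strength = 1.0
        for x, term in zip(inputs, antecedents):
            if term is not None:
                strength = min(strength, tri(x, *term))
        activations.append((strength, consequent))

    # aggregate the clipped consequents with max and take the center of mass
    num = den = 0.0
    for k in range(n_samples):
        y = y_min + (y_max - y_min) * k / (n_samples - 1)
        mu = max((min(s, tri(y, *cons)) for s, cons in activations), default=0.0)
        num += y * mu
        den += mu
    return num / den if den > 0 else 0.0
```

With two rules mapping a “low” input to a negative output term and a “high” input to a positive one, an input halfway between them activates both rules equally and the centroid lands at 0, while a fully “high” input yields the peak of the positive term.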

The “communication” (migration) operator was not changed. The fuzzy-controlled COBRA performance was evaluated on a set of benchmark optimization problems with 10 and 30 variables from [48]. The experimental results showed that the COBRA-f algorithm can find the best solutions for many benchmark problems. Moreover, COBRA-f was compared with its components as well as with the original COBRA, and the comparison showed that COBRA-f is superior to the previously proposed biology-related component algorithms, especially as the dimension grows [29].

4. Proposed Approach

In this study, a new modification is introduced that uses success history-based position adaptation of potential solutions (SHPA). The main idea is to improve the search diversity of the biology-inspired component algorithms of the fuzzy-controlled COBRA meta-heuristic and, consequently, COBRA’s efficiency. The key concepts of the proposed technique are described below.

First, one population is generated for each component algorithm: a set of potential solutions, called individuals and represented as real-valued vectors of length D, is randomly generated, where D is the number of dimensions of the given optimization problem. Note that at this step the population size of each component is chosen beforehand; it is later changed automatically by the fuzzy controller. Additionally, an external archive of best-found positions is created for each population (component algorithm). Initially the external archive is empty; its size can then grow up to a maximum value, which is chosen by the end-user and stays the same during the run of the component algorithm.

The best position found by a given individual or in other words the local best-found position in the search space for each individual in each population is saved. Initially each individual’s current coordinates are used as its local best. If later a better position is found, then it will be used as the local best and the previous one will be stored in the mentioned external archive.

Algorithm 1 gives the pseudo-code of the process of updating the external archive for each component algorithm, for a minimization problem.

Algorithm 1. The process of updating the external archive for component algorithms.
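Since the body of Algorithm 1 is not reproduced here, the following is only a sketch of the update described in the text: when an individual improves on its personal best, the previous personal best is pushed into the archive. What happens when the archive is full is an assumption; here a random entry is overwritten, similar to the random removal used for the archive in JADE-style Differential Evolution. The function name is hypothetical.

```python
import random
from typing import List, Tuple


def update_personal_best(
    position: List[float],
    fitness: float,
    pbest: List[float],
    pbest_fit: float,
    archive: List[List[float]],
    max_size: int,
    rng=random,
) -> Tuple[List[float], float]:
    """Minimization: if the new position improves the personal best, the old
    personal best is stored in the component's archive (modified in place)."""
    if fitness < pbest_fit:
        if max_size > 0:
            if len(archive) < max_size:
                archive.append(list(pbest))
            else:
                # assumption: overwrite a random entry once the archive is full
                archive[rng.randrange(max_size)] = list(pbest)
        return list(position), fitness
    return pbest, pbest_fit
```

Because only superseded personal bests enter the archive, its contents are, by construction, positions that were once the best known to some individual, which is the “success history” the method relies on.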

As already mentioned, all component algorithms are executed in parallel after the six populations (one for each component) have been generated and the external archives have been created. Thus, when individuals change their positions in the search space according to the formulas of the corresponding component algorithm, they can, with some probability, use the potential solutions stored in the i-th external archive, where ....

It should be noted that the value of the probability depends on the considered biology-inspired component algorithm. More specifically, previously conducted research showed that only three components of the COBRA approach, namely the Firefly Algorithm, the Cuckoo Search Algorithm and the Bat Algorithm, demonstrate statistically better results by using an archive for the individual’s position adaptation [49]. Thus, only these three algorithms use archives during their execution.

First, let us consider the Bat Algorithm [24]. Each i-th individual of the BA population is represented by its coordinates $x_i = (x_{i1}, \ldots, x_{iD})$ and velocity $v_i = (v_{i1}, \ldots, v_{iD})$, where D is the number of dimensions of the search space. The following formulas are used for updating velocities and locations (solutions) in the BA approach:

$$v_i^{t+1} = v_i^t + (x_i^t - x_*) f_i,$$

$$x_i^{t+1} = x_i^t + v_i^{t+1},$$

where t and t+1 denote the current and the next iterations, $x_*$ is the current best solution found by the whole population, and $f_i$ is the frequency of the pulses emitted by the i-th individual [24]. In the proposed approach, with a given probability, a randomly chosen individual from an external archive (if the archive is not empty) is used instead of $x_*$. Note that the external archive is also selected at random, so it is not necessarily the archive created for the BA population. This is done in the expectation that individuals will move in multiple directions and will therefore be able to find better solutions.
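A sketch of one BA velocity and position update with the archive substitution described above. The frequency is drawn as f_i = f_min + (f_max − f_min)·β with β uniform on [0, 1), as in the standard Bat Algorithm; the default bounds, the name `bat_move`, and the choice to draw only among non-empty archives are assumptions. Loudness and pulse-rate handling of the full BA are omitted.

```python
import random
from typing import List, Sequence, Tuple


def bat_move(
    x: Sequence[float],
    v: Sequence[float],
    x_best: Sequence[float],
    archives: Sequence[Sequence[Sequence[float]]],
    p_archive: float,
    f_min: float = 0.0,
    f_max: float = 2.0,
    rng=random,
) -> Tuple[List[float], List[float]]:
    """One BA velocity/position update in which, with probability p_archive,
    the global best is replaced by a random member of a random archive."""
    f_i = f_min + (f_max - f_min) * rng.random()  # pulse frequency
    attractor = x_best
    non_empty = [arc for arc in archives if len(arc) > 0]
    if non_empty and rng.random() < p_archive:
        # the archive may belong to any component, not only to BA
        attractor = rng.choice(rng.choice(non_empty))
    v_new = [vi + (xi - ai) * f_i for vi, xi, ai in zip(v, x, attractor)]
    x_new = [xi + vi for xi, vi in zip(x, v_new)]
    return x_new, v_new
```

Setting `p_archive` to 0 recovers the plain BA update, which is a convenient sanity check when experimenting with the archive probability.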

For the other two biology-inspired component algorithms, FFA and CSA, the external archives were used in a similar way: with a given probability, the current point of attraction was replaced by a solution stored in an archive (itself chosen at random). More specifically, in the CSA approach individuals are sorted according to the objective function [22]. Then some of the worst ones are removed from the population, and new individuals are generated in their place by using the external archives with a given probability. In the FFA approach, a firefly (individual) moves towards another firefly if the latter has a better objective function value [23]. Thus, when the proposed technique is used in FFA, a firefly can also be moved towards individuals from the external archives.
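The FFA variant can be sketched in the same spirit. The attractiveness term β0·exp(−γr²) and the random step scaled by α follow the standard Firefly Algorithm; the parameter defaults, the name `firefly_move`, and drawing only from non-empty archives are assumptions made for the example.

```python
import math
import random
from typing import List, Sequence


def firefly_move(
    x_i: Sequence[float],
    x_j: Sequence[float],
    archives: Sequence[Sequence[Sequence[float]]],
    p_archive: float,
    beta0: float = 1.0,
    gamma: float = 1.0,
    alpha: float = 0.2,
    rng=random,
) -> List[float]:
    """Move firefly i towards a brighter firefly j or, with probability
    p_archive, towards a random member of a random non-empty archive."""
    target = x_j
    non_empty = [arc for arc in archives if len(arc) > 0]
    if non_empty and rng.random() < p_archive:
        target = rng.choice(rng.choice(non_empty))
    r2 = sum((a - b) ** 2 for a, b in zip(x_i, target))
    beta = beta0 * math.exp(-gamma * r2)  # attractiveness decays with distance
    return [a + beta * (b - a) + alpha * (rng.random() - 0.5) for a, b in zip(x_i, target)]
```

The caller is still responsible for the FFA brightness check (moving i only towards brighter fireflies); the sketch only shows where the archive member replaces the usual attractor.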

There are two basic steps after the simultaneous execution of all component algorithms: the fuzzy controller decides on the population sizes of the components (competition), and migration, that is, the exchange of individuals between populations (co-operation). More specifically, the size of each population can decrease, by removing some individuals, down to the minimal value chosen by the end-user, or increase (the overall maximum size of all populations together is also set by the end-user beforehand). When a population grows, the new individuals can be generated using the scheme in Algorithm 2.

Algorithm 2. Generating new individuals.

As already noted, all populations communicate with each other by exchanging individuals. However, in this modification of the fuzzy-controlled COBRA, some of the worst individuals of each population are replaced by new individuals generated by a scheme similar to Algorithm 2 (using the normal distribution), except that the current best position found by all populations is used and the external archive is also chosen at random.

Thus, the proposed success history-based position adaptation method depends on the probability of using an archive during position updates (three values, one for each component algorithm that uses its archive during execution), on the maximum archive size, and on a further set of probabilities (one for each component algorithm).

5. Results and Discussions

5.1. Numerical Benchmarks

To check the efficiency of the proposed algorithm, the modified fuzzy-controlled COBRA was tested on three different sets of test problems: 23 classical problems [50], nine standard benchmark problems with 10 and 30 variables [50], and 16 problems taken from the CEC 2014 competition [51]. These functions have been widely used in the literature (see, for example, [49,52]).

These sets are referred to as SET-1, SET-2 and SET-3, respectively. They are based on classical benchmark functions such as Ackley’s, Rastrigin’s, Katsuura’s, Griewank’s, Weierstrass’s, Sphere, HappyCat, Schwefel’s, HGBat and Rosenbrock’s functions, and span a diverse set of features such as noise in the fitness function, non-separability, multimodality, ill-conditioning and rotation, among others. The functions are divided into three groups: unimodal, high-dimensional multi-modal and low-dimensional multi-modal benchmark functions.

5.2. Compared Algorithms and Parametric Setup

The performance of the suggested modification of the COBRA algorithm (hereinafter called COBRA-SHA) was compared with other state-of-the-art algorithms such as PSO [7], WPS [26], FFA [23], CSA [22], BA [24] and FSS [25]. These algorithms have several parameters that must be initialized before running. The optimal control parameters usually depend on the problem and are unknown without prior knowledge. Therefore, the initial values of the necessary parameters for all algorithms were taken from the original papers in which the algorithms were proposed.

Furthermore, the proposed approach was compared with modifications of the FFA, CSA and BA algorithms that also use external archives, since it was established previously that the archives improve the workability of these heuristics [49]. These modifications are denoted FFA-a, CSA-a and BA-a, respectively.

To show the advantage of the proposed modification more clearly, it was also compared with the fuzzy-controlled COBRA-f [29] and with a similar modification of the COBRA meta-heuristic in which, unlike COBRA-SHA, each component algorithm can use only its own external archive (this modification was named COBRA-fas) [53]. Parameters of the fuzzy controllers for the COBRA-fas and COBRA-SHA approaches were found by PSO in the same way as for the COBRA-f algorithm [29]. The fuzzy sets for the outputs of the obtained controllers are shown in Figure 1.

Figure 1.

Fuzzy terms for all 6 outputs.

For all mentioned biology-inspired component algorithms, the initial population size was equal to 100 on each of the 23 benchmark functions from SET-1, and the maximum number of iterations was equal to 1000. Accordingly, to check the efficiency of the proposed algorithm COBRA-SHA, the maximum number of function evaluations was set to 100 × 1000 = 100,000. The same number of function evaluations was used for the fuzzy-controlled COBRA-f and the modification COBRA-fas. All algorithms included in the comparison were run 30 times on each benchmark problem from SET-1.

When solving the optimization problems from SET-2, the maximum number of generations was 5000, and the population size of each component algorithm, as well as of the FFA-a, CSA-a and BA-a modifications, was equal to 100. Therefore, the maximum number of function evaluations for the COBRA-f, COBRA-fas and COBRA-SHA algorithms was set to 100 × 5000 = 500,000. The number of program runs for the problems from SET-2 was the same as for SET-1.

Finally, 16 test functions taken from the CEC 2014 Special Session on Real-Parameter Optimization [51] were solved 51 times by all mentioned heuristics. All these functions are minimization problems, and all of them are shifted and scaled. The same search range, [−100, 100]^D, was defined for all of them, where D is the number of dimensions. For all algorithms included in the comparison, the maximum number of function evaluations was equal to 10,000 × D. The population size for the component algorithms and their modifications was set to 100.

During the experiments, the maximum archive size for each component of the COBRA-fas and COBRA-SHA meta-heuristics, as well as for the FFA-a, CSA-a and BA-a algorithms, was equal to 50. The probabilities of using the external archive for FFA-a, CSA-a and BA-a were set to the values determined in previously conducted experiments [49], and the same probabilities were used for the respective components of COBRA-fas and COBRA-SHA. For the remaining component algorithms, the probability of using the external archive was set to 0 (the archive was not used during the execution of that component algorithm but was still updated when the conditions applied). Finally, the probability for the i-th (...) component algorithm of the COBRA-SHA meta-heuristic was also fixed.

For the collective meta-heuristic COBRA-f and its modifications mentioned in this study, while solving problems from SET-1, SET-2 and SET-3 the minimum population size for each component was set to 0, but if the total sum of population sizes was equal to 0 then all population sizes increased to 10. Additionally, the maximum total sum of population sizes was set to 300.
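The population-size bounds above can be enforced with a helper like the one below. The name and the proportional down-scaling used when the total exceeds the maximum are assumptions; the text only states the bounds (minimum 0 per component, reset to 10 when all sizes reach 0, maximum total of 300), not how the maximum is enforced.

```python
from typing import List, Sequence


def enforce_size_limits(
    sizes: Sequence[int],
    min_size: int = 0,
    reset_size: int = 10,
    max_total: int = 300,
) -> List[int]:
    # lower bound for every component
    bounded = [max(min_size, s) for s in sizes]
    # if every population is empty, restart all of them with reset_size individuals
    if sum(bounded) == 0:
        bounded = [reset_size] * len(bounded)
    total = sum(bounded)
    if total > max_total:
        # assumption: shrink proportionally; the integer floor keeps the total
        # at or below max_total (with min_size = 0 this never violates the minimum)
        bounded = [s * max_total // total for s in bounded]
    return bounded
```

For example, four components of 100 individuals and two empty ones (400 in total) would be scaled to 75 each, keeping the total at the limit of 300.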

5.3. Numerical Analysis on Benchmark Functions

5.3.1. Numerical Results for SET-1

Each of the 23 problems was solved by all the stated algorithms, and the mean value, standard deviation, median value and worst value of the best-so-far solution at the last iteration are reported in Table 2. These statistics are computed over the 30 program runs, and the best results are shown in bold type in Table 2.

Table 2.

Minimization results of 23 benchmark functions from SET-1 for compared algorithms.

From Table 2 it can be observed that the proposed approach COBRA-SHA outperformed the other state-of-the-art approaches and their modifications, as well as COBRA-f and the similar modification COBRA-fas, on the first two unimodal functions in terms of the mean, standard deviation, median and worst value of the results. Regarding function , COBRA-SHA was outperformed only by COBRA-fas in terms of the median value, while it was the best among the compared algorithms according to the other statistics.

The fuzzy-controlled COBRA outperformed the other algorithms on function . Regarding the fifth unimodal function, the CSA modification with the external archive demonstrated the best mean, standard deviation and worst values, whereas the median value obtained by the proposed approach COBRA-SHA was better. Several algorithms, including COBRA-f, COBRA-fas and COBRA-SHA, found the optimum value of function  in every program run. Finally, regarding function , COBRA-fas and CSA-a outperformed the other algorithms.

For the multi-modal functions – with many local minima, the final results are particularly important because these functions reflect an algorithm’s ability to escape from poor local optima and approach the global optimum. For functions , and , COBRA-SHA found the global minimum, as did the fuzzy-controlled COBRA and the similar modification COBRA-fas. For function , CSA with the external archive (CSA-a) outperformed the other algorithms included in the comparison. Regarding , the proposed approach COBRA-SHA was the best in terms of the median value, while CSA-a outperformed all the compared algorithms according to the other statistics. Moreover, for functions the proposed modification COBRA-SHA produced better results than the others.

Functions – have only a few local minima, and their dimension is also small. For functions , , , , , and , COBRA-SHA found the global minimum. Regarding and , PSO, COBRA-f, COBRA-fas and COBRA-SHA produced the same results. For function , PSO, FSS, COBRA-f, COBRA-fas and COBRA-SHA also gave the same values. Regarding function , COBRA-f, COBRA-fas and COBRA-SHA produced the same mean, standard deviation, median and worst values. Finally, for function , the two similar modifications proposed in this study, namely COBRA-fas and COBRA-SHA, demonstrated the same results.

From Table 2, it can be observed that the COBRA-SHA approach performs better than the other algorithms on the multi-modal low-dimensional benchmarks. For example, regarding function , the COBRA-SHA approach outperformed other algorithms included in the comparison in terms of the mean, median and worst values. However, for function COBRA-f and COBRA-fas were able to find the optimum value during each program run and they outperformed COBRA-SHA. Finally, regarding function , the best mean and median values were found by the proposed modifications COBRA-fas and COBRA-SHA.

Additionally, Table 3 presents the results of comparing COBRA-SHA with the other algorithms using the Mann-Whitney statistical test at significance level α. The following notation is used in Table 3: “+” means that COBRA-SHA was statistically better than a given algorithm, “−” means that it was statistically worse, and “=” means that there was no significant difference between their results.
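For readers who want to reproduce the “+/−/=” labels, the sketch below implements a two-sided Mann-Whitney U test with the normal approximation and tie correction, using only the Python standard library. The significance level `alpha = 0.05` is an assumed default (the level used for Table 3 is not recoverable here), no continuity correction is applied, and for minimization a smaller U for COBRA-SHA means it tends to produce lower values. In practice, `scipy.stats.mannwhitneyu` is the usual choice.

```python
import math
from typing import Sequence


def mann_whitney_sign(a: Sequence[float], b: Sequence[float], alpha: float = 0.05) -> str:
    """Two-sided Mann-Whitney U test (normal approximation, tie-corrected).

    Returns '+' if sample a is significantly smaller (better for minimization),
    '-' if it is significantly larger, and '=' otherwise.
    """
    n1, n2 = len(a), len(b)
    if n1 == 0 or n2 == 0:
        raise ValueError("both samples must be non-empty")
    pooled = sorted([(v, 0) for v in a] + [(v, 1) for v in b])
    n = n1 + n2
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + j + 2) / 2.0  # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[k] = avg_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1

    r1 = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    u1 = r1 - n1 * (n1 + 1) / 2.0
    mean_u = n1 * n2 / 2.0
    var_u = n1 * n2 / 12.0 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u <= 0.0:
        return "="  # all observations tied
    z = (u1 - mean_u) / math.sqrt(var_u)
    p_value = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided p-value
    if p_value >= alpha:
        return "="
    return "+" if u1 < mean_u else "-"
```

Applied per benchmark function to the 30 (or 51) final errors of COBRA-SHA and of one competitor, the counts of “+”, “−” and “=” give totals of the kind summarized in Figure 2.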

Table 3.

Results of the Mann-Whitney statistical test with for SET-1, comparison of COBRA-SHA with other approaches.

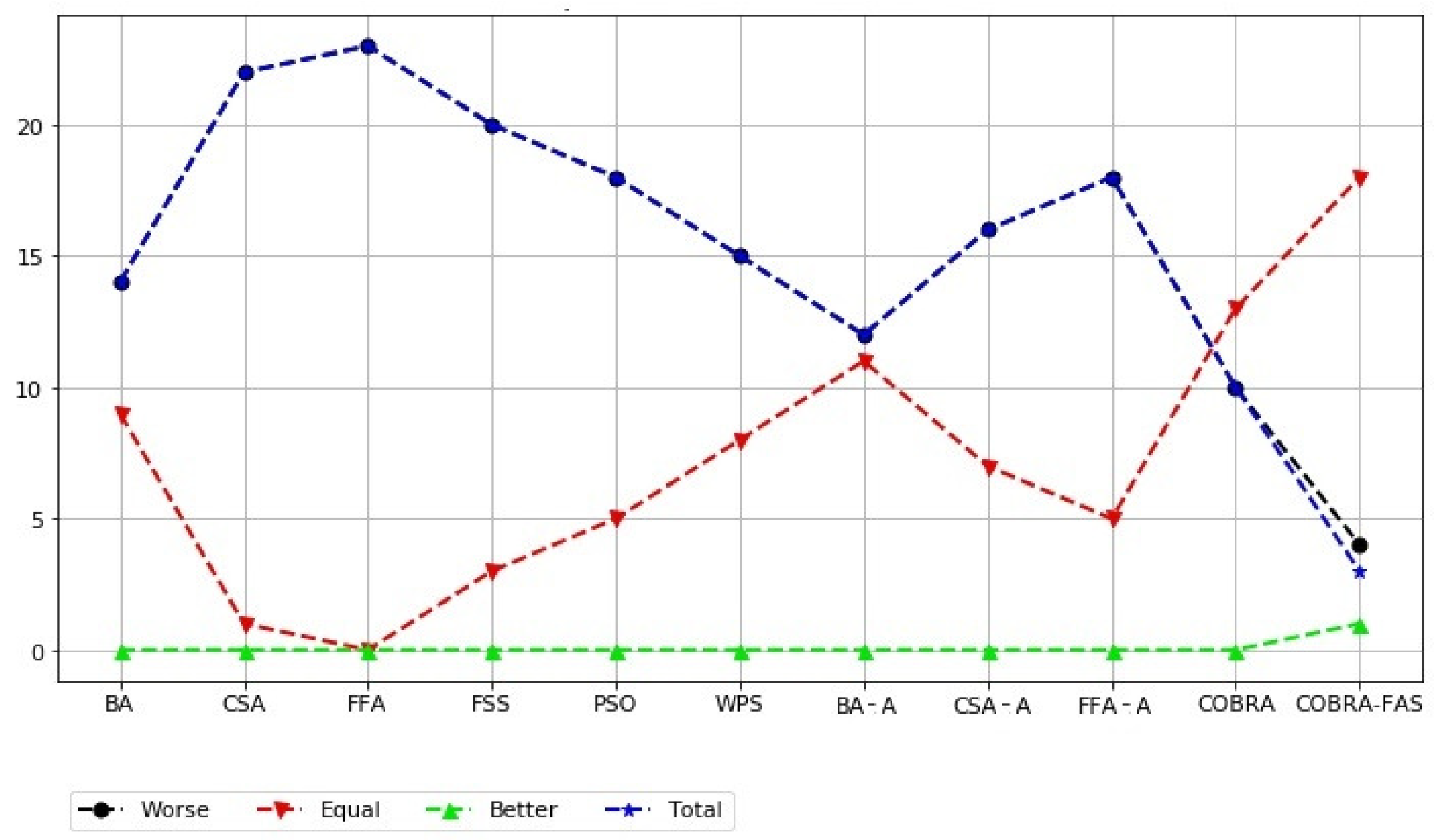

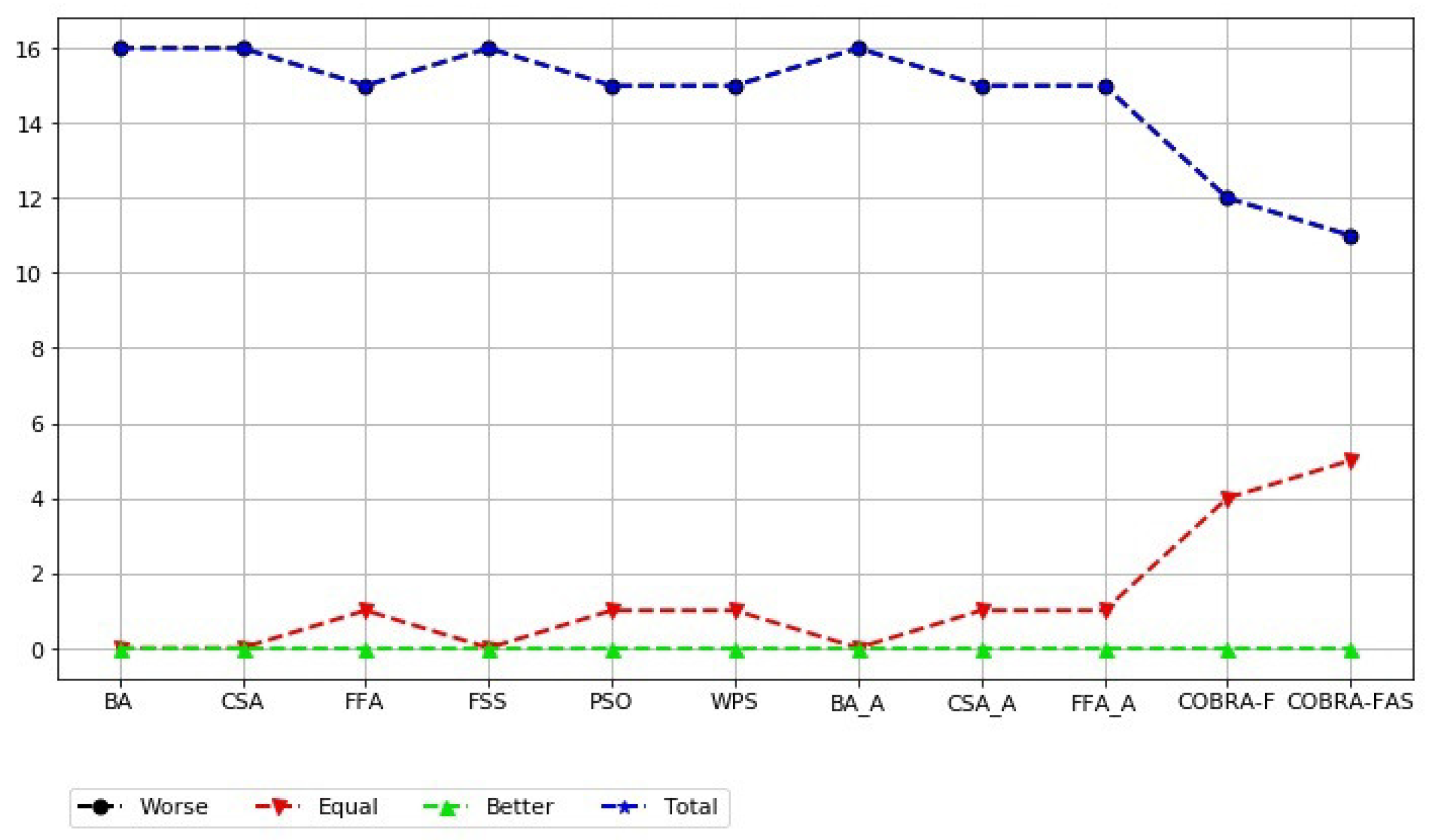

The results of the Mann-Whitney statistical test are also summarized in Figure 2. The values on the graph represent the total score, i.e., the number of improvements, deteriorations and non-significant differences between COBRA-SHA and each of the other approaches.
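Such a total score is a simple count over the per-function marks. A minimal sketch, assuming the marks are stored per competing algorithm with one mark per test function; the function name `score_table` and the sample marks are hypothetical and are not the values from Table 3.

```python
from collections import Counter


def score_table(marks_by_algorithm):
    """Count improvements ('+'), deteriorations ('-') and ties ('=') per competitor."""
    table = {}
    for name, marks in marks_by_algorithm.items():
        counts = Counter(marks)  # missing keys count as 0
        table[name] = (counts["+"], counts["-"], counts["="])
    return table


# Hypothetical marks over four test functions.
marks = {"PSO": ["+", "+", "=", "+"], "COBRA-fas": ["=", "=", "+", "-"]}
for name, (better, worse, same) in score_table(marks).items():
    print(f"{name}: {better} better, {worse} worse, {same} no significant difference")
```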

Figure 2.

Results of the Mann-Whitney statistical test with , comparison of COBRA-SHA with other approaches (SET-1).

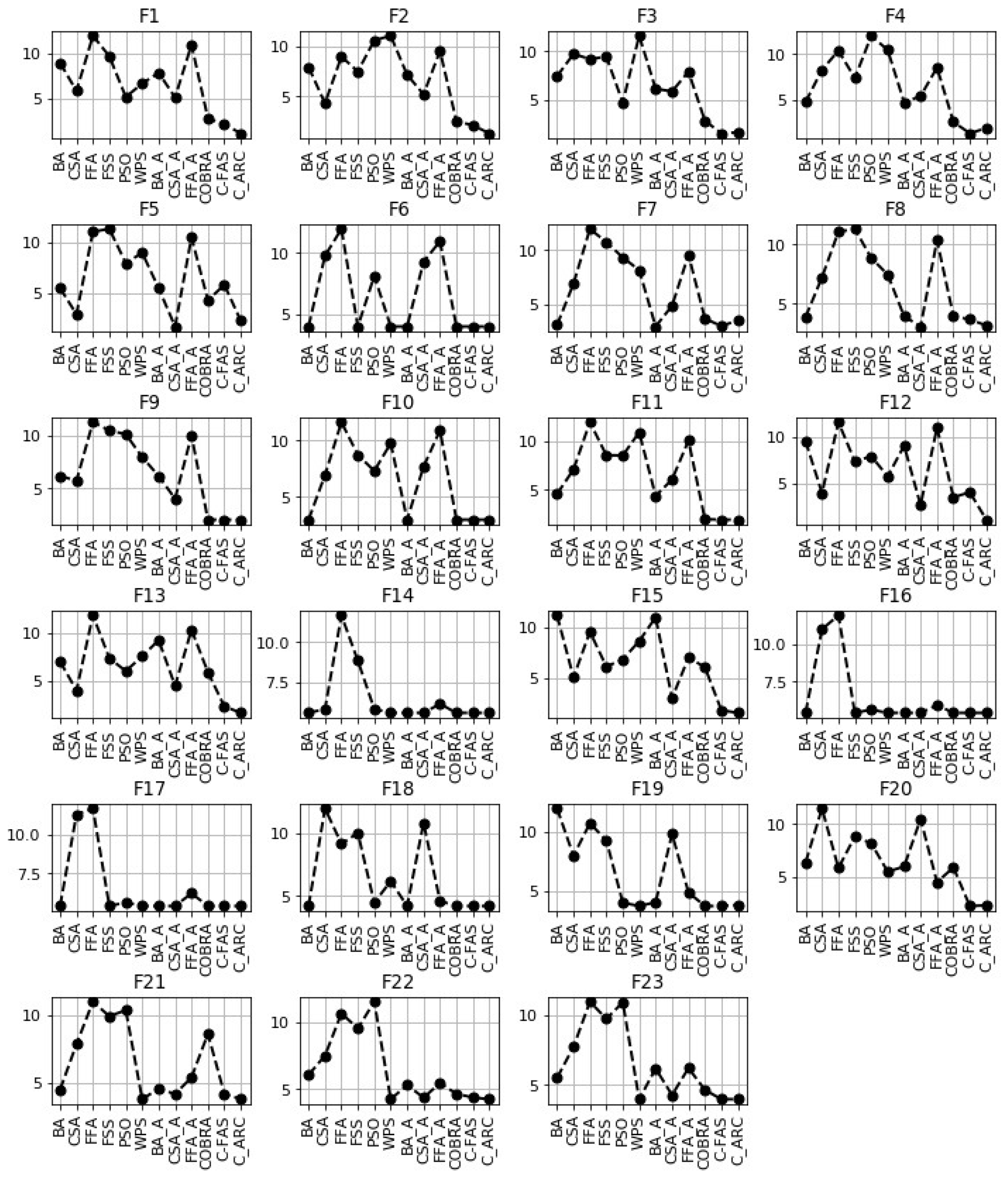

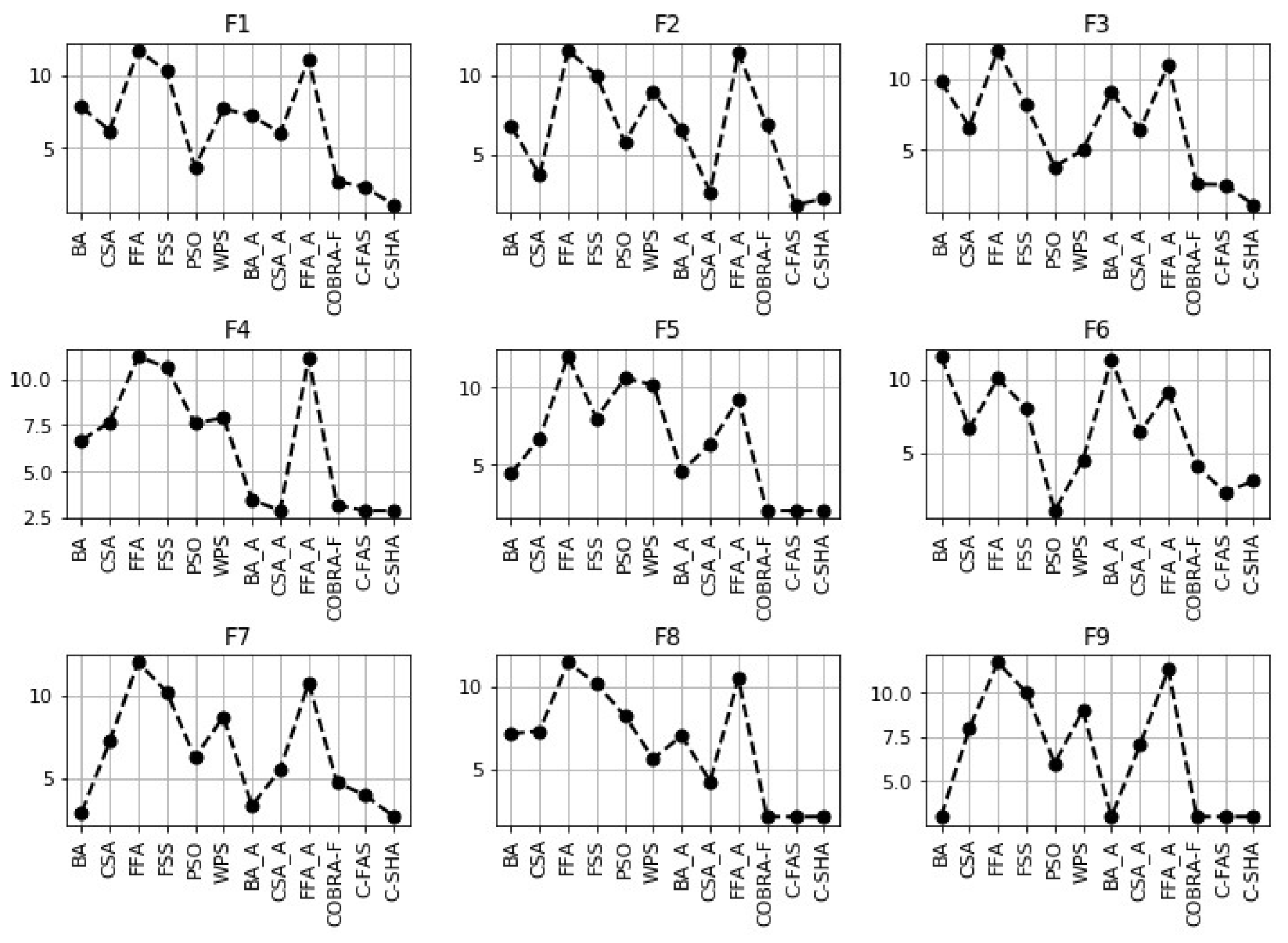

In addition, all the mentioned algorithms were compared with the proposed modification COBRA-SHA using the Friedman statistical test. The results are shown in Figure 3, where COBRA-f is denoted as “COBRA”, COBRA-fas as “C-FAS” and COBRA-SHA as “C ARC”. The Friedman ranking was performed separately for each test function, using the results of all runs.
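One way to implement this per-function Friedman ranking is sketched below. Because this section does not say how runs were paired, the sketch assumes that run r of every algorithm forms one block: within each block the algorithms are ranked (1 = lowest, i.e. best, value; ties receive average ranks) and the ranks are averaged over the blocks. It also returns the Friedman statistic 12N/(k(k+1)) (sum of R_j^2 − k(k+1)^2/4) without tie correction. Function names are hypothetical; averaging the mean ranks over all test functions would then give an overall ranking.

```python
def average_ranks(values):
    """1-based ranks (1 = smallest value); ties receive the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def friedman_ranks(results):
    """Mean Friedman ranks and statistic for one test function (minimization).

    results: dict algorithm -> final values, one per run; run r of every
    algorithm is treated as one block (an assumption of this sketch).
    """
    algorithms = list(results)
    n_blocks = len(results[algorithms[0]])
    k = len(algorithms)
    totals = {a: 0.0 for a in algorithms}
    for r in range(n_blocks):
        block = [results[a][r] for a in algorithms]
        for a, rank in zip(algorithms, average_ranks(block)):
            totals[a] += rank
    mean_ranks = {a: totals[a] / n_blocks for a in algorithms}
    # Friedman statistic without tie correction (chi-square, k - 1 degrees of freedom).
    chi2 = 12 * n_blocks / (k * (k + 1)) * (
        sum(m * m for m in mean_ranks.values()) - k * (k + 1) ** 2 / 4
    )
    return mean_ranks, chi2


print(friedman_ranks({"A": [1.0, 2.0], "B": [3.0, 1.5], "C": [4.0, 4.0]}))
```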

Figure 3.

Results of the Friedman statistical test for SET-1.

Thus, according to the Friedman and Mann-Whitney tests, the results obtained by the proposed approach are statistically better than those obtained by the biology-inspired algorithms (PSO, WPS, FSS, FFA, CSA and BA) and their modifications with the external archive (FFA-a, CSA-a, BA-a). It can also be seen that FFA-a, CSA-a and BA-a achieve statistically better results than their original versions. Moreover, COBRA-SHA statistically outperformed the fuzzy-controlled COBRA-f. However, there is almost no difference between the results obtained by COBRA-SHA and the similar modification COBRA-fas on the functions from SET-1.

5.3.2. Numerical Results for SET-2

To show the advantage of the proposed modification COBRA-SHA more clearly, it was compared with the same algorithms using the benchmark functions from SET-2. The functions in SET-2 are Sphere, Rosenbrock, Quadric, Schwefel, Griewank, Weierstrass, Quartic, Rastrigin and Ackley, which are frequently used to test the performance of optimization algorithms. Together they cover continuous, differentiable, separable, scalable and multi-modal problems.
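For readers who want to reproduce such experiments, common textbook definitions of six of the SET-2 functions are sketched below in plain Python. The exact variants, search ranges and any shifts used for SET-2 are not given in this section, so these forms are assumptions; in particular, “Quadric” is taken to mean Schwefel’s problem 1.2, as is common in the PSO literature. Schwefel’s, Weierstrass’s and the Quartic function are omitted for brevity.

```python
import math


def sphere(x):
    return sum(v * v for v in x)


def rosenbrock(x):
    return sum(100.0 * (x[i + 1] - x[i] ** 2) ** 2 + (x[i] - 1.0) ** 2
               for i in range(len(x) - 1))


def quadric(x):
    """Schwefel's problem 1.2: sum of squared prefix sums (non-separable)."""
    total, prefix = 0.0, 0.0
    for v in x:
        prefix += v
        total += prefix * prefix
    return total


def rastrigin(x):
    return 10.0 * len(x) + sum(v * v - 10.0 * math.cos(2.0 * math.pi * v) for v in x)


def griewank(x):
    s = sum(v * v for v in x) / 4000.0
    p = math.prod(math.cos(v / math.sqrt(i + 1)) for i, v in enumerate(x))
    return 1.0 + s - p


def ackley(x):
    d = len(x)
    term1 = -20.0 * math.exp(-0.2 * math.sqrt(sum(v * v for v in x) / d))
    term2 = -math.exp(sum(math.cos(2.0 * math.pi * v) for v in x) / d)
    return term1 + term2 + 20.0 + math.e


# Global minima of these forms: 0 at the origin (Rosenbrock: 0 at x = (1, ..., 1)).
for fn in (sphere, quadric, rastrigin, griewank, ackley):
    print(fn.__name__, fn([0.0] * 10))
print("rosenbrock", rosenbrock([1.0] * 10))
```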

The experimental results obtained for 10- and 30-dimensional functions by the listed biology-inspired algorithms and their modifications are shown in Table 4 and Table 5. From these tables, it can be observed that the COBRA-SHA approach performs better than the other algorithms included in the comparison.

Table 4.

Experimental results for 10-dimensional functions from SET-2.

Table 5.

Experimental results for 30-dimensional functions from SET-2.

For example, for function , COBRA-SHA outperformed the other algorithms included in the comparison in terms of the mean, best and worst values when . However, for the same function with , COBRA-f found the best value over the 51 program runs, while COBRA-SHA was still better than the others in terms of the mean and worst values. Similarly, for function the best value was found by COBRA-f, while COBRA-SHA achieved better mean and worst values both with and with .

For functions and with 10 and 30 variables, COBRA-f, COBRA-fas and COBRA-SHA found the optimum solution in every program run. It should be noted that for function with 10 variables, the proposed modifications CSA-a, COBRA-fas and COBRA-SHA also achieved the optimum value in every program run, while the modification BA-a and the original algorithm COBRA-f found the optimum only in some runs. On the other hand, for the same function with 30 variables, COBRA-SHA outperformed the other algorithms included in the comparison. Additionally, for the last function, both with and with , COBRA-f, COBRA-fas, COBRA-SHA, BA and its modification BA-a demonstrated the same good results.

As for the second function ( and ), CSA-a outperformed the other algorithms in terms of the mean and worst values, but the best value was found by COBRA-fas. For function with 10 variables, the PSO algorithm demonstrated the best results, while for the same problem with 30 variables COBRA-fas outperformed every algorithm included in the comparison. Finally, for function with , BA and BA-a gave better results, while with COBRA-SHA did.

Additionally, Table 6 presents the results of comparing COBRA-SHA with the other algorithms using the Mann-Whitney statistical test with significance level ; the notation is the same as in Table 3. These results are also summarized in Figure 4 and Figure 5.

Table 6.

Results of the Mann-Whitney statistical test with for SET-2, comparison of COBRA-SHA with other approaches.

Figure 4.

Results of the Mann-Whitney statistical test with , comparison of COBRA-SHA with other approaches (SET-2, ).

Figure 5.

Results of the Mann-Whitney statistical test with , comparison of COBRA-SHA with other approaches (SET-2, ).

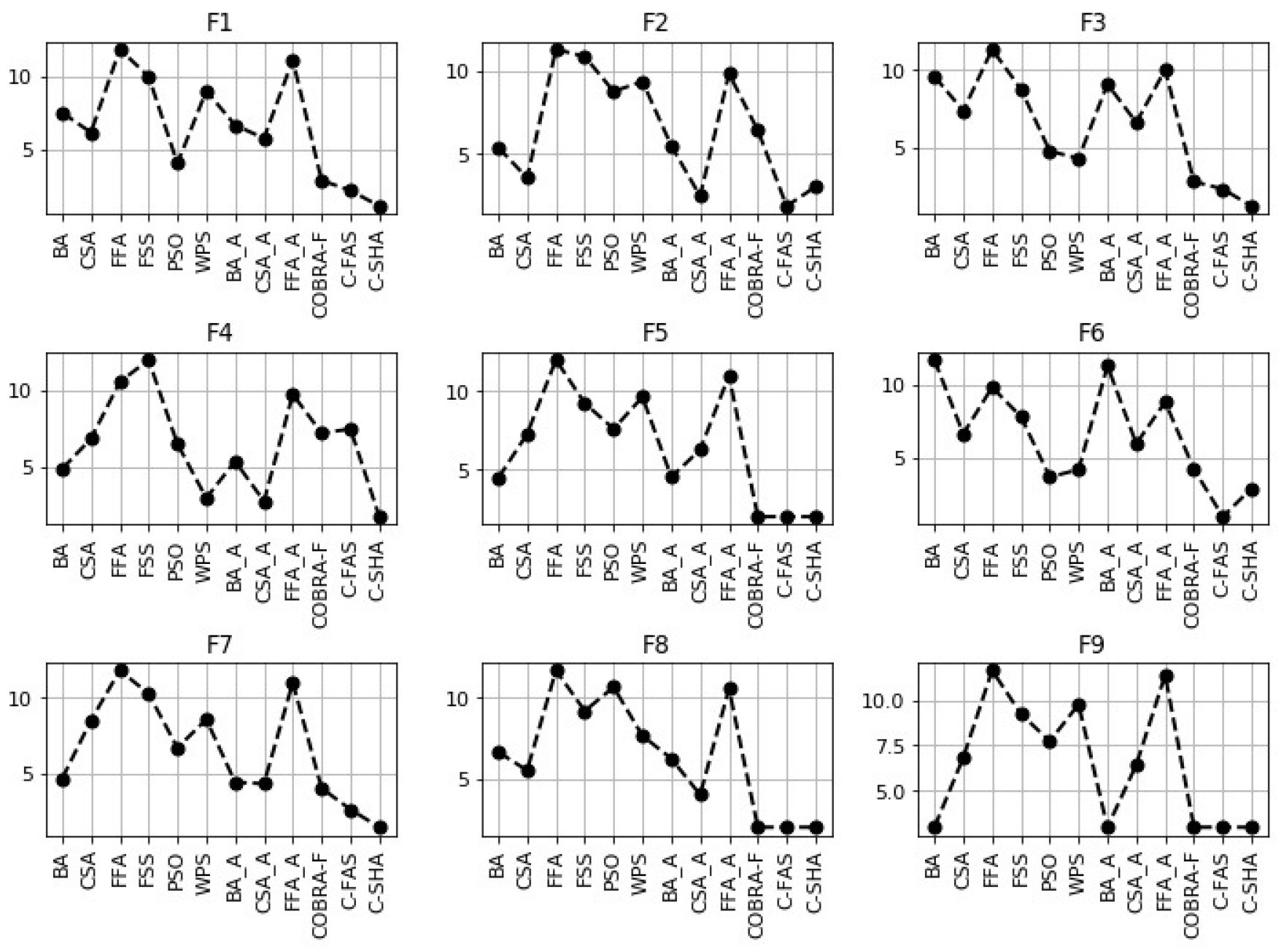

In addition, all the stated algorithms were compared with the proposed modification COBRA-SHA using the Friedman statistical test. The results are shown in Figure 6 and Figure 7, where COBRA-fas is denoted as “C-FAS” and COBRA-SHA as “C-SHA”.

Figure 6.

Results of the Friedman statistical test for SET-2 ().

Figure 7.

Results of the Friedman statistical test for SET-2 ().

Again, according to the Friedman and Mann-Whitney tests, the results obtained by the proposed approach are statistically better than those obtained by the biology-inspired algorithms (PSO, WPS, FSS, FFA, CSA and BA) and their modifications with the external archive (FFA-a, CSA-a, BA-a). Moreover, COBRA-SHA statistically outperformed the fuzzy-controlled COBRA-f in 11 out of 18 cases. However, as with SET-1, there is almost no difference between the results obtained by COBRA-SHA and the similar modification COBRA-fas on the functions from SET-2.

5.3.3. Numerical Results for SET-3

The next step was to test and compare the biology-inspired algorithms and their modifications on the benchmark functions from SET-3. The 16 functions with used in SET-3 were taken from the CEC 2014 competition [51]. All these functions are minimization problems with a shifted and rotated global optimum, which is randomly distributed in . The search range for all problems was . The statistical results (mean, standard deviation and best solution) of the different algorithms for the CEC 2014 functions are listed in Table 7, with the best results shown in bold.
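The shift-and-rotate construction behind these problems, F(x) = f(M(x − o)) + F*, can be illustrated with the following minimal sketch. It is only an assumption-laden stand-in: the official CEC 2014 shift vectors o and rotation matrices M come from the competition’s data files [51] and several functions additionally rescale their argument, whereas here o, M and the bias are generated or chosen arbitrarily, and the names `random_rotation` and `shift_rotate` are hypothetical.

```python
import math
import random


def random_rotation(dim, rng):
    """Random orthogonal matrix (orthonormal rows) via Gram-Schmidt on Gaussian vectors."""
    rows = []
    while len(rows) < dim:
        v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        for row in rows:  # remove the components along rows found so far
            dot = sum(a * b for a, b in zip(v, row))
            v = [a - dot * b for a, b in zip(v, row)]
        norm = math.sqrt(sum(a * a for a in v))
        if norm > 1e-12:  # retry in the (unlikely) degenerate case
            rows.append([a / norm for a in v])
    return rows


def shift_rotate(base, shift, rotation, bias):
    """Wrap a base function as F(x) = base(M (x - o)) + F*."""
    def wrapped(x):
        z = [xi - oi for xi, oi in zip(x, shift)]
        z = [sum(m * zj for m, zj in zip(row, z)) for row in rotation]
        return base(z) + bias
    return wrapped


# Usage: a shifted and rotated sphere in 5 dimensions with an arbitrary bias of 100.
rng = random.Random(42)
dim = 5
o = [rng.uniform(-80.0, 80.0) for _ in range(dim)]
M = random_rotation(dim, rng)
f = shift_rotate(lambda z: sum(v * v for v in z), o, M, 100.0)
print(f(o))  # the optimum value equals the bias
```

Because M is orthogonal, the rotated sphere keeps the same minimum value, which is shifted to x = o; rotation matters for non-separable behavior, not for the optimum value itself.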

Table 7 shows that, in most cases, COBRA-SHA performs better than the other algorithms included in the comparison in terms of the mean value. This is the case, in particular, for the first three unimodal functions , and . Moreover, for function , COBRA-SHA outperformed the other algorithms by all criteria. However, for and , the best results (out of 51 program runs) were found by COBRA-fas.

Table 7.

Minimization results of 16 benchmark functions from SET-3 for compared algorithms.

For the multi-modal functions , , , and , COBRA-SHA outperformed all the biology-inspired algorithms included in the comparison in terms of the mean and best values. For the remaining multi-modal functions (namely , , , , , and ), COBRA-SHA performed better than the other algorithms except on the fifth benchmark problem, although it did find the best value for . The fuzzy-controlled COBRA-f found the best solution for function and gave the best mean value for function . Like COBRA-SHA, COBRA-fas gave the best values for functions and . For functions and , the best values were found by the WPS algorithm, and for function by the FFA algorithm. Finally, the modification CSA-a achieved the best value for function .

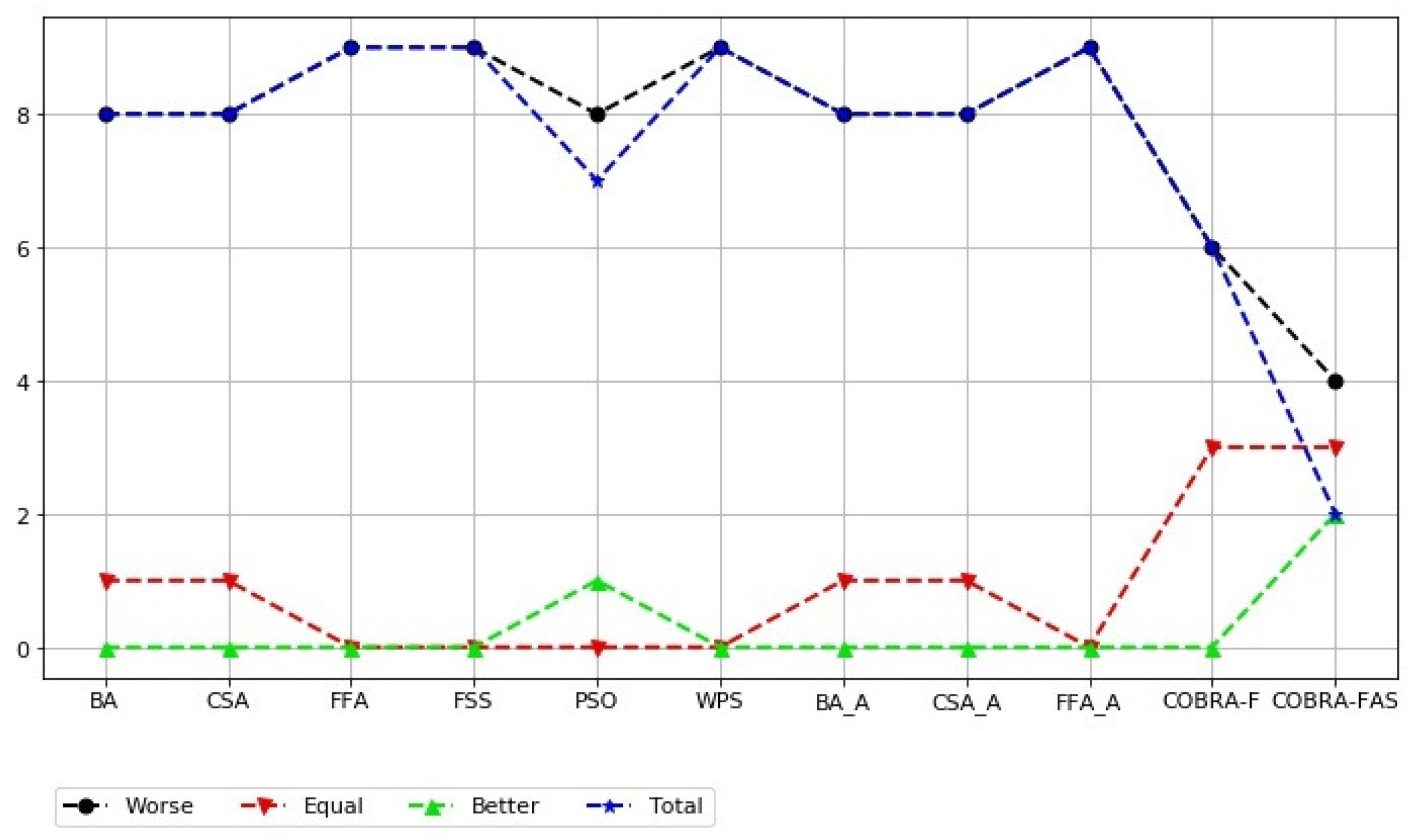

The results of comparing COBRA-SHA with the other algorithms using the Mann-Whitney statistical test with significance level are presented in Table 8 (with the same notation as before) and summarized in Figure 8. All the algorithms were then compared with COBRA-SHA using the Friedman statistical test; the results are shown in Figure 9 (with the same notation as in Figure 6 and Figure 7).

Table 8.

Results of the Mann-Whitney statistical test with for SET-3, comparison of COBRA-SHA with other approaches.

Figure 8.

Results of the Mann-Whitney statistical test with for SET-3, comparison of COBRA-SHA with other approaches.

Figure 9.

Results of the Friedman statistical test for SET-3.

Thus, according to the Friedman and Mann-Whitney tests, the results obtained by the proposed approach are statistically better than those obtained by the biology-inspired algorithms (PSO, WPS, FSS, FFA, CSA and BA) and their modifications with the external archive (FFA-a, CSA-a, BA-a). Moreover, COBRA-SHA statistically outperformed the fuzzy-controlled COBRA-f. Furthermore, the experimental results for the benchmark problems from SET-3 showed that COBRA-SHA is more useful than the similar modification COBRA-fas for solving complex multi-modal optimization problems. Therefore, the workability and usefulness of the proposed COBRA-SHA algorithm were demonstrated.

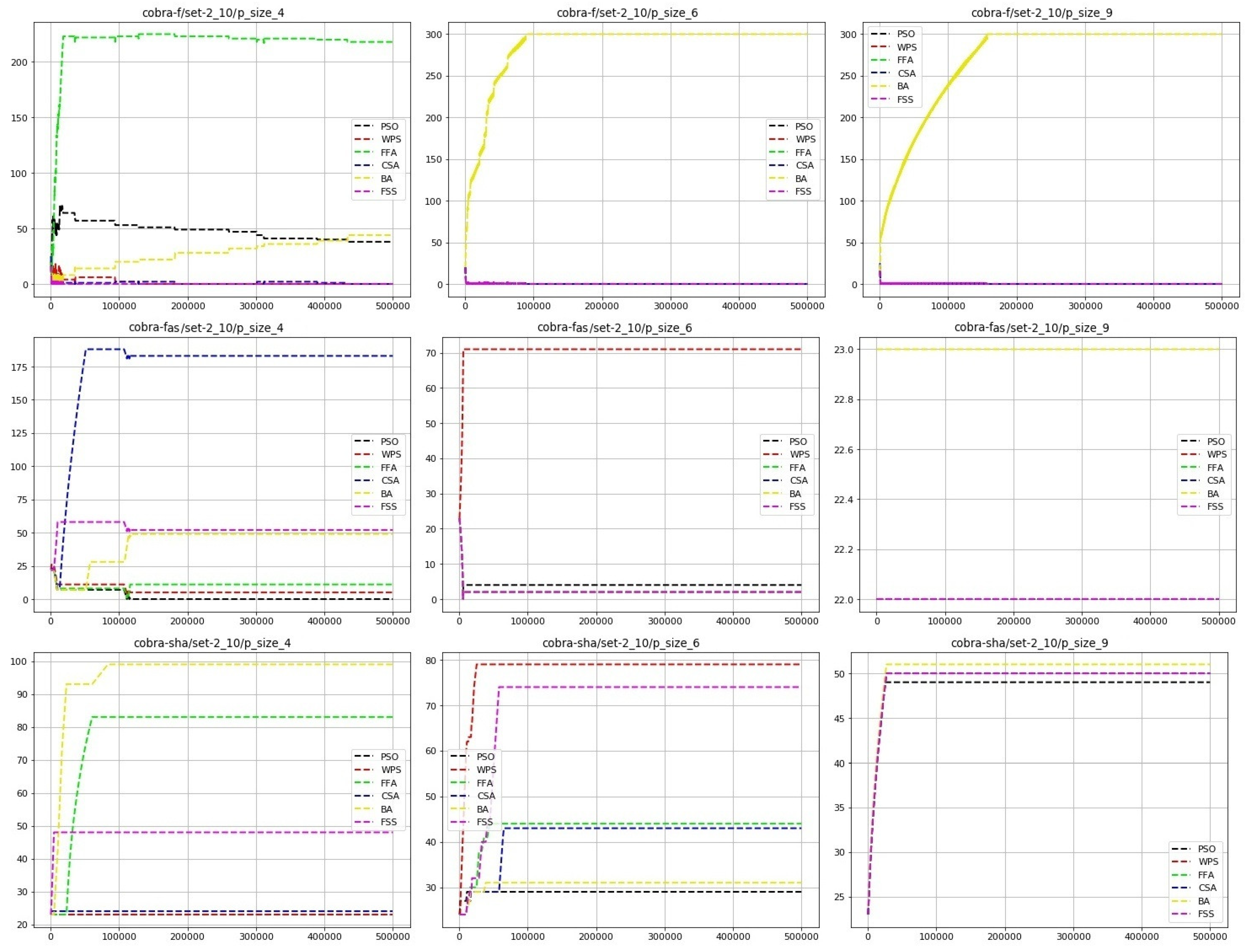

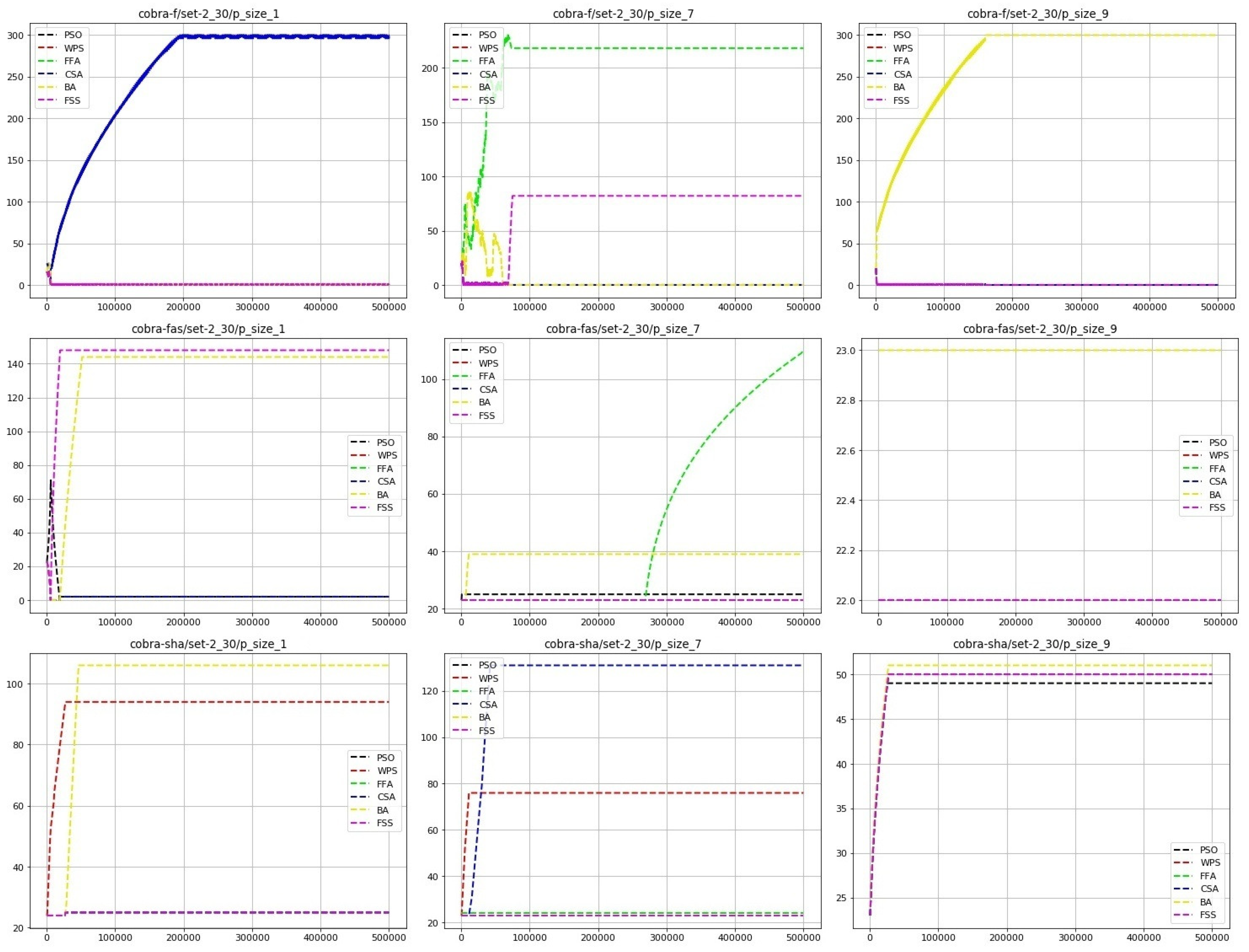

5.3.4. Population Size Changes

Additionally, the changes in population size were monitored while solving the benchmark problems from SET-2 and SET-3 with 10 and 30 variables. Figure 10 shows how the component population sizes of COBRA-f, COBRA-fas and COBRA-SHA changed during the optimization process on three functions from SET-2 with 10 variables, namely Schwefel’s function (first column), Weierstrass’s function (second column) and Ackley’s function (third column), with the best-found fuzzy-controller parameters.

Figure 10.

Population size changes for SET-2 with 10 variables.

The plots in the first row show the behavior of the original fuzzy-controlled COBRA-f tuning procedure, those in the second row show the COBRA-fas modification, and those in the third row show the proposed COBRA-SHA approach. The three tuning methods behave quite differently. The standard COBRA-f tends to give all resources to one component (as can be seen for Weierstrass’s and Ackley’s functions). However, for Schwefel’s function, a complex optimization problem with many local minima, the PSO and BA approaches competed for resources, while the FFA component still had the largest population.

The COBRA-fas modification behaved similarly on Schwefel’s function (CSA had the largest amount of resources, while FSS and BA competed for “second place”). However, for Ackley’s function, the population sizes of all components stayed within the range during the optimization process. It should be noted that COBRA-f and COBRA-fas found the same solutions, but COBRA-f did so with 300 individuals in total across all populations, whereas COBRA-fas used only 133.

Finally, the proposed COBRA-SHA increased all population sizes simultaneously (but by different amounts); each population contained at least 20 individuals. Nevertheless, the largest amounts of resources were usually given to two components: for example, for Schwefel’s function the winners were the FFA and BA algorithms, while for Weierstrass’s function they were the WPS and FSS algorithms. It should be noted that when COBRA-SHA solved Ackley’s problem, all components had 49–51 individuals in their populations.
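The effect of a lower bound on component populations can be illustrated with a deliberately simple, hypothetical tuning rule. It is neither the fuzzy controller of COBRA-f and COBRA-fas nor the exact COBRA-SHA procedure: here the most successful component grows, components without any success shrink, and a floor of 20 individuals (the minimum observed for COBRA-SHA above) keeps every component in play. The function name, the step size and the success measure are all assumptions.

```python
def adjust_population_sizes(sizes, success_rates, step=5, floor=20):
    """Hypothetical resource-tuning step for a cooperative ensemble.

    sizes: dict component -> current population size
    success_rates: dict component -> share of successful moves in the last period
    The most successful component(s) grow by `step`, components without any
    success shrink by `step`, and no population may fall below `floor`.
    """
    best = max(success_rates.values())
    new_sizes = {}
    for name, size in sizes.items():
        rate = success_rates[name]
        if rate > 0 and rate == best:
            size += step  # reward the currently most successful component(s)
        elif rate == 0:
            size -= step  # penalize components that produced no improvement
        new_sizes[name] = max(size, floor)  # never shrink below the floor
    return new_sizes


sizes = {"PSO": 20, "WPS": 30, "FSS": 25, "FFA": 40, "CSA": 20, "BA": 22}
rates = {"PSO": 0.0, "WPS": 0.4, "FSS": 0.1, "FFA": 0.0, "CSA": 0.2, "BA": 0.0}
print(adjust_population_sizes(sizes, rates))
```

Because no component is ever removed, every algorithm in the collective keeps contributing candidate solutions, which is the property the floor is meant to preserve.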

Next, Figure 11 shows how the component population sizes of COBRA-f, COBRA-fas and COBRA-SHA changed during the optimization process on three functions from SET-2 with 30 variables, namely the Sphere function (first column), the Quartic function (second column) and Ackley’s function (third column), with the best-found fuzzy-controller parameters. The algorithms behave as in the previous case (the SET-2 problems with 10 variables).

Figure 11.

Population size changes for SET-2 with 30 variables.

However, consider the optimization process of COBRA-fas on the Quartic problem. At first, the fuzzy controller identified the BA algorithm as the best choice and increased the BA population to 40 individuals, while the other populations remained at their minimal sizes. After that, the population sizes did not change, and only after more than calculations was the FFA approach able to improve the optimization process, with its population size starting to increase gradually. Therefore, by the end FFA had the largest amount of resources.

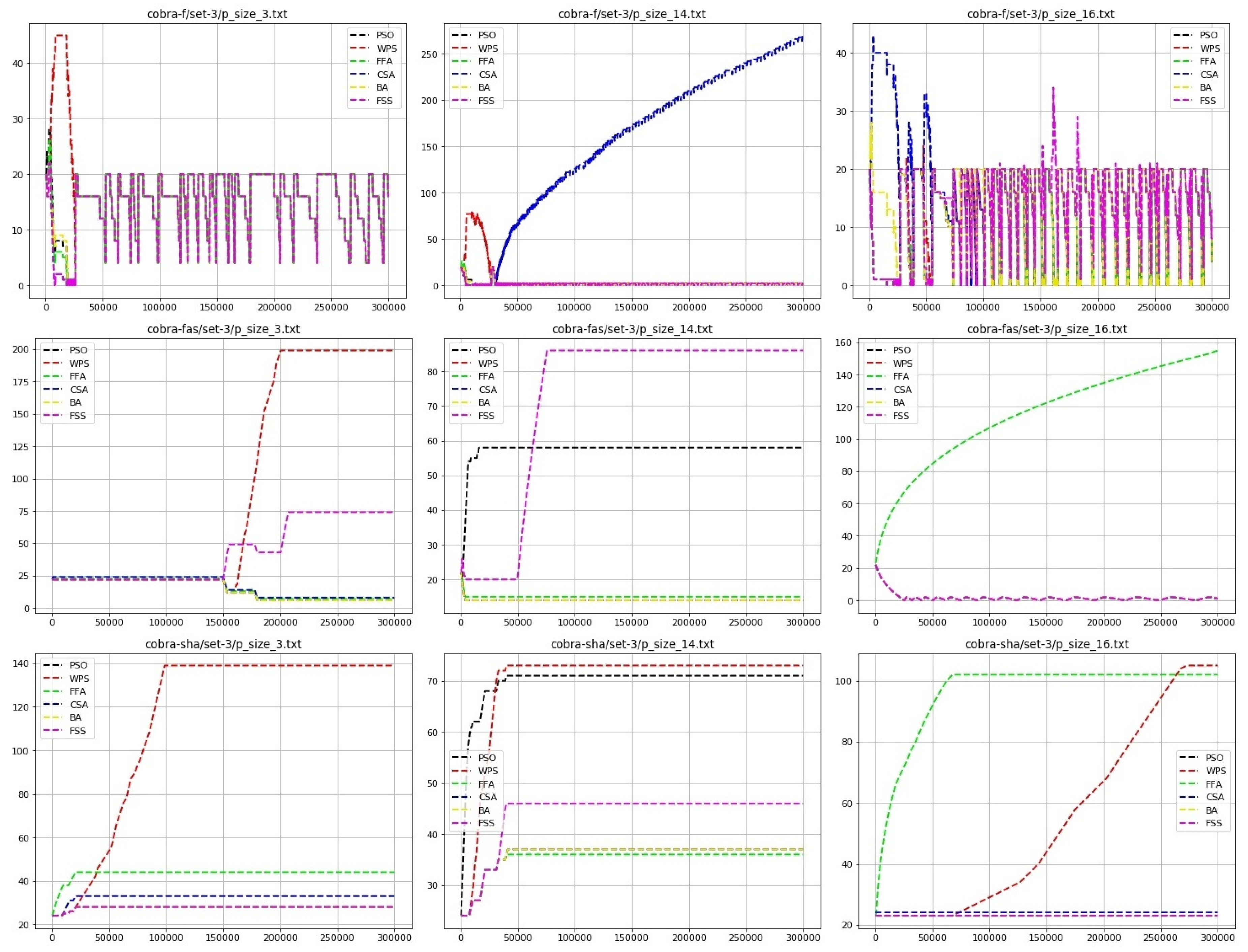

Finally, Figure 12 shows the change in the COBRA-f, COBRA-fas and COBRA-SHA component population sizes during the optimization process on three functions from SET-3, namely Rotated Discus Function (the first column), Shifted and Rotated HGBat Function (the second column) and Shifted and Rotated Expanded Scaffer’s F6 Function (the third column) with the best-found fuzzy-controller parameters. The first problem is unimodal, and the others are multi-modal.

Figure 12.

Population size changes for SET-3.

The standard COBRA-f tuning method usually oscillates several times, with the winning component changing over time. On the first problem, COBRA-fas could not decide which component was the best during the first calculations, but later the population of the WPS algorithm started to increase gradually, and the population of the FSS algorithm became three times larger than it was initially. On the second problem, the PSO component appeared to be the most successful at the beginning of the optimization process. However, its population size did not change after calculations. On the other hand, the population size of the FSS component increased after calculations, and it had the largest amount of resources by the end of the optimization process. On the last problem, as with the Discus function, COBRA-fas could not determine the winning component algorithm at first, but then it increased the population of the FFA approach and simultaneously reduced the sizes of all other populations to zero.

The COBRA-SHA modification, in contrast, did not reduce any population size to zero and thus maintained a more diverse set of potential solutions. On the Discus problem, even though the FFA component gave better results than the other biology-inspired algorithms at first, the WPS component started to outperform it quite early. Therefore, by the end of the optimization process the WPS component had the largest amount of resources and FFA the second largest, while the populations of the other components had at least 20 individuals. A similar situation can be observed for the HGBat function, with the FSS and PSO components as winners. For the last benchmark problem, during the first calculations the population of the FFA algorithm grew to more than 100 individuals, while the population sizes of the other algorithms stayed close to 20. Then, however, the population of the WPS component started to increase significantly as it kept improving the objective function. By the end of the optimization process, the WPS component had the largest number of individuals, although that number was close to the size of the FFA population.

Thus, these cases represent different scenarios in which resource tuning is helpful, since it can change the structure of the algorithm according to the current needs of the search.

6. Conclusions

In this paper, a new modification of the meta-heuristic COBRA, namely COBRA-SHA, is proposed for solving real-valued optimization problems. Specifically, the new modification is based on an alternative way of generating potential solutions: it uses a historical memory of successful positions found by individuals to guide them in different directions and thus to improve their exploration and exploitation abilities. The proposed method was applied to the components of the COBRA approach and to its basic procedures. The COBRA-SHA algorithm was tested on three sets of benchmark functions. The experimental results show that the proposed algorithm outperforms the other biology-inspired algorithms in exploiting the optimum and also has advantages in exploration.
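A bounded memory of successful positions, and one way of using it to steer individuals, can be sketched as follows. The archive update uses random replacement when full, as in the external archive of JADE [42], and the move is JADE’s current-to-pbest/1 mutation with an archive, v = x_i + F(x_pbest − x_i) + F(x_r1 − x̃_r2), where x̃_r2 may be drawn from the archive. Both are swapped in for illustration only: how COBRA-SHA applies its memory inside each component algorithm is not reproduced here, and the names, F = 0.5 and p = 0.1 are assumptions.

```python
import random


class SuccessArchive:
    """Bounded memory of successful positions (random replacement when full)."""

    def __init__(self, capacity, rng=random):
        self.capacity = capacity
        self.rng = rng
        self.items = []

    def add(self, position):
        """Store a copy of a position that improved its individual's fitness."""
        if len(self.items) < self.capacity:
            self.items.append(list(position))
        else:
            # As in JADE's archive, overwrite a randomly chosen entry.
            self.items[self.rng.randrange(self.capacity)] = list(position)


def current_to_pbest_with_archive(population, fitness, archive, i,
                                  f=0.5, p=0.1, rng=random):
    """JADE-style mutant v = x_i + F(x_pbest - x_i) + F(x_r1 - x~_r2), minimization.

    x~_r2 is drawn from the population (excluding i and r1) plus the archive,
    so remembered positions can pull individuals in new directions.
    Assumes at least three individuals.
    """
    n = len(population)
    order = sorted(range(n), key=lambda k: fitness[k])
    top = order[:max(1, int(round(p * n)))]  # indices of the 100p% best
    x_pbest = population[rng.choice(top)]
    r1 = rng.choice([k for k in range(n) if k != i])
    pool = [population[k] for k in range(n) if k not in (i, r1)] + archive.items
    x_r2 = rng.choice(pool)
    x_i = population[i]
    return [xi + f * (pb - xi) + f * (a - b)
            for xi, pb, a, b in zip(x_i, x_pbest, population[r1], x_r2)]


# Usage on a toy 2-D population (values are arbitrary).
rng = random.Random(0)
population = [[rng.uniform(-5, 5), rng.uniform(-5, 5)] for _ in range(10)]
fitness = [sum(v * v for v in x) for x in population]
archive = SuccessArchive(capacity=5, rng=rng)
for x in population[:3]:
    archive.add(x)
mutant = current_to_pbest_with_archive(population, fitness, archive, 0, rng=rng)
print(mutant)
```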

However, only some of the simplest variants of the biology-inspired component algorithms were used in this study. Further work should therefore focus on including their newer versions in the COBRA-SHA collective, as well as on comparing COBRA-SHA with them. Moreover, several parameters introduced by this modification were chosen empirically, so the performance of COBRA-SHA should be tested with different parameter adaptation schemes. Finally, the proposed modification should be applied to other types of optimization problems (constrained, large-scale, multi-objective and so on).

Author Contributions

Conceptualization, S.A.; Methodology, S.A.; Software, S.A., V.S.; Validation, S.A., V.S., D.E.; Formal analysis, O.S.; Investigation, S.A., V.S., D.E.; Resources, S.A.; Data curation, S.A.; Writing—original draft preparation, S.A.; Writing—review and editing, V.S.; Visualization, V.S.; Supervision, O.S.; Project administration, S.A., O.S.; Funding acquisition, O.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the internal grant No. 299 for young researchers at Reshetnev Siberian State University of Science and Technology in 2020.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Eberhart, R.C. Computational Intelligence: Concepts to Implementations; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 2007.

- Winker, P.; Gilli, M. Applications of optimization heuristics to estimation and modelling problems. Comput. Stat. Data Anal. 2004, 47, 211–223.

- Nazari-Heris, M.; Mohammadi-Ivatloo, B.; Gharehpetian, G.B. A comprehensive review of heuristic optimization algorithms for optimal combined heat and power dispatch from economic and environmental perspectives. Renew. Sustain. Energy Rev. 2018, 81, 2128–2143.

- Crepinsek, M.; Mernik, M.; Liu, S.H. Analysis of Exploration and Exploitation in Evolutionary Algorithms by Ancestry Trees. Int. J. Innov. Comput. Appl. 2011, 3, 11–19.

- Žilinskas, A.; Gimbutienė, G. A hybrid of Bayesian approach based global search with clustering aided local refinement. Commun. Nonlinear Sci. Numer. Simul. 2019, 78, 104857.

- Pepelyshev, A.; Zhigljavsky, A.; Žilinskas, A. Performance of global random search algorithms for large dimensions. J. Glob. Optim. 2018, 71, 57–71.

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the IEEE International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; pp. 1942–1948.

- Dorigo, M.; Birattari, M.; Stutzle, T. Ant Colony Optimization. Comp. Intell. Mag. 2006, 1, 28–39.

- Karaboga, D.; Basturk, B. A powerful and efficient algorithm for numerical function optimization: Artificial bee colony (ABC) algorithm. J. Glob. Optim. 2007, 39, 459–471.

- Mirjalili, S.; Lewis, A. The Whale Optimization Algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey Wolf Optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Uymaz, S.A.; Tezel, G.; Yel, E. Artificial Algae Algorithm (AAA) for Nonlinear Global Optimization. Appl. Soft Comput. 2015, 31, 153–171.

- Mirjalili, S. Moth-flame Optimization Algorithm. Know.-Based Syst. 2015, 89, 228–249.

- Wang, J. A New Cat Swarm Optimization with Adaptive Parameter Control. In Genetic and Evolutionary Computing; Sun, H., Yang, C.Y., Lin, C.W., Pan, J.S., Snasel, V., Abraham, A., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 69–78.

- Abbasi-ghalehtaki, R.; Khotanlou, H.; Esmaeilpour, M. Fuzzy Evolutionary Cellular Learning Automata model for text summarization. Swarm Evol. Comput. 2016, 30.

- Lim, Z.Y.; Ponnambalam, S.G.; Izui, K. Nature Inspired Algorithms to Optimize Robot Workcell Layouts. Appl. Soft Comput. 2016, 49, 570–589.

- Wolpert, D.H.; Macready, W.G. No Free Lunch Theorems for Optimization. Trans. Evol. Comp 1997, 1, 67–82.

- Parsopoulos, K.E. Parallel Cooperative Micro-particle Swarm Optimization: A Master-slave Model. Appl. Soft Comput. 2012, 12, 3552–3579.

- Van den Bergh, F.; Engelbrecht, A.P. Training product unit networks using cooperative particle swarm optimisers. In Proceedings of the IJCNN’01. International Joint Conference on Neural Networks. Proceedings (Cat. No.01CH37222), Washington, DC, USA, 15–19 July 2001; Volume 1, pp. 126–131.

- Mohammed, E.A.; Mohamed, K. Cooperative Particle Swarm Optimizers: A Powerful and Promising Approach. In Stigmergic Optimization; Springer: Berlin/Heidelberg, Germany, 2006; pp. 239–259.

- Akhmedova, S.; Semenkin, E. Co-Operation of Biology Related Algorithms. In Proceedings of the 2013 IEEE Congress on Evolutionary Computation, Cancun, Mexico, 20–23 June 2013; pp. 2207–2214.

- Yang, X.; Deb, S. Cuckoo search via levy flights. In Proceedings of the World Congress on Nature and Biologically Inspired Computing, Coimbatore, India, 9–11 December 2009; pp. 210–214.

- Yang, X. Firefly algorithms for multimodal optimization. In Proceedings of the 5th Symposium on Stochastic Algorithms, Foundations and Applications, Sapporo, Japan, 26–28 October 2009; pp. 169–178.

- Yang, X. A new metaheuristic bat-inspired algorithm. Nat. Inspired Coop. Strateg. Optim. Stud. Comput. Intell. 2010, 284, 65–74.

- Filho, C.J.A.B.; De Lima Neto, F.B.; Lins, A.J.C.C.; Nascimento, A.I.S.; Lima, M.P. Fish School Search. In Nature-Inspired Algorithms for Optimisation; Chiong, R., Ed.; Springer: Berlin/Heidelberg, Germany, 2009; pp. 261–277.

- Yang, C.; Tu, X.; Chen, J. Algorithm of marriage in honey bees optimization based on the wolf pack search. In Proceedings of the International Conference on Intelligent Pervasive Computing, Jeju City, Korea, 11–13 October 2007; pp. 462–467.

- Akhmedova, S.; Semenkin, E. Investigation into the efficiency of different bionic algorithm combinations for a COBRA meta-heuristic. IOP Conf. Ser. Mater. Sci. Eng. 2017, 173, 012001.

- Lee, C.C. Fuzzy logic in control systems: Fuzzy logic controller. I. IEEE Trans. Syst. Man Cybern. 1990, 20, 404–418.

- Akhmedova, S.; Semenkin, E.; Stanovov, V.; Vishnevskaya, S. Fuzzy Logic Controller Design for Tuning the Cooperation of Biology-Inspired Algorithms. In Advances in Swarm Intelligence; Tan, Y., Takagi, H., Shi, Y., Niu, B., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 269–276.

- Shi, Y.; Eberhart, R.C. Fuzzy adaptive particle swarm optimization. In Proceedings of the 2001 Congress on Evolutionary Computation (IEEE Cat. No.01TH8546), Seoul, Korea, 27–30 May 2001; Volume 1, pp. 101–106.

- Zhang, W.; Liu, Y. Fuzzy logic controlled particle swarm for reactive power optimization considering voltage stability. In Proceedings of the 2005 International Power Engineering Conference, Singapore, 29 November–2 December 2005; pp. 1–555.

- Akhmedova, S.; Semenkin, E.; Stanovov, V. Semi-supervised SVM with Fuzzy Controlled Cooperation of Biology Related Algorithms. In Proceedings of the 14th International Conference on Informatics in Control, Automation and Robotics, ICINCO 2017, Madrid, Spain, 26–28 July 2017; pp. 64–71.

- Črepinšek, M.; Liu, S.H.; Mernik, M. Exploration and Exploitation in Evolutionary Algorithms: A Survey. ACM Comput. Surv. 2013, 45, 35:1–35:33.

- Wang, J.; Zhou, B.; Zhou, S. An Improved Cuckoo Search Optimization Algorithm for the Problem of Chaotic Systems Parameter Estimation. Intell. Neurosci. 2016, 2016, 2959370.

- Tian, Y.; Gao, W.; Yan, S. An Improved Inertia Weight Firefly Optimization Algorithm and Application. In Proceedings of the 2012 International Conference on Control Engineering and Communication Technology, Liaoning, China, 7–9 December 2012; pp. 64–68.

- Gao, Y.; An, X.; Liu, J. A Particle Swarm Optimization Algorithm with Logarithm Decreasing Inertia Weight and Chaos Mutation. In Proceedings of the 2008 International Conference on Computational Intelligence and Security, Suzhou, China, 13–17 December 2008; Volume 1, pp. 61–65.

- Abadlia, H.; Smairi, N.; Ghedira, K. Particle Swarm Optimization Based on Dynamic Island Model. In Proceedings of the 2017 IEEE 29th International Conference on Tools with Artificial Intelligence (ICTAI), Boston, MA, USA, 6–8 November 2017; pp. 709–716.

- Kushida, J.; Hara, A.; Takahama, T.; Kido, A. Island-based differential evolution with varying subpopulation size. In Proceedings of the 2013 IEEE 6th International Workshop on Computational Intelligence and Applications (IWCIA), Hiroshima, Japan, 13 July 2013; pp. 119–124.

- Lacerda, M.; Neto, H.; Ludermir, T.; Kuchen, H.; Lima Neto, F. Population Size Control for Efficiency and Efficacy Optimization in Population Based Metaheuristics. In Proceedings of the 2018 IEEE Congress on Evolutionary Computation (CEC), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–8.

- Alander, J.T. On optimal population size of genetic algorithms. In Proceedings of the CompEuro 1992 Proceedings Computer Systems and Software Engineering, The Hague, The Netherlands, 4–8 May 1992; pp. 65–70.

- Storn, R.; Price, K. Differential Evolution—A Simple and Efficient Heuristic for global Optimization over Continuous Spaces. J. Glob. Optim. 1997, 11, 341–359.

- Zhang, J.; Sanderson, A.C. JADE: Adaptive Differential Evolution With Optional External Archive. IEEE Trans. Evol. Comput. 2009, 13, 945–958.

- Zitzler, E.; Thiele, L. Multiobjective evolutionary algorithms: A comparative case study and the strength Pareto approach. IEEE Trans. Evol. Comput. 1999, 3, 257–271.

- Wang, H.; Wu, Z.; Zhou, X.; Rahnamayan, S. Accelerating artificial bee colony algorithm by using an external archive. In Proceedings of the 2013 IEEE Congress on Evolutionary Computation, Cancun, Mexico, 20–23 June 2013; pp. 517–521.

- Xue, B.; Qin, A.K.; Zhang, M. An archive based particle swarm optimisation for feature selection in classification. In Proceedings of the 2014 IEEE Congress on Evolutionary Computation (CEC), Beijing, China, 6–11 July 2014; pp. 3119–3126.

- Akhmedova, S.; Stanovov, V.; Erokhin, D.; Semenkina, O. Ensemble of the Nature-Inspired Algorithms with Success-History Based Position Adaptation; IOP Publishing: Bristol, UK, 2020; Volume 734, p. 012089.

- Singh, S.; Arora, S. A Conceptual Comparison of Firefly Algorithm, Bat Algorithm and Cuckoo Search. In Proceedings of the 2013 International Conference on Control, Computing, Communication and Materials (ICCCCM), Allahabad, India, 3–4 August 2013; pp. 1–4.

- Liang, J.; Qu, B.Y.; Suganthan, P.; Hernández-Díaz, A. Problem Definitions and Evaluation Criteria for the CEC 2013 Special Session on Real-Parameter Optimization; Technical Report 201212; Computational Intelligence Laboratory, Zhengzhou University: Zhengzhou, China, 2013.

- Akhmedova, S.; Stanovov, V.; Erokhin, D.; Semenkin, E. Position adaptation of candidate solutions based on their success history in nature-inspired algorithms. Int. J. Inf. Technol. Secur. 2019, 11, 21–32.

- Nenavath, H.; Jatoth, R.; Das, S. A synergy of the sine-cosine algorithm and particle swarm optimizer for improved global optimization and object tracking. Swarm Evol. Comput. 2018.

- Liang, J.; Qu, B.Y.; Suganthan, P. Problem Definitions and Evaluation Criteria for the CEC 2014 Special Session and Competition on Single Objective Real-Parameter Numerical Optimization; Computational Intelligence Laboratory, Zhengzhou University, Zhengzhou China and Technical Report; Nanyang Technological University: Singapore, 2013.

- Jamil, M.; Yang, X.S. A Literature Survey of Benchmark Functions For Global Optimization Problems. Int. J. Math. Model. Numer. Optim. 2013, 4.

- Akhmedova, S.; Stanovov, V.; Erokhin, D.; Semenkin, E. Success History Based Position Adaptation in Co-Operation of Biology Related Algorithms. In Proceedings of the Tenth International Conference on Swarm Intelligence, Chiang Mai, Thailand, 26–30 July 2019.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).