Practical Grammar Compression Based on Maximal Repeats †

Abstract

1. Introduction

- We show interesting relations between maximal repeats and grammars constructed by RePair.

- We propose MR-RePair, which is a novel variant of RePair based on replacing the most frequent maximal repeats.

- We implement MR-RePair and experimentally demonstrate that MR-RePair produces smaller grammars than all tested implementations of RePair. For a highly repetitive text used in the experiments, MR-RePair decreased the size of the constructed grammar to about 55% of that of RePair.

- We discuss how the sizes of generated grammars differ depending on the implementation of RePair, and prove that a lower bound of the maximum difference of the sizes is (Definition 1 and Theorem 3).

- We describe Naïve-MR-RePair, which is a naïve version of our MR-RePair. Furthermore, we prove that there is the case where the grammar size of Naïve-MR-RePair becomes larger than that of RePair in logarithmic order of the length of input string (Theorem 4).

- We performed our experiment again following the advice of a DCC reviewer.

- For some of the lemmas we omitted the proofs in the previous version, in this version, we show them all.

2. Preliminaries

2.1. Basic Notations and Terms

2.2. Maximal Repeats

2.3. Grammar Compression

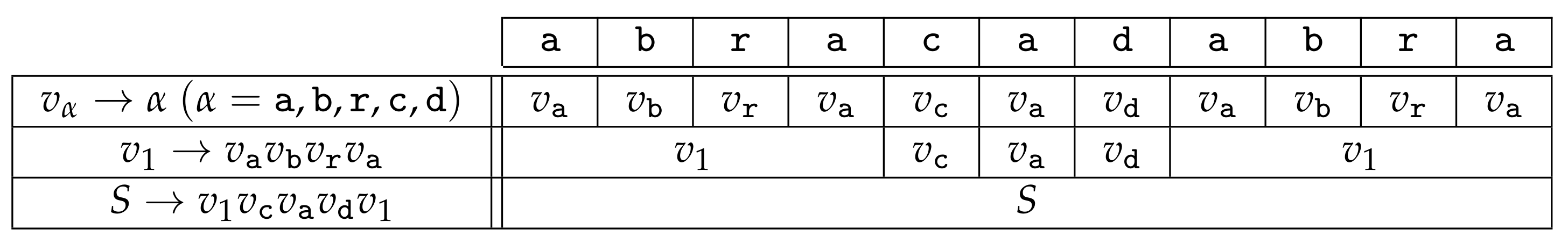

2.4. RePair

- Step 1. Replace each symbol with a new variable and add to R.

- Step 2. Find the most frequent pair p in T.

- Step 3. Replace every occurrence (or, as many occurrences as possible, when p is a pair consisting of the same symbol) of p with a new variable v, and then, add to R.

- Step 4. Re-evaluate the frequencies of pairs for the updated text generated in Step 3. If the maximum frequency is 1, add to R, and terminate. Otherwise, return to Step 2.

3. Analysis of RePair

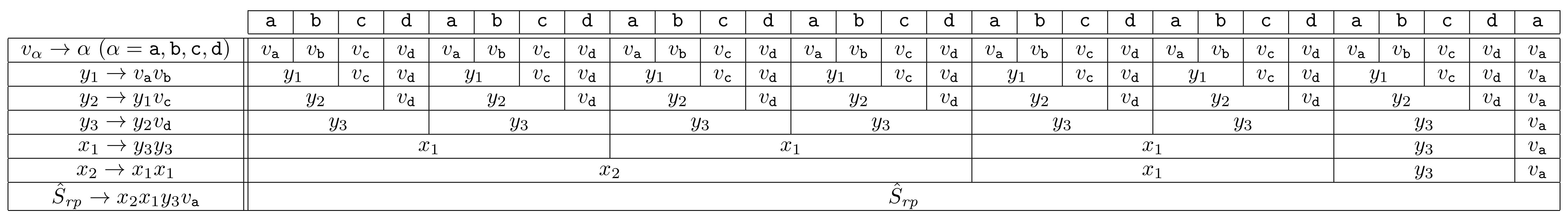

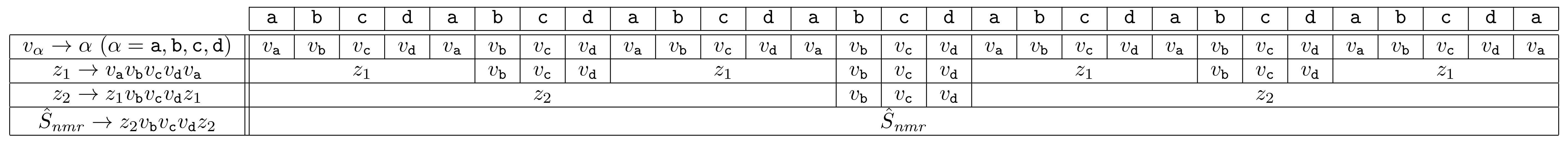

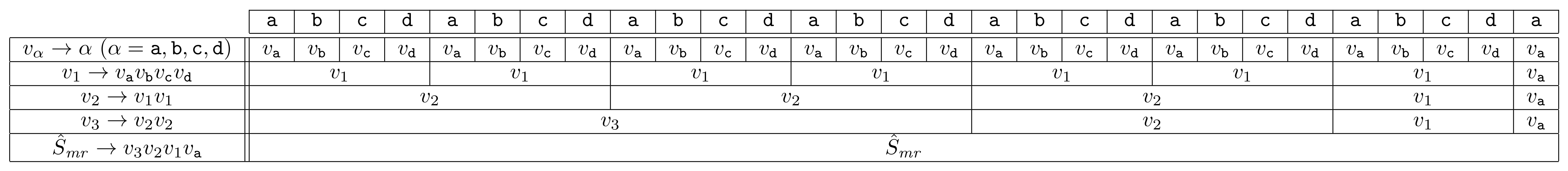

3.1. RePair and Maximal Repeats

3.2. MR-Order

- ,

- ,

- ,

- ,

- ,

- ,

- ,

- ,

- ,

- ,

- ,

- .

3.3. Greatest Size Difference of RePair

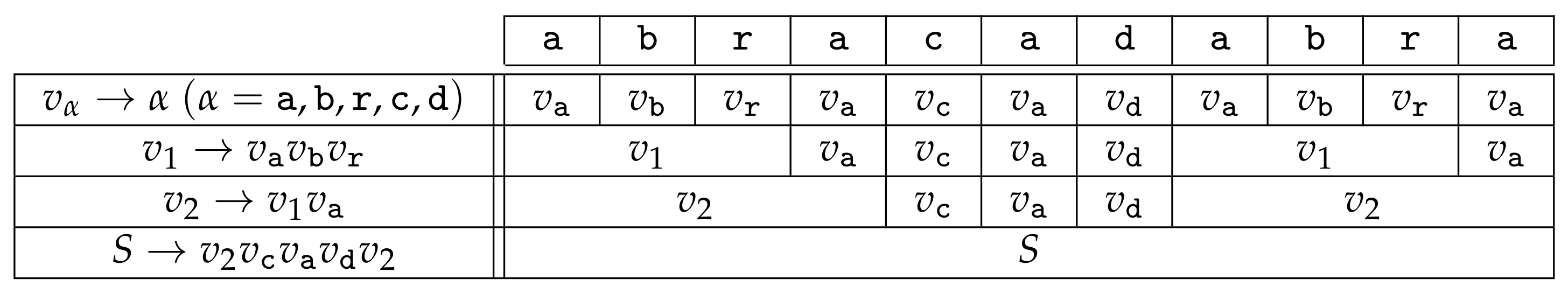

4. MR-RePair

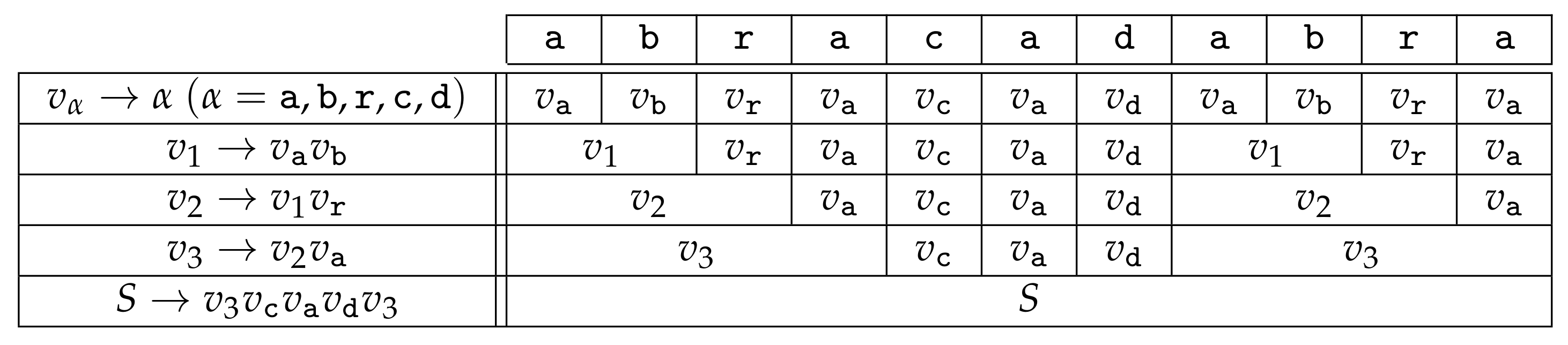

4.1. Naïve-MR-RePair

- Step 1.Replace each symbol with a new variable and add to R.

- Step 2.Find the most frequent maximal repeat r in T.

- Step 3.Replace every occurrence (or as many occurrences as possible, when there are overlaps) of r in T with a new variable v and then add to R.

- Step 4.Re-evaluate the frequencies of maximal repeats for the updated text generated inStep 3. If the maximum frequency is 1, add to R and terminate. Otherwise, return toStep 2.

- :

- Assume that for and for , then consists of

- rules with ,

- one rule and rules for , and

- one rule .

- :

- Assume that and for , then consists of

- one rule , and

- rules for and .

4.2. MR-RePair

- Step 1.Replace each symbol with a new variable and add to R.

- Step 2.Find the most frequent maximal repeat r in T.

- Step 3.Check if and , and if so, use instead of r inStep 4.

- Step 4.Replace every occurrence of r with a new variable v and then add to R.

- Step 5.Re-evaluate the frequencies of maximal repeats for the updated text generated inStep 4. If the maximum frequency is 1, add to R and terminate. Otherwise, return toStep 2.

5. Experiments

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

References

- Charikar, M.; Lehman, E.; Liu, D.; Ring, P.; Prabhakaran, M.; Sahai, A.; Shelat, A. The smallest grammar problem. IEEE Trans. Inf. Theory 2005, 51, 2554–2576. [Google Scholar] [CrossRef]

- Larsson, N.J.; Moffat, A. Off-line dictionary-based compression. Proc. IEEE 2000, 88, 1722–1732. [Google Scholar] [CrossRef]

- Claude, F.; Navarro, G. Fast and compact web graph representations. ACM Trans. Web 2010, 4, 16:1–16:31. [Google Scholar] [CrossRef]

- González, R.; Navarro, G. Compressed text indexes with fast locate. In Annual Symposium on Combinatorial Pattern Matching (CPM 2007); Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2007; Volume 4580, pp. 216–227. [Google Scholar]

- Wan, R. Browsing and Searching Compressed Documents. Ph.D. Thesis, The University of Melbourne, Melbourne, Australia, 2003. [Google Scholar]

- Masaki, T.; Kida, T. Online Grammar Transformation Based on Re-Pair Algorithm. In Proceedings of the Data Compression Conference (2016 DCC), Snowbird, UT, USA, 29 March–1 April 2016; pp. 349–358. [Google Scholar]

- Bille, P.; Gørtz, I.L.; Prezza, N. Space-Efficient Re-Pair Compression. In Proceedings of the Data Compression Conference (DCC 2017), Snowbird, UT, USA, 4–7 April 2017; pp. 171–180. [Google Scholar]

- Sekine, K.; Sasakawa, H.; Yoshida, S.; Kida, T. Adaptive dictionary sharing method for Re-Pair algorithm. In Proceedings of the Data Compression Conference (DCC 2014), Snowbird, UT, USA, 26–28 March 2014; p. 425. [Google Scholar]

- Lohrey, M.; Maneth, S.; Mennicke, R. XML tree structure compression using RePair. Inf. Syst. 2013, 38, 1150–1167. [Google Scholar] [CrossRef]

- Tabei, Y.; Saigo, H.; Yamanishi, Y.; Puglisi, S.J. Scalable partial least squares regression on grammar-compressed data matrices. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD 2016), San Francisco, CA, USA, 13–17 August 2016; pp. 1875–1884. [Google Scholar]

- Navarro, G.; Russo, L.M. Re-pair Achieves High-Order Entropy. In Proceedings of the Data Compression Conference (DCC 2008), Snowbird, UT, USA, 25–27 March 2008; p. 537. [Google Scholar]

- Ochoa, C.; Navarro, G. RePair and All Irreducible Grammars are Upper Bounded by High-Order Empirical Entropy. IEEE Trans. Inf. Theory 2018, 1–5. [Google Scholar] [CrossRef]

- Belazzougui, D.; Cunial, F.; Gagie, T.; Prezza, N.; Raffinot, M. Composite Repetition-Aware Data Structures. In Annual Symposium on Combinatorial Pattern Matching (CPM 2015); Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2015; Volume 9133, pp. 26–39. [Google Scholar]

- Belazzougui, D.; Cunial, F. Fast label extraction in the CDAWG. In International Symposium on String Processing and Information Retrieval (SPIRE 2017); Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2017; Volume 10508, pp. 161–175. [Google Scholar]

- Belazzougui, D.; Cunial, F. Representing the Suffix Tree with the CDAWG. In 28th Annual Symposium on Combinatorial Pattern Matching (CPM 2017); Leibniz International Processings in Informatics; Kärkkäinen, J., Radoszewski, J., Rytter, W., Eds.; Schloss Dagstuhl—Leibniz-Zentrum fuer Informatik: Wadern, Germany, 2017; Volume 78, pp. 7:1–7:13. [Google Scholar] [CrossRef]

- Takagi, T.; Goto, K.; Fujishige, Y.; Inenaga, S.; Arimura, H. Linear-Size CDAWG: New Repetition-Aware Indexing and Grammar Compression. In 24th International Symposium on String Processing and Information Retrieval (SPIRE 2017); Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2017; Volume 10508, pp. 304–316. [Google Scholar]

- Apostolico, A.; Lonardi, S. Off-line compression by greedy textual substitution. Proc. IEEE 2000, 88, 1733–1744. [Google Scholar] [CrossRef]

- Inenaga, S.; Funamoto, T.; Takeda, M.; Shinohara, A. Linear-time off-line text compression by longest-first substitution. In 10th International Symposium on String Processing and Information Retrieval (SPIRE 2003); Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2003; Volume 2857, pp. 137–152. [Google Scholar]

- Nakamura, R.; Bannai, H.; Inenaga, S.; Takeda, M. Simple Linear-Time Off-Line Text Compression by Longest-First Substitution. In Proceedings of the Data Compression Conference (DCC 2007), Snowbird, UT, USA, 27–29 March 2007; pp. 123–132. [Google Scholar]

- Gańczorz, M.; Jeż, A. Improvements on Re-Pair Grammar Compressor. In Proceedings of the Data Compression Conference (DCC 2017), Snowbird, UT, USA, 4–7 April 2017; pp. 181–190. [Google Scholar]

- Claude, F.; Navarro, G. Self-Indexed Grammar-Based Compression. Fundam. Inform. 2011, 111, 313–337. [Google Scholar] [CrossRef]

- Claude, F.; Navarro, G. Improved Grammar-Based Compressed Indexes. In Proceedings of the 19th International Symposium on String Processing and Information Retrieval (SPIRE 2012), Cartagena de Indias, Colombia, 21–25 October 2012; pp. 180–192. [Google Scholar] [CrossRef]

- Gawrychowski, P. Faster Algorithm for Computing the Edit Distance between SLP-Compressed Strings. In Proceedings of the 19th International Symposium on String Processing and Information Retrieval (SPIRE 2012), Cartagena de Indias, Colombia, 21–25 October 2012; pp. 229–236. [Google Scholar]

- Goto, K.; Bannai, H.; Inenaga, S.; Takeda, M. Fast q-gram mining on SLP compressed strings. J. Discret. Algorithms 2013, 18, 89–99. [Google Scholar] [CrossRef][Green Version]

- Bille, P.; Cording, P.H.; Gørtz, I.L. Compact Q-gram Profiling of Compressed Strings. Theor. Comput. Sci. 2014, 550, 51–58. [Google Scholar] [CrossRef]

- Tomohiro, I.; Nishimoto, T.; Inenaga, S.; Bannai, H.; Takeda, M. Compressed automata for dictionary matching. Theor. Comput. Sci. 2015, 578, 30–41. [Google Scholar] [CrossRef]

- Jeż, A. Faster Fully Compressed Pattern Matching by Recompression. ACM Trans. Algorithms 2015, 11, 20:1–20:43. [Google Scholar] [CrossRef]

- Bille, P.; Cording, P.H.; Gørtz, I.L. Compressed Subsequence Matching and Packed Tree Coloring. Algorithmica 2017, 77, 336–348. [Google Scholar] [CrossRef]

- Furuya, I.; Takagi, T.; Nakashima, Y.; Inenaga, S.; Bannai, H.; Kida, T. MR-RePair: Grammar Compression based on Maximal Repeats. In Proceedings of the Data Compression Conference (DCC 2019), Snowbird, UT, USA, 26–29 March 2019; pp. 508–517. [Google Scholar]

| Text | Size (bytes) | Content | |

|---|---|---|---|

| rand77.txt | 2,097,152 | 77 | 32 copies of 1024 random patterns with a length of 64 |

| fib41 | 267,914,296 | 2 | Fibonacci string from the Pizza&Chili Corpus |

| einstein.de.txt | 92,758,441 | 117 | Edit history of the Wikipedia for Albert Einstein |

| E.coli | 4,638,690 | 4 | Complete genome of the E. Coli bacterium |

| bible.txt | 4,047,392 | 63 | The King James version of the bible |

| world192.txt | 2,473,400 | 94 | The CIA world fact book |

| Text File | RePair | Re-Pair | MR- | ||||

|---|---|---|---|---|---|---|---|

| Maruyama | Navarro | Prezza | Wan | Imp | RePair | ||

| rand77.txt | Rules | 41,651 | 41,642 | 41,632 | 41,675 | 41,661 | 4,492 |

| Total length | 83,302 | 83,284 | 83,264 | 83,350 | 83,322 | 46,143 | |

| Start variable | 9 | 2 | 7 | 2 | 2 | 9 | |

| Grammar size | 83,311 | 83,286 | 83,271 | 83,352 | 83,324 | 46,152 | |

| Execution time | 0.22 | 0.34 | 2.94 | 0.94 | 2.48 | 0.20 | |

| fib41 | Rules | 38 | 38 | 38 | 38 | - | 38 |

| Total length | 76 | 76 | 76 | 76 | - | 76 | |

| Start variable | 3 | 3 | 3 | 3 | - | 3 | |

| Grammar size | 79 | 79 | 79 | 79 | - | 79 | |

| Execution time | 9.99 | 14.38 | 48.85 | 85.39 | - | 14.88 | |

| einstein.de.txt | Rules | 49,968 | 49,949 | 50,218 | 50,057 | 49,933 | 21,787 |

| Total length | 99,936 | 99,898 | 100,436 | 100,114 | 99,866 | 71,709 | |

| Start variable | 12,734 | 12,665 | 13,419 | 12,610 | 12,672 | 12,683 | |

| Grammar size | 112,670 | 112,563 | 113,855 | 112,724 | 112,538 | 84,392 | |

| Execution time | 9.04 | 13.74 | 136.49 | 40.24 | 213.73 | 9.73 | |

| E.coli | Rules | 66,664 | 66,757 | 66,660 | 67,368 | 66,739 | 62,363 |

| Total length | 133,328 | 133,514 | 133,320 | 134,736 | 133,478 | 129,138 | |

| Start variable | 651,875 | 649,660 | 650,538 | 652,664 | 650,209 | 650,174 | |

| Grammar size | 785,203 | 783,174 | 783,858 | 787,400 | 783,687 | 779,312 | |

| Execution time | 0.52 | 0.65 | 9.82 | 2.00 | 11.29 | 0.58 | |

| bible.txt | Rules | 81,193 | 81,169 | 80,999 | 81,229 | 81,282 | 72,082 |

| Total length | 162,386 | 162,338 | 161,998 | 162,458 | 162,564 | 153,266 | |

| Start variable | 386,514 | 386,381 | 386,992 | 386,094 | 385,989 | 386,516 | |

| Grammar size | 548,900 | 548,719 | 548,990 | 548,552 | 548,553 | 539,782 | |

| Execution time | 0.51 | 0.65 | 8.41 | 1.85 | 11.32 | 0.57 | |

| world192.txt | Rules | 55,552 | 55,798 | 55,409 | 55,473 | 55,437 | 48,601 |

| Total length | 111,104 | 111,596 | 110,812 | 110,946 | 110,874 | 104,060 | |

| Start variable | 213,131 | 213,962 | 213,245 | 212,647 | 212,857 | 212,940 | |

| Grammar size | 324,235 | 325,558 | 324,057 | 323,593 | 323,731 | 317,000 | |

| Execution time | 0.32 | 0.55 | 4.92 | 1.09 | 6.81 | 0.36 | |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Furuya, I.; Takagi, T.; Nakashima, Y.; Inenaga, S.; Bannai, H.; Kida, T. Practical Grammar Compression Based on Maximal Repeats. Algorithms 2020, 13, 103. https://doi.org/10.3390/a13040103

Furuya I, Takagi T, Nakashima Y, Inenaga S, Bannai H, Kida T. Practical Grammar Compression Based on Maximal Repeats. Algorithms. 2020; 13(4):103. https://doi.org/10.3390/a13040103

Chicago/Turabian StyleFuruya, Isamu, Takuya Takagi, Yuto Nakashima, Shunsuke Inenaga, Hideo Bannai, and Takuya Kida. 2020. "Practical Grammar Compression Based on Maximal Repeats" Algorithms 13, no. 4: 103. https://doi.org/10.3390/a13040103

APA StyleFuruya, I., Takagi, T., Nakashima, Y., Inenaga, S., Bannai, H., & Kida, T. (2020). Practical Grammar Compression Based on Maximal Repeats. Algorithms, 13(4), 103. https://doi.org/10.3390/a13040103