1. Introduction

We first introduce the notion of clustering, the solution that is suggested by k-means++, and the generalization of the problem to support outliers in the input. We then describe our contribution in this context.

1.1. Clustering

For a given similarity measure, clustering is the problem of partitioning an input set of

n objects into subsets, such that objects in the same group are more similar to each other than to objects in the other sets. As mentioned in [

1], clustering problems arise in many different applications, including data mining and knowledge discovery [

2], data compression and vector quantization [

3], and pattern recognition and classification [

4]. However, for most of its variants, it is an NP-Hard problem when the number

k of clusters is part of the input, as elaborated and proved in [

5,

6]. In fact, the exponential dependency in

k is unavoidable even for approximating the (regular) Euclidean

k-means for clustering

n points in the plane [

7,

8].

Hence, constant or near-constant (logarithmic in n or k) multiplicative factor approximations to the desired cost function were suggested over the years, whose running time is polynomial in both n and k. Arguably, the most common version in both academy and industry is the k-means cost function, where the input is a set of n points in a metric space, and the goal is to compute the set of k centers (points) that minimizes the sum of squared distances over each input point to its closest center.

The

k-means++ method that was suggested independently by [

9,

10] is an extremely common and simple solution with probable approximation bounds. It provides an

multiplicative approximation in time

, e.g., for the Euclidean

d-dimensional space. It was also shown in [

10] that the approximation factor is

, if the input data is well separated in some formal sense. Recently, Ref. [

11] provided an

approximation for points in the

d-dimensional Euclidean space via exactly

k centers in time complexity of

.

The k-means++ algorithm is based on the intuition that the centroids should be well spread out. Hence, it samples k centers iteratively, via a distribution that is called -sampling and is proportional to the distance of each input point to the centers that were already chosen. The first center is chosen uniformly at random from the input.

The

k-means++ algorithm supports any distance to the power of

as explained in [

9]. Deterministic and other versions for the sum of (nonsquared) distances were suggested in [

12,

13,

14].

A more general approximations, called

bicriteria or

approximations, guarantee multiplicative factor

-approximation but the number of used center (for approximating the optimal

k centers) is

for some

. The factors

and

might be depended on

k and

n, and different methods give different dependencies and running times. For example, Ref. [

15] showed that sampling

different randomized centers yields an

-approximation and leverage it to support streaming data. Similarly, it was proved in [

16] that

-sampling

centers yields an

approximation.

The analysis of [

17] explores the value of

as a function of

.

A coreset for the k-median/mean problem is a small weighted set (usually subset) of the input points that approximates the sum of distances or sum of squared distances from the original (big) set P to every given set of k centers, usually up to multiplicative factor. In particular, we may compute an -approximation on the coreset to obtain -approximation for the original data.

For the special case of

k-means in the Euclidean space we can replace

d by

, including for coresets constructions, as explained in [

18]. Deterministic coresets for

k-means of size independent of

d were suggested in [

19].

1.2. Seeding

As explained in [

10], Lloyd [

20,

21,

22] suggested a simple iterative heuristic that aims to minimize the clustering cost, assuming a solution to the case

is known. It is a special case of the Expected Maximization (EM) heuristic for computing a local minimum. The algorithm is initialized with

k random points (seeds, centroids). At each iteration, each of the input points is classified to its closest centroid. A new set of

k centroids is constructed by taking the mean (or solving the problem for

, in the general case) of each of the current

k clusters. This method is repeated until convergence or any given stopping condition.

Due to its simplicity, and the convergence to a local minimum [

23], this method is very common; see [

3,

24,

25,

26,

27,

28,

29] and references therein. The method has further improved in [

1,

30,

31,

32].

The drawback of this approach is that it converges to a local minimum—the one which is closest to the initial centers that had been chosen and may be arbitrarily far from the global minimum. There is also no upper bound for the convergence rate and number of iterations. Therefore, a lot of research has been done to choose good initial points, called “seeds” [

33,

34,

35,

36,

37,

38,

39]. However, very few analytical guarantees were found to prove convergence.

A natural solution is to use provable approximation algorithms such as k-means++ above, and then apply Lloyd’s algorithm as a heuristic that hopefully improves the approximation in practice. Since Lloyd’s algorithm can only improve the initial solution, the provable upper bound on the approximation factor is still preserved.

1.3. Clustering with Outliers

In practice, data sets include some noise measurements which do not reflect a real part of the data. These are called outliers, and even a single outlier may completely change the optimal solution that is obtained without this outlier. One option to handle outliers is to change the distance function to a function that is more robust to outliers, such as M-estimators, e.g., where the distance between a pair of points is replaced by for some fixed that may depend on the scaling or spread of the data. Another option is to compute the set of k centers that minimizes the objective function, excluding the farthest m points from the candidate k centers. Here, is a given parameter for the number of outliers. Of course, given the optimal k centers for this problem, the m outliers are simply the farthest m input points, and given these m outliers the optimal solution is the k-means for the rest of the points. However, the main challenge is to approximate the global optimum, i.e., compute the optimal centers and outliers simultaneously. Guessing m is less challenging since it is an integer between 1 and n, and the cost function is monotonic in m, which enables the usage of binary search.

As explained in [

40], detecting the outliers themselves is also an NP-hard problem [

41]. Intensive research has been done on this problem, as explained in [

42], since the problem of outliers is known in numerous applications [

43,

44]). In the context of data mining, Ref. [

45] proposed a definition of distance-based outlier, which is free of any distributional assumptions and it can be generalized to multidimensional datasets. Following [

45], further variations have been proposed [

46,

47]. Consequently, Ref. [

48] introduced paradigm of local outlier factor (LOF). This paradigm has been extended in [

43,

49] in different directions.

As explained in [

50], and following the discussion in [

51,

52] provided an algorithm based on Lagrange-relaxation technique. Several algorithms [

51,

53,

54] were also developed. The work of [

55] gives a factor of

and a running time of

for the problem of

k-means with

m outliers. Another heuristic was developed by [

56]. Finally, [

50] provided an

-approximation in

time. In the context of

k-means, Ref. [

57] provided several algorithms of such constant factor approximation. However, the number of the points which approximate the outliers is much greater than

m, and is dependent on the data, as well as the algorithm running time.

2. Our Contribution

A natural open question is: “can we generalize the k-means++ algorithm to handle outliers”?

This paper answers this question affirmably in three senses that may be combined together:

- (i)

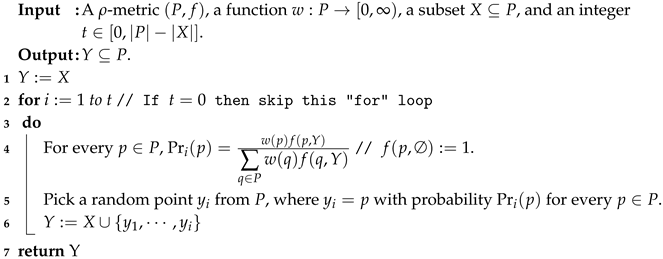

A small modification of k-means++ that supports M-estimators for handling outliers, with similar provable guarantees for both the running time and approximation factor. This family of functions includes most of the M-estimators, including nonconvex functions such as for some constant c, where is the distance between an input point and its closest center c. In fact, our version in Algorithm 1 supports any pseudodistance function or -metric that approximates the triangle inequality, as formalized in Definition 1 and Theorem 1.

- (ii)

A generalization of this solution to the case of k-mean/median problem with m outliers that takes time for constants k and m. To our knowledge, this is the first nontrivial approximation algorithm that takes time linear or even near-linear in n. The algorithm supports all the pseudodistance functions and -metric spaces, including the above M-estimators. See Corollary 4.

- (iii)

A Weak coreset of size and a larger strong coreset for approximating the sum of distances to any k-centers ignoring their farthest m input points. For details and exact definition see next subsection and Theorem 2.

Novel reduction: from k-means to -means. While the first result is a natural generalization of the original

k-means++, the second result uses a simple but powerful general reduction from

k-means with

m outliers to

-means (without outliers), that we did not find in previous papers. More precisely, in

Section 5, we prove that an approximation to the

-median with appropriate positive weight for each center (the size of its cluster), can be used to approximate the sum of distances from

P to

any k centers, excluding their farthest

m points in

P; see Corollary 3. This type of reductions may be considered as “coreset” and we suggest two types of them.

In the first coreset, the approximation error is additive, although the final result is a multiplicative constant factor. Nevertheless, the size of the suggested coreset is only , i.e., independent of both n and d, for constant -metric as the Euclidean space; see Theorem 2 for details. In particular, applying exhaustive search (in time that is exponential in k) on this coreset implies an -factor approximation for the k-median with m outliers, for any -metric in time when the parameters m and k are constants; see Corollary 4.

As stated in the previous section, the exponential dependency in

k is unavoidable even for the (regular)

k-means in the plane [

7,

8]. Nevertheless, constant factor approximations that take time that is polynomial in both

k and

m may be doable by applying more involved approximation algorithms for the

k-median with

m outliers on our small “coreset” which contains only

points. e.g., the polynomial time algorithm of Ke Chen [

50].

Theorem 2 also suggests a second “traditional coreset” that yields

-approximation for the

k-median with

m outliers, i.e., that obtains

-approximation for the sum of distances from

any set of

k centers and their farthest

m outliers in the original set

P. The price is that we need

approximation to the

-median. As was proved in [

58], this is doable in the Euclidean

d-dimensional space by running

k-means++ for

iterations instead of only

iterations (number of resulting centers). It was also proved in [

58] that the exponential dependency on

d is unavoidable in the worst case. See

Section 5 for details.

For the special case of

k-means in the Euclidean space we can replace

d by

, including for the coresets constructions above, as explained in [

18]. Deterministic version of our coresets for

k-median with

m outliers may be obtained via [

19].

3. Triangular Calculus

The algorithms in this paper support a large family of distance and nondistance functions. To exactly define this family, and their dependency on both the approximation factors and running times of the algorithms, we need to introduce the notion of -metric that generalizes the definition of a metric space. For a point and a set , we define . The minimum or sum over an empty set is defined to be zero.

Definition 1 (

-metric).

Let , P be a finite set and be a symmetric function such that for every . The pair is a ρ-metric if for every the following “approximated” triangle inequality holds: For example, metric space

is a

-metric for

. If we define

, as in the

k-means case, it is easy to prove (

1) for

.

The approximated triangle inequality also holds for sets as follows.

Lemma 1. (See 6.1 of [

59])

Let be a ρ-metric. Then, for every pair of points and a subset we have Proof. For every

, and a center

that is closest to

q, i.e.,

, we have

□

Our generalization of the

k-means++ algorithm for other distance functions needs only the above definition of

-metric. However, to improve the approximation bounds for the case of

k-means with

m outliers in

Section 5, we introduce the following variant of the triangle inequality.

Definition 2 (

metric).

Let be a ρ-metric. For , the pair is a -metric if for every we have For example, for a metric space

) the inequality holds by the triangle inequality for

and every

. For squared distance, we have

for every

; see [

18].

The generalization for sets is a bit more involved as follows.

Lemma 2. Letbe a-

metric.

For every setwe have Proof. Let

such that

and

. Without loss of generality, we assume

. We have

where the last inequality is by (

3).

The last term is bounded by

via Definition 2, but this bound is useless for the case

. Instead, we use (

1) to obtain

Plugging the last inequality in (

4) proves the case

as

□

Any -metric is also a -metric for some other related constants as follows.

Lemma 3. Let be a ρ-metric. Then is a -metric, where and .

Proof. Let

. We need to prove that

Without loss of generality,

, otherwise the claim is trivial. We then have

where the first inequality is by the approximated triangle inequality in (

1), and the second inequality is by the assumption

. □

How can we prove that a given function satisfies the condition of -metric or -metric? If f is some function of a metric distance, say, or most M-estimators functions such as , this may be easy via the following lemma.

Lemma 4 (Log-Log Lipschitz condition).

Let be a monotonic nondecreasing function that satisfies the following (Log-Log Lipschitz) condition: there is such that for every and we haveLet be a metric space, and be a mapping from every to . Then is a -metric where

- (i)

,

- (ii)

and, if , and

- (iii)

and, if .

Proof. We denote , and .

(i) We need to prove that

. If

then

, so

and Claim (i) holds for any

. Similarly, Claim (i) holds for

by symmetry. So we assume

. For every

we have

where (

7) is by the triangle inequality, and (8) is by substituting

x and

y respectively in (

5). Substituting

yields

We now compute the maximum of the factor

, whose derivative is zero when

i.e., when

. In this case

. The other extremal values of

h are

and

where

. Hence,

for

and

for

. Plugging these bounds in (

9) yields Claim (i)

(ii)–(iii) We prove that . If then .

If

, we need to prove that

. If

then

by (

6), and thus for every

and

we have

as desired. We thus need to prove the last inequality for

.

We assume

and

(so

and thus

), otherwise the claim trivially holds. Let

. Hence,

where the first inequality is by (

5) and the second is by the definition of

q and the assumption

. Rearranging gives

, so

Indeed, if

then

,

,

and (

12) trivially holds with equality. Otherwise

), we let

so that

where the inequality is by Young’s inequality

for every

and

such that

. We then obtain (

12) by substituting

so that

Next, we bound the rightmost expression of (

12). We have

for every

, since the linear function

over

is tangent to the concave function

at the point

, which is their only intersection point. By substituting

we obtain

By the triangle inequality,

Combining (

14) and (

15) yields

Since

by (

10) and the definition of

, we have

, i.e.,

by the monotonicity of

g. Hence,

by (

5), so

By combining the last inequalities we obtain the desired result

where (

18) holds by (

11), (19) is by (

12), (20) holds by (

16), and (21) is by (

17). □

4. k-Means++ for ρ-Metric

In this section we suggest a generalization of the k-means++ algorithm for every -metric and not only distance to the power of as claimed in the original version. In particular, we consider nonconvex M-estimator functions. Our goal is then to compute an -approximation to the k-median problem for .

Let

be called the

weight function over

P. For a nonempty set

we define

If Q is empty then . For brevity, we denote , and . For an integer we denote .

Definition 3 (

k-median for

-metric spaces).

Let be a ρ-metric, and be an integer. A set is a k-median

of P ifand this optimum is denoted by . For , a set is an -approximation

for the k-median of P ifNote that Y might have less or more than k centers.

The following lemma implies that sampling an input point, based on the distribution of w, gives a 2-approximation to the 1-mean.

Lemma 5. For every nonempty set , Proof. Let

be the weighted median of

Q, i.e.,

. By (1), for every

,

Summing over every weighted

yields

Summing again over the weighted points of

Q yields

□

We also need the following approximated triangle inequality for weighted sets.

Corollary 1 (Approximated triangle inequality).

Let , and be nonempty sets. Then Proof. Summing (

2) over every weighted

yields

□

The following lemma states that if we use sampling from P and hit a point in an uncovered cluster Q, then it is a 2-approximation for .

Lemma 6. Let such that . Then Proof. By Corollary 1, for every

,

Multiplying this by

yields

After summing over every weighted point

we obtain

□

Finally, this lemma is the generalization of the original lemma of the k-means++ versions.

Lemma 7. Let be a pair of integers. If and then the following holds.

Let be a partition of P such that . Let denote the union of the first u sets. Let be a set that covers , i.e., for every integer , and . Let Y be the output of a call to KMeans++; see Algorithm 1. Then where , and the randomness is over the t sampled points in .

| Algorithm 1: KMeans++(); see Theorem 1. |

![Algorithms 13 00311 i001 Algorithms 13 00311 i001]() |

Proof. The proof is by the following induction on : (i) the base case (and any ), and (ii) the inductive step (and any ).

(i) Base Case: . We then have

where the first two equalities hold since

is not random, and the inequality holds since

,

and

. Hence, the lemma holds for

and any

.

(ii) Inductive step: . Let

denote the first sampled point that was inserted to

during the execution of Line 5 in Algorithm 1. Let

. Let

such that

, and

denote the remaining “uncovered”

clusters, i.e,

. The distribution of

Y conditioned on the known sample

y is the same as the output of a call to KM

eans++

where

. Hence, we need to bound

We will bound each of the last two terms by expressions that are independent of or .

Bound on

. Here

,

(independent of

j), and by the inductive assumption that the lemma holds after replacing

t with

, we obtain

Bound on

. In this case,

and

. Hence,

Put and . We remove the above dependency of upon x and then m.

We have

where the first inequality holds by the inductive assumption if

, or since

if

. The second inequality holds since

, and since

.

Only the term

depends on

x and not only on

m. Summing it over every possible

yields

where the inequalities follow by substituting

in Lemma 6 and Lemma 5, respectively. Hence, the expected value of (

27) over

x is nonpositive as

Combining this with (

26) and then (

27) yields a bound on

that is independent upon

x,

It is left to remove the dependency on

m, which occurs in the last term

of (

28). We have

By Jensen’s (or power-mean) inequality, for every convex function

, and a real vector

we have

. Specifically, for

and

,

Plugging this in (

29) gives the desired bound on the term

,

where the last inequality holds since

. Plugging the last inequality in (

28) bounds

by

Bound on

. Plugging (

30) and (

25) in (

24) yields

Firstly, we have

where the last inequality holds as

.

Hence, we can bound (

31) by

This proves the inductive step and bounds (

31) by

□

Corollary 2. Let and let be a point that is sampled at random from a nonempty set such that with probability . Then with probability at least , Proof. By Markov’s inequality, for every non-negative random variable

G and

we have

Substituting

yields

Plugging (

35) in (

34) yields,

□

The following theorem is a particular case of Lemma 7. It proves that the output of KMeans++; see Algorithm 1, is a -approximation of its optimum.

Theorem 1. Let be a ρ-metric, and be an integer; See Definition 1. Let , and let Y be the output of a call to KMeans++; See Algorithm 1. Then , and with probability at least , Moreover, can be computed in time.

Proof. Let

and let

be an optimal partition of

P, i.e.,

. Let

be a point that is sampled at random from

such that

with probability

. Applying Lemma 7 with

and

yields,

where (38) holds by the definition of

f* and

. By plugging

in Corollary 2, with probability at least

over the randomness of

, we have

and since

, with probability at least

we also have

Plugging (

40) in (38) yields that with probability at least

over the randomness of

,

Relating to the randomness of

Y, by Markov’s inequality we have

Using the union bound on (

42) and (

43) we obtain

and thus

□

5. k-Means with m Outliers

In this section we consider the problem of k-means with m outliers of a given set P, i.e., where the cost function for a given set X of k centers is instead of , and is the subset of the closest points to the centers. Ties are broken arbitrarily. That is, can be considered as the set of m outliers that are ignored in the summation of errors.

Definition 4 (

k-median with

m outliers).

Let be a ρ-metric, , and be a pair of integers. For every subset of points in P and a set of centers, denote by the closest points to P. A set of k centers (points in P) is a k-median with m outliers of P ifFor , a set Y is an α-approximation to the k-median of P with m outliers if Note that we allow Y to have centers.

This is a harder and more general problem, since for we get the k-median problem from the previous sections.

We prove in this section that our generalized k-means++ can be served as a “weaker” type of coreset which admits an additive error that yields a constant factor approximation for the k-means with m outliers if is constant.

In fact, we prove that any approximated solution to the

-median of

P implies such a coreset, but Algorithm 1 is both simple and general for any

-distance. Moreover, by taking more than

centers, e.g., running Algorithm 1 additional iterations, we may reduce the approximation error

of the coreset to obtain “strong” regular coreset, i.e., that introduces a

-approximation for any given query set

X of

k centers. This is by having an

approximation for the

median; see Theorem 2. Upper and lower bounds for the number

of centers to obtain such

is the subject of [

58]. The authors prove that a constant approximation to the

-median of

P yields such

-approximation to the

k-median. This implies that running Algorithm 1

iterations would yield a coreset that admits

-approximation error for any given set of

k-centers with their farthest

m outliers, as traditional coresets. Unfortunately, the lower bounds of [

58] show that the exponential dependency in

d is unavoidable. However, the counter example is extremely artificial. In real world data, we may simply run Algorithm 1 until the approximation error

is sufficiently small and hope this will happen after few

iterations due to the structure of the data.

We state our result for the metric case and unweighted input. However, the proof essentially uses only the triangle inequality and its variants of

Section 3. For simplicity of notation, we use the term multiset

C instead of a weighted set

, where

, and

denote the number of copies of each item in

C. The size

of a multiset denotes its number of points (including duplicated points), unless stated otherwise.

Unlike the case of

k-means/median, there are few solutions to

k means with

m outliers. Chen [

50] provided a (multiplicative) constant factor approximation for the

k-median with

m outliers on any metric space (that satisfies the triangle inequality, i.e., with

= 1) that runs in time

, i.e., polynomial in both

k and

m. Applying this solution on our suggested coreset as explained in Corollary 3 might reduce this dependency on

n from cubic to linear, due to the fact that its construction time which is linear in

n and also polynomial in

. In order to obtain a more general result for any

-distance, as in the previous section, we use a simple exhaustive search that takes time exponential in

but still

for every constant

and

.

Our solution also implies streaming, parallel algorithm for k-median with m outliers on distributed data. This is simply because many k-median algorithms exist for these computation models, and they can be used to construct the “coreset” in Theorem 2 after replacing k with . An existing offline nonparallel solution for the k-median with m outliers, e.g., from the previous paragraph, may then be applied on the resulting coreset to extract the final approximation as explained in Corollary 4.

For every point , let denote its closest point in Y. For a subset Q of P, define to be the union (multiset) of its projection on Y. Ties are broken arbitrarily. Recall the definition of and -approximation from Definition 4. We now ready to prove the main technical result for the k-median with m outliers.

Theorem 2 (coreset for

k-median with

m outliers).

Let be a metric space, and let . Let such that , and let Y be an α-approximation for the -median of P (without outliers). Let . Then for every set of centers, we have Proof. Let be a set of centers. The multiset contains points that are contained in the k centers of Y. However, we do not know how to approximate the number of copies of each center in without using . One option is to guess approximation to the weight of each of these points, by observing that it is for some , and then using exhaustive search. However, the running time would be exponential in and the weights depend on the query X.

Instead, we observe that while we cannot compute

via

C, we have the upper bound

. It follows from the fact that

and

, so

We now bound the rightmost term.

For a single point

we have

where (

47) holds by substituting

and

(or vice vera) and

in Lemma 2, and (48) holds by the definition of

.

Summing (48) over every

for some set

yields

where (

49) holds by the definition of

f, (50) holds by the triangle inequality, and (51) is by (48). The term

is bounded by

where the first inequality holds since

, and the last inequality holds since

Y is an

-approximation for the

-median of

P. Plugging (

53) in (51) yields

The rest of the proof is by the following case analysis: (i) , and (ii) .

Case (i): . The bound for this case is

where (

55) holds by (

46), and (56) holds by substituting

in (

54). This bounds (

44) for the case that

.

Case (ii):. In this case, denote the

corresponding points to

in

P by

Similarly to (

46),

and

, so

Hence,

where (

58) holds by (

57), (59) is by substituting

in (

54), and (60) follows from the assumption

of Case (ii).

Combining all together, using (56) and (60) we can bound (

44) by

and (

45) follows from (59), assuming

(otherwise (

45) trivially holds). □

The motivation for (

44) is to obtain a traditional coreset, in the sense of

-approximation to any given set of

k centers. To this end, we need to have

. For the natural case where

this implies

approximation to the

-means

of

P. This is doable for every input set

P in the Euclidean space and many others as was proved in [

58] but in the price of taking

centers.

This is why we also added the bound

45, which suffices to obtain a “weaker” coreset that yields only constant factor approximation to the

k-means with

m outliers, but using only

centers, as explained in the following corollary.

Corollary 3 (From

-median to

k-median with

m outliers ).

Let be a -metric, , and such that . Suppose that, in time, we can compute an α-approximation Y for the -median of P (without outliers). Suppose also that we can compute in time, a β-approximation of the k-median with m outliers for any given multiset of (possibly duplicated) points. Then, X is aapproximation for the k-median with m outliers of P and can be computed in time. Proof. We compute

Y in

time and then project

P onto

Y to obtain a set

of

distinct points, as defined in the proof of Theorem 2. We then compute a

approximation

for the

k-median with

m outliers of

P,

in

time. For a given set

of centers and a subset

of points, we denote by

the closest

points in

Q to

X. Let

denote the

k-median with

m outliers of

P. Hence,

where (

65) holds by (

45), (66) holds by (

64), and (67) holds by plugging

in (

44). □

To get rid of the so many parameters in the last theorems, in the next corollary we assume that they are all constant and suggest a simple solution to the

k-median with

m outliers by running exhaustive search on our weaker coreset. The running time is exponential in

k but this may be fixed by running the more involved polynomial-time approximation of Chen [

50] for

k-means with

m outliers on our coreset.

Corollary 4. Let such that , and let be constants. Let be a ρ-metric. Then, a set can be computed in time such that, with probability at least 0.99, X is a -approximation for the k-median with m outliers of P.

Proof. Given a set Q of points, it is easy to compute its k-median with m outliers in time as follows. We run exhaustive search over every subset Y of size in Q. For each such subset Y, we compute its farthest m points Z in Q. The set Y that minimizes is an optimal solution, since one of these sets of k centers is a k-median with m outliers of P. Since there are such subsets, and each check requires time, the overall running time is .

Plugging in Theorem 1 implies that we can compute a set of size which is, with probability at least , an -approximation to the -median of P in time . By Lemma 3, is a -metric for , and . Plugging and in Corollary 3 then proves Corollary 4. □