Citywide Cellular Traffic Prediction Based on a Hybrid Spatiotemporal Network

Abstract

1. Introduction

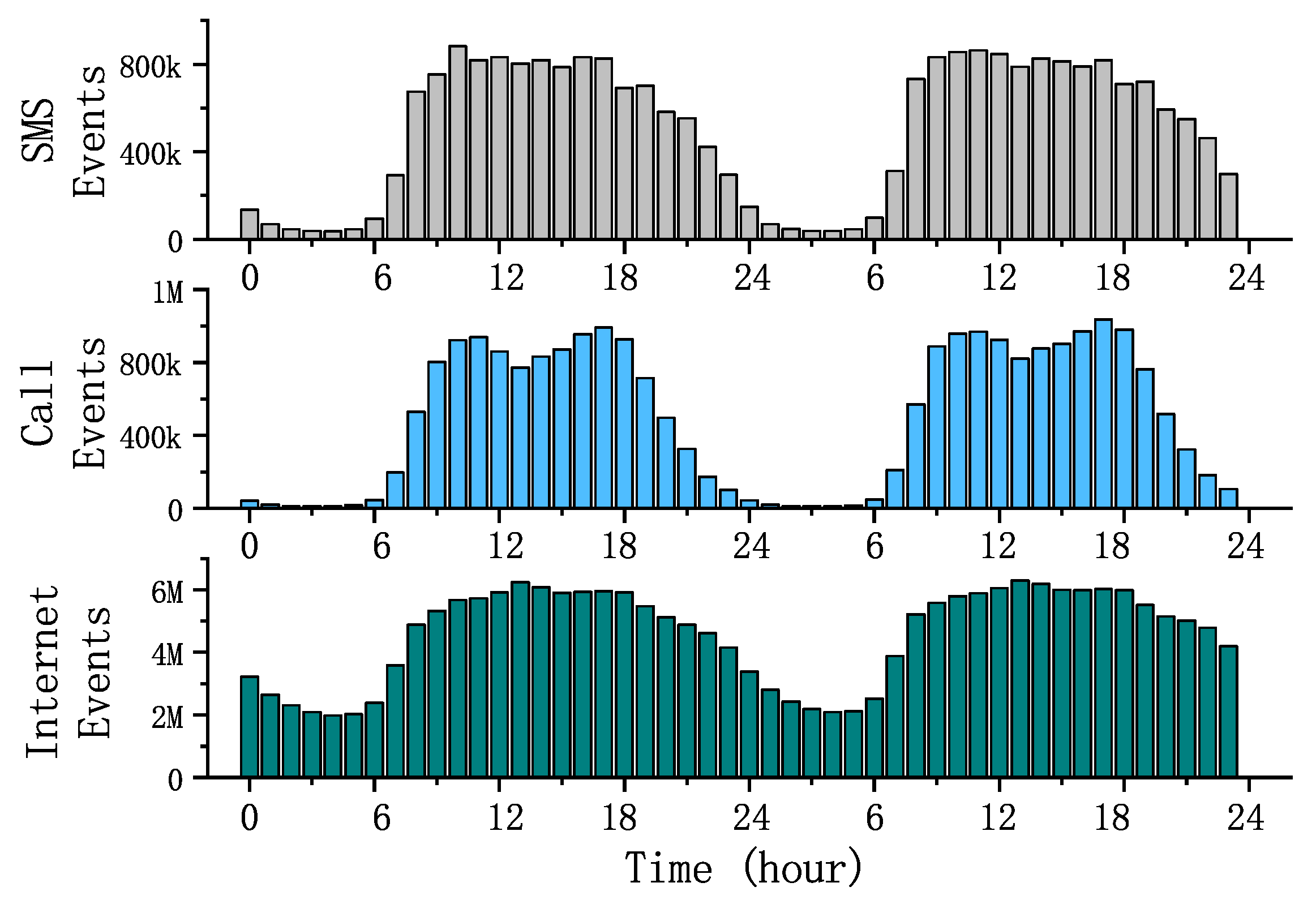

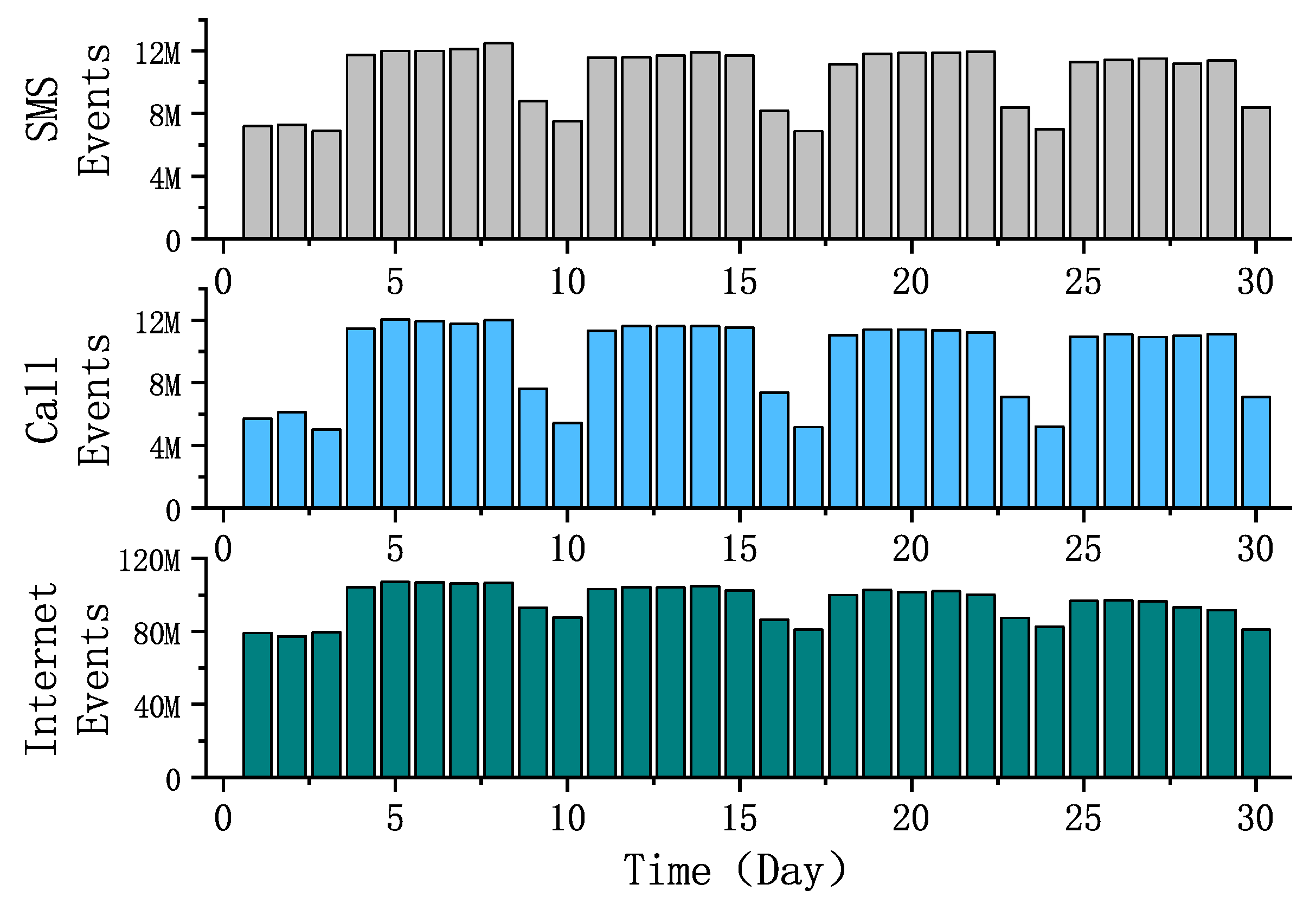

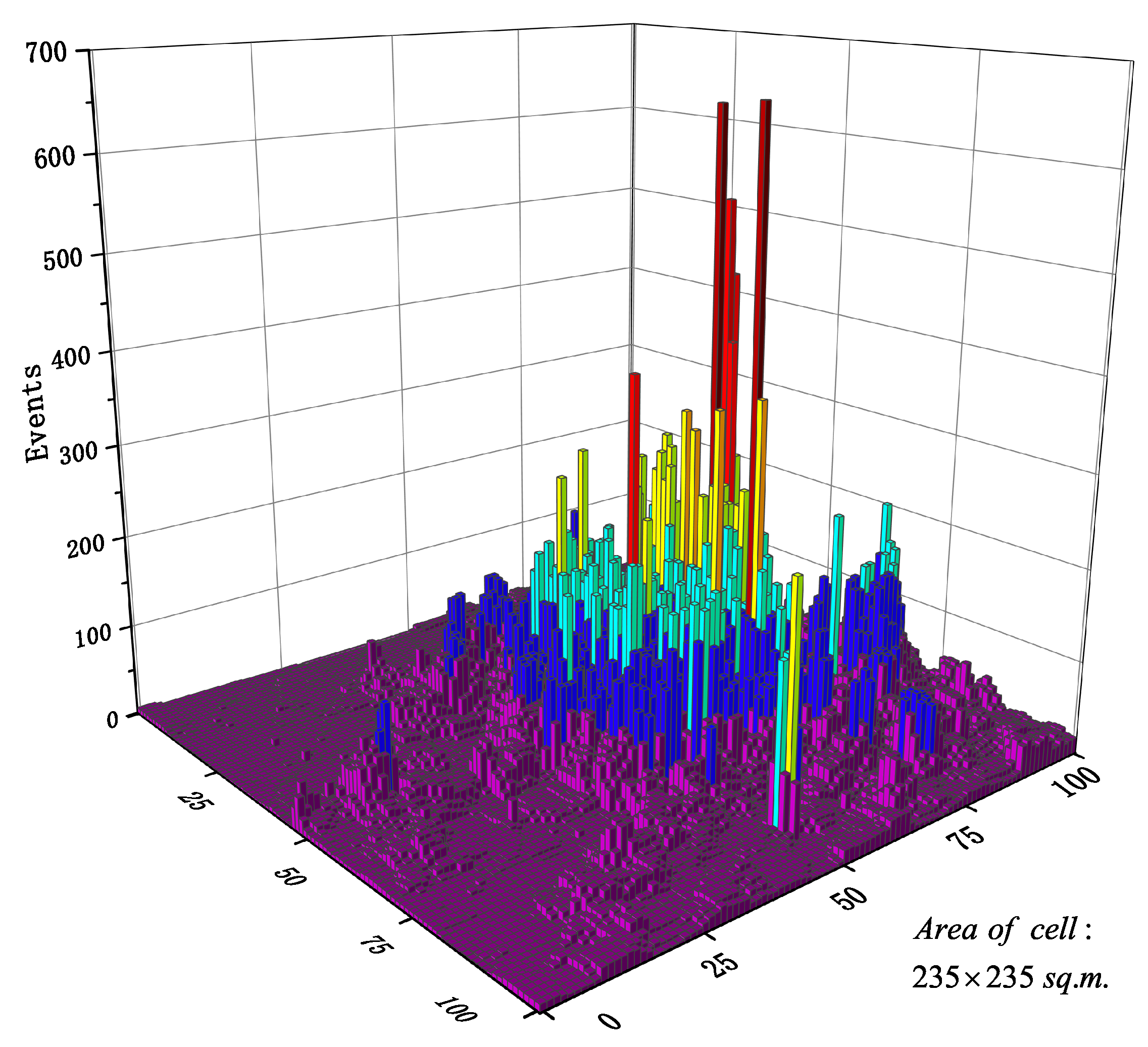

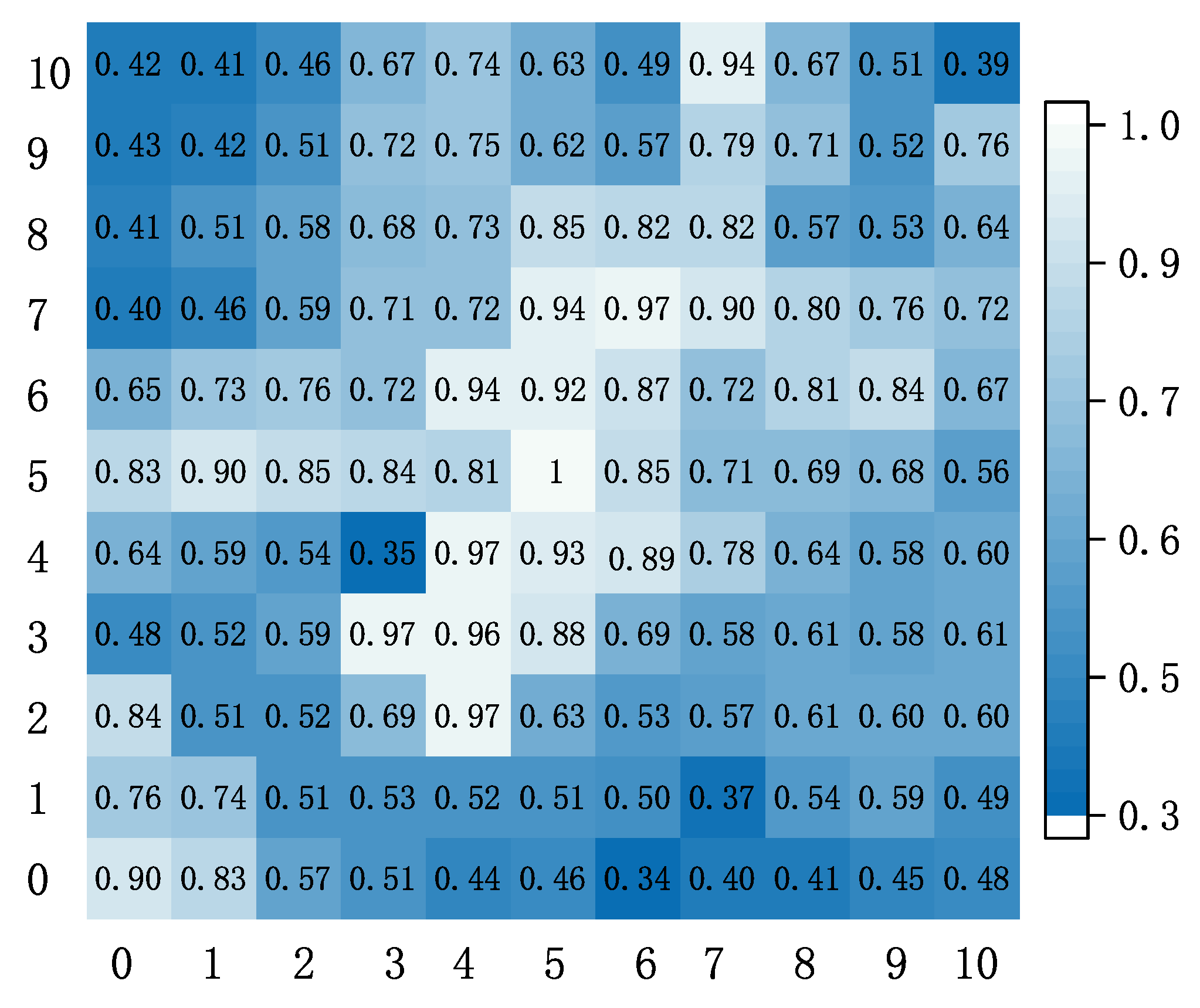

2. Data Observation and Analysis

2.1. Temporal Domain

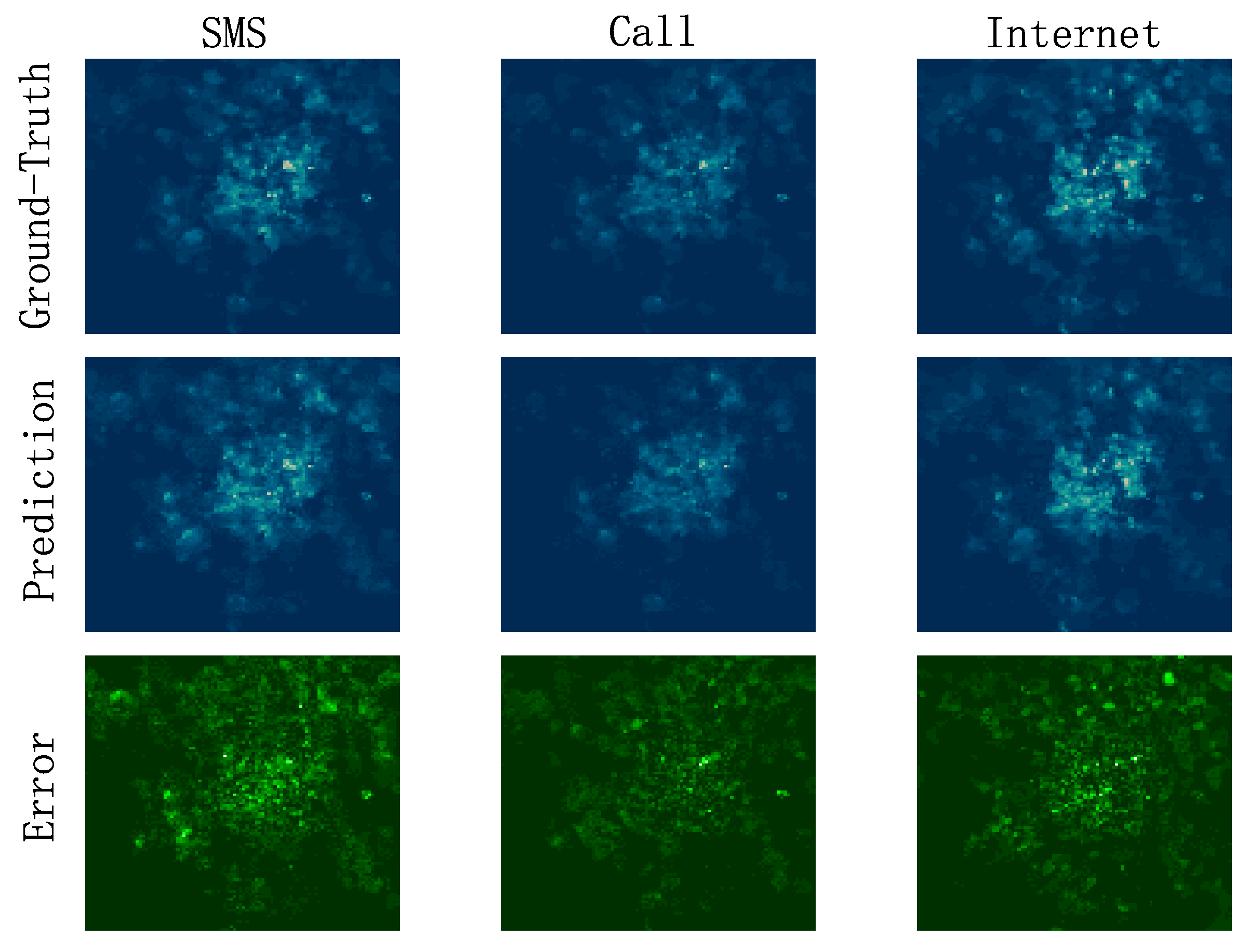

2.2. Spatial Domain

3. Cellular Traffic Prediction Model

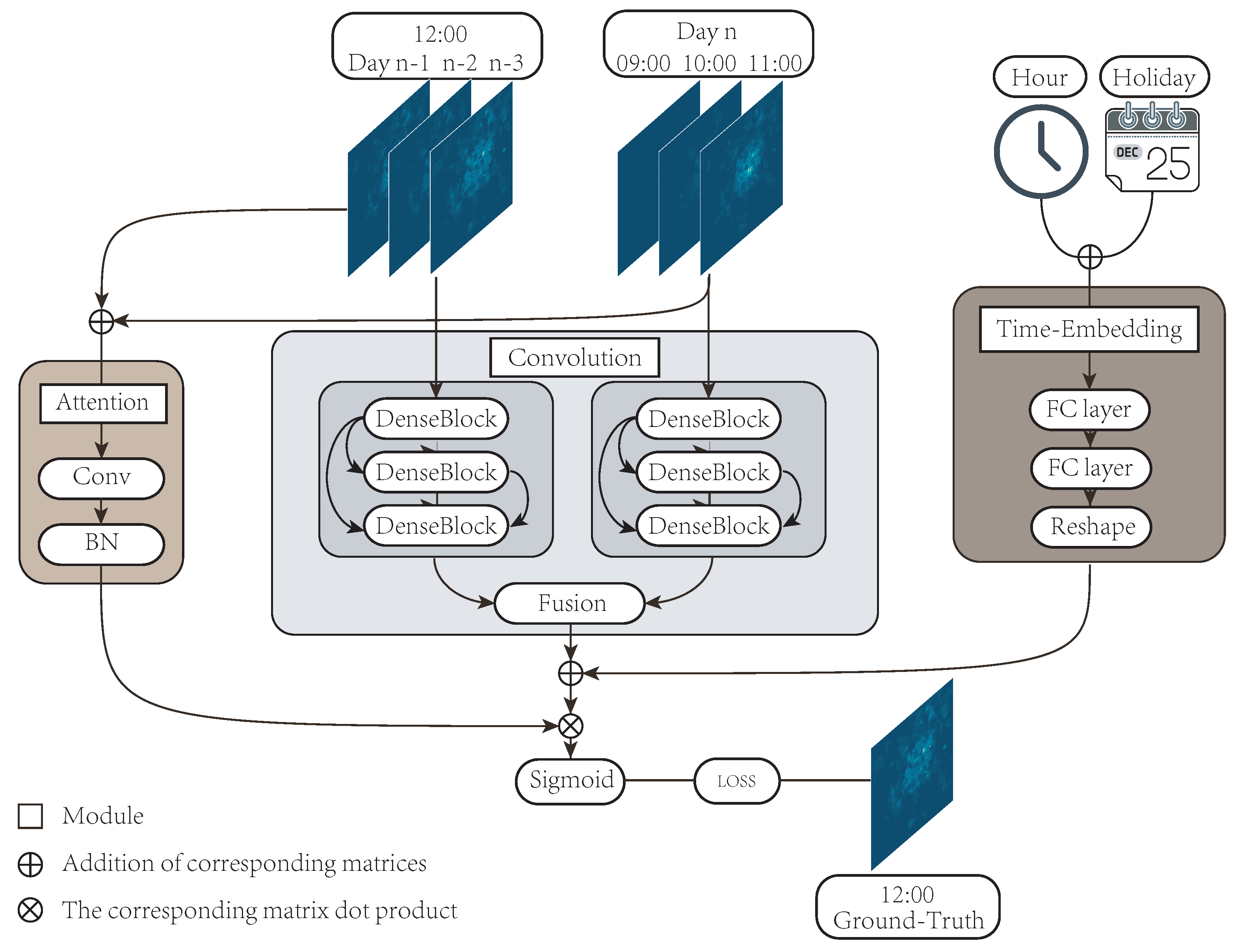

3.1. Model Framework Introduction

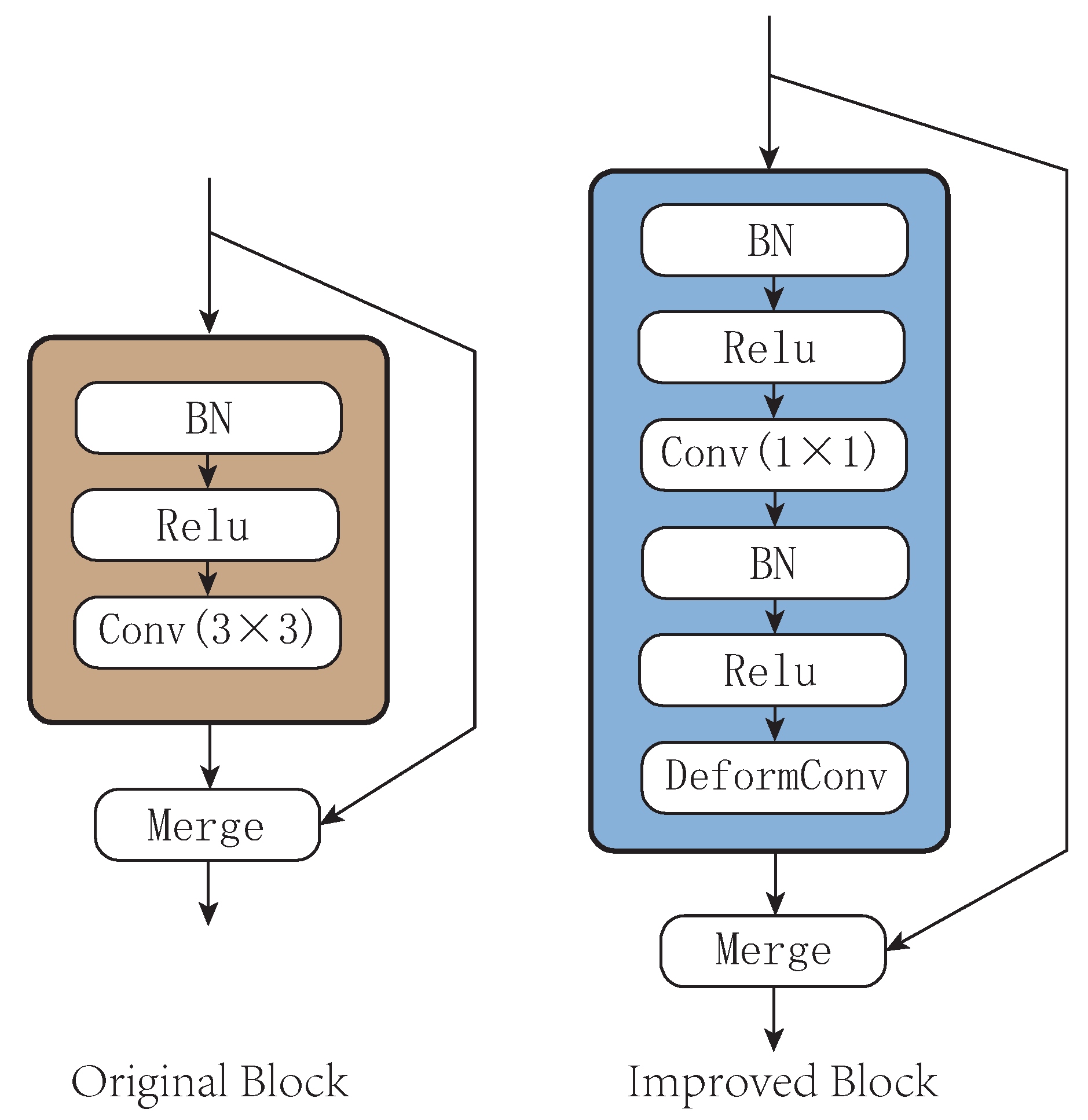

3.2. Convolution Module

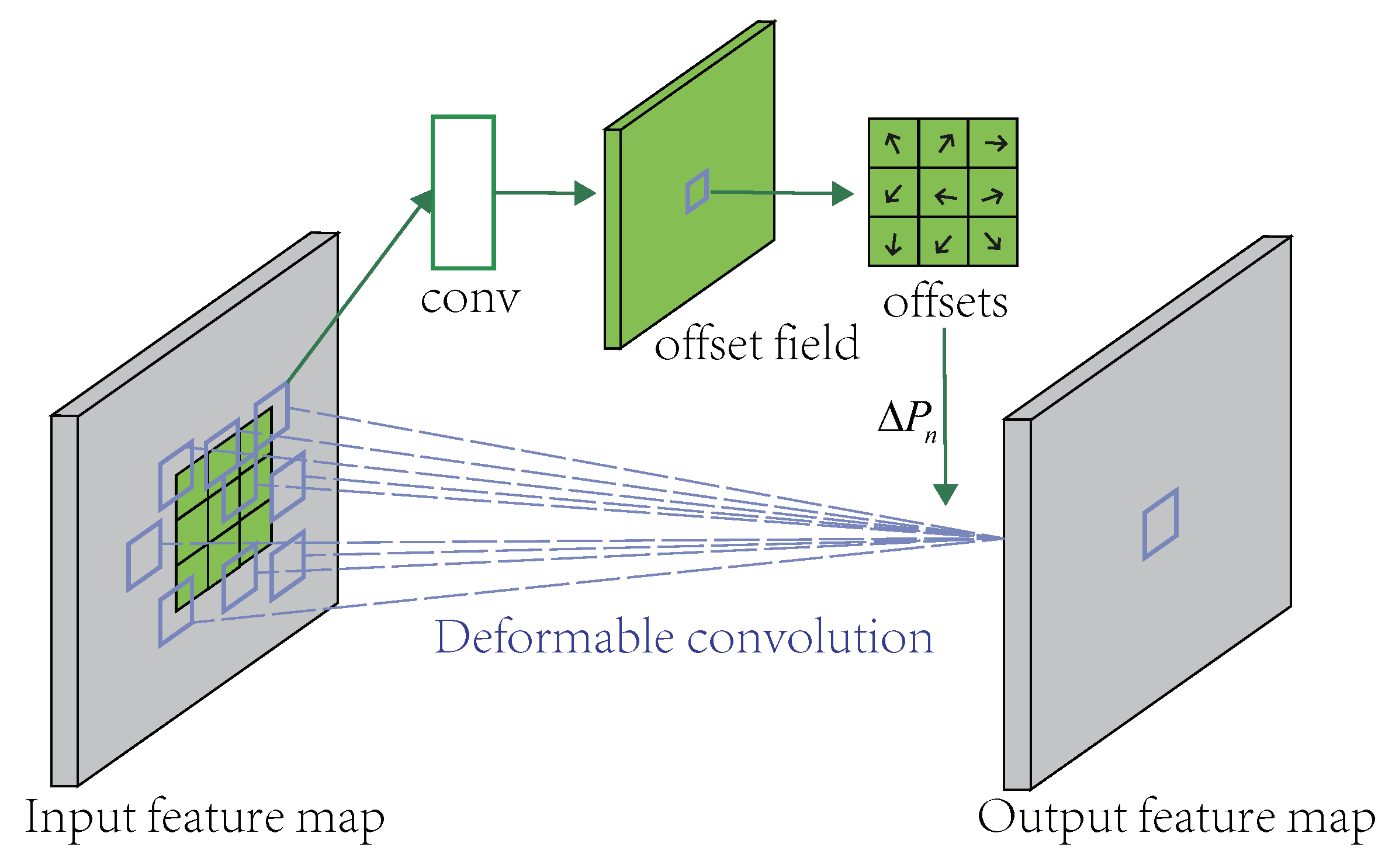

3.3. Deformable Convolution

3.4. Time Embedding Module

- The day is divided into 24 one-hour segments, and the time attribute of each sample is represented by a 24-dimensional one-hot vector (Hour_of_Day).

- Holidays (including weekends and Italian festivals) are represented by a one-dimensional vector (Is_of_Holiday) that takes the value 1 if the day is a holiday and 0 if it is a working day.
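The two time attributes listed above can be sketched as a single 25-dimensional feature vector. This is a minimal illustration rather than the authors' implementation: the function name `time_features`, the `ITALIAN_HOLIDAYS` set, and the example dates are assumptions introduced here, and how the vector is fed into the network is not shown.

```python
from datetime import date, datetime

import numpy as np

# Hypothetical holiday calendar (assumption): a few fixed-date Italian
# public holidays, listed only to make the example runnable.
ITALIAN_HOLIDAYS = {
    date(2013, 11, 1),
    date(2013, 12, 8),
    date(2013, 12, 25),
    date(2013, 12, 26),
}


def time_features(ts: datetime) -> np.ndarray:
    """Return [Hour_of_Day (24-dim one-hot), Is_of_Holiday (1-dim)]."""
    # Hour_of_Day: one-hot vector with a 1 at the index of the current hour.
    hour_of_day = np.zeros(24, dtype=np.float32)
    hour_of_day[ts.hour] = 1.0

    # Is_of_Holiday: 1 for weekends and listed holidays, 0 for working days.
    is_weekend = ts.weekday() >= 5  # Monday is 0, so 5 and 6 are Sat/Sun
    is_holiday = float(is_weekend or ts.date() in ITALIAN_HOLIDAYS)

    return np.concatenate([hour_of_day, [is_holiday]]).astype(np.float32)


# A Monday morning sample: hour index 9 is set, holiday flag is 0.
features = time_features(datetime(2013, 11, 4, 9, 30))
print(features.shape, features[9], features[24])
```

Keeping the hour as a one-hot vector rather than a single integer avoids implying that hour 23 is "far" from hour 0, which a plain numeric encoding would suggest.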

3.5. Attention Module

4. Experimental Results and Analysis

4.1. Experimental Process and Parameter Setting

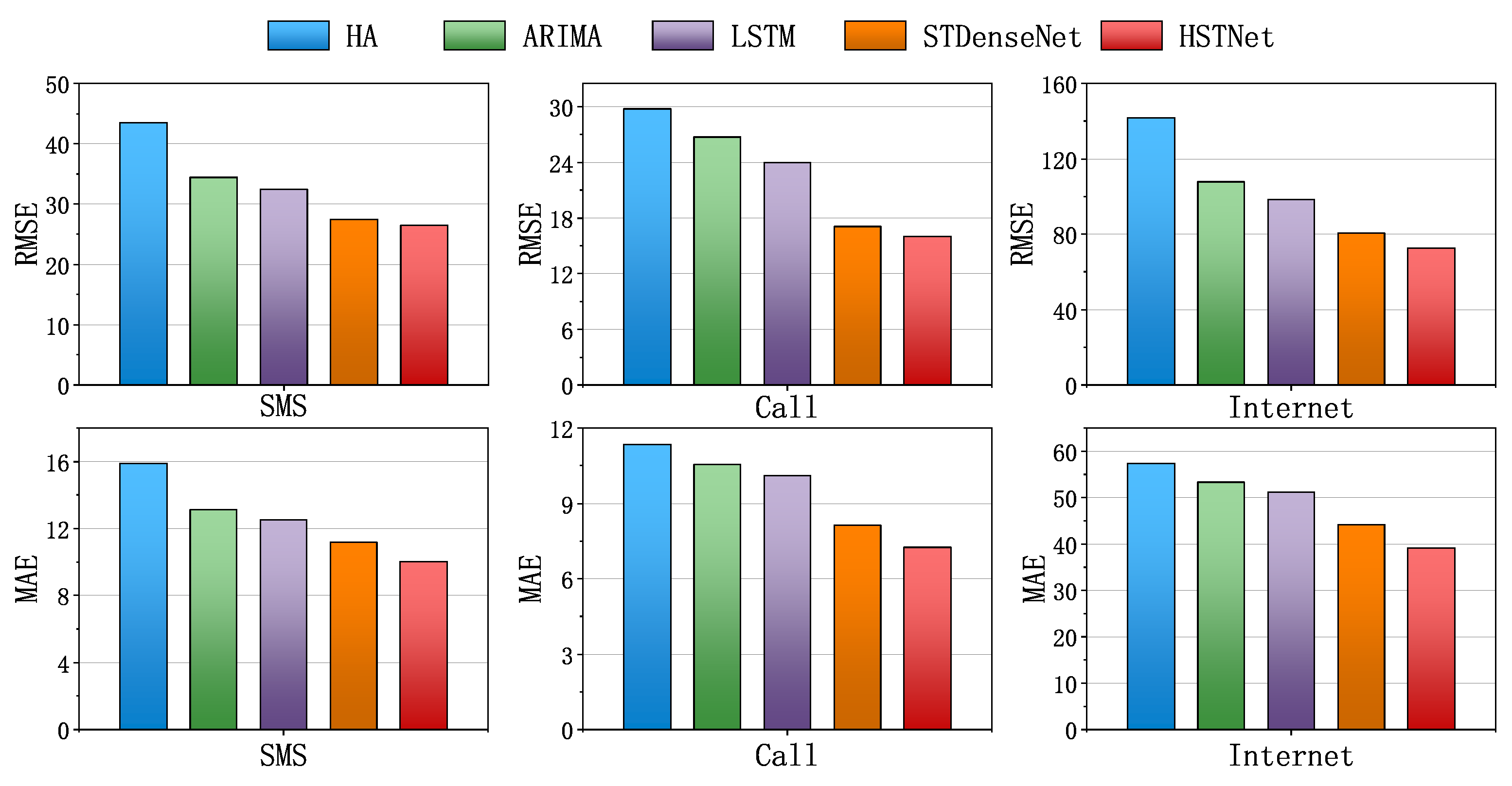

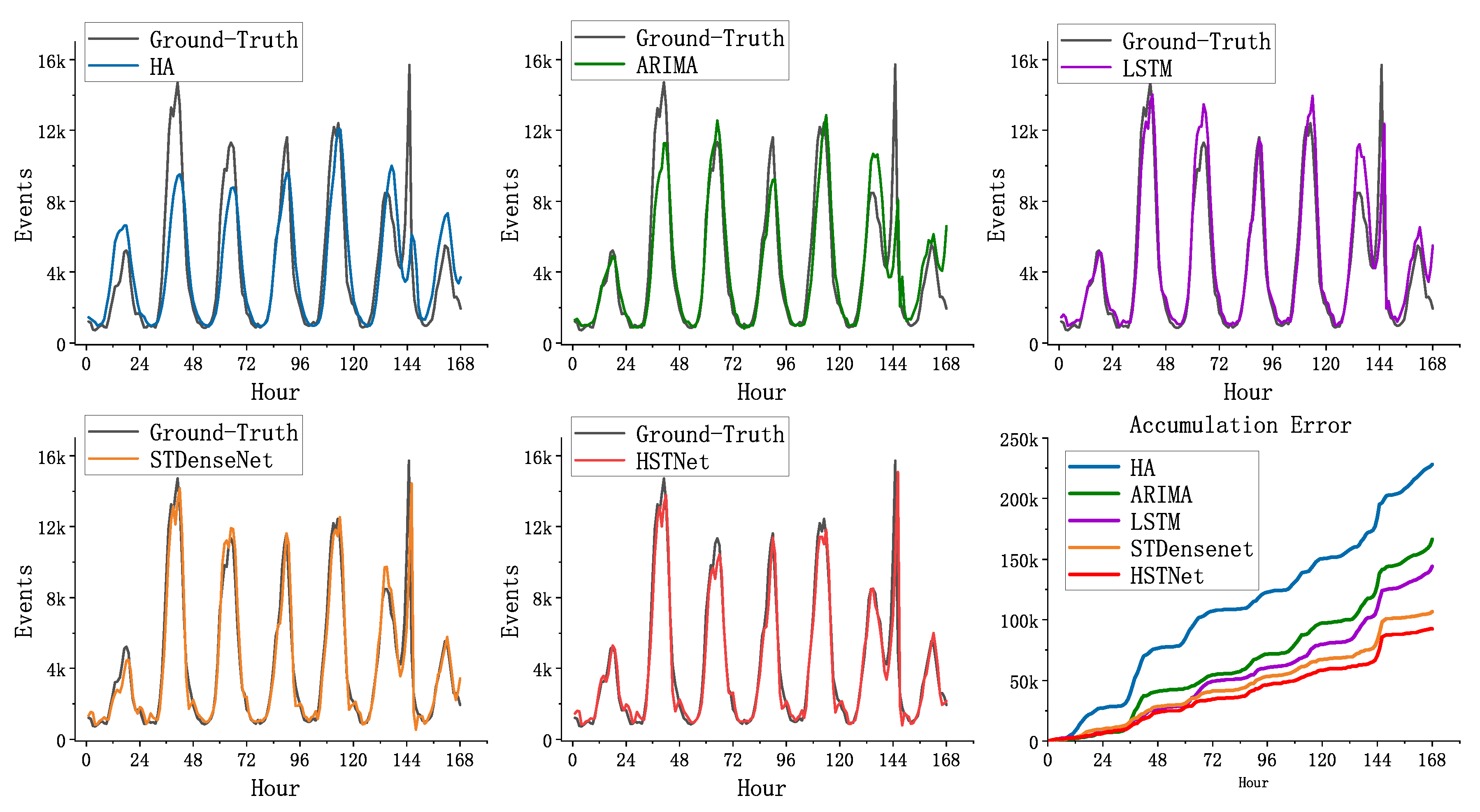

4.2. Experiment Analysis

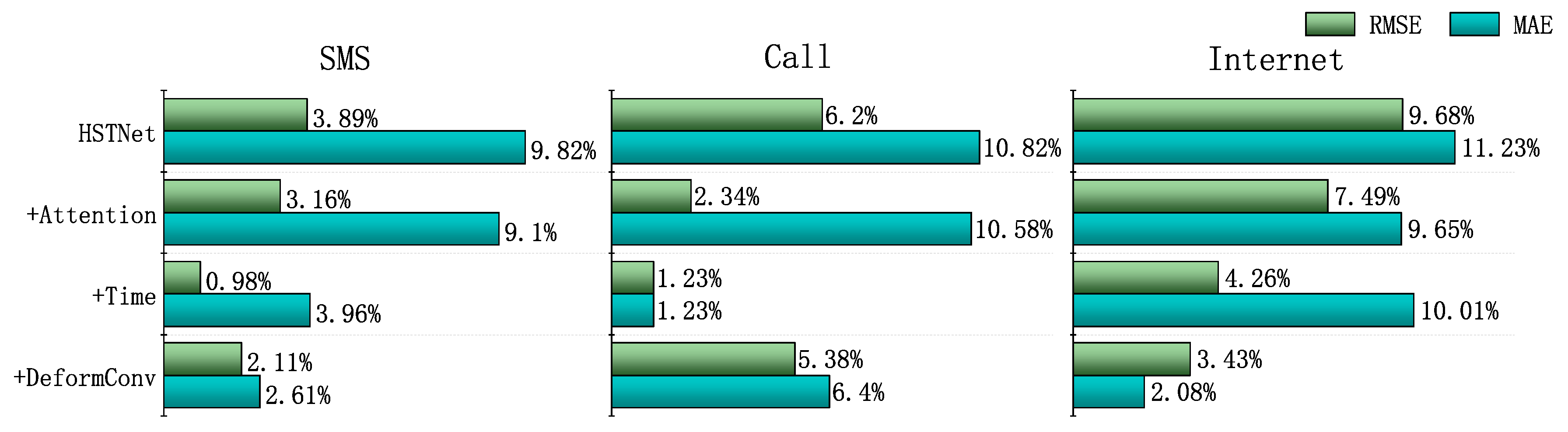

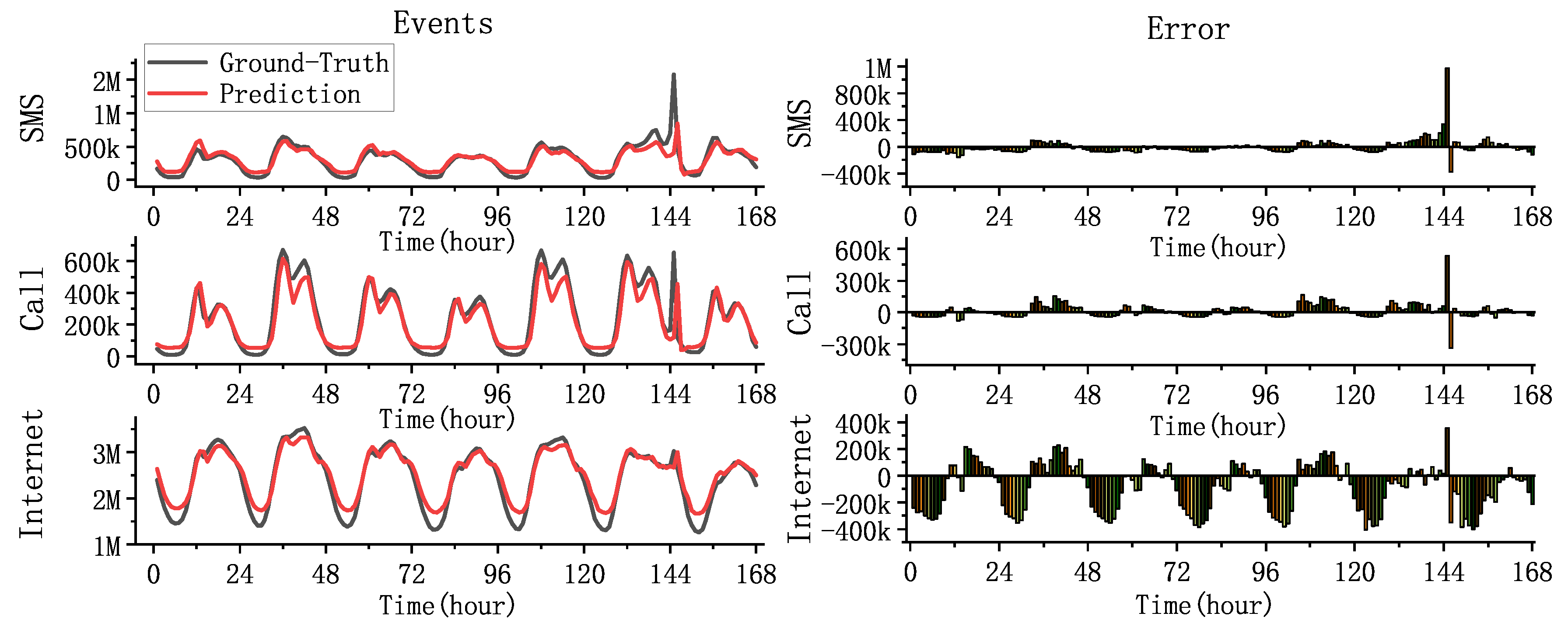

4.3. Experimental Result

5. Conclusions

- This work used DenseNet with deformable convolution to extract the spatiotemporal characteristics of traffic.

- We introduced hour and holiday information to aid traffic forecasting.

- We proposed an attention module based on historical data to adjust the weight of the predicted traffic.

- The model does not respond well to fluctuations caused by emergencies.

- The forecast performance for large-scale traffic volume (the total traffic volume of the entire city) needs to be improved.

- Many external factors that we did not consider could affect changes in cellular traffic.

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Dataset | Model | MAE | RMSE |
|---|---|---|---|
| SMS | STDenseNet | 11.10 | 27.49 |
| | +DeformConv | 10.81 | 26.91 |
| | +Time-property | 10.66 | 27.22 |
| | +Attention | 10.09 | 26.62 |
| | HSTNet | 10.01 | 26.42 |
| Call | STDenseNet | 8.13 | 17.10 |
| | +DeformConv | 7.61 | 16.18 |
| | +Time-property | 8.03 | 16.89 |
| | +Attention | 7.27 | 16.70 |
| | HSTNet | 7.25 | 16.04 |
| Internet | STDenseNet | 44.15 | 80.51 |
| | +DeformConv | 43.23 | 77.75 |
| | +Time-property | 39.73 | 77.08 |
| | +Attention | 39.89 | 74.48 |
| | HSTNet | 39.19 | 72.72 |
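To make the MAE and RMSE columns above easier to read, the sketch below gives the standard definitions of both metrics and computes the relative error reduction of HSTNet over the STDenseNet baseline. The numbers are copied from the table; the helper names (`mae`, `rmse`, `relative_reduction`) and the percentage formulation are assumptions made here for illustration, not something stated in the article.

```python
import numpy as np


def mae(y_true, y_pred) -> float:
    """Mean absolute error between ground truth and prediction."""
    diff = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return float(np.mean(np.abs(diff)))


def rmse(y_true, y_pred) -> float:
    """Root mean squared error between ground truth and prediction."""
    diff = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean(diff ** 2)))


def relative_reduction(baseline: float, improved: float) -> float:
    """Percentage by which `improved` lowers the error of `baseline`."""
    return 100.0 * (baseline - improved) / baseline


# (MAE, RMSE) pairs copied from the table above.
RESULTS = {
    "SMS": {"STDenseNet": (11.10, 27.49), "HSTNet": (10.01, 26.42)},
    "Call": {"STDenseNet": (8.13, 17.10), "HSTNet": (7.25, 16.04)},
    "Internet": {"STDenseNet": (44.15, 80.51), "HSTNet": (39.19, 72.72)},
}

for dataset, rows in RESULTS.items():
    base_mae, base_rmse = rows["STDenseNet"]
    hst_mae, hst_rmse = rows["HSTNet"]
    print(
        f"{dataset}: MAE -{relative_reduction(base_mae, hst_mae):.2f}%, "
        f"RMSE -{relative_reduction(base_rmse, hst_rmse):.2f}%"
    )
```

Because RMSE squares each residual before averaging, it penalizes large individual errors more heavily than MAE, so the two columns need not improve by the same proportion.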

| Model | Time | Parameters |
|---|---|---|
| STDenseNet | 22 s | 239K |
| +DeformConv | 34 s | 170K |
| +Time-property | 23 s | 350K |
| +Attention | 22 s | 243K |
| HSTNet | 35 s | 284K |

| Dataset \ Input Dimension | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| SMS | 27.51 | 27.18 | 26.42 | 26.83 |
| Call | 16.86 | 16.23 | 16.04 | 16.62 |
| Internet | 80.10 | 75.38 | 72.72 | 78.32 |
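The input-dimension comparison above can be read off programmatically by taking, for each dataset, the dimension with the lowest error. This is a small sketch over the values copied from the table; the variable names are assumptions, and the table itself does not name the metric, although the values at dimension 3 coincide with the HSTNet RMSE figures in the ablation table.

```python
# Error values copied from the table above, indexed by input dimension 1..4.
ERRORS = {
    "SMS": [27.51, 27.18, 26.42, 26.83],
    "Call": [16.86, 16.23, 16.04, 16.62],
    "Internet": [80.10, 75.38, 72.72, 78.32],
}

# For each dataset, pick the input dimension with the smallest error.
best_dimension = {
    dataset: min(range(1, len(values) + 1), key=lambda d: values[d - 1])
    for dataset, values in ERRORS.items()
}

for dataset, dim in best_dimension.items():
    print(f"{dataset}: best input dimension = {dim}")
```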

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhang, D.; Liu, L.; Xie, C.; Yang, B.; Liu, Q. Citywide Cellular Traffic Prediction Based on a Hybrid Spatiotemporal Network. Algorithms 2020, 13, 20. https://doi.org/10.3390/a13010020

Zhang D, Liu L, Xie C, Yang B, Liu Q. Citywide Cellular Traffic Prediction Based on a Hybrid Spatiotemporal Network. Algorithms. 2020; 13(1):20. https://doi.org/10.3390/a13010020

Chicago/Turabian StyleZhang, Dehai, Linan Liu, Cheng Xie, Bing Yang, and Qing Liu. 2020. "Citywide Cellular Traffic Prediction Based on a Hybrid Spatiotemporal Network" Algorithms 13, no. 1: 20. https://doi.org/10.3390/a13010020

APA StyleZhang, D., Liu, L., Xie, C., Yang, B., & Liu, Q. (2020). Citywide Cellular Traffic Prediction Based on a Hybrid Spatiotemporal Network. Algorithms, 13(1), 20. https://doi.org/10.3390/a13010020