When 5G Meets Deep Learning: A Systematic Review

Abstract

1. Introduction

2. Systematic Review

2.1. Activity 1: Identify the Need for the Review

2.2. Activity 2: Define Research Questions

- RQ. 1: What are the main problems deep learning is being used to solve?

- RQ. 2: What are the main learning types used to solve 5G problems (supervised, unsupervised, and reinforcement)?

- RQ. 3: What are the main deep learning techniques used in 5G scenarios?

- RQ. 4: How is the data used to train the deep learning models being gathered or generated?

- RQ. 5: What are the main outstanding research challenges in the 5G and deep learning field?

2.3. Activity 3: Define Search String

2.4. Activity 4: Define Sources of Research

2.5. Activity 5: Define Criteria for Inclusion and Exclusion

2.6. Activity 6: Identify Primary Studies

2.7. Activity 7: Extract Relevant Information

2.8. Activity 8: Present an Overview of the Studies

2.9. Activity 9: Present the Results of the Research Questions

3. Results

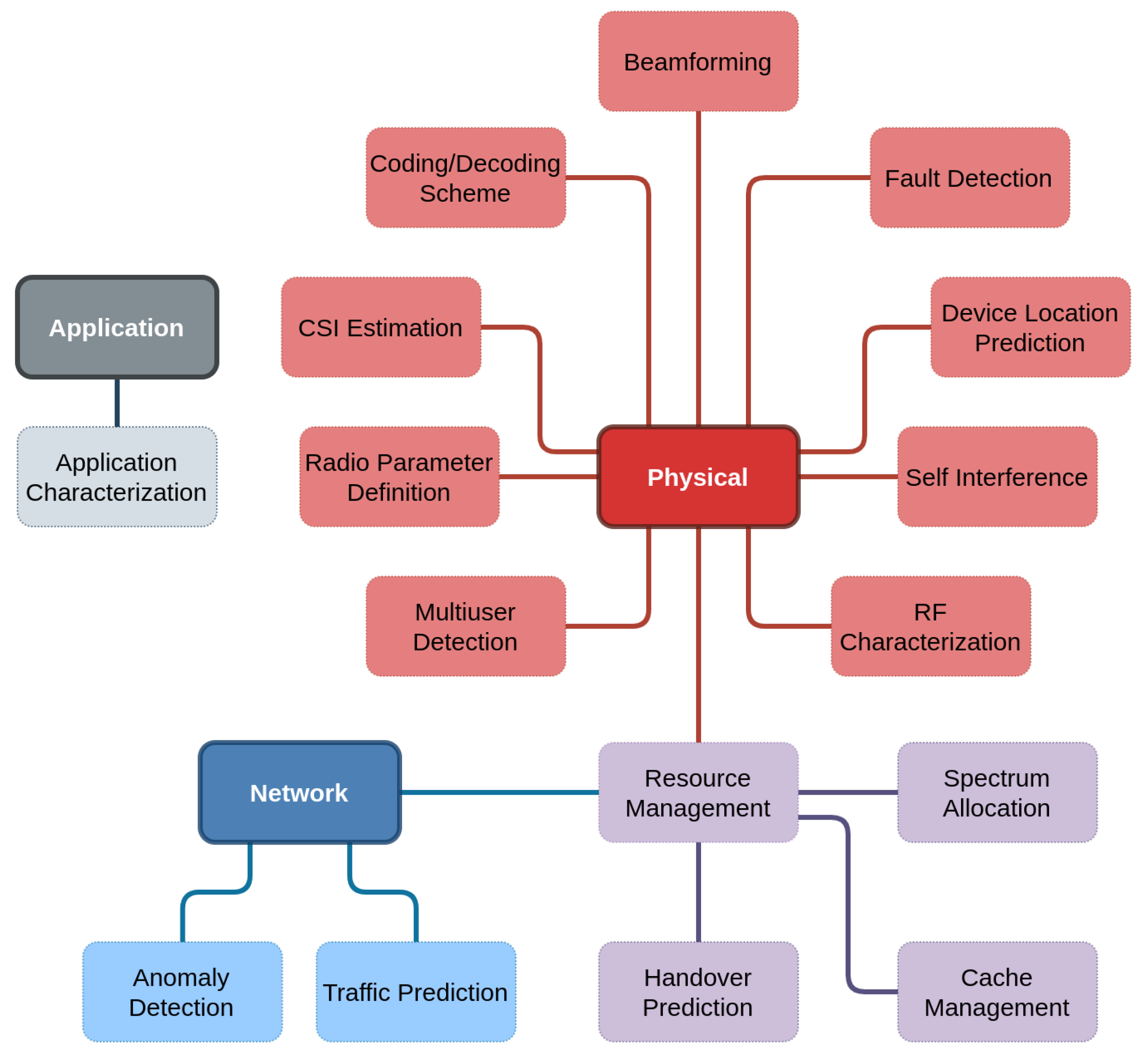

3.1. What Are the Main Problems Deep Learning Is Being Used to Solve?

3.1.1. Channel State Information Estimation

3.1.2. Coding/Decoding Scheme Representation

3.1.3. Fault Detection

3.1.4. Device Location Prediction

3.1.5. Anomaly Detection

3.1.6. Traffic Prediction

3.1.7. Handover Prediction

3.1.8. Cache Optimization

3.1.9. Resource Allocation/Management

3.1.10. Application Characterization

3.1.11. Other Problems

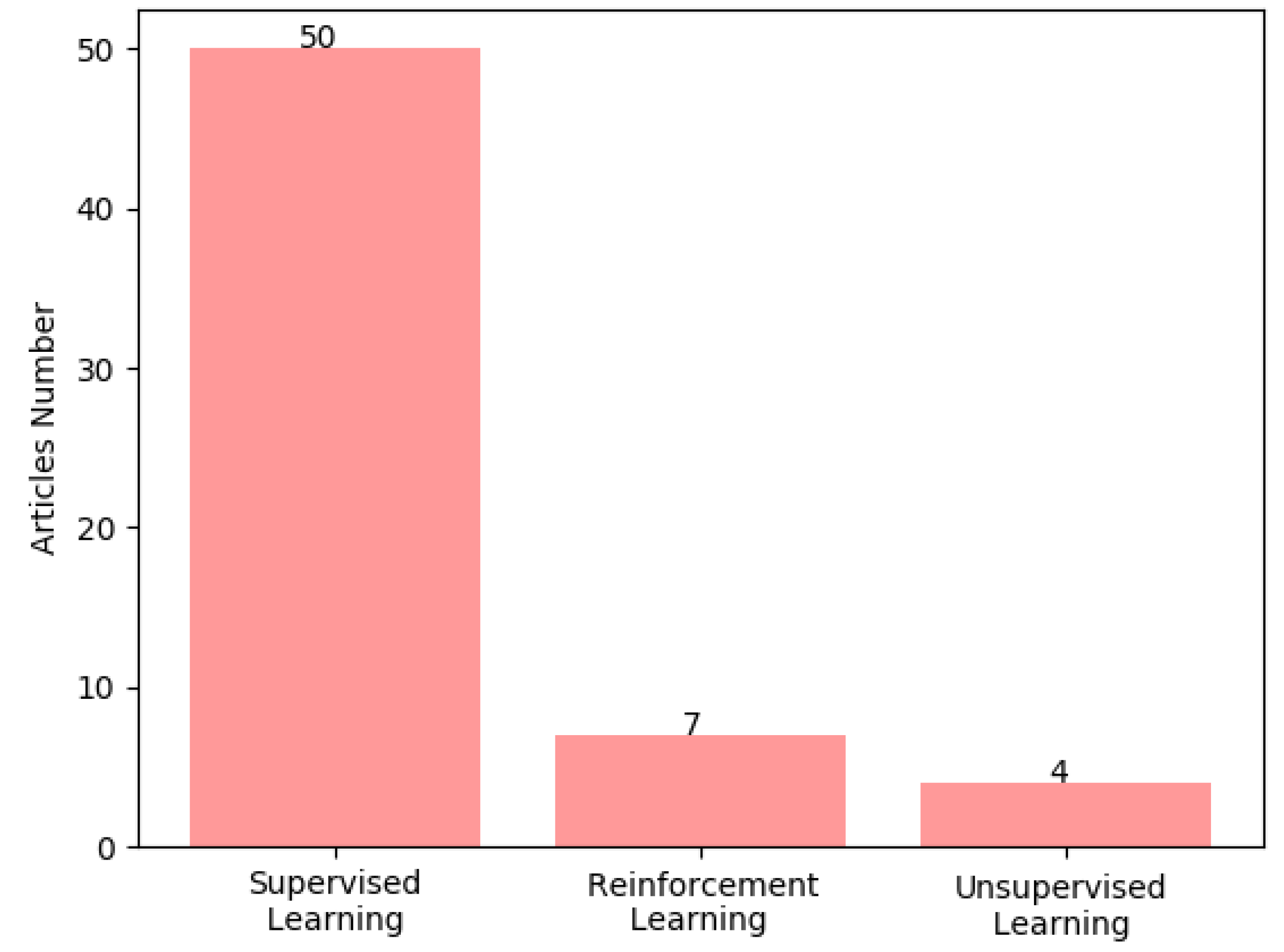

3.2. What Are the Main Types of Learning Techniques Used to Solve 5G Problems?

3.2.1. Supervised Learning

3.2.2. Reinforcement Learning

3.2.3. Unsupervised Learning

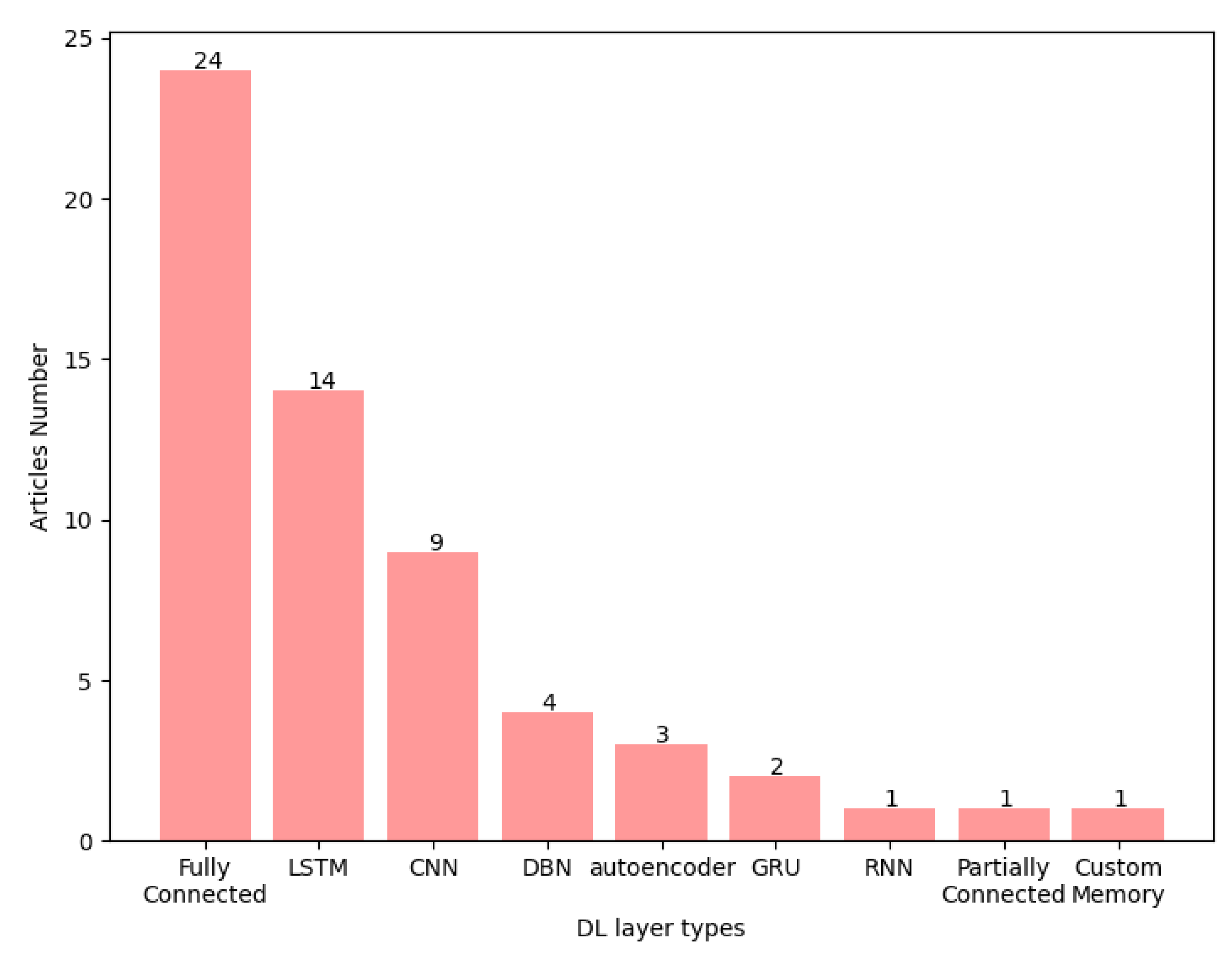

3.3. What Are the Main Deep Learning Techniques Used in 5G Scenarios?

3.3.1. Fully Connected Models

3.3.2. Recurrent Neural Networks

3.3.3. Convolutional Neural Networks (CNN)

3.3.4. Deep Belief Networks (DBN)

3.3.5. Autoencoder

3.3.6. Combining Models

3.4. How Was the Data Used to Train the Deep Learning Models Gathered/Generated?

3.4.1. Telecom Italia Big Challenge Dataset

3.4.2. CTU-13 Dataset

3.4.3. 4G LTE Dataset with Channel from University College Cork (UCC)

3.5. What Are the Most Common Scenarios Used to Evaluate the Integration between 5G and Deep Learning?

3.6. What Are the Main Research Challenges in the 5G and Deep Learning Field?

3.7. Discussions

4. Final Considerations

Author Contributions

Funding

Conflicts of Interest

Appendix A

| Article | Layer Type | Learning Type | Data Source | Paper Objective |

|---|---|---|---|---|

| [56] | fully connected | supervised | simulation | the paper used a deep learning approach to reduce the network energy consumption and the transmission delay by optimizing the placement of content in heterogeneous networks |

| [61] | DBN | supervised | simulation | a deep learning model was used to find an approximately optimal joint resource allocation strategy that minimizes the energy consumption |

| [76] | fully connected | supervised | synthetic | the paper proposed a deep learning model for the multiuser detection problem in SCMA scenarios |

| [25] | autoencoder | unsupervised | simulation | the paper proposed an autoencoder-based scheme, called PRNet, to reduce PAPR in OFDM systems. The model learns the constellation mapping and demapping of symbols on each subcarrier in an OFDM system while minimizing BER |

| [19] | residual network | supervised | synthetic | a deep learning model was proposed for CSI estimation in FBMC systems. The traditional CSI estimation, equalization, and demapping modules are replaced by a deep learning model |

| [67] | not described | supervised | real data | the paper proposed a solution to optimize self-organization in LTE networks. The solution, called APP-SON, performs the optimization based on the characteristics of the applications |

| [70] | a memory with custom memory | supervised and unsupervised | not described | the work proposed a deep learning-based digital cancellation scheme that eliminates linear and non-linear interference |

| [38] | DBN | supervised | real data | the paper proposed a deep learning-based solution for anomaly detection on 5G network flows |

| [26] | fully connected and LSTM | supervised | not described | the authors proposed a deep learning model for channel decoding. The model is based on polar and LDPC mechanisms to decode signals at the receiver devices |

| [59] | LSTM | supervised | simulation | the authors proposed a machine learning-based solution to predict the medium usage for network slices in 5G environments meeting some SLA requirements |

| [34] | CNN | supervised | simulation | the authors proposed a system to convert the received millimeter wave radiation into the device’s position using a CNN |

| [71] | BiLSTM | supervised | real data | a BiLSTM model was used to represent the effects of non-linear PAs, which are a promising technology for 5G. The authors defined the map between the digital baseband stimuli and the response as a non-linear function |

| [6] | CNN | supervised | real data | the authors proposed a framework based on CNN models to predict traffic in a city environment taking into account spatial and temporal aspects |

| [21] | fully connected | supervised | not described | the authors proposed a deep learning scheme to represent constellation-domain multiplexing at the transmitter. This scheme was used to parameterize the bit-to-symbol mapping as well as the symbol detector |

| [23] | autoencoder | supervised | not described | the paper proposed a deep learning model to automatically learn the SCMA codebook. The codebook is responsible for encoding the transmitted bits into multidimensional codewords. Thus, the proposed model maps the bits into a resource (codebook) after the transmission and decodes the received signal into bits at the receiver |

| [51] | LSTM | supervised | simulation | the paper proposed a deep learning-based scheme to avoid handover failures based on early prediction. This scheme can be used to evaluate the signal condition and perform the handover before a failure happens |

| [7] | CNN and LSTM | supervised | real data | the authors proposed an online framework, called OCEAN, to estimate CSI based on deep learning models. OCEAN is able to find the CSI for a mobile device during a period at a specific place |

| [3] | not described | deep learning and reinforcement learning | not described | the authors proposed a beamforming scheme based on deep reinforcement learning. The problem addressed was the beamforming performance in dynamic environments. Depending on the number of users concentrated in an area, the beamforming configuration either produces a more directed signal or sends a signal with wide coverage. The proposed solution is composed of three different models. The first model generates synthetic user mobility patterns. The second model tries to respond with a more appropriate antenna diagram (beamforming configuration). The third model evaluates the performance of the results obtained by the other models and returns a reward to them. The authors did not report any experiments on the proposed scheme |

| [15] | fully connected | supervised | simulation | the authors proposed a deep learning scheme for DD-CE in MIMO systems. The core part of DD-CE is the channel prediction, where the “current channel state is estimated base on the previous estimate and detected symbols”. Deep learning can avoid the need for complex mathematical models for Doppler rate estimation |

| [16] | fully connected | supervised | simulation | the authors combined deep learning and superimposed coding techniques for CSI feedback. In a traditional superimposed coding-based CSI feedback system, the main goal of a base station is to recover downlink CSI and detect user data |

| [63] | fully connected | supervised | simulation | the authors proposed an algorithm to dynamically allocate carriers in MCPA, taking into account the energy efficiency and the implementation complexity. The main idea is to minimize the total power consumption by finding the optimal carrier-to-MCPA allocation. To solve this problem, two approaches were used: convex relaxation and deep learning |

| [29] | CNN | supervised | not described | the authors presented a deep learning model for fault detection and fault location in wireless communication systems, focusing on mmWave systems |

| [44] | 3D CNN | supervised | real data | the authors proposed a deep learning-based solution for allocating resources in advance based on data analytics. The solution, called DeepCog, receives as input measurement data of a specific network slice, predicts the network flow, and allocates resources in the data center to meet the demand |

| [17] | fully connected and RNN | supervised | simulation | the authors presented a systematic review of CSI and then some evaluations using deep learning models. The solutions presented in the systematic review have a focus on “linear correlations such as sparse spatial steering vectors or frequency response, and Gauss-Markov time correlations” |

| [36] | LSTM | supervised | simulation | the authors proposed a deep learning-based algorithm for the handover mechanism. The model is used to predict the user mobility and anticipate the handover preparation. The algorithm estimates the future position of a user based on its historical data |

| [62] | fully connected | deep learning and reinforcement learning | simulation | the authors proposed a solution to improve the energy efficiency of user equipment in MEC environments in 5G. In the work, two different types of applications were considered: URLLC and high data rate delay tolerant applications. The solution uses a “digital twin” of the real network to train the neural network models |

| [11] | fully connected | supervised | synthetic (through genetic algorithm) | the authors proposed a deep learning model for resource allocation to maximize the network throughput by performing joint resource allocation (i.e., both power and channel). Firstly, a review of deep learning techniques applied to wireless resource allocation problems was presented. Afterwards, a deep learning model was presented. This model takes as input the CQI and the location indicator (position of the user with respect to the base stations) of users for all base stations and predicts the power and sub-band allocations |

| [68] | fully connected | supervised | simulation | the work proposed a pilot allocation scheme based on deep learning for massive MIMO systems. The model was used to learn the relationship between the users’ location and the near-optimal pilot assignment with low computational complexity |

| [65] | fully connected | supervised | not described | the authors proposed a deep learning model for smart communication systems in high-density D2D mmWave environments using beamforming. The model can be used to predict the best relay for relaying data, taking into account several reliability metrics for selecting the relay node (e.g., another device or a base station) |

| [64] | fully connected | supervised | simulation | the authors proposed a deep learning-based solution for downlink CoMP in 5G environments. The model receives as input some physical layer measurements from the connected user equipment and “formulates a modified CoMP trigger function to enhance the downlink capacity”. The output of the model is the decision to enable/disable the CoMP mechanism |

| [22] | fully connected | supervised | not described | the authors proposed a deep learning-based scheme for precoding and SIC decoding in the MIMO-NOMA system |

| [57] | LSTM | supervised and reinforcement learning | simulation | the authors proposed a framework for resource scheduling based on deep learning and reinforcement learning. The main goal is to minimize the resource consumption while guaranteeing the required performance isolation degree. An LSTM model and reinforcement learning are used in cooperation to do this task. The LSTM model was used to predict the traffic based on the historical data. |

| [45] | LSTM, 3D CNN, and CNN+LSTM | supervised | real data | the authors proposed a deep learning-based multitask learning approach to predict data flow in 5G environments. The model is able to predict the minimum, maximum, and average traffic (multitask learning) of the next hour based on the traffic of the current hour. |

| [30] | DBN | unsupervised and supervised | real data | the authors proposed a DBN model for fault location in optical fronthaul networks. The proposed model identifies faults and false alarms in alarm information, considering single-link connections |

| [41] | fully connected | supervised | real data | the paper proposed a deep learning model to detect anomalies in the network traffic, considering two types of behavior as network anomalies: sleeping cells and soared traffic. |

| [47] | LSTM | supervised | simulation | the authors proposed a deep learning model to predict traffic in base stations in order to avoid flow congestion in 5G ultra dense networks |

| [52] | fully connected and LSTM | supervised | real data | the authors proposed an analytical model for holistic handover cost and a deep learning model for handover prediction. The holistic handover cost model takes into account signaling overhead, latency, call dropping, and radio resource wastage |

| [48] | LSTM | supervised | real data | a system model that combines mobile edge computing and mobile data offloading was proposed in the paper. In order to improve the system performance, a deep learning model was proposed to predict the traffic and decide if the offloading can be performed on the base station |

| [55] | - | reinforcement learning | simulation | the authors proposed a network architecture that integrates MEC and C-RAN. In order to reduce the latency, a caching mechanism can be adopted in the MEC. Thus, reinforcement learning was used to maximize the cache hit rate and the cache use |

| [46] | LSTM | supervised | real data | the paper proposed a framework to cluster RRHs and map them into BBU pools using predicted mobile traffic data. Firstly, the future traffic of the RRHs is estimated using a deep learning model based on the historical traffic data; then these RRHs are grouped according to their complementarity |

| [40] | DBN | supervised | real data | the paper proposes a deep learning-based approach to analyze network flows and detect network anomalies. This approach executes in a MEC in 5G networks. A system based on NFV and SDN was proposed to detect and react to anomalies in the network |

| [77] | - | reinforcement learning | simulation | the paper proposed two schemes based on Q-learning to choose the best downlink and uplink configuration in dynamic TDD systems. The main goal is to optimize the MOS, which is a QoE measure that corresponds to a better user experience. |

| [35] | CNN, LSTM, and temporal convolutional network | supervised | simulation | the authors proposed a deep learning-based approach to predict the user position in mmWave systems based on beamformed fingerprints |

| [2] | LSTM | supervised and reinforcement learning | simulation | the authors dealt with the physical-layer control problem. A reinforcement learning-based solution was used to learn the optimal physical-layer control parameters of different scenarios. The proposed scheme uses reinforcement learning to choose the best configuration for the scenario. In the proposed scheme, a radio designer needs to specify the network configuration, which varies according to the scenario specification |

| [58] | X-LSTM | supervised | real data | the paper proposed models to predict the amount of PRBs available to allocate network slices in 5G networks |

| [66] | fully connected | supervised | real data | the authors proposed an algorithm to achieve self-optimization in LTE and 5G networks through wireless analysis. The deep learning model is used to perform a regression to derive the relationship between the engineering parameters and the performance indicators |

| [10] | fully connected | supervised | real data | the paper proposed a deep learning-based solution to detect anomalies in 5G networks powered by MEC. The model detects sleeping cell events and soared traffic as anomalies |

| [60] | fully connected | supervised | simulation | the paper proposed a framework to optimize the energy consumption of NOMA systems in a resource allocation problem. |

| [72] | fully connected | supervised | simulation | the paper proposed an auction mechanism for spectrum sharing using deep learning models in order to improve the channel capacity |

| [73] | fully connected | supervised and reinforcement learning | simulation | the paper proposed a deep reinforcement learning mechanism for a packet scheduler in multi-path networks. |

| [5] | Generative adversarial networks (GAN) with LSTM and CNN layers | supervised | real data | the paper proposed a deep learning-based framework to address the problem of network slicing in mobile networks. The deep learning model is used to predict network flow in order to allocate resources |

| [27] | Autoencoder with Bi-GRU layers | supervised | not described | the paper proposed a deep learning-based solution for channel coding in low-latency scenarios. The idea was to create a robust and adaptable mechanism for generic codes for future communications |

| [74] | fully connected | supervised | synthetic | the paper proposed a deep learning model for physical layer security. The model was used to optimize the value of the power allocation factor in a secure communication system |

| [75] | CNN and fully connected | supervised | simulation | the paper proposed a radio propagation model based on deep learning. The model maps a geographical area to the radio propagation (path loss) |

| [24] | partially and fully connected layers | unsupervised | not described | a deep learning model was proposed to represent a MU-SIMO system. The main purpose is to reduce the difference between the signal transmitted and the signal received |

| [43] | GRU | supervised | real data | the paper proposed a deep learning-based framework for traffic prediction in order to enable proactive adjustment in network slices |
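
The table above can be summarized per column, which is how the answers to the research questions on learning types and data sources are typically aggregated. The following is a minimal sketch, assuming the rows are held as plain tuples. The `ROWS` list is only an illustrative subset of eight entries copied from Appendix A, and the `tally` helper is a hypothetical name, not something defined in the review.

```python
from collections import Counter

# Illustrative subset of Appendix A rows (not the full table):
# (article, layer type, learning type, data source)
ROWS = [
    ("[56]", "fully connected", "supervised", "simulation"),
    ("[61]", "DBN", "supervised", "simulation"),
    ("[25]", "autoencoder", "unsupervised", "simulation"),
    ("[38]", "DBN", "supervised", "real data"),
    ("[59]", "LSTM", "supervised", "simulation"),
    ("[6]", "CNN", "supervised", "real data"),
    ("[77]", "-", "reinforcement learning", "simulation"),
    ("[43]", "GRU", "supervised", "real data"),
]


def tally(rows, column):
    """Count how many rows share each value in the given column index."""
    return Counter(row[column] for row in rows)


# Column 2 is the learning type, column 3 is the data source.
learning_counts = tally(ROWS, 2)
source_counts = tally(ROWS, 3)

print(learning_counts.most_common())
print(source_counts.most_common())
```

Extending `ROWS` to every entry of the table would give the full distribution; the counts printed here only describe the eight sampled rows.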

References

- Cisco. Global—2021 Forecast Highlights. 2016. Available online: https://www.cisco.com/c/dam/m/en_us/solutions/service-provider/vni-forecast-highlights/pdf/Global_2021_Forecast_Highlights.pdf (accessed on 19 August 2020).

- Joseph, S.; Misra, R.; Katti, S. Towards self-driving radios: Physical-layer control using deep reinforcement learning. In Proceedings of the 20th International Workshop on Mobile Computing Systems and Applications, Santa Cruz, CA, USA, 27–28 February 2019; pp. 69–74.

- Maksymyuk, T.; Gazda, J.; Yaremko, O.; Nevinskiy, D. Deep Learning Based Massive MIMO Beamforming for 5G Mobile Network. In Proceedings of the 2018 IEEE 4th International Symposium on Wireless Systems within the International Conferences on Intelligent Data Acquisition and Advanced Computing Systems (IDAACS-SWS), Lviv, Ukraine, 20–21 September 2018; pp. 241–244.

- Arteaga, C.H.T.; Anacona, F.B.; Ortega, K.T.T.; Rendon, O.M.C. A Scaling Mechanism for an Evolved Packet Core based on Network Functions Virtualization. IEEE Trans. Netw. Serv. Manag. 2019, 17, 779–792.

- Gu, R.; Zhang, J. GANSlicing: A GAN-Based Software Defined Mobile Network Slicing Scheme for IoT Applications. In Proceedings of the 2019 IEEE International Conference on Communications (ICC), Shanghai, China, 20–24 May 2019; pp. 1–7.

- Zhang, C.; Zhang, H.; Yuan, D.; Zhang, M. Citywide cellular traffic prediction based on densely connected convolutional neural networks. IEEE Commun. Lett. 2018, 22, 1656–1659.

- Luo, C.; Ji, J.; Wang, Q.; Chen, X.; Li, P. Channel state information prediction for 5G wireless communications: A deep learning approach. IEEE Trans. Netw. Sci. Eng. 2018, 7, 227–236.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016; Volume 1.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436.

- Hussain, B.; Du, Q.; Zhang, S.; Imran, A.; Imran, M.A. Mobile Edge Computing-Based Data-Driven Deep Learning Framework for Anomaly Detection. IEEE Access 2019, 7, 137656–137667.

- Ahmed, K.I.; Tabassum, H.; Hossain, E. Deep learning for radio resource allocation in multi-cell networks. IEEE Netw. 2019, 33, 188–195.

- Zhang, C.; Patras, P.; Haddadi, H. Deep learning in mobile and wireless networking: A survey. IEEE Commun. Surv. Tutor. 2019, 21, 2224–2287.

- Coutinho, E.F.; de Carvalho Sousa, F.R.; Rego, P.A.L.; Gomes, D.G.; de Souza, J.N. Elasticity in cloud computing: A survey. Ann. Telecommun. 2015, 70, 289–309.

- Caire, G.; Jindal, N.; Kobayashi, M.; Ravindran, N. Multiuser MIMO achievable rates with downlink training and channel state feedback. IEEE Trans. Inf. Theory 2010, 56, 2845–2866.

- Mehrabi, M.; Mohammadkarimi, M.; Ardakani, M.; Jing, Y. Decision Directed Channel Estimation Based on Deep Neural Network k-Step Predictor for MIMO Communications in 5G. IEEE J. Sel. Areas Commun. 2019, 37, 2443–2456.

- Qing, C.; Cai, B.; Yang, Q.; Wang, J.; Huang, C. Deep learning for CSI feedback based on superimposed coding. IEEE Access 2019, 7, 93723–93733.

- Jiang, Z.; Chen, S.; Molisch, A.F.; Vannithamby, R.; Zhou, S.; Niu, Z. Exploiting wireless channel state information structures beyond linear correlations: A deep learning approach. IEEE Commun. Mag. 2019, 57, 28–34.

- Prasad, K.S.V.; Hossain, E.; Bhargava, V.K. Energy efficiency in massive MIMO-based 5G networks: Opportunities and challenges. IEEE Wirel. Commun. 2017, 24, 86–94.

- Cheng, X.; Liu, D.; Zhu, Z.; Shi, W.; Li, Y. A ResNet-DNN based channel estimation and equalization scheme in FBMC/OQAM systems. In Proceedings of the 2018 10th International Conference on Wireless Communications and Signal Processing (WCSP), Hangzhou, China, 18–20 October 2018; pp. 1–5.

- Ez-Zazi, I.; Arioua, M.; El Oualkadi, A.; Lorenz, P. A hybrid adaptive coding and decoding scheme for multi-hop wireless sensor networks. Wirel. Pers. Commun. 2017, 94, 3017–3033.

- Jiang, L.; Li, X.; Ye, N.; Wang, A. Deep Learning-Aided Constellation Design for Downlink NOMA. In Proceedings of the 2019 15th International Wireless Communications & Mobile Computing Conference (IWCMC), Tangier, Morocco, 24–28 June 2019; pp. 1879–1883.

- Kang, J.M.; Kim, I.M.; Chun, C.J. Deep Learning-Based MIMO-NOMA With Imperfect SIC Decoding. IEEE Syst. J. 2019.

- Kim, M.; Kim, N.I.; Lee, W.; Cho, D.H. Deep learning-aided SCMA. IEEE Commun. Lett. 2018, 22, 720–723.

- Xue, S.; Ma, Y.; Yi, N.; Tafazolli, R. Unsupervised deep learning for MU-SIMO joint transmitter and noncoherent receiver design. IEEE Wirel. Commun. Lett. 2018, 8, 177–180.

- Kim, M.; Lee, W.; Cho, D.H. A novel PAPR reduction scheme for OFDM system based on deep learning. IEEE Commun. Lett. 2017, 22, 510–513.

- Wang, Y.; Zhang, Z.; Zhang, S.; Cao, S.; Xu, S. A unified deep learning based polar-LDPC decoder for 5G communication systems. In Proceedings of the 2018 10th International Conference on Wireless Communications and Signal Processing (WCSP), Hangzhou, China, 18–20 October 2018; pp. 1–6.

- Jiang, Y.; Kim, H.; Asnani, H.; Kannan, S.; Oh, S.; Viswanath, P. Learn codes: Inventing low-latency codes via recurrent neural networks. In Proceedings of the 2019 IEEE International Conference on Communications (ICC), Shanghai, China, 20–24 May 2019; pp. 1–7.

- Hu, P.; Zhang, J. 5G Enabled Fault Detection and Diagnostics: How Do We Achieve Efficiency? IEEE Internet Things J. 2020, 7, 3267–3281.

- Chen, K.; Wang, W.; Chen, X.; Yin, H. Deep Learning Based Antenna Array Fault Detection. In Proceedings of the 2019 IEEE 89th Vehicular Technology Conference (VTC2019), Honolulu, HI, USA, 22–25 September 2019; pp. 1–5.

- Yu, A.; Yang, H.; Yao, Q.; Li, Y.; Guo, H.; Peng, T.; Li, H.; Zhang, J. Accurate Fault Location Using Deep Belief Network for Optical Fronthaul Networks in 5G and Beyond. IEEE Access 2019, 7, 77932–77943.

- Xiong, H.; Zhang, D.; Zhang, D.; Gauthier, V.; Yang, K.; Becker, M. MPaaS: Mobility prediction as a service in telecom cloud. Inf. Syst. Front. 2014, 16, 59–75.

- Cheng, Y.; Qiao, Y.; Yang, J. An improved Markov method for prediction of user mobility. In Proceedings of the 2016 12th International Conference on Network and Service Management (CNSM), Montreal, QC, Canada, 31 October–4 November 2016; pp. 394–399.

- Qiao, Y.; Yang, J.; He, H.; Cheng, Y.; Ma, Z. User location prediction with energy efficiency model in the Long Term-Evolution network. Int. J. Commun. Syst. 2016, 29, 2169–2187.

- Gante, J.; Falcão, G.; Sousa, L. Beamformed fingerprint learning for accurate millimeter wave positioning. In Proceedings of the 2018 IEEE 88th Vehicular Technology Conference (VTC-Fall), Chicago, IL, USA, 27–30 August 2018; pp. 1–5.

- Gante, J.; Falcão, G.; Sousa, L. Deep Learning Architectures for Accurate Millimeter Wave Positioning in 5G. Neural Process. Lett. 2019.

- Wang, C.; Zhao, Z.; Sun, Q.; Zhang, H. Deep learning-based intelligent dual connectivity for mobility management in dense network. In Proceedings of the 2018 IEEE 88th Vehicular Technology Conference (VTC-Fall), Chicago, IL, USA, 27–30 August 2018; pp. 1–5.

- Santos, J.; Leroux, P.; Wauters, T.; Volckaert, B.; De Turck, F. Anomaly detection for smart city applications over 5g low power wide area networks. In Proceedings of the 2018 IEEE/IFIP Network Operations and Management Symposium, Taipei, Taiwan, 23–27 April 2018; pp. 1–9.

- Maimó, L.F.; Gómez, Á.L.P.; Clemente, F.J.G.; Pérez, M.G.; Pérez, G.M. A self-adaptive deep learning-based system for anomaly detection in 5G networks. IEEE Access 2018, 6, 7700–7712.

- Parwez, M.S.; Rawat, D.B.; Garuba, M. Big data analytics for user-activity analysis and user-anomaly detection in mobile wireless network. IEEE Trans. Ind. Inform. 2017, 13, 2058–2065.

- Maimó, L.F.; Celdrán, A.H.; Pérez, M.G.; Clemente, F.J.G.; Pérez, G.M. Dynamic management of a deep learning-based anomaly detection system for 5G networks. J. Ambient Intell. Humaniz. Comput. 2019, 10, 3083–3097.

- Hussain, B.; Du, Q.; Ren, P. Deep learning-based big data-assisted anomaly detection in cellular networks. In Proceedings of the 2018 IEEE Global Communications Conference (GLOBECOM), Abu Dhabi, UAE, 9–13 December 2018; pp. 1–6.

- Li, R.; Zhao, Z.; Zheng, J.; Mei, C.; Cai, Y.; Zhang, H. The learning and prediction of application-level traffic data in cellular networks. IEEE Trans. Wirel. Commun. 2017, 16, 3899–3912.

- Guo, Q.; Gu, R.; Wang, Z.; Zhao, T.; Ji, Y.; Kong, J.; Gour, R.; Jue, J.P. Proactive Dynamic Network Slicing with Deep Learning Based Short-Term Traffic Prediction for 5G Transport Network. In Proceedings of the 2019 Optical Fiber Communications Conference and Exhibition (OFC), San Diego, CA, USA, 3–7 March 2019; pp. 1–3.

- Bega, D.; Gramaglia, M.; Fiore, M.; Banchs, A.; Costa-Perez, X. DeepCog: Cognitive network management in sliced 5G networks with deep learning. In Proceedings of the IEEE INFOCOM 2019—IEEE Conference on Computer Communications, Paris, France, 29 April–2 May 2019; pp. 280–288.

- Huang, C.W.; Chiang, C.T.; Li, Q. A study of deep learning networks on mobile traffic forecasting. In Proceedings of the 2017 IEEE 28th Annual International Symposium on Personal, Indoor, and Mobile Radio Communications (PIMRC), Montreal, QC, Canada, 8–13 October 2017; pp. 1–6.

- Chen, L.; Yang, D.; Zhang, D.; Wang, C.; Li, J. Deep mobile traffic forecast and complementary base station clustering for C-RAN optimization. J. Netw. Comput. Appl. 2018, 121, 59–69.

- Zhou, Y.; Fadlullah, Z.M.; Mao, B.; Kato, N. A deep-learning-based radio resource assignment technique for 5G ultra dense networks. IEEE Netw. 2018, 32, 28–34.

- Zhao, X.; Yang, K.; Chen, Q.; Peng, D.; Jiang, H.; Xu, X.; Shuang, X. Deep learning based mobile data offloading in mobile edge computing systems. Future Gener. Comput. Syst. 2019, 99, 346–355.

- Hosny, K.M.; Khashaba, M.M.; Khedr, W.I.; Amer, F.A. New vertical handover prediction schemes for LTE-WLAN heterogeneous networks. PLoS ONE 2019, 14, e0215334.

- Svahn, C.; Sysoev, O.; Cirkic, M.; Gunnarsson, F.; Berglund, J. Inter-frequency radio signal quality prediction for handover, evaluated in 3GPP LTE. In Proceedings of the 2019 IEEE 89th Vehicular Technology Conference (VTC2019), Kuala Lumpur, Malaysia, 28 April–1 May 2019; pp. 1–5.

- Khunteta, S.; Chavva, A.K.R. Deep learning based link failure mitigation. In Proceedings of the 2017 16th IEEE International Conference on Machine Learning and Applications (ICMLA), Cancun, Mexico, 18–21 December 2017; pp. 806–811.

- Ozturk, M.; Gogate, M.; Onireti, O.; Adeel, A.; Hussain, A.; Imran, M.A. A novel deep learning driven, low-cost mobility prediction approach for 5G cellular networks: The case of the Control/Data Separation Architecture (CDSA). Neurocomputing 2019, 358, 479–489.

- Wen, J.; Huang, K.; Yang, S.; Li, V.O. Cache-enabled heterogeneous cellular networks: Optimal tier-level content placement. IEEE Trans. Wirel. Commun. 2017, 16, 5939–5952.

- Serbetci, B.; Goseling, J. Optimal geographical caching in heterogeneous cellular networks with nonhomogeneous helpers. arXiv 2017, arXiv:1710.09626.

- Chien, W.C.; Weng, H.Y.; Lai, C.F. Q-learning based collaborative cache allocation in mobile edge computing. Future Gener. Comput. Syst. 2020, 102, 603–610.

- Lei, L.; You, L.; Dai, G.; Vu, T.X.; Yuan, D.; Chatzinotas, S. A deep learning approach for optimizing content delivering in cache-enabled HetNet. In Proceedings of the 2017 International Symposium on Wireless Communication Systems (ISWCS), Bologna, Italy, 28–31 August 2017; pp. 449–453.

- Yan, M.; Feng, G.; Zhou, J.; Sun, Y.; Liang, Y.C. Intelligent resource scheduling for 5G radio access network slicing. IEEE Trans. Veh. Technol. 2019, 68, 7691–7703.

- Gutterman, C.; Grinshpun, E.; Sharma, S.; Zussman, G. RAN resource usage prediction for a 5G slice broker. In Proceedings of the 20th ACM International Symposium on Mobile Ad Hoc Networking and Computing, Catania, Italy, 2–5 July 2019; pp. 231–240.

- Toscano, M.; Grunwald, F.; Richart, M.; Baliosian, J.; Grampín, E.; Castro, A. Machine Learning Aided Network Slicing. In Proceedings of the 2019 21st International Conference on Transparent Optical Networks (ICTON), Angers, France, 9–13 July 2019; pp. 1–4.

- Lei, L.; You, L.; He, Q.; Vu, T.X.; Chatzinotas, S.; Yuan, D.; Ottersten, B. Learning-assisted optimization for energy-efficient scheduling in deadline-aware NOMA systems. IEEE Trans. Green Commun. Netw. 2019, 3, 615–627.

- Luo, J.; Tang, J.; So, D.K.; Chen, G.; Cumanan, K.; Chambers, J.A. A deep learning-based approach to power minimization in multi-carrier NOMA with SWIPT. IEEE Access 2019, 7, 17450–17460.

- Dong, R.; She, C.; Hardjawana, W.; Li, Y.; Vucetic, B. Deep learning for hybrid 5G services in mobile edge computing systems: Learn from a digital twin. IEEE Trans. Wirel. Commun. 2019, 18, 4692–4707.

- Zhang, S.; Xiang, C.; Cao, S.; Xu, S.; Zhu, J. Dynamic Carrier to MCPA Allocation for Energy Efficient Communication: Convex Relaxation Versus Deep Learning. IEEE Trans. Green Commun. Netw. 2019, 3, 628–640.

- Mismar, F.B.; Evans, B.L. Deep Learning in Downlink Coordinated Multipoint in New Radio Heterogeneous Networks. IEEE Wirel. Commun. Lett. 2019, 8, 1040–1043.

- Abdelreheem, A.; Omer, O.A.; Esmaiel, H.; Mohamed, U.S. Deep learning-based relay selection in D2D millimeter wave communications. In Proceedings of the 2019 International Conference on Computer and Information Sciences (ICCIS), Aljouf, Saudi Arabia, 10–11 April 2019; pp. 1–5.

- Ouyang, Y.; Li, Z.; Su, L.; Lu, W.; Lin, Z. APP-SON: Application characteristics-driven SON to optimize 4G/5G network performance and quality of experience. In Proceedings of the 2017 IEEE International Conference on Big Data (Big Data), Boston, MA, USA, 11–14 December 2017; pp. 1514–1523.

- Ouyang, Y.; Li, Z.; Su, L.; Lu, W.; Lin, Z. Application behaviors Driven Self-Organizing Network (SON) for 4G LTE networks. IEEE Trans. Netw. Sci. Eng. 2018, 7, 3–14.

- Kim, K.; Lee, J.; Choi, J. Deep learning based pilot allocation scheme (DL-PAS) for 5G massive MIMO system. IEEE Commun. Lett. 2018, 22, 828–831.

- Jose, J.; Ashikhmin, A.; Marzetta, T.L.; Vishwanath, S. Pilot contamination problem in multi-cell TDD systems. In Proceedings of the 2009 IEEE International Symposium on Information Theory, Seoul, Korea, 21–26 June 2009; pp. 2184–2188.

- Zhang, W.; Yin, J.; Wu, D.; Guo, G.; Lai, Z. A Self-Interference Cancellation Method Based on Deep Learning for Beyond 5G Full-Duplex System. In Proceedings of the 2018 IEEE International Conference on Signal Processing, Communications and Computing (ICSPCC), Qingdao, China, 14–17 September 2018; pp. 1–5. [Google Scholar]

- Sun, J.; Shi, W.; Yang, Z.; Yang, J.; Gui, G. Behavioral modeling and linearization of wideband RF power amplifiers using BiLSTM networks for 5G wireless systems. IEEE Trans. Veh. Technol. 2019, 68, 10348–10356. [Google Scholar] [CrossRef]

- Zhao, F.; Zhang, Y.; Wang, Q. Multi-slot spectrum auction in heterogeneous networks based on deep feedforward network. IEEE Access 2018, 6, 45113–45119. [Google Scholar] [CrossRef]

- Roselló, M.M. Multi-path Scheduling with Deep Reinforcement Learning. In Proceedings of the 2019 European Conference on Networks and Communications (EuCNC), Valencia, Spain, 18–21 June 2019; pp. 400–405. [Google Scholar]

- Jameel, F.; Khan, W.U.; Chang, Z.; Ristaniemi, T.; Liu, J. Secrecy analysis and learning-based optimization of cooperative NOMA SWIPT systems. In Proceedings of the 2019 IEEE International Conference on Communications Workshops (ICC Workshops), Shanghai, China, 20–24 May 2019; pp. 1–6. [Google Scholar]

- Imai, T.; Kitao, K.; Inomata, M. Radio propagation prediction model using convolutional neural networks by deep learning. In Proceedings of the 2019 13th European Conference on Antennas and Propagation (EuCAP), Krakow, Poland, 31 March–5 April 2019; pp. 1–5. [Google Scholar]

- Lu, C.; Xu, W.; Shen, H.; Zhang, H.; You, X. An enhanced SCMA detector enabled by deep neural network. In Proceedings of the 2018 IEEE/CIC International Conference on Communications in China (ICCC), Beijing, China, 16–18 August 2018; pp. 835–839. [Google Scholar]

- Tsai, C.H.; Lin, K.H.; Wei, H.Y.; Yeh, F.M. QoE-aware Q-learning based approach to dynamic TDD uplink-downlink reconfiguration in indoor small cell networks. Wirel. Netw. 2019, 25, 3467–3479. [Google Scholar] [CrossRef]

- Wang, D.; Khosla, A.; Gargeya, R.; Irshad, H.; Beck, A.H. Deep learning for identifying metastatic breast cancer. arXiv 2016, arXiv:1606.05718. [Google Scholar]

- Kachuee, M.; Fazeli, S.; Sarrafzadeh, M. ECG heartbeat classification: A deep transferable representation. In Proceedings of the 2018 IEEE International Conference on Healthcare Informatics (ICHI), New York, NY, USA, 4–7 June 2018; pp. 443–444. [Google Scholar]

- Patil, K.; Kulkarni, M.; Sriraman, A.; Karande, S. Deep learning based car damage classification. In Proceedings of the 2017 16th IEEE International Conference on Machine Learning and Applications (ICMLA), Cancun, Mexico, 18–21 December 2017; pp. 50–54. [Google Scholar]

- Song, Q.; Zhao, L.; Luo, X.; Dou, X. Using deep learning for classification of lung nodules on computed tomography images. J. Healthc. Eng. 2017, 2017, 1–7. [Google Scholar] [CrossRef]

- Kendall, A.; Cipolla, R. Geometric loss functions for camera pose regression with deep learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5974–5983. [Google Scholar]

- Liu, C.; Wang, Z.; Wu, S.; Wu, S.; Xiao, K. Regression Task on Big Data with Convolutional Neural Network. In Proceedings of the International Conference on Advanced Machine Learning Technologies and Applications, Cairo, Egypt, 28–30 March 2019; pp. 52–58. [Google Scholar]

- Maqueda, A.I.; Loquercio, A.; Gallego, G.; García, N.; Scaramuzza, D. Event-based vision meets deep learning on steering prediction for self-driving cars. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5419–5427. [Google Scholar]

- Fahrettin Koyuncu, C.; Gunesli, G.N.; Cetin-Atalay, R.; Gunduz-Demir, C. DeepDistance: A Multi-task Deep Regression Model for Cell Detection in Inverted Microscopy Images. arXiv 2019, arXiv:1908.11211. [Google Scholar]

- Arulkumaran, K.; Deisenroth, M.P.; Brundage, M.; Bharath, A.A. A brief survey of deep reinforcement learning. arXiv 2017, arXiv:1708.05866. [Google Scholar] [CrossRef]

- Zaheer, M.; Ahmed, A.; Smola, A.J. Latent LSTM allocation: Joint clustering and non-linear dynamic modeling of sequential data. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; Volume 70, pp. 3967–3976. [Google Scholar]

- Niu, D.; Liu, Y.; Cai, T.; Zheng, X.; Liu, T.; Zhou, S. A Novel Distributed Duration-Aware LSTM for Large Scale Sequential Data Analysis. In Proceedings of the CCF Conference on Big Data, Wuhan, China, 26–28 September 2019; pp. 120–134. [Google Scholar]

- Yildirim, Ö. A novel wavelet sequence based on deep bidirectional LSTM network model for ECG signal classification. Comput. Biol. Med. 2018, 96, 189–202. [Google Scholar] [CrossRef] [PubMed]

- Greff, K.; Srivastava, R.K.; Koutník, J.; Steunebrink, B.R.; Schmidhuber, J. LSTM: A search space odyssey. IEEE Trans. Neural Netw. Learn. Syst. 2016, 28, 2222–2232. [Google Scholar] [CrossRef]

- Bai, S.; Kolter, J.Z.; Koltun, V. An empirical evaluation of generic convolutional and recurrent networks for sequence modeling. arXiv 2018, arXiv:1803.01271. [Google Scholar]

- Jiang, M.; Liang, Y.; Feng, X.; Fan, X.; Pei, Z.; Xue, Y.; Guan, R. Text classification based on deep belief network and softmax regression. Neural Comput. Appl. 2018, 29, 61–70. [Google Scholar] [CrossRef]

- Barlacchi, G.; De Nadai, M.; Larcher, R.; Casella, A.; Chitic, C.; Torrisi, G.; Antonelli, F.; Vespignani, A.; Pentland, A.; Lepri, B. A multi-source dataset of urban life in the city of Milan and the Province of Trentino. Sci. Data 2015, 2, 150055. [Google Scholar] [CrossRef]

- Garcia, S.; Grill, M.; Stiborek, J.; Zunino, A. An empirical comparison of botnet detection methods. Comput. Secur. 2014, 45, 100–123. [Google Scholar] [CrossRef]

- Raca, D.; Quinlan, J.J.; Zahran, A.H.; Sreenan, C.J. Beyond throughput: A 4G LTE dataset with channel and context metrics. In Proceedings of the 9th ACM Multimedia Systems Conference, Amsterdam, The Netherlands, 12–15 June 2018; pp. 460–465. [Google Scholar]

- Borges, V.C.; Cardoso, K.V.; Cerqueira, E.; Nogueira, M.; Santos, A. Aspirations, challenges, and open issues for software-based 5G networks in extremely dense and heterogeneous scenarios. EURASIP J. Wirel. Commun. Netw. 2015, 2015, 1–13. [Google Scholar] [CrossRef]

- Ge, X.; Li, Z.; Li, S. 5G software defined vehicular networks. IEEE Commun. Mag. 2017, 55, 87–93. [Google Scholar] [CrossRef]

- Ye, H.; Liang, L.; Li, G.Y.; Kim, J.; Lu, L.; Wu, M. Machine learning for vehicular networks: Recent advances and application examples. IEEE Veh. Technol. Mag. 2018, 13, 94–101. [Google Scholar] [CrossRef]

- Li, S.; Da Xu, L.; Zhao, S. 5G Internet of Things: A survey. J. Ind. Inf. Integr. 2018, 10, 1–9. [Google Scholar] [CrossRef]

- Kusume, K.; Fallgren, M.; Queseth, O.; Braun, V.; Gozalvez-Serrano, D.; Korthals, I.; Zimmermann, G.; Schubert, M.; Hossain, M.; Widaa, A.; et al. Updated scenarios, requirements and KPIs for 5G mobile and wireless system with recommendations for future investigations. In Mobile and Wireless Communications Enablers for the Twenty-Twenty Information Society (METIS) Deliverable, ICT-317669-METIS D; METIS: Stockholm, Sweden, 2015; Volume 1. [Google Scholar]

| Problem Type | Number of Articles | References |
|---|---|---|
| Classification | 32 | [2,10,11,16,17,19,21,22,23,26,27,29,30,34,38,40,41,52,56,60,61,62,63,64,65,66,68,71,72,74,75,76] |
| Regression | 19 | [5,6,7,15,17,35,36,43,44,45,46,47,48,51,57,58,59,67,70] |

| Data Source | Number of Articles | References |
|---|---|---|
| Generated through simulation | 24 | [2,15,16,17,25,34,35,36,47,51,55,56,57,59,60,61,62,63,64,68,72,73,75,77] |
| Real data (generated using prototypes or public dataset) | 18 | [5,6,7,10,30,38,40,41,43,44,45,46,48,52,58,66,67,71] |
| Synthetic (generated randomly) | 4 | [11,19,74,76] |
| Not described (the work did not provide information about the dataset used) | 10 | [3,21,22,23,24,26,27,29,65,70] |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Santos, G.L.; Endo, P.T.; Sadok, D.; Kelner, J. When 5G Meets Deep Learning: A Systematic Review. Algorithms 2020, 13, 208. https://doi.org/10.3390/a13090208
