1. Introduction

The estimation of the derivative of a measured signal is of considerable importance in signal processing, numerical analysis, control engineering, and fault diagnostics, among other fields [1]. Derivatives, and structures built on the derivatives of signals, are common in industrial applications, for example, proportional-derivative (PD) controllers, which are widely used in practice across many fields of application.

In practice, signals are corrupted by measurement and process noise; therefore, a filtering procedure needs to be implemented. A number of approaches based on least-squares polynomial fitting or interpolation have been proposed for off-line applications [1,2]. Another common approach is based on high-gain observers [3,4,5]. These observers correct the model by weighting the deviation of the observer output from the output of the system to be controlled.
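To make the high-gain observer idea concrete, the following is a minimal sketch of a second-order high-gain differentiator of the kind discussed in [3,4,5]. The gains `alpha1` and `alpha2`, the small parameter `eps`, the forward Euler integration, and the function name are illustrative assumptions, not values or code from the cited works. The observer copies a double-integrator model and corrects it with the output deviation, weighted by gains that grow as `eps` shrinks.

```python
import math


def high_gain_differentiator(y, dt, eps=1e-3, alpha1=2.0, alpha2=1.0):
    """Estimate dy/dt from samples y with a second-order high-gain observer.

    Observer dynamics, integrated here with forward Euler:
        x1' = x2 + (alpha1 / eps) * (y - x1)
        x2' = (alpha2 / eps**2) * (y - x1)
    x2 is the derivative estimate. With alpha1 = 2 and alpha2 = 1 the
    estimation error has a double pole at -1/eps; dt must be << eps.
    """
    x1, x2 = y[0], 0.0          # start the position estimate at the first sample
    estimates = []
    for yk in y:
        dev = yk - x1           # output deviation that weights the correction
        x1, x2 = x1 + dt * (x2 + alpha1 / eps * dev), x2 + dt * (alpha2 / eps**2 * dev)
        estimates.append(x2)
    return estimates


# Usage: differentiate a 1 Hz sine sampled at 100 kHz.
dt = 1e-5
omega = 2 * math.pi
t = [k * dt for k in range(100_000)]
y = [math.sin(omega * tk) for tk in t]
d_hat = high_gain_differentiator(y, dt)
```

Smaller `eps` reduces the phase lag of the estimate but amplifies measurement noise, which is the trade-off that motivates the filtering discussion above.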

In [6], a sliding mode control (SMC) using an extended Kalman filter (EKF) as an observer was proposed for stimulus-responsive polymer fibers used as a soft actuator. Because of the slow velocity of the fiber, the EKF produced poor estimation results; therefore, a derivative approximation structure was proposed to estimate the velocity from the position measurement. This approach also realized the approximation of the derivative using a high-gain observer. The methods presented in the applications cited above approximate the derivative over an infinite time horizon. In this sense, the differentiator proposed here is also an asymptotic estimator of the derivative. Several researchers have studied this problem by applying the SMC approach.

This paper emphasizes some mathematical aspects of an algorithm that has previously been used in practical applications, for instance, in [7,8]. In particular, in [7], this algorithm is used to design a velocity observer. In [8], a similar algorithm is used for online parameter identification of a permanent magnet synchronous motor.

In recent years, real-time robust exact differentiation has become the main problem in output-feedback higher-order sliding mode (HOSM) control design. Even the most modern differentiators [9] do not provide exact differentiation with finite-time convergence, even when noise is not considered.

The derivatives may be calculated by successive application of a robust exact first-order differentiator [10], which converges in finite time but does not take noise into account, as in [11,12]. In [13], an arbitrary-order finite-time-convergent exact robust differentiator is constructed based on the HOSM technique.

In [10], the maximal differentiation error of the proposed differentiator is proportional to the square root of the maximal deviation of the measured input signal from the base signal. This order of the differentiation error is shown to be the best possible when the only information known about the base signal is an upper bound on the Lipschitz constant of its derivative. According to Theorem 2 of [10], the algorithm needs knowledge of the maximal Lipschitz constant to produce the optimal approximation. More recently, in [14], sliding mode (SM)-based differentiation is shown to be exact for a large class of functions and robust with respect to noise; in this case as well, the Lipschitz constant must be known to apply the algorithm. These methods, which use the maximal Lipschitz constant, perform the approximation over a finite time horizon. In practical applications, the presence of noise and faults does not allow a Lipschitz constant to be set; in fact, the noise cannot be distinguished from the input signal. In this sense, it appears impossible to apply these algorithms in real applications.

This paper proposes an approximated derivative structure for such applications, so that spikes, noise, and any other undesired signals arising from differentiation can be reduced. Accordingly, the derivative approximation problem is formulated in the presence of white Gaussian noise and over an infinite time horizon, as in the Kalman filter (KF). For this reason, the comparison is made only with the derivative approximation performed by an adaptive KF. After the problem formulation, the paper proves a proposition that allows this approximation of the derivative to be built using a dynamic system.

The paper is structured as follows. In Section 2, the problem formulation and a possible solution are proposed. Section 3 presents how a derivative controller can be approximated using an adaptive KF. The simulation results are discussed in Section 4, and Section 5 concludes the paper.

2. An Approximated Derivative Structure

Derivative structures introduce imprecision, which is due to spikes that generate power dissipation. The idea is to find an approximated structure for general derivatives as they occur in mathematical calculations and as they are often used in technical problems, such as proportional-derivative controllers. The following formulation states the problem mathematically.

Problem 1. Assume that the following differential is given:

Function is the function to be differentiated, where represents the time variable. The aim of the proposed approach is to find an approximating expression , where is a parameter, such that:

where represents the real derivative function.

Proposition 1. Considering (1), there exists a function such that, if the following dynamic system is considered:

where represents a twice-differentiable real function and the approximated derivative function, and if

and

then

Proof. Consider the following approximate dynamic system:

where can be a function of or a parameter with , if

then

After inserting (7) into (9), it follows that

If (1) is taken into consideration, then (10) becomes:

If the following Lyapunov function is considered:

and considering that:

according to (11), it is possible to write the following expression:

and thus, from (13), it follows that:

Considering (9) and multiplying by , then

and

if

as stated by the hypothesis in (4). Then

and thus

Considering that

as stated by the hypothesis in (5), then:

Thus, (11) is uniformly asymptotically stable and (6) is proven. □
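As a hedged illustration of the kind of Lyapunov argument used above, assume (for this sketch only; it is not claimed to be the exact system of Proposition 1) that the approximating dynamics take the common first-order form below, where e is the approximation error and M is an assumed bound on the second derivative of the signal. The result is an ultimate error bound that shrinks to zero as the time constant μ goes to zero:

```latex
\begin{align}
\mu\,\dot{\hat y}_d(t) + \hat y_d(t) &= \dot y(t), \qquad e(t) := \hat y_d(t) - \dot y(t),\\
\mu\,\dot e(t) &= -e(t) - \mu\,\ddot y(t),\\
V(e) = \tfrac{1}{2}e^2 \;\Rightarrow\; \dot V &= -\frac{e^2}{\mu} - e\,\ddot y
\le -|e|\left(\frac{|e|}{\mu} - M\right), \qquad |\ddot y(t)| \le M .
\end{align}
```

Hence the derivative of V is negative whenever |e| > μM, so under these assumptions the error enters and remains in the set |e| ≤ μM.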

Proposition 2. The dynamic system

where function , solves the problem defined in Problem 1.

A supplementary variable is defined as:

where is a function to be designed with . Let

If , then . Then asymptotic stability is always guaranteed for , and the rate of convergence can also be specified by . From (24), the second part of (23) follows:

Differentiating (26), it follows that

Considering (27) with from (7) with , combined with (26), the first part of (23) follows:

If (23) is discretized using the forward Euler method, the following expression is obtained:

which is a discrete difference equation, where indicates the sampling time with , and , , and are discrete variables with .
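A minimal sketch of such a forward Euler difference equation follows. It assumes, for illustration only, that the continuous structure is the common realization with a supplementary state z, ż = −αz − α²y and ŷ_d = z + αy with α = 1/μ, which reproduces μ dŷ_d/dt + ŷ_d = ẏ without differentiating the measurement. The function name `approx_derivative` and the symbols `T`, `mu` are hypothetical; (23) and (29) may use a different but equivalent parametrization.

```python
def approx_derivative(y, T, mu):
    """Forward-Euler discretization of an assumed first-order derivative approximator.

    Assumed continuous form: z' = -a*z - a**2 * y, yhat = z + a*y, with a = 1/mu,
    which satisfies mu * yhat' + yhat = y' without differentiating y directly.
    Discrete form: z[k+1] = z[k] + T * (-a*z[k] - a**2 * y[k]),  yhat[k] = z[k] + a*y[k].
    """
    if not 0.0 < T / mu < 2.0:
        # the discrete pole 1 - T/mu must lie inside the unit circle
        raise ValueError("forward Euler requires 0 < T/mu < 2")
    a = 1.0 / mu
    z = -a * y[0]                        # chosen so that yhat[0] = 0
    yhat = []
    for yk in y:
        yhat.append(z + a * yk)          # output equation
        z += T * (-a * z - a * a * yk)   # state update
    return yhat


# Usage: a ramp with slope 3 sampled every 1 ms; the estimate settles at 3.
T, mu = 1e-3, 0.01
ramp = [3.0 * k * T for k in range(500)]
estimate = approx_derivative(ramp, T, mu)
```

Taking the z-transform of this difference equation gives Ŷ_d(z)/Y(z) = (z − 1)/(μ(z − 1) + T), which is the filter s/(μs + 1) with s replaced by the forward Euler substitution (z − 1)/T.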

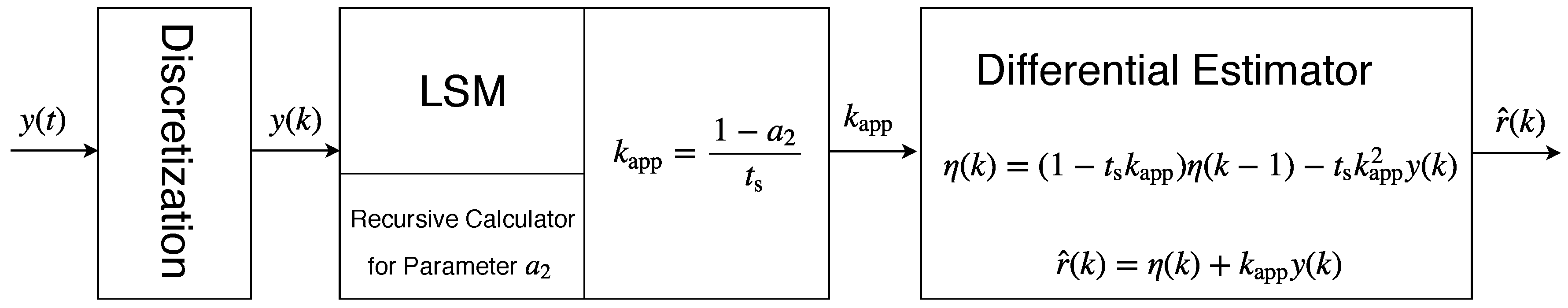

Figure 1 presents a graphical representation of the proposed algorithm structure with the discrete input signal , the recursive calculator of the parameter based on the linear least squares method (LSM), and the differential estimator with the discrete approximated derivative function .
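The recursive calculator in Figure 1 can be sketched with a textbook recursive least squares (RLS) update with a forgetting factor. This is a generic sketch under assumptions: the class name, the regressor `phi`, the forgetting factor `lam`, and the initialization `p0` are hypothetical choices, while the regression actually used for the parameter in the proposed structure is the one defined in Theorem 1.

```python
import numpy as np


class RecursiveLeastSquares:
    """Textbook RLS for y[k] = phi[k]^T theta + noise, with forgetting factor lam."""

    def __init__(self, n_params, lam=1.0, p0=1e6):
        self.theta = np.zeros(n_params)
        self.P = p0 * np.eye(n_params)   # large P0 means a weak prior on theta
        self.lam = lam

    def update(self, phi, y):
        phi = np.asarray(phi, dtype=float)
        p_phi = self.P @ phi
        gain = p_phi / (self.lam + phi @ p_phi)                  # gain vector
        self.theta = self.theta + gain * (y - phi @ self.theta)  # correct by prediction error
        self.P = (self.P - np.outer(gain, p_phi)) / self.lam     # (P - K phi^T P) / lam
        return self.theta


# Usage: recover two coefficients from noise-free regression data.
rng = np.random.default_rng(0)
rls = RecursiveLeastSquares(2)
true_theta = np.array([2.0, -0.5])
for _ in range(200):
    phi = rng.normal(size=2)
    rls.update(phi, phi @ true_theta)
```

A forgetting factor slightly below 1 (for example 0.99) lets the estimate track slowly varying parameters at the price of more sensitivity to noise.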

Applying the z-transform to the equations represented by (29), the following forms are obtained:

which yields

As described earlier, the objective of a minimum variance approach is to minimize the variance of a system output with respect to a desired output signal in the presence of noise. The following theorem provides a way to determine a suboptimal value of the parameter that achieves a defined suboptimality.

Theorem 1. Considering

and, according to the forward Euler discretization by z-transform of (1), it follows that

Then it is possible to find a unique value of the parameter of (31) which guarantees a suboptimal minimum of at each k, where is a parameter to be calculated recursively using the linear least squares method (LSM).

Proof. Assume the following model:

where coefficients , , , , and belong to and need to be estimated, and denotes white noise. At the next sample, (34) becomes:

The prediction at time k is:

Considering that

and assuming that the noise is not correlated with the signal , it follows that

where is the variance of the white noise. The goal is to find such that:

It is possible to write (34) as:

Considering the effect of the noise as follows:

and transforming (39) using the z-transform, then

and

where , and z represents the well-known complex variable. The approximation in (40) is equivalent to the following assumption:

In other words, the assumption stated in (43) means that the noise model of (39) is assumed to be of the first order. Considering the z-transform of (36) with , then

Considering that

and inserting (45) into (44), it follows that

According to (38), it follows that , and after some calculations, the following expression is obtained:

From (47), it follows that

Considering that

then relation (48) becomes

and thus the derivative approximation according to the forward method is

It follows that

thus

and finally,

which can be written as

Recalling (31),

and comparing (54) with (56), the denominator constraints are

and

together with

The parameter can be calculated by LSM.
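The role of LSM here, together with the earlier use of the noise variance in the one-step prediction, can be illustrated with a batch least-squares fit of a hypothetical first-order ARX model y(k+1) = a·y(k) + b·u(k) + e(k+1), with white noise e of variance σ². This model, its coefficients, the input u, and the function name are assumptions for the sketch and are not the model (34) of the proof. When the model structure is correct, the one-step prediction residual is the white noise itself, so its sample variance approaches σ².

```python
import numpy as np


def fit_first_order_arx(y, u):
    """Batch least-squares estimate of (a, b) in y[k+1] = a*y[k] + b*u[k] + e[k+1].

    Returns the estimate and the sample variance of the one-step prediction residuals.
    """
    y = np.asarray(y, dtype=float)
    u = np.asarray(u, dtype=float)
    phi = np.column_stack([y[:-1], u[:-1]])        # regressors at time k
    target = y[1:]                                 # outputs at time k+1
    theta, *_ = np.linalg.lstsq(phi, target, rcond=None)
    residual = target - phi @ theta                # one-step prediction errors
    return theta, residual.var()


# Usage: simulate the assumed model and recover its coefficients and noise variance.
rng = np.random.default_rng(1)
a_true, b_true, sigma, n = 0.8, 0.5, 0.05, 2000
u = rng.normal(size=n)
e = rng.normal(scale=sigma, size=n)
y = np.zeros(n)
for k in range(n - 1):
    y[k + 1] = a_true * y[k] + b_true * u[k] + e[k + 1]
theta, noise_var = fit_first_order_arx(y, u)
```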

The numerator constraint is the following:

Considering the conditions of the denominator, we obtain

being, in our context, a function of time , it is possible to write it in the z-domain as follows:

and thus to consider the inverse z-transform

If is small enough, . This implies

□
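To make the trade-off behind the suboptimal parameter tangible, the following brute-force sketch sweeps the time constant μ of an assumed first-order approximator (the hypothetical realization ż = −αz − α²y, ŷ_d = z + αy, α = 1/μ, discretized with forward Euler and used for illustration only) on a noisy sine, and reports the root-mean-square error against the exact derivative. It is not the recursive LSM-based computation of Theorem 1, and it uses the exact derivative, which is unavailable in practice; the signal, noise level, and grid are arbitrary assumptions. A small μ passes the noise with a gain of order 1/μ, while a large μ adds lag, so the error is smallest at an intermediate μ.

```python
import numpy as np


def approx_derivative(y, T, mu):
    """Forward-Euler first-order derivative approximator (assumed form), alpha = 1/mu."""
    a = 1.0 / mu
    z = -a * y[0]
    out = np.empty_like(y)
    for k, yk in enumerate(y):
        out[k] = z + a * yk
        z += T * (-a * z - a * a * yk)
    return out


T = 1e-3
t = np.arange(0.0, 2.0, T)
omega = 2 * np.pi
rng = np.random.default_rng(2)
y_noisy = np.sin(omega * t) + rng.normal(scale=0.01, size=t.size)
dy_exact = omega * np.cos(omega * t)

grid = [0.001, 0.003, 0.01, 0.02, 0.05, 0.1, 0.3]
half = t.size // 2  # evaluate on the second half to skip the start-up transient
rms = {mu: float(np.sqrt(np.mean((approx_derivative(y_noisy, T, mu)[half:] - dy_exact[half:]) ** 2)))
       for mu in grid}
best_mu = min(rms, key=rms.get)
```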

Remark 1. Conditions (57), (59), and (60) guarantee that signal equals . Nevertheless, to obtain rejection of the noise, the coefficients , , and should be calculated adaptively using LSM. In our tests, in order to reduce the calculation load, the following conditions are considered: .

3. Using an Adaptive Kalman Filter to Approximate a Derivative Controller

Assume that the polynomial that approximates the derivative of the signal is of the first order, as follows:

in which represents the polynomial approximation of the signal . The following adaptive Kalman filter (KF) can be implemented, in which the constant parameters and are calculated at each sampling time. The following state representation is obtained:

It should be noted that represents the approximation of the derivative of the signal, as proposed in Proposition 1. In this sense, according to the general notation , , and , then:

where and . Considering the discretization of system (63), the following discrete system is obtained:

where is the process noise covariance matrix, and is the measurement noise covariance. The discrete forms of the matrices of (64) are denoted by , respectively. If the forward Euler method with the sampling time is applied, the following matrices are obtained:

The a priori predicted state is

and the a priori predicted covariance matrix is

The following equations state the correction (a posteriori estimate) of the adaptive KF:

where is the Kalman gain.
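A minimal sketch of this predict/correct cycle follows, assuming the two-state model x = [position, velocity] with forward Euler matrices A = [[1, T], [0, 1]] and H = [1, 0], and a process noise covariance of the form diag(0, q) in the spirit of Remark 2 below. The optional windowed update of the measurement noise variance is one common innovation-based choice (the mean of the last N squared innovations minus the predicted output variance) and is only an assumption about how the adaptation of Remark 3 could be realized; the function name, `q`, `r0`, `window`, and the initial covariance are hypothetical values.

```python
import numpy as np
from collections import deque


def kalman_derivative(y, T, q=1e-6, r0=1e-4, window=None):
    """Velocity estimates from position samples y with a constant-velocity Kalman filter.

    Model: x[k+1] = A x[k] + w,  y[k] = H x[k] + v, with
    A = [[1, T], [0, 1]], H = [1, 0], Q = diag(0, q), R = r.
    If window is an int, r is re-estimated from the last `window` innovations
    as mean(nu**2) - H P H^T (floored at a small positive value).
    """
    A = np.array([[1.0, T], [0.0, 1.0]])
    H = np.array([1.0, 0.0])
    Q = np.diag([0.0, q])              # no uncertainty on the velocity definition
    x = np.array([y[0], 0.0])          # start at the first sample, zero velocity
    P = np.diag([1.0, 1e4])            # vague prior on the initial velocity
    r = r0
    innovations = deque(maxlen=window) if window else None
    velocity = np.empty(len(y))
    for k, yk in enumerate(y):
        x = A @ x                      # a priori predicted state
        P = A @ P @ A.T + Q            # a priori predicted covariance
        nu = yk - H @ x                # innovation
        hph = H @ P @ H
        if innovations is not None:
            innovations.append(nu)
            if len(innovations) == window:
                r = max(float(np.mean(np.square(innovations))) - hph, 1e-12)
        K = P @ H / (hph + r)          # Kalman gain
        x = x + K * nu                 # a posteriori state
        P = P - np.outer(K, H @ P)     # a posteriori covariance, (I - K H) P
        P = 0.5 * (P + P.T)            # keep P symmetric against round-off
        velocity[k] = x[1]
    return velocity


# Usage: noisy ramp with true velocity 3, sampled every 1 ms.
rng = np.random.default_rng(3)
T = 1e-3
t = np.arange(5000) * T
y = 3.0 * t + rng.normal(scale=0.01, size=t.size)
v_fixed = kalman_derivative(y, T)
v_adapt = kalman_derivative(y, T, window=200)
```

The ratio of `q` to the measurement noise variance sets the effective memory of the filter: a larger `q` tracks velocity changes faster but passes more noise into the velocity estimate.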

Remark 2. It should be noted that the matrix (process noise covariance) has the following structure:

in which denotes a variance. The reason for this structure is that the first equation corresponding to the matrix of (64) is the definition of the velocity; in this sense, no uncertainty should be set for it. The second equation expresses a convenient condition which, of course, does not hold exactly; therefore, an uncertainty variable must be set for it. In our experience, a very wide system needs a correspondingly wide uncertainty variable.

Remark 3. Parameter is adapted by directly using the definition of the covariance, as follows:

in which the mean value over the last N error values is calculated.

4. Results and Discussion

This section presents the results obtained with the derivative realized through the adaptive KF and with the proposed algorithm. Both are compared with the exact mathematical derivative. In order to reduce the calculation load in the case study, the following conditions were considered: . This choice also allows the results to be compared with a polynomial adaptive KF method.

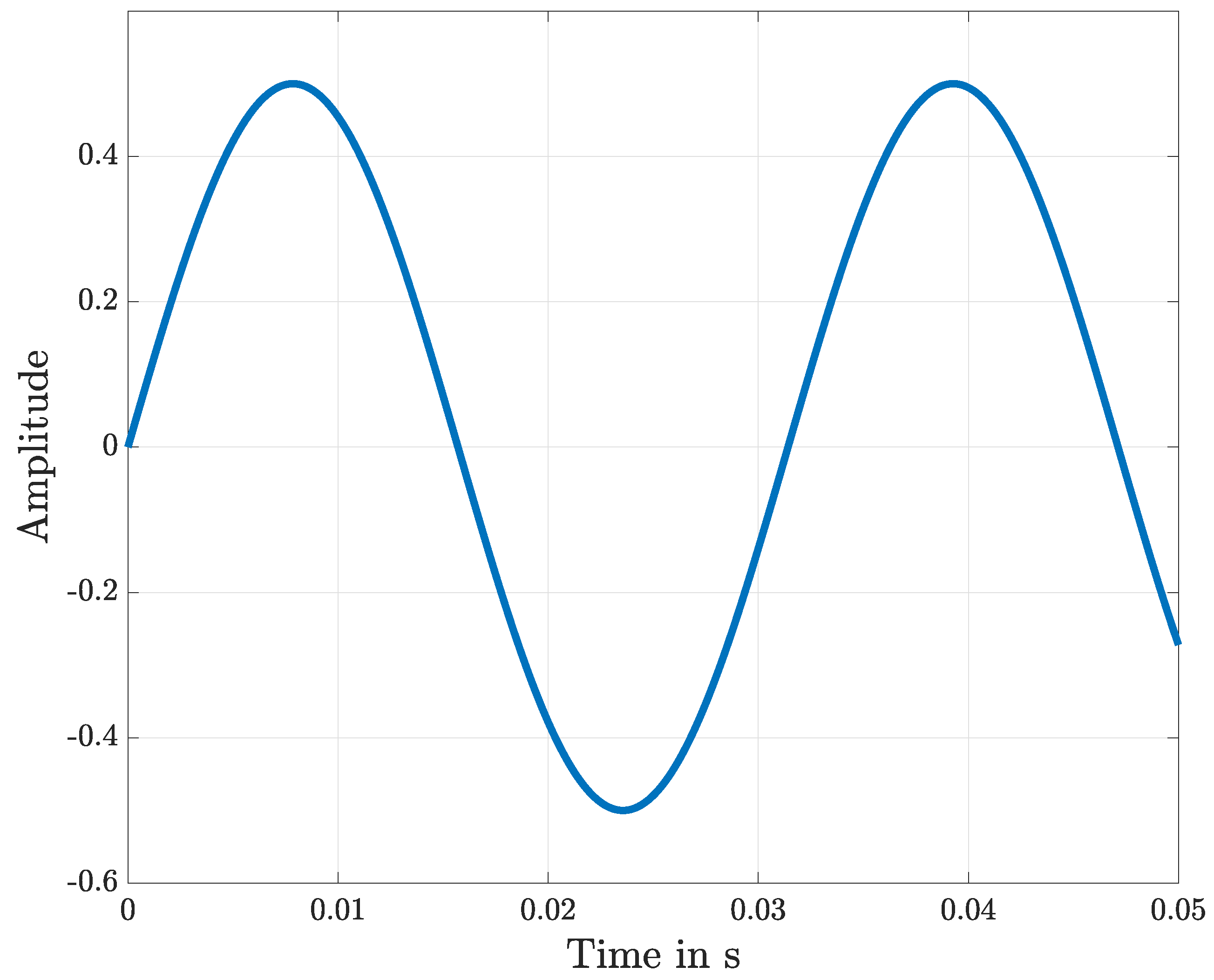

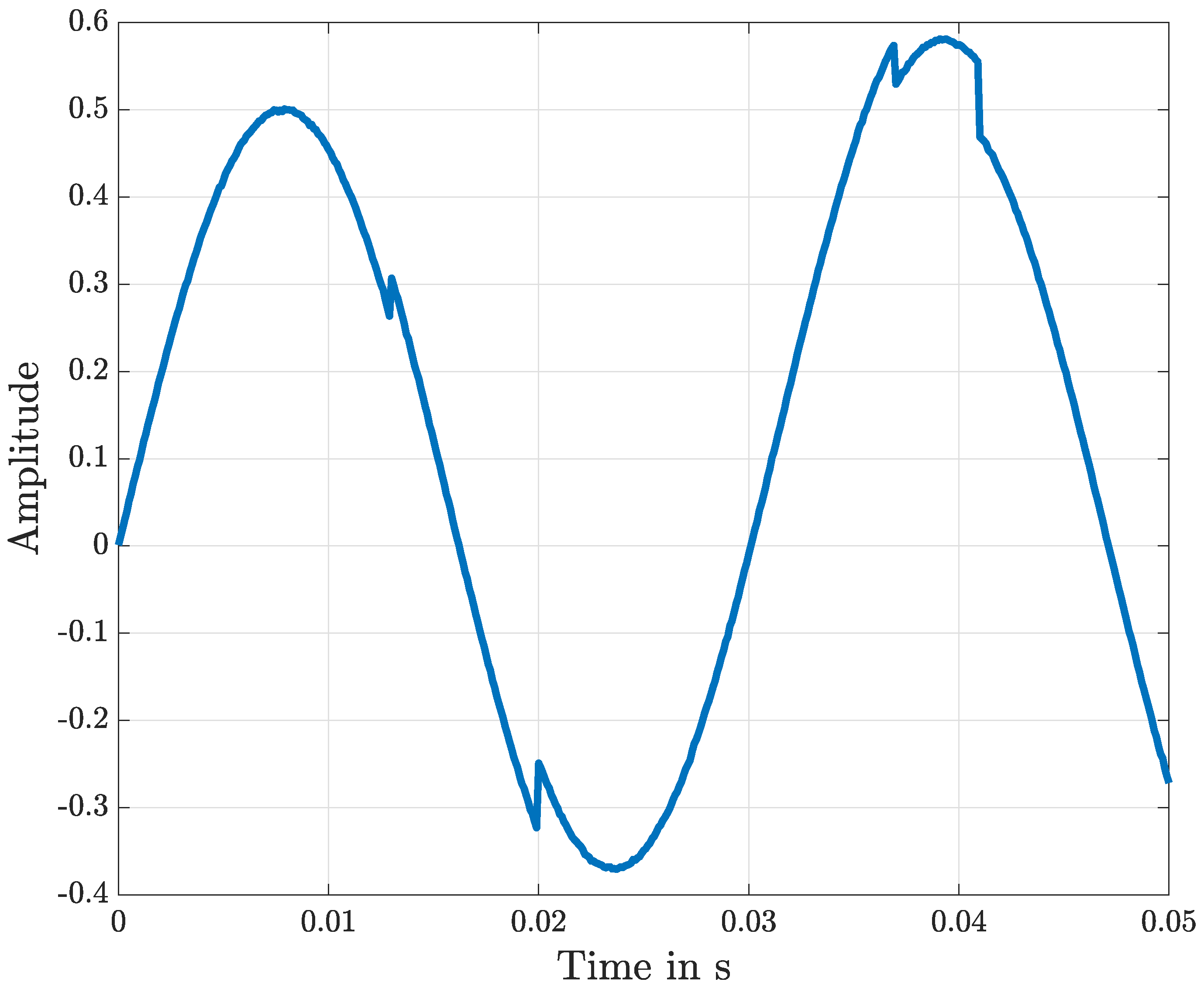

Figure 2 shows an ideal measured position signal; that is, neither noise nor faults are present in the measured data.

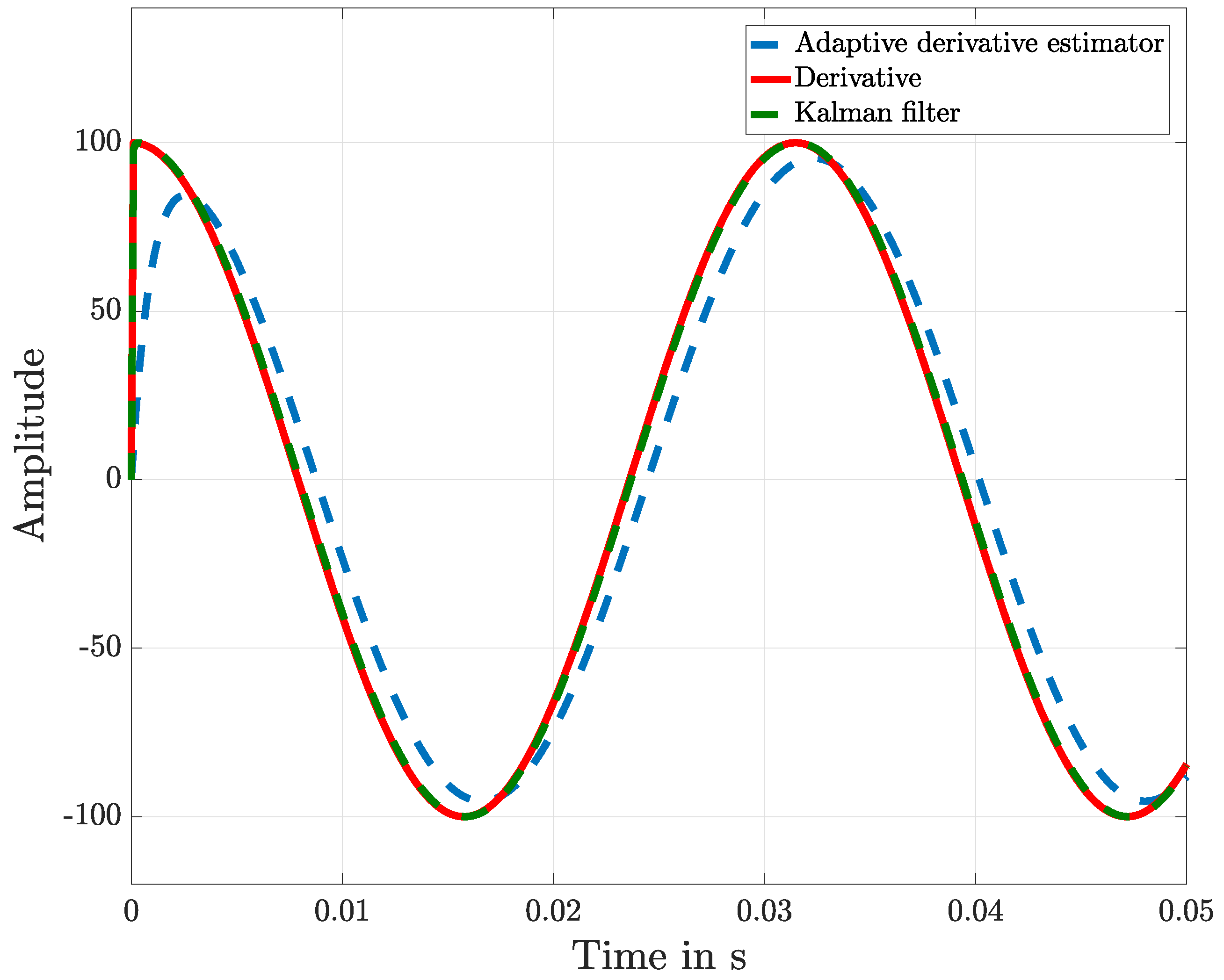

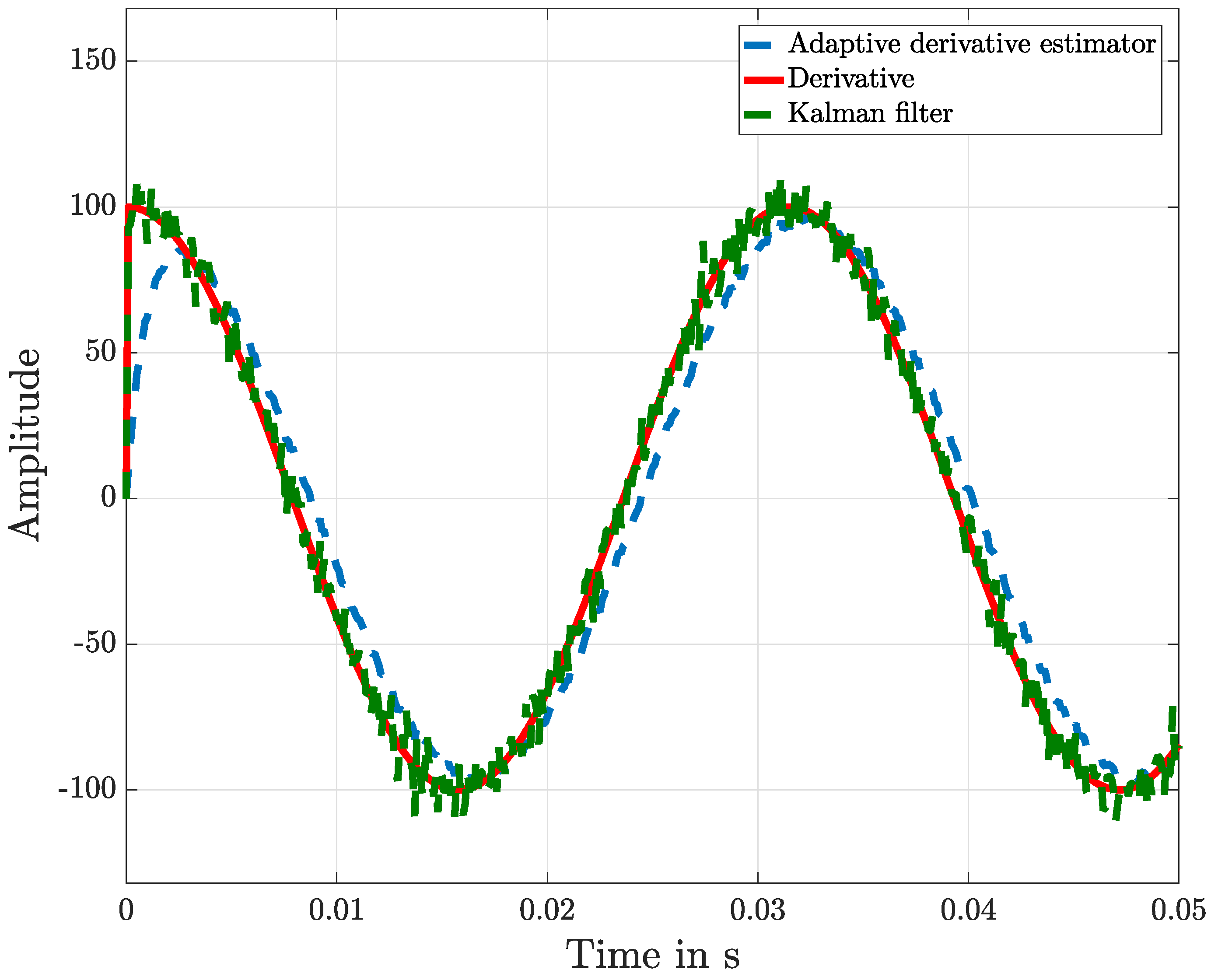

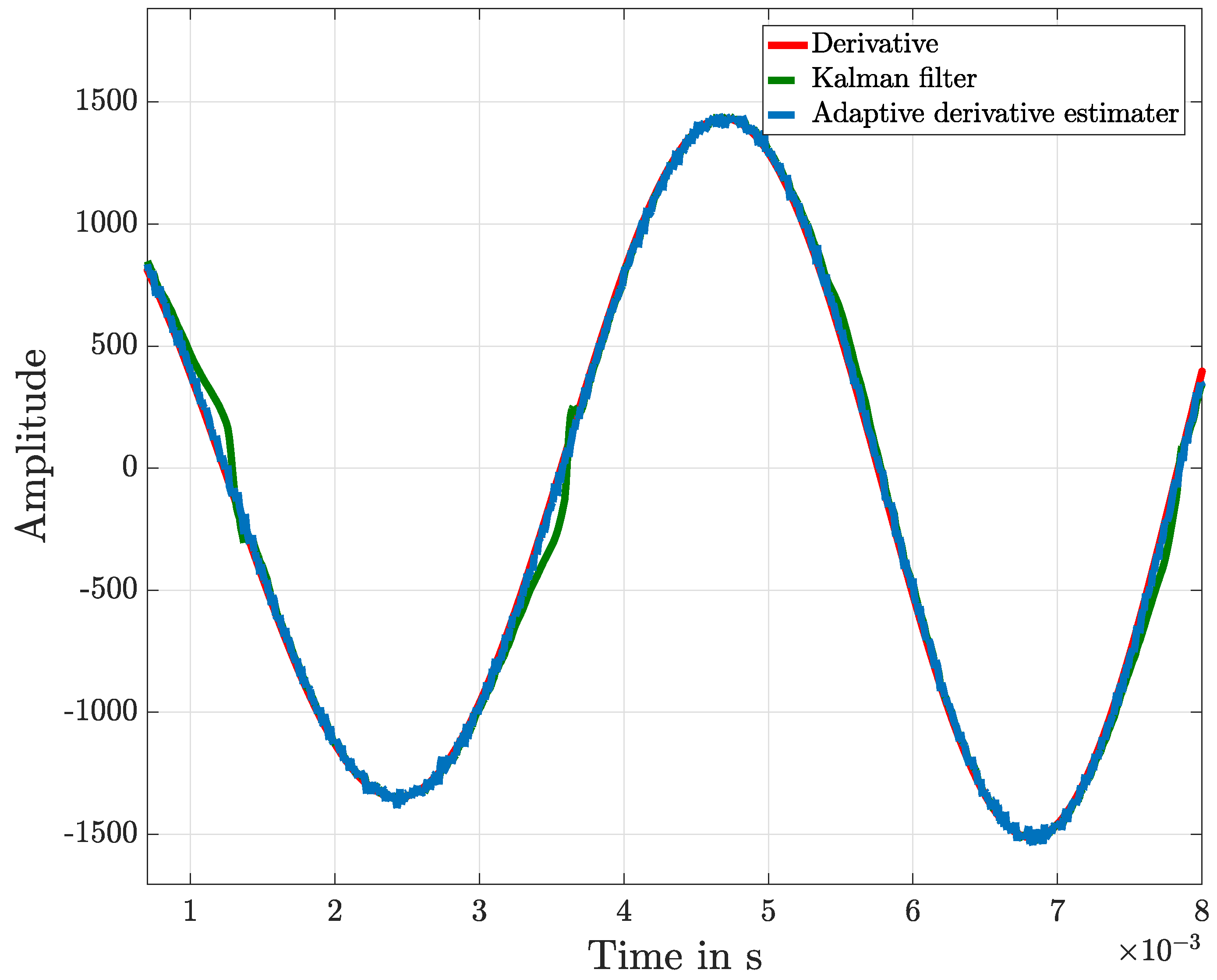

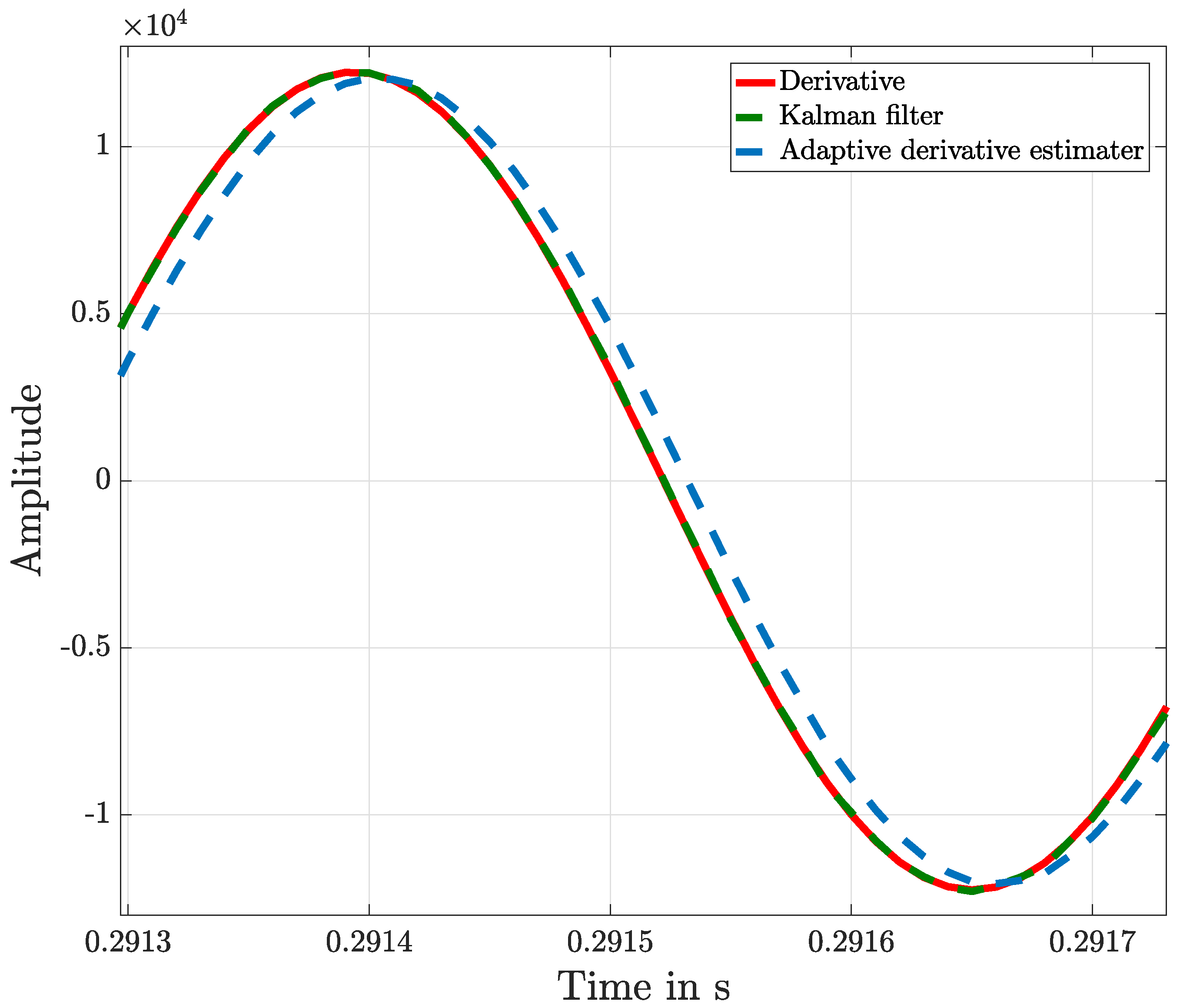

Figure 3 shows the approximated derivative of the measured sine function, and Figure 4 shows this result in detail. These figures show that, in this case, the adaptive KF performs better than the proposed algorithm structure.

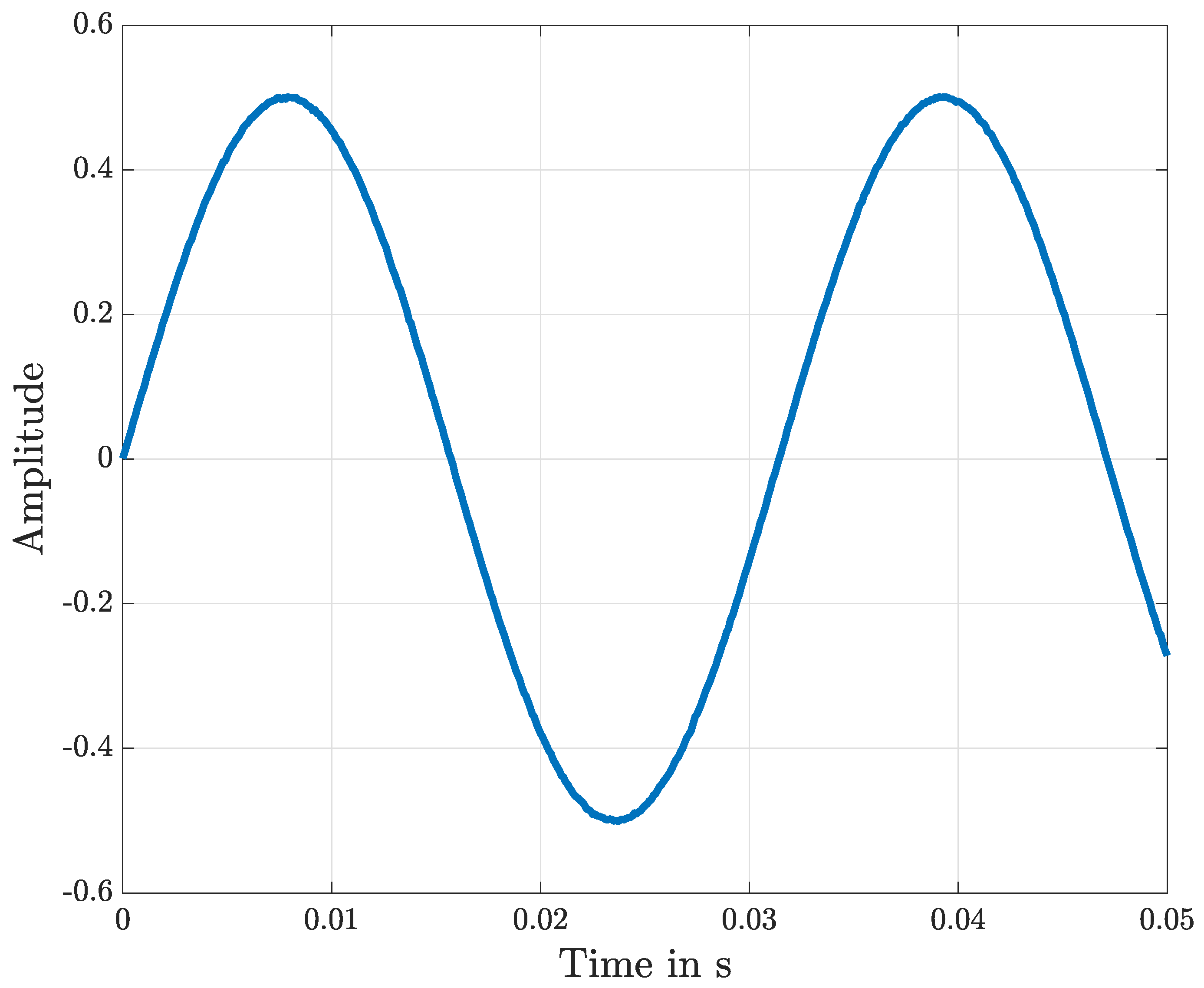

Figure 5 shows the position signal used,

, in which noise in superposition is added, and the graphical representation of the resulting velocity is shown in

Figure 6. The signal used,

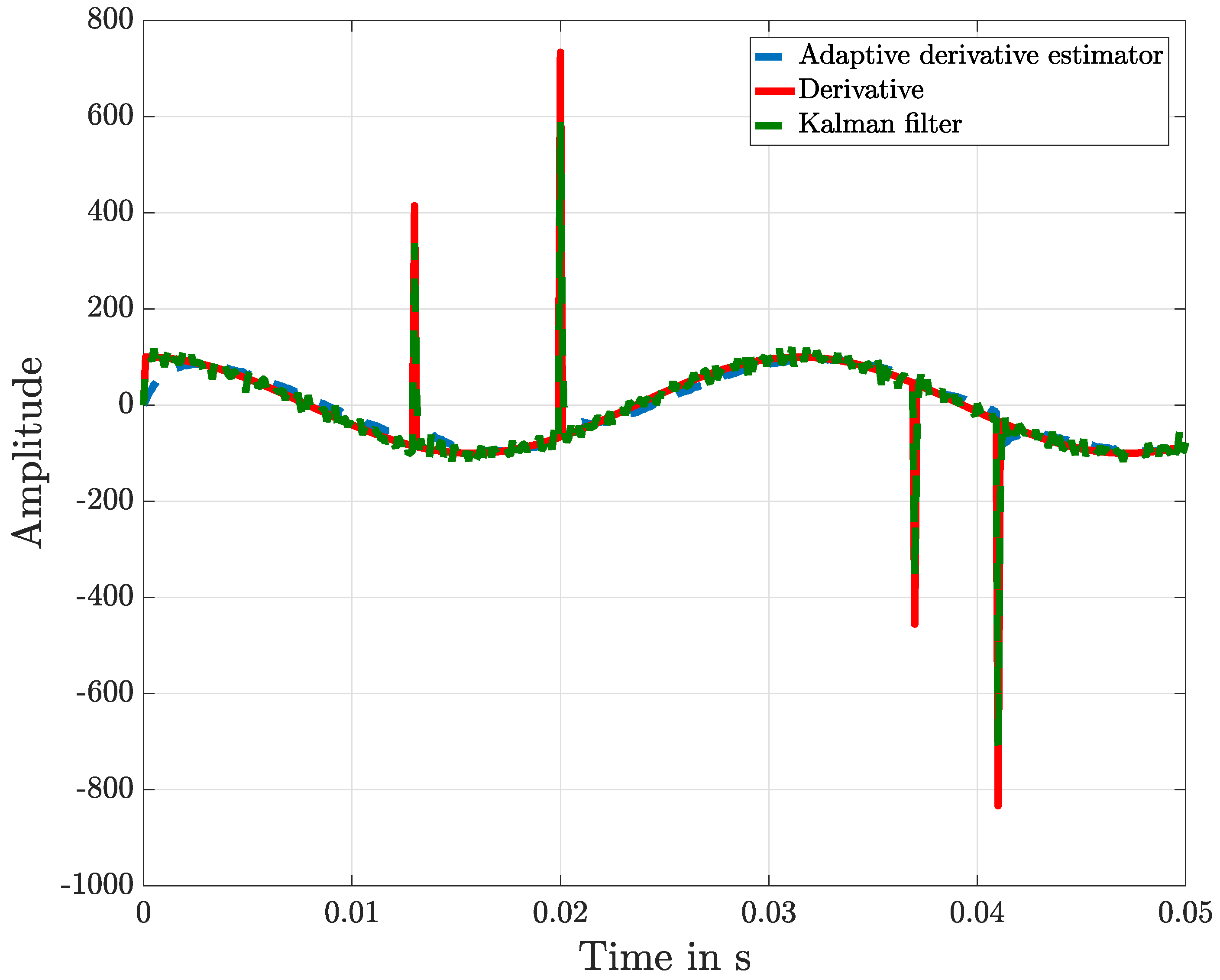

, is represented in

Figure 7 together with a fault in its measurement.

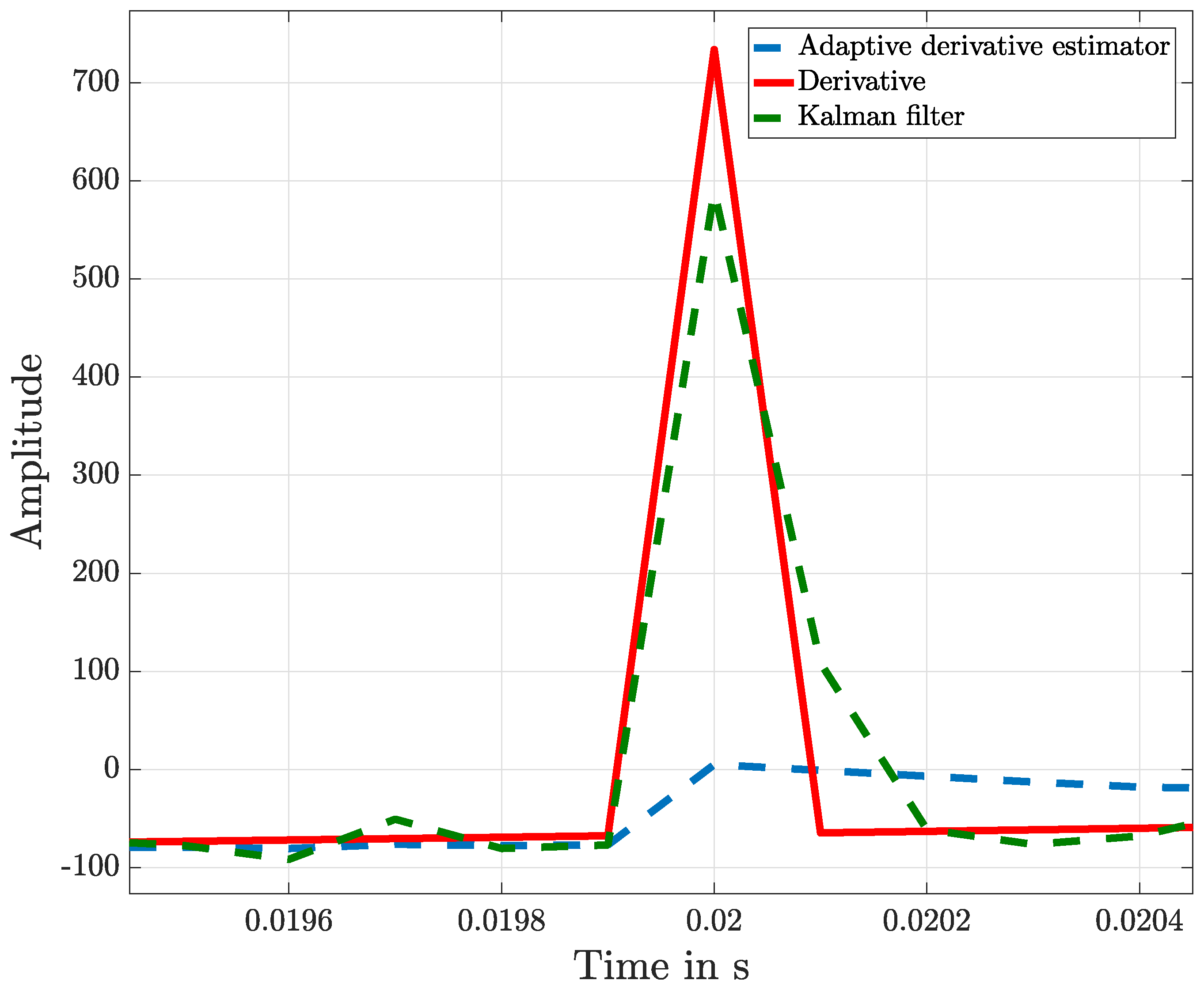

Figure 8 shows the approximated derivative of the measured signal, and Figure 9 shows this result in detail. In the presence of faults as well, and using a more appropriate adaptation of the adaptive KF, the two methods offer similar results.

Table 1 presents, for different input signals, the estimation errors of both adaptive estimators measured by the Euclidean norm.

In the absence of noise and faults, the adaptive derivative estimator performs worse than the derivative obtained through the adaptive KF.

In the presence of noise, and using a more appropriate adaptation of the adaptive KF, the two methods offer similar results. In the presence of faults, the proposed algorithm structure shows better results.

Figure 10 shows part of a chirp input signal whose frequency starts at 200 Hz and reaches 2 kHz in 0.3 s. Because the derivative of a sinusoid of frequency f has an amplitude proportional to f, the signal to be approximated changes its amplitude linearly with the frequency over time. The low-frequency part of the chirp input signal is presented in Figure 11, and the high-frequency part is shown in Figure 12, which also shows the resulting tracking of the polynomial adaptive KF and of the proposed algorithm structure.
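The linear amplitude growth can be checked with a short sketch: for a linear chirp y(t) = sin φ(t) with instantaneous frequency f(t) = f0 + (f1 − f0)t/T1, the derivative is φ'(t) cos φ(t), whose envelope is 2πf(t). The parameters mirror the 200 Hz to 2 kHz sweep in 0.3 s described above; the unit amplitude, the 1 MHz sampling rate, and the function name are assumptions.

```python
import numpy as np

F0, F1, T1 = 200.0, 2000.0, 0.3   # sweep from F0 to F1 (Hz) in T1 seconds
FS = 1_000_000.0                  # sampling rate, far above the Nyquist rate for 2 kHz


def chirp_and_derivative(t):
    """Unit-amplitude linear chirp, its exact derivative, and the derivative envelope."""
    k = (F1 - F0) / T1                          # sweep rate in Hz/s
    phase = 2 * np.pi * (F0 * t + 0.5 * k * t**2)
    inst_freq = F0 + k * t                      # instantaneous frequency f(t)
    envelope = 2 * np.pi * inst_freq            # amplitude of dy/dt grows linearly with f(t)
    return np.sin(phase), envelope * np.cos(phase), envelope


t = np.arange(0.0, T1, 1.0 / FS)
y, dy, env = chirp_and_derivative(t)
dy_numeric = np.gradient(y, 1.0 / FS)          # central differences in the interior
```

Over the sweep the derivative amplitude grows tenfold, from 2π·200 to 2π·2000, which is why a fixed-bandwidth approximator tracks the low-frequency part better than the high-frequency part.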

A fault is defined as an abrupt change in amplitude over time and is therefore characterized by high amplitude and high-frequency content. In this context, the proposed algorithm can localize faults better because it is tuned to the desired signal through the choice of .
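A small sketch of why an abrupt change stands out in the output of a first-order approximator: with the same hypothetical forward Euler realization as in the earlier illustrations (α = 1/μ, an assumption rather than the paper's exact structure), a step of height h at sample k0 passes through the direct feedthrough term αy and produces a peak of about αh exactly at k0, which then decays with the discrete pole 1 − T/μ. The sine, the step height, and μ are illustrative assumptions.

```python
import numpy as np


def approx_derivative(y, T, mu):
    """Forward-Euler first-order derivative approximator (assumed form), alpha = 1/mu."""
    a = 1.0 / mu
    z = -a * y[0]
    out = np.empty_like(y)
    for k, yk in enumerate(y):
        out[k] = z + a * yk
        z += T * (-a * z - a * a * yk)
    return out


T, mu = 1e-3, 0.01
t = np.arange(0.0, 1.0, T)
k0, h = 600, 1.0                     # fault: abrupt jump of height h at sample k0
y = np.sin(2 * np.pi * t)
y[k0:] += h
d = approx_derivative(y, T, mu)
k_peak = int(np.argmax(np.abs(d)))   # index of the largest derivative estimate
```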

The proposed algorithm structure does not need to be tuned; in other words, it is tuned through the sampling time, whose upper bound is generally fixed by the Shannon sampling theorem. Here, represents the time constant of the approximating derivative, which is calculated adaptively through the least squares method. The simulation shows that, once tuned, the KF gives better results at high frequencies than the proposed algorithm structure; however, in the frequency range for which is consistently chosen, the proposed algorithm gives results similar to those of the KF.
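As a rough guide to the frequency range in which the choice of the time constant is consistent, the following sketch uses the assumed continuous form ŷ_d = s/(μs + 1)·y (an assumption, as in the earlier illustrations). Relative to the ideal differentiator, the magnitude ratio is 1/√(1 + (ωμ)²), so a tolerance `tol` on that ratio gives a usable band f ≤ √(1/(1 − tol)² − 1)/(2πμ); the forward Euler version additionally needs T < 2μ. The function names and the tolerance are hypothetical, and only the magnitude is considered here, although the phase lag atan(ωμ) also grows with frequency.

```python
import math


def magnitude_ratio(f, mu):
    """|H(jw)| / w for H(s) = s / (mu*s + 1); equals 1 for an ideal differentiator."""
    w = 2 * math.pi * f
    return 1.0 / math.sqrt(1.0 + (w * mu) ** 2)


def usable_bandwidth(mu, tol):
    """Largest frequency (Hz) at which the magnitude ratio stays >= 1 - tol."""
    return math.sqrt(1.0 / (1.0 - tol) ** 2 - 1.0) / (2 * math.pi * mu)


def max_sampling_time(mu):
    """Forward Euler keeps the discrete pole 1 - T/mu inside the unit circle for T < 2*mu."""
    return 2.0 * mu


# Usage: with mu = 1 ms and a 5 % magnitude tolerance, the band is about 52 Hz.
f_band = usable_bandwidth(1e-3, 0.05)
```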

5. Conclusions

This paper deals with the approximation of the first derivative of an input signal using a first-order dynamic system in order to avoid spikes and noise. It presents in detail an adaptive derivative estimator for fault detection using a suboptimal dynamic system. After formulating the problem, a proposition and a theorem were proven for a possible approximation structure, which consists of a dynamic system. In particular, a proposition based on a Lyapunov approach was proven to show the convergence of the approximation. The proven theorem is constructive and directly shows the suboptimality condition in the presence of noise. Simulation results were compared with the derivative realized using an adaptive KF and with the exact mathematical derivative. It was shown that the proposed adaptive suboptimal auto-tuning algorithm structure does not depend on the setting of the parameters. Based on these results, an adaptive algorithm was conceived to calculate the derivative of an input signal with asymptotic convergence, that is, convergence over an infinite time horizon. In the absence of noise and faults, the proposed algorithm performed worse than the derivative obtained through the adaptive KF. In the presence of noise, and using a more appropriate version of the adaptive KF, the two methods offered similar results. In the presence of faults, the proposed algorithm structure showed better results.

Author Contributions

Conceptualization, M.S. and P.M.; software, M.S. and P.M.; validation, M.S. and P.M.; formal analysis, M.S. and P.M.; investigation, M.S. and P.M.; resources, M.S. and P.M.; data curation, M.S. and P.M.; writing–original draft preparation, M.S. and P.M.; writing–review and editing, M.S. and P.M.; visualization, M.S. and P.M.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

Nomenclature

| Symbol | Description |
|---|---|
|  | Adaptive least squares parameter |
|  | State vector of Kalman filter |
|  | Derivative error |
| k | Discrete variable |
|  | Kalman gain |
|  | Parameter |
|  | Noise signal |
|  | z-transformed noise signal |
|  | Process noise covariance matrix |
|  | Derivative function |
|  | Approximated derivative function |
|  | z-transformed derivative function |
|  | Approximated derivative function |
|  | z-transformed signal to be differentiated, with or without noise |
|  | Signal to be differentiated |
|  | Polynomial expression of the signal |
|  | Position signal with noise |
|  | Sampling time |
|  | Measurement noise covariance matrix |

References

- Rafael Morales Fernando, R.J.D.G.; López, J.C. Real-Time Algebraic Derivative Estimations Using a Novel Low-Cost Architecture Based on Reconfigurable Logic. Sensors 2014, 14, 9349–9368.

- Ibrir, S.; Diop, S. A numerical procedure for filtering and efficient high-order signal differentiation. Int. J. Appl. Math. Comput. Sci. 2004, 14, 201–208.

- Esfandiari, F.; Khalil, H.K. Output feedback stabilization of fully linearizable systems. Int. J. Control 1992, 56, 1007–1037.

- Dabroom, A.M.; Khalil, H.K. Discrete-time implementation of high-gain observers for numerical differentiation. Int. J. Control 1999, 72, 1523–1537.

- Chitour, Y. Time-varying high-gain observers for numerical differentiation. IEEE Trans. Autom. Control 2002, 47, 1565–1569.

- Schimmack, M.; Mercorelli, P. A sliding mode control using an extended Kalman filter as an observer for stimulus-responsive polymer fibres as actuator. Int. J. Model. Identif. Control 2017, 27, 84–91.

- Mercorelli, P. Robust feedback linearization using an adaptive PD regulator for a sensorless control of a throttle valve. Mechatron. J. IFAC 2009, 19, 1334–1345.

- Mercorelli, P. A Decoupling Dynamic Estimator for Online Parameters Identification of Permanent Magnet Three-Phase Synchronous Motors. In Proceedings of the IFAC 16th Symposium on System Identification, Brussels, Belgium, 11–13 July 2012; Volume 45, pp. 757–762.

- Dabroom, A.; Khalil, H.K. Numerical differentiation using high-gain observers. In Proceedings of the 36th IEEE Conference on Decision and Control, San Diego, CA, USA, 12 December 1997; Volume 5, pp. 4790–4795.

- Levant, A. Robust exact differentiation via sliding mode technique. Automatica 1998, 34, 379–384.

- Levant, A. Universal single-input-single-output (SISO) sliding-mode controllers with finite-time convergence. IEEE Trans. Autom. Control 2001, 46, 1447–1451.

- Yu, X.; Xu, J. Nonlinear derivative estimator. Electron. Lett. 1996, 32, 1445–1447.

- Levant, A. Higher order sliding modes and arbitrary-order exact robust differentiation. In Proceedings of the 2001 European Control Conference (ECC), Porto, Portugal, 4–7 September 2001; pp. 996–1001.

- Levant, A.; Livne, M.; Yu, X. Sliding-Mode-Based Differentiation and Its Application. In Proceedings of the 20th IFAC World Congress, Toulouse, France, 9–14 July 2017; Volume 50, pp. 1699–1704.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).