In this paper, we propose a novel network representation algorithm, MVNR, to learn multi-view representations of vertices in a network. Firstly, we introduce the multi-view strategy to define the k-step network features. Meanwhile, we introduce link prediction index to evaluate the link weights for existing and non-existing edges. Based on the link weights, we then propose a new approach to calculate the corresponding weights for each view’s representations. Finally, we propose a new approach to jointly learn the representations based on the different views and weight information. Consequently, our model integrates rich local structural information associated with the network, capturing the global structural properties of the network.

3.2. Feature Extraction for Different k-Step Networks

DeepWalk uses Skip-Gram model for big-scale network representation learning, which captures context vertices by random walk algorithm. The objective of the DeepWalk is to maximize the following average log probability:

where

denotes the number of vertices,

denotes the current vertex and

denotes the context vertices of the current vertex

.

is the number of context vertices before and after the current vertex

.

indicates the context window size to be 2

t + 1. Moreover, the conditional probability

can be defined by

where

and

is the input and output latent variable, namely, the input and output representation vectors of

.

Based on DeepWalk and PageRank, the research achievements from TADW [

29] show that DeepWalk aims to factorize the following matrix:

where

, if

, and

otherwise.

is the degree value of vertex

.

As shown in Equation (3), matrix

captures the

t-th step neighboring vertices. Node2vec adopts BFS and DFS to capture higher order neighboring vertices, GraRep captures higher order network feature matrix by the

k-step probability transition matrix

, and

is the multiplication form of multiple

, where

,

is an adjacency matrix,

is a diagonal matrix. One shortage of GraRep is that the inverse matrix

does not exist when the network is sparse. Based on the Equation (2), TADW [

29] presents a simplified target matrix and finds a balance between speed and accuracy. Consequently, matrix

can be formulated as follows:

Matrix captures the first-order and second-order neighbors for vertices with small computation complexity.

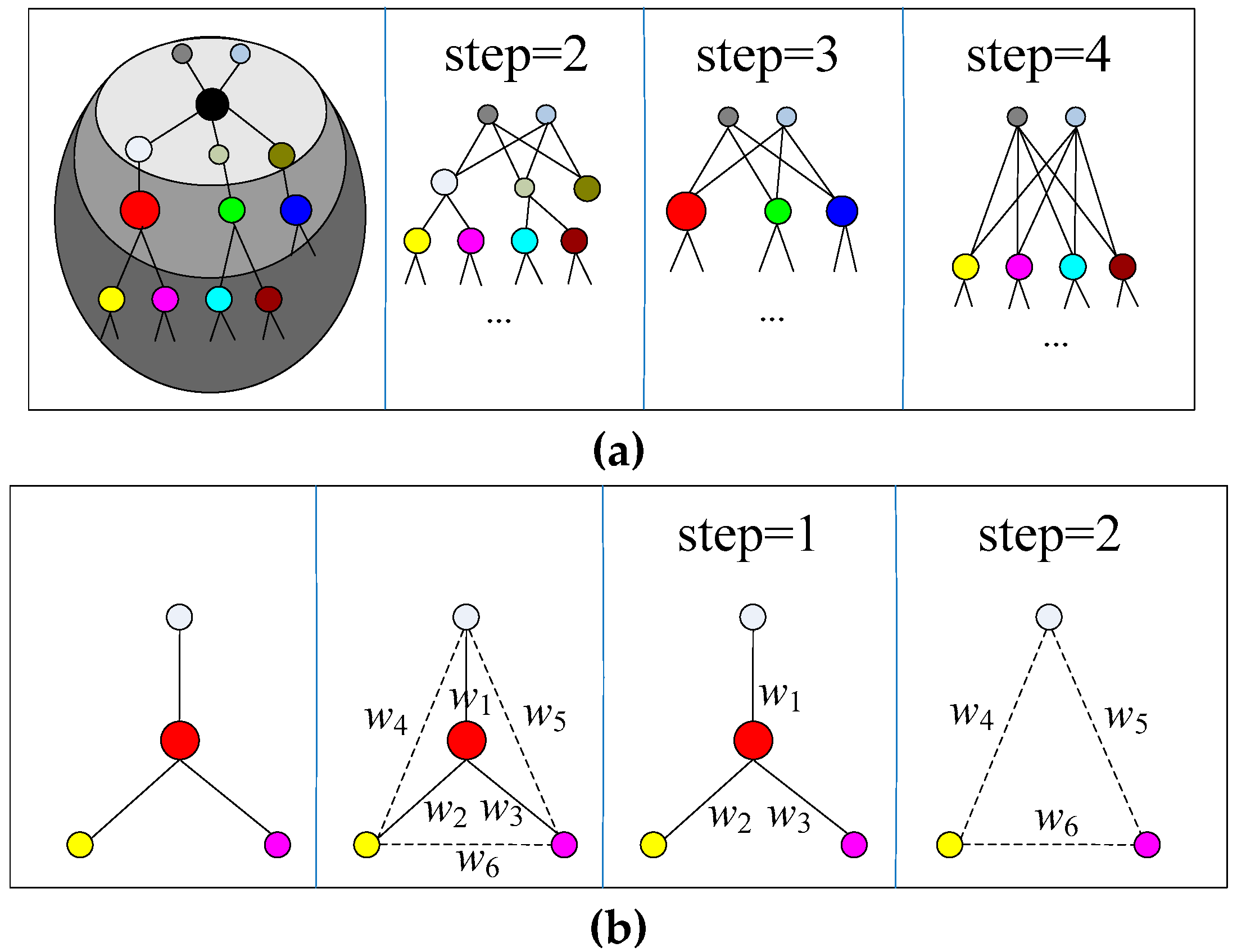

GraRep uses the matrix to represent higher order feature matrix. In MVNR, we capture higher order features by defining the adjacency matrix as GraRep, but we perform some optimization operations for the matrix . Unlike GraRep algorithm, is the adjacency matrix of k-step network. In GraRep algorithm, can be regarded as the variation form of . can be regarded as the reachability matrix within k steps. Therefore, the optimization operations of adopt a similar form as GraRep, but the essence of matrix and is very different. Moreover, the structure feature matrix constructions based on and are also very different. These differences are the optimization operations of in this paper.

Here, we first adopt to denote the adjacency matrix of k-step network and .

Matrix

can be formulated as follows:

where

is the adjacency matrix of the

k-step network,

is the matrix elements of matrix

and adjacency matrix

consists of 0 and 1, so we set the element value as 0 or 1 in

, thus

if

, and

otherwise, where

is the matrix elements of matrix

. The Equation (4) is different from the transition matrix of GraRep, for each

k-step network, we reconstruct the

k-step network based on matrix

and

. In fact,

is the probability of reachability.

For each step network, we can get the network structural features as follows:

Here, is the adjacency matrix of the k-th network, which is different from the probability transition matrix in Equation (3). The Equation (5) and Equation (3) have the same form, but they contain different elements. only consists of element 0 and 1. , if , and otherwise. is the degree value of vertex . Both transition probability matrix and adjacency matrix are the structure feature matrices of the network. Moreover, GraRep factorizes the transition probability matrix based on adjacency matrix and achieves better network representation performance. The factorization objective matrix of SGNS algorithm is the Equation (2), but the TADW algorithm factorizes the matrix in Equation (3) simplified by Equation (2) and achieves excellent network representation learning performance. Therefore, we replace the transition probability matrix in Equation (3) with the adjacency matrix . On the one hand, it is based on the above comparative analysis, on the other hand, it is based on such consideration that the factorization of adjacency matrix has lower computational complexity.

Therefore, matrix

can be regarded as the structural features of

k-step network, which is essentially different from the feature matrix of GraRep. The structure features of GraRep is as follows:

Here, is the number of vertices in graph G, is the element from i-th row and j-th column of the matrix , and .

3.3. Feature Weighting for Different k-Step Networks

For the different k-step networks, the same vertex pairs should be given the different weight values. However, DeepWalk, GraRep and node2vec neglect the weight information for higher order neighbors. Therefore, the proposed MVNR in this paper solves this problem by following procedures.

We first introduce Matrix Forest Index (MFI) to evaluate the weights between vertices for 1-step network. For the weights between vertices on k-step network. We compare the performance of the MFI algorithm with some classical link prediction algorithms, such as the algorithms based on common neighbor, the algorithms based on random walk and the algorithms based on path. We find that MFI achieves the best link prediction performance on several real citation network datasets. In addition, MFI can be calculated only through the Laplacian matrix of the network. The input of Laplacian matrix is the adjacency matrix of the network. The input matrix is exactly the same as that of the proposed MVNR algorithm. Unlike other link prediction algorithms, the input, calculation procedure and result of MFI can be embedded in the learning framework of the MVNR algorithm. Therefore, we choose the MFI algorithm to measure the weights between vertices.

MFI is formulated as follows:

Here, is a matrix which is constructed by the matrix forest index. is the Laplacian matrix of G, is an identity matrix. can be calculated based on adjacency matrix, its detailed calculation method can be found in the Algorithm 1.

We do not use MFI algorithm and adjacency matrix of

k-step to calculate the weights between vertices in

k-step network. We calculate the weights between vertices in

k-step network by using the weights between vertices in 1-step network and the adjacency matrix of

k-step network. Because the weights calculated by MFI in 1-step network include the weights of existing edges and the future connection probabilities of non-existing edges between vertices. Only through such calculation can the weights of different

k-step networks be different and hierarchical and also play a role in adjusting the weights of different

k-step network representations. For the

k-step network, we define its weight matrix as follows:

where

,

,

.

is the element of the adjacency matrix

.

As mentioned above, the link weights consist of the certainty degrees of existing edges and the link probabilities of non-existing edges. The certainty degrees of existing edges are applied to 1-step network and the link probabilities of non-existing edges are used to k-step networks where k > 2. Through the Equations (7) and (8), we find that we only compute the value of MFI for one time to different k-step networks and then through the MFI matrix, we construct the weight matrices of different k-step networks. For the 1-step network, we only retain the similarity values between two vertices with one edge in the MFI matrix and delete the similarity values between two vertices without any edge in the MFI matrix. Therefore, we define the similarity value between two vertices with one edge as the certainty degrees of existing edges. For the 2-step network, we only retain the similarity values between two vertices, where these two vertices are reachable within two steps and the similarity value is the future link probabilities of non-existing edges of the 1-step network. Specifically, the weights of the 2-step network are the similarity values between the current central vertex and the neighbor’s neighboring vertices. Therefore, we regard this kind of similarity value as the future link probabilities between two vertices without any edge. The certainty degrees of existing edges and the link probabilities of non-existing edges are calculated by the MFI matrix of 1-step network. The specific calculation process is shown in the Equation (8) and Algorithm 1.

For each k-step network, we reconstruct the k-step weight matrix based on matrix S and matrix . We only retain the link weights of existing edges and neglect the weights of non-existing edges between vertices. The weight matrix factor is not only to balance k-step network representation but also it can be regarded as network weight features, which can be integrated into network representations.

In the k-step network, the edge relationship has been established between vertices, where the edge relationship does not exist in the original network. Weight matrix of k-step network can not only balance network representation vectors of different k-step networks but also can be regarded as network weight features of the network, which can be integrated into network representation framework. Specifically, we construct the edge weight matrix of k-step network and the weight matrix of k-step network is different. The weights of the edges of the original network are larger. The edge relationship of the 2-step network is the reachability relationship within 2 step between two vertices in the original network, which can be set as 1 and 0. By MFI similarity calculation, the edge weights of 2-step network are the similarity values between the current vertex and the neighbor’s neighboring vertices in the original network. Therefore, the weights in the 2-step network is less than that in the 1-step network. So, the higher the order of the network, the smaller the edge weight in weight matrix is. By using the hierarchical weight matrix, we can combine the weight matrix with the network structure feature matrix for joint network representation learning. Thus, different weight factors are assigned to different k-step network representation vectors by weight matrix.

3.4. Joint Learning of MVNR

Suppose that matrix

admits an approximation of low rank

k, where

. Based on the matrix factorization, Yu et al. [

33] propose a matrix factorization approach with a penalty term constraint, which aims to find

and

and minimize the likelihood

where

is a harmonic factor to balance two components in Equation (9). Specifically, Equation (9) aims at factorizing

into two matrices

and

, where

. Here, matrix

can be regarded as the feature matrix of the network

G for the task of network representation learning. Matrix

can be regarded as the learnt representation matrix of the network

G. We do not use the model proposed by Equation (9) in MVNR algorithm.

We use the Inductive Matrix Completion (IMC) algorithm presented by Natarajan and Dhillon [

34], which adopts two known matrices to factorize matrix

as follows:

where

denotes the sample set of matrix

, here,

and

are two known feature matrices.

is the hyper-parameter to balance two components

and

. IMC aim at learning the matrix

and matrix

to meet

. The Equation (10) is originally applied to complete gene-disease matrix using gene and disease feature matrix. Motivated by IMC, we integrate the link weights of existing edges into Equation (10), the purpose of MVNR is to solve matrices

and

to minimize the objective function as follows:

Here,

is the weight matrix of the

k-th step network,

is the hyper-parameter to balance two components

and

. For the Equation (10), we denote the matrix

as the identity matrix

. Consequently, we get the Equation (11) based on Equation (10). For each

k-step network, we denote

as representation matrix of the

k-th network. The final representation vectors can be concatenated as follows:

where

k = {1,2, …,

K} and

K is a pre-selected constant. As Equation (12), we concatenate all

k-step representations to form the global representations, which can be used in various machine learning tasks.

We introduce matrix to adjust the weights of the different k-step networks and remedy the defect of ignoring the weight information for GraRep. For the k-step network representation , we apply L2 Norm to normalize the learnt network representations which can show better performance in some evaluation tasks, such as network classification and link prediction.

We give the algorithm description of MVNR in Algorithm 1.

3.5. Complexity Analysis

In the proposed MVNR, the training procedure can be divided into the following parts: M construction, M factorization using IMC and representations concatenation. In matrix M, the number of rows equals the number of columns, meanwhile, the number of rows and columns equals the number of vertices in the network, which is defined as |V|. Thus, the time complexity of constructing matrix M is .

For the Equation (11), we introduce the optimization approach proposed by Yu et al. [

33]. For each

k-step network, we use the known weight matrix

W to factorize matrix

M of network feature based on IMC algorithm. The output of the Equation (11) is the matrices

X and

Y, where

. Therefore, the time complexity of each iteration of minimizing

X and

Y is

in Equation (10), where nnz (

M) denotes the number of non-zero elements in

M,

d denotes the vector length of the network representations. The time complexity of the representations concatenation is

.

| Algorithm 1 MVNR Algorithm. |

| Input: |

| Adjacency matrix A on network. |

| Maximum transition step K. |

| Harmonic factor λ. |

| Dimension of representation vector d. |

| Output: |

| Matrix of the network representation R. |

| Content: |

|