Improved Bilateral Filtering for a Gaussian Pyramid Structure-Based Image Enhancement Algorithm

Abstract

1. Introduction

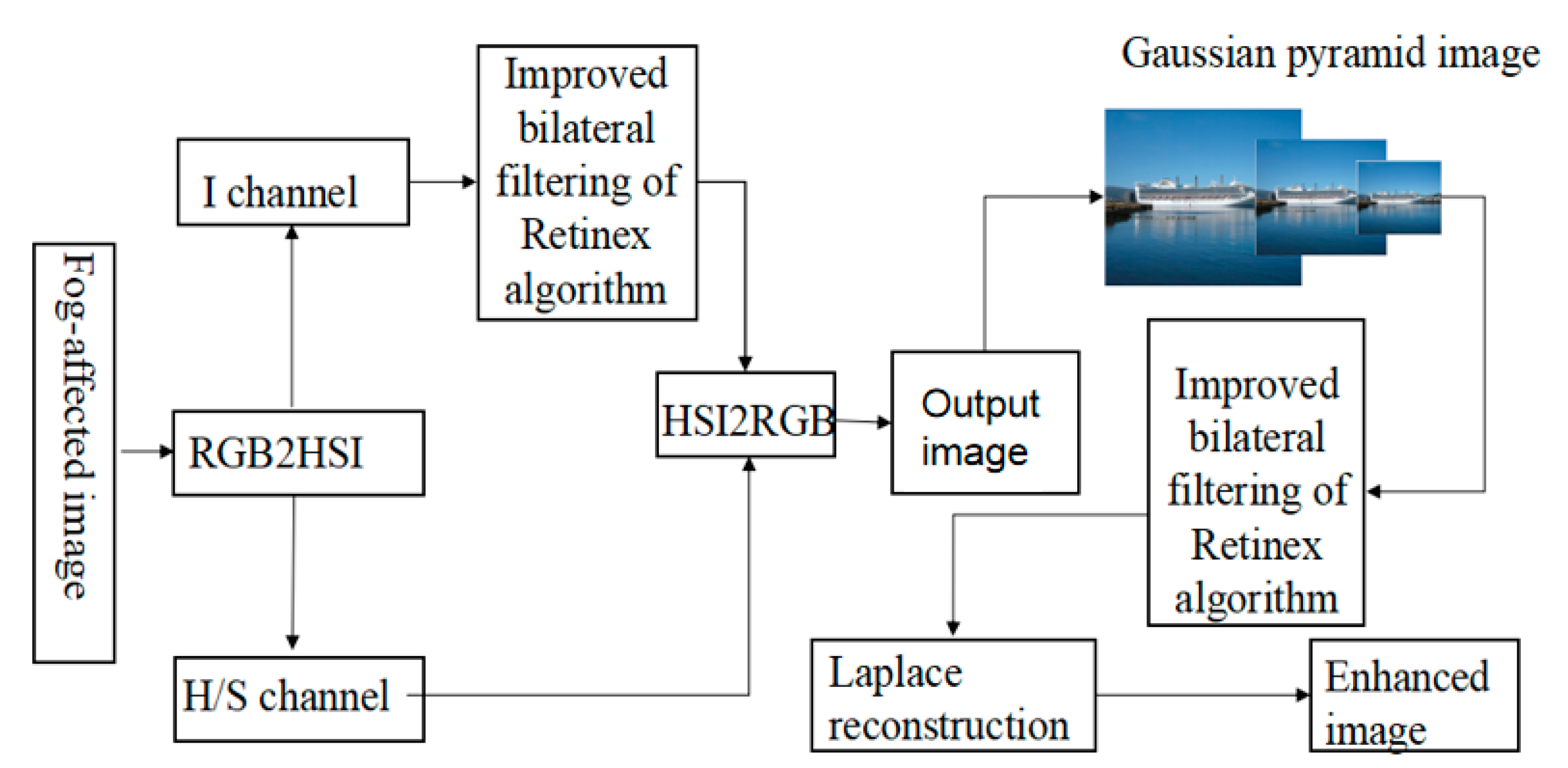

2. Improved Retinex Model

2.1. Single-Scale Retinex Model

2.2. HSI Color Space

2.3. The Improved Retinex Algorithm

3. Improved Bilateral Filtering Function

3.1. Traditional Bilateral Filtering Functions

3.2. Improved Spatial Domain Kernel Function

3.3. Improved Selection of Pixel Difference Scale Parameters

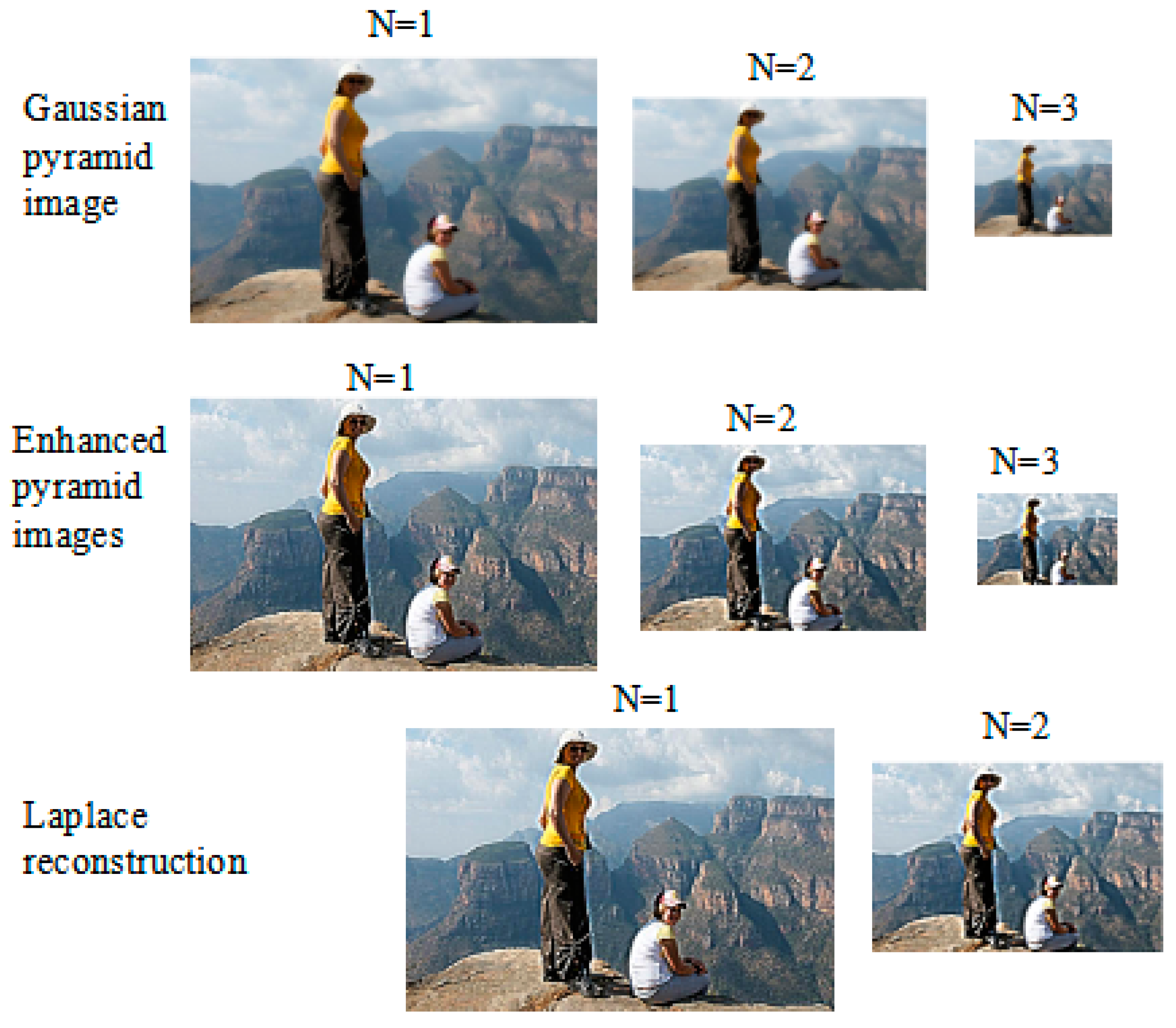

4. Gaussian–Laplacian Multi-Scale Pyramid Algorithm

- (1) The topmost image in the Gaussian pyramid is interpolated to obtain an image with the same resolution as the image in the preceding layer.

- (2) The interpolated image is subtracted from the image in the preceding layer, and the difference is stored in the Laplacian residual set. The residual is subsequently added to the interpolated image to yield the reconstructed image for that layer, which is in turn interpolated for reconstruction of the image in the layer before it.

- (3) Computation of the Laplacian residual and image interpolation continue iteratively until the reconstructed image has the same resolution as the original input. Using the terminology described above, the process can be described by Equation (12). A Gaussian–Laplacian multi-scale pyramid built from the intermediate results at different pyramid levels is presented in Figure 4. In our experiment, the sampling value of the pyramid was 3.
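Steps (1) to (3) can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the 5-tap binomial blur, the nearest-neighbour interpolation, and the function names (`blur`, `downsample`, `upsample`, `build_pyramids`, `reconstruct`) are assumptions made for illustration, and the sampling value of 3 is interpreted here as three downsampling steps.

```python
import numpy as np


def blur(img):
    """Separable 5-tap binomial blur with edge padding (assumed kernel)."""
    k = np.array([1, 4, 6, 4, 1], dtype=np.float64) / 16.0
    padded = np.pad(img, 2, mode="edge")
    h, w = img.shape
    # Horizontal pass over the padded image, then vertical pass.
    tmp = sum(k[i] * padded[:, i:i + w] for i in range(5))
    return sum(k[i] * tmp[i:i + h, :] for i in range(5))


def downsample(img):
    """Blur, then keep every second row and column."""
    return blur(img)[::2, ::2]


def upsample(img, shape):
    """Interpolate `img` to `shape` (nearest neighbour, then blur)."""
    rows = np.minimum(np.arange(shape[0]) // 2, img.shape[0] - 1)
    cols = np.minimum(np.arange(shape[1]) // 2, img.shape[1] - 1)
    return blur(img[np.ix_(rows, cols)])


def build_pyramids(img, levels=3):
    """Return the Gaussian layers and the Laplacian residual set."""
    gauss = [np.asarray(img, dtype=np.float64)]
    for _ in range(levels):
        gauss.append(downsample(gauss[-1]))
    # Residual = finer layer minus the interpolated coarser layer.
    residuals = [fine - upsample(coarse, fine.shape)
                 for fine, coarse in zip(gauss[:-1], gauss[1:])]
    return gauss, residuals


def reconstruct(top, residuals):
    """Start from the topmost layer; interpolate and add residuals."""
    current = top
    for res in reversed(residuals):
        current = upsample(current, res.shape) + res
    return current


# Usage on a synthetic grayscale image (hypothetical data).
image = np.random.default_rng(0).random((64, 48))
gauss, residuals = build_pyramids(image, levels=3)
restored = reconstruct(gauss[-1], residuals)
print(np.allclose(restored, image))  # True, up to floating-point rounding
```

Because each residual stores exactly what the interpolation loses, the reconstruction returns the input regardless of which blur or interpolation is chosen. In an enhancement pipeline, the layers or residuals would be processed before reconstruction.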

5. Experiments and Results

5.1. Results of Improved Bilateral Filtering

5.2. Subjective Analysis of Image Enhancement Algorithms

5.3. Objective Evaluation of Image Enhancement Algorithms

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Gaussian White Noise | Algorithm | PSNR | MSE | SSIM | MSSIM | VIF | FR-VIF | VSNR |
|---|---|---|---|---|---|---|---|---|
| | Original algorithm | 22.493 | 376.772 | 0.542 | 0.912 | 0.361 | 0.231 | 18.221 |
| | Modified algorithm | 27.034 | 129.231 | 0.799 | 0.964 | 0.566 | 0.379 | 26.314 |
| | Original algorithm | 20.505 | 587.780 | 0.488 | 0.898 | 0.327 | 0.205 | 17.375 |
| | Modified algorithm | 28.414 | 93.752 | 0.928 | 0.993 | 0.794 | 0.463 | 34.386 |
| | Original algorithm | 18.014 | 1035.936 | 0.451 | 0.881 | 0.284 | 0.182 | 16.101 |
| | Modified algorithm | 27.220 | 123.450 | 0.946 | 0.991 | 0.691 | 0.469 | 30.743 |
| | Original algorithm | 16.222 | 1560.708 | 0.431 | 0.869 | 0.257 | 0.170 | 15.226 |
| | Modified algorithm | 27.507 | 115.525 | 0.970 | 0.995 | 0.713 | 0.502 | 32.404 |
| | Original algorithm | 16.127 | 1610.014 | 0.418 | 0.856 | 0.249 | 0.165 | 14.992 |
| | Modified algorithm | 22.286 | 481.923 | 0.860 | 0.952 | 0.446 | 0.365 | 21.867 |

| Algorithm | PSNR | MSE | SSIM | MSSIM | VIF | IWSSIM | VSNR |
|---|---|---|---|---|---|---|---|
| OI | N/A | 0.000 | 1.000 | 1.000 | 1.000 | 1.000 | N/A |
| SSR | 18.584 | 1097.904 | 0.734 | 0.761 | 1.192 | 0.728 | 7.194 |
| MSRCR | 12.034 | 4438.279 | 0.547 | 0.761 | 0.826 | 0.731 | 12.376 |
| NLR | 13.751 | 3424.179 | 0.767 | 0.799 | 0.867 | 0.662 | 6.932 |
| INF | 10.357 | 6132.800 | 0.632 | 0.480 | 0.355 | 0.736 | 7.956 |
| Our | 24.071 | 333.602 | 0.851 | 0.914 | 0.994 | 0.894 | 13.224 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lin, C.; Zhou, H.-f.; Chen, W. Improved Bilateral Filtering for a Gaussian Pyramid Structure-Based Image Enhancement Algorithm. Algorithms 2019, 12, 258. https://doi.org/10.3390/a12120258
