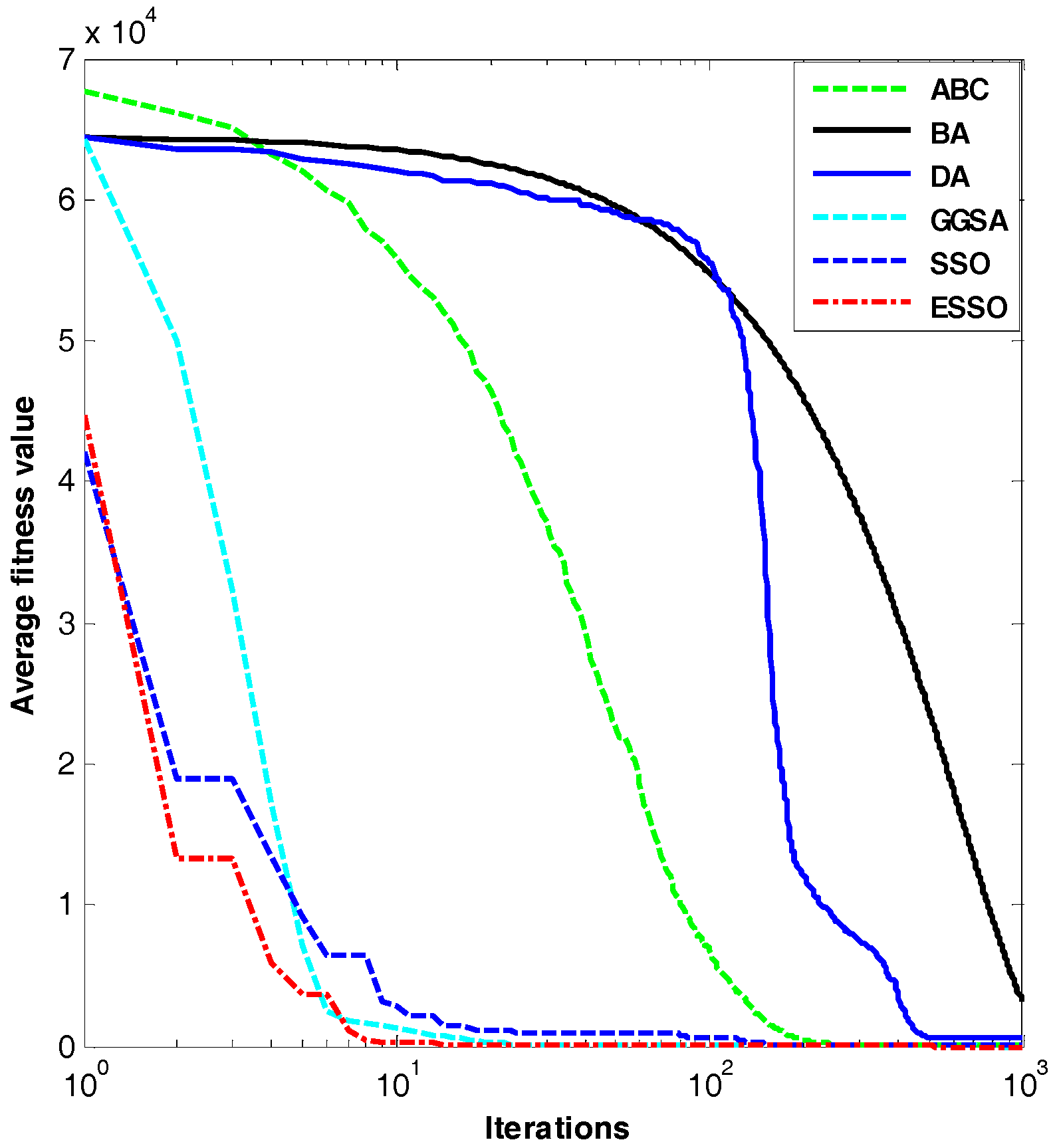

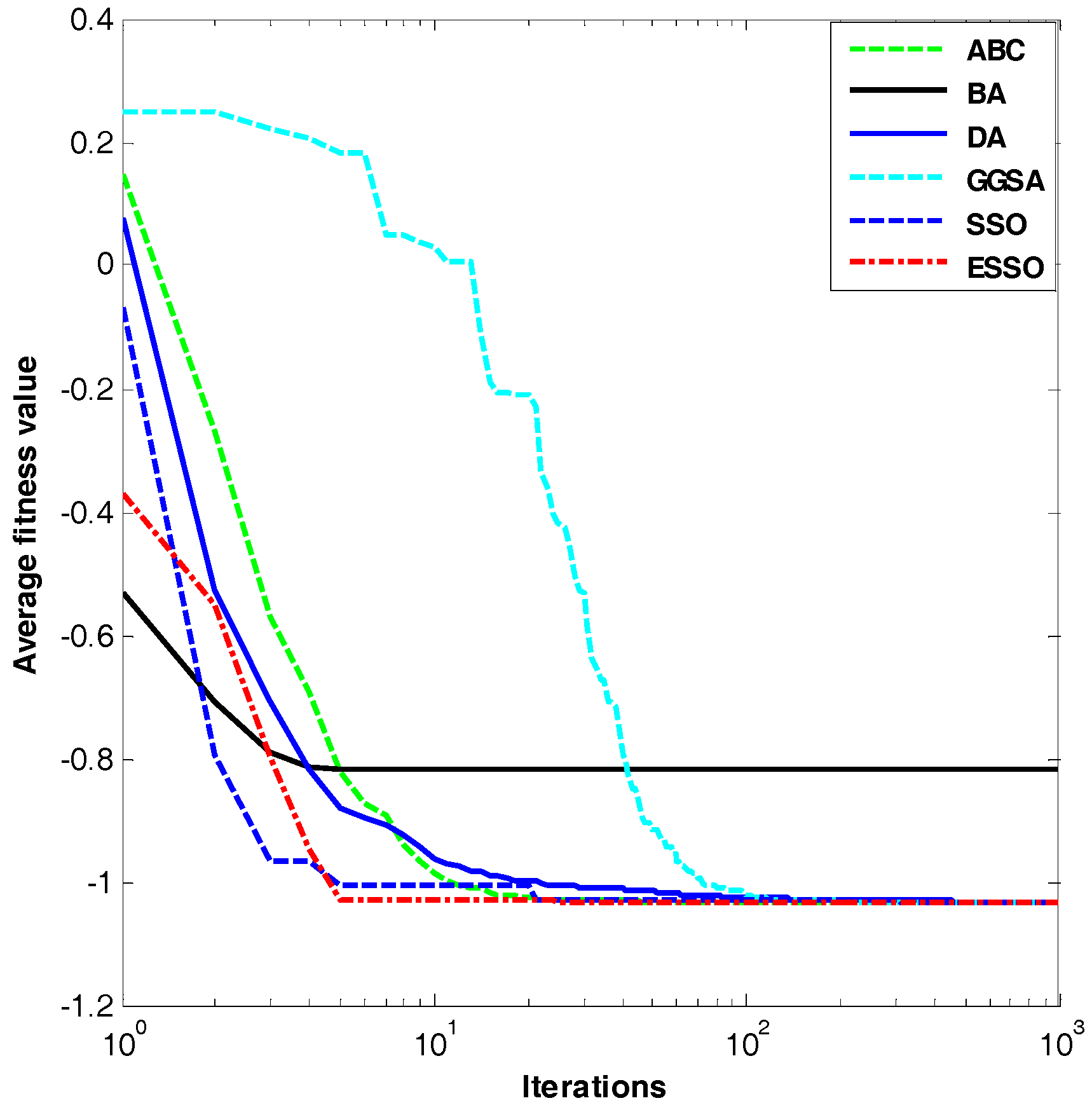

Figure 1.

Dim = 30, evolution curves of fitness value for .

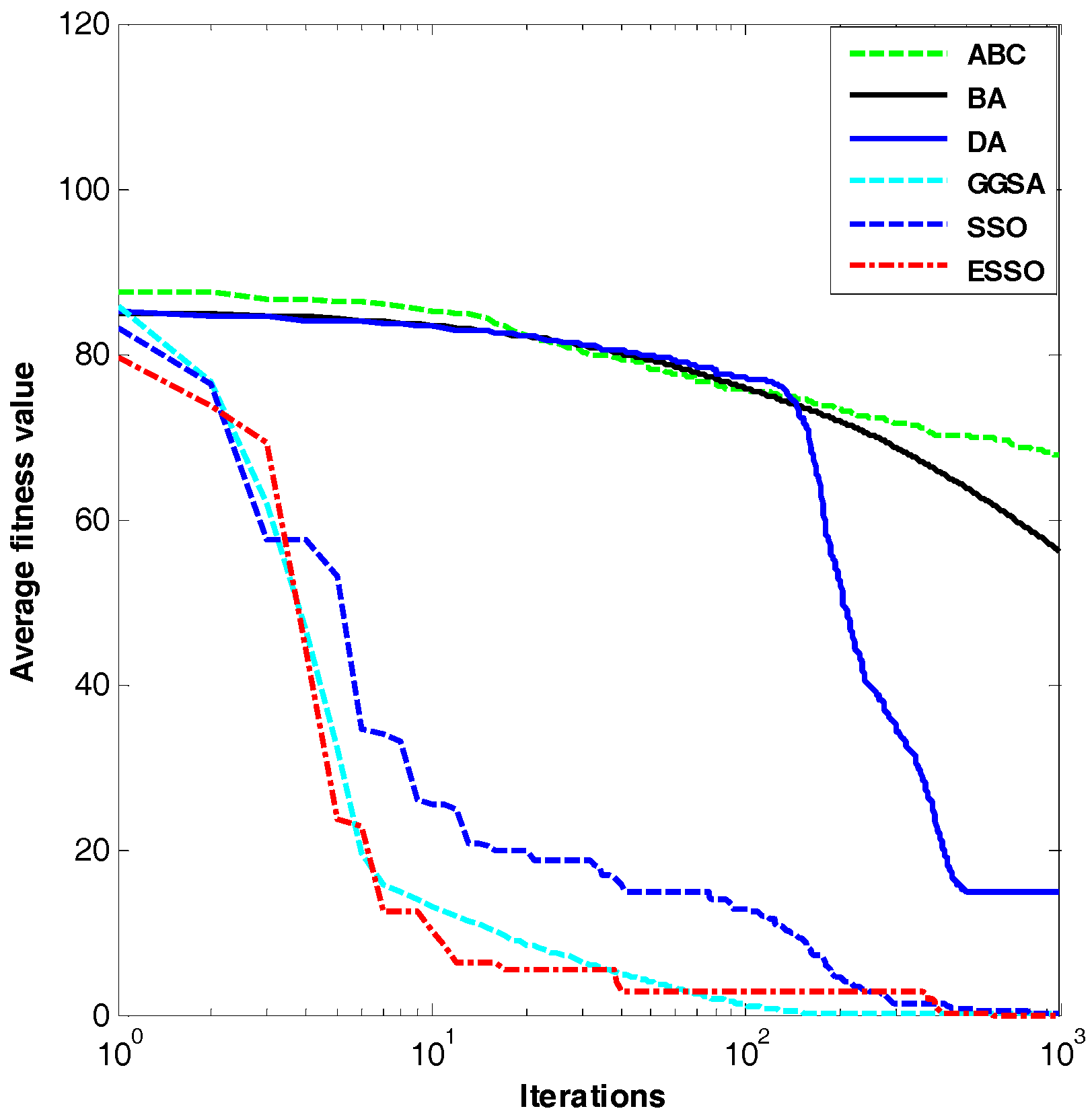

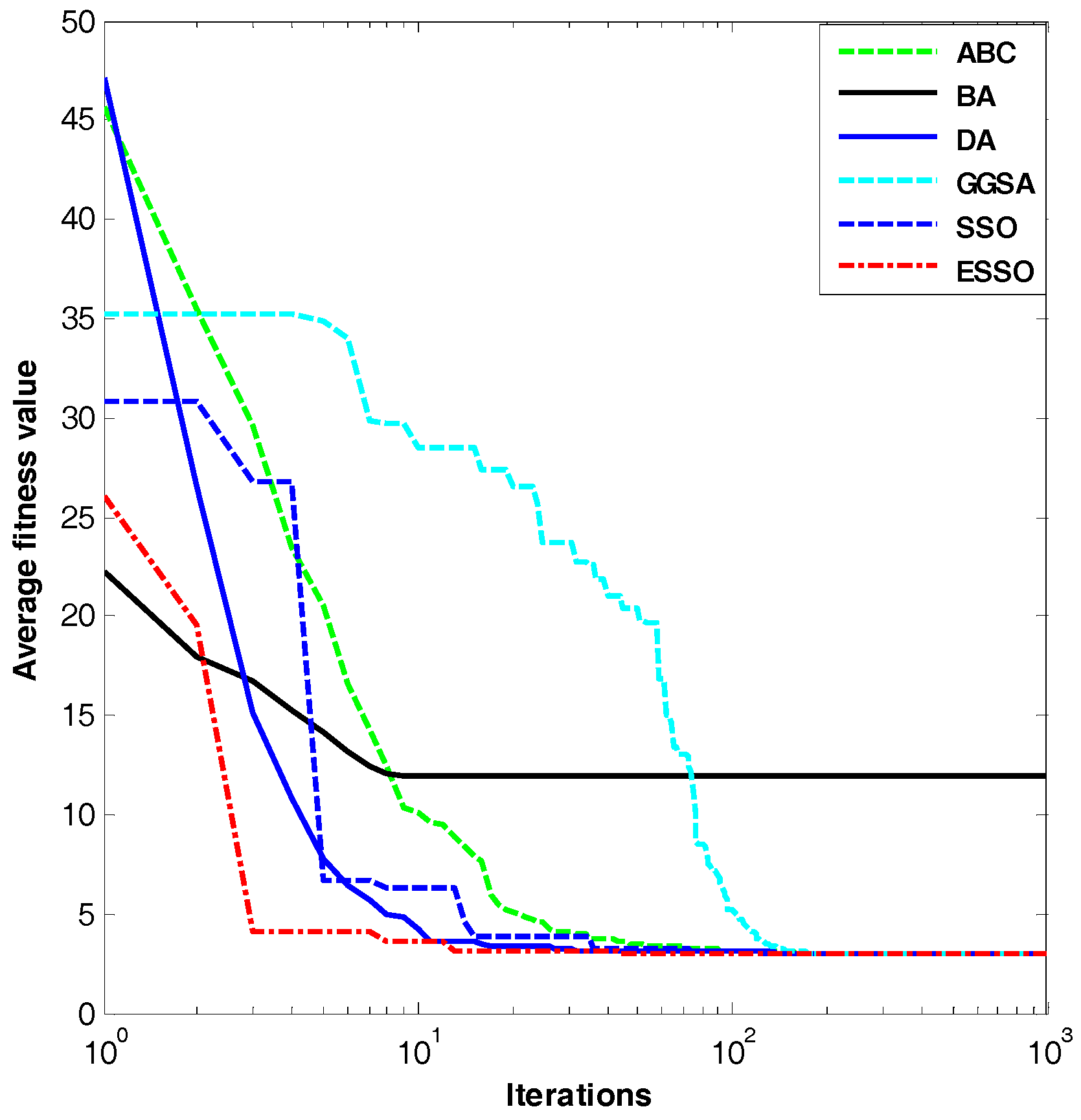

Figure 2.

Dim = 30, evolution curves of fitness value for .

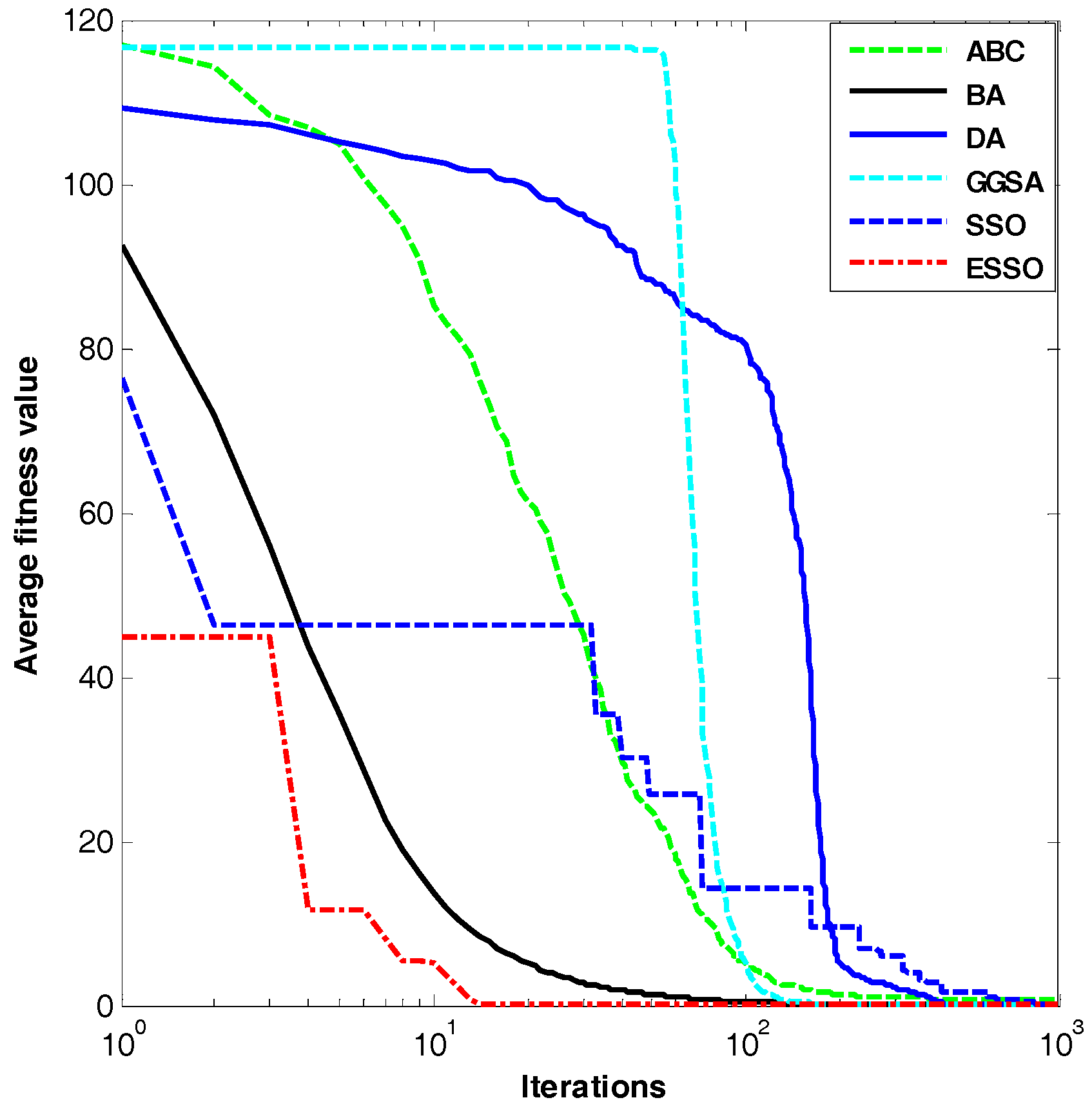

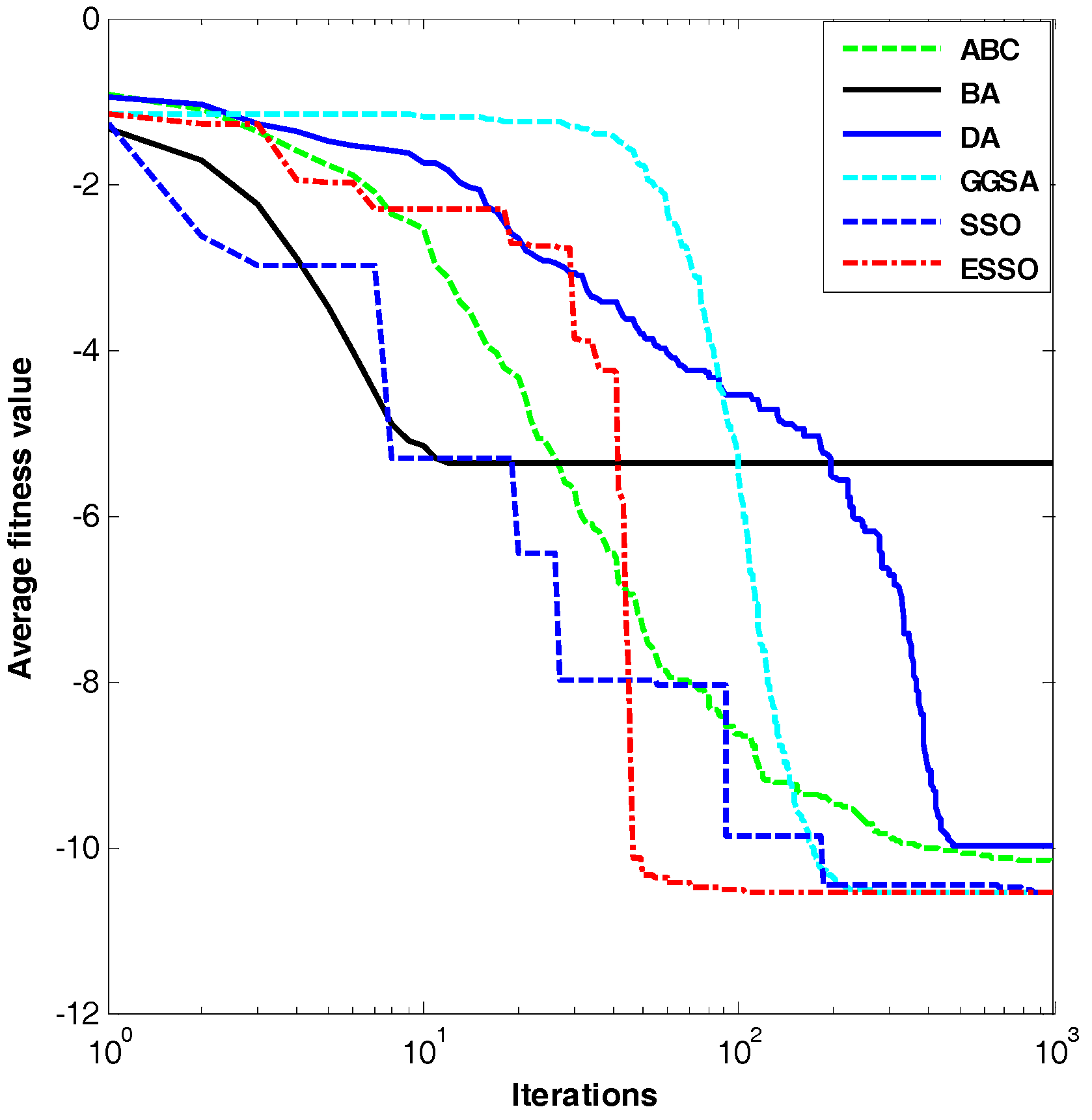

Figure 3.

Dim = 30, evolution curves of fitness value for .

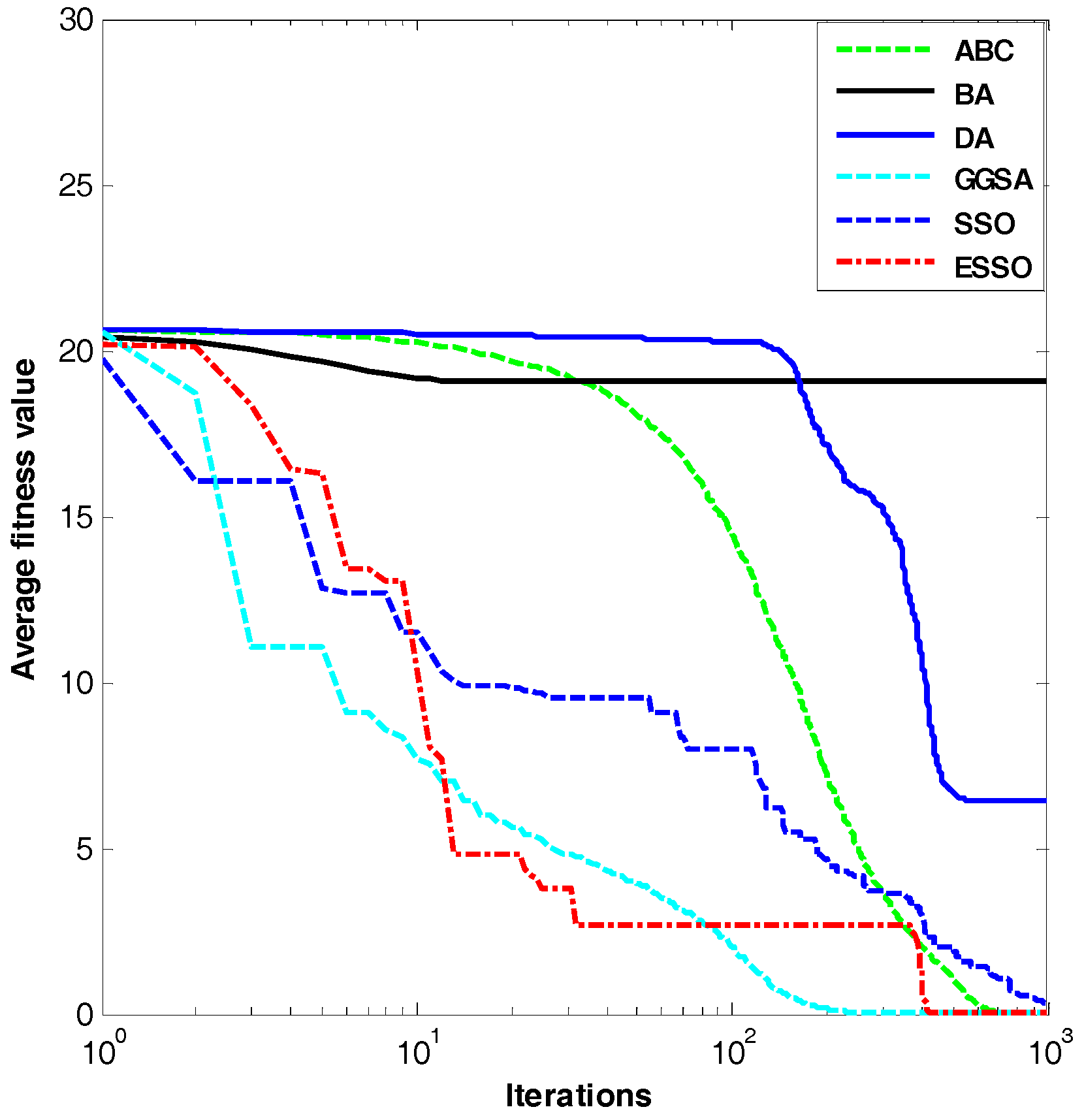

Figure 4.

Dim = 30, evolution curves of fitness value for .

Figure 5.

Dim = 30, evolution curves of fitness value for .

Figure 6.

Dim = 30, evolution curves of fitness value for .

Figure 7.

Dim = 2, evolution curves of fitness value for .

Figure 8.

Dim = 2, evolution curves of fitness value for .

Figure 9.

Dim = 3, evolution curves of fitness value for .

Figure 10.

Dim = 4, evolution curves of fitness value for .
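
The evolution curves plot fitness against iteration. As a minimal sketch of how such a curve is commonly recorded, assuming it tracks the best fitness found so far at each iteration (the captions do not state this explicitly), the hypothetical helper `random_search_curve` below uses plain random search as a stand-in optimizer on a hypothetical sum-of-squares objective `sphere`, with Dim = 30 and the range [−100, 100] chosen for illustration. Neither the optimizer nor the objective is EOSSO or any of the compared algorithms.

```python
import random


def sphere(x):
    """Hypothetical test objective: sum of squares, minimum 0 at the origin."""
    return sum(v * v for v in x)


def random_search_curve(f, dim, lb, ub, iters, seed=0):
    """Return the best-so-far fitness after each iteration.

    Plain random search stands in for the optimizer here; it is NOT EOSSO
    or any of the compared algorithms.
    """
    rng = random.Random(seed)
    best = float("inf")
    curve = []
    for _ in range(iters):
        # Sample one candidate uniformly inside the box [lb, ub]^dim.
        candidate = [rng.uniform(lb, ub) for _ in range(dim)]
        best = min(best, f(candidate))
        # Recording the running minimum makes the curve non-increasing.
        curve.append(best)
    return curve


curve = random_search_curve(sphere, dim=30, lb=-100.0, ub=100.0, iters=500)
print(f"first: {curve[0]:.3e}, last: {curve[-1]:.3e}")
```

Plotting `curve` against the iteration index gives a curve of the same kind; a logarithmic y-axis usually helps when values span many orders of magnitude. Swapping in a real optimizer only changes how `candidate` is produced.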

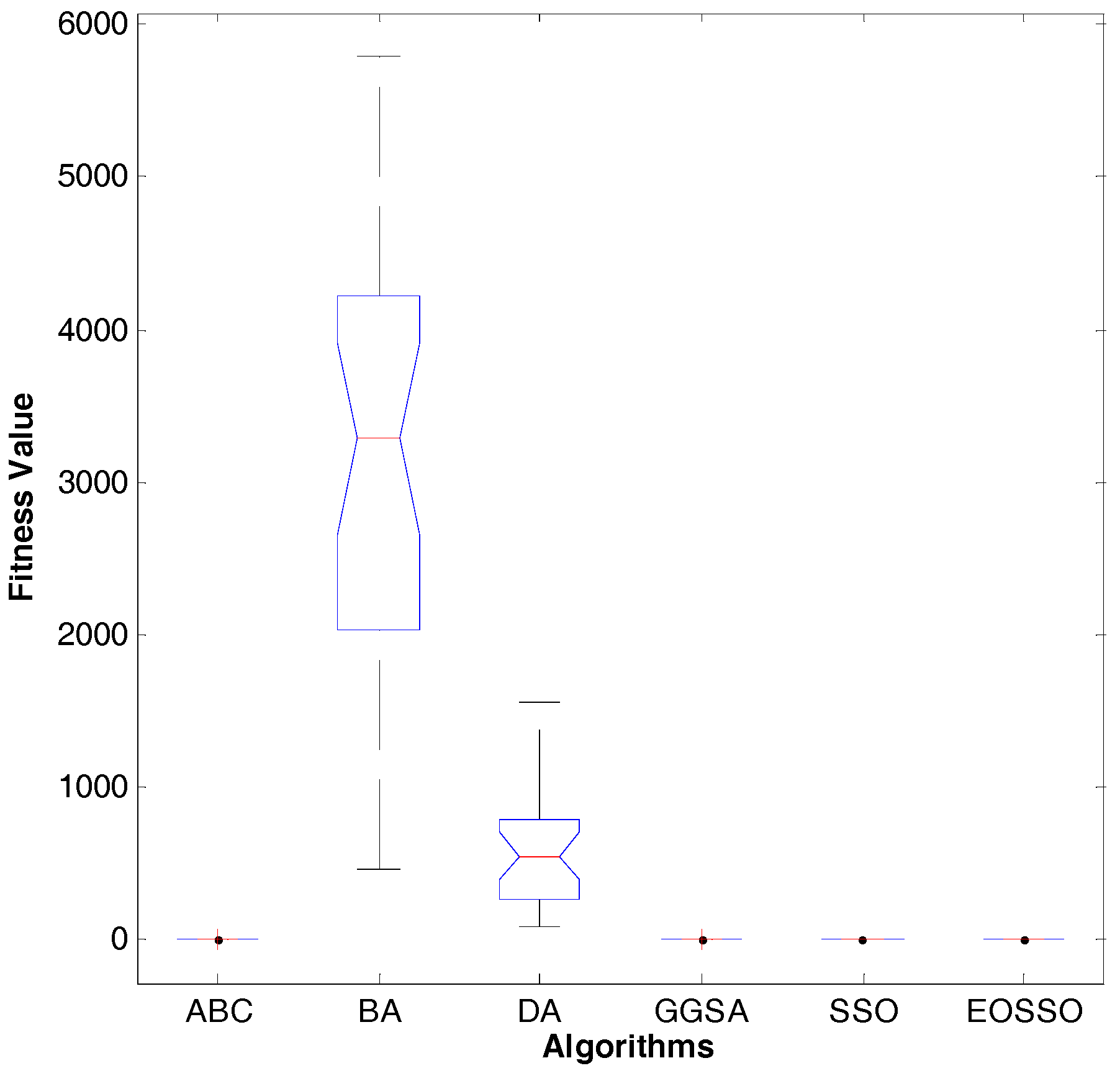

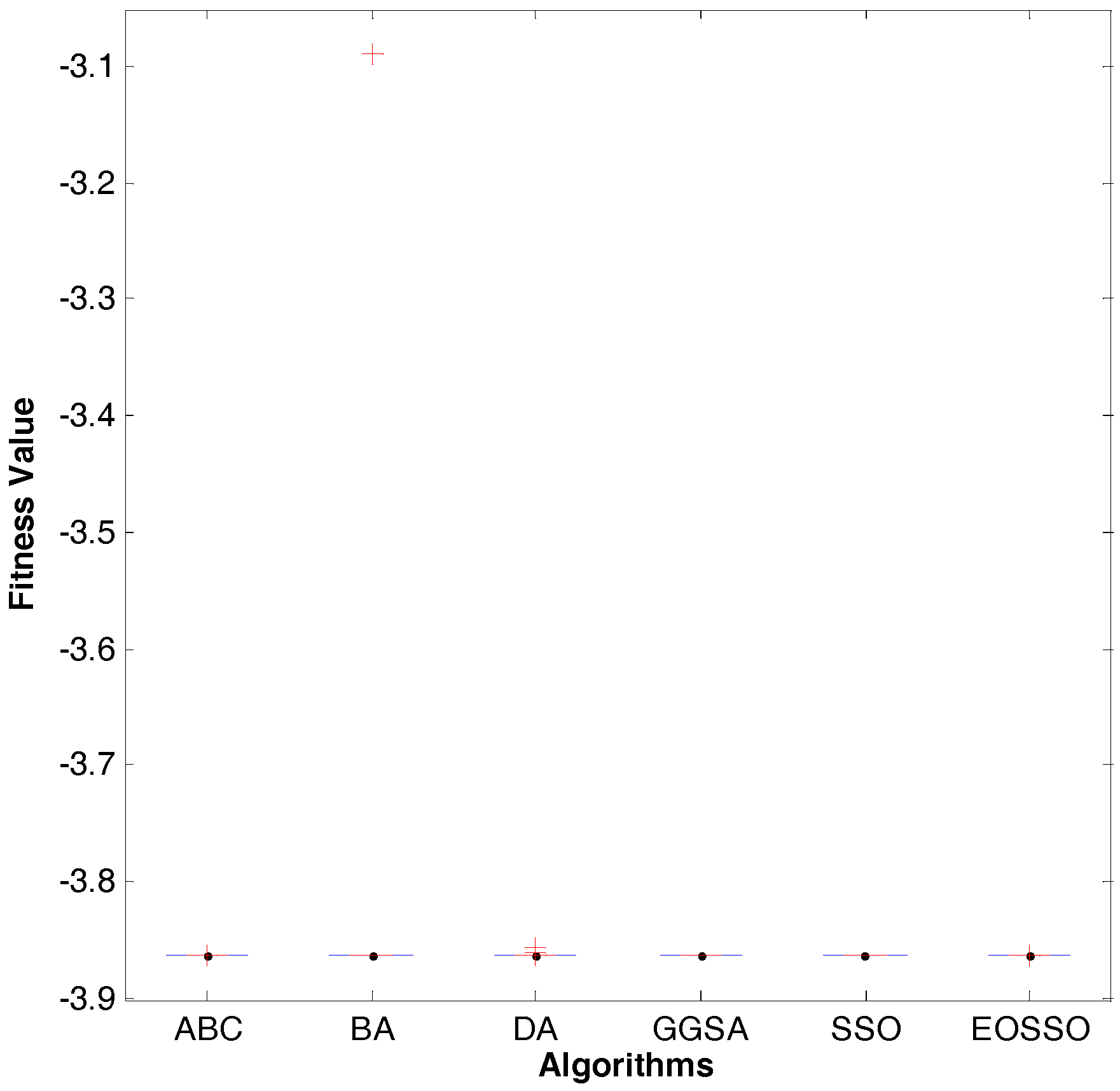

Figure 11.

Dim = 30, variance diagram of fitness value for .

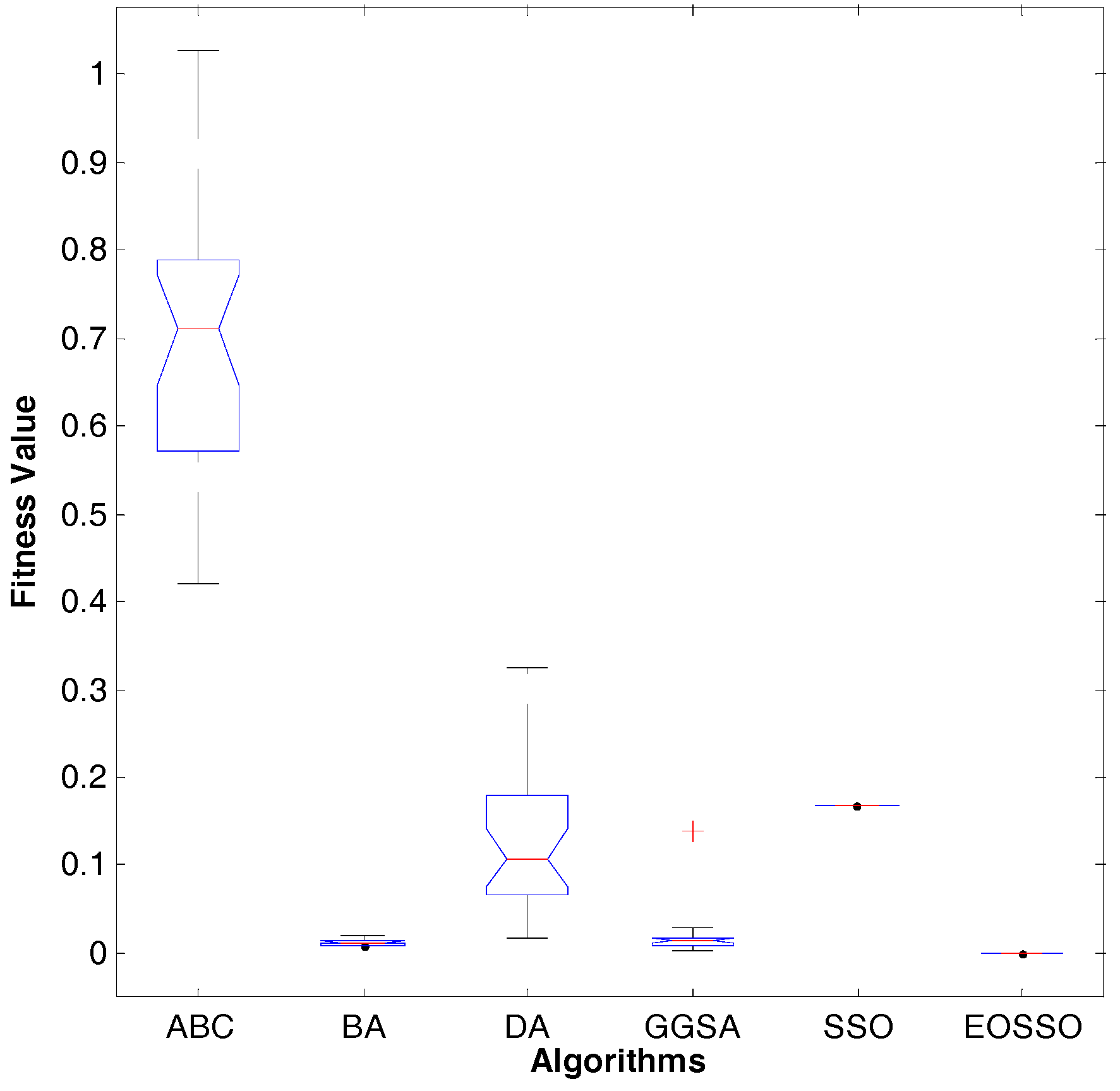

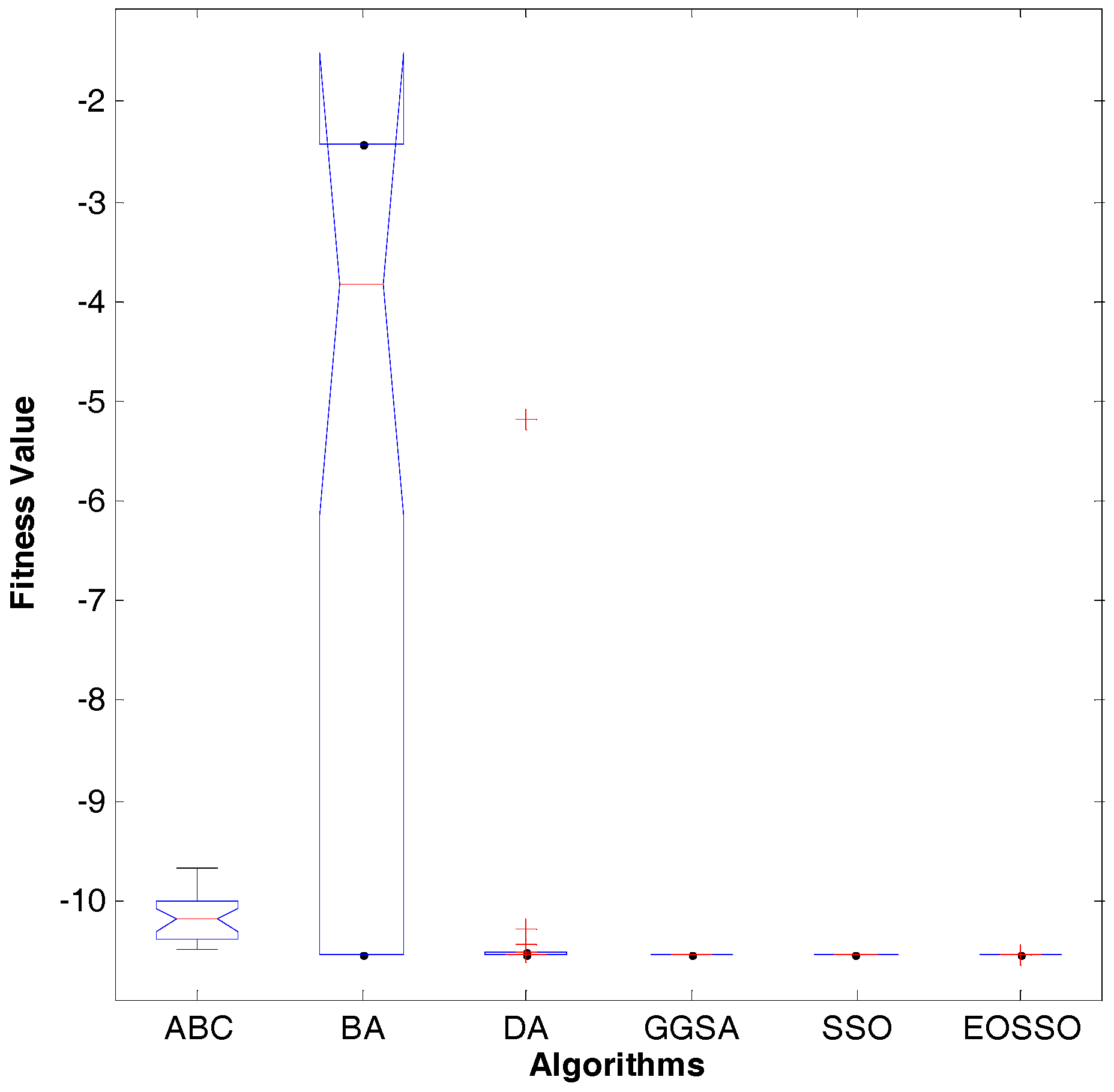

Figure 12.

Dim = 30, variance diagram of fitness value for .

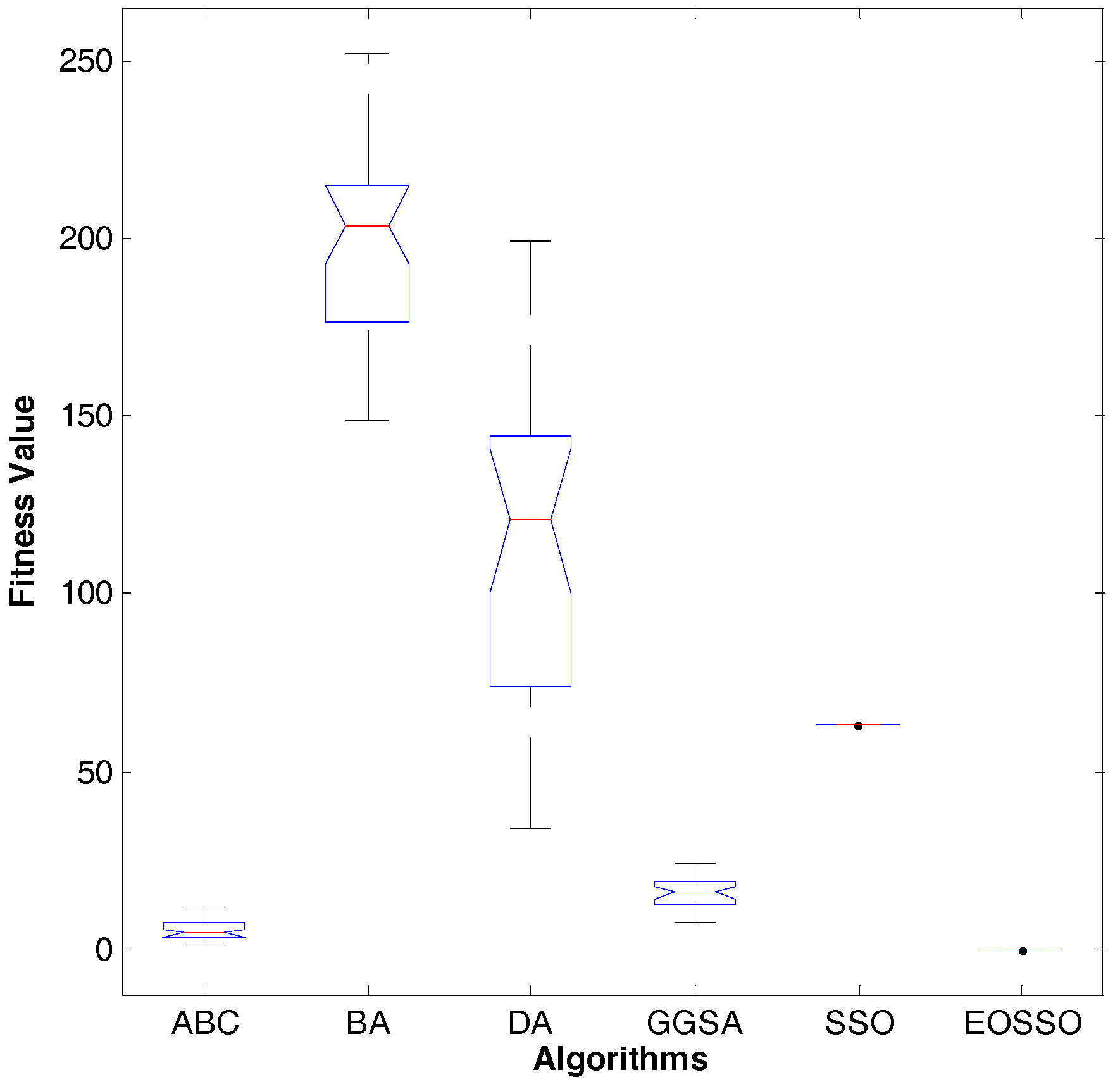

Figure 13.

Dim = 30, variance diagram of fitness value for .

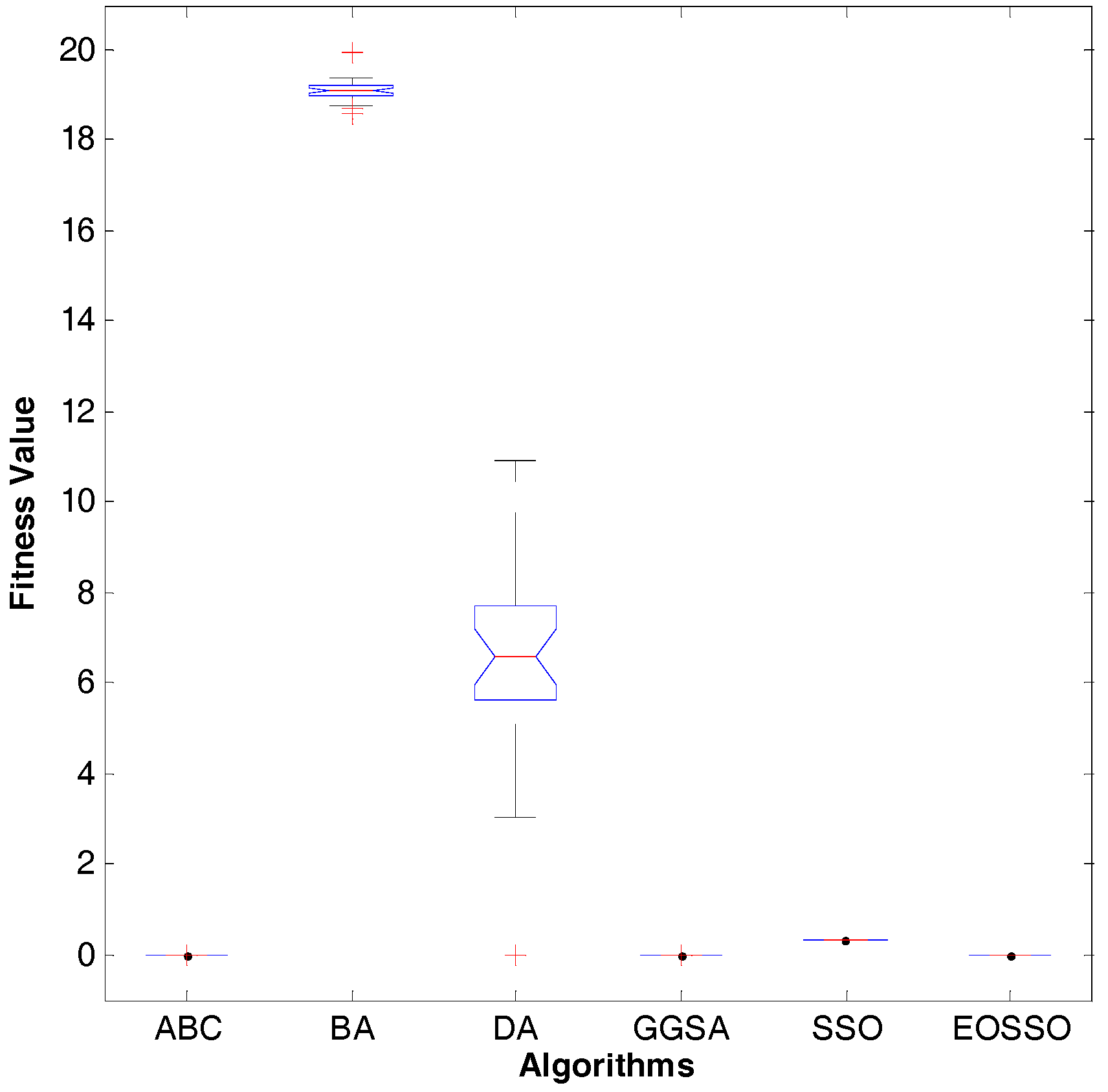

Figure 14.

Dim = 30, variance diagram of fitness value for .

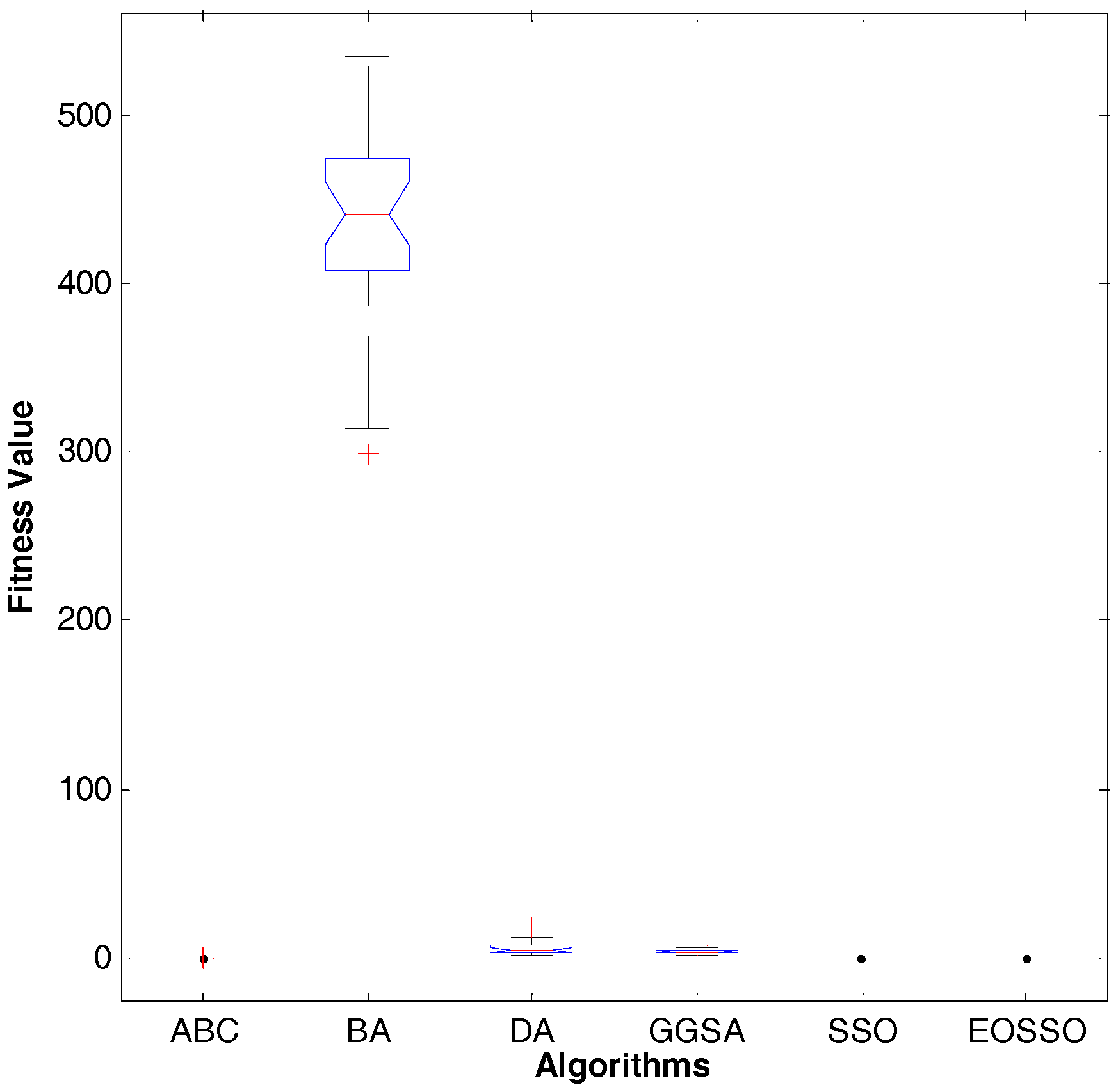

Figure 15.

Dim = 30, variance diagram of fitness value for .

Figure 16.

Dim = 30, variance diagram of fitness value for .

Figure 17.

Dim = 2, variance diagram of fitness value for .

Figure 18.

Dim = 2, variance diagram of fitness value for .

Figure 19.

Dim = 3, variance diagram of fitness value for .

Figure 20.

Dim = 4, variance diagram of fitness value for .

Table 1.

Unimodal benchmark functions.

| Function | Dim | Range | fmin |
|---|---|---|---|
| | 30 | [−100, 100] | 0 |
| | 30 | [−10, 10] | 0 |
| | 30 | [−100, 100] | 0 |
| | 30 | [−100, 100] | 0 |
| | 30 | [−30, 30] | 0 |
| | 30 | [−100, 100] | 0 |
| | 30 | [−1.28, 1.28] | 0 |

Table 2.

Multimodal benchmark functions.

| Function | Dim | Range | fmin |
|---|---|---|---|
| | 30 | [−500, 500] | −418.9829 × 5 |
| | 30 | [−5.12, 5.12] | 0 |
| | 30 | [−32, 32] | 0 |
| | 30 | [−600, 600] | 0 |
| | 30 | [−50, 50] | 0 |
| | 30 | [−50, 50] | 0 |

Table 3.

Fixed-dimension multimodal benchmark functions.

| Function | Dim | Range | fmin |
|---|---|---|---|
| | 2 | [−65, 65] | 1 |
| | 4 | [−5, 5] | 0.00030 |
| | 2 | [−5, 5] | −1.0316 |
| | 2 | [−5, 5] | 0.398 |
| | 2 | [−2, 2] | 3 |
| | 3 | [1, 3] | −3.86 |
| | 6 | [0, 1] | −3.32 |
| | 4 | [0, 10] | −10.1532 |
| | 4 | [0, 10] | −10.4028 |
| | 4 | [0, 10] | −10.5363 |

Table 4.

Simulation results on the unimodal benchmark functions.

| Function | Dim | Algorithm | Best | Worst | Mean | std. |
|---|---|---|---|---|---|---|
| | 30 | ABC | 3.69 × 10⁻⁸ | 2.32 × 10⁻⁶ | 5.34 × 10⁻⁷ | 5.40 × 10⁻⁷ |
| | | BA | 4.58 × 10² | 5.79 × 10³ | 3.13 × 10³ | 1.39 × 10³ |
| | | GGSA | 4.45 × 10⁻²⁰ | 4.17 × 10⁻¹⁹ | 1.72 × 10⁻¹⁹ | 9.49 × 10⁻²⁰ |
| | | DA | 73.2 | 1.54 × 10³ | 5.50 × 10² | 3.37 × 10² |
| | | SSO | 8.63 × 10⁻² | 8.63 × 10⁻² | 8.63 × 10⁻² | 0 |
| | | EOSSO | 3.53 × 10⁻⁶⁷ | 3.53 × 10⁻⁶⁷ | 3.53 × 10⁻⁶⁷ | 2.01 × 10⁻⁸² |
| | 30 | ABC | 9.47 × 10⁻⁶ | 6.56 × 10⁻⁵ | 2.75 × 10⁻⁵ | 1.27 × 10⁻⁵ |
| | | BA | 1.14 × 10² | 2.93 × 10⁹ | 1.03 × 10⁸ | 5.35 × 10⁸ |
| | | GGSA | 8.97 × 10⁻¹⁰ | 3.42 × 10⁻⁹ | 1.88 × 10⁻⁹ | 5.72 × 10⁻¹⁰ |
| | | DA | 5.81 × 10⁻³ | 20.5 | 8.44 | 52.2 |
| | | SSO | 1.20 | 1.20 | 1.20 | 2.26 × 10⁻¹⁶ |
| | | EOSSO | 1.92 × 10⁻³⁸ | 1.92 × 10⁻³⁸ | 1.92 × 10⁻³⁸ | 1.33 × 10⁻⁵³ |
| | 30 | ABC | 1.14 × 10⁴ | 2.45 × 10⁴ | 1.97 × 10⁵ | 3.08 × 10³ |
| | | BA | 4.33 × 10³ | 1.71 × 10⁴ | 9.12 × 10³ | 3.17 × 10³ |
| | | GGSA | 1.32 × 10² | 5.37 × 10² | 2.68 × 10² | 90.5 |
| | | DA | 3.60 × 10² | 1.49 × 10⁴ | 5.39 × 10³ | 3.87 × 10³ |
| | | SSO | 1.98 | 1.98 | 19.8 | 1.13 × 10⁻¹⁵ |
| | | EOSSO | 7.61 × 10⁻⁷⁶ | 7.61 × 10⁻⁷⁶ | 7.61 × 10⁻⁷⁶ | 6.24 × 10⁻⁹¹ |
| | 30 | ABC | 59.6 | 73.9 | 67.8 | 36.2 |
| | | BA | 42.9 | 68.9 | 56.0 | 65.4 |
| | | GGSA | 5.25 × 10⁻¹⁰ | 1.96 × 10⁻⁹ | 1.24 × 10⁻⁹ | 4.04 × 10⁻¹⁰ |
| | | DA | 7.38 | 24.1 | 14.8 | 43.9 |
| | | SSO | 1.47 × 10⁻¹ | 1.47 × 10⁻¹ | 1.47 × 10⁻¹ | 5.56 × 10⁻¹⁷ |
| | | EOSSO | 1.20 × 10⁻³⁷ | 1.20 × 10⁻³⁷ | 1.20 × 10⁻³⁷ | 2.12 × 10⁻⁵³ |
| | 30 | ABC | 767 | 89.3 | 37.9 | 20.7 |
| | | BA | 243 | 5.12 × 10² | 59.4 | 92.4 |
| | | GGSA | 255 | 1.18 × 10² | 33.0 | 22.0 |
| | | DA | 30.9 | 2.36 × 10⁵ | 3.41 × 10⁴ | 5.38 × 10⁴ |
| | | SSO | 35.4 | 35.4 | 35.4 | 0 |
| | | EOSSO | 26.8 | 26.8 | 26.8 | 1.08 × 10⁻¹⁴ |
| | 30 | ABC | 3.43 × 10⁻⁸ | 6.92 × 10⁻⁶ | 1.01 × 10⁻⁶ | 1.67 × 10⁻⁶ |
| | | BA | 6.99 × 10² | 5.71 × 10³ | 2.82 × 10³ | 1.30 × 10³ |
| | | GGSA | 0 | 0 | 0 | 0 |
| | | DA | 1.20 × 10² | 1.56 × 10³ | 4.22 × 10² | 3.16 × 10² |
| | | SSO | 1.08 × 10⁻¹ | 1.08 × 10⁻¹ | 1.08 × 10⁻¹ | 1.41 × 10⁻¹⁷ |
| | | EOSSO | 5.01 × 10⁻¹ | 5.01 × 10⁻¹ | 5.01 × 10⁻¹ | 2.26 × 10⁻¹⁶ |
| | 30 | ABC | 4.19 × 10⁻¹ | 10.2 | 7.09 × 10⁻¹ | 1.58 × 10⁻¹ |
| | | BA | 6.93 × 10⁻³ | 2.09 × 10⁻² | 1.23 × 10⁻² | 3.65 × 10⁻³ |
| | | GGSA | 3.90 × 10⁻³ | 1.39 × 10⁻¹ | 1.73 × 10⁻² | 2.37 × 10⁻² |
| | | DA | 1.75 × 10⁻² | 3.25 × 10⁻¹ | 1.27 × 10⁻¹ | 8.00 × 10⁻² |
| | | SSO | 1.68 × 10⁻² | 1.68 × 10⁻² | 1.68 × 10⁻² | 0 |
| | | EOSSO | 1.22 × 10⁻⁴ | 1.22 × 10⁻⁴ | 1.22 × 10⁻⁴ | 5.51 × 10⁻²⁰ |
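
Each row of these results tables condenses repeated independent runs into Best, Worst, Mean and std. A minimal sketch of that summary for a minimization problem, assuming the inputs are the final fitness values of the runs and that std. is the population standard deviation; the source states neither the estimator nor the number of runs, and the sample values and the helper name `summarize_runs` below are hypothetical.

```python
import statistics


def summarize_runs(final_fitness):
    """Summarize the final fitness of independent runs of a minimizer.

    Returns the Best, Worst, Mean and std. columns used in the tables.
    Using the population standard deviation is an assumption.
    """
    if not final_fitness:
        raise ValueError("need at least one run")
    return {
        "Best": min(final_fitness),  # lowest fitness is best when minimizing
        "Worst": max(final_fitness),
        "Mean": statistics.fmean(final_fitness),
        "std.": statistics.pstdev(final_fitness),
    }


# Hypothetical final fitness values from five runs (not data from the paper).
runs = [3.2e-3, 1.1e-3, 4.7e-3, 2.0e-3, 2.5e-3]
print(summarize_runs(runs))
```

Switching to `statistics.stdev` gives the sample (n − 1) estimator; with few runs the two differ noticeably, so the choice matters when std. values are compared across studies.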

Table 5.

Simulation results on the multimodal benchmark functions.

| Function | Dim | Algorithm | Best | Worst | Mean | std. |
|---|---|---|---|---|---|---|
| | 30 | ABC | −1.21 × 10⁴ | −1.10 × 10⁴ | −1.16 × 10⁴ | 2.81 × 10² |
| | | BA | −8.45 × 10³ | −5.97 × 10³ | −7.06 × 10³ | 7.40 × 10² |
| | | GGSA | −3.93 × 10³ | −2.17 × 10³ | −3.08 × 10³ | 3.64 × 10² |
| | | DA | 7.38 | 24.1 | 14.8 | 4.39 |
| | | SSO | −7.61 × 10³ | −7.61 × 10³ | −7.61 × 10³ | 2.78 × 10⁻¹² |
| | | EOSSO | −7.69 × 10³ | −7.69 × 10³ | −7.69 × 10³ | 3.70 × 10⁻¹² |
| | 30 | ABC | 1.02 | 11.6 | 5.47 | 2.9 |
| | | BA | 1.48 × 10² | 2.51 × 10² | 1.99 × 10² | 26.3 |
| | | GGSA | 7.95 | 23.8 | 16 | 4.57 |
| | | DA | 34.3 | 1.99 × 10² | 1.14 × 10² | 49.9 |
| | | SSO | 63.3 | 63.3 | 63.3 | 0 |
| | | EOSSO | 0 | 0 | 0 | 0 |
| | 30 | ABC | 9.16 × 10⁻⁵ | 1.58 × 10⁻³ | 4.79 × 10⁻⁴ | 3.79 × 10⁻⁴ |
| | | BA | 18.5 | 19.9 | 19 | 2.50 × 10⁻¹ |
| | | GGSA | 1.48 × 10⁻¹⁰ | 5.26 × 10⁻¹⁰ | 3.09 × 10⁻¹⁰ | 7.42 × 10⁻¹¹ |
| | | DA | 4.44 × 10⁻¹⁵ | 10.8 | 6.46 | 2.01 |
| | | SSO | 3.12 × 10⁻¹ | 3.12 × 10⁻¹ | 3.12 × 10⁻¹ | 5.65 × 10⁻¹⁷ |
| | | EOSSO | 4.44 × 10⁻¹⁵ | 4.44 × 10⁻¹⁵ | 4.44 × 10⁻¹⁵ | 0 |
| | 30 | ABC | 2.31 × 10⁻⁷ | 1.34 × 10⁻² | 6.06 × 10⁻⁴ | 2.47 × 10⁻³ |
| | | BA | 2.98 × 10² | 5.34 × 10² | 4.31 × 10² | 60 |
| | | GGSA | 1.63 | 7.87 | 3.59 | 1.3 |
| | | DA | 1.56 | 17.5 | 5.05 | 3.4 |
| | | SSO | 1.26 × 10⁻² | 1.26 × 10⁻² | 1.26 × 10⁻² | 0 |
| | | EOSSO | 0 | 0 | 0 | 0 |
| | 30 | ABC | 1.02 × 10⁻¹⁰ | 1.67 × 10⁻⁸ | 3.32 × 10⁻⁹ | 3.87 × 10⁻⁹ |
| | | BA | 22.1 | 49.7 | 38.3 | 7.94 |
| | | GGSA | 4.78 × 10⁻²² | 2.07 × 10⁻¹ | 3.83 × 10⁻² | 6.14 × 10⁻² |
| | | DA | 1.71 | 52.6 | 12.2 | 12.9 |
| | | SSO | 1.37 × 10⁻³ | 1.37 × 10⁻³ | 1.37 × 10⁻³ | 2.21 × 10⁻¹⁹ |
| | | EOSSO | 9.29 × 10⁻³ | 9.29 × 10⁻³ | 9.29 × 10⁻³ | 3.53 × 10⁻¹⁸ |
| | 30 | ABC | 1.27 × 10⁻⁸ | 3.55 × 10⁻⁶ | 2.93 × 10⁻⁷ | 6.53 × 10⁻⁷ |
| | | BA | 80.8 | 1.19 × 10² | 1.04 × 10² | 10.1 |
| | | GGSA | 5.41 × 10⁻²¹ | 1.09 × 10⁻² | 3.66 × 10⁻³ | 2.00 × 10⁻³ |
| | | DA | 4.95 | 3.09 × 10⁴ | 1.52 × 10³ | 5.69 × 10³ |
| | | SSO | 2.04 × 10⁻² | 2.04 × 10⁻² | 2.04 × 10⁻² | 3.53 × 10⁻¹⁸ |
| | | EOSSO | 6.17 × 10⁻¹ | 6.17 × 10⁻¹ | 6.17 × 10⁻¹ | 3.39 × 10⁻¹⁶ |

Table 6.

Simulation results on the fixed-dimension multimodal benchmark functions.

| Function | Dim | Algorithm | Best | Worst | Mean | std. |
|---|---|---|---|---|---|---|
| | 2 | ABC | 9.98 × 10⁻¹ | 9.98 × 10⁻¹ | 9.98 × 10⁻¹ | 1.20 × 10⁻⁵ |
| | | BA | 9.98 × 10⁻¹ | 22.9 | 10 | 6.97 |
| | | GGSA | 9.98 × 10⁻¹ | 10.7 | 3 | 2.36 |
| | | DA | 9.98 × 10⁻¹ | 9.98 × 10⁻¹ | 9.98 × 10⁻¹ | 8.09 × 10⁻¹¹ |
| | | SSO | 1.99 | 1.99 | 1.99 | 1.36 × 10⁻¹⁵ |
| | | EOSSO | 9.98 × 10⁻¹ | 9.98 × 10⁻¹ | 9.98 × 10⁻¹ | 4.52 × 10⁻¹⁶ |
| | 4 | ABC | 4.16 × 10⁻⁴ | 1.48 × 10⁻³ | 9.93 × 10⁻⁴ | 2.52 × 10⁻⁴ |
| | | BA | 3.07 × 10⁻⁴ | 5.19 × 10⁻³ | 1.10 × 10⁻³ | 1.17 × 10⁻³ |
| | | GGSA | 4.80 × 10⁻⁴ | 3.43 × 10⁻³ | 1.78 × 10⁻³ | 6.36 × 10⁻⁴ |
| | | DA | 4.91 × 10⁻⁴ | 3.44 × 10⁻³ | 1.28 × 10⁻³ | 6.43 × 10⁻³ |
| | | SSO | 4.18 × 10⁻⁴ | 4.18 × 10⁻⁴ | 4.18 × 10⁻⁴ | 1.65 × 10⁻¹⁹ |
| | | EOSSO | 3.70 × 10⁻⁴ | 3.70 × 10⁻⁴ | 3.70 × 10⁻⁴ | 2.21 × 10⁻¹⁹ |
| | 2 | ABC | −1.03 | −1.03 | −1.03 | 1.25 × 10⁻⁶ |
| | | BA | −1.03 | 2.1 | −8.18 × 10⁻¹ | 6.19 × 10⁻¹ |
| | | GGSA | −1.03 | −1.03 | −1.03 | 6.18 × 10⁻¹⁶ |
| | | DA | −1.03 | −1.03 | −1.03 | 6.27 × 10⁻⁸ |
| | | SSO | −1.03 | −1.03 | −1.03 | 4.52 × 10⁻¹⁶ |
| | | EOSSO | −1.03 | −1.03 | −1.03 | 6.78 × 10⁻¹⁶ |
| | 2 | ABC | 3.97 × 10⁻¹ | 3.98 × 10⁻¹ | 3.97 × 10⁻¹ | 3.64 × 10⁻⁵ |
| | | BA | 3.97 × 10⁻¹ | 3.97 × 10⁻¹ | 3.97 × 10⁻¹ | 8.40 × 10⁻⁹ |
| | | GGSA | 3.97 × 10⁻¹ | 3.97 × 10⁻¹ | 3.97 × 10⁻¹ | 0 |
| | | DA | 3.97 × 10⁻¹ | 3.97 × 10⁻¹ | 3.97 × 10⁻¹ | 7.62 × 10⁻⁷ |
| | | SSO | 3.97 × 10⁻¹ | 3.97 × 10⁻¹ | 3.97 × 10⁻¹ | 0 |
| | | EOSSO | 3.97 × 10⁻¹ | 3.97 × 10⁻¹ | 3.97 × 10⁻¹ | 0 |
| | 2 | ABC | 3 | 3.03 | 3 | 8.64 × 10⁻³ |
| | | BA | 3 | 84. | 12 | 21.6 |
| | | GGSA | 3 | 3 | 3 | 1.98 × 10⁻¹⁵ |
| | | DA | 3 | 3 | 3 | 3.28 × 10⁻⁷ |
| | | SSO | 3 | 3 | 3 | 4.52 × 10⁻¹⁶ |
| | | EOSSO | 3 | 3 | 3 | 1.81 × 10⁻¹⁵ |
| | 3 | ABC | −3.86 | −3.86 | −3.86 | 1.77 × 10⁻⁶ |
| | | BA | −3.86 | −3.08 | −3.83 | 1.41 × 10⁻¹ |
| | | GGSA | −3.86 | −3.86 | −3.86 | 2.52 × 10⁻¹⁵ |
| | | DA | −3.86 | −3.85 | −3.86 | 1.05 × 10⁻³ |
| | | SSO | −3.86 | −3.86 | −3.86 | 1.36 × 10⁻¹⁵ |
| | | EOSSO | −3.86 | −3.86 | −3.86 | 4.48 × 10⁻¹⁴ |
| | 6 | ABC | −3.32 | −3.31 | −3.32 | 1.03 × 10⁻³ |
| | | BA | −3.32 | −3.2 | −3.25 | 6.02 × 10⁻² |
| | | GGSA | −3.32 | −2.81 | −3.29 | 9.71 × 10⁻² |
| | | DA | −3.32 | −3.07 | −3.26 | 7.34 × 10⁻² |
| | | SSO | −3.2 | −3.2 | −3.2 | 1.36 × 10⁻¹⁵ |
| | | EOSSO | −3.32 | −3.2 | −3.2 | 2.17 × 10⁻² |
| | 4 | ABC | −10.1 | −9.64 | −9.89 | 1.58 × 10⁻¹ |
| | | BA | −10.1 | −2.63 | −4.96 | 2.85 |
| | | GGSA | −10.1 | −5.05 | −5.22 | 9.30 × 10⁻¹ |
| | | DA | −10.1 | −5.09 | −9.64 | 1.54 |
| | | SSO | −10.1 | −10.1 | −10.1 | 1.81 × 10⁻¹⁵ |
| | | EOSSO | −10.1 | −10.1 | −10.1 | 9.70 × 10⁻¹¹ |
| | 4 | ABC | −10.3 | −9.78 | −10.1 | 1.90 × 10⁻¹ |
| | | BA | −10.4 | 2.75 | 5.33 | 3.19 |
| | | GGSA | −10.4 | −5.08 | −7.56 | 2.69 |
| | | DA | −10.4 | −5.08 | −9.12 | 2.25 |
| | | SSO | −10.3 | −10.3 | −10.3 | 5.42 × 10⁻¹⁵ |
| | | EOSSO | −10.4 | −10.4 | −10.4 | 2.02 × 10⁻¹¹ |
| | 4 | ABC | −10.4 | −9.67 | −1.01 × 10⁻¹ | 2.06 × 10⁻¹ |
| | | BA | −10.5 | −2.42 | −5.36 | 3.52 |
| | | GGSA | −10.5 | −10.5 | −10.5 | 1.32 × 10⁻¹⁵ |
| | | DA | −10.5 | −5.17 | −9.98 | 1.63 |
| | | SSO | −10.5 | −10.5 | −10.5 | 3.61 × 10⁻¹⁵ |
| | | EOSSO | −10.5 | −10.5 | −10.5 | 2.45 × 10⁻¹¹ |
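
The fixed-dimension results can be read against the known minima of Table 3 by measuring how far each algorithm's mean lies from fmin. A minimal sketch for the function with fmin = −10.1532, using the Mean column reported for it in Table 6; the helper name `gaps_to_optimum` is hypothetical, and the values carry the tables' three-significant-figure rounding.

```python
F_MIN = -10.1532  # known minimum for this function (Table 3)

# Mean fitness per algorithm for the same function (Table 6, three significant figures).
means = {
    "ABC": -9.89,
    "BA": -4.96,
    "GGSA": -5.22,
    "DA": -9.64,
    "SSO": -10.1,
    "EOSSO": -10.1,
}


def gaps_to_optimum(values, f_min):
    """Return |value - f_min| per algorithm, sorted from smallest to largest gap."""
    gaps = {name: abs(v - f_min) for name, v in values.items()}
    return dict(sorted(gaps.items(), key=lambda item: item[1]))


for name, gap in gaps_to_optimum(means, F_MIN).items():
    print(f"{name:>5}: {gap:.4f}")
```

Because the table reports means to three significant figures, a gap of about 0.05 (as for SSO and EOSSO here) is at the level of the table's own precision, whereas BA and GGSA sit roughly 5 away from the optimum.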