Abstract

Compressive principal component pursuit (CPCP) recovers a target matrix that is a superposition of low-complexity structures from a small set of linear measurements. Previous works mainly focus on the existence and uniqueness of the solution. In this paper, we address its stability. We prove that the solution to the related convex program of CPCP gives an estimate that is stable to small entry-wise noise. We also provide numerical simulation results, which show that the solution to the related convex program is stable to small entry-wise noise under broad conditions.

1. Introduction

Recently, there has been rapidly increasing interest in recovering a target matrix that is a superposition of low-rank and sparse components from a small set of linear measurements. This problem is closely related to matrix completion [1,2,3], which arises in a number of fields, such as medical imaging [4,5], seismology [6], computer vision [7,8], and Kalman filtering [9]. Mathematically, there exists a large-scale data matrix $M = L_0 + S_0$, where $L_0$ is a low-rank matrix and $S_0$ is a sparse matrix. One of the important problems here is how to extract the intrinsic low-dimensional structure from a small set of linear measurements. In a recent paper [10], E. J. Candès et al. proved that most low-rank matrices and sparse components can be recovered, provided that the rank of the low-rank component is not too large and that the sparse component is reasonably sparse. More importantly, they proved that these two components can be recovered by solving a simple convex optimization problem. In [11], Wright et al. generalized this problem to decomposing a matrix into multiple incoherent components:
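
$$\min_{X_1,\dots,X_k}\ \sum_{i=1}^{k}\lambda_i\|X_i\|_{(i)} \quad \text{subject to} \quad \sum_{i=1}^{k} X_i = M, \tag{1}$$
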
where $\|\cdot\|_{(i)}$ are norms that encourage various types of low-complexity structure. The authors also provide a sufficient condition that guarantees the existence and uniqueness of the solution of compressive principal component pursuit (CPCP). The result in [11] requires the components to have low-complexity structure.

However, in many applications, the observed measurements are corrupted by various kinds of noise, which may affect every entry of the data matrix. To complete the theory developed in [11], it is necessary to study the stability of CPCP, that is, to guarantee stable and accurate recovery in the presence of entry-wise noise. In this paper, we take a step in this direction. Let M denote the observed matrix, which can be decomposed into multiple incoherent components plus noise, and assume that

$$M = \sum_{i=1}^{k} X_{i,0} + Z_0,$$

where $X_{1,0}, \dots, X_{k,0}$ are the corresponding incoherent components and $Z_0$ is a noise term with independent and identically distributed (i.i.d.) entries. We assume only that $\|Z_0\|_F \le \delta$ for some $\delta > 0$. To recover the unknown low-complexity structures, we propose solving the following relaxed optimization problem:
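
$$\min_{X_1,\dots,X_k}\ \sum_{i=1}^{k}\lambda_i\|X_i\|_{(i)} \quad \text{subject to} \quad \Big\|M - \sum_{i=1}^{k} X_i\Big\|_F \le \delta. \tag{2}$$
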
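As a worked instance of the relaxed problem, take k = 2 with the nuclear norm as the first norm (weight 1) and the entry-wise ℓ1 norm as the second (weight λ). Under the assumption that (2) has the Frobenius-ball form min Σ λ_i‖X_i‖_(i) subject to ‖M − Σ X_i‖_F ≤ δ, it specializes to the stable principal component pursuit program of [13], which is the case revisited in Remark 1:

```latex
\min_{L,\,S}\ \|L\|_* + \lambda\|S\|_1
\quad\text{subject to}\quad \|M - L - S\|_F \le \delta,
\qquad\text{where } M = L_0 + S_0 + Z_0,\ \ \|Z_0\|_F \le \delta .
```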
In this paper, we prove that the solution of (2) is stable to small entry-wise noise. The rest of the paper is organized as follows. In Section 2, we introduce notation and state the main result, which is proven in Sections 3 and 4. In Section 3, we give two lemmas that are key ingredients of the proof of the main result. In Section 4, we prove Theorem 1. We provide numerical results in Section 5 and conclude the paper in Section 6.

2. Notations and Main Results

In this section, we first introduce the notation used throughout the paper and then state the main result.

2.1. Notations

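We denote the operator norm of a matrix $X$ by $\|X\|$, the Frobenius norm by $\|X\|_F$, and the nuclear norm by $\|X\|_*$, and we denote the dual norm of a norm $\|\cdot\|_\diamond$ by $\|\cdot\|_\diamond^*$. The Euclidean inner product between two matrices is defined by $\langle X, Y\rangle := \operatorname{trace}(X^*Y)$. Note that $\|X\|_F^2 = \langle X, X\rangle$. The Cauchy–Schwarz inequality gives $\langle X, Y\rangle \le \|X\|_F\|Y\|_F$, and it is well known that we also have $\langle X, Y\rangle \le \|X\|_*\|Y\|$ (e.g., [1,12]). A norm $\|\cdot\|_\diamond$ majorizes the Frobenius norm if $\|X\|_\diamond \ge \|X\|_F$ for all $X$. Linear transformations that act on the space of matrices are denoted by calligraphic letters, e.g., $\mathcal{P}_T$ for the orthogonal projection onto a subspace $T$; such an operator can be represented as a high-dimensional matrix acting on vectorized matrices. The operator norm of such an operator $\mathcal{A}$ is $\|\mathcal{A}\| := \sup_{\|X\|_F = 1}\|\mathcal{A}X\|_F$. It should be noted that .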
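The notation above can be checked numerically. The following NumPy sketch is an illustration only (it is not part of the original analysis, and the helper names are hypothetical): it computes the operator, Frobenius, and nuclear norms and the inner product for real matrices, then checks the identities and inequalities stated above, together with the analogous ℓ1/ℓ∞ bound and the fact that both the nuclear norm and the entry-wise ℓ1 norm majorize the Frobenius norm.

```python
import numpy as np

def op_norm(X):
    """Operator (spectral) norm ||X||: the largest singular value."""
    return np.linalg.norm(X, 2)

def fro_norm(X):
    """Frobenius norm ||X||_F."""
    return np.linalg.norm(X, "fro")

def nuc_norm(X):
    """Nuclear norm ||X||_*: the sum of the singular values."""
    return np.linalg.norm(X, "nuc")

def l1_norm(X):
    """Entry-wise l1 norm ||X||_1."""
    return np.abs(X).sum()

def inner(X, Y):
    """Euclidean inner product <X, Y> = trace(X^T Y) for real matrices."""
    return np.trace(X.T @ Y)

def check_notation(m=5, n=4, seed=0, tol=1e-10):
    """Return a dict of boolean checks on random real m x n matrices."""
    rng = np.random.default_rng(seed)
    X = rng.standard_normal((m, n))
    Y = rng.standard_normal((m, n))
    return {
        # ||X||_F^2 = <X, X>
        "fro_identity": abs(fro_norm(X) ** 2 - inner(X, X)) <= tol,
        # Cauchy-Schwarz: <X, Y> <= ||X||_F ||Y||_F
        "cauchy_schwarz": inner(X, Y) <= fro_norm(X) * fro_norm(Y) + tol,
        # nuclear/operator duality: <X, Y> <= ||X||_* ||Y||
        "nuc_op": inner(X, Y) <= nuc_norm(X) * op_norm(Y) + tol,
        # l1/l-infinity duality: <X, Y> <= ||X||_1 max|Y_ij|
        "l1_linf": inner(X, Y) <= l1_norm(X) * np.abs(Y).max() + tol,
        # both norms majorize the Frobenius norm
        "nuc_majorizes_fro": nuc_norm(X) >= fro_norm(X) - tol,
        "l1_majorizes_fro": l1_norm(X) >= fro_norm(X) - tol,
    }
```

For example, `check_notation(seed=3)` should return a dictionary whose values are all `True`.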

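For any matrix vector $x = (X_1, \dots, X_k)$, where $X_i$ is the $i$-th matrix, we consider the two norms $|||x|||_F := \big(\sum_{i=1}^{k}\|X_i\|_F^2\big)^{1/2}$ and $|||x|||_\diamond := \sum_{i=1}^{k}\lambda_i\|X_i\|_{(i)}$. To simplify the stability analysis of CPCP, we also define the subspaces $\Gamma := \{(Q, \dots, Q)\}$ (the common component) and $\Gamma^\perp := \{(Q_1, \dots, Q_k) : \sum_{i=1}^{k} Q_i = 0\}$ (the different component). To analyze the behavior of these components, we define the orthogonal projection operator $\mathcal{P}_\Gamma$ onto $\Gamma$.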
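To make the product-space notation concrete, the sketch below assumes Γ = {(Q, …, Q)}, Γ^⊥ = {(Q_1, …, Q_k) : Σ Q_i = 0}, and |||x|||_F = (Σ‖X_i‖_F²)^{1/2}, a k-component analogue of the pair-based definitions in [13]; the function names are hypothetical. Under these assumptions, the orthogonal projection onto Γ replaces every component by the average of the components, because the average Q minimizes Σ‖X_i − Q‖_F². The sketch lets one check numerically that the Γ^⊥ part has components summing to zero and that |||x|||_F² = |||P_Γ x|||_F² + |||P_{Γ^⊥} x|||_F².

```python
import numpy as np

def product_inner(x, y):
    """Inner product on the product space: sum_i <X_i, Y_i>."""
    return sum(np.sum(X * Y) for X, Y in zip(x, y))

def triple_fro(x):
    """|||x|||_F = sqrt(sum_i ||X_i||_F^2) for a tuple x = (X_1, ..., X_k)."""
    return np.sqrt(sum(np.linalg.norm(X, "fro") ** 2 for X in x))

def triple_diamond(x, norms, lams):
    """|||x|||_diamond = sum_i lam_i * ||X_i||_(i); norms are callables."""
    return sum(lam * nrm(X) for X, nrm, lam in zip(x, norms, lams))

def proj_common(x):
    """Orthogonal projection onto Gamma = {(Q, ..., Q)} (assumed definition):
    every component is replaced by the average of all components."""
    avg = sum(x) / len(x)
    return tuple(avg.copy() for _ in x)

def proj_different(x):
    """Projection onto Gamma^perp = {(Q_1, ..., Q_k): sum_i Q_i = 0}."""
    return tuple(X - P for X, P in zip(x, proj_common(x)))
```

Because the two projections are complementary and orthogonal, bounding |||P_Γ h|||_F and |||P_{Γ^⊥} h|||_F separately is enough to bound |||h|||_F.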

We assume that the norms $\|\cdot\|_{(i)}$ are decomposable, in the sense defined below.

Definition 1 (Decomposable Norms).

If there exist a subspace $T$ and a matrix $Z$ satisfying
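
$$\partial\|X\|_\diamond = \big\{\Lambda : \mathcal{P}_T\Lambda = Z,\ \|\mathcal{P}_{T^\perp}\Lambda\|_\diamond^* \le 1\big\},$$
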
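where $\|\cdot\|_\diamond^*$ denotes the dual norm of $\|\cdot\|_\diamond$ and $\mathcal{P}_{T^\perp}$ is nonexpansive with respect to $\|\cdot\|_\diamond^*$, then we say that the norm $\|\cdot\|_\diamond$ is decomposable at $X$.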
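The two norms used in PCP are the standard examples of decomposability. For the entry-wise ℓ1 norm, T is the set of matrices supported on supp(X) and Z = sign(X); setting the on-support entries to zero never increases the ℓ∞ (dual) norm, so P_{T^⊥} is nonexpansive. For the nuclear norm with compact SVD X = UΣV^T, T = {UA^T + BV^T} and Z = UV^T. The sketch below (hypothetical helper names) builds elements of the set in Definition 1 for each norm. It checks that they are subgradients using the standard characterization for norms: Λ ∈ ∂‖X‖ if and only if ⟨Λ, X⟩ = ‖X‖ and the dual norm of Λ is at most 1.

```python
import numpy as np

def l1_subgradient(X, rng):
    """Lambda with P_T Lambda = sign(X) on supp(X) and arbitrary entries
    in [-1, 1] off the support, so that ||P_{T^perp} Lambda||_inf <= 1."""
    Lam = np.sign(X)
    off = (X == 0)
    Lam[off] = rng.uniform(-1.0, 1.0, size=off.sum())
    return Lam

def nuclear_subgradient(X, r, rng, scale=0.5):
    """Lambda = U_r V_r^T + W for a rank-r matrix X, where W lies in T^perp
    (column space orthogonal to U_r, row space orthogonal to V_r) and has
    operator norm scale <= 1."""
    U, _, Vt = np.linalg.svd(X)            # full SVD: U is m x m, Vt is n x n
    U_r, V_r = U[:, :r], Vt[:r, :].T
    U_perp, V_perp = U[:, r:], Vt[r:, :].T
    G = rng.standard_normal((U_perp.shape[1], V_perp.shape[1]))
    W = U_perp @ G @ V_perp.T
    W *= scale / np.linalg.norm(W, 2)      # rescale so that ||W|| = scale
    return U_r @ V_r.T + W
```

In both cases ⟨Λ, X⟩ equals the norm of X and the dual norm of Λ (ℓ∞ or operator norm) stays at most 1, as Definition 1 requires.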

Definition 2 (Inexact Certificate).

We say Λ is an -inexact certificate for a putative solution to (1) with parameters if for each i, , and .

2.2. Main Results

For Problem (1), the following result is known.

Lemma 1

([11]). Assume there exists a feasible solution $x = (X_1, \dots, X_k)$ to the optimization Problem (1). Suppose that each of the norms $\|\cdot\|_{(i)}$ is decomposable at $X_i$, and that each of the norms $\|\cdot\|_{(i)}$ majorizes the Frobenius norm. Then, $x$ is the unique optimal solution if $T_1, \dots, T_k$ are independent subspaces with

and there exists an -inexact certificate , with

The main contribution of this paper is the stability analysis of the solution of CPCP; the main theorem of [13] can be regarded as a special case of our result (although the main idea of the proof is similar to that of [13], there are some important differences). We now show that the solution of the convex program (2) is stable to small entry-wise noise under broad conditions. The main result of this paper is as follows.

Theorem 1.

Assume $x_0$ and $\hat x$ are the solutions of the optimization Problems (1) and (2), respectively. Suppose that each of the norms $\|\cdot\|_{(i)}$ is decomposable at $X_{i,0}$, and that each of the norms $\|\cdot\|_{(i)}$ majorizes the Frobenius norm. Then, if $T_1, \dots, T_k$ are independent subspaces with

and there exists an -inexact certificate , with

then for any $Z_0$ with $\|Z_0\|_F \le \delta$, the solution $\hat x$ to the convex program (2) obeys

where is a numerical constant only depending upon .

3. Main Lemmas

In this section, we present the two main lemmas used to prove Theorem 1. We first recall the following result from [11].

Lemma 2

([11]). Suppose are independent subspaces of and , and that the other conditions of Lemma 1 hold. Then the following equations

have a solution obeying

To bound the norm of , we use the first main lemma needed for Theorem 1.

Lemma 3.

Assume . Suppose there exists an -inexact certificate satisfying Lemma 1. Then, for any perturbation obeying

where we let . Under the hypothesis of Lemma 1, the coefficients of satisfy .

Proof.

By convexity, for any subgradients , we obtain

Since each norm is decomposable at , there exist Λ, , α, and β obeying , and . Let (see Lemma 2). Note that

where the second equality follows from . Using this equation, we now bound .

By the definition of the dual norm, there exists with such that . Moreover, by the Cauchy–Schwarz inequality, we have

Let . Then we obtain

Combining the inequalities above, we obtain

This establishes Lemma 3. ☐

To bound , we need to bound the projection operator . This is the content of the second main lemma used to prove Theorem 1.

Lemma 4.

Assume that . For any matrix vector , we have

Under the hypothesis , the constant is strictly greater than zero.

Proof.

For any matrix , we have , where . Clearly, . Then we have

Note that

Together with , we have

where the second inequality uses the fact that, for any , . Therefore, Lemma 4 is established. ☐

4. Proof of Theorem 1

In this section, we prove Theorem 1. The proof is based on two elementary but important properties of $\hat x = (\hat X_1, \dots, \hat X_k)$, the solution of Problem (2). First, $x_0 = (X_{1,0}, \dots, X_{k,0})$ is also a feasible solution to Problem (2), since $\|M - \sum_i X_{i,0}\|_F = \|Z_0\|_F \le \delta$, while $\hat x$ is optimal; therefore, $\sum_i \lambda_i\|\hat X_i\|_{(i)} \le \sum_i \lambda_i\|X_{i,0}\|_{(i)}$. Second, by the triangle inequality, we obtain
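
$$\Big\|\sum_{i=1}^{k}\hat X_i - \sum_{i=1}^{k} X_{i,0}\Big\|_F \le \Big\|\sum_{i=1}^{k}\hat X_i - M\Big\|_F + \Big\|M - \sum_{i=1}^{k} X_{i,0}\Big\|_F \le 2\delta. \tag{4}$$
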
Let , where . According to the definition of the subspace Γ, we write , for short. Our main aim is to bound , which can be rewritten as

Combining this with (4), we have

Therefore, it remains to bound the second and third terms on the right-hand side of (5).

The norm equivalence theorem states that any two norms on a finite-dimensional vector space are equivalent; hence, there exist two constants satisfying
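For the norms that appear in PCP, the equivalence constants are explicit. For an m × n matrix X, ‖X‖_F ≤ ‖X‖_1 ≤ √(mn)‖X‖_F and ‖X‖_F ≤ ‖X‖_* ≤ √(rank X)‖X‖_F. For the pair norm ‖L‖_* + λ‖S‖_1 with k = 2, one admissible (not necessarily optimal) choice is c₁ = min(1, λ) and c₂ = √2·max(√min(m, n), λ√(mn)). The second follows from the single-matrix bounds and from a + b ≤ √2·(a² + b²)^{1/2}. These constants are illustrative and need not coincide with the ones used in the proof. The sketch below uses hypothetical helper names.

```python
import numpy as np

def equivalence_ratios(X):
    """Return (||X||_1 / ||X||_F, ||X||_* / ||X||_F) for a nonzero matrix X.
    The first lies in [1, sqrt(m n)], the second in [1, sqrt(rank X)]."""
    fro = np.linalg.norm(X, "fro")
    return np.abs(X).sum() / fro, np.linalg.norm(X, "nuc") / fro

def pcp_norm_bounds(m, n, lam):
    """One admissible pair (c1, c2) with
    c1 * |||x|||_F <= ||L||_* + lam * ||S||_1 <= c2 * |||x|||_F
    for x = (L, S), where L and S are m x n."""
    c1 = min(1.0, lam)
    c2 = np.sqrt(2.0) * max(np.sqrt(min(m, n)), lam * np.sqrt(m * n))
    return c1, c2
```

The bounds are tight: a matrix with a single nonzero entry attains ratio 1 in both cases, while the all-ones m × n matrix attains ‖X‖_1/‖X‖_F = √(mn) but has nuclear-to-Frobenius ratio 1 because it has rank one.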

A. Estimate of the third term of (5)

Let Λ be a dual certificate obeying Lemma 1. Then, using the triangle inequality, we have

Combining this with Lemma 3, we obtain

where the third inequality uses the fact . For simplicity, let

Therefore, we have

Combining this with (7), we obtain

Then

where . We now estimate the third term of (5). Using the triangle inequality, we have

where . The second inequality follows from (6), the fourth from (8), and the last from the fact . Therefore, we obtain

which implies that the third term of (5) can be bounded by .

B. Estimate of the second term of (5)

According to Lemma 4, we obtain

where . Note that

Therefore,

Combining the previous two inequalities, we have

where is an appropriate constant. Combining this with (9), we obtain

Therefore, Theorem 1 is established.

Remark 1.

If $k = 2$, then Theorem 1 reduces to the main result of [13].

5. Numerical Results

In this section, we present numerical experiments for various values of the noise level σ, the parameter , and the rank r. For each parameter setting, we report the average errors over 10 trials. Our implementation was written in MATLAB, and all computational results were obtained on a desktop computer with a 2.27-GHz CPU (Intel(R) Core(TM) i3) and 2 GB of memory. Without loss of generality, we assume that . In [13], the authors verified this result numerically with the Accelerated Proximal Gradient (APG) method. In our numerical experiments, we show that the result also holds for Principal Component Pursuit solved by the Alternating Direction Method (PCP-ADM).

In our simulations, the matrix is generated as $M = L_0 + S_0 + Z_0$, where the rank-r matrix $L_0$ is the product $L_0 = XY^T$ of two matrices X and Y whose entries are independently sampled from a distribution. Following [10], we generate $S_0$ by choosing a support set of size uniformly at random, and set . The noise component $Z_0$ is generated with entries independently sampled from a distribution. We set and , and all other parameters required by PCP-ADM are the same as in [10].

We briefly describe PCP-ADM. In [10], to recover $L_0$ and $S_0$, the ADM operates on the augmented Lagrangian
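
$$\ell(L, S, Y) = \|L\|_* + \lambda\|S\|_1 + \langle Y, M - L - S\rangle + \frac{\mu}{2}\|M - L - S\|_F^2.$$
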
The details of the PCP-ADM can be found in [14,15].
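The ADM iteration on this augmented Lagrangian alternates three steps: a singular value thresholding step in L, a soft-thresholding step in S, and a dual ascent step in Y. The following NumPy sketch is a minimal illustration, not the MATLAB code used for our experiments. The defaults λ = 1/√max(n₁, n₂) and μ = n₁n₂/(4‖M‖₁) follow, to our understanding, the choices described in [10]. The stopping rule (relative residual below 10⁻⁷ or an iteration cap) is an assumption. The function names are hypothetical.

```python
import numpy as np

def soft_threshold(X, tau):
    """Entry-wise shrinkage: the proximal operator of tau * ||.||_1."""
    return np.sign(X) * np.maximum(np.abs(X) - tau, 0.0)

def svd_threshold(X, tau):
    """Singular value thresholding: the proximal operator of tau * ||.||_*."""
    U, s, Vt = np.linalg.svd(X, full_matrices=False)
    return (U * np.maximum(s - tau, 0.0)) @ Vt

def pcp_adm(M, lam=None, mu=None, tol=1e-7, max_iter=1000):
    """Minimal ADM sketch for  min ||L||_* + lam ||S||_1  s.t.  L + S = M.

    Each sweep minimizes the augmented Lagrangian
        ||L||_* + lam ||S||_1 + <Y, M - L - S> + (mu/2) ||M - L - S||_F^2
    over L, then over S, and then takes a dual ascent step in Y.
    Returns (L, S, number_of_iterations).
    """
    n1, n2 = M.shape
    if lam is None:
        lam = 1.0 / np.sqrt(max(n1, n2))
    if mu is None:
        mu = n1 * n2 / (4.0 * np.abs(M).sum())
    L = np.zeros_like(M)
    S = np.zeros_like(M)
    Y = np.zeros_like(M)
    norm_M = np.linalg.norm(M, "fro")
    for k in range(1, max_iter + 1):
        L = svd_threshold(M - S + Y / mu, 1.0 / mu)    # L-update
        S = soft_threshold(M - L + Y / mu, lam / mu)   # S-update
        R = M - L - S                                  # constraint residual
        Y = Y + mu * R                                 # dual ascent step
        if np.linalg.norm(R, "fro") <= tol * norm_M:   # assumed stopping rule
            break
    return L, S, k
```

Usage: `L_hat, S_hat, iters = pcp_adm(M)`. Both subproblems have closed-form solutions (the proximal maps of the nuclear and ℓ1 norms), so each sweep costs one SVD plus entry-wise operations.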

In our simulations, the PCP-ADM algorithm stops when

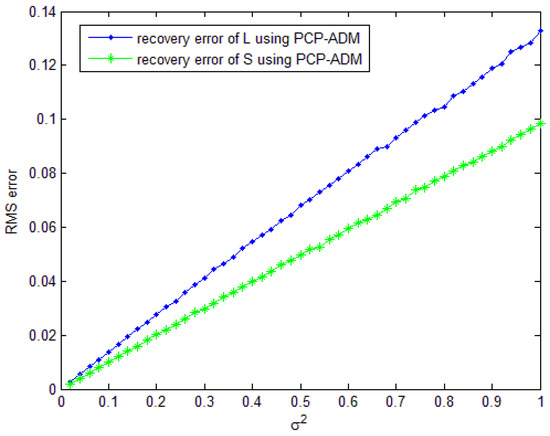

or when the maximum number of iterations () is reached. To measure the errors, we use the root-mean-squared (RMS) error, that is, the Frobenius norm of the estimation error divided by the square root of the number of entries, for the low-rank component and the sparse component, respectively. Figure 1 shows how the RMS errors vary with . As Figure 1 shows, the RMS error grows approximately linearly with the noise level. This behavior is consistent with Theorem 1 for PCP-ADM; the same phenomenon was observed in [13] with APG, which works on a principle very different from that of PCP-ADM.
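The data-generation and error-measurement steps can be sketched as follows. The square n × n shape, the N(0, 1/n) factor entries, the random-sign sparse entries, and the N(0, σ²) noise are illustrative assumptions, not necessarily the settings behind Figure 1, and the function names are hypothetical. The RMS helper matches the verbal definition above. With these choices, the RMS size of the noise itself is close to σ and scales exactly linearly with σ for a fixed random seed, which is the scale against which the linear growth in Figure 1 can be read.

```python
import numpy as np

def make_instance(n, r, rho_s, sigma, rng):
    """Hypothetical generator for M = L0 + S0 + Z0 (all n x n).

    Assumptions: X, Y have i.i.d. N(0, 1/n) entries; S0 has a support of
    size round(rho_s * n^2) chosen uniformly at random, with random-sign
    entries; Z0 has i.i.d. N(0, sigma^2) entries.
    """
    X = rng.standard_normal((n, r)) / np.sqrt(n)
    Y = rng.standard_normal((n, r)) / np.sqrt(n)
    L0 = X @ Y.T                                         # rank-r component
    S0 = np.zeros((n, n))
    m = int(round(rho_s * n * n))                        # support size
    support = rng.choice(n * n, size=m, replace=False)   # uniform at random
    S0.flat[support] = rng.choice([-1.0, 1.0], size=m)
    Z0 = sigma * rng.standard_normal((n, n))             # entry-wise noise
    return L0 + S0 + Z0, L0, S0, Z0

def rms(A, B):
    """RMS error: ||A - B||_F divided by sqrt(number of entries)."""
    return np.linalg.norm(A - B, "fro") / np.sqrt(A.size)
```

An experiment loop would pass M to a PCP solver and report `rms(L_hat, L0)` and `rms(S_hat, S0)` for each σ, averaged over trials.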

Figure 1.

Root-mean-squared (RMS) errors as a function of with . PCP-ADM: Principal Component Pursuit by Alternating Direction Method.

6. Conclusions

In this paper, we have investigated the stability of CPCP. Our main contribution is the proof of Theorem 1, which implies that the solution to the related convex program (2) is stable to small entry-wise noise under broad conditions. This extends the result in [13], which only allows $k = 2$. Moreover, in the numerical experiments, we investigated the performance of the PCP-ADM algorithm; the numerical results show that it is stable to small entry-wise noise.

Acknowledgments

The authors would like to thank the anonymous reviewers for their comments that helped to improve the quality of the paper. This research was supported by the National Natural Science Foundation of China (NSFC) under Grant U1533125, and the Scientific Research Program of the Education Department of Sichuan under Grant 16ZB0032.

Author Contributions

Qingshan You and Qun Wan contributed reagents/materials/analysis tools; Qingshan You wrote the paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Candès, E.J.; Recht, B. Exact matrix completion via convex optimization. Found. Comput. Math. 2009, 9, 717–772.

- Candès, E.J.; Plan, Y. Matrix completion with noise. Proc. IEEE 2010, 98, 925–936.

- Candès, E.J.; Tao, T. The power of convex relaxation: Near-optimal matrix completion. IEEE Trans. Inf. Theory 2010, 56, 2053–2080.

- Ellenberg, J. Fill in the blanks: Using math to turn lo-res datasets into hi-res samples. Wired 2010. Available online: https://www.wired.com/2010/02/ff_algorithm/all/1 (accessed on 26 January 2016).

- Chambolle, A.; Lions, P.-L. Image recovery via total variation minimization and related problems. Numer. Math. 1997, 76, 167–188.

- Claerbout, J.F.; Muir, F. Robust modeling of erratic data. Geophysics 1973, 38, 826–844.

- Zeng, B.; Fu, J. Directional discrete cosine transforms: A new framework for image coding. IEEE Trans. Circuits Syst. Video Technol. 2008, 18, 305–313.

- Elad, M.; Aharon, M. Image denoising via sparse and redundant representations over learned dictionaries. IEEE Trans. Image Process. 2006, 15, 3736–3745.

- Rodger, J.A. Toward reducing failure risk in an integrated vehicle health maintenance system: A fuzzy multi-sensor data fusion Kalman filter approach for IVHMS. Expert Syst. Appl. 2012, 39, 9821–9836.

- Candès, E.J.; Li, X.; Ma, Y.; Wright, J. Robust principal component analysis? J. ACM 2011.

- Wright, J.; Ganesh, A.; Min, K.; Ma, Y. Compressive Principal Component Pursuit. Available online: http://yima.csl.illinois.edu/psfile/CPCP.pdf (accessed on 9 April 2012).

- Recht, B.; Fazel, M.; Parrilo, P. Guaranteed minimum rank solutions of matrix equations via nuclear norm minimization. arXiv, 2007; arXiv:0706.4138.

- Zhou, Z.; Li, X.; Wright, J.; Candès, E.J.; Ma, Y. Stable Principal Component Pursuit. arXiv, 2010; arXiv:1001.2363v1.

- Yuan, X.; Yang, J. Sparse and low rank matrix decomposition via alternating direction method. Pac. J. Optim. 2013, 9, 167–180.

- Kontogiorgis, S.; Meyer, R. A variable-penalty alternating direction method for convex optimization. Math. Program. 1998, 83, 29–53.

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license ( http://creativecommons.org/licenses/by/4.0/).