Abstract

We study several classical decision problems on finite automata under the (Strong) Exponential Time Hypothesis. We focus on three types of problems: universality, equivalence, and emptiness of intersection. All these problems are known to be CoNP-hard for nondeterministic finite automata, even when restricted to unary input alphabets. A different type of problems on finite automata relates to aperiodicity and to synchronizing words. We also consider finite automata that work on commutative alphabets and those working on two-dimensional words.

1. Introduction

Many computer science students will get the impression, at least when taught the basics of the Chomsky hierarchy in their course on Formal Languages, that finite automata are fairly simple devices, and hence that typical decidability questions on finite automata are easy. Indeed, the non-emptiness problem for finite automata is solvable in polynomial time, as is the uniform word problem. (Even tighter descriptions of the complexities can be given within classical complexity theory, but this is not so important for our presentation here, as we mostly focus on polynomial versus exponential time.) This contrasts with the respective statements for higher levels of the Chomsky hierarchy.

However, this impression is somewhat misleading. Finite automata can also be viewed as edge-labeled directed graphs, and as many combinatorial problems are harder on directed graphs than on undirected ones, it should not come as a surprise that many interesting questions are actually NP-hard for finite automata.

We will study hard problems for finite automata under the perspective of the Exponential Time Hypothesis (ETH) and variants thereof, as surveyed in [1]. In particular, using the famous sparsification lemma [2], ETH implies that there is no $O^*(2^{o(n+m)})$ algorithm for Satisfiability (SAT) of m-clause 3CNF formulae with n variables, or 3SAT for short. Notice that for these reductions to work, we have to start out with 3SAT (i.e., with Boolean formulae in conjunctive normal form where each clause contains (at most) three literals), as it seems unlikely that sparsification also works for general formulae in conjunctive normal form; see [3]. Occasionally, we will also use SETH (Strong ETH); this hypothesis implies that there is no $O^*((2-\epsilon)^n)$ algorithm for solving the satisfiability problem (CNF-)SAT for general Boolean formulae in conjunctive normal form with n variables for any $\epsilon>0$.

Recall that the $O^*$ notation suppresses polynomial factors, measured in the overall input length. This notation is common in exact exponential-time algorithms, as well as in parameterized algorithms, as it allows one to focus on the decisive exponential part of the running time. We refer the reader to textbooks like [4,5,6].

Let us now discuss, briefly but more precisely, the objects and questions that we are going to study in the following. Mostly, we consider finite-state automata that read input words over the input alphabet Σ one-way, from left to right, and that accept when entering a final state upon reading the last letter of the word. We only consider deterministic finite automata (DFAs) and nondeterministic finite automata (NFAs). The language (set of words) accepted by a given automaton A is denoted by $L(A)$. For these classical devices, both variants of the membership problem are solvable in polynomial time and they are therefore irrelevant to the complexity studies we are going to undertake.

Rather, we are going to study the following three problems. In each case, we clarify the natural parameters that come with the input, as we will show algorithms whose running times depend on these parameters, and, more importantly, we will prove lower bounds for such algorithms based on (S)ETH.

Problem 1 (Universality).

Given an automaton A with input alphabet Σ, is $L(A)=\Sigma^*$? Parameters are the number q of states of A and the size of Σ.

Problem 2 (Equivalence).

Given two automata $A_1, A_2$ with input alphabet Σ, is $L(A_1)=L(A_2)$? Parameters are an upper bound q on the number of states of $A_1, A_2$ and the size of Σ.

Clearly, Universality reduces to Equivalence by choosing the automaton $A_2$ such that $L(A_2)=\Sigma^*$. Also, all these problems can be solved by computing the equivalent (minimal) deterministic automata, which requires time $O^*(2^q)$. In particular, notice that minimizing a DFA with s states takes time $O(s\log s)$ with Hopcroft’s algorithm, so that first converting a q-state NFA into an equivalent DFA (in time $O^*(2^q)$) and then minimizing a $2^q$-state DFA (in time $O^*(2^q)$) takes time $O^*(2^q)$ overall. Our results on these problems for NFAs are summarized in Table 1. The functions refer to the exponents, so, e.g., according to the first row, we will show in this paper that there is no algorithm for Universality for q-state NFAs with unary input alphabets.
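The determinization-based approach can be sketched in a few lines. This is a minimal illustration rather than code from the paper: the function name `nfa_is_universal` and the dictionary encoding of the transition relation are assumptions made for this example. The search explores only the reachable part of the subset automaton, so it visits at most $2^q$ subsets of a q-state NFA.

```python
def nfa_is_universal(alphabet, delta, initial, finals):
    """Decide L(A) = Sigma^* by exploring the reachable subset automaton.

    delta maps (state, letter) to a set of successor states (assumed encoding);
    initial and finals are sets of states.
    """
    start = frozenset(initial)
    seen = {start}
    stack = [start]
    while stack:
        subset = stack.pop()
        # A reachable subset without a final state witnesses a rejected word.
        if not (subset & finals):
            return False
        for letter in alphabet:
            nxt = frozenset(t for s in subset for t in delta.get((s, letter), ()))
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return True
```

Equivalence of two NFAs can be tested in the same spirit by exploring pairs of subsets and checking that both components agree on containing a final state.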

Table 1.

Universality/Equivalence; functions refer to exponents of bounding functions.

Problem 3 (Intersection).

Given k automata $A_1,\dots,A_k$, each with input alphabet Σ, is $\bigcap_{i=1}^{k} L(A_i)=\emptyset$? Parameters are the number of automata k, an upper bound q on the number of states of the automata $A_i$, and the size of Σ.

For (Emptiness of) Intersection, our results are summarized in Table 2, whose entries are to be read similarly to those of Table 1.

Table 2.

Intersection; functions refer to exponents of bounding functions.

All these problems are already computationally hard for tally NFAs, i.e., NFAs on unary inputs. Hence, we will study these first, before turning towards larger input alphabets. The classical complexity status of these and many more related problems is nicely surveyed in [7]; for the problems mentioned here, it is summarized in Table 3. Notice that (only) the last problem is also hard on deterministic finite automata.

Table 3.

The classical complexity status of three types of problems on nondeterministic finite automata.

In the second part of the paper, we are extending our research in two directions: we consider further hard problems on finite automata, more specifically, the question of whether a given DFA accepts an aperiodic language, and questions related to synchronizing words, and we also look at finite automata that work on objects different from strings.

In all the problems we study, we sometimes manage to show that the known or newly presented algorithms are in some sense tight, assuming (S)ETH, while there are also always cases where we can observe some gaps between lower and upper bounds. Without making this explicit in each case, such situations obviously pose interesting questions for future research. Also, the mentioned second part of the paper can only be seen as a teaser to look more carefully into computationally hard complexity questions related to automata, expressions, grammars etc. Most of the results have been presented in a preliminary form at the conference CIAA in Seoul, 2016; see [8].

2. Universality, Equivalence, Intersection: Unary Inputs

The simplest interesting question on tally finite automata is the following one. Given an NFA A with a unary input alphabet Σ, is $L(A)=\Sigma^*$? In [9], the corresponding problem for regular expressions was examined and shown to be CoNP-complete. This problem is also known as NEC (Non-empty Complement). As the reduction given in [9] starts off from 3SAT, we can easily analyze the proof to obtain the following result. In fact, it is often a good strategy to first start off with known NP-hardness results to see how these can be interpreted in terms of ETH-based lower bounds. As this example also shows, this recipe does not always yield results that match known upper bounds. Still, the analysis often points to weak spots of the hardness construction, and the natural idea is to attack these weak spots afterwards. This is exactly the strategy that we will follow for the first problem that we consider in this paper.

In the following, we sketch the construction for NP-hardness for tally NFAs (as a reduction from 3SAT, as in the paper of Stockmeyer and Meyer).

Let F be a given CNF formula with variables . F consists of the clauses . After a little bit of cleanup, we can assume that each variable occurs at most once in each clause. Let be the first n prime numbers. It is known that . To simplify the following argument, we will only use that , as shown in ([10], Satz, p. 214). If a natural number z satisfies

then z represents an assignment α to with . Then, we say that z satisfies F if this assignment satisfies F. Clearly, if for some , then z cannot represent any assignment, as is neither 0 nor 1. (This case does not occur for .) There is a DFA for with states. Moreover, there is even an NFA for

with at most many states.

To each clause with variables occurring in , for a suitable injective index function , there is a unique assignment to these variables that falsifies . This assignment can be represented by the language

with being uniquely determined by for . As (3SAT), can be accepted by a DFA with at most states. Hence, can be accepted by an NFA with at most many states. In conclusion, there is an NFA A for with at most many states with iff F is satisfiable. For the correctness, it is crucial to observe that if for some , then also . Hence, if , then for some . As , ℓ represents an assignment α that does not falsify each clause (by construction of the sets ), so that α satisfies the given formula. Conversely, if α satisfies F, then α can be represented by an integer z, . Now, as it represents an assignment, but neither for any , as . Observe that in the more classical setting, this proves that Non-Universality is NP-hard.
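The prime-number encoding can be made concrete on tiny instances. The sketch below is an illustration under stated assumptions, not the paper's code: a number z is taken to represent an assignment when its residue modulo the i-th prime is 0 or 1 for every variable, clauses are given as lists of nonzero integers (v for variable v, -v for its negation, an input convention chosen here), and each variable occurs at most once per clause. Every cyclic language is stored as a pair (modulus, accepted residues), which corresponds to a DFA whose automaton graph is a single cycle. The helper names are hypothetical.

```python
from math import prod

def first_primes(n):
    """Return the first n primes by trial division (fine for small n)."""
    primes, candidate = [], 2
    while len(primes) < n:
        if all(candidate % p for p in primes):
            primes.append(candidate)
        candidate += 1
    return primes

def cyclic_sets(clauses, n):
    """Build the cyclic unary languages whose union forms the NFA."""
    p = first_primes(n)
    sets = []
    for i in range(n):
        invalid = frozenset(range(2, p[i]))  # residues that are neither 0 nor 1
        if invalid:
            sets.append((p[i], invalid))
    for clause in clauses:
        modulus = prod(p[abs(lit) - 1] for lit in clause)
        # The unique falsifying assignment sets positive literals to 0, negative ones to 1.
        falsifying = frozenset(
            z for z in range(modulus)
            if all(z % p[abs(lit) - 1] == (0 if lit > 0 else 1) for lit in clause)
        )
        sets.append((modulus, falsifying))
    return sets

def rejected_length(clauses, n):
    """Return some z with a^z outside the union, or None if the union is universal."""
    sets = cyclic_sets(clauses, n)
    period = prod(first_primes(n))  # every modulus divides this product
    for z in range(period):
        if not any(z % m in residues for m, residues in sets):
            return z
    return None
```

A returned z decodes to a satisfying assignment by reading off z modulo each prime; `None` means the union is universal, so the formula is unsatisfiable. The brute-force scan over one full period is only meant for illustration and is exponential in n.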

We would like to emphasize one possible route to ETH-based results, namely, analyzing known NP-hardness reductions first and then refining them to get improved ETH-based results.

Unless ETH fails, for any , there is no -time algorithm for deciding, given a tally NFA A on q states, whether .

Assume that, for any , there was an -time algorithm for deciding, given a tally NFA A on q states, whether . Consider some 3SAT formula with n variables and m clauses. We can assume (by the Sparsification Lemma) that this 3SAT instance is sparse. We already described the construction of [9] above. So, we can obtain in polynomial time an NFA with many states as an instance of Universality. This instance can be solved in time by . Hence, can be used to solve the given 3SAT instance in time

in the interesting range of , which contradicts ETH. To formally do the necessary computations in the previous theorem (and similar results below), dealing with logarithmic terms in the exponent, we need to understand the correctness of some computations in the notation. We exemplify such a computation in the following.

Lemma 1.

: .

Proof.

This statement can be seen by the following line, using the rule of l’Hospital.

Notice that by our assumption. ☐

We are now trying to strengthen the assertion of the previous theorem. There are actually two weak spots in the mentioned reduction: (a) The ϵ-term in the statement of the theorem is due to logarithmic factors introduced by encodings with prime numbers; however, the encodings suggested in [9] leave rather big gaps of numbers that are not coding any useful information. (b) The -term is due to writing down all possible reasons for not satisfying any clause, which needs about many states (ignoring logarithmic terms) on its own; so, we are looking for a problem that allows for cheaper encodings of conflicts. To achieve our goals, we need the following theorem, see [5], Theorem 14.6.

Theorem 1.

Unless ETH fails, there is no -time algorithm for deciding if a given m-edge n-vertex graph has a (proper) 3-coloring.

As it seems to be hard to find proof details anywhere in the literature, we provide them in the following.

Proof.

Namely, in some standard NP-hardness reduction (from 3SAT via 3-Not-All-Equal-SAT), we could first sparsify the given 3SAT instance, obtaining an instance with N variables and M clauses. Also, we can assume that . The 3-NAE-SAT instance would replace each clause of the 3SAT instance by and , where is a special new variable. Hence, this instance has variables and clauses. The 3-coloring instance that we then obtain has vertices and edges in the variable gadgets, as well as vertices and edges in the clause gadgets, plus edges to connect the clause with the variable gadgets. Hence, in particular . This rules out -time algorithms for solving 3-Coloring on m-edge graphs. ☐

The previous result can be used, together with the sketched ideas, to prove the following theorem.

Theorem 2.

Unless ETH fails, there is no -time algorithm for deciding, given a tally NFA A on q states, whether .

Proof.

We are now explaining a reduction from 3-Coloring to tally NFA Universality. Let be a given graph with vertices . E consists of the edges . Let is a prime and . To simplify the following argument, we will only use that the expected number of primes below n is at least , as shown in [10], Satz, p. 214. Hence we can assume P contains at least primes for . (For the sake of clarity of presentation, we ignore some small multiplicative constants here and in the following.)

We group the vertices of V into blocks of size . A coloring within such a block can be encoded by a number between 0 and . Hence, a coloring is described by an l-tuple of numbers.

If a natural number z satisfies

where is representing the encoding of a block, then z is an encoding of a coloring of some vertex from V.

There is a DFA for with at most states, where j is a number that does not represent a valid coloring of the k-th block. Similarly, there is also a DFA for with this number of states (only the set of final states changes). Moreover, there is even an NFA for

with at most states.

To formally describe invalid colorings, we also need a function that associates the block number to a given vertex index (where the coloring information can be found), and partial functions for each vertex index j, yielding the coloring of vertex . We can cyclically extend by setting whenever is defined.

For each edge with end vertices with there are three colorings of that violate the properness condition. We can capture such a violation in the language . is regular, as

with

being finite, as . So, can be accepted by a DFA with at most states, ignoring constant factors. Hence, can be accepted by an NFA with at most many states. In conclusion, there is an NFA A for with at most many states with iff G is 3-colorable.

For the correctness, it is crucial to observe that if for some , then also . Hence, if , then for some . As , r represents a coloring c that does not color any edge improperly (by construction of the sets ). Conversely, if c properly colors G, then c can be represented by an integer z, . Now, as it represents a coloring, but neither for any , as .

Observe that in the more classical setting, this proves that Universality is CoNP-hard.

As ETH rules out -algorithms for solving 3-Coloring on m-edge graphs with n vertices, we can assume that we have as an upper bound on the number q of states of the NFA instance constructed as described above. If there were an -time algorithm for Universality of q-state tally NFAs, then we would find an algorithm for solving 3-Coloring that runs in time . This would contradict ETH. ☐

How good is this improved bound? There is a pretty easy algorithm to solve the universality problem. First, transform the given tally NFA into an equivalent tally DFA, then turn it into a DFA accepting the complement and check if this is empty. The last two steps are clearly doable in linear time, measured in the size of the DFA obtained in the first step. For the conversion of a q-state tally NFA into an equivalent -state DFA, it is known that is possible but also necessary [11]. The precise estimate on is

also known as Landau’s function. It is tightly related to the prime number estimate for we have already seen. So, in a sense, the ETH bound poses the question if there are other algorithms to decide universality for tally NFAs, radically different from the proposed one, not using DFA conversion first. Let us mention that there have indeed been proposals for different algorithms to test universality for NFAs; we only refer to [12], but we are not aware of any accompanying complexity analysis that shows the superiority of that approach over the classical one. Conversely, it might be possible to tighten the upper bound.
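To get a feeling for the growth of Landau's function, recall that its value at n is the largest least common multiple over all partitions of n, equivalently the largest order of a permutation of n elements. The following sketch computes it by dynamic programming over prime powers; the function names are hypothetical and the code is only meant to illustrate the definition.

```python
def primes_upto(n):
    """Return all primes p <= n by trial division."""
    return [p for p in range(2, n + 1)
            if all(p % d for d in range(2, int(p ** 0.5) + 1))]

def landau(n):
    """Largest lcm of a partition of n (Landau's function)."""
    # best[j] is the largest product of powers of distinct primes
    # (among the primes handled so far) whose sum is at most j.
    best = [1] * (n + 1)
    for p in primes_upto(n):
        updated = best[:]
        power = p
        while power <= n:
            for j in range(power, n + 1):
                updated[j] = max(updated[j], best[j - power] * power)
            power *= p
        best = updated
    return best[n]
```

For instance, `landau(7)` evaluates to 12, realized by the partition 7 = 3 + 4.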

Notice that this problem is trivial for tally DFAs by state complementation and hence reduction to the polynomial-time solvable emptiness problem.

We now turn to the equivalence problem for tally NFAs. As an easy corollary from Theorem 2, we obtain the next result.

Corollary 1.

Unless ETH fails, there is no -time algorithm for deciding equivalence of two NFAs and on at most q states and input alphabet .

Finally, we turn to Tally-DFA-Intersection and also to Tally-NFA-Intersection. CoNP-completeness of this problem, both for DFAs and for NFAs, was indicated in [13], referring to [9,14]. We make this more explicit in the following, in order to also obtain some ETH-based results.

Theorem 3.

Let k tally DFAs with input alphabet be given, each with at most q states. If ETH holds, then there is no algorithm with that decides if in time .

Proof.

We revisit our previous reduction (from an instance of 3-Coloring with and to some NFA instance for Universality), which delivered the union of many simple sets , each of which can be accepted by a DFA whose automaton graph is a simple cycle. These DFAs have states each. The complements of these languages can be also accepted by DFAs of the same size. Ignoring constants, originally the union of many such sets was formed. Considering now the intersection of the complements of the mentioned simple sets, we obtain a lower bound if and or, a bit weaker, if .

Finally, we can always merge two automata into one using the product construction. This allows us to halve the number of automata while squaring the size of the automata. This trade-off allows us to optimize the values for k and q.

Assume we have an algorithm with running time , then we can reduce 3-Coloring with m edges to intersection of automata each of size bounded by , and hence solve it in time , a contradiction. Similarly, there can be no algorithm with running time . ☐

Proposition 1.

Let k tally DFAs with input alphabet be given, each with at most q states. There is an algorithm that decides if in time .

Proof.

For the upper bound there are basically two algorithms. The natural approach to solve this intersection problem would be to first build the product automaton, which is of size , and then solve the emptiness problem in linear time on this device. This gives an overall running time of ; also see Theorem 8.3 in [15]. On the other hand, we can test all words up to length . As each DFA has at most q states, processing a word enters a cycle in at most q steps. Also, the size of the cycle in each DFA is bounded by q. The least common multiple of all integers bounded by q, i.e., $e^{\psi(q)}$, where ψ is the second Chebyshev function, is bounded by ; see Propositions 3.2 and 5.1 in [16]. This yields an upper bound of the running time. ☐
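The second algorithm of the proof can be sketched as follows. The encoding is an assumption made for this example: a tally DFA is a triple (succ, initial, finals), where succ[s] is the unique successor of state s and states are numbered from 0. After at most q steps every DFA runs on its cycle, and the joint behaviour then repeats with a period dividing lcm(1, ..., q), so the sketch tests all lengths below q + lcm(1, ..., q). It needs Python 3.9 or later for `math.lcm`.

```python
from math import lcm

def common_length(dfas):
    """Return the least n such that every tally DFA accepts a^n, or None."""
    q = max(len(succ) for succ, _, _ in dfas)
    bound = q + lcm(*range(1, q + 1))
    states = [initial for _, initial, _ in dfas]
    for length in range(bound):
        if all(s in finals for s, (_, _, finals) in zip(states, dfas)):
            return length
        # Read one more letter in all automata simultaneously.
        states = [succ[s] for s, (succ, _, _) in zip(states, dfas)]
    return None
```

The loop keeps only one state per automaton in memory, whereas the product automaton would be built explicitly in the first approach.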

Hence in the case where the exponent is dominated by k, the upper and lower bound differ by a factor of , and in the other case by a factor of .

Remark 1.

From the perspective of parameterized complexity, we could also (alternatively) only look at the parameter q, as in the case of DFAs (after some cleaning; as there are no more than many functions available as state transition functions, multiplied by choices of final state sets, as well as by the q choices of initial states); the corresponding bound for NFAs is worse. However, the corresponding algorithm for solving Tally-DFA-Intersection for q-state DFAs is far from practical for any . We can slightly improve our bound on k by observing that from the potential choices of final state sets for each of the choices of transition functions and initial states, at most one is relevant for the question at hand, as the intersection of languages accepted by DFAs with identical transition functions and initial states is accepted by one DFA with the same transition function and initial state whose set of final states is just the intersection of the sets of final states of the previously mentioned DFAs; if this intersection turns out to be empty, then also the intersection of the languages in question is empty. Hence, we can assume that . A further improvement is due to the following modified algorithm: First, we construct DFAs that accept the complements of the languages accepted by . Then, we build an NFA that accepts . Notice that has about at most states by using some standard construction. If we check the corresponding DFA for Universality, this would take, altogether, time for unary input alphabets.

3. The Non-Tally Case

In the classical setting, the automata problems that we study are harder for binary (and larger) input alphabet sizes (PSPACE-complete; for instance, see [17]). Also, notice that the best-known algorithms are also slower in this case. This should be reflected in the lower bounds that we can obtain for them (under ETH), too.

Let us describe a modification (and in a sense a simplification) of our reduction from 3-Coloring. Let be an undirected graph. We construct an NFA A (on a ternary alphabet for simplicity) as follows. Σ corresponds to the set of colors with which we like to label the vertices of the graph. The state set is . W.l.o.g., . For and , we add the following transitions.

- if or if ;

- (for ) if or if ;

- .

Moreover, s is the only initial state and all states are final states. If , then this corresponds to a coloring via , , that is not proper. Namely, z drives A through the states s, , , …, , s for some and some . By construction, this is only possible if , establishing the claim. Conversely, if is a coloring that is improper, then there is an edge, say, , such that for some . Then, , where , . Namely, this z will drive A through the states s, , , …, , s.

Hence, for the constructed automaton A, if and only if there is some proper coloring of G. For such a proper coloring c, , where , .

As , the number of states of A is . So, we can conclude a lower bound of the form . We are now further modifying this construction idea to obtain the following tight bound.

Theorem 4.

Assuming ETH, there is no algorithm for solving Universality for q-state NFAs with binary input alphabets that runs in time .

Proof.

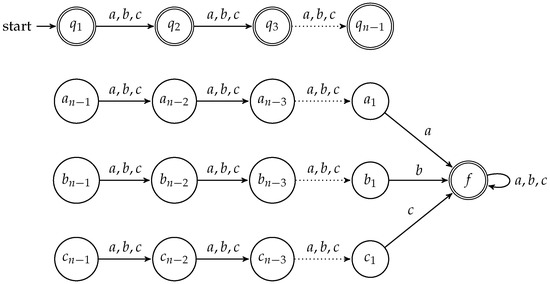

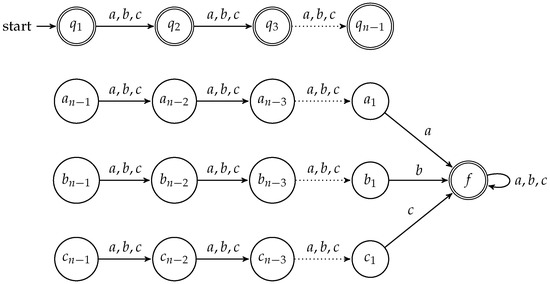

As we can encode the union of all the NFAs above more succinctly, we get a better bound. Let be an undirected graph, and as above. Let represent three colors. Then there is a natural correspondence of a word in to a coloring of the graph, where the i-th letter in the word corresponds to the color of . We construct an automaton with states, as sketched in Figure 1. Notice that this figure only shows the backbone of the construction. Additionally, for each edge with in the graph, we add three types of transitions to the automaton: , , . These three transitions are meant to reflect the three possibilities to improperly color the given graph due to assigning and the same color. Inputs of length n encode a coloring of the vertices. First notice that the automaton will accept every word of length not equal to n. Namely, words shorter than n can drive the automaton into one of the states through . Also, as argued below, the automaton can accept all words longer than n, starting with an improper coloring coding of the word, as this can drive the automaton into state f. Further, our construction enables the check of an improper coloring. A coloring is improper if two vertices that are connected have the same color, so we should accept a word iff and and . Pick such a word and assume, without loss of generality, that . Then the automaton will accept w, since the additional edge allows for an accepting run terminating in the state f. Note that the automaton accepts all words of length at most . Also, it accepts a word of length at least n iff the prefix of length n corresponds to a bad coloring. Hence the automaton accepts all words iff all colorings are bad.

Figure 1.

A sketch of the NFA construction of Theorem 4.

The converse direction is also easily seen. Assume there is a valid coloring represented by a word . Assume by contradiction that this word is accepted by the automaton. As the word has length n, an accepting run has to terminate in f, and so one of the edges added to the automaton backbone as shown in Figure 1 has to be part of this run. Assume, without loss of generality, that this is the edge . Then , as the edge was chosen, and since this run leads to f, also the letter at position has to equal a. However, this is then not a valid coloring; hence the assumption that the word is accepted by the automaton was false. Hence, if there is a valid coloring, the automaton does not accept all words.

It is simple to change the construction given above to get away with binary input alphabets (instead of ternary ones), for instance, by encoding a as 00, b as 01 and c as 10. ☐
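The idea of the ternary construction can be tried out on small graphs. The sketch below is one possible linear-size realization that matches the properties stated in the proof; it is not claimed to coincide with Figure 1, and the state names ("s", "wait", "f") are hypothetical. Vertices are numbered from 0, the letter at position i is the color of vertex i, and a countdown state remembers a color x together with the number of letters to skip before the letter that must again be x. Universality is then checked by a plain subset construction.

```python
def coloring_nfa(n, edges, colors="abc"):
    """NFA that accepts all words shorter than n and all words whose
    length-n prefix encodes an improper coloring of the graph."""
    delta = {}

    def add(src, letter, dst):
        delta.setdefault((src, letter), set()).add(dst)

    for i in range(n - 1):                      # backbone: count letters read
        for x in colors:
            add(("s", i), x, ("s", i + 1))
    for u, v in edges:                          # guess a monochromatic edge
        i, j = min(u, v), max(u, v)
        for x in colors:
            add(("s", i), x, ("wait", x, j - i - 1))
    for x in colors:
        for d in range(1, n):                   # skip the letters in between
            for y in colors:
                add(("wait", x, d), y, ("wait", x, d - 1))
        add(("wait", x, 0), x, "f")             # second endpoint has color x too
        add("f", x, "f")                        # f absorbs the rest of the word
    finals = {("s", i) for i in range(n)} | {"f"}
    return delta, ("s", 0), finals

def is_universal(delta, initial, finals, alphabet):
    """Subset construction: universal iff every reachable subset hits finals."""
    start = frozenset([initial])
    seen, stack = {start}, [start]
    while stack:
        subset = stack.pop()
        if not (subset & finals):
            return False
        for x in alphabet:
            nxt = frozenset(t for s in subset for t in delta.get((s, x), ()))
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return True
```

Under these assumptions, the automaton has a number of states linear in n, and it is universal exactly when the graph has no proper 3-coloring.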

We are now turning towards DFA-Intersection and also to NFA-Intersection. In the classical perspective, both are PSPACE-complete problems. An adaptation of our preceding reduction from 3-Coloring, considering DFAs each with states obtained from a graph instance , yields the next result, where upper and lower bounds perfectly match.

In the following proposition, the parameters are k, the number of automata, q, the maximum size of these automata, and n, the input length. The parameters k and q are both upper bounded by n. Recall that the notation drops polynomial factors in n even though n is not explicitly mentioned in the expression.

Proposition 2.

There is no algorithm that, given k DFAs (or NFAs) with arbitrary input alphabet, each with at most q states, decides if in time unless ETH fails. Conversely, there is an algorithm that, given k DFAs (or NFAs) with arbitrary input alphabet, each with at most q states, decides if in time .

Proof.

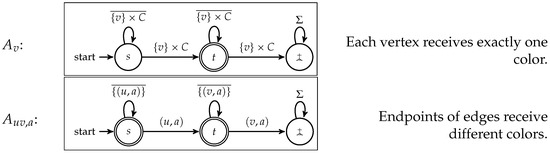

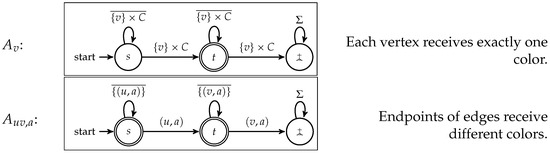

The hardness is by adaptation of the 3-Coloring reduction we gave for Universality. For parameters k and q, we take a graph with . In this proof, we neglect the use of some ceiling functions for the sake of readability. For the DFAs, choose the alphabet , . The states are s, t, and a trash state. For each vertex v, we define the DFA , and for each edge and each color a, we define the DFA , as described in Figure 2. Clearly, we have many of these DFAs .

Figure 2.

The DFAs necessary to express a proper coloring.

We can compute the intersection for each block of automata into a single DFA in polynomial time (with respect to q). This can be most easily seen by performing a multi-product construction. Hence, given a block of automata with transition function , we output the new block automaton whose set of states corresponds to all (q many) ternary numbers, interpreted as -tuples over the three states s, t, and the trash state. We output a transition in the table of in the following situation:

So, we have to look up times the tables of the ’s, where each of the look-ups takes roughly time.

This way, we obtain an automaton with q states and we reduce the number of DFAs to . Hence, we got k DFAs each with q states. If there was an algorithm solving DFA-Intersection in time , then this would result in an algorithm solving 3-Coloring in time , contradicting ETH.

Conversely, given k DFAs with arbitrary input alphabet, each with at most q states (q is fixed), we can turn these into one DFA with states by the well-known product construction, which allows us to solve the DFA-Intersection question in time . ☐
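The product construction behind the upper bound can be sketched as an on-the-fly search, so that only reachable state tuples are generated. The encoding is an assumption made for this example: each DFA is a triple (delta, initial, finals) with a total transition function stored as a dictionary from (state, letter) to state, and letters are one-character strings. The function name is hypothetical.

```python
from collections import deque

def shortest_common_word(alphabet, dfas):
    """Return a shortest word accepted by all DFAs, or None if none exists."""
    start = tuple(initial for _, initial, _ in dfas)
    seen = {start}
    queue = deque([(start, "")])
    while queue:
        states, word = queue.popleft()
        if all(s in finals for s, (_, _, finals) in zip(states, dfas)):
            return word
        for letter in alphabet:
            # One step of the product automaton: advance every component.
            nxt = tuple(delta[(s, letter)]
                        for s, (delta, _, _) in zip(states, dfas))
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, word + letter))
    return None
```

With k DFAs of at most q states each, the search visits at most $q^k$ tuples, which is where the exponential dependence on k comes from.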

Remark 2.

The proof of the previous theorem also implies that no such algorithm even if restricted to any infinite subset of tuples in time can exist, unless ETH fails. Especially, if q is fixed to a constant greater than 2, no algorithm in time can exist, unless ETH fails.

We can encode the large alphabet of the previous construction into the binary one, but we get a weaker result. In particular, the DFAs and in this revised construction have states, and not constantly many as before. This means that we have to spell out the paths between the states s and t, but this is not necessary with the trash state.

Proposition 3.

There is no algorithm that, given k DFAs with binary input alphabet, each with at most q states, decides if in time or , unless ETH fails.

Proof.

We reduce this case to the case of unbounded alphabet size. Assume we are given k DFAs over the alphabet Σ, where . We encode each letter of Σ by a word of length (block code) over the alphabet .

In general, when converting an automaton from an alphabet Σ to an alphabet , the size of the automaton might increase by a factor of , as one might need to build a tree distinguishing all words of length .

But we already know that, for the unbounded alphabet size, the lower bound is achieved by using only the automata from that proof (see Figure 2). These automata are special, as there are at most many edges leaving each state, while all other edges loop.

Hence, we only increase the number of states (and also edges) by a factor of . ☐

The following proposition gives a matching upper bound:

Proposition 4.

There is an algorithm that, given k DFAs with binary input alphabet, each with at most q states, decides if in time .

Proof.

We will actually give two algorithms that solve this problem. One has a running time of and one a running time of . The result then follows.

(a) We can first construct the product automaton of the DFAs , which is a DFA with at most many states. In this automaton, one can test emptiness in time linear in the number of states.

(b) For the other algorithm, notice that for a fixed number q, a large number k of automata seems not to increase the complexity of the intersection problem, as there are only finitely many different DFAs with at most q many states. Intersection is easy to compute for DFAs with the same underlying labeled graph. On binary alphabets, each state has exactly two outgoing edges. Thus, there are possible choices for the outgoing edges of each state. Hence in total there are different such DFAs. By merging first all DFAs with the same graph structure we can assume that . We can now proceed as in (a). ☐
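The merging step of algorithm (b) can be sketched as follows. As an assumption of this example, DFAs are triples (delta, initial, finals) with delta a dictionary, and two DFAs count as having the same graph only if their transition dictionaries and initial states are literally equal (no isomorphism test is attempted). The function name is hypothetical.

```python
def merge_same_graph(dfas):
    """Replace DFAs that share transitions and initial state by one DFA
    whose final states are the intersection of their final state sets."""
    merged = {}
    for delta, initial, finals in dfas:
        key = (frozenset(delta.items()), initial)
        if key in merged:
            _, _, previous = merged[key]
            merged[key] = (delta, initial, previous & frozenset(finals))
        else:
            merged[key] = (delta, initial, frozenset(finals))
    return list(merged.values())
```

The intersection of the accepted languages is unchanged by this step, and the number of remaining DFAs is bounded by the number of distinct pairs of transition function and initial state.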

Let us conclude this section with a kind of historical remark, linking three papers from the literature that can also be used as a starting point for ETH-based results on DFA-Intersection. Wareham presented several reductions when discussing parameterized complexity issues for DFA-Intersection in [15]. In particular, Lemma 6 describes a reduction from Dominating Set that produces from a given n-vertex graph (and parameter ) a set of DFAs, each of at most states and with an n-letter input alphabet, so that we can rule out algorithms for DFA-Intersection in this way. In the long version of [18], it is shown that Subset Sum, parameterized by the number n of numbers, does not allow for an algorithm running in time , unless ETH fails. Looking at the proof, it becomes clear that (under ETH) there is also no -algorithm for Subset Sum, parameterized by the maximum number N of bits per number. Karakostas, Lipton and Viglas, apparently unaware of the ETH framework at that time, showed in [19] that an -algorithm for DFA-Intersection would entail a -algorithm for Subset Sum, for any . Although the latter condition looks rather like SETH, it is at least an indication that we could also make use of other reductions in the literature to obtain the kinds of bounds we are looking for. Also, Wehar showed in [20] that there is no -algorithm for DFA-Intersection, unless NL equals P. This indicates another way of showing lower bounds, connecting to questions of classical Complexity Theory rather than using (S)ETH.

4. Related Problems

Our studies so far only touched the tip of the iceberg. Let us mention and briefly discuss at least some related problems for finite automata in this section.

4.1. The Aperiodicity Problem

Recall that a regular language is called star-free (or aperiodic) if it can be expressed, starting from finite sets, with the Boolean operations and with concatenation. (So, Kleene star is disallowed in the set constructions, in contrast to the ‘usual’ form of regular expressions.) We denote the subclass of the regular languages consisting of the star-free languages by .

It is known that a language is star-free if and only if its syntactic monoid is aperiodic [21], that is, it does not contain any nontrivial group. Here we will use a purely automata-theoretic characterization: A language accepted by some minimum-state DFA A is star-free iff for every input word w, for every integer and for every state q, implies .

This allows a minimal automaton of a star-free language to contain a cycle, but if the non-empty word w along a cycle starting at q is a power of another non-empty word u, i.e., for some , then u also forms a cycle starting at q. For example, the language is not aperiodic as forms a cycle starting at any state of the minimal automaton, but a does not. However, is aperiodic, as are the only cycles in the minimal automaton (except for cycles starting at the sink state).

For this class (and in fact for any other subregular language class), one can ask the following decision problem. Given a DFA A, is ? This problem (called Aperiodicity in the following) was shown to be PSPACE-complete by Cho and Huynh in [22]. It relies on the following characterization of aperiodicity: Cho and Huynh present a reduction that first (again) proves that DFA-Intersection-Nonemptiness is PSPACE-complete (by giving a direct simulation of the computations of a polynomial-space bounded TM) and then show how to alter this reduction in order to obtain the desired result. Unfortunately, this type of reduction is not very useful for ETH-based lower-bound proofs. However, in an earlier paper, Stern [23] proved that Aperiodicity is CoNP-hard. His reduction is from 3SAT (on n variables and m clauses), and it produces a minimum-state DFA with many states. Hence, we can immediately conclude a lower bound of for Aperiodicity on q-state DFAs. This can be improved as follows.

The basic idea of the proof of the next proposition is to reduce the intersection problem (in a restricted version) to aperiodicity. Given language , consider the language , and let A be its minimal automaton. One direction is easy: if the intersection of the languages contains a word u, then forms a cycle in A starting at the initial state, but does not. This gives the idea to show that if there is a word w in the intersection, then the language L is not aperiodic. The other direction is more involved and requires that the languages are themselves aperiodic, and that k is a prime.

Theorem 5.

Assuming ETH, there is no algorithm for solving Aperiodicity for q-state DFAs on arbitrary input alphabets that runs in time .

Proof.

We will show this by reducing a restricted version of the intersection problem to Aperiodicity. Proposition 2 is stated only for general automata, but as the hardness part of the proof only uses automata which are aperiodic, the following claim holds:

Claim 1.

Let be fixed. Let be star-free languages given by their minimal automata over some alphabet Σ, where the number of states of each is bounded by q. Then there is no algorithm deciding if in time unless ETH fails.

We will use the claim only for .

Let be star-free languages given by their minimal automata , where and for we let . (For technical reasons explained later, the initial state of is whereas the initial states of the other are called .) Without loss of generality, we can assume that k is a prime. If not, one can simply add multiple times to the list of automata, until the length of the list is a prime. Moreover, we can assume that the state alphabets satisfy, for all , , i.e., the state alphabets are pairwise disjoint.

We let

and our main goal will be to show that L is star-free if and only if . This will complete the proof, as the number of states for an automaton recognizing L can be bounded by by the construction given below, and as by the reduction no algorithm deciding Aperiodicity in time can exist unless ETH fails.

If one of the is empty, a property that can be tested in linear time, then the intersection is empty and hence is also star-free. So from now on, we assume that for all , we have .

In the following, we will first give an explicit construction of the minimal automaton A of L. We use the characterization from above that A is star-free iff for every input w and every integer and every state q, implies .

First we show the “easy direction”: given a word in the intersection, , q being the initial state of A, and , the condition for being aperiodic fails. Then we proceed with the “hard direction”: the existence of a word w, an integer and a state q of A such that and implies that the maximal prefix of w in is in the intersection , and hence the intersection is non-empty.

Step 1: Construction of the minimal automaton of L

The idea is to construct an automaton over the alphabet , , which is basically a large cycle consisting of the automata . For , we connect the accepting state(s) of to the initial state of via an edge labeled $, and finally we connect the accepting state(s) of to the initial state of via an edge labeled $.

The details of this construction are a bit more involved. By minimality, each of the might contain at most one sink state, i.e., a state that has no path leading to an accepting state. Let be the set of states of that contain a path to a state from . As all languages are non-empty, the initial state of is in . In particular, each is non-empty. Let , where {q1,  }, and consists of the following sets:

- for (recall that the state sets are pairwise disjoint),

- ,

- ,

- ,

- if ,

- {( , σ, ) ∣ σ ∈ Σ},

- {(q, σ, ) ∣ for all where there is no such that is in one of the sets above}.

Since the initial state of was called , we could add a new state and make sure there are no edges from connecting to . This is needed as otherwise we might recognize additional words looping within from to . Also we merge all sink states of the into a single sink state .

We need to show that the automaton is minimal. For this we need to show that no pair of states can be merged, i.e., there exists a word that leads to the final state for exactly one of them. First note that no word leads from the sink state to the final state , and there is a path from every other state. Hence the sink state cannot be merged with any other state. Also note that cannot be merged with any other state as it is the only final state.

Hence we need to show that , , cannot be merged. If and are both in some , then these states correspond to states in and by minimality of they cannot be merged. Assume that and for , then there is a path from to the final state using exactly many $-transitions, and such a path cannot exist for .

So A is the minimal automaton for L.

Step 2: “Easy direction”

Assume the intersection of the is nonempty and w is a word in the intersection. Then and and since A is the minimal automaton for L, this implies that L is not star-free.

Step 3: “Hard direction”

Assume there is a common word w that forms a nontrivial cycle in A for , i.e., there exists a state q such that and . First we can rule out that q is the sink state, as all cycles through the sink state are trivial.

Assume that , i.e., w does not contain a $ symbol. Then the cycle is contained completely within one of the and hence there is a corresponding cycle in which cannot be nontrivial as the are aperiodic.

Recall that the states corresponding to the initial states of are called in A (strictly speaking for this is not correct, but behaves similarly enough in the following). First note that if forms a cycle starting at the state q, then forms a cycle starting at the state . We will use this idea to find a cycle starting at one of the states for corresponding to an initial state of . So w is of the form , where and . Since is a word ending with a $ symbol, we have that is one of the states corresponding to an initial state of an . By rotating one easily sees that . Also assume by contradiction that . We get by removing one loop through . However, this implies by induction on c that , contradicting the assumption that w forms a nontrivial cycle. Hence also forms a nontrivial cycle starting at . We let .

Also since ends with a $ symbol we have where (for as ), and since the number of $ in is proportional to t, we get , where (this also uses the fact that , hence no prefix can lead to the sink state). Also since k is a prime, for each j, there exists a t such that . However, then this implies that where for all . Hence for all (note this is also true for as the out-going transitions from are the same as the ones from ), and so the intersection is not empty. ☐

If we use the automaton over the binary alphabet from Proposition 3 in the proof of the previous theorem, we require automata of logarithmic size instead of constant size for the hardness of the intersection problem. Hence, the number of states decreases by a factor of . This will give us the following result.

Corollary 2.

Assuming ETH, there is no algorithm for solving Aperiodicity for q-state DFAs on binary input alphabets running in time .

We are not aware of any published exponential-time algorithm for solving Aperiodicity. However, as some NP-hard problems involving cycles in directed graphs admit subexponential-time algorithms, see [24,25], our lower bound could even be matched. Nonetheless, this stays an open question.

Proposition 5.

There is an algorithm for solving Aperiodicity that runs in time on a given q-state DFA with arbitrary input alphabet.

Proof.

Namely, first (as a preparatory step) create a table of size , classifying those mappings as good that have the property that for some state p of the given DFA A, but for some . The table creation needs time .

In a second column of our table, write down if a certain mapping f is realizable, i.e., does there exist a word such that ? In order to be able to reconstruct the word realizing f, either note that f is the identity (and hence f is realized as ), or write down a realizable map g, i.e., for some , and a letter , such that . We build this second column by dynamic programming, starting with only one realizable entry, the identity, and then we keep looping through the whole alphabet (for all ) and all realizable mappings and mark as realizable until no further changes happen to the table. Hence, this part of the algorithm will perform at most loops. Finally, we have to check in our table if there are any mappings that are both realizable and good. If so, we can construct a star witness, proving that is not aperiodic. If not, we know that the language is aperiodic. The overall running time of the algorithm is . ☐
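The table-based procedure from the proof can be sketched in Python as follows. This is only an illustrative sketch under our own assumptions: states are numbered 0, …, q−1, the complete minimum-state DFA is passed as a dictionary `delta` that maps each letter to a tuple of successor states, and the function names `realizable_maps`, `violates` and `star_witness` are hypothetical. In contrast to the proof, the sketch does not tabulate all mappings; it only generates the realizable ones and tests each of them against the cycle condition of the automata-theoretic characterization.

```python
from collections import deque


def realizable_maps(n_states, delta):
    """Return a dict mapping each realizable state mapping f (as a tuple)
    to one word that realizes it, found by closure from the identity."""
    identity = tuple(range(n_states))
    witness = {identity: ""}          # the identity is realized by the empty word
    queue = deque([identity])
    while queue:
        g = queue.popleft()
        for letter, succ in delta.items():
            # mapping of the word (word of g) followed by `letter`
            f = tuple(succ[g[p]] for p in range(n_states))
            if f not in witness:
                witness[f] = witness[g] + letter
                queue.append(f)
    return witness


def violates(f):
    """True if some state p satisfies f^n(p) = p for some n >= 1 but f(p) != p."""
    for p in range(len(f)):
        x, steps = f[p], 1
        while x != p and steps <= len(f):
            x, steps = f[x], steps + 1
        if x == p and f[p] != p:
            return True
    return False


def star_witness(n_states, delta):
    """Return a word violating the aperiodicity condition, or None."""
    for f, word in realizable_maps(n_states, delta).items():
        if violates(f):
            return word
    return None


# Two small examples chosen by us for illustration (minimal complete DFAs):
# (aa)* with states 0, 1, and (ab)* with states 0, 1 and sink 2.
print(star_witness(2, {"a": (1, 0)}))                       # a
print(star_witness(3, {"a": (1, 2, 2), "b": (2, 0, 2)}))    # None
```

Generating only the realizable mappings does not improve the worst case, since all mappings may be realizable, but it avoids building the full table on small inputs.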

Another related problem asks whether, given a DFA A, the language belongs to , which means testing if is quasi-aperiodic.

Let us make this more precise. Let be a language, M be its syntactic monoid, and its syntactic morphism. A regular language is in AC⁰ iff the syntactic morphism is quasi-aperiodic, i.e., for all the subset of M does not contain a non-trivial subgroup of M. Analyzing the PSPACE-hardness proof of Beaudry, McKenzie and Thérien given in [26], we see that the same lower bound result as stated for Aperiodicity holds for this question, as well. We can reduce testing if the syntactic monoid of a language is aperiodic to the question whether the syntactic morphism is quasi-aperiodic by adding a neutral letter. This will give us the same lower bound as deciding aperiodicity. For the upper bound, note that we only need to test if contains a group up to .

Corollary 3.

Assuming ETH, there is no algorithm that runs in time and decides, given a q-state DFA A on arbitrary input alphabets, whether or not the syntactic morphism of is quasi-aperiodic. Conversely, we can decide if a q-state automaton recognizes a language with a quasi-aperiodic syntactic morphism in time .

It would also be interesting to study other “hard subfamily problems” for regular languages, as exemplified with [27], within the ETH framework. In addition, it would be interesting to systematically study the complexity of the problems under scrutiny in the previous section, restricted to subclasses of regular languages, as we did in Claim 1 of the proof of Theorem 5.

4.2. Synchronizing Words

A deterministic finite semi-automaton (DFSA) A can be specified as , where, for each , there is a mapping . Given some DFSA A, a synchronizing word enjoys

The Synchronizing Word (SW) problem is the question, given a DFSA A and an integer ℓ, whether there exists a synchronizing word w of length at most ℓ for A. This decision problem is related to the arguably most famous combinatorial conjecture in Formal Languages, which is Černý’s conjecture [28], stating (in a relaxed form) that there is always a synchronizing word of size at most for any DFSA, should there be a synchronizing word at all.

We have undertaken a multi-parameter analysis of this problem in [29]. The most straightforward parameters are , , and an upper bound ℓ on the length of the synchronizing word we are looking for. In [29], algorithms with running times of and of were given, complemented by proofs that show that there is neither an -time (with unbounded input alphabet size) nor an -time algorithm (for any ) under ETH or SETH, respectively.

From the reduction presented in ([29] Theorem 12), we cannot get any lower bounds for bounded input alphabets; the dependency on Σ for the mentioned -time algorithm is only linear.

We were not able to answer this question completely for SW, but (only) for a more general version of this problem. Given a DFSA and a state set , a -synchronizing word satisfies

The -Synchronizing Word (-SW) problem is the question, given a DFSA A, a set of states and an integer ℓ, whether there exists a -synchronizing word w of length at most ℓ for A. Correspondingly, the -Synchronizing Word problem can be stated. Notice that while SW is NP-complete, -SW is even PSPACE-complete; see [30].

Theorem 6.

There is an algorithm for solving -SW on bounded input alphabets that runs in time for q-state deterministic finite semi-automata. Conversely, assuming ETH, there is no -time algorithm for this task.

Proof.

It was already observed in [29] that the algorithm given there for SW transfers to -SW, as this is only a breadth-first search algorithm on an auxiliary graph (of exponential size, with vertex set ). The PSPACE-hardness proof contained in ([30] Theorem 1.22), based on [31], reduces from DFA-Intersection. Given k automata each with at most s states, with input alphabet Σ, one deterministic finite semi-automaton is constructed such that . Hence, an -time algorithm for -SW would result in an -time algorithm for DFA-Intersection, contradicting Proposition 3. ☐
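The breadth-first search on the auxiliary graph of state subsets can be sketched as follows. This is a minimal sketch under our own assumptions: the DFSA is given as a dictionary `delta` mapping each letter to a dictionary from states to states, a word is taken to synchronize a state set if it maps the whole set to a single state, and the name `shortest_sync_word` is hypothetical.

```python
from collections import deque


def shortest_sync_word(delta, subset):
    """Breadth-first search over subsets of states.

    Returns a shortest word mapping `subset` to a single state,
    or None if no such word exists."""
    start = frozenset(subset)
    word_for = {start: ""}            # visited subsets with a word reaching them
    queue = deque([start])
    while queue:
        current = queue.popleft()
        if len(current) == 1:
            return word_for[current]
        for letter in sorted(delta):
            image = frozenset(delta[letter][p] for p in current)
            if image not in word_for:
                word_for[image] = word_for[current] + letter
                queue.append(image)
    return None


# A three-state example of our own choosing: letter a is a cyclic shift,
# letter b maps state 2 to state 0 and fixes the other states.
example = {"a": {0: 1, 1: 2, 2: 0}, "b": {0: 0, 1: 1, 2: 0}}
print(shortest_sync_word(example, {0, 1, 2}))   # baab
```

To answer the decision problem for a given bound ℓ, one would compare the length of the returned word with ℓ. Passing the full state set as `subset` gives the special case SW.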

5. SETH-Based Bounds: Length-Bounded Problem Variants

Cho and Huynh studied in [32] the complexity of a so-called bounded version of Universality, where in addition to the automaton A with input alphabet Σ, a number k (encoded in unary) is input, and the question is if . This problem is again CoNP-complete for general alphabets. The proof given in [32] is by reduction from the n-Step Halting Problem for NTMs, somehow modifying earlier constructions of [33]. Our reduction from 3-Coloring given above also shows the mentioned CoNP-completeness result in a more standard way. Our ETH-based result also transfers into this setting; possibly, there are now better algorithms for solving Bounded Universality, as this problem might be a bit easier compared to Universality. We will discuss this a bit further below.

Notice that in [29], another SETH-based result relating to synchronizing words was derived. Namely, it was shown that (under SETH) there is no algorithm that determines, given a deterministic finite semi-automaton and an integer ℓ, whether or not there is a synchronizing word for A, and that runs in time for any . Here, Σ is part of the input; the statement is also true for fixed binary input alphabets. We will use this result now to show some lower bounds for the bounded versions of more classical problems we considered above.

Theorem 7.

There is an algorithm with running time that, given k DFAs over the input alphabet Σ and an integer ℓ, decides whether or not there is a word accepted by all these DFAs. Conversely, there is no algorithm that solves this problem in time for any , unless SETH fails.

Proof.

The mentioned algorithm simply tests all words of length up to ℓ. For the lower bound, assume for the sake of contradiction that there is an algorithm with such a running time for Bounded DFA-Intersection. We show how to use it to find, in time , a synchronizing word of length at most ℓ for a given DFSA and an integer ℓ. From A, we build many DFAs (namely, with start state s and with unique final state f, while the transition function of all is identical, corresponding to ). Furthermore, let be the automaton that accepts any word of length at most ℓ. Now, we create many instances of Bounded DFA-Intersection. Namely, is given by . Now, A has a synchronizing word of length at most ℓ if and only if for some , is a YES-instance. This contradicts the SETH-based lower bound for synchronizing words from [29] recalled above. ☐
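The reduction in this proof can be illustrated by the following sketch. It rests on our own assumptions: the DFSA is a dictionary `delta` from states to dictionaries from letters to states, a DFA is a triple of transition table, start state and set of final states, and all function names are hypothetical. The brute-force routine `bounded_intersection` stands in for the assumed algorithm for Bounded DFA-Intersection; since it enumerates only words of length at most ℓ, the extra automaton for the length bound is not built explicitly.

```python
from itertools import product


def accepts(dfa, word):
    """Run a DFA given as (delta, start, finals) on a sequence of letters."""
    delta, start, finals = dfa
    state = start
    for letter in word:
        state = delta[state][letter]
    return state in finals


def bounded_intersection(dfas, alphabet, ell):
    """Test all words of length at most ell; return a common word or None."""
    for length in range(ell + 1):
        for letters in product(alphabet, repeat=length):
            if all(accepts(dfa, letters) for dfa in dfas):
                return "".join(letters)
    return None


def sync_word_via_intersection(delta, alphabet, ell):
    """One intersection instance per candidate target state f: the DFAs of
    the instance share the transitions of the DFSA, start in the different
    states s, and have f as their only final state."""
    states = list(delta)
    for f in states:
        instance = [(delta, s, {f}) for s in states]
        word = bounded_intersection(instance, alphabet, ell)
        if word is not None:
            return word
    return None


# Three-state example of our own choosing (a: cyclic shift, b: 2 -> 0).
example = {0: {"a": 1, "b": 0}, 1: {"a": 2, "b": 1}, 2: {"a": 0, "b": 0}}
print(sync_word_via_intersection(example, "ab", 3))   # None
```

The sketch makes one call per target state, which matches the number of instances created in the proof.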

Clearly, the above reasoning implies that there is no -time algorithm for Bounded NFA-Intersection, unless SETH fails. More interestingly, we can use state complementation and a variant of the NFA union construction to show the following result.

Corollary 4.

There is an algorithm with running time that, given some NFA over the input alphabet Σ and an integer ℓ, decides whether or not there is a word not accepted by this NFA. Conversely, there is no algorithm that solves this problem in time for any , unless SETH fails.

Clearly, this implies a similar result for Bounded NFA-Equivalence.

Corollary 5.

There is an algorithm with running time that, given two NFAs over the input alphabet Σ and an integer ℓ, decides whether or not there is a word not accepted by exactly one NFA. Conversely, there is no algorithm that solves this problem in time for any , unless SETH fails.

From these reductions, we can borrow quite a lot of other results from [29], dealing with inapproximability and parameterized intractability.

- [29], Theorem 3, yields: Bounded NFA Universality is hard for W[2], when parameterized by ℓ. Similar results hold also for the intersection and equivalence problems that we usually consider.

- Using (in addition) recent results due to Dinur and Steurer [34], we can conclude that there is no polynomial-time algorithm that computes an approximate synchronizing word of length at most a factor of off from the optimum, unless P equals NP. (This sharpens [29], Corollary 4.) Neither is it possible to approximate the shortest word accepted by some NFA up to a factor of .

It would be interesting to obtain more inapproximability results in this way.

6. Two Further Ways to Interpret Finite Automata

Finite automata can be used not only to process (contiguous) strings: they might also jump from one position of the input to another position, or they can process two-dimensional words. We picked these two processing modes for the subsequent analysis, as they were introduced quite recently [35,36].

6.1. Jumping Finite Automata

A jumping finite automaton (JFA) formally looks like a usual string-processing NFA. However, the application of a rule to a word is different: If is a transition rule, then it can transform the input string u into provided that decompose as and . In other words, a JFA may first jump to an arbitrary position of the input and then apply the rule there. This model was introduced in [36] and further studied in [37]. It is relatively easy to see that the languages accepted by JFAs are just the inverses of the Parikh images of the regular languages, or, in other words, the commutative (or permutation) closure of the regular languages, or, yet in different terminology, the inverses of the Parikh images of semilinear sets. In particular, the emptiness problem for JFAs is as simple as for NFAs. Also, for the case of unary input alphabets, JFAs and NFAs just work the same. Hence, Universality is hard for JFAs, as well. Classical complexity considerations on these formalisms are contained in [37,38,39,40,41]; observe that mostly the input is given in the form of Parikh vectors of numbers encoded in binary, while we will consider the input given in unary-encoded Parikh vectors below (namely, as words, i.e., as elements of the free monoid), since JFAs were introduced this way in [36]. Yet another way to formally look at how JFAs operate incorporates the use of the shuffle operation. Recall that denotes the shuffle of and , which can be seen as observing a concurrent left-to-right read of and , listing all possible concurrent reads. For instance, .
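The shuffle operation recalled above can be made concrete with a few lines of Python. This is only an illustrative sketch; the function name `shuffle` and the example words are our own choices.

```python
def shuffle(u, v):
    """Return the set of all interleavings of the words u and v that keep
    the letters of u and the letters of v in their original order."""
    if not u:
        return {v}
    if not v:
        return {u}
    first_from_u = {u[0] + w for w in shuffle(u[1:], v)}
    first_from_v = {v[0] + w for w in shuffle(u, v[1:])}
    return first_from_u | first_from_v


print(sorted(shuffle("ab", "c")))   # ['abc', 'acb', 'cab']
```

The recursion mirrors the concurrent left-to-right read: at each step, the next letter is taken either from the first or from the second word.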

Notice however that even if a JFA might formally look like a DFA, there is a certain nondeterminism inherent to this mechanism, which is the position at which the next symbol is read (in the case of non-unary input alphabets). It can be therefore shown that the uniform word problem for JFAs is NP-hard. Analyzing the proof given in [37], Theorem 54, we can conclude:

Theorem 8.

Under ETH, there is no algorithm that, given a JFA A on q states and a word , decides if in time , nor .

Notice that this problem can be solved in time on arbitrary input alphabets, feeding all permutations of an input word of length n into the given automaton, interpreted as an NFA. There is also a dynamic programming algorithm solving Universal Membership for q-state JFAs (that is not improvable by Theorem 8, assuming ETH). This algorithm is based on the following idea. A word w allows the transition from state p to state iff for some decomposition , p can transfer to by reading and from one can go into when reading . For the correct implementation of the shuffle possibilities, we need to store possible translations for all subsets of indices within the input word, yielding a table (and time) complexity of . We have no other upper bound.
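A dynamic program over subsets of input positions can be sketched as follows. Note that this is a simplified variant chosen by us, not necessarily the table layout described above: instead of storing transitions between all pairs of states, it only records which states are reachable from the start state after consuming a given subset of positions. The representation of the JFA as a list of triples `(p, letter, r)` and the name `jfa_accepts` are assumptions made for this sketch.

```python
def jfa_accepts(transitions, start, finals, word):
    """Decide whether some permutation of `word` drives the automaton from
    `start` into a final state; subsets of positions are bitmasks."""
    n = len(word)
    step = {}
    for p, letter, r in transitions:
        step.setdefault((p, letter), set()).add(r)

    # reach[mask] = states reachable after consuming exactly the positions in mask
    reach = [set() for _ in range(1 << n)]
    reach[0].add(start)
    for mask in range(1 << n):
        for p in reach[mask]:
            for i in range(n):
                if not mask & (1 << i):          # position i not yet consumed
                    reach[mask | (1 << i)] |= step.get((p, word[i]), set())
    return bool(reach[(1 << n) - 1] & set(finals))


# Example of our own choosing: read as an NFA, this automaton accepts (ab)*;
# read as a JFA, it accepts all words with equally many a's and b's.
rules = [(0, "a", 1), (1, "b", 0)]
print(jfa_accepts(rules, 0, {0}, "bbaa"))   # True
print(jfa_accepts(rules, 0, {0}, "aab"))    # False
```

The table has one entry per subset of positions, so the sketch is exponential in the length of the input word but polynomial in the number of states.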

This also means (assuming P is not equal to NP) that there is no polynomial-time algorithm that computes, given a JFA A, another JFA that describes the Parikh mapping inverses of the complement of the Parikh images that A describes (otherwise, we would have a polynomial-time algorithm to solve Universality), nor is there a polynomial-time algorithm that computes, given two JFAs and , a third JFA such that the intersection of the (commutative / JFA) languages associated to and is just the language accepted by the JFA (namely, otherwise we could solve the Universal Membership Problem in polynomial time; given a JFA A and a word w, first construct a JFA that accepts the permutation closure of and then check if the intersection of the JFA languages of A and of is empty). Recall that, by way of contrast, intersection is an easy operation even on NFAs. (This also indicates that the simple constructions for the corresponding closure properties of JFA languages as given in [36] are flawed.) However, the closure properties themselves hold true, as this was already known for the Parikh images of regular sets, also known as semi-linear sets, also see [42] and the references quoted therein.

What about the three decidability questions that are central to this paper for these devices? As the behavior of JFA is the same as that of NFA on unary alphabets, we can borrow all results from Section 2.

Theorem 9.

Let be fixed. Unless ETH fails, there is no algorithm that solves Universality for q-state JFAs in time .

Proof.

We only sketch the idea in the case of binary alphabets, i.e., when . We revisit our reduction from 3-Coloring for the case detailed above. The main bottleneck was the coding of badly colored edges. This is taken care of in the following way. We encode the vertices no longer by n prime numbers, but by pairs of many prime numbers, which are then expressed as powers of the input letters a and b, resp. Hence, to describe the bad colorings of the m edges, we need no longer many states, but only many. We can extend this method such that, for arbitrary k, we would need many states for all bad edge colorings. ☐

Notice that the expression that we claim somehow interpolates between the third root of q (in the exponent of 2), namely, when , and then it also coincides with our earlier findings, and q itself (if k tends to infinity). We could make the construction for arbitrary alphabets more explicit by re-interpreting the proof of Proposition 2 as one dealing with Universality for JFAs.

Proposition 6.

Unless ETH fails, there is no algorithm that solves Universality for q-state JFAs in time .

Proof.

First observe that the DFAs and that we constructed in the proof of Proposition 2 can be as well interpreted as JFAs, and also the automata and that can be constructed by complementing the sets of final states can read the input in arbitrary sequence. It is easy to construct a JFA that accepts the union of all languages accepted by any JFA or . This union equals (with Σ as in the referred proof) if and only if the given graph was not 3-colorable. ☐

We can obtain very similar results for Equivalence for JFAs.

Let us now briefly discuss Intersection. Interestingly enough, also the problem of detecting emptiness of the intersection of only two JFA languages is NP-hard. This and a related study on ETH-based complexity can be found in [37]. For the intersection of k JFAs, the proof of Proposition 2 actually shows the analogous result also in that case. For bounded alphabets, we can re-analyze the proof of Theorem 9 to obtain:

Corollary 6.

Let Σ be fixed. Unless ETH fails, there is no algorithm for solving JFA intersection in time for k JFAs with at most q states.

Namely, for a given 3-Coloring instance with n vertices and m edges, we can construct a collection of many JFAs, each with many states.

6.2. Boustrophedon Finite Automata

In the last four decades, several attempts have been made to transfer automata theory into the area of image processing. Unfortunately, most (natural) attempts failed insofar as even the simplest algorithmic questions (like the emptiness problem for the corresponding devices) turn out to be undecidable; see [43,44]. In order to avoid these negative results, simpler devices have been discussed in the literature; see [45] or [35] for more recent works.

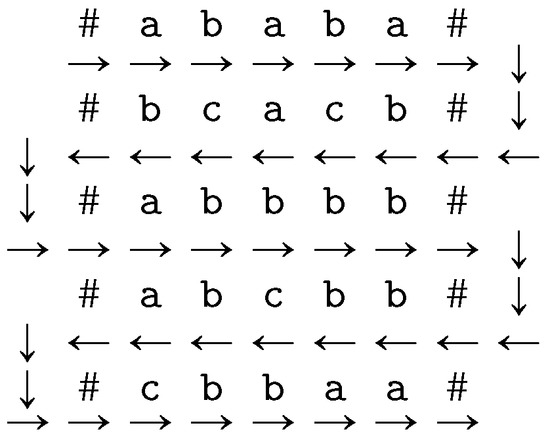

Boustrophedon finite automata (BFAs) have been introduced to describe a simple processing of rectangular-shaped pictures with finite automata that scan these pictures as depicted in Figure 3.

Figure 3. How a BFA processes a picture.

Without going into formal details, let us mention that it has been shown in [35] that the non-emptiness problem for this type of finite automata is NP-complete. This might read like a very negative result, but as mentioned above, for picture-processing automata, mostly undecidability results can be expected for the non-emptiness problem; see [46] for 4-way DFAs. Even for the class of 3-way automata obviously related to BFAs, the known decidability result for non-emptiness does not give an NP algorithm; see [47]. This NP-hardness reduction is from Tally-DFA-Intersection. From this (direct) construction, we can immediately deduce:

Proposition 7.

There is no algorithm that, given some BFA A with at most q states, decides if in time , unless ETH fails.

How good is this bound?

To give a more formal treatment, we need some more definitions. A two-dimensional word (also called a picture, a matrix or an array in the literature) over Σ is a tuple

where and, for every i, , and j, , . We define the number of columns (or width) and number of rows (or height) of W by and , respectively. For the sake of convenience, we also denote W by or by a matrix in a more pictorial form. If we want to refer to the symbol in row i of the picture W, then we use . By , we denote the set of all (non-empty) pictures over Σ. Every subset is a picture language.

Let and be two non-empty pictures over Σ. The column concatenation of W and , denoted by , is undefined if and is the picture

otherwise. The row concatenation of W and , denoted by , is undefined if and is the picture

otherwise. Column and row catenation naturally extend to picture languages. Accordingly, we can define powers of languages, as well as a closure operation. , for ; then .
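The two concatenation operations can be illustrated with a short sketch. We assume here, for illustration only, that a picture is represented as a non-empty list of equally long strings (its rows), that an undefined result is reported as `None`, and that row concatenation places the first picture above the second; the names `col_concat` and `row_concat` are hypothetical.

```python
def col_concat(w, v):
    """Place picture v to the right of picture w; undefined (None) if the
    heights differ."""
    if len(w) != len(v):
        return None
    return [row_w + row_v for row_w, row_v in zip(w, v)]


def row_concat(w, v):
    """Place picture v below picture w; undefined (None) if the widths differ."""
    if len(w[0]) != len(v[0]):
        return None
    return w + v


W = ["ab", "cd"]
print(col_concat(W, ["e", "f"]))   # ['abe', 'cdf']
print(row_concat(W, ["xy"]))       # ['ab', 'cd', 'xy']
print(col_concat(W, ["e"]))        # None
```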

Definition 1.

A boustrophedon finite automaton, or BFA for short, can be specified as a 7-tuple , where Q is a finite set of states, Σ is an input alphabet, is a finite set of rules. A rule is usually written as . The special symbol indicates the border of the rectangular picture that is processed, is the initial state, F is the set of final states. Let be a new symbol indicating an erased position and let . Then is the set of configurations of M.

A configuration is valid if and, for every i, , the row equals , for every j, , the row equals , , and, for some ν, , , the row equals , if μ is odd and , if μ is even. Notice that valid configurations model the idea of observable snapshots of the work of the BFA.

- If and are two valid configurations such that A and are identical but for one position , where while , then if .

- If and are two valid configurations, then if the row contains only # and symbols, and if .

The reflexive transitive closure of the relation is denoted by .

The language accepted by M is then the set of all pictures A over Σ such that

for some .

First observe that although the emptiness problem is similar to the intersection problem, the only “communication” between the rows is via the state that is communicated and via the length information that is implicitly checked. In particular, we can first convert a given BFA into one, say, A, that only deals with one input letter, by replacing any input letter in any transition by, say, . Let A have state set Q, with and let be the initial state of A.

Now, if and only if there is some array in that can be linearized as . Here, from the start state , first would lead into , then would lead into etc., until would lead into and then leads into some final state f. By a simple pumping argument, we can assume that . So, we could try all permutations of at most q different states , and then construct the product automaton from , where is as A, but starts with and has as its only accepting state, is like A, but starts with and has as its only accepting state, …, is like A, but starts with and has as its only accepting state, is like A, but starts with and from f there is another arc labeled # that leads into the only final state , and finally is the 2-state NFA accepting . Now, the string-processing NFA does not accept the empty language if and only if there is some r such that is accepted by each of the constructed automata , if and only if (with n rows) is accepted by A. The whole procedure can be carried out in time , which is obviously far off from our lower bound.

A slightly better bound can be obtained by a graph-algorithm based procedure that results in the following statement.

Proposition 8.

Emptiness for q-state BFAs can be decided in time (and polynomial space).

Proof.

Namely, consider the following algorithm. For each , we successively build a directed graph with vertices , and . Construct an arc if A on input could enter state p. More generally, we have an arc for if A, when started in state , would be driven in state p by the input . Finally, for each , we have an arc if A, starting in p, would enter a final state upon reading . Now, A accepts (with at most q rows) if and only if the constructed graph has a path from s to f. Notice that with a little bit of bookkeeping, can be computed from in polynomial time. Also, observe that we can stop the loop after at most iterations, as we can view (in the case of deterministic BFAs) each word as defining a mapping (from the state that we started out to some well-defined state we ended in), and there are no more than many such mappings. From this perspective, our algorithm can be viewed as looking for some r such that is a final state of A, for some . Now, nondeterministic BFAs can be viewed as providing some additional shortcuts in the transition graph, i.e., again at most iterations suffice. (In [35], a “column pumping lemma” was suggested that also shows that iterations suffice.) So, our algorithm performs steps. Also, it only uses polynomial space, while the previous algorithm used space already for the product automaton construction. ☐
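The graph-based procedure from the proof can be sketched in Python as follows. The sketch assumes a BFA given by rule triples `(p, a, q)` with `#` as border symbol, treats every non-border symbol as the single input letter, and represents the effect of reading r letters as a relation on states. As a deliberate simplification, it stops as soon as this relation repeats (after which no new widths can arise) instead of counting iterations up to the bound discussed in the proof. The names `compose` and `bfa_nonempty` are hypothetical.

```python
from collections import deque


def compose(r1, r2):
    """Relational composition: (p, q) whenever p -r1-> m -r2-> q for some m."""
    return frozenset((p, q) for (p, m) in r1 for (m2, q) in r2 if m == m2)


def bfa_nonempty(rules, start, finals, border="#"):
    """Return True if the BFA accepts at least one picture."""
    letter = frozenset((p, q) for (p, a, q) in rules if a != border)  # one cell
    hop = frozenset((p, q) for (p, a, q) in rules if a == border)     # row change
    finals = set(finals)
    seen = set()
    power = letter  # effect of reading r letters, starting with r = 1
    while power not in seen:
        seen.add(power)
        arcs = compose(power, hop)  # a full row followed by the border symbol
        accepting = {p for (p, q) in power if q in finals}  # last row ends in F
        # Breadth-first search: is an accepting state reachable from the start?
        reached, queue = {start}, deque([start])
        while queue:
            p = queue.popleft()
            if p in accepting:
                return True
            for (x, y) in arcs:
                if x == p and y not in reached:
                    reached.add(y)
                    queue.append(y)
        power = compose(power, letter)  # move on to width r + 1
    return False
```

Each pass of the outer loop corresponds to one picture width r; the search inside it plays the role of the path query in the constructed graph.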

7. Conclusions

So far, there has been no systematic study of hard problems for finite automata under ETH. Frankly speaking, we are only aware of the papers [29,37] on these topics. Returning to the survey of Holzer and Kutrib [7], it becomes clear that there are quite a few hard problems related to finite automata and regular expressions that have not yet been examined with respect to exact algorithms and ETH. This leaves ample room for future research. Also, there are quite a few modifications of finite automata with hard decision problems. One (relatively recent) example is the class of finite-memory automata [48,49].

It might also be interesting to study these problems under different yet related hypotheses; Pătraşcu and Williams list some such hypotheses in [50]. Notice that even the Strong ETH was barely used in this paper.

It should also be interesting to rule out certain types of XP algorithms for parameterized automata problems, as was initiated in [51] (relating to assumptions from Parameterized Complexity) and also mentioned in [1,5] (with assumptions like (S)ETH). In this connection, we would also like to point out that if the two basic Parameterized Complexity classes FPT and W[1] coincide, then ETH would fail, which provides another link to the considerations of this paper.

More generally speaking, we believe that it is now high time to interconnect the classical Formal Language area with the modern areas of Parameterized Complexity and Exact Exponential-Time Algorithms, including several lower bound techniques. Both communities can profit from such an interconnection. For the Parameterized Complexity community, it might be interesting to learn about results as in [52], where the authors show that Intersection Emptiness for k tree automata can be solved in time , but not (and this is an unconditional not, independent of the belief in some complexity assumptions) in time , for some suitable constants . Maybe, we can obtain similar results also in other areas of combinatorics. It should be noted that Intersection Emptiness is EXPTIME-complete even for deterministic top-down tree automata.

In relation to the idea of approximating automata, Holzer and Jacobi [53] recently introduced and discussed the following problem(s). Given an NFA A, decide if one of the six variants of an a-boundary of is finite. By reduction from DFA-Intersection, they proved all variants to be PSPACE-hard. Membership in PSPACE can easily be seen by reducing the problems to a reachability problem on some DFA closely related to the NFA A. Although the hardness reductions in Lemma 15 slightly differ in each case i, all in all the number of states of the resulting NFA is just the total number of states of all DFAs used as input in the reduction, plus a constant. In particular, if the number of states per input DFA is bounded, say, by 3, and if we use unbounded input alphabets, then our previous results immediately entail that, unless ETH fails, none of the six variants of the a-boundary problems admits an algorithm with running time , where q is now the number of states of the given NFA A. This bound is matched by the sketched reduction to prove PSPACE-membership, as the subset construction to obtain the desired equivalent DFA gives a single-exponential blow-up.

In short, this area offers quite a rich ground for further studies.

Acknowledgments

We are grateful for discussions on aspects of this paper with several colleagues. In particular, we thank Martin Kutrib. We would also like to express our thanks for the opportunity to present this work at the Simons Institute workshop on Satisfiability Lower Bounds and Tight Results for Parameterized and Exponential-Time Algorithms at Berkeley in November 2015. Feedback (in particular from Thore Husfeldt) has led us to think about SETH results not contained in that presentation. We are also thankful for the referee comments on the submitted version of this paper.

Author Contributions

Both authors wrote the paper together. In particular, H. Fernau initiated this study, and first results were obtained when A. Krebs visited Trier in September 2015. Without the algebraic background knowledge of A. Krebs, the section on aperiodicity in particular would not exist. Both authors have read and approved the final manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Lokshtanov, D.; Marx, D.; Saurabh, S. Lower bounds based on the Exponential Time Hypothesis. EATCS Bull. 2011, 105, 41–72.

- Impagliazzo, R.; Paturi, R.; Zane, F. Which Problems Have Strongly Exponential Complexity? J. Comput. Syst. Sci. 2001, 63, 512–530.

- Calabro, C.; Impagliazzo, R.; Paturi, R. A Duality between Clause Width and Clause Density for SAT. In Proceedings of the 21st Annual IEEE Conference on Computational Complexity (CCC), Prague, Czech Republic, 16–20 July 2006; pp. 252–260.

- Fomin, F.V.; Kratsch, D. Exact Exponential Algorithms; Texts in Theoretical Computer Science; Springer: Berlin, Germany, 2010.

- Cygan, M.; Fomin, F.; Kowalik, L.; Lokshtanov, D.; Marx, D.; Pilipczuk, M.; Pilipczuk, M.; Saurabh, S. Parameterized Algorithms; Springer: Berlin, Germany, 2015.

- Downey, R.G.; Fellows, M.R. Fundamentals of Parameterized Complexity; Texts in Computer Science; Springer: Berlin, Germany, 2013.

- Holzer, M.; Kutrib, M. Descriptional and computational complexity of finite automata—A survey. Inf. Comput. 2011, 209, 456–470.

- Fernau, H.; Krebs, A. Problems on Finite Automata and the Exponential Time Hypothesis. Implementation and Application of Automata. In Proceedings of the 21st International Conference CIAA 2016, Seoul, South Korea, 19–22 July 2016; Han, Y.S., Salomaa, K., Eds.; Springer: Berlin/Heidelberg, Germany, 2016; Volume 9705, pp. 89–100.

- Stockmeyer, L.J.; Meyer, A.R. Word Problems Requiring Exponential Time: Preliminary Report. In Proceedings of the 5th Annual ACM Symposium on Theory of Computing, STOC, Austin, TX, USA, 30 April–2 May 1973; Aho, A.V., Borodin, A., Constable, R.L., Floyd, R.W., Harrison, M.A., Karp, R.M., Strong, H.R., Eds.; pp. 1–9.

- Landau, E. Handbuch der Lehre von der Verteilung der Primzahlen; Teubner: Leipzig/Berlin, Germany, 1909.

- Chrobak, M. Finite automata and unary languages. Theor. Comput. Sci. 1986, 47, 149–158.

- Wulf, M.D.; Doyen, L.; Henzinger, T.A.; Raskin, J. Antichains: A New Algorithm for Checking Universality of Finite Automata. In Proceedings of the 18th International Conference CAV 2006, Seattle, WA, USA, 17–20 August 2006; Ball, T., Jones, R.B., Eds.; Springer: Berlin/Heidelberg, Germany, 2006; Volume 4144, pp. 17–30.

- Lange, K.J.; Rossmanith, P. The Emptiness Problem for Intersections of Regular Languages. In Proceedings of the 17th International Symposium on Mathematical Foundations of Computer Science, MFCS’92, Prague, Czech Republic, 24–28 August 1992; Havel, I.M., Koubek, V., Eds.; Springer: Berlin/Heidelberg, Germany, 1992; Volume 629, pp. 346–354.

- Galil, Z. Hierarchies of Complete Problems. Acta Inf. 1976, 6, 77–88.

- Wareham, H.T. The parameterized complexity of intersection and composition operations on sets of finite-state automata. In Proceedings of the 5th International Conference on Implementation and Application of Automata, CIAA 2000, Ontario, Canada, 24–25 July 2000; Yu, S., Păun, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2001; Volume 2088, pp. 302–310.

- Dusart, P. Estimates of Some Functions Over Primes without R.H. 2010; arXiv:1002.0442.

- Kozen, D. Lower Bounds for Natural Proof Systems. In Proceedings of the 18th Annual Symposium on Foundations of Computer Science, FOCS, Providence, RI, USA, 31 October–1 November 1977; pp. 254–266.

- Etscheid, M.; Kratsch, S.; Mnich, M.; Röglin, H. Polynomial Kernels for Weighted Problems. In Proceedings of the 40th International Symposium on Mathematical Foundations of Computer Science, MFCS 2015, Milan, Italy, 24–28 August 2015; Italiano, G.F., Pighizzini, G., Sannella, D., Eds.; Springer: Berlin/Heidelberg, Germany, 2015; Volume 9235, pp. 287–298.

- Karakostas, G.; Lipton, R.J.; Viglas, A. On the complexity of intersecting finite state automata and NL versus NP. Theor. Comput. Sci. 2003, 302, 257–274.

- Wehar, M. Hardness Results for Intersection Non-Emptiness. In Proceedings of the 41st International Colloquium on Automata, Languages, and Programming—ICALP 2014, Copenhagen, Denmark, 8–11 July 2014; Esparza, J., Fraigniaud, P., Husfeldt, T., Koutsoupias, E., Eds.; Springer: Berlin/Heidelberg, Germany, 2014; Volume 8573, pp. 354–362.

- Schützenberger, M.P. On finite monoids having only trivial subgroups. Inf. Control (Inf. Comput.) 1965, 8, 190–194.

- Cho, S.; Huynh, D.T. Finite-Automaton Aperiodicity is PSPACE-Complete. Theor. Comput. Sci. 1991, 88, 99–116.

- Stern, J. Complexity of Some Problems from the Theory of Automata. Inf. Control (Inf. Comput.) 1985, 66, 163–176.

- Alon, N.; Lokshtanov, D.; Saurabh, S. Fast FAST. In Proceedings of the 36th International Colloquium on Automata, Languages and Programming—ICALP 2009, Rhodes, Greece, 5–12 July 2009; Albers, S., Marchetti-Spaccamela, A., Matias, Y., Nikoletseas, S.E., Thomas, W., Eds.; Springer: Berlin/Heidelberg, Germany, 2009; Volume 5555, pp. 49–58.

- Fernau, H.; Fomin, F.V.; Lokshtanov, D.; Mnich, M.; Philip, G.; Saurabh, S. Social Choice Meets Graph Drawing: How to Get Subexponential Time Algorithms for Ranking and Drawing Problems. Tsinghua Sci. Technol. 2014, 19, 374–386.

- Beaudry, M.; McKenzie, P.; Thérien, D. The Membership Problem in Aperiodic Transformation Monoids. J. ACM 1992, 39, 599–616.

- Brzozowski, J.A.; Shallit, J.; Xu, Z. Decision problems for convex languages. Inf. Comput. 2011, 209, 353–367.

- Černý, J. Poznámka k homogénnym experimentom s konečnými automatmi. Matematicko-fyzikálny časopis 1964, 14, 208–216.

- Fernau, H.; Heggernes, P.; Villanger, Y. A multi-parameter analysis of hard problems on deterministic finite automata. J. Comput. Syst. Sci. 2015, 81, 747–765.

- Sandberg, S. Homing and Synchronizing Sequences. In Model-Based Testing of Reactive Systems; Broy, M., Jonsson, B., Katoen, J.P., Leucker, M., Pretschner, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2005; Volume 3472, pp. 5–33.

- Rystsov, I.K. Polynomial Complete Problems in Automata Theory. Inf. Process. Lett. 1983, 16, 147–151.

- Cho, S.; Huynh, D.T. The Parallel Complexity of Finite-State Automata Problems. Inf. Comput. 1992, 97, 1–22.