1. Introduction

Dispersed random composites constitute an important class of materials. In the engineering sciences, composites are classified by reinforcement geometry on the basis of observations of their structures and the determination of geometric parameters, such as the concentrations of phases, the shapes and sizes of inclusions, correlation functions, etc. [1,2]. Unidirectional fiber-reinforced composites [3,4,5] constitute a class of two-dimensional (2D) dispersed composites. (In the present paper, the term “2D” refers to a cross-section of the composite perpendicular to the fibers, whereas “two-dimensional” concerns features of ML.) Determining their macroscopic properties is the primary challenge of homogenization theory [6,7] and its constructive implementation [8,9,10]. In the present paper, Part III, we utilize the analytical results of constructive homogenization obtained in [11,12], referred to as Parts I and II, respectively, to extend the study of two-phase fiber-reinforced composites using machine learning (ML) methods.

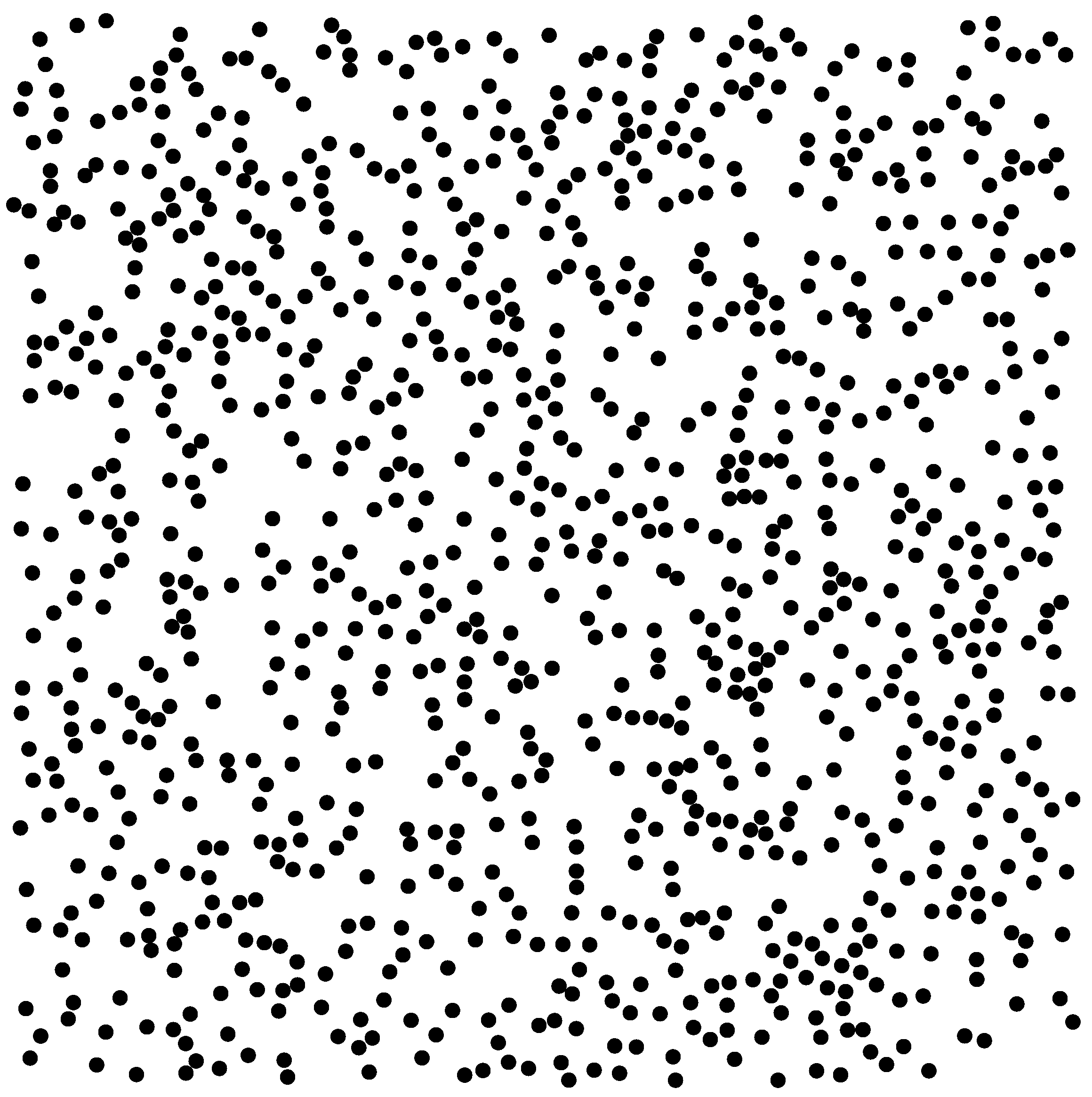

We are interested in random composites and their microstructure. A fiber-reinforced composite is represented by its cross-section perpendicular to the unidirectional fibers, more precisely, by a distribution of non-overlapping disks in a periodicity cell, identified with the Representative Volume Element (RVE) shown in Figure 1.

Here, we follow the revised Hill conception developed in Part II. It is worth noting that there exist infinitely many probability distributions of non-overlapping disks [13], not only the uniform distribution (i.i.d. random variables) tacitly considered in the majority of published works [14].

Suppose that image analysis of a composite has been performed; we now discuss the next step, its interpretation. Having pictures of the microstructure at their disposal, engineers may intuitively use the term “random composite”, although a given picture can be described deterministically: after the corresponding image and spectral analysis, one can say what is located where in the picture. Randomness may manifest itself through an external control parameter, such as the temperature of the technological process, e.g., stir/sand casting [15,16]. The classification of the obtained materials and their dependence on the control parameters can then be treated within the framework of statistics.

Simple observations and measurements may give a restricted set of geometrical parameters, such as the concentration

f and two-point correlation functions of phases. Using these geometrical parameters for dispersed composites may lead to analytical formulas for the effective constants at most up to

. Higher-order formulas can be derived by means of structural sums [17,18]. Recent progress in artificial intelligence (AI), particularly in machine learning (ML), has opened new opportunities for analyzing and classifying microstructural patterns beyond traditional statistical approaches. ML enables automated recognition of geometrical regularities and correlations that remain hidden within large sets of analytical descriptors or digitized images. In the context of composites, this approach supports not only prediction of effective properties but also quantitative comparison of randomization protocols and structural irregularities.

Machine learning and artificial intelligence offer powerful tools for analyzing complex, random, and dispersed composites. However, many existing studies rely on purely numerical or empirical implementations, often limited to finite element simulations of selected datasets without a clear theoretical link to homogenization or analytical modeling. As emphasized in [19], such approaches may yield inconsistent or physically unverified results. In contrast, the present work employs ML within a rigorous analytical framework, ensuring that each feature and prediction step remains grounded in the constructive theory of effective properties.

ML may be effectively applied to special types of composites. For instance, laminates with various layer orientations were successfully investigated in tensile tests in [20]. Data characterizing the mechanical load behavior were obtained from twelve composite laminates with different layer orientations; notably, dedicated software for composite laminates was used. The prediction of the compressive strength of steel fiber-reinforced concrete was discussed in [21]. Other results of this type, concerning particular problems and other algorithms, can be found in [22].

To effectively characterize dispersed composites in materials science, datasets must include both the spatial distribution of inclusions, ideally represented through digitized images, and the mechanical properties of the constituent phases depicted therein. These image-analysis tasks were addressed in [23,24]. For our purposes, we assume access to a geometrical dataset describing the inclusions. Specifically, we consider unidirectional fiber-reinforced elastic composites. A representative cross-section of such a composite is illustrated in Figure 1, where the coordinates of the inclusions and their associated mechanical properties are known. An example of such a dataset consists of the plane coordinates of the centers of equal non-overlapping circular inclusions together with the pairs of elastic moduli of the host and of each inclusion: for the host, the elastic shear modulus and the bulk modulus k; for the mth inclusion, its own shear and bulk moduli. The concentration of the different phases is a key parameter. In the case of a two-phase composite, all inclusions share the same pair of elastic moduli, and f denotes the total concentration of inclusions.

Figure 1 illustrates such a dataset, comprising the plane coordinates of the inclusions and their corresponding mechanical properties, arranged in a specific configuration. It is worth noting that a plane section of a macroscopically isotropic 3D dispersed composite can adequately represent the 3D composite itself [25].

If the analysis is limited to a particular composite represented by a single picture, it suffices to input the given dataset into a standard finite element package to compute the effective properties. Such computations are feasible when the number of inclusions per RVE does not exceed approximately 100. However, for configurations involving around 1000 inclusions, direct computation becomes practically intractable. In such cases, one may partition the RVE into smaller subdomains to facilitate the numerical analysis of local fields, but not of the effective constants [26].

Simulations involving uniform non-overlapping distributions with fixed mechanical properties have been conducted in prior studies [27,28], where the number of inclusions per RVE was typically on the order of 30 [14]. The term “random” has been widely used in these and subsequent publications, often without a rigorous definition of the underlying stochastic model. In reality, infinitely many probability distributions can be employed to capture different modes of clustering and interaction arising from chemical, biological, or mechanical processes within composite materials. The first results on using ML, namely the Naive Bayes classifier, for various types of 2D structures were obtained in [13].

In the present paper, advanced machine learning algorithms are employed, including ensemble (bagging) tree-based models and dimensionality-reduction techniques such as PCA and t-SNE, to analyze structural sums as geometric features of composites. Machine learning is not treated here as a “black box” numerical tool, but rather as an analytical extension of the constructive homogenization framework developed in Parts I and II. The goal is to integrate symbolic descriptors (structural sums) with data-driven algorithms capable of capturing nonlinear dependencies between geometry and macroscopic behavior.

Specifically, supervised models (ensemble decision-tree regressors and classifiers) are applied to distinguish generation protocols (R, T, P). Unsupervised techniques, such as Principal Component Analysis (PCA) and t-distributed Stochastic Neighbor Embedding (t-SNE), are employed to visualize high-dimensional geometric relationships and verify the separability of microstructural families.

Each sample is represented by a feature vector derived from structural sums, complex-valued quantities encoding spatial distributions. Their real, imaginary, and modulus components form a multidimensional space analogous to latent representations in modern AI. This allows the combination of physics-informed and statistical learning approaches, where analytical relations constrain the search for correlations detected by the model.

In the context of materials science, we develop a computationally efficient strategy to classify very similar dispersed composites that cannot be distinguished by direct observation or by other methods, such as correlation functions or purely numerical methods (FEM). Our method can be applied to the investigation of particle interactions and to the clustering analysis of dispersed composites based on their microstructure.

2. Schwarz’s Method and Decomposition Theorem

The generalized alternating Schwarz method can be interpreted as an infinite sequence of mutual interactions among inclusions within the boundary value problem formulated for a composite material [17]. The classical Schwarz approach for overlapping domains is typically associated with decomposition techniques widely applied in purely numerical computations. In contrast, the generalized alternating method for non-overlapping inclusions proves advantageous for the symbolic-numerical strategies discussed in this work. Implementing both explicit and implicit schemes leads to new approximate analytical expressions for the effective properties of dispersed composites. Furthermore, the accuracy of these formulas is quantified with respect to the concentration and contrast parameters [29]. It is worth noting that the first iteration of this procedure coincides with Maxwell’s well-known self-consistent method [30].

Integral equations in a Banach space associated with Schwarz’s method were first formulated in [31] and later refined in [29] and related works. In what follows, we present these equations in a general operator form, omitting certain technical details discussed in [29,32]. Consider a prescribed external potential and the unknown potential within the kth inclusion. In many physical settings, these potentials are connected through a linear operator equation, Equation (2), defined in a Banach space, in which the operator with indices (k, m) yields the field in the kth inclusion induced by the mth inclusion, and the diagonal term (m = k) produces the self-induced field.

The physical contrast parameter is a multiplier on these bounded operators. The off-diagonal operator (m ≠ k) is determined by the impact of the local field in the mth inclusion on the field in the kth one. The diagonal operator has the same form as in the integral equation for the potential in a single inclusion. Each operator implicitly depends on the concentration of inclusions f; after a constructive homogenization procedure, this dependence can become explicit. Moreover, the geometric operators do not depend on the material constants. This holds for heat conduction, elastic stress and strain fields, and other processes; see the table “Universality in Mathematical Modeling” in [19] (Chapter 8). Application of the successive approximation method to Equation (2) leads to a power series in the contrast parameters with purely geometrical coefficients consisting of compositions of these operators. The explicit form of the operators and the convergence of this series were discussed in [17,18,29] and the works cited therein.

After averaging the field over a representative cell [6], we arrive at the effective constants of dispersed composites: the series for the local fields is transformed into a series for the effective constants. Owing to linearity, this is a power series in the physical contrast parameters whose coefficients are purely geometrical integrals. Therefore, the effective constants can be decomposed into a linear combination of purely geometrical parameters of composites with coefficients depending on the physical parameters. This leads to the following fundamental theoretical result, formulated for the effective permittivity tensor.

Decomposition Theorem [32] (p. 25). The effective property tensor can be expressed as a linear combination of purely geometrical parameters of the inclusions, with coefficients determined by the local physical constants.

4. ML Methods

We now apply ML to the composites considered above. The input for the classification of dispersed composites is the family of 14-dimensional vectors (12). ML traditionally uses its own notation, which differs from that used in the mathematical literature; for convenience, the ML notation is summarized at the end of the paper.

4.1. Feature Construction from Structural Sums

The dataset comprises a categorical label (type R, T, or P), a value of the concentration f, and multiple columns of structural sums (12) in complex format. For each structural sum z, we extract four elemental features:

$$\operatorname{Re} z, \quad \operatorname{Im} z, \quad |z|, \quad \arg z.$$

We then compute family-level aggregates over the index families of structural sums. Each family is a group of structural sums that share the same order and thus represent a common geometric hierarchy; for example, the family of a given order includes all structural sums of that order, which differ only in their index combinations. Within every family, descriptive statistics are calculated, including the mean and standard deviation of the real parts, magnitudes, and arguments. These aggregates summarize intra-family variability and capture geometric trends common to related sums. In addition, derived descriptors, such as the ratio of real to imaginary parts and normalized amplitude differences, are introduced to measure deviations from geometric symmetry.
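The feature construction described above can be sketched in a few lines of Python (the study itself used MATLAB). This is a minimal illustration under stated assumptions: the structural sums of one sample are given as a dict from a hypothetical name such as "e22" to a complex value, the family of a sum is supplied by a hypothetical function `family_of`, and population standard deviations are used within families.

```python
import cmath
import statistics


def elemental_features(z: complex) -> dict:
    """Four elemental features of one structural sum z: Re z, Im z, |z|, arg z."""
    return {"re": z.real, "im": z.imag, "abs": abs(z), "arg": cmath.phase(z)}


def family_aggregates(sums: dict, family_of) -> dict:
    """Mean and (population) standard deviation of Re z, |z| and arg z per family.

    sums      -- mapping from a structural-sum name to its complex value (one sample)
    family_of -- hypothetical callable mapping a sum name to its family key
    """
    groups: dict = {}
    for name, z in sums.items():
        groups.setdefault(family_of(name), []).append(elemental_features(z))
    aggregates = {}
    for family, feats in groups.items():
        for key in ("re", "abs", "arg"):
            values = [f[key] for f in feats]
            aggregates[f"{family}_{key}_mean"] = statistics.fmean(values)
            # pstdev returns 0.0 for a single-member family
            aggregates[f"{family}_{key}_std"] = statistics.pstdev(values)
    return aggregates


# Usage: here the family key is (hypothetically) the number of index digits.
sample = {"e22": 3 + 4j, "e33": 1 + 0j, "e222": 1j}
print(family_aggregates(sample, lambda name: len(name) - 1))
```

Concatenating these aggregates with the elemental features of every sum gives one row of the feature matrix per sample.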

This hierarchical representation yields approximately 130 features, improving both the interpretability and the stability of the subsequent ML analysis. All features are standardized via z-score normalization (zero mean, unit variance). Principal Component Analysis (PCA) reveals about 36 effectively independent components, reflecting collinearities among the raw features.

Dataset Generation and Implementation Details

The dataset used in this study consists of 1200 composite configurations. For each of the three generation protocols and each of the four concentration levels, we generated 100 independent realizations of non-overlapping inclusions in a periodic cell (3 × 4 × 100 = 1200). The corresponding 14 structural sums listed in (12) were exported in complex format and combined into a tabular dataset together with the class label and the concentration f.

All data processing and machine-learning analyses were performed in MATLAB R2023b. The complex values of the structural sums were parsed from their textual representation into MATLAB complex numbers. For each structural sum z, four numerical descriptors were computed: the real part, imaginary part, magnitude, and argument. Additionally, simple family-level aggregates were incorporated (e.g., grouping sums indexed as “22”, “33”, “44”), resulting in approximately 130 raw features. All features were standardized using MATLAB’s zscore function.
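For readers working outside MATLAB, the parsing and standardization steps can be mimicked as follows. This is a sketch under the assumption that the sums are stored as MATLAB-style literals such as 0.31-1.2e-3i; the z-score uses the sample (n-1) standard deviation, and constant columns are mapped to zero rather than divided by zero.

```python
import statistics


def parse_matlab_complex(text: str) -> complex:
    """Parse a MATLAB-style complex literal such as '0.31-1.2e-3i' or '2 + 3i'."""
    s = text.strip().replace(" ", "")
    if s.endswith(("i", "j")):
        s = s[:-1] + "j"  # Python's complex() expects the 'j' suffix
    return complex(s)


def zscore_columns(rows):
    """Column-wise z-score using the sample (n-1) standard deviation.

    Constant columns (zero spread) are divided by 1, so they become all zeros.
    """
    column_stats = []
    for column in zip(*rows):
        mean = statistics.fmean(column)
        sd = statistics.stdev(column)
        column_stats.append((mean, sd if sd > 0 else 1.0))
    return [[(x - m) / s for x, (m, s) in zip(row, column_stats)] for row in rows]


z = parse_matlab_complex("0.31-1.2e-3i")
features = [[z.real, abs(z)], [1.0, abs(z)]]
print(zscore_columns(features))
```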

The classification task was performed using a bagging ensemble of decision trees. Model performance was evaluated using stratified 5-fold cross-validation executed via cvpartition and crossval, ensuring a balanced representation of all three classes in every fold. The mean cross-validated classification accuracy was ACC = 0.7125. To ensure full reproducibility, we specify the exact configuration of the ensemble model. The classifier was implemented using MATLAB’s fitcensemble function with the ‘Bag’ method and the default templateTree base learner. The ensemble consists of 200 decision trees trained on bootstrap-resampled datasets, each grown without predetermined depth constraints (unpruned CART trees). At each split, all available predictors were considered (NumVariablesToSample = ‘all’), and no additional feature-selection or dimensionality-reduction step was applied prior to training. This configuration yields low-bias, high-variance base learners whose variance is reduced through averaging across trees, consistent with classical bagging theory.
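The stratified 5-fold protocol can be illustrated with a small, library-free Python sketch. It is not MATLAB's cvpartition: it simply deals the shuffled indices of each class round-robin into k folds, so that every fold receives (almost) the same number of R, T, and P samples, and then collects out-of-fold predictions. The `fit_predict` callable is a hypothetical placeholder for any classifier, e.g., a bagged tree ensemble.

```python
import random
from collections import defaultdict


def stratified_kfold(labels, k=5, seed=0):
    """Return a fold index (0..k-1) for every sample, balancing classes across folds."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    fold_of = [0] * len(labels)
    for label in sorted(by_class):
        idxs = by_class[label]
        rng.shuffle(idxs)
        for pos, idx in enumerate(idxs):
            fold_of[idx] = pos % k  # deal class members round-robin into folds
    return fold_of


def cross_val_accuracy(X, y, fit_predict, k=5, seed=0):
    """Out-of-fold accuracy: every sample is predicted exactly once by a model
    trained on the other k-1 folds."""
    folds = stratified_kfold(y, k, seed)
    correct = 0
    for f in range(k):
        train = [i for i in range(len(y)) if folds[i] != f]
        test = [i for i in range(len(y)) if folds[i] == f]
        preds = fit_predict([X[i] for i in train], [y[i] for i in train],
                            [X[i] for i in test])
        correct += sum(p == y[i] for p, i in zip(preds, test))
    return correct / len(y)
```

For a dataset with 400 samples per class, as in the 1200-configuration dataset, each of the five folds then contains exactly 80 samples of each class.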

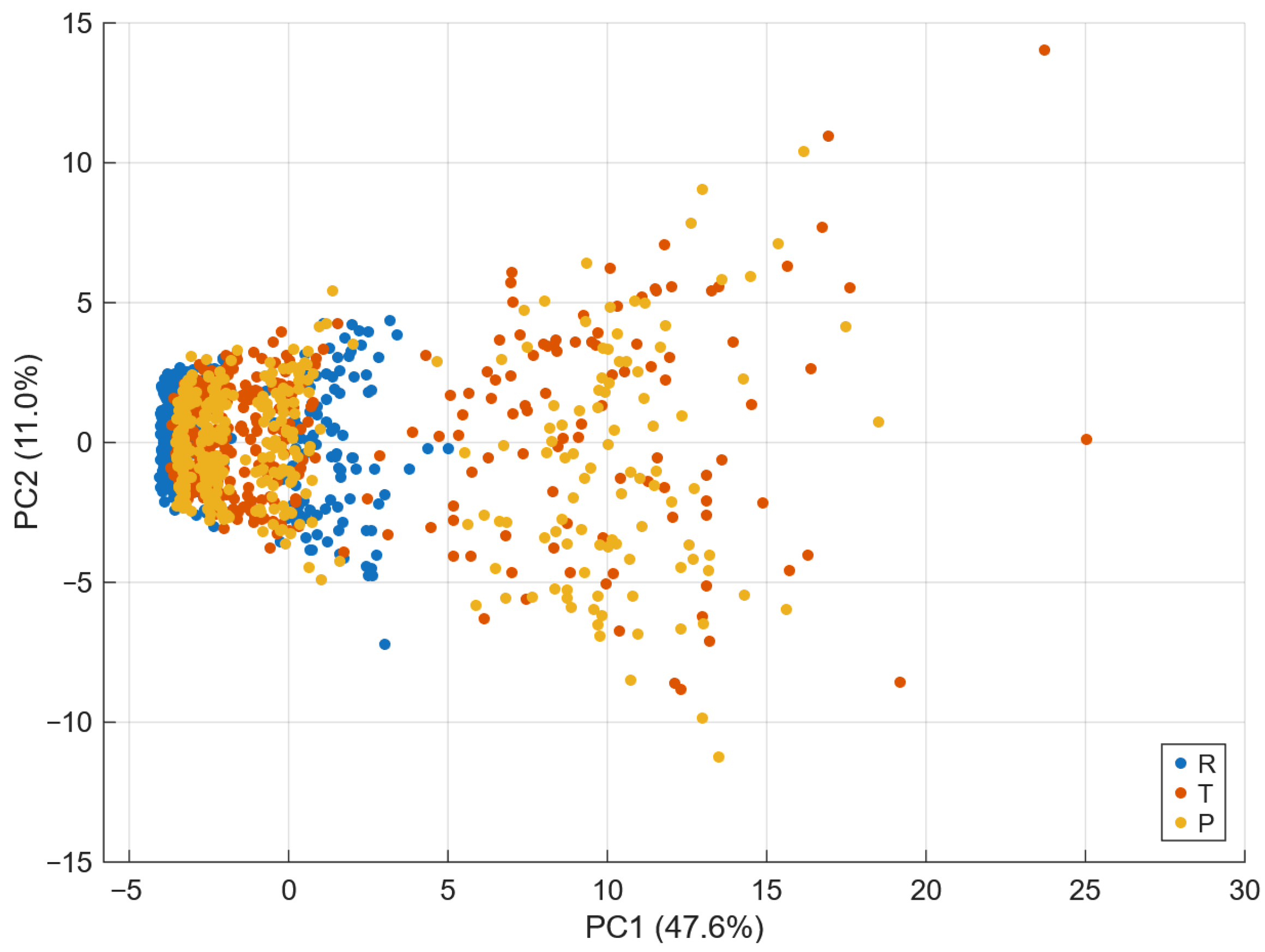

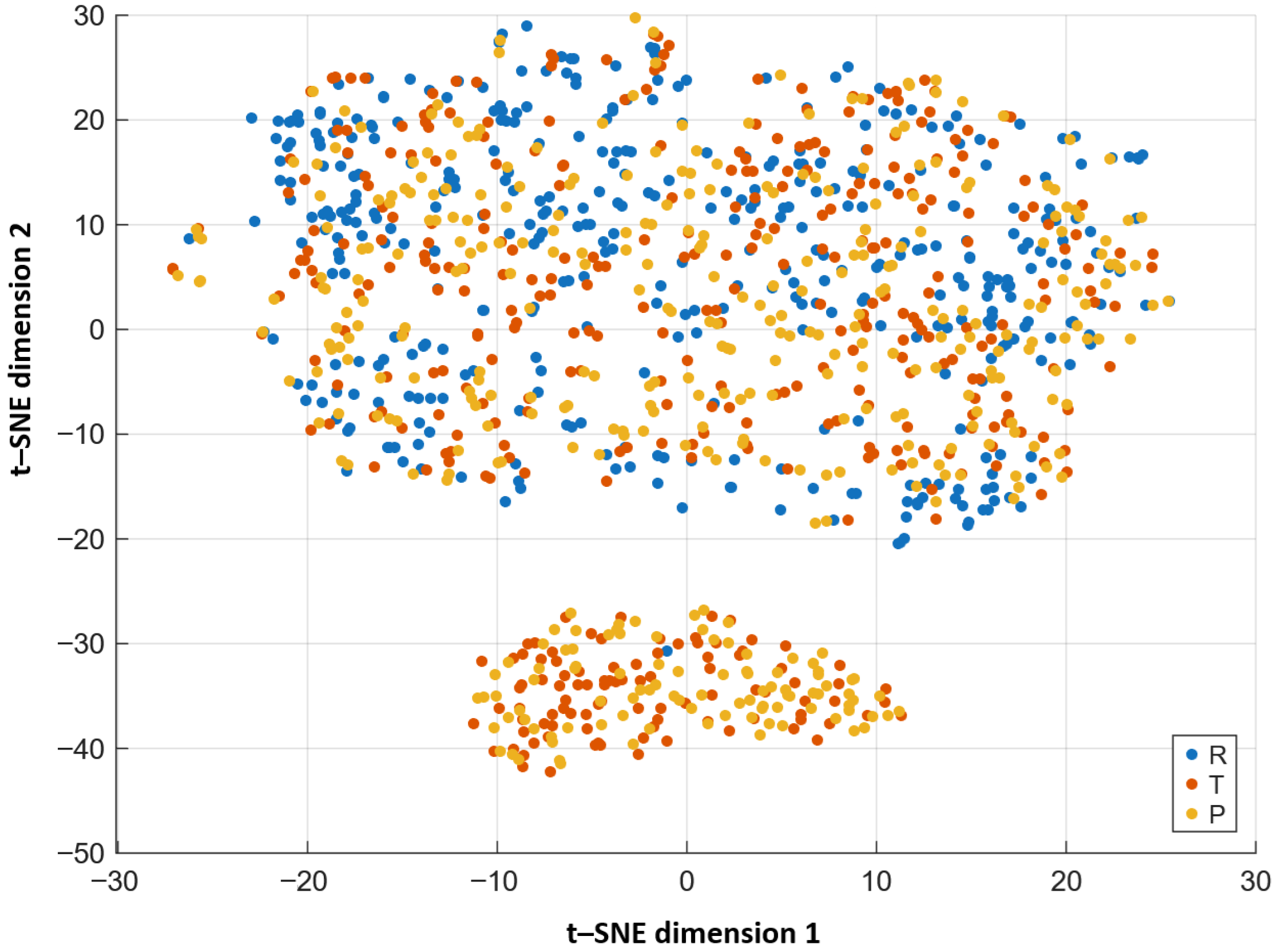

The number of trees (200) and the unconstrained depth were verified to be sufficient by monitoring the out-of-bag error curve, which plateaued well before reaching the full ensemble size, indicating that variance reduction had stabilized. Increasing the number of trees to 300 or limiting the tree depth did not produce statistically meaningful changes in the cross-validated accuracy, confirming that the reported ACC = 0.7125 is robust with respect to ensemble size and model capacity. No regularization or pruning was applied, as deep trees are well suited as base learners for bagging. Dimensionality reduction was applied for visualization and interpretability. Principal Component Analysis (PCA) was performed using MATLAB’s pca function; the projection onto the first two principal components already revealed a clear separation between the R, T, and P samples. A nonlinear embedding was additionally obtained using tsne, confirming local cluster separability.
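To make the out-of-bag (OOB) idea concrete, the following pure-Python sketch bags a simple base learner and computes the OOB error, i.e., the error of each sample's majority vote over the models whose bootstrap sample did not contain it. For brevity, depth-1 decision stumps stand in for the unpruned CART trees used in the study, so this illustrates bagging and OOB estimation rather than reimplementing the MATLAB model; all function names are hypothetical.

```python
import random
from collections import Counter


def fit_stump(X, y):
    """Depth-1 tree: best single-feature threshold by training accuracy."""
    best = (-1, 0, X[0][0], y[0], y[0])  # (score, feature, threshold, left, right)
    for j in range(len(X[0])):
        for t in sorted({row[j] for row in X}):
            left = Counter(lab for row, lab in zip(X, y) if row[j] <= t)
            right = Counter(lab for row, lab in zip(X, y) if row[j] > t)
            l_lab, l_n = left.most_common(1)[0]  # never empty: t is a data value
            r_lab, r_n = right.most_common(1)[0] if right else (l_lab, 0)
            if l_n + r_n > best[0]:
                best = (l_n + r_n, j, t, l_lab, r_lab)
    _, j, t, l_lab, r_lab = best
    return lambda row: l_lab if row[j] <= t else r_lab


def bagging_oob(X, y, n_estimators=200, seed=0):
    """Bootstrap-aggregated stumps; returns (models, out-of-bag error)."""
    rng = random.Random(seed)
    n = len(y)
    models, votes = [], [Counter() for _ in range(n)]
    for _ in range(n_estimators):
        boot = [rng.randrange(n) for _ in range(n)]  # sample with replacement
        model = fit_stump([X[i] for i in boot], [y[i] for i in boot])
        models.append(model)
        in_bag = set(boot)
        for i in range(n):
            if i not in in_bag:
                votes[i][model(X[i])] += 1  # vote only from models that never saw i
    scored = [i for i in range(n) if votes[i]]
    errors = sum(votes[i].most_common(1)[0][0] != y[i] for i in scored)
    return models, errors / len(scored)


def bagged_predict(models, row):
    """Majority vote of all bagged models."""
    return Counter(m(row) for m in models).most_common(1)[0][0]
```

Recording the OOB error after each added model would give the OOB error curve whose plateau is used above to judge the ensemble size.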

Feature relevance was quantified using permutation importance computed via out-of-bag predictor perturbations (oobPermutedPredictorImportance). Higher-order magnitudes and real parts consistently ranked as the most informative descriptors, in agreement with the theoretical expectation that higher-order structural sums encode finer geometric information.
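The principle behind permutation importance can be shown with a short, model-agnostic sketch. Unlike oobPermutedPredictorImportance, which works with the out-of-bag observations of each tree, this simplified version permutes a column over a whole evaluation set and reports the mean drop in accuracy; `predict` stands for any fitted classifier and is a hypothetical placeholder.

```python
import random


def permutation_importance(predict, X, y, n_repeats=10, seed=0):
    """Mean drop in accuracy when a single feature column is randomly shuffled."""
    rng = random.Random(seed)

    def accuracy(rows):
        return sum(predict(r) == t for r, t in zip(rows, y)) / len(y)

    baseline = accuracy(X)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the labels
            permuted = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(permuted))
        importances.append(sum(drops) / n_repeats)
    return importances


# Toy check: only feature 0 carries class information.
X = [[float(x), 0.0] for x in range(10)]
y = ["R" if x < 5 else "T" for x in range(10)]
print(permutation_importance(lambda r: "R" if r[0] < 5 else "T", X, y))
```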

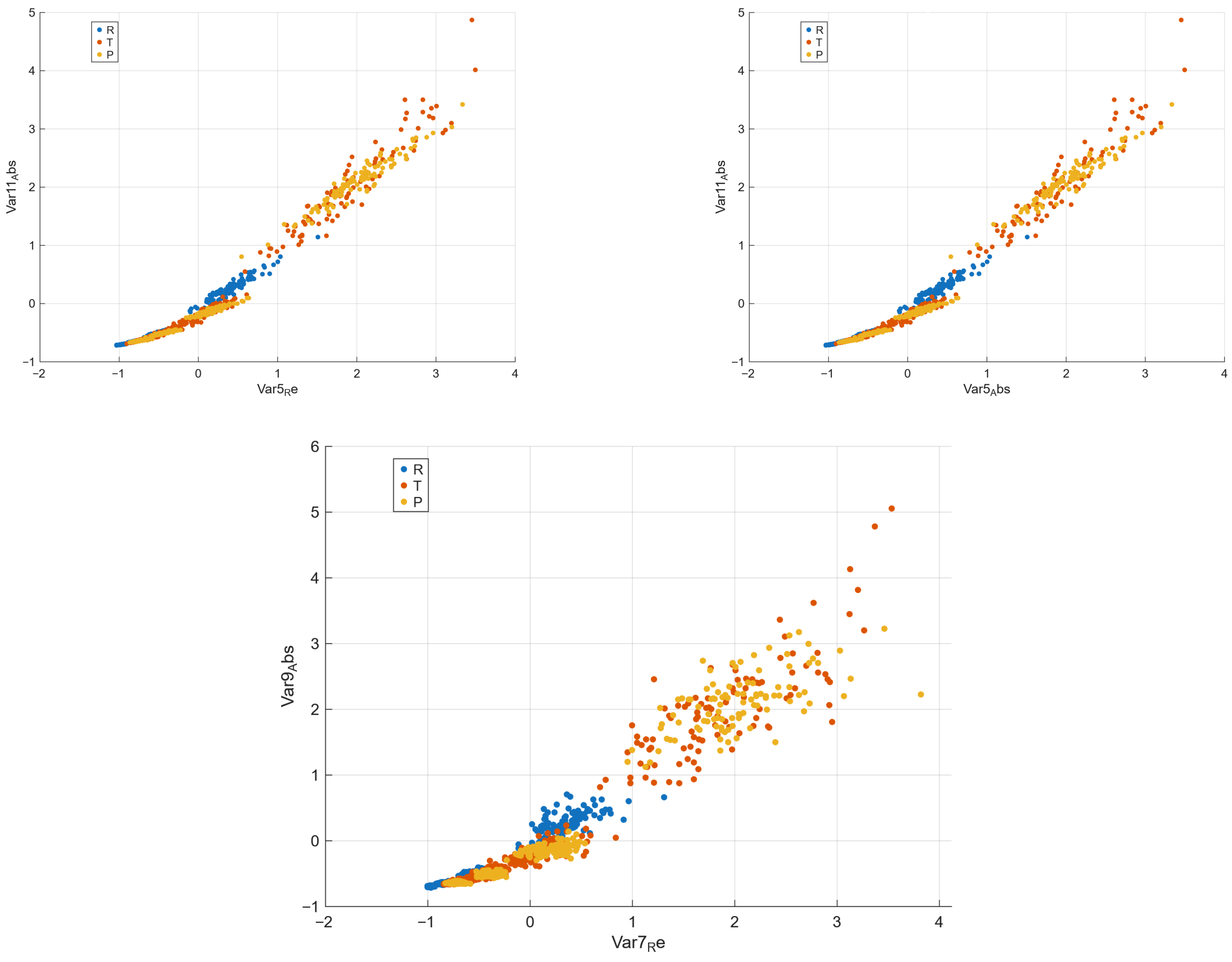

To illustrate discriminability at low dimensionality, all two-feature combinations were evaluated using a k-nearest neighbors (k-NN) classifier with 5-fold CV. This procedure identified several highly separative pairs, enabling intuitive two-dimensional visualizations of class boundaries.

The PCA was used exclusively for visualization and dimensionality reduction diagnostics, not for model training. The observation that approximately 36 principal components capture most of the variance indicates internal redundancy within the engineered feature set, but the classifiers always operated on the full set of engineered features. Model-agnostic interpretability techniques such as permutation importance are therefore the primary tool used here to quantify feature relevance in the full engineered space; methods such as SHAP, while applicable in principle, were not required for the present analytical objectives of the study.

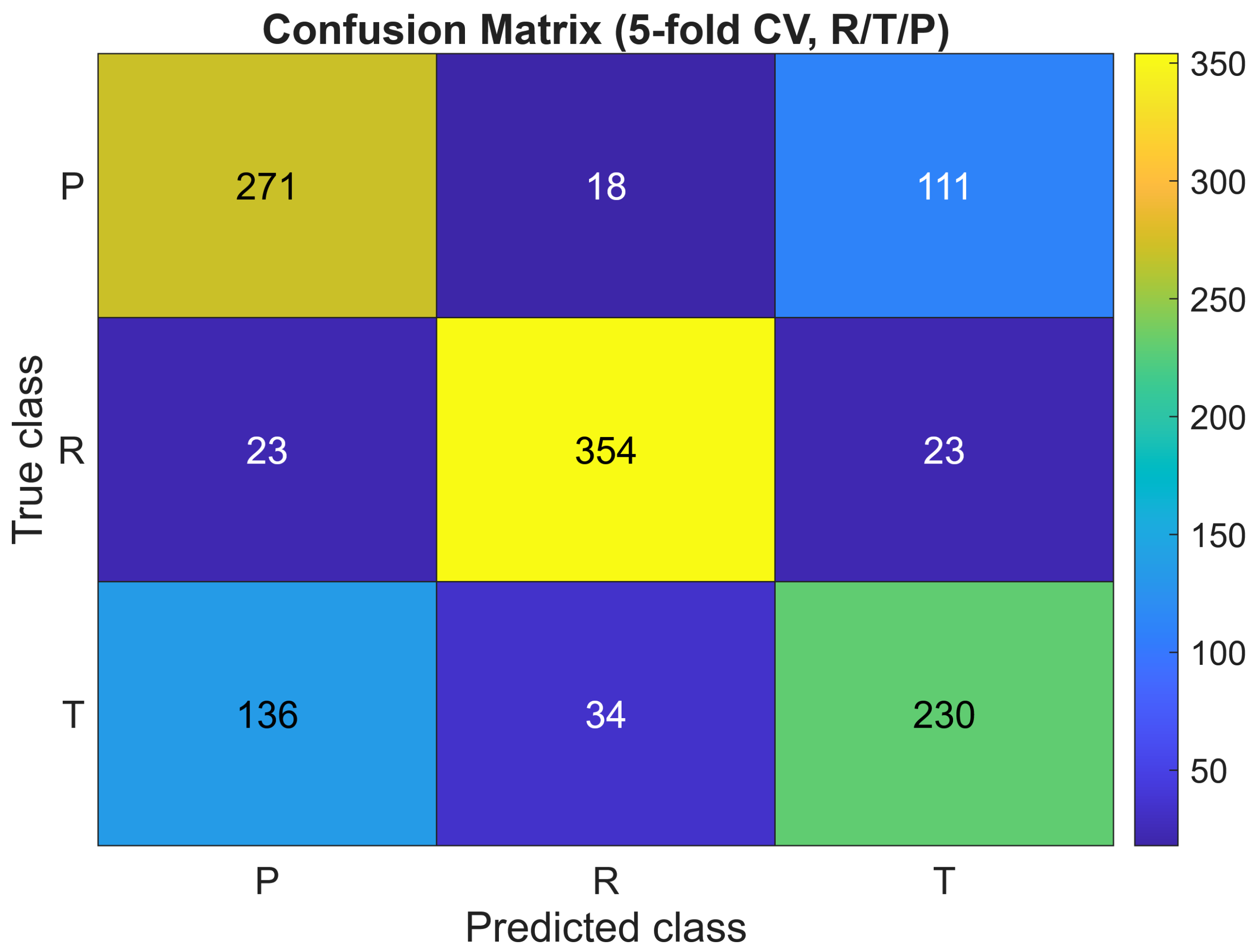

To assess the reliability of the classifier beyond aggregated metrics, we also examined patterns in the misclassified samples and evaluated the robustness of the model under perturbations. As the confusion matrix (Figure 2) shows, most errors occur between the R and T classes, which is consistent with their partial geometric overlap observed in both the PCA and t-SNE embeddings. The P class remains the most distinct owing to its pronounced regularity. An inspection of the misclassifications reveals that mislabeled cases tend to have intermediate values of the higher-order magnitudes and phase-variability descriptors, confirming that errors arise from genuinely ambiguous geometries rather than from numerical instability.

To test sensitivity to noise, we added independent Gaussian perturbations to all features, scaled to a small fraction of each feature's empirical standard deviation, and retrained the classifier. The resulting cross-validated accuracy changed only marginally, indicating strong numerical stability. Doubling the sampling density (by regenerating the dataset with 200 realizations per class and concentration) produced the same qualitative separation between R, T, and P and likewise changed the accuracy only marginally. Furthermore, the classifier was evaluated on modified datasets in which the inclusion radii were varied while preserving non-overlap; no statistically significant degradation of performance was observed. Collectively, these tests indicate that the model is robust to changes in sampling density, to numerical noise, and to moderate geometric variations of the inclusions, and that misclassified cases reflect intrinsic transitional microstructures rather than model instability.
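The noise test can be reproduced in outline by perturbing the feature matrix and rerunning the cross-validation on the result. In this sketch, the relative noise level `rel_sigma` is a free, hypothetical parameter (the value used in the study is not restated here), and the noise of each column is scaled by that column's sample standard deviation.

```python
import random
import statistics


def perturb_features(rows, rel_sigma, seed=0):
    """Add independent Gaussian noise to every feature.

    The noise in column j has standard deviation rel_sigma * sd_j, where sd_j is
    the empirical (sample) standard deviation of that column.
    """
    rng = random.Random(seed)
    sds = [statistics.stdev(column) for column in zip(*rows)]
    return [[x + rng.gauss(0.0, rel_sigma * sd) for x, sd in zip(row, sds)]
            for row in rows]


rows = [[1.0, 2.0], [3.0, 2.0], [5.0, 2.0]]
noisy = perturb_features(rows, rel_sigma=0.05)
# ...retrain and re-evaluate the classifier on `noisy`, then compare accuracies
```

Constant columns receive zero noise, and `rel_sigma = 0` returns the original matrix unchanged.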

4.2. Accuracy ACC

We use a bagging ensemble of decision trees for the classification into the classes R, T, and P, evaluated by 5-fold cross-validation (5-fold CV). The accuracy is defined as

$$\mathrm{ACC} = \frac{n_{\mathrm{correct}}}{n},$$

where n denotes the total number of test samples and $n_{\mathrm{correct}}$ is the number of samples whose predicted labels match the true class labels.

Accuracy quantifies the proportion of correctly classified samples among all observations. ACC = 1 corresponds to perfect classification, whereas a value close to the random baseline (approximately 0.33 for three classes) indicates low discriminative capability. In this study, ACC measures the ensemble classifier’s ability to correctly identify the composite type (R, T, or P) from geometric descriptors derived from structural sums.
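As a worked example of the definition: with out-of-fold predictions for all n = 1200 samples, ACC = 0.7125 corresponds to n_correct = 0.7125 × 1200 = 855 correctly classified configurations. The short Python sketch below computes ACC together with a confusion matrix whose rows are true classes and whose columns are predicted classes; the class order R, T, P is an assumption.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """ACC = n_correct / n."""
    n_correct = sum(t == p for t, p in zip(y_true, y_pred))
    return n_correct / len(y_true)


def confusion_matrix(y_true, y_pred, classes=("R", "T", "P")):
    """Entry [a][b] counts samples of true class a predicted as class b."""
    counts = Counter(zip(y_true, y_pred))
    return [[counts[(t, p)] for p in classes] for t in classes]


y_true = ["R", "R", "T", "T", "P", "P"]
y_pred = ["R", "T", "T", "R", "P", "P"]
print(accuracy(y_true, y_pred))          # 4 of 6 correct
print(confusion_matrix(y_true, y_pred))  # off-diagonal entries: R/T confusions
```

The trace of the confusion matrix divided by n recovers ACC, which is why the two summaries are always consistent when built from the same predictions.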

The classification accuracy ACC thus provides a concise and reliable measure for verifying that the structural-sum-based features preserve sufficient geometric information to distinguish between microstructure generation protocols. This metric is used consistently in the subsequent sections to evaluate the performance of the ensemble models. The confusion matrix presented in Figure 2 corresponds directly to these 5-fold cross-validation predictions, ensuring full consistency between the reported accuracy ACC = 0.7125 and the sample-wise classification outcomes.

4.3. Visualization and Feature Interpretation

Principal Component Analysis (PCA) provides a linear two-dimensional projection that highlights the global structure.

t-distributed Stochastic Neighbor Embedding (t-SNE) gives a nonlinear embedding that preserves local neighborhoods by minimizing the Kullback–Leibler divergence

$$\mathrm{KL}(P\,\|\,Q) = \sum_{i \neq j} p_{ij} \log \frac{p_{ij}}{q_{ij}},$$

with $p_{ij}$ and $q_{ij}$ denoting pairwise similarities in the input and embedded spaces, respectively. Permutation importance quantifies feature relevance as the drop in ACC after randomly permuting a given feature. Best feature pairs are identified by enumerating all two-feature pairs, training a k-nearest neighbors (k-NN) classifier on each pair, and selecting the pairs with the highest 5-fold CV ACC. Explicitly, from the standardized feature matrix containing approximately 130 engineered descriptors derived from the fourteen structural sums in Equation (12), we enumerate all unordered pairs of distinct features (roughly 130 · 129/2 ≈ 8400 pairs). For each candidate pair, we train a k-nearest neighbors classifier (fitcknn, Euclidean distance) using only the corresponding two-dimensional input and evaluate its performance via stratified 5-fold cross-validation.

The mean cross-validated accuracy serves as the scoring criterion for each feature pair, and the highest-scoring pairs are retained for subsequent visualization.
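The pair-selection procedure can be sketched without any ML library. The number of neighbors k is left as a parameter because its value is not restated here (the default k = 5 below is a hypothetical choice); folds are assigned round-robin within each class as a simple stand-in for stratified partitioning, and ties in the vote are broken by the order in which labels are first encountered.

```python
import itertools
import math
from collections import Counter


def knn_predict(train_X, train_y, x, k):
    """Majority label among the k nearest training points (Euclidean distance)."""
    order = sorted(range(len(train_X)), key=lambda i: math.dist(train_X[i], x))
    return Counter(train_y[i] for i in order[:k]).most_common(1)[0][0]


def pair_cv_accuracy(X, y, pair, k=5, n_folds=5):
    """Stratified n_folds-fold CV accuracy of k-NN restricted to two features."""
    cols = [[row[j] for j in pair] for row in X]
    seen, fold = Counter(), []
    for label in y:  # round-robin fold assignment within each class
        fold.append(seen[label] % n_folds)
        seen[label] += 1
    correct = 0
    for f in range(n_folds):
        train = [i for i in range(len(y)) if fold[i] != f]
        train_X = [cols[i] for i in train]
        train_y = [y[i] for i in train]
        correct += sum(knn_predict(train_X, train_y, cols[i], k) == y[i]
                       for i in range(len(y)) if fold[i] == f)
    return correct / len(y)


def best_pairs(X, y, top=3, k=5):
    """Score every unordered feature pair (i < j); return the top-scoring ones."""
    n_features = len(X[0])
    scores = [(pair_cv_accuracy(X, y, p, k), p)
              for p in itertools.combinations(range(n_features), 2)]
    return sorted(scores, key=lambda s: -s[0])[:top]
```

The quadratic number of pairs is what makes a cheap, two-dimensional probe like k-NN attractive here compared with retraining the full ensemble on each pair.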

This procedure is intended purely as an interpretable, model-agnostic probe of low-dimensional separability, rather than as an alternative classifier for the main task. Higher-dimensional feature subsets (of three or more features) could also be selected, yet two-dimensional projections uniquely enable geometric visualization of class separation, which is essential for interpreting how individual structural-sum descriptors encode microstructural differences.

In parallel, global feature relevance in the full 130-dimensional space is quantified via permutation importance for the bagging-tree ensemble (oobPermutedPredictorImportance). The resulting importance profiles consistently show that higher-order magnitudes and real-part components dominate predictive performance, while lower-order aggregates contribute less. These findings align with the theoretical argument that higher-order structural sums encode the finest geometric information.

SHAP-style analyses could be performed, but for tree ensembles the permutation-based approach already provides stable, model-agnostic, and easily interpretable feature rankings, which suffices for the analytical objectives of this study.

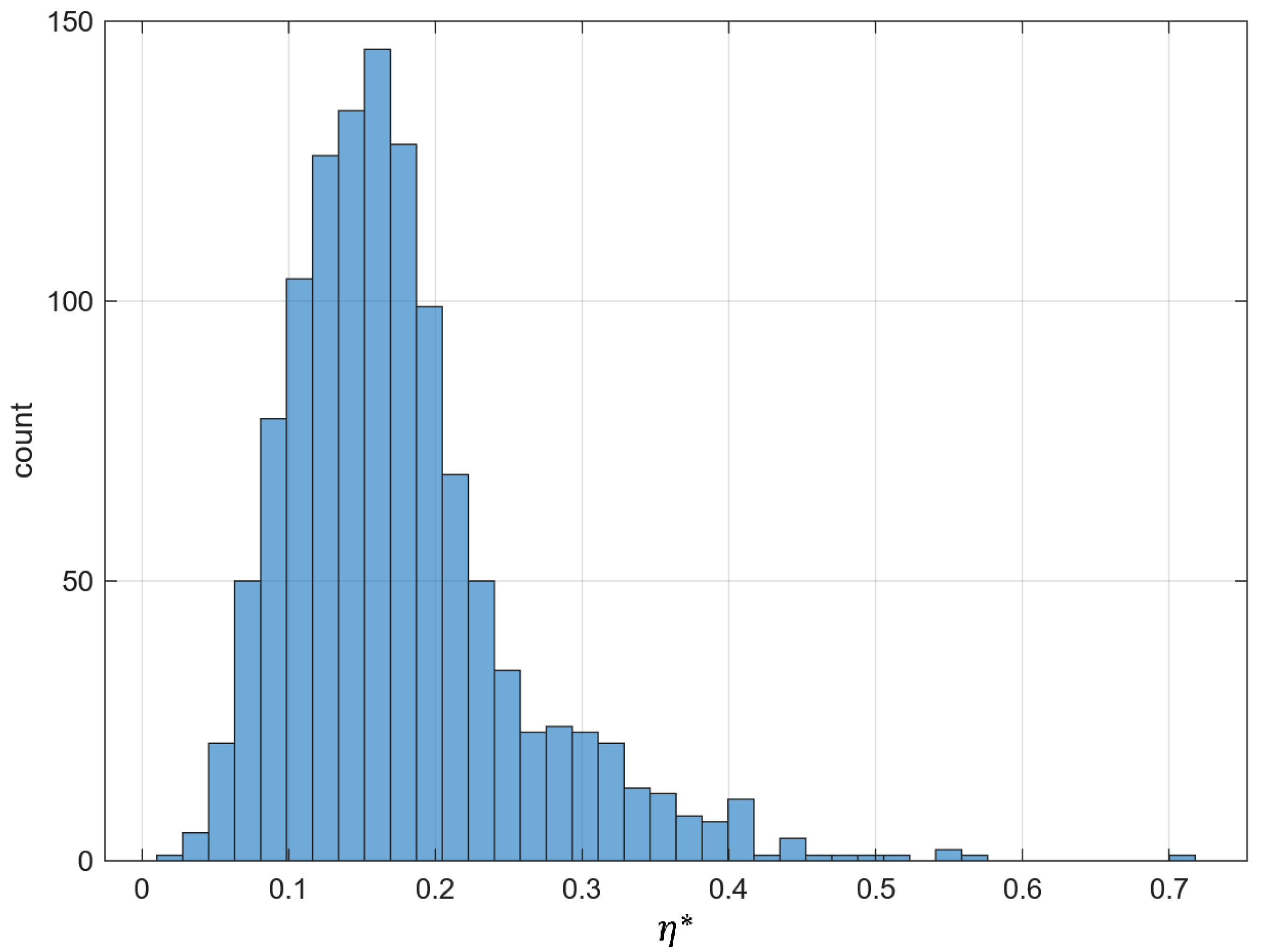

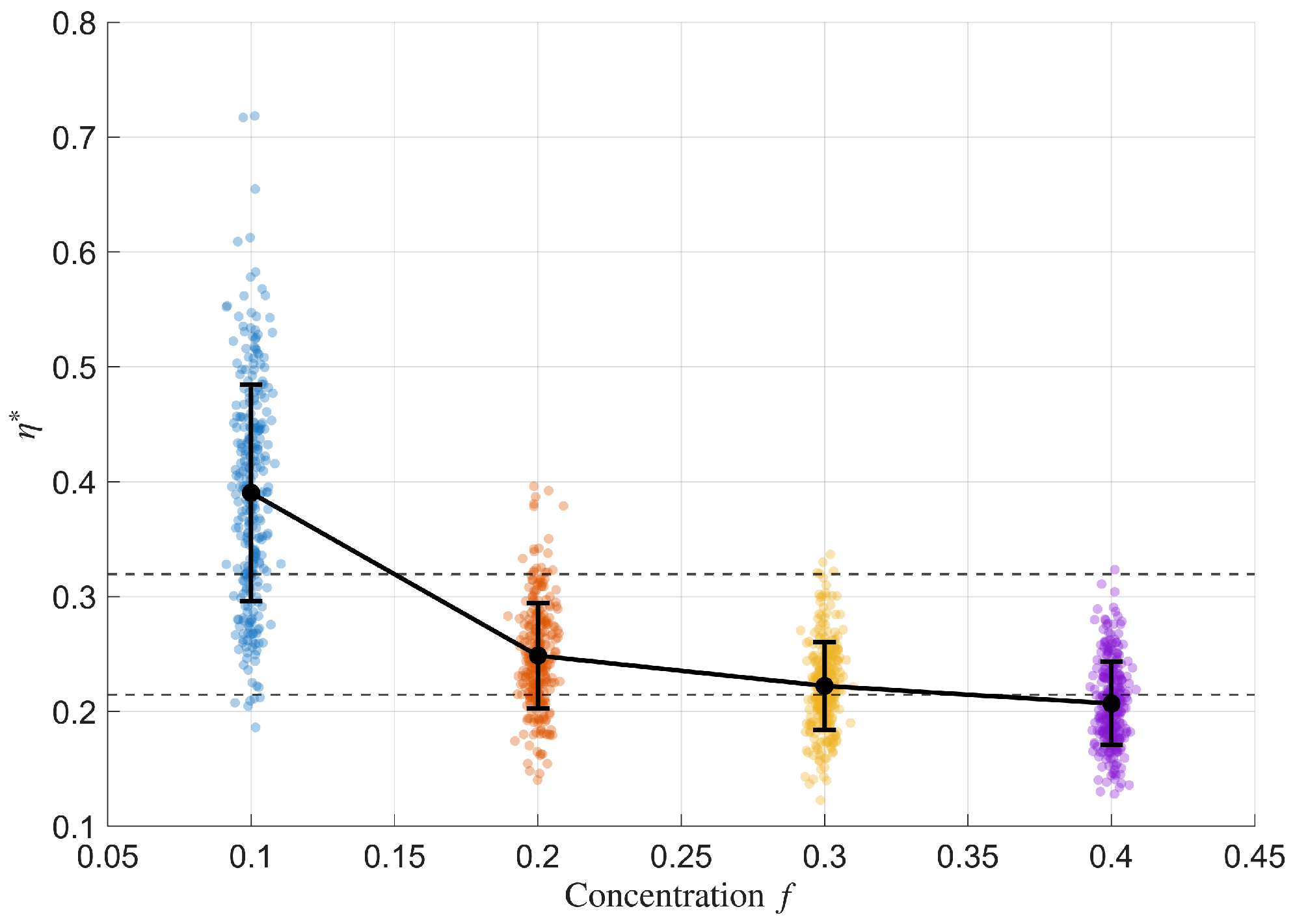

4.4. Degree of Irregularity

Let $\|\cdot\|$ denote the Euclidean norm. Consider the following three standardized components:

High-order energy is the mean of the magnitudes $|z|$ over the higher-index families (it captures the intensity of higher-order content).

Phase chaos is the standard deviation of the arguments $\arg z$ over selected families (it captures angular variability).

Im/Re asymmetry is the ratio of the Euclidean norms $\|\cdot\|$ of the imaginary and real components across selected structural-sum families.
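The three components can be computed per sample as in the following Python sketch. The grouping of sums into families, the choice of which families count as "higher-index" or "selected", and the use of the population standard deviation are all assumptions made for illustration.

```python
import cmath
import math
import statistics


def irregularity_components(sums, high_families, selected_families):
    """Return (high-order energy, phase chaos, Im/Re asymmetry) for one sample.

    sums -- mapping from a (hypothetical) family key to a list of complex sums;
            the real parts of the selected sums must not all vanish.
    """
    high = [z for fam in high_families for z in sums[fam]]
    selected = [z for fam in selected_families for z in sums[fam]]
    energy = statistics.fmean(abs(z) for z in high)                      # mean |z|
    phase_chaos = statistics.pstdev([cmath.phase(z) for z in selected])  # spread of arg z
    re_norm = math.hypot(*(z.real for z in selected))  # Euclidean norm of real parts
    im_norm = math.hypot(*(z.imag for z in selected))  # Euclidean norm of imaginary parts
    return energy, phase_chaos, im_norm / re_norm


sample = {"2": [3 + 4j], "3": [1j, -1j]}
print(irregularity_components(sample, high_families=["3"], selected_families=["2", "3"]))
```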

The value (16) quantifies the imbalance between the amplitudes of the imaginary and real parts in the complex plane and therefore reflects deviations from geometric symmetry in the spatial arrangement of inclusions. As a simple example, consider a one-element vector consisting of a single structural sum defined by (11) for particular values of its indices. In explicit form, this sum is expressed through the function defined by (6), which can be calculated by (5), under a simplifying assumption made for brevity. The value of this structural sum then determines the degree of macroscopic isotropy, since it attains a fixed value for ideally isotropic composites [23]. In addition, a second equation must hold for plane strain [18]. It is worth noting that Eisenstein summation is applied in this example.

We define the irregularity index, Equation (18), as a linear combination of the above three values rescaled to a common range. All three components are made dimensionless and comparable prior to aggregation: the high-order energy is min-max normalized over the selected families; the phase chaos is computed as the standard deviation of the arguments and then normalized; and the Im/Re asymmetry is squashed to a bounded interval and then min-max scaled. The weights in (18) emphasize high-order energy while retaining phase variability and Im/Re asymmetry. We verified that small perturbations of these weights do not affect the conclusions; substantially different weightings are examined below. These weights were selected on the basis of an empirical variance analysis of the 14 structural-sum families and of their relative discriminative power observed in the classification task. Higher-order magnitudes contribute the dominant share of the variance, which justifies assigning the largest weight to the high-order energy. The phase-variability component shows a moderate but systematic class-separating effect, whereas the Im/Re asymmetry exhibits the weakest but still non-negligible sensitivity. Therefore, the chosen triplet of weights reflects the relative statistical importance of the three standardized components while keeping the index stable under small perturbations of the weights. To verify that the coefficients in (18) are not arbitrary, we conducted a robustness study using several alternative weighting schemes, including uniform weights, variance-proportional weights, and entropy-based normalization. In all cases, the resulting values of the index were strongly correlated with the baseline definition, and the ranking of samples as well as all class-level trends remained unchanged. This confirms that the triplet of weights acts merely as a convenient normalized scaling that reflects the relative statistical contributions of the three components, rather than a sensitive or arbitrary choice that would affect the interpretation of the irregularity index. A higher value of the index indicates greater irregularity of the set of composite microstructures, not the irregularity of a single microstructure. Specifically, it reflects stronger deviations from spatial symmetry, higher angular disorder, and an increased imbalance between the real and imaginary components of the structural-sum families.
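The aggregation and the weight-robustness check can be sketched as follows. Everything specific here is an assumption made for illustration: the baseline weights (0.5, 0.3, 0.2) are hypothetical placeholders rather than the triplet used in (18), phase chaos is assumed to be normalized by π (the largest possible standard deviation of angles in [-π, π]), and tanh is one possible choice of squashing function for the asymmetry.

```python
import math
import statistics


def min_max(values):
    """Rescale to [0, 1]; a constant list maps to all zeros."""
    lo, hi = min(values), max(values)
    span = hi - lo
    return [(v - lo) / span if span > 0 else 0.0 for v in values]


def irregularity_index(energy, chaos, asym, weights=(0.5, 0.3, 0.2)):
    """Weighted sum of the three components over a set of samples.

    weights -- HYPOTHETICAL placeholder triplet (non-negative, summing to 1)
    """
    e = min_max(energy)
    c = [min(v / math.pi, 1.0) for v in chaos]   # assumed normalization by pi
    a = min_max([math.tanh(v) for v in asym])    # assumed squashing, then min-max
    w1, w2, w3 = weights
    return [w1 * x + w2 * y + w3 * z for x, y, z in zip(e, c, a)]


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length lists."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


energy, chaos, asym = [0.1, 0.5, 0.9], [0.2, 0.4, 0.6], [0.1, 0.2, 0.3]
baseline = irregularity_index(energy, chaos, asym)
uniform = irregularity_index(energy, chaos, asym, weights=(1 / 3, 1 / 3, 1 / 3))
print(pearson(baseline, uniform))  # close to 1: the ranking is unchanged
```

Comparing both the correlation and the sample ranking, as in the last lines, mirrors the two robustness criteria described in the text.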

It is worth noting the entropy-like irregularity measure introduced in [13,18], which is conceptually related to the present definition but was derived for different classes of composites. We now extend this notion to 2D elastic composites. Consider the infinite set of structural sums introduced in the previous section, together with the corresponding set of structural sums calculated for the regular normalized hexagonal array of disks with formally fixed parameters. The latter set consists of the lattice sums (4) and (7) evaluated for the corresponding parameter values.

For fixed integers i and M, let some coefficients of the polynomial approximation of degree M (similar to (13)) contain a given structural sum, and consider the minimal index of these coefficients. We introduce a structural irregularity measure similar to that of [13,18]. This measure vanishes for the regular hexagonal array and can be regarded as a distance, in some metric, between a given structure and the hexagonal array. The measure indicates the degree of regularity/irregularity of a single microstructure. Its introduction resolves quantitatively the question of the irregularity of disordered structures, which has traditionally been treated in physics rather qualitatively, as a deviation of random structures from regular lattices [41].

6. Discussion and Conclusions

Compared with previous exploratory studies using simple classifiers such as Naive Bayes [13], the present approach extends the methodology toward robust ensemble learning, feature-importance analysis, and interpretable embeddings consistent with the analytical decomposition of effective tensors. Consequently, the ML stage acts as a bridge between the deterministic mathematical model and empirical statistical inference, reinforcing reproducibility and interpretability in the study of random composites. The workflow follows the general paradigm of data-driven materials modeling (dataset construction, feature engineering, training, validation, and interpretation), adapted here to analytical descriptors rather than raw images.

The considered model reaches an accuracy of ACC = 0.7125 for classification into three classes (R, T, P) under 5-fold cross-validation, which confirms the predictive strength of the constructed feature vector. Visualization methods, namely Principal Component Analysis (PCA) and t-distributed Stochastic Neighbor Embedding (t-SNE), further confirm the natural separation of the classes. The analysis of the best two-dimensional feature pairs supports the interpretability of the model.

The irregularity index provides a scalar descriptor that quantifies the irregularity of a set of composite microstructures. The observed decrease in the mean value of the index at higher concentrations compared with lower ones indicates that denser microstructures tend to be more regular, being stabilized by the tighter packing.

The study demonstrates that structural sums constitute an effective and quantitative descriptor of 2D composite geometry and can be directly used as machine-learning features. Our cycle of papers, based on [17,18,32], essentially extends the previous naive statistical investigations, which concentrated on the special case of the uniform distribution.

The main advantages and features of the structural sums, the cornerstone of aRVE, were partially summarized in [32] (Chapter 4); they are listed below in the context of elasticity problems.

A class of random composites can be determined directly by a set of structural sums without computing its effective properties. In the present paper, ML methods are used to achieve this classification.

In the framework of aRVE, random clustering composites can be theoretically simulated [13], and an observed composite can be investigated [43].

Macroscopic isotropy can be verified quickly by means of structural sums [40,42] (Equations (3.2)).

aRVE can be applied to any distribution of disks on the plane, not only to the uniformly distributed inclusions tacitly considered in the majority of published works.

The method does not use expensive, purely numerical computations, such as FEM or the numerical solution of infinite systems of equations and integral equations.

The method does not require the computation of higher-order correlation functions, which is practically infeasible.
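Since aRVE accepts any distribution of disk centers in the periodicity cell, the input can come from any generator. As one illustration, the following is a minimal sketch of random sequential addition (RSA) of identical non-overlapping disks in a unit square periodicity cell. Here RSA, the unit-square cell, and the names `periodic_distance` and `random_sequential_addition` are assumptions for illustration. This section does not state that RSA is one of the protocols of Part II. The concentration is f = nπr², since the cell has unit area.

```python
import math
import random

def periodic_distance(p, q):
    """Distance between p and q in the unit square with periodic boundary conditions.

    Uses the minimum-image convention, valid here because 2 * radius < 0.5.
    """
    dx = abs(p[0] - q[0])
    dx = min(dx, 1.0 - dx)
    dy = abs(p[1] - q[1])
    dy = min(dy, 1.0 - dy)
    return math.hypot(dx, dy)

def random_sequential_addition(n, radius, seed=0, max_attempts=100_000):
    """Place n disk centers uniformly at random, rejecting candidates that overlap accepted disks."""
    rng = random.Random(seed)
    centers = []
    attempts = 0
    while len(centers) < n:
        if attempts >= max_attempts:
            raise RuntimeError("jamming: could not place all disks")
        attempts += 1
        candidate = (rng.random(), rng.random())
        # Two disks of equal radius do not overlap iff their centers are >= 2r apart.
        if all(periodic_distance(candidate, c) >= 2 * radius for c in centers):
            centers.append(candidate)
    return centers

# Hypothetical example: 20 disks at concentration f = 0.3.
f, n = 0.3, 20
r = math.sqrt(f / (n * math.pi))
centers = random_sequential_addition(n, r, seed=3)
print(len(centers), round(r, 4))
```

The resulting list of centers is the only geometric input that structural sums require. A different generator, for example one producing clustered configurations, can be substituted without changing the downstream feature computation.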

Future research will address practical classification challenges, which were preliminarily explored in [23] for the one-dimensional vector (12), simplified to the scalar form

. The present study builds upon the theoretical foundations established in Part II, where stochastic simulations were conducted using three distinct protocols

, each based on a uniform distribution of identical disks. To illustrate the scheme, we now consider three specific fourth-order approximate expressions from Part II for the normalized effective shear modulus of composites containing hard-particle inclusions:

In Part II, the structural sums were simulated using the protocols

. These sums were then averaged within each protocol and substituted into the general formulas derived in Part II, yielding the coefficients of Equation (20).

exhibit small deviations from the value 2, which corresponds to an ideally isotropic composite. The subsequent coefficients in

and

encapsulate more nuanced information about the composites, reflecting higher-order correlations. In this paper, we analyze these coefficients in depth by examining their fundamental components, the structural sums.
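The averaging-then-substitution step described above can be sketched as follows. Per-realization structural-sum vectors are grouped by protocol, averaged, and then passed to a coefficient formula, and the deviation from the isotropic value 2 is reported. The protocol labels `"P1"` and `"P2"`, the sample numbers, and the `coefficient_fn` passed in the example are hypothetical placeholders. The actual formulas of Part II are not reproduced here.

```python
from collections import defaultdict
from statistics import mean

ISOTROPIC_VALUE = 2.0  # coefficient value for an ideally isotropic composite (from the text)

def average_by_protocol(samples):
    """Group (protocol_label, feature_vector) pairs by label and average each component."""
    groups = defaultdict(list)
    for label, vec in samples:
        groups[label].append(vec)
    return {label: [mean(col) for col in zip(*vecs)] for label, vecs in groups.items()}

def coefficient_deviation(avg_sums, coefficient_fn):
    """Substitute the averaged sums into coefficient_fn and return the deviation from 2."""
    return {label: coefficient_fn(vec) - ISOTROPIC_VALUE for label, vec in avg_sums.items()}

# Hypothetical per-realization structural-sum vectors for two protocols.
samples = [
    ("P1", [1.98, 0.40]), ("P1", [2.02, 0.44]),
    ("P2", [2.10, 0.51]), ("P2", [2.14, 0.49]),
]
avg = average_by_protocol(samples)
# Placeholder mapping, NOT the formula derived in Part II.
dev = coefficient_deviation(avg, lambda s: s[0])
print(avg)
print(dev)
```

Averaging before substitution follows the order described in the text. The two orders coincide only when the coefficient formula is linear in the structural sums.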

Section 4 demonstrates a strong correspondence between the protocols

and their respective features, providing theoretical validation for the proposed ML approach.

Looking ahead, practical research will focus on investigating real microstructures. If the effective constants of random composites are needed, we follow the strategy developed in Parts I and II, for example, by estimating the constants directly using Formula (20). The results of the present paper can be applied to the classification of a set of microstructures. Beyond the effective constants, the structural-sum feature vectors encode hidden information on the morphological structure of heterogeneous media.

Let us give a striking example from the study of bacteria. The collective behavior of bacteria was discussed in [44,45], where the correlation length and correlation time of a bacterial suspension were studied. The onset of collective motion was linked to the relative roles of hydrodynamic interactions and collisions by studying the effect of the dipole moment. The macroscopically isotropic behavior of bacteria was established in [46,47], with some local oscillations at the mesoscopic level, which can be explained by the distinction between active and passive particles [48]. Taking into account that the effective viscosity of 2D macroscopically isotropic suspensions within the approximation

does not depend on the location of bacteria, we conclude that the results of [44,45] are based mainly on semi-empirical observations. At the same time, the higher-order structural sums differ significantly between chaotic and collective behavior [46,47]. This clearly demonstrates the advantage of higher-order structural sums: they distinguish configurations that the low-order approximation of the effective viscosity cannot.

Returning to materials science, suppose that we have several microstructure images produced by the same technological process but governed by three distinct control parameters, denoted by the same letters

. Instead of relying on theoretical simulations, we can extract data directly from these raw images and apply the ML method outlined in Section 4. The current simulations suggest that it should be possible to classify the composites based on the actual technological parameters

. If classification fails, it may indicate that these parameters do not significantly influence the microstructure. Naturally, such a negative outcome could also result from excessive scatter in the data.

In future research, the ML approach will be extended to multi-phase composites, building on the methods developed in [49,50]. The impact of inclusion shape on classification will also be considered in light of the studies [29,50,51].