4.2. Results of the Baseline Model (BLM)

As shown in Table 4, the BLM was trained on the target domain datasets S2 using XGBoost and RF. The XGBoost model achieved an R2 of 0.81 and a MAPE of 7.70%, while the RF model performed slightly better, with an RMSE of 3.20 MPa and an R2 of 0.83, but a higher MAPE of 9.21%.
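For reference, the three reported metrics can be computed as in the minimal sketch below. It assumes the standard definitions of RMSE, R2, and MAPE, which this section does not restate; the strength values are made up for illustration and are not taken from Table 4.

```python
import math

def rmse(y_true, y_pred):
    """Root-mean-square error, in the same unit as the targets (MPa here)."""
    n = len(y_true)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)

def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_t = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot

def mape(y_true, y_pred):
    """Mean absolute percentage error, expressed in percent."""
    n = len(y_true)
    return 100.0 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / n

# Illustrative compressive strengths in MPa (made-up values, not from Table 4)
actual = [30.0, 40.0, 50.0]
predicted = [33.0, 38.0, 50.0]
print(rmse(actual, predicted), r2_score(actual, predicted), mape(actual, predicted))
```

Because RMSE penalizes large absolute errors while MAPE penalizes errors relative to the true value, one model can rank better on RMSE and worse on MAPE, as reported here for RF.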

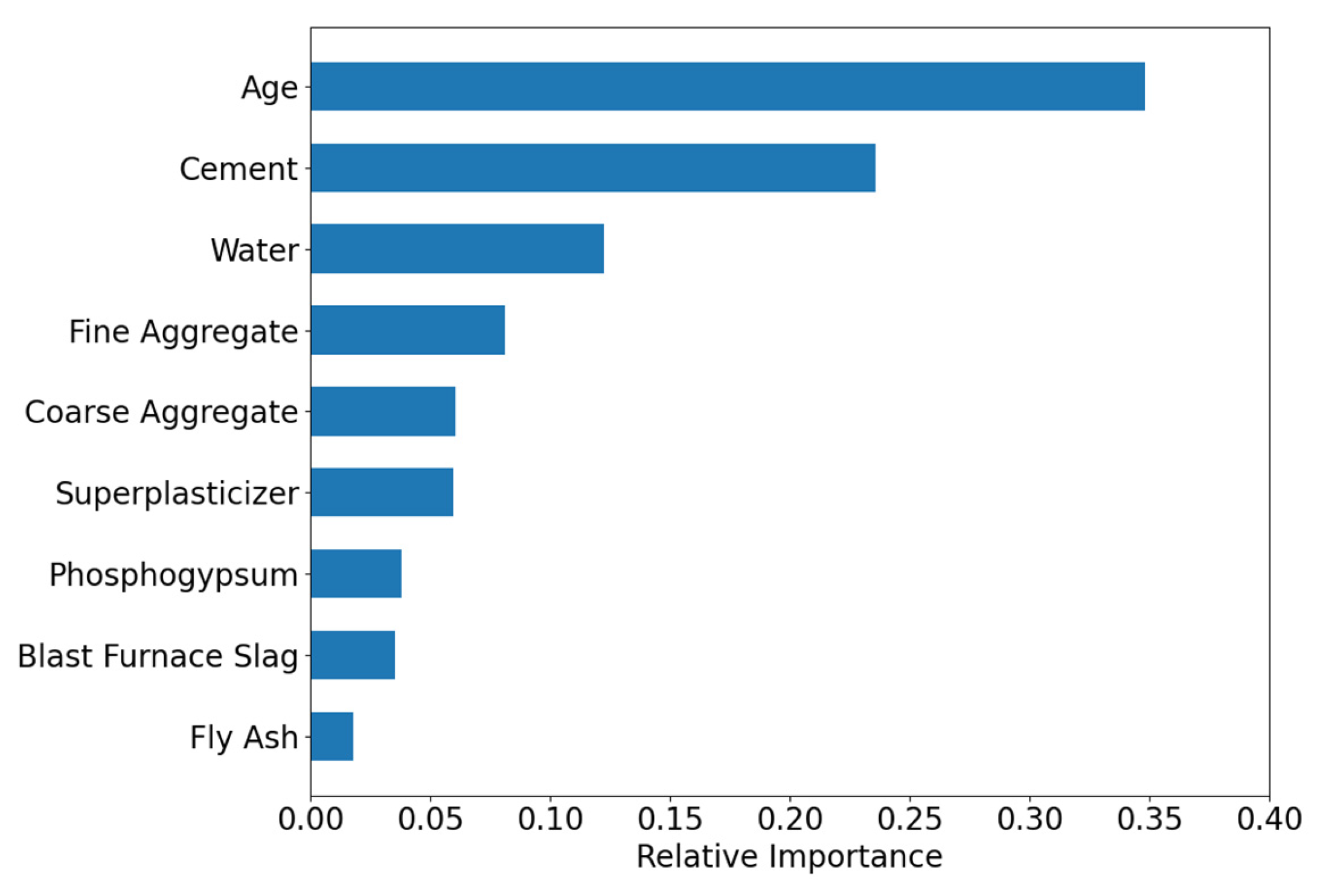

As illustrated in Figure 7, the feature importance analysis showed both similarities and differences between the two models. Both identified Age as the most critical feature (importance > 0.35), significantly outweighing other variables. However, the secondary feature rankings diverged:

XGBoost: Coarse Aggregate > Cement > PGA > Phosphogypsum > Water > Superplasticizer > Fine Aggregate > Retarder.

RF: Cement > Phosphogypsum > Coarse Aggregate > PGA > Water > Superplasticizer > Fine Aggregate > Retarder.

This discrepancy arises from the limited sample size (N = 19), which introduces instability in feature importance estimation under small-sample conditions. Notably, both models assigned zero importance to Fine Aggregate and Retarder, mainly due to their low data variability, making it difficult to discern their relationship with compressive strength.

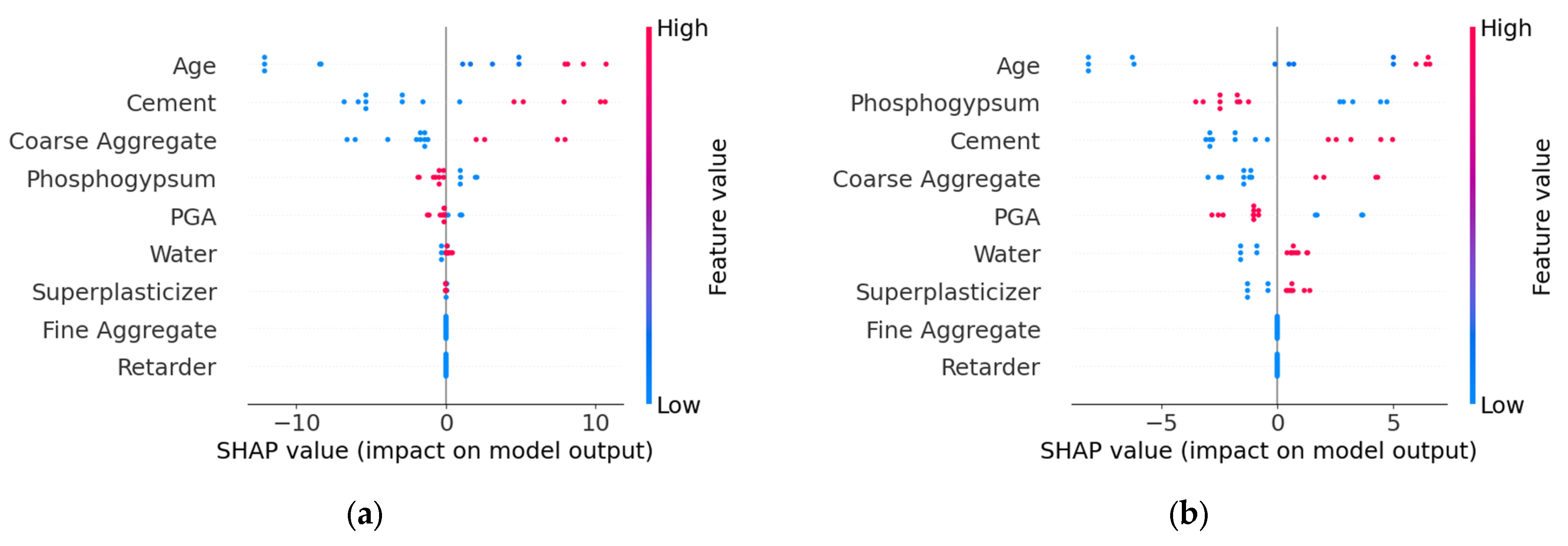

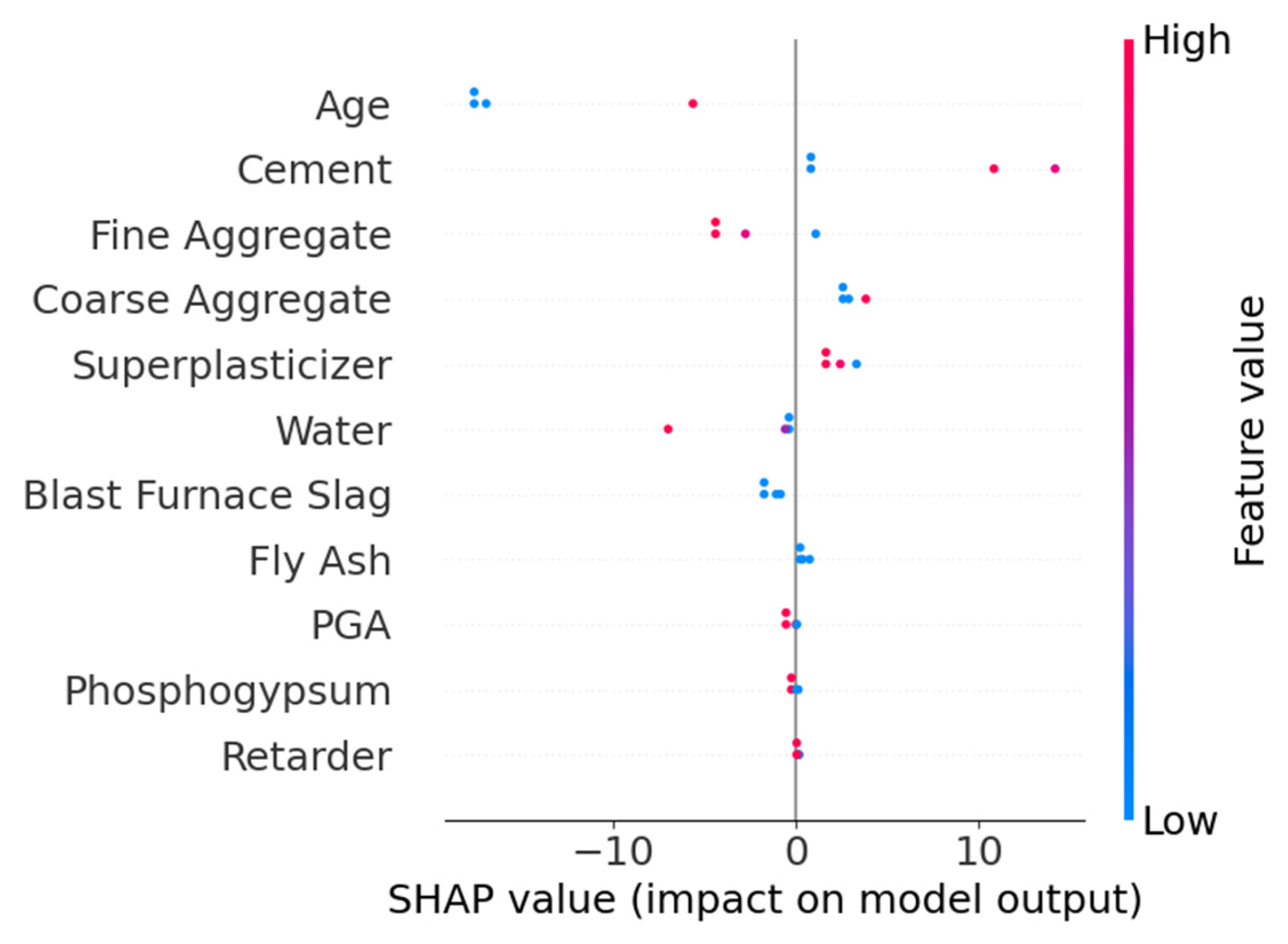

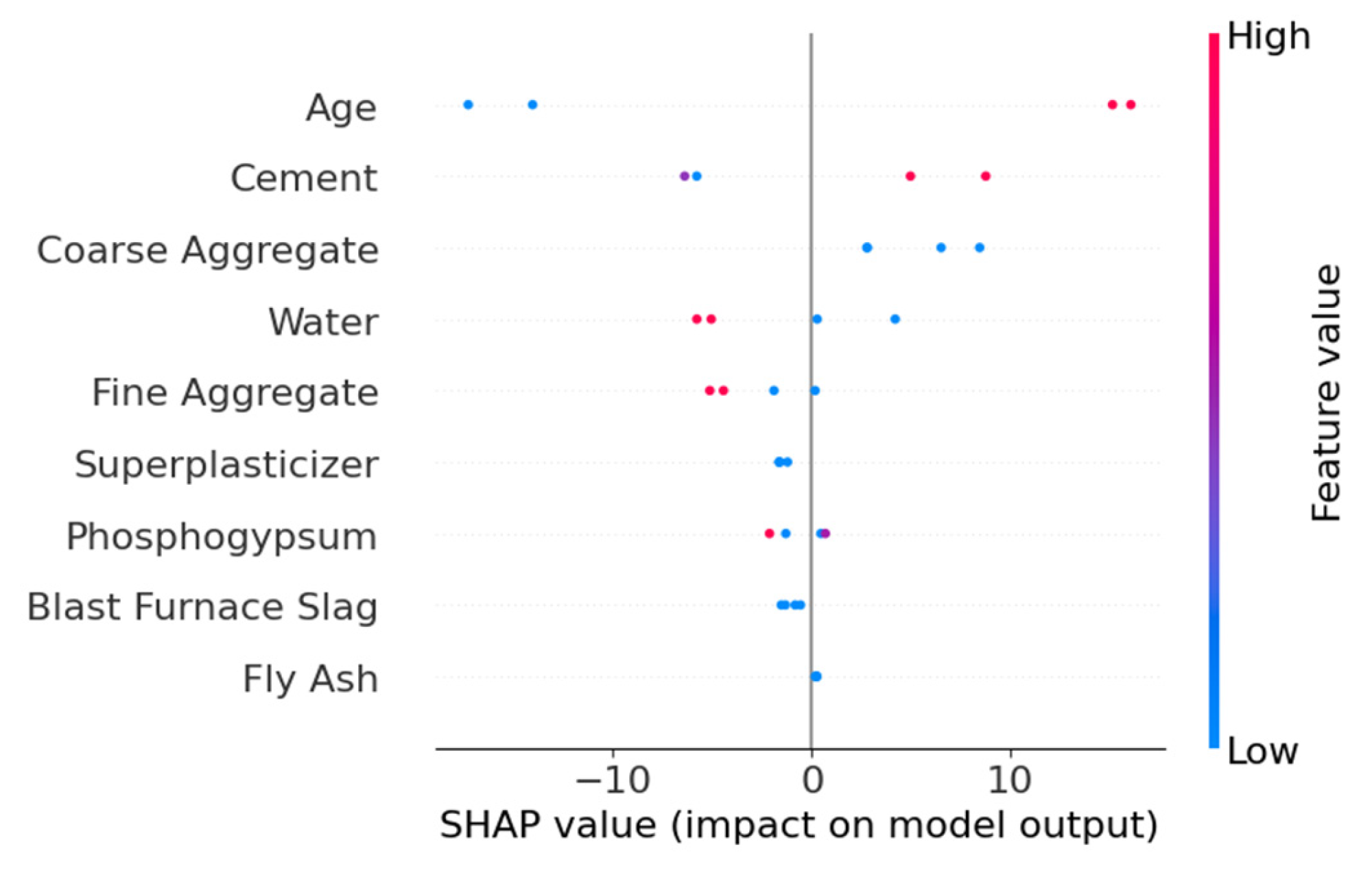

The SHAP overview shown in Figure 8 indicates that different algorithms exhibit variations in feature interpretation. In the XGBoost model, Age, Cement, and Coarse Aggregate have the greatest impact on the model’s output, with relatively dispersed SHAP value distributions, suggesting these features contribute significantly to the model’s predictions. In contrast, the RF model shows more pronounced influences from Age, Phosphogypsum, and Cement. Additionally, Fine Aggregate and Retarder display minimal SHAP value variations in both XGBoost and RF models, indicating that both algorithms consider their impact on strength to be negligible. The SHAP values of other parameters calculated by the two models differ in both ranking and distribution, demonstrating clear disparities.

The above analysis reveals that even when using the same datasets, the output results can vary significantly depending on the machine learning algorithm employed. This distinction is particularly prominent in small-sample data. The primary reason is that in small samples, the true relationships between features are difficult to capture, leading the models to misinterpret random fluctuations as meaningful patterns.

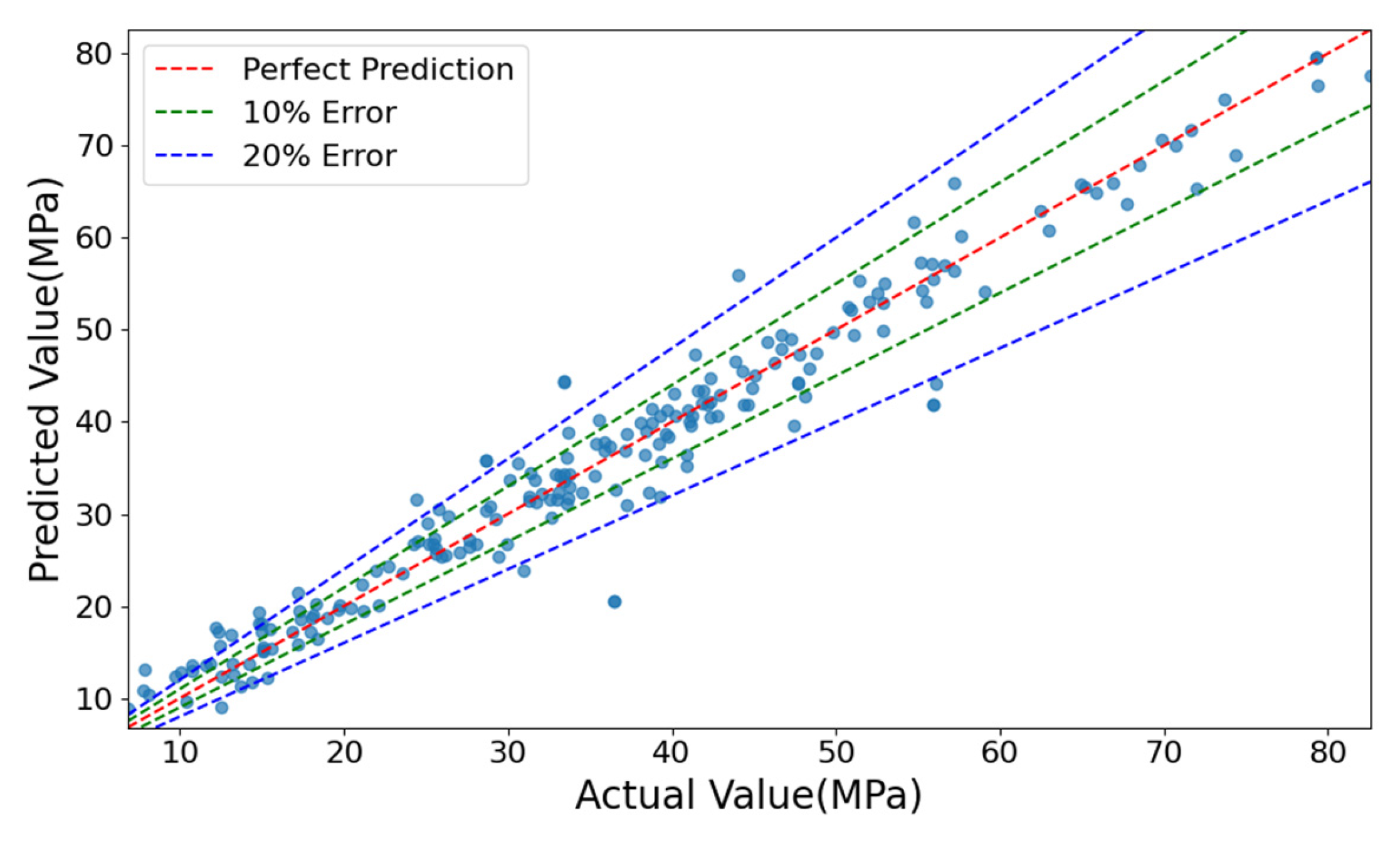

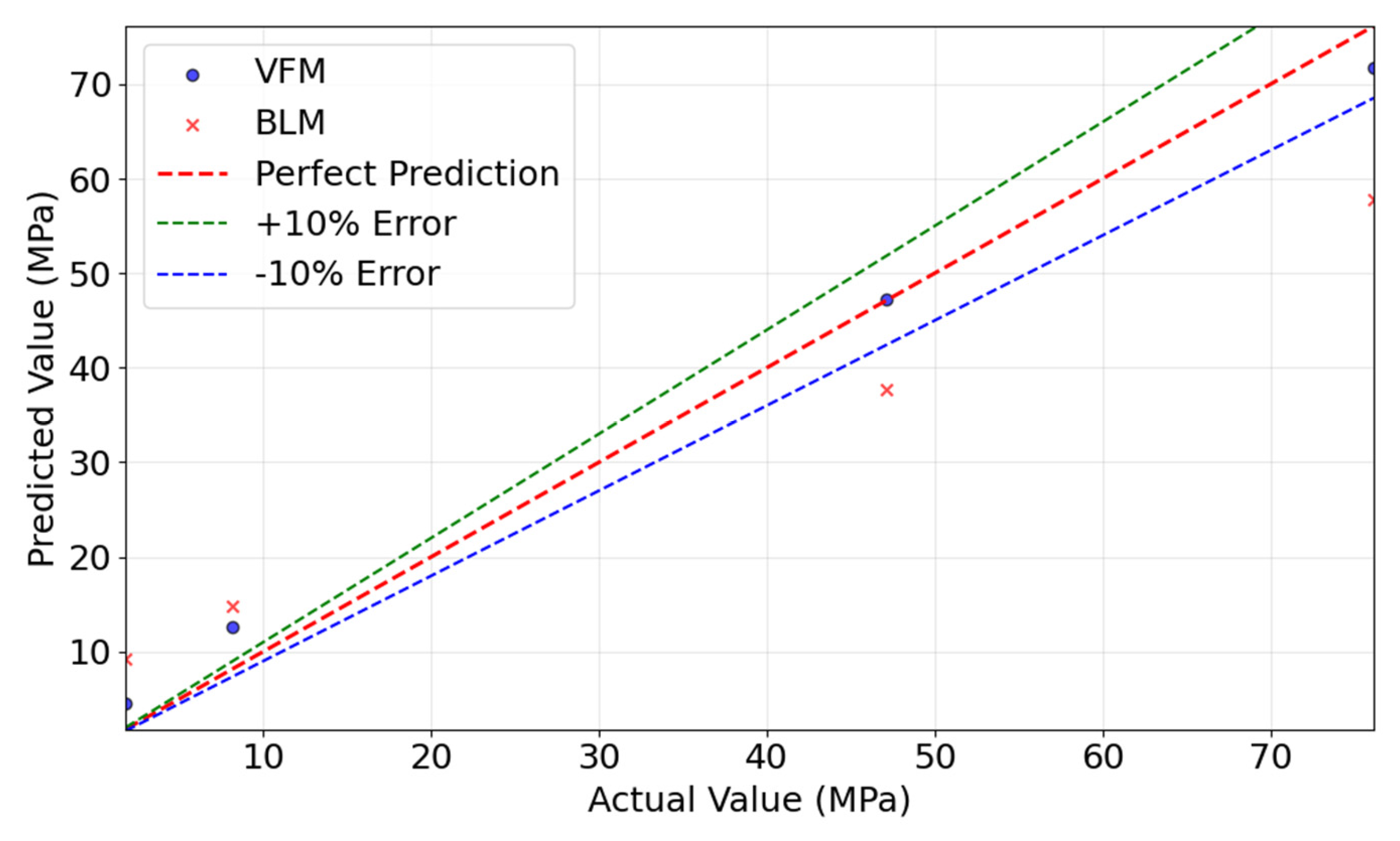

Figure 9 compares the predicted and actual values for both models. RF demonstrated better fitting in the medium-to-high strength range, while XGBoost performed well in the low-to-medium range. Overall, most predictions fell within the ±10% error band, confirming reasonable accuracy.
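The share of predictions inside the ±10% error band can be quantified with a short check such as the one below. This is a sketch under the assumption that the band is defined relative to the measured strength; the function name and sample values are illustrative only.

```python
def fraction_within_band(y_true, y_pred, band=0.10):
    """Share of predictions whose relative error |pred - true| / |true| is <= band."""
    inside = sum(1 for t, p in zip(y_true, y_pred) if abs(p - t) <= band * abs(t))
    return inside / len(y_true)

# Illustrative strengths in MPa (made-up values, not read from Figure 9)
actual = [20.0, 35.0, 50.0, 60.0]
predicted = [21.5, 30.0, 52.0, 61.0]
share = fraction_within_band(actual, predicted)
print(f"{share:.0%} of predictions fall within the +/-10% band")
```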

In the S2 target domain datasets, the predictive capability of traditional machine learning models is constrained by the limited sample size. Although the XGBoost and RF models achieve an average R2 score of 0.82 on the test set, demonstrating basic predictive performance, this remains insufficient to meet engineering-level strength prediction requirements. Furthermore, the feature importance analysis exhibits significant randomness, reflecting the instability of feature contribution estimates under small-sample conditions. SHAP value comparisons reveal that the models struggle to capture stable correlation mechanisms among components from the limited data.

4.3. Results of the Transfer Learning Model (TLM)

Table 5 presents the test results of the TLM, constructed using the dynamic weighted transfer learning framework. Compared to the average performance of the BLM, the TLM demonstrated superior and more stable results. The RMSE was reduced from 3.31 MPa to 2.86 MPa, a 13.6% decrease. The R2 improved from 0.82 to 0.95, a 15.9% increase, and the MAPE decreased from 8.48% to 7.78%. These results support the effectiveness of the dynamic weighted transfer learning model under small-sample conditions and its ability to overcome the performance limitations of traditional methods when data are extremely scarce.
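The relative changes quoted above follow from the reported BLM and TLM values; the short check below reproduces the arithmetic. The helper name is illustrative.

```python
def relative_change_pct(baseline, new):
    """Signed percentage change of `new` relative to `baseline`."""
    return 100.0 * (new - baseline) / baseline

rmse_change = relative_change_pct(3.31, 2.86)   # RMSE in MPa, BLM average vs. TLM
r2_change = relative_change_pct(0.82, 0.95)     # R2, BLM average vs. TLM
mape_drop_points = 8.48 - 7.78                  # MAPE change in percentage points

print(f"RMSE: {rmse_change:.1f}%, R2: {r2_change:+.1f}%, "
      f"MAPE: -{mape_drop_points:.2f} points")
```

Note that the MAPE improvement is a difference of 0.70 percentage points, which is a different quantity from the relative changes given for RMSE and R2.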

Figure 10 displays the feature importance distribution output by the TLM. The feature Age has the highest importance score (≈0.35), indicating its critical role in model predictions, followed by Cement and Water, with importance scores of 0.27 and 0.14, respectively. Other secondary features are ranked as follows: Fine Aggregate, Coarse Aggregate, Superplasticizer, Blast Furnace Slag, Fly Ash, Phosphogypsum, Retarder, and PGA. Compared to the feature importance results of the SDM, both models identify Age, Cement, and Water as the top three most important features, confirming that TLM successfully inherits the general knowledge of conventional concrete. Notably, the target domain-specific features (Phosphogypsum, Retarder, and PGA) exhibit low but non-zero importance scores, indicating that TLM captures their unique influence on strength.

Figure 11 shows the SHAP overview for feature contributions. The features Age and Cement exhibit the most significant impact on model outputs. Specifically, lower values of Age negatively affect strength, while higher values of Cement positively correlate with strength. The SHAP values of other features are more concentrated, suggesting their relatively minor influence on strength predictions.

A sensitivity analysis was conducted to assess the stability and robustness of the SHAP attributions. The proposed TLM was retrained 10 times, each with a different random seed, to account for stochastic variability during the training process. For each of the 10 runs, SHAP values were computed on the test set. The mean and standard deviation of the absolute SHAP values for each feature were then calculated across all runs to quantify the variance.
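One way to aggregate the attributions across reruns is sketched below. It assumes that each run is first summarized by the mean absolute SHAP value of every feature on the test set, and that the mean and sample standard deviation are then taken across runs; the exact aggregation used in the study may differ. The data structure and toy numbers are hypothetical.

```python
from statistics import mean, stdev

def shap_stability(runs):
    """Summarize SHAP stability across reruns.

    runs: list with one dict per random seed, mapping feature name to the list
          of SHAP values computed on the test set in that run.
    Returns {feature: (mean, std)} of the per-run mean absolute SHAP value.
    """
    summary = {}
    for feature in runs[0]:
        per_run = [mean(abs(v) for v in run[feature]) for run in runs]
        summary[feature] = (mean(per_run), stdev(per_run))
    return summary

# Two toy runs with two test samples each (made-up numbers, not from Table 6)
runs = [
    {"Age": [2.0, -4.0], "Cement": [1.0, -1.0]},
    {"Age": [3.0, -5.0], "Cement": [1.0, -1.0]},
]
summary = shap_stability(runs)
for feature, (m, s) in summary.items():
    print(f"{feature}: mean |SHAP| = {m:.2f}, std = {s:.2f}")
```

A small standard deviation relative to the mean indicates that a feature's attribution changes little from one seed to the next.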

The results are presented in Table 6. The standard deviations for all feature attributions are consistently low relative to their mean values, particularly for the most influential features such as Age, Cement, and Fine Aggregate. This low variance indicates that the feature importance and attributions generated by the model are stable and not highly sensitive to model reruns. This robustness strengthens the reliability of the conclusions drawn from the SHAP analysis regarding the underlying mechanisms of PGC strength development.

Figure 12 compares the prediction results of the TLM and BLM. The plot illustrates a representative run in which the TLM achieved the median R2 score among the 10 iterations, while the aggregated statistical performance is presented in Table 5. The TLM’s predictions closely align with the true values, with most data points distributed near the ideal prediction line, indicating superior predictive performance. In contrast, the BLM shows larger deviations, particularly in low-value regions, where predictions significantly diverge from the ideal line. This analysis confirms that the TLM outperforms the BLM in both prediction accuracy and generalization capability.

In summary, the proposed dynamic weighted transfer learning framework achieves high-precision strength prediction for PGC, effectively addressing the challenges posed by limited sample sizes and cross-material domain knowledge transfer.

4.4. Results of the Verification Model (VFM)

Table 7 reports the performance of the VFM on the external validation datasets (S3). Under the same repeated random-split methodology, the BLM (XGBoost) achieved an RMSE of 11.47 MPa, an R2 of 0.86, and a MAPE of 13.80%. In stark contrast, the VFM demonstrated significantly improved and more stable performance, with a mean RMSE of 3.40 MPa, R2 of 0.97, and MAPE of 5.30%. This comparison highlights the performance degradation of traditional methods when faced with reduced feature dimensions and material system differences, whereas the TLM, through dynamic weight calculation and weighted loss optimization, achieved highly accurate strength predictions for PGC.

To provide a more granular view of the model’s performance on the external dataset, Table 8 details the per-sample prediction results for the S3 dataset. The table shows that the model maintains consistently small residuals across the entire range of strength values, further validating its high accuracy and generalization capability.

Figure 13 presents the feature importance ranking computed by the VFM model. Similar to the SDM model, Age has the highest importance score (0.35), followed by Cement (0.25) and Water (0.13), indicating that the transfer learning model successfully captured the general strength prediction rules of conventional concrete. Other features, such as Fine Aggregate (0.08), Coarse Aggregate (0.07), and Superplasticizer (0.06), had relatively lower importance scores, suggesting their limited contribution to model predictions. Features like Phosphogypsum (0.04), Blast Furnace Slag (0.03), and Fly Ash (0.02) exhibited even weaker influence. Importantly, all features had non-zero importance scores, confirming that the transfer learning model successfully identified their impact on strength.

Figure 14 illustrates the SHAP overview for feature contributions. The Age and Cement features show dispersed SHAP values, indicating their complex influence on model outputs (both positive and negative effects). In contrast, Coarse Aggregate, Water, and Fine Aggregate exhibit more concentrated SHAP values, suggesting a more consistent impact. Other features, such as Superplasticizer, Phosphogypsum, Blast Furnace Slag, and Fly Ash, had minimal influence on strength predictions.

Figure 15 compares the predicted and actual values for the VFM and BLM models. The VFM model’s predictions closely follow the ideal prediction line, whereas the baseline model shows significant deviations, particularly in high-strength regions. This further confirms the superior predictive performance of the transfer learning model.

The proposed transfer learning model demonstrates excellent predictive performance on external test sets, making it applicable to similar PGC strength prediction tasks.

4.5. Discussion

(1) Comparative analysis of transfer learning model adaptability across different algorithms

To evaluate how algorithmic adaptability affects cross-domain generalization in transfer learning, this study implements CNN-, TabNet-, and XGBoost-based models for systematic comparison with LightGBM. The experimental results are shown in Figure 16, with detailed data in Table 9. The experiments revealed significant performance differences among algorithms in cross-domain transfer scenarios. The CNN model achieved an RMSE of 6.71 MPa, R2 of 0.61, and MAPE of 18.68% on the test datasets, with its prediction accuracy notably lower than that of tree-based ensemble models. This is primarily because CNN’s convolutional inductive bias is more suited for localized correlation data (e.g., images), whereas the global nonlinear interactions among concrete mix features are difficult to capture effectively via convolutional kernels, especially when sample sizes are limited, leading to suboptimal parameter optimization.

The TabNet model triggered early stopping at the 88th iteration, with severely degraded test performance (RMSE = 9.13 MPa, R2 = 0.25). This indicates that its attention-based sequential modeling strategy overfits under extremely small sample conditions, suggesting the model merely memorized noise in the training data rather than learning the underlying physical laws. In contrast, the XGBoost model achieved strong results (RMSE = 3.72 MPa, R2 = 0.92), approaching the performance of the LightGBM model (R2 = 0.95). This supports the advantage of gradient-boosted tree algorithms in material data modeling. The performance gap between the two mainly stems from LightGBM’s histogram acceleration and the dynamic weight allocation strategy. The histogram algorithm reduces sensitivity to small-sample noise through feature discretization, while the error-feedback weighting strategy allows the model to focus on high-residual samples, making it more adaptable to the local nonlinear characteristics of PGC than XGBoost’s global loss optimization. These results demonstrate that tree-based ensemble algorithms exhibit stronger adaptability in cross-domain transfer tasks, whereas neural network models require sufficient sample sizes to overcome the curse of dimensionality.

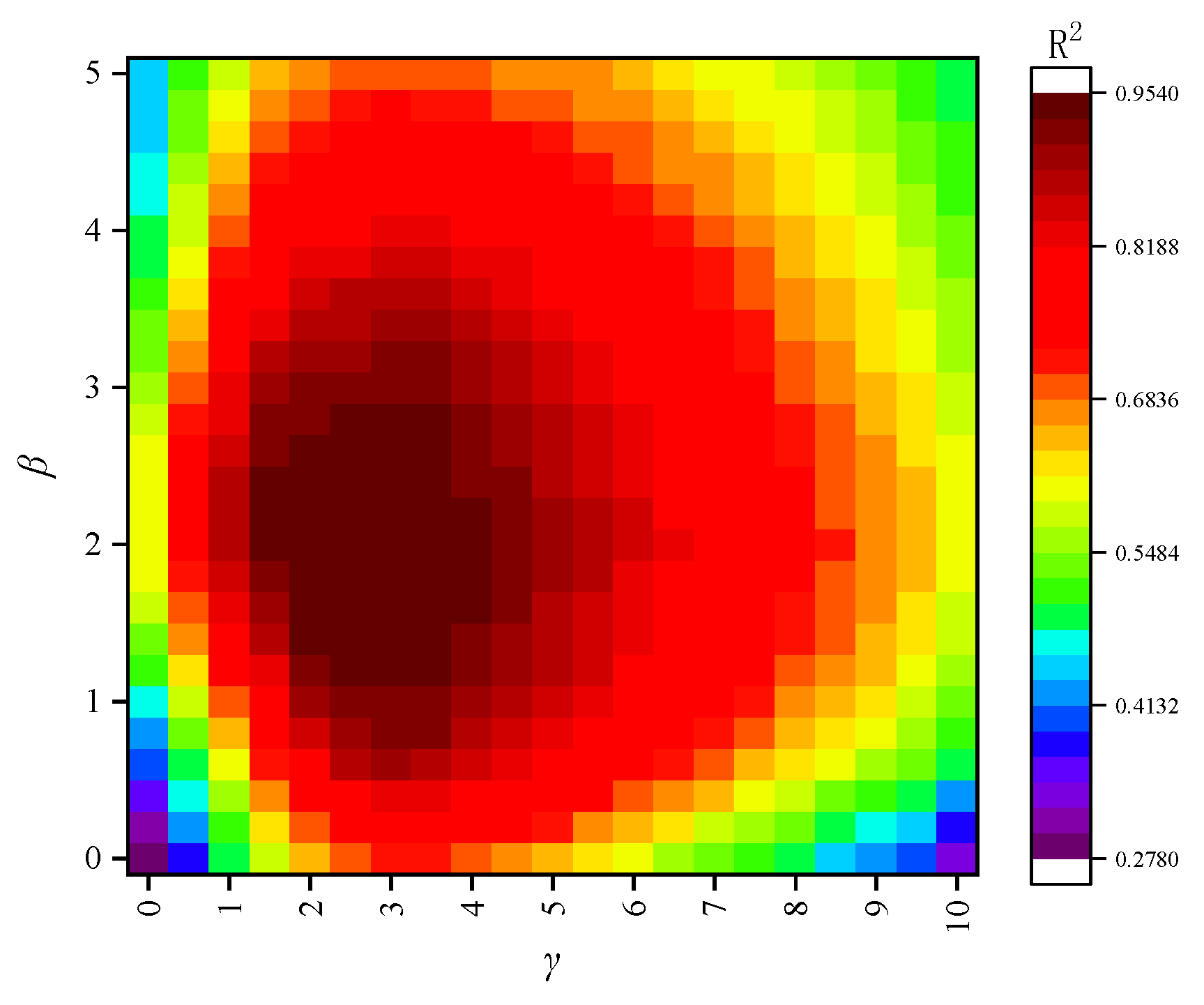

(2) Hyperparameter sensitivity analysis for dynamic weights

The hyperparameters β and γ in the dynamic weighting formula (Equation (6)) are crucial as they control the baseline importance and the dynamic adjustment range for the target domain samples. To justify the selection of β = 2 and γ = 3, a grid search-based sensitivity analysis was conducted. We systematically varied β within the range of [0, 5] and γ within the range of [0, 10], training the transfer learning model for each combination and evaluating its performance on the validation set. The results are visualized as a heatmap of the R2 score in Figure 17.

The heatmap reveals that the model’s performance is highly dependent on the choice of these parameters, with a clear optimal region in which R2 is maximized. The parameter β sets the minimum weight for target domain samples relative to source domain samples. Performance is poor when β is close to 0, because a very low base weight can make the model unstable and fail to give sufficient priority to the target domain. As β increases, performance improves, but excessively high values (e.g., >4) lead to diminishing returns, as the model starts to ignore the nuanced error feedback provided by γ. The parameter γ scales the importance of high-error samples. With γ = 0, i.e., no dynamic adjustment, performance is suboptimal. As γ increases, the model focuses more on difficult samples and performance improves. However, if γ is too large, the model becomes susceptible to overfitting to potential outliers in the small training set, causing a slight degradation in generalization performance.

The combination of β = 2 and γ = 3 is situated squarely within the high-performance plateau of the metric surface. This choice ensures that all target domain samples receive at least double the weight of source domain samples, while also providing a significant but stable dynamic range for error-based adjustments. This balanced approach was therefore adopted for the final model.
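The roles of β and γ can be illustrated with the sketch below. Equation (6) is not reproduced in this section, so the functional form used here is an assumption chosen only to match the verbal description: source samples receive unit weight, and each target sample receives β plus γ times its absolute error normalized by the largest target error. The function name and inputs are hypothetical.

```python
def dynamic_weights(abs_errors, is_target, beta=2.0, gamma=3.0):
    """Hypothetical weighting rule (an assumed form, not Equation (6) itself).

    Source samples get weight 1. Target samples get beta + gamma * e_norm,
    where e_norm in [0, 1] is the sample's absolute error divided by the
    largest absolute error among target samples.
    """
    target_errors = [e for e, t in zip(abs_errors, is_target) if t]
    max_err = max(target_errors, default=0.0)
    weights = []
    for e, t in zip(abs_errors, is_target):
        if not t:
            weights.append(1.0)                     # source-domain sample
        else:
            e_norm = e / max_err if max_err > 0 else 0.0
            weights.append(beta + gamma * e_norm)   # target-domain sample
    return weights

# Two source samples followed by three target samples (made-up errors in MPa)
errors = [1.0, 2.0, 0.0, 2.0, 4.0]
is_target = [False, False, True, True, True]
w = dynamic_weights(errors, is_target)
print(w)  # target weights lie between beta and beta + gamma
```

Under this assumed form, β = 2 and γ = 3 give target weights between 2 and 5, so every target sample counts at least twice as much as a source sample. Weights of this kind are typically passed to a gradient-boosting learner as per-sample weights.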

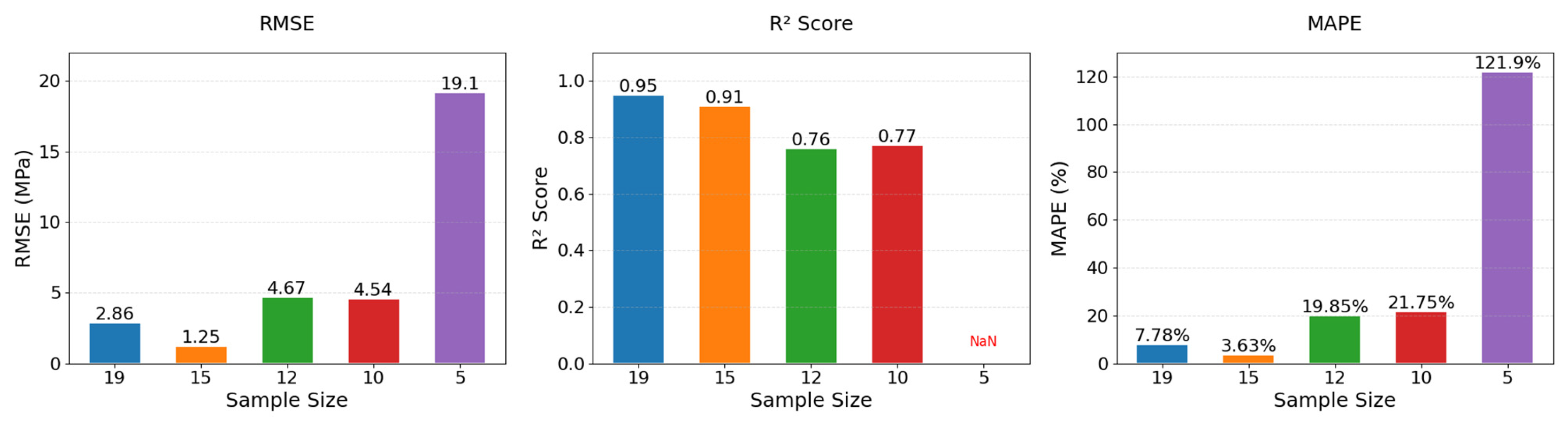

(3) Applicability boundary analysis for small-sample target domains

Through sensitivity analyses involving incremental reductions in the number of training samples in the target domain, this study quantitatively establishes the minimum viable sample threshold for transfer learning models, empirically mapping the performance degradation frontier in small-sample regimes. Sample sizes were set to N = 15, 12, 10, and 5, with results compared in Figure 18 and metrics listed in Table 10.

When the sample size was reduced to 15, the model maintained high predictive performance (RMSE = 1.25 MPa, R2 = 0.91, MAPE = 3.63%), with R2 only 4.2% lower than with the full sample datasets (N = 19), indicating reliable prediction capability for sample sizes ≥15. However, when the sample size dropped to 12, performance deteriorated sharply (RMSE = 4.67 MPa, R2 = 0.76, MAPE = 19.85%), with R2 decreasing by 20.0%, suggesting the model began losing a stable mapping between material components and strength. Further reduction to 10 samples resulted in R2 = 0.77 but worsened MAPE to 21.75%, indicating extreme prediction biases for some samples. At N = 5, the model completely failed (R2 = NaN, MAPE = 121.92%), with predictions showing only random correlation with the true values. These analyses demonstrate that 15 samples represent the minimum critical threshold for maintaining the stability of the transfer learning model in PGC strength prediction.
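An R2 of NaN deserves a brief note. By definition, R2 = 1 - SS_res / SS_tot, which is undefined when the total sum of squares of the evaluated targets is zero, for example when the evaluation split contains a single sample or identical strengths. Whether this is what occurred at N = 5 is an assumption; the sketch below only shows how such a value can arise.

```python
import math

def r2_or_nan(y_true, y_pred):
    """R2 = 1 - SS_res / SS_tot; returns NaN when SS_tot is zero (undefined)."""
    mean_t = sum(y_true) / len(y_true)
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    if ss_tot == 0:
        return float("nan")   # no variance in the targets, so R2 is undefined
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    return 1.0 - ss_res / ss_tot

# A single evaluation sample has zero variance, so R2 cannot be computed
print(r2_or_nan([42.0], [40.0]))
# With several distinct targets, R2 is well defined
print(r2_or_nan([30.0, 40.0, 50.0], [30.0, 40.0, 50.0]))
```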

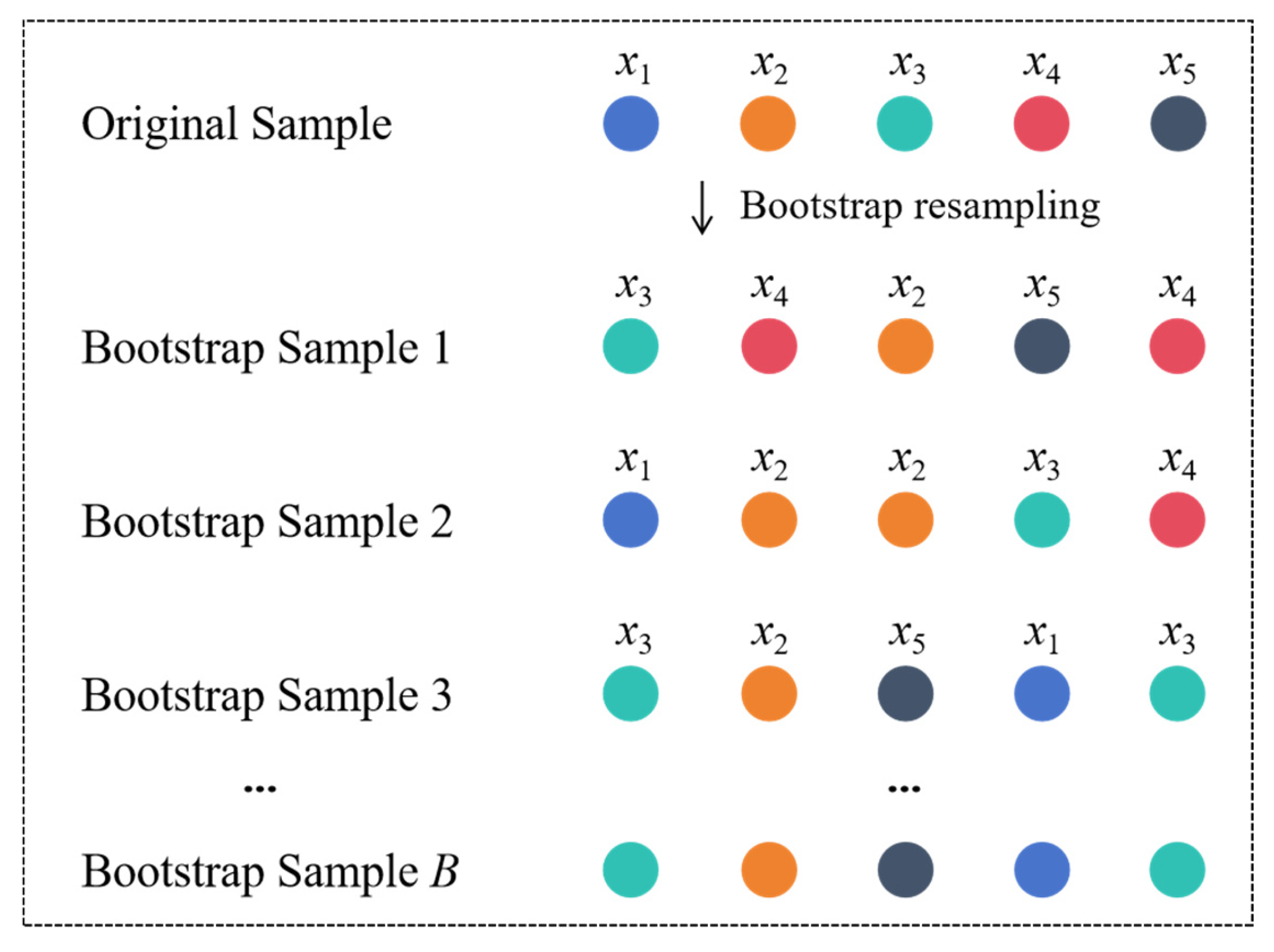

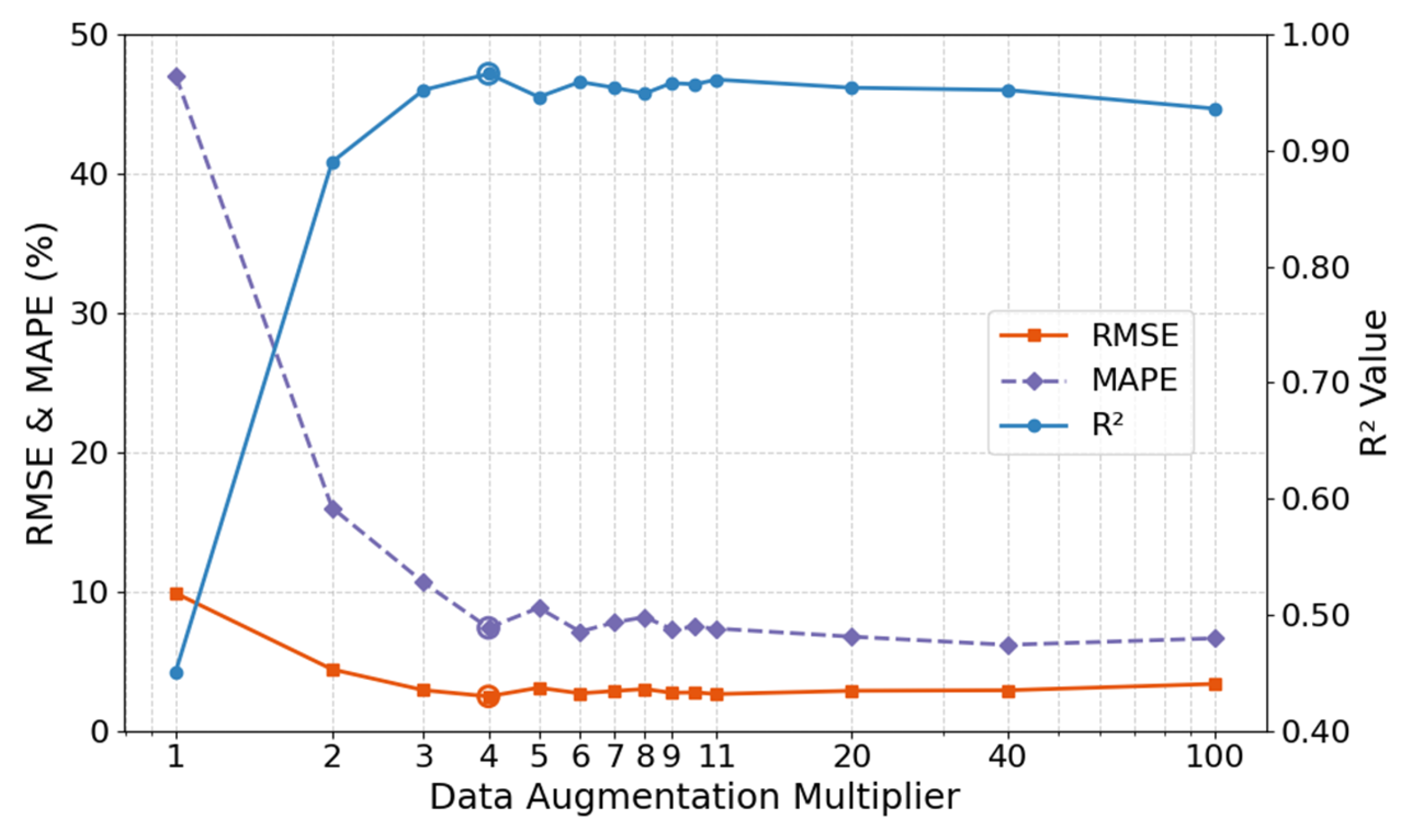

(4) Analysis of data augmentation multiplier

The data augmentation multiplier governs the intensity of target-domain knowledge supplementation in transfer learning and represents a critical trade-off between generation efficiency and model generalization capability. This study systematically tested prediction performance across augmentation multipliers ranging from 1× to 100×, quantifying the nonlinear relationship between data expansion scale and model accuracy. The results in Figure 19 showed a non-monotonic relationship between augmentation multiplier and model performance, divisible into two phases:

(a) Rapid optimization phase (1~4×): RMSE plummeted from 9.87 MPa to 2.47 MPa, R2 surged from 0.45 to 0.95, and MAPE shrank from 47.03% to 7.78%. Bootstrap resampling effectively mitigated underfitting caused by insufficient original samples, with augmented samples significantly enhancing predictive capability.

(b) Stabilization phase (5~100×): Performance plateaued, with RMSE fluctuations < 0.8 MPa and R2 maintained at 0.94~0.96, though marginally lower than the 4× peak. Thus, a 4× augmentation multiplier is recommended to ensure effective small-sample expansion while avoiding overfitting risks.
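Bootstrap augmentation with a given multiplier can be sketched as follows. The interpretation of the multiplier is an assumption: a k× setting is taken to mean a training set k times the original size, consisting of the original rows plus rows drawn from them with replacement. The function name is illustrative.

```python
import random

def bootstrap_augment(samples, multiplier, seed=0):
    """Return len(samples) * multiplier rows: the originals plus rows drawn
    with replacement from them (assumed meaning of a 'k x' multiplier)."""
    if multiplier < 1:
        raise ValueError("multiplier must be >= 1")
    rng = random.Random(seed)            # fixed seed for reproducibility
    n_extra = len(samples) * (multiplier - 1)
    extra = rng.choices(samples, k=n_extra)
    return list(samples) + extra

# 19 target-domain samples (represented by their indices) expanded 4x
data = list(range(19))
augmented = bootstrap_augment(data, 4)
print(len(augmented))
```

Resampled rows duplicate existing observations, so increasing the multiplier raises the influence of the target domain without adding new information, which is consistent with the plateau observed beyond 4×.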

(5) Analysis of physical plausibility

To validate the model’s adherence to fundamental material science principles, a sensitivity analysis was conducted to examine the relationship between cement content and predicted compressive strength. In this analysis, the water-cement ratio was held constant at 0.45. All other parameters were held constant, with values set as follows: Superplasticizer = 12.0 kg/m3, Fine Aggregate = 810.0 kg/m3, PGA = 510.0 kg/m3, Retarder = 1.2 kg/m3, Phosphogypsum = 299.8 kg/m3, and Age = 28 days. The cement content was then incrementally increased, and the corresponding strength predictions from the trained TLM model were recorded.

The results, presented in Table 11, demonstrate a clear positive trend. As the cement content increases from 300 kg/m3 to 550 kg/m3, the predicted compressive strength generally rises from 48.7 MPa to 53.9 MPa. This outcome aligns with the established physical law that, within a certain range, higher cement content leads to greater strength. Notably, the model exhibited a slight non-monotonic dip, a behavior reflecting its data-driven nature: unlike models with monotonic constraints, it learns complex non-linear interactions directly from the data. The analysis therefore indicates that the model captured the overall increasing relationship without being explicitly constrained, and that its predictions remain physically plausible. The ability of the TLM to learn such physical trends, even from limited target domain data, underscores the effectiveness of the knowledge transfer from the large, diverse source domain, enhancing its reliability for practical engineering applications.
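A one-factor sweep of this kind can be checked programmatically, as in the sketch below. The fixed mix values are those listed above; the column names, helper functions, and predicted strengths are hypothetical placeholders, not values from Table 11. In practice the predictions would come from the trained TLM.

```python
def sweep_rows(cement_values, wc_ratio=0.45):
    """Build one input row per cement content with the water-cement ratio fixed."""
    fixed = {"Superplasticizer": 12.0, "Fine Aggregate": 810.0, "PGA": 510.0,
             "Retarder": 1.2, "Phosphogypsum": 299.8, "Age": 28}
    return [{**fixed, "Cement": c, "Water": wc_ratio * c} for c in cement_values]

def monotonicity_report(xs, ys):
    """Net change over the sweep and every step where the prediction drops."""
    dips = [(xs[i], xs[i + 1], ys[i + 1] - ys[i])
            for i in range(len(ys) - 1) if ys[i + 1] < ys[i]]
    return {"net_change": ys[-1] - ys[0], "dips": dips}

rows = sweep_rows([300, 400, 500])          # cement content in kg/m3
toy_predictions = [40.0, 39.0, 45.0]        # placeholders, not from Table 11
report = monotonicity_report([r["Cement"] for r in rows], toy_predictions)
print(report)
```

A positive net change with a short list of small dips corresponds to the behavior described above: an overall increasing trend with minor local non-monotonicity.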