Exploration of Solid Solutions and the Strengthening of Aluminum Substrates by Alloying Atoms: Machine Learning Accelerated Density Functional Theory Calculations

Abstract

1. Introduction

2. Computational Details

2.1. Crystal Structure and Calculation Method

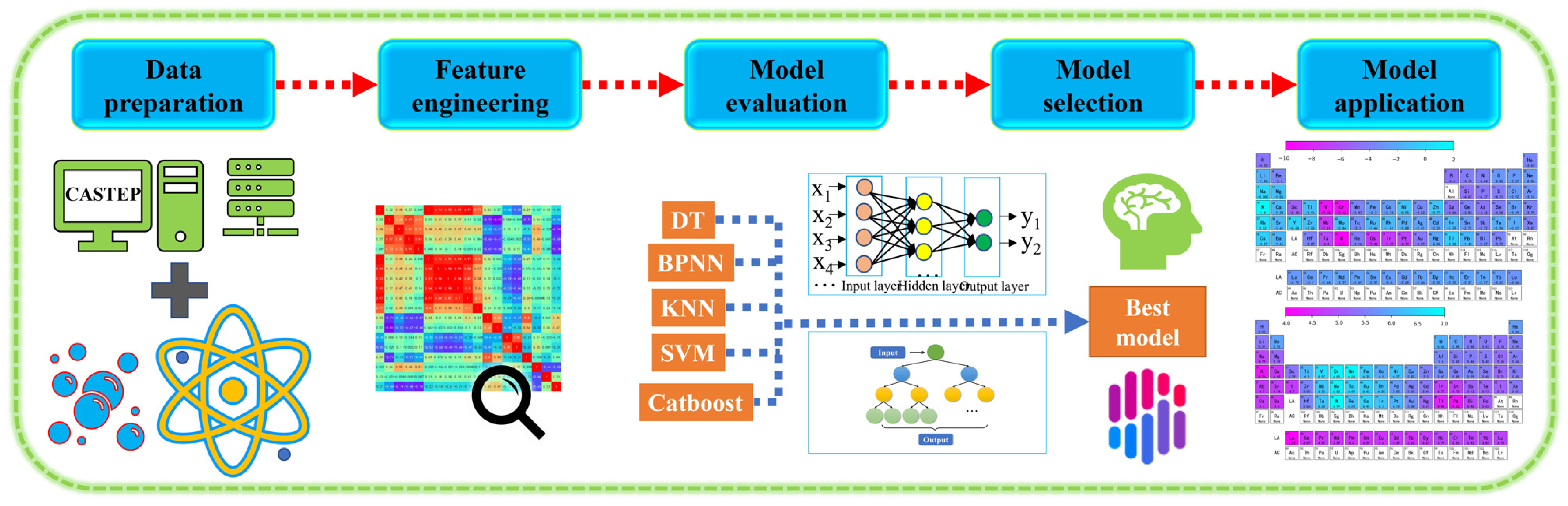

2.2. Machine Learning Databases and Models

3. Results and Discussion

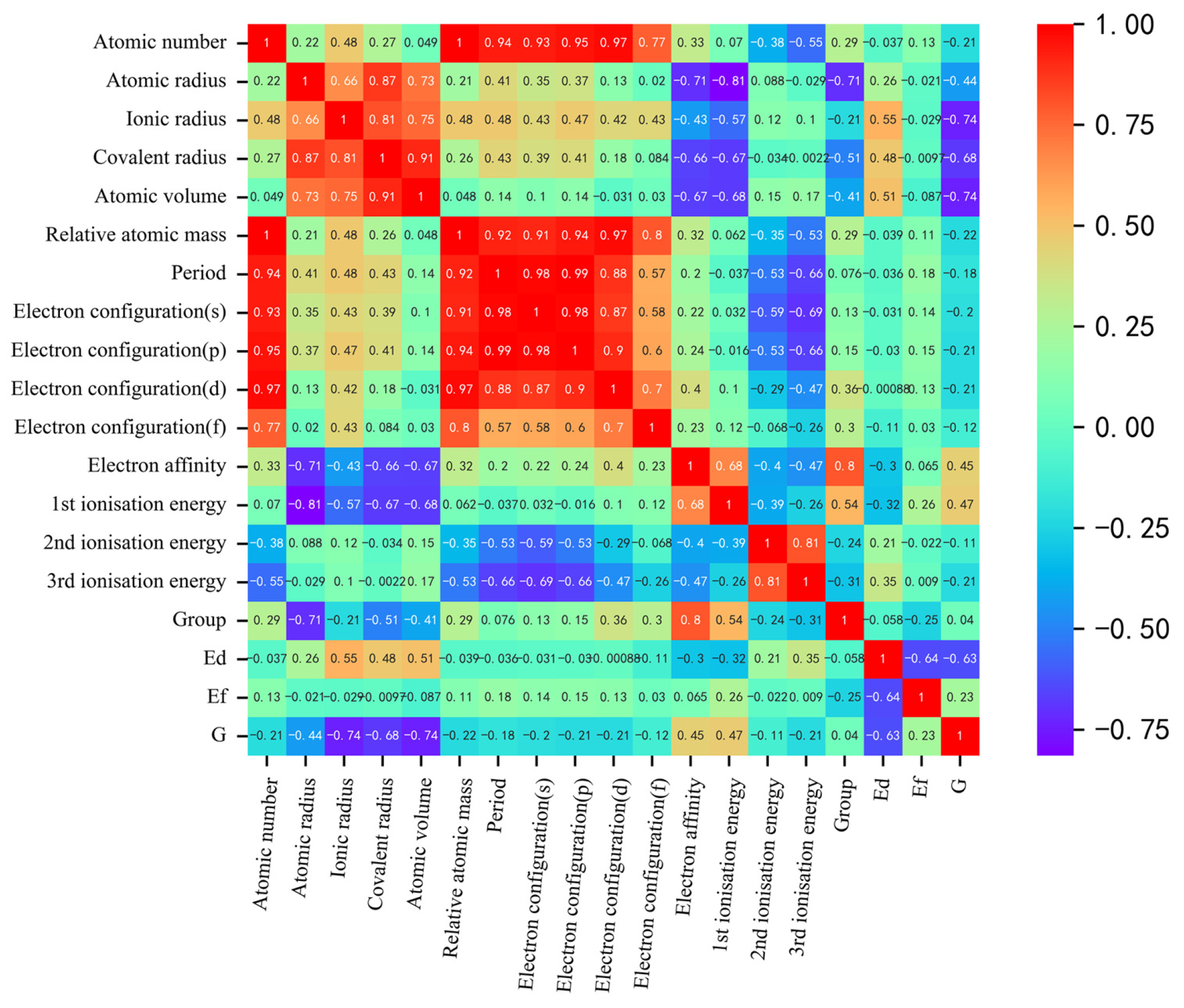

3.1. Database Establishment and Selection of Feature Values

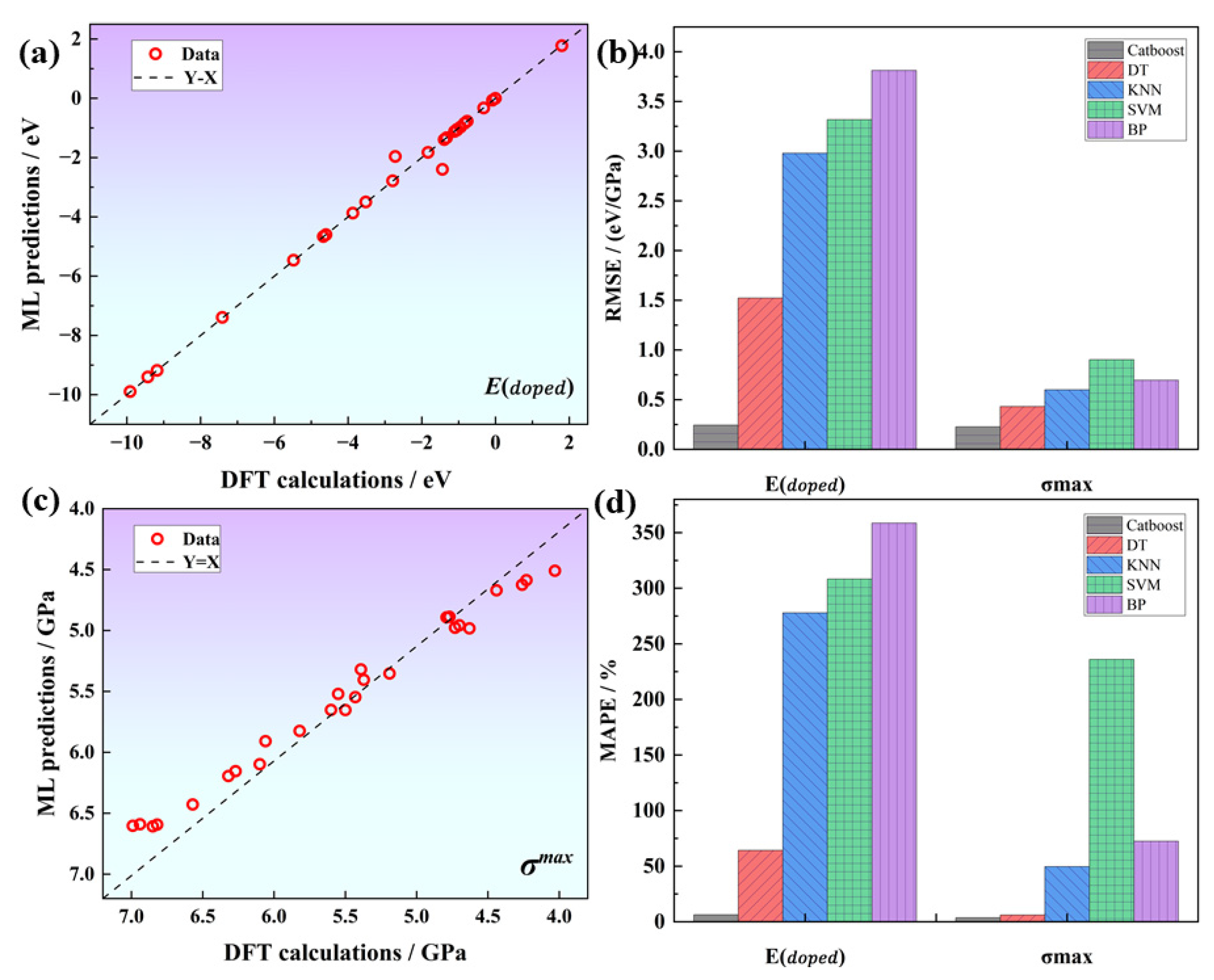

3.2. Machine Learning Model Building and Optimization

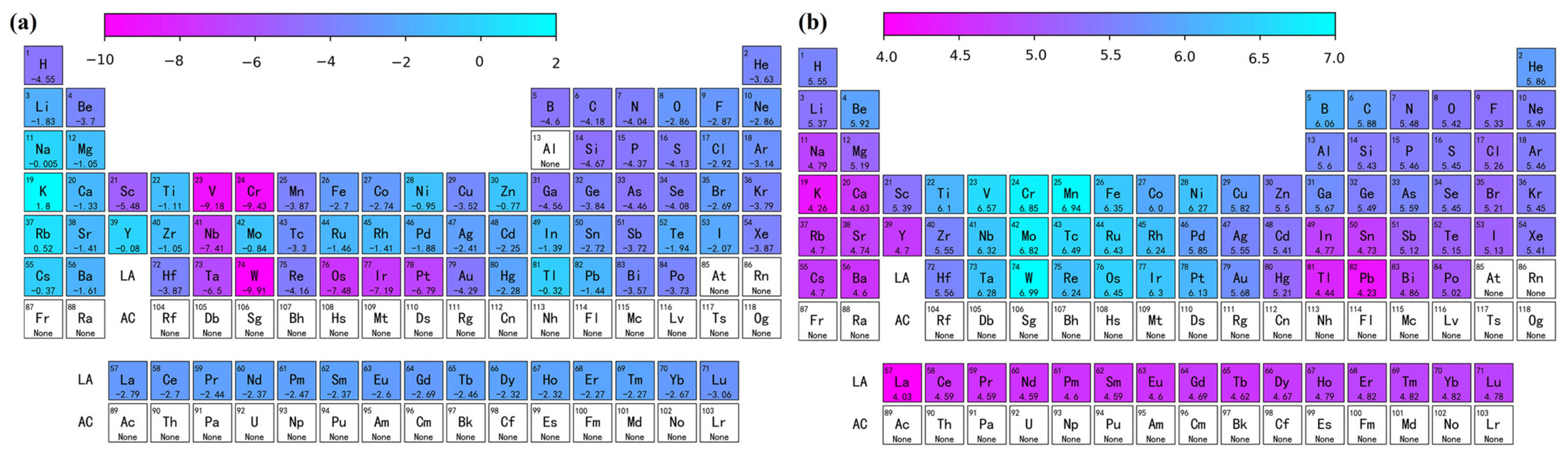

3.3. Interpretable Machine Learning and Result Prediction

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Biswas, A.; Siegel, D.J.; Wolverton, C.; Seidman, D.N. Precipitates in Al-Cu alloys revisited: Atom-probe tomographic experiments and first-principles calculations of compositional evolution and interfacial segregation. Acta Mater. 2011, 59, 6187–6204.

- Mortsell, E.A.; Marioara, C.D.; Andersen, S.J.; Ringdalen, I.G.; Friis, J.; Wenner, S.; Royset, J.; Reiso, O.; Holmestad, R. The effects and behaviour of Li and Cu alloying agents in lean Al-Mg-Si alloys. J. Alloys Compd. 2017, 699, 235–242.

- Huang, J.T.; Li, M.W.; Liu, Y.; Chen, J.Y.; Lai, Z.H.; Hu, J.; Zhou, F.; Zhu, J.C. A first-principles study on the doping stability and micromechanical properties of alloying atoms in aluminum matrix. Vacuum 2023, 207, 111596.

- Fang, X.Y.; Ren, J.K.; Chen, D.B.; Cao, C.; He, Y.M.; Liu, J.B. Effect of Alloying Elements and Processing Parameters on Microstructure and Properties of 1XXX Aluminium Alloys. Rare Met. Mater. Eng. 2022, 51, 1565–1571.

- Liang, W.J.; Rometsch, P.A.; Cao, L.F.; Birbilis, N. General aspects related to the corrosion of 6xxx series aluminium alloys: Exploring the influence of Mg/Si ratio and Cu. Corros. Sci. 2013, 76, 119–128.

- Xiao, W.; Wang, J.W.; Sun, L. Theoretical investigation of the strengthening mechanism and precipitation evolution in high strength Al-Zn-Mg alloys. Phys. Chem. Chem. Phys. 2018, 20, 13616.

- Goswami, R.; Spanos, G.; Pao, P.S.; Holtz, R.L. Precipitation behavior of the β phase in Al-5083. Mater. Sci. Eng. A 2010, 527, 1089–1095.

- Dorward, R.C.; Beerntsen, D.J. Grain structure and quench-rate effects on strength and toughness of AA7050 Al-Zn-Mg-Cu-Zr alloy plate. Metall. Mater. Trans. A 1995, 26, 2481–2484.

- Keramidas, P.; Haag, R.; Grosdidier, T.; Tsakiropoulos, P.; Wagner, F. Influence of Zr addition on the microstructure and properties of PM Al-8Fe-4Ni alloy. Mater. Sci. Forum 1996, 217, 629–634.

- Wang, E.R.; Hui, X.D.; Wang, S.S.; Zhao, Y.F.; Chen, G.L. Improved mechanical properties in cast Al-Si alloys by combined alloying of Fe and Cu. Mater. Sci. Eng. A 2010, 527, 7878–7884.

- Yang, M.; Lim, M.K.; Qu, Y.C.; Ni, D.; Xiao, Z. Supply chain risk management with machine learning technology: A literature review and future research directions. Comput. Ind. Eng. 2023, 175, 108859.

- Wang, X.Z.; Liu, A.; Kara, S. Machine learning for engineering design toward smart customization: A systematic review. J. Manuf. Syst. 2022, 65, 391–405.

- Takahashi, K.; Tanaka, Y. Material synthesis and design from first principle calculations and machine learning. Comput. Mater. Sci. 2016, 112, 364–367.

- Schütt, K.T.; Glawe, H.; Brockherde, F.; Sanna, A.; Müller, K.R.; Gross, E.K.U. How to represent crystal structures for machine learning: Towards fast prediction of electronic properties. Phys. Rev. B 2014, 89, 205118.

- Siegel, D.J.; Hector, L.G.; Adams, J.B. Adhesion, atomic structure, and bonding at the Al(111)/α-Al2O3(0001) interface: A first principles study. Phys. Rev. B 2002, 65, 085415.

- Cao, M.; Luo, Y.; Xie, Y.; Tan, Z.; Fan, G.; Guo, Q.; Su, Y.; Li, Z.; Xiong, D.B. The influence of interface structure on the electrical conductivity of graphene embedded in aluminum matrix. Adv. Funct. Mater. 2019, 6, 1900468.

- Pei, X.; Yuan, M.N.; Han, F.Z.; Wei, Z.Y.; Ma, J.; Wang, H.L.; Shen, X.Q.; Zhou, X.S. Investigation on tensile properties and failure mechanism of Al(111)/Al3Ti(112) interface using the first-principles method. Vacuum 2022, 196, 110784.

- Peng, M.J.; Wang, R.F.; Wu, Y.J.; Yang, A.C.; Duan, Y.H. Elastic anisotropies, thermal conductivities and tensile properties of MAX phases Zr2AlC and Zr2AlN: A first-principles calculation. Vacuum 2022, 196, 110715.

- Li, Y.; Zhang, X.Z.; Zhang, S.Y.; Song, X.Q.; Wang, Y.X.; Chen, Z. First principles study of stability, electronic structure and fracture toughness of Ti3SiC2/TiC interface. Vacuum 2022, 196, 110745.

- Wang, D.Z.; Xiao, Z.B. Revealing the Al/L12-Al3Zr interfacial properties: Insights from first-principles calculations. Vacuum 2022, 195, 110620.

- Perdew, J.P.; Burke, K.; Ernzerhof, M. Generalized gradient approximation made simple. Phys. Rev. Lett. 1996, 77, 3865–3868.

- Jones, R.O.; Gunnarsson, O. The density functional formalism, its applications and prospects. Rev. Mod. Phys. 1989, 61, 689–746.

- Perdew, J.P.; Wang, Y. Accurate and simple analytic representation of the electron-gas correlation energy. Phys. Rev. B 1992, 45, 13244–13249.

- Segall, M.D.; Lindan, P.J.D.; Probert, M.J.; Pickard, C.J.; Hasnip, P.J.; Clark, S.J.; Payne, M.C. First-principles simulation: Ideas, illustrations and the CASTEP code. J. Phys. Condens. Matter 2002, 14, 2717–2744.

- Xu, L.L.; Zheng, H.F.; Xu, B.; Liu, G.Y.; Zhang, S.L.; Zheng, H.B. Suppressing Nonradiative Recombination by Electron-Donating Substituents in 2D Conjugated Triphenylamine Polymers toward Efficient Perovskite Optoelectronics. Nano Lett. 2023, 23, 1954–1960.

- Huang, J.T.; Xue, J.T.; Li, M.W.; Chen, J.Y.; Cheng, Y.; Lai, Z.H.; Hu, J.; Zhou, F.; Qu, N.; Liu, Y.; et al. Adsorption and modification behavior of single atoms on the surface of single vacancy graphene: Machine learning accelerated first principle computations. Appl. Surf. Sci. 2023, 635, 157757.

- Mu, Y.S.; Liu, X.D.; Wang, L.D. A Pearson’s correlation coefficient based decision tree and its parallel implementation. Inf. Sci. 2018, 435, 40–58.

- Tang, M.C.; Zhang, D.Z.; Wang, D.Y.; Deng, J.; Kong, D.T.; Zhang, H. Performance prediction of 2D vertically stacked MoS2-WS2 heterostructures base on first-principles theory and Pearson correlation coefficient. Appl. Surf. Sci. 2022, 596, 153498.

- Chen, W.C.; Schmidt, J.N.; Yan, D.; Vohra, Y.K.; Chen, C.C. Machine learning and evolutionary prediction of superhard B-C-N compounds. Npj Comput. Mater. 2021, 7, 114.

- Qiao, L.; Liu, Y.; Zhu, J.C. Application of generalized regression neural network optimized by fruit fly optimization algorithm for fracture toughness in a pearlitic steel. Eng. Fract. Mech. 2020, 235, 107105.

- Sutojo, T.; Rustad, S.; Akrom, M.; Syukur, A.; Shidik, G.F.; Dipojono, H.K. A machine learning approach for corrosion small datasets. Npj Mater. Degrad. 2023, 7, 18.

- Lundberg, S.M.; Nair, B.; Vavilala, M.S.; Horibe, M.; Eisses, M.J.; Adams, T.; Liston, D.E.; Low, K.W.; Newman, S.F.; Kim, J. Explainable machine-learning predictions for the prevention of hypoxaemia during surgery. Nat. Biomed. Eng. 2018, 2, 749–760.

| N | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| E (eV) | 0.28 | 0.19 | 0.18 | 0.16 | 0.16 | 0.15 | 0.15 | 0.15 | 0.14 | 0.16 | 0.16 | 0.17 | 0.15 | 0.15 | 0.15 | 0.16 |
| G (GPa) | 9.61 | 8.30 | 6.80 | 5.78 | 6.20 | 5.31 | 5.30 | 5.82 | 5.76 | 5.75 | 5.62 | 6.19 | 5.74 | 5.86 | 5.91 | 5.73 |

| Metric | CatBoost (E) | DT (E) | BPNN (E) | KNN (E) | SVM (E) | CatBoost (G) | DT (G) | BPNN (G) | KNN (G) | SVM (G) |
|---|---|---|---|---|---|---|---|---|---|---|
| MSE | 0.06 | 2.32 | 14.53 | 8.87 | 11.00 | 0.05 | 0.18 | 0.48 | 0.36 | 0.81 |
| RMSE | 0.24 | 1.52 | 3.81 | 2.98 | 3.31 | 0.22 | 0.43 | 0.69 | 0.59 | 0.90 |
| MAPE (%) | 6.34 | 64.2 | 358.6 | 277.93 | 308.39 | 3.63 | 6.11 | 72.38 | 49.61 | 236.03 |
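The table compares five models on the two targets E and G using MSE, RMSE and MAPE. As a minimal sketch, assuming the standard definitions (the authors' own evaluation code is not given here), the metrics are MSE = (1/n)Σ(yᵢ − ŷᵢ)², RMSE = √MSE and MAPE = (100/n)Σ|(yᵢ − ŷᵢ)/yᵢ|. The snippet below implements them in plain Python and checks that each reported RMSE agrees with the square root of the reported MSE once two-decimal rounding is allowed for. The helper names, the rounding tolerance `tol` and the toy arrays are hypothetical illustrations, not part of the paper.

```python
import math
from typing import Dict, Sequence, Tuple


def _check_lengths(y_true: Sequence[float], y_pred: Sequence[float]) -> int:
    """Return n after checking that both sequences are non-empty and equal in length."""
    if len(y_true) == 0 or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    return len(y_true)


def mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean squared error: average of the squared residuals."""
    n = _check_lengths(y_true, y_pred)
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean squared error: sqrt(MSE), expressed in the units of the target."""
    return math.sqrt(mse(y_true, y_pred))


def mape(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute percentage error in percent; undefined if any true value is 0."""
    n = _check_lengths(y_true, y_pred)
    if any(t == 0 for t in y_true):
        raise ValueError("MAPE is undefined when a true value is zero")
    return 100.0 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / n


# Reported (MSE, RMSE) pairs copied from the table, keyed by (target, model).
REPORTED: Dict[Tuple[str, str], Tuple[float, float]] = {
    ("E", "CatBoost"): (0.06, 0.24),
    ("E", "DT"): (2.32, 1.52),
    ("E", "BPNN"): (14.53, 3.81),
    ("E", "KNN"): (8.87, 2.98),
    ("E", "SVM"): (11.00, 3.31),
    ("G", "CatBoost"): (0.05, 0.22),
    ("G", "DT"): (0.18, 0.43),
    ("G", "BPNN"): (0.48, 0.69),
    ("G", "KNN"): (0.36, 0.59),
    ("G", "SVM"): (0.81, 0.90),
}


def rmse_consistent(mse_value: float, rmse_value: float, tol: float = 0.015) -> bool:
    """True if a reported RMSE matches sqrt(reported MSE) within a rounding tolerance.

    Both columns are rounded to two decimals independently, so an exact match
    is not expected; tol is an assumed allowance for that rounding.
    """
    return abs(math.sqrt(mse_value) - rmse_value) <= tol


if __name__ == "__main__":
    # Hypothetical toy data, not taken from the paper.
    y_true = [1.0, 2.0, 4.0]
    y_pred = [1.1, 1.8, 4.4]
    print(f"MSE  = {mse(y_true, y_pred):.4f}")
    print(f"RMSE = {rmse(y_true, y_pred):.4f}")
    print(f"MAPE = {mape(y_true, y_pred):.2f} %")
    for (target, model), (m, r) in REPORTED.items():
        print(target, model, "consistent" if rmse_consistent(m, r) else "mismatch")
```

For the toy arrays this prints MSE = 0.0700, RMSE = 0.2646 and MAPE = 10.00 %, and every table entry passes the consistency check. Because MAPE divides by the true value, it grows sharply when targets are close to zero, which is one reason it can diverge so strongly from RMSE across models.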

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Huang, J.; Xue, J.; Li, M.; Cheng, Y.; Lai, Z.; Hu, J.; Zhou, F.; Qu, N.; Liu, Y.; Zhu, J. Exploration of Solid Solutions and the Strengthening of Aluminum Substrates by Alloying Atoms: Machine Learning Accelerated Density Functional Theory Calculations. Materials 2023, 16, 6757. https://doi.org/10.3390/ma16206757
