Abstract

Modeling of a cylindrical heavy media separator has been conducted in order to predict its optimum operating parameters. To the authors' knowledge, this is the first such application in the literature. The aim of the present research is to predict the separation efficiency based on the adjustment of the device's dimensions and media flow rates. A variety of heavy media separators exist that are extensively used to separate particles by density, and their application is of growing importance in the recycling sector. The cylindrical variety is reported to be the most suited for processing a large range of particle sizes, but the optimization of its operating parameters remains to be documented. The multivariate adaptive regression splines methodology has been applied in order to predict the separation efficiencies using, as inputs, the device dimension and media flow rate variables. The results obtained show that it is possible to predict the device separation efficiency from the laboratory experiments performed and, therefore, to forecast the results obtainable with different operating conditions.

1. Introduction

Density separation is generally considered to be the most cost-effective industrial material separation process. It is simple compared with other techniques and easily automated. A large variety of density separation processes and devices exist, and experience in the mineral processing sector has shown that the type of density separation method or device used must be selected according to the proximity in density of the particles to be separated and their size [1].

Density media separation (DMS) is generally considered to be the most precise type of density separation technology [2]. It is extensively used to separate materials of different densities where those of a density lower than that of the separation media float, and those greater sink, in the media. It is used for the recovery of a number of types of minerals, non-ferrous metals and plastics. In the plastic recycling sector, it is a standard method for preparing many plastic wastes for subsequent separation methods or to produce a final marketable product. It is possible to process large volumes of materials with a much wider range of particle sizes than other density separation devices. However, the efficiency obtained is susceptible to: yield stress and viscosity effects due to ultrafine suspended media particles; high solids content of the separation media; and the abundance of particles with densities very close to that of the separation media.

DMS cyclones are well established as the most precise type of density separation devices and as having very high throughputs. DMS cyclones traditionally have a cono-cylindrical form and use centrifugal forces developed in the cyclones to accelerate the float/sink separation of particles by density. Separation with this type of cyclone is accomplished by tangentially feeding the materials to separate along with the media into the cyclone, developing centrifugal forces which facilitate the rapid settling of dense particles and the floating of light particles. This is especially important for separating fine particles or those with densities approaching that of the separation media. DMS cyclones have become well established for the separation of particles from 0.5 to 50 mm. Pure density separation products are not usually obtained with this type of separator as the separation media carrying the particles drag or short-circuit some of the low-density particles to the high-density (sink) product being obtained. The degree of this short-circuiting tends to be a function of the volume of media reporting with the sink product.

There is a significant potential for increasing the volumes and range of particle sizes of waste plastics separated based on the centrifugal forces developed within DMS cyclones, as outlined by [3]. These cyclones are the most versatile of the density separation devices, but also the most complicated because of the number of operational parameters involved. The parameters that affect the separation quality obtained include: separation media viscosity; media particle size distribution; media flow rate and separation media pressure; cyclone diameter, length, feed and exit port sizes; and the particle size distribution of the materials to process, as well as the proportion of these particles having densities close to that of the separation media.

The large coal density media separator (LARCODEMS), originally developed by British Coal, is a cylindrical version of a DMS cyclone. It is being used effectively for processing 0.5–120 mm particles. This device is quite advantageous compared to the cono-cylindrical type of DMS in that it is suitable for processing a wide range of particle sizes simultaneously and that the material to be processed can be fed dry into the vortex of the cyclone. This removes the need for the material to pass through the separation media pumping circuit. In the case of this cylindrical type cyclone, when particles are fed into the vortex of the cyclone, the high-density particles must sink through the separation media to exit as a product without the possibility of short-circuiting occurring. Consequently, this product fraction contains few, or no, low-density particles. However, it can also be operated with the particles fed with the separation media, as is done with the cono-cylindrical type. The separation media flow paths in the cono-cylindrical and cylindrical type cyclones are quite distinct and result in distinct types of concentrations. To date, the only publication known to the authors that treats the operating parameters of the cylindrical type of DMS cyclone is that of Venkoba Rao et al. [4]. Unfortunately, no conclusions can be derived from this article as to the means for optimization of separations with this type of device. There is no public domain information as to the operating parameters of cylindrical DMS cyclones. A more theoretical understanding of the process and its design is necessary for the optimization of their application. DMS cyclone separation tests have been conducted with irregularly-shaped particles with a wide range of particle sizes using a 110 mm diameter demonstration model of LARCODEMS. Results highlight that the highest-density product fraction produced was virtually pure [5].

The reported quality of density separations obtained with the LARCODEMS relative to cylindrical cyclone diameter (300 mm to 1200 mm) appears to remain consistent or to improve as the cyclone diameter is increased [6,7]. This characteristic is contrary to that of hydrocyclone particle size classification where the separation efficiency of fine particles increases with the reduction in cyclone diameter due to the higher centrifugal forces present. This improvement is probably due to the increase in particle flow path and separation residence time with the cyclone diameter. Since longer, flaky, or finer particles tend to separate more slowly, the increase in particle residence time facilitates separation of these particles. Lengthening the separation cylinder increases the separation media flow path and, as such, the particle residence time. However, the extent to which the length of the cyclone may be realistically increased remains to be determined.

The LARCODEMS

Originally designed for treating coal, but also used for processing iron ore and plastics [6], the LARCODEMS is currently manufactured in six versions (300, 500, 850, 1000, and 1200 mm diameters) with recommended processing capacities and maximum particle sizes varying in proportion with the diameter of the apparatus. Even the smallest version has an excessive capacity for laboratory scale tests. The smallest version (300 mm diameter) has a calculated capacity to treat 6 tons/hour of plastics.

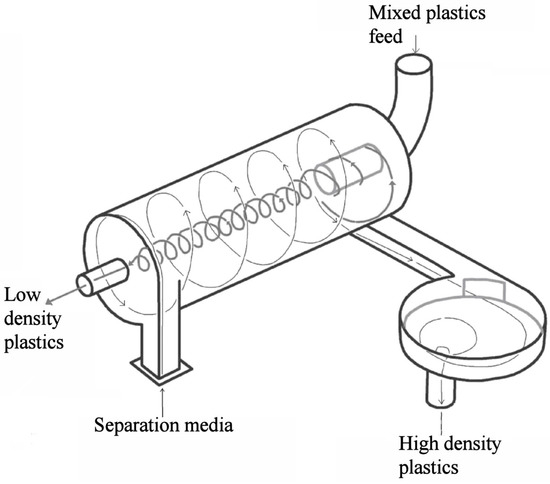

The device consists of a cylindrical separating chamber (Figure 1) inclined at 30° from the horizontal, with the separating media pumped tangentially into the lower end. The media circulates up the interior surface of the separation cylinder until it reaches the top end, where part of the media returns down in counter current along the surface of the media going up the cylinder. A vortex (VF) with a central air core is formed when a sufficient volume of separation media is pumped into the separation cylinder. The part of the media not returning down the cylinder exits tangentially through a sink port (SP). Material to be treated is fed into the top end of the vortex so that dense fragments must settle through the descending separating media so as to reach the ascending media circulating around the inner circumference of the cylinder and exit through the sink port. Low-density particles float down the vortex to exit through a central float port (FP) at the bottom end of the cylinder. A type of siphon known as a vortex extractor (VE) can be connected to the SP. The VE provides stability to the vortex when there are fluctuations in the media flow rate into the separation cylinder and permits its operation with a significantly greater range of media input flow rates. It has been observed that as the diaphragm area (DA) is increased, so does the diameter of the top end of the vortex. The diameters and, thus, the areas of the SP and FP regulate the media flow rate required to form the vortex and the rates of sink and float flow (SF and FF) exiting their corresponding ports. If one port diameter is maintained constant and the other reduced, the media flow rate through the port of constant diameter increases.

Figure 1.

Schematic diagram of the large coal density media separator (LARCODEMS) (courtesy of JMC Engineering Ltd.).

To date, virtually no research has been published as to the optimization of separation efficiencies based on the design of the LARCODEMS or any other cylindrical cyclone separators. The two publications that are of any relevance in this regard are those of Chiné and Ferrara [8] and Yang and Wang [9]. Unfortunately, only the second study has conducted any investigation as to the effect of cylindrical cyclone design parameters on the efficiencies of the separations obtained.

The aim of the present research consists of creating mathematical models for the operation of the LARCODEMS in the established mode of feeding material to be separated into the vortex and an alternative procedure of feeding this material along with the separation media. The models obtained take into account the dimensional characteristics of the device and the volume flow of dense and light materials that are introduced. The utility of this type of model is two-fold; on the one hand, they predict the efficacy of the device with different dimensions and operation conditions and, on the other hand, allow one to propose changes and design improvements having a forecast of how the device will behave with such changes.

2. Materials and Methods

2.1. Description of LARCODEMS Test Procedure

The 110 mm diameter laboratory version of LARCODEMS was designed to be operated with separation cylinder lengths (CL) of 12, 21.4, 32.9, and 45 cm. Three scaled versions (52, 72, and 172 mm diameter) of the 45 cm long 110 mm LARCODEMS were built for inclusion in these tests. Since these versions were scaled to the same relative dimensions as the 110 mm model, the relative cylinder, SP, FP, and diaphragm areas (DA) are constant.

Fragments and pellets of 1.5 to 2.8 mm of different types of plastics were selected for the separation tests based on their differences in density and in visual appearance. Their densities were controlled to within 0.002 g/cc by weight to volume ratios determined in methyl alcohol (to avoid air bubbles adhering to the plastic particles). The types were selected such that their densities (e.g., 0.940, 0.955, 0.962, 1.002, 1.034, 1.043, 1.143, and 1.160 g/cc) were both greater and less than that of the separation media (water). Approximately 2000 particles of each density were combined and fed into the vortex of the LARCODEMS. The processing within the device was accomplished within some 20 seconds. The SP and FP products obtained for a given test were dried, sorted manually by plastic type, and each fraction weighed. This test procedure required some 15 hours per sample to complete. The efficiency of the separation obtained was determined as the difference between the percentage of recovery in the sink product of particles denser than 1.0 g/cc and the percentage of recovery in the sink product of particles with a density <1.0 g/cc.
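The efficiency definition given above can be illustrated with a minimal sketch. The function name `separation_efficiency`, the dictionary layout, and the particle masses are hypothetical and chosen only for illustration; they are not measurements from these tests.

```python
def separation_efficiency(sink_mass, float_mass, media_density=1.0):
    """Efficiency (%) = recovery of dense particles in the sink product
    minus recovery of light particles in the sink product.

    sink_mass / float_mass map particle density (g/cc) to the mass (g)
    recovered in the sink-port and float-port products, respectively.
    """
    def recovery_in_sink(is_selected):
        # Percentage of the selected density class that reports to the sink.
        sink = sum(m for d, m in sink_mass.items() if is_selected(d))
        total = sink + sum(m for d, m in float_mass.items() if is_selected(d))
        return 100.0 * sink / total if total else 0.0

    dense = recovery_in_sink(lambda d: d > media_density)
    light = recovery_in_sink(lambda d: d < media_density)
    return dense - light


# Hypothetical masses (g) for one test; not data from this study.
sink = {0.955: 1.0, 1.034: 19.0, 1.143: 20.0}
flt = {0.955: 19.0, 1.034: 1.0, 1.143: 0.0}
efficiency = separation_efficiency(sink, flt)
print(efficiency)
```

In this illustrative case, 97.5% of the dense particles and 5% of the light particles report to the sink product, so a perfect separation would correspond to an efficiency of 100%.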

The following variables were employed as input data for the models of the LARCODEMS efficiency when feeding either into the vortex or with the media:

(A) Separation tests with the plastic particles being fed into the vortex were conducted with:

- Twelve, 21.4, 32.9 and 45 cm long separation cylinder versions with and without a vortex extractor of the 110 mm LARCODEMS.

- Fifty-two, 72, and 172 mm diameter versions of the scaled 45 cm long, 110 mm LARCODEMS.

- Variations in the diameter, and hence the area (DA), of the feed diaphragm of the 110 mm LARCODEMS.

- Variations in the float port area (FPA) of the 110 mm LARCODEMS.

- Variations in the sink port area (SPA) of the 52, 72, 110, and 172 mm LARCODEMS.

- Three variations in total media feed flow rate for all of the above variables when separations of plastic particles that had received a treatment (TM) to reduce hydrophobic effects were conducted without a VE.

- Five variations in total media feed flow rate for all of the above variables when separations were conducted without a VE.

(B) Separation tests with the plastic particles being fed with the separation media (FPM) were conducted with:

- The 32.9 cm-long and the 45 cm-long separation cylinder versions with and without a vortex extractor of the 110 mm LARCODEMS.

- Variations in diameter of the float port of the 110 mm LARCODEMS.

- Three variations in total media feed flow rate for all of the above variables when separations of plastic particles that had received a treatment (TM) to reduce hydrophobic effects were conducted without a vortex extractor.

- Five variations in total media feed flow rate for all of the above variables when separations were conducted without a vortex extractor.

Since the four different versions of the LARCODEMS used in this investigation were scaled to almost identical proportions, the volumes of media flow are a function of the area of the exit ports and the feed diaphragm relative to the area of the separation cylinder.

2.2. The Multivariate Adaptive Regression Splines

Multivariate adaptive regression splines (MARS) is a multivariate nonparametric regression technique in which the model parameters are adjusted to the data to be modelled. This type of model was introduced by Friedman in 1991 [10]. MARS is able to generate models based on several input variables, and it was chosen for the present research because of its ability to model nonlinearities and interactions between parameters. At the beginning of the research, linear regression models were tested without satisfactory results. The main advantage of MARS is that it can effectively model relationships and patterns that other regression methods cannot capture. MARS is based on $n$ measurements of $p$ explanatory variables, $X$, of size $n \times p$, for predicting values of the continuous dependent variable, $y$, of size $n \times 1$. The MARS model can be represented as:

$$y = f(X) + e \quad (1)$$

where $e$ is the error vector of dimension $n \times 1$. With classification and regression trees (CART) models as the base [11], MARS can be considered a generalization that overcomes some of the limitations of CART, as it does not require any a priori information about the relationships between the dependent and independent variables. CART is a statistical method for multivariate analysis that creates a decision tree which strives to correctly classify the members of a population. The MARS regression model is constructed from piecewise polynomials, also called splines, which have smooth connections. This is performed by fitting basis functions to distinct intervals of the independent variables. The points at which the polynomials join are denoted $t$ and are known as knots, nodes, or breakdown points. The splines for MARS are polynomials, concretely two-sided truncated power functions, which can be expressed as follows [12,13]:

$$[-(x - t)]_{+}^{q} = \begin{cases} (t - x)^{q}, & x < t \\ 0, & \text{otherwise} \end{cases} \qquad [+(x - t)]_{+}^{q} = \begin{cases} (x - t)^{q}, & x \ge t \\ 0, & \text{otherwise} \end{cases} \quad (2)$$

where $q \ge 0$ is the power to which the splines are raised.
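As a minimal sketch, the two-sided truncated power functions described above can be written as follows. The function name `hinge_pair` and its argument names are hypothetical; `t` denotes the knot and `q` the power of the spline, with `q = 1` giving the piecewise-linear case.

```python
def hinge_pair(x, t, q=1):
    """Return the two-sided truncated power functions at x for knot t.

    left  = (t - x)^q if x <  t, else 0
    right = (x - t)^q if x >= t, else 0
    """
    left = float((t - x) ** q) if x < t else 0.0
    right = float((x - t) ** q) if x >= t else 0.0
    return left, right


# Illustrative values only: a knot at 5 evaluated on either side.
below = hinge_pair(2.0, 5.0)
above = hinge_pair(7.0, 5.0)
print(below, above)
```

Only one member of the pair is non-zero for any given `x`, which is what allows each basis function to act on a distinct interval of the independent variable.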

Considering $M$ basis functions, a MARS model can be expressed through the following estimate of the dependent variable [14]:

$$\hat{y} = \hat{f}_{M}(x) = c_0 + \sum_{m=1}^{M} c_m B_m(x) \quad (3)$$

where $c_0$ is a constant, $c_m$ is the coefficient of the $m$-th basis function, and $B_m(x)$ is the corresponding basis function.
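The evaluation of a MARS model as a constant plus a weighted sum of basis functions can be sketched as follows. The names `hinge` and `mars_predict`, the term layout, and all coefficients and knots are hypothetical; only the variable labels CL and SF are taken from this work, and the numbers are not those of the fitted models.

```python
def hinge(x, t, sign=+1):
    """First-degree hinge: max(0, x - t) for sign=+1, max(0, t - x) for sign=-1."""
    return max(0.0, sign * (x - t))


def mars_predict(x, intercept, terms):
    """Evaluate y_hat = c0 + sum_m c_m * B_m(x).

    x     : dict mapping variable name -> value
    terms : list of (coefficient, [(variable, knot, sign), ...]);
            each basis function is the product of its hinge factors.
    """
    y_hat = intercept
    for coef, factors in terms:
        basis = 1.0
        for var, knot, sign in factors:
            basis *= hinge(x[var], knot, sign)
        y_hat += coef * basis
    return y_hat


# Hypothetical model, for illustration only.
terms = [
    (0.5, [("CL", 20.0, +1)]),    # gains efficiency above CL = 20
    (-10.0, [("SF", 0.5, +1)]),   # loses efficiency above SF = 0.5
]
low_sf = mars_predict({"CL": 30.0, "SF": 0.25}, 80.0, terms)
high_sf = mars_predict({"CL": 30.0, "SF": 1.0}, 80.0, terms)
print(low_sf, high_sf)
```

A term contributes nothing until its variable crosses the knot, so the prediction is piecewise linear in each input for a first-degree model.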

Both the variables to be included in the model and the knot positions for each individual variable must be optimized. For a dataset $X$ containing $n$ objects and $p$ explanatory variables, there would be $N = n \times p$ pairs of spline basis functions, given by Equation (2), with knot locations $x_{ij}$, with $i = 1, \ldots, n$ and $j = 1, \ldots, p$.

To reach the expression of the model that MARS provides, a selection of basis functions in consecutive pairs is necessary. The selection of the basis functions can be done with a two-at-a-time forward stepwise procedure [15]. This forward stepwise selection of basis functions leads to an over-fitted model; that is, although it fits the training data well, it becomes a very complex model that is not able to make accurate predictions for new objects. To avoid this issue, redundant basis functions are removed one at a time using a backward stepwise procedure. To select which basis functions must be included in the model, MARS utilizes generalized cross-validation (GCV). The GCV is the mean squared residual error divided by a penalty that depends on the model complexity. The GCV criterion is defined in the following way:

$$GCV(M) = \frac{\frac{1}{n} \sum_{i=1}^{n} \left( y_i - \hat{f}_{M}(x_i) \right)^2}{\left( 1 - \frac{C(M)}{n} \right)^2} \quad (4)$$

The model complexity has a penalty, denoted as $C(M)$, that increases with the number of basis functions required by the model, according to the following expression:

$$C(M) = (M + 1) + dM \quad (5)$$

which depends on the number of basis functions $M$, and where $d$ is the smoothing parameter that characterizes the penalty for each basis function included in the model. When $d$ takes large values, fewer basis functions are included in the model and, consequently, the function estimates are smoother. The selection of the parameter $d$ is discussed in [10].
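A minimal sketch of the GCV computation is given below, assuming the standard form of the criterion, in which the mean squared residual is divided by $(1 - C(M)/n)^2$ with $C(M) = (M + 1) + dM$. The function name `gcv`, the default `d = 3.0`, and the data are assumptions made for illustration only.

```python
def gcv(y_true, y_pred, n_basis, d=3.0):
    """GCV = (RSS / n) / (1 - C(M)/n)^2, with C(M) = (M + 1) + d * M.

    n_basis is M, the number of basis functions; d is the smoothing
    parameter (the default of 3.0 is an assumption, not a value from
    this work).
    """
    n = len(y_true)
    rss = sum((y - f) ** 2 for y, f in zip(y_true, y_pred))
    c_m = (n_basis + 1) + d * n_basis
    if c_m >= n:
        raise ValueError("complexity penalty C(M) must be smaller than n")
    return (rss / n) / (1.0 - c_m / n) ** 2


# Hypothetical data: ten observations, each predicted with an error of 1.
y_true = [float(i) for i in range(1, 11)]
y_pred = [y + 1.0 for y in y_true]
score = gcv(y_true, y_pred, n_basis=1)
print(score)
```

With the same residuals, a model with more basis functions has a larger $C(M)$ and therefore a larger GCV, which is how the backward pass favours simpler models.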

In order to analyse a MARS model, surface plots can be used to visualise the interactions and relations between the basis functions. Let $B(i)$ be the set of all single-variable basis functions that contain only $x_i$. In the same way, $B(i,j)$ is the set of basis functions of two variables, $x_i$ and $x_j$, and $B(i,j,k)$ the set of all basis functions of three variables. The MARS model can be rewritten as a series of sums in the following form:

$$\hat{f}(x) = c_0 + \sum_{B_m \in B(i)} c_m B_m(x_i) + \sum_{B_m \in B(i,j)} c_m B_m(x_i, x_j) + \sum_{B_m \in B(i,j,k)} c_m B_m(x_i, x_j, x_k) + \cdots \quad (6)$$

where the first sum is over all the basis functions of one variable, the second is over the basis functions of exactly two variables, and the third is over the basis functions of three variables. The expression above is known as the ANOVA decomposition since it is similar to the ANOVA decomposition of experimental design [10]. The interaction of a MARS model, based on two variables, is determined by:

$$f^{*}_{ij}(x_i, x_j) = \sum_{B_m \in B(i)} c_m B_m(x_i) + \sum_{B_m \in B(j)} c_m B_m(x_j) + \sum_{B_m \in B(i,j)} c_m B_m(x_i, x_j) \quad (7)$$

Higher-level interactions are defined in the same way.
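The grouping behind the ANOVA decomposition can be sketched by collecting the terms of a model according to the set of variables each basis function involves. The function name `anova_groups`, the term layout, and the example terms are hypothetical; in particular, the two-variable term is included only to show the grouping.

```python
from collections import defaultdict


def anova_groups(terms):
    """Group MARS terms by the set of variables each basis function uses.

    terms: list of (coefficient, [(variable, knot, sign), ...]).
    Returns {variables_tuple: [term, ...]}; keys of length 1 hold the
    single-variable terms, keys of length 2 the two-variable terms, etc.
    """
    groups = defaultdict(list)
    for coef, factors in terms:
        key = tuple(sorted({var for var, _knot, _sign in factors}))
        groups[key].append((coef, factors))
    return dict(groups)


# Hypothetical terms, for illustration only.
terms = [
    (0.5, [("CL", 20.0, +1)]),
    (-10.0, [("SF", 0.5, +1)]),
    (0.1, [("CL", 20.0, +1), ("SF", 0.5, -1)]),
]
groups = anova_groups(terms)
print(sorted(groups))
```

Each key of length one can then be plotted as a curve and each key of length two as a surface.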

The importance of the explanatory variables used to construct the basis functions can also be estimated [16,17]. Determining predictor importance is, in general, a complex problem that requires several criteria. To obtain reliable results, three criteria are usually used: the GCV, the number of model subsets (nsubsets) in which each variable is included, and the residual sum of squares (RSS). The definition of the RSS is:

$$RSS = \sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2 \quad (8)$$

Then, the expression of GCV can be rewritten as:

$$GCV(M) = \frac{RSS / n}{\left( 1 - \frac{C(M)}{n} \right)^2} \quad (9)$$

2.3. Model Performance Measurement

The performance and accuracy of the trained MARS models can be tested by comparing the real efficiency values of the validation datasets, $y_i$, with the predicted values, $\hat{y}_i$. The root mean squared error (RMSE), the mean absolute error (MAE) and the $R^2$ [18,19] of the model are measures that can be employed with this aim.

The MAE measures how close forecasts are to eventual outcomes [20] and is defined as follows:

$$MAE = \frac{1}{n} \sum_{i=1}^{n} \left| y_i - \hat{y}_i \right| \quad (10)$$

The RMSE is a general-purpose measure used in a wide range of applications as a measure of the error for numerical predictions [21,22]. Compared to MAE, RMSE amplifies and severely punishes large errors. The RMSE is defined as follows:

$$RMSE = \sqrt{\frac{1}{n} \sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2} \quad (11)$$
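The difference between the two error measures can be seen in a short sketch. The function names and the data are hypothetical and serve only to show how a single large error affects each measure.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error: average of |y_i - y_hat_i|."""
    return sum(abs(y - f) for y, f in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error: square root of the mean squared residual."""
    return math.sqrt(sum((y - f) ** 2 for y, f in zip(y_true, y_pred)) / len(y_true))


# Hypothetical values: three exact predictions and one error of 4 units.
y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.0, 2.0, 3.0, 8.0]
print(mae(y_true, y_pred), rmse(y_true, y_pred))
```

Here the single large error yields an RMSE twice the MAE, which illustrates why RMSE is said to punish large errors more severely.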

2.4. Model Training and Validation

The steps followed during the training and validation of all the models are detailed below:

First, the data were split into training and validation datasets. As the calculation of efficiency is a regression problem, the partitioning function used determines the quantiles of the dataset and samples within those groups. The split was performed using the k-fold cross-validation methodology for $k = 5$. The data were randomly split into five distinct blocks of equal size. The first block was then left out, the model was fitted to the remaining blocks, and this model was used to predict the held-out block. This process was continued until all five held-out blocks had been predicted. A total of 1000 replications of the five-fold cross-validation were created for both models. The k-fold cross-validation is a well-known methodology that was already used in previous studies by the authors with successful results [23,24,25].

For each one of the five models of the five-fold cross-validation, the performance parameters MAE, RMSE, and $R^2$ were calculated. This permitted the determination of the minimum, average, and maximum values of those parameters in each one of the 1000 model replications.

After the calculation of all the models referred to above, an average model was calculated for the vortex feed scenario and another for the with-media scenario with the two sets of variables, indicating both its performance and the importance of the variables that take part in it.

The 1000 replications of the five-fold cross-validation methodology are made in order to ensure that, independently of the data subset selected, the trained MARS model is able to give a good prediction of the efficiency. The average model is calculated in order to determine the importance of the different variables for the prediction of the efficiency, and also to obtain a parametric model of the LARCODEMS efficiency for both feeds. This model can help in understanding how the efficiency behaves when changes are made to both the machine and the volume of flow.
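The repeated five-fold procedure described above can be sketched as follows. To keep the example self-contained, a straight-line least-squares fit is used as a stand-in for the MARS model, the folds are drawn by simple random shuffling rather than by quantile-based sampling, and only the RMSE is tracked; all names and data are hypothetical.

```python
import random
import statistics


def fit_line(xs, ys):
    """Stand-in model (NOT MARS): ordinary least-squares straight line."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return my - slope * mx, slope


def predict_line(model, x):
    intercept, slope = model
    return intercept + slope * x


def repeated_k_fold_rmse(xs, ys, k=5, repeats=1000, seed=0):
    """Return (min, mean, max) of the per-fold RMSE over all replications."""
    rng = random.Random(seed)
    n = len(xs)
    fold_rmse = []
    for _ in range(repeats):
        order = list(range(n))
        rng.shuffle(order)                      # new random split per replication
        for fold in range(k):
            held_out = order[fold::k]           # one of k near-equal blocks
            held = set(held_out)
            train = [i for i in order if i not in held]
            model = fit_line([xs[i] for i in train], [ys[i] for i in train])
            sq_err = [(ys[i] - predict_line(model, xs[i])) ** 2 for i in held_out]
            fold_rmse.append((sum(sq_err) / len(sq_err)) ** 0.5)
    return min(fold_rmse), statistics.mean(fold_rmse), max(fold_rmse)


# Hypothetical noise-free data, for illustration only.
xs = [float(i) for i in range(20)]
ys = [2.0 * x + 1.0 for x in xs]
lo, avg, hi = repeated_k_fold_rmse(xs, ys, repeats=10)
print(lo, avg, hi)
```

Reporting the minimum, average, and maximum over all folds and replications shows how much the validation error depends on the particular split, which is the purpose of repeating the cross-validation.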

3. Results

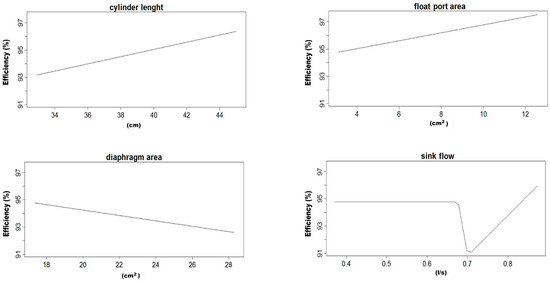

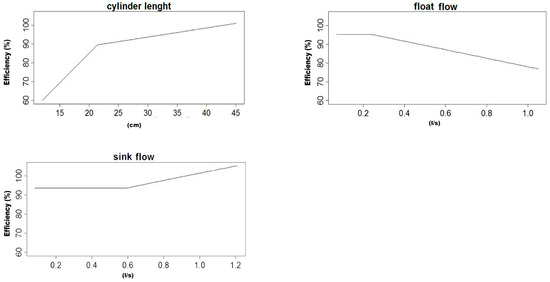

In this work, four average MARS models were obtained for the efficiency of the LARCODEMS device, two with feed into the vortex and another two for feed with media, using the two sets of variables referred to in the Materials and Methods section. Their equations are listed in Table 1, Table 2, Table 3 and Table 4. As all the equations are MARS models of the first degree, all their terms can be graphically represented. Please note that the function $h(x)$ is equal to $x$ when $x$ is larger than 0, and 0 in the rest of the cases. The graphical representations of these models are shown in Figure 2, Figure 3, Figure 4 and Figure 5, respectively. This helps in the interpretation of the results.

Figure 2 corresponds to model number one for feed with the media. It demonstrates that the efficiency of LARCODEMS improves with its length and that there is a linear relation between the improvements in separations obtained and the length of the cylinder. The same relationship also exists between the FPA and efficiency. The increase in efficiency with separation CL can be attributed to the increase in residence time within the separation cylinder and, as such, a greater degree of separation is obtained. Similarly, as the FPA increases, the layer of separation media transporting low-density particles increases in thickness, providing more opportunity for only dense particles to pass into the ascending media reporting to the sink port. As the DA increases, efficiency decreases because the vortex is forced to a diameter where there is insufficient thickness of media carrying low-density particles, so that low-density particles report to the sink port and the separation efficiency decreases. In general, efficiency is constant with the sink flow, except in a limited number of cases corresponding to data with the minimum CL.

Figure 3 shows a substantial increase in efficiency with a cylinder length up to 21.4 cm, and then a less accentuated, but still consistent, increase in efficiency with length up to 44 cm. On the other hand, efficiency is optimum with a minimum FF, and then a maximum after which it consistently decreases with the increase of FF. This decrease in efficiency corresponds, to a certain degree, to the increase in SF above 0.588 litres per second. Below this value, efficiency is constant. Although some of the basis functions taken individually would seem to allow efficiencies over 100%, the efficiency in each model is the sum of all the basis functions, and none of the MARS models gives an efficiency value above 100% for real working conditions.
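As a worked illustration of how a single first-degree term can produce the behaviour described for SF, consider a hinge term with its knot at 0.588 litres per second, writing $h(x) = \max(0, x)$. The coefficient $c$ below is a hypothetical placeholder with an assumed negative sign; it is not one of the fitted values listed in the tables.

$$c \, h(SF - 0.588) = \begin{cases} 0, & SF \le 0.588 \\ c \, (SF - 0.588), & SF > 0.588 \end{cases} \qquad c < 0$$

Under this assumption, the term leaves the predicted efficiency unchanged below the knot and lowers it linearly, at a rate of $|c|$ per litre per second, above it.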

Table 1.

List of basis functions of multivariate adaptive regression splines (MARS) model number one and their coefficients for feed with media.

Table 2.

List of basis functions of MARS model number one and their coefficients for feed into the vortex.

Table 3.

List of basis functions of MARS model number two and their coefficients for feed with media.

Table 4.

List of basis functions of the MARS model number two and their coefficients for feed into the vortex.

Figure 2.

Graphical representation of the basis functions of MARS model number one and their coefficients for feed with media.

Figure 3.

Graphical representation of the basis functions of MARS model number one and their coefficients for feed into the vortex.

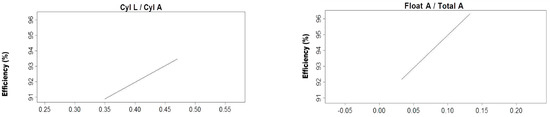

Figure 4.

Graphical representation of the basis functions of MARS model number two and their coefficients for feed with media.

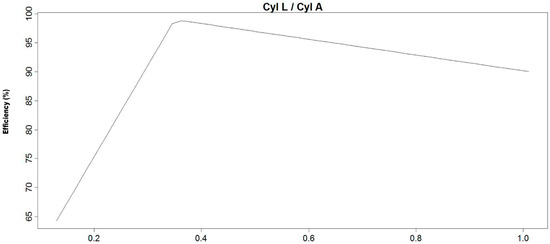

Figure 5.

Graphical representation of the basis functions of MARS model number two and their coefficients for feed into the vortex, including the ratio of CL to CA relative to separation efficiency.

Figure 4 shows that, contrary to the results reported by Yang and Wang [9], the separation efficiency is determined by the medium inlet diameter and shape and that the separation density is determined by SP, and that efficiency increases as the ratio of cylinder length to cylinder area (CL/CA) increases. Similarly, as the diameter of the float port with respect to the area increases, so does the efficiency. In Figure 5 it can be seen that the increase in CL/CA from about 0.09 to 0.35 corresponds to an increase in the cylinder length of one of the devices reaching an optimum condition, while for length-to-area ratios above about 0.35 efficiency tends to decrease.

Results indicated in Figure 4 are dominated by the data for the 110 mm version with 12, 21.4, 32.9, and 45 cm CL and the four diameters of the LARCODEMS versions tested. The increase in separation efficiency for CL/CA values of 0.1–0.38 reflects the increase in the separation efficiency with the increase in CL of the 110 mm model. The subsequent moderate decrease in separation efficiency with an increase in CL/CA from 0.38 to 1.0 is related to the increase in CL/CA with the reduction in the size of the model tested. This decrease indicates that the separation efficiency obtained increases with the size of the device. The effect is attributed to two proportions that increase as the device becomes smaller: the proportion of the surface area affecting the flow of the separation media relative to the total area of the media going up the separation cylinder, and the proportion of the thickness of the turbulence layers (between the media going up and the media going down, and between the media and the separation cylinder) relative to the total area of the separation cylinder occupied by the separation media.

The MARS models make it possible to know which variables take part in each model and their relative importance. Table 5, Table 6, Table 7 and Table 8 indicate the relative importance of the variables. These are presented according to the three metrics for importance classification described in the Materials and Methods section. The ranking of the variables given by these three metrics is the same in all of the models.

Table 5.

Relative importance of variables in MARS model number one and their coefficients for feed with media.

Table 6.

Relative importance of variables in MARS model number one and their coefficients for feed into the vortex.

Table 7.

Relative importance of variables in MARS model number two and their coefficients for feed with media.

Table 8.

Relative importance of variables in MARS model number two and their coefficients for feed into the vortex.

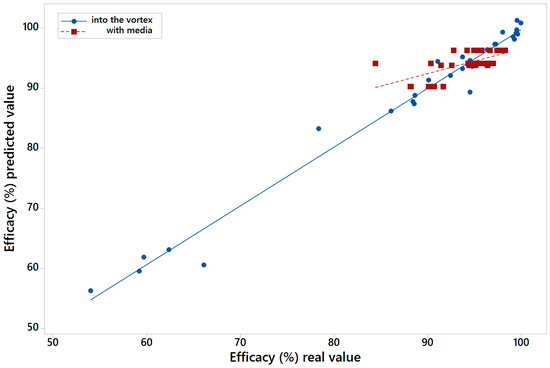

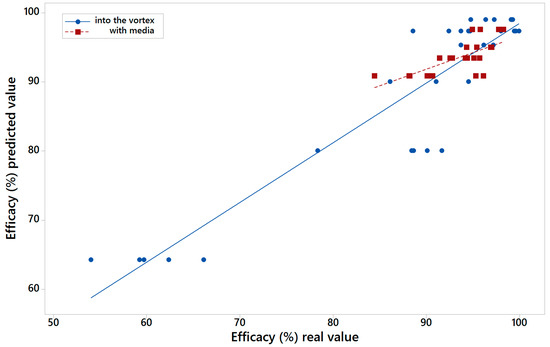

Figure 6 shows the real value of efficiency versus the value calculated by MARS model one for feed into the vortex (blue colour) and with media feed (red colour). Figure 7 shows the same for the results calculated with the variables of model two. The $R^2$ values for the models of the LARCODEMS tests performed were as follows: model one with media: 0.6254; into the vortex: 0.9553; model two with media: 0.4820; and into the vortex: 0.8630. In the case of model one, the RMSE for the tests performed with feed into the vortex has a value of 1.9894, while for those tests with media feed it is 2.3741. For model two, RMSE values are 2.421 with the media, and 4.8996 into the vortex. In the case of the MAE, for model one with media, the value is 1.7404, and into the vortex, 1.3456. Finally, for model two, the MAE value is 1.8419 with media, and 3.7928 into the vortex. The $R^2$ values obtained show a high correlation between real and predicted efficiencies for the LARCODEMS tests performed with feed into the vortex. In the case of the models for the tests performed with media feed, although the correlation is significant, it is not as high. To the authors' knowledge, there are no other studies with which the RMSE and MAE obtained in this work can be compared.

Figure 6.

Real value of efficiency versus the value calculated by MARS model one for the into the vortex and with media feed tests.

Figure 7.

Real value of efficiency versus the value calculated by MARS model two for the into the vortex and with media feed tests.

In order to assess the performance and stability of those models, the average values of the $R^2$, RMSE, and MAE of the 1000 different five-fold cross-validation sets created are summarized in Table 9 for both models one and two with media and into the vortex. The average value of $R^2$ is better for both models with feed into the vortex than for the models with media feed. In the case of both RMSE and MAE, the average value is larger in the models trained and validated with the feed into the vortex tests.

Table 9.

Performance measurements of models one and two trained with the media feed and the feed into the vortex.

4. Discussion and Conclusions

In this research, the performance of the MARS models has been tested by means of a five-fold cross-validation methodology. This methodology is used to assess the ability of the MARS model to fit any validation set using, for each model, 5000 different sets (five subsets per test and 1000 replications of the test). The results obtained are in line with previous research that has proven the capability of MARS models to deal with noisy data, striking a trade-off between the goodness of fit and the complexity of the model structure. Additionally, with the help of MARS models, it is possible to establish an order of importance for all of the variables included in the model.

From a technical perspective, it is shown that:

- Separation efficiencies increase with both the length and size of the separation cylinder. This is reflected by the relation between the separation efficiency and media flow rates in the sink and float ports and by the relation between the cylinder length to the cylinder area.

- For a given separation cylinder size there is an optimum length above which the separation efficiency does not increase.

The results of this investigation indicate that the LARCODEMS is a relatively robust density separation device suitable for obtaining efficient separations, provided that a suitable CL is maintained. They also indicate that, since the FF and SF rates are related to the SPA, FPA, and DA, such separations may be optimized through adequate control of the FPA and, to a lesser extent, the DA.

The increase in separation efficiency with a decrease in CL/CA indicates that, for this type of DMS cyclone, the larger it is, the better the results. Furthermore, unlike the cono-cylindrical type of DMS, the diameter of the cyclone does not appear to limit the >500 micron particle size that may be treated, which supports the observations of previous works [6,7].

It is suggested that this methodology could also be applied to analyse the operation of other heavy media separators in order to optimize the efficiencies obtained. Finally, we would like to remark that, as far as the authors are aware, the efficiency model proposed in this research is the first parametric model proposed for the efficiency of a LARCODEMS device.

Acknowledgments

Francisco Javier de Cos Juez and Fernando Sánchez Lasheras appreciate support from the Spanish Economics and Competitiveness Ministry, through grant AYA2014-57648-P and the Government of the Principality of Asturias (Consejería de Economía y Empleo), through grant FC-15-GRUPIN14-017.

Author Contributions

Mario Menéndez Alvarez and Hector Muñiz Sierra conceived and designed the experiments; Mario Menéndez Alvarez, Hector Muñiz Sierra, and Fernando Sánchez Lasheras analysed the data; and Hector Muñiz Sierra, Fernando Sánchez Lasheras, and Francisco Javier de Cos Juez wrote the paper.

Conflicts of Interest

The authors declare no conflict of interest. The funding sponsors had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

1. Gent, G.M.; Mario, M.; Javier, T.; Susana, T. Optimization of the recovery of plastics for recycling by density media separation cyclones. Resour. Conserv. Recycl. 2011, 55, 472–482.

2. Burt, R.O.; Mills, C. Gravity Concentration Technology, Developments in Mineral Processing; Elsevier: Amsterdam, The Netherlands, 1984; p. 164.

3. Gent, M.R.; Menendez, M.; Toraño, J.; Isidro, D.; Torno, S. Cylinder cyclone (LARCODEMS) density media separation of plastic wastes. Waste Manag. 2009, 29, 1819–1827.

4. Rao, V.; Kapur, P.C.; Konnur, R. Modeling the size-density partition surface of dense medium separators. Int. J. Miner. Process. 2003, 72, 443–453.

5. Gent, M.R.; Menendez, M.; Toraño, J.; Diego, I. Recycling of plastic waste by density separation: Prospects for optimization. Waste Manag. Res. 2009, 27, 175–187.

6. McCulloch, J.; Baille, D. Developments in LARCODEMS media processing technology. In Proceedings of the XIII International Coal Preparation Congress, Brisbane, Australia, 4–10 October 1998; Partridge, A.C., Partridge, I.R., Eds.; Australian Coal Preparation Society: Brisbane, Australia, 1998; pp. 467–468.

7. Baille, D.; Shah, C.; Heley, A. Coal Preparation—Three-Product ‘LARCODEMS’ Separator Demonstration, Installation and Performance Testing; EUR 17155; Office for Official Publications of the European Communities: Luxembourg, 1997; p. 138.

8. Chiné, B.; Ferrara, G. Comparison between flow velocity profiles in conical and cylindrical hydrocyclones. Kona Powder Part. J. 1997, 15, 170–179.

9. Yang, J.G.; Wang, Y.L. Effect of Structural Parameters of Heavy Medium Cylindrical Cyclone on Separation Efficiency. J. China Univ. Min. Technol. 2005, 34, 770–773.

10. Friedman, J.H. Multivariate adaptive regression splines. Ann. Stat. 1991, 19, 1–67.

11. Breiman, L.; Friedman, J.H.; Olshen, R.A.; Stone, C.J. Classification and Regression Trees; Wadsworth and Brooks: Monterey, CA, USA, 1984.

12. De Andrés, J.; Sánchez-Lasheras, F.; Lorca, P.; de Cos Juez, F.J. A hybrid device of Self Organizing Maps (SOM) and Multivariate Adaptive Regression Splines (MARS) for the forecasting of firms’ bankruptcy. Account. Manag. Inf. Syst. 2011, 10, 351–374.

13. Sekulic, S.; Kowalski, B.R. MARS: A tutorial. J. Chemom. 1992, 6, 199–216.

14. Antón, J.C.Á.; Nieto, P.J.G.; de Cos Juez, F.J.; Lasheras, F.S.; Viejo, C.B.; Gutiérrez, N.R. Battery state-of-charge estimator using the MARS technique. IEEE Trans. Power Electron. 2013, 28, 3798–3805.

15. Fernández, J.R.A.; Muñiz, C.D.; Nieto, P.J.G.; de Cos Juez, F.J.; Lasheras, F.S.; Roqueñi, M.N. Forecasting the cyanotoxins presence in fresh waters: A new model based on genetic algorithms combined with the MARS technique. Ecol. Eng. 2013, 53, 68–78.

16. Sánchez, A.S.; Iglesias-Rodriguez, F.J.; Fernández, P.R.; de Cos Juez, F.J. Applying the K-nearest neighbor technique to the classification of workers according to their risk of suffering musculoskeletal disorders. Int. J. Ind. Ergon. 2016, 52, 92–99.

17. Lasheras, F.S.; de Cos Juez, F.J.; Sánchez, A.S.; Krzemień, A.; Fernández, P.R. Forecasting the COMEX copper spot price by means of neural networks and ARIMA models. Resour. Policy 2015, 45, 37–43.

18. Vilán, J.A.V.; Fernández, J.R.A.; Nieto, P.J.G.; Lasheras, F.S.; de Cos Juez, F.J.; Muñiz, C.D. Support vector machines and multilayer perceptron networks used to evaluate the cyanotoxins presence from experimental cyanobacteria concentrations in the Trasona reservoir (Northern Spain). Water Resour. Manag. 2013, 27, 3457–3476.

19. De Cos Juez, F.J.; Lasheras, F.S.; Nieto, P.J.G.; Álvarez-Arenal, A. Non-linear numerical analysis of a double-threaded titanium alloy dental implant by FEM. Appl. Math. Comput. 2008, 206, 952–967.

20. Turrado, C.C.; López María del, C.M.; Lasheras, F.S.; Gómez, B.A.R.; Rollé, J.L.C.; de Cos Juez, F.J. Missing data imputation of solar radiation data under different atmospheric conditions. Sensors 2014, 14, 20382–20399.

21. De Cos Juez, F.J.; Lasheras, F.S.; Roqueñí, N.; Osborn, J. An ANN-based smart tomographic reconstructor in a dynamic environment. Sensors 2012, 12, 8895–8911.

22. Osborn, J.; Guzman, D.; de Cos Juez, F.J.; Basden, A.G.; Morris, T.J.; Gendron, E.; Butterley, T.; Myers, R.M.; Guesalaga, A.; Sanchez Lasheras, F.; et al. Open-loop tomography with artificial neural networks on CANARY: On-sky results. Mon. Not. R. Astron. Soc. 2014, 441, 2508–2514.

23. García Nieto, P.J.; Alonso Fernández, J.R.; de Cos Juez, F.J.; Sánchez Lasheras, F.; Díaz Muñiz, C. Hybrid modelling based on support vector regression with genetic algorithms in forecasting the cyanotoxins presence in the Trasona reservoir (Northern Spain). Environ. Res. 2013, 122, 1–10.

24. Sánchez, A.S.; Fernández, P.R.; Lasheras, F.S.; de Cos Juez, F.J.; García Nieto, P.J. Prediction of work-related accidents according to working conditions using support vector machines. Appl. Math. Comput. 2011, 21, 3539–3552.

25. Garcia Nieto, P.J.; Alonso Fernández, J.R.; Sánchez Lasheras, F.; de Cos Juez, F.J.; Díaz Muñiz, C. A new improved study of cyanotoxins presence from experimental cyanobacteria concentrations in the Trasona reservoir (Northern Spain) using the MARS technique. Sci. Total Environ. 2012, 430, 88–92.

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).