1. Introduction

Electric demand forecasting plays a critical role in the daily operational and economic management of power systems, including energy transfer scheduling, transaction evaluation, unit commitment, fuel allocation, load dispatch, hydrothermal coordination, contingency planning, load shedding, and so on [1]. Because decision makers rely on accurate forecasts to make optimal action plans, even a small percentage of forecasting error can cause great losses for utilities in an increasingly competitive market. As reported by Bunn and Farmer [2], a 1% increase in electric demand forecasting error represents a £10 million increase in operating costs. Thus, it is essential to improve forecasting accuracy or to develop new approaches, particularly for countries with limited energy resources [3].

In the past decades, researchers have proposed many methodologies to improve electric demand forecasting accuracy, including traditional linear models such as the ARIMA (auto-regressive integrated moving average) model [4], exponential smoothing models [5], Bayesian estimation models [6], state space and Kalman filtering techniques [7,8], regression models [9], and other time series techniques [10]. Because load forecasting is complex, these models have difficulty capturing the nonlinear relationships between historical data and exogenous factors, and they do not always achieve satisfactory forecasting accuracy.

Since the 1980s, owing to their superior nonlinear mapping ability, intelligent techniques such as expert systems, fuzzy inference, and artificial neural networks (ANNs) [11] have been applied successfully to electric demand forecasting. In addition, these intelligent approaches can be hybridized to form novel forecasting models, for example, random fuzzy variables with ANNs [12], the hybrid Monte Carlo algorithm with a Bayesian neural network [13], an adaptive network-based fuzzy inference system with an RBF neural network [14], an extreme learning machine with a hybrid artificial bee colony algorithm [15], a fuzzy neural network (WFNN) [16], a knowledge-based feedback tuning fuzzy system with a multi-layer perceptron artificial neural network (MLPANN) [17], and so on. Owing to their multi-layer structure and outstanding ability to learn nonlinear characteristics, ANN models can approximate continuous functions with high accuracy, as described by Kolmogorov’s theorem. However, the main shortcoming of ANN models is the determination of their structural parameters [18]. Comprehensive discussions of ANN-based load forecasting can be found in [19,20].

Support vector regression (SVR) [21] has been widely applied in the electric demand forecasting field [11,22–33]. In these studies, SVR is hybridized with different evolutionary algorithms and various chaotic mapping functions (the logistic function and the cat mapping function) to simultaneously and carefully optimize its three parameters (commonly the regularization parameter C, the insensitive-loss width ε, and the Gaussian kernel width σ), thereby obtaining better forecasting performance. As concluded in Hong’s series of studies, the determination of these three parameters critically influences the forecasting performance; that is, low forecasting accuracy (caused by premature convergence and trapping in local optima) results from the theoretical limitations of the original evolutionary algorithms. Therefore, Hong and his successors have conducted a series of trials hybridizing evolutionary algorithms with an SVR model. However, each algorithm has its own embedded drawbacks. To overcome these shortcomings, they further apply chaotic mapping functions, whose ergodicity enriches the search over the whole space and enables a more compact search in the chaotic space. They also apply cloud theory to resolve the temperature-decreasing problem of the annealing process, so that the temperature decreases continuously as in an actual physical annealing process. This improves the search quality of the simulated annealing algorithm and, eventually, the forecasting accuracy.
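To make the chaotic-mapping idea concrete, the following is a minimal Python sketch (not the procedure of Hong’s studies) that drives a search over a three-dimensional parameter box with logistic-map sequences, x_{n+1} = 4x_n(1 − x_n), each scaled linearly onto a parameter range. The function names, the seed values, the parameter ranges, and `toy_validation_error` (a hypothetical stand-in for a real SVR validation error over C, ε, and σ) are all illustrative assumptions.

```python
"""Minimal sketch: chaotic (logistic-map) sampling of a three-parameter box.

Assumptions (not from the paper): the logistic map with mu = 4, the seeds,
the parameter ranges, and the stand-in objective `toy_validation_error`,
which replaces a real SVR cross-validation error.
"""


def logistic_map(x, mu=4.0):
    """One step of the logistic map x -> mu * x * (1 - x); chaotic on (0, 1) for mu = 4."""
    return mu * x * (1.0 - x)


def chaotic_search(objective, bounds, n_iter=200, seeds=(0.1234, 0.3456, 0.6789)):
    """Search a box by mapping one logistic-map sequence onto each dimension.

    Each dimension has its own chaotic variable in (0, 1), linearly scaled to
    [low, high]. Returns the best point found and its objective value.
    """
    xs = list(seeds)
    best_point, best_value = None, float("inf")
    for _ in range(n_iter):
        xs = [logistic_map(x) for x in xs]  # advance every chaotic variable
        point = [lo + x * (hi - lo) for x, (lo, hi) in zip(xs, bounds)]
        value = objective(point)
        if value < best_value:
            best_point, best_value = point, value
    return best_point, best_value


def toy_validation_error(params):
    """Hypothetical stand-in for an SVR validation error over (C, epsilon, sigma)."""
    c, eps, sigma = params
    return (c - 50.0) ** 2 / 1e4 + (eps - 0.1) ** 2 + (sigma - 2.0) ** 2


if __name__ == "__main__":
    bounds = [(0.1, 100.0), (0.001, 1.0), (0.1, 10.0)]  # assumed (C, epsilon, sigma) ranges
    best, err = chaotic_search(toy_validation_error, bounds)
    print(best, err)
```

Because the logistic map at μ = 4 wanders over the whole unit interval without settling into a cycle (for generic seeds), the scaled sequence visits the full range of each parameter, which is the "ergodic" property the paragraph above relies on.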

Inspired by Hong’s efforts mentioned above, the author considers the core drawback of the classical PSO algorithm, namely the premature standstill of the particles and their loss of activity, which eventually causes low forecasting accuracy; therefore, this paper continues to explore possible improvements of the PSO algorithm. In the classical PSO algorithm, a particle moving in the search space follows Newtonian dynamics [34], so its velocity is always limited; consequently, the search process is limited and cannot cover the entire feasible area. Thus, the PSO algorithm is not guaranteed to converge to the global optimum and may even fail to find local optima. In 2004, Sun et al. [35] applied quantum mechanics to propose the quantum delta potential well PSO (QDPSO) algorithm, which endows the particles with quantum behavior. In a quantum system, the trajectory of a particle is not deterministic; that is, a particle can appear at any position in the feasible space, even one far away from its current position, if that position has a better fitness value. This quantum behavior therefore enables each particle to expand the search space efficiently and to avoid being trapped in local minima. Many improved quantum-behaved PSO (QPSO) methods have since been proposed to achieve more satisfactory performance. Davoodi et al. [36] proposed an improved quantum-behaved PSO-simplex method (IQPSOS) to solve power system load flow problems; Kamberaj [37] proposed a quantum-behaved PSO algorithm (q-GSQPO) to find the global minimum of potential energy functions; Li et al. [38] proposed a dynamic-context cooperative quantum-behaved PSO algorithm that incorporates the context vector with other particles while a cooperation operation is completed. In addition, Coelho [39] proposed an improved quantum-behaved PSO by hybridization with a chaotic mutation operator. However, like the PSO algorithm, the QPSO algorithm still easily suffers from premature convergence during its iterative search.
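For readers unfamiliar with the quantum-behaved update, the sketch below implements the widely cited "mbest" form of QPSO on a toy sphere function. It is an illustrative assumption, not the formulation used later in this paper: there is no velocity term, and each coordinate is resampled around a random local attractor between the personal and global bests with a step of β|mbest − x| ln(1/u), so occasional long jumps are possible. The contraction-expansion coefficient β = 0.75, the swarm size, the iteration count, and the clipping to the search box are arbitrary choices.

```python
import math
import random


def sphere(x):
    """Toy objective: sum of squares, global minimum 0 at the origin."""
    return sum(v * v for v in x)


def qpso(objective, dim, bounds, n_particles=20, n_iter=200, beta=0.75, seed=0):
    """Minimal QPSO sketch (mbest form, delta-potential-well sampling step).

    Unlike classical PSO, particles have no velocity: each coordinate is
    resampled around a local attractor with a log-distributed step length.
    """
    rng = random.Random(seed)
    lo, hi = bounds
    xs = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    pbest = [x[:] for x in xs]
    pbest_val = [objective(x) for x in xs]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]

    for _ in range(n_iter):
        # Mean of all personal best positions ("mbest").
        mbest = [sum(p[d] for p in pbest) / n_particles for d in range(dim)]
        for i in range(n_particles):
            for d in range(dim):
                phi = rng.random()
                attractor = phi * pbest[i][d] + (1.0 - phi) * gbest[d]
                u = 1.0 - rng.random()  # in (0, 1], so log(1/u) is finite
                step = beta * abs(mbest[d] - xs[i][d]) * math.log(1.0 / u)
                xs[i][d] = attractor + step if rng.random() < 0.5 else attractor - step
                xs[i][d] = min(max(xs[i][d], lo), hi)  # keep inside the feasible box
            val = objective(xs[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = xs[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = xs[i][:], val
    return gbest, gbest_val


if __name__ == "__main__":
    best, val = qpso(sphere, dim=5, bounds=(-10.0, 10.0))
    print(val)
```

The ln(1/u) factor has an unbounded tail, which is what lets a particle occasionally land far from its attractor; classical PSO, by contrast, moves each particle by a bounded velocity per step.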

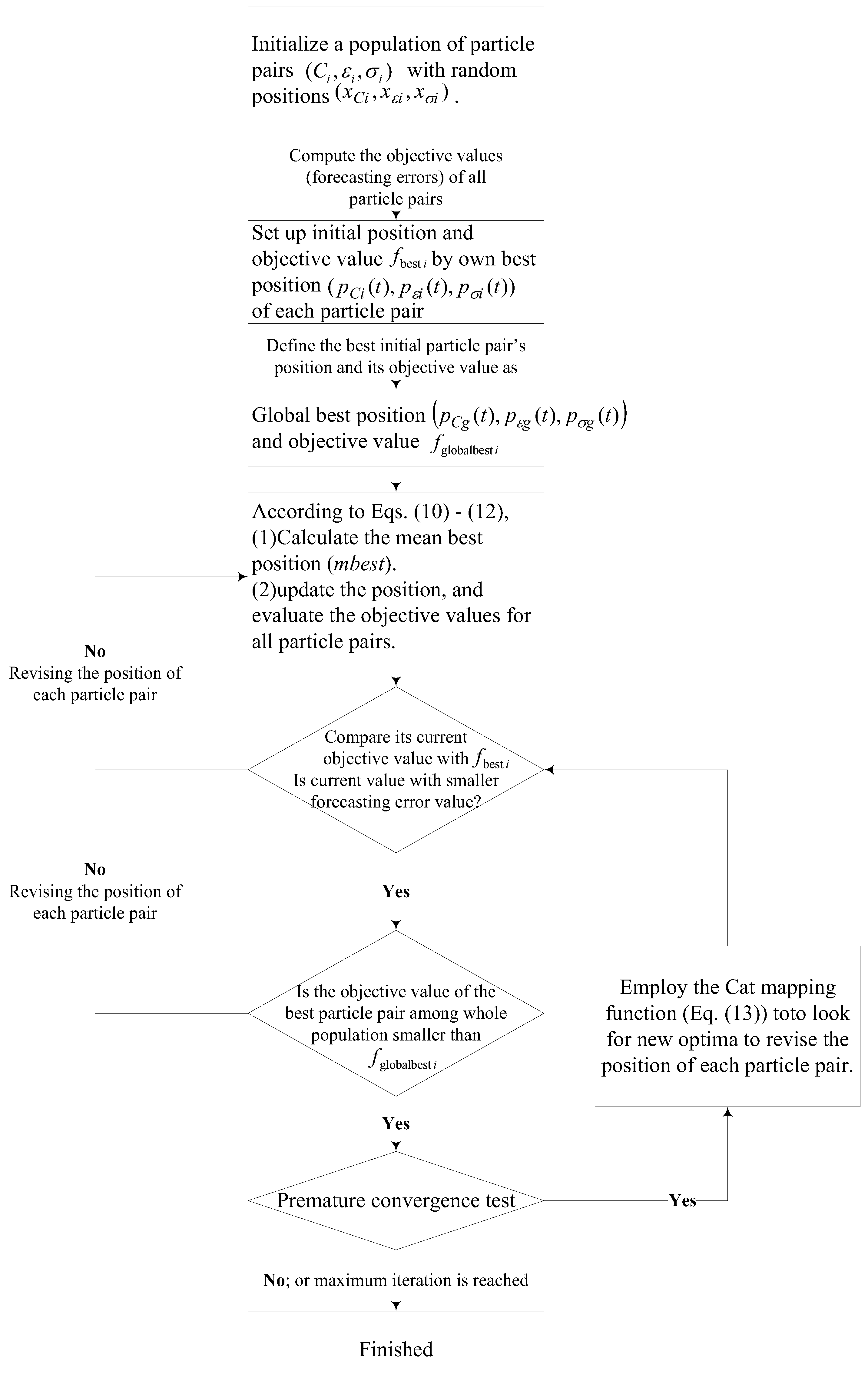

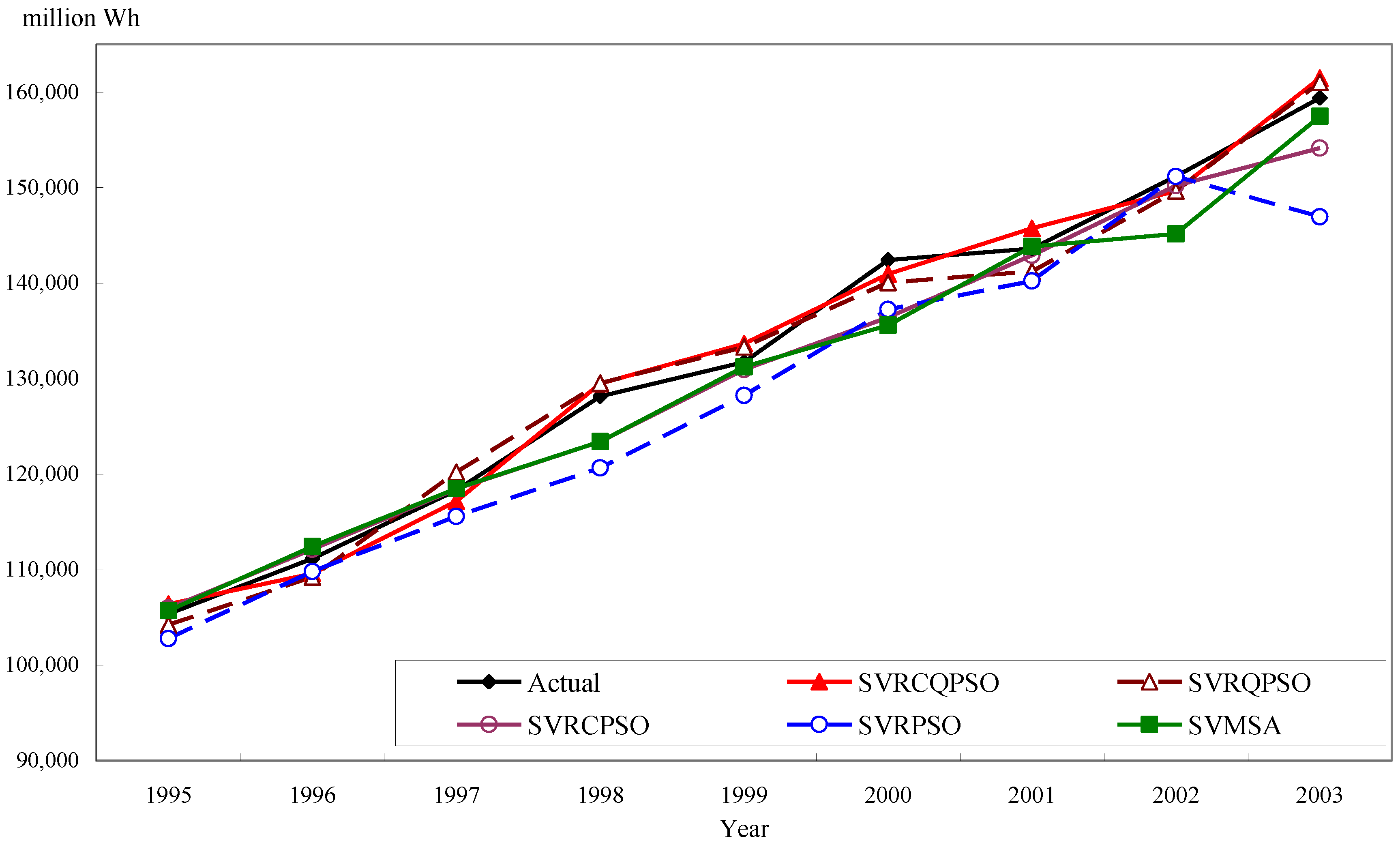

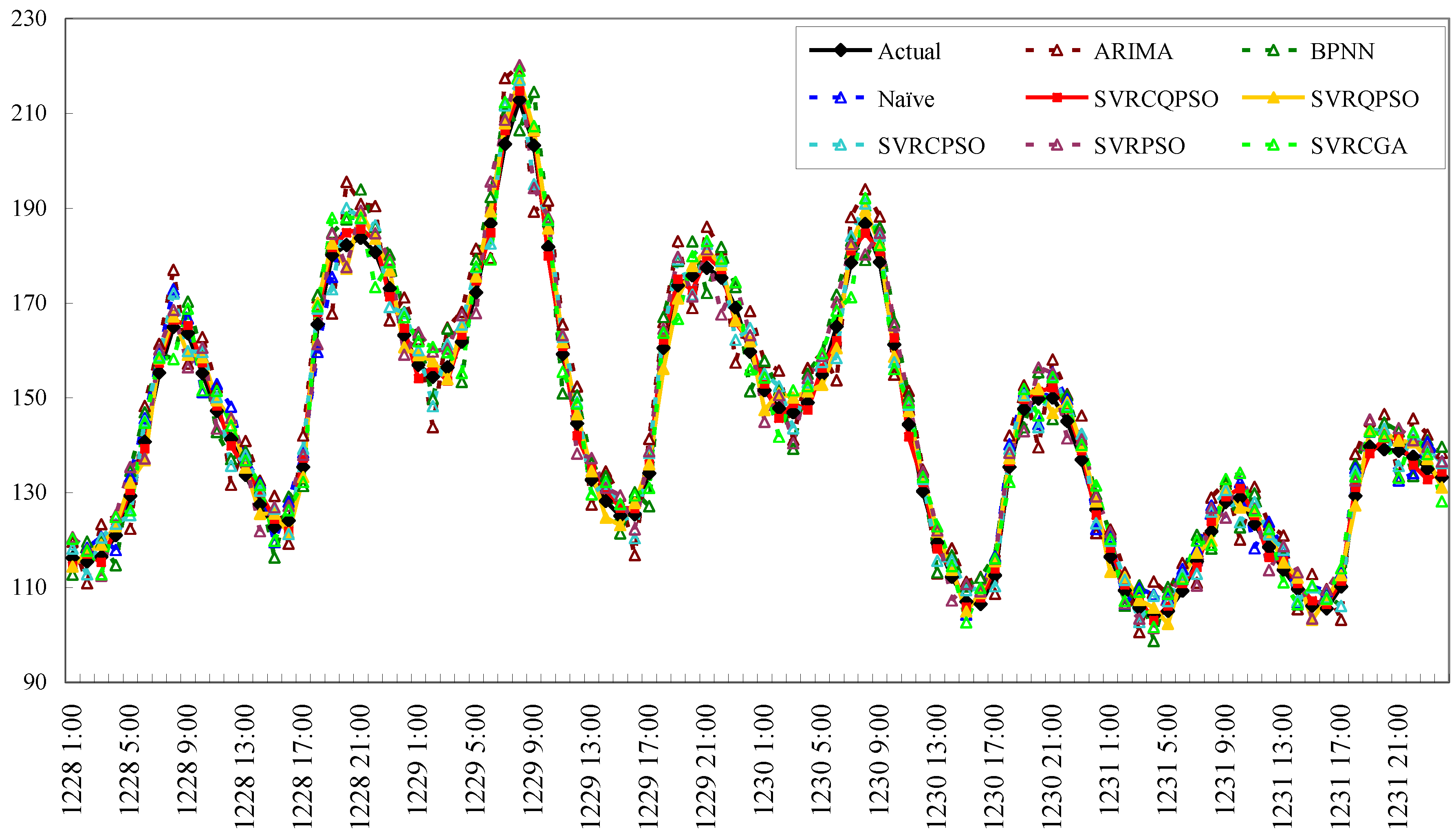

In this paper, the author applies quantum mechanics to give each particle in the PSO algorithm quantum behavior, thereby enlarging the search space; a chaotic mapping function is then employed to help the particles break away from local optima whenever premature convergence appears during the iterative search, eventually improving the forecasting accuracy. Finally, the forecasting performance of the proposed hybrid of the chaotic quantum PSO algorithm with an SVR model, named the SVRCQPSO model, is compared with four other existing forecasting approaches proposed by Hong [33] to illustrate its superiority in terms of forecasting accuracy.
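One way to picture the chaotic escape step is the hypothetical pair of helpers below. They assume that premature convergence is flagged when the population variance of the swarm’s fitness values falls below a threshold, and that the worst half of the particles are then re-seeded from a logistic-map sequence scaled to the search box. Both the trigger and the re-seeding rule, as well as the names `is_premature` and `chaotic_perturb`, are illustrative assumptions; the CQPSO procedure actually used in this paper is the one given in Section 2.

```python
import statistics


def logistic_sequence(z0, n, mu=4.0):
    """Return n successive logistic-map values following z0 (z0 in (0, 1))."""
    seq, z = [], z0
    for _ in range(n):
        z = mu * z * (1.0 - z)
        seq.append(z)
    return seq


def is_premature(fitness, threshold=1e-6):
    """Hypothetical trigger: the swarm's fitness values have (almost) stopped differing."""
    return statistics.pvariance(fitness) < threshold


def chaotic_perturb(positions, fitness, bounds, z0=0.37, fraction=0.5):
    """Hypothetical escape step: re-seed the worst `fraction` of particles
    (largest fitness, assuming minimization) with chaotic positions in the box.

    Returns a new list; the input positions are left unchanged.
    """
    lo, hi = bounds
    dim = len(positions[0])
    n_replace = int(len(positions) * fraction)
    worst = sorted(range(len(positions)), key=lambda i: fitness[i], reverse=True)[:n_replace]
    chaos = logistic_sequence(z0, n_replace * dim)
    new_positions = [p[:] for p in positions]
    for k, i in enumerate(worst):
        new_positions[i] = [lo + z * (hi - lo) for z in chaos[k * dim:(k + 1) * dim]]
    return new_positions


if __name__ == "__main__":
    swarm = [[1.0, 1.0] for _ in range(4)]  # a fully collapsed swarm
    fit = [2.0] * 4
    if is_premature(fit):
        swarm = chaotic_perturb(swarm, fit, (-5.0, 5.0))
    print(swarm)
```

Inside a QPSO loop, one would evaluate `is_premature` on the current fitness values at each iteration and, when it returns True, continue the search from the positions returned by `chaotic_perturb`; keeping the better half intact preserves the best solutions found so far.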

This paper is organized as follows. Section 2 illustrates the detailed processes of the proposed SVRCQPSO model, further introducing the basic formulation of SVR, the QPSO algorithm, and the CQPSO algorithm. Section 3 employs two numerical examples and conducts significance comparisons, in terms of forecasting accuracy, between the proposed model and the alternatives presented in a previously published paper. Finally, Section 4 provides some meaningful conclusions.