Non-Invasive Multivariate Prediction of Human Thermal Comfort Based on Facial Temperatures and Thermal Adaptive Action Recognition

Abstract

1. Introduction

1.1. Relevant Research

1.2. Main Contributions

1.3. Paper Organization

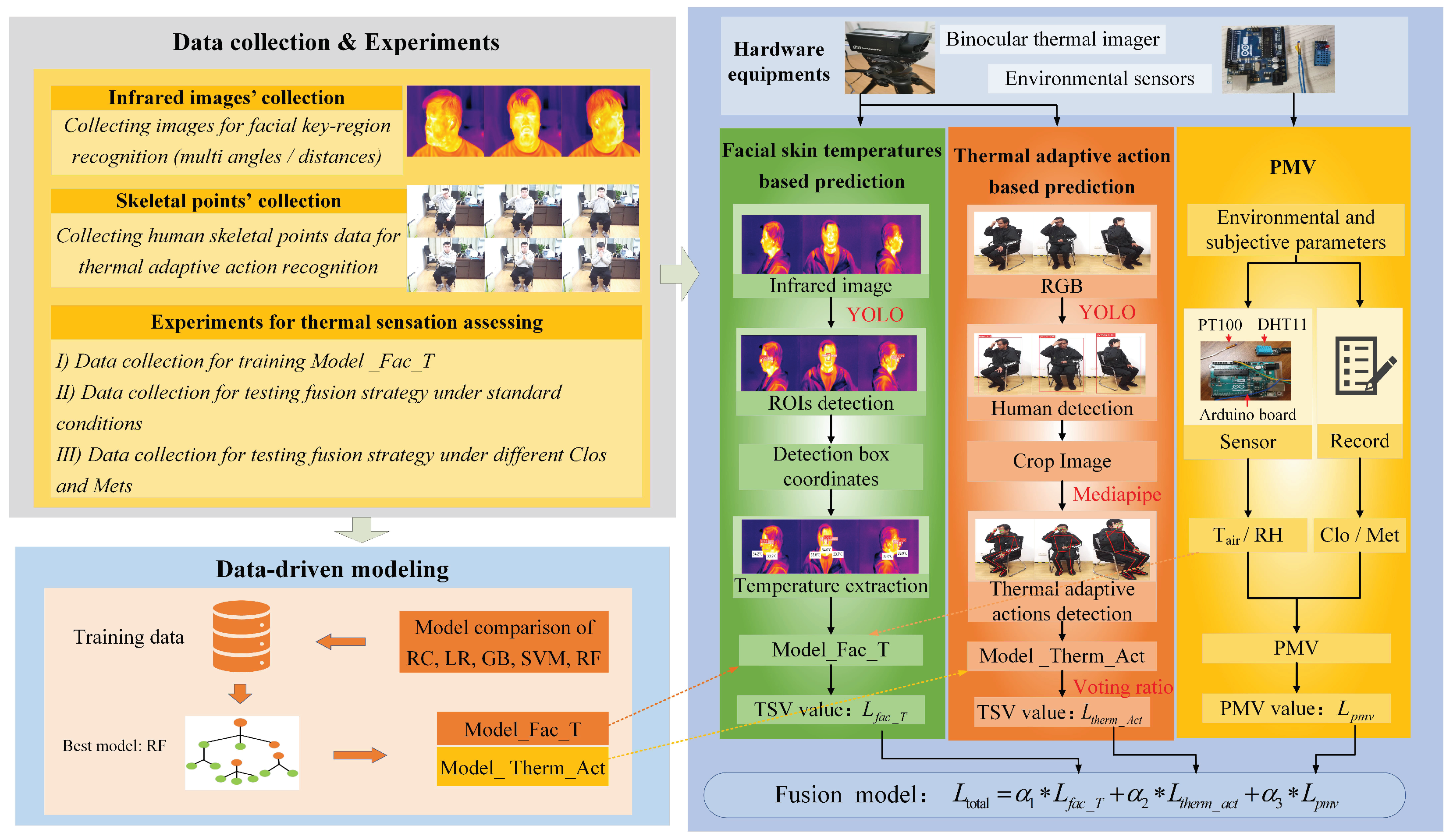

2. Methods

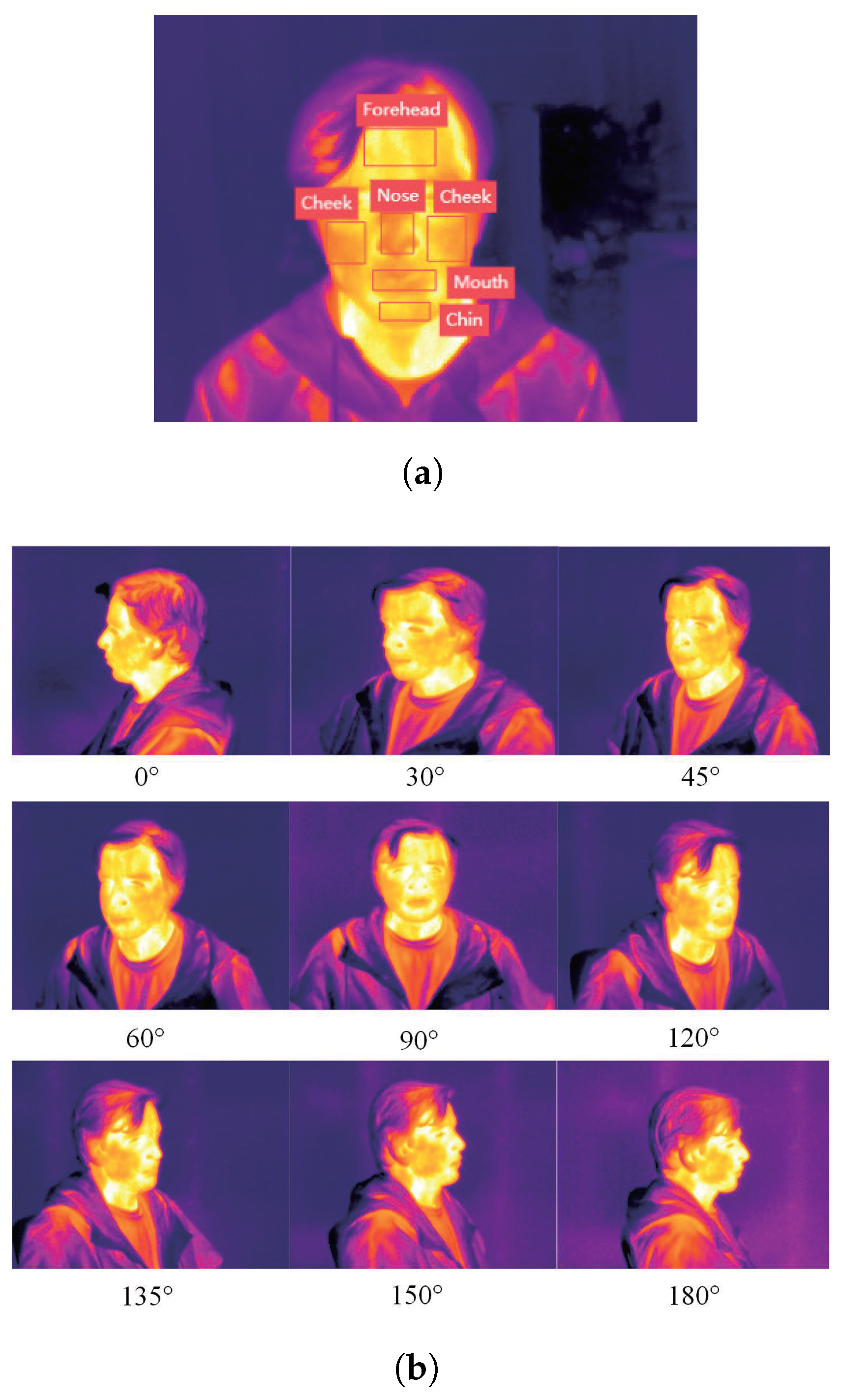

2.1. Multi-Region Facial Recognition Method

(1) Object detection algorithm

(2) Dataset collection of thermal images

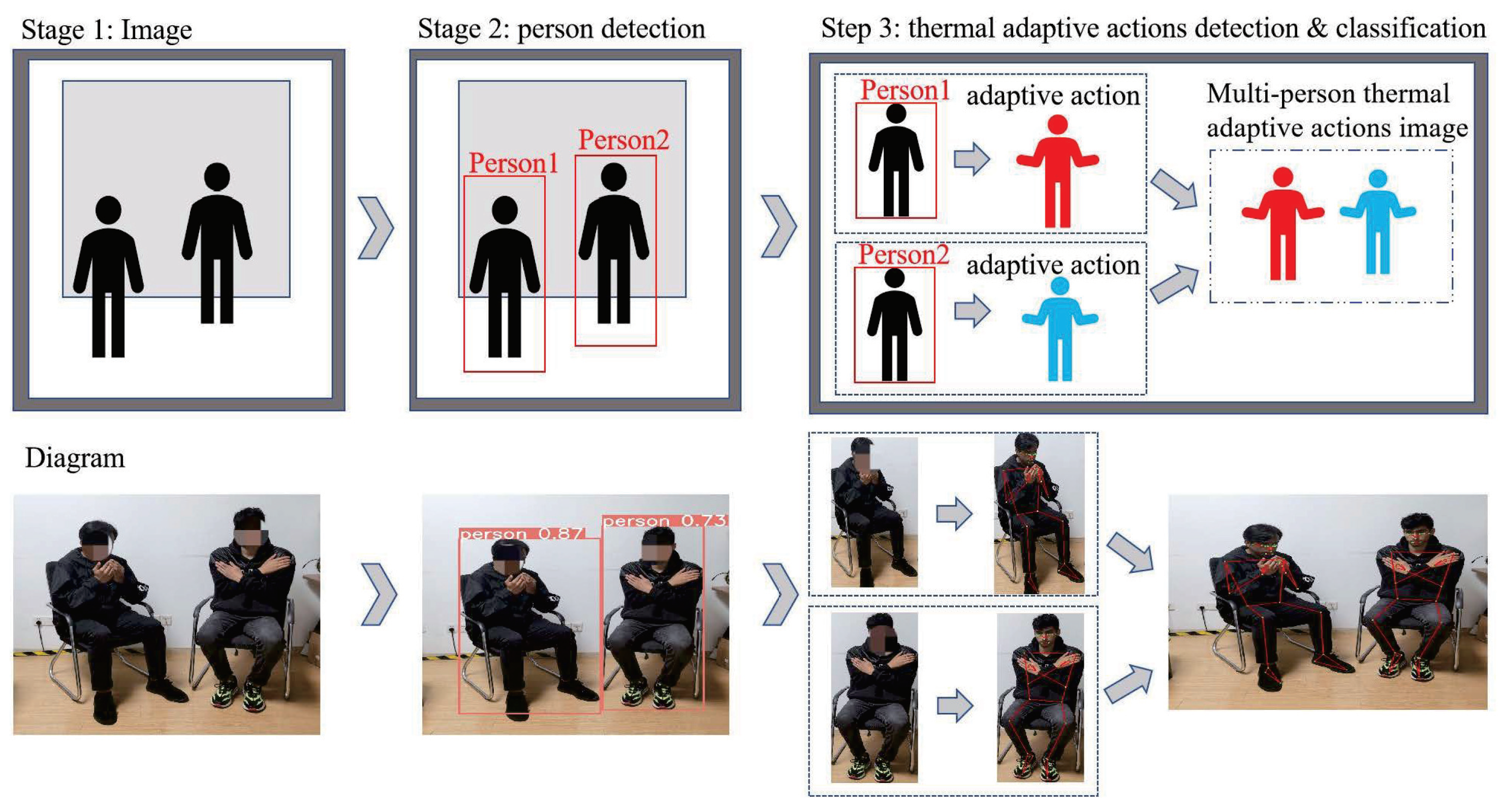

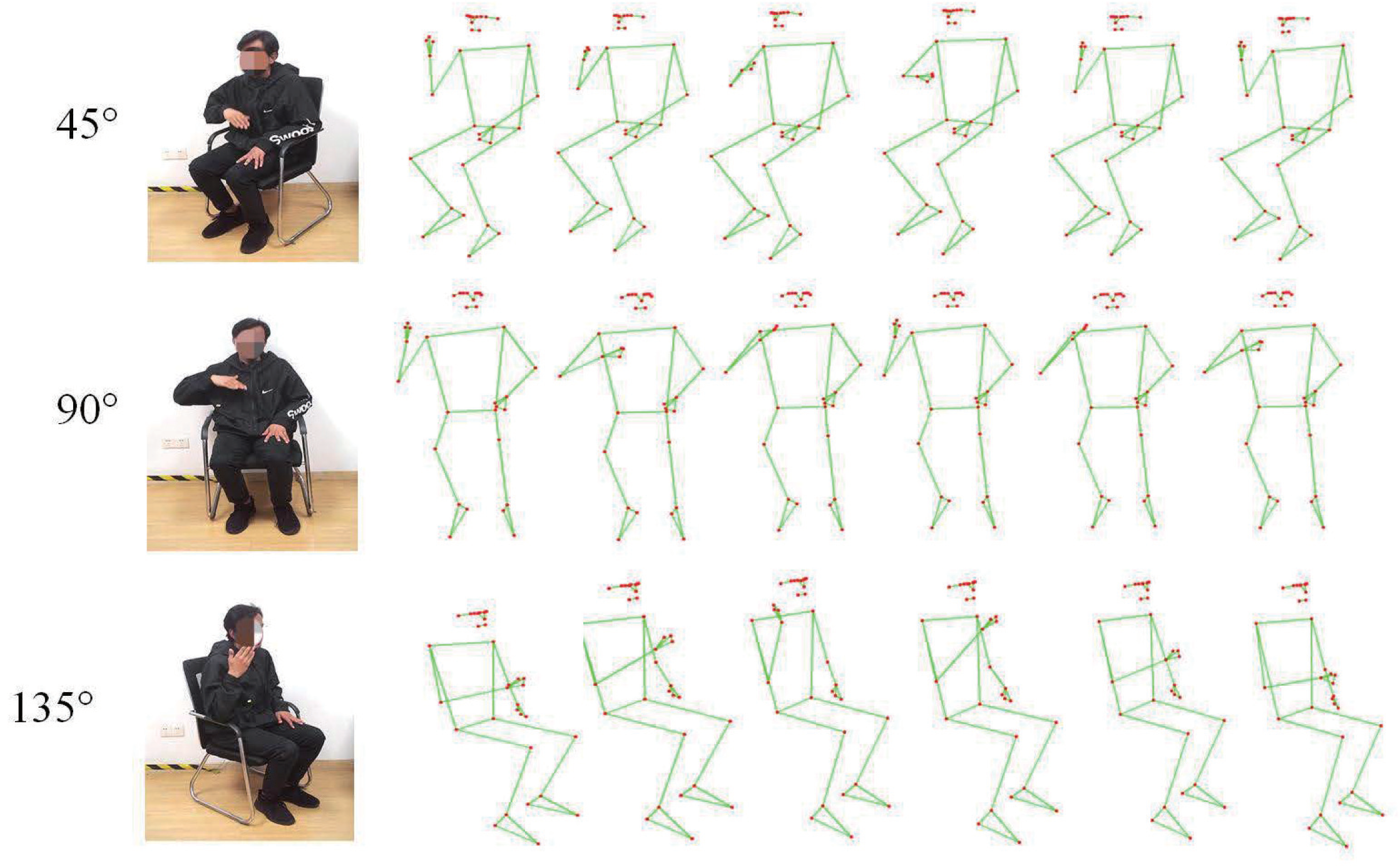
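Section 2.1 locates facial key regions in thermal images with an object detector (the YOLOv5 settings are listed in the hyperparameter table), and the Nomenclature lists nose and cheek temperatures as model inputs. Once boxes are available, a region temperature can be read from the radiometric temperature matrix. The following is a minimal sketch that assumes the camera exports a per-pixel temperature array in °C and that boxes arrive as pixel coordinates `(x1, y1, x2, y2)`. The function name and the choice between mean and maximum are hypothetical, not the authors' procedure.

```python
import numpy as np


def roi_temperatures(temp_map, boxes, stat="mean"):
    """Summarise skin temperature inside detected facial regions (hypothetical helper).

    temp_map: 2-D array of per-pixel temperatures in deg C (assumed export format).
    boxes: {region_name: (x1, y1, x2, y2)} in pixel coordinates, e.g. from a detector.
    stat: "mean" or "max" over the pixels inside each box (an illustrative choice).
    """
    h, w = temp_map.shape
    reducer = {"mean": np.mean, "max": np.max}[stat]
    result = {}
    for name, (x1, y1, x2, y2) in boxes.items():
        # Clip the box to the frame and convert to integer pixel indices
        x1, x2 = int(max(0, x1)), int(min(w, x2))
        y1, y2 = int(max(0, y1)), int(min(h, y2))
        if x2 <= x1 or y2 <= y1:
            result[name] = float("nan")  # box lies outside the frame
            continue
        result[name] = float(reducer(temp_map[y1:y2, x1:x2]))
    return result


# Toy 6x8 frame at 30 C with a warmer 2x2 patch standing in for the nose
frame = np.full((6, 8), 30.0)
frame[2:4, 3:5] = 34.0
print(roi_temperatures(frame, {"nose": (3, 2, 5, 4), "cheek": (0, 0, 2, 2)}))
```

A mean is less sensitive to a few hot edge pixels at the box border, while a maximum is less sensitive to background pixels inside a loose box; which one suits the data depends on how tightly the detector fits each region.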

2.2. Thermal Adaptive Action Recognition Method

- (1)

- Action detection algorithm

- (2)

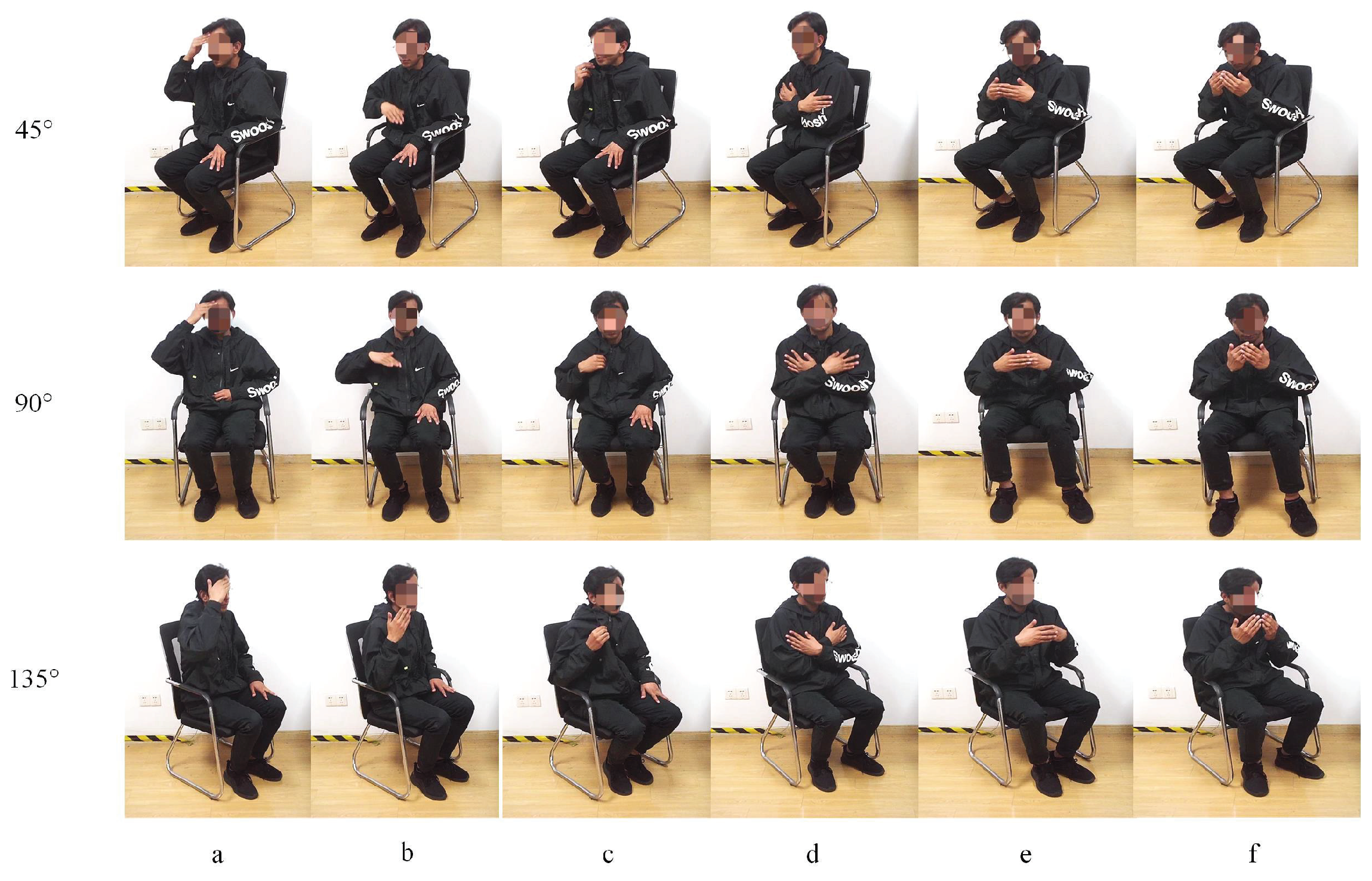

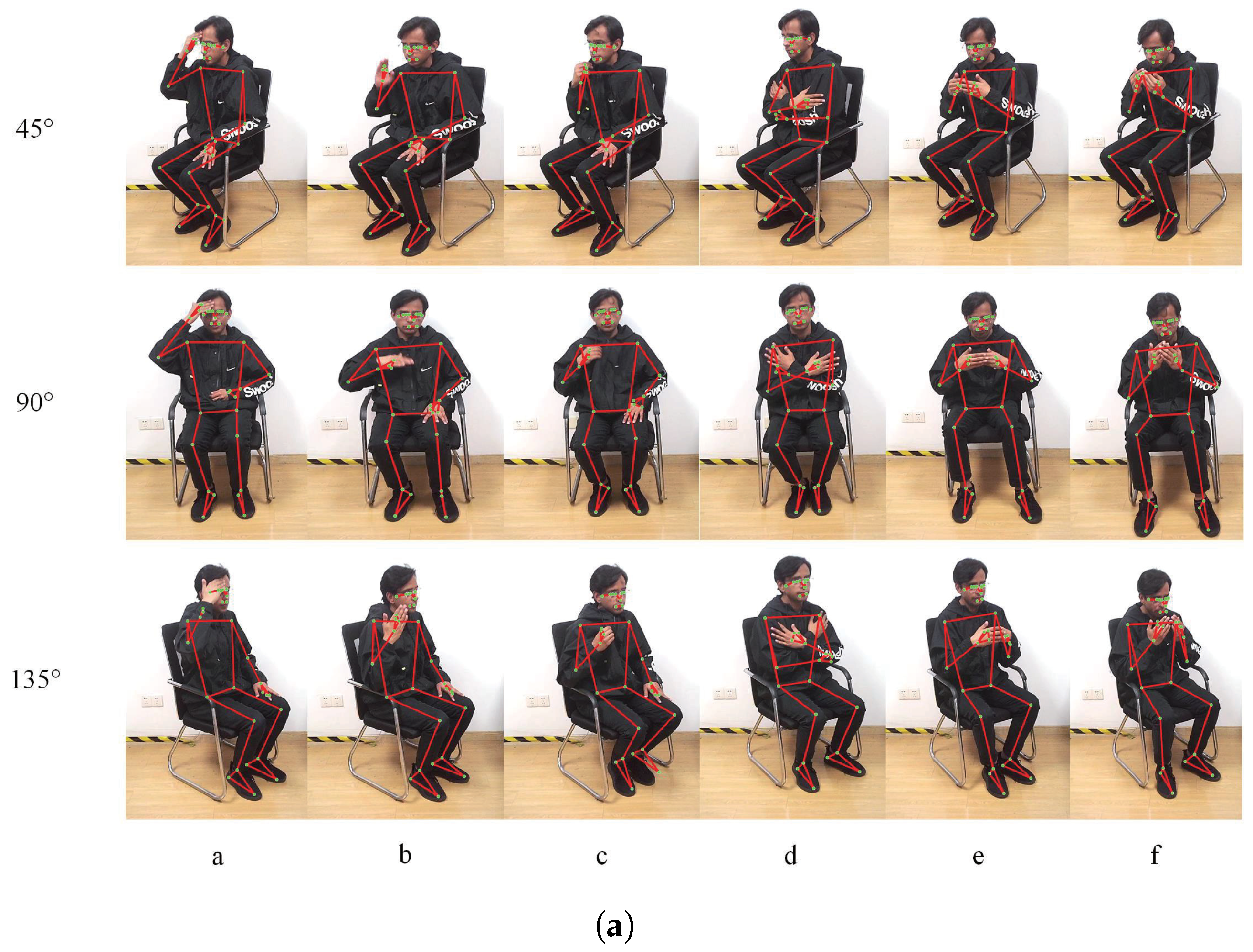

- Dataset collection of skeletal points
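Actions are recognised from skeletal points produced by Mediapipe, whose BlazePose model outputs 33 body landmarks, each with x, y, z and a visibility score [63]. Before such points are stored as a dataset, they are commonly made independent of where the subject stands and how large they appear in the frame. The sketch below shows one such normalisation (centre on the hip midpoint, scale by torso length). It is an assumed preprocessing step for illustration, not the paper's documented pipeline.

```python
import numpy as np

# Landmark indices in the 33-point BlazePose / MediaPipe Pose topology
LEFT_SHOULDER, RIGHT_SHOULDER = 11, 12
LEFT_HIP, RIGHT_HIP = 23, 24


def pose_feature_vector(landmarks):
    """Turn one frame of pose landmarks into a translation- and scale-normalised vector.

    landmarks: array-like of shape (33, 4) holding (x, y, z, visibility) per point.
    Returns a flat vector of length 132.
    """
    lm = np.asarray(landmarks, dtype=float)
    if lm.shape != (33, 4):
        raise ValueError("expected a (33, 4) landmark array")
    xyz, vis = lm[:, :3], lm[:, 3:]
    hip_center = (xyz[LEFT_HIP] + xyz[RIGHT_HIP]) / 2
    shoulder_center = (xyz[LEFT_SHOULDER] + xyz[RIGHT_SHOULDER]) / 2
    # Torso length is the scale reference; fall back to 1 for degenerate poses
    torso = np.linalg.norm(shoulder_center - hip_center)
    scale = torso if torso > 1e-6 else 1.0
    normalised = (xyz - hip_center) / scale
    return np.concatenate([normalised, vis], axis=1).ravel()
```

With the Mediapipe Pose solution configured as in the hyperparameter table, the input array could be built from a result object as `np.array([[p.x, p.y, p.z, p.visibility] for p in results.pose_landmarks.landmark])`.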

- (3)

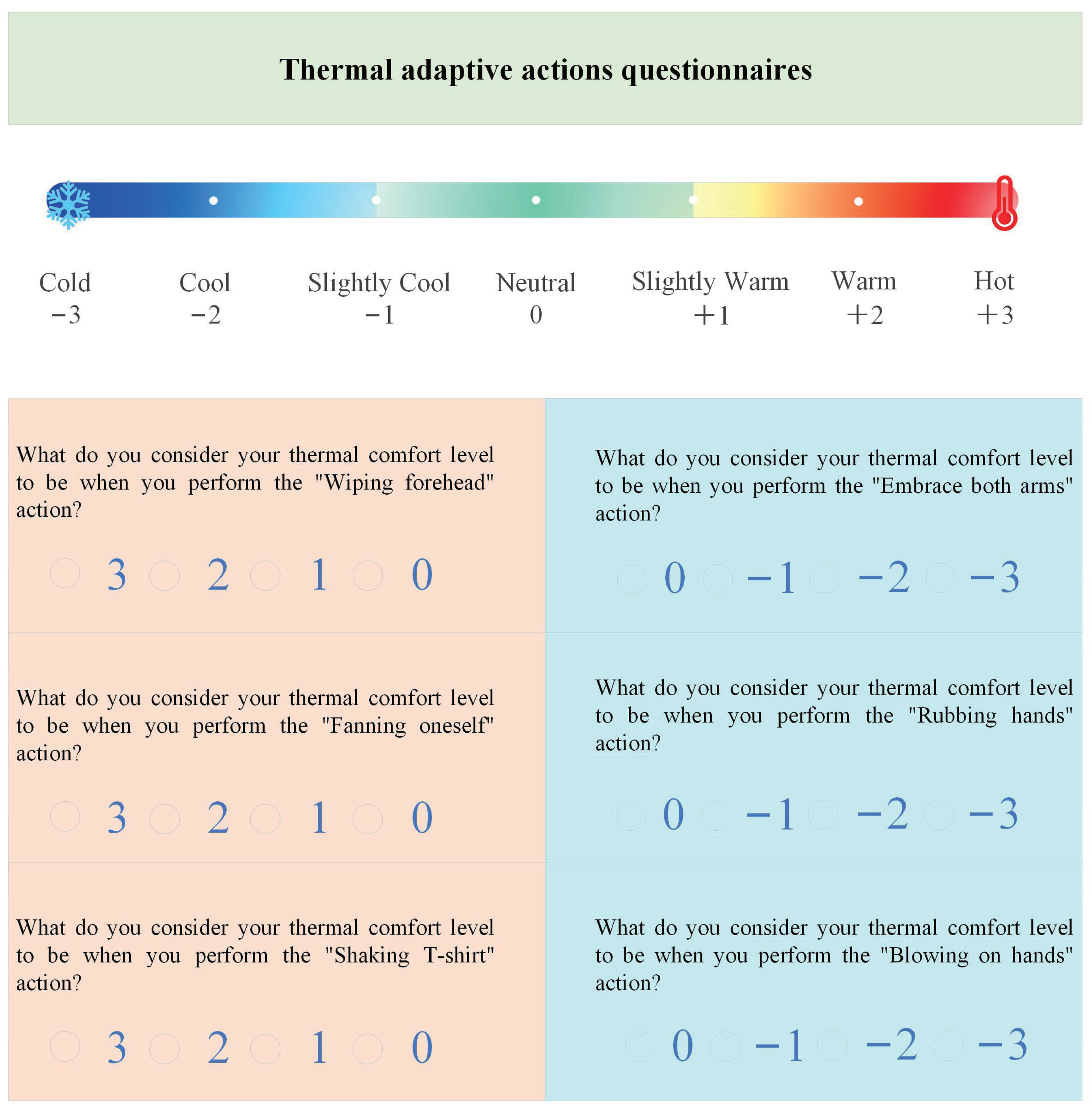

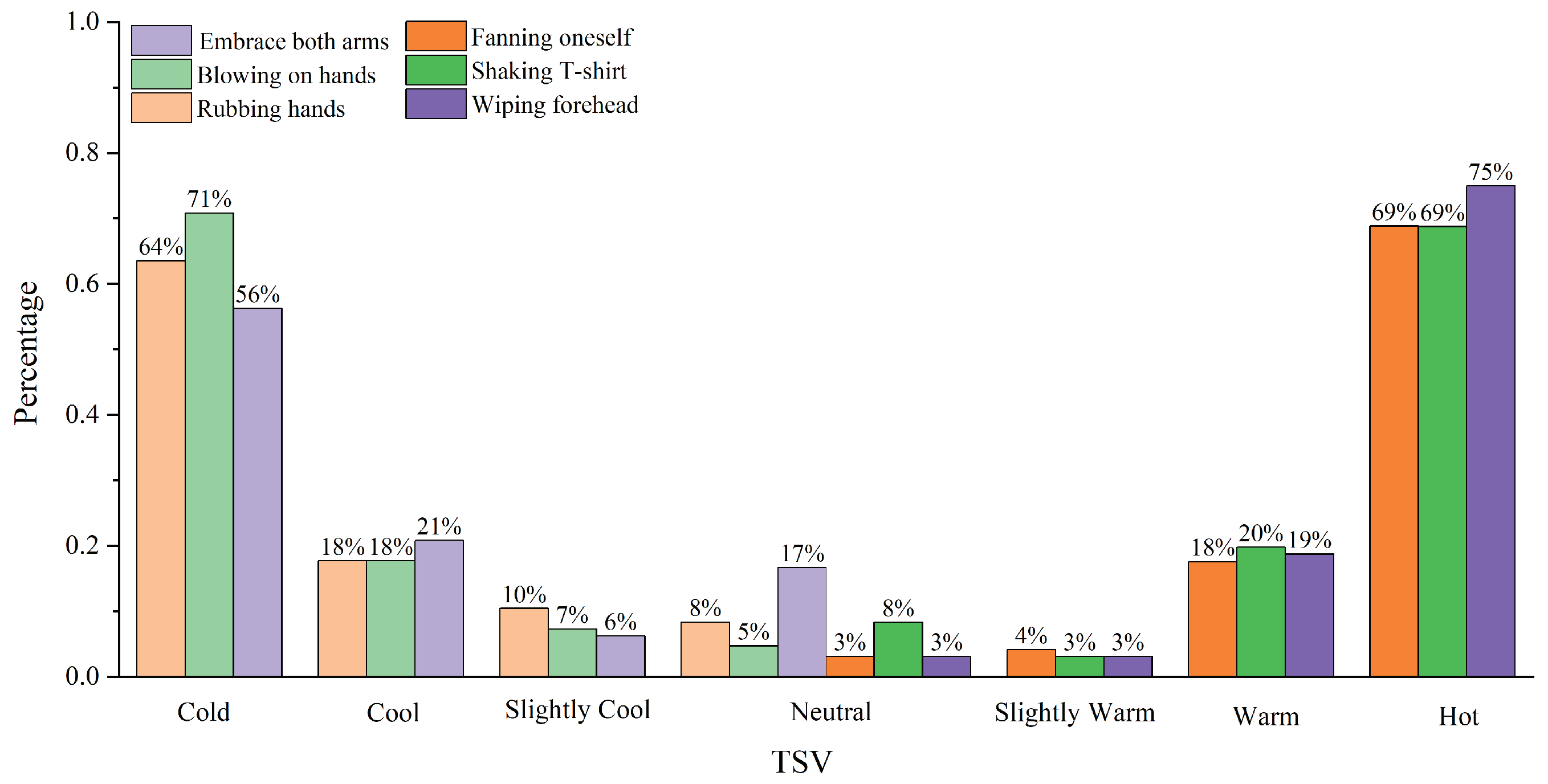

- Determination of TSV levels corresponding to thermal adaptive actions

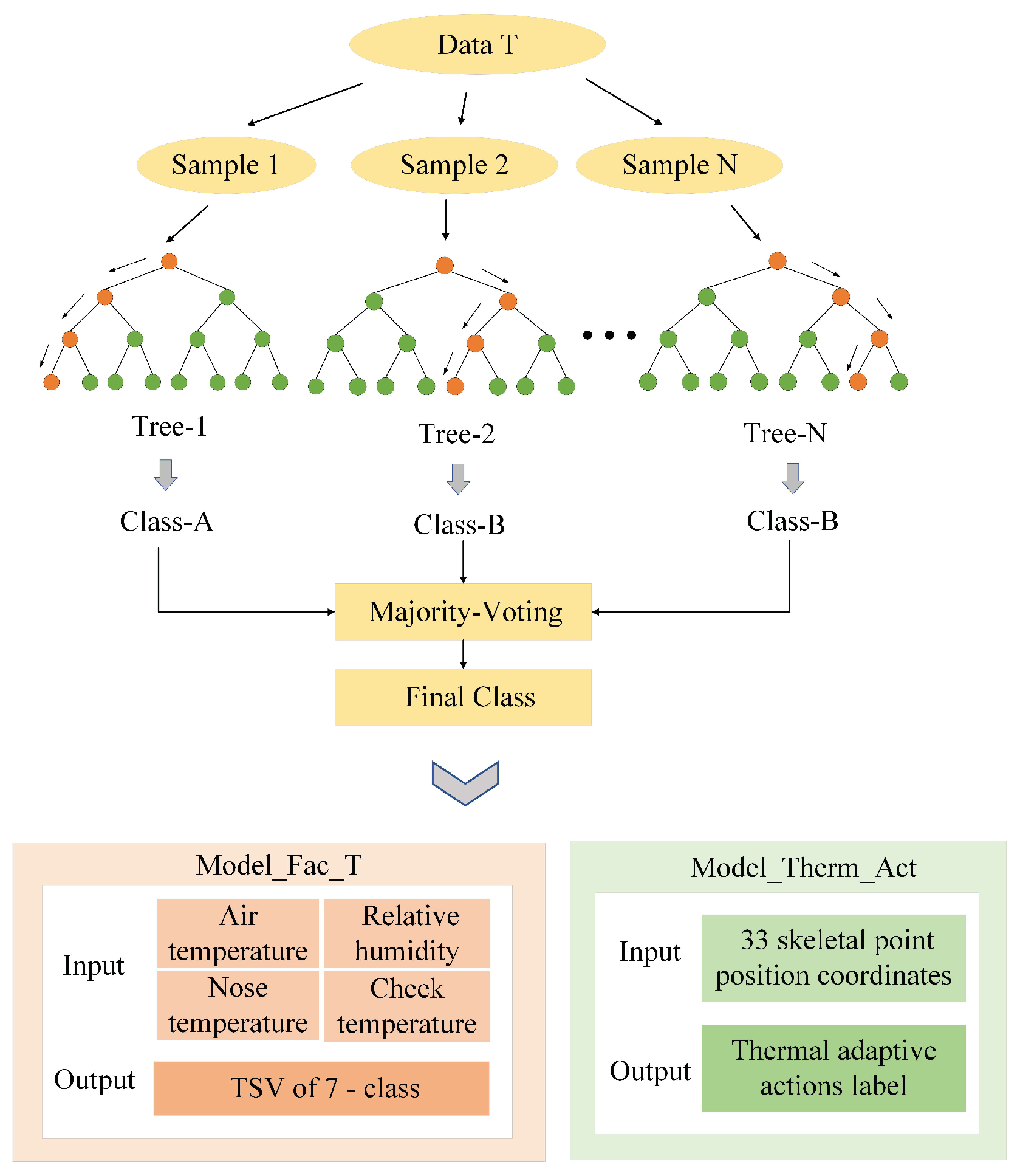
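Subsection (3) assigns TSV levels to thermal adaptive actions, and the Nomenclature describes the action-based estimate as the product sum of the predicted actions' voted TSV values. One reading of that is a probability-weighted sum of each recognised action's voted TSV level. The sketch below assumes the action classifier outputs one probability per action; the action names and TSV values are hypothetical placeholders, not the study's voting results, and renormalising over the recognised actions is an added assumption.

```python
# Hypothetical voted TSV level per action on the 7-point scale (-3 cold ... +3 hot).
# These names and values are illustrative placeholders only.
ACTION_TSV = {
    "fanning_face": 2,
    "wiping_sweat": 3,
    "rubbing_hands": -2,
    "hugging_arms": -3,
}


def action_tsv(action_probs, tsv_table=ACTION_TSV):
    """Probability-weighted (product) sum of the voted TSV levels of recognised actions.

    action_probs: {action_name: probability} from an action classifier.
    Returns None when no known thermal adaptive action was recognised.
    """
    known = {a: p for a, p in action_probs.items() if a in tsv_table and p > 0}
    total = sum(known.values())
    if total == 0:
        return None
    # Renormalise over the recognised actions, then sum probability x voted TSV
    return sum(p / total * tsv_table[a] for a, p in known.items())


print(action_tsv({"fanning_face": 0.75, "wiping_sweat": 0.25}))  # 2.25
```

Returning `None` rather than 0 keeps "no action seen" distinct from "actions that point to neutral", which matters when the result is later fused with other estimates.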

2.3. Thermal Comfort Prediction Model

(1) Random forest
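Model_Fac_T maps environmental and facial temperatures to a TSV class with a random forest [66]. The sketch below uses scikit-learn's `RandomForestClassifier` with the four variables listed in the Nomenclature (indoor temperature, relative humidity, nose temperature, cheek temperature). The data and the labelling rule are synthetic and exist only to make the example runnable; `n_estimators=200` and the train/test split are arbitrary choices, not the paper's settings.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# Synthetic stand-in data (NOT study data): four input variables per sample
rng = np.random.default_rng(42)
n = 300
indoor_temp = rng.uniform(18, 32, n)  # deg C
rel_humidity = rng.uniform(30, 70, n)  # %
nose_temp = 0.4 * indoor_temp + 21 + rng.normal(0, 0.5, n)  # deg C
cheek_temp = 0.3 * indoor_temp + 25 + rng.normal(0, 0.4, n)  # deg C
X = np.column_stack([indoor_temp, rel_humidity, nose_temp, cheek_temp])
# Toy 3-class label (-1 cool, 0 neutral, +1 warm) from an invented temperature rule
y = np.digitize(indoor_temp, [22, 27]) - 1

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

clf = RandomForestClassifier(n_estimators=200, random_state=0)
clf.fit(X_train, y_train)
print("test accuracy:", clf.score(X_test, y_test))
names = ["indoor_temp", "rel_humidity", "nose_temp", "cheek_temp"]
print("feature importances:", dict(zip(names, clf.feature_importances_.round(3))))
```

Feature importances are a quick check of which inputs the forest relies on; on real data they would show how much the facial temperatures add beyond the room conditions.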

(2) Fusion strategy
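The Nomenclature defines a fusion result together with separate weights for the facial-temperature TSV, the thermal adaptive action estimate and the PMV result. It also lists the facial-region mAP, the RF accuracy and the action-recognition accuracy, which suggests the weights may reflect how reliable each source is; that rule is not reproduced here. The sketch below is a plain weighted average with placeholder weights. Dropping the action term when no action is recognised is an assumption, loosely motivated by the separate "total data" and "actions detected" rows in the results tables.

```python
def fuse_tsv(tsv_face, pmv, tsv_action=None, w_face=0.5, w_action=0.3, w_pmv=0.2):
    """Weighted fusion of facial-temperature TSV, action-based TSV and PMV.

    All inputs are on the 7-point scale (-3 cold ... +3 hot). tsv_action is None
    when no thermal adaptive action was recognised; its weight is then dropped
    and the remaining weights are renormalised. Default weights are placeholders.
    Returns (continuous fused value, nearest TSV level clamped to -3..+3).
    """
    terms = [(w_face, tsv_face), (w_pmv, pmv)]
    if tsv_action is not None:
        terms.append((w_action, tsv_action))
    total_w = sum(w for w, _ in terms)
    if total_w <= 0:
        raise ValueError("the active weights must sum to a positive value")
    fused = sum(w * v for w, v in terms) / total_w
    # round() sends exact .5 values to the even level; clamp to the TSV scale
    level = max(-3, min(3, round(fused)))
    return fused, level


print(fuse_tsv(1.0, 0.0, 2.0))  # all three sources available
print(fuse_tsv(1.0, 0.0))  # no action recognised: facial TSV and PMV only
```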

2.4. Overall Framework

2.5. Data Collection Experiment

- (1)

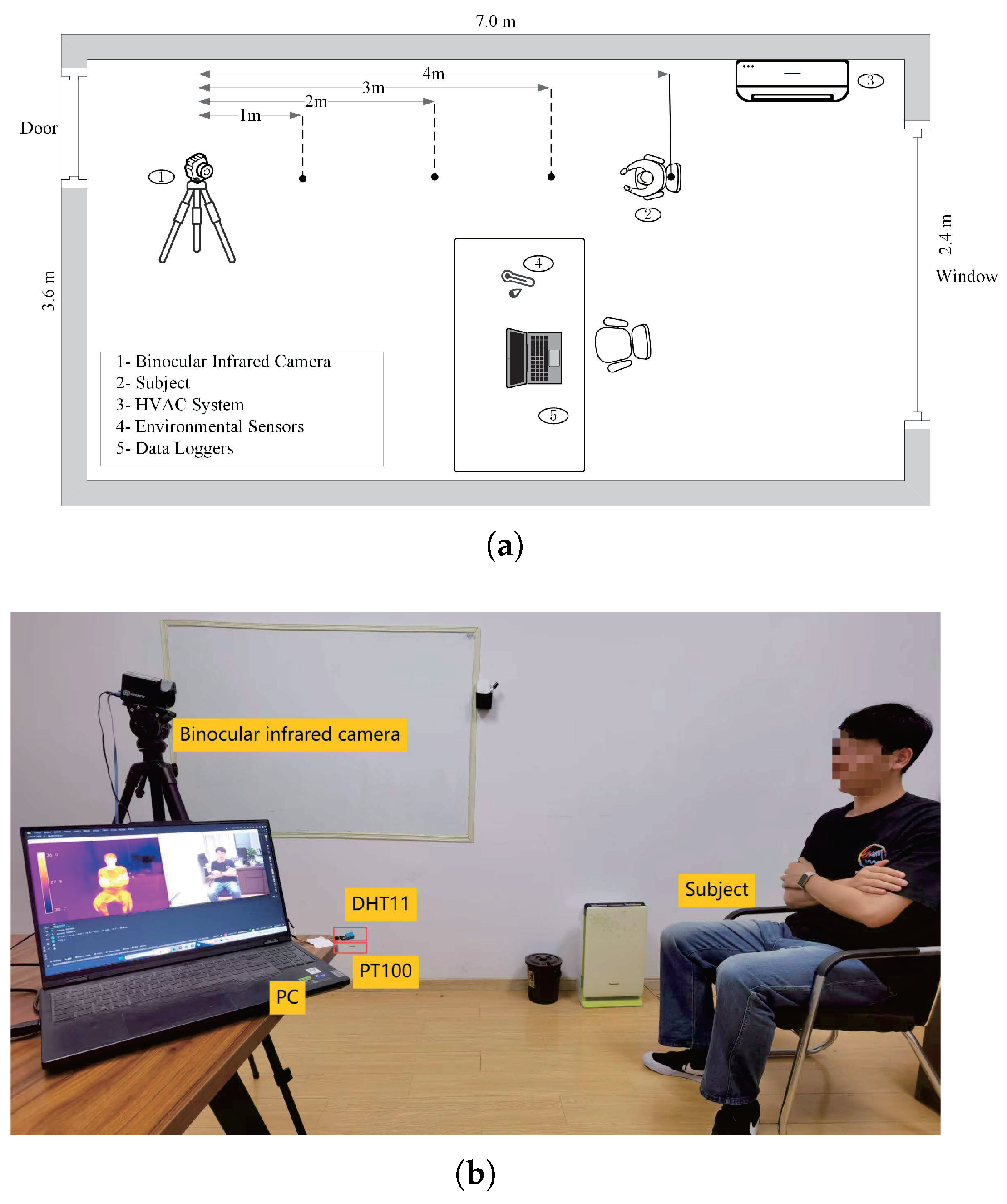

- Experimental environment and equipments

- (2)

- Subjects and TSV levels

- (3)

- Experiment process and experiment content

3. Results

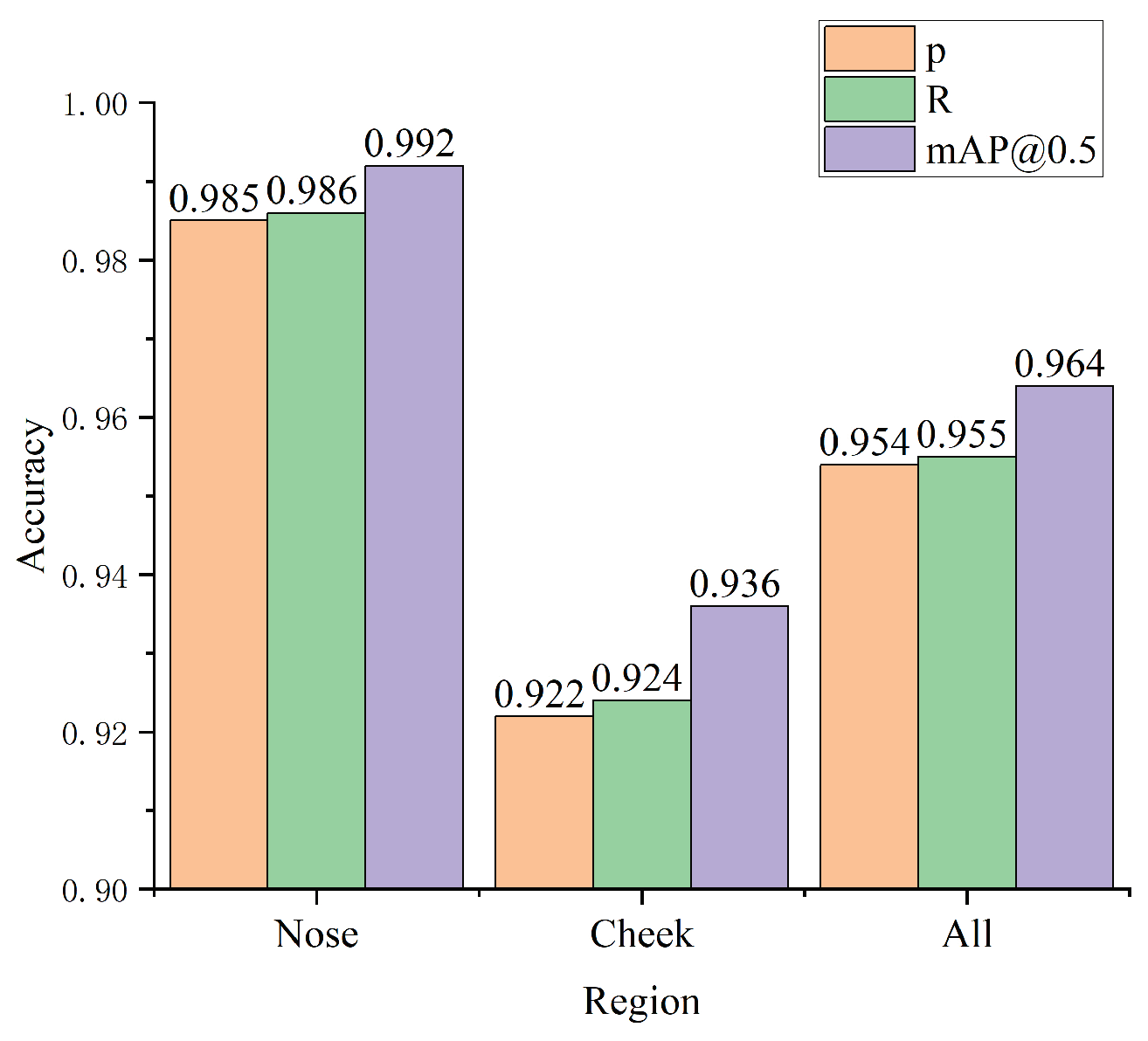

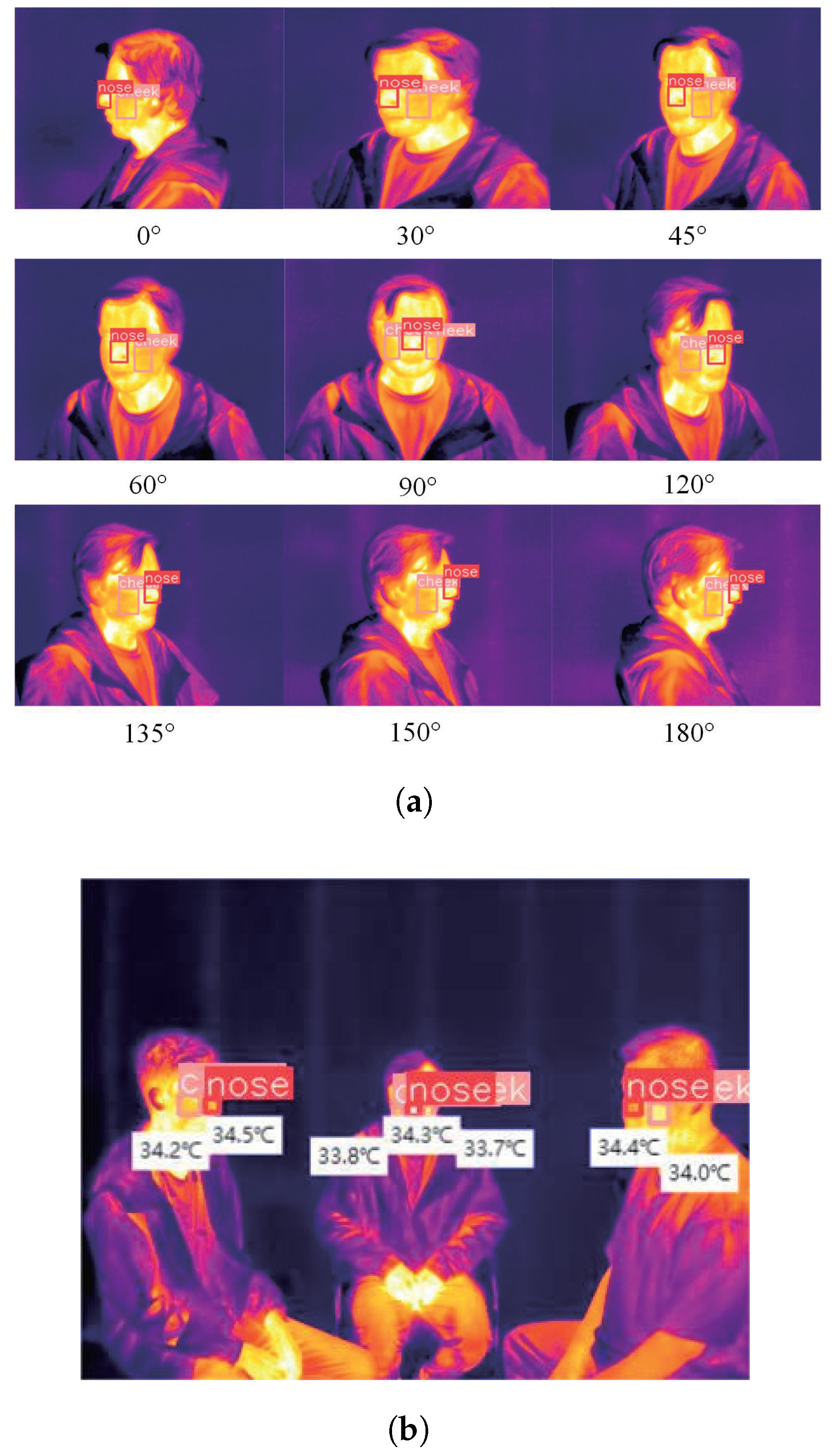

3.1. Recognition Results of Facial Regions and Temperatures
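Facial key-region recognition is scored by mean average precision at an IoU threshold of 0.5 (Nomenclature). A detection counts as a true positive when its box overlaps an unmatched ground-truth box with IoU ≥ 0.5; AP is the area under the resulting precision-recall curve, and mAP averages AP over classes (here, facial regions). The sketch below uses all-point interpolation; detection toolkits such as YOLOv5 use their own interpolation scheme, so values need not match their reports exactly.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def average_precision(detections, ground_truths, iou_threshold=0.5):
    """All-point interpolated AP for one class (e.g. one facial region).

    detections: list of (image_id, confidence, box) predicted by the detector.
    ground_truths: {image_id: [box, ...]} with the annotated boxes.
    """
    n_gt = sum(len(boxes) for boxes in ground_truths.values())
    if n_gt == 0:
        return 0.0
    matched = {img: [False] * len(boxes) for img, boxes in ground_truths.items()}
    hits = []  # 1 = true positive, 0 = false positive, in descending confidence
    for img, _, box in sorted(detections, key=lambda d: d[1], reverse=True):
        overlaps = [iou(box, gt) for gt in ground_truths.get(img, [])]
        best = max(range(len(overlaps)), key=overlaps.__getitem__, default=None)
        if best is not None and overlaps[best] >= iou_threshold and not matched[img][best]:
            matched[img][best] = True  # each ground-truth box is matched at most once
            hits.append(1)
        else:
            hits.append(0)
    precisions, recalls, tp = [], [], 0
    for k, hit in enumerate(hits, start=1):
        tp += hit
        precisions.append(tp / k)
        recalls.append(tp / n_gt)
    # Sum recall increments times the best precision at that recall or higher
    ap, prev_recall = 0.0, 0.0
    for i, r in enumerate(recalls):
        ap += (r - prev_recall) * max(precisions[i:])
        prev_recall = r
    return ap


def mean_average_precision(per_class_ap):
    """mAP: the mean of the per-class AP values."""
    return sum(per_class_ap.values()) / len(per_class_ap)
```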

3.2. Recognition Results of Thermal Adaptive Actions

3.3. Results of Thermal Comfort Prediction

(1) Results of TSV prediction based on facial region temperatures

(2) Results of TSV prediction based on the fusion model

(3) Prediction results of the fusion model under changed conditions (Clo/Met)
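The PMV baseline in these comparisons depends directly on Clo and Met, which is why the changed-condition experiments are informative. The sketch below follows the Fanger PMV procedure published as computer code in ISO 7730 [14]; ASHRAE 55 [13] uses the same model for still air and adds an adjustment for elevated air speed, which is omitted here. Inputs are air temperature and mean radiant temperature (°C), relative air speed (m/s), relative humidity (%), metabolic rate (met) and clothing insulation (clo); the function name is illustrative.

```python
import math


def pmv_iso7730(ta, tr, vel, rh, met, clo, wme=0.0):
    """Fanger PMV following the ISO 7730 reference procedure (sketch)."""
    pa = rh * 10 * math.exp(16.6536 - 4030.183 / (ta + 235))  # vapour pressure, Pa
    icl = 0.155 * clo  # clothing insulation, m2K/W
    m = met * 58.15  # metabolic rate, W/m2
    mw = m - wme * 58.15  # internal heat production, W/m2
    fcl = 1.0 + 1.29 * icl if icl <= 0.078 else 1.05 + 0.645 * icl
    hcf = 12.1 * math.sqrt(vel)  # forced-convection coefficient
    taa, tra = ta + 273, tr + 273
    # Iteratively solve for the clothing surface temperature (scaled by 1/100)
    tcla = taa + (35.5 - ta) / (3.5 * icl + 0.1)
    p1 = icl * fcl
    p2, p3, p4 = p1 * 3.96, p1 * 100, p1 * taa
    p5 = 308.7 - 0.028 * mw + p2 * (tra / 100) ** 4
    xn, xf = tcla / 100, tcla / 50
    hc, n = hcf, 0
    while abs(xn - xf) > 0.00015:
        xf = (xf + xn) / 2
        hcn = 2.38 * abs(100.0 * xf - taa) ** 0.25  # natural convection
        hc = max(hcf, hcn)
        xn = (p5 + p4 * hc - p2 * xf ** 4) / (100 + p3 * hc)
        n += 1
        if n > 150:
            raise RuntimeError("PMV iteration did not converge")
    tcl = 100 * xn - 273
    hl1 = 3.05e-3 * (5733 - 6.99 * mw - pa)  # skin diffusion
    hl2 = 0.42 * (mw - 58.15) if mw > 58.15 else 0.0  # sweating
    hl3 = 1.7e-5 * m * (5867 - pa)  # latent respiration
    hl4 = 0.0014 * m * (34 - ta)  # dry respiration
    hl5 = 3.96 * fcl * (xn ** 4 - (tra / 100) ** 4)  # radiation
    hl6 = fcl * hc * (tcl - ta)  # convection
    ts = 0.303 * math.exp(-0.036 * m) + 0.028  # thermal sensation coefficient
    return ts * (mw - hl1 - hl2 - hl3 - hl4 - hl5 - hl6)


# Same room, lighter clothing (0.2 clo, as in Experiment III) lowers PMV
print(pmv_iso7730(26, 26, 0.1, 50, 1.2, 0.5), pmv_iso7730(26, 26, 0.1, 50, 1.2, 0.2))
```

Because PMV reacts to Clo and Met only through these fixed heat-balance terms, an error in the assumed clothing or activity level propagates straight into the prediction.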

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Nomenclature

| Abbreviation/Symbol | Description |
|---|---|
| HVAC | Heating, ventilation, and air conditioning |
| PMV | Predicted mean vote |
| TSV | Thermal sensation vote |
| SVM | Support vector machine |
| LR | Logistic regression |
| GB | Gradient boosting |
| RF | Random forest |
| ROI | Region of interest |
| Met | Metabolic level |
| Clo | Clothing thermal resistance |
| Model_Fac_T | Thermal comfort prediction model based on human facial temperature |
| Model_Therm_Act | Thermal comfort prediction model based on thermal adaptive action detection |
| | Indoor temperature |
| | Relative humidity |
| | Nose temperature |
| | Cheek temperature |
| | The thermal comfort level predicted by Model_Fac_T |
| | The product sum of the voted TSV values of the predicted actions |
| | PMV value calculated according to the ASHRAE standard |
| | The fusion result |
| | The weight of the facial temperature-based TSV prediction |
| | The weight of the thermal adaptive action prediction |
| | The weight of the PMV result |
| | The mean average precision of facial key-region recognition |
| | The TSV prediction accuracy of the RF model (Model_Fac_T) |
| | The recognition accuracy of thermal adaptive actions based on Mediapipe |
| | Mean average precision calculated at an IoU threshold of 0.5 |

Appendix A

References

1. Cao, X.; Dai, X.; Liu, J. Building energy-consumption status worldwide and the state-of-the-art technologies for zero-energy buildings during the past decade. Energy Build. 2016, 128, 198–213.

2. Ma, Z.; Wang, J.; Ye, S.; Wang, R.; Dong, F.; Feng, Y. Real-time indoor thermal comfort prediction in campus buildings driven by deep learning algorithms. J. Build. Eng. 2023, 78, 107603.

3. Abt, E.; Suh, H.H.; Catalano, P.; Koutrakis, P. Relative contribution of outdoor and indoor particle sources to indoor concentrations. Environ. Sci. Technol. 2000, 34, 3579–3587.

4. Nafiz, M.; Zaki, S.A.; Nadarajah, P.D.; Singh, M.K. Influence of psychological and personal factors on predicting individual’s thermal comfort in an office building using linear estimation and machine learning model. Adv. Build. Energy Res. 2024, 18, 105–125.

5. Iskandaryan, D.; Ramos, F.; Trilles, S. Application of deep learning and machine learning in air quality modeling. In Current Trends and Advances in Computer-Aided Intelligent Environmental Data Engineering; Elsevier: Amsterdam, The Netherlands, 2022; pp. 11–23.

6. Iskandaryan, D.; Ramos, F.; Trilles, S. Air quality prediction in smart cities using machine learning technologies based on sensor data: A review. Appl. Sci. 2020, 10, 2401.

7. Pérez-Lombard, L.; Ortiz, J.; Pout, C. A review on buildings energy consumption information. Energy Build. 2008, 40, 394–398.

8. Huizenga, C.; Abbaszadeh, S.; Zagreus, L.; Arens, E.A. Air quality and thermal comfort in office buildings: Results of a large indoor environmental quality survey. Proc. Healthy Build. 2006, III, 393–397.

9. Ma, N.; Zhang, Q.; Murai, F.; Braham, W.W.; Samuelson, H.W. Learning building occupants’ indoor environmental quality complaints and dissatisfaction from text-mining Booking.com reviews in the United States. Build. Environ. 2023, 237, 110319.

10. Luo, M.; Wang, Z.; Brager, G.; Cao, B.; Zhu, Y. Indoor climate experience, migration, and thermal comfort expectation in buildings. Build. Environ. 2018, 141, 262–272.

11. Luo, T.; He, Q.; Wang, W.; Fan, X. Response of summer land surface temperature of small and medium-sized cities to their neighboring urban spatial morphology. Build. Environ. 2024, 250, 111198.

12. Almagro-Lidón, M.; Pérez-Carramiñana, C.; Galiano-Garrigós, A.; Emmitt, S. Thermal comfort in school children: Testing the validity of the Fanger method for a Mediterranean climate. Build. Environ. 2024, 253, 111305.

13. ANSI/ASHRAE Standard 55-2020; Thermal Environmental Conditions for Human Occupancy. ASHRAE: Atlanta, GA, USA, 2020.

14. ISO 7730:2005; Ergonomics of the Thermal Environment-Analytical Determination and Interpretation of Thermal Comfort Using Calculation of the PMV and PPD Indices and Local Thermal Comfort Criteria. ISO: Geneva, Switzerland, 2005.

15. Zare, S.; Hasheminezhad, N.; Sarebanzadeh, K.; Zolala, F.; Hemmatjo, R.; Hassanvand, D. Assessing thermal comfort in tourist attractions through objective and subjective procedures based on ISO 7730 standard: A field study. Urban Clim. 2018, 26, 1–9.

16. Özbey, M.F.; Turhan, C. A novel comfort temperature determination model based on psychology of the participants for educational buildings in a temperate climate zone. J. Build. Eng. 2023, 76, 107415.

17. Zhou, X.; Xu, L.; Zhang, J.; Niu, B.; Luo, M.; Zhou, G.; Zhang, X. Data-driven thermal comfort model via support vector machine algorithms: Insights from ASHRAE RP-884 database. Energy Build. 2020, 211, 109795.

18. Sun, J.; Cong, S.; Mao, H.; Zhou, X.; Wu, X.; Zhang, X. Identification of eggs from different production systems based on hyperspectra and CS-SVM. Br. Poult. Sci. 2017, 58, 256–261.

19. Chen, Y.; Chen, L.; Huang, C.; Lu, Y.; Wang, C. A dynamic tire model based on HPSO-SVM. Int. J. Agric. Biol. Eng. 2019, 12, 36–41.

20. Jiang, L.; Yao, R. Modelling personal thermal sensations using C-Support Vector Classification (C-SVC) algorithm. Build. Environ. 2016, 99, 98–106.

21. Luo, M.; Xie, J.; Yan, Y.; Ke, Z.; Yu, P.; Wang, Z.; Zhang, J. Comparing machine learning algorithms in predicting thermal sensation using ASHRAE Comfort Database II. Energy Build. 2020, 210, 109776.

22. Xu, Q.; Cai, J.R.; Zhang, W.; Bai, J.W.; Li, Z.Q.; Tan, B.; Sun, L. Detection of citrus Huanglongbing (HLB) based on the HLB-induced leaf starch accumulation using a home-made computer vision system. Biosyst. Eng. 2022, 218, 163–174.

23. Yu, J.; Zhangzhong, L.; Lan, R.; Zhang, X.; Xu, L.; Li, J. Ensemble Learning Simulation Method for Hydraulic Characteristic Parameters of Emitters Driven by Limited Data. Agronomy 2023, 13, 986.

24. Li, D.; Menassa, C.C.; Kamat, V.R. Personalized human comfort in indoor building environments under diverse conditioning modes. Build. Environ. 2017, 126, 304–317.

25. Katić, K.; Li, R.; Zeiler, W. Machine learning algorithms applied to a prediction of personal overall thermal comfort using skin temperatures and occupants’ heating behavior. Appl. Ergon. 2020, 85, 103078.

26. Alam, N.; Zaki, S.A.; Ahmad, S.A.; Singh, M.K.; Azizan, A.; Othman, N.A. Machine learning approach for predicting personal thermal comfort in air conditioning offices in Malaysia. Build. Environ. 2024, 266, 112083.

27. Nadarajah, P.D.; Lakmal, H.; Singh, M.K.; Zaki, S.A.; Ooka, R.; Rijal, H.; Mahapatra, S. Identification and application of the best-suited machine learning algorithm based on thermal comfort data characteristic: A data-driven approach. J. Build. Eng. 2024, 95, 110319.

28. Simone, A.; Kolarik, J.; Iwamatsu, T.; Asada, H.; Dovjak, M.; Schellen, L.; Shukuya, M.; Olesen, B.W. A relation between calculated human body exergy consumption rate and subjectively assessed thermal sensation. Energy Build. 2011, 43, 1–9.

29. Metzmacher, H.; Wölki, D.; Schmidt, C.; Frisch, J.; van Treeck, C. Real-time human skin temperature analysis using thermal image recognition for thermal comfort assessment. Energy Build. 2018, 158, 1063–1078.

30. Ghahramani, A.; Tang, C.; Becerik-Gerber, B. An online learning approach for quantifying personalized thermal comfort via adaptive stochastic modeling. Build. Environ. 2015, 92, 86–96.

31. Aryal, A.; Becerik-Gerber, B. A comparative study of predicting individual thermal sensation and satisfaction using wrist-worn temperature sensor, thermal camera and ambient temperature sensor. Build. Environ. 2019, 160, 106223.

32. Huizenga, C.; Zhang, H.; Arens, E.; Wang, D. Skin and core temperature response to partial- and whole-body heating and cooling. J. Therm. Biol. 2004, 29, 549–558.

33. Yang, B.; Li, X.; Hou, Y.; Meier, A.; Cheng, X.; Choi, J.H.; Wang, F.; Wang, H.; Wagner, A.; Yan, D.; et al. Non-invasive (non-contact) measurements of human thermal physiology signals and thermal comfort/discomfort poses—A review. Energy Build. 2020, 224, 110261.

34. Ghahramani, A.; Castro, G.; Karvigh, S.A.; Becerik-Gerber, B. Towards unsupervised learning of thermal comfort using infrared thermography. Appl. Energy 2018, 211, 41–49.

35. Cheng, X.; Yang, B.; Hedman, A.; Olofsson, T.; Li, H.; Van Gool, L. NIDL: A pilot study of contactless measurement of skin temperature for intelligent building. Energy Build. 2019, 198, 340–352.

36. Cosma, A.C.; Simha, R. Machine learning method for real-time non-invasive prediction of individual thermal preference in transient conditions. Build. Environ. 2019, 148, 372–383.

37. Wu, Y.; Zhao, J.; Cao, B. A systematic review of research on personal thermal comfort using infrared technology. Energy Build. 2023, 301, 113666.

38. Farokhi, S.; Flusser, J.; Sheikh, U.U. Near infrared face recognition: A literature survey. Comput. Sci. Rev. 2016, 21, 1–17.

39. Miura, J.; Demura, M.; Nishi, K.; Oishi, S. Thermal comfort measurement using thermal-depth images for robotic monitoring. Pattern Recognit. Lett. 2020, 137, 108–113.

40. Jazizadeh, F.; Jung, W. Personalized thermal comfort inference using RGB video images for distributed HVAC control. Appl. Energy 2018, 220, 829–841.

41. Jung, W.; Jazizadeh, F. Vision-based thermal comfort quantification for HVAC control. Build. Environ. 2018, 142, 513–523.

42. Cheng, X.; Yang, B.; Olofsson, T.; Liu, G.; Li, H. A pilot study of online non-invasive measuring technology based on video magnification to determine skin temperature. Build. Environ. 2017, 121, 1–10.

43. Jeoung, J.; Jung, S.; Hong, T.; Lee, M.; Koo, C. Thermal comfort prediction based on automated extraction of skin temperature of face component on thermal image. Energy Build. 2023, 298, 113495.

44. Bai, Y.; Liu, L.; Liu, K.; Yu, S.; Shen, Y.; Sun, D. Non-intrusive personal thermal comfort modeling: A machine learning approach using infrared face recognition. Build. Environ. 2024, 247, 111033.

45. Li, K.; Li, W.; Liu, F.; Xue, W. Non-invasive human thermal comfort assessment based on multiple angle/distance facial key-region temperatures recognition. Build. Environ. 2023, 246, 110956.

46. Jocher, G.; Nishimura, K.; Mineeva, T.; Vilariño, R. YOLOv5. Code Repository. 2020. Available online: https://github.com/ultralytics/yolov5 (accessed on 1 September 2022).

47. Meier, A.; Dyer, W.; Graham, C. Using human gestures to control a building’s heating and cooling System. In Energy Efficiency in Domestic Appliances and Lighting (EEDAL’17); European Union: Luxembourg, 2017; pp. 627–635.

48. Yang, B.; Cheng, X.; Dai, D.; Olofsson, T.; Li, H.; Meier, A. Real-time and contactless measurements of thermal discomfort based on human poses for energy efficient control of buildings. Build. Environ. 2019, 162, 106284.

49. Yang, B.; Cheng, X.; Dai, D.; Olofsson, T.; Li, H.; Meier, A. Macro pose based non-invasive thermal comfort perception for energy efficiency. arXiv 2018, arXiv:1811.07690.

50. Wu, Y.; Cao, B. Recognition and prediction of individual thermal comfort requirement based on local skin temperature. J. Build. Eng. 2022, 49, 104025.

51. Cosma, A.C.; Simha, R. Using the contrast within a single face heat map to assess personal thermal comfort. Build. Environ. 2019, 160, 106163.

52. Li, D.; Menassa, C.C.; Kamat, V.R. Non-intrusive interpretation of human thermal comfort through analysis of facial infrared thermography. Energy Build. 2018, 176, 246–261.

53. Garg, S.; Saxena, A.; Gupta, R. Yoga pose classification: A CNN and MediaPipe inspired deep learning approach for real-world application. J. Ambient. Intell. Humaniz. Comput. 2023, 14, 16551–16562.

54. Makhijani, R.; Sagar, S.; Reddy, K.B.P.; Mourya, S.K.; Krishna, J.S.; Kulkarni, M.M. Yoga Pose Rectification Using Mediapipe and Catboost Classifier. In Computer Vision and Machine Intelligence: Proceedings of CVMI 2022; Springer: Berlin/Heidelberg, Germany, 2023; pp. 379–387.

55. Bucarelli, N.; El-Gohary, N. Deep learning approach for recognizing cold and warm thermal discomfort cues from videos. Build. Environ. 2023, 242, 110277.

56. Duan, W.; Wang, Y.; Li, J.; Zheng, Y.; Ning, C.; Duan, P. Real-time surveillance-video-based personalized thermal comfort recognition. Energy Build. 2021, 244, 110989.

57. Zhang, Y.; Lin, Z.; Zheng, Z.; Zhang, S.; Fang, Z. A review of investigation of the metabolic rate effects on human thermal comfort. Energy Build. 2024, 315, 114300.

58. Zhang, H.; Xie, X.; Hong, S.; Lv, H. Impact of metabolism and the clothing thermal resistance on inpatient thermal comfort. Energy Built Environ. 2021, 2, 223–232.

59. Ji, W.; Pan, Y.; Xu, B.; Wang, J. A real-time apple targets detection method for picking robot based on ShufflenetV2-YOLOX. Agriculture 2022, 12, 1619.

60. Yang, B.; Liu, Y.; Liu, P.; Wang, F.; Cheng, X.; Lv, Z. A novel occupant-centric stratum ventilation system using computer vision: Occupant detection, thermal comfort, air quality, and energy savings. Build. Environ. 2023, 237, 110332.

61. Zhang, Q.; Bao, X.; Sun, S.; Lin, F. Lightweight network for small target fall detection based on feature fusion and dynamic convolution. J. Real-Time Image Process. 2024, 21, 17.

62. Lugaresi, C.; Tang, J.; Nash, H.; McClanahan, C.; Uboweja, E.; Hays, M.; Zhang, F.; Chang, C.L.; Yong, M.; Lee, J.; et al. Mediapipe: A framework for perceiving and processing reality. In Proceedings of the Third Workshop on Computer Vision for AR/VR at IEEE Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 17 June 2019; Volume 2019, pp. 1–4.

63. Bazarevsky, V.; Grishchenko, I.; Raveendran, K.; Zhu, T.; Zhang, F.; Grundmann, M. Blazepose: On-device real-time body pose tracking. arXiv 2020, arXiv:2006.10204.

64. Singh, A.K.; Kumbhare, V.A.; Arthi, K. Real-time human pose detection and recognition using mediapipe. In International Conference on Soft Computing and Signal Processing; Springer: Singapore, 2021; pp. 145–154.

65. Min, Z. Human body pose intelligent estimation based on BlazePose. In Proceedings of the 2022 IEEE International Conference on Electrical Engineering, Big Data and Algorithms (EEBDA), Changchun, China, 25–27 February 2022; IEEE: New York, NY, USA, 2022; pp. 150–153.

66. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

67. Aryal, A.; Becerik-Gerber, B. Energy consequences of Comfort-driven temperature setpoints in office buildings. Energy Build. 2018, 177, 33–46.

68. GB/T 50785-2012; Evaluation Standard for Indoor Thermal Environment in Civil Buildings. Standardization Administration: Beijing, China, 2012.

| Study | Detection Object/Image Type | Detection Tool | Thermal Comfort Prediction Model | TSV Class | Accuracy |
|---|---|---|---|---|---|
| Jeoung et al. (2023) [43] | facial temperature/infrared | YOLOv5 | MLP, GBM, KNN, SVM, RF, DT | 3-class * | 90.26% (MLP) |
| Bai et al. (2024) [44] | facial temperature/infrared | Dlib/HRNet | BL, RF, GBM, GBDT, DCF | 3-class | 90.44% (BL) |
| Li et al. (2023) [45] | facial temperature/infrared | YOLOv5 | CLPSO-SVM | 7-class ** | 81.65% |
| | | | | 3-class | 85.68% |
| Wu et al. (2022) [50] | facial temperature/infrared | not reported | SVM | 2-class | 79.9% |
| Cosma et al. (2019) [51] | facial temperature/infrared and RGB | Haar/OpenPose | SVM | 7-class | 76% |
| Li et al. (2018) [52] | facial temperature/infrared | Haar Cascade | RF | 3-class | 85% |
| Garg et al. (2023) [53] | body action/RGB | Mediapipe | CNN | not reported | 97.09% |
| Makhijani et al. (2023) [54] | body action/RGB | Mediapipe | CatBoost | not reported | 98.9% |
| Bucarelli et al. (2023) [55] | body action/RGB | TensorFlow Object Detection API | ResNet101 + LSTM + MLP | 3-class | 86.7% |
| Duan et al. (2021) [56] | body action/RGB | OpenPose | ST-GCN | 3-class | 78% |
| Yang et al. (2019) [48] | body action/RGB | OpenPose | not reported | 7-class | 86.37% |
| Meier et al. (2017) [47] | body action/RGB | Kinect | not reported | not reported | not reported |

| Gender | Age (years) | Height (cm) | Weight (kg) | BMI (kg/m²) |
|---|---|---|---|---|
| Male | 24.4 ± 1.58 | 177 ± 6.27 | 69.8 ± 9.23 | 22.28 ± 2.60 |
| Female | 23.9 ± 1.35 | 166 ± 2.44 | 56.43 ± 3.51 | 20.37 ± 1.16 |

| Experiment | Quantity of Data | Collected Features | Subjects (Times/Subject) | Gender Ratio |
|---|---|---|---|---|
| I | 168 | , , , | 12 (2) | 7:5 |
| II | 140 | , , , , Clo, Met, Thermal adaptive actions | 10 (2) | 3:2 |
| III | 70 | , , , , Clo (0.2 clo), Met, Thermal adaptive actions | 5 (2) | 3:2 |
| | 70 | , , , , Clo, Met (2 met), Thermal adaptive actions | 5 (2) | 3:2 |
| | 70 | , , , , Clo (0.2 clo), Met (2 met), Thermal adaptive actions | 5 (2) | 3:2 |

| YOLOv5 Parameter | Value | Mediapipe Parameter | Value |
|---|---|---|---|
| Image size | 640 × 640 | Static_image_mode | False |
| Batch size | 16 | Smooth_landmarks | True |
| Epochs | 300 | Min_tracking_confidence | 0.5 |
| Learning rate | 0.01 | Model_complexity | 1 |
| Weight decay | 0.0005 | Min_detection_confidence | 0.5 |

| Quantity of Data | Model | Parameters | Accuracy (7-Class) | Accuracy (3-Class) |
|---|---|---|---|---|
| 140 (experiment II) | PMV | , , Clo, Met | 20.71% | 65% |
| | Model_Fac_T | , , , | 78.57% | 90% |
| | Fusion model (total data) | , , | 82.86% | 94.29% |
| | Fusion model (actions detected) | , , | 86.8% | 100% |
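Each model is scored on the 7-point TSV scale and on a collapsed 3-class scale. Collapsing can never lower accuracy, because any exact 7-class hit is still a hit after mapping, while near misses on the same side of neutral become hits; this is consistent with every 3-class figure in the table exceeding its 7-class counterpart. The sketch below shows the mechanics. The grouping of the seven levels into three classes is an assumption exposed as a parameter, since the study's exact mapping is not stated in this section, and the votes and predictions are made up.

```python
def to_three_class(tsv, neutral_band=0):
    """Collapse a 7-point TSV level (-3..+3) into -1 (cool), 0 (neutral) or +1 (warm).

    neutral_band=0 keeps only TSV 0 as neutral; neutral_band=1 merges -1..+1.
    Which grouping a given study uses is an assumption here.
    """
    if abs(tsv) <= neutral_band:
        return 0
    return 1 if tsv > 0 else -1


def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted class equals the reference vote."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("need two equally long, non-empty label sequences")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Made-up votes and predictions, only to show the mechanics
votes = [-2, -1, 0, 0, 1, 2, 3, 1]
preds = [-1, -1, 0, 1, 1, 3, 3, 2]
acc7 = accuracy(votes, preds)
acc3 = accuracy([to_three_class(v) for v in votes], [to_three_class(p) for p in preds])
print(f"7-class: {acc7:.3f}, 3-class: {acc3:.3f}")  # 7-class: 0.500, 3-class: 0.875
```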

| Quantity of Data | Model | Change Clo (7-Class) | Change Clo (3-Class) | Change Met (7-Class) | Change Met (3-Class) | Change Clo and Met (7-Class) | Change Clo and Met (3-Class) |
|---|---|---|---|---|---|---|---|
| 210 (experiment III) | PMV | 31.42% | 71.4% | 22.85% | 58.57% | 18.57% | 45.71% |
| | Model_Fac_T | 74.28% | 91.42% | 54.29% | 81.42% | 57.14% | 87.14% |
| | Fusion model (total data) | 75.71% | 92.86% | 74.29% | 92.86% | 75.7% | 94.29% |
| | Fusion model (actions detected) | 80% | 100% | 86.38% | 100% | 80.95% | 95.24% |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, K.; Liu, F.; Luo, Y.; Khoso, M.A. Non-Invasive Multivariate Prediction of Human Thermal Comfort Based on Facial Temperatures and Thermal Adaptive Action Recognition. Energies 2025, 18, 2332. https://doi.org/10.3390/en18092332
