1. Introduction

Coal texture is an important factor in the characterization of coalbed methane (CBM) reservoirs, as it directly affects key reservoir properties such as permeability, gas content, and production potential. The texture of coal reflects its structural characteristics shaped by mechanical deformation, which can vary from undeformed coal to more altered forms, including cataclastic and granulated coal types. Understanding these textures is important for accurate reservoir modeling, as they impact the efficiency of gas migration and accumulation processes within CBM fields [1,2,3].

These different textures can affect the coal’s porosity and permeability, which in turn influence how easily gas can be extracted from the reservoir. For instance, undeformed coal generally has lower permeability, while cataclastic and granulated coal are typically more fractured and offer higher permeability, being more favorable for CBM extraction [4,5]. Accurate classification of coal textures is thus essential for effective resource evaluation, extraction planning, and the optimization of mining techniques [6,7,8,9].

The Zhengzhuang Field, located in the southern part of the Qinshui Basin in China, presents a unique geological setting with significant CBM reserves. This field is characterized by complex coal types and textures, influenced by tectonic movements and variations in burial depth [3]. The coal seams in this field exhibit substantial heterogeneity in terms of coal textures, which directly impacts the permeability and gas production potential of the reservoir [3]. The ability to accurately classify these textures is fundamental for assessing the field’s CBM potential and optimizing extraction strategies. Given the complex geological conditions, including faulting and folding, coal texture classification becomes even more challenging. Therefore, advanced techniques are necessary to effectively characterize coal textures in such heterogeneous environments, with well-logging data providing a valuable tool for the classification process [10].

Several studies have investigated coal texture classification and CBM reservoir characterization using machine learning and other advanced techniques. For example, Li et al. (2022) employed machine learning algorithms, such as K-means clustering and k-nearest neighbors (kNN), to classify coal textures based on well-log data [2]. Their study demonstrated the feasibility of using well-log parameters, including gamma-ray (GR), density (DEN), and resistivity (LLD), for accurate coal texture classification. Despite these advancements, limitations remain in data coverage, accuracy, and the ability to account for geological complexities. Cao et al. (2020) further highlighted the importance of in situ stress in influencing coal texture and permeability [11]. Their work emphasizes the role of stress distribution in coal structure and fracture patterns, which are critical for CBM extraction. These findings suggest that integrating geological factors such as stress and deformation into coal texture models could significantly improve prediction accuracy, particularly in challenging geological settings like Zhengzhuang.

Additionally, Yan et al. (2024) applied deep-ensemble learning to reflectance spectra images to identify coal types, achieving high classification accuracy [6]. These approaches represent a shift toward image-based techniques for coal classification. In coal-rock recognition, Xu et al. (2024) used neural texture synthesis with deep convolutional neural networks (CNNs) to generate synthetic coal-rock images, addressing data scarcity issues and improving recognition model performance in real-world mining conditions [12].

Moreover, Zhao et al. (2022) explored machine learning techniques, including Random Forest (RF) and kNN, to predict coal slime flotation behavior [7]. Their study showed that the RF model performed best in simulating particle migration, highlighting the importance of particle size and composition in optimizing flotation efficiency. This research is essential for improving the processing of coal slurry and enhancing the overall quality of coal extraction. Cheng et al. (2025) demonstrated how CNNs could estimate coal ash content, emphasizing the importance of color and texture features in improving the accuracy of coal quality assessments for CBM exploration [13]. Zhang et al. (2024) also utilized CNNs, Vision Transformers (ViT), and Graph Convolution Networks (GCN) to estimate coal ash content based on image data [14]. Their study demonstrated that color features contributed significantly to the accuracy of the model, with the CNN achieving the highest estimation precision. This highlights the growing role of deep learning in coal quality estimation, which is critical for industrial coal use.

Embaby et al. (2023) studied the use of machine learning algorithms, geostatistical techniques, and GIS analysis to estimate phosphate ore grade at the Abu Tartur Mine in the Western Desert, Egypt. Their research highlighted the effectiveness of various modeling approaches for improving phosphate resource estimation and mining efficiency [15]. Hao et al. (2025) combined Raman spectroscopy and X-ray fluorescence (XRF) spectroscopy with machine learning to improve the classification accuracy of complex coal samples [4]. Their model demonstrated that combining both organic and inorganic spectral information provided a more accurate classification than using single-spectral methods. This study is significant in refining coal classification techniques for more efficient industrial applications. Cui et al. (2021) proposed a methodology for quantifying coal macro lithotypes, such as bright and semi-dull coal, by combining geophysical logging data with Principal Component Analysis (PCA) [16]. The study applied this model to the Zhengzhuang Field in the Qinshui Basin, revealing that coal with lower S-Index values (bright coal) is more likely to form long fractures during hydraulic fracturing. This finding enhances the understanding of coal texture’s influence on CBM production potential.

Despite these advancements, there remain significant gaps in fully characterizing coal textures in complex geological settings like Zhengzhuang. Many studies have relied on limited datasets or failed to fully account for regional variations in coal texture distributions and geological stress factors, which can significantly affect coal permeability and fracture development. Furthermore, while machine learning and geophysical methods have been applied, there is still a need for more robust models that integrate geological theories and data analytics to improve the accuracy of coal texture classification over large areas.

This study aims to address these gaps by developing a more comprehensive and accurate method for coal texture classification in the Zhengzhuang Field. By integrating well-log data, advanced machine learning techniques, and geological insights, this research seeks to improve the understanding of coal textures and enhance the prediction accuracy for CBM exploration. Additionally, this study will explore the use of more refined feature selection techniques and incorporate geological theories, such as stress and tectonic movement, to better characterize coal textures and their impact on CBM reservoirs. Our approach will not only improve the efficiency of coal texture classification but also contribute to optimizing CBM extraction methods, particularly in complex geological settings like Zhengzhuang.

This study offers a novel approach to coal texture classification by leveraging advanced machine learning (ML) techniques applied to well-log data from the Zhengzhuang Field in the Qinshui Basin. By integrating multiple geophysical parameters and employing a rigorous data preprocessing pipeline, this research enhances the predictive modeling of the coal texture types UC, CC, and GC, which are essential for CBM reservoir characterization. The novelty of this work lies in the use of machine learning classifiers, such as Extra Trees and Gradient Boosting, to predict coal texture with high accuracy, providing valuable insights into the underlying geological structures. The contributions of this paper are as follows:

Development of a robust methodology for coal texture classification using well-log data, with a focus on UC, CC, and GC types.

Application of five machine learning models, including Extra Trees and Gradient Boosting, to accurately predict coal texture types for the Zhengzhuang Field in the Qinshui Basin, thus enhancing the efficiency of CBM exploration.

Provision of a comprehensive analysis of the impact of test/train size splits on model performance, offering a reliable framework for future CBM reservoir assessments.

Overall, these innovations contribute significantly to the accuracy and robustness of coal texture classification models.

2. Methodology

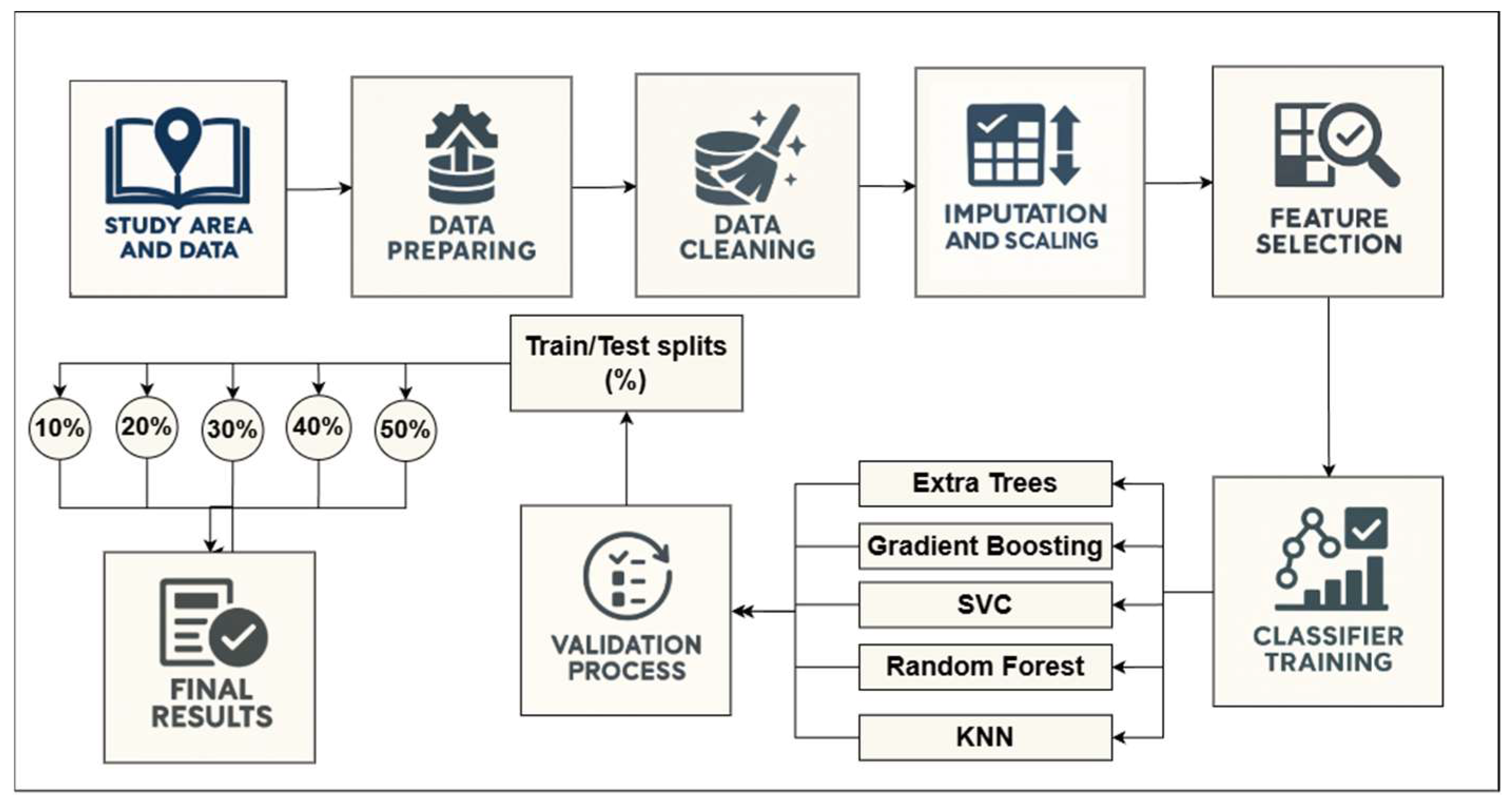

Figure 1 illustrates the methodology of this study, a structured workflow that prepares the well-logging data from the Zhengzhuang Field and applies machine learning classification techniques (implemented in Python 3.11.9) to predict coal texture types. The workflow also selects the best-performing classifier and the train/test split ratio that together give the highest predictive performance.

2.1. Study Area Overview

The Zhengzhuang Field, located in the southern part of the Qinshui Basin, North China, is one of the most significant CBM production areas in the region. The Qinshui Basin is a fault-controlled synclinal basin with extensive coal-bearing strata, primarily of Carboniferous–Permian age. The Zhengzhuang block is characterized by thick coal seams, relatively stable stratigraphy, and well-developed structural deformation, making it an ideal site for coal texture analysis and reservoir characterization.

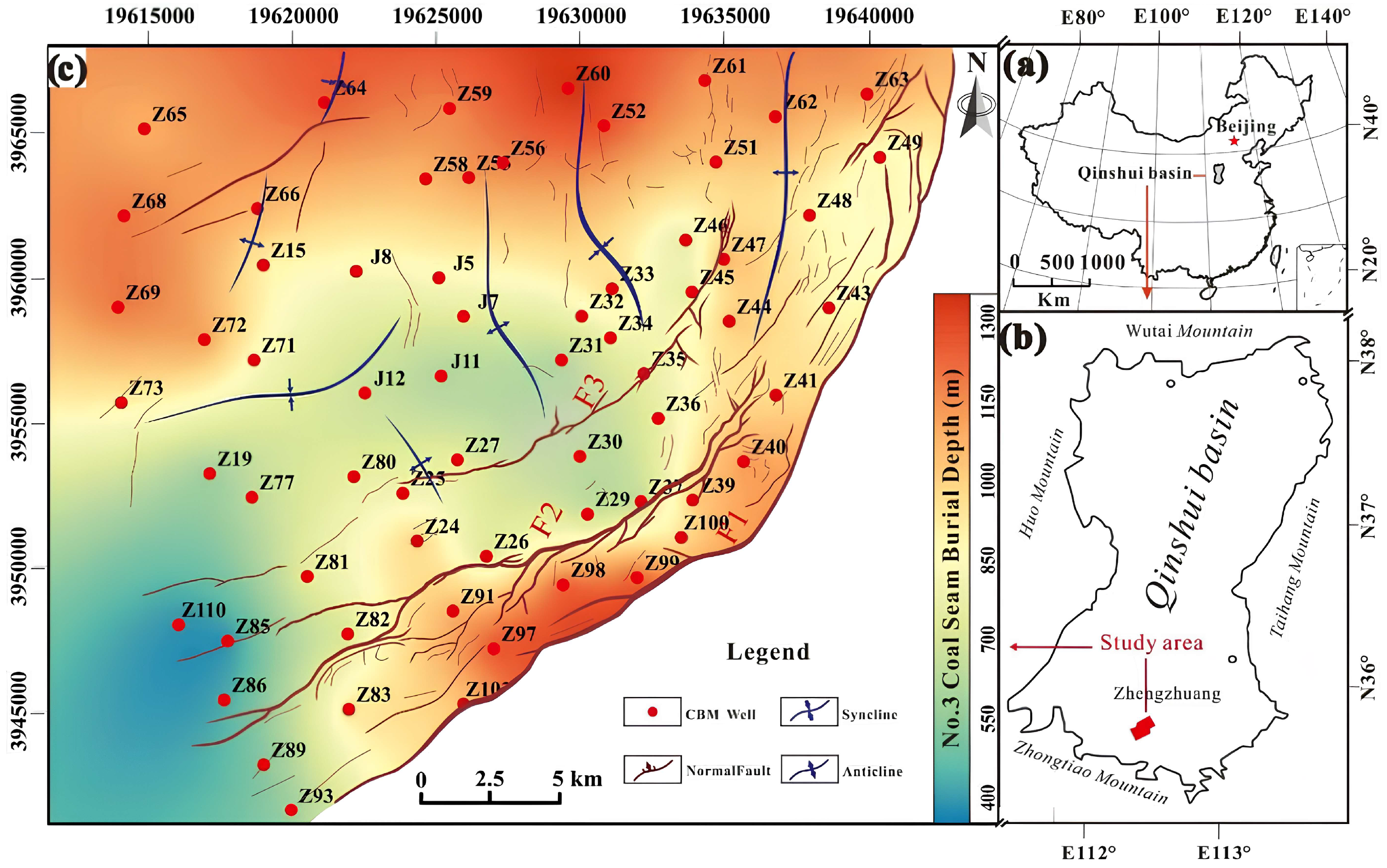

Figure 2 shows a map of the Zhengzhuang Field, highlighting its location and the geological structures of the area. This area forms part of one of China’s most developed CBM basins, characterized by a gently dipping synclinal structure and high-rank anthracite coals of the Permian Shanxi Formation and Carboniferous Taiyuan Formation. The target seams in this study are the No. 3 and No. 15 coal seam layers, which were encountered at depths ranging from approximately 351.3 to 1341.9 m across all 86 wells from which the data were obtained.

In this study, three principal coal texture types are identified across the Zhengzhuang Field: Undeformed Coal (UC), Cataclastic Coal (CC), and Granulated Coal (GC). The labels were determined from geological observations and well-log data, based on distinct geological characteristics such as mechanical deformation, porosity, permeability, and fracturing. These classifications were verified and validated by geological experts, who ensured consistency in labeling based on established coal classification systems. The three types are:

Undeformed Coal (UC):

UC represents primary or weakly deformed coal with preserved banded structure and strong mechanical integrity. This type typically shows low microporosity but high cleat connectivity, favoring fracture-dominated gas flow.

Cataclastic Coal (CC):

Formed under moderate tectonic stress, CC exhibits partially disrupted banding and increased microfracturing. Cataclastic coal possesses enhanced adsorption capacity and moderate permeability, often acting as a transitional facies between undeformed and granulated types.

Granulated Coal (GC):

Produced by intense tectonic deformation, GC is fine-grained and friable, with a loss of bedding structures. It exhibits high microporosity but very low permeability, making it less favorable for CBM extraction without stimulation.

For this research, data were compiled from 86 wells distributed across the Zhengzhuang Field. From each well, two representative stratigraphic layers (Layer 3 and Layer 15) were selected based on their continuous logging coverage and lithological consistency. These layers were chosen because they represent key productive coal seams in the field, providing robust data for texture classification and prediction modeling.

2.2. Data Collection and Preprocessing

2.2.1. Well-Log Dataset Description

This study employed well-log data from 86 wells to characterize coal seam properties. The initial dataset comprised 13 logging parameters, each offering distinct geophysical information relevant to coal texture classification.

Measured depth (DEPTH) served as a spatial reference for correlating textural variations with stratigraphy. Acoustic transit time (AC) is sensitive to lithological changes and fracturing, with higher values indicating more deformed or porous coal. Caliper (CAL) measurements reflect borehole diameter, where deviations suggest weak or granulated coal zones. The compensated neutron log (CNL) indicates hydrogen content, indirectly representing microporosity and adsorbed gas. Bulk density (DEN) distinguishes intact from fractured coal, as higher densities typically correspond to undeformed textures. Gamma ray (GR) readings identify shale impurities and help separate coal from non-coal strata.

Resistivity measurements, including microresistivity (Rxo), deep resistivity (RD), and shallow resistivity (RS), provide insights into pore structure, cleat water content, and invasion profiles. Spontaneous potential (SP) reflects electrochemical contrasts that may relate to lithological or permeability variations. Three other parameters, borehole deviation (DEVI), resistivity at 2.5 m (R2_5), and porosity (POR), were excluded from modeling due to limited data coverage, as shown in Table 1.

2.2.2. Preparing the Data

Prior to preprocessing, a descriptive statistical analysis was performed to assess data completeness and variability. The initial dataset exhibited heterogeneous coverage: POR and DEVI had very low availability (<20%) and R2_5 was also incomplete, while others such as DEPTH, AC, CAL, GR, and SP were fully or almost fully recorded. Variables with insufficient data were excluded from subsequent analysis to prevent overfitting and model bias.

The final dataset retained the following well-log parameters for modeling: DEPTH, AC, CAL, CNL, DEN, GR, Rxo, SP, RD, and RS.
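The completeness screening described above can be sketched in a few lines. This is a minimal illustration only: the toy values, the helper names `completeness` and `drop_sparse`, and the 75% cutoff are assumptions made for the example, not the study's actual data or threshold.

```python
def completeness(column):
    """Return the percentage of non-missing (non-None) entries in a column."""
    present = sum(value is not None for value in column)
    return 100.0 * present / len(column)


def drop_sparse(table, min_percent):
    """Keep only the columns whose completeness reaches min_percent."""
    return {name: col for name, col in table.items()
            if completeness(col) >= min_percent}


# Toy log table (values are illustrative, not field data).
logs = {
    "DEPTH": [500.0, 500.5, 501.0, 501.5],
    "GR":    [35.2, 40.1, 38.7, 36.0],
    "CNL":   [22.0, None, 21.5, 23.1],
    "POR":   [None, None, None, 4.2],
}

coverage = {name: completeness(col) for name, col in logs.items()}
kept = drop_sparse(logs, min_percent=75.0)  # 75% is an assumed cutoff
print(coverage)
print(sorted(kept))
```

In this toy table POR is dropped because only one of its four readings is present, while CNL sits exactly at the assumed cutoff and is kept for later imputation.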

Table 1 summarizes the descriptive statistics for all well-log parameters prior to data cleaning and imputation. The presented metrics include count, completeness percentage, mean, standard deviation (std), minimum (min), maximum (max), and interquartile range (IQR). The completeness percentage highlights the degree of data availability for each parameter, which informed subsequent preprocessing and variable selection decisions. A total of 2992 data points were extracted from the well-logging dataset, comprising 2429 UC samples, 554 CC samples, and only 9 GC samples. These data points were obtained from two stratigraphic layers, with 2037 points from Layer 3 and 955 points from Layer 15, which represent the primary intervals analyzed for coal texture classification. Because GC has only 9 data points, the Synthetic Minority Over-sampling Technique (SMOTE) was used to balance the dataset by generating synthetic GC examples from the existing data points of this class. This mitigates the challenge of having very few GC samples and ensures that the model is trained on a more balanced dataset.
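The core idea of SMOTE, interpolating between a minority sample and one of its nearest minority neighbours, can be sketched as follows. This is a simplified illustration rather than the implementation used in the study: the function name `smote_like`, the neighbour count `k=3`, the seed, and the toy two-feature points are all assumptions, and the text does not state which library or settings were applied.

```python
import random


def smote_like(minority, n_new, k=3, seed=0):
    """Generate n_new synthetic points by interpolating between a minority
    sample and one of its k nearest minority neighbours (the SMOTE idea)."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # Rank the other minority samples by squared Euclidean distance.
        others = [p for p in minority if p is not base]
        others.sort(key=lambda p: sum((a - b) ** 2 for a, b in zip(base, p)))
        neighbour = rng.choice(others[:k])
        gap = rng.random()  # position along the segment base -> neighbour
        synthetic.append([a + gap * (b - a) for a, b in zip(base, neighbour)])
    return synthetic


# Nine toy two-feature points standing in for the nine GC samples.
gc_samples = [[1.0 + 0.1 * i, 2.0 + 0.05 * i * i] for i in range(9)]
new_points = smote_like(gc_samples, n_new=20)
print(len(new_points))
```

Because every synthetic point lies on a segment between two real minority samples, the new data cannot leave the region already spanned by the nine originals, which is worth keeping in mind when interpreting results for such a small class.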

2.2.3. Data Cleaning

The descriptive statistics indicate pronounced variability among several logging parameters, reflecting both geological heterogeneity and potential measurement inconsistencies. Most parameters—such as DEPTH, AC, CAL, GR, and SP—exhibit near-complete data coverage (≥98%), whereas CNL (78%), RS (84%), and R2_5 (74%) show moderate data loss. In contrast, POR (13%) and DEVI (3%) possess extremely limited data availability, making them unsuitable for inclusion in subsequent modeling due to their high potential to introduce statistical bias.

The well-logging dataset from the Zhengzhuang Field underwent systematic quality enhancement to ensure data completeness, reliability, and consistency prior to model construction. The initial dataset displayed a combination of complete and incomplete records across 13 logging parameters, necessitating the careful treatment of missing values and outliers to produce a coherent and interpretable dataset suitable for machine learning applications.

A detailed completeness assessment identified POR, DEVI, and R2_5 as parameters with inadequate data coverage of approximately 13%, 3%, and 74%, respectively. Due to their sparsity and risk of bias, these parameters were excluded from subsequent analyses. The remaining variables (DEPTH, AC, CAL, CNL, DEN, GR, Rxo, SP, RD, and RS) had higher coverage (78% or more, based on the values above) and were retained for preprocessing and imputation. This refined subset provides a robust suite of petrophysical indicators that effectively capture lithological variations, coal texture characteristics, and structural deformation features.

2.2.4. Missing Value Imputation Using Machine Learning

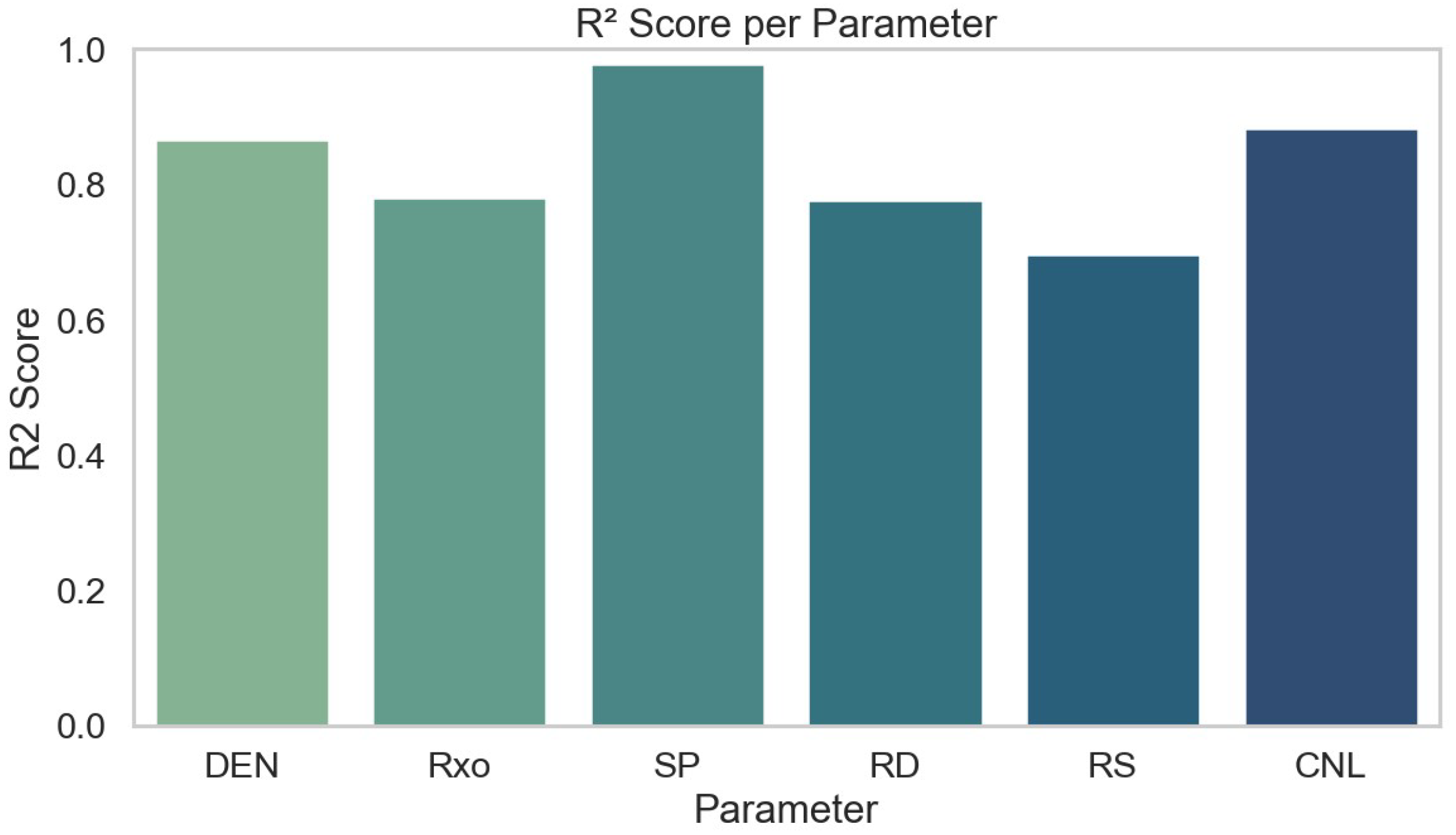

To achieve full data completeness across all parameters, missing values were estimated using machine learning–based regression models instead of traditional mean or median substitution methods, which can distort the natural distribution of the data. For each parameter containing missing observations, a Random Forest regression model was trained on the available portion of the dataset. The model used correlated variables such as AC, DEN, GR, and SP to infer the missing values.

The performance of the imputation models was evaluated using the coefficient of determination (R²) for each parameter, as illustrated in Figure 3. The results demonstrate high predictive accuracy across all six imputed parameters (DEN, Rxo, SP, RD, RS, and CNL), with R² values consistently exceeding 0.7. This confirms the robustness of the machine learning-based regression approach and its effectiveness in preserving inter-parameter relationships while achieving complete and statistically reliable data reconstruction.
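For reference, the coefficient of determination used to score each imputation model can be computed as below. The density values are hypothetical numbers invented for the example; only the formula itself (one minus the ratio of residual to total sum of squares) is standard.

```python
def r_squared(observed, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot


# Hypothetical held-out density readings (g/cm^3) and imputed estimates.
den_observed = [1.40, 1.45, 1.50, 1.55, 1.60]
den_imputed = [1.42, 1.44, 1.51, 1.53, 1.61]
score = r_squared(den_observed, den_imputed)
print(round(score, 3))
```

Scoring on readings that were deliberately held back from training, as assumed here, is what makes the R² value a fair estimate of how well truly missing entries would be reconstructed.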

After imputation, the reconstructed dataset was validated by comparing key statistical properties, including mean and variance, before and after processing to ensure consistency and realism in the data distribution. This approach successfully preserved the multivariate dependencies among features while achieving complete data coverage across all retained parameters, totaling 2992 samples per variable. The refined dataset, presented in Table 2, demonstrates uniform completeness and stabilized variance, with all parameters exhibiting 100% availability and improved distributional integrity relative to the raw data.

2.2.5. Parameters Reduction Before Modeling

Although the retained resistivity curves (Rxo, RD, and RS) capture similar aspects of the formation’s electrical characteristics, keeping all of them introduces a high risk of multicollinearity within machine learning models. To optimize the input feature set and improve interpretability, only Rxo was preserved as the representative resistivity parameter, as it exhibited the most stable statistical behavior and the strongest correlation with coal texture variability.

This refinement produced a compact and reliable suite of features specifically tailored for coal texture prediction: DEPTH, AC, CAL, CNL, DEN, GR, Rxo, and SP. The finalized dataset achieved full completeness (100%) and consistent quality control across all retained parameters, ensuring robustness for subsequent modeling and analysis. This refined dataset serves as the foundation for the subsequent feature selection and coal-texture classification modeling, ensuring that all analyses are based on high-quality and bias-free data.

2.3. Input Parameter Selection for Coal-Texture Prediction

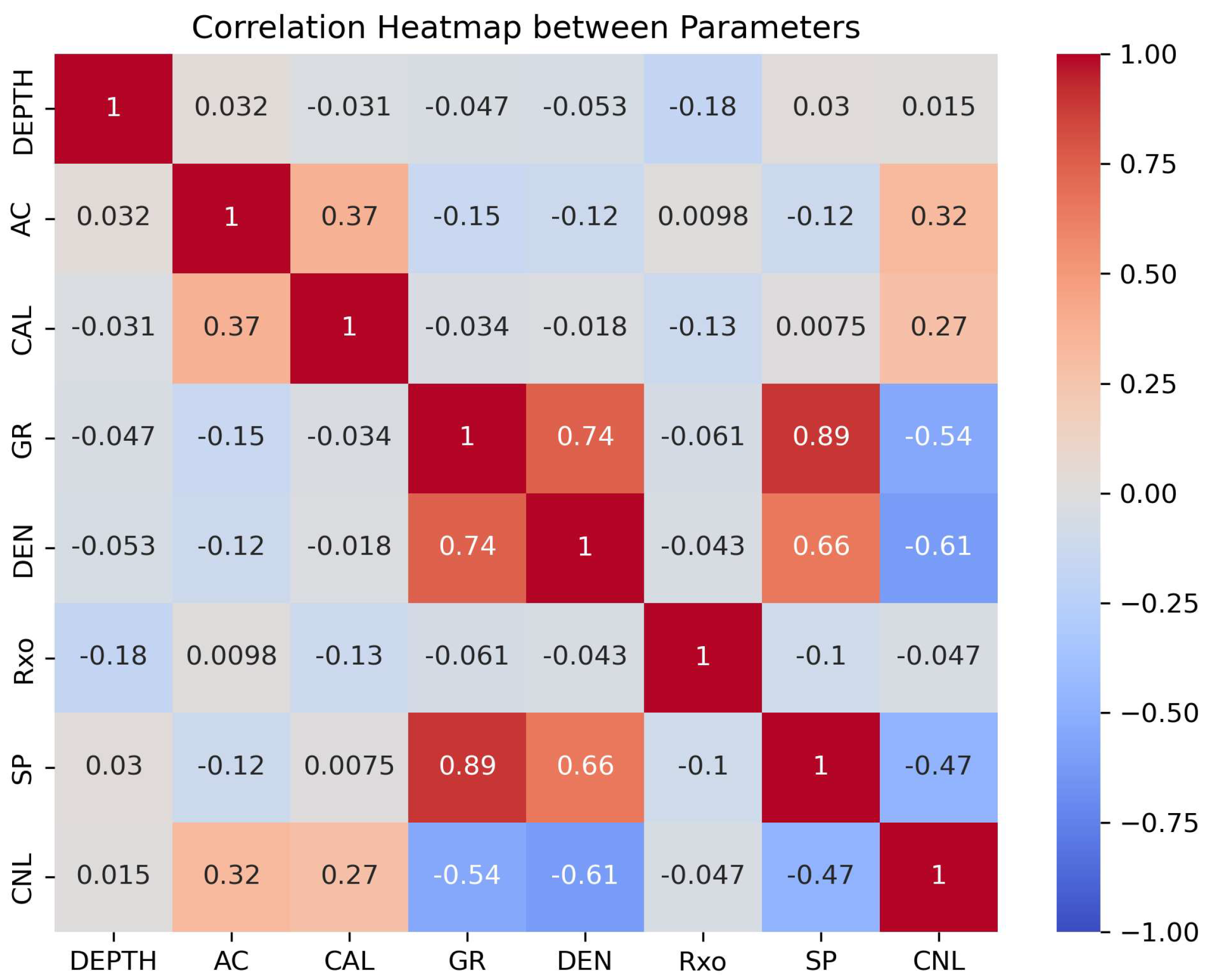

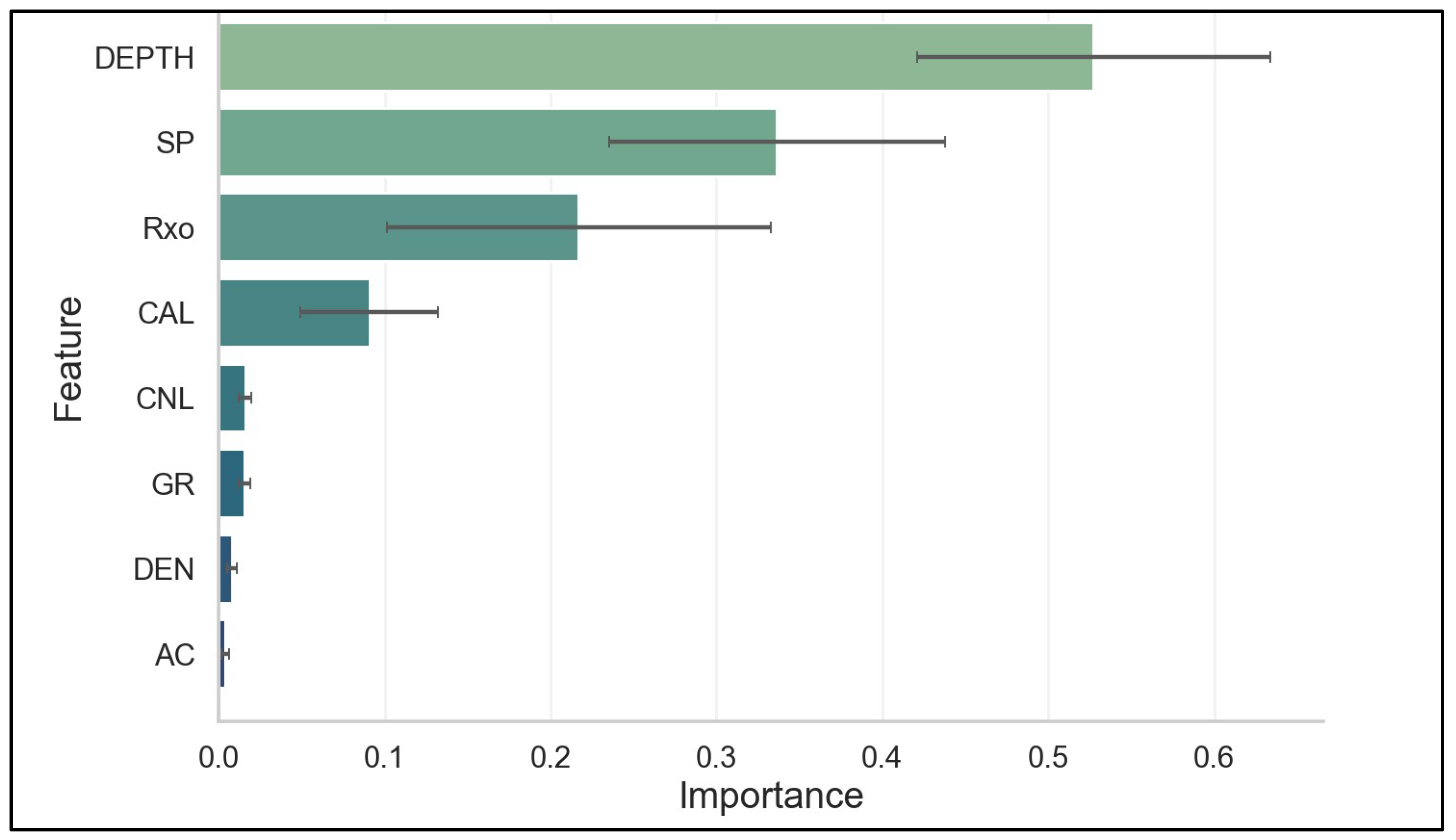

After preprocessing, eight well-log parameters—DEPTH, AC, CAL, CNL, DEN, GR, Rxo, and SP—were retained for modeling to evaluate their relative predictive contributions while minimizing redundancy. Three complementary analyses were conducted to assess the importance and interdependence of these features. The heat map correlation analysis as shown in

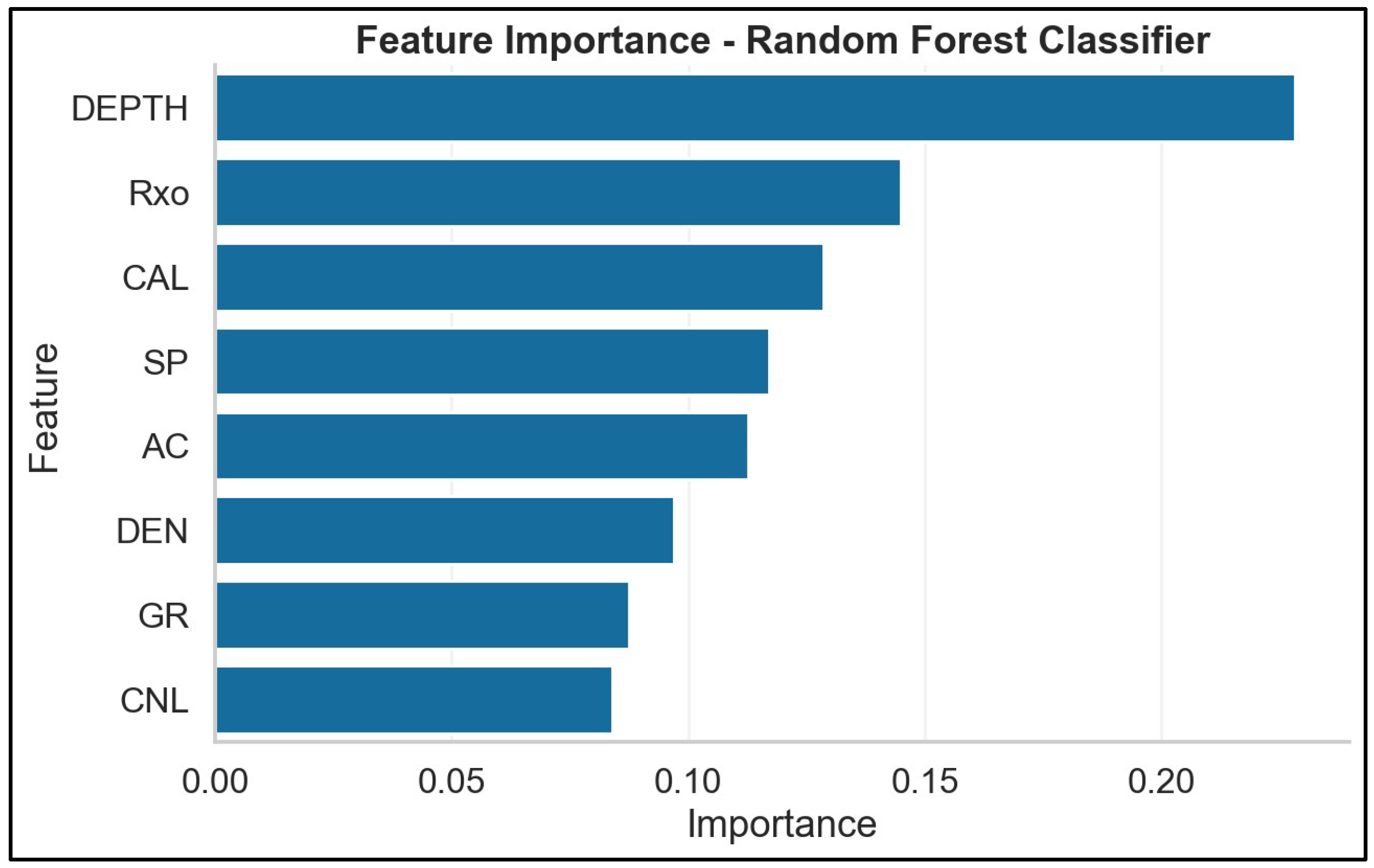

Figure 4 revealed low to moderate correlations among most parameters, with the highest correlations observed between GR–SP (r = 0.89) and GR–DEN (r = 0.74), indicating shared sensitivity to lithological composition and borehole conditions. The Random Forest feature importance analysis which shown in

Figure 5 demonstrated that DEPTH, Rxo, and CAL were the most influential parameters in predicting coal texture types, with SP and AC also contributing significantly. Additionally, the multinomial logistic regression coefficients shown in

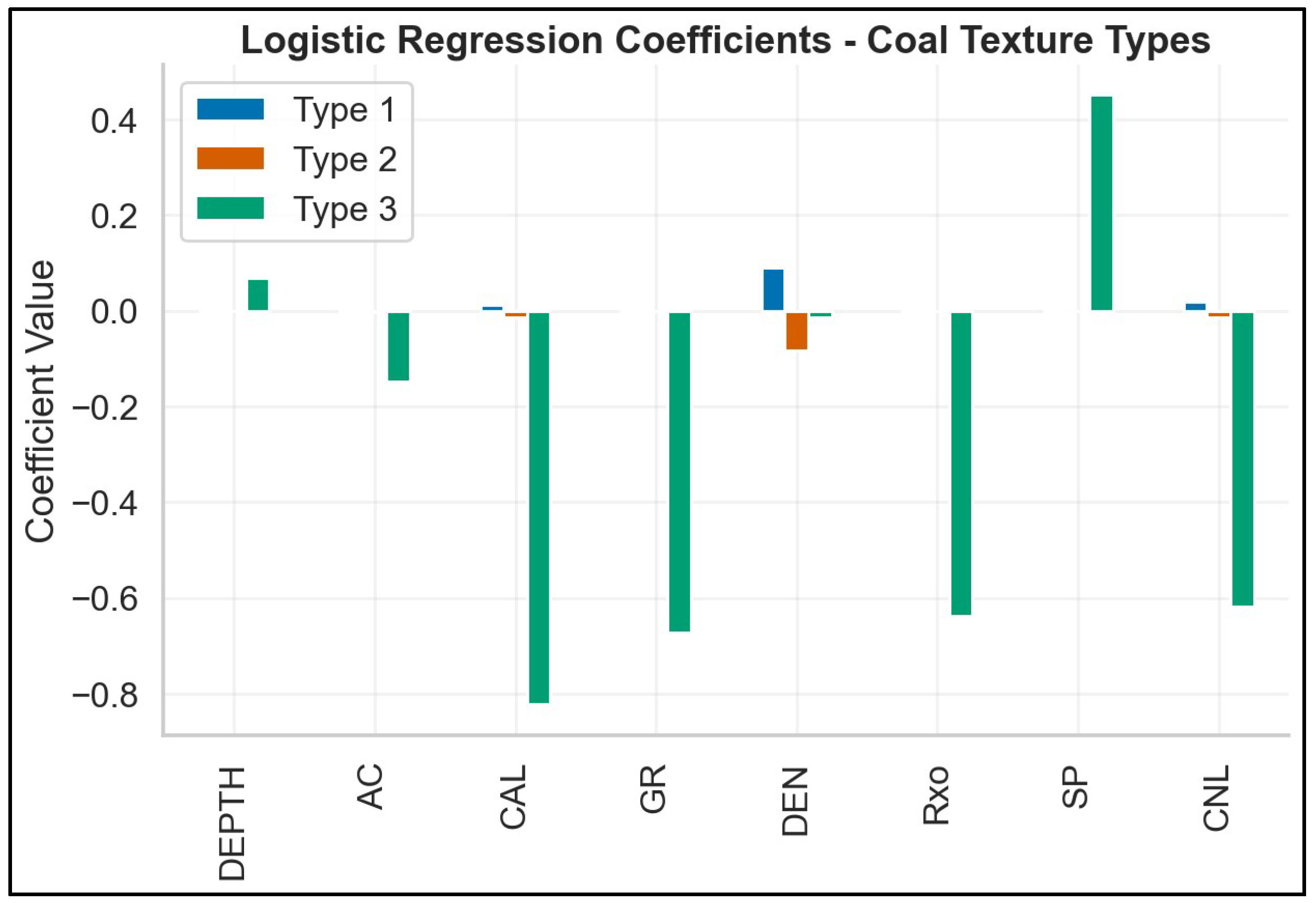

Figure 6 represent the influence of each feature on the classification of coal texture types. In this model, the coal texture types were encoded as categorical variables, where Type 1 represents Undeformed Coal (UC), Type 2 represents Cataclastic Coal (CC), and Type 3 represents Granulated Coal (GC). The multi-class dependent variable was thus a multi-class categorical variable corresponding to these three coal texture types.
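The pairwise screening behind a correlation heat map can be illustrated with a small Pearson-correlation sketch. The column values, the helper names, and the 0.7 flagging threshold are assumptions chosen for the example; they are not taken from the study.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def correlated_pairs(table, threshold):
    """Return (name_a, name_b, r) for every pair with |r| >= threshold."""
    pairs = []
    for a, b in combinations(sorted(table), 2):
        r = pearson(table[a], table[b])
        if abs(r) >= threshold:
            pairs.append((a, b, r))
    return pairs


# Toy columns (illustrative values only).
logs = {
    "GR": [30.0, 35.0, 40.0, 45.0, 50.0],
    "SP": [10.0, 12.5, 15.0, 17.5, 20.0],   # linear in GR
    "CAL": [8.0, 9.5, 8.2, 9.4, 8.1],       # weakly related to GR
}
flagged = correlated_pairs(logs, threshold=0.7)
print(flagged)
```

Only the GR and SP pair is flagged in this toy table; a strongly correlated pair like this is a candidate for dropping one member when redundancy is a concern.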

Based on these findings, all eight features were retained for the primary modeling scenario. To explore the effect of reduced dimensionality, a secondary model was also developed using only the top five features (DEPTH, Rxo, CAL, SP, and AC), excluding DEN, GR, and CNL, which were found to have lesser impact. This dual-scenario approach enabled an assessment of how input dimensionality influenced model accuracy and stability.

The relationships observed between the coal texture classes and geophysical parameters such as DEPTH and Rxo are consistent with the geological understanding of coal seams in the Zhengzhuang Field. DEPTH reflects the stratigraphy of the region, which can influence coal porosity and permeability. Coal textures such as UC, CC, and GC are closely linked to burial depth and to the degree of tectonic stress exerted during the formation of the coal seams; deeper seams tend to show a more cataclastic and fractured texture because of the higher stress levels at these depths.

2.4. Machine Learning Models

To predict coal-texture classes (undeformed, cataclastic, and granulated) from the well-log data, five complementary ML classifiers were selected: Extra Trees, Gradient Boosting, SVC, Random Forest, and kNN. These algorithms were chosen to represent diverse modeling paradigms (ensemble decision trees, boosting methods, kernel-based learning, and distance-based classification), allowing robust evaluation of nonlinear relationships and complex feature interactions inherent in the coal-log dataset [17].

2.4.1. Extra Trees Classifier

The Extra Trees algorithm is an ensemble method that constructs multiple unpruned decision trees with increased randomization in feature splits. Its computational efficiency and resistance to overfitting make it particularly suitable for noisy and correlated data, such as the heterogeneous coal-log dataset. This method is well suited to capturing complex, nonlinear relationships between features and coal-texture types [17].

2.4.2. Gradient Boosting Classifier

Gradient Boosting sequentially builds an ensemble of weak learners (typically shallow decision trees), where each model aims to correct the errors of its predecessor. It excels at capturing complex nonlinear relationships and handling noisy, imbalanced datasets. This method enhances prediction accuracy and is particularly effective in improving the precision of coal-texture classification when dealing with mixed data types [18,19,20].

2.4.3. SVC

The Support Vector Classifier (SVC) is a kernel-based algorithm that finds the optimal hyperplane to separate different classes by maximizing the margin between them. It is effective in high-dimensional spaces and can handle situations where the number of features is much larger than the number of samples. SVC is particularly useful for distinguishing subtle differences between coal-texture classes when linear separability is weak [20].

2.4.4. Random Forest Classifier

Random Forest is an ensemble method that aggregates predictions from multiple decision trees trained on bootstrapped subsets of the data with random feature selection. This model provides high generalization ability and is resistant to noise, making it suitable for complex datasets like coal logs. It also offers valuable insights into feature importance, helping to identify the most influential variables in coal-texture classification [21].

2.4.5. kNN

The k-Nearest Neighbors (kNN) algorithm classifies samples based on the majority label of their k nearest neighbors in the feature space. Simple and non-parametric, kNN is effective for capturing local structures and clusters within data. This method serves as a baseline model for evaluating spatial similarities among well-log signatures and coal textures, offering insight into the relationship between proximity in the feature space and coal-texture classification [2,20,22].
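The majority-vote rule behind kNN is simple enough to show in full. The sketch below uses Euclidean distance, `k=3`, and toy pre-scaled two-feature samples; all of these are assumptions for illustration, and the text does not say whether the study scaled its features.

```python
from collections import Counter
from math import dist


def knn_predict(train_x, train_y, query, k=3):
    """Label a query point by majority vote among its k nearest neighbours."""
    ranked = sorted(zip(train_x, train_y),
                    key=lambda pair: dist(pair[0], query))
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


# Toy, already-scaled two-feature samples (illustrative values only).
train_x = [(0.1, 0.2), (0.2, 0.1), (0.15, 0.25),
           (0.8, 0.9), (0.9, 0.8), (0.85, 0.95)]
train_y = ["UC", "UC", "UC", "CC", "CC", "CC"]

print(knn_predict(train_x, train_y, query=(0.2, 0.2)))
print(knn_predict(train_x, train_y, query=(0.9, 0.9)))
```

Because the vote depends directly on distances, logs with large numeric ranges (such as DEPTH in metres) would dominate unscaled inputs, which is why scaling is usually paired with distance-based classifiers.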

Before training the models, hyperparameter optimization was performed to find the best-performing settings for each classifier. Table 3 summarizes the key hyperparameters optimized for each machine learning model.

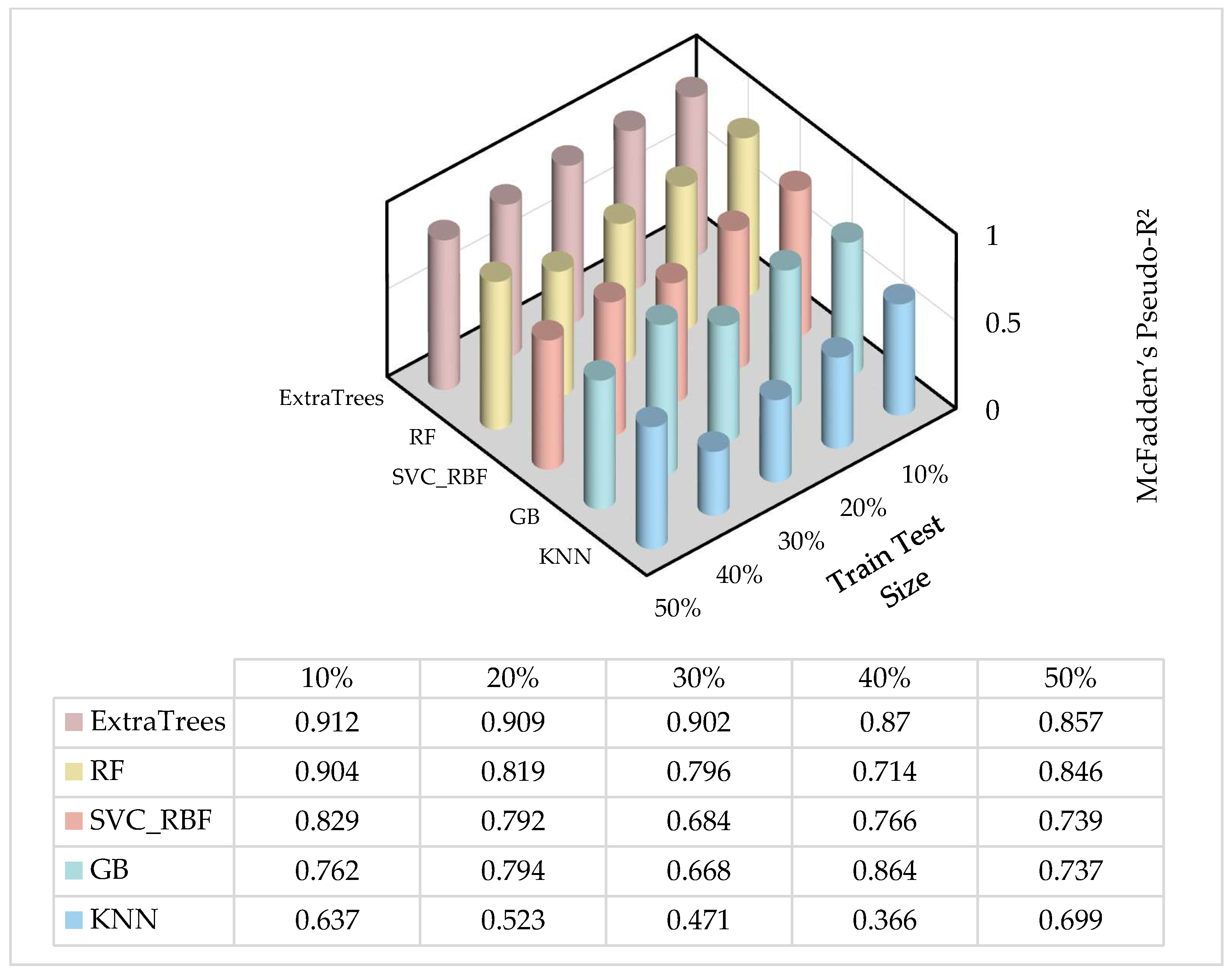

To comprehensively evaluate model performance and ensure robust prediction of coal-texture types, the five selected machine learning classifiers were each tested under two distinct input scenarios.

Scenario 1: Utilized all eight well-log features—DEPTH, AC, CAL, CNL, DEN, GR, Rxo, and SP.

Scenario 2: Focused on the top five most influential features—DEPTH, Rxo, CAL, SP, and AC.

This dual-scenario framework enabled the assessment of how input dimensionality impacts model accuracy, stability, and generalization. After model evaluation, the best-performing classifiers were tested across multiple test-set proportions (20%, 30%, 40%, and 50% of the data) to determine the optimal data-split ratio for balancing training efficiency and predictive accuracy. This approach led to the identification of the most stable and accurate model configuration, forming the foundation for the final predictive framework in this study.
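To make the four partitions concrete, the sketch below shuffles row indices and reports the resulting train and test sizes for the 2992 samples mentioned in the text. The helper `shuffle_split`, the seed, and the use of a plain (non-stratified) shuffle are assumptions; the study does not state how its splits were drawn.

```python
import random


def shuffle_split(n_samples, test_fraction, seed=42):
    """Return (train_idx, test_idx) after a seeded shuffle of row indices."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_test = round(n_samples * test_fraction)
    return indices[n_test:], indices[:n_test]


# 2992 is the sample count reported in the text; the seed is arbitrary.
for fraction in (0.2, 0.3, 0.4, 0.5):
    train_idx, test_idx = shuffle_split(2992, fraction)
    print(f"test={fraction:.0%}: {len(train_idx)} train / {len(test_idx)} test")
```

With a class as rare as GC, a stratified split that preserves class proportions in each partition would be a natural refinement of this plain shuffle.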

2.5. Evaluation Metrics

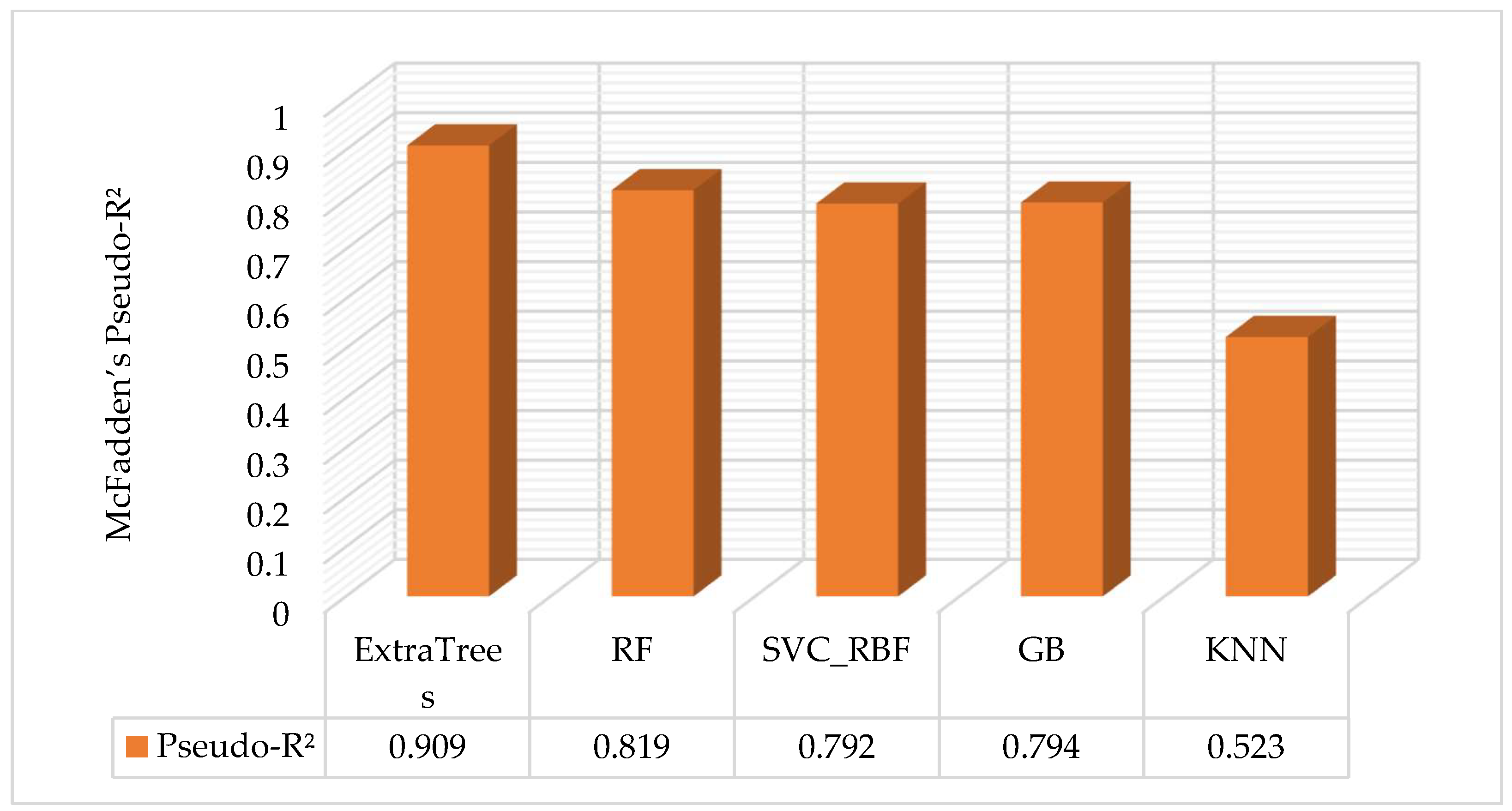

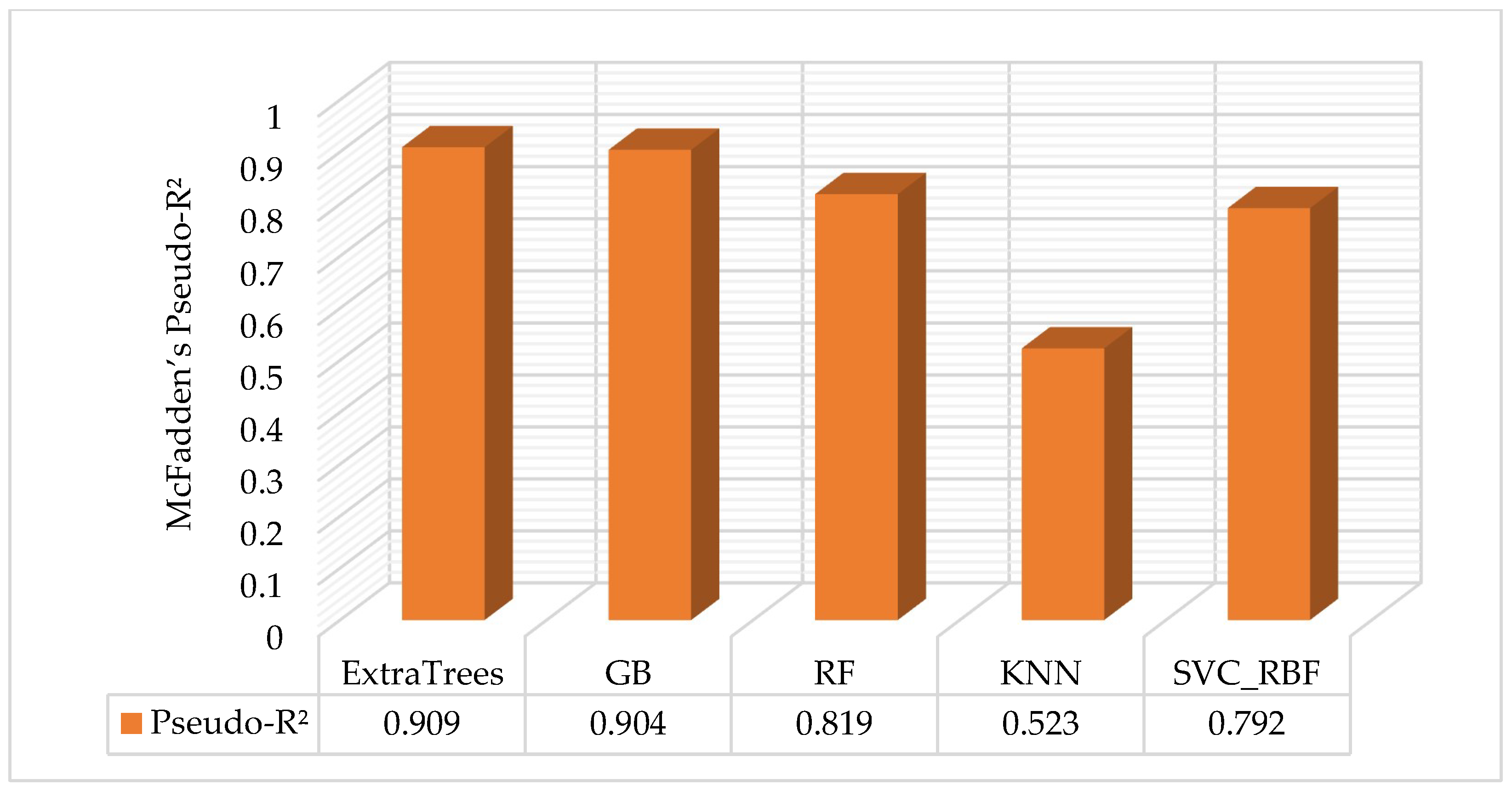

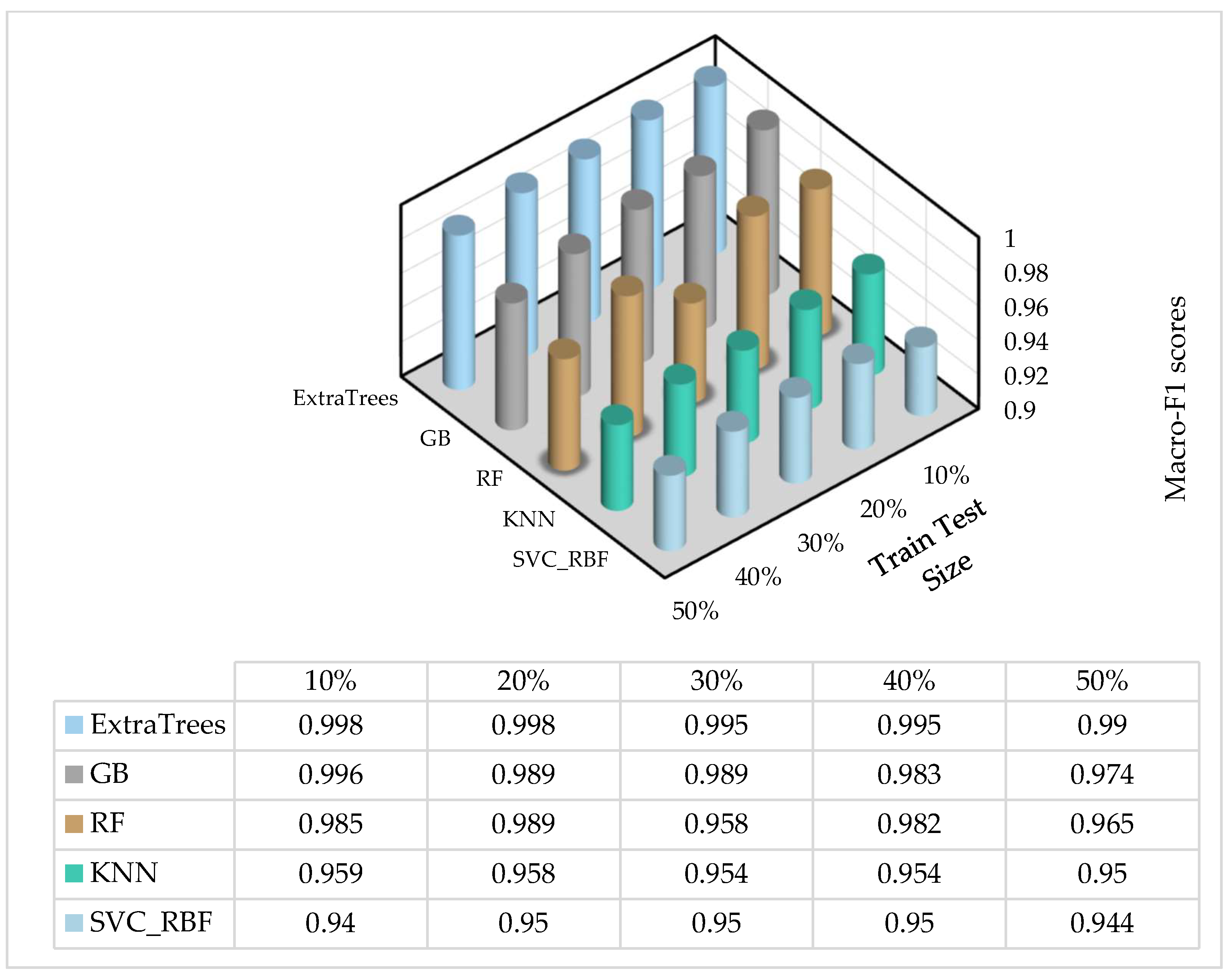

To evaluate the performance of the machine learning models, three key metrics were used: McFadden’s Pseudo-R², Macro F1 Score, and PCA Projection by Coal Texture Type.

McFadden’s Pseudo-R² is a measure of model fit that serves as an alternative to the traditional R² in logistic regression models. It is particularly useful for models that predict categorical outcomes, such as coal-texture classification. McFadden’s Pseudo-R² is defined as:

$$R^2_{\mathrm{McFadden}} = 1 - \frac{L_{\mathrm{model}}}{L_{\mathrm{null}}}$$

where L_model is the log-likelihood of the fitted model, and L_null is the log-likelihood of a model that only includes an intercept (i.e., no predictors). A higher value of McFadden’s Pseudo-R² indicates a better-fitting model, with values close to 1 suggesting strong predictive power [23,24]. This metric is traditionally used for logistic regression models, but this study applied it to all models, including non-logistic models such as Random Forest, Extra Trees, and Gradient Boosting, by using the log-likelihood of each model (L_model) and comparing it with the log-likelihood of a null model (L_null), which contains only the intercept.
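One way to obtain a log-likelihood from any probabilistic classifier is to sum the log of the probability it assigns to each sample's true class. The sketch below follows that route; the function name, the clipping constant `eps`, and the toy probabilities are assumptions, and the study does not detail how it extracted likelihoods from the tree-based models.

```python
from collections import Counter
from math import log


def mcfadden_r2(labels, probabilities):
    """McFadden's pseudo-R2 = 1 - L_model / L_null (both log-likelihoods).

    probabilities[i] maps each class name to the model's predicted
    probability for sample i.
    """
    eps = 1e-15  # guards against log(0) for over-confident models
    l_model = sum(log(max(p[y], eps)) for y, p in zip(labels, probabilities))
    # Null model: always predict the observed class frequencies.
    counts = Counter(labels)
    n = len(labels)
    l_null = sum(c * log(c / n) for c in counts.values())
    return 1.0 - l_model / l_null


labels = ["UC", "UC", "CC", "GC"]
probs = [
    {"UC": 0.8, "CC": 0.1, "GC": 0.1},
    {"UC": 0.7, "CC": 0.2, "GC": 0.1},
    {"UC": 0.2, "CC": 0.7, "GC": 0.1},
    {"UC": 0.1, "CC": 0.1, "GC": 0.8},
]
score = mcfadden_r2(labels, probs)
print(round(score, 3))
```

A model that merely reproduces the class frequencies scores 0, and one that assigns probability 1 to every true class scores 1, which matches the interpretation given in the text.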

Macro F1 Score is a metric used to evaluate classification models, particularly on imbalanced datasets. The F1 score of a class is the harmonic mean of its precision and recall, providing a single value that balances the trade-off between false positives and false negatives. The Macro F1 Score is calculated as:

$$\text{Macro F1} = \frac{1}{N}\sum_{i=1}^{N}\frac{2\,\mathrm{Precision}_i\,\mathrm{Recall}_i}{\mathrm{Precision}_i+\mathrm{Recall}_i}$$

where N is the number of classes, and Precision_i and Recall_i are the precision and recall for class i, respectively. The Macro F1 Score averages the F1 scores of each class, giving equal weight to all classes, regardless of their frequency in the dataset [17,18,25].
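A small worked example shows why the macro average is sensitive to a rare class. The labels below are invented for illustration, and the convention of scoring a class as 0 when it has no true positives is an assumption of this sketch.

```python
def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class F1 scores."""
    scores = []
    for cls in sorted(set(y_true)):
        tp = sum(t == cls and p == cls for t, p in zip(y_true, y_pred))
        fp = sum(t != cls and p == cls for t, p in zip(y_true, y_pred))
        fn = sum(t == cls and p != cls for t, p in zip(y_true, y_pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        scores.append(f1)
    return sum(scores) / len(scores)


y_true = ["UC", "UC", "UC", "UC", "CC", "CC", "GC"]
y_pred = ["UC", "UC", "UC", "CC", "CC", "CC", "UC"]
score = macro_f1(y_true, y_pred)
print(round(score, 3))
```

Here five of seven predictions are correct, yet the single missed GC sample contributes an F1 of 0 for that class and pulls the macro average down to about 0.52, which is the behaviour that makes the metric informative for imbalanced texture classes.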

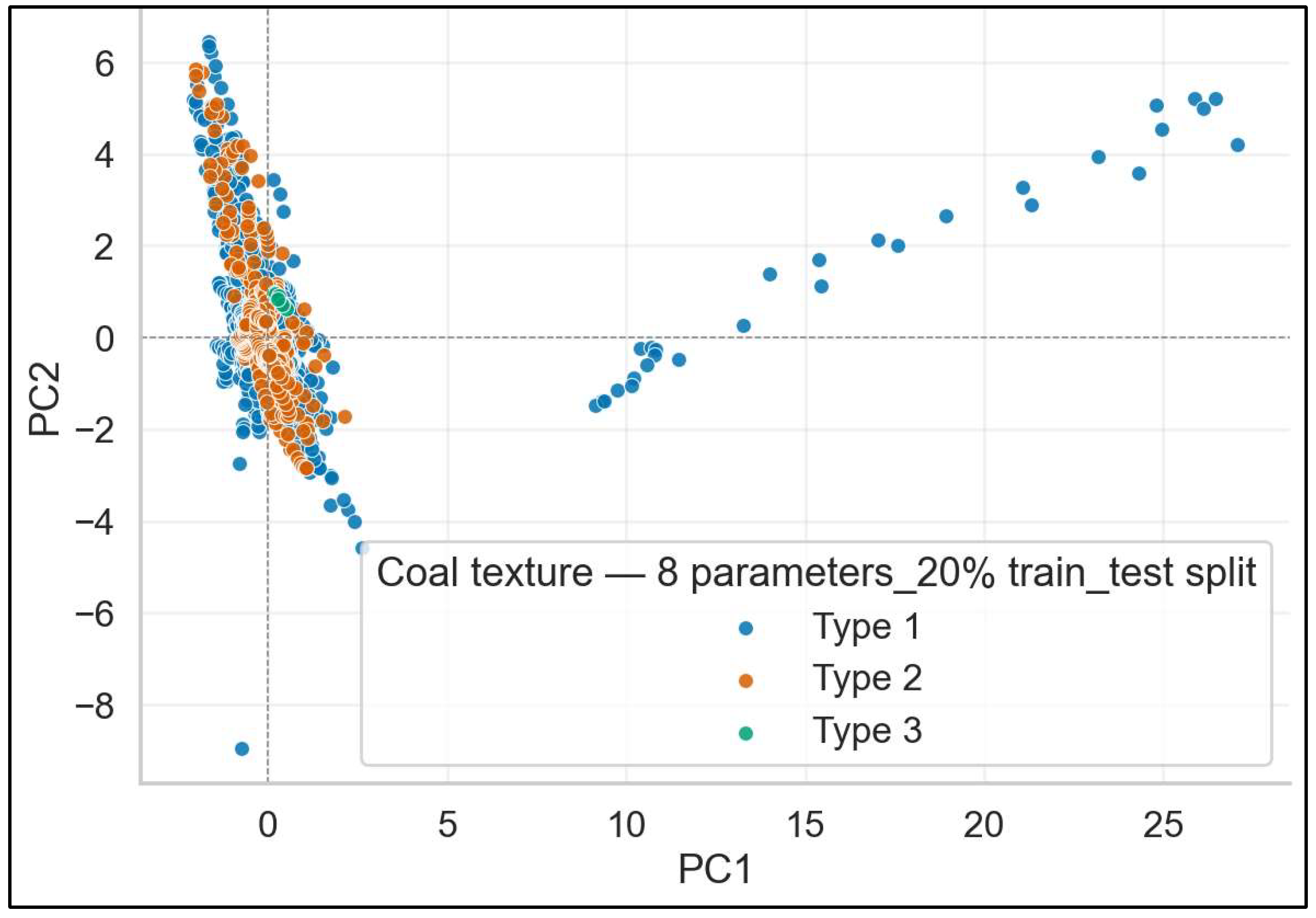

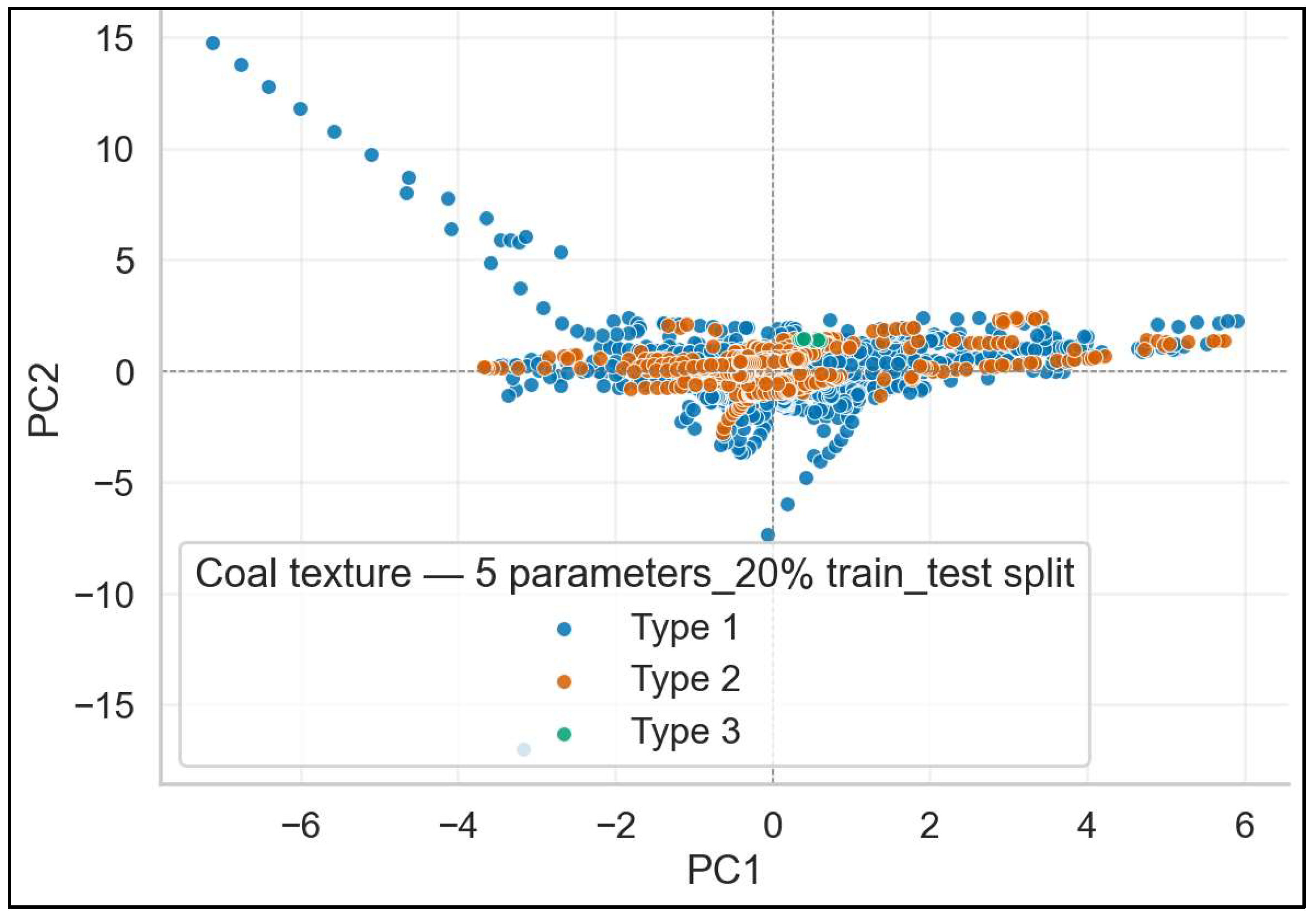

PCA Projection by Coal Texture Type (PC1–PC2) is used to visualize the data in a reduced dimensional space, typically focusing on the first two principal components (PC1 and PC2) derived from Principal Component Analysis (PCA). This method helps to identify the separation between coal-texture classes in the feature space. By plotting the data in this reduced dimensional space, the projection helps visualize the separation of coal textures based on the well-log parameters, providing insight into the effectiveness of the model in distinguishing between the different classes [26,27].
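The projection itself can be sketched without any libraries by taking the two leading eigenvectors of the covariance matrix through power iteration with deflation. This is an illustrative implementation under stated assumptions: the function names, the iteration count, and the six toy three-feature samples are invented, and a practical workflow would more likely standardize the logs and call a library PCA routine.

```python
from math import sqrt


def covariance_matrix(centred):
    """Sample covariance matrix of mean-centred rows."""
    n, d = len(centred), len(centred[0])
    return [[sum(r[i] * r[j] for r in centred) / (n - 1) for j in range(d)]
            for i in range(d)]


def leading_eigenvector(matrix, iterations=500):
    """Power iteration: dominant eigenvector of a symmetric PSD matrix."""
    d = len(matrix)
    vec = [1.0 / (j + 1) for j in range(d)]  # arbitrary non-zero start
    for _ in range(iterations):
        nxt = [sum(matrix[i][j] * vec[j] for j in range(d)) for i in range(d)]
        norm = sqrt(sum(v * v for v in nxt))
        vec = [v / norm for v in nxt]
    return vec


def pca_2d(rows):
    """Project rows onto the first two principal components."""
    d = len(rows[0])
    means = [sum(r[j] for r in rows) / len(rows) for j in range(d)]
    centred = [[r[j] - means[j] for j in range(d)] for r in rows]
    cov = covariance_matrix(centred)
    pc1 = leading_eigenvector(cov)
    eigval = sum(pc1[i] * cov[i][j] * pc1[j]
                 for i in range(d) for j in range(d))
    # Deflate: remove the PC1 contribution, then find the next direction.
    deflated = [[cov[i][j] - eigval * pc1[i] * pc1[j] for j in range(d)]
                for i in range(d)]
    pc2 = leading_eigenvector(deflated)
    return [(sum(a * b for a, b in zip(r, pc1)),
             sum(a * b for a, b in zip(r, pc2))) for r in centred]


# Toy samples: the first three mimic one texture class, the last three another.
samples = [[1.0, 2.0, 0.5], [1.2, 2.1, 0.4], [0.9, 1.8, 0.6],
           [3.0, 4.1, 1.5], [3.2, 3.9, 1.4], [2.9, 4.2, 1.6]]
scores = pca_2d(samples)
for pc1_score, pc2_score in scores:
    print(round(pc1_score, 2), round(pc2_score, 2))
```

In this toy case the two groups fall on opposite sides of zero along PC1, which is the kind of visual separation a PC1–PC2 scatter plot is meant to reveal.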

These metrics offer a comprehensive evaluation of the model’s performance, focusing on fit, classification accuracy, and the underlying structure of the data in relation to coal-texture types.

4. Conclusions

This study presents a novel and comprehensive approach to optimizing reservoir characterization by leveraging machine learning for coal texture classification in the Zhengzhuang Field of the Qinshui Basin. By utilizing well-log data from 86 wells, the study refined a dataset initially comprising 2992 data points, categorized into three coal texture types: Undeformed Coal (UC), Cataclastic Coal (CC), and Granulated Coal (GC). After data optimization, the dataset was further reduced to 8 input parameters, followed by the selection of the 5 most influential features for model evaluation.

The research focused on improving the accuracy of coal texture prediction and enhancing the understanding of CBM reservoir properties, with a particular emphasis on gas migration and accumulation processes. Two main scenarios were evaluated: Scenario 1, using all 8 parameters, and Scenario 2, using the 5 most influential parameters. Through the application of five distinct machine learning models, the study demonstrated high-performance coal texture classification, advancing the understanding of coalbed methane reservoir characterization.

The following points summarize the key contributions of this work:

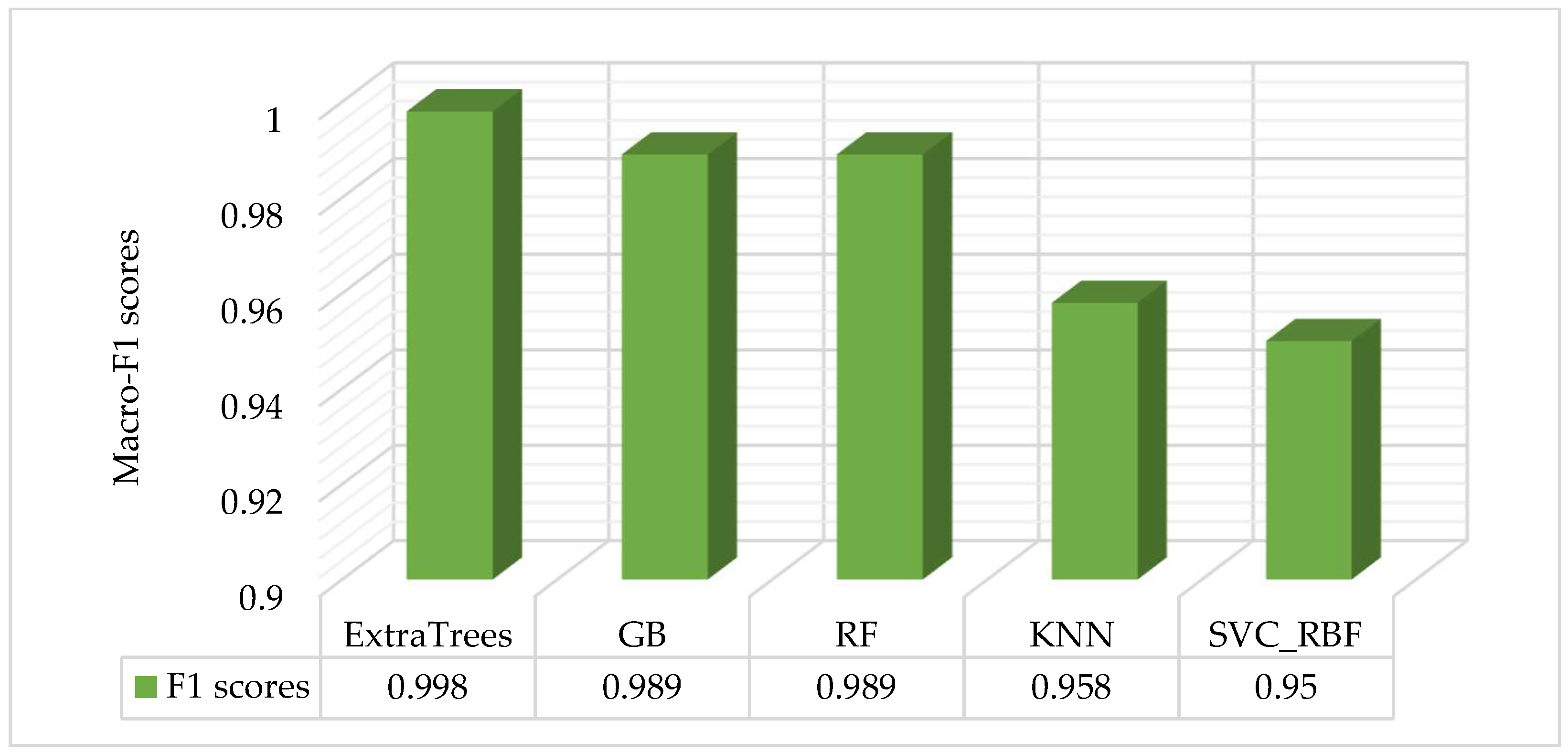

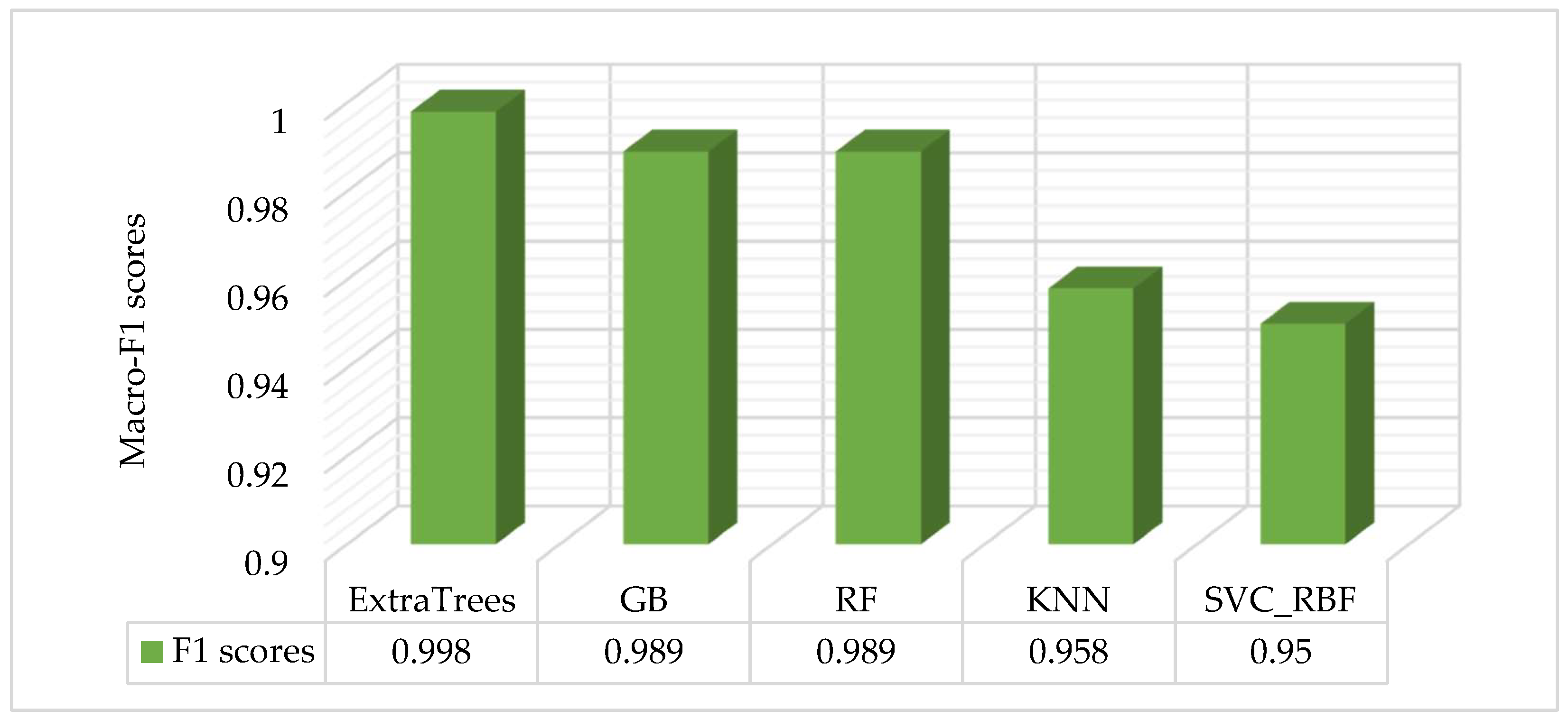

This study utilized five different machine learning models—Extra Trees, Gradient Boosting, Support Vector Classifier (SVC), Random Forest, and k-Nearest Neighbors (kNN). Among these models, Extra Trees stood out as the best-performing classifier in both scenarios. Its ability to capture intricate patterns within the data, particularly the complex relationships between geophysical parameters, made it the optimal choice for coal texture classification. The model’s performance was evaluated using various metrics, such as McFadden’s Pseudo-R2 and Macro F1 Score, where Extra Trees consistently outperformed the others, demonstrating its exceptional predictive capabilities.

Extra Trees achieved the highest performance with a Macro F1 Score of 0.998, particularly when 20% of the data was used for the test set and 80% for training. This peak performance underscores the model’s robustness and its ability to maintain high accuracy, even with varying test sizes. These findings highlight the superior capability of ensemble learning methods, especially Extra Trees, in handling the complex geological data associated with coalbed methane reservoirs. The results suggest that machine learning can significantly enhance the accuracy of CBM reservoir modeling, particularly for gas migration and accumulation analysis.

While this study provides a robust methodology for coal texture classification, it is limited by the relatively small and geographically focused dataset from the Zhengzhuang Field. The model’s performance may vary with larger datasets or different geological settings, and the prediction accuracy for the Granulated Coal (GC) class could be further improved with more data points. Further work could incorporate additional geophysical parameters and datasets to enhance model robustness, and including more diverse geological features from different regions would create a more comprehensive model for CBM exploration. Additionally, expanding this methodology to predict coal texture types in unsampled layers would optimize resource evaluation and gas accumulation predictions, improving extraction strategies. Future efforts will also focus on integrating geological stress modeling and multi-source data, such as seismic and geophysical measurements, to further improve the reliability and generalizability of machine learning-based coal texture classification across coal basins with varying geological conditions.