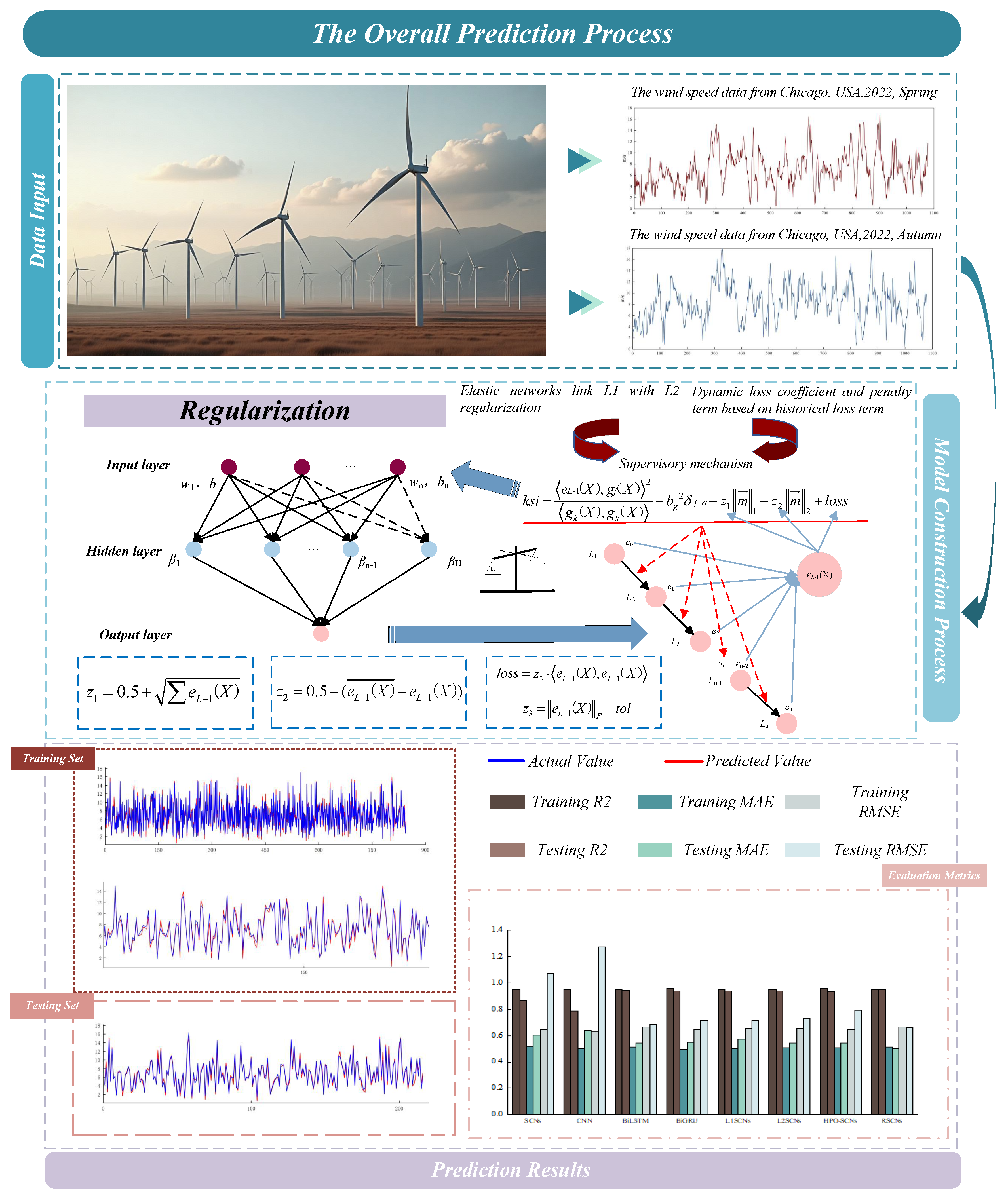

An Improved Regularization Stochastic Configuration Network for Robust Wind Speed Prediction

Abstract

1. Introduction

- (1) The Elastic Net framework, which combines L1 and L2 regularization, is incorporated into SCNs. This lets the model produce sparse solutions while maintaining high prediction accuracy, which makes it well suited to feature selection. The approach also controls model complexity and mitigates the risk of overfitting.

- (2) A dynamic loss coefficient derived from historical loss values is introduced so that the model's regularization intensity can be adjusted adaptively. In addition, a penalty term based on both the historical error and the contribution of newly added nodes is incorporated to fine-tune the regularization strength.
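To illustrate how an Elastic Net penalty yields sparse output weights (contribution (1)), the sketch below solves the output weights of an SCN-style model by cyclic coordinate descent with soft-thresholding. It assumes a precomputed hidden-layer output matrix `H` and borrows the scikit-learn style parameterization (`alpha` for the overall strength, `l1_ratio` for the L1 share). The function names and this parameterization are illustrative assumptions, not the authors' formulation or code.

```python
import numpy as np

def soft_threshold(z, gamma):
    """Proximal operator of gamma*|.|: shrink z toward zero by gamma."""
    return np.sign(z) * max(abs(z) - gamma, 0.0)

def elastic_net_weights(H, y, alpha=0.1, l1_ratio=0.5, n_iter=500, tol=1e-8):
    """Cyclic coordinate descent for the Elastic Net objective
        (1/(2N))*||y - H @ beta||^2 + alpha*l1_ratio*||beta||_1
        + 0.5*alpha*(1 - l1_ratio)*||beta||^2,
    where H is the (N, L) hidden-layer output matrix and y the (N,) target."""
    H = np.asarray(H, dtype=float)
    y = np.asarray(y, dtype=float)
    N, L = H.shape
    beta = np.zeros(L)
    residual = y.copy()                      # y - H @ beta, with beta = 0
    col_sq = (H ** 2).sum(axis=0) / N        # (1/N) * h_j^T h_j for each column
    for _ in range(n_iter):
        max_step = 0.0
        for j in range(L):
            denom = col_sq[j] + alpha * (1.0 - l1_ratio)
            if denom == 0.0:
                continue                     # all-zero column and no L2 term
            # correlation with the partial residual that leaves column j out
            rho = H[:, j] @ residual / N + col_sq[j] * beta[j]
            new = soft_threshold(rho, alpha * l1_ratio) / denom
            step = new - beta[j]
            if step != 0.0:
                residual -= step * H[:, j]   # keep the residual in sync with beta
                beta[j] = new
                max_step = max(max_step, abs(step))
        if max_step < tol:
            break
    return beta

# Toy check: only the first two of ten hidden outputs are informative.
rng = np.random.default_rng(0)
H = rng.standard_normal((200, 10))
y = H @ np.array([3.0, -2.0] + [0.0] * 8) + 0.01 * rng.standard_normal(200)
print(np.round(elastic_net_weights(H, y, alpha=0.05, l1_ratio=0.9), 2))
```

With `l1_ratio` close to 1, uninformative columns of `H` are driven exactly to zero; with `l1_ratio=0` the update reduces to ridge (L2) regression and the solution stays dense, which is the sparsity/smoothness trade-off the Elastic Net is meant to balance.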

2. Materials and Methods

2.1. Stochastic Configuration Networks

2.2. L1 and L2 Regularization

3. RSCNs

3.1. Elastic Net Combining L1 and L2 Regularization

3.2. Dynamic Loss Coefficient and Penalty Term Based on Historical Loss Term

| Algorithm 1 RSCNs Algorithm |
|---|
| Require: training data X, Y; random scale factor; error threshold; number of nodes L; regularization strength |
| Ensure: model |
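Algorithm 1 builds on the standard SCN construction procedure. The sketch below shows a basic single-output SCN in NumPy: candidate hidden nodes are sampled uniformly from [-λ, λ] for each value in a list of scale factors, a candidate is admissible only if it satisfies the SCN supervisory inequality ξ ≥ 0, and all output weights are re-solved after each accepted node. The mapping of the Require inputs to the hypothetical parameters `scales`, `tol`, `max_nodes` and `reg` is an assumption, and a plain ridge (L2) solve stands in for the RSCN output-weight step; the Elastic Net penalty and dynamic loss coefficient of RSCNs are not reproduced here.

```python
import numpy as np

def sigmoid(z):
    # clip to avoid overflow warnings for large random weights
    return 1.0 / (1.0 + np.exp(-np.clip(z, -500, 500)))

def scn_fit(X, y, max_nodes=50, tol=1e-3, scales=(0.5, 1, 5, 10, 30, 50),
            r_seq=(0.9, 0.99, 0.999, 0.9999, 0.99999), n_candidates=20,
            reg=1e-6, seed=0):
    """Incrementally build a single-output SCN; returns (W, b, beta)."""
    rng = np.random.default_rng(seed)
    y = np.asarray(y, dtype=float)
    N, d = X.shape
    W, b, H = np.empty((0, d)), np.empty(0), np.empty((N, 0))
    beta = np.empty(0)
    e = y.copy()                                     # residual of the current model
    while H.shape[1] < max_nodes and np.sqrt(np.mean(e ** 2)) > tol:
        L = H.shape[1] + 1
        best = None
        for r in r_seq:                              # relax r only if no node qualifies
            mu = (1 - r) / (L + 1)
            for lam in scales:                       # widen the sampling range step by step
                for _ in range(n_candidates):
                    w = rng.uniform(-lam, lam, d)
                    bias = rng.uniform(-lam, lam)
                    g = sigmoid(X @ w + bias)
                    gg = g @ g
                    if gg < 1e-12:
                        continue                     # saturated node, skip
                    # SCN supervisory inequality: nodes with xi >= 0 are admissible
                    xi = (e @ g) ** 2 / gg - (1 - r - mu) * (e @ e)
                    if xi >= 0 and (best is None or xi > best[0]):
                        best = (xi, w, bias, g)
                if best is not None:
                    break
            if best is not None:
                break
        if best is None:
            break                                    # no admissible node was found
        _, w, bias, g = best
        W, b = np.vstack([W, w]), np.append(b, bias)
        H = np.column_stack([H, g])
        # global least-squares update of all output weights (small ridge term for stability)
        beta = np.linalg.solve(H.T @ H + reg * np.eye(L), H.T @ y)
        e = y - H @ beta
    return W, b, beta

def scn_predict(X, W, b, beta):
    return sigmoid(X @ W.T + b) @ beta

X = np.linspace(-1, 1, 200).reshape(-1, 1)
y = np.sin(3 * X[:, 0])
W, b, beta = scn_fit(X, y, max_nodes=30)
print(W.shape[0], "nodes, training RMSE",
      np.sqrt(np.mean((scn_predict(X, W, b, beta) - y) ** 2)))
```

Keeping the supervisory check separate from the output-weight solve makes it straightforward to swap the ridge step for an Elastic Net solver or a strength that changes from node to node.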

4. Experiment and Analysis

4.1. Evaluation Metrics

- (1) Root mean square error (RMSE): because the errors are squared, this metric is more sensitive to large errors.

  $$\mathrm{RMSE}=\sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(y_i-\hat{y}_i\right)^2}$$

  where $N$ is the total number of samples, $y_i$ is the actual value, and $\hat{y}_i$ is the predicted value.

- (2) Mean absolute error (MAE): this metric directly measures the absolute difference between the predicted and actual values. Because the errors are not squared, it is less sensitive to outliers and therefore better suited to datasets with many outliers.

  $$\mathrm{MAE}=\frac{1}{N}\sum_{i=1}^{N}\left|y_i-\hat{y}_i\right|$$

- (3) R-squared ($R^2$): a larger $R^2$ generally indicates a better model fit and higher prediction accuracy, although its value can be influenced by the number of samples.

  $$R^2=1-\frac{\sum_{i=1}^{N}\left(y_i-\hat{y}_i\right)^2}{\sum_{i=1}^{N}\left(y_i-\bar{y}\right)^2}$$

  where $\bar{y}$ is the mean of the actual values.
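The three metrics can be computed directly from their definitions. The sketch below is a plain NumPy version; the function names are illustrative, and the toy values are arbitrary inputs chosen only to show the calls.

```python
import numpy as np

def rmse(y_true, y_pred):
    """Root mean square error: squaring weights large errors more heavily."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

def mae(y_true, y_pred):
    """Mean absolute error: average magnitude of the errors."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.mean(np.abs(y_true - y_pred)))

def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return float(1.0 - ss_res / ss_tot)

y_true = [3.0, -0.5, 2.0, 7.0]
y_pred = [2.5, 0.0, 2.0, 8.0]
print(rmse(y_true, y_pred), mae(y_true, y_pred), r2(y_true, y_pred))
# RMSE ≈ 0.6124, MAE = 0.5, R^2 ≈ 0.9486
```

Here the single error of 1.0 pushes RMSE above MAE, which is the extra sensitivity to large errors described for RMSE.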

4.2. Comparative Experiment

5. Discussion

6. Conclusions

- (1) L1 and L2 regularization are combined through the Elastic Net and incorporated into the SCN framework. By balancing sparsity and smoothness, this approach mitigates the underfitting or overfitting that can arise when a single regularization technique is used.

- (2) A dynamic loss coefficient and a penalty term based on historical error values were proposed. They enable adaptive adjustment of the regularization strength and reduce the subjectivity and limitations of manual hyperparameter tuning.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Datasets | Features | Instances | Brief Introduction |

|---|---|---|---|

| Plastic | 2 | 1650 | The objective is to determine the amount of pressure a given piece of plastic can withstand when subjected to a specific pressure strength at a fixed temperature. Input: Strength and Temperature; Output: Pressure. |

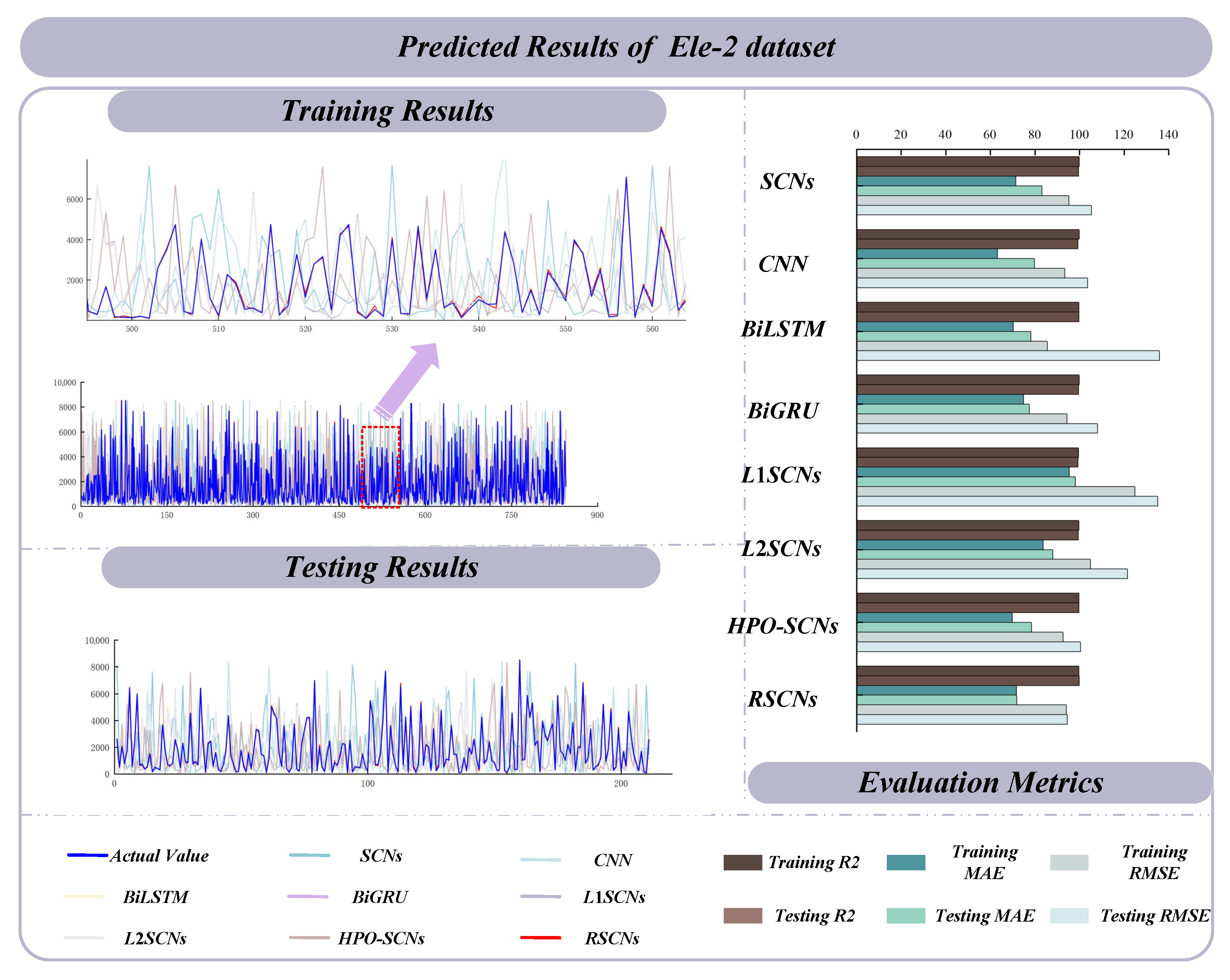

| Ele-2 | 4 | 1056 | Electrical maintenance data consist of four input variables. Input: Reactive power at 110 kV side, 35 kV side, 10 kV side and Reactive power output of reactive power compensation device; Output: Reactive power on the high voltage side of the main transformer. |

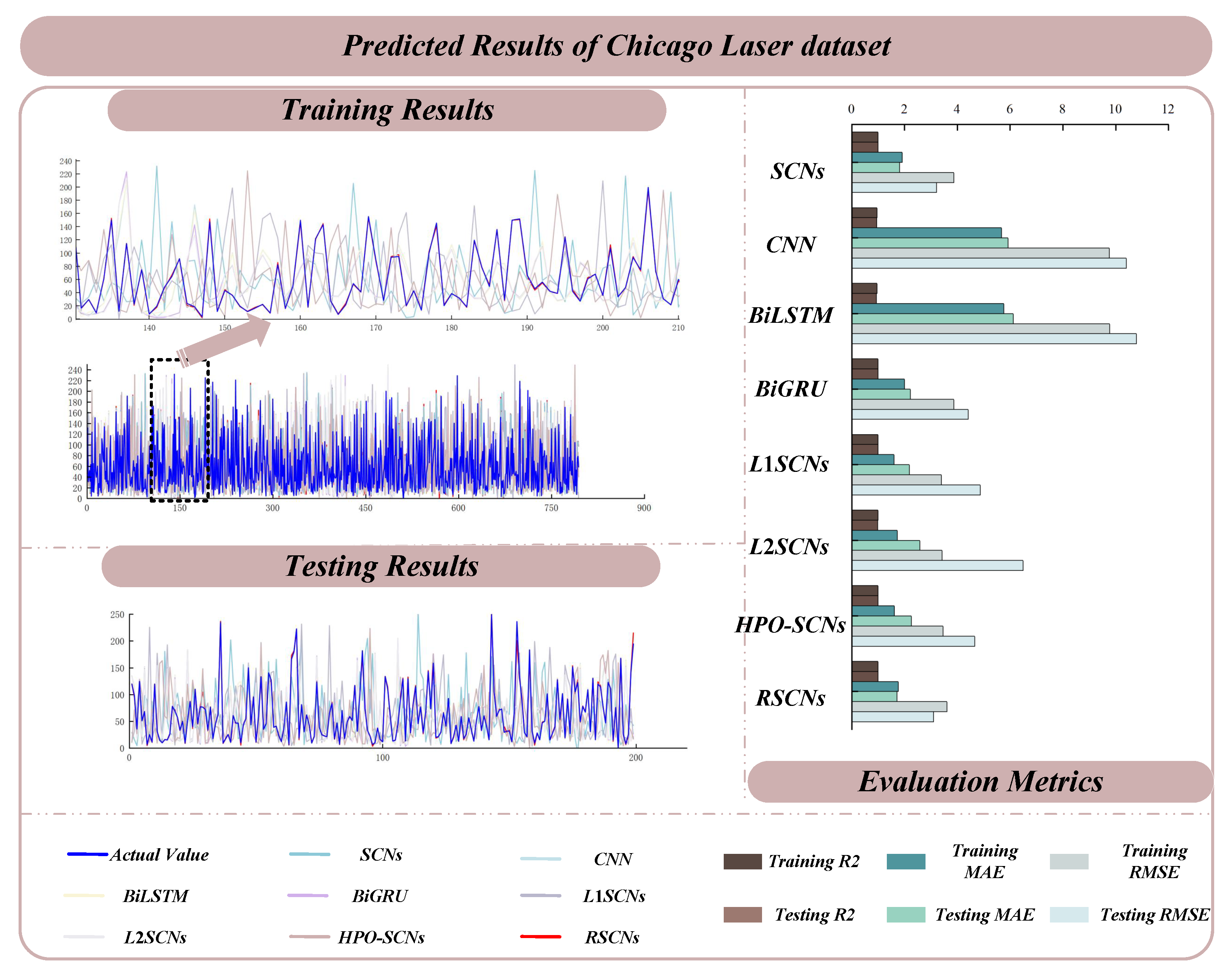

| Laser | 4 | 993 | The dataset originates from the Santa Fe Time Series Competition database and comprises four features with 993 entries. Initially, this dataset was a univariate time series recording the chaotic state of a far-infrared laser. By selecting four consecutive values as input, the output is to predict the subsequent value. |

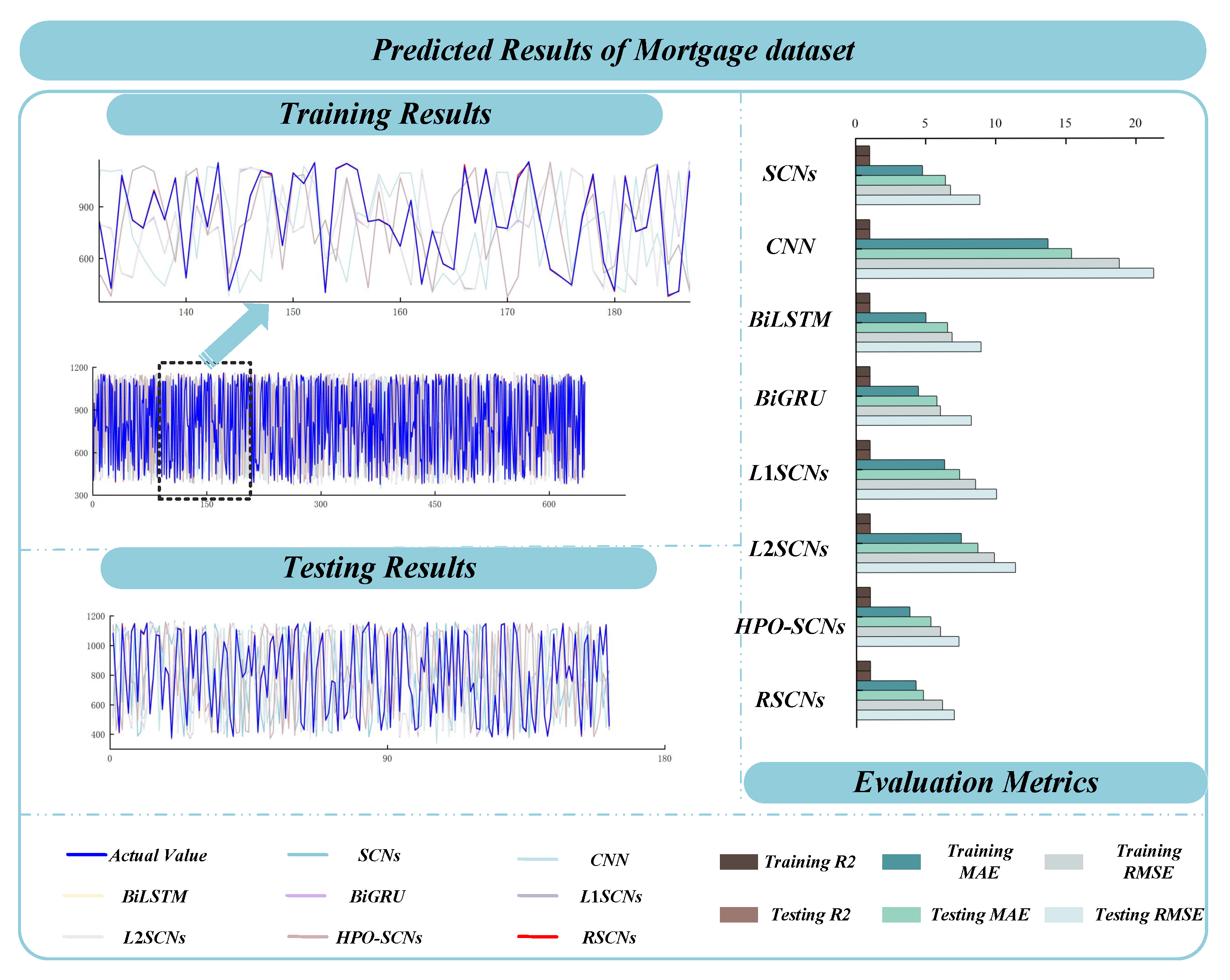

| Mortgage | 15 | 1049 | This file contains weekly Economic data for the USA from 4 January 1980, to 4 February 2000. Based on the provided features, the objective is to predict the 30-Year Conventional Mortgage Rate. Input: 16 kinds of variables such as MonthCDRate, DemandDeposits, FederalFunds, etc. Output: 30Y-CMortgageRate. |
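The Laser entry describes turning a univariate series into a supervised dataset by using four consecutive values to predict the next one. A minimal NumPy sketch of that sliding-window construction follows; the function name `make_lagged_dataset` and its `n_lags` parameter are illustrative, and it is not claimed to reproduce exactly how the 993 instances were generated.

```python
import numpy as np

def make_lagged_dataset(series, n_lags=4):
    """Row i of X is series[i : i + n_lags]; the target y[i] is series[i + n_lags]."""
    series = np.asarray(series, dtype=float)
    n = len(series) - n_lags
    if n <= 0:
        raise ValueError("series must be longer than n_lags")
    # column k holds the k-th lag of every window
    X = np.stack([series[k:k + n] for k in range(n_lags)], axis=1)
    y = series[n_lags:]
    return X, y

X, y = make_lagged_dataset([1, 2, 3, 4, 5, 6], n_lags=4)
print(X)  # [[1. 2. 3. 4.], [2. 3. 4. 5.]]
print(y)  # [5. 6.]
```

A series of length T therefore yields T - n_lags input/target pairs.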

| Model | Parameter Settings |
|---|---|
| BiGRU | = 400; = 500; = 0.01. |
| BiLSTM | = 128; = 1500; = 0.001. |
| L1SCNs, L2SCNs | = 0.5 |

| Dataset | Algorithm | Training | Testing | ||||

|---|---|---|---|---|---|---|---|

| MAE | RMSE | MAE | RMSE | ||||

| Ele-2 | SCNs | 0.9976 ± 0.0010 | 71.4170 ± 7.2304 | 95.2537 ± 9.6631 | 0.9951 ± 0.0010 | 86.7361 ± 7.9880 | 107.8005 ± 10.7783 |

| CNN | 0.9980 ± 0.0010 | 63.1699 ± 6.0307 | 92.3980 ± 9.9267 | 0.9926 ± 0.0011 | 91.1139 ± 8.8537 | 144.4438 ± 15.1040 | |

| BiLSTM | 0.9975 ± 0.0014 | 70.2551 ± 7.0040 | 95.2117 ± 8.6073 | 0.9965 ± 0.0010 | 81.2314 ± 7.3015 | 137.3628 ± 14.3399 | |

| BiGRU | 0.9976 ± 0.0010 | 74.9013 ± 7.0407 | 94.3403 ± 9.1557 | 0.9934 ± 0.0009 | 87.4428 ± 7.9001 | 141.5133 ± 13.8105 | |

| L1SCNs | 0.9957 ± 0.0011 | 95.3727 ± 10.2334 | 124.8383 ± 13.7131 | 0.9908 ± 0.0010 | 102.1128 ± 11.0060 | 155.6993 ± 15.6332 | |

| L2SCNs | 0.9970 ± 0.0010 | 83.7090 ± 8.2304 | 104.9157 ± 11.0047 | 0.9928 ± 0.0010 | 88.0278 ± 8.8123 | 122.3325 ± 12.6332 | |

| HPO-SCNs | 0.9975 ± 0.0011 | 69.7793 ± 6.1004 | 92.5619 ± 9.1116 | 0.9966 ± 0.0010 | 79.1055 ± 7.3981 | 102.4117 ± 10.4069 | |

| RSCNs | 0.9974 ± 0.0011 | 71.6095 ± 6.5423 | 94.1306 ± 9.3283 | 0.9971 ± 0.0010 | 75.2506 ± 6.2667 | 99.5576 ± 9.5196 | |

| Laser | SCNs | 0.9934 ± 0.0011 | 1.9084 ± 0.2993 | 3.8698 ± 1.2131 | 0.9921 ± 0.0009 | 1.9997 ± 0.3556 | 3.8830 ± 1.2139 |

| CNN | 0.9588 ± 0.0012 | 5.6749 ± 1.0315 | 9.7562 ± 1.9267 | 0.9325 ± 0.0011 | 7.1779 ± 1.9312 | 13.7063 ± 2.4499 | |

| BiLSTM | 0.9586 ± 0.0011 | 5.7630 ± 1.0141 | 9.7795 ± 1.6179 | 0.9335 ± 0.0013 | 6.3766 ± 1.2773 | 11.5503 ± 2.0863 | |

| BiGRU | 0.9935 ± 0.0010 | 1.9917 ± 0.2713 | 3.8639 ± 0.4927 | 0.9860 ± 0.0009 | 2.2394 ± 0.3770 | 4.8089 ± 0.9031 | |

| L1SCNs | 0.9946 ± 0.0011 | 1.6940 ± 0.2370 | 3.3936 ± 0.7167 | 0.9892 ± 0.0010 | 2.1979 ± 0.2738 | 4.8680 ± 0.6117 | |

| L2SCNs | 0.9949 ± 0.0010 | 1.7218 ± 0.1983 | 3.4257 ± 0.8631 | 0.9664 ± 0.0010 | 2.6843 ± 0.9707 | 6.1735 ± 1.0033 | |

| HPO-SCNs | 0.9943 ± 0.0012 | 1.6066 ± 0.2121 | 3.4615 ± 1.1963 | 0.9905 ± 0.0027 | 2.1503 ± 0.4063 | 4.6004 ± 1.2102 | |

| RSCNs | 0.9909 ± 0.0012 | 1.7568 ± 0.2463 | 3.6045 ± 1.2069 | 0.9930 ± 0.0010 | 2.0012 ± 0.0727 | 3.3159 ± 1.1136 | |

| Mortgage | SCNs | 0.9993 ± 0.0002 | 4.7576 ± 0.7304 | 6.7721 ± 1.8115 | 0.9980 ± 0.0005 | 7.0892 ± 1.4439 | 9.3939 ± 2.0125 |

| CNN | 0.9948 ± 0.0014 | 13.7083 ± 1.8387 | 18.7895 ± 1.9213 | 0.9933 ± 0.0008 | 15.5009 ± 1.9781 | 21.8564 ± 3.1196 | |

| BiLSTM | 0.9993 ± 0.0008 | 5.0023 ± 1.1171 | 6.8520 ± 1.6683 | 0.9987 ± 0.0010 | 6.7349 ± 1.8013 | 9.0836 ± 2.0009 | |

| BiGRU | 0.9995 ± 0.0010 | 4.4419 ± 0.7217 | 6.0152 ± 1.2017 | 0.9988 ± 0.0009 | 6.7153 ± 1.4428 | 9.1326 ± 1.8097 | |

| L1SCNs | 0.9989 ± 0.0011 | 6.3121 ± 1.2114 | 8.5141 ± 1.9140 | 0.9971 ± 0.0010 | 9.4081 ± 1.6296 | 10.8934 ± 2.4033 | |

| L2SCNs | 0.9986 ± 0.0010 | 7.4919 ± 1.7727 | 9.8601 ± 2.1017 | 0.9973 ± 0.0010 | 9.2103 ± 1.9119 | 12.2874 ± 2.7737 | |

| HPO-SCNs | 0.9997 ± 0.0002 | 3.8093 ± 0.4961 | 6.0048 ± 1.3794 | 0.9989 ± 0.0002 | 7.2106 ± 1.4001 | 9.3117 ± 1.7090 | |

| RSCNs | 0.9996 ± 0.0002 | 4.2476 ± 0.7783 | 6.1398 ± 1.5537 | 0.9991 ± 0.0002 | 6.4081 ± 1.1928 | 8.91080 ± 1.3774 | |

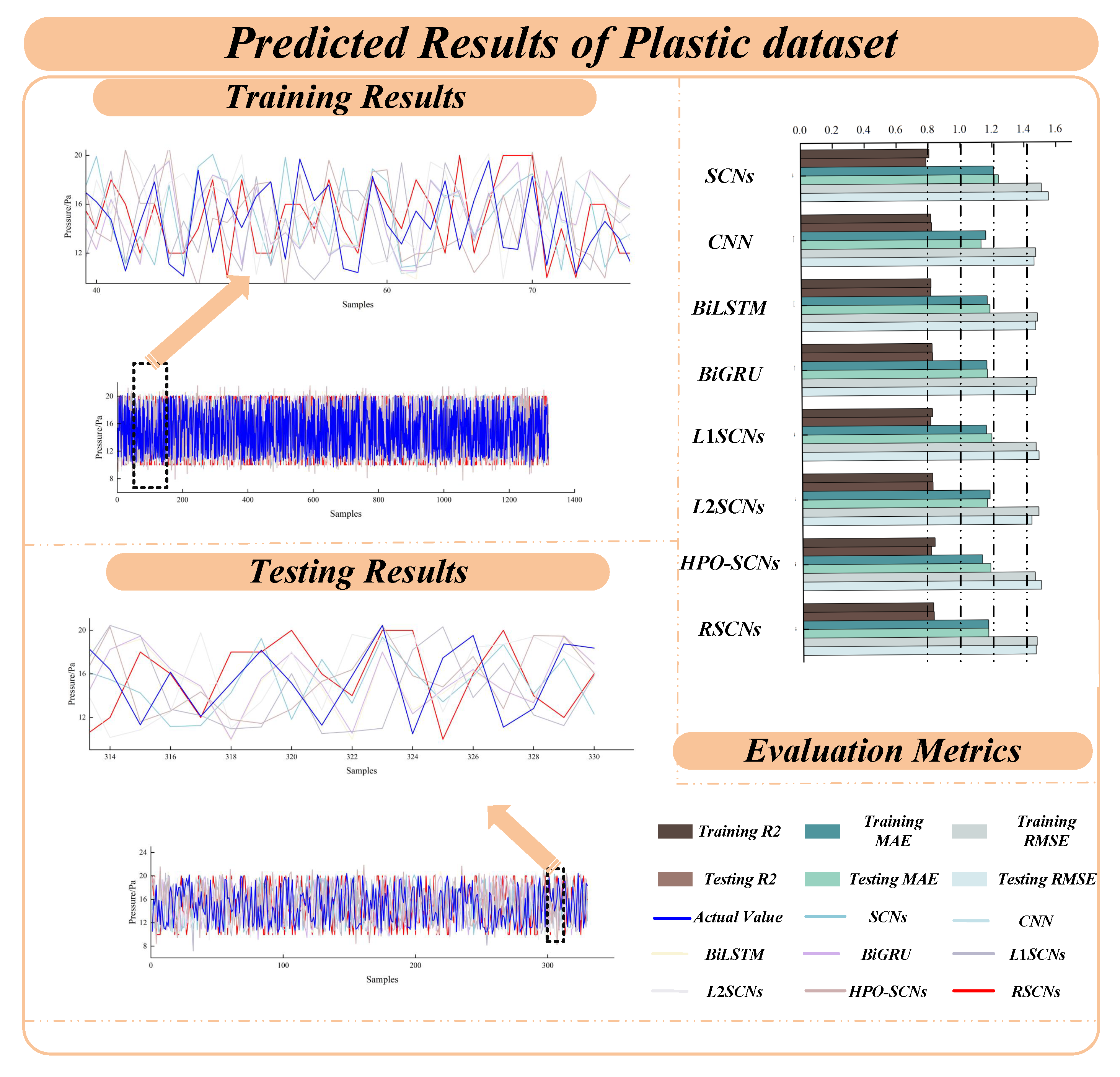

| Plastic | SCNs | 0.8063 ± 0.0323 | 1.2085 ± 0.1032 | 1.5096 ± 0.3114 | 0.7856 ± 0.0366 | 1.2663 ± 0.1317 | 1.6179 ± 0.3912 |

| CNN | 0.8148 ± 0.0221 | 1.1577 ± 0.0217 | 1.4709 ± 0.1267 | 0.8104 ± 0.0225 | 1.1785 ± 0.0764 | 1.9578 ± 0.1436 | |

| BiLSTM | 0.8128 ± 0.0218 | 1.1650 ± 0.0340 | 1.4789 ± 0.0473 | 0.8116 ± 0.0121 | 1.1896 ± 0.0629 | 1.5711 ± 0.1809 | |

| BiGRU | 0.8167 ± 0.0315 | 1.1597 ± 0.0407 | 1.4636 ± 0.1557 | 0.8123 ± 0.0188 | 1.1805 ± 0.0391 | 1.4819 ± 0.0781 | |

| L1SCNs | 0.8191 ± 0.0313 | 1.1538 ± 0.0334 | 1.4543 ± 0.1031 | 0.8059 ± 0.0119 | 1.1931 ± 0.0816 | 1.5115 ± 0.1129 | |

| L2SCNs | 0.8136 ± 0.0310 | 1.1724 ± 0.0314 | 1.4795 ± 0.0747 | 0.8095 ± 0.0108 | 1.1631 ± 0.0304 | 1.5251 ± 0.0633 | |

| HPO-SCNs | 0.8284 ± 0.0291 | 1.1252 ± 0.0939 | 1.4550 ± 0.2871 | 0.8081 ± 0.0217 | 1.1794 ± 0.1143 | 1.5424 ± 0.2926 | |

| RSCNs | 0.8164 ± 0.0213 | 1.1598 ± 0.981 | 1.4625 ± 0.0935 | 0.8128 ± 0.0223 | 1.1702 ± 0.1104 | 1.4632 ± 0.2628 | |

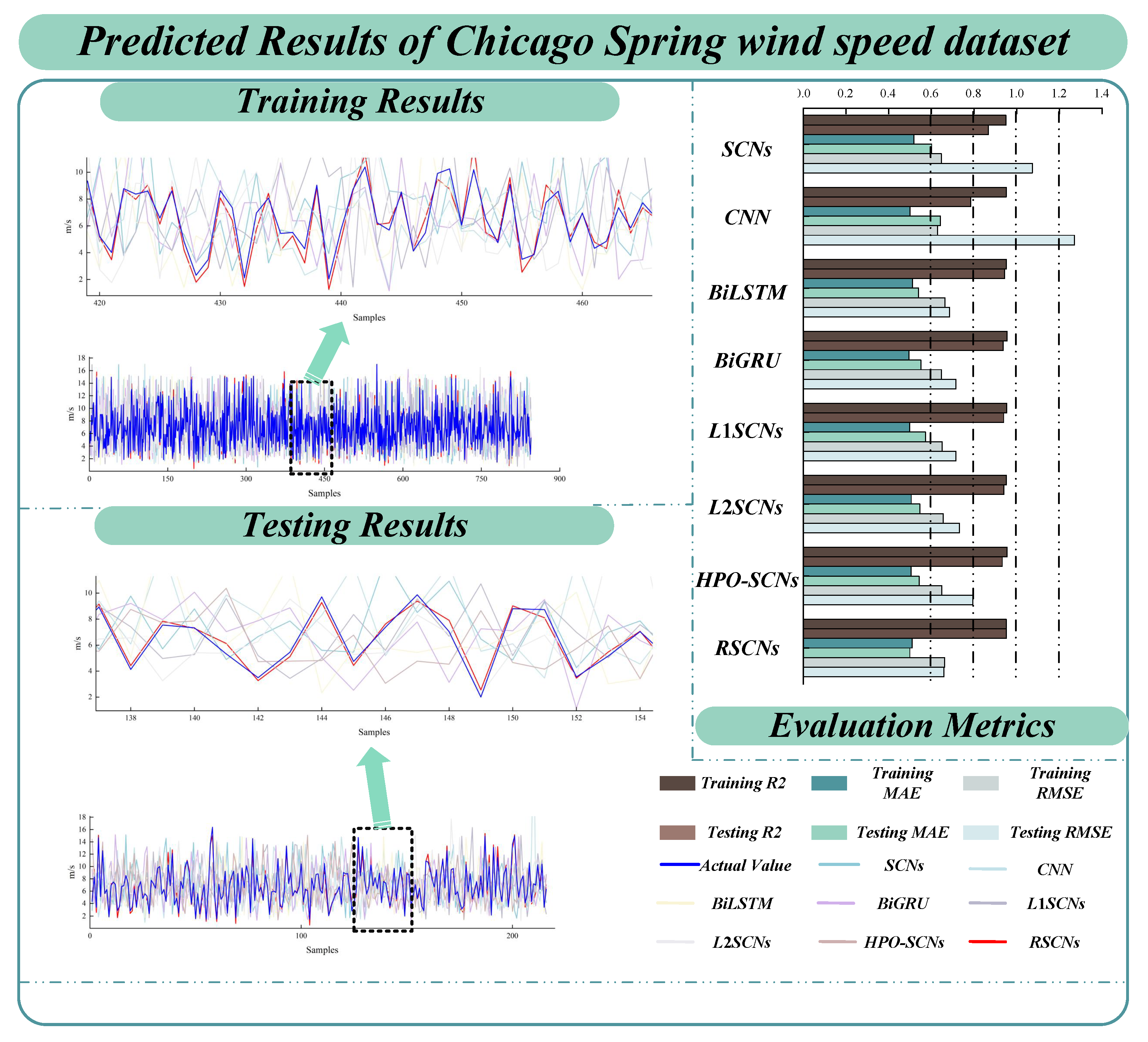

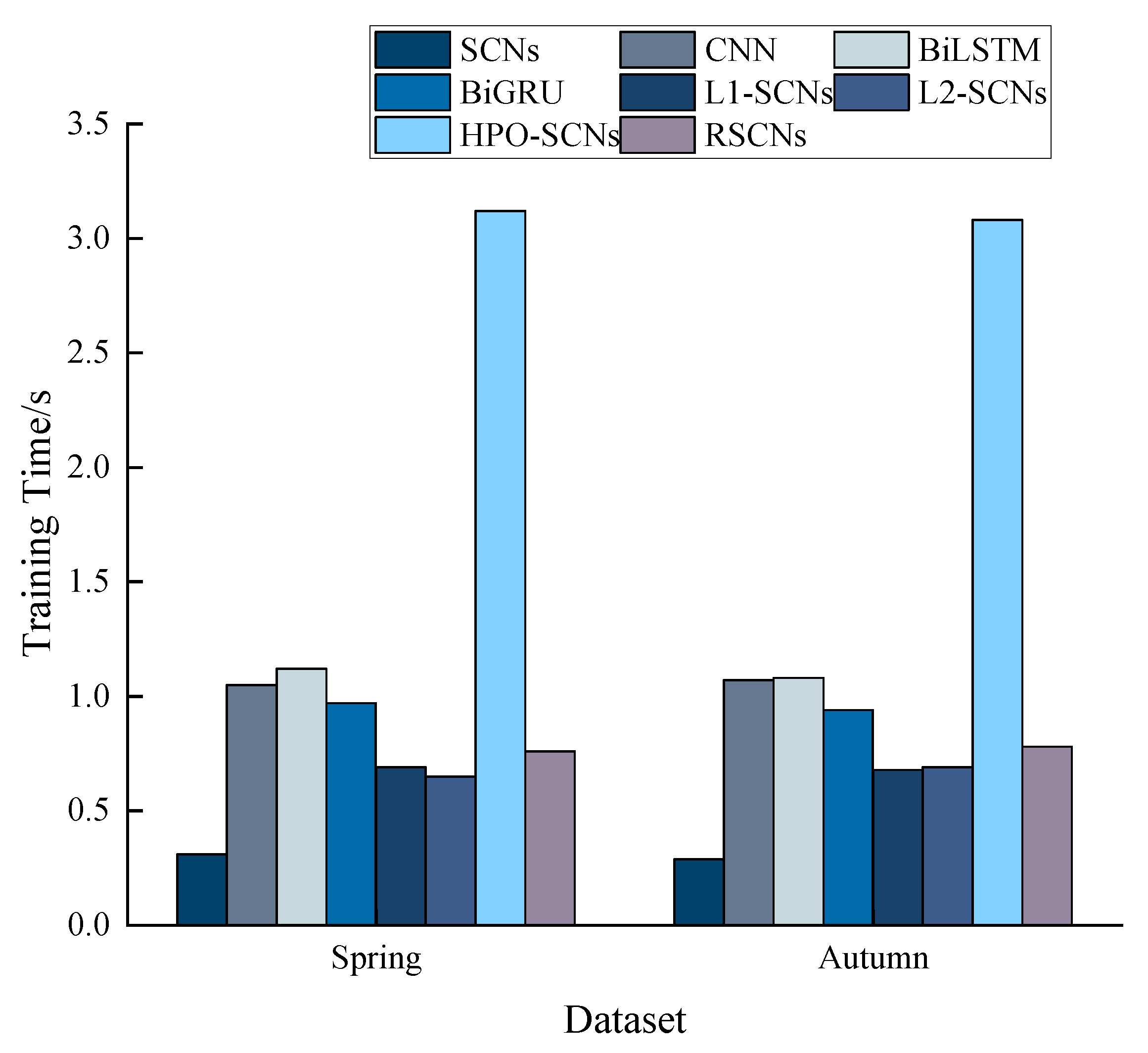

| Spring wind speed | SCNs | 0.9502 ± 0.0102 | 0.5197 ± 0.1033 | 0.6473 ± 0.1308 | 0.8665 ± 0.0077 | 0.6217 ± 0.1226 | 1.1286 ± 0.3118 |

| CNN | 0.9518 ± 0.0221 | 0.4999 ± 0.0417 | 0.6142 ± 0.1267 | 0.7829 ± 0.0205 | 0.6591 ± 0.0908 | 0.8433 ± 0.0416 | |

| BiLSTM | 0.9524 ± 0.0208 | 0.5114 ± 0.0740 | 0.6642 ± 0.1473 | 0.9419 ± 0.0117 | 0.5618 ± 0.0611 | 0.7027 ± 0.1039 | |

| BiGRU | 0.9548 ± 0.0215 | 0.4956 ± 0.0577 | 0.6482 ± 0.1007 | 0.9355 ± 0.0210 | 0.5701 ± 0.0446 | 0.7281 ± 0.0591 | |

| L1SCNs | 0.9540 ± 0.0213 | 0.4989 ± 0.0318 | 0.7153 ± 0.0931 | 0.9381 ± 0.0131 | 0.5839 ± 0.0699 | 0.7303 ± 0.1109 | |

| L2SCNs | 0.9527 ± 0.0210 | 0.5064 ± 0.0298 | 0.6554 ± 0.0678 | 0.9415 ± 0.0106 | 0.5437 ± 0.0388 | 0.7285 ± 0.0326 | |

| HPO-SCNs | 0.9550 ± 0.00193 | 0.5047 ± 0.0903 | 0.6496 ± 0.1256 | 0.9319 ± 0.0092 | 0.5387 ± 0.1232 | 0.7710 ± 0.1988 | |

| RSCNs | 0.9514 ± 0.00163 | 0.5107 ± 0.1002 | 0.6624 ± 0.1118 | 0.9421 ± 0.0049 | 0.5169 ± 0.0772 | 0.6813 ± 0.1122 | |

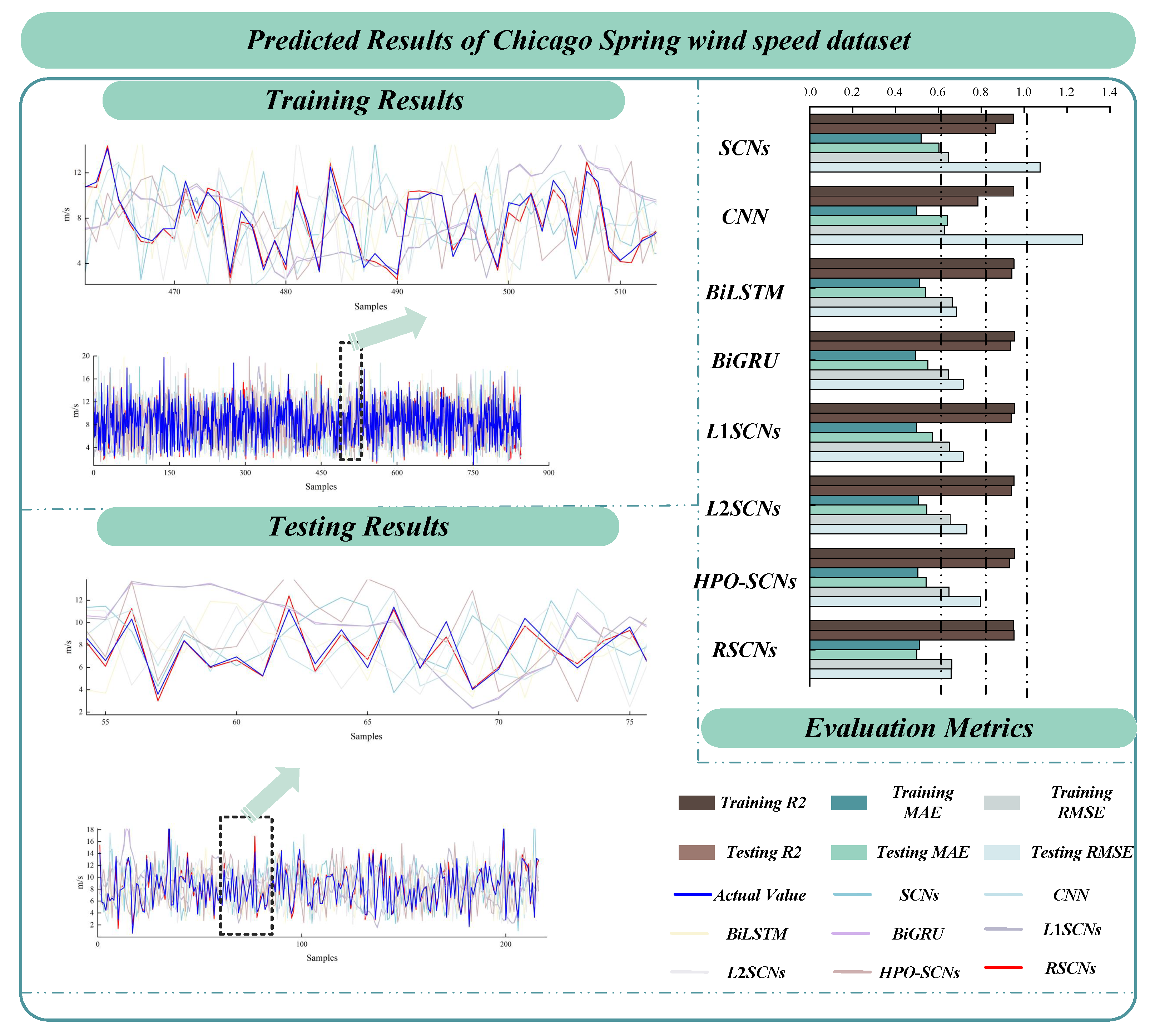

| Autumn wind speed | SCNs | 0.9570 ± 0.0085 | 0.5174 ± 0.1266 | 0.6586 ± 0.1758 | 0.9479 ± 0.0072 | 0.5637 ± 0.1336 | 0.7446 ± 0.2305 |

| CNN | 0.9587 ± 0.0211 | 0.5053 ± 0.0446 | 0.6535 ± 0.1267 | 0.9359 ± 0.0229 | 0.6425 ± 0.0905 | 0.7883 ± 0.1671 | |

| BiLSTM | 0.9578 ± 0.0118 | 0.5085 ± 0.0570 | 0.6570 ± 0.1073 | 0.9477 ± 0.0105 | 0.5726 ± 0.0553 | 0.7209 ± 0.1115 | |

| BiGRU | 0.9587 ± 0.0205 | 0.5061 ± 0.0377 | 0.6540 ± 0.1007 | 0.9385 ± 0.0203 | 0.5818 ± 0.0317 | 0.7241 ± 0.0646 | |

| L1SCNs | 0.9589 ± 0.0243 | 0.5115 ± 0.0318 | 0.6521 ± 0.0531 | 0.8913 ± 0.0171 | 0.6959 ± 0.0518 | 0.9447 ± 0.1696 | |

| L2SCNs | 0.9564 ± 0.0212 | 0.5229 ± 0.0288 | 0.6712 ± 0.0578 | 0.9517 ± 0.0208 | 0.5361 ± 0.0318 | 0.7123 ± 0.0651 | |

| HPO-SCNs | 0.9594 ± 0.0076 | 0.5052 ± 0.1289 | 0.6605 ± 0.1793 | 0.9433 ± 0.0063 | 0.5680 ± 0.1262 | 0.7414 ± 0.1951 | |

| RSCNs | 0.9560 ± 0.0041 | 0.5613 ± 0.1199 | 0.6672 ± 0.1648 | 0.9543 ± 0.0053 | 0.5221 ± 0.1103 | 0.6921 ± 0.1538 | |
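One quick way to read the testing-RMSE columns of the comparison table is the relative reduction that RSCNs achieve over the baseline SCNs. The sketch below copies the mean testing RMSE of SCNs and RSCNs for each dataset from that table; the dictionary and helper names are illustrative.

```python
# Mean testing RMSE copied from the comparison table: (SCNs, RSCNs)
test_rmse = {
    "Ele-2": (107.8005, 99.5576),
    "Laser": (3.8830, 3.3159),
    "Mortgage": (9.3939, 8.9108),
    "Plastic": (1.6179, 1.4632),
    "Spring wind speed": (1.1286, 0.6813),
    "Autumn wind speed": (0.7446, 0.6921),
}

def relative_reduction(baseline, proposed):
    """Percentage by which `proposed` lowers the error of `baseline`."""
    return 100.0 * (baseline - proposed) / baseline

for name, (scn, rscn) in test_rmse.items():
    print(f"{name:18s} {relative_reduction(scn, rscn):6.2f} %")
```

This gives, for example, about 7.65 % for Ele-2 and about 39.63 % for spring wind speed. The calculation ignores the ± spread, so differences smaller than one standard deviation (for Mortgage, 8.9108 ± 1.3774 versus 9.3939 ± 2.0125) should not be over-read.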

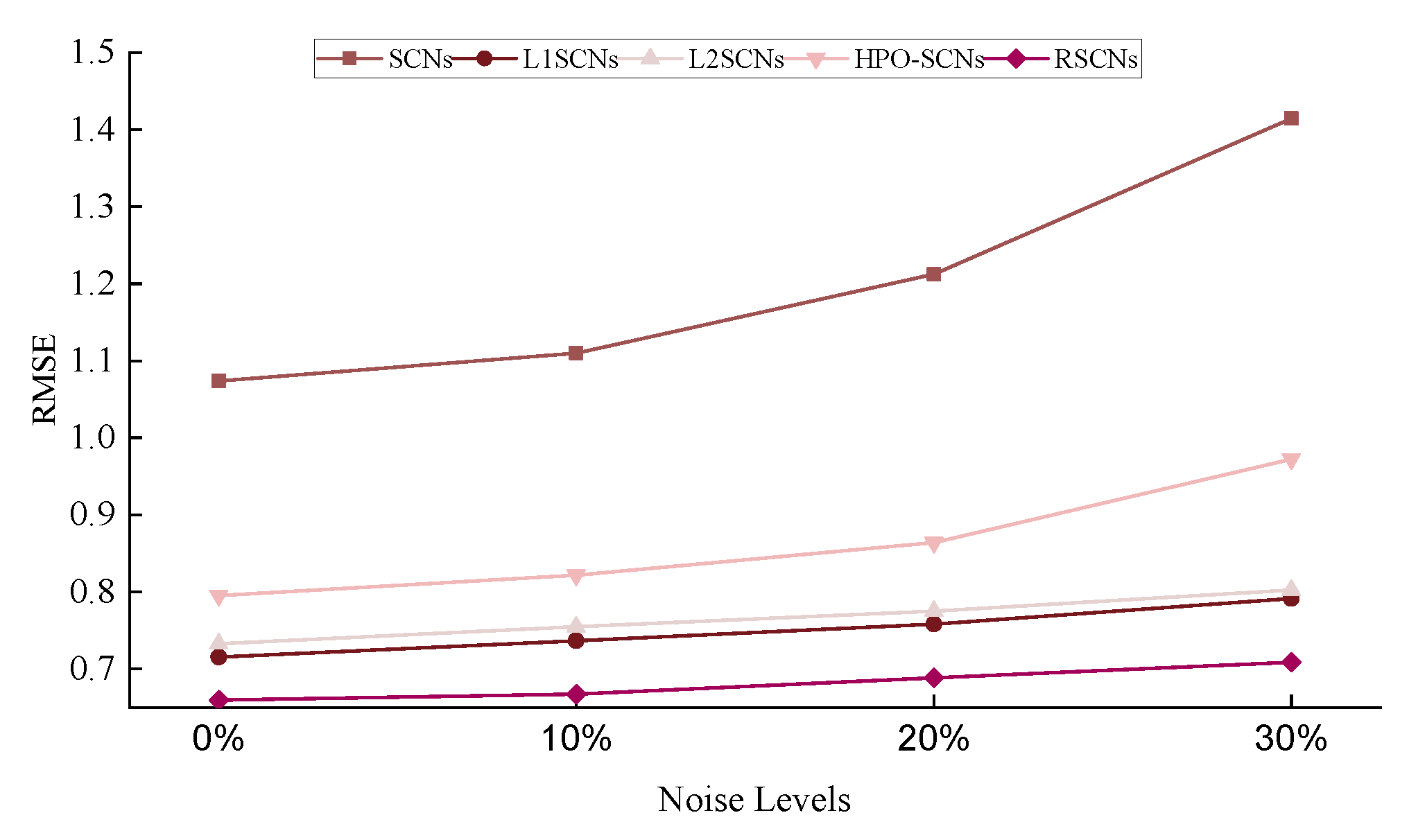

| Algorithms | 0% noise | 10% noise | 20% noise | 30% noise |
|---|---|---|---|---|
| SCNs | | | | |
| CNN | | | | |
| BiLSTM | | | | |
| BiGRU | | | | |
| L1SCNs | | | | |
| L2SCNs | | | | |
| HPO-SCNs | | | | |
| RSCNs | 0.6597 ± 0.1091 | 0.6674 ± 0.1099 | 0.6885 ± 0.1082 | 0.7086 ± 0.1426 |

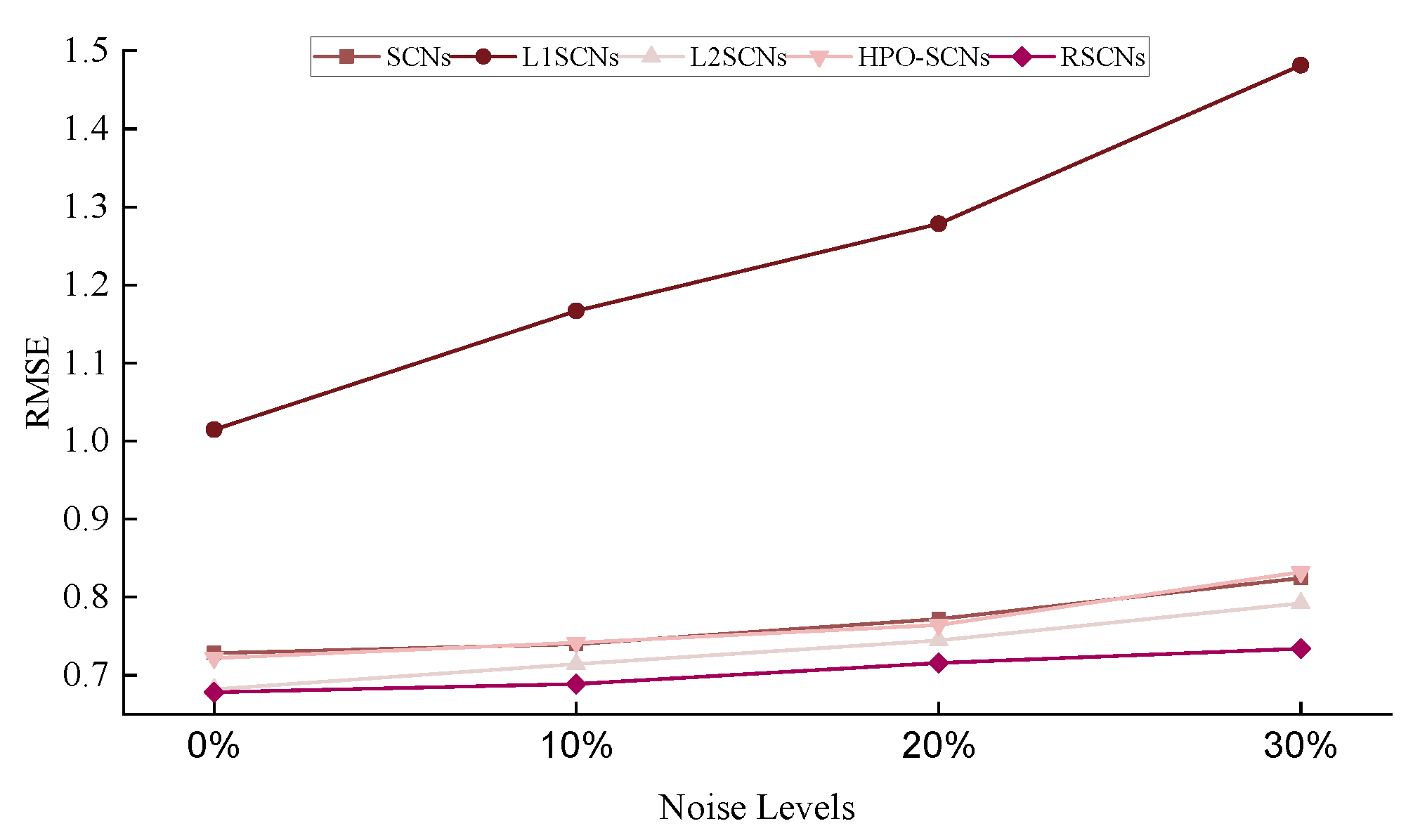

| Algorithms | 0% noise | 10% noise | 20% noise | 30% noise |
|---|---|---|---|---|
| SCNs | | | | |
| CNN | | | | |
| BiLSTM | | | | |
| BiGRU | | | | |
| L1SCNs | | | | |
| L2SCNs | | | | |
| HPO-SCNs | | | | |
| RSCNs | 0.6782 ± 0.1591 | 0.6891 ± 0.1483 | 0.7158 ± 0.1468 | 0.7341 ± 0.1796 |
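Only the RSCNs rows of the two noise-level tables carry values, so the robustness trend can be quantified for RSCNs alone. The sketch below computes the relative increase of the reported mean value at each noise level over the noise-free (0%) entry; the list names and the `degradation` helper are illustrative.

```python
noise_levels = [0, 10, 20, 30]                      # percent, as in the table headers
rscns_first_table = [0.6597, 0.6674, 0.6885, 0.7086]
rscns_second_table = [0.6782, 0.6891, 0.7158, 0.7341]

def degradation(values):
    """Relative increase (%) of each entry over the noise-free entry."""
    base = values[0]
    return [100.0 * (v - base) / base for v in values]

for label, vals in [("first table", rscns_first_table),
                    ("second table", rscns_second_table)]:
    pct = degradation(vals)
    print(label, ", ".join(f"{n}%: +{p:.2f}%" for n, p in zip(noise_levels, pct)))
```

At 30% noise the reported RSCNs value rises by roughly 7.4% and 8.2% in the two tables. Because the other algorithms' cells are empty, no cross-method comparison can be computed from these tables.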

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jin, F.; Chen, X.; Yu, Y.; Li, K. An Improved Regularization Stochastic Configuration Network for Robust Wind Speed Prediction. Energies 2025, 18, 6170. https://doi.org/10.3390/en18236170
