Instance-Based Transfer Learning-Improved Battery State-of-Health Estimation with Self-Attention Mechanism

Abstract

1. Introduction

- To eliminate dependence on prior knowledge, it is crucial to develop accurate data-driven methods for battery SOH estimation.

- Comprehensive importance weighting is essential for SOH estimation under multiple complex operating conditions to effectively extract useful information.

- Conducting interpretability analysis of the operational mechanisms within black-box data-driven methods can enhance credibility and provide clearer guidance for battery management system maintenance.

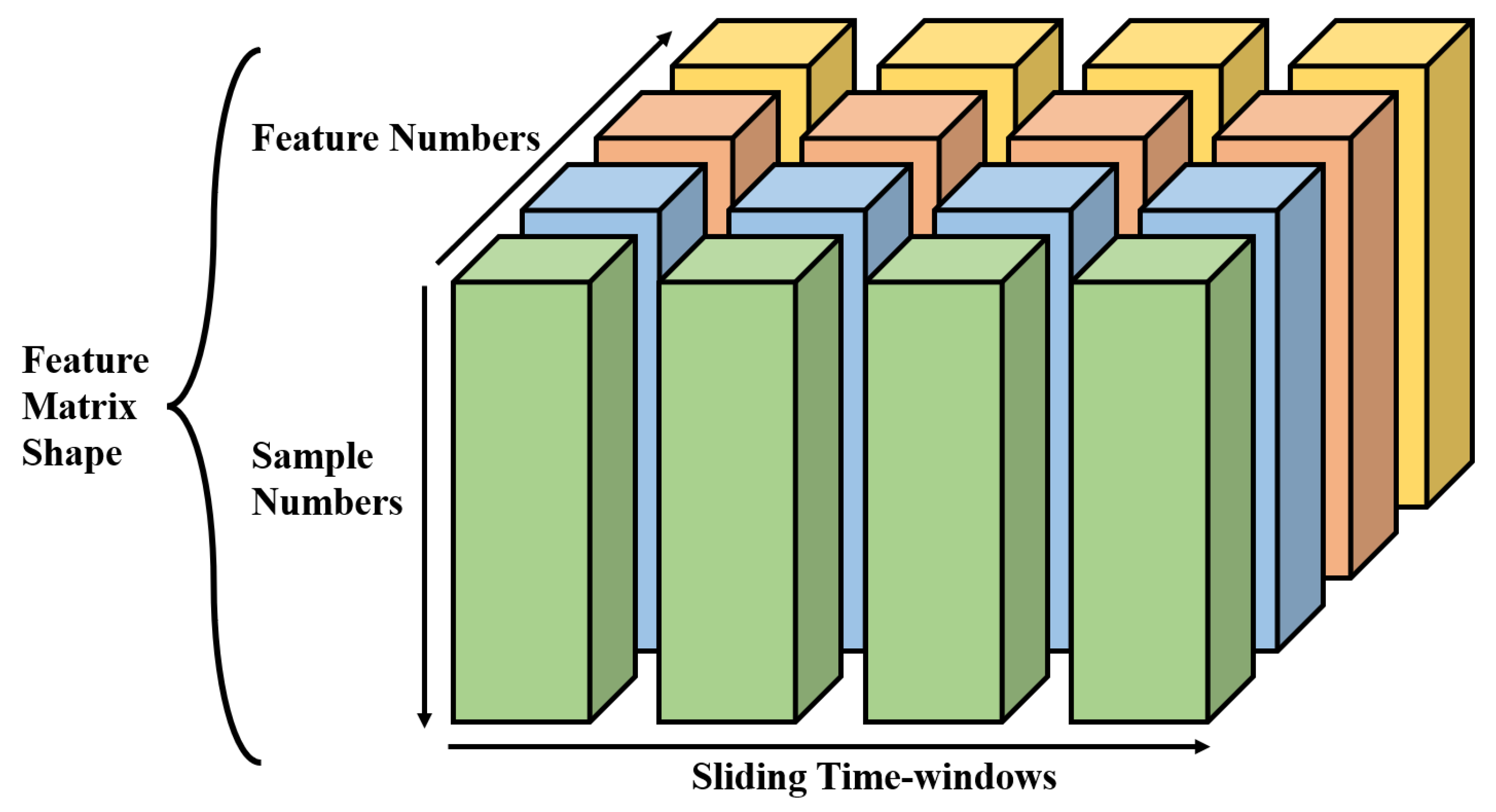

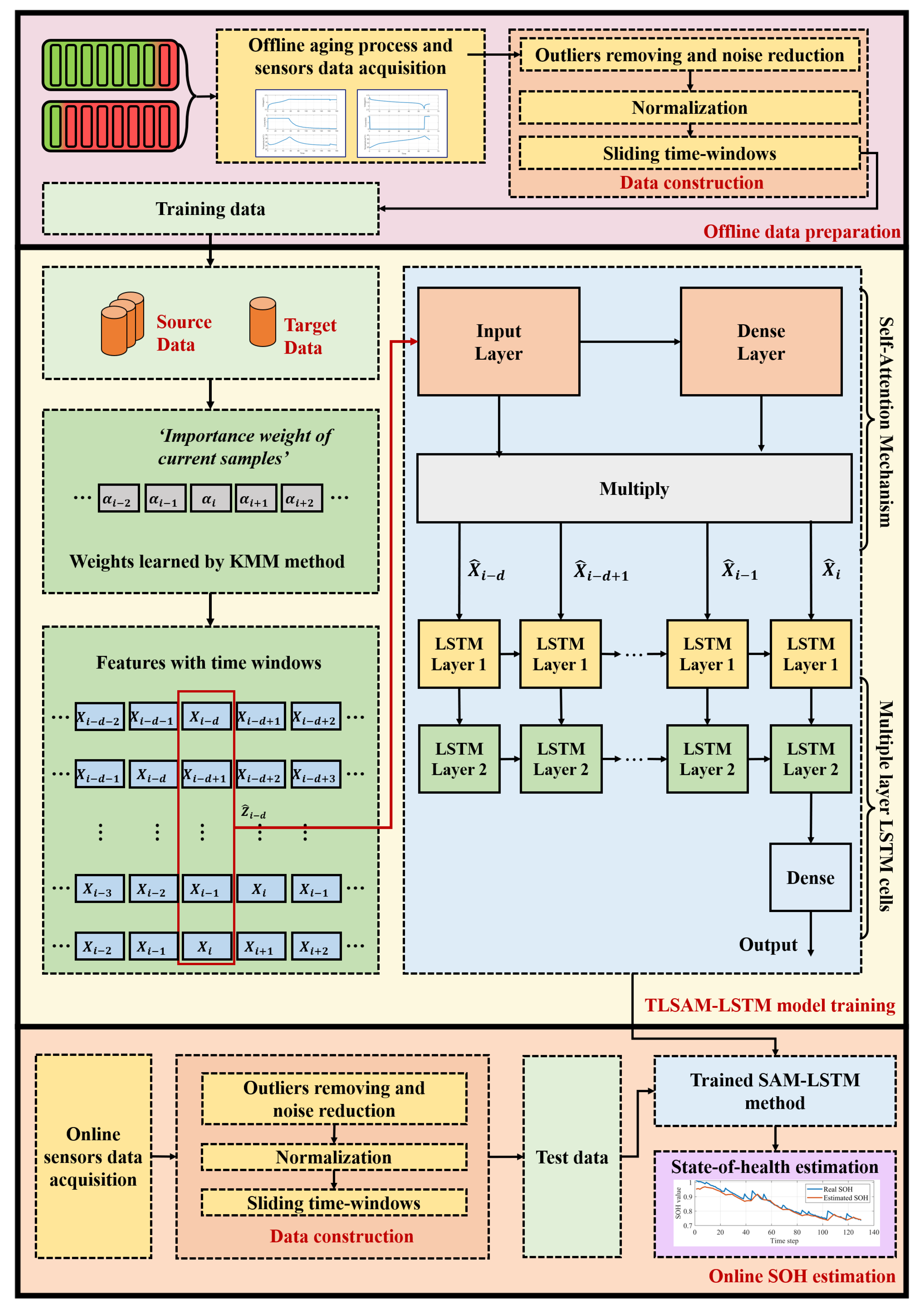

- This study employs sliding-window-based features to implement a data-driven battery health monitoring framework. Recognizing that each data point in the input matrix contributes differentially to the results, we incorporate feature weighting via a self-attention mechanism.

- For multi-source domains, data source quality significantly impacts model training outcomes and generalization capability in the target domain. We derive sample weights through a sample transfer approach that minimizes inter-dataset distribution distances, assigning higher weights to highly transferable samples to facilitate domain adaptation [30].

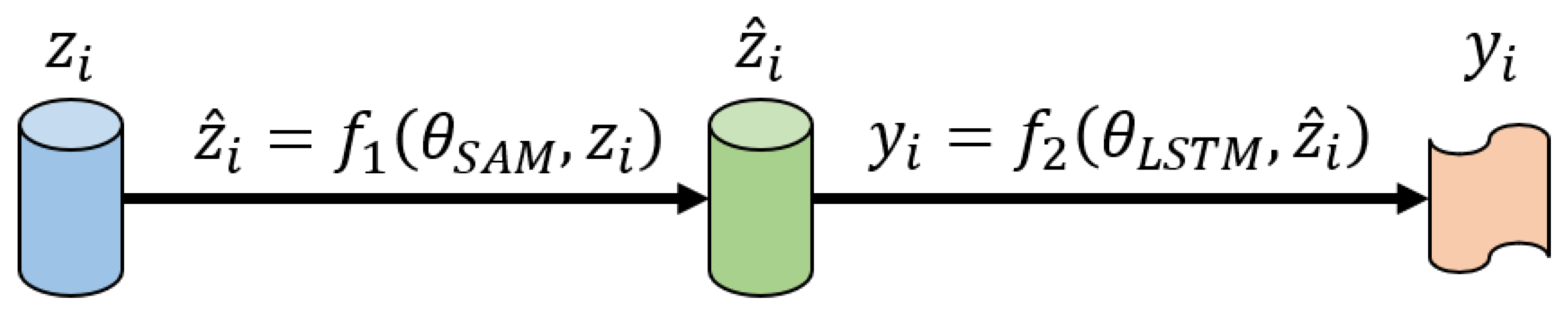

- Our methodology incorporates dual weighting across feature and sample dimensions. Employing a multi-layer LSTM architecture as the base estimator, we implement pre-training and fine-tuning strategies to effectively capture underlying data relationships and reduce estimation errors.

- Validation on the CALCE and NASA datasets demonstrates the superior performance of our proposed algorithm in comparative analysis. To enhance interpretability, we develop a visual representation of the importance weighting mechanism, illustrating the model’s focus during the training process.
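The sliding-window input described above can be illustrated with a minimal sketch. The window length of 10 and the 7 features per cycle follow the hyper-parameter table; the function name `sliding_windows` and the toy data are assumptions made for illustration only, not the authors' implementation.

```python
from typing import List, Sequence


def sliding_windows(series: Sequence[Sequence[float]], window: int) -> List[List[List[float]]]:
    """Stack `window` consecutive cycles into one input matrix per sample."""
    if window <= 0:
        raise ValueError("window must be positive")
    return [
        [list(row) for row in series[start:start + window]]
        for start in range(len(series) - window + 1)
    ]


# Toy data (placeholder values): 12 cycles with 7 features each.
cycles = [[float(c + f) for f in range(7)] for c in range(12)]
windows = sliding_windows(cycles, window=10)
print(len(windows), len(windows[0]), len(windows[0][0]))  # 3 10 7
```

Each window is a 10 × 7 matrix, which is the kind of input matrix whose entries the self-attention mechanism then weights.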

2. Methodology

2.1. Discrepancy-Based Importance Weighting

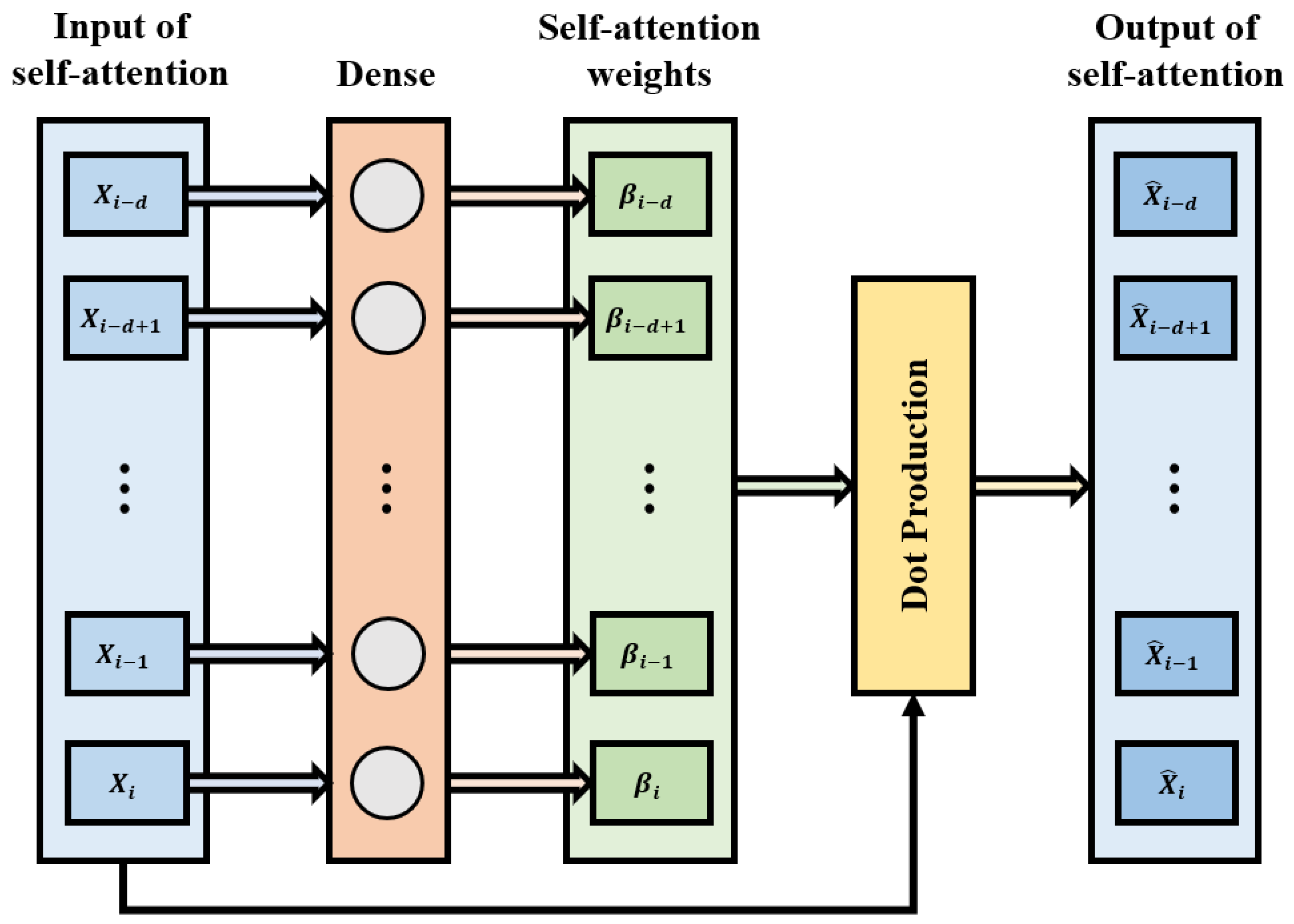
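The sample weighting idea from the Introduction (higher weights for source samples that resemble the target domain) can be sketched with a simplified kernel mean matching (KMM) routine. This is only an illustration under stated assumptions: it uses an RBF kernel, keeps only the box constraint on the weights, and replaces the usual quadratic-programming solver with projected gradient descent. The names `rbf` and `kmm_weights`, the parameters `gamma` and `bound`, and the toy data are hypothetical.

```python
import math
from typing import List, Sequence


def rbf(a: Sequence[float], b: Sequence[float], gamma: float) -> float:
    """RBF kernel between two feature vectors."""
    return math.exp(-gamma * sum((p - q) ** 2 for p, q in zip(a, b)))


def kmm_weights(source: List[Sequence[float]], target: List[Sequence[float]],
                gamma: float = 1.0, bound: float = 5.0, steps: int = 500) -> List[float]:
    """Minimize 0.5 * b^T K b - kappa^T b subject to 0 <= b_i <= bound."""
    n_s, n_t = len(source), len(target)
    K = [[rbf(a, b, gamma) for b in source] for a in source]
    kappa = [n_s / n_t * sum(rbf(a, t, gamma) for t in target) for a in source]
    # The largest row sum bounds the largest eigenvalue of K, so this step size is stable.
    step = 1.0 / max(sum(row) for row in K)
    beta = [1.0] * n_s
    for _ in range(steps):
        grad = [sum(K[i][j] * beta[j] for j in range(n_s)) - kappa[i] for i in range(n_s)]
        # Gradient step followed by projection onto the box [0, bound].
        beta = [min(bound, max(0.0, b - step * g)) for b, g in zip(beta, grad)]
    return beta


# Toy 1-D example: the first two source samples lie close to the target samples.
source = [[0.0], [0.1], [2.0], [2.1]]
target = [[0.0], [0.05], [0.1]]
weights = kmm_weights(source, target)
print([round(w, 3) for w in weights])
```

In this toy case the two source samples near the target receive clearly larger weights than the two distant ones, which is the behaviour the weighting scheme relies on.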

2.2. Self-Attention-Based Importance Weighting
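As a rough illustration of feature weighting by self-attention, the sketch below applies generic scaled dot-product self-attention to a small input matrix. It assumes identity projections (queries, keys, and values all equal the input), which is a simplification; the source does not specify the layer design, and the names `softmax` and `self_attention` are chosen for this example only.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix) -> Tuple[Matrix, Matrix]:
    """Scaled dot-product self-attention with Q = K = V = x (assumed, no learned weights)."""
    d = len(x[0])
    scores = [
        [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in x]
        for q in x
    ]
    weights = [softmax(row) for row in scores]
    output = [
        [sum(w * x[j][col] for j, w in enumerate(w_row)) for col in range(d)]
        for w_row in weights
    ]
    return output, weights


# Toy window: 3 time steps, 2 features (placeholder values).
window = [[0.1, 0.9], [0.2, 0.8], [0.9, 0.1]]
attended, attention = self_attention(window)
print([round(sum(row), 6) for row in attention])
```

Each row of `attention` is a probability distribution over time steps, so it can be read directly as the importance assigned to each row of the input window.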

2.3. Training Process

- Input gate: determines which new information from the current input is written to the cell state.

- Forget gate: decides which part of the previous cell state is discarded; together with the input gate, it updates the cell state.

- Output gate: the updated cell state, the previous hidden state, and the current input jointly determine the current hidden state.
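The three gates listed above can be made concrete with a single step of the standard LSTM cell. This is a generic textbook formulation written in plain Python, not the authors' code; the parameter names (`W_f`, `b_f`, and so on) and the zero-valued toy parameters are assumptions for illustration.

```python
import math
from typing import Dict, List, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def affine(W: List[List[float]], b: List[float], v: List[float]) -> List[float]:
    """Compute W v + b for a small dense layer."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]


def lstm_step(x: List[float], h_prev: List[float], c_prev: List[float],
              p: Dict[str, list]) -> Tuple[List[float], List[float]]:
    z = h_prev + x  # concatenate previous hidden state and current input
    f = [sigmoid(v) for v in affine(p["W_f"], p["b_f"], z)]    # forget gate
    i = [sigmoid(v) for v in affine(p["W_i"], p["b_i"], z)]    # input gate
    g = [math.tanh(v) for v in affine(p["W_g"], p["b_g"], z)]  # candidate cell values
    o = [sigmoid(v) for v in affine(p["W_o"], p["b_o"], z)]    # output gate
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c


# Toy parameters: hidden size 2, input size 1, all weights and biases zero.
hidden, inputs = 2, 1
zeros_W = [[0.0] * (hidden + inputs) for _ in range(hidden)]
zeros_b = [0.0] * hidden
params = {name: zeros_W for name in ("W_f", "W_i", "W_g", "W_o")}
params.update({name: zeros_b for name in ("b_f", "b_i", "b_g", "b_o")})

h_new, c_new = lstm_step([0.3], [0.0, 0.0], [1.0, -2.0], params)
print(c_new)  # [0.5, -1.0]
```

With all parameters at zero, every gate outputs 0.5 and the candidate is 0, so the cell state is simply halved, which makes the role of the forget gate easy to verify by hand.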

2.4. Pre-Train and Fine-Tuning
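The pre-train and fine-tune strategy can be sketched on a deliberately small stand-in model. The example below swaps the LSTM for a one-variable linear model and Adam for plain gradient descent so that it stays self-contained; only the two-stage structure, the per-sample weights on the source data, and the epoch counts (100 and 50, from the hyper-parameter table) mirror the text. All names, data values, sample weights, and the learning rate are placeholders.

```python
from typing import List, Tuple

Params = Tuple[float, float]  # (slope, intercept) of the stand-in linear model


def weighted_mse(params: Params, xs: List[float], ys: List[float], ws: List[float]) -> float:
    w, b = params
    return sum(s * (w * x + b - y) ** 2 for x, y, s in zip(xs, ys, ws)) / sum(ws)


def fit(params: Params, xs: List[float], ys: List[float], ws: List[float],
        epochs: int, lr: float) -> Params:
    """Full-batch gradient descent on the sample-weighted mean square error."""
    w, b = params
    total = sum(ws)
    for _ in range(epochs):
        grad_w = sum(2 * s * (w * x + b - y) * x for x, y, s in zip(xs, ys, ws)) / total
        grad_b = sum(2 * s * (w * x + b - y) for x, y, s in zip(xs, ys, ws)) / total
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


# Source domain: many samples, each with a transfer weight (placeholder values).
source_x = [0.0, 0.25, 0.5, 0.75, 1.0]
source_y = [1.0 - 0.5 * x for x in source_x]
source_w = [0.2, 0.5, 1.0, 1.0, 1.0]
# Known target data: few samples, slightly shifted relationship.
target_x = [0.0, 0.5, 1.0]
target_y = [0.9 - 0.5 * x for x in target_x]
target_w = [1.0, 1.0, 1.0]

pretrained = fit((0.0, 0.0), source_x, source_y, source_w, epochs=100, lr=0.1)
fine_tuned = fit(pretrained, target_x, target_y, target_w, epochs=50, lr=0.1)
loss_before = weighted_mse(pretrained, target_x, target_y, target_w)
loss_after = weighted_mse(fine_tuned, target_x, target_y, target_w)
print(loss_before > loss_after)  # True
```

Fine-tuning starts from the pre-trained parameters rather than from scratch, so the few target samples only need to correct the shift between domains.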

2.5. Algorithm Implementation

Algorithm 1: Process of the TLSAM-LSTM method

3. Experimental Results and Improvement Analysis

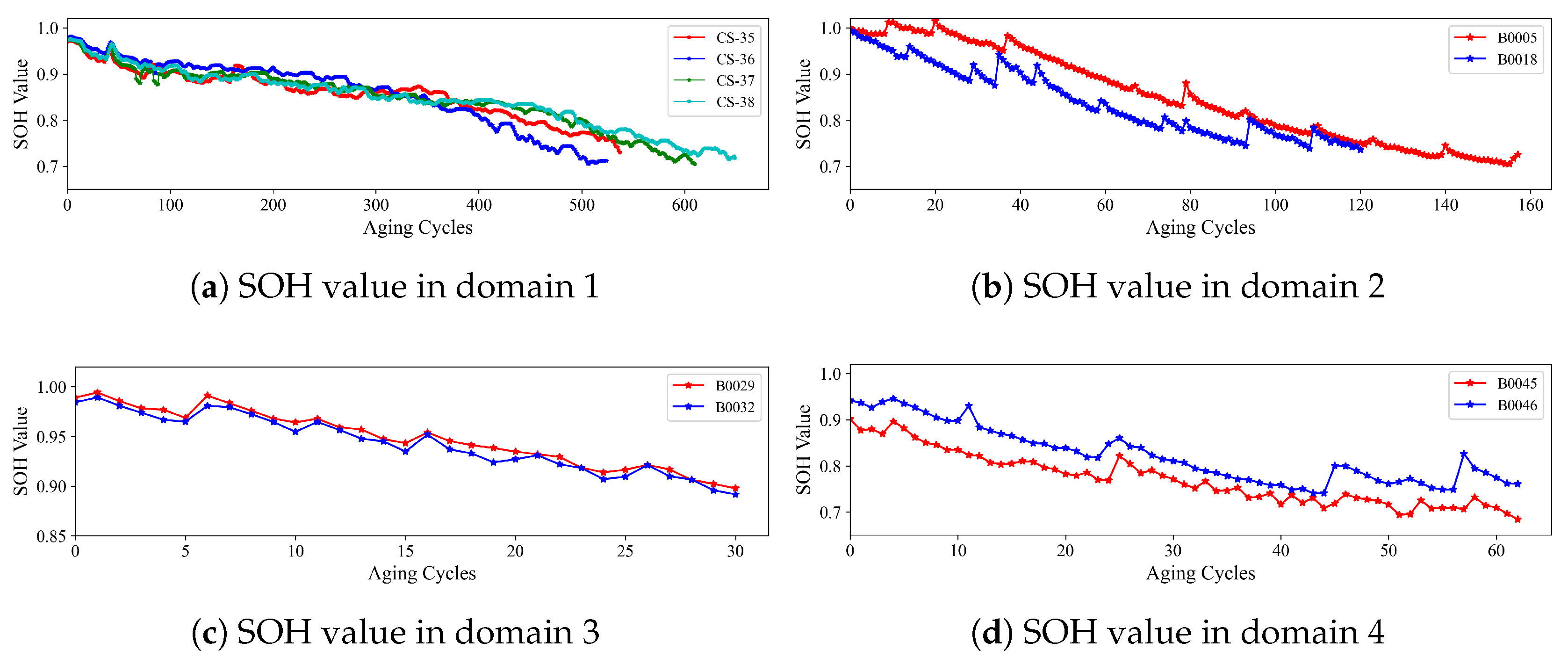

3.1. Datasets Selection and Preprocessing

3.1.1. Datasets Introduction

3.1.2. Feature Extraction

3.1.3. Preprocessing

- Outlier removal: Let σ be the standard deviation of a feature over a short time interval. Within that interval, if any feature value deviates from the interval average by more than 2σ, the corresponding sample is marked as an outlier. Outliers are removed to improve data quality.

- Noise reduction: A moving-average filter reduces the negative impact of noise on data quality.

- Normalization: Features are scaled to the range 0 to 1, which reduces the impact of differing feature magnitudes.
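The three preprocessing steps above can be sketched as follows. This is a minimal illustration under assumptions the source does not fix: non-overlapping intervals of 10 samples, a trailing moving average, population standard deviation, and per-feature min-max scaling. The function names and toy data are hypothetical.

```python
import statistics
from typing import List


def remove_outliers(rows: List[List[float]], interval: int = 10, k: float = 2.0) -> List[List[float]]:
    """Drop a sample if any of its features lies more than k standard deviations from the interval mean."""
    kept = []
    for start in range(0, len(rows), interval):
        chunk = rows[start:start + interval]
        columns = list(zip(*chunk))
        means = [statistics.fmean(col) for col in columns]
        stds = [statistics.pstdev(col) for col in columns]
        for row in chunk:
            if all(abs(v - m) <= k * s for v, m, s in zip(row, means, stds)):
                kept.append(list(row))
    return kept


def moving_average(values: List[float], window: int = 3) -> List[float]:
    """Trailing moving average; early points use the samples available so far."""
    out = []
    for idx in range(len(values)):
        chunk = values[max(0, idx - window + 1): idx + 1]
        out.append(sum(chunk) / len(chunk))
    return out


def min_max_normalize(values: List[float]) -> List[float]:
    """Scale one feature to the range 0 to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Toy data: nine regular samples and one spike in the first feature.
rows = [[1.0, 5.0] for _ in range(9)] + [[10.0, 5.0]]
clean = remove_outliers(rows)
print(len(clean))  # 9
print(moving_average([1.0, 2.0, 3.0, 4.0], window=2))  # [1.0, 1.5, 2.5, 3.5]
print(min_max_normalize([2.0, 4.0, 6.0]))  # [0.0, 0.5, 1.0]
```

Applying the steps in the listed order keeps spikes from distorting the smoothing and the min-max range.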

3.1.4. Task Setting

3.2. Compared Algorithms and Hyper-Parameter Settings

- LSTM-NS (No Source): The LSTM model is trained only on the small known target dataset.

- CNN-S (Source): The Convolutional Neural Network automatically extracts local features from input data through its hierarchical architecture of convolutional, pooling, and fully-connected layers. This architecture progressively compresses information, reduces data redundancy, and ultimately enhances the model’s generalization capability.

- LSTM-S (Source): Source and target datasets are pooled to train the LSTM model, with no distinction between them during training.

- LSTM-PS: The LSTM model is pre-trained on source data and then fine-tuned on target data.

- TL-LSTM: Without the SAM-based method, TL-LSTM combines Sections 2.1, 2.3, and 2.4.

- SAM-LSTM: Without the KMM-based method, SAM-LSTM combines Sections 2.2, 2.3, and 2.4.

- TLSAM-LSTM: The complete proposed method, as described in Algorithm 1.

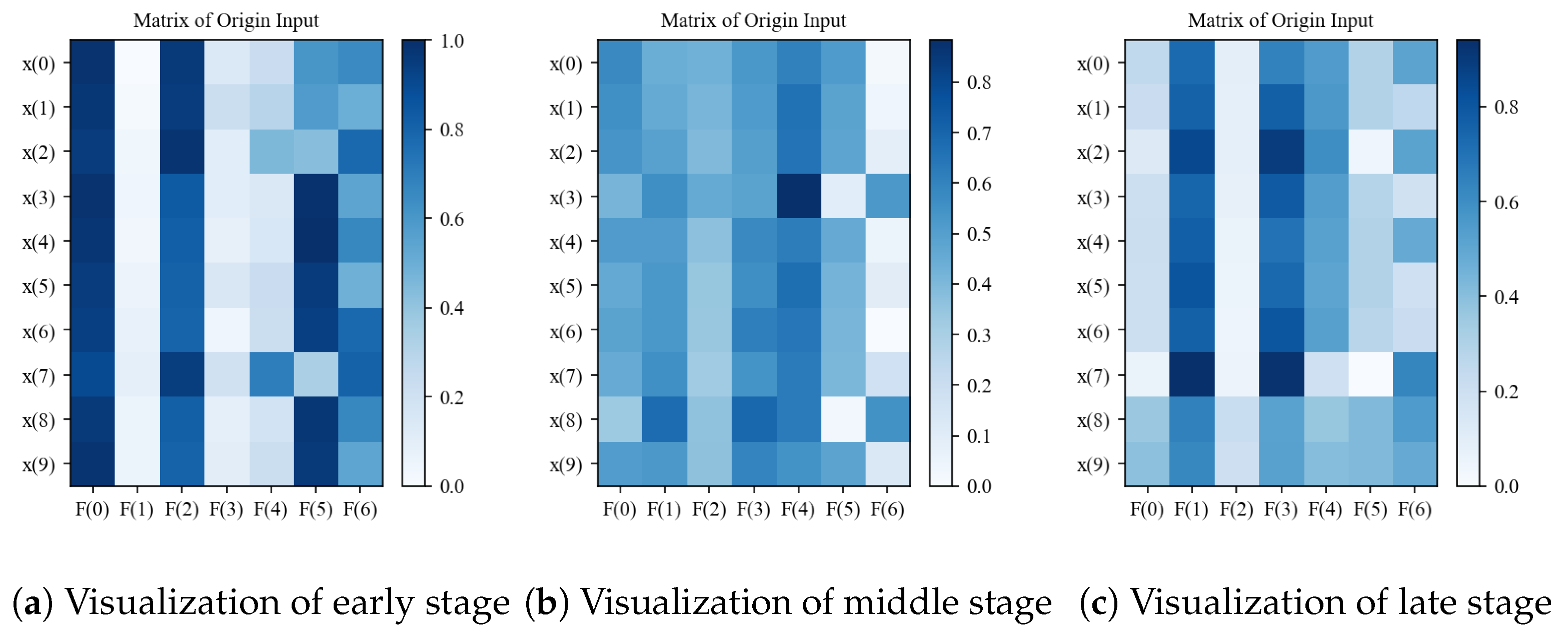

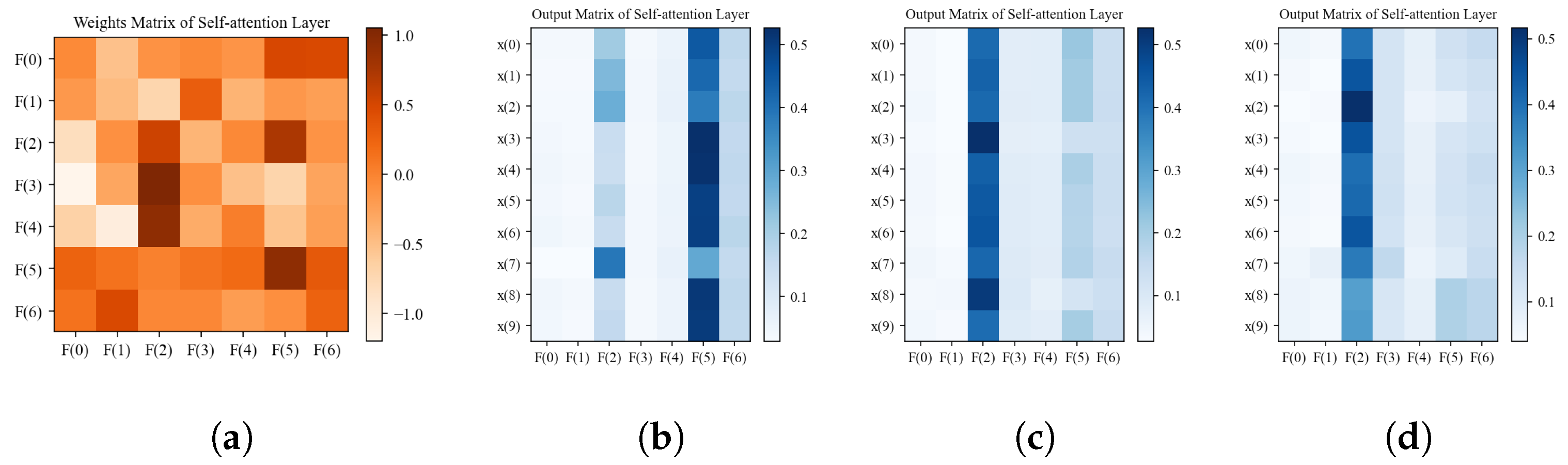

3.3. Interpretability Analysis of the Proposed Method

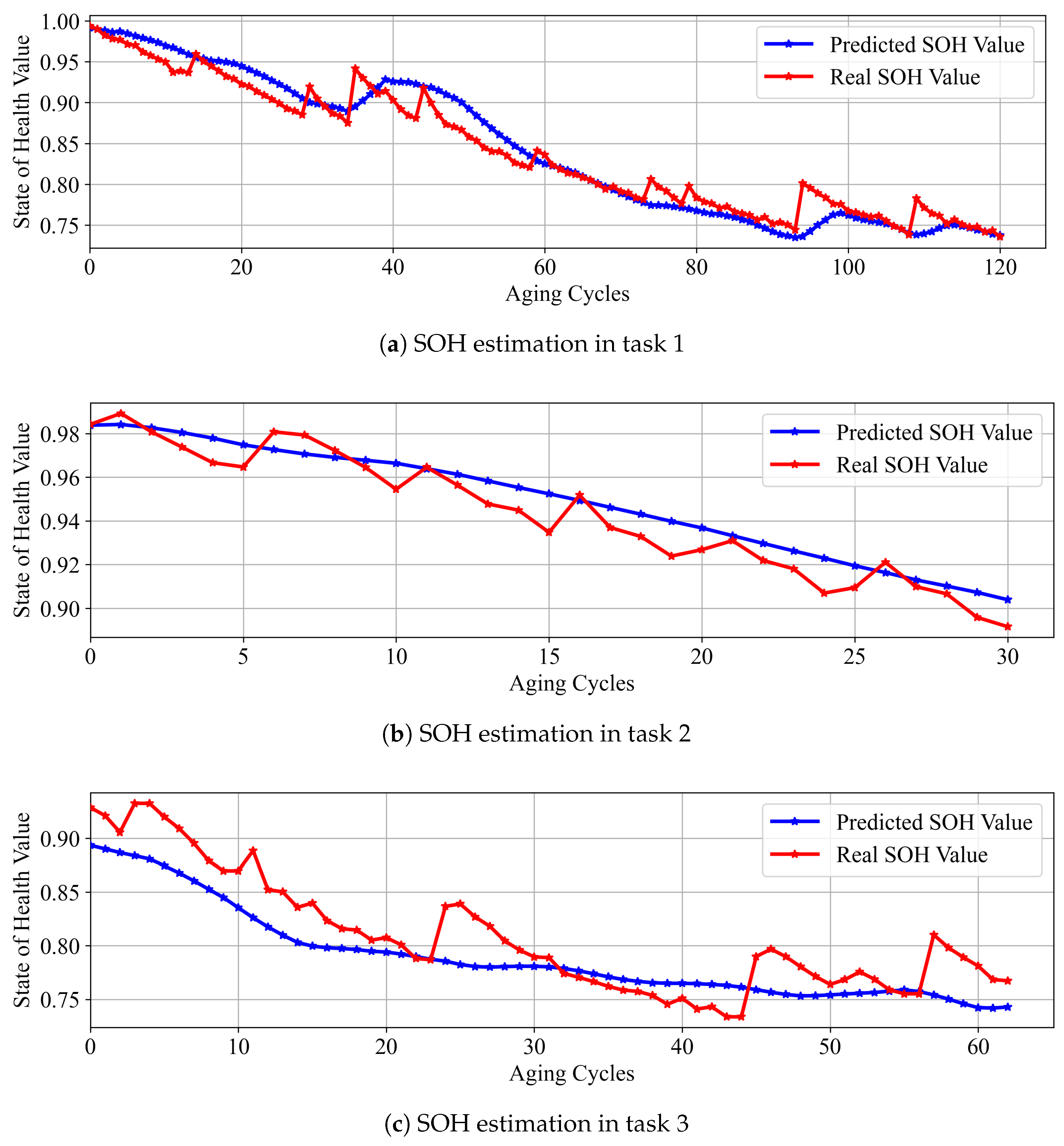

3.4. Results

Discussion

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Yao, L.; Xu, S.; Tang, A.; Zhou, F.; Hou, J.; Xiao, Y.; Fu, Z. A review of lithium-ion battery state of health estimation and prediction methods. World Electr. Veh. J. 2021, 12, 113.

- Zhang, J.; Jiang, Y.; Li, X.; Huo, M.; Luo, H.; Yin, S. An adaptive remaining useful life prediction approach for single battery with unlabeled small sample data and parameter uncertainty. Reliab. Eng. Syst. Saf. 2022, 222, 108357.

- Ge, M.F.; Liu, Y.; Jiang, X.; Liu, J. A review on state of health estimations and remaining useful life prognostics of lithium-ion batteries. Measurement 2021, 174, 109057.

- Belhachat, F.; Larbes, C.; Bennia, R. Recent advances in fault detection techniques for photovoltaic systems: An overview, classification and performance evaluation. Optik 2024, 306, 171797.

- Tian, J.; Jiang, Y.; Zhang, J.; Luo, H.; Yin, S. A novel data augmentation approach to fault diagnosis with class-imbalance problem. Reliab. Eng. Syst. Saf. 2024, 243, 109832.

- Zhang, J.; Tian, J.; Alcaide, A.M.; Leon, J.I.; Vazquez, S.; Franquelo, L.G.; Luo, H.; Yin, S. Lifetime extension approach based on the Levenberg–Marquardt neural network and power routing of DC–DC converters. IEEE Trans. Power Electron. 2023, 38, 10280–10291.

- Xiang, K.; Song, Y.; Ioannou, P.A. Nonlinear Adaptive PID Control for Nonlinear Systems. IEEE Trans. Autom. Control 2025, 70, 7000–7007.

- Jiang, Y.; Zhang, J.; Xia, L.; Liu, Y. State of health estimation for lithium-ion battery using empirical degradation and error compensation models. IEEE Access 2020, 8, 123858–123868.

- Li, Y.; Wei, Z.; Xie, C.; Vilathgamuwa, D.M. Physics-Based Model Predictive Control for Power Capability Estimation of Lithium-Ion Batteries. IEEE Trans. Ind. Inform. 2023, 19, 10763–10774.

- Wang, S.; Gao, H.; Takyi-Aninakwa, P.; Guerrero, J.M.; Fernandez, C.; Huang, Q. Improved Multiple Feature–Electrochemical Thermal Coupling Modeling of Lithium-Ion Batteries at Low-Temperature with Real-Time Coefficient Correction. Prot. Control Mod. Power Syst. 2024, 9, 157–173.

- Zhang, H.; Sun, H.; Kang, L.; Zhang, Y.; Wang, L.; Wang, K. Prediction of Health Level of Multiform Li–S Batteries Based on Incremental Capacity Analysis and an Improved LSTM. Prot. Control Mod. Power Syst. 2024, 9, 21–31.

- Yang, X.; Chen, Y.; Li, B.; Luo, D. Battery states online estimation based on exponential decay particle swarm optimization and proportional-integral observer with a hybrid battery model. Energy 2020, 191, 116509.

- Lin, C.; Xu, J.; Hou, J.; Liang, Y.; Mei, X. Ensemble Method with Heterogeneous Models for Battery State-of-Health Estimation. IEEE Trans. Ind. Inform. 2023, 19, 10160–10169.

- Guo, P.; Cheng, Z.; Yang, L. A data-driven remaining capacity estimation approach for lithium-ion batteries based on charging health feature extraction. J. Power Sources 2019, 412, 442–450.

- Li, X.; Sun, Y.; Lin, J.; Yin, S. The Synergy of Seeing and Saying: Revolutionary Advances in Multi-modality Medical Vision-Language Large Models. Artif. Intell. Sci. Eng. 2025, 1, 79–97.

- Zhang, J.; Qian, K.; Luo, H.; Liu, Y.; Qiao, X.; Xu, X.; Tian, J. Process monitoring for tower pumping units under variable operational conditions: From an integrated multitasking perspective. Control Eng. Pract. 2025, 156, 106229.

- Chang, C.; Wang, Q.; Jiang, J.; Wu, T. Lithium-ion battery state of health estimation using the incremental capacity and wavelet neural networks with genetic algorithm. J. Energy Storage 2021, 38, 102570.

- Choi, Y.; Ryu, S.; Park, K.; Kim, H. Machine learning-based lithium-ion battery capacity estimation exploiting multi-channel charging profiles. IEEE Access 2019, 7, 75143–75152.

- Li, P.; Zhang, Z.; Xiong, Q.; Ding, B.; Hou, J.; Luo, D.; Rong, Y.; Li, S. State-of-health estimation and remaining useful life prediction for the lithium-ion battery based on a variant long short term memory neural network. J. Power Sources 2020, 459, 228069.

- Ben-David, S.; Blitzer, J.; Crammer, K.; Kulesza, A.; Pereira, F.; Vaughan, J.W. A theory of learning from different domains. Mach. Learn. 2010, 79, 151–175.

- Pan, S.J.; Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 2009, 22, 1345–1359.

- Qian, Q.; Zhang, B.; Li, C.; Mao, Y.; Qin, Y. Federated transfer learning for machinery fault diagnosis: A comprehensive review of technique and application. Mech. Syst. Signal Process. 2025, 223, 111837.

- Yu, F.; Xiu, X.; Li, Y. A Survey on Deep Transfer Learning and Beyond. Mathematics 2022, 10, 3619.

- Qin, Y.; Yuen, C.; Yin, X.; Huang, B. A Transferable Multi-stage Model with Cycling Discrepancy Learning for Lithium-ion Battery State of Health Estimation. IEEE Trans. Ind. Inform. 2022, 19, 1933–1946.

- Li, Y.; Sheng, H.; Cheng, Y.; Stroe, D.I.; Teodorescu, R. State-of-health estimation of lithium-ion batteries based on semi-supervised transfer component analysis. Appl. Energy 2020, 277, 115504.

- Ye, Z.; Yu, J. State-of-Health Estimation for Lithium-Ion Batteries Using Domain Adversarial Transfer Learning. IEEE Trans. Power Electron. 2021, 37, 3528–3543.

- Deng, Z.; Lin, X.; Cai, J.; Hu, X. Battery health estimation with degradation pattern recognition and transfer learning. J. Power Sources 2022, 525, 231027.

- Tan, Z.; Luo, L.; Zhong, J. Knowledge transfer in evolutionary multi-task optimization: A survey. Appl. Soft Comput. 2023, 138, 110182.

- Qian, Q.; Wen, Q.; Tang, R.; Qin, Y. DG-Softmax: A new domain generalization intelligent fault diagnosis method for planetary gearboxes. Reliab. Eng. Syst. Saf. 2025, 260, 111057.

- Tian, J.; Luo, H.; Wu, S.; Yan, P.; Zhang, J. Source-Free Domain Adaptation for Open-Set Cross-Domain Fault Diagnosis. IEEE Trans. Ind. Inform. 2025.

- Zhao, K.; Lin, F.; Liu, X. Comprehensive production index prediction using dual-scale deep learning in mineral processing. arXiv 2024, arXiv:2408.02694.

- Xing, Y.; Ma, E.; Tsui, K.L.; Pecht, M. An ensemble model for predicting the remaining useful performance of lithium-ion batteries. Microelectron. Reliab. 2013, 53, 811–820.

- Saha, B.; Goebel, K. Battery Data Set. NASA Ames Prognostics Data Repository (2007). Available online: http://ti.arc.nasa.gov/project/prognostic-data-repository (accessed on 1 January 2025).

- Tan, Y.; Zhao, G. Transfer learning with long short-term memory network for state-of-health prediction of lithium-ion batteries. IEEE Trans. Ind. Electron. 2019, 67, 8723–8731.

- Qi, F.; Zhou, Y.; Zhang, Y.; Shen, X.; Hou, E.; Ci, S. Topology Construction Based on Graph Theory for SOC Balancing in Dynamic Reconfigurable Battery System. In Proceedings of the 2025 IEEE International Conference on Electrical Energy Conversion Systems and Control (IEECSC), Chongqing, China, 23–25 May 2025; pp. 615–622.

- Li, Q.; Tan, H.; Wang, T.; Wang, S.; Chen, W. Hierarchical and Domain-Partitioned Coordinated Control Method for Large-Scale Fuel Cell/Battery Cluster Hybrid Power Electric Multiple Units. IEEE Trans. Transp. Electrif. 2025.

- Zhang, M.; Han, Y.; Liu, Y.; Zalhaf, A.S.; Zhao, E.; Mahmoud, K.; Darwish, M.M.F.; Blaabjerg, F. Multi-Timescale Modeling and Dynamic Stability Analysis for Sustainable Microgrids: State-of-the-Art and Perspectives. Prot. Control Mod. Power Syst. 2024, 9, 1–35.

- Kiruthiga, B.; Karthick, R.; Manju, I.; Kondreddi, K. Optimizing harmonic mitigation for smooth integration of renewable energy: A novel approach using atomic orbital search and feedback artificial tree control. Prot. Control Mod. Power Syst. 2024, 9, 160–176.

| Information | Domain 1 | Domain 2 | Domain 3 | Domain 4 |
|---|---|---|---|---|
| Data Source | CALCE | NASA | NASA | NASA |
| Used Datasets | CS-35∼38 | B-05/18 | B-29/32 | B-45/47 |
| Num of Samples | 2324 | 297 | 62 | 126 |
| (A) | 1 | 2 | 4 | 1 |
| (V) | 2.7 | (2.7/2.5) | (2.0/2.7) | (2.0/2.5) |
| C | - | 24 | 24 | 4 |
| (Ahr) | 1.1 | 2 | 2 | 2 |
| Other Information | cathode prismatic shape | 18650 lithium-ion cells | 18650 lithium-ion cells | 18650 lithium-ion cells |

| Num | Feature description |
|---|---|
| 1 | Time ratio between CC and CV stages |
| 2 | Average voltage of early CC stage (start to 3.85 V) |
| 3 | Time interval of early CC stage |
| 4 | Time interval of later CC stage |
| 5 | Average voltage of later CC stage (3.85 V to end) |
| 6 | Time interval of CV stage |
| 7 | Average current of CV stage |

| Task | Training Set (Source) | Training Set (Known Target) | Test Set (Unknown Target) |
|---|---|---|---|
| Task 1 | Domains 1, 3, 4 | NASA B0005 | NASA B0018 |
| Task 2 | Domains 1, 2, 4 | NASA B0029 | NASA B0032 |
| Task 3 | Domains 1, 2, 3 | NASA B0045 | NASA B0047 |
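The task table follows a leave-one-domain-out pattern: each task holds one NASA domain out as the target and trains on the remaining three. The short sketch below reproduces the source-domain column from that rule. The mapping of each task to a target domain number is inferred from the dataset table (Domain 2 contains B-05/18, Domain 3 contains B-29/32, Domain 4 contains B-45/47), and the names `TASKS` and `source_domains` are illustrative.

```python
from typing import Dict, List, Tuple

ALL_DOMAINS: List[int] = [1, 2, 3, 4]

# (target domain, known target cell, unknown target cell), transcribed from the tables.
TASKS: Dict[str, Tuple[int, str, str]] = {
    "Task 1": (2, "B0005", "B0018"),
    "Task 2": (3, "B0029", "B0032"),
    "Task 3": (4, "B0045", "B0047"),
}


def source_domains(target_domain: int) -> List[int]:
    """All domains except the one held out as the target."""
    return [d for d in ALL_DOMAINS if d != target_domain]


for name, (target_domain, known, unknown) in TASKS.items():
    print(name, source_domains(target_domain), known, unknown)
```

Within the target domain, one cell supplies the small labelled set used in training and the other cell is kept entirely for testing.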

| Hyper-Parameters | Configuration |
|---|---|
| Feature variables | 7 |
| Sliding time-windows | 10 |
| Attention layers | 7/16 |
| LSTM layers | 128/64 |
| Learning rate | 0.01 |
| Epoch (pre-train step) | 100 |
| Epoch (fine-tuning step) | 50 |
| Batch size | 64 |
| Loss function | Mean square error |
| Optimizer | Adam |

| Task | Method | MAPE | RMSE |
|---|---|---|---|
| Task 1 | LSTM-NS | 4.4888 ± 1.0082 | 0.0416 ± 0.0080 |
| Task 1 | CNN-S | 3.0236 ± 0.2867 | 0.0320 ± 0.0031 |
| Task 1 | LSTM-S | 3.0082 ± 0.5733 | 0.0313 ± 0.0051 |
| Task 1 | LSTM-PS | 2.6763 ± 0.3740 | 0.0281 ± 0.0034 |
| Task 1 | TL-LSTM | 2.6279 ± 0.3404 | 0.0278 ± 0.0032 |
| Task 1 | SAM-LSTM | 1.9826 ± 0.3758 | 0.0212 ± 0.0034 |
| Task 1 | TLSAM-LSTM | 1.8005 ± 0.3719 | 0.0198 ± 0.0032 |
| Task 2 | LSTM-NS | 1.2248 ± 0.6032 | 0.0132 ± 0.0059 |
| Task 2 | CNN-S | 5.1264 ± 0.3545 | 0.0588 ± 0.0031 |
| Task 2 | LSTM-S | 5.9450 ± 1.3516 | 0.0613 ± 0.0114 |
| Task 2 | LSTM-PS | 0.9295 ± 0.1491 | 0.0100 ± 0.0016 |
| Task 2 | TL-LSTM | 0.8990 ± 0.1510 | 0.0097 ± 0.0016 |
| Task 2 | SAM-LSTM | 0.9083 ± 0.2443 | 0.0098 ± 0.0023 |
| Task 2 | TLSAM-LSTM | 0.7944 ± 0.1106 | 0.0086 ± 0.0011 |
| Task 3 | LSTM-NS | 4.7160 ± 1.2634 | 0.0504 ± 0.0169 |
| Task 3 | CNN-S | 5.2641 ± 0.8841 | 0.0498 ± 0.0079 |
| Task 3 | LSTM-S | 3.7969 ± 0.7257 | 0.0358 ± 0.0066 |
| Task 3 | LSTM-PS | 3.5022 ± 0.8344 | 0.0344 ± 0.0074 |
| Task 3 | TL-LSTM | 3.0893 ± 0.5470 | 0.0296 ± 0.0048 |
| Task 3 | SAM-LSTM | 2.8358 ± 0.6000 | 0.0297 ± 0.0056 |
| Task 3 | TLSAM-LSTM | 2.5917 ± 0.5087 | 0.0282 ± 0.0045 |
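For readers who want to reproduce the two metrics in the results table, the sketch below gives the usual definitions of MAPE and RMSE. It assumes MAPE is reported in percent, which the surviving text does not state explicitly, and the sample values are placeholders rather than data from the paper.

```python
import math
from typing import Sequence


def mape(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute percentage error, in percent (assumed convention)."""
    return 100.0 * sum(abs(t - p) / abs(t) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean square error, in the units of the SOH values."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


# Placeholder SOH values for two cycles.
soh_true = [1.0, 0.8]
soh_pred = [0.9, 0.84]
print(round(mape(soh_true, soh_pred), 4))  # 7.5
print(round(rmse(soh_true, soh_pred), 4))  # 0.0762
```

The "±" entries in the table would then correspond to the spread of these metrics over repeated runs, although the text that defined the repetition protocol is not present here.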

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

He, R.; Wang, C.; Yin, C.; Yang, S.; Wang, Y.; Fang, Y.; Chen, K.; Zhang, J. Instance-Based Transfer Learning-Improved Battery State-of-Health Estimation with Self-Attention Mechanism. Energies 2025, 18, 5672. https://doi.org/10.3390/en18215672
