AI-Powered Digital Twin Co-Simulation Framework for Climate-Adaptive Renewable Energy Grids

Abstract

1. Introduction

- A modular AI-integrated digital twin framework is developed that couples HELICS middleware with OpenDSS (v9.2.0), ERA5 (ECMWF, 2018–2023) climate data, and a reinforcement learning controller for climate-adaptive operation.

- The framework explicitly formulates resilience-oriented objectives within a Markov Decision Process (MDP) structure, which supports reproducibility and extensibility.

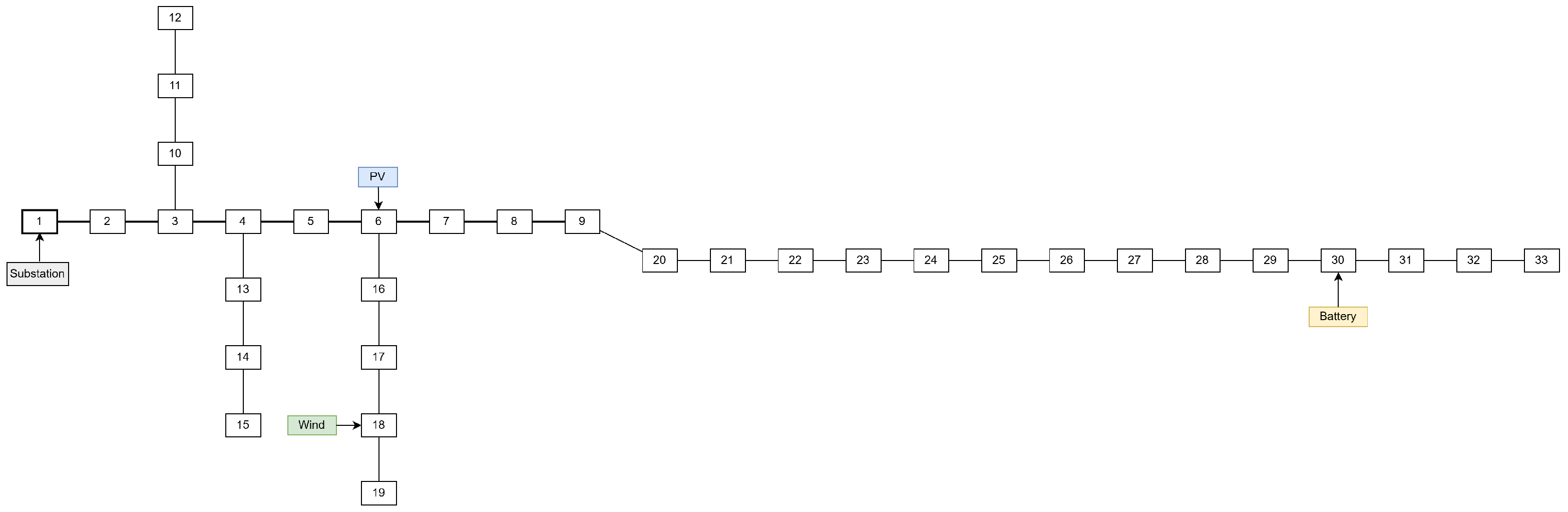

- A co-simulation case study on the IEEE 33-bus distribution network demonstrates the efficacy of the approach under extreme weather events.

2. Related Work

2.1. Digital Twins in Renewable Energy Systems

2.2. Climate-Integrated Energy Modeling

2.3. AI for Adaptive Grid Control

2.4. Energy System Co-Simulation Platforms

2.5. Research Gaps

3. System Model and Architecture

3.1. Physical Grid Model

- the set of buses (nodes);

- the set of distribution lines (edges).

3.2. Renewable Generation Modeling

3.3. Climate Dynamics Model

- Ambient temperature (°C);

- Wind speed (m/s);

- Precipitation (mm/h);

- Solar irradiance (W/m²).
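These climate variables drive renewable output. As a minimal sketch, assuming a linear PV model without temperature derating and a cubic wind power curve between cut-in and rated speed (neither form is specified in the text), the PV efficiency (18%), PV area (50 m²), and wind speed thresholds (3, 12, and 20 m/s) from the simulation parameter table convert irradiance and wind speed to power as follows. The turbine rating `rated_kw` is a hypothetical argument because no rating is given.

```python
# Sketch of climate-to-power conversion for the PV unit and wind turbine.
# Assumptions (not from the paper): linear PV model with no temperature
# derating, and a cubic ramp between cut-in and rated wind speed.

PV_EFFICIENCY = 0.18                             # 18%, from the parameter table
PV_AREA_M2 = 50.0                                # 50 m^2, from the parameter table
V_CUT_IN, V_RATED, V_CUT_OUT = 3.0, 12.0, 20.0   # m/s, from the parameter table


def pv_power_kw(irradiance_w_m2: float) -> float:
    """PV output P = eta * A * G, converted from W to kW; negative G is clipped to 0."""
    return PV_EFFICIENCY * PV_AREA_M2 * max(irradiance_w_m2, 0.0) / 1000.0


def wind_power_kw(wind_speed_m_s: float, rated_kw: float) -> float:
    """Piecewise power curve; rated_kw is a hypothetical turbine rating."""
    v = wind_speed_m_s
    if v < V_CUT_IN or v >= V_CUT_OUT:
        return 0.0          # below cut-in, or shut down at/above cut-out
    if v >= V_RATED:
        return rated_kw     # flat region between rated and cut-out speed
    # Cubic interpolation between cut-in and rated speed
    return rated_kw * (v**3 - V_CUT_IN**3) / (V_RATED**3 - V_CUT_IN**3)


print(pv_power_kw(1000.0))  # 9.0 (kW at 1000 W/m^2)
```

Any temperature or soiling losses would enter as extra multiplicative factors on the PV expression.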

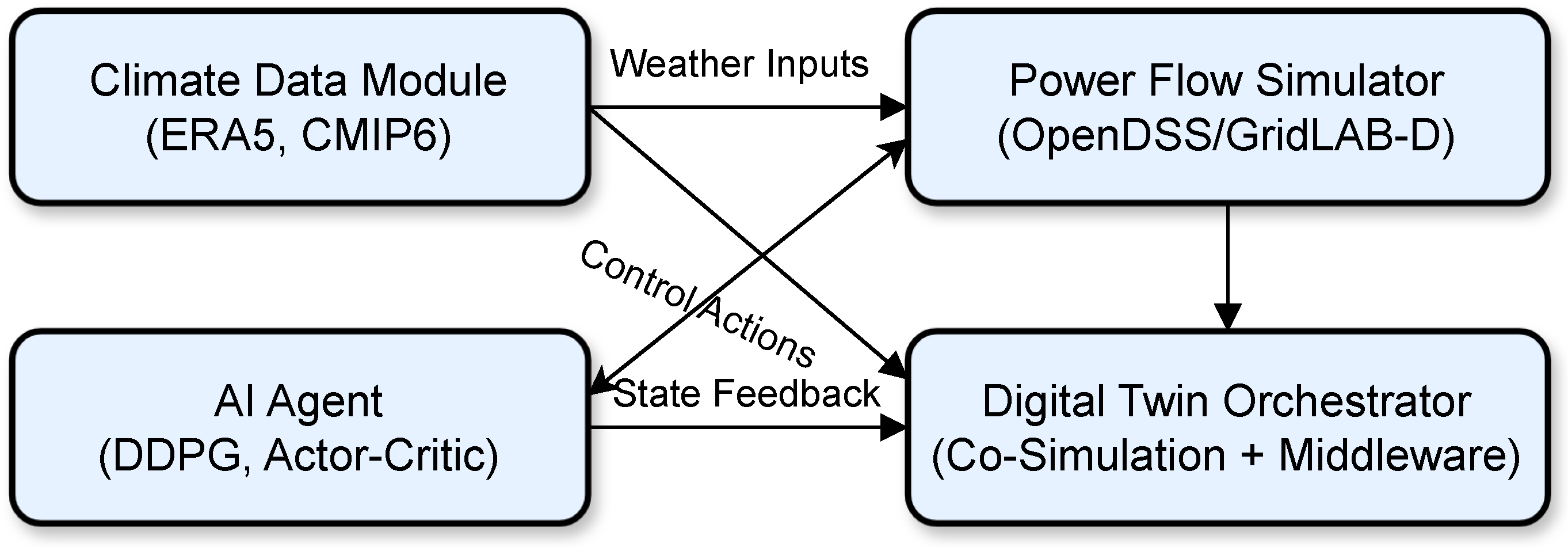

3.4. Digital Twin Co-Simulation Framework

- Power system simulator (e.g., OpenDSS),

- Climate data module (e.g., ERA5 reanalysis),

- AI-based controller.

3.5. AI-Based Control and Optimization Model

- Loss term: active power losses or curtailments at time t;

- Voltage term: aggregated voltage deviations across all nodes;

- Resilience term: reward capturing the quality of post-disturbance recovery;

- Weighting factors: coefficients that balance the competing objectives.
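The weighted combination of these terms can be sketched as a per-step reward function. This is a minimal sketch assuming the loss and voltage terms enter as penalties, the resilience term as a bonus, and the voltage deviation is aggregated as the sum of absolute deviations from 1.0 pu; the paper's exact functional form and weight values are not reproduced here, so `w_loss`, `w_volt`, and `w_res` are hypothetical.

```python
from typing import Sequence


def step_reward(
    p_loss_kw: float,
    voltages_pu: Sequence[float],
    resilience: float,
    w_loss: float = 1.0,
    w_volt: float = 1.0,
    w_res: float = 1.0,
    v_ref_pu: float = 1.0,
) -> float:
    """Hypothetical multi-objective reward r_t = -w_loss*P_loss - w_volt*dV + w_res*R."""
    # Aggregate voltage deviation across all nodes (sum of |V_i - V_ref|, assumed form)
    voltage_dev = sum(abs(v - v_ref_pu) for v in voltages_pu)
    # Losses/curtailment and voltage deviation are penalized; resilience is rewarded
    return -w_loss * p_loss_kw - w_volt * voltage_dev + w_res * resilience


r = step_reward(p_loss_kw=10.0, voltages_pu=[1.0, 0.95, 1.05], resilience=0.9,
                w_loss=0.1, w_volt=10.0, w_res=1.0)
```

Because the weights set the exchange rate between kilowatts of loss and per-unit voltage deviation, they generally need tuning to the units and scales in use.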

3.6. System Architecture Diagram

4. Problem Formulation

4.1. Decision Variables

- DER dispatch setpoints (e.g., active/reactive power);

- Load-shedding indicator: binary variable for node i;

- Inverter control setting: voltage control angle or droop setting.
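DDPG produces continuous actions, typically bounded to [-1, 1] by a tanh output layer, whereas the load-shedding indicator is binary. One common workaround, shown here as a sketch under stated assumptions, is to rescale the continuous outputs to physical ranges and threshold the shedding outputs. The action layout, the droop range, and the discharge-positive sign convention are hypothetical; only the 50 kW battery inverter rating comes from the simulation parameter table.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class GridAction:
    battery_kw: float   # battery active-power setpoint, positive = discharge (assumed)
    droop: float        # inverter droop setting (hypothetical range)
    shed: List[bool]    # binary load-shedding indicator per candidate node


def _clip(x: float, lo: float = -1.0, hi: float = 1.0) -> float:
    return max(lo, min(hi, x))


def map_action(raw: Sequence[float], battery_rating_kw: float = 50.0,
               droop_min: float = 0.0, droop_max: float = 0.1) -> GridAction:
    """Map an actor output vector in [-1, 1] to physical decision variables.

    Hypothetical layout: raw[0] -> battery power, raw[1] -> droop, raw[2:] -> shedding.
    """
    battery_kw = _clip(raw[0]) * battery_rating_kw
    # Affine rescale of [-1, 1] onto [droop_min, droop_max]
    droop = droop_min + (_clip(raw[1]) + 1.0) / 2.0 * (droop_max - droop_min)
    # Threshold relaxation: a positive output sheds load at that node
    shed = [_clip(a) > 0.0 for a in raw[2:]]
    return GridAction(battery_kw, droop, shed)


action = map_action([0.5, -1.0, 0.3, -0.2])
```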

4.2. Objective Function

4.3. Power Flow Constraints

4.4. Climate-Coupled Renewable Generation

4.5. Resilience Metric

5. Methodology

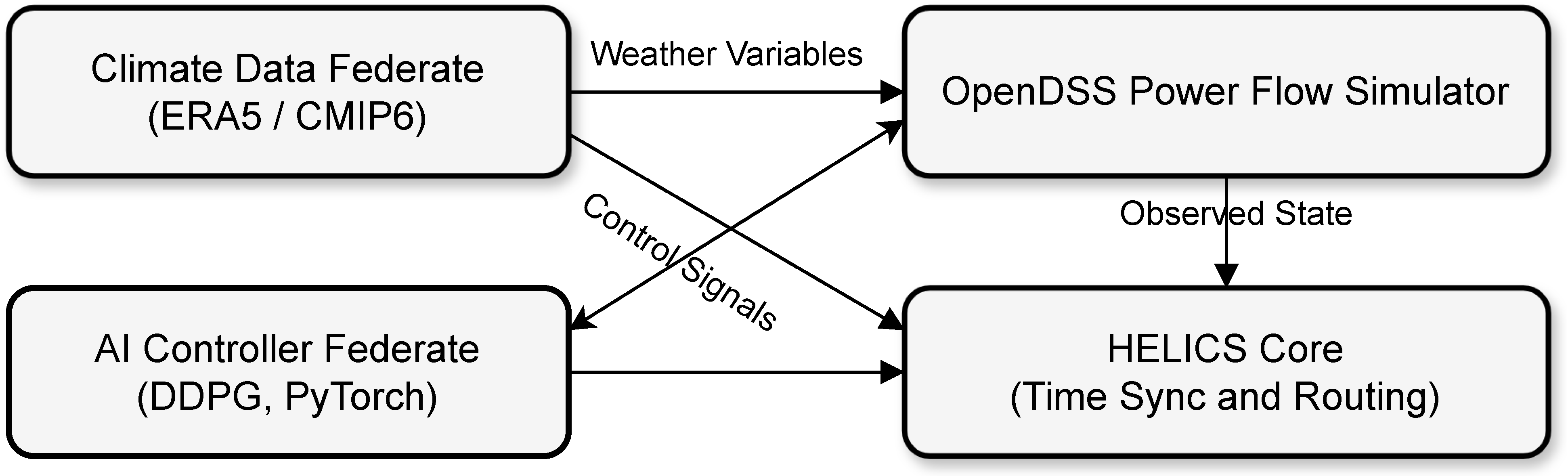

5.1. Co-Simulation Framework Integration

- Power Flow Simulator: Simulates grid behavior by solving the nonlinear AC power flow equations in OpenDSS.

- Climate Engine: Supplies historical, projected, or synthetic climate inputs (ERA5 reanalysis, CMIP6 climate projections, or synthetic weather generators).

- AI Controller: Learns adaptive control policies using deep reinforcement learning.
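In the integrated loop, the climate engine and the power flow simulator run at different rates: ERA5 data are hourly, while the grid steps every 15 minutes (96 steps per day, per the simulation parameter table). The sketch below shows a fixed-step lockstep loop in which the grid side holds the most recent climate sample (zero-order hold), analogous to a subscriber reading the last published value in a HELICS federation. It is plain Python, not the HELICS API, and `grid_model` is a hypothetical callback standing in for an OpenDSS solve.

```python
import bisect
from typing import Callable, List, Sequence, Tuple


def zero_order_hold(sample_times_s: Sequence[float], samples: Sequence[float],
                    t_s: float) -> float:
    """Return the latest sample published at or before t_s."""
    idx = bisect.bisect_right(sample_times_s, t_s) - 1
    if idx < 0:
        raise ValueError("no climate sample available at this time")
    return samples[idx]


def run_lockstep(climate_times_s: Sequence[float], climate_values: Sequence[float],
                 grid_model: Callable[[float, float], float],
                 grid_step_s: int = 900, horizon_s: int = 86_400) -> List[Tuple[int, float]]:
    """Advance the grid side in fixed 15-min steps, feeding it held climate values."""
    log = []
    t = 0
    while t < horizon_s:
        climate = zero_order_hold(climate_times_s, climate_values, t)
        log.append((t, grid_model(t, climate)))   # hypothetical power flow call
        t += grid_step_s
    return log


hourly_times = [3600 * h for h in range(24)]
hourly_irradiance = [float(h) for h in range(24)]  # placeholder data, not ERA5
trace = run_lockstep(hourly_times, hourly_irradiance, grid_model=lambda t, g: g)
```

Interpolating between hourly samples instead of holding them is an equally valid choice; holding keeps each federate's inputs strictly causal.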

5.2. AI-Based Control Policy via Deep Reinforcement Learning

Algorithm 1: Climate-Adaptive Grid Control via DDPG
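DDPG, the learner named in Algorithm 1, rests on two update rules that are sketched below with plain Python lists instead of PyTorch tensors so the arithmetic is easy to check: the critic regresses toward a bootstrapped target computed with the target actor and target critic, and both target networks track the online networks by Polyak (soft) averaging. The discount factor 0.99 comes from the simulation parameter table; the soft-update rate `TAU` is an assumed value.

```python
from typing import List, Sequence

GAMMA = 0.99  # discount factor, from the simulation parameter table
TAU = 0.005   # soft-update rate: an assumed value, not given in the text


def critic_target(reward: float, next_q: float, done: bool, gamma: float = GAMMA) -> float:
    """Bootstrapped target y = r + gamma * (1 - done) * Q'(s', mu'(s')).

    next_q is the target critic's value of the next state under the target actor.
    """
    return reward + gamma * (1.0 - float(done)) * next_q


def soft_update(target: Sequence[float], online: Sequence[float],
                tau: float = TAU) -> List[float]:
    """Polyak averaging: theta_target <- tau * theta_online + (1 - tau) * theta_target."""
    return [tau * w + (1.0 - tau) * w_t for w_t, w in zip(target, online)]


# For a mini-batch (64 transitions per the parameter table), the critic loss is the
# mean squared error between Q(s, a) and these targets; the actor is then updated
# to maximize Q(s, mu(s)).
y = critic_target(reward=1.0, next_q=10.0, done=False)
new_target = soft_update([0.0, 1.0], [1.0, 1.0], tau=0.5)
```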

5.3. Deployment Workflow

1. Input: current grid state and forecasted climate;

2. Compute the control action from the learned policy;

3. Apply the action to the digital twin simulator;

4. Observe the resulting state and repeat.
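The four workflow steps form a closed observe, act, apply loop. The sketch below runs that loop over a forecast horizon; `toy_policy` and `toy_twin` are hypothetical stand-ins (a proportional voltage correction and a one-line voltage response) for the trained actor and the OpenDSS-based digital twin, chosen only so that the loop executes.

```python
from typing import Callable, List, Sequence, Tuple

State = float    # here: a single aggregated bus voltage in pu (simplification)
Climate = float  # here: normalized PV availability in [0, 1] (simplification)


def run_closed_loop(policy: Callable[[State, Climate], float],
                    twin_step: Callable[[State, float, Climate], State],
                    initial_state: State,
                    climate_forecast: Sequence[Climate]) -> List[Tuple[State, float, State]]:
    """Steps 1-4 of the deployment workflow, repeated over a forecast horizon."""
    state = initial_state
    trajectory = []
    for climate in climate_forecast:                    # 1. input: state and forecast
        action = policy(state, climate)                 # 2. compute action
        next_state = twin_step(state, action, climate)  # 3. apply to digital twin
        trajectory.append((state, action, next_state))
        state = next_state                              # 4. observe and repeat
    return trajectory


def toy_twin(v: State, action: float, pv: Climate) -> State:
    return v + 0.02 * pv + action    # PV pushes voltage up; the action corrects it


def toy_policy(v: State, pv: Climate) -> float:
    return -(v - 1.0) - 0.02 * pv    # cancel the deviation and the expected PV rise


traj = run_closed_loop(toy_policy, toy_twin, initial_state=1.04,
                       climate_forecast=[0.0, 0.5, 1.0, 0.5])
```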

6. Case Study and Experimental Setup

6.1. Test System Description

- Number of buses: 33;

- Number of branches (line sections): 32;

- Base power: 100 MVA;

- Voltage level: 12.66 kV;

- Load type: static (constant PQ).
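With a 12.66 kV base voltage and a 100 MVA base power, the per-unit base impedance and base current follow from the standard three-phase relations Z_base = V_base² / S_base and I_base = S_base / (√3 · V_base). The helper below computes both and converts a line impedance to per unit; the example impedance is a hypothetical value, not taken from the IEEE 33-bus data.

```python
import math
from typing import Tuple


def per_unit_bases(v_base_kv: float, s_base_mva: float) -> Tuple[float, float]:
    """Return (Z_base in ohm, I_base in A) for a three-phase system (line-to-line kV)."""
    z_base_ohm = v_base_kv ** 2 / s_base_mva                          # kV^2 / MVA = ohm
    i_base_a = (s_base_mva * 1e6) / (math.sqrt(3) * v_base_kv * 1e3)  # S / (sqrt(3) V)
    return z_base_ohm, i_base_a


def impedance_pu(r_ohm: float, x_ohm: float, z_base_ohm: float) -> complex:
    """Convert a series impedance R + jX in ohm to per unit."""
    return complex(r_ohm, x_ohm) / z_base_ohm


z_base, i_base = per_unit_bases(12.66, 100.0)
z_line = impedance_pu(0.5, 0.25, z_base)  # hypothetical line impedance
```

For these bases, Z_base is about 1.603 Ω and I_base about 4560 A.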

6.2. Climate Scenario Generation

- Historical Climate Data: 5 years of hourly weather data are extracted from the ERA5 reanalysis database [33].

- Variables: temperature (°C), wind speed (m/s), solar irradiance (W/m²), and precipitation (mm/h).

- Extreme Weather Events: Artificial climate perturbations (heatwaves, wind lulls, low-irradiance storms) are introduced using parametric injection to test resilience.
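Parametric injection can be sketched as a function that copies a climate time series and perturbs a chosen window. This minimal sketch assumes heatwaves add a temperature offset (°C), while wind lulls and low-irradiance storms scale the series down by a fractional derating; the event names, magnitudes, and windows are hypothetical parameters, not the paper's settings.

```python
from typing import List, Sequence

ADDITIVE_EVENTS = {"heatwave"}                           # offset in the variable's units
MULTIPLICATIVE_EVENTS = {"wind_lull", "low_irradiance"}  # fractional derating in [0, 1]


def inject_event(series: Sequence[float], start: int, duration: int,
                 kind: str, magnitude: float) -> List[float]:
    """Return a copy of an hourly climate series with one extreme event injected."""
    if kind not in ADDITIVE_EVENTS | MULTIPLICATIVE_EVENTS:
        raise ValueError(f"unknown event kind: {kind}")
    out = list(series)
    for k in range(max(start, 0), min(start + duration, len(out))):
        if kind in ADDITIVE_EVENTS:
            out[k] += magnitude            # e.g. +8 °C during a heatwave
        else:
            out[k] *= (1.0 - magnitude)    # e.g. magnitude 0.7 -> 70% reduction
    return out


temps = inject_event([25.0] * 6, start=2, duration=3, kind="heatwave", magnitude=8.0)
wind = inject_event([10.0] * 4, start=0, duration=10, kind="wind_lull", magnitude=0.5)
```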

6.3. Load and Demand Profile

- Residential: Based on scaled household profiles with peak demand between 6–9 a.m. and 5–9 p.m.

- Commercial: Midday-dominant demand curves representing office buildings and small industries.

- Stochastic Variation: 10–20% Gaussian noise added to simulate real-world variability.
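A 96-step daily profile with these characteristics can be generated as below. The Gaussian peak shapes, their centers (7:30 and 19:00 for residential, 13:00 for commercial), the base-load fractions, and the reading of "10–20% Gaussian noise" as a multiplicative standard deviation are all assumptions for illustration.

```python
import math
import random
from typing import List, Optional

STEPS_PER_DAY = 96  # 15-min resolution, from the simulation parameter table


def _bump(hour: float, center: float, width: float) -> float:
    """Unit-height Gaussian bump used to shape demand peaks."""
    return math.exp(-0.5 * ((hour - center) / width) ** 2)


def load_profile(kind: str, peak_kw: float, noise_frac: float = 0.15,
                 seed: Optional[int] = None) -> List[float]:
    """Daily load profile in kW with multiplicative Gaussian noise (std = noise_frac)."""
    rng = random.Random(seed)
    profile = []
    for k in range(STEPS_PER_DAY):
        hour = k * 24.0 / STEPS_PER_DAY
        if kind == "residential":
            # Morning (6-9 a.m.) and evening (5-9 p.m.) peaks over a 30% base load
            shape = 0.3 + 0.7 * max(_bump(hour, 7.5, 1.2), _bump(hour, 19.0, 1.5))
        elif kind == "commercial":
            # Single midday-dominant peak over a 20% base load
            shape = 0.2 + 0.8 * _bump(hour, 13.0, 3.0)
        else:
            raise ValueError(f"unknown load kind: {kind}")
        noisy = shape * (1.0 + rng.gauss(0.0, noise_frac))
        profile.append(max(noisy, 0.0) * peak_kw)  # demand cannot go negative
    return profile


res = load_profile("residential", 200.0, noise_frac=0.0)
com = load_profile("commercial", 150.0, noise_frac=0.0)
```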

6.4. AI Model Hyperparameters

- Actor–critic layers: [128, 128] with ReLU activation;

- Learning rate: (actor), (critic);

- Discount factor: 0.99;

- Replay buffer size: transitions;

- Exploration noise: Ornstein–Uhlenbeck process.
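The Ornstein–Uhlenbeck process produces temporally correlated exploration noise that is added to the actor's output during training. A discretized version is sketched below; because the paper's noise parameters are not given here, `theta = 0.15` and `sigma = 0.2` are assumed values that are commonly used with DDPG.

```python
import math
import random
from typing import List, Optional


class OrnsteinUhlenbeckNoise:
    """Discretized OU process: x <- x + theta*(mu - x)*dt + sigma*sqrt(dt)*N(0, 1).

    theta and sigma default to commonly used DDPG values (assumed, not from the paper).
    """

    def __init__(self, size: int, theta: float = 0.15, sigma: float = 0.2,
                 mu: float = 0.0, dt: float = 1.0, seed: Optional[int] = None):
        self.size, self.theta, self.sigma, self.mu, self.dt = size, theta, sigma, mu, dt
        self.rng = random.Random(seed)
        self.reset()

    def reset(self) -> None:
        """Restart the process at its mean, e.g. at the start of each episode."""
        self.state: List[float] = [self.mu] * self.size

    def sample(self) -> List[float]:
        """Advance one step and return the new noise vector."""
        self.state = [
            x + self.theta * (self.mu - x) * self.dt
            + self.sigma * math.sqrt(self.dt) * self.rng.gauss(0.0, 1.0)
            for x in self.state
        ]
        return list(self.state)


noise = OrnsteinUhlenbeckNoise(size=3, seed=0)
exploratory = [a + n for a, n in zip([0.1, -0.2, 0.0], noise.sample())]
```

Calling `reset()` at the start of each 24 h episode restarts the correlated noise at its mean; the mean-reversion rate `theta` controls how quickly exploration drifts back toward zero.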

6.5. Co-Simulation Environment

- Power System Simulator: OpenDSS (via DSSL and Python bindings);

- Climate Data Module: ERA5 via Climate Data Store (CDS) API;

- AI Controller: PyTorch (Python 3.10);

- Middleware: HELICS v3.1.0 with federate time coordination;

- Hardware: Ubuntu 22.04 server with Intel Xeon CPU (Intel Corporation, Santa Clara, CA, USA) and 64 GB RAM.

7. Results and Discussion

7.1. Voltage Profile Regulation

7.2. Energy Loss Reduction

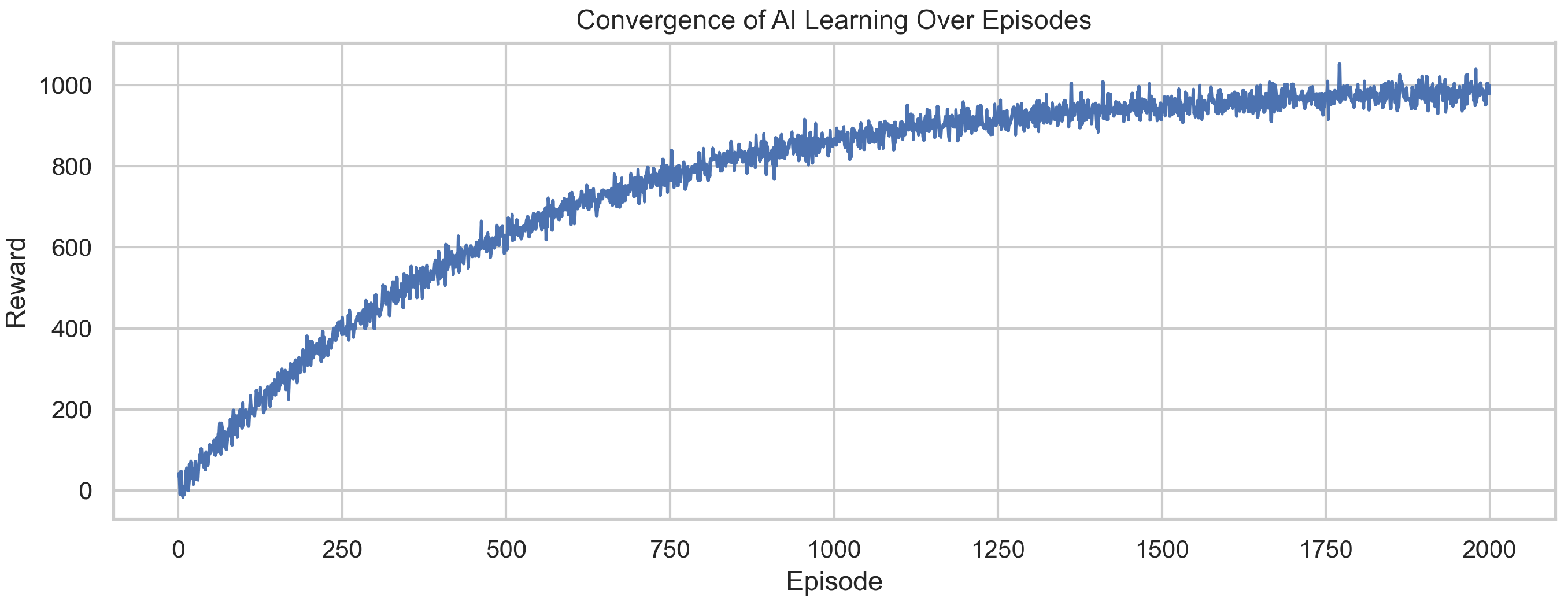

7.3. AI Learning Convergence

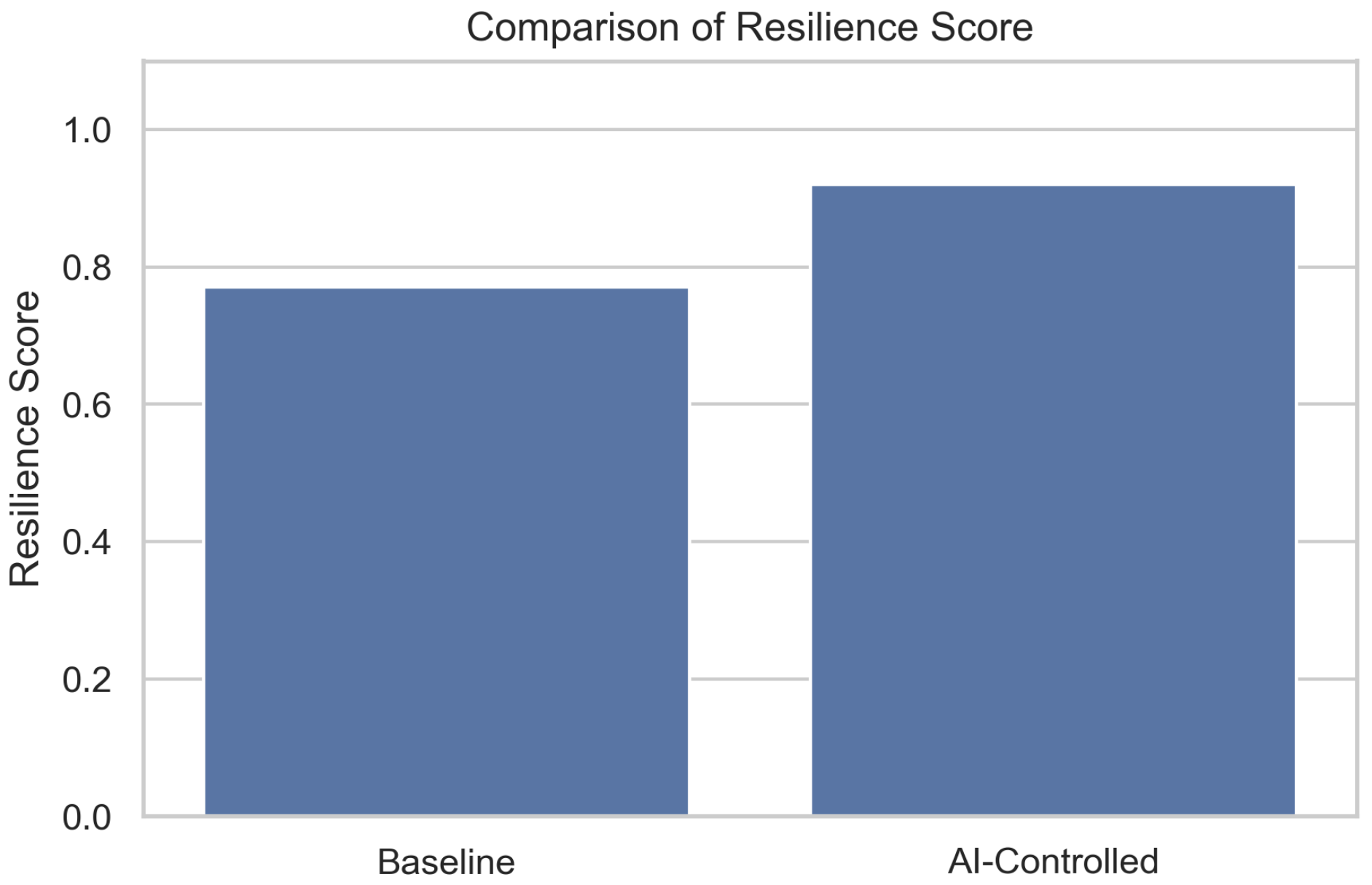

7.4. Resilience Enhancement

7.5. DER Dispatch Profiles

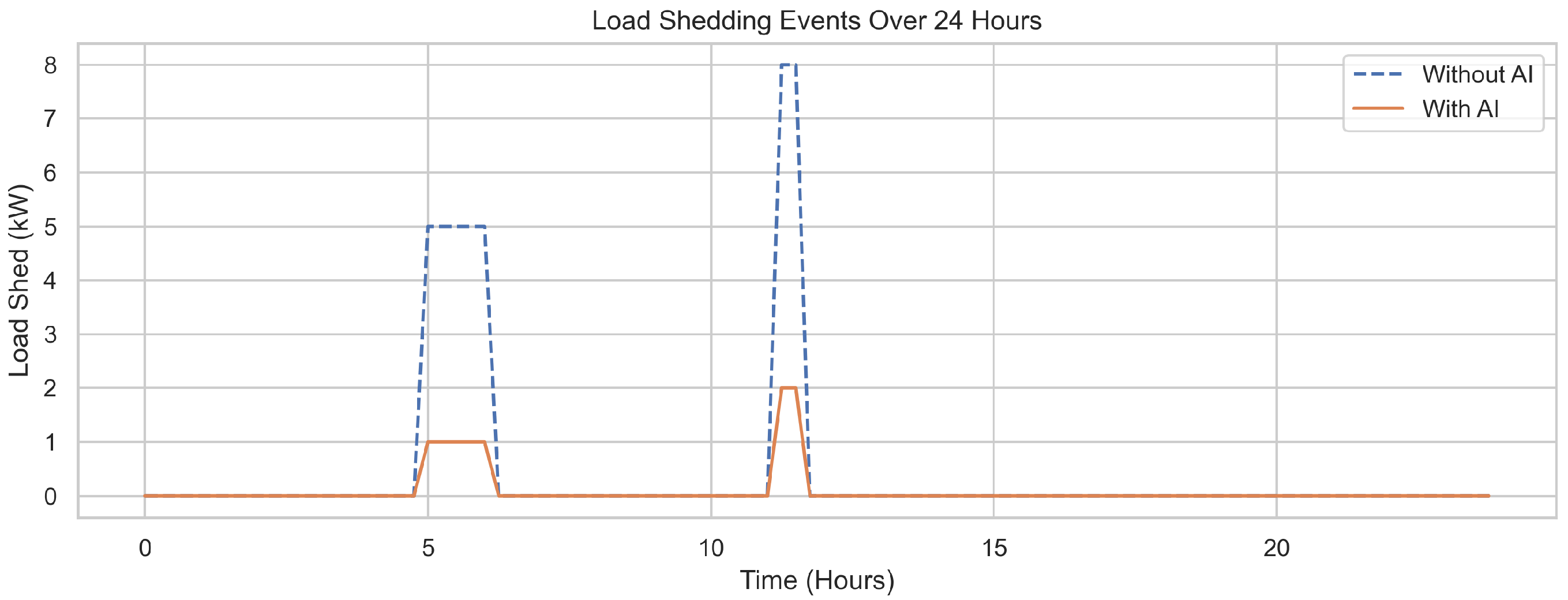

7.6. Load Shedding Reduction

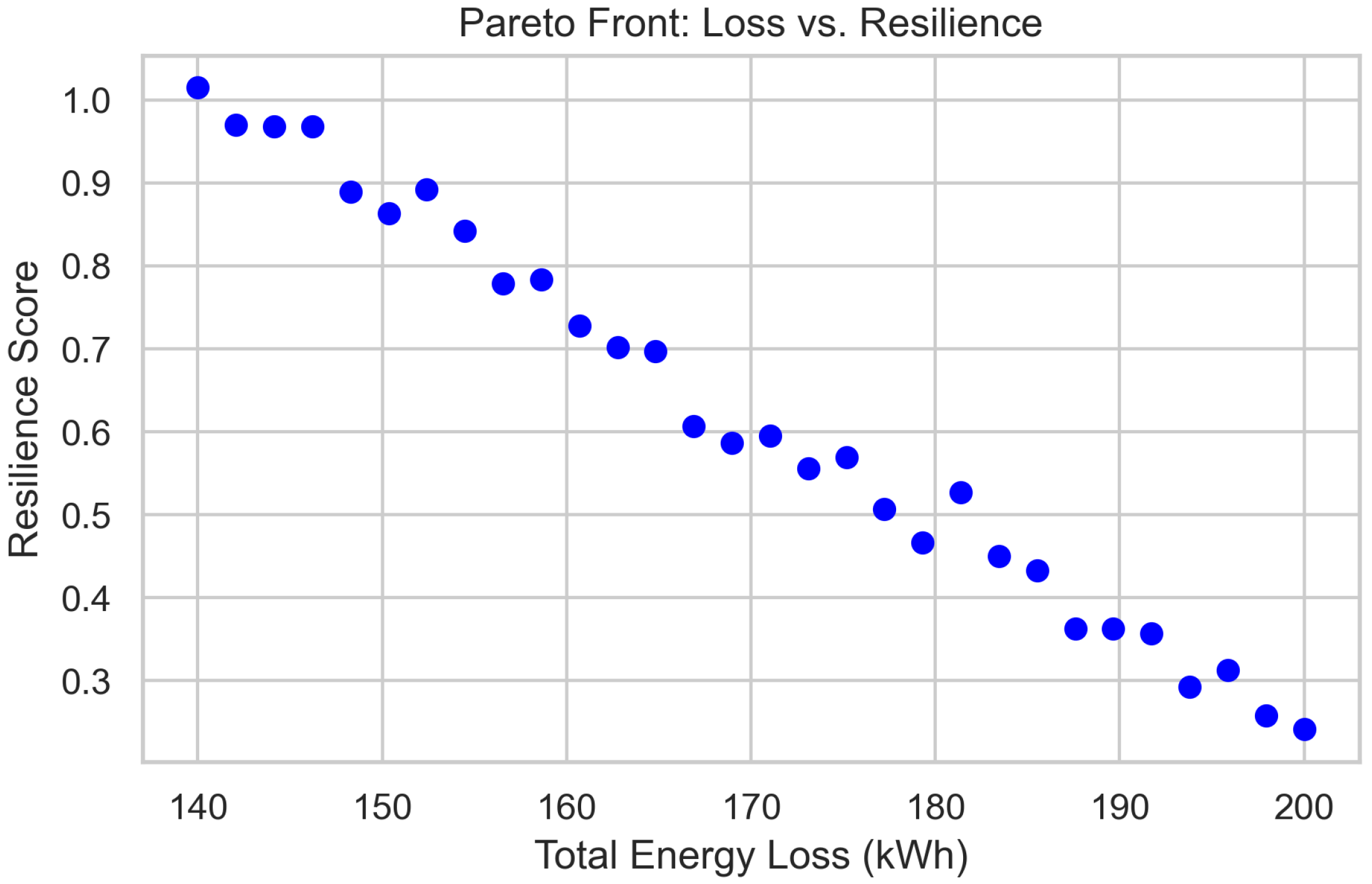

7.7. Multi-Objective Trade-Offs: Pareto Front

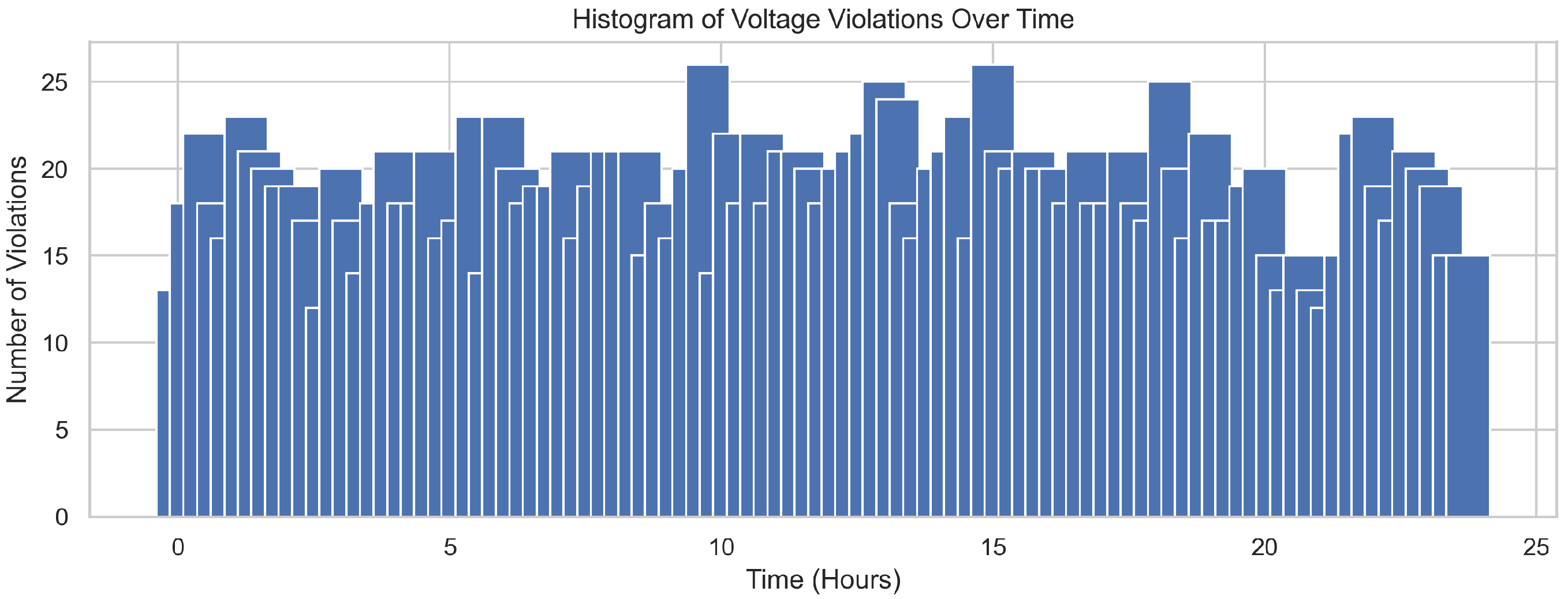

7.8. Voltage Violation Analysis

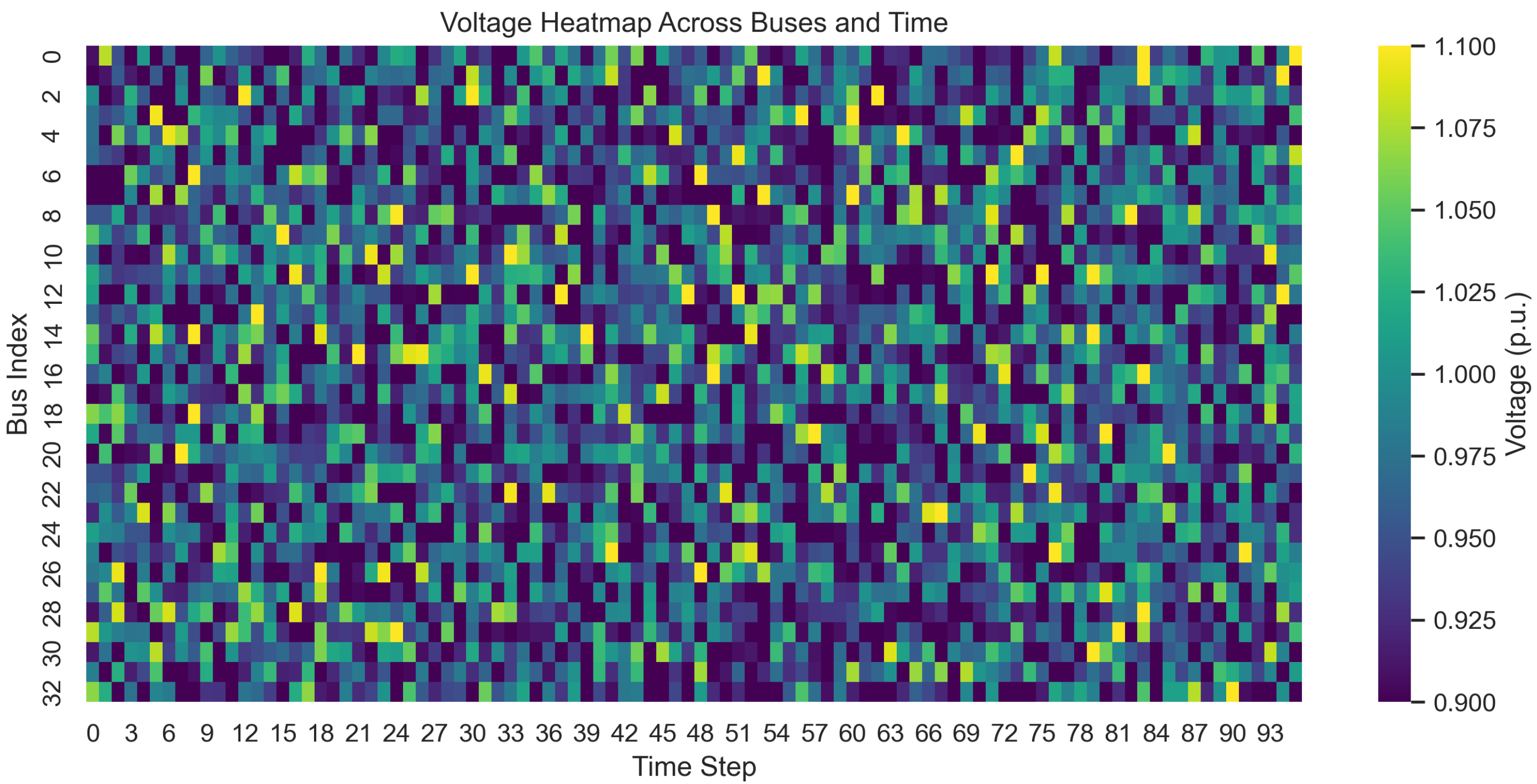

7.9. Spatiotemporal Voltage Heatmap

7.10. Battery State of Charge Trajectory

7.11. AI Policy Robustness Under Climate Perturbations

7.12. Bus-Wise Voltage Violation Frequency

7.13. Performance Evaluation

7.14. Discussion of System-Wide Impact

- Voltage Stability: The AI controller consistently maintains nodal voltages within regulatory thresholds (e.g., IEEE 1547, EN 50160), thereby reducing equipment stress and supporting asset longevity through improved voltage quality.

- Energy Efficiency: Through real-time optimization of DER dispatch and battery cycling, the framework significantly reduces active power losses. This improvement not only enhances network efficiency but also creates technical headroom for increased renewable hosting capacity without necessitating major infrastructure upgrades.

- AI Learning Robustness: Convergence patterns in the cumulative reward trajectories indicate that the reinforcement learning agent learns stable policies that generalize across the range of stochastic climate scenarios tested. This supports the viability of climate-aware AI for long-term autonomous grid control.

- Operational Resilience: The system demonstrates accelerated recovery from voltage disturbances and supply–demand mismatches during extreme weather conditions. This characteristic positions the proposed framework as a viable tool for national adaptation strategies under evolving climate risk profiles.
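Voltage-violation frequency and severity, as discussed under voltage stability and in the bus-wise analysis of Section 7.12, can be computed from a time-by-bus matrix of voltage magnitudes. The ±0.05 pu band below is an assumed default often used in distribution studies; the applicable limits depend on the standard and jurisdiction, so they are parameters here.

```python
from typing import Dict, List, Sequence


def voltage_violation_stats(voltages_pu: Sequence[Sequence[float]],
                            v_min: float = 0.95, v_max: float = 1.05) -> Dict[str, object]:
    """Count and size voltage-limit violations.

    voltages_pu[t][i] is the voltage magnitude (pu) of bus i at time step t.
    """
    n_buses = len(voltages_pu[0]) if voltages_pu else 0
    per_bus: List[int] = [0] * n_buses   # bus-wise violation frequency
    total = 0
    worst_pu = 0.0                       # largest excursion beyond either limit
    for snapshot in voltages_pu:
        for i, v in enumerate(snapshot):
            excess = max(v_min - v, v - v_max, 0.0)
            if excess > 0.0:
                per_bus[i] += 1
                total += 1
                worst_pu = max(worst_pu, excess)
    return {"violations": total, "per_bus": per_bus, "worst_pu": worst_pu}


stats = voltage_violation_stats([[1.00, 0.94, 1.06],
                                 [1.00, 0.96, 1.00]])
```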

7.15. Sensitivity Analysis

8. Conclusions and Future Work

- Significant improvements in voltage stability, with a reduction in both frequency and severity of nodal violations;

- Lower active power losses and enhanced energy efficiency through optimal dispatch of DERs and storage units;

- Strong policy generalization across diverse climate perturbation scenarios, with median resilience scores consistently exceeding 0.85;

- System-wide resilience enhancements, including reduced load shedding, faster disturbance recovery, and improved operational continuity.
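Section 4.5 defines the resilience metric behind these scores. As an illustration only, and not the paper's definition, one widely used normalized form divides the area under a performance curve (for example, served load as a fraction of demand) by its nominal area, so 1.0 means no degradation; a median across climate perturbation scenarios then summarizes policy robustness. Uniform time sampling is assumed.

```python
import statistics
from typing import Sequence


def resilience_index(performance: Sequence[float], nominal: float = 1.0) -> float:
    """Normalized area under a uniformly sampled performance curve.

    performance[k] is, e.g., the fraction of demand served at step k; values above
    nominal are capped so that 1.0 means no degradation over the window.
    """
    if not performance:
        raise ValueError("empty performance trace")
    return sum(min(p, nominal) for p in performance) / (nominal * len(performance))


def median_resilience(scenario_traces: Sequence[Sequence[float]],
                      nominal: float = 1.0) -> float:
    """Median resilience index across climate perturbation scenarios."""
    return statistics.median(resilience_index(t, nominal) for t in scenario_traces)


dip_and_recover = [1.0, 1.0, 0.5, 0.5, 1.0, 1.0]
score = resilience_index(dip_and_recover)
```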

Limitations and Future Work

- Hardware-in-the-Loop (HIL) Integration: Coupling the digital twin with real-time simulation platforms or physical testbeds to validate control performance under hardware and communication constraints.

- Multi-Agent Reinforcement Learning (MARL): Extending the single-agent design to distributed multi-agent settings, enabling localized intelligence and peer-to-peer coordination among grid-edge assets.

- Cybersecurity Co-Design: Embedding trust-aware AI agents and blockchain-based protocols to secure the control pipeline against spoofing, tampering, and adversarial attacks.

- Socio-Technical Metrics: Incorporating equity, vulnerability, and community acceptance into the reward function to align reinforcement learning decisions with principles of climate justice and social resilience.

- Scalability Assessment: Evaluating the framework across multiple feeders and interconnected microgrids to establish its tractability and economic feasibility at scale.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Zlateva, P.; Hadjitodorov, S. An Approach for Analysis of Critical Infrastructure Vulnerability to Climate Hazards. IOP Conf. Ser. Earth Environ. Sci. 2022, 1094, 012004.

2. Ho, C.K.; Roesler, E.L.; Nguyen, T.A.; Ellison, J. Potential Impacts of Climate Change on Renewable Energy and Storage Requirements for Grid Reliability and Resource Adequacy. J. Energy Resour. Technol. Trans. ASME 2023, 145, 100904.

3. Ali, A.M.; Shaaban, M.F.; Sindi, H. Optimal Operational Planning of RES and HESS in Smart Grids Considering Demand Response and DSTATCOM Functionality of the Interfacing Inverters. Sustainability 2022, 14, 13209.

4. Younis, A.; Benders, R.M.J.; Ramírez, J.; de Wolf, M.; Faaij, A. Scrutinizing the Intermittency of Renewable Energy in a Long-Term Planning Model via Combining Direct Integration and Soft-Linking Methods for Colombia’s Power System. Energies 2022, 15, 7604.

5. Fernandes, S.V.; Joao, D.V.; Cardoso, B.B.; Martins, M.A.I.; Carvalho, E.G. Digital Twin Concept Developing on an Electrical Distribution System—An Application Case. Energies 2022, 15, 2836.

6. Chalal, L.; Saadane, A.; Rachid, A. Unified Environment for Real Time Control of Hybrid Energy System Using Digital Twin and IoT Approach. Sensors 2023, 23, 5646.

7. Benchekroun, A.; Davigny, A.; Hassam-Ouari, K.; Courtecuisse, V.; Robyns, B. Grid-Aware Energy Management System for Distribution Grids Based on a Co-Simulation Approach. IEEE Trans. Power Deliv. 2023, 38, 3571–3581.

8. Qi, Y.; Li, J. The Wind and Photovoltaic Power Forecasting Method Based on Digital Twins. Appl. Sci. 2023, 13, 8374.

9. Chen, H.; Zhang, Z.; Karamanakos, P.; Rodriguez, J. Digital Twin Techniques for Power Electronics-Based Energy Conversion Systems: A Survey of Concepts, Application Scenarios, Future Challenges, and Trends. IEEE Ind. Electron. Mag. 2023, 17, 20–36.

10. Song, Z.; Hackl, C.M.; Anand, A.; Thommessen, A.; Petzschmann, J.; Kamel, O.Z.; Braunbehrens, R.; Kaifel, A.K.; Roos, C.; Hauptmann, S. Digital Twins for the Future Power System: An Overview and a Future Perspective. Sustainability 2023, 15, 5259.

11. Mahankali, R. Digital Twins and Enterprise Architecture: A Framework for Real-Time Manufacturing Decision Support. Int. J. Comput. Eng. Technol. 2025, 16, 578–587.

12. Han, J.; Hong, Q.; Syed, M.H.; Khan, M.A.U.; Yang, G.; Burt, G.; Booth, C. Cloud-Edge Hosted Digital Twins for Coordinated Control of Distributed Energy Resources. IEEE Trans. Cloud Comput. 2023, 11, 1242–1256.

13. Yang, Y.; Javanroodi, K.; Nik, V.M. Climate Change and Renewable Energy Generation in Europe—Long-Term Impact Assessment on Solar and Wind Energy Using High-Resolution Future Climate Data and Considering Climate Uncertainties. Energies 2022, 15, 302.

14. Webster, M.; Fisher-Vanden, K.; Kumar, V.; Lammers, R.B.; Perla, J.M. Integrated Hydrological, Power System and Economic Modelling of Climate Impacts on Electricity Demand and Cost. Nat. Energy 2022, 7, 163–169.

15. Chreng, K.; Lee, H.S.; Tuy, S. A Hybrid Model for Electricity Demand Forecast Using Improved Ensemble Empirical Mode Decomposition and Recurrent Neural Networks with ERA5 Climate Variables. Energies 2022, 15, 7434.

16. Salehimehr, S.; Taheri, B.; Sedighizadeh, M. Short-Term Load Forecasting in Smart Grids Using Artificial Intelligence Methods: A Survey. J. Eng. 2022, 2022, 1133–1142.

17. Barwar, S. Artificial Intelligence Application to Flexibility Provision in Energy Management System: A Survey. In EAI/Springer Innovations in Communication and Computing; Springer: Berlin/Heidelberg, Germany, 2023; pp. 55–78.

18. Liu, Y.; Lu, Q.; Yu, Z.; Chen, Y.; Yang, Y. Reinforcement Learning-Enhanced Adaptive Scheduling of Battery Energy Storage Systems in Energy Markets. Energies 2024, 17, 5425.

19. Shojaeighadikolaei, A.; Ghasemi, A.; Jones, K.R.; Dafalla, Y.M.; Bardas, A.G.; Ahmadi, R.; Hashemi, M. Distributed Energy Management and Demand Response in Smart Grids: A Multi-Agent Deep Reinforcement Learning Framework. arXiv 2022, arXiv:2211.15858.

20. Abdoune, F.; Nouiri, M.; Cardin, O.; Castagna, P. Integration of Artificial Intelligence in the Life Cycle of Industrial Digital Twins. Adv. Control Optim. Dyn. Syst. 2022, 55, 2545–2550.

21. Barbierato, L.; Mazzarino, P.R.; Montarolo, M.; Macii, A.; Patti, E.; Bottaccioli, L. A Comparison Study of Co-Simulation Frameworks for Multi-Energy Systems: The Scalability Problem. Energy Inform. 2022, 5, 53.

22. Barbierato, L.; Pons, E.; Bompard, E.F.; Rajkumar, V.S.; Palensky, P.; Bottaccioli, L.; Patti, E. Exploring Stability and Accuracy Limits of Distributed Real-Time Power System Simulations via System-of-Systems Cosimulation. IEEE Syst. J. 2023, 17, 3354–3365.

23. Pham, L.N.H.; Wagle, R.; Tricarico, G.; Melo, A.F.S.; Rosero-Morillo, V.A.; Shukla, A.; Gonzalez-Longatt, F. Real-Time Cyber-Physical Power System Testbed for Optimal Power Flow Study Using Co-Simulation Framework. IEEE Access 2024, 12, 150914–150929.

24. Værbak, M.; Billanes, J.D.; Jørgensen, B. A Digital Twin Framework for Simulating Distributed Energy Resources in Distribution Grids. Energies 2024, 17, 2503.

25. Ye, Y.; Papadaskalopoulos, D.; Yuan, Q.; Tang, Y.; Strbac, G. Multi-Agent Deep Reinforcement Learning for Coordinated Energy Trading and Flexibility Services Provision in Local Electricity Markets. IEEE Trans. Smart Grid 2023, 14, 1541–1554.

26. Gligor, A.; Dumitru, C.D.; Dziţac, S.; Simó, A. Augmented Cyber-physical Model for Real-time Smart-grid Co-simulation. Int. J. Comput. Commun. Control 2025, 20.

27. Alahmadi, A.; Barri, A.; Aldhahri, R.; Elhag, S. AI Driven Approaches in Swarm Robotics—A Review. Int. J. Comput. Inform. 2024, 3, 100–133.

28. Zhou, G.; Tian, W.; Buyya, R.; Xue, R.; Song, L. Deep Reinforcement Learning-Based Methods for Resource Scheduling in Cloud Computing: A Review and Future Directions. Artif. Intell. Rev. 2024, 57, 124.

29. Yu, Q.; Ketzler, G.; Mills, G.; Leuchner, M. Exploring the Integration of Urban Climate Models and Urban Building Energy Models through Shared Databases: A Review. Theor. Appl. Climatol. 2025, 156, 266.

30. Zhu, C.; Yang, J.; Zhang, W.; Zheng, Y. An Algorithm that Excavates Suboptimal States and Improves Q-learning. Eng. Res. Express 2024, 6, 4.

31. Wang, H.; Yuan, X.; Ren, Q. Learning to Recover for Safe Reinforcement Learning. arXiv 2023, arXiv:2309.11907.

32. Ebrie, A.S.; Kim, Y.J. Reinforcement Learning-Based Multi-Objective Optimization for Generation Scheduling in Power Systems. Systems 2024, 12, 106.

33. Tulger Kara, G.; Elbir, T. Evaluation of ERA5 and MERRA-2 Reanalysis Datasets over the Aegean Region, Türkiye. Fen-Mühendislik Derg. 2024, 26, 9–21.

34. IEEE Std 1547-2018 (Revision of IEEE Std 1547-2003); IEEE Standard for Interconnection and Interoperability of Distributed Energy Resources with Associated Electric Power Systems Interfaces. IEEE: New York, NY, USA, 2018; pp. 1–138.

35. EN 50160; Voltage Characteristics of Electricity Supplied by Public Distribution Systems. European Committee for Electrotechnical Standardization (CENELEC): Brussels, Belgium, 2010.

| Bus ID | Bus Type | Connected Load (kW) | DER Placement |
|---|---|---|---|
| 1 | Slack/Substation | 0 | None |
| 6 | PQ Bus | 210 | PV Unit |
| 18 | PQ Bus | 160 | Wind Turbine |
| 30 | PQ Bus | 150 | Battery Storage |
| Others (2–33) | PQ Buses | 50–200 (varies) | None |

| Parameter Category | Value/Description |
|---|---|
| Grid and Network | |
| Test System | IEEE 33-Bus Radial Distribution Network |
| Base Voltage/Power | 12.66 kV, 100 MVA |
| Simulation Duration | 24 h (one episode) |
| Time Resolution | 15 min (96 timesteps/day) |
| Distributed Energy Resources (DERs) | |
| PV System Location | Bus 6 |
| Wind Turbine Location | Bus 18 |
| Battery Storage Location | Bus 30 |
| PV Efficiency | 18% |
| PV Area (A) | 50 m² |
| Wind Cut-in/Rated/Cut-out Speeds | 3 m/s, 12 m/s, 20 m/s |
| Battery Capacity | 150 kWh, 50 kW inverter rating |
| Climate Input | |
| Source | ERA5 Reanalysis Dataset (2018–2023) |
| Variables | Temperature, Wind Speed, Irradiance, Precipitation |
| Disturbance Injection | Heatwaves, Wind Lulls, Overcast Storms |
| AI Model (DDPG) | |
| Actor Network | 2 Hidden Layers [128, 128], ReLU |
| Critic Network | 2 Hidden Layers [128, 128], ReLU |
| Learning Rate (Actor–Critic) | / |
| Discount Factor | 0.99 |
| Exploration Noise | Ornstein–Uhlenbeck process |
| Replay Buffer Size | transitions |
| Training Episodes | 2000 |
| Batch Size | 64 |
| Simulation Tools and Platform | |
| Power Flow Solver | OpenDSS via Python-DSS |
| AI Framework | PyTorch (Python 3.10) |
| Co-Simulation Middleware | HELICS v3.1.0 |
| Operating System | Ubuntu 22.04 LTS |
| Hardware | Intel Xeon 16-core, 64 GB RAM |

| Method | Complexity | Runtime/Episode | Notes |
|---|---|---|---|
| Genetic Algorithm (GA) | | 120 s | Iterative; population–generation cost |
| Particle Swarm Opt. (PSO) | | 95 s | Sensitive to swarm size |
| Model Predictive Control (MPC) | | 60 s | Matrix optimization overhead |
| Proposed DDPG | | 18 s | Actor–critic inference |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Addo, K.; Kabeya, M.; Ojo, E.E. AI-Powered Digital Twin Co-Simulation Framework for Climate-Adaptive Renewable Energy Grids. Energies 2025, 18, 5593. https://doi.org/10.3390/en18215593


Chicago/Turabian Style: Addo, Kwabena, Musasa Kabeya, and Evans Eshiemogie Ojo. 2025. "AI-Powered Digital Twin Co-Simulation Framework for Climate-Adaptive Renewable Energy Grids" Energies 18, no. 21: 5593. https://doi.org/10.3390/en18215593
