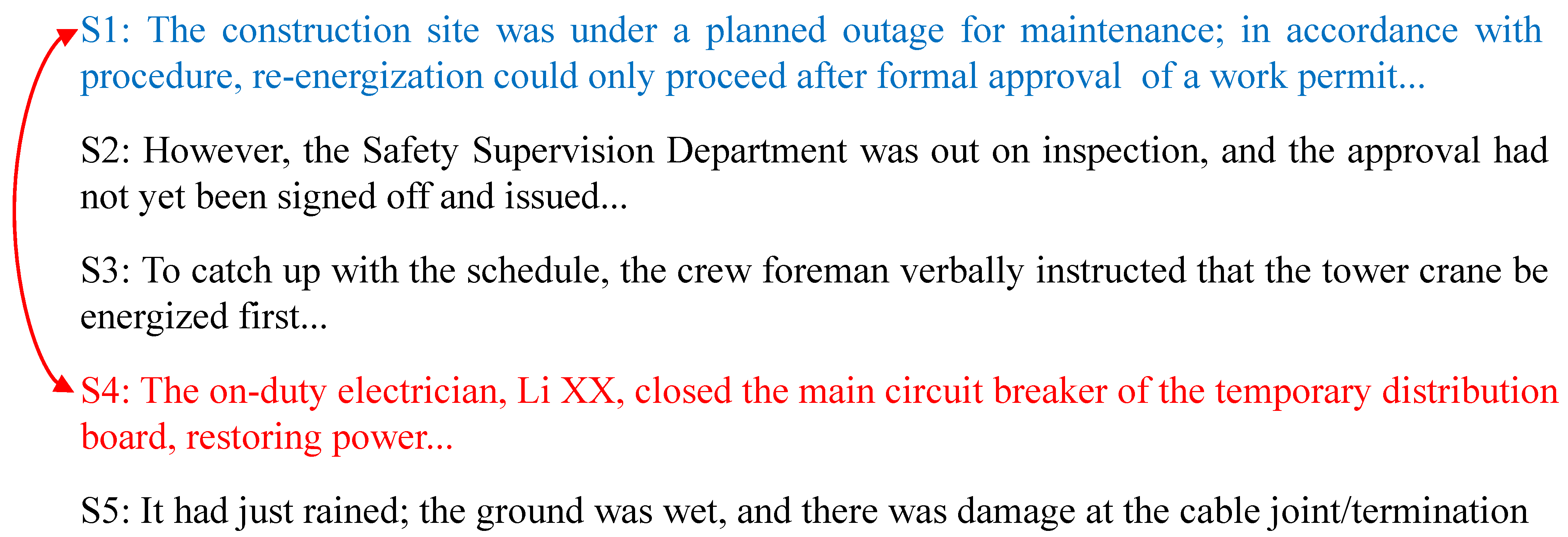

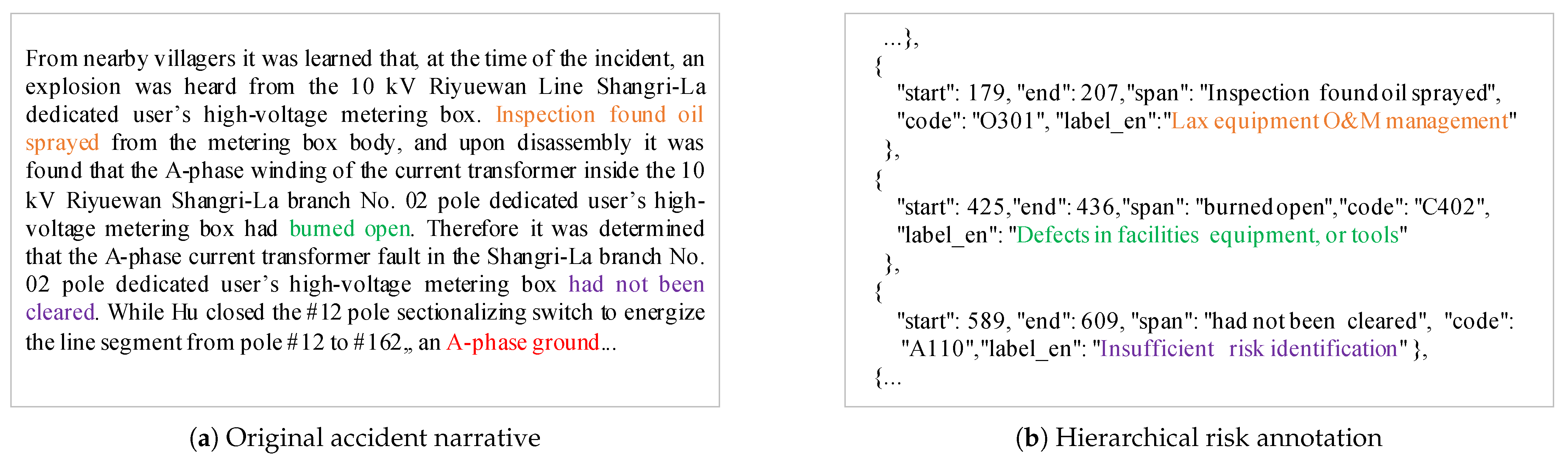

2.1. Analysis of Accident Report Texts

In recent years, NLP has been widely applied to accident risk analysis in the safety domain. Early studies relied primarily on traditional machine learning pipelines: unstructured accident narratives were converted into shallow statistical features, such as bag-of-words (BoW) and TF-IDF, and fed to classifiers including Naïve Bayes (NB), support vector machines (SVM), logistic regression (LR), and k-nearest neighbors (kNN) for cause identification and category assignment [24,25,26,27,28,29]. For example, Goh and Ubeynarayana [24] systematically evaluated multiple algorithms on 1000 OSHA construction accident reports; Zhang et al. [25] compared SVM, LR, and kNN on construction accident texts; and Kim and Chi [26] achieved automatic organization and classification of accident reports in the construction industry. In the power sector, Lu et al. [27] leveraged tokenization, term frequency, and TF-IDF to extract high-frequency terms from the description and cause paragraphs to identify hazards; Xu et al. [28] built a domain lexicon to extract risk factors; and Li et al. [29] combined text mining with Bayesian networks for causation analysis in coal mine accidents. Although these approaches are simple and interpretable, they are limited by shallow representations and struggle to capture cross-sentence dependencies and implicit semantics. Methodologically, these pipelines emphasize feature engineering with linear or kernel decision rules, which offers fast training and transparent outputs; empirically, they improve coarse-grained categorization on short narratives but degrade on long reports where key evidence is scattered across sentences, and they rarely ground predicted labels in verifiable text spans.
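
To make the shallow-feature pipeline described above concrete, the following is a minimal sketch of TF-IDF features combined with a 1-nearest-neighbor classifier, written with the Python standard library only. It is not the implementation of any cited study: the smoothed IDF formula, the whitespace tokenizer, the function names, and the four toy narratives are all assumptions made for illustration.

```python
import math
from collections import Counter

def tokenize(text):
    # Lowercase whitespace tokenization (assumption; real pipelines use domain tokenizers).
    return text.lower().split()

def fit_idf(docs):
    # Smoothed inverse document frequency: log((1 + N) / (1 + df)) + 1.
    n = len(docs)
    df = Counter(tok for doc in docs for tok in set(tokenize(doc)))
    return {tok: math.log((1 + n) / (1 + c)) + 1.0 for tok, c in df.items()}

def tfidf(text, idf):
    # Raw term frequency times IDF; tokens unseen during fitting are dropped.
    counts = Counter(tokenize(text))
    return {tok: c * idf[tok] for tok, c in counts.items() if tok in idf}

def cosine(a, b):
    # Cosine similarity between two sparse vectors stored as dicts.
    dot = sum(w * b.get(tok, 0.0) for tok, w in a.items())
    na = math.sqrt(sum(w * w for w in a.values()))
    nb = math.sqrt(sum(w * w for w in b.values()))
    return dot / (na * nb) if na and nb else 0.0

def predict_1nn(text, train_docs, train_labels, idf):
    # Return the label of the most similar training narrative.
    query = tfidf(text, idf)
    sims = [cosine(query, tfidf(doc, idf)) for doc in train_docs]
    best = max(range(len(sims)), key=sims.__getitem__)
    return train_labels[best]

# Toy, invented narratives (not taken from any cited dataset).
train_docs = [
    "worker fell from scaffold while installing panels",
    "employee fell from ladder during roof repair",
    "technician received electric shock from live cable",
    "electric shock while servicing energized transformer",
]
train_labels = ["fall", "fall", "electrocution", "electrocution"]
idf = fit_idf(train_docs)
print(predict_1nn("operator fell from the roof edge", train_docs, train_labels, idf))
```

Because each narrative is reduced to an unordered bag of weighted terms, evidence that depends on word order or on links between sentences is invisible to the classifier, which is the limitation noted above.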

With the rapid progress of deep learning, approaches based on RNNs, CNNs, GNNs, and pre-trained language models (PLMs) have been introduced into accident analysis [30,31,32]. Representative examples include Fang et al. [33] applying BERT to near-miss classification in construction; Meng et al. [34] combining BERT, Bi-LSTM, and CRF to construct a knowledge graph of power equipment failures from the literature; Liu et al. [35] proposing an intelligent parsing pipeline for power accident reports; Liu and Yang [36] integrating HMMs and random forests for association visualization and quantitative assessment of railway accident risk; and Khairuddin et al. [37] using Bi-LSTM to predict injury severity in accident reports. Numerous studies further validate the effectiveness of CNNs and GNNs for accident text tasks [30,38,39,40,41]: for example, Liu et al. [38] used CNNs for short text classification of secondary equipment faults in power systems; Li and Wu [30] applied CNNs to categorize construction accidents; Pan et al. [41] employed graph convolutional networks (GCNs) to automatically extract accident type, injury type, and injured body part from construction reports; and Cao et al. [42] and Zeng et al. [43] incorporated structure-aware and multimodal textual features to extract key information. There is also a line of work that couples accident-causation theory with text analysis to enhance risk prediction. Jia et al. [44] embedded a classical causation model into the classification process to automatically extract causation patterns from gas explosion reports in coal mining. Qin and Ai [45] proposed a causality-driven hierarchical multi-label classifier that treats predefined hazard checklists as hierarchical labels, enabling the model to learn hazard–accident correspondences and improving the accuracy of risk identification. Cheng and Shi [46] applied a dual graph attention network to tourism resource texts, showing that integrating domain knowledge and heterogeneous label relations can significantly enhance classification accuracy in vertical domains. Overall, deep models substantially enhance the modeling of contextual semantics and local structure; however, they still fail to fully exploit hierarchical label dependencies and to provide evidence-based explanations. In terms of contributions, PLM-based encoders increase robustness to lexical variation and typically raise Micro- and Macro-F1 over traditional baselines; in terms of challenges, most systems remain flat or weakly hierarchy-aware and seldom return sentence- or entity-level evidence that auditors can verify, which limits deployment in safety-critical workflows.

More recently, research has shifted toward prompt engineering with large language models (LLMs). Ray et al. [47] used LLMs to classify OSHA narratives and fault details for automatic identification of equipment failures in construction; Ahmadi et al. [48] used GPT-4.0, Gemini Pro, and LLaMA 3.1 with zero-shot and customized prompts to extract root causes, injury causes, body part, severity, and time from construction accident reports; and Jing and Rahman [49] combined GPT-4 with prompt engineering for power grid fault diagnosis. Despite their versatility and rapid adaptability, LLMs face challenges in safety-critical scenarios, including domain knowledge gaps, hallucinations, and auditability of decisions; Majumder et al. [50] argued that domain-specific fine-tuning data, professional toolchains, and retrieval-augmented generation (RAG)-style knowledge augmentation are essential to improve reliability and controllability. In practice, we observe that zero/low-shot setups can expedite prototyping, but robust deployment often requires domain grounding and explicit evidence outputs, which current LLM pipelines do not guarantee.

In summary, accident text analysis has evolved along three main trajectories: from shallow features to deep representations, from flat labels to structure-aware modeling, and from purely supervised learning to prompt-driven and knowledge-augmented paradigms [51]. Nevertheless, most studies still rely on flat labels and under-utilize the inherent hierarchical structure of accident data; interpretability is also under-addressed, making it difficult to explicitly link labels to evidence. In critical infrastructure domains such as power systems, trustworthy and auditable decision processes are vital, and explainable AI (XAI) has become a key determinant of real-world deployment [52]. Beyond classification, prior work in the safety domain also investigates information extraction and event/causality modeling (entities, relations, and causal chains that explain accident mechanisms), temporal progression and hazard evolution, retrieval and question answering for incident investigation, and knowledge-graph construction; additional strands explore multimodal fusion, retrieval-augmented reasoning, and human-in-the-loop auditing. Our study focuses on evidence-grounded hierarchical classification, while these complementary directions clarify the broader landscape and delineate the scope of this work.

2.2. Hierarchical Text Classification Methods

Hierarchical text classification must balance label prediction with hierarchical dependency constraints. Its technical trajectory has generally progressed from hierarchical variants of traditional learners to end-to-end neural modeling, and then to explicit/implicit structural encodings and low-resource paradigms. As a field-level map, Zangari et al. [5] synthesized traditional and neural HTC lines and highlighted the need for metrics and benchmarks that reflect hierarchical structure (e.g., path consistency and level-aware scoring), which framed many of the methods discussed below.
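
As an illustration of what hierarchy-reflecting evaluation can look like, the sketch below checks path consistency of a predicted label set and computes an ancestor-augmented hierarchical F1, one commonly used hierarchy-aware score. It is not claimed to be the metric definition used in [5]; the toy taxonomy, label names, and function names are hypothetical.

```python
# Hypothetical toy taxonomy: child -> parent (root-level labels map to None).
PARENT = {
    "electrical": None,
    "mechanical": None,
    "electrical/shock": "electrical",
    "electrical/arc_flash": "electrical",
    "mechanical/crush": "mechanical",
}

def ancestors(label, parent=PARENT):
    # Collect every ancestor of a label by walking up the taxonomy.
    out = set()
    node = parent[label]
    while node is not None:
        out.add(node)
        node = parent[node]
    return out

def is_path_consistent(labels, parent=PARENT):
    # A label set is path-consistent if each label's ancestors are also present.
    labels = set(labels)
    return all(ancestors(label, parent) <= labels for label in labels)

def hierarchical_f1(pred, gold, parent=PARENT):
    # Augment both sets with ancestors, then compute set-based precision/recall/F1.
    def closure(labels):
        out = set(labels)
        for label in labels:
            out |= ancestors(label, parent)
        return out

    p, g = closure(pred), closure(gold)
    overlap = len(p & g)
    if overlap == 0:
        return 0.0
    precision, recall = overlap / len(p), overlap / len(g)
    return 2 * precision * recall / (precision + recall)

# A sibling mistake keeps partial credit for the shared parent;
# a mistake in a different branch gets none.
print(hierarchical_f1({"electrical/arc_flash"}, {"electrical/shock"}))
print(hierarchical_f1({"mechanical/crush"}, {"electrical/shock"}))
```

Unlike flat F1, this score distinguishes a near miss within the correct branch from an error in an unrelated branch, which is the kind of level-aware behavior referred to above.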

Early studies focused on hierarchy-adaptive modifications of traditional machine learning algorithms. Granitzer et al.’s hierarchical boosting (BoosTexter/Centroid Booster) trained binary classifiers independently at each node and suppressed error propagation across levels via local negative sample selection [53,54]; Esuli et al. [55,56] recursively extended AdaBoost.MH into TreeBoost.MH, dynamically updating sample weights according to parent–child relations to enhance the robustness of hierarchical decisions. ClusHMC by Vens et al. jointly predicted multiple labels with a single decision tree and introduced a hierarchical distance to improve the split criterion, markedly boosting multi-task sharing efficiency [57]. To address label scarcity, Santos et al. [58] proposed HMC-SSRAkEL (self-training + ensemble), while Nakano et al.’s H-QBC [59] used query-by-committee to select highly informative samples, promoting the deployment of semi-supervised/active learning in HMTC scenarios. Methods at this stage are computationally light and interpretable, and they reduce top-level mistakes, but they have limited capacity for cross-level semantic propagation and contextual representation: node-local decisions tend to propagate errors downward, and performance drops on deeper levels and on long documents that require cross-sentence context.
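
The downward error propagation of node-local classifiers can be seen in a minimal top-down inference sketch. This is a generic illustration and not the procedure of any cited method; the taxonomy, the per-node scores, and the 0.5 threshold are hypothetical.

```python
# Hypothetical toy taxonomy: parent -> list of children.
CHILDREN = {
    "ROOT": ["electrical", "mechanical"],
    "electrical": ["electrical/shock", "electrical/arc_flash"],
    "mechanical": ["mechanical/crush"],
}

def top_down_predict(node_scores, children=CHILDREN, threshold=0.5):
    # Visit a child only if its parent was accepted, so a rejected parent
    # blocks every descendant regardless of the descendants' own scores.
    predicted, frontier = [], ["ROOT"]
    while frontier:
        node = frontier.pop()
        for child in children.get(node, []):
            if node_scores.get(child, 0.0) >= threshold:
                predicted.append(child)
                frontier.append(child)
    return sorted(predicted)

# The leaf classifier is confident (0.9) in both cases, but it is only
# reached when the parent classifier also fires.
print(top_down_predict({"electrical": 0.8, "electrical/shock": 0.9}))
print(top_down_predict({"electrical": 0.4, "electrical/shock": 0.9}))
```

The second call returns an empty prediction even though the leaf score is high, which illustrates why errors made near the root are especially costly for deep labels.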

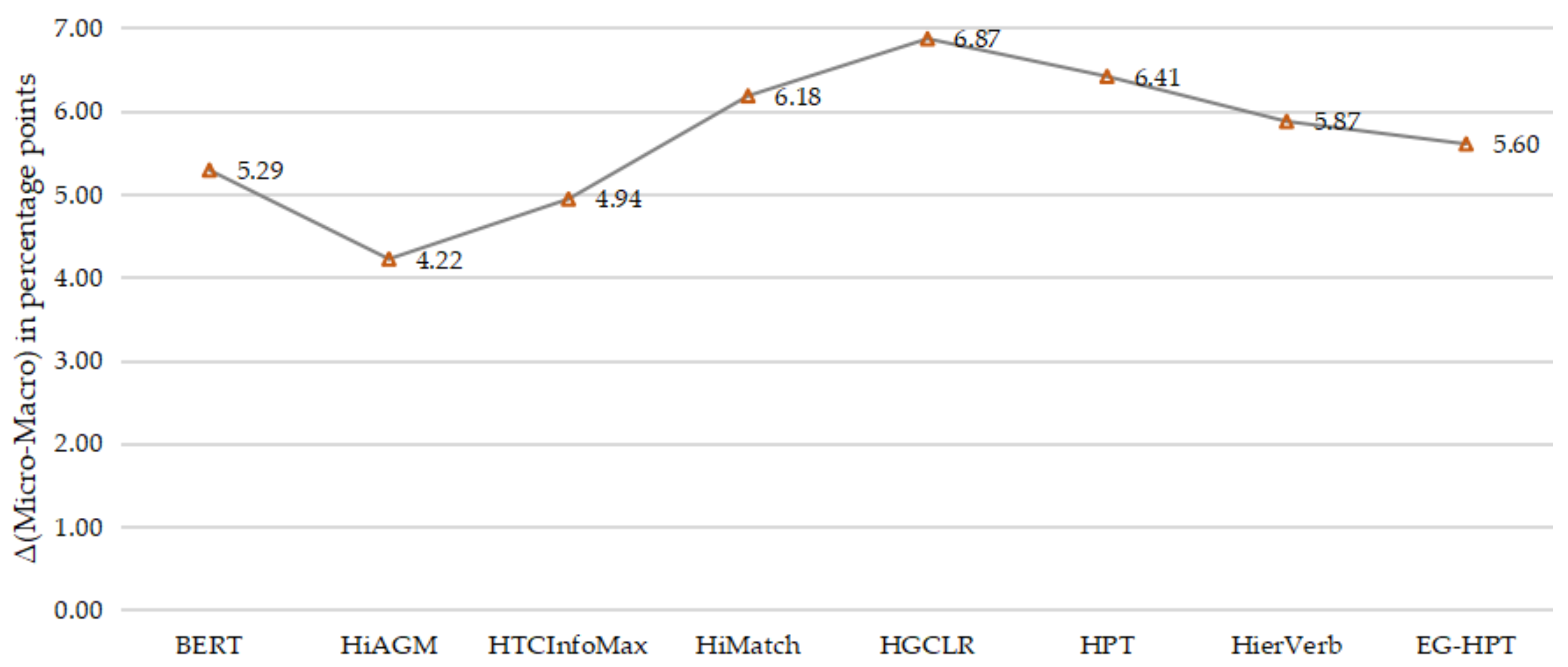

With the rise of deep learning, end-to-end neural modeling has become a mainstream approach. On the recurrent front, Huang et al.’s HARNN/HmcNet generated document representations top-down via hierarchical attention units and ensured decoding consistency with path-constrained pruning (PCP) [60,61]; Wang et al.’s HCSM combined an ordered RNN (AORNN) with a bidirectional capsule network (HBiCaps), using dynamic routing to pass semantics across the hierarchy [62]. On the convolutional side, Shimura et al.’s HFT-CNN hierarchically fine-tuned parent-class features to strengthen discrimination between child classes [63]; Peng et al. [64] converted text into a word graph and used graph convolution to capture non-contiguous patterns, including a joint hierarchical regularizer that inspired subsequent graph-based research. From a generative perspective, Mao et al.’s HiLAP [14] treated HMTC as a Markov decision process and searched label paths via reinforcement learning; Fan et al. [65] adopted a sequence generation paradigm to output structured labels and introduced hierarchical constraints via multilevel decoupling. These approaches enhance end-to-end representation and inference, but they remain relatively weak in explicitly aligning label semantics with textual evidence. Across benchmarks, these models typically improved accuracy and F1 over traditional learners, especially at mid-depth levels, yet they seldom provided auditable evidence per predicted label, and path consistency often relied on post hoc rules rather than learned grounding. HTCInfoMax [7] advanced this line by maximizing mutual information between texts and gold labels while imposing priors on label embeddings, effectively filtering irrelevant content and mitigating label imbalance relative to the earlier HiAGM model.

In recent years, graph neural networks have become a key technique for explicit structural modeling. In semantic alignment, Chen et al.’s HiMatch [17] computed text–label similarity in a shared embedding space, with a hierarchical matching loss that enforced coarse-to-fine alignment and improved hierarchy awareness; Zhang et al.’s LA-HCN [66] employed label-aware graph attention to dynamically aggregate same-level labels and generate feature masks. In geometric embeddings, Chen et al.’s HyperIM [15] leveraged the tree-like property of hyperbolic space to encode parent–child relations in the Poincaré ball; Deng et al.’s HLGM [67] modeled branch dependencies with a hypergraph and strengthened inter-class separation via a hierarchical triplet loss. For knowledge augmentation, Liu et al.’s K-HTC [22] integrated knowledge-graph entities and used graph attention to jointly optimize text and label representations; Cheng et al. [68] integrated domain knowledge and hierarchical relations via a heterogeneous graph. Kumar and Toshinwal [69] further introduced a Hierarchy-Aware Label Correlation (HLC) model with Graphormer encoders and a CEAL loss, which effectively captured fine-grained label dependencies.

This line of work injects label topology into learning and reduces coarse-to-fine errors when the structural priors are accurate, as illustrated by the consistent gains on hierarchy-sensitive metrics reported for HiMatch [17]. However, it depends on high-quality ontologies or knowledge graphs and on stable structural assumptions, so robustness degrades when the priors are noisy or incomplete. Moreover, many of these methods still optimize structure without explicitly grounding predictions in sentence- or entity-level evidence.
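
To illustrate why hyperbolic space suits tree-like label structures, as mentioned above for HyperIM, the following sketch computes the standard geodesic distance in the Poincaré ball. It shows only the distance function, not the HyperIM model; the function name and the example points are chosen for illustration.

```python
import math

def poincare_distance(u, v):
    # Geodesic distance in the Poincaré ball:
    # d(u, v) = arcosh(1 + 2 * ||u - v||^2 / ((1 - ||u||^2) * (1 - ||v||^2)))
    sq_u = sum(x * x for x in u)
    sq_v = sum(x * x for x in v)
    if sq_u >= 1.0 or sq_v >= 1.0:
        raise ValueError("points must lie strictly inside the unit ball")
    sq_diff = sum((a - b) ** 2 for a, b in zip(u, v))
    return math.acosh(1.0 + 2.0 * sq_diff / ((1.0 - sq_u) * (1.0 - sq_v)))

# The same Euclidean gap of 0.1 is much larger in hyperbolic terms near the
# boundary than near the origin, leaving room for many well-separated leaves.
near_origin = poincare_distance((0.0, 0.0), (0.1, 0.0))
near_boundary = poincare_distance((0.85, 0.0), (0.95, 0.0))
print(near_origin, near_boundary)
```

Distances grow rapidly toward the boundary of the ball, so general labels can sit near the origin while their many descendants spread out near the boundary without crowding one another.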

Breakthroughs in low-resource learning paradigms are pushing HMTC toward practical deployment. In contrastive learning, Wang et al. proposed HGCLR [18] and HPT [16]. HGCLR injected label topology into the text encoder through hierarchy-aware construction of positives and negatives guided directly by the taxonomy, and, together with label path control and phrase-level enhancement, produced high-quality samples [18]. HPT appended learnable virtual label tokens to the end of the input sequence, reformulated hierarchical multilabeling as layer-wise multilabel MLM, and jointly trained text and label representations within a shared BERT encoding space using ZMLCE loss, thereby narrowing the mismatch between PLM pre-training and the downstream task [16]. Yu et al.’s HJCL [70] combined contrastive learning at the instance level and the label level, using batching strategies to stabilize optimization over complex structures. In the few-shot setting, Shen et al.’s TaxoClass [71] initialized the core classifier from class names and achieved efficient generalization through hierarchical self-training; Ji et al.’s HierVerbalizer (HierVerb) [19] verbalized label descriptions as prompts to adapt pre-trained models, injected hierarchical knowledge into the verbalizers through hierarchical contrastive learning, and outperformed graph-based baselines in few-shot HTC; Zhang et al.’s Dataless [72] achieved zero-shot classification via semantic-space similarity. Zhang et al. [73] proposed Bpc-lw, which introduced a bidirectional path constraint with label weighting to enforce inter-layer consistency and employed contrastive learning to enhance discriminability in few-shot HTC. Compared with fully supervised end-to-end methods, these approaches offer advantages in parameter efficiency, generalization, and adaptability to weak supervision, but they still provide limited support for evidence interpretability and cross-sentence dependencies: most methods fall short of selecting verifiable top-k evidence per label or of modeling cross-sentence chains explicitly.

Task-specific extensions continue to broaden application boundaries. Under extreme multi-label and dynamic environments, researchers explore computational and evaluation scalability. Liu et al. [74] validated the scalability of CNNs on large label sets and proposed dynamic pooling; Gargiulo et al. [75] mitigated long-tailed effects via label set expansion. Ren et al. [76] combined time-aware topics to cope with concept drift in social streams; Zhao et al. [77] designed an interactive feature fusion to accommodate the hierarchical classification of the scholarly literature. In terms of toolkits and metrics, Liu et al. [78] provided multiple text encoders and hierarchical loss interfaces; Amigó and Delgado [79] introduced an Information-Contrast Measure (ICM) to characterize evaluation consistency under hierarchical imbalance, supporting reproducibility and fair comparison. These efforts address scale and evaluation, but persistent challenges remain in long-tail robustness, deep-level recall, and delivering path-consistent predictions with evidence that auditors can verify.

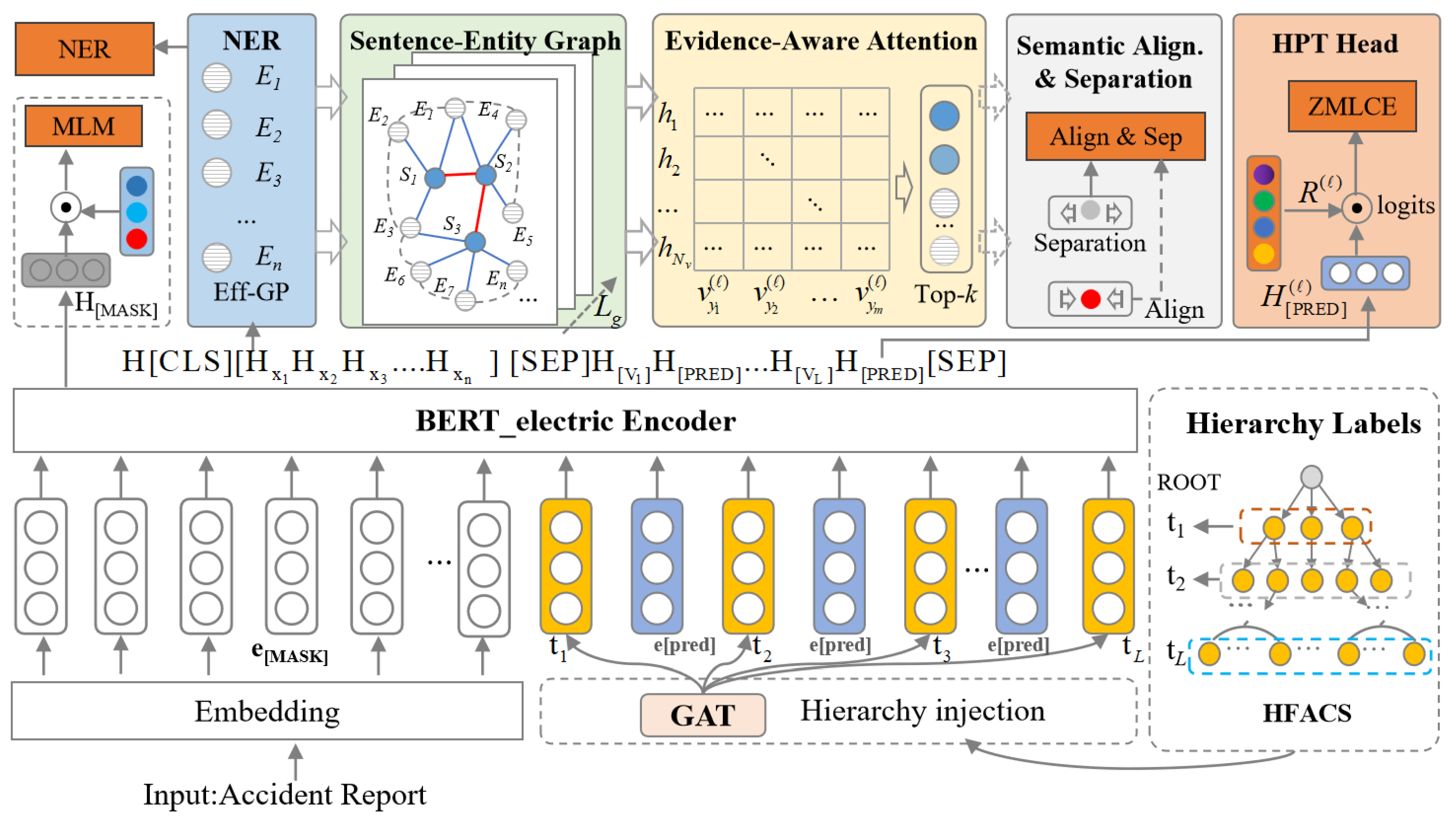

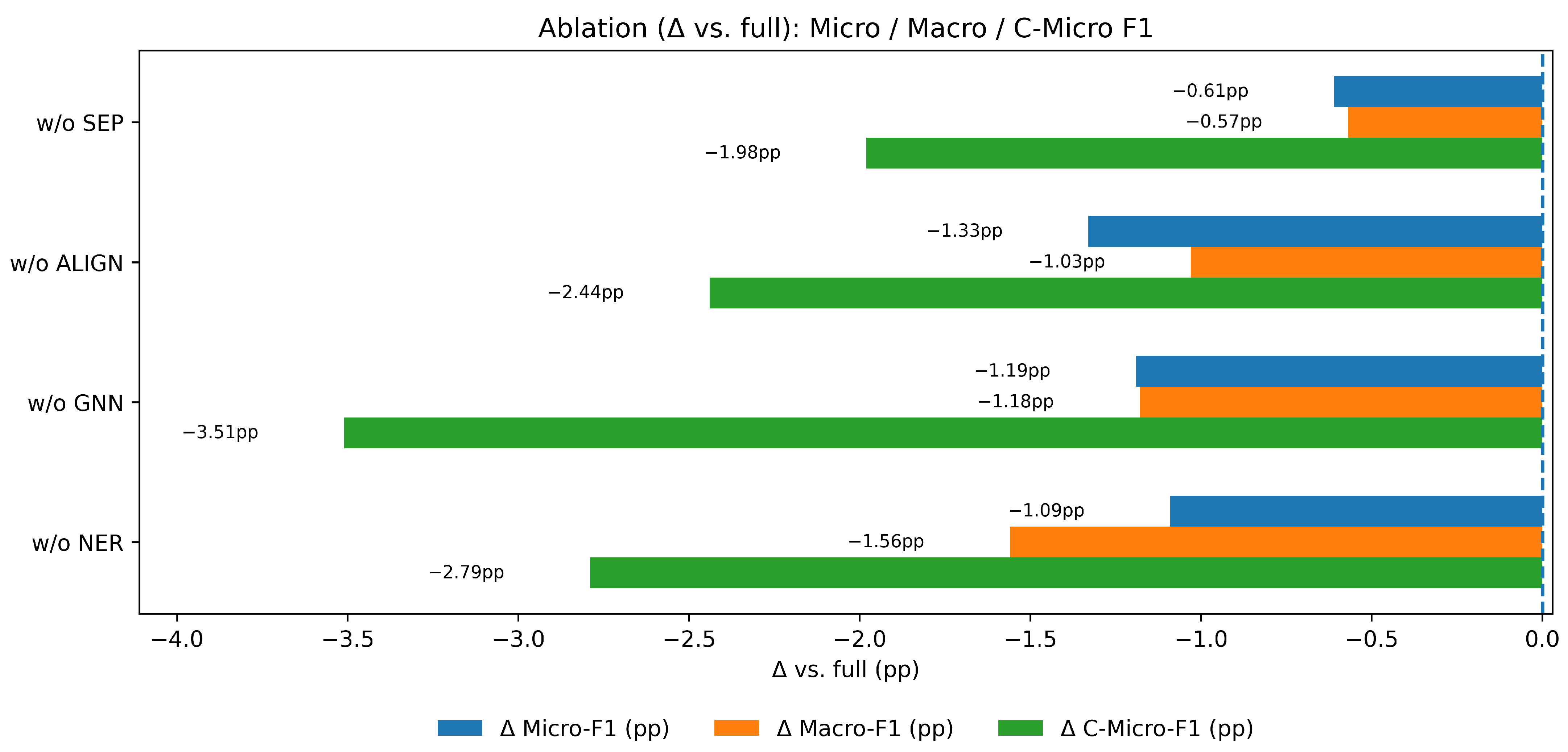

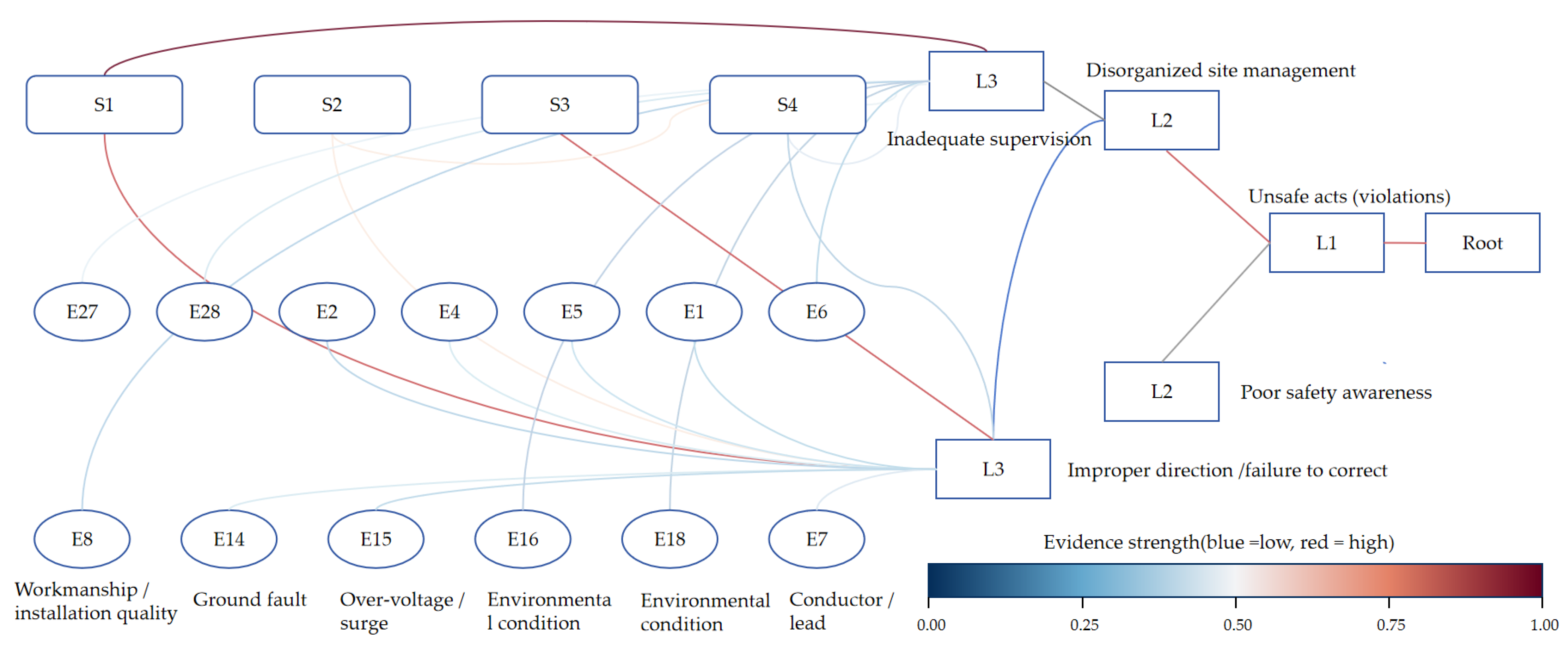

In summary, the development of HMTC reflects a continuous evolution from hand-crafted features to end-to-end representations, from explicit constraints to implicit structural encodings, and from full supervision to low-resource/parameter-efficient regimes [14,16,17,18,64]. However, existing methods still have three shortcomings. First, label–evidence alignment is insufficiently explicit: most models improve structural consistency via label embeddings or path constraints, but they do not systematically address the auditable question of “which sentences/entities support a predicted label.” Second, cross-sentence/cross-entity dependency modeling is inadequate: in long documents, causal cues are often scattered across sentences, and pure sequence encoding or label alignment struggles to capture cross-sentence interactions and inter-entity relations. Third, hierarchical separability and long-tail robustness remain fragile, with semantic collapse and insufficient recall of deep labels and rare categories. To address these issues, this paper introduces label-aware evidence localization, hierarchical semantic alignment (weighted InfoNCE), and hierarchical separation (triplet) constraints under the HPT framework, and it models cross-sentence dependencies via Global Pointer [23]-based nested entity recognition and a sentence–entity heterogeneous graph. The aim is to simultaneously improve structural consistency, few-shot robustness, and interpretability, thus closing gaps in engineering auditability and long-document understanding. Our approach therefore seeks to join structural priors with explicit evidence grounding and to deliver path-consistent predictions that can be inspected and audited in safety-critical applications.
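
For orientation, the two loss families named above can be written in their generic textbook forms. The notation below is an assumption introduced here for illustration only and does not reproduce the exact objectives of this paper, which are defined in the method section: $h$ is a text representation, $e_c$ the embedding of label $c$, $y_\ell$ the gold label at level $\ell$ (assuming a single gold label per level for simplicity), $\mathcal{C}_\ell$ the candidate labels at level $\ell$, $w_\ell$ a per-level weight, $\tau$ a temperature, and $m$ a margin.

$$
\mathcal{L}_{\text{align}} = -\sum_{\ell=1}^{L} w_\ell \,\log \frac{\exp\bigl(\operatorname{sim}(h, e_{y_\ell})/\tau\bigr)}{\sum_{c \in \mathcal{C}_\ell} \exp\bigl(\operatorname{sim}(h, e_c)/\tau\bigr)}
$$

$$
\mathcal{L}_{\text{sep}} = \max\bigl(0,\; d(a, p) - d(a, n) + m\bigr)
$$

In the first expression, a level-weighted InfoNCE term pulls the text toward its gold label embedding and away from the other labels at the same level. In the second, a triplet term requires an anchor $a$ to be closer to a positive $p$ (for example, a label on the same path) than to a negative $n$ (for example, a label from another branch) by at least the margin $m$ under a distance $d$.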