1. Introduction

As global reliance on finite resources like oil and natural gas becomes increasingly untenable, the transition to renewable energy has emerged as an irreversible global trend [1]. The wind power sector, in particular, has demonstrated exceptional growth. According to the Global Wind Report 2025 by the Global Wind Energy Council (GWEC), 2024 marked another record year with 117 GW of new wind capacity installed worldwide, bringing the total cumulative capacity to 1136 GW. Onshore wind continues to be the backbone of this expansion, reaching a cumulative capacity of 1052.3 GW and accounting for approximately 92.7% of the total global installations by the end of 2024 [2]. The large-scale integration of wind power, which is essential for achieving carbon neutrality, is fundamentally constrained by its inherent intermittency and stochastic characteristics. The output from a wind farm is highly sensitive to a variety of complex and dynamic factors, including not only meteorological variables but also the geographical topology of the turbines [3]. This unpredictability introduces significant risks to power system operations, complicating grid dispatch and jeopardizing system reliability. To address these challenges and fully harness the potential of wind energy, it is therefore crucial to develop accurate and robust power forecasting technologies [4,5].

The development of wind power prediction methods reflects an evolutionary shift from physical and statistical models to advanced machine learning and deep learning approaches, driven by the continuous pursuit of higher prediction accuracy and by advances in computational science. In the early stages, wind power forecasting relied primarily on two categories of models. The first consisted of physical models based on numerical weather prediction (NWP), which use the governing equations of fluid dynamics and atmospheric science to simulate atmospheric conditions. These models are grounded in rigorous theoretical principles and depend little on historical data [6]. However, their high computational demands limit how frequently they can be updated, making it difficult to meet the real-time requirements of short-term forecasting. The second category comprised traditional statistical models, such as the autoregressive moving average (ARMA) [7] and autoregressive integrated moving average (ARIMA) [8] models. These models are computationally efficient and capture linear patterns in historical observations, but their predictive robustness diminishes when confronted with the strong nonlinearity and non-stationary fluctuations inherent in wind speed data.

With the rapid growth of computing power, machine learning has emerged as an effective tool for addressing the nonlinear challenges in wind power prediction. Traditional machine learning techniques, including support vector machines (SVM) [9], Categorical Boosting (CatBoost) [10], and random forests (RF) [11], have shown strong capabilities in modeling nonlinear patterns. Nevertheless, the performance of these models depends heavily on the quality of feature engineering: if critical physical or statistical patterns are not effectively extracted and represented as input features, the generalization ability of the model may be significantly compromised. The emergence of deep learning (DL) marked a paradigm shift in this domain [12]. Unlike conventional approaches that rely on manual feature engineering, deep learning models can automatically learn complex nonlinear mappings from raw data in an end-to-end manner, directly linking meteorological inputs to power outputs. Consequently, current research increasingly focuses on sophisticated architectures capable of capturing multivariable interactions and spatiotemporal dependencies to enhance prediction accuracy [13].

The application of deep learning to wind power forecasting initially centered on classical models such as long short-term memory (LSTM) networks and convolutional neural networks (CNNs), which were employed to extract temporal dynamics and local spatial features, respectively. To better capture spatial correlations among wind turbines, graph neural networks (GNNs) have emerged as a key technological breakthrough, as demonstrated by Peng et al. [14]. Researchers have moved beyond static, geography-based graph structures and explored multi-graph construction methods that integrate multi-dimensional information, as shown by Zhao et al. [15]. These approaches are often combined with reinforcement learning for dynamic model integration, as explored by Yu et al. and Zhao et al. [15,16]. In addition, attention mechanisms are increasingly used to interpret model decision-making processes, as implemented by Zhang et al. [17], and dynamic graph techniques capable of adaptively learning graph structures have been developed by Xie et al. [18].

In recent years, research has increasingly emphasized practical application scenarios. To address the “black box” nature of deep learning models, explainability analysis has gained prominence. For time series feature extraction, more advanced architectures such as the Transformer have been introduced, and Hou et al. have even developed dual-domain Transformers capable of joint time-frequency analysis to uncover deeper dynamic patterns [19]. Considering the economic implications of prediction errors in electricity markets, Liang et al. and Dong et al. proposed asymmetric loss functions tailored to decision costs [20,21], and He et al. presented an online learning framework coupled with concept drift detection to adapt to evolving data distributions [22]. Furthermore, a growing trend involves shifting from deterministic point predictions to probabilistic interval predictions, which offer richer information for grid risk management, as demonstrated by Li et al. [23]. Cutting-edge research now integrates multiple components into unified end-to-end predictive systems, for example by deeply fusing historical power data and environmental variables with novel architectures such as Kolmogorov-Arnold networks (KANs), as developed by Wu et al. [24], or by combining signal processing techniques such as variational mode decomposition (VMD) and the maximal overlap discrete wavelet transform (MODWT) for multi-scale data preprocessing and analysis, as shown by Qiao et al. and Gao et al. [25,26].

Recently, significant progress has been made in enhancing wind power forecasting with GNNs, focusing on optimizing graph construction, improving physical interpretability, addressing scalability challenges, and quantifying prediction uncertainty. In terms of model fusion and integration, Qu et al. proposed a dual-stacking ensemble spatiotemporal graph deep neural network based on multiple ensemble strategies; this model integrates Bagging, LSTM, and RF through a stacking algorithm to fully exploit the spatiotemporal correlations within wind farm clusters, achieving high-precision multi-step-ahead forecasting [27]. To enhance physical realism, Qiu et al. incorporated turbine-level “blocking effects” into their spatiotemporal GNN to better simulate airflow interactions [28]. For large-scale deployment, Wang et al. designed a comprehensive framework from data preprocessing to prediction, employing an improved DBSCAN algorithm for anomaly detection and repair and using spectral clustering to partition wind farms into sub-clusters for parallel prediction, thereby improving computational efficiency and scalability [29]. To quantify prediction uncertainty, Liao et al. combined GNNs with an enhanced Bootstrap technique to achieve ultra-short-term probabilistic interval forecasting, providing valuable support for risk-informed decision-making in power systems [30]. Qu et al. integrated prediction intervals with expert behaviors into a deep reinforcement learning framework to improve the economic operation of microgrids; this approach mitigates the effect of prediction uncertainty and improves training efficiency, leading to more robust and cost-effective power scheduling decisions [31].

In summary, wind power forecasting research has now entered a stage of deeper integration and more refined modeling. Although existing models have made progress in capturing both spatial and temporal patterns, they continue to face challenges in handling the complex dynamics of wind energy systems. In particular, current methods struggle to represent the different time scales of variability, to adaptively pinpoint which past conditions are most relevant, and to capture the large-scale, non-local spatial correlations driven by broader meteorological systems. These unresolved challenges remain key obstacles to further improving forecasting accuracy and form the central motivation of this study.

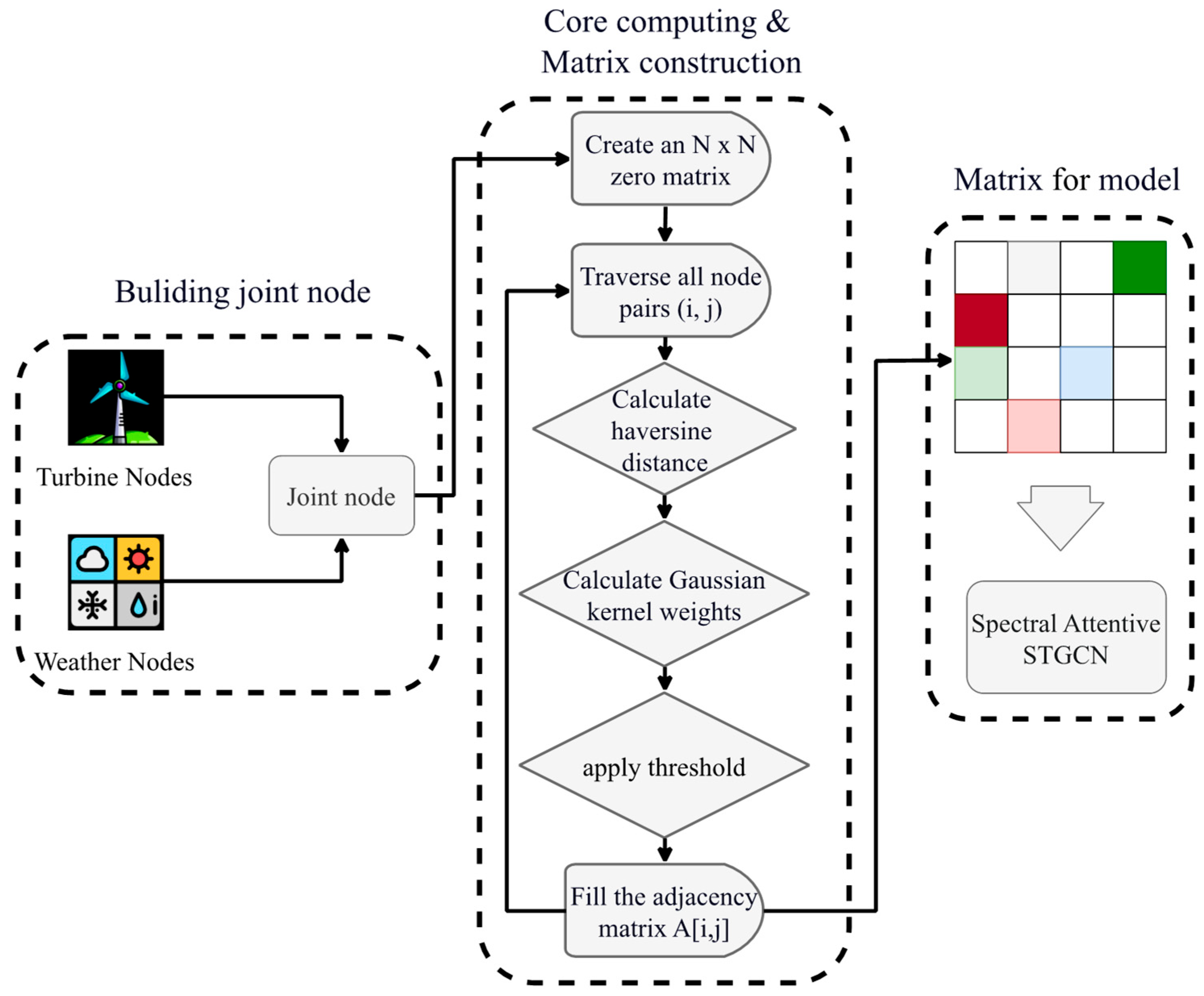

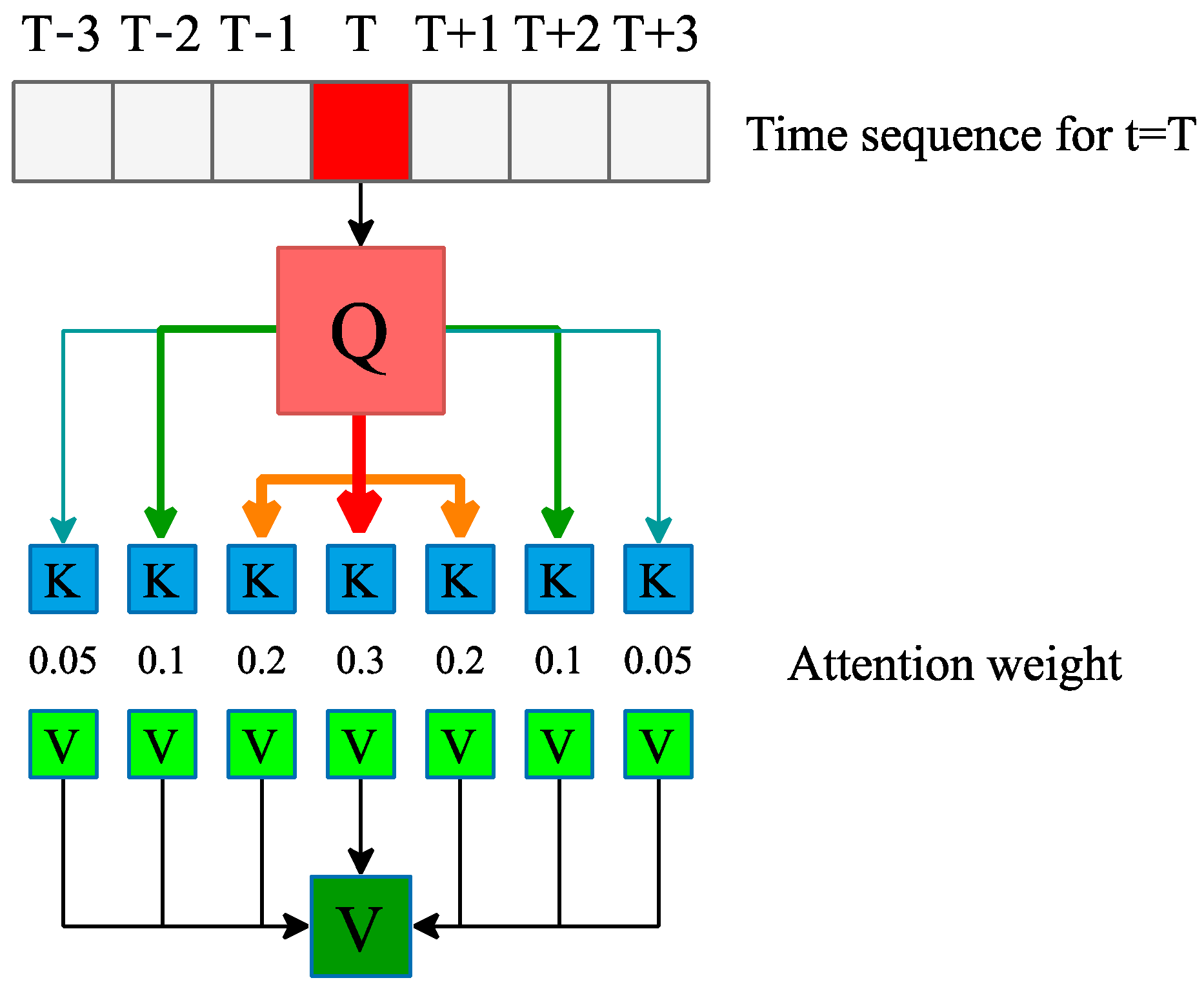

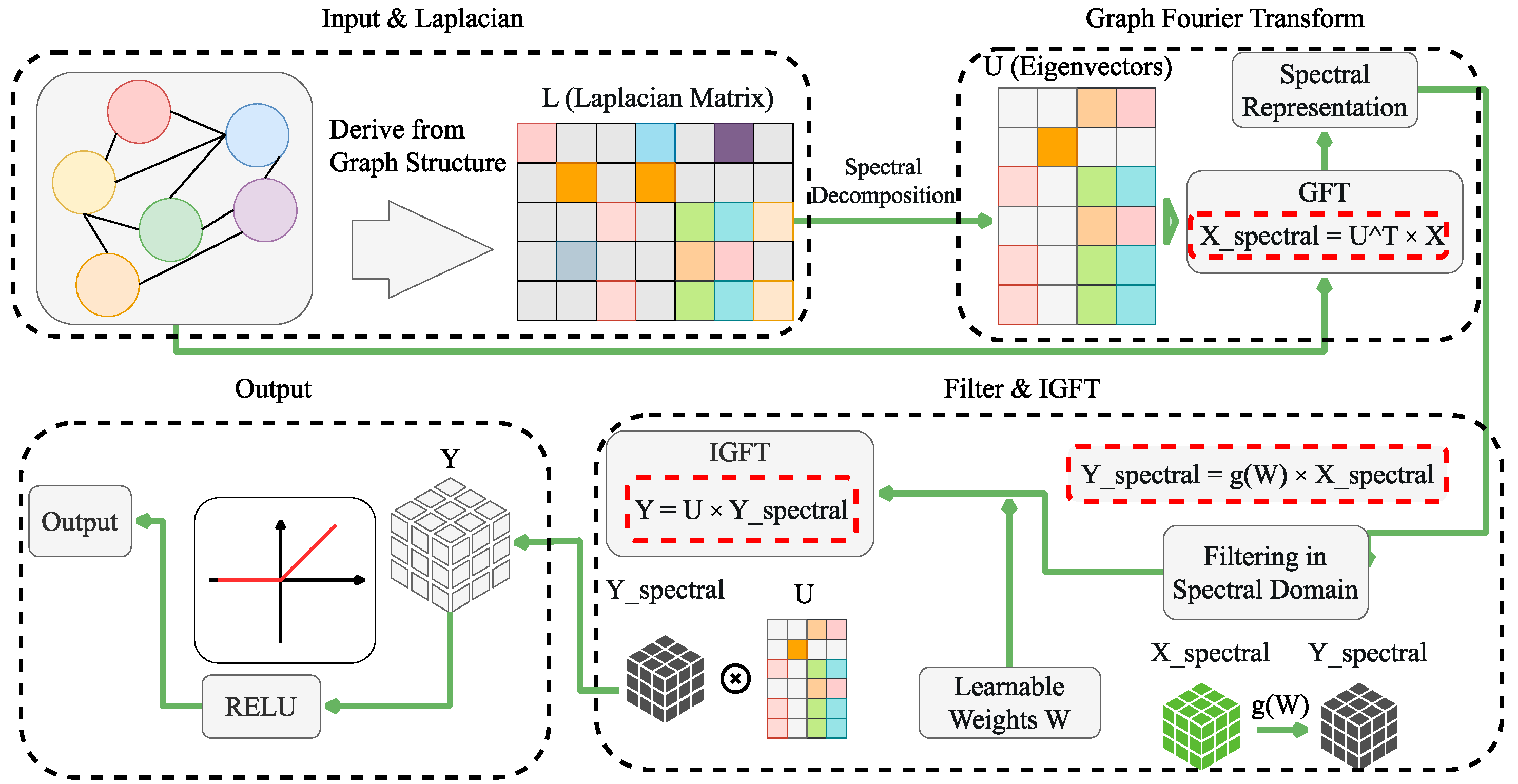

To address these challenges, we propose the Spectral-Attentive Spatio-Temporal Graph Convolutional Network (SA-STGCN). We adopt a unified framework not because such a framework is inherently superior, but because it provides a natural foundation for combining three complementary components, each targeting one of the problems above. The process starts by transforming the raw, unstable wind power time series with a wavelet transform, which decomposes the noisy signal into frequency sub-bands and thereby separates long-term, relatively stable wind patterns from short-term turbulent fluctuations. This multi-scale representation serves as the input to the predictive core of the model. Within each block, a temporal attention mechanism scans the historical sequence and assigns higher weights to the most influential past time steps, allowing the network to focus on key precursors such as those preceding sudden output ramps. In parallel, the model handles spatial relationships with spectral graph convolution, which operates in the graph’s spectral domain instead of relying on localized message passing, so that it can extract global, farm-level correlations driven by large-scale weather systems. By combining multi-resolution decomposition, adaptive temporal weighting, and global spatial filtering, SA-STGCN builds a richer representation of wind dynamics than conventional models.
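The wavelet front end described above can be illustrated with a single-level discrete wavelet transform. The sketch below is a minimal pure-Python example that swaps in the Haar wavelet for the db4 wavelet used in the experiments, purely because Haar has a two-tap closed form; the function names `haar_dwt` and `haar_idwt` are hypothetical. With PyWavelets installed, `pywt.dwt(x, 'db4')` would return the corresponding db4 approximation and detail coefficients.

```python
import math
from typing import List, Tuple

def haar_dwt(x: List[float]) -> Tuple[List[float], List[float]]:
    """Single-level Haar DWT returning (approximation, detail) coefficients.

    The approximation (low-frequency) band keeps the slowly varying trend;
    the detail (high-frequency) band isolates short-term fluctuations.
    Assumes an even-length input; real pipelines pad or use boundary modes.
    """
    if len(x) % 2:
        raise ValueError("input length must be even")
    s = 1.0 / math.sqrt(2.0)
    approx = [(x[i] + x[i + 1]) * s for i in range(0, len(x), 2)]
    detail = [(x[i] - x[i + 1]) * s for i in range(0, len(x), 2)]
    return approx, detail

def haar_idwt(approx: List[float], detail: List[float]) -> List[float]:
    """Inverse of haar_dwt: perfectly reconstructs the original signal."""
    s = 1.0 / math.sqrt(2.0)
    out: List[float] = []
    for a, d in zip(approx, detail):
        out.extend([(a + d) * s, (a - d) * s])
    return out

# Toy wind-power trace (MW): a slow ramp with an alternating fluctuation on top.
power = [10.0 + 0.5 * t + (1.0 if t % 2 == 0 else -1.0) for t in range(8)]
cA, cD = haar_dwt(power)
print([round(v, 3) for v in cA])  # trend band: rises smoothly with the ramp
print([round(v, 3) for v in cD])  # detail band: constant here, since the toy oscillation is regular
```

Because the transform is orthonormal, the two bands together preserve the signal's energy and allow exact reconstruction, so the decomposition re-expresses the input rather than discarding information.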

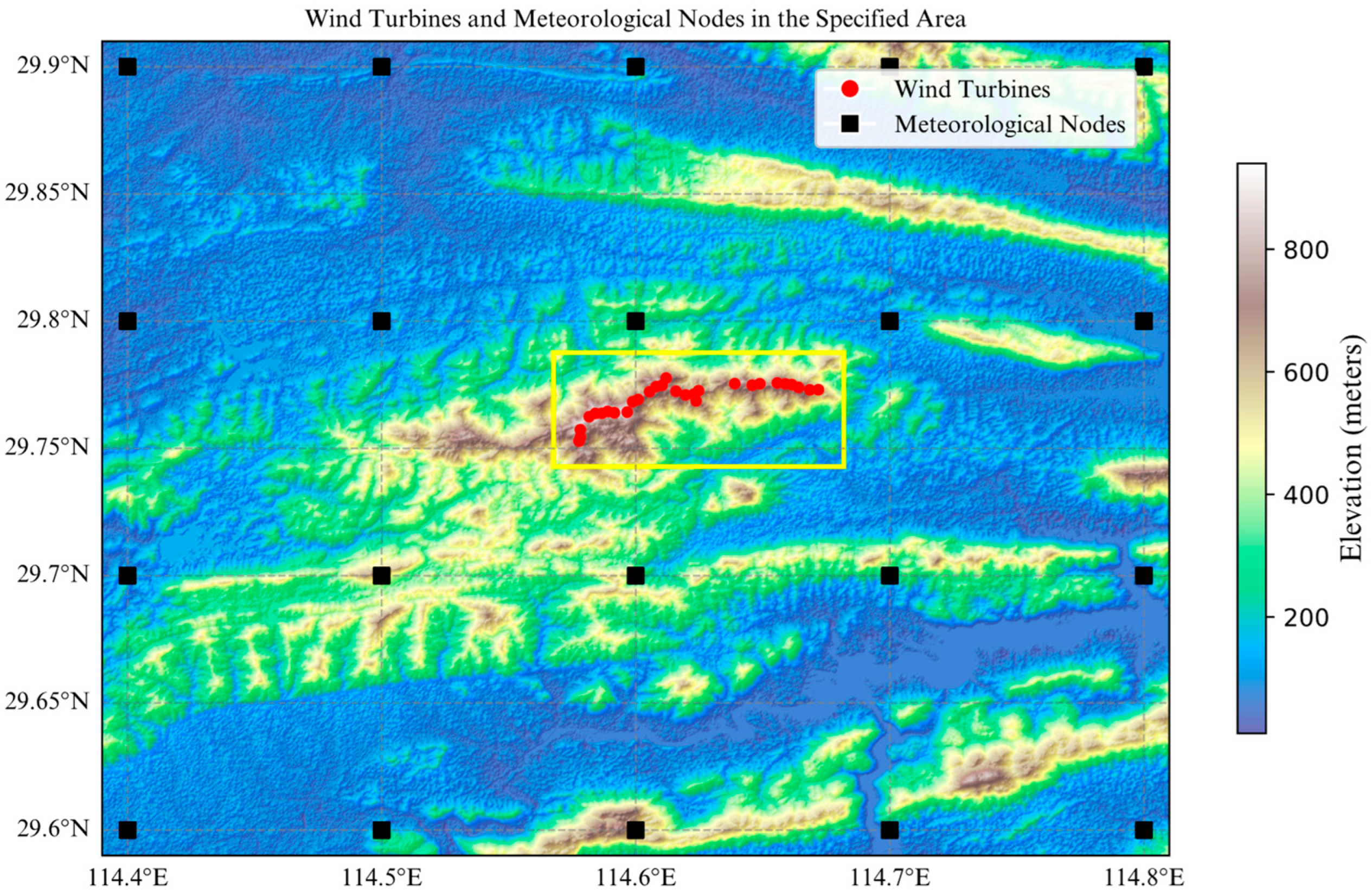

We tested SA-STGCN using a real-world dataset collected from a wind farm in central China. Experimental results show that our model delivers significantly better forecasting performance than current state-of-the-art (SOTA) methods. To summarize, the main contributions of this paper are:

- (1) We propose SA-STGCN, a new unified spatiotemporal framework that extends the STGCN architecture for accurate short-term wind power forecasting. By integrating frequency analysis, temporal attention, and spectral graph theory, it models the coupled spatial and temporal characteristics of wind power more coherently.

- (2) The model introduces three complementary innovations. First, a wavelet transform serves as a front-end feature extractor, decomposing the time series into multiple frequency bands to reveal both short- and long-term patterns. Second, a temporal attention mechanism embedded in each block adaptively identifies the most relevant historical time steps, overcoming the limitation of static temporal kernels. Third, spectral graph convolution based on the graph Laplacian enables the model to capture global, multi-scale spatial connections beyond the reach of purely local graph operations.

- (3) Through comprehensive evaluation on the collected dataset, we show that SA-STGCN achieves significantly better forecasting accuracy than existing SOTA methods, demonstrating its effectiveness for modeling complex spatiotemporal systems.
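To make the Laplacian-based spectral graph convolution described in the contributions concrete, the sketch below builds the symmetric normalized Laplacian $L = I - D^{-1/2} A D^{-1/2}$ of a small turbine graph, diagonalizes it, and applies a filter $g(\lambda)$ in the graph Fourier domain, $y = U\,g(\Lambda)\,U^{\top}x$. It is a pure-Python illustration under stated assumptions: the adjacency matrix, the hand-written `jacobi_eigh` helper, and the fixed heat-kernel filter are hypothetical. In a trainable layer, $g(\lambda)$ would be parameterized and learned, and a practical implementation would use a library eigensolver such as `numpy.linalg.eigh`.

```python
import math
from typing import Callable, List, Tuple

Matrix = List[List[float]]

def normalized_laplacian(adj: Matrix) -> Matrix:
    """Symmetric normalized Laplacian L = I - D^{-1/2} A D^{-1/2}."""
    n = len(adj)
    inv_sqrt = [1.0 / math.sqrt(sum(row)) if sum(row) > 0 else 0.0 for row in adj]
    return [[(1.0 if i == j else 0.0) - inv_sqrt[i] * adj[i][j] * inv_sqrt[j]
             for j in range(n)] for i in range(n)]

def jacobi_eigh(sym: Matrix, sweeps: int = 100, tol: float = 1e-20) -> Tuple[List[float], Matrix]:
    """Eigendecomposition of a symmetric matrix by cyclic Jacobi rotations.

    Returns (eigenvalues, U); column k of U is the eigenvector for eigenvalue k.
    """
    n = len(sym)
    a = [list(map(float, row)) for row in sym]
    u = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for _ in range(sweeps):
        if sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j) < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if abs(a[p][q]) < 1e-15:
                    continue
                # Rotation angle chosen so that the (p, q) entry becomes zero.
                theta = (a[q][q] - a[p][p]) / (2.0 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                for k in range(n):  # A <- A J (columns p and q)
                    akp, akq = a[k][p], a[k][q]
                    a[k][p], a[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(n):  # A <- J^T A (rows p and q)
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k], a[q][k] = c * apk - s * aqk, s * apk + c * aqk
                for k in range(n):  # U <- U J accumulates the eigenvectors
                    ukp, ukq = u[k][p], u[k][q]
                    u[k][p], u[k][q] = c * ukp - s * ukq, s * ukp + c * ukq
    return [a[i][i] for i in range(n)], u

def spectral_filter(adj: Matrix, x: List[float], g: Callable[[float], float]) -> List[float]:
    """y = U g(Lambda) U^T x: graph Fourier transform, filter, inverse transform."""
    lam, u = jacobi_eigh(normalized_laplacian(adj))
    n = len(x)
    x_hat = [sum(u[i][k] * x[i] for i in range(n)) for k in range(n)]
    y_hat = [g(lam[k]) * x_hat[k] for k in range(n)]
    return [sum(u[i][k] * y_hat[k] for k in range(n)) for i in range(n)]

# Hypothetical 4-turbine chain T0 - T1 - T2 - T3 with unit edge weights.
adj = [[0, 1, 0, 0], [1, 0, 1, 0], [0, 1, 0, 1], [0, 0, 1, 0]]
spike = [1.0, 0.0, 0.0, 0.0]
# Heat-kernel low-pass filter g(lambda) = exp(-lambda): in a single step the spike
# reaches every turbine, including T3 three hops away.
smoothed = spectral_filter(adj, spike, lambda lam: math.exp(-lam))
print([round(v, 3) for v in smoothed])
```

The contrast with message passing is visible in the output: one layer of 1-hop aggregation would leave T3 untouched, whereas a non-polynomial spectral response couples all nodes at once. The price is the eigendecomposition, whose cost grows cubically with the number of turbines.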

The rest of this paper is organized as follows. Section 2 explains the SA-STGCN architecture and methodology. Section 3 describes the dataset, preprocessing steps, and evaluation metrics. Section 4 presents the experimental setup, model performance, and comparison with other baselines. Finally, Section 5 concludes with key findings and discusses future research directions.

4. Experiment and Performance Evaluation

We evaluate the proposed SA-STGCN on our self-collected wind farm dataset through comprehensive experiments against seven representative baselines: six deep learning models, namely WAVELET_STGCN, Graph Attention Network (GAT), Spatio-Temporal Transformer (ST_TRANSFORMER), BASIC_TRANSFORMER, Attention LSTM (ATTN-LSTM), and SIMPLE_LSTM, plus the gradient-boosted tree model Light Gradient Boosting Machine (LGBM). Together, these models span graph-based, Transformer-based, recurrent, and tree-based approaches to spatio-temporal forecasting and provide strong comparative benchmarks. All models undergo identical data preprocessing and hyperparameter optimization to ensure a fair evaluation. Performance is assessed primarily with the Mean Absolute Error (MAE) and Root Mean Square Error (RMSE), complemented by R² and mutual information (MI). The experimental results demonstrate that SA-STGCN consistently outperforms these baselines, showing superior accuracy and robustness in capturing complex wind power dynamics.

4.1. Settings

The experiments were conducted on a high-performance computing platform equipped with an NVIDIA RTX 4090 GPU (24 GB VRAM) with CUDA 12.4 support, using the PyTorch 2.8.0 framework. The forecasting task was defined as predicting the total wind farm power output over a 16-step future horizon (4 h at 15-min intervals) from a 24-step historical input window (6 h). The entire dataset was partitioned into training and validation sets with an 80/20 split.
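The forecasting setup above (24 input steps, 16 output steps, 80/20 split) can be sketched as a sliding-window sample generator. This is a minimal illustration under assumptions not stated in the text: the helper names are hypothetical, the stride is one step, the split is taken chronologically (earlier windows for training) rather than by shuffling, and a single univariate series stands in for the per-turbine inputs the model actually ingests.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[List[float], List[float]]

def make_windows(series: Sequence[float], n_in: int = 24, n_out: int = 16) -> List[Sample]:
    """Slide n_in inputs followed by n_out targets over the series with stride 1."""
    samples: List[Sample] = []
    for start in range(len(series) - n_in - n_out + 1):
        x = list(series[start:start + n_in])
        y = list(series[start + n_in:start + n_in + n_out])
        samples.append((x, y))
    return samples

def chronological_split(samples: List[Sample], train_frac: float = 0.8) -> Tuple[List[Sample], List[Sample]]:
    """Assign earlier windows to training and later ones to validation (no shuffling)."""
    cut = int(len(samples) * train_frac)
    return samples[:cut], samples[cut:]

# One day of 15-min data has 96 points: 24 inputs cover 6 h, 16 targets cover 4 h.
day = [float(t) for t in range(96)]
windows = make_windows(day)
train, val = chronological_split(windows)
print(len(windows), len(train), len(val))  # 57 45 12
```

A chronological split keeps validation windows strictly later in time than training windows, which avoids evaluating the model on periods it has effectively seen during training.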

All neural models were implemented in PyTorch and trained on the GPU. Optimization used the AdamW optimizer with a learning rate of 1 × 10⁻⁴ and a weight decay of 1 × 10⁻⁵, minimizing a Mean Squared Error (MSE) loss. To accelerate training, automatic mixed precision (AMP) was employed, with gradient scaling to prevent small gradients from underflowing in half precision. Training used a batch size of 128 for a maximum of 50 epochs. To prevent overfitting, early stopping with a patience of 10 epochs was applied to the validation loss. This was complemented by a ReduceLROnPlateau learning rate scheduler, which halved the learning rate (factor 0.5) after 3 consecutive epochs without improvement in validation RMSE.
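The interplay between early stopping (patience 10, on validation loss) and the plateau scheduler (factor 0.5, patience 3, on validation RMSE) can be traced with a small pure-Python controller. This is a simplified sketch, not PyTorch's implementation: the class name `TrainingMonitor` is hypothetical, and "improvement" is taken as a strict decrease, whereas `torch.optim.lr_scheduler.ReduceLROnPlateau(optimizer, mode='min', factor=0.5, patience=3)` additionally applies a small relative threshold (default 1e-4) and supports a cooldown. Like that scheduler, the sketch reduces the learning rate once the count of non-improving epochs exceeds the patience.

```python
from dataclasses import dataclass

@dataclass
class TrainingMonitor:
    """Hypothetical helper combining early stopping with a plateau LR schedule."""
    lr: float = 1e-4
    stop_patience: int = 10   # early stopping on validation loss
    lr_patience: int = 3      # plateau scheduler on validation RMSE
    lr_factor: float = 0.5
    best_loss: float = float("inf")
    best_rmse: float = float("inf")
    loss_bad: int = 0
    rmse_bad: int = 0

    def step(self, val_loss: float, val_rmse: float) -> bool:
        """Update counters after one epoch; return True if training should stop."""
        # Plateau scheduler: cut the LR once the number of consecutive
        # non-improving epochs exceeds the patience, then reset the counter.
        if val_rmse < self.best_rmse:
            self.best_rmse, self.rmse_bad = val_rmse, 0
        else:
            self.rmse_bad += 1
            if self.rmse_bad > self.lr_patience:
                self.lr *= self.lr_factor
                self.rmse_bad = 0
        # Early stopping: stop after stop_patience epochs without a lower loss.
        if val_loss < self.best_loss:
            self.best_loss, self.loss_bad = val_loss, 0
        else:
            self.loss_bad += 1
        return self.loss_bad >= self.stop_patience

monitor = TrainingMonitor()
# Two improving epochs, then a plateau in which neither metric improves.
history = [(0.50, 0.71), (0.40, 0.63)] + [(0.45, 0.70)] * 12
for epoch, (loss, rmse) in enumerate(history, start=1):
    if monitor.step(loss, rmse):
        print(f"early stop at epoch {epoch}, lr={monitor.lr:.2e}")  # epoch 12, lr=2.50e-05
        break
```

With these patiences, the learning rate is halved twice during a 10-epoch plateau before early stopping ends the run, which shows why the scheduler patience is set well below the early-stopping patience.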

The proposed Spectral-Attentive STGCN (SA-STGCN) is configured as follows. It uses a hidden dimension of 64 and consists of 3 stacked STGCN blocks. For feature augmentation, we employ a single-level Daubechies 4 (‘db4’) wavelet transform. Within each STGCN block, the temporal attention mechanism uses 4 attention heads and operates globally across the entire 24-step input sequence to capture long-range dependencies, with a dropout rate of 0.1 inside the attention module. Finally, the output projection network uses an intermediate layer of 512 units followed by dropout with a rate of 0.2 before generating the 16-step forecast. Model performance was quantified using four metrics: MAE and RMSE, both reported in megawatts (MW), the R² score, and a Day-Ahead Accuracy Rate computed relative to the farm’s rated capacity of 58 MW.
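The temporal attention configuration above (4 heads attending over all 24 input steps) can be illustrated with scaled dot-product self-attention along the time axis. In this pure-Python sketch, the learned query, key, and value projections are replaced by identity maps, and the hidden vector is simply split into equal per-head slices (64 / 4 = 16 channels per head in the stated configuration). These simplifications and the function names are assumptions for illustration, not the model's exact layer. Each row of a returned weight matrix is a softmax distribution over historical time steps, which is what makes the weights inspectable.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]

def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def temporal_self_attention(h: Matrix, n_heads: int) -> Tuple[Matrix, List[Matrix]]:
    """Multi-head self-attention over time steps.

    h: T x d hidden states (one row per time step); d must be divisible by n_heads.
    Returns the T x d output and one T x T attention-weight matrix per head.
    Identity Q/K/V projections are assumed for brevity.
    """
    T, d = len(h), len(h[0])
    if d % n_heads:
        raise ValueError("hidden size must be divisible by n_heads")
    dh = d // n_heads
    out = [[0.0] * d for _ in range(T)]
    all_weights: List[Matrix] = []
    for head in range(n_heads):
        cols = range(head * dh, (head + 1) * dh)
        q = [[row[c] for c in cols] for row in h]  # per-head slice (Q = K = V)
        weights: Matrix = []
        for t in range(T):
            scores = [sum(a * b for a, b in zip(q[t], q[s])) / math.sqrt(dh) for s in range(T)]
            w = softmax(scores)
            weights.append(w)
            for j, c in enumerate(cols):
                out[t][c] = sum(w[s] * q[s][j] for s in range(T))
        all_weights.append(weights)
    return out, all_weights

# Toy input: 24 time steps with 8 hidden channels (the paper uses 64).
h = [[math.sin(0.3 * t + c) for c in range(8)] for t in range(24)]
out, weights = temporal_self_attention(h, n_heads=4)
last = weights[0][-1]  # head 0, query = most recent step
print("most attended step:", max(range(24), key=lambda s: last[s]))
```

Because every query attends to all 24 steps, a distant precursor can receive a large weight directly, unlike a fixed-width temporal convolution kernel whose receptive field is set by its size.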

4.2. Evaluation Metrics

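In this research, we employ multiple evaluation metrics to comprehensively assess the performance of the prediction models. These metrics fall into two groups: error metrics and predictive accuracy metrics. The error metrics are as follows.

The mean absolute error (MAE) measures the average absolute difference between the actual values $y_i$ and the predicted values $\hat{y}_i$ over $n$ samples, providing an intuitive sense of overall prediction error:

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right|$$

The root mean squared error (RMSE) is similar but emphasizes larger errors due to the squaring operation:

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}$$

The coefficient of determination ($R^2$) quantifies how well the model explains the variance in the target variable, where $\bar{y}$ is the mean of the actual values:

$$R^2 = 1 - \frac{\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2}$$

Kendall's rank correlation coefficient ($\tau$) measures the ordinal agreement between the actual and predicted values:

$$\tau = \frac{2}{n(n-1)}\sum_{i=1}^{n-1}\sum_{j=i+1}^{n}\operatorname{sgn}\left(y_i - y_j\right)\operatorname{sgn}\left(\hat{y}_i - \hat{y}_j\right)$$

Here, $\operatorname{sgn}(\cdot)$ is the sign function, returning +1 if its argument is positive, −1 if negative, and 0 if zero, so each product indicates whether a pair of observations is concordant or discordant. Finally, mutual information (MI), denoted $I(Y;\hat{Y})$, quantifies the amount of information shared between the actual and predicted sequences:

$$I(Y;\hat{Y}) = \sum_{y}\sum_{\hat{y}} p(y,\hat{y})\log\frac{p(y,\hat{y})}{p(y)\,p(\hat{y})}$$

In this expression, $p(y,\hat{y})$ is the joint probability distribution of the actual and predicted values, and $p(y)$ and $p(\hat{y})$ are the corresponding marginal distributions. This metric captures both linear and nonlinear dependencies, making it particularly useful for assessing complex model behaviors. By applying these error and predictive accuracy metrics, we can comprehensively evaluate the performance of the proposed prediction models.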
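The metrics in this subsection can be computed with the short pure-Python sketch below. Several choices are assumptions rather than details given in the text: MI is estimated with a simple equal-width histogram (`bins=10` is arbitrary) using the natural logarithm, so it is reported in nats; the sign-based rank correlation is implemented as Kendall's τ in its O(n²) form without tie correction. The `accuracy_rate` helper is a hypothetical, commonly used capacity-normalized form, 1 − RMSE / C with C = 58 MW, included only because Section 4.1 reports an accuracy rate relative to rated capacity; the exact formula behind that metric is not given here.

```python
import math
from collections import Counter
from typing import List, Sequence

def mae(y: Sequence[float], yhat: Sequence[float]) -> float:
    return sum(abs(a - b) for a, b in zip(y, yhat)) / len(y)

def rmse(y: Sequence[float], yhat: Sequence[float]) -> float:
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(y, yhat)) / len(y))

def r2(y: Sequence[float], yhat: Sequence[float]) -> float:
    mean = sum(y) / len(y)
    ss_res = sum((a - b) ** 2 for a, b in zip(y, yhat))
    ss_tot = sum((a - mean) ** 2 for a in y)
    return 1.0 - ss_res / ss_tot

def sgn(v: float) -> int:
    return (v > 0) - (v < 0)

def kendall_tau(y: Sequence[float], yhat: Sequence[float]) -> float:
    """Average pairwise concordance, as in the sign-based formula (no tie correction)."""
    n = len(y)
    s = sum(sgn(y[i] - y[j]) * sgn(yhat[i] - yhat[j])
            for i in range(n) for j in range(i + 1, n))
    return 2.0 * s / (n * (n - 1))

def mutual_information(y: Sequence[float], yhat: Sequence[float], bins: int = 10) -> float:
    """Plug-in MI estimate (nats) from equal-width histograms."""
    def to_bins(v: Sequence[float]) -> List[int]:
        lo, hi = min(v), max(v)
        width = (hi - lo) / bins or 1.0  # guard against a constant series
        return [min(int((x - lo) / width), bins - 1) for x in v]
    by, bh = to_bins(y), to_bins(yhat)
    n = len(y)
    joint = Counter(zip(by, bh))
    py, ph = Counter(by), Counter(bh)
    # p(a,b) / (p(a) p(b)) = c * n / (count_a * count_b)
    return sum((c / n) * math.log(c * n / (py[a] * ph[b])) for (a, b), c in joint.items())

def accuracy_rate(y: Sequence[float], yhat: Sequence[float], capacity: float = 58.0) -> float:
    """Hypothetical capacity-normalized accuracy: 1 - RMSE / rated capacity."""
    return 1.0 - rmse(y, yhat) / capacity

actual = [10.0, 12.0, 15.0, 11.0, 9.0, 14.0]  # toy values in MW
pred = [11.0, 12.0, 14.0, 10.0, 9.0, 15.0]
print(f"MAE={mae(actual, pred):.3f} RMSE={rmse(actual, pred):.3f} R2={r2(actual, pred):.3f} "
      f"tau={kendall_tau(actual, pred):.3f} MI={mutual_information(actual, pred, bins=3):.3f} "
      f"acc={accuracy_rate(actual, pred):.3%}")
```

Histogram MI estimates depend on the bin count and sample size, so MI values are only comparable across models when the same estimator settings are used for all of them.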

4.3. Experimental Results

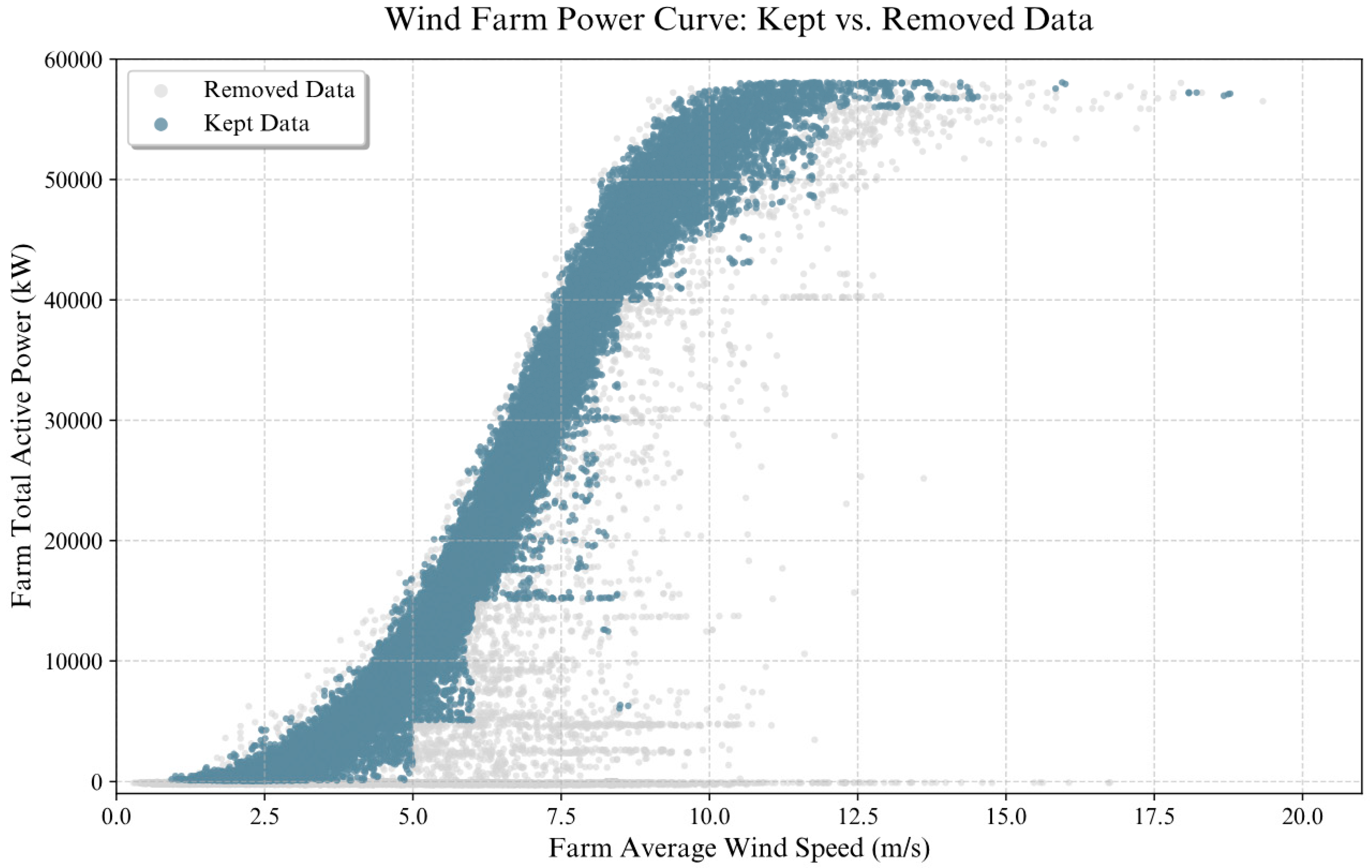

We evaluate 4-hour-ahead wind power forecasting (16 future steps) using 24 historical steps as input on a self-curated SCADA dataset. All models share the same data splits, training pipeline, and a conservative preprocessing protocol that flags curtailment, inefficiency, and shutdown periods and applies short-window forward/backward filling, which preserves autocorrelation without fabricating high-frequency content. We benchmark the proposed SA-STGCN against WAVELET_STGCN, Graph Attention Network (GAT), Spatio-Temporal Transformer (ST_TRANSFORMER), BASIC_TRANSFORMER, Attention LSTM (ATTN-LSTM), SIMPLE_LSTM, and Light Gradient Boosting Machine (LGBM). Table 1 reports the quantitative results, and Figure 7 visualizes them.
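The conservative gap-filling step of the preprocessing protocol can be sketched as follows. Samples flagged as curtailment, inefficiency, or shutdown are first masked; then only short gaps are filled, by carrying the last valid value forward and the next valid value backward for at most `limit` steps each, so long outages stay missing instead of being interpolated. This mirrors the idea of pandas' `ffill(limit=...)` followed by `bfill(limit=...)`. The function names, the boolean flag representation, and the `limit` value are hypothetical, since the text does not specify the window length.

```python
from typing import List, Optional, Sequence

def mask_flagged(values: Sequence[float], flags: Sequence[bool]) -> List[Optional[float]]:
    """Replace flagged samples (curtailment, inefficiency, shutdown) with None."""
    return [None if f else v for v, f in zip(values, flags)]

def fill_short_gaps(values: Sequence[Optional[float]], limit: int) -> List[Optional[float]]:
    """Forward-fill, then back-fill, at most `limit` steps into each gap."""
    out = list(values)
    last, run = None, 0
    for i, v in enumerate(values):            # forward pass
        if v is None:
            run += 1
            if last is not None and run <= limit:
                out[i] = last
        else:
            last, run = v, 0
    nxt, run = None, 0
    for i in range(len(values) - 1, -1, -1):  # backward pass on remaining gaps
        if values[i] is None:
            run += 1
            if out[i] is None and nxt is not None and run <= limit:
                out[i] = nxt
        else:
            nxt, run = values[i], 0
    return out

power = [20.1, 19.8, 0.0, 0.0, 0.0, 0.0, 21.5, 22.0]          # MW
flags = [False, False, True, True, True, True, False, False]  # e.g. a shutdown period
filled = fill_short_gaps(mask_flagged(power, flags), limit=1)
print(filled)  # [20.1, 19.8, 19.8, None, None, 21.5, 21.5, 22.0]
```

Leaving the interior of long gaps unfilled lets downstream window construction discard those samples rather than train on synthetic flat segments.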

4.4. Evaluation Results and Analysis

As illustrated in Figure 7 and Table 1, the comparison against the seven competing models reveals a clear advantage for the proposed SA-STGCN framework. It delivered the most accurate predictions, with the lowest errors (MAE 1.52 MW, RMSE 2.31 MW) and the highest goodness of fit and information content (R² 0.94, MI 1.61). Relative to the next-best model, WAVELET_STGCN, these results correspond to 12.6% and 14.8% reductions in MAE and RMSE, respectively, together with a smaller but still meaningful rise in R² from 0.93 to 0.94. Although the R² gain is numerically modest, it is accompanied by double-digit reductions in absolute error, indicating a substantive rather than cosmetic improvement. This suggests that coupling temporal attention with spatio-temporal graph convolution allows the model to capture structural dependencies and temporal dynamics jointly.

The advantage widens when SA-STGCN is compared with architectures that do not explicitly embed graph structure. Purely sequential models, whether Transformer-based or recurrent, fall noticeably behind. Against ST_TRANSFORMER, SA-STGCN reduces MAE and RMSE by roughly one-third, and against BASIC_TRANSFORMER the gap widens to nearly one-half, with R² rising from 0.80 to 0.94. The contrast is sharper still for recurrent variants: compared with SIMPLE_LSTM, errors fall by nearly 60%, while R² increases from 0.71 to 0.94. Models augmented with attention, whether graph attention (GAT) or attention-augmented recurrence (ATTN-LSTM), follow the same pattern: without a unified spatio-temporal treatment, they also trail SA-STGCN. Interestingly, the tree-based LGBM occupies an intermediate position. It performs noticeably better than the traditional sequence learners, with R² 0.88 and MI 1.41, yet lags behind the specialized graph architectures. This is unsurprising: gradient boosting captures nonlinear effects well, but because it operates on flat feature vectors, it has no built-in mechanism for encoding temporal ordering or the spatial interdependencies that graph neural networks model explicitly.

Taken together, the performance hierarchy is clear: methods that integrate spatial dependence with temporal evolution, exemplified by SA-STGCN and WAVELET_STGCN, consistently take the lead. A 0.01 gain in R², from 0.93 to 0.94, may look trivial in this near-saturated regime, but that view overlooks the accompanying double-digit reductions in MAE and RMSE. In practice, such reductions translate into tangible gains in reliability and robustness of the kind that can change operational decisions.

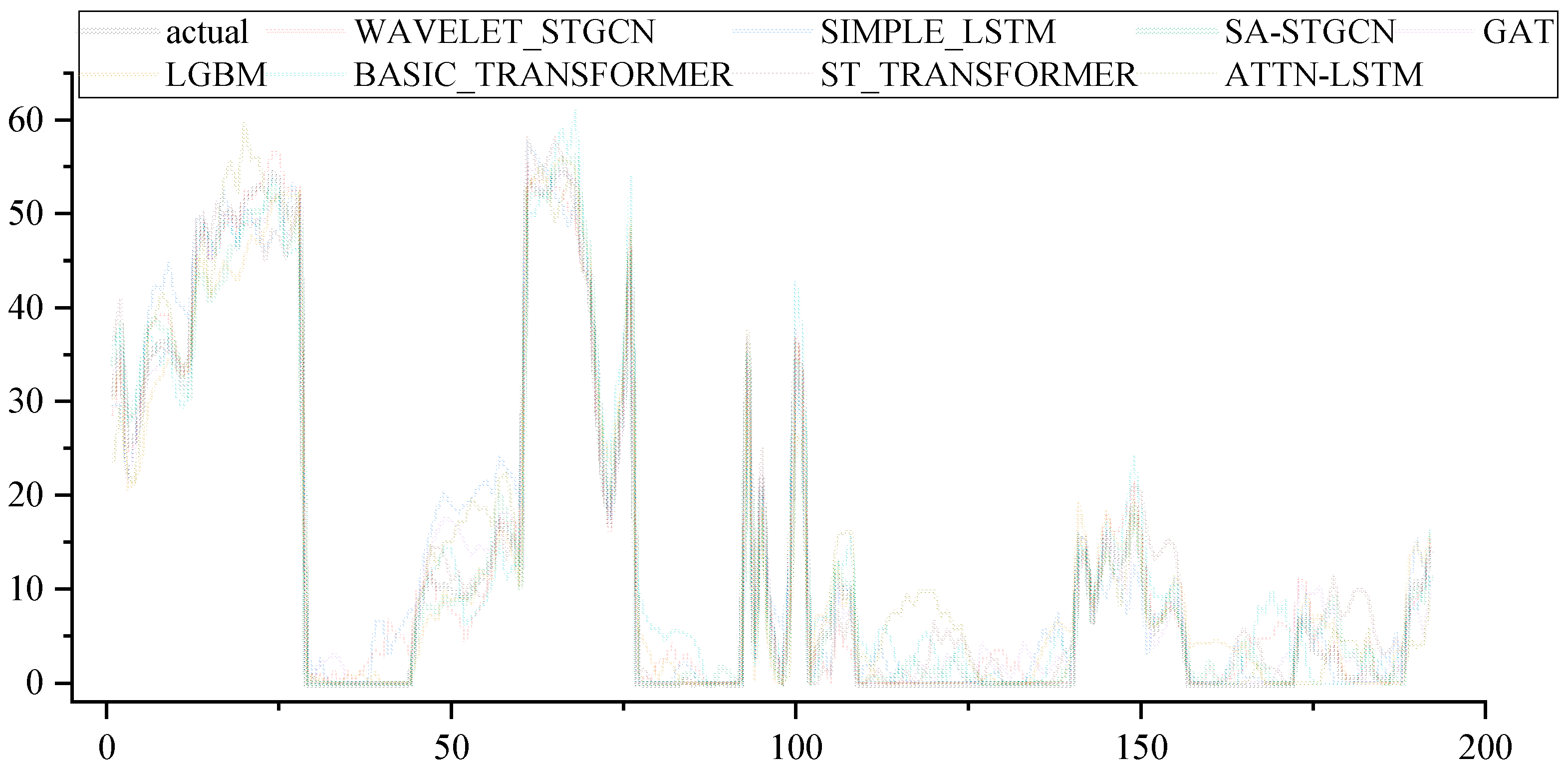

Figure 8 compares the predicted wind power outputs of the different models with the ground truth (black curve) over a representative period with sharp fluctuations. The proposed SA-STGCN model (green curve) achieves the closest alignment with the actual values, capturing both the rapid variations and the peak magnitudes of the power output. In contrast, the benchmark models exhibit noticeable deviations: some lag during steep ramps, while others underestimate peak values or track unstably in highly volatile intervals. This indicates that SA-STGCN models spatiotemporal dependencies more effectively and remains robust under highly dynamic conditions.

The computational cost analysis in Table 2 reveals a clear stratification of the models by resource demand. At the upper tier, ST_TRANSFORMER and SA-STGCN are the most resource-intensive, requiring over 1100 s of training and more than 15 GB of GPU memory, largely because of their attention mechanisms. At the opposite end, lightweight recurrent models such as SIMPLE_LSTM are far more efficient, with training times under 600 s and memory footprints of around 4 GB. Intermediate architectures (WAVELET_STGCN, GAT, BASIC_TRANSFORMER, and ATTN-LSTM) strike a balance, with moderate costs of 700–1000 s and 5–8 GB of memory. The inclusion of LGBM sharpens this contrast: with just 75 s of training, 1.5 s of inference, and peak memory under 1 GB, it marks the extreme low-cost end. While this efficiency makes it practical in resource-constrained environments, its lightweight profile also helps explain why it cannot rival graph-based deep models in capturing rich spatio-temporal dependencies.

4.5. Ablation Experiments

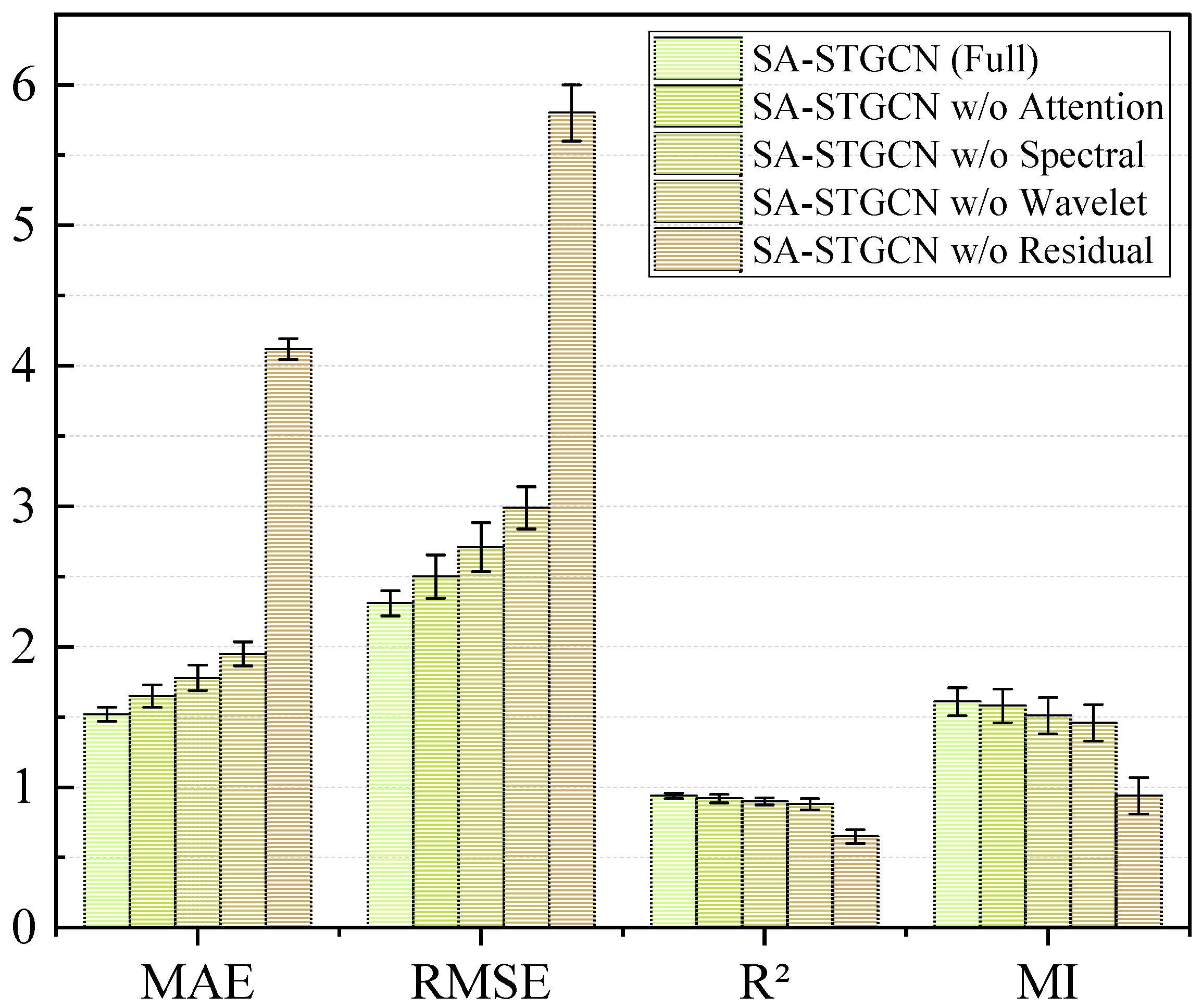

To rigorously validate the effectiveness and necessity of each key component of the proposed SA-STGCN model, we conducted a comprehensive ablation study, with the results presented in Table 3 and Figure 9. The study systematically deconstructs the model to quantify the contribution of each module to overall predictive performance. To ensure a fair and controlled comparison, all model variants were trained and evaluated under identical experimental conditions, including the same dataset, data preprocessing, and hyperparameter settings. The complete model, SA-STGCN (Full), serves as the reference, representing the performance achieved when all components work together. Against this reference, we evaluated several ablated versions. The ‘SA-STGCN w/o Attention’ variant removes the temporal self-attention mechanism to measure its contribution to identifying salient temporal features. The ‘SA-STGCN w/o Spectral’ variant excludes the spectral graph convolution layers to evaluate the contribution of graph-based spatial analysis. To test the role of multi-resolution temporal analysis, the ‘SA-STGCN w/o Wavelet’ variant omits the wavelet transform. Finally, to assess the role of residual learning in enabling a deeper architecture and facilitating gradient flow, the ‘SA-STGCN w/o Residual’ variant removes all residual connections. When each ablated configuration is compared against the full model, the performance degradation caused by removing a component quantifies that component’s contribution to the model’s overall performance.

Beyond just improving predictive accuracy, the components of SA-STGCN also significantly enhance the model’s interpretability. The temporal attention mechanism, for instance, highlights which past time steps have the greatest influence on the forecast, offering a transparent view of the key historical conditions that drive sudden ramps or sustained fluctuations in wind power. Simultaneously, the spectral graph convolution sheds light on the spatial dimension by capturing non-local correlations among distant wind turbines that are jointly affected by large-scale meteorological systems. Together, these components provide valuable insights into the temporal and spatial patterns underlying the model’s predictions.

The ablation study shows that all components of SA-STGCN (the attention mechanism, spectral graph convolution, wavelet transform, and residual connections) contribute to performance and work together to achieve the best results. The variant without residual connections (‘SA-STGCN w/o Residual’) exhibited the largest performance degradation, indicating that these connections are the most critical component of the architecture. Residual connections provide identity shortcut paths that keep gradients flowing during backpropagation, mitigating the vanishing gradient problem. Without them, even our 3-block model becomes considerably harder to optimize, leading to poor convergence and a sharp drop in predictive accuracy. Although less severe, the performance drops in the other variants confirm the value of the wavelet transform for multi-resolution analysis, of spectral graph convolution for capturing complex spatial correlations, and of the attention mechanism for focusing on salient temporal features. Overall, this analysis supports the design choices of the model.
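The gradient argument for residual connections can be checked with a toy scalar calculation. Assume, purely for illustration, that each of the 3 blocks is a scalar map whose local derivative is 0.1. Without shortcuts, the chain rule multiplies the block derivatives; with identity shortcuts, each block contributes 1 + 0.1, so the identity term keeps the end-to-end gradient from shrinking geometrically. The derivative value and the function name are hypothetical, and real blocks are matrix-valued, so this conveys only the mechanism.

```python
import math

def end_to_end_gradient(block_grad: float, n_blocks: int, residual: bool) -> float:
    """d(output)/d(input) through a chain of scalar blocks, by the chain rule.

    Plain block:    y = f(x)      -> dy/dx = f'(x)
    Residual block: y = x + f(x)  -> dy/dx = 1 + f'(x)
    """
    per_block = 1.0 + block_grad if residual else block_grad
    return math.prod([per_block] * n_blocks)

plain = end_to_end_gradient(0.1, n_blocks=3, residual=False)
res = end_to_end_gradient(0.1, n_blocks=3, residual=True)
print(f"without residuals: {plain:.4f}, with residuals: {res:.4f}")  # 0.0010 vs 1.3310
```

Even at a depth of three blocks, the plain chain attenuates the gradient by three orders of magnitude in this toy setting, which is consistent with the residual-free variant being the hardest to optimize.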

5. Conclusions

This paper presents a novel deep learning architecture, the Spectral Attentive Spatio-Temporal Graph Convolutional Network (SA-STGCN), designed for high-precision wind power forecasting. The model integrates Wavelet Transform, Temporal Attention, and Spectral Graph Convolutions within a unified framework to effectively capture complex spatiotemporal dependencies in wind power systems.

Experimental validation on the collected wind farm dataset demonstrates the model’s superior performance, with a Mean Absolute Error (MAE) of 1.52 MW and a Root Mean Squared Error (RMSE) of 2.31 MW. These results support the model’s capacity for reliable and precise forecasting in real-world wind power prediction scenarios.

The effectiveness of SA-STGCN stems from its multi-component architecture. The wavelet transform decomposes input signals into frequency sub-bands, enabling simultaneous capture of long-term trends and short-term fluctuations. The Temporal Attention mechanism adaptively weights historical time steps, focusing computational resources on the most predictive temporal patterns. The Spectral Graph Convolution operates in the graph Fourier domain to model global spatial correlations across the turbine network, transcending the locality constraints of traditional message-passing approaches.

Future research directions include developing dynamic graph structures that incorporate time-varying physical factors such as wind direction, improving computational efficiency through architectural optimization, and extending the framework to long-term and probabilistic forecasting. In addition, the framework should be tested on other renewable sources such as solar power, where precise forecasting is equally critical for resource management [32]. These advances would further improve forecasting accuracy and support more effective grid management strategies for renewable energy integration.