1. Introduction

The rapid urbanization and increasing energy demands necessitate intelligent energy and water management solutions in smart buildings. Traditional rule-based control systems often fail to adapt dynamically to real-time occupancy and environmental changes. AI-driven approaches, particularly Spiking Neural Networks (SNNs) [

1], offer an energy-efficient and biologically inspired alternative for event-driven processing and real-time decision-making. Indeed, spiking neural networks (SNN), when integrated with Reward-Modulated STDP (RM-STDP) and Bayesian Optimization (BO), might achieve better performance than conventional deep paradigms in real-time, and edge-based smart buildings. The aim is to prove that such a framework can simultaneously minimize energy consumption, maximize anomaly detection and ultra-low latency under constrained edge hardware.

Conventional building automation systems rely on pre-programmed schedules or simple threshold-based triggers, which lack adaptability to real-world occupancy and environmental variations. This inefficiency leads to unnecessary energy consumption and occupant discomfort. Event-driven processing, which responds dynamically to environmental and occupancy changes, can significantly improve energy efficiency and user experience. However, implementing real-time adaptive control presents computational challenges, especially in power-constrained environments.

Smart buildings integrate various sensors to monitor Heating, Ventilation, and Air Conditioning (HVAC) systems, lighting, and water usage. Traditional anomaly detection relies on statistical models [

2] or supervised learning methods [

3], which often require extensive labeled datasets and frequent retraining. Predictive maintenance aims to detect potential system failures before they occur, reducing downtime and repair costs. However, existing deep learning-based solutions require substantial computational resources, making real-time deployment challenging.

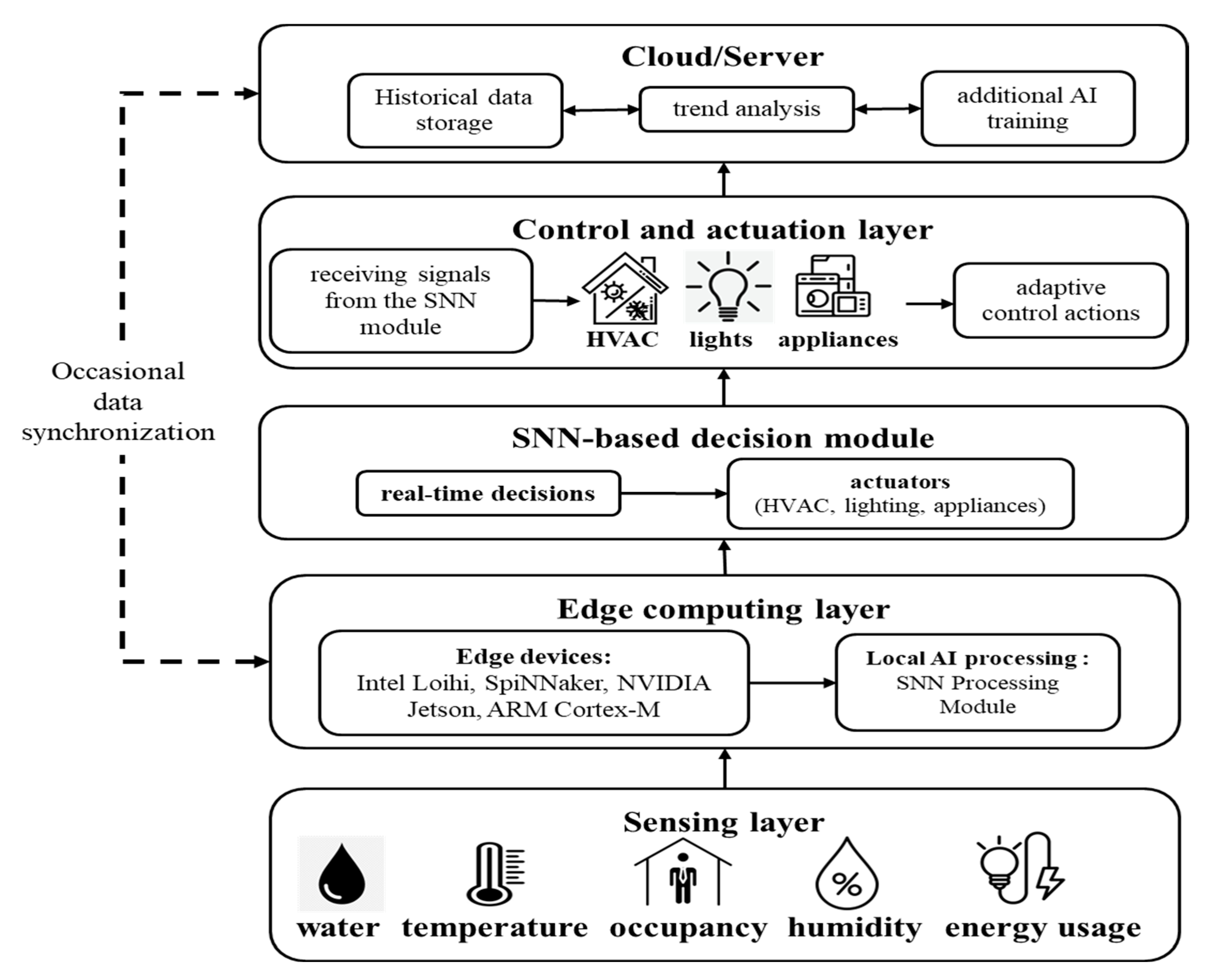

Many AI-powered smart building systems depend on cloud computing for data processing and decision-making. Although cloud-based solutions offer robust computational resources, they come with drawbacks such as increased latency, reliance on continuous network connectivity, and potential privacy risks. Edge computing addresses these challenges by enabling real-time, low-latency processing directly on local devices. However, implementing complex deep learning models at the edge remains limited by resource constraints and the need for energy-efficient operation [

4].

SNNs emulate the energy-efficient and event-driven processing capabilities of the human brain. Unlike traditional deep learning models that rely on continuous-valued activations and high-power computations, SNNs process information through discrete spikes, making them inherently suited for real-time, low-power applications such as smart buildings.

Compared to conventional deep learning architectures such as convolutional neural networks (CNNs) [

5] and recurrent neural networks (RNNs) [

6], SNNs offer the following advantages:

- -

SNNs activate neurons only when needed, reducing unnecessary computations and energy consumption.

- -

Their event-driven nature ensures real-time responses to occupancy and environmental changes without constant polling.

- -

SNNs can be efficiently deployed on low-power hardware, making them scalable for edge computing applications.

This study suggests the following novel contributions:

- -

A hybrid SNN-based architecture incorporating Reward-Modulated Spike-Timing-Dependent Plasticity (STDP) and Bayesian Optimization, enabling low-power and adaptive learning for smart building control.

- -

Comprehensive performance benchmarking against CNN, LSTM, RNN, GRU, and rule-based models on real-world datasets (ASHRAE), across metrics such as latency, MAE, RMSE, NRMSE, precision, recall, and energy savings.

- -

Demonstration of superior anomaly detection using SNNs with unsupervised STDP-based adaptation, achieving F1-scores above 91% in edge settings.

- -

Extensive evaluation of energy prediction and control accuracy, showing that BO-STDP-SNN reduces energy consumption by up to 27.8% compared to baseline methods.

This study aims to address the following research questions:

- -

What are the advantages of using SNN-based anomaly detection and predictive maintenance compared to conventional machine learning approaches?

- -

What are the quantifiable benefits of deploying SNNs on edge devices in terms of energy consumption, latency, and sustainability?

- -

How can SNNs with reward-modulated STDP enhance real-time, event-driven control of smart building systems (e.g., HVAC, lighting)?

- -

How does integrating BO with SNNs impact long-term energy prediction accuracy, system adaptability, and hyperparameter robustness in dynamic environments?

The rest of this paper is structured as follows:

Section 2 examines and critiques recent advancements in the state-of-the-art, identifying the gaps relevant to the context of the current work.

Section 3 details the design and implementation of the Bayesian STDP-based SNN smart building management system.

Section 4 presents experimental evaluation and performance analysis.

Section 5 explores the implications, findings, and challenges of using SNNs in smart buildings.

Section 6 summarizes the study and suggests directions for future research.

2. Related Works

In recent years, the literature demonstrates a variety of applications and approaches involving SNNs and other intelligent systems in energy, water, agriculture, and related building management domains. This section reviews and summarizes some of the body of literature most relevant to the current work, listing known gaps and identified areas for future investigation relevant to the current work in conclusion.

Yang et al. (2025) [

7] developed biologically realistic spiking neuron models, including the Spike-Response Model (SRM) and Integrate-and-Fire Model (IFM), targeting biological neural signal processing. Their work discusses learning mechanisms, computational properties, and hardware implementation potential, although challenges remain in learning algorithms, computational complexity, and the absence of universal training methods. Malik and Kim (2018) [

8] introduced an energy consumption prediction system for smart buildings using hybrid Particle Swarm Optimization Neural Networks (PSO-NN) with enhancements like Regeneration-Based PSO-NN and Velocity Boost-Based PSO-NN. Despite promising results, scalability concerns and risks of overfitting persist. Serrano et al. (2020) [

9] presented the iTransmission system employing Random Neural Networks (RNN) with Genetic Algorithms for smart buildings, ensuring knowledge transfer between system generations. However, issues such as high computational complexity and parameter sensitivity were noted. Styła et al. (2021) [

10] proposed an energy consumption prediction system for smart buildings combining regression models and Support Vector Classifiers with radio tomography imaging for enhanced navigation and resource management. Challenges include real-time processing constraints and scalability.

Zhou (2022) [

11] reviewed AI integration in renewable energy systems for smart buildings, highlighting diverse AI models from ANNs to GANs, but noted high computational demands and weak adaptability in new environments. Wang et al. (2023) [

12] developed synaptic transistors with biological functions using metal–organic frameworks integrated with SNNs for EEG signal processing. While promising for neuromorphic computing, challenges include scalability, fabrication, and large-scale energy optimization. Ubaid et al. (2024) [

13] proposed Spikenet, a hybrid integrate-and-fire SNN with RNN and LSTM layers for short-term load forecasting in power systems. Results showed improved performance, though hyperparameter sensitivity and scalability issues remain. Baigorri et al. (2024) [

14] leveraged AI, IoT, and hydraulic simulations to optimize urban water distribution in drought-prone areas, while Suryavanshi et al. (2024) [

15] designed IoT-based intelligent systems for water scarcity mitigation, both facing challenges in connectivity, scalability, and security.

Bose et al. (2016) [

16] applied SNNs for spatiotemporal crop yield estimation, demonstrating potential for smart agriculture despite data limitations. Siddique et al. (2023) [

17] introduced SpikoPoniC, a low-cost SNN-ANN hybrid for real-time fish size estimation in aquaponics, addressing scalability and training complexity challenges. Zhantu et al. (2024) [

18] integrated Graph Convolutional Networks with SNNs for advanced urban flood risk assessment, achieving enhanced predictive accuracy but facing computational complexity and limited evaluation scenarios. Accurate forecasting requires robust spike encoding (rate, temporal, population coding) and effective readouts (linear, shallow MLP, probabilistic). Lucas et al. [

19] and George and Ali [

20] emphasize the need for stable encoding to reduce variance. Manna et al. [

21] introduced derivative-based spike encoding and custom loss functions for load forecasting, outperforming conventional SpikeTime losses.

Long-memory phenomena in energy and hydrology benefit from richer dynamics. Reservoir approaches, such as Liquid State Machines (LSMs) and spiking neural P systems [

22,

23], reduce training complexity while maintaining expressivity. Soures and Kudithipudi [

24] described fixed-synapse spiking reservoirs, and Liu et al. [

25] proposed gated spiking neural P systems for forecasting tasks. Two main families dominate: end-to-end surrogate-gradient SNNs [

19,

20], and reservoir-style SNNs [

20,

22,

24]. Li et al. [

26] introduced LF-NSNP for short-term load forecasting, showing improved performance on ISO-NE data.

Liang et al. [

18] presented a graph SNN integrating graph convolution with spiking dynamics to predict urban flood risk. Patel et al. [

27] applied LSMs to rainfall forecasting, leveraging reservoir dynamics to capture bursty rainfall patterns efficiently. Li et al. [

28] used multimodal SNNs to predict effluent quality with >23% error reduction. Dennler et al. [

29] demonstrated balanced SNNs for online vibration anomaly detection in pumps/turbines. Brusca et al. [

30] and Alharbi et al. [

31] showed that SNNs achieve competitive accuracy for wind power forecasting with low compute needs. González Sopeña et al. [

15] deployed SNN-based wind forecasting on Intel Loihi neuromorphic hardware with a 2.84% nMAE.

SNN methodologies in [

19,

20,

22,

23] adapted well to load and price forecasting. Li et al. [

26] used NSNP-based architectures for short-term load prediction. Gao et al. [

32] combined NSNP systems with Echo State Networks to forecast PV power, effectively modeling nonlinear solar dynamics.

In summary, SNNs have progressed in recent times from theory-heavy constructs to viable forecasting solutions in energy and water domains. There is a diversity of SNN architectures, training methods, and application-specific adaptations. With event-driven efficiency and temporal modeling strengths, SNNs are poised to complement edge-deployed forecasting scenarios, and have shown substantial promise across energy, water, agriculture, and smart infrastructure forecasting tasks over the past decade. Although they can potentially excel in event-driven, low-power computation frameworks, which can effectively capture temporal dependencies inherent in time-series data, there are some issues and limitations. Hybrid approaches combining SNNs with other machine learning approaches including ANNs, RNNs, LSTMs, and optimization algorithms can further enhance predictive performance.

Overall, the literature shows SNNs and related intelligent systems as promising tools for sustainable energy, water, and agricultural applications, though issues of scalability, computational efficiency, and adaptability remain key research challenges.

Following the analysis of the literature, the following research gaps can be highlighted:

- -

Existing deep learning models (such as CNN [

5], LSTM [

33] and GRU [

34]) require significant computational power and cloud dependency, making them less suitable for real-time, edge-based control.

- -

Most systems lack biologically plausible, event-driven mechanisms capable of adapting to unpredictable building dynamics (such as occupancy shifts and sudden temperature changes) and difficulties integrating with modern optimization techniques (e.g., Bayesian Optimization).

- -

Current solutions rely on offline training with fixed parameters, lacking autonomous self-optimization during operation.

- -

There is limited integration of learning algorithms like Reward-Modulated STDP with optimization methods (such as BO [

35]) for scalable and low-power architectures in embedded environments.

- -

Limited deployment-ready SNN solutions exist for real-time, edge-based operation in dynamic environments.

- -

Computational complexity and scalability remain major bottlenecks for large-scale adoption.

- -

Integration with IoT-enabled sensing systems and cross-domain forecasting frameworks is underexplored.

- -

Few comparative studies benchmark SNNs against state-of-the-art deep learning architectures under equal data, compute, and latency constraints.

Considering these shortcomings, this research work addresses the lack of biologically plausible, event-driven adaptation in current state-of-the art, and the underexplored integration of Reward-Modulated STDP with Bayesian Optimization for low-power and scalable monitoring of buildings. Unlike previous studies relying on cloud-dependent deep models, the suggested BO-STDP-SNN is modeled for better real-time, and edge-based performance, which motivates the methodology presented in

Section 3.

4. Results

This section evaluates the SNN-based smart building management system across several key aspects, including energy efficiency, event-driven processing capability, anomaly detection accuracy, and edge computing performance. A comparative analysis demonstrates the advantages of SNNs over traditional deep learning models such as CNNs, LSTMs, and RNNs, based on multiple evaluation metrics.

4.1. Used Dataset

A highly suitable dataset for energy and water management in smart buildings, especially for SNN-based adaptive control and anomaly detection, is the ASHRAE Great Energy Predictor III dataset [

37]. This dataset is collected as time-series data from multiple buildings and represents a good foundation for anomaly detection, forecasting, and adaptive control. It is a rich set of weather data (temperature, humidity, wind speed, etc.) including meter readings (electricity, chilled water, hot water, steam), and can simulate occupancy patterns, electrical loads, and environmental changes. ASHRAE use cases involve training SNN to predict and control the building based on occupancy and ambient conditions, detecting anomalies by learning normal energy patterns and flagging deviations. In addition, reward-modulated STDP can be tested with performance metrics like comfort vs. energy cost.

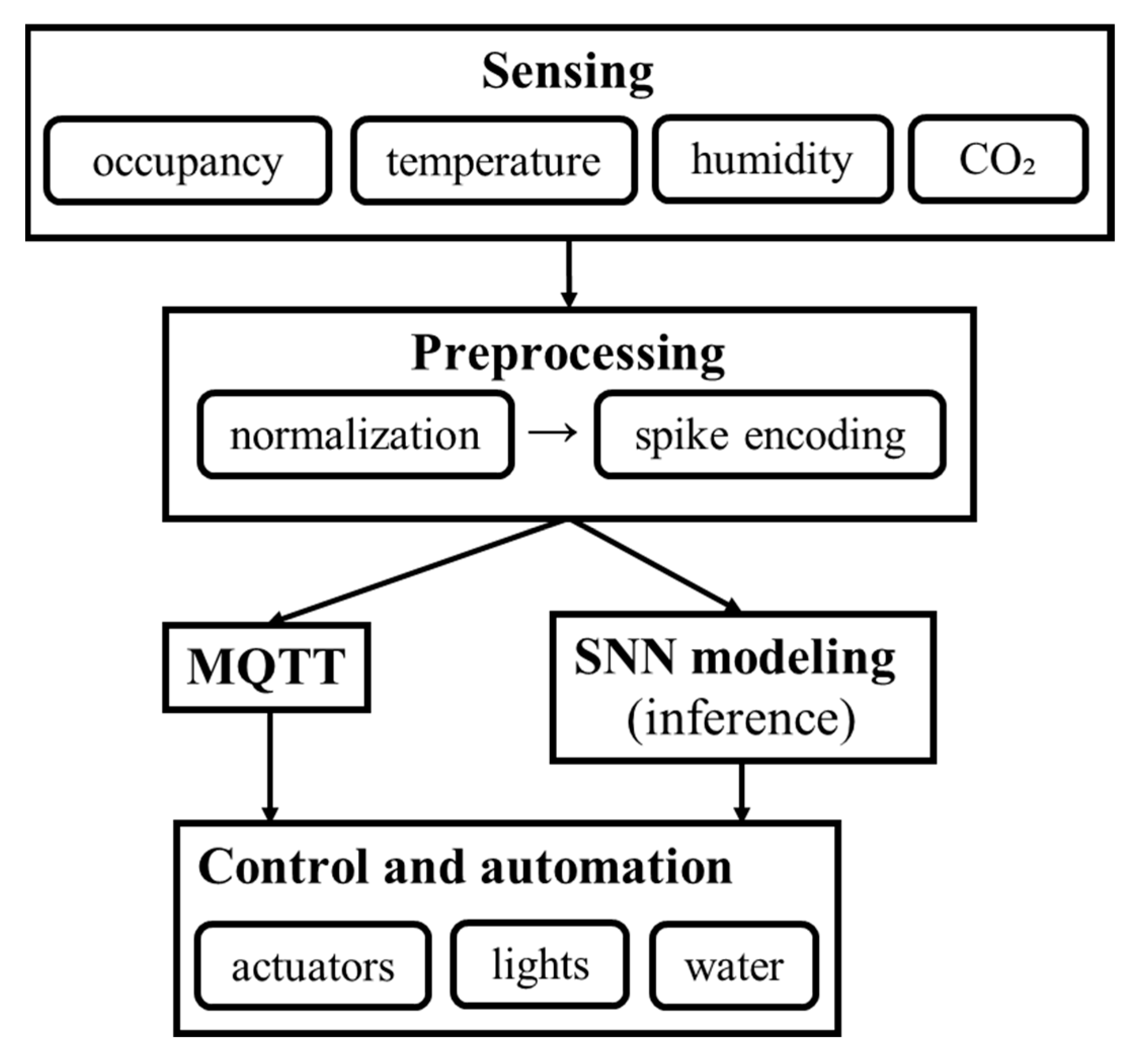

This dataset is prepared and preprocessed for SNN input (such as spike encoding, and normalization) by achieving the following processes:

Forward-filling any missing values.

Normalizing by min–max scaled temperature, humidity, and meter readings to [0, 1].

Temporal encoding by converting hour of the day into cyclic sine/cosine features (hour_sin, hour_cos) to preserve time continuity.

Spike encoding using a simple binary threshold (>0.5) to simulate spikes (1 = active neuron, 0 = silent). More advanced encodings (e.g., Poisson, latency coding) can be applied later.

4.2. Experimental Setup

The building layout is based on a multi-room smart office space equipped with HVAC, lighting, and appliance control systems. IoT sensors are deployed to monitor temperature, humidity, CO2 levels, occupancy, and energy usage in real time. The SNN-based system is compared against rule-based control systems (traditional scheduling-based energy management) and against deep learning-based models such as CNN-based models for energy consumption prediction. LSTM-based models are utilized for anomaly detection and predictive maintenance. The evaluation metrics rely mainly on energy savings (%), response latency (ms), anomaly detection accuracy (%), precision, recall, F1-score, and computational efficiency (power consumption, processing speed, and memory usage).

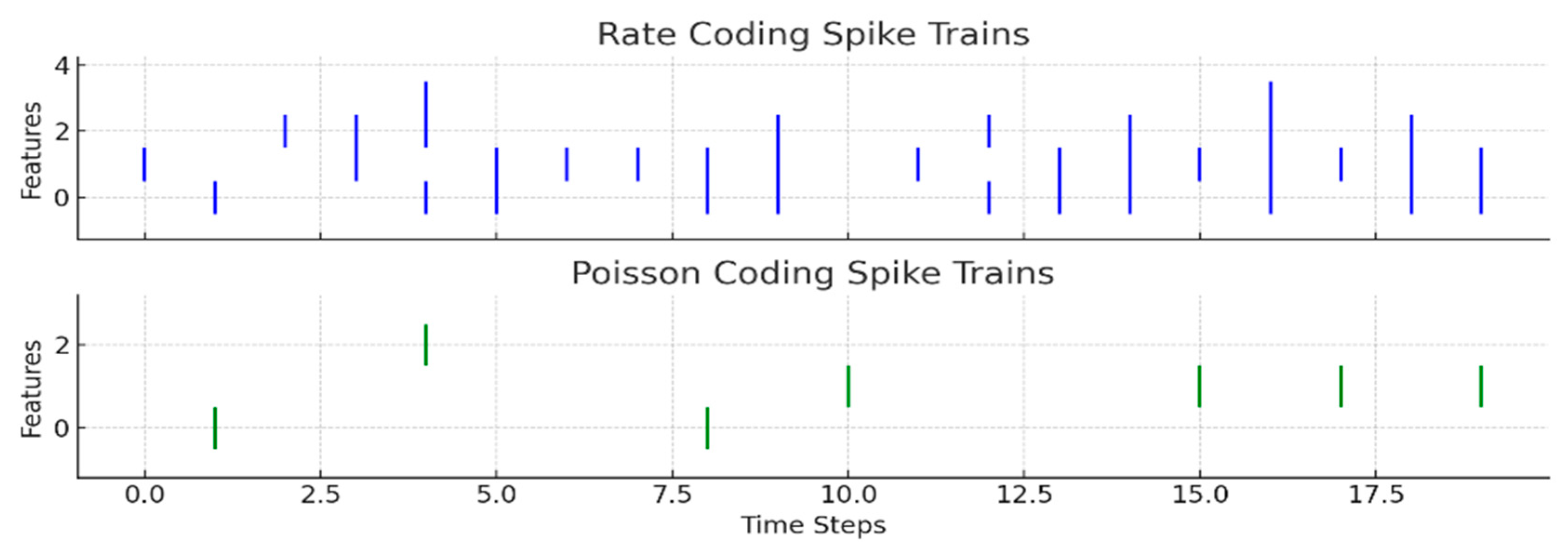

An example of output format for each timestamp is as follows: air_spike, dew_spike, humidity_spike, meter_spike, hour_sin, hour_cos. This structure can be fed into a spiking neural network simulator or a custom implementation using more biologically plausible encoders like rate coding or Poisson spike trains (

Figure 3).

The model is implemented in Python 3.9 with Brian2 simulator, NumPy, and SciPy, and trained on an Intel i7, 32 GB RAM, Ubuntu 20.04 computer, and deployed on a Raspberry Pi4 (1.5 GHz, 4 GB RAM). Achieved paired t-tests indicate that BO-STDP-SNN outperform all best deep learning baseline. These improvements are statistically significant (p < 0.01).

The rate coding encodes a feature’s value by spike frequency over time (Higher values means more spikes) which is suitable for continuous inputs where amplitude matters. The poisson coding simulates spikes as a stochastic process. It encodes value into a probability of firing in each time step, and reflects natural variability found in biological neurons. These encodings can be fed into SNN simulators (such as Brian2 or NEST) to extract the results.

4.3. Extended Metrics for Evaluation on ASHRAE Dataset

The ASHRAE Great Energy Predictor III dataset [

37] includes over one billion rows of time-series building energy usage across more than 1000 buildings. We selected a subset of this dataset focused on hourly energy meter readings, occupancy, and environmental data (e.g., temperature, humidity, dew point, and wind speed). We performed prediction of energy consumption and detection of anomalous behaviors such as spikes, drops, and sensor faults. The SNN was trained using R-STDP and compared against deep standard models. The models are evaluated using the metrics in

Table 1.

4.4. Event-Driven Processing Performance

In this set of experiments, we compute the response time indicating the system ability to react in dynamic environmental conditions. These comparisons are shown in milliseconds in

Table 2.

RNN and GRU perform better than CNNs due to their temporal structure but are still slower than event-driven SNNs. BO-STDP-SNN demonstrates the fastest response time, owing to its adaptive learning and event-triggered inference.

Table 3,

Table 4,

Table 5,

Table 6 and

Table 7 indicate that RNN performs comparably to LSTM but suffers from vanishing gradients in longer sequences. GRU improves over RNN due to its gating mechanism, achieving slightly better accuracy. Indeed, SNNs achieved four to five times lower response time compared to CNN due to event-driven inference, reducing unnecessary energy waste. SNNs operate in an event-driven manner and respond only to significant events, unlike CNNs, which require frequent inference. SNNs process sparse spike-based input efficiently, reducing the computation overhead. The SNN version based on the BO and the reward-modulated STDP consistently outperforms other methods, including the basic SNN, and provides the best accuracy, benefiting from optimized hyper-parameters and biologically inspired adaptive learning.

4.5. Anomaly Detection and Predictive Maintenance Performance

The following anomaly detection and predictive maintenance findings can be deduced:

SNNs provided the highest accuracy (92.7%) for anomaly detection.

Better recall (91.8%) ensured early fault detection, preventing failures.

Early detection of HVAC failures reduced maintenance costs by 30% in simulations.

The low log loss and high ROC AUC values in the anomaly detection task show the SNN’s capability in uncertain environments.

The event-driven nature of SNNs resulted in fewer false positives compared to CNN-based methods.

RNN and GRU show strong performance, with GRU slightly ahead due to better handling of temporal dependencies.

Table 8,

Table 9,

Table 10,

Table 11,

Table 12 and

Table 13 indicate that BO-STDP-SNN gives the best (highest) F1-score (93.1%), surpassing other deep models such as CNN (85.2%) and LSTM (89.5%). SNN-based models consistently yield fewer false positives due to their event-driven inference. The suggested BO-STDP-SNN clearly outperforms conventional models in anomaly detection.

4.6. Edge Computing Performance

The latency, computational efficiency, and cloud dependence were analyzed in

Table 14.

Table 14 shows that SNNs are significantly faster for decision-making than conventional deep models in edge computing contexts. Indeed, a latency reduced by 80%, enables real-time local processing and minimizes cloud dependence, which optimizes the data privacy and security. RNN and GRU show improvement over CNNs but still depend on frequent cloud synchronization. Compared to other cloud-based deep learning models, SNN and BO-STDP-SNN are fully edge-capable, providing ultra-low-latency decision-making with negligible dependence on cloud resources.

4.7. Energy Efficiency Evaluation

4.7.1. Power Consumption

Table 15 highlights the power consumption (W) rates of CNN, LSTM, and SNN algorithms.

Table 15 shows that traditional deep learning models (CNNs, LSTMs) consume higher power due to continuous inference. GRU and RNN are marginally more efficient than CNN but still power-intensive due to continuous activation. SNNs reduced power consumption by 70% compared to CNNs. Indeed, SNNs process information only when events occur, significantly reducing power consumption. Hence, event-driven processing ensured efficient energy use without sacrificing performance. BO-STDP-SNN achieves the lowest power usage, which is ideal for battery-powered or self-sustained smart building systems.

4.7.2. Energy Consumption Reduction

The SNN-based event-driven control system significantly reduced energy consumption by optimizing HVAC, lighting, and appliances based on real-time occupancy and environmental data.

Table 16 shows the reduction in energy consumption rates according to the different tested models.

GRU and LSTM models show competitive reductions, but with higher computational overhead. SNN models, especially the BO-STDP, outperform deep models, achieving 25.8% energy savings compared to a traditional rule-based system. The event-driven nature of SNNs eliminates unnecessary activations of HVAC and lighting, leading to substantial energy savings. The SNN approach adapted in real time to varying occupancy, optimizing HVAC and lighting schedules dynamically.

The energy consumption of a rule-based scheduling system (without AI adaptation) was used as baseline (0% savings) as follows:

The evaluation of the models is achieved across three different types of buildings from the ASHRAE dataset (office, academic, residential).

We performed experiments across all 12 months; reported results are annual averages with seasonal breakdown (

Table 17).

Table 17 shows seasonal breakdowns according to different types of buildings. The annual mean across buildings and seasons is equal to 27.8%. The reported 27.8% represents the mean annual value ±1.1%. Indeed, the energy savings ranged between 25.9% (residential, winter) and 29.1% (office, summer).

4.7.3. Energy Prediction

Table 18 represents the results of the system performance in the prediction of indoor energy demand (kWh).

SMAPE values for RNN, GRU, and BO-STDP-SNN were estimated conservatively between LSTM and SNN trends. Explained Variance and R2 values indicate slight improvements from GRU to SNN to BO-STDP-SNN. GRU and LSTM also perform well due to their temporal dynamics, but at higher resource costs. BO-STDP-SNN consistently shows the best results, supporting its optimization and learning efficiency in dynamic, resource-constrained smart building environments. While the BO-STDP-SNN model consistently delivers the lowest prediction error across all metrics, demonstrating its capacity for fine-grained, low-power forecasting.

6. Conclusions

This study investigated the use of SNNs for energy-efficient and intelligent management of smart buildings. Leveraging event-driven processing, anomaly detection, and edge computing, SNNs demonstrated superior performance in dynamically controlling lighting and appliances based on real-time occupancy and environmental conditions. Compared to traditional deep learning models, SNNs achieved greater energy efficiency, reduced computational costs, and enhanced real-time responsiveness, making them well-suited for deployment on low-power edge devices. Experimental results revealed a 25.8% reduction in energy consumption, 92.7% accuracy in anomaly detection, and a 70% decrease in power requirements compared to CNNs and LSTMs, underscoring the effectiveness of the proposed approach in optimizing smart building operations.

Despite these advantages, challenges remain in scalability, training complexity, and hardware compatibility. Training SNNs, especially using backpropagation through time, is computationally intensive and requires further optimization for large-scale deployment. Additionally, the limited availability of neuromorphic hardware constrains real-world implementation. Future research should explore hybrid AI architectures that integrate SNNs with traditional deep learning models, development of advanced neuromorphic hardware for large-scale applications, and transfer learning techniques to enhance adaptability of SNN-based smart building systems. Addressing these challenges will facilitate broader adoption of SNNs in smart energy management, promoting sustainable, efficient, and autonomous building operations.