1. Introduction

The global energy landscape is undergoing a significant transformation, driven by the urgent need to mitigate climate change and transition to sustainable energy systems. Renewable energy sources, such as solar photovoltaics and wind turbines, are increasingly being integrated into smart grids to reduce carbon emissions and dependence on fossil fuels. However, the intermittent nature of these resources poses challenges for grid reliability and stability. At the same time, the growing digitalization of the grid raises cybersecurity concerns, exposing it to vulnerabilities such as False Data Injection Attacks (FDIA) and Denial of Service (DoS) attacks.

Artificial intelligence (AI) and machine learning (ML) are increasingly central to improving the functionality and adaptability of smart grids. This paper briefly describes advanced models such as the Long Short-Term Memory (LSTM) network, the Random Forest (RF) model, and the autoencoder, among others, which have been applied to demand prediction, pattern detection, and anomaly detection in energy systems. Specifically, LSTMs and Gated Recurrent Units (GRUs) are designed to mitigate the vanishing gradient problem, which supports their application in smart grids for tasks such as load-profile analysis and energy-routing optimization. Moreover, Self-Organizing Maps (SOMs) can be employed to identify anomalies in consumer-load patterns and irregular sensor records, which may indicate equipment defects or FDIA-related cyberattacks. These AI-based technologies enhance grid intelligence and adaptability, enabling real-time decision-making and effective use of resources to meet dynamic energy requirements.

In addition, the increasing digitalization of smart grids presents cybersecurity challenges: smart meters (SMs), Phasor Measurement Units (PMUs), and Remote Terminal Units (RTUs) are vulnerable to cyber intrusions that target their control. In this regard, this paper identifies and discusses advanced solutions for the rapid detection of and response to FDIA and DoS attacks in power-generation systems. Examples include the Cluster Partition–Fuzzy Broad Learning System (CP-FBLS) and multimodal deep learning. These methods exploit neural network topologies and decentralized control while maintaining effective, high-performance resource management. This review also addresses the threat posed by Advanced Persistent Threats (APTs), which can cripple grid functionality and cause cascading failures. The paper examines both these frameworks and the broader effects of integrating grid-level architectures with motion-control strategies that mitigate the degradation of resilient consensus. On that basis, it argues that secure, locally resilient architectures are critical to safeguarding energy infrastructure against emerging cyberattacks.

Managing renewable energy intermittency places significant demands on energy-storage systems (ESSs) and calls for comprehensive policy frameworks to support the sustainability of power systems. The paper also discusses hybrid energy-storage systems (HESSs), which combine mechanical, electrochemical, and thermal storage technologies to improve grid stability and efficiency. The reviewed co-optimization models reduced transmission expansion requirements by nearly 10%, thereby cutting infrastructure costs and enhancing grid reliability. Moreover, the review highlights the role of policy instruments in overcoming political opposition, financial limitations, and barriers to social acceptance. By combining econometric modeling, policy studies, and AI-driven optimization methods, the work offers a comprehensive framework for developing scalable, secure, and sustainable energy chains. Such chains should be capable of meeting growing global energy demand while ensuring environmental protection and system stability.

Smart-grid technologies such as energy management systems (EMSs) and Advanced Metering Infrastructure (AMI) further enhance the efficiency of energy networks. An EMS provides real-time visibility, control, and optimization of grid operations. AMI enables demand-side management based on consumption profiles derived in real time from metering data. The paper also discusses energy routing, covering AI-based solutions and multi-agent systems (MASs) that enable decentralized control and peer-to-peer scheduling to enhance resilience. Nonetheless, these technologies face challenges such as heavy computational burdens and scalability limitations. This underscores the need for future research into hybrid protocols that integrate metaheuristics, AI, and blockchain to create secure and flexible energy networks. By examining these developments in detail, this study contributes to the ongoing discourse on power system transformation, with the aim of achieving sustainability, security, and efficiency in response to evolving energy needs.

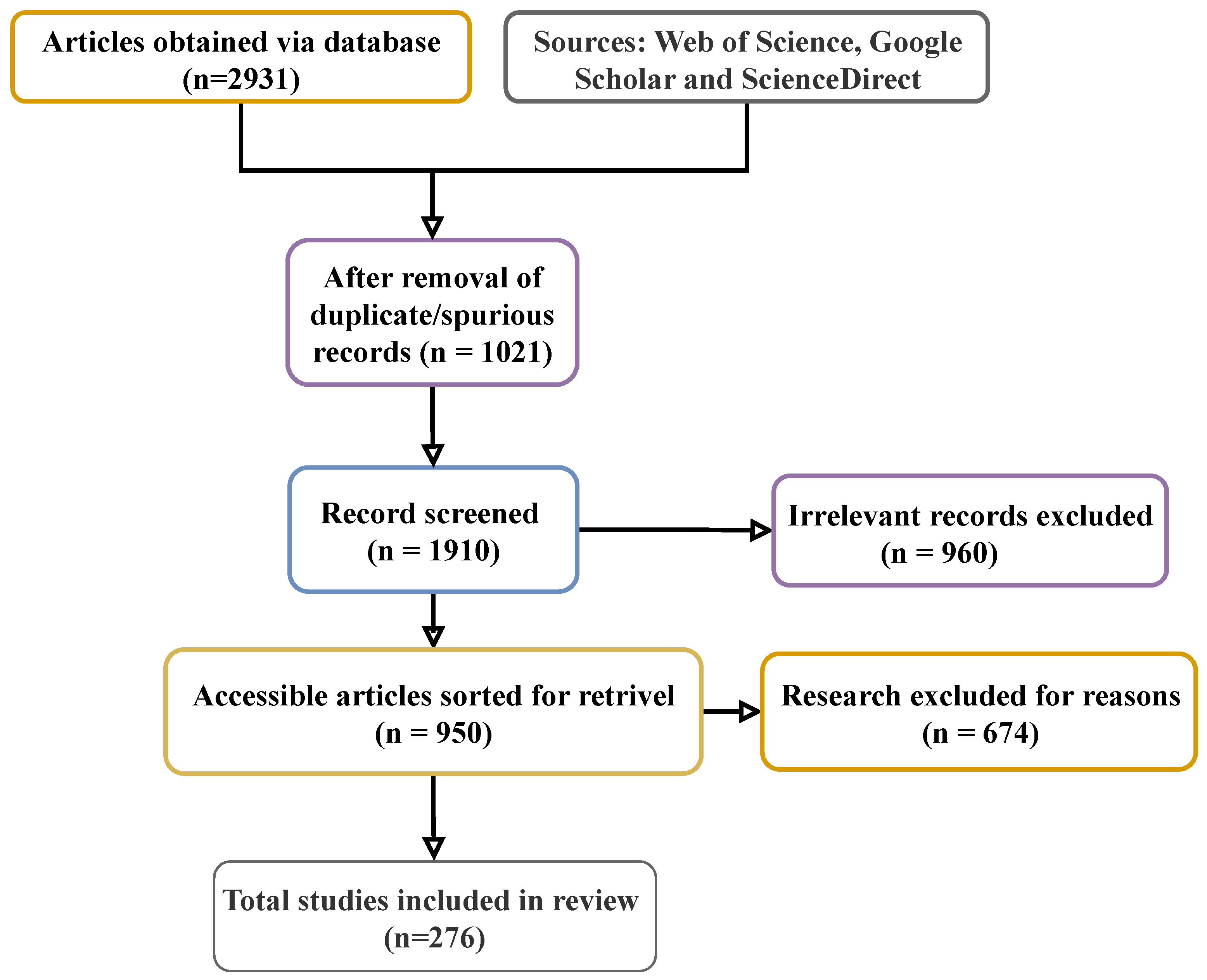

This review aims to provide a holistic security and efficiency analysis for modern smart grids based on an extensive literature review, as presented in Figure 1. While existing surveys often treat cybersecurity and renewable integration in isolation, this work synthesizes these domains to highlight their interplay. Furthermore, it addresses a significant gap in the literature by dedicating substantial focus to energy-routing protocols, a strategic decision-making layer essential for managing power flow in decentralized, renewable-heavy grids. By evaluating AI, metaheuristic, and multi-agent systems for routing optimization, this review offers a distinct perspective on achieving grid resilience, going beyond conventional discussions to address the core operational challenge of future energy networks.

3. Transmission and Distribution (T&D) System of Power Grid

In a traditional power grid, power flows in one direction from the utility through transmission and distribution systems to consumers. This one-way flow is a defining feature of traditional grid architecture, in which centralized monitoring is essential to balance generation with consumer demand while respecting system limitations [64]. Utility operators manage every stage of the process to ensure both reliability and financial sustainability. However, rapid growth in electricity demand from new loads, notably electric vehicles (EVs), has exposed weaknesses in traditional grids. These include power losses, limited monitoring and communication, poor distribution routes, and a lack of advanced automation and digital technologies [65,66].

The smart grid has been introduced to address these limitations and make the grid more reliable. By integrating technologies such as the Internet of Things (IoT), modern digital technology, and automation, the smart grid supports two-way communication and allows electricity to flow in both directions [67]. These advancements improve the monitoring of electricity transmission and distribution and support the seamless integration of diverse generation sources. They also enable immediate response to disruptions, making the grid more reliable and efficient. Smart-grid technology is therefore better equipped to handle energy demands and operational issues in real time [68].

However, smart grids have a complex architecture, which makes it harder to maintain power quality across a variety of load types. When diverse devices and generating sources are connected to the network, it becomes more difficult to maintain voltage stability, control harmonic distortion, and prevent other power quality problems [69].

Advanced, AI-powered grid interfaces are becoming essential tools for real-time control and adaptability [70,71]. AI-enhanced power quality-monitoring devices address current abnormalities and also predict and mitigate future issues. They achieve this by monitoring system conditions [72], forecasting disruptions, and automatically adjusting operating conditions. This proactive approach supports future innovations such as direct-current distribution networks [73]. Such networks might further reduce power delivery issues in data centers, homes, and renewable-powered agriculture. This enhances the functionality of connected equipment while encouraging safer, more efficient grid operation.

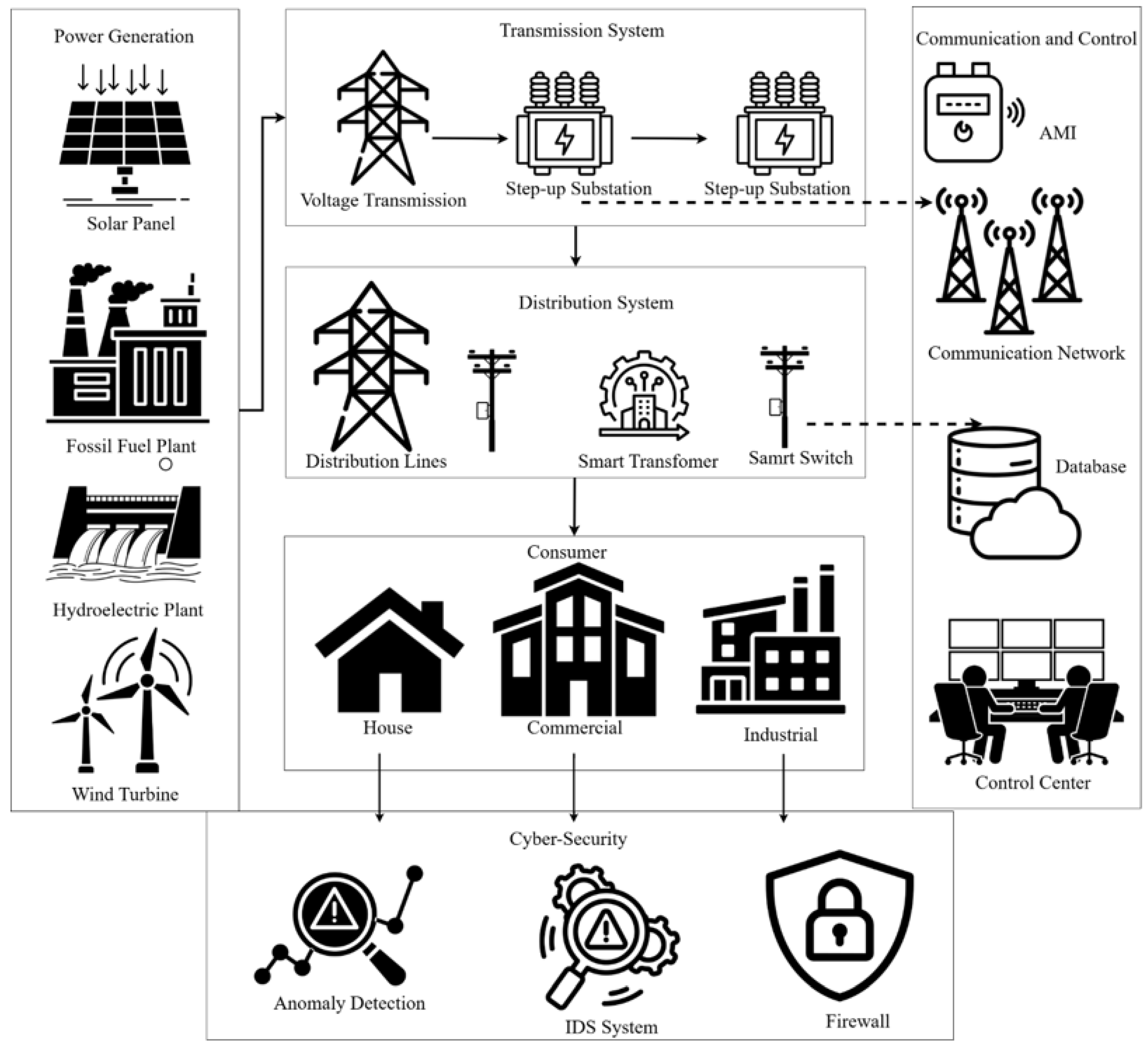

An overview of the smart grid is presented in Figure 2. Integrating IoT and modern digital technologies, however, introduces challenges and safety risks such as cyber threats, power outages, and blackouts [74]. Much research has been conducted in recent years to address these problems.

Utkarsh et al. [75] introduced a Self-Organizing Map (SOM) method for resilience assessment and restoration control in a distribution system during severe events. The study proposes a temporal resilience index to support resource allocation and operational dispatch. The proposed SomRes framework detects weak states and promotes pre-emptive load shedding, thereby improving coordination of T&D system responses during contingencies.

Ovidiu Ivanov presented a fuzzy SOM-based third-party approach for electricity distribution networks. Under an affinity control policy, consumers were classified by the shapes of their load profiles. This enabled the rapid development of a Typical Load Profiles (TLPs) database, which is essential for effective T&D planning. The approach produced robust and stable segmentation under varying load conditions.

Wu et al. [76] proposed Gridtopo-GAN, a GAN-based method which uses topology-preserved node embeddings to correctly identify meshed and radial patterns in large-scale networks and performs effectively on both IEEE benchmark systems and real-world networks.

Yan et al. [77] proposed UG-GAN, a model that synthesizes three-phase unbalanced distribution systems. By learning from random walks over real networks, it emulates local structures and enriches the synthetic grids with realistic nodal demands and components. UG-GAN was tested on smart meter data to balance data privacy and structural realism.

Venkatraman et al. [78] developed a CoTDS model with static/induction loads, reactive shunts, and DG inverters. The PI control and small-signal model serve as the foundation for analyzing mutual influences between the T&D systems. Simulations highlight the necessity of coordinated grid control under high DG penetration.

Mohammadabadi et al. [79] introduced a GenAI-based communication model that employs pre-trained CNNs to generate synthetic time-series data. Local agents process the data, reducing transmission load and preserving privacy. This method supports scalable, distributed learning for tasks such as anomaly detection and energy optimization in smart grids.

Shankar et al. [80] developed a deep learning OPA (dLOPA) model based on LSTM for smart-grid contingency analysis. It incorporates a pre-processing step that improves training efficiency, achieving 93% accuracy while maintaining low false-positive and false-negative rates. The dLOPA model does not target T&D systems specifically but contributes to the reliability of the grid.

Gonzalez et al. [81] introduced an RNN approach for demand prediction in distribution network planning. By incorporating confidence intervals, the model explicitly respects transformer thermal limits and improves planning under load variations and distributed generation.

Shrestha et al. [58] proposed a federated learning LSTM-AE model for anomaly detection. The model achieved a 99% F1-score, maintained privacy, and outperformed conventional techniques.

Liu et al. [82] introduced MDRAE, an attention-based autoencoder for missing data recovery in transmission and distribution systems. It outperformed comparison models and improved both data quality and grid stability.

Raghuvamsi et al. [83] proposed a TCDAE for topology detection in distribution systems with missing measurements, which outperformed other DAE variants.

Li et al. [84] proposed GTCA, a mixed T&D contingency analysis application that integrates the Global Power Flow and DC models. The approach is more effective than traditional methods, especially for looped networks.

3.1. Important Factors of Transmission & Distribution (T&D) System

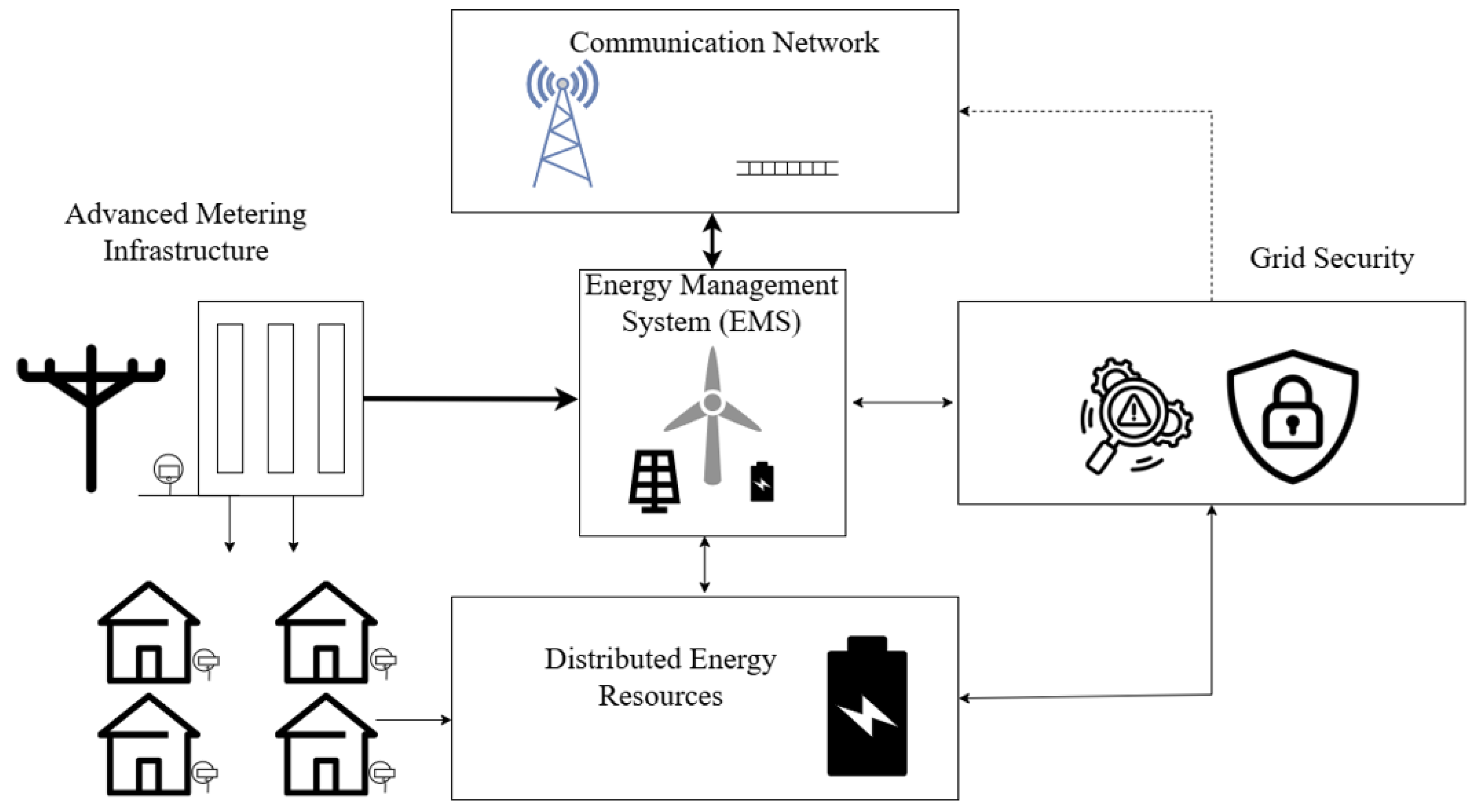

There are several important factors of the T&D system in the smart grid, which are presented in Figure 3.

3.2. Advanced Metering Infrastructure (AMI)

AMI is an advanced digital system that automatically measures energy consumption in real time [85]. In contrast, traditional metering relies on analog devices that require manual readings. AMI enables two-way communication between utilities and consumers or prosumers, providing accurate consumption monitoring and billing based on actual energy usage. This technology also facilitates demand response programs and allows consumers to track their real-time energy usage. This helps them reduce consumption during peak hours and lower costs [86].

3.2.1. Communication Networks

Communication networks enable frequent status checks, data sharing, and two-way communication, providing accurate T&D data. In smart-grid networks, this data exchange requires a high level of security. With constant monitoring, prosumers can track grid status and identify potential problems early, improving energy distribution. The network is also managed remotely through automated control systems that operate electronic devices such as routers and electric vehicles. This improves the efficiency of data transfer and of the communication channels between prosumers and consumers [87].

3.2.2. Distributed Energy Resources (DER)

Distributed energy resources (DER) are facilities located closer to consumption points than conventional generation facilities. They comprise small energy sources such as solar arrays, batteries, wind generators, and electric vehicles that produce and store energy close to households and business premises. Integrating DER into the smart grid increases reliability and flexibility while reducing dependence on conventional power plants. DER also expands the use of green energy and reduces carbon emissions. Moreover, microgrids, which are self-sufficient energy systems, can operate independently or in coordination with the smart grid while controlling multiple processes [88,89].

3.3. Energy Management Systems (EMS)

An energy management system (EMS) is a computer-based system designed to monitor, manage, and optimize the operations of the smart power grid. It assists utilities in generating, distributing, and managing electricity consumption in real time [90]. The overall goal of an EMS is to enhance grid stability and overall efficiency through effective management of power resources, energy supply, and losses. An EMS collects real-time information from various parts of the smart grid. It uses this information for functions such as forecasting energy consumption and regulating the amount of power required. Machine learning methods are also applied in EMS to improve system performance and prediction accuracy and to enable autonomous operation [91,92].

Grid Security

Grid security aims to protect the smart-grid infrastructure from cyberattacks, which can disrupt operations and cause major losses, such as data theft. To prevent such disruptions, smart grids employ security systems that ensure secure and reliable operation [93]. Cyberattacks primarily target grid-connected systems that contain critical information. To counter these attacks, grid security relies on strong encryption, secure authentication methods, and real-time monitoring for immediate threat identification and prevention. In addition, grids implement physical safeguards, such as fences, cameras, and guards, to prevent unauthorized physical access that could lead to equipment damage or cyber intrusions [94,95].

3.4. Detecting Methods

To ensure grid security and sustainability, several detection methods have been proposed in the literature, as summarized in Table 8.

3.4.1. Optimization Models

Optimization models are mathematical and computational tools used to enhance the performance and efficiency of electricity transmission and distribution. These models allow smart-grid systems to make intelligent decisions and determine the optimal operational strategy in various scenarios. They also help reduce energy loss and improve real-time efficiency. Recent investigations demonstrate that these approaches are effective in both simulations and real-world situations [115].

Barajas et al. [116] introduced optimization models to enhance the generation, transmission, and distribution of electricity in a microscopic system using the energy hub concept. The first linear programming model addressed hourly operations, while a mixed-integer linear programming (MILP) model was applied for 24 h planning with 21,773 constraints. Results from Mexico’s national grid show that the integrated-cycle plant generates 50% of power, while the hydroelectric plant provides 18%. The proposed model achieved a 30% energy objective using the existing architecture, demonstrating its suitability for large-scale energy management.
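The sketch below makes the linear-programming idea concrete with a single-hour economic dispatch. It is not Barajas et al.'s energy-hub model. It assumes linear generation costs and capacity limits only, with no network, ramping, or unit-commitment constraints. In that special case the LP optimum is obtained by loading units in merit order, cheapest first. The unit names, capacities, and costs are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Generator:
    name: str
    capacity_mw: float   # upper output limit
    cost_per_mwh: float  # linear (marginal) cost


def merit_order_dispatch(generators, demand_mw):
    """Meet demand at minimum cost for one hour.

    With linear costs and only capacity bounds, this LP is solved exactly
    by dispatching the cheapest units first (merit order).
    """
    if demand_mw > sum(g.capacity_mw for g in generators):
        raise ValueError("demand exceeds total capacity")
    dispatch, remaining = {}, demand_mw
    for g in sorted(generators, key=lambda g: g.cost_per_mwh):
        output = min(g.capacity_mw, remaining)
        dispatch[g.name] = output
        remaining -= output
    cost = sum(dispatch[g.name] * g.cost_per_mwh for g in generators)
    return dispatch, cost


# Hypothetical fleet for a single hour
units = [
    Generator("gas_turbine", 300, 90.0),
    Generator("hydro", 180, 5.0),
    Generator("combined_cycle", 500, 40.0),
]
dispatch, cost = merit_order_dispatch(units, 600)
print(dispatch, cost)  # hydro 180, combined_cycle 420, gas_turbine 0; cost 17700.0
```

Adding on/off commitment decisions across a 24 h horizon changes the problem. The binary variables are what turn a model like this into a MILP, which needs a dedicated solver rather than a greedy rule.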

Tianqi et al. [117] introduced an optimization model for smart-grid investment planning during T&D tariff reform. The proposed model integrates indices for planning efficiency and benefits, while also considering constraints from previous projects and the relative significance of different projects. A risk index model was additionally developed to address the uncertainty that can affect planning decisions. The results show that the proposed models outperform traditional models by effectively responding to changing user load profiles under peak–valley time-of-use pricing schemes and enhancing planning efficiency.

In their study, Ogbogu et al. [118] used an optimal power-flow model to reduce investment costs, transmission losses, and maintenance requirements, and to optimize power distribution. The model is subject to constraints such as generator power limits, bus voltages, transformer tap settings, and transmission line capacity. These constraints are determined by the physical and operational principles of the power system.
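Line-capacity constraints like those listed above can be illustrated with a DC power-flow approximation. This common linearization ignores losses, reactive power, and voltage-magnitude variation. The sketch below is not Ogbogu et al.'s model, and the three-bus network, reactances, and limits are hypothetical. It works in three steps:

- It solves B'θ = P for the bus angles, holding one slack bus at θ = 0.
- It computes each line flow as (θ_i − θ_j)/x_ij.
- It reports any line whose flow exceeds its limit.

That last check is what an OPF would enforce as a constraint.

```python
def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def dc_power_flow(n_bus, lines, injections, slack=0):
    """DC power flow. lines: (from_bus, to_bus, reactance_pu, limit_pu).

    injections: net injection per bus in p.u. (generation minus load); the
    slack-bus entry is ignored because the slack balances the system.
    """
    b = [[0.0] * n_bus for _ in range(n_bus)]
    for i, j, x, _limit in lines:  # build the susceptance matrix B'
        b[i][i] += 1.0 / x
        b[j][j] += 1.0 / x
        b[i][j] -= 1.0 / x
        b[j][i] -= 1.0 / x
    keep = [k for k in range(n_bus) if k != slack]
    b_reduced = [[b[r][c] for c in keep] for r in keep]
    theta_reduced = solve_linear(b_reduced, [injections[k] for k in keep])
    theta = [0.0] * n_bus  # slack angle fixed at 0
    for k, angle in zip(keep, theta_reduced):
        theta[k] = angle
    flows = [(theta[i] - theta[j]) / x for i, j, x, _limit in lines]
    violations = [idx for idx, (flow, line) in enumerate(zip(flows, lines))
                  if abs(flow) > line[3]]
    return flows, violations


# Hypothetical 3-bus system: slack generator at bus 0, loads at buses 1 and 2
lines = [(0, 1, 0.1, 0.8), (0, 2, 0.1, 1.0), (1, 2, 0.1, 1.0)]
flows, violations = dc_power_flow(3, lines, injections=[0.0, -1.0, -0.5])
print([round(f, 3) for f in flows], violations)  # [0.833, 0.667, -0.167] [0]
```

Here line 0 (bus 0 to bus 1) carries about 0.833 p.u. against a 0.8 p.u. limit. An OPF would therefore have to redispatch generation to relieve it.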

3.4.2. Numerical Methods

Numerical models are mathematical tools that represent a physical system with numbers and algorithms in order to simulate and analyze real-life problems. These models are critical for monitoring, planning, and optimization in transmission and distribution systems. They are particularly suited to complex systems that are beyond the scope of analytical or manual calculation [119].

Bragin et al. [120] proposed a surrogate Lagrangian relaxation (SLR) model to solve the coordinated operation of the entire T&D system in the smart grid. The aim is to improve operational efficiency and resource utilization. The model's convergence was compared with that of conventional methods such as the subgradient method. The results indicate that the model is effective and improves on previously available methods in supporting coordinated decision-making.
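The decomposition idea behind Lagrangian relaxation can be shown on a toy problem. This sketch uses plain Lagrangian relaxation with a fixed-step subgradient (dual-ascent) multiplier update, the kind of conventional method Bragin et al. compare against. It is not their surrogate variant, which, roughly speaking, lets multipliers be updated without solving every subproblem to optimality at each iteration.

The setup is hypothetical: two subsystems with quadratic costs must jointly supply a fixed demand. Relaxing this coupling constraint with a price λ lets each subsystem solve its own problem independently. λ is then adjusted in proportion to the supply–demand mismatch.

```python
def subsystem_response(price, a, b, p_max):
    """Local problem: minimize a*p**2 + b*p - price*p subject to 0 <= p <= p_max."""
    p = (price - b) / (2.0 * a)     # unconstrained minimizer
    return min(max(p, 0.0), p_max)  # project onto the capacity limits


def lagrangian_coordination(subsystems, demand, step=0.01, iters=200):
    """Dual ascent on the coupling constraint sum(p_k) == demand."""
    price = 0.0  # Lagrange multiplier, acting as a coordination price
    for _ in range(iters):
        outputs = [subsystem_response(price, *params) for params in subsystems]
        mismatch = demand - sum(outputs)  # subgradient of the dual function
        price += step * mismatch
    return price, outputs


# Hypothetical (a, b, p_max) cost data for two subsystems
price, outputs = lagrangian_coordination([(0.01, 10.0, 400.0), (0.02, 8.0, 400.0)],
                                         demand=300.0)
print(round(price, 3), [round(p, 1) for p in outputs])  # 13.333 [166.7, 133.3]
```

Each subsystem only ever sees the price, never the other subsystem's costs. This separability is what makes the approach attractive for coordinating transmission and distribution operators.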

Saukh et al. [121] presented a model of electricity market equilibrium in a transmission and distribution system based on electric circuit theory, Kirchhoff’s laws, and a quadratic loss function. By using the measurement factor, this technique enables a more realistic and efficient simulation of the market.

Cordova et al. [122] developed an OpenDSS model for smart-grid planning using GIS data and CIM files. This approach improves simulation and decision-making within the distribution system, supporting effective open-source smart-grid planning.

Moradi et al. [123] proposed a coordinated mechanism for improving the performance of the T&D power system in smart-grid networks. The approach uses a step-by-step mathematical model that accounts for grid uncertainties, including varying electricity demand, generation, and electric vehicle behavior. The model covers battery storage, wind farms, switchable feeders, distributed generation, and demand–response programs. It also includes EV charging stations across different grid levels to cope with short-term uncertainty. The model was validated on the IEEE RTS and a 33-node test feeder.

3.4.3. Artificial Intelligence Model (AI)

AI-based models are computer programs that use data for classification, prediction, and pattern recognition, encompassing a wide range of methods, such as Machine Learning (ML), Deep Learning (DL), data mining, evolutionary computation, and fuzzy logic [124]. One of the most well-known subsets of AI is ML, which focuses on identifying patterns in large datasets [125]. ML analyzes input features to develop a model that can be used for tasks like prediction, classification, and clustering.

There are three main categories of ML: Supervised Learning [126], Unsupervised Learning [127], and Reinforcement Learning (RL) [128]. Supervised learning models are trained on labeled datasets with known input–output pairs. The aim of supervised learning is to build a model that accurately maps inputs to outputs. Common models include linear regression, Support Vector Machines, and Random Forests.

In contrast, unsupervised learning is used for unlabeled data. The primary objective is to identify hidden patterns and structures within the data. This method is primarily used for clustering and pattern recognition with the help of models like k-means and autoencoders.

Reinforcement learning uses a goal-driven approach in which agents learn by interacting with the environment, using prior experience to maximize long-term cumulative reward. RL problems are typically formalized as a Markov Decision Process (MDP), within which the agent seeks the most effective policy. An RL agent must also manage the trade-off between exploration (trying new actions to discover their outcomes) and exploitation (using actions already known to perform well).
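Tabular Q-learning, one standard RL algorithm, illustrates both the MDP framing and the exploration–exploitation trade-off. It is chosen here for illustration only; the text does not prescribe it. The environment is a hypothetical five-state corridor: the agent starts in state 0, can move left or right, and receives a reward of 1 on reaching state 4. An ε-greedy rule handles exploration, and each update moves Q(s, a) toward r + γ·max Q(s′, ·).

```python
import random

N_STATES, GOAL = 5, 4
ACTIONS = (-1, 1)  # move left / move right


def step(state, action):
    """Deterministic corridor transition; reward 1 only on reaching the goal."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward, nxt == GOAL


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap episode length
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore
            else:
                best = max(q[(state, a)] for a in ACTIONS)
                # exploit, breaking ties at random
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])
            nxt, reward, done = step(state, action)
            target = reward if done else reward + gamma * max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = nxt
            if done:
                break
    return q


q = q_learning()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)}
print(policy)  # greedy action per non-terminal state
```

In a grid setting, the states and actions might instead encode, for example, storage state of charge and charge/discharge decisions. Such a mapping is an assumption, not something the cited works specify.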

3.4.4. Random Forest (RF)

RF is an ML model used for prediction that improves accuracy, performance, and model stability by combining the outputs of multiple decision trees [129]. RF commonly uses historical data from sensors, SCADA systems, and meteorological inputs to forecast a range of operational parameters. RF can handle the high-dimensional, nonlinear datasets typical of complex grid operations and is relatively robust to overfitting. This makes it well suited to applications such as load forecasting, fault detection, and power-flow optimization within smart grids [130,131].
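The following sketch is deliberately simplified and shows the two ingredients that distinguish a random forest. One is bootstrap sampling of the training rows; the other is random selection of candidate features for each tree. For brevity, each tree is a depth-one "stump" split by Gini impurity, whereas practical random forests grow much deeper trees. The two-feature "normal"/"fault" measurements are hypothetical, and predictions are made by majority vote across trees.

```python
import random
from collections import Counter


def majority(labels):
    return Counter(labels).most_common(1)[0][0]


def gini(labels):
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def fit_stump(rows, labels, features):
    """Best single split (lowest weighted Gini) over the candidate features."""
    best = None
    for f in features:
        values = sorted({r[f] for r in rows})
        for lo_v, hi_v in zip(values, values[1:]):
            threshold = (lo_v + hi_v) / 2  # midpoint between observed values
            left = [y for r, y in zip(rows, labels) if r[f] <= threshold]
            right = [y for r, y in zip(rows, labels) if r[f] > threshold]
            score = len(left) * gini(left) + len(right) * gini(right)
            if best is None or score < best[0]:
                best = (score, f, threshold, majority(left), majority(right))
    if best is None:  # no valid split: predict the majority class everywhere
        label = majority(labels)
        return features[0], float("inf"), label, label
    return best[1:]


def fit_forest(rows, labels, n_trees=25, n_features=1, seed=0):
    rng = random.Random(seed)
    n, d = len(rows), len(rows[0])
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # bootstrap sample (with replacement)
        feats = rng.sample(range(d), n_features)     # random feature subset
        forest.append(fit_stump([rows[i] for i in idx], [labels[i] for i in idx], feats))
    return forest


def predict(forest, row):
    votes = [left if row[f] <= t else right for f, t, left, right in forest]
    return majority(votes)


# Hypothetical normalized measurements (e.g., current and voltage deviation)
rows = [(0.1, 0.2), (0.2, 0.1), (0.3, 0.25), (0.15, 0.3), (0.25, 0.15),
        (0.7, 0.8), (0.8, 0.9), (0.9, 0.75), (0.75, 0.7), (0.85, 0.85)]
labels = ["normal"] * 5 + ["fault"] * 5
forest = fit_forest(rows, labels)
print(predict(forest, (0.2, 0.2)), predict(forest, (0.8, 0.8)))
```

Averaging many trees that each saw a different resample and feature subset is what gives RF its stability relative to a single tree.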

3.4.5. Support Vector Machine (SVM)

SVM is a supervised learning method used for complex tasks such as classification and regression [132]. Depending on the task, it is formulated either as Support Vector Regression (SVR) for continuous-value prediction or as Support Vector Classification (SVC) for classification.

SVR is used to predict continuous values. Its primary objective is to find a function (a hyperplane in feature space) that fits the training data. Only the errors of data points that fall outside a predefined margin of tolerance, ε, are penalized [133,134].
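The ε-insensitive idea can be written down directly. The sketch evaluates the standard primal objective of a linear SVR for given parameters:

$$\tfrac{1}{2}\lVert w\rVert^2 + C\sum_i \max\bigl(0,\ \lvert y_i - (w\cdot x_i + b)\rvert - \varepsilon\bigr)$$

It does not train a model; a quadratic-programming solver or a library implementation would do that. The example numbers are hypothetical.

```python
def epsilon_insensitive_loss(y_true, y_pred, epsilon):
    """Zero inside the epsilon-tube, growing linearly outside it."""
    return max(0.0, abs(y_true - y_pred) - epsilon)


def linear_svr_objective(w, b, xs, ys, epsilon, c):
    """Primal linear SVR objective: 0.5*||w||^2 + C * sum of epsilon-insensitive losses."""
    flatness = 0.5 * sum(wi * wi for wi in w)  # prefers flatter functions
    data_term = 0.0
    for x, y in zip(xs, ys):
        y_pred = sum(wi * xi for wi, xi in zip(w, x)) + b
        data_term += epsilon_insensitive_loss(y, y_pred, epsilon)
    return flatness + c * data_term


# Hypothetical residuals of 0.05 (inside the tube) and 0.5 (outside it)
print(epsilon_insensitive_loss(10.0, 10.05, epsilon=0.1))  # 0.0
print(epsilon_insensitive_loss(10.0, 10.5, epsilon=0.1))   # about 0.4
```

Residuals smaller than ε contribute nothing, which is what gives SVR its tolerance margin. C trades off that data term against the flatness term.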

3.4.6. XGBoost

Extreme Gradient Boosting (XGBoost) is a powerful technique for building predictive models. It adds decision trees sequentially, with each new tree correcting the errors of its predecessors. Regularization terms that penalize tree complexity limit overfitting. Owing to its speed and accuracy, XGBoost is popular in a variety of fields, and it is designed to process large datasets quickly by parallelizing computation. Because of these benefits, it is commonly used in modern power-grid systems for energy distribution and delivery. Typical tasks include predicting energy consumption, identifying equipment anomalies, and detecting abnormal trends [135].
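The sketch below illustrates the "each new tree corrects its predecessors" idea together with regularization. It implements gradient boosting with depth-one trees on a single feature, using XGBoost-style second-order statistics:

- For squared-error loss, the gradient is g = ŷ − y and the Hessian is h = 1.
- Leaf weights are −G/(H + λ).
- Splits are chosen by the regularized gain ½[G_L²/(H_L+λ) + G_R²/(H_R+λ) − G²/(H+λ)].

This is not the xgboost library. The tree-complexity penalty γ, deeper trees, column subsampling, and parallel split finding are all omitted, and the hourly load data are synthetic.

```python
def best_split(xs, grads, hess, lam):
    """Single split on one feature maximizing the regularized gain."""
    total_g, total_h = sum(grads), sum(hess)
    order = sorted(range(len(xs)), key=lambda i: xs[i])
    g_left = h_left = 0.0
    best_gain, best = 0.0, None
    for k in range(len(order) - 1):
        g_left += grads[order[k]]
        h_left += hess[order[k]]
        if xs[order[k]] == xs[order[k + 1]]:
            continue  # cannot split between equal feature values
        g_right, h_right = total_g - g_left, total_h - h_left
        gain = 0.5 * (g_left ** 2 / (h_left + lam) + g_right ** 2 / (h_right + lam)
                      - total_g ** 2 / (total_h + lam))
        if gain > best_gain:
            threshold = (xs[order[k]] + xs[order[k + 1]]) / 2
            best_gain = gain
            best = (threshold, -g_left / (h_left + lam), -g_right / (h_right + lam))
    if best is None:  # no split improves the objective: use a single leaf
        return float("inf"), -total_g / (total_h + lam), 0.0
    return best


def fit_boosted_stumps(xs, ys, rounds=50, eta=0.3, lam=1.0):
    base = sum(ys) / len(ys)
    preds = [base] * len(ys)
    trees = []
    for _ in range(rounds):
        grads = [p - y for p, y in zip(preds, ys)]  # gradient of 0.5*(p - y)^2
        hess = [1.0] * len(ys)                      # its second derivative
        threshold, w_left, w_right = best_split(xs, grads, hess, lam)
        trees.append((threshold, w_left, w_right))
        # shrinkage (eta) damps each tree's correction
        preds = [p + eta * (w_left if x <= threshold else w_right) for p, x in zip(preds, xs)]
    return base, eta, trees


def predict(model, x):
    base, eta, trees = model
    return base + sum(eta * (wl if x <= t else wr) for t, wl, wr in trees)


hours = list(range(24))
load_mw = [100.0 if h < 12 else 200.0 for h in hours]  # synthetic step profile
model = fit_boosted_stumps(hours, load_mw)
print(round(predict(model, 3), 2), round(predict(model, 20), 2))
```

The regularizer λ shrinks every leaf weight toward zero. Together with the learning rate η, this is why several rounds are needed to close the residual, instead of a single tree fitting it exactly.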

3.4.7. Artificial Neural Network (ANN)

ANNs are computational networks modeled after the human brain [136] and are composed of layers of interconnected neurons. Each neuron computes a weighted sum of its inputs plus a bias and then applies an activation function [137].
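The weighted-sum-plus-activation computation can be written out directly. The weights below are hypothetical; in practice they are learned, typically by backpropagation. The sketch only performs a forward pass through a network with two inputs, two hidden ReLU neurons, and one sigmoid output.

```python
import math


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias, activation):
    z = sum(w * x for w, x in zip(weights, inputs)) + bias  # weighted summation
    return activation(z)                                     # nonlinearity


def layer(inputs, weight_rows, biases, activation):
    """A fully connected layer: one neuron per (weights, bias) pair."""
    return [neuron(inputs, w, b, activation) for w, b in zip(weight_rows, biases)]


# Hypothetical weights for a 2-2-1 network
hidden = layer([1.0, 2.0], [[0.5, -0.25], [-1.0, 0.5]], [0.1, 0.0], relu)
output = layer(hidden, [[2.0, 1.0]], [0.0], sigmoid)
print(hidden, output)  # [0.1, 0.0] and sigmoid(0.2), about 0.55
```

Stacking such layers, each applying a nonlinearity, is what lets ANNs approximate nonlinear relationships in grid data.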

3.4.8. Long Short-Term Memory (LSTM)

Long Short-Term Memory (LSTM) networks are a variant of Recurrent Neural Networks (RNNs) designed to capture long-term dependencies by mitigating the vanishing gradient problem. To do so, LSTMs incorporate memory cells and a gating mechanism, comprising an input gate, a forget gate, and an output gate, that controls the flow of information [138].

LSTMs are deployed in smart-grid transmission and distribution systems, where they have been applied to load forecasting, anomaly detection, and identifying cyberattacks. This is because they can effectively model temporal dependencies within time-series data from sensors and meters. This capability enables earlier detection of errors or attacks, enhancing grid stability and security [139,140].
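The gating mechanism can be made concrete with one time step of a single-unit LSTM cell. The sketch uses the standard gate equations, with scalar weights for readability; real layers use weight matrices over many hidden units. The parameter values in the usage line are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, params):
    """One time step of a single-unit LSTM cell.

    params maps each gate name to a (w_x, w_h, bias) triple.
    """
    def pre_activation(gate):
        w_x, w_h, bias = params[gate]
        return w_x * x + w_h * h_prev + bias

    f = sigmoid(pre_activation("forget"))       # fraction of old cell state kept
    i = sigmoid(pre_activation("input"))        # fraction of new candidate written
    o = sigmoid(pre_activation("output"))       # fraction of cell state exposed
    g = math.tanh(pre_activation("candidate"))  # candidate cell content
    c = f * c_prev + i * g                      # additive memory update
    h = o * math.tanh(c)                        # new hidden state
    return h, c


def run_lstm(sequence, params):
    h, c = 0.0, 0.0
    for x in sequence:
        h, c = lstm_step(x, h, c, params)
    return h, c


# Hypothetical parameters; a large forget-gate bias favors long memory
params = {"forget": (0.0, 0.0, 3.0), "input": (1.0, 0.0, 0.0),
          "output": (0.0, 0.0, 1.0), "candidate": (1.0, 0.0, 0.0)}
h, c = run_lstm([0.2, 0.5, 0.9, 0.4], params)  # e.g., normalized load readings
```

The cell state is updated additively (f·c + i·g) rather than by repeated squashing. This is the property usually credited with easing gradient flow over long sequences.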

3.4.9. Convolutional Neural Network (CNN)

CNNs are a type of deep learning model designed to capture hierarchies of spatial and temporal features from input data. In smart-grid transmission and distribution, CNNs are used to identify anomalies, cyberattacks, and system faults. They do so using real-time data streamed from devices such as Phasor Measurement Units (PMUs), Remote Terminal Units (RTUs), and smart meters. The building block of a CNN is the convolutional layer, which applies learned filters to the input data to extract informative features [141].

In the context of the smart grid, CNNs are fundamental to many applications. They enhance cybersecurity by identifying anomalies resulting from attacks such as False Data Injection Attacks (FDIA), Denial of Service (DoS), and Load Redistribution Attacks. They also support fault diagnosis by detecting and localizing line faults, transformer failures, and voltage instability. Finally, they improve short-term load forecasting by learning temporal patterns from historical data [142]. Because they can automatically extract spatial–temporal dependencies, CNNs play an essential role in improving the efficiency, reliability, and robustness of smart-grid T&D networks.
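The core convolutional operation can be shown in one dimension on a measurement stream. In this sketch the two-tap kernel is hand-set to respond to a drop between consecutive samples; in a trained CNN, many such filters would be learned from data. The per-unit voltage samples are hypothetical.

```python
def conv1d(signal, kernel, bias=0.0):
    """'Valid' 1-D convolution; as in most deep learning libraries, this is
    technically cross-correlation (the kernel is not flipped)."""
    k = len(kernel)
    return [sum(kernel[j] * signal[t + j] for j in range(k)) + bias
            for t in range(len(signal) - k + 1)]


def relu(values):
    return [max(0.0, v) for v in values]


def max_pool(values, size):
    """Non-overlapping max pooling; a trailing partial window is dropped."""
    return [max(values[i:i + size]) for i in range(0, len(values) - size + 1, size)]


voltage_pu = [1.0, 1.0, 1.0, 0.6, 0.6, 1.0]  # hypothetical RMS samples with a sag
sag_kernel = [1.0, -1.0]                      # positive when the next sample is lower
feature_map = relu(conv1d(voltage_pu, sag_kernel))
pooled = max_pool(feature_map, 2)
print(feature_map, pooled)  # the sag onset (between samples 2 and 3) stands out
```

The ReLU keeps only drops and discards recoveries. Pooling then summarizes where in the window the feature fired, which is the kind of downsampled representation deeper CNN layers build on.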

3.4.10. Recurrent Neural Network (RNN)

Recurrent Neural Networks (RNNs) are deep learning models used to process time-series and sequential data. In smart-grid transmission and distribution systems, RNNs are useful in applications with time-dependent characteristics, such as load forecasting, anomaly detection, and state estimation. Unlike feedforward networks, RNNs share the same weights across time steps. They carry information forward through a hidden state that is fed back into the network at each step [140].

Due to their ability to model temporal dynamics, RNNs are suitable for forecasting future power load based on historical data. They can also detect patterns that indicate imminent failure or identify anomalies (e.g., cyberattacks such as FDIA and DoS attacks) [143]. For fault diagnosis, RNNs can process voltage and current waveforms and detect abnormalities by comparing them with normal behavioral trends. In cybersecurity, RNNs can examine communication or measurement sequences for unusual patterns.

RNN-based algorithms also have potential for load forecasting, as they can capture daily and seasonal variations, thus supporting utilities in generation and distribution planning. Gated variants such as LSTM and GRUs mitigate the vanishing gradient problem of basic RNNs, making recurrent models more useful for smart-grid applications [144]. Overall, RNNs play an important role in enhancing the intelligence, adaptivity, and security of smart-grid operations.
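The weight sharing and hidden-state feedback that define an RNN fit in a few lines. The sketch uses a single-unit Elman RNN, h_t = tanh(w_x·x_t + w_h·h_{t−1} + b), with hypothetical weights. Real models use vector hidden states and learned weight matrices.

```python
import math


def rnn_forward(sequence, w_x, w_h, b, h0=0.0):
    """Single-unit Elman RNN: h_t = tanh(w_x*x_t + w_h*h_{t-1} + b).

    The same weights are reused at every time step; the hidden state h
    carries information from earlier inputs forward.
    """
    h, states = h0, []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# A single pulse followed by zeros: later states still "remember" the pulse
states = rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
print(states)
```

Here the influence of the first input shrinks at each step because |w_h| < 1 and tanh is contractive. A related repeated multiplication during backpropagation through time is what makes gradients vanish in basic RNNs.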

3.4.11. Autoencoder Models

Autoencoders are unsupervised neural network architectures that learn a compressed representation of the input data. They consist of two parts: the encoder, which compresses the input into a latent-space representation, and the decoder, which reconstructs the input from this representation. The autoencoder is trained to reconstruct the input as accurately as possible, so that the latent space captures the most salient features of the data [145].

In smart-grid transmission and distribution systems, autoencoders can be used for anomaly detection, fault diagnosis, and data denoising. Because they are trained to reconstruct normal operational data, autoencoders tend to reconstruct deviating inputs poorly. Such deviations include those caused by False Data Injection Attacks (FDIA), Load Redistribution Attacks, and sensor failures [146]. By examining the reconstruction error, the model can determine when a data point is inconsistent with the expected norm. For instance, a sudden voltage collapse or an abnormal load or generation reading can be flagged as suspicious if the reconstruction loss exceeds a predefined threshold.

Autoencoders can also be employed for dimensionality reduction of high-dimensional observations from multiple smart-grid sensors, retaining the most relevant features for use as input for forecasting or classification. Furthermore, models such as sparse autoencoders, denoising autoencoders, and variational autoencoders (VAEs) enhance performance through improved feature extraction, noise robustness, and probabilistic interpretation [147]. In this context, autoencoders represent a valuable tool for the reliable identification of disturbances and efficient representation of data, thus contributing to the security, resilience, and efficiency of smart-grid systems.
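Reconstruction-error thresholding can be demonstrated with the smallest possible autoencoder: a linear one with tied weights and a one-dimensional latent code, trained by gradient descent. This is far simpler than the deep, nonlinear models used in the cited work. The "two redundant sensors" data are hypothetical, and the threshold rule (mean plus three standard deviations of training errors) is an assumption.

```python
import statistics


def train_linear_autoencoder(data, lr=0.1, epochs=500):
    """Tied-weight linear autoencoder with a one-dimensional latent code.

    Encoder: z = w . x; decoder: x_hat = z * w. Trained by batch gradient
    descent on the mean squared reconstruction error.
    """
    dim = len(data[0])
    w = [0.5] + [0.1] * (dim - 1)  # hypothetical initial weights
    for _ in range(epochs):
        grad = [0.0] * dim
        for x in data:
            z = sum(wi * xi for wi, xi in zip(w, x))   # encode
            r = [xi - z * wi for xi, wi in zip(x, w)]  # residual x - x_hat
            r_dot_w = sum(ri * wi for ri, wi in zip(r, w))
            for j in range(dim):  # d||r||^2 / dw_j, averaged over the batch
                grad[j] += -2.0 * (x[j] * r_dot_w + z * r[j]) / len(data)
        w = [wi - lr * gj for wi, gj in zip(w, grad)]
    return w


def reconstruction_error(w, x):
    z = sum(wi * xi for wi, xi in zip(w, x))
    return sum((xi - z * wi) ** 2 for xi, wi in zip(x, w))


# Hypothetical normal data: two redundant sensors that track each other closely
normal = []
for i in range(21):
    t = -1.0 + 0.1 * i
    normal.append((t, t + 0.02 * (-1) ** i))

w = train_linear_autoencoder(normal)
train_errors = [reconstruction_error(w, x) for x in normal]
threshold = statistics.mean(train_errors) + 3 * statistics.pstdev(train_errors)  # assumed rule

print(reconstruction_error(w, (0.5, 0.5)) > threshold)   # False: consistent readings
print(reconstruction_error(w, (1.0, -1.0)) > threshold)  # True: sensors disagree
```

The model learns only the direction along which normal readings vary. An injected or faulty reading that breaks the usual relationship between sensors therefore falls off that direction and is reconstructed poorly.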

3.4.12. Self-Organizing Map (SOM)

The self-organizing map (SOM) is an unsupervised neural network model proposed by Teuvo Kohonen. It is known for clustering and visualizing high-dimensional data by projecting it onto a lower-dimensional grid, usually 2D [148].

In smart-grid transmission and distribution systems, SOMs are especially beneficial for load profiling, anomaly detection, consumer behavior analysis, and fault-type detection. A SOM consists of neurons arranged in a 2D lattice, each of which is associated with a weight vector of the same dimension as the input [149,150]. During training, the SOM undergoes competitive learning, reorganizing itself so that similar input patterns activate neurons that are close to each other on the map.

In the context of the smart grid, SOMs are used to identify patterns in consumer-load profiles, grouping similar consumption behavior to assist with demand-side management. They can also detect abnormal sensor or measurement data that could signal a failure or equipment defect. In addition, SOMs can help classify power quality events (PQEs) or cluster different parts of a network according to their operation [151]. Because SOMs are trained in an unsupervised manner, they are well suited to situations where data labeling is costly or impossible. Ultimately, SOMs enhance smart-grid operations by providing pattern discovery, anomaly detection, and data visualization capabilities.
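The competitive-learning procedure described above can be sketched for load profiling. In each training step:

- The best-matching unit (BMU) for an input is found.
- The BMU and its lattice neighbors are pulled toward that input, with a Gaussian neighborhood that shrinks over training.

The 3×3 map size, the decay schedules, and the two normalized four-period load shapes are hypothetical choices for illustration; the shapes stand for "morning-peak" and "evening-peak" consumers.

```python
import math
import random


def best_matching_unit(weights, x):
    """Lattice node whose weight vector is closest (squared Euclidean) to x."""
    return min(weights,
               key=lambda node: sum((wi - xi) ** 2 for wi, xi in zip(weights[node], x)))


def train_som(data, rows=3, cols=3, epochs=200, lr0=0.5, sigma0=1.5, seed=0):
    rng = random.Random(seed)
    dim = len(data[0])
    weights = {(r, c): [rng.random() for _ in range(dim)]
               for r in range(rows) for c in range(cols)}
    for epoch in range(epochs):
        frac = epoch / epochs
        lr = lr0 * (1.0 - frac)                   # learning rate decays linearly
        sigma = max(sigma0 * (1.0 - frac), 0.5)   # neighborhood radius shrinks
        for x in rng.sample(data, len(data)):     # shuffled pass over the data
            bmu = best_matching_unit(weights, x)  # competitive step: find the winner
            for node, w in weights.items():
                d2 = (node[0] - bmu[0]) ** 2 + (node[1] - bmu[1]) ** 2  # lattice distance
                h = math.exp(-d2 / (2.0 * sigma ** 2))                  # Gaussian neighborhood
                for j in range(dim):
                    w[j] += lr * h * (x[j] - w[j])  # pull winner and neighbors toward x
    return weights


morning = [0.9, 0.4, 0.3, 0.5]  # hypothetical normalized daily profile shapes
evening = [0.3, 0.4, 0.5, 0.9]
profiles = [[v + 0.02 * k for v in base]
            for base in (morning, evening) for k in (-2, -1, 0, 1, 2)]
som = train_som(profiles)
print(best_matching_unit(som, morning), best_matching_unit(som, evening))
```

After training, the two consumer types map to different regions of the lattice. A new profile whose distance to its BMU is unusually large is a natural anomaly candidate.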

3.4.13. Generative Adversarial Networks (GANs)

Generative Adversarial Networks (GANs), a family of deep learning models proposed by Ian Goodfellow and colleagues in 2014, operate on a generate-and-test basis. A GAN consists of two neural networks. The generator produces synthetic data intended to appear realistic. The discriminator acts as a classifier, determining whether a sample is real (drawn from the training data) or generated [152,153]. The generator strives to produce more realistic data to deceive the discriminator, while the discriminator works to improve its classification accuracy. As training proceeds, the generator ideally becomes better at deceiving the discriminator. It eventually produces high-quality synthetic data that closely matches the real data distribution.

With regard to smart-grid transmission and distribution systems, GANs have several impactful applications. They can be used for data augmentation, particularly in datasets with imbalanced representation where incidents like cyberattacks (e.g., False Data Injection Attacks, DoS) are rare. By creating synthetic data of these rare incidents, GANs enhance the effectiveness of classifiers trained to detect them.

GANs are also used for anomaly detection. They learn the characteristics of normal operational data and flag data points with high discriminator loss as anomalies. GANs can also enable privacy-preserving sharing of grid data by releasing synthetic rather than raw records, which supports secure risk-sharing partnerships among utilities. In predictive maintenance, GANs can be used to model the effects of hypothetical fault conditions and overloads under future operating conditions [154]. Among the variants, Wasserstein GANs (WGANs) improve training stability, while Conditional GANs (CGANs) enable generation conditioned on labels such as grid zones, time windows, or attack types. In general, GANs can make the grid smarter and more secure by enabling realistic simulation, data augmentation, and anomaly detection in complex T&D networks [155].

3.5. Hybrid Models

The methodology in [156] employs multivariable regression methods, including linear, polynomial, and exponential regressions, to predict peak loads. When applied to Jordan's electricity grid, these models achieved an accuracy of nearly 90%, with performance comparable to that of regular exponential regression techniques. The study in [157] examines national-level power consumption through long-term demand forecasting for a deregulated electricity market. It also underlines the importance of activities such as energy procurement, infrastructure expansion, and contract management.
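The three regression forms mentioned for peak-load prediction can be compared with a short NumPy sketch. For brevity it uses a single explanatory variable (the year index) rather than the multivariable setup of [156]. The peak-load series is hypothetical and is generated from an exponential trend, so the example only illustrates the fitting mechanics and should not be read as evidence for any model. The exponential model is fitted by taking logarithms and then applying linear least squares.

```python
import numpy as np


def fit_linear(t, y):
    return np.poly1d(np.polyfit(t, y, 1))


def fit_polynomial(t, y, degree=2):
    return np.poly1d(np.polyfit(t, y, degree))


def fit_exponential(t, y):
    # y = a * exp(b * t)  <=>  ln(y) = ln(a) + b * t, fitted by linear least squares
    b, ln_a = np.polyfit(t, np.log(y), 1)
    return lambda tt: np.exp(ln_a + b * np.asarray(tt, dtype=float))


def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent."""
    return float(np.mean(np.abs((y_true - y_pred) / y_true)) * 100.0)


# Hypothetical annual peak loads (MW) growing about 4% per year.
years = np.arange(10, dtype=float)
peak = 2000.0 * np.exp(0.04 * years)

models = {
    "linear": fit_linear(years, peak),
    "polynomial": fit_polynomial(years, peak),
    "exponential": fit_exponential(years, peak),
}
errors = {name: mape(peak, model(years)) for name, model in models.items()}
```

With real data, the models would be compared on held-out years rather than on the fitting data.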

The authors of [158] highlight a gap in prior research on hybrid deep learning: little work has explored combining multiple deep learning structures for large-scale smart-grid load forecasting. According to their findings, combining models improves predictive accuracy and provides insights into the relationships between different models and complex data patterns. In [159], a hybrid evolutionary deep learning algorithm was proposed that combines an LSTM network with a genetic algorithm, which optimizes parameters such as the lag window and the number of neurons. This hybrid method proved more accurate and flexible than traditional forecasting methods.
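The genetic-algorithm side of such a hybrid can be sketched as follows. To keep the example cheap and self-contained, the LSTM of [159] is replaced by a ridge-regularized linear autoregressive model, so the genome is (lag window, log10 ridge penalty) instead of (lag window, number of neurons). This substitution is deliberate and is not the authors' method. The GA uses truncation selection with elitism, uniform crossover, and clipped mutation. The load series and all parameter values are hypothetical.

```python
import numpy as np


def validation_rmse(series, lag, log_alpha, n_val=48):
    """Fit a ridge AR(lag) model and return RMSE on the last n_val points.

    Stand-in for training an LSTM: cheap enough to call inside a GA loop.
    """
    X = np.array([series[i - lag:i] for i in range(lag, len(series))])
    X = np.hstack([X, np.ones((len(X), 1))])    # intercept column
    y = series[lag:]
    X_tr, y_tr, X_va, y_va = X[:-n_val], y[:-n_val], X[-n_val:], y[-n_val:]
    alpha = 10.0 ** log_alpha
    w = np.linalg.solve(X_tr.T @ X_tr + alpha * np.eye(X.shape[1]), X_tr.T @ y_tr)
    return float(np.sqrt(np.mean((X_va @ w - y_va) ** 2)))


def genetic_search(series, max_lag=24, pop_size=12, generations=15, seed=0):
    """Evolve (lag window, log10 ridge penalty) genomes to minimize validation RMSE."""
    rng = np.random.default_rng(seed)

    def fitness(genome):
        return validation_rmse(series, *genome)

    pop = [(int(rng.integers(1, max_lag + 1)), float(rng.uniform(-4, 2)))
           for _ in range(pop_size)]
    for _ in range(generations):
        parents = sorted(pop, key=fitness)[: pop_size // 2]  # truncation selection, elitist
        children = []
        while len(children) < pop_size - len(parents):
            i, j = rng.choice(len(parents), size=2, replace=False)
            # Uniform crossover: each gene is inherited from one of the two parents.
            lag = parents[i][0] if rng.random() < 0.5 else parents[j][0]
            log_alpha = parents[i][1] if rng.random() < 0.5 else parents[j][1]
            # Mutation: small random perturbations, clipped to the search bounds.
            if rng.random() < 0.3:
                lag = int(np.clip(lag + rng.integers(-2, 3), 1, max_lag))
            if rng.random() < 0.3:
                log_alpha = float(np.clip(log_alpha + rng.normal(0.0, 0.5), -4.0, 2.0))
            children.append((lag, log_alpha))
        pop = parents + children
    best = min(pop, key=fitness)
    return best, fitness(best)


# Hypothetical hourly load over 30 days: daily cycle plus noise.
rng = np.random.default_rng(42)
t = np.arange(24 * 30)
load = 100 + 20 * np.sin(2 * np.pi * t / 24) + rng.normal(0.0, 2.0, t.size)
(best_lag, best_log_alpha), best_rmse = genetic_search(load)
```

Swapping the fitness function for one that trains and validates an LSTM would recover the structure described in [159], at a much higher cost per evaluation.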

In [160], the authors examined two recurrent neural network sequence-to-sequence (S2S) implementations, built with GRU and LSTM layers, to predict building-level energy consumption. They compared these hybrid models with five conventional forecasting techniques on an identical dataset: multiple linear regression, stochastic time-series analysis, exponential smoothing, Kalman filtering, and state-space modeling. An LSTM-based approach proposed by Yong et al. [161] was specifically designed to forecast the same-day energy demand of buildings.

3.6. Cyberattacks in Transmission and Distribution

Cyberattacks are malicious activities designed to damage networks, steal data, and disrupt normal processes in the smart grid. Their primary goal is to compromise transmission and distribution, potentially leading to blackouts and physical damage. Several types of cyberattacks target T&D systems, with each having unique features and potential outcomes. For instance, a Man-in-the-Middle (MiTM) attack targets communication between two devices or users. Similarly, Replay Attacks (RA) and Time Synchronization Attacks (TSA) can replay or alter measurement data and signals.

TSAs and False Data Injection Attacks (FDIAs) are designed to manipulate measurement data such as smart meter readings in order to corrupt state estimation while evading bad-data detection systems. Denial of Service (DoS) attacks, in turn, prevent information from reaching its intended destination. Countermeasures have been proposed to detect these attacks, protect the grid, and help prioritize cybersecurity efforts and resources, since some cyberattacks pose a greater threat than others owing to their potential impact on grid stability and security [162].

Table 9 provides a comparative analysis of several studies focusing on cyberattacks in T&D.

3.6.1. Man-in-the-Middle Attack (MiTM)

A Man-in-the-Middle (MiTM) attack occurs when an attacker intercepts communication between two parties or devices and impersonates one of them, making it seem as though the information is being transmitted normally [

173]. Kulkarni et al. [

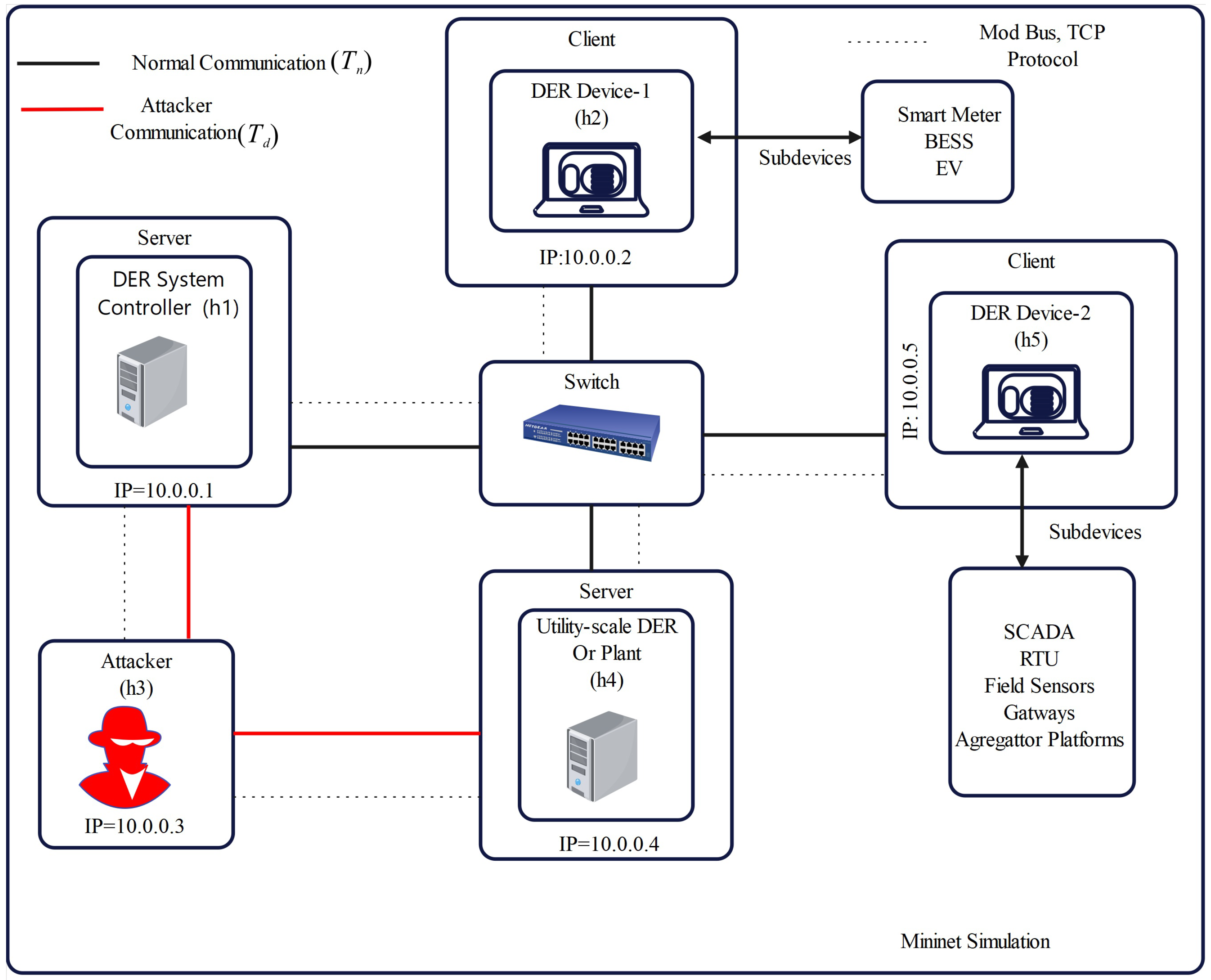

174] investigated the potential security threats related to MiTM attacks in power systems, focusing on vulnerabilities in the Modbus TCP/IP protocol used for communication.

Fritz et al. [175] developed a prototype MiTM attack on a smart-grid emulation platform and demonstrated a technique for compromising the integrity and validity of IEEE Synchrophasor protocol packets. Detecting packet interception is difficult because of the physical distance between the PMU and the Phasor Data Concentrator (PDC). In addition, the PDC must process incoming data with little time available for encryption, authentication, and integrity checks.
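One frequently discussed lightweight integrity check is a message authentication code, which is cheap enough for a PDC that has little time per frame. The sketch below appends an HMAC-SHA256 tag (Python's standard `hmac` module) to a hypothetical, simplified phasor frame and rejects any frame whose tag does not verify. The frame layout, the pre-shared key, and the helper names are assumptions for illustration only. They are not the IEEE C37.118 frame format or its security extensions.

```python
import hashlib
import hmac
import struct
from typing import Optional

KEY = b"hypothetical-pre-shared-key"    # assumption: key distributed out of band
FRAME_FORMAT = "!HQdd"                  # hypothetical layout, NOT the C37.118 frame format
TAG_LEN = hashlib.sha256().digest_size  # 32 bytes


def pack_frame(pmu_id: int, timestamp_us: int, magnitude: float, angle_deg: float) -> bytes:
    """Serialize a simplified phasor measurement (network byte order)."""
    return struct.pack(FRAME_FORMAT, pmu_id, timestamp_us, magnitude, angle_deg)


def sign(frame: bytes, key: bytes = KEY) -> bytes:
    """Append an HMAC-SHA256 tag so the receiver can verify integrity and origin."""
    return frame + hmac.new(key, frame, hashlib.sha256).digest()


def verify(message: bytes, key: bytes = KEY) -> Optional[bytes]:
    """Return the frame if its tag is valid, else None."""
    frame, tag = message[:-TAG_LEN], message[-TAG_LEN:]
    expected = hmac.new(key, frame, hashlib.sha256).digest()
    return frame if hmac.compare_digest(tag, expected) else None


frame = pack_frame(pmu_id=7, timestamp_us=1_700_000_000_000_000, magnitude=1.02, angle_deg=-12.5)
message = sign(frame)

tampered = bytearray(message)
tampered[12] ^= 0x01  # a MiTM flips one bit inside the magnitude field
```

A MAC protects integrity and authenticity but not confidentiality. On its own, it also does not stop an attacker from re-sending an old, correctly tagged frame, which is the replay scenario discussed next.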

3.6.2. Replay Attack (RA) and Time-Delay Attack (TDA)

Accurate timing of control signals is essential for proper system operation. Time-Delay Attacks (TDAs) compromise this by introducing random delays in data transmission and reception, which can disrupt timing-dependent control components [175]. Replay Attacks (RAs), by contrast, capture sensor measurements over a set period and then substitute these previous measurements for real-time data. They may also retransmit previously recorded control signals from the operator to the actuator [176,177]. Both attacks use legitimate past data to manipulate system behavior, driving the system into a state that differs from the intended one. This can disrupt and damage the power grid and lead to major outages [178].
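A basic receiver-side defence against both attacks checks freshness. A replayed message does not advance the sender's sequence counter, and a delayed message arrives later than the control loop's latency budget allows. The sketch assumes that sequence numbers and timestamps are authenticated (for example by a MAC) and that sender and receiver clocks are synchronized. The class and field names and the 50 ms budget are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    seq: int          # sender's monotonically increasing counter
    sent_at: float    # sender timestamp in seconds (assumed authenticated)
    value: float


class FreshnessChecker:
    """Hypothetical receiver-side filter against replayed and delayed measurements."""

    def __init__(self, max_delay_s: float = 0.05):
        self.max_delay_s = max_delay_s
        self.last_seq = -1

    def check(self, m: Measurement, received_at: float) -> str:
        if m.seq <= self.last_seq:
            return "replay"    # counter did not advance: an old message was resent
        if received_at - m.sent_at > self.max_delay_s:
            return "delayed"   # exceeds the latency budget of the control loop
        self.last_seq = m.seq
        return "ok"


checker = FreshnessChecker(max_delay_s=0.05)
m1 = Measurement(seq=1, sent_at=10.000, value=1.01)
results = [
    checker.check(m1, received_at=10.010),                            # fresh
    checker.check(m1, received_at=10.500),                            # replayed copy of m1
    checker.check(Measurement(2, 10.100, 1.00), received_at=10.400),  # held back 300 ms
    checker.check(Measurement(3, 10.200, 0.99), received_at=10.210),  # fresh
]
# results == ["ok", "replay", "delayed", "ok"]
```

The delay check depends on trustworthy clocks. This is exactly why time synchronization attacks, mentioned earlier, are a concern: they can make delayed data look fresh.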

3.6.3. False Data Injection Attack (FDIA)

Smart grids represent a radical departure from conventional electrical networks, aiming to promote interconnection among operators, suppliers, and users to create a tightly linked environment. This greater interconnection introduces significant challenges, particularly concerning consumer data privacy and the safe operation of the grid. A major concern is the vulnerability of real-time system operations, including generator synchrophasor measurements, to cyber threats such as FDIAs.

FDIAs pose a significant threat to smart-grid cybersecurity. First described by Liu et al. [179], an FDIA compromises the state estimation (SE) process at the control center by injecting false values into measurement data. Bad-data detection (BDD) tools, which test the l2-norm of the measurement residual, are employed to identify inconsistencies, but attack vectors can be designed to evade them. By injecting errors that remain consistent with the system model, attackers can stealthily bypass BDD tools.
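The reason a carefully built attack evades residual-based BDD can be shown with the linearized (DC) measurement model $z = Hx + e$. If the attack vector has the form $a = Hc$ for some state shift $c$, the least-squares estimate shifts by exactly $c$ and the residual $z - H\hat{x}$ does not change, so the l2-norm test cannot see it. The NumPy sketch below uses a random hypothetical Jacobian $H$ and assumes the attacker knows $H$, that is, the grid topology and parameters. A naive single-meter error, by contrast, inflates the residual.

```python
import numpy as np


def estimate_state(H, z):
    """Unweighted least-squares state estimate x_hat = argmin ||z - H x||_2."""
    x_hat, *_ = np.linalg.lstsq(H, z, rcond=None)
    return x_hat


def residual_norm(H, z):
    """l2-norm of the measurement residual used by bad-data detection."""
    return float(np.linalg.norm(z - H @ estimate_state(H, z)))


rng = np.random.default_rng(0)
H = rng.normal(size=(8, 3))                # hypothetical Jacobian: 8 measurements, 3 states
x_true = np.array([0.10, -0.05, 0.02])     # hypothetical bus voltage angles (rad)
z = H @ x_true + rng.normal(0.0, 0.01, size=8)

naive = z.copy()
naive[0] += 0.5                            # arbitrary error on a single meter
c = np.array([0.0, 0.2, 0.0])              # state shift the attacker wants to induce
stealthy = z + H @ c                       # attack vector a = H c

r_clean, r_naive, r_stealthy = (residual_norm(H, m) for m in (z, naive, stealthy))
shift = estimate_state(H, stealthy) - estimate_state(H, z)
```

Here `r_stealthy` equals `r_clean` up to floating-point error, while the estimated state has moved by `c`.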

FDIAs are stealthy because the attack vector modifies the measurement data only subtly. Attackers are typically modeled either as requiring knowledge of the grid topology to launch an attack (FC-attackers) or as relying solely on observed data without topology information. FDIA behavior is also affected by application-specific vulnerabilities. For example, open communication links make Wireless Sensor Networks (WSNs) more susceptible. In power systems, attacks are more difficult to execute because real grid parameters are complex to model. Attackers may conduct broad or targeted attacks depending on their objectives and the system information available to them. Crucially, these attacks may threaten the integrity, availability, or confidentiality of the system. One example is the injection of false meter readings in the AMI, which can lead to incorrect system operation, service outages, and leakage of private customer data [180].

3.6.4. Load Redistribution Attack (LRA)

LRA is a type of FDIA proposed by Yuan et al. [181] that aims to disrupt the operation of the power system by targeting the Economic Dispatch (ED) process. ED aims to minimize generation costs and optimize power distribution to meet load demands. When an LRA manipulates the estimated system state, it can lead to incorrect ED decisions, resulting in grid instability and inefficiency.
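An LRA is typically formulated so that the falsified load measurements leave the total load unchanged, which means they do not raise a generation/load mismatch. Each falsified change is also kept within a fraction τ of the true bus load. This is a common formulation in the LRA literature, but the exact constraint set used in [181] is an assumption here. The sketch builds such a vector for a hypothetical three-bus case. It then uses a hypothetical power transfer distribution factor (PTDF) matrix to show how much the control center's view of line flows shifts, which is what misleads ED.

```python
import numpy as np


def redistribute_load(loads, shift_from, shift_to, tau=0.5):
    """Hypothetical helper: build an LRA load-measurement attack vector.

    Moves load from one bus to another so that the total load is unchanged
    (sum of changes = 0) and each change stays within tau times the bus load.
    """
    loads = np.asarray(loads, dtype=float)
    amount = min(tau * loads[shift_from], tau * loads[shift_to])
    delta = np.zeros_like(loads)
    delta[shift_from] -= amount
    delta[shift_to] += amount
    return delta


loads = np.array([120.0, 80.0, 60.0])     # hypothetical bus loads (MW)
tau = 0.5
delta = redistribute_load(loads, shift_from=0, shift_to=2, tau=tau)

# Hypothetical PTDF matrix: sensitivity of two line flows to bus net injections.
ptdf = np.array([[0.6, 0.2, -0.3],
                 [0.1, -0.4, 0.5]])
# Loads are withdrawals, so the believed net injections change by -delta.
believed_flow_change = ptdf @ (-delta)
```

Because total load is preserved, ED still schedules the same total generation. It distributes that generation for a load pattern that does not exist, which can raise cost (immediate effect) or push real line flows toward their limits (delayed effect).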

LRAs can pursue one of two objectives: immediate or delayed effects. An immediate-effect attack aims to significantly increase the operating cost right after the attack, whereas a delayed-effect attack aims to gradually overload power lines, which can ultimately cause physical damage to the power grid.

3.6.5. Denial of Service (DoS)

In the smart grid, there are numerous measurement devices, such as smart meters (SMs), data aggregators, Phasor Measurement Units (PMUs), Remote Terminal Units (RTUs), Intelligent Electronic Devices (IEDs), and Programmable Logic Controllers (PLCs). These devices exhibit vulnerabilities that can be exploited for Denial of Service (DoS) attacks.

A DoS attack in a power system makes measurement data unavailable to both users and the control system, disrupting communication and causing network instability. The attack also hinders the smart grid's ability to capture events as they occur. A DoS attack can be launched by flooding a device or communication channel with high volumes of data, or by exploiting system weaknesses, for example through jamming or routing vulnerabilities [182,183,184]. A puppet attack is a newer form of DoS attack that blocks communication in the Advanced Metering Infrastructure (AMI) network [185]. The attacker selects normal nodes to act as puppets and sends attack data through them. As the attacker keeps sending more data, the network becomes overloaded and communication is blocked.
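For the flooding case, a simple first line of defence at a data aggregator is a per-sender rate monitor over a sliding time window. The window length and message limit below are hypothetical tuning parameters. The sketch does not address jamming or routing attacks, which need different signals. Because puppet attacks send traffic through legitimate nodes, a rate alarm there flags the puppet rather than the true attacker.

```python
from collections import defaultdict, deque


class RateMonitor:
    """Flags senders whose message rate exceeds a limit within a sliding window.

    The window length and limit are hypothetical tuning parameters.
    """

    def __init__(self, window_s: float = 1.0, max_msgs: int = 20):
        self.window_s = window_s
        self.max_msgs = max_msgs
        self.history = defaultdict(deque)

    def observe(self, sender: str, t: float) -> bool:
        q = self.history[sender]
        q.append(t)
        while q and t - q[0] > self.window_s:
            q.popleft()                    # drop timestamps outside the window
        return len(q) > self.max_msgs      # True = suspected flooding


mon = RateMonitor(window_s=1.0, max_msgs=20)
normal = [mon.observe("meter-A", float(s)) for s in range(10)]    # 1 message per second
flood = [mon.observe("meter-B", 0.005 * k) for k in range(100)]   # 200 messages per second
```

Here `meter-A` never triggers an alarm, while `meter-B` is flagged from its 21st message onward.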

4. Energy Utilization

The objective of energy T&D models is to transfer energy effectively from generation plants to consumers. Energy utilization has become a critical concern due to the pressing need to reduce consumption, combat climate change, and enhance the sustainability of energy systems. The oil and gas industry has long driven global economic growth, but national economies are increasingly tied to global energy demand. Maximizing energy efficiency is therefore necessary to reconcile economic growth with environmental protection. Buildings, which account for about 34% of global energy consumption and carbon emissions, are a major source of energy use and therefore a priority for efficiency improvements, as discussed in [186]. That study, however, did not use a dedicated model to examine energy utilization. In contrast, the studies in [187,188] used optimization models to quantify the impacts of energy use and utilization.

Conventional building energy management strategies have traditionally relied on fixed power grids and conventional energy systems, which are often inefficient, costly, and environmentally harmful [189,190,191,192]. Over the last few years, there has been an increasing shift toward smarter energy management systems through the integration of renewable energy sources (RES) into built environments [193,194,195,196]. These systems, which include solar photovoltaic (PV) systems, wind turbines (WTs), and energy-storage systems (ESS), aim to reduce dependence on the grid, lower energy costs, and minimize buildings' carbon footprints [197,198,199].

The concept of energy utilization optimization in buildings encompasses a diverse range of strategies, including demand-side management (DSM), smart grids, energy storage, and real-time monitoring of consumption patterns [200,201,202,203,204]. These studies highlight that optimization techniques such as genetic algorithms and demand-side strategies are pivotal in balancing energy supply and demand, managing uncertainty, and coordinating resources. Real-time tracking of energy utilization enables adaptive building operations that optimize energy use, minimize losses, and align consumption with periods of high renewable energy production [205,206,207]. These studies also emphasize the evolution of solar technologies, from first-generation silicon-based cells to advanced PV/T and quantum dot cells, aimed at improving efficiency and lowering costs. Such developments, supported by policy incentives and innovation, are critical for maximizing solar energy utilization and achieving global decarbonization goals.

As energy conservation becomes increasingly urgent, combining smart meters with building energy management systems (BEMS) offers considerable advantages [191,208]. These systems can automate energy optimization processes, respond to changes in energy generation, and enhance overall efficiency. Smart meters facilitate flexible pricing, enable effective peak demand management, and improve grid stability. They also empower occupants to monitor and control their energy usage. At the same time, rapid cost reductions in solar, wind, and battery-storage technologies, along with climate policies and carbon pricing, have accelerated a global shift toward electricity-dominated energy systems [209,210]. Recent research on wind energy emphasizes optimizing turbine performance, control systems, and offshore infrastructure to enhance efficiency and reliability and to support integration into future hydrogen economies.

This section discusses the importance of smart meters in maximizing energy utilization in buildings, exploring how they increase energy efficiency, decrease operational expenses, and facilitate the integration of renewable sources. By investigating new developments and trends in smart meter technology, this section provides a comprehensive overview of how these systems transform energy use in the built environment and contribute to the broader goal of sustainability.

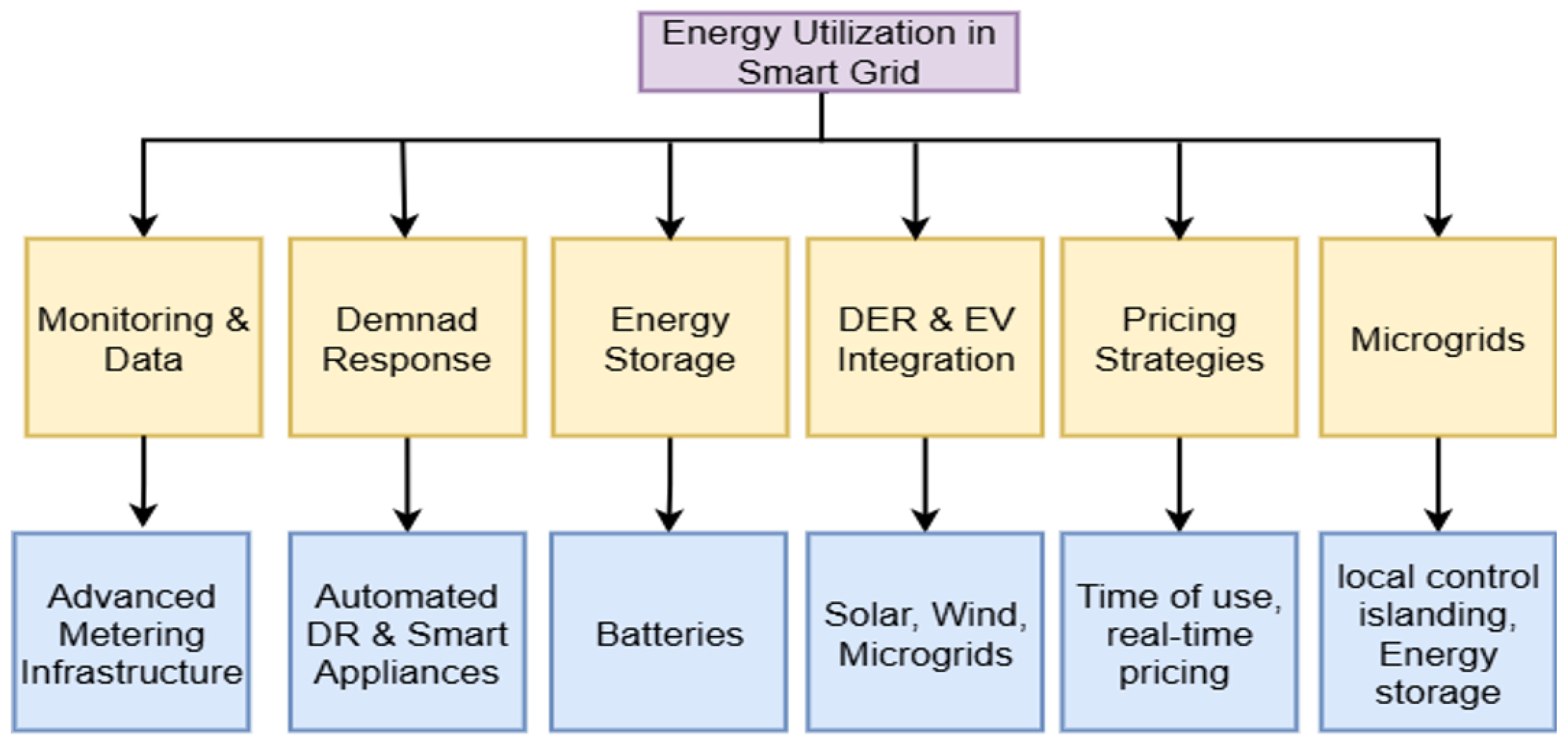

Figure 4 provides a complete overview of energy utilization in the smart grid.

4.1. Technologies for Energy Utilization in Smart Grid

The integration of smart grids has transformed energy systems by introducing advanced technologies designed to enhance efficiency, reliability, and sustainability in energy use. For example, policy-driven demand-side management in industrial sectors further improves energy efficiency and supports broader sustainability goals [68,211]. Smart grids are characterized by their ability to provide real-time monitoring, data analytics, and automated control, which collectively enable better energy management and optimization. As discussed in [212,213], demand-side management plays a pivotal role in enhancing energy efficiency, reducing emissions, and facilitating smart-grid integration through optimized consumption strategies and hybrid algorithms.

Several major technologies are key to the effective operation of smart grids [214,215,216]. These include renewable energy integration, Advanced Metering Infrastructure (AMI), advances in energy-storage technologies, and demand-side management tools. The key technologies that enable optimal energy use within smart grids, and how they affect efficient grid operation, are presented in Table 10.

4.1.1. Advanced Metering Infrastructure (AMI)

Smart grids rely on Advanced Metering Infrastructure (AMI), an integrated system of smart meters, communication networks, and data management platforms. AMI systems gather real-time data on energy usage, voltage, and power quality for analysis and monitoring by utilities. They support self-configuration, algorithm integration, and scalable deployment, paving the way for intelligent energy market participation and system resilience [215,217].

These systems also enable utilities to monitor energy consumption in detail, providing valuable insights into usage patterns. AMI helps facilities gather real-time energy usage data, enabling instant access for both consumers and utilities. Furthermore, AMI facilitates two-way communication that supports remote meter control and troubleshooting for both consumers and utilities.
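The kind of usage-pattern insight that AMI interval data supports can be illustrated with two basic aggregations: energy per hour of day, and peak demand, meaning the highest average power over a single metering interval. The 15-minute interval and the reading format `(minute_of_day, kWh)` are hypothetical choices for this sketch. Real AMI head-end systems define their own data models.

```python
from collections import defaultdict


def hourly_energy(reads):
    """Sum (minute_of_day, kWh) interval reads into energy per hour of day."""
    hourly = defaultdict(float)
    for minute, kwh in reads:
        hourly[minute // 60] += kwh
    return dict(hourly)


def peak_demand_kw(reads, interval_min=15):
    """Highest average power over a single interval: energy divided by duration."""
    return max(kwh / (interval_min / 60.0) for _, kwh in reads)


# Hypothetical 15-minute reads from one smart meter: (minute of day, kWh in interval)
reads = [(0, 0.25), (15, 0.25), (30, 0.30), (45, 0.20), (60, 0.50), (75, 0.75)]
summary = hourly_energy(reads)
peak = peak_demand_kw(reads)
```

For these readings, hour 0 totals 1.0 kWh, hour 1 totals 1.25 kWh, and the peak demand is 3.0 kW (0.75 kWh over a quarter hour).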

Table 11 presents a comparative analysis of several studies and their key findings.

4.1.2. Renewable Energy Integration

Smart grids are crucial for integrating renewable energy systems, such as solar photovoltaics (PV), wind turbines (WTs), and hydropower, to overcome the constraints of conventional fossil-fuel-based energy systems. Advanced optimization techniques and smart-grid technologies are key to managing renewable variability and ensuring reliable, efficient, and sustainable energy supply [222,223].

Integrating a large number of renewable energy sources into the grid introduces variability and uncertainty. Smart grids handle this through advanced forecasting, energy storage, and demand–response techniques. The integration of PVs and electric vehicles (EVs) requires coordinated operation models and simulation-based techniques to mitigate grid instability, optimize load scheduling, and reduce energy costs. In parallel, modern power systems leverage AI, machine learning, and dynamic modeling to enhance grid resilience, enabling real-time control and supporting the seamless integration of renewable energy sources [224,225]. Moreover, intelligent grids allow for the efficient use of distributed energy resources (DERs), including rooftop solar panels and small-scale wind turbines, to provide local energy. Smart grids also employ weather forecasting, data mining, and machine learning to predict the availability of renewable energy, thereby streamlining grid operations, especially during periods of fluctuating energy production [226,227].

Table 12 presents a comparative analysis of several studies, highlighting their limitations.

4.1.3. Energy-Storage Systems (ESS)

One of the key elements of smart grids is energy-storage systems (ESS), which include batteries and pumped hydro storage facilities. These systems store surplus energy generated by renewable sources during periods of high production and release it during periods of low generation to maintain the balance between supply and demand [233]. Accurate load forecasting using advanced deep learning models, such as the IntDEM framework, enables optimized charging and discharging schedules for ESS in IoT-enabled smart grids [234].

ESS ensures grid stability by storing energy during low-demand periods and discharging it during peak demand, thus preventing blackouts and maintaining a constant supply. By discharging stored energy during peak load periods, ESS helps reduce the need for costly peaking power plants and lower electricity prices [235]. An integrated demand–response framework that combines medium-term forecasting with an optimized battery ESS can reduce costs and emissions while improving energy efficiency [236]. Furthermore, ESS enables greater integration of intermittent renewable energy sources by compensating for fluctuations in generation, thereby ensuring a continuous and reliable energy supply.
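The peak-shaving role of ESS can be sketched with a simple rule-based dispatch. The battery discharges when load exceeds a threshold and charges with spare headroom below it, respecting its power rating and energy capacity. This is a greedy heuristic, not the optimized scheduling of the cited frameworks, and it ignores conversion losses. The load profile, threshold, and battery size are hypothetical.

```python
def peak_shave(load_kw, threshold_kw, capacity_kwh, power_kw, soc_kwh=0.0, dt_h=1.0):
    """Rule-based battery dispatch: discharge above a threshold, charge below it.

    A greedy sketch, not an optimal scheduler; conversion losses are ignored.
    Returns the net load seen by the grid and the state of charge per step.
    """
    net_kw, soc_trace = [], []
    for load in load_kw:
        if load > threshold_kw:
            # Discharge only what is needed, limited by power rating and stored energy.
            p = min(power_kw, load - threshold_kw, soc_kwh / dt_h)
            soc_kwh -= p * dt_h
            net_kw.append(load - p)
        else:
            # Charge with the spare headroom, limited by power rating and free capacity.
            p = min(power_kw, threshold_kw - load, (capacity_kwh - soc_kwh) / dt_h)
            soc_kwh += p * dt_h
            net_kw.append(load + p)
        soc_trace.append(soc_kwh)
    return net_kw, soc_trace


# Hypothetical hourly building load (kW) with an evening peak.
load = [40, 35, 30, 45, 70, 90, 85, 60]
net, soc = peak_shave(load, threshold_kw=60, capacity_kwh=60, power_kw=30)
```

The grid-side peak falls from 90 kW to 65 kW. The battery empties before the peak ends, which illustrates why forecast-driven schedules, such as those enabled by the load forecasting discussed above, can outperform a fixed threshold rule.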

Table 13 compares several studies in the domain of energy utilization by highlighting their key findings and limitations.

4.1.4. Demand-Side Management (DSM)

Demand-side management (DSM) refers to strategies employed to influence consumers' energy usage in order to improve efficiency and reduce grid stress. DSM systems enable utilities to actively manage consumption patterns through incentives, dynamic pricing, and direct control of appliances. For instance, one study used conceptual frameworks like intersectionality and social license to examine social barriers to DSM adoption, while another employed statistical and machine learning models to optimize DSM implementation through dynamic pricing and load-control strategies [242,243].

Smart grids enable dynamic pricing schemes that encourage energy use during off-peak periods, thereby reducing peak load demand and promoting a more balanced energy distribution [244]. Furthermore, appliances equipped with smart technologies, such as smart thermostats, washing machines, and refrigerators, can be controlled remotely to optimize energy consumption based on grid conditions and pricing signals. The key findings and limitations of several previous studies are presented in Table 14.
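Price-responsive appliance control can be illustrated with a small scheduler. Given an hourly tariff, it picks the start hour that minimizes the cost of a flexible appliance run, such as a washing machine cycle, optionally subject to the earliest time the user allows. The time-of-use tariff, the flat 1 kW appliance, and the function name are hypothetical.

```python
def cheapest_start(prices, duration, earliest=0):
    """Return (start_hour, cost) of the cheapest contiguous run of `duration` hours.

    Assumes a flat 1 kW appliance, so cost equals the sum of hourly prices.
    """
    best = None
    for start in range(earliest, len(prices) - duration + 1):
        cost = sum(prices[start:start + duration])
        if best is None or cost < best[1]:
            best = (start, cost)
    return best


# Hypothetical time-of-use tariff in cents/kWh for hours 0-23.
prices = [10] * 7 + [18] * 10 + [35] * 5 + [12] * 2
anytime = cheapest_start(prices, duration=2)
after_5pm = cheapest_start(prices, duration=2, earliest=17)
```

Without constraints, the run moves to hour 0 (20 cents). If it cannot start before 17:00, it moves to hour 22 (24 cents) instead of the 70-cent evening peak. This is the load-shifting effect that dynamic pricing aims to produce.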

4.1.5. Artificial Intelligence and Machine Learning

Artificial intelligence (AI) and machine learning (ML) play an increasingly important role in smart-grid technology by enabling advanced analytics, real-time decision-making, and optimization. For example, an AI-driven framework using Deep Reinforcement Learning, blockchain, and federated learning has been shown to enhance virtual power plant and smart-grid performance by optimizing renewable integration and ensuring secure, scalable operation [250,251].

ML algorithms can predict energy demand patterns, renewable energy generation, and potential faults in the grid, thereby allowing for proactive management and reduced downtime. Furthermore, AI-powered systems optimize energy dispatch from various resources, including renewable energy, storage, and conventional power plants, ensuring the most efficient and cost-effective energy mix. AI algorithms can analyze sensor data to detect anomalies, predict equipment failures, and initiate preventive maintenance, thereby improving grid reliability [252].

A comparison of several studies highlights the importance of AI in the energy system, as presented in Table 15.

4.2. Cyber Attacks on Energy Utilization in Smart Grids

The integration of advanced technologies in smart grids has transformed energy management into a more efficient, sustainable, and adaptive system. Nevertheless, this transformation has introduced new vulnerabilities, especially with respect to the security of digital infrastructure. As detailed in the comprehensive reviews [258,259], the rising threat of cyberattacks is a major challenge due to the widespread integration of IoT in modern energy systems. These studies examine smart-grid architecture, categorize cyber threats, and review past attack incidents. Because smart grids rely on sophisticated communication networks, data management systems, and interconnected devices, they are prime targets for cybercriminals who exploit potential flaws.

The effects of such cyberattacks on energy systems can be devastating, leading to shortages of energy supply, misrepresentation of consumption data, loss of revenue, and threats to national security. This section discusses different types of cyber threats to smart grids, the severity of these attacks, and strategies for risk mitigation.

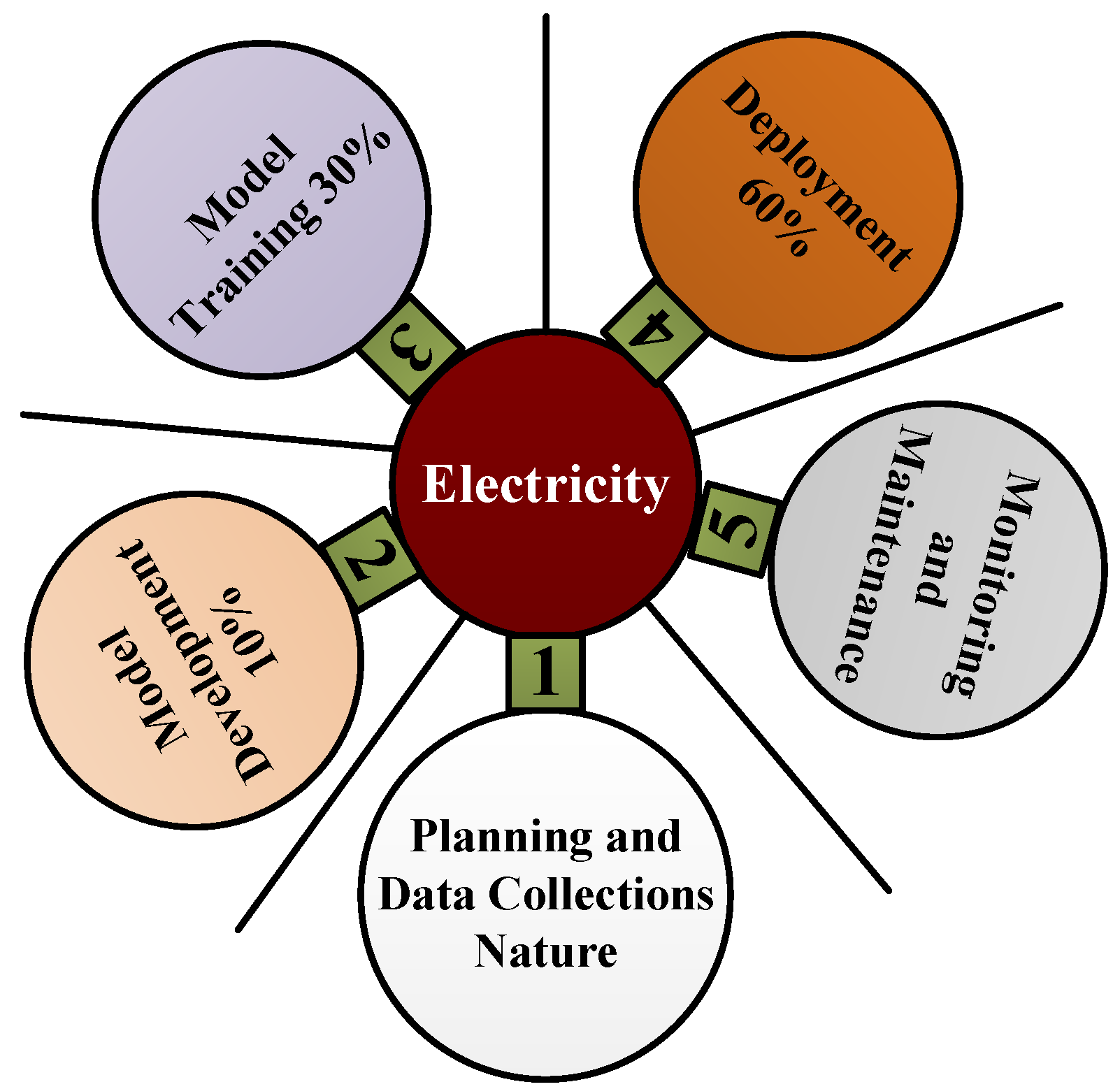

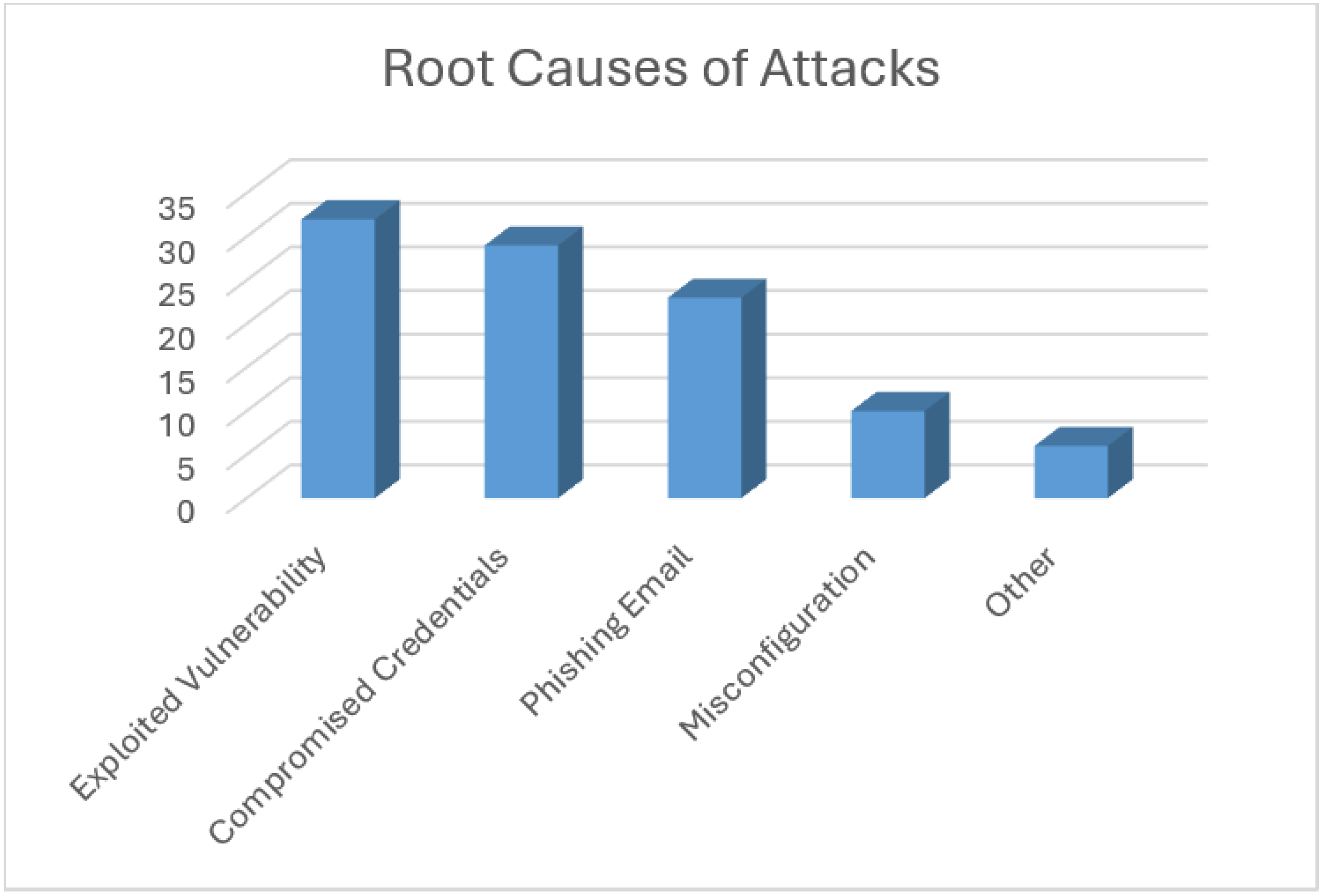

Figure 5 illustrates electricity consumption throughout the life cycle of an AI model. For example, when AI models are implemented in the energy market, electricity is consumed during model development, training, and deployment. These values are detailed in [253]. Meanwhile, the energy sector continues to be heavily affected by ransomware attacks, with critical infrastructure facing increasing vulnerabilities. According to a report by Sophos [260], the primary root causes of these attacks were exploited vulnerabilities (35%), compromised credentials (32%), and phishing emails (27%), as shown in Figure 6, while misconfiguration and other causes contributed marginally.

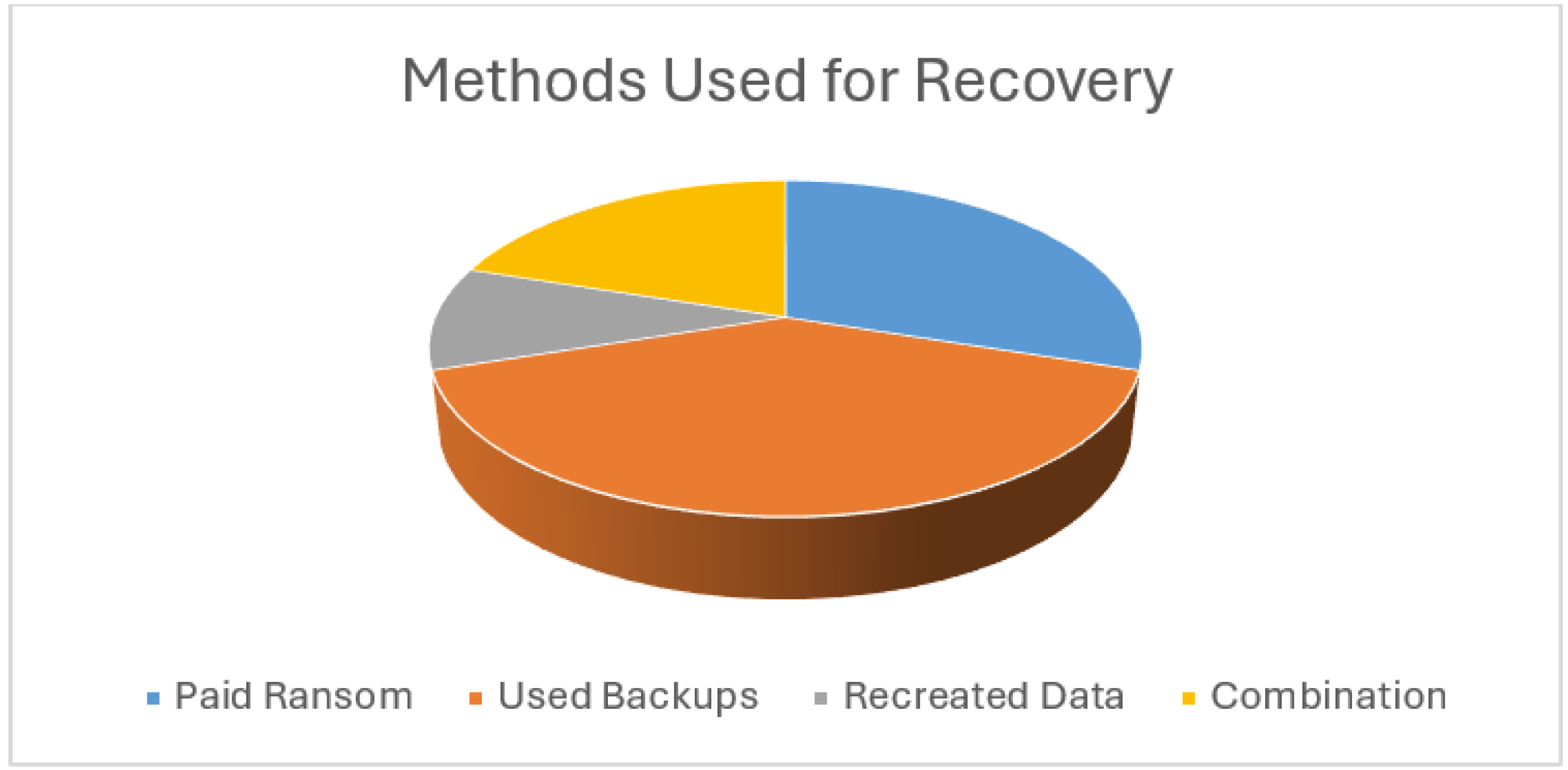

Figure 7 highlights the recovery methods, showing a strong reliance on backups, with the majority of organizations using backups to restore data after an attack. A smaller fraction reported paying ransom, recreating data, or using a combination of these methods.

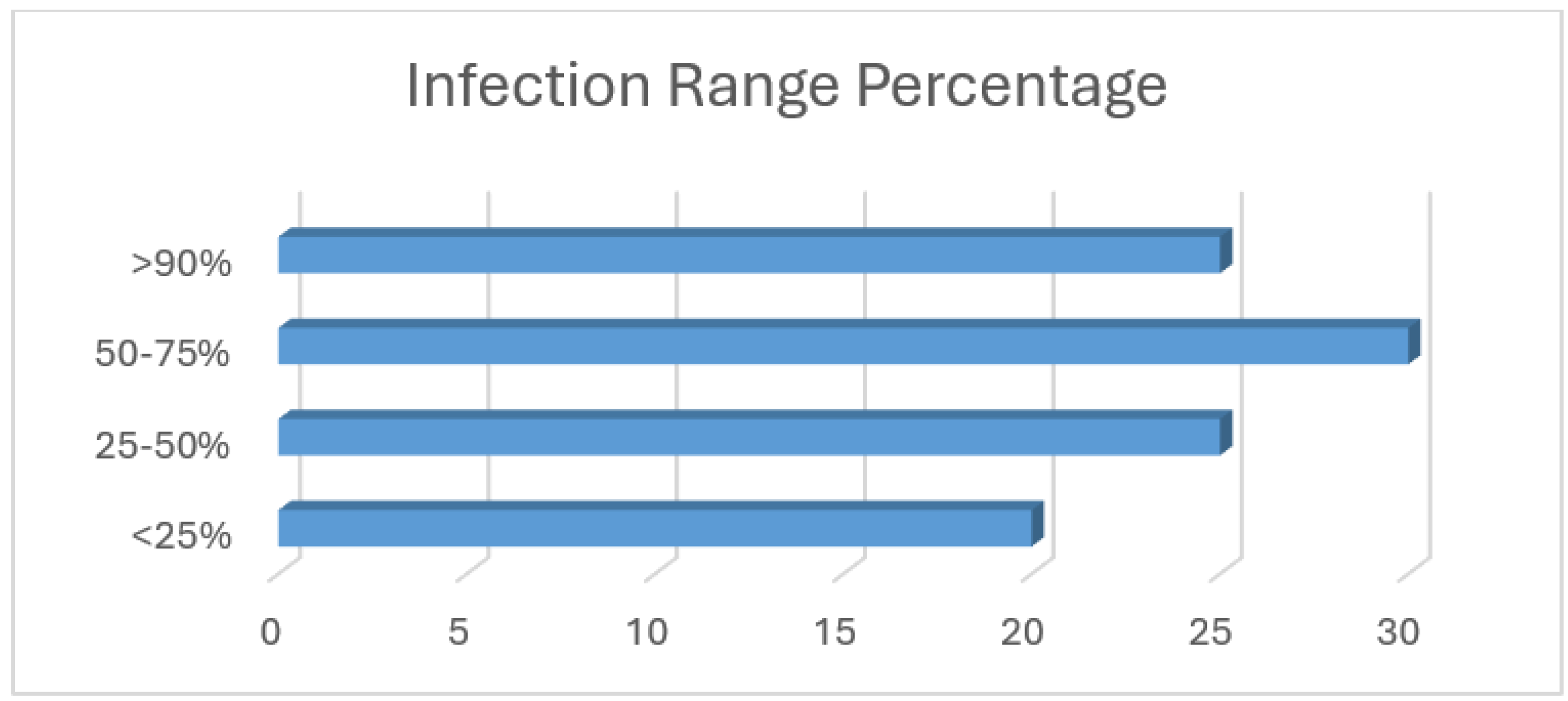

Furthermore, an infection range analysis, shown in Figure 8, revealed that in 30% of incidents, over 75% of the organization's devices were impacted, demonstrating the devastating reach of such attacks. Approximately 22% of cases involved infection rates below 25%, suggesting some success in containment strategies.

4.3. Types of Cyberattacks on Energy Systems

Energy grids are typically targeted by cyberattacks that exploit the digital infrastructure supporting the generation, distribution, and consumption of energy. Such attacks vary in size, complexity, and purpose and can be classified into the following categories.

Denial of Service (DoS) Attacks

In a DoS attack on an energy management system, an overload of traffic disrupts its services. In the smart grid, a DoS attack can target communication networks, delaying or completely shutting down energy-distribution systems. The studies in [66,261] address cybersecurity in energy systems using advanced models. The first proposes a resilient consensus control strategy with embedded reduced-order dynamic gain filters to identify DoS attacks in multi-agent systems, thereby improving energy efficiency and resilience. The second presents a new DDoS attack model for electric vehicle charging stations based on a time-variant Poisson process and Ornstein–Uhlenbeck dynamics, although it does not provide specific datasets or quantitative accuracy metrics.

4.4. False Data Injection Attack (FDIA)

In a False Data Injection Attack (FDIA), an adversary selectively manipulates measurement data or sends misleading information to the grid's communication system. This is particularly dangerous in IoT-driven smart grids, where many sensors and interconnected devices constantly monitor and transmit data. These include AMI gateways, smart meters, distributed energy resource (DER) controllers, smart inverters, Phasor Measurement Units (PMUs), Remote Terminal Units (RTUs), smart thermostats, and grid-connected sensors. By exploiting vulnerabilities in these IoT devices and their messages, attackers can induce errors in state estimation, tamper with electricity consumption reports, conceal illegal activity, interfere with automatic control signals, or trigger false alarms at the control center. Within smart grids, FDIAs can destabilize the grid, improperly trigger demand–response functions, alter dynamic prices, and cause significant losses for both utilities and consumers.

The sophisticated nature of FDIAs makes them difficult to detect, as the injected data can closely mimic legitimate measurements and bypass common anomaly detection [262]. The study in [263] briefly highlights the importance and outcomes of FDIAs and the associated challenges, which researchers have begun to address. For example, one study [264] proposed a multimodal deep learning model for the identification and classification of FDIAs under varied power-system topologies. The model combines a variational graph auto-encoder (VGAE), a temporal convolutional network (TCN), and a gated recurrent unit (GRU). Tested on the IEEE 14-bus and IEEE 118-bus systems, it achieved an accuracy of 91.2%. To reduce the impact of FDIAs, a CNN-based model incorporating noise elimination and state estimation was implemented in [265]. In [266,267], the researchers proposed a model based on variational mode decomposition (VMD), Fast Independent Component Analysis (FastICA), and an XGBoost classifier optimized with Particle Swarm Optimization (PSO). When tested on the IEEE 14-bus system, this model achieved an accuracy of 99.84%. FDIAs are especially hard to identify when attackers exploit flaws in communication networks. A common technique used to facilitate such data manipulation is the Man-in-the-Middle (MiTM) attack.

4.4.1. Man-in-the-Middle Attack (MiTM)

In a MiTM attack, an attacker secretly intercepts the traffic between two parties and may alter the data. By inserting themselves into the communication process, attackers can compromise the confidentiality and integrity of the information. MiTM attacks pose a significant threat to IoT-based smart grids, where data exchanges must be secure and trustworthy to ensure reliable operation. Researchers have proposed several countermeasures to these threats. For example, Ref. [268] proposed an improved intrusion detection system (IDS) that integrates machine-learning-based detection, a Quick Random Disturbance Algorithm, and a feature-selection technique. The model was validated on the IEEE 39-bus and IEEE 118-bus systems with an accuracy of 99.99%. In a different study, Ref. [269] proposed a BP-based hybrid deep learning model (AEXB) that integrates an autoencoder with XGBoost to detect and prevent MiTM attacks in real time. Similarly, in [270], a hybrid deep learning algorithm combining a Convolutional Neural Network (CNN) and Long Short-Term Memory (LSTM) was developed and tested on network traffic data, achieving an accuracy of 97.85%.

4.4.2. Ransomware Attack

Besides MiTM attacks and FDIAs, ransomware attacks have emerged as a significant cybersecurity threat in smart grids [271]. Ransomware is malicious software that encrypts vital system information to extort money from victims in exchange for data recovery. Because ransomware spreads rapidly, timely detection is essential, and researchers have proposed diverse solutions. For example, Ref. [272] presented a CNN-based ransomware detection model that converts binary executable files into 2D grayscale images; this approach achieved an accuracy of 96.22%. In [273], the authors presented a twofold taxonomy of ransomware in cyber–physical systems (CPS), discussing infection vectors, targets, and objectives. They also analyzed real-life ransomware cases against CPS to identify major security weaknesses. In a separate study, Ref. [274] developed a deep-learning-based ransomware detection framework for SCADA-automated vehicle charging stations. The authors evaluated three deep learning models, namely a deep neural network (DNN), a convolutional neural network (CNN), and a long short-term memory (LSTM) network, and reported an average accuracy of 98% for their approach.
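The preprocessing step behind image-based detectors such as the one in [272] treats each byte of a binary as one grayscale pixel and wraps the byte stream into rows of fixed width. The sketch below implements this conversion with NumPy. The fixed width of 256 and zero-padding of the last row are assumptions; published pipelines vary in how they choose the width and resize images before feeding a CNN.

```python
import numpy as np


def bytes_to_grayscale(data: bytes, width: int = 256) -> np.ndarray:
    """Reshape raw file bytes into a 2D uint8 image, zero-padding the last row."""
    pixels = np.frombuffer(data, dtype=np.uint8)
    rows = -(-len(pixels) // width)          # ceiling division
    image = np.zeros(rows * width, dtype=np.uint8)
    image[: len(pixels)] = pixels
    return image.reshape(rows, width)


# Stand-in for Path("sample.exe").read_bytes(); a real pipeline would read actual binaries.
sample = bytes(range(256)) * 3 + b"\x01\x02"
img = bytes_to_grayscale(sample, width=256)
```

The resulting array (4 × 256 here) can be resized and passed to any image classifier. Structural regions of an executable, such as code and data sections, tend to produce visually distinct textures, which is what the CNN learns to exploit.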

4.4.3. Advanced Persistent Threats (APTs)

APTs are long-term, strategic attacks intended to gain unauthorized entry into critical energy infrastructure. The actors are typically sophisticated and often state-sponsored. APTs can be difficult to detect because they are covert and designed to gather intelligence or cause destruction over an extended period. They may tamper with energy grid operations, steal secrets, or disrupt renewable energy systems [275,276]. These studies also investigate the growing threat of APTs in energy systems, using the MITRE ATT&CK framework to identify weaknesses in current mitigation strategies.

Table 16 presents a comparative analysis of several studies, identifying the key findings and limitations.

4.5. Potential Impact of Cyberattacks on Energy Utilization

Cyberattacks on smart grids can have significant consequences, not only for energy distribution but also for public safety, financial markets, and the environment. Potential effects include the following:

1. Grid Disruptions and Blackouts: Cyberattacks on critical infrastructure can lead to widespread energy outages. A successful attack on the controllers of power stations or substations can result in blackouts, leaving millions of people without power and disrupting daily life. A coordinated attack on multiple grid nodes can make recovery efforts take days or even weeks.

2. Financial Losses: Cyberattacks can result in significant financial losses for energy utilities and consumers. Downtime, system recovery costs, and legal liabilities can be extremely expensive for utilities. Data manipulation can lead to unnecessarily high bills for consumers, who may even face penalties for alleged over-utilization of energy due to tampered smart meters.

3. Energy Efficiency and Sustainability Setbacks: Cyberattacks can sabotage energy-efficiency programs by corrupting the data held in smart meters and grid control systems. For example, tampering with energy consumption data might lead to inefficient demand–response programs, undermining the goals of energy use optimization and carbon reduction.

4. Loss of Consumer Trust: A cybersecurity incident can erode public confidence in the energy system and the smart grid. When consumers believe that their energy supply or personal information is at risk, they may be unwilling to accept smart meters or participate in energy-efficiency programs. This lack of public trust can make smart-grid projects significantly harder to implement.

5. Energy Routing and Energy Internet

Energy Internet (EI) is an emerging innovation that enables peer-to-peer (P2P) energy trading and facilitates highly efficient energy distribution. According to [286], EI can be understood as a smart-grid implementation in which energy is routed through the network in a manner analogous to data packets on the Internet. The study in [287] highlights the advantages of EI, noting that it is open, robust, and reliable. Although the concept of EI is widely discussed in China and the United States, analogous frameworks exist elsewhere under different names. In Japan, for example, the EI concept is commonly referred to as the Digital Grid.

The Digital Grid in Japan represents a fully decentralized energy system [

288], enabling energy transactions for P2P, stabilizing and reinforcing the grid, and supporting new on-demand energy markets. Routers within the Digital Grid facilitate the transfer of energy from point to point, analogous to the movement of data packets on the Internet. This innovation is transforming energy delivery and market dynamics, allowing for more versatile and efficient energy flow. The EI architecture can be structured into seven layers, as illustrated in

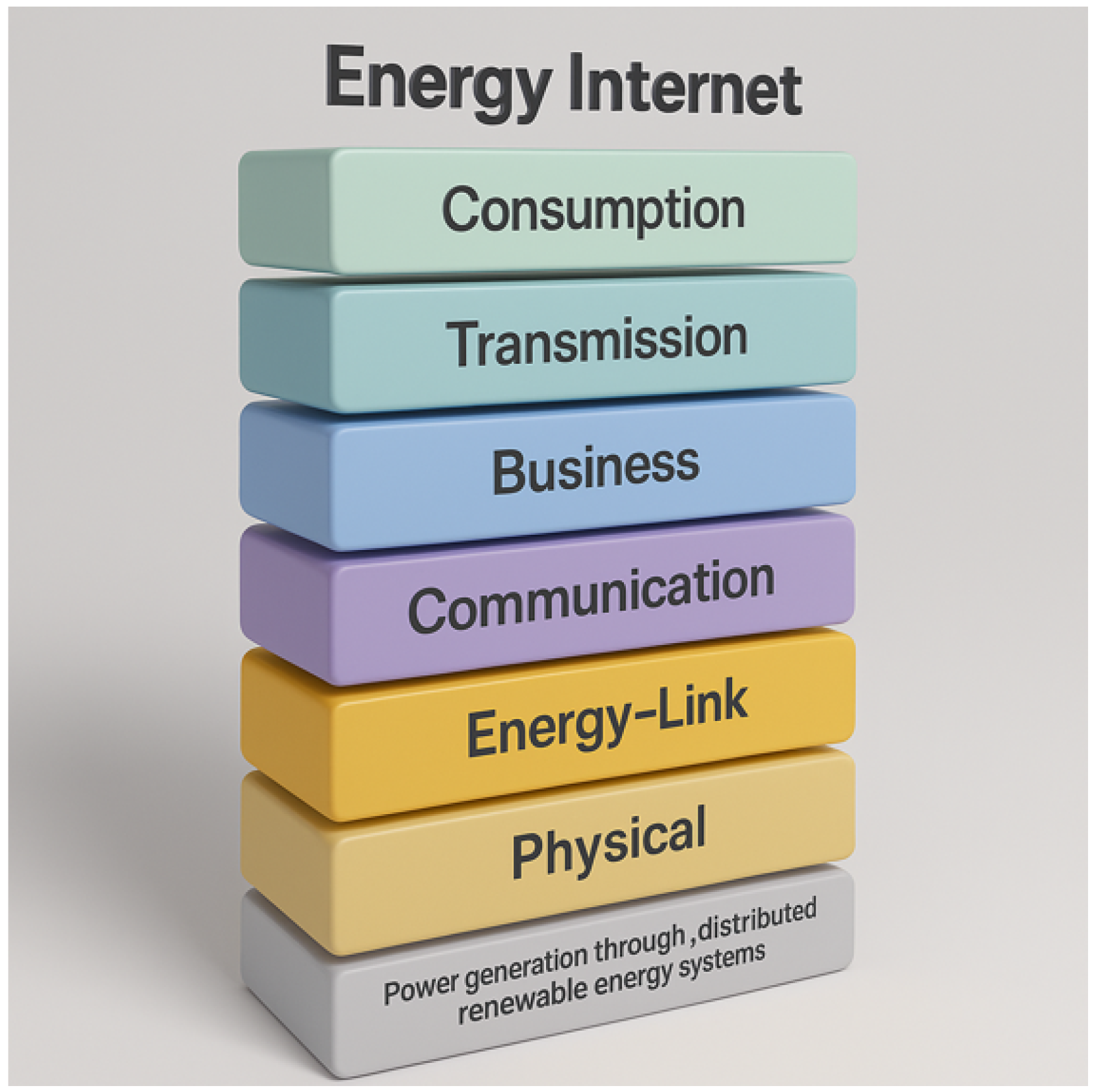

Figure 9, which are based on the Open Systems Interconnection (OSI) model.

The foundational layer is the physical layer, which consists of energy cells. The energy link layer interconnects these energy cells to enable P2P trading within an Energy Intranet. Energy Routers (ERs) play a central role in establishing and maintaining these connections. The communication layer ensures seamless information exchange among the system, stakeholders, and other entities. The business layer manages financial transactions, while the transmission layer facilitates the transfer of power between various Energy Intranets. Finally, the consumption layer regulates energy usage in the energy cells.

Recent research, such as [289], describes the EI as a transformative network system comprising three primary layers: the physical, information, and value layers. The physical layer interconnects diverse energy carriers, including heat, electricity, gas, and cooling, to facilitate demand–response and energy sharing among distributed energy resources (DERs). The information layer collects data from the physical layer, enabling real-time coordination and decentralized energy management. The value layer leverages this information to create new business models.

The authors in [290,291] presented an EI model based on decentralized energy trading, in which resources exchange energy without the intervention of a centralized operator. System stability is maintained by an Energy Internet Service Provider (E-ISP), which ensures secure and stable energy exchange by regulating transaction volumes in real time and managing centralized resources when necessary. The EI architecture is designed to be structurally compatible with traditional Internet protocols, incorporating EI Cards (analogous to MAC addresses) and Energy IP addresses to enable mobility tracking across networks. Additionally, a dedicated energy transport layer protocol ensures reliable delivery of energy communications, while an application layer facilitates standardized energy communication exchanges.

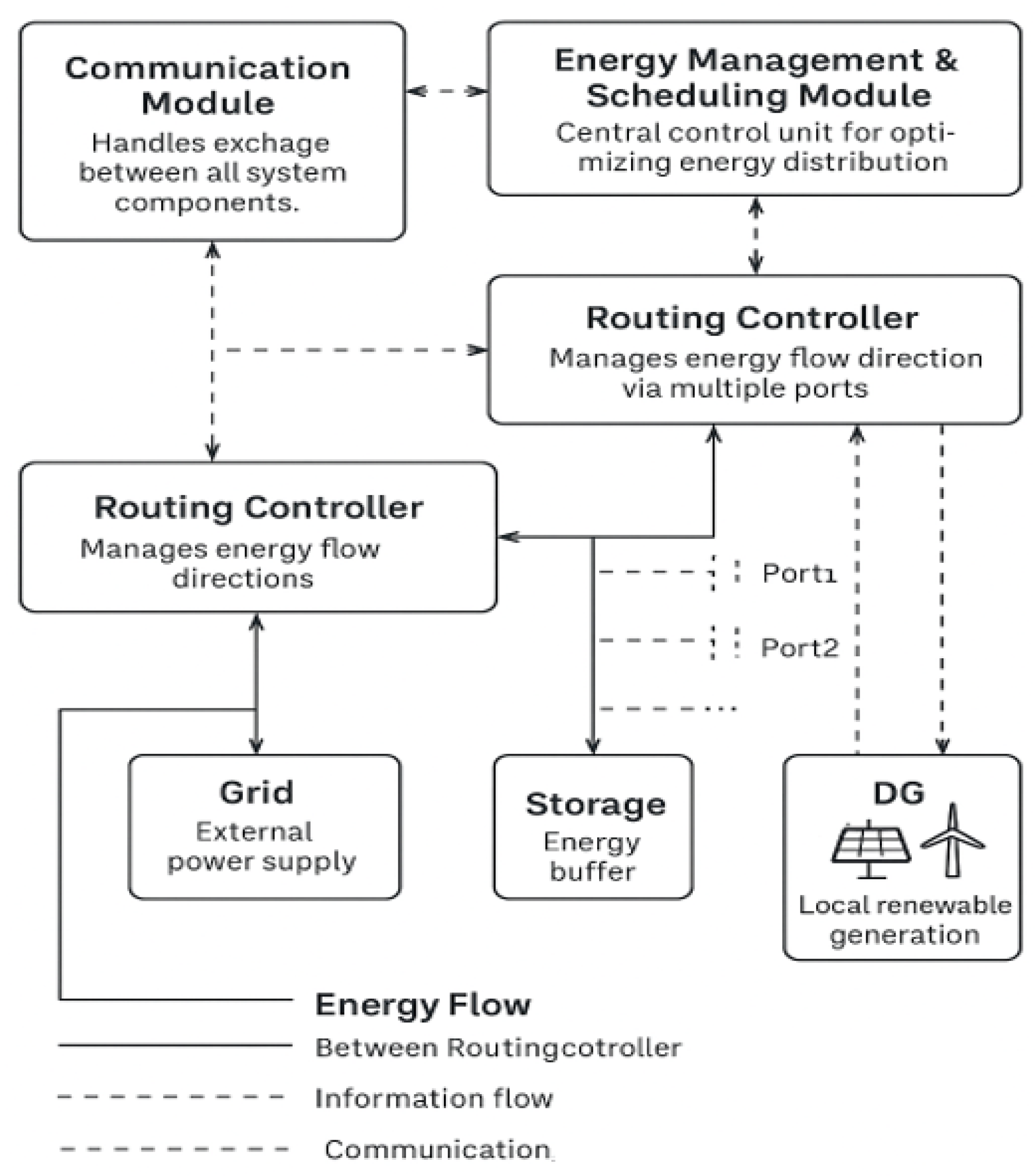

Energy Routers (ERs) play a pivotal role in integrating resources within the EI by enabling energy to be routed across geographically dispersed resources. These devices manage power dispatch, facilitate information exchange, and perform transmission scheduling, thereby supporting a dynamic and flexible grid structure. ERs are essential for regulating energy flows along interconnected lines, ensuring both the stability and the operational efficiency of the EI. A typical ER consists of input–output ports that interface with energy sources, electrical loads, and other ERs, as well as a core module comprising power converters and a controller. Several ER architectures have been proposed in the literature; a generalized structure is illustrated in Figure 10.
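To make the generalized ER structure concrete, the sketch below models an ER as a set of capacity-limited ports plus a core whose controller checks interface limits and whose converter dissipates a fixed fraction of the energy it handles. The class names, the single-efficiency converter model, and the units are hypothetical choices for illustration, not a design taken from the cited literature.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Port:
    """One input-output interface of an ER (hypothetical model)."""
    name: str
    capacity: float  # maximum energy per time slot (assumed unit: kWh)
    peer: str        # what the port connects to: a source, a load, or another ER


@dataclass
class EnergyRouter:
    """Hypothetical ER: capacity-limited ports plus a core (converter + controller)."""
    router_id: str
    converter_efficiency: float  # fraction of energy that survives conversion, in (0, 1]
    ports: Dict[str, Port] = field(default_factory=dict)

    def add_port(self, port: Port) -> None:
        self.ports[port.name] = port

    def forward(self, in_port: str, out_port: str, energy: float) -> float:
        """Controller checks both interfaces, then returns the converter's output energy."""
        for name in (in_port, out_port):
            if energy > self.ports[name].capacity:
                raise ValueError(f"{energy} exceeds the capacity of port {name}")
        return energy * self.converter_efficiency


# Usage: a PV array feeds ER1, which forwards energy towards a neighbouring ER.
router = EnergyRouter("ER1", converter_efficiency=0.95)
router.add_port(Port("p1", capacity=10.0, peer="PV array"))
router.add_port(Port("p2", capacity=8.0, peer="ER2"))
delivered = router.forward("p1", "p2", 5.0)
```

Keeping the capacity check in the controller and the loss in the converter mirrors the split between the core module's two components described above; a real ER controller would of course also handle scheduling and communication.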

Microgrids (MGs) play a vital role within the EI by integrating intelligent devices, storage systems, distributed energy sources, and communication networks to optimize the flow of energy. As the network architecture evolves, traditional routers are being enhanced with energy-routing functionalities, enabling bidirectional energy transfer and advanced routing operations. Energy-routing challenges include consumer matching, congestion mitigation, failure prevention through transmission scheduling, and the determination of optimal energy paths. To address these issues, routing algorithms must be embedded within ERs to distribute energy from a source to a destination. Optimized energy routing not only minimizes transmission losses and maximizes energy delivery but also reduces greenhouse gas emissions and fossil fuel reliance. Furthermore, efficient routing supports renewable energy integration, enhances grid reliability, and stabilizes electricity flow. P2P energy trading and decentralized energy markets rely heavily on these routing protocols, as they facilitate cleaner energy consumption and reduce reliance on fossil-fuel-based power plants.

This section is motivated by existing gaps in the literature on energy-routing protocols. Existing reviews [292,293,294,295,296,297] exhibit notable limitations: they often fail to provide a systematic classification of routing methods based on their characteristics and functionalities, lack comprehensive comparative analyses, and offer limited guidance for future research. Additionally, these studies do not thoroughly address the diverse methodologies and terminologies employed to tackle energy-routing challenges, which impedes the development of robust and innovative solutions. Energy routing in modern energy networks is a rapidly evolving area, presenting challenges such as dynamic operation, security, and computational efficiency. Therefore, there is a pressing need for an in-depth comparative framework that evaluates routing protocols in terms of their strengths, limitations, and suitability across different application environments.

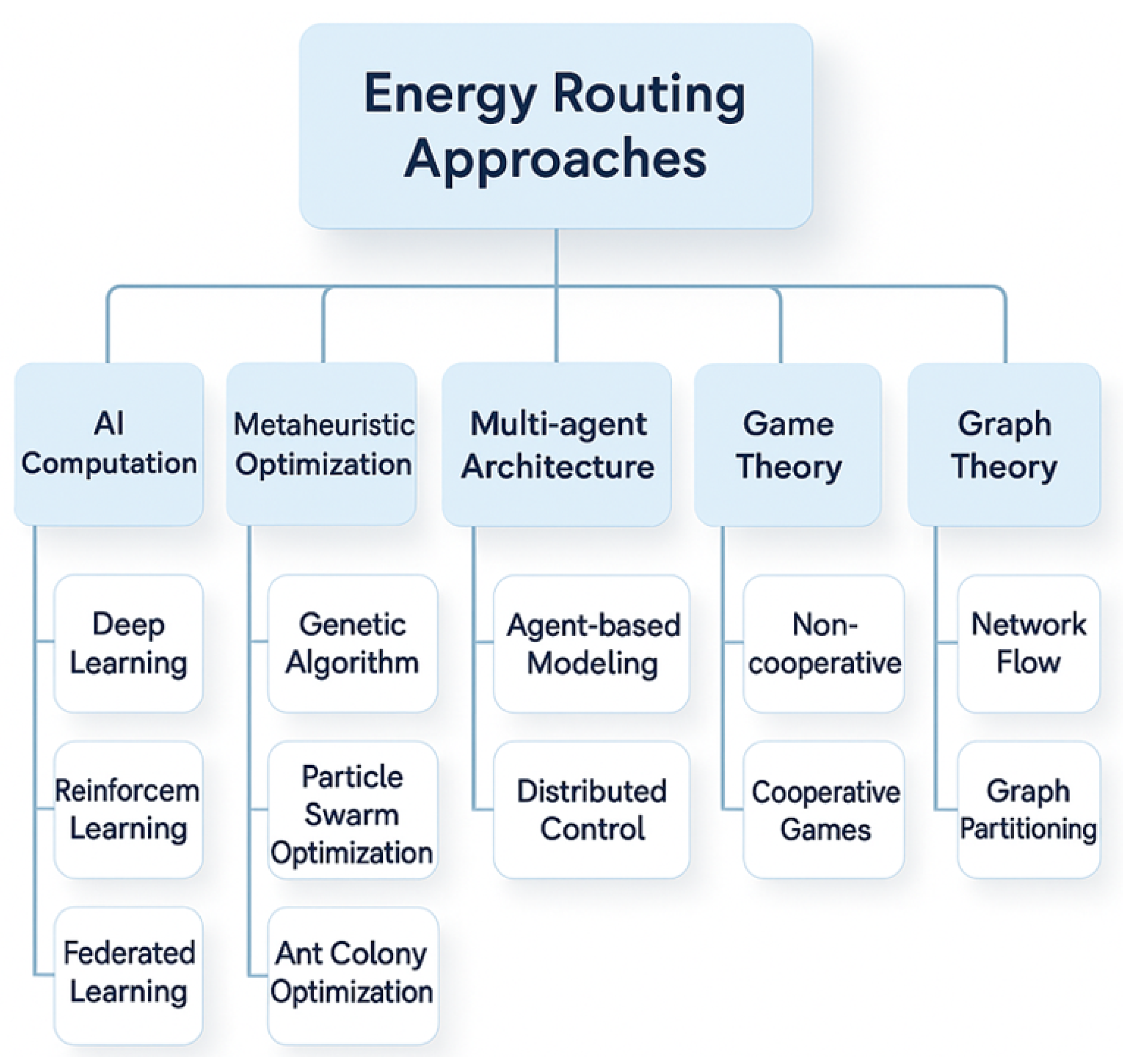

This section addresses these gaps by presenting a comprehensive discussion of energy-routing protocols, with particular emphasis on artificial intelligence (AI), Multi-Agent Systems (MASs), and optimization models, including metaheuristics. It offers an in-depth overview and a structured classification of existing methods, highlighting their strengths and limitations. Furthermore, the proposed framework integrates metaheuristic optimization for minimizing energy losses, AI-driven predictive routing for adaptive decision-making, and MASs for decentralized control in dynamic, renewable energy networks. The paper also identifies critical challenges such as reducing energy losses, ensuring scalability, integrating renewable energy sources, and responding to fluctuating demand. The future research directions identified herein are intended to guide the development of efficient and sustainable energy routing, ultimately optimizing energy flow within intelligent and decentralized power networks.

5.1. Energy Network Architecture and Routing Constraints

The energy network architecture comprises two layers, the energy transmission layer and the communication layer, interconnected through multiple Energy Routers (ERs) [298,299,300,301,302,303,304]. While the architecture of ERs remains largely consistent across existing studies [305,306,307,308], variations emerge in implementation details and control design strategies.

Energy-routing algorithms, implemented within the ER’s routing controller as shown in Figure 10, adapt their routing decisions dynamically based on information received from the communication layer. It is important to distinguish the energy-routing problem from the Optimal Energy Flow (OEF) problem. The OEF problem primarily addresses system-level optimization, such as determining generation levels and reducing transmission costs. In contrast, the energy-routing problem focuses on real-time energy routing within decentralized networks that involve ERs, distributed energy resources (DERs), and peer-to-peer (P2P) energy trading. It aims to ensure effective energy distribution by determining the best route for each source–load pair while minimizing transmission losses and adhering to energy constraints.
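A minimal sketch of routing a single source–load request, assuming the EI is viewed as a graph with ERs as vertices and lines as edges, and that each line's loss for the requested energy has already been computed. Lines whose capacity is below the request are pruned, and a standard Dijkstra search then finds the lowest-loss path. This is a generic textbook technique chosen for illustration, not the method of any cited study; it ignores ER converter losses and interactions between concurrent transfers, and the function name and example topology are hypothetical.

```python
import heapq


def min_loss_route(edges, source, load, energy):
    """Return (total_loss, path) for the lowest-loss feasible route, or None.

    edges: iterable of (u, v, loss, capacity) tuples for undirected lines, where
    `loss` is an assumed, precomputed loss for carrying `energy` over that line.
    """
    graph = {}
    for u, v, loss, capacity in edges:
        if capacity < energy:  # prune lines that cannot carry the request
            continue
        graph.setdefault(u, []).append((v, loss))
        graph.setdefault(v, []).append((u, loss))

    # Dijkstra: losses are non-negative, so the first time the load is popped
    # from the heap its accumulated loss is minimal.
    heap = [(0.0, source, [source])]
    settled = set()
    while heap:
        total, node, path = heapq.heappop(heap)
        if node == load:
            return total, path
        if node in settled:
            continue
        settled.add(node)
        for nxt, loss in graph.get(node, []):
            if nxt not in settled:
                heapq.heappush(heap, (total + loss, nxt, path + [nxt]))
    return None


# Hypothetical four-ER network: (router, router, loss, line capacity).
lines = [("ER1", "ER2", 0.5, 10.0), ("ER2", "ER4", 0.25, 10.0),
         ("ER1", "ER3", 0.25, 3.0), ("ER3", "ER4", 0.25, 10.0)]
print(min_loss_route(lines, "ER1", "ER4", 2.0))  # (0.5, ['ER1', 'ER3', 'ER4'])
print(min_loss_route(lines, "ER1", "ER4", 5.0))  # (0.75, ['ER1', 'ER2', 'ER4'])
```

The second request cannot use the low-loss ER1–ER3 line because its capacity is too small, so the search falls back to the lossier route; this is the interplay between loss minimization and capacity constraints that distinguishes per-request energy routing from system-level OEF.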

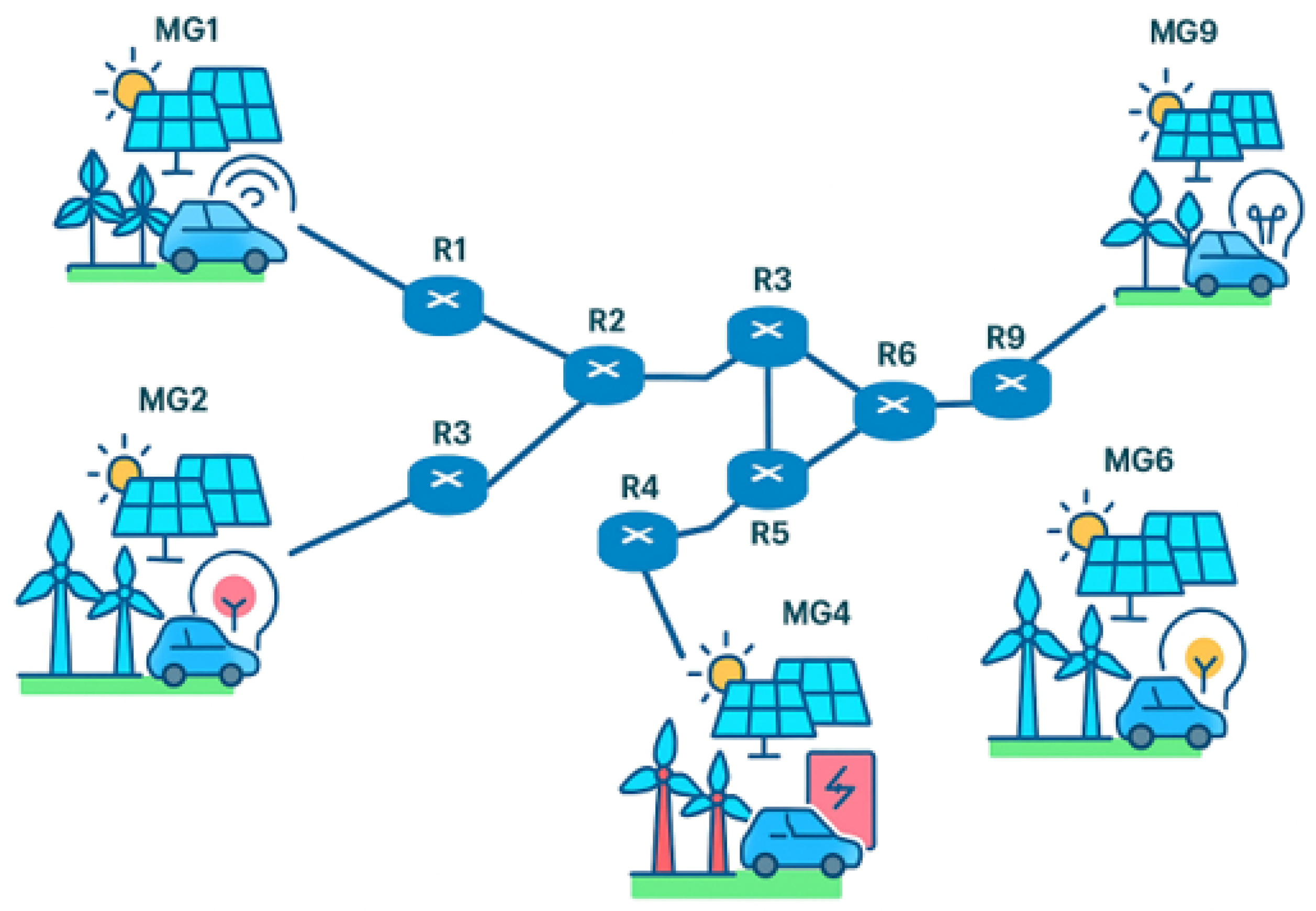

The EI model can be represented as a graph in which Energy Routers (ERs) are the vertices and energy transmission lines form the edges, as shown in Figure 11. Energy routing is subject to the following requirements:

In Equation (1), the total energy loss across all paths within the network must be less than the total transmitted energy. Each path consists of one or more ERs that transfer energy packets between the source and the destination.

In Equation (2), the transmitted energy must not exceed the maximum capacity of the selected path. This capacity is determined by the minimum ER interface capacity and the minimum energy line capacity along the path.

In Equation (3), the total energy transmitted through an energy line must not exceed the line's capacity.

In Equation (4), the energy entering a given ER interface must not exceed the interface capacity.
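Written out in one possible notation, the four requirements can be expressed as follows. The symbols are assumptions chosen for this illustration and may differ from those of the original Equations (1)–(4): $\mathcal{P}$ is the set of active paths, $E_p$ the energy transmitted on path $p$, $E^{\mathrm{loss}}_p$ the loss along $p$, $C^{\mathrm{line}}_l$ the capacity of line $l$, and $C^{\mathrm{ER}}_{i,k}$ the capacity of interface $k$ of ER $i$.

$$
\begin{aligned}
&\sum_{p\in\mathcal{P}} E^{\mathrm{loss}}_p < \sum_{p\in\mathcal{P}} E_p && \text{(1)}\\
&E_p \le C_p = \min\Big(\min_{(i,k)\in p} C^{\mathrm{ER}}_{i,k},\ \min_{l\in p} C^{\mathrm{line}}_l\Big) \quad \forall p\in\mathcal{P} && \text{(2)}\\
&\sum_{p\in\mathcal{P}:\, l\in p} E_p \le C^{\mathrm{line}}_l \quad \forall l && \text{(3)}\\
&\sum_{p\in\mathcal{P}:\, (i,k)\in p} E_p \le C^{\mathrm{ER}}_{i,k} \quad \forall i,k && \text{(4)}
\end{aligned}
$$

In this form, constraint (2) bounds each path individually by its bottleneck element, whereas (3) and (4) bound the aggregate of all paths that share a line or an ER interface.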

Minimizing transmission losses to identify optimal energy-routing paths is a central focus in the literature [309,310,311,312,313,314,315,316,317,318]. The loss on a DC line connecting two ERs depends on the line resistance, the line voltage, the energy already carried by the line (prior energy), and the transmitted energy. These parameters are incorporated into Equation (5) to compute the loss on that line.
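One common way to model such a line loss, sketched here under stated assumptions (constant line voltage $V_l$, line resistance $R_l$, and energy transferred at constant power over a time slot $\Delta t$, so that $P = E/\Delta t$; the exact expression of Equation (5) may differ), starts from the ohmic loss $I^2R = (P/V)^2R$ and charges the new transfer $E$ only for the extra loss it causes on top of the prior energy $E^{\mathrm{prior}}_l$:

$$
E^{\mathrm{loss}}_l
= \frac{R_l\,\Delta t}{V_l^{2}}\left[\left(\frac{E^{\mathrm{prior}}_l + E}{\Delta t}\right)^{2} - \left(\frac{E^{\mathrm{prior}}_l}{\Delta t}\right)^{2}\right]
= \frac{R_l}{V_l^{2}\,\Delta t}\left(2E^{\mathrm{prior}}_l E + E^{2}\right)
$$

Because the loss grows with $E^{\mathrm{prior}}_l$, the same transfer costs more on an already loaded line, which is one reason loss-aware routing can favour less heavily used lines.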

The energy loss within ER i, described by Equation (6), depends on the efficiency of that ER's power electronic converter.

The total energy loss along a transmission path between a producer and a consumer, expressed in Equation (7), is the sum of all losses in the ERs and the connecting energy lines along that path.
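The loss bookkeeping of Equations (5)–(7) can be sketched as follows. This is an illustration under assumptions, not the paper's exact formulas: the line loss uses a constant-voltage, fixed-slot ohmic model, $R/(V^2\Delta t)\,[(E^{\mathrm{prior}}+E)^2 - (E^{\mathrm{prior}})^2]$; the ER loss is $(1-\eta)E$ for converter efficiency $\eta$; and every element on the path is charged with the same transmitted energy $E$ (the decrement of energy along the path is ignored), mirroring the simple additive sum described for Equation (7). The `Line` class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Line:
    """DC line between two ERs (illustrative parameters, SI units)."""
    resistance: float    # ohms
    voltage: float       # volts, assumed constant
    prior_energy: float  # joules already scheduled on the line in this slot
    capacity: float      # maximum joules per slot


def line_loss(line: Line, energy: float, slot_seconds: float = 1.0) -> float:
    """Extra ohmic loss caused by adding `energy` to the line (assumed Eq. (5) form)."""
    e0 = line.prior_energy
    scale = line.resistance / (line.voltage ** 2 * slot_seconds)
    return scale * ((e0 + energy) ** 2 - e0 ** 2)


def router_loss(efficiency: float, energy: float) -> float:
    """Converter loss inside one ER (assumed Eq. (6) form)."""
    return (1.0 - efficiency) * energy


def path_loss(efficiencies, lines, energy, slot_seconds=1.0):
    """Total loss of one path: sum over its ERs and lines (assumed Eq. (7) form)."""
    if any(energy > ln.capacity for ln in lines):
        raise ValueError("transmitted energy exceeds a line capacity on this path")
    ers = sum(router_loss(eta, energy) for eta in efficiencies)
    wires = sum(line_loss(ln, energy, slot_seconds) for ln in lines)
    return ers + wires


# Example: 1 kW for 1 s over a 0.5-ohm, 100 V line through two 98%-efficient ERs.
idle = Line(resistance=0.5, voltage=100.0, prior_energy=0.0, capacity=1e6)
busy = Line(resistance=0.5, voltage=100.0, prior_energy=1000.0, capacity=1e6)
print(path_loss([0.98, 0.98], [idle], 1000.0))  # about 90 J (40 J in ERs, 50 J on the line)
print(path_loss([0.98, 0.98], [busy], 1000.0))  # about 190 J: the loaded line costs more
```

As a sanity check, the idle-line case matches the direct calculation $I = P/V = 10$ A and $I^2R = 50$ W over 1 s.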

5.2. Existing Research on Energy Routing

Numerous studies have investigated the challenges of energy routing in decentralized networks, aiming to enhance efficiency and reliability. These works propose a variety of routing algorithms designed to achieve objectives such as minimizing energy loss, ensuring scalability and adaptability, and responding to dynamic network conditions. As illustrated in Figure 12, key strategies include Multi-Agent (MA) architectures for decentralized decision-making, metaheuristic optimization techniques for determining optimal energy paths, and artificial intelligence (AI) methods for predictive and adaptive routing. Emerging approaches based on graph theory and game theory are also being explored to further advance energy routing; comprehensive reviews of these methodologies are available in separate publications.

5.2.1. AI-Based Approach in Energy Routing

Artificial Intelligence (AI) models and algorithms have gained prominence in automating the management of increasingly complex modern energy systems [319,320,321]. This section analyzes AI-based computation in depth, highlighting its roles, advantages, limitations, and applicability in enhancing energy routing within decentralized energy networks. A comprehensive overview for researchers and practitioners is presented in Table 17.

An example of this approach is a model-free Deep Reinforcement Learning (DRL) algorithm that optimizes energy management by controlling Energy Routers (ERs) and Distributed Generators (DGs) within sub-grids. The algorithm uses real-time observations of the system state to make energy allocation decisions [322]. However, evaluation on a nine-node network revealed limitations, including high computational demands, the need for large datasets, and difficulties in hyper-parameter tuning.

In [323,324], neural network-based reinforcement learning methods, including actor–critic and Q-learning algorithms, are employed to enable adaptive energy routing and demand prediction in large-scale energy networks. Although these frameworks improve efficiency and resilience, they require large training datasets and exhibit limited scalability under dynamic conditions.
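To illustrate the reinforcement learning idea behind Q-learning-based routing, here is a minimal tabular sketch; it is not the neural-network methods of [323,324]. The four-router topology, per-hop losses, and hyper-parameters are hypothetical. Each state is the ER currently holding the energy packet, each action forwards the packet to a neighbouring ER, and the reward is the negative hop loss, so maximizing the return minimizes the total path loss. Q-values start at zero, which is optimistic because all rewards are negative, and this encourages exploration.

```python
import random

# Hypothetical 4-ER network: (router, router) -> per-hop loss for a fixed request.
LOSSES = {("ER1", "ER2"): 0.5, ("ER2", "ER4"): 0.25,
          ("ER1", "ER3"): 0.25, ("ER3", "ER4"): 0.25}


def neighbours(losses):
    """Undirected adjacency list: node -> [(neighbour, hop loss), ...]."""
    adj = {}
    for (u, v), loss in losses.items():
        adj.setdefault(u, []).append((v, loss))
        adj.setdefault(v, []).append((u, loss))
    return adj


def train_q_routing(losses, source, load, episodes=2000, alpha=0.5,
                    gamma=0.95, epsilon=0.1, max_hops=20, seed=0):
    """Tabular Q-learning: state = current ER, action = next ER, reward = -loss."""
    rng = random.Random(seed)
    adj = neighbours(losses)
    q = {(u, v): 0.0 for u in adj for v, _ in adj[u]}  # optimistic start
    for _ in range(episodes):
        node = source
        for _ in range(max_hops):
            options = adj[node]
            if rng.random() < epsilon:  # explore
                nxt, loss = rng.choice(options)
            else:  # exploit the current estimates
                nxt, loss = max(options, key=lambda o: q[(node, o[0])])
            # The load is terminal, so its future value is zero.
            future = 0.0 if nxt == load else max(q[(nxt, w)] for w, _ in adj[nxt])
            q[(node, nxt)] += alpha * (-loss + gamma * future - q[(node, nxt)])
            node = nxt
            if node == load:
                break
    return q, adj


def greedy_route(q, adj, source, load, max_hops=20):
    """Follow the highest-valued action from each ER until the load is reached."""
    path = [source]
    while path[-1] != load and len(path) <= max_hops:
        node = path[-1]
        path.append(max((v for v, _ in adj[node]), key=lambda v: q[(node, v)]))
    return path


q, adj = train_q_routing(LOSSES, "ER1", "ER4")
print(greedy_route(q, adj, "ER1", "ER4"))
```

Even on this toy network the agent learns only through many trial episodes, which hints at why the data and computation demands noted above grow quickly for large, changing networks and why function approximators such as neural networks replace the table in practice.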

AI techniques, including DRL and neural network-based approaches, enhance energy routing by enabling adaptive, efficient, and cost-effective management in decentralized networks. However, their high computational demands, dependence on large datasets, and challenges in hyper-parameter tuning underscore the need for further research to improve scalability and support practical deployment.

Table 17 presents a comparative analysis of selected studies that apply AI to energy routing.

5.2.2. Metaheuristic Approach in Energy Routing