1. Introduction

Wind energy remains a central pillar in the global transition towards sustainable energy systems, generating over 2330 TWh in 2023, nearly equivalent to all the other renewable sources combined [1]. With the rise in renewable electricity demand, the expansion of offshore wind farm developments is both expected and necessary. Although offshore wind offers higher capacity factors and energy yields than onshore installations, the associated operation and maintenance (O&M) cost, which accounts for up to 30% of the levelised cost of energy (LCOE), continues to pose a major economic challenge for the industry [2]. Consequently, the development of effective maintenance strategies is critical in reducing both O&M expenditure and the overall LCOE of offshore wind energy.

In this context, recent studies show growing emphasis on predictive maintenance (PdM) strategies for wind farm operations. This is driven by their capacity to estimate system performance and enable timely prefailure intervention [3]. A significant portion of PdM research has focused on the wind turbine gearbox, one of the components with the highest failure rates [4]. Operational data of various turbine components are continuously collected at a high sampling rate via condition monitoring systems (CMSs) and supervisory control and data acquisition (SCADA) systems [3,5], both of which are widely deployed across modern wind farms. The availability of such large repositories of data contributes significantly to the development of data-driven approaches in the literature, such as failure diagnosis, failure prognosis and effective maintenance planning for wind turbines (WTs).

Machine learning (ML) models such as linear regression (LINREG), random forest (RF), support vector machine (SVM) and extreme gradient boosting (XGBoost) are widely utilised in the early stages of data-driven gearbox research. These models often serve as baselines for developing more complex architectures and for validating novel methodological approaches. In [6], ML algorithms were implemented to develop an interpretable predictive model for bearing failure using SCADA data from wind turbines. The models’ performances were evaluated by comparing the predicted to the observed generator bearing temperature. The study also examined the models’ compatibility with Shapley additive explanations (SHAP), a technique for interpreting the outputs of ML models. Similar ML models were adopted in [7] to classify equipment failure types in WTs based on vibration data. The algorithms were trained on labelled datasets and tasked to classify simulated data for three distinct failure modes: gear scuffing, bearing overheating and bearing fretting corrosion. In contrast to prior studies, ref. [8] integrated SCADA data with modelled data derived from physics-based models of the gearbox design parameters to enhance existing gearbox prognostics methods, which typically rely solely on SCADA data. Bearing-specific features obtained from the modelled data were utilised in conjunction with SCADA data to train an ML classifier for binary classification tasks.

In addition to conventional ML models, deep learning (DL) models, which can capture complex non-linear relationships, have also been explored in wind turbine failure prognosis research. While DL models tend to achieve higher prediction accuracy, they require longer development time and are more demanding in terms of computational resources and data volume [9]. In [5], the authors were granted full access, via an industrial partnership, to an extensive dataset detailing operational and failure logs, SCADA data and vibration measurements sourced from multiple wind farms. This enabled a comparative binary classification analysis involving artificial neural networks (ANNs), logistic regression and SVM. When trained on the complete dataset, the ANN model achieved the highest prediction accuracy of 72.5%. In a different study [10], a long short-term memory (LSTM) neural network was employed to perform early prediction of main bearing failure utilising SCADA data from an operational wind farm. A normality model was developed using only healthy data to predict the temperature associated with main bearing failure. Although the LSTM model was able to detect main bearing failure with high accuracy, the lack of failure data for validation raises concerns about model robustness when extended to more diverse datasets with more failure examples.

The advancement of data-driven approaches has accelerated significantly in recent years, driven in part by the emergence of large language models (LLMs) such as ChatGPT. LLMs have demonstrated strong performance across a range of diverse tasks in natural language processing (NLP) without the need for task-specific fine-tuning. Owing to the reasoning and generalisation capabilities of LLMs, recent studies have shown growing interest in applying them beyond traditional NLP domains, including dynamic system problems such as energy load forecasting, anomaly detection and failure prediction [11,12,13].

The zero-shot capabilities of LLMs have attracted growing attention in the time-series forecasting literature, particularly for addressing the challenge of data scarcity that often limits traditional machine learning models. In [11], an LLM framework known as SigLLM was developed for time-series anomaly detection. The approach incorporated a time-to-text conversion and end-to-end prompting pipelines, enabling the LLM to perform time-series anomaly detection. Two LLMs, GPT-3.5-turbo and MISTRAL, were investigated, with a focus on MISTRAL due to cost efficiency. The study proposed two detection pipelines: PROMPTER, which directly queried the LLM to detect anomalies within a time-series window, and DETECTOR, which tasked the LLM with predicting future values of a time-series window. These pipelines were benchmarked against state-of-the-art (SOTA) unsupervised time-series prediction models such as ARIMA, LSTM and MS Azure’s forecasting tools. Under zero-shot conditions, the DETECTOR pipeline was able to identify all anomalies correctly with only a single error, while the PROMPTER pipeline was more effective at detecting local outliers than identifying anomalies within the signal sequence. Limitations were also identified, particularly the LLM’s inability to consistently capture temporal trends in time-series signals and the PROMPTER pipeline’s relatively low precision of 0.219. In a related study [12], the TIME-LLM framework was introduced to perform forecasting tasks. Unlike SigLLM, TIME-LLM employed an additional layer of input augmentation through declarative prompting that incorporated domain expert knowledge. This layer, known as Prompt-as-Prefix (PaP), provided the LLM with contextual information such as task description and input statistics to further improve LLM reasoning. Using a similar evaluation setup under both few-shot and zero-shot conditions, TIME-LLM achieved a 5% reduction in mean squared error (MSE) compared to existing time-series LLMs under few-shot settings and outperformed competitive baselines under zero-shot conditions.

LLMs have also demonstrated promising potential in cross-domain applications, where minor or major fine-tuning is performed on pretrained LLMs with specific data to perform tasks in a defined domain. In [13], an LLM-based framework was proposed for bearing fault diagnosis in rotating machinery. The study introduced a fine-tuning design to enhance LLM performance under challenging conditions such as cross-dataset generalisation, limited data availability and unseen operational scenarios. The framework converts numerical time-series features extracted from vibration data into textual format, which is then used to fine-tune the LLM using low-rank adaptation (LoRA) and quantised LoRA (QLoRA) techniques. This approach exhibited strong adaptability across multiple bearing fault datasets, demonstrating generalisation capabilities in diverse experimental setups. Within the context of wind turbine failure prognosis, LLM implementation remains an emerging research direction. In the domain of power forecasting, ref. [14] proposed a model that integrates cross-modal data preprocessing, prompt engineering and a pretrained LLM, LLaMA. The approach extracts relevant information from SCADA data and reformats it into a modality suitable for LLM input. The forecasting process is further enhanced by incorporating prompt prefixes containing prior domain knowledge, improving the model’s contextual understanding of the power generation data. This enabled more accurate power forecasting while minimising the information loss commonly associated with cross-modal data conversion.

Previous studies have demonstrated the strong potential of LLMs to adapt to time-series data analysis tasks under zero-shot conditions. Some studies that placed less emphasis on architectural design instead focused on improving the interpretability of LLM-based anomaly detection [15]. Motivated by these findings, this work investigates the use of LLMs for gearbox failure prediction. To the best of the authors’ knowledge, the application of LLMs in wind turbine gearbox prognosis remains largely unexplored in the current literature. The contributions of this research are summarised as follows:

- A comparative analysis is performed on the performance of two LLMs, GPT-4o and DeepSeek-V3, in assisting the development of an ML pipeline for gearbox failure prediction.

- The LLMs are tasked, through a designed zero-shot prompt, to develop ML algorithms in the Python programming language that perform binary classification of gear tooth failure for wind turbines based on labelled SCADA data. The quality of the LLM-proposed ML methods is evaluated.

- An ML pipeline adopting logistic regression, RF, SVM, XGBoost and a multi-layer perceptron (MLP) classifier is formulated to serve as the baseline of the comparative study. XGBoost and the MLP classifier, which are more sensitive to hyperparameter tuning, are fine-tuned based on the SCADA dataset utilised for this study.

- The outputs of each LLM are analysed and compared to the baseline models. Additionally, the future possibilities of LLMs in gearbox failure prognosis are discussed.

The remainder of this paper is organised as follows:

- Section 2 provides an overview of the methodology of this work in the following sequence: the SCADA dataset investigated, the baseline models, the data preprocessing steps, the baseline model optimisation strategy, the selected LLMs, the prompt design process and the evaluation metrics adopted in this study.

- Section 3 presents and discusses the performance results of the baseline models and the ChatGPT-generated and DeepSeek-generated ML pipelines. A comparative analysis of the pipeline designs is also presented and discussed.

- Section 4 offers concluding remarks and future research directions revolving around LLM applications in wind turbine gearbox failure prediction.

2. Methodology

The selection of failure prediction methods is closely linked to the nature and distribution of the available data. Factors such as whether the data consist of continuous signals or moving averages, and whether class labels are available, determine the suitability of different ML models. In this study, a comprehensive labelled SCADA dataset from a wind farm developer, which was also investigated in [5], was employed to develop baseline models that have been commonly used in the literature for supervised classification tasks [5,6,7,8,10]. A binary classification task was formulated to evaluate model performance across different failure modes.

Based on this SCADA dataset, a structured prompt was subsequently designed to supply LLMs with relevant metadata, including dataset dimensions, feature types, data distribution and unique variables. The prompt provided explicit instructions regarding the expected model outputs. Details of the prompt design are presented in Section 2.5. The performance of the baseline models and LLM-generated ML models is then evaluated and presented in Section 3.1 and Section 3.2.

2.1. SCADA Dataset

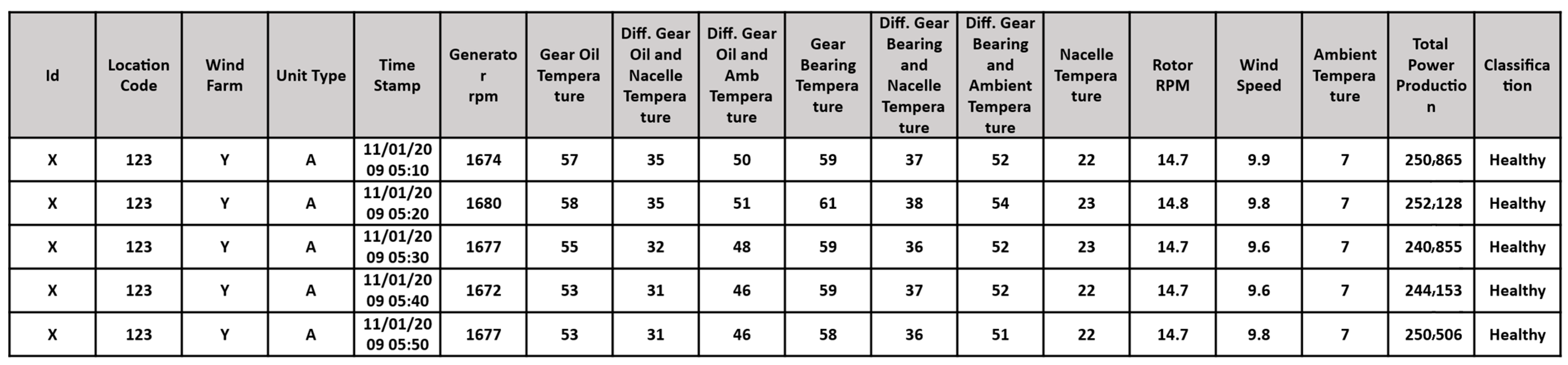

The database utilised in this study was provided by a wind turbine OEM industrial partner. The dataset is a collection of 99,904 entries of 10 min average SCADA data collected at different wind farms. The SCADA dataset was labelled by the developer based on historical failure logs and corresponding prefailure time windows. The database records the operational data of 27 different wind turbines of types A and B collected from 1 August 2008 to 30 July 2015. The data variables selected to train our baseline models are listed in Table 1. The same variables are used for the LLM-proposed pipelines to avoid discrepancies due to differences in training data. The gear oil temperature and gear bearing temperature were measured using resistance temperature detectors (RTDs), with the sensors located in the sump of the gearbox lubrication system and on the intermediate speed bearing, respectively, in each wind turbine. The SCADA dataset is labelled based on time before failure, where the classes are defined as “Healthy” for greater than 1 year, “1 year”, “6 months” and “3 months” before failure. The “Healthy” and “3 months” before failure data are selected for the binary classification task. An example data sample of the SCADA dataset is presented in Appendix A.1, Figure A1.

2.2. Baseline Machine Learning Models

This section presents an overview of the machine learning algorithms adopted for baseline models. A brief description of each algorithm is provided, with emphasis on their underlying classification mechanisms and suitability for the task of gearbox failure prediction.

2.2.1. Logistic Regression [16]

Logistic regression is a statistical approach used to illustrate the correlation between a categorical outcome and a set of input variables. In contrast to linear regression, which can only predict continuous outcomes, the algorithm utilises a logistic sigmoid function to transform linear combinations of input variables to predict the probability of the output corresponding to two defined classes of categorical variables (i.e., “Healthy”, “Unhealthy”). The algorithm is commonly implemented in binary and multi-class classification problems.
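As a minimal illustration of the sigmoid transformation described above, the sketch below maps a linear combination of two input values to a class probability. The weights, bias and sample values are hypothetical and hand-picked for illustration; they are not fitted to the SCADA dataset.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic sigmoid: maps any real number to the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(features, weights, bias):
    """Probability of the positive class for a single sample."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return sigmoid(z)


# Hypothetical coefficients and inputs (illustration only, not fitted values).
weights = [0.8, -0.5]
bias = 0.1
sample = [1.2, 0.4]  # two standardised feature values

p_unhealthy = predict_proba(sample, weights, bias)
# A 0.5 threshold on the probability yields the class label.
label = "Unhealthy" if p_unhealthy >= 0.5 else "Healthy"
print(round(p_unhealthy, 3), label)
```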

2.2.2. Support Vector Machine [17]

SVM is a supervised max-margin model, often used for classification and regression analysis tasks. The algorithm separates input vectors by defining the optimal hyperplane that maximises the margin between different classes in the feature space. Additionally, SVM supports non-linear classification by utilising the kernel trick, transforming the data into higher dimensions. The robustness of the model against noisy data and overfitting has contributed to its popularity in predictive tasks.

2.2.3. Random Forest [18]

RF, also known as random decision forests, is an ensemble learning method formed from a collection of decision trees that are trained on bootstrap samples of a given dataset. RF addresses the common tendency of a single decision tree to overfit by considering only a random subset of features at each split, which improves generalisation. The final prediction made by RF is based on the majority vote of all decision trees for classification tasks and on the average of all tree outputs for regression tasks.

2.2.4. XGBoost [19]

Gradient boosting is an ensemble machine learning technique that involves sequential error correction of weak learners such as decision trees. At each iteration, a new model is trained on the residual errors of the previous models until a predetermined stopping criterion is reached. XGBoost implements this framework and is widely regarded as one of the leading ML libraries in terms of scalability and memory efficiency for model development.

2.2.5. Multi-Layer Perceptron Classifier [20]

MLP is a feedforward neural network made of multiple layers of interconnected artificial neurons. Non-linear activation functions such as sigmoid or ReLU are applied at each layer of neurons, allowing the neural network to learn complex relationships in the data. MLP forms the foundation of deep learning models and can be applied to different applications.

2.3. Baseline Model Data Preprocessing

2.3.1. Missing Data

Data quality plays a critical role in determining the performance of ML models. Raw SCADA data are often affected by incompleteness or inaccuracies arising from faulty sensors, which typically manifest as outliers or missing values. While such anomalies may be apparent to a human observer, ML algorithms lack inherent mechanisms to identify them. Consequently, training models on unfiltered or erroneous data can introduce bias into the model predictions. Notably, the SCADA dataset in this work has only one entry with missing values. A simple elimination strategy is adopted to remove this entry from the training dataset.

2.3.2. Data Type Handling

As presented in Table 1, the SCADA dataset comprises both categorical and numerical features. These features require different preprocessing techniques to ensure compatibility with the selected baseline ML models. Although categorical features can be as informative as numerical features in model training, they must first be converted into numerical representations to be processed by most ML algorithms. This transformation is known as encoding, a standard step in data preprocessing. Common encoding methods employed in ML development include one-hot encoding, ordinal encoding and target encoding [21].

This study applies one-hot encoding and ordinal encoding in the data preprocessing pipeline. One-hot encoding transforms categorical features into binary vectors based on the number of unique values, assigning 1 to the active category and 0 to the rest. Ordinal encoding is used to transform the class labels into ordinal numbers ranging from 0 to 3. Furthermore, numerical features are scaled using StandardScaler to ensure consistent feature ranges for model input.
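The preprocessing steps above can be sketched with scikit-learn as follows. This is an illustrative outline rather than the authors' implementation: the column names and values are hypothetical placeholders (not the Table 1 variables), and the order in which the class labels are mapped to 0 to 3 is an assumption.

```python
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.preprocessing import OneHotEncoder, OrdinalEncoder, StandardScaler

# Hypothetical toy data; column names are placeholders, not the Table 1 variables.
df = pd.DataFrame({
    "unit_type": ["A", "B", "A", "B"],
    "gear_oil_temp": [55.0, 61.5, 58.2, 64.0],
    "gear_bearing_temp": [60.1, 66.3, 62.0, 70.4],
    "label": ["Healthy", "3 months", "1 year", "6 months"],
})

categorical_cols = ["unit_type"]
numerical_cols = ["gear_oil_temp", "gear_bearing_temp"]

# One-hot encode categorical features and standardise numerical features.
preprocessor = ColumnTransformer(
    [
        ("onehot", OneHotEncoder(handle_unknown="ignore"), categorical_cols),
        ("scale", StandardScaler(), numerical_cols),
    ],
    sparse_threshold=0.0,  # always return a dense array
)
X = preprocessor.fit_transform(df[categorical_cols + numerical_cols])

# Ordinal-encode the class labels to 0..3 (the ordering here is an assumption).
label_order = [["Healthy", "1 year", "6 months", "3 months"]]
y = OrdinalEncoder(categories=label_order).fit_transform(df[["label"]]).ravel()

print(X.shape, y)
```

In practice the scaler would be fitted on the training portion only, so that statistics from the validation data do not leak into training.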

2.3.3. Class Imbalance

Supervised learning algorithms perform classification tasks by identifying linear or non-linear boundaries that separate different classes within the training data. In this study, the class distribution is relatively balanced, with class proportions of 0.24 for “Healthy”, 0.24 for “1 year”, 0.26 for “6 months” and 0.26 for “3 months”. Although the data imbalance is not severe, the ML models may still display a minor bias towards “6 months” and “3 months” class predictions. In the case of severe class imbalance and multi-class classification, the synthetic minority over-sampling technique (SMOTE) [22], an oversampling approach that synthetically generates new data from the minority classes, is often adopted to address the issue.

2.3.4. Model Training

In this study, after preprocessing to improve data quality, the SCADA dataset is split into training and validation data using a 7:3 ratio. The same split ratio was adopted in [5].
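A 7:3 split of this kind can be sketched with scikit-learn as shown below. The data are synthetic placeholders, and the use of stratification and a fixed random seed are assumptions made for reproducibility of the sketch; the source does not state whether either was used.

```python
import numpy as np
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 4))      # synthetic placeholder features
y = rng.integers(0, 2, size=100)   # synthetic binary labels

# test_size=0.3 gives the 7:3 training/validation ratio.
# stratify and random_state are assumptions, not stated in the source.
X_train, X_val, y_train, y_val = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=42
)
print(X_train.shape, X_val.shape)
```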

2.3.5. Baseline ML Model Optimisation

Hyperparameter optimisation is an important process in the development of ML algorithms as it has a heavy influence on their performance on a given dataset. The hyperparameters govern the training behaviour, model complexity and generalisation capability of the ML algorithms. There are two conventional strategies in hyperparameter tuning: grid search and random search. The grid search method exhaustively examines all combinations of hyperparameter values within a predefined range, while the random search method randomly samples hyperparameter values within a predefined range. Comparatively, the random search approach is more efficient, as it covers more of the hyperparameter space in less computational time [23]. Hence, the random search method is adopted in this study to fine-tune XGBoost and the MLP classifier to maximise their performance. The ranges of hyperparameters tested are shown in Table 2 and Table 3.
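The random search strategy can be sketched with scikit-learn's `RandomizedSearchCV`. To keep the example dependent on scikit-learn and SciPy only, `GradientBoostingClassifier` is used here as a stand-in for XGBoost. The data are synthetic, and the search ranges, number of iterations and fold count are illustrative assumptions rather than the values in Table 2 and Table 3.

```python
from scipy.stats import loguniform, randint
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import RandomizedSearchCV

# Synthetic binary classification data (placeholder for the SCADA features).
X, y = make_classification(n_samples=200, n_features=6, random_state=0)

# Illustrative search ranges; NOT the ranges reported in the paper's tables.
param_distributions = {
    "n_estimators": randint(20, 60),
    "max_depth": randint(2, 5),
    "learning_rate": loguniform(0.01, 0.3),
}

search = RandomizedSearchCV(
    GradientBoostingClassifier(random_state=0),  # stand-in for XGBoost
    param_distributions=param_distributions,
    n_iter=5,          # number of random configurations sampled
    scoring="f1",
    cv=3,
    random_state=0,
)
search.fit(X, y)
print(search.best_params_, round(search.best_score_, 3))
```

Unlike a grid search, the cost here is fixed by `n_iter` regardless of how many hyperparameters are included in the search space.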

2.4. Large Language Models

Large language models are complex, large-scale AI systems pretrained on extensive datasets. Built upon advanced deep learning architectures, particularly transformer networks, LLMs are designed to perform next token prediction with high efficiency and accuracy. Although LLMs were originally developed for natural language processing (NLP) and natural language understanding (NLU) tasks, recent advancements have significantly broadened their application scope. The deployment of various LLMs has enabled their use in domains such as image processing, code generation and content summarisation. Studies have demonstrated that modern LLMs are capable of performing zero-shot and few-shot learning tasks when provided with appropriately designed prompts [24,25]. The instruction-based zero-shot capabilities of LLMs motivate this study to explore their potential for wind turbine gearbox failure prognosis. In this work, two popular LLMs, GPT-4o and DeepSeek-V3, are examined.

2.4.1. ChatGPT (GPT-4o) [26]

ChatGPT is a large language model developed by OpenAI, which was initially designed to have separate multi-modal systems (i.e., GPT-4 with vision). The latest version, GPT-4o, is now a multi-modal model capable of receiving image and text inputs and providing text outputs in return. The model retains its transformer backbone, which is optimised for parallel processing to improve the next token prediction with long-term dependencies. Unique to GPT-4o, text, image and audio are integrated into one model architecture, eliminating the need for separate models for speech and visuals.

2.4.2. DeepSeek-V3 [27]

DeepSeek entered the LLM industry in 2023 and drew wide attention with models built on the mixture-of-experts (MoE) architecture, which achieved performance competitive with leading LLMs such as GPT-4 at significantly lower training cost. DeepSeek-V3, the model examined in this work, adopts multi-head latent attention (MLA) and the DeepSeekMoE architecture, which modify the attention and feed-forward components while remaining within the transformer framework. The model is pretrained on 14.8 trillion tokens, followed by supervised fine-tuning and reinforcement learning to further improve its capability.

2.5. Prompt Design [28]

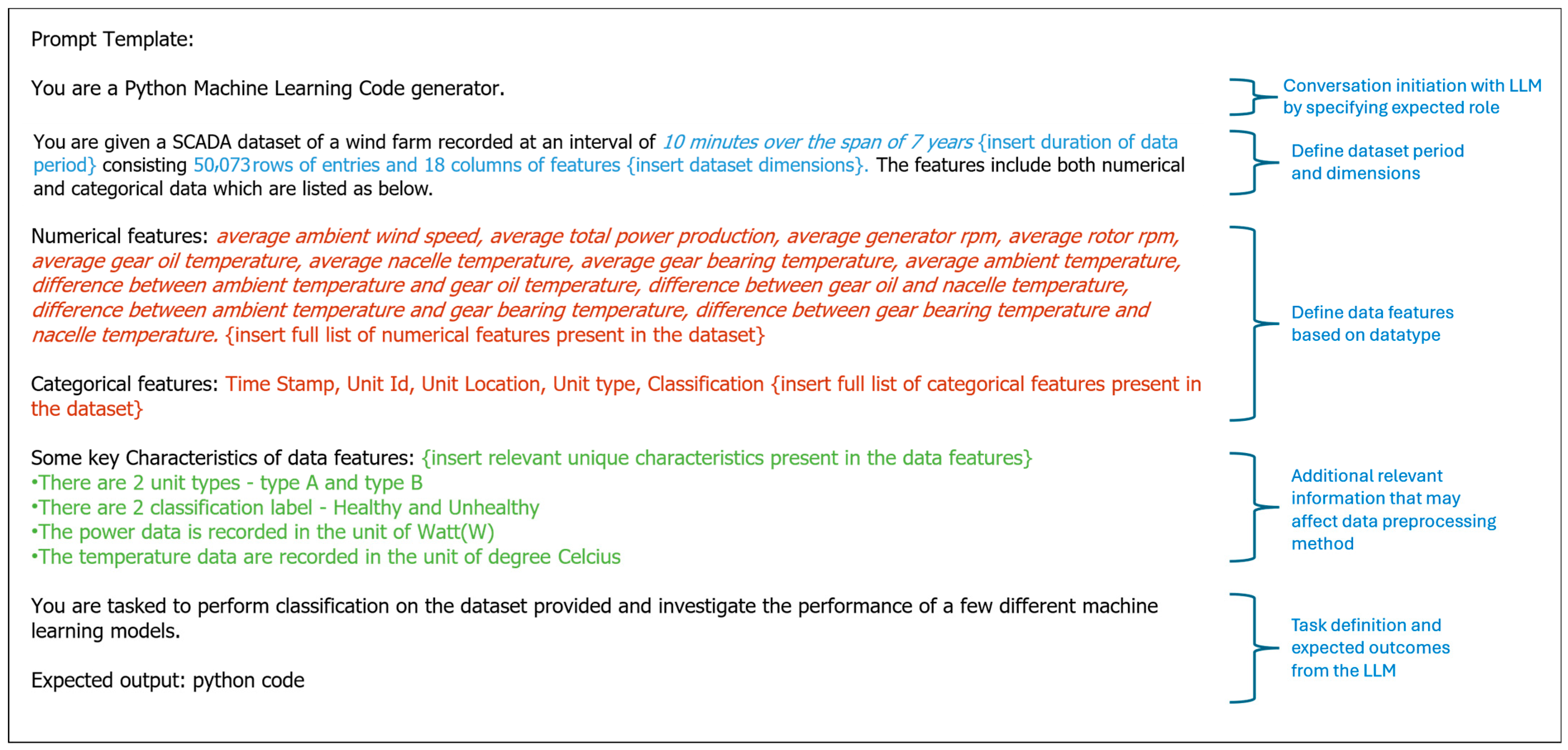

Prompt engineering is an active research area in LLM development because it can enhance model effectiveness without architectural changes or fine-tuning of model parameters. In-context learning (ICL) and chain of thought (CoT) are two of the most common strategies adopted in the literature. As our work involves the generation of ML pipelines by the LLMs through prompting, the selection of prompt strategy will greatly affect the performance of these LLM-generated pipelines.

ICL involves the process of adding input–output example(s) to the LLM in a single prompt, generating responses based on the examples. While task description is crucial in the prompt design, the number of input–output examples provided in ICL differs based on the approach taken, which can be further divided into few-shot learning (multiple examples), one-shot learning (one example) and zero-shot learning (no example). In contrast to ICL, CoT provides precise guidance to the LLM based on the output generated for a given task description. Explanation and correction are given at every step to guide the LLM to generate the desired output rather than providing an input–output example directly. This technique may require multiple prompts to complete the task appropriately.

This work focuses on the zero-shot capability of the LLMs in the development of ML pipelines for carrying out binary classification tasks for wind turbine gearbox failure classes. Accordingly, a zero-shot ICL approach was employed in our prompt design. For the ML pipeline generation by the LLMs, the details and characteristics of the SCADA dataset were supplied to the LLMs as the problem context, without including any example of the output code or ML algorithms required to perform the described task. The full template of the prompt design is shown in Appendix A.2. The task description and dataset information provided to the LLMs can be summarised as follows:

- Source context of the SCADA dataset (i.e., number of wind turbines);

- Number of data entries and features included in the SCADA dataset;

- List of numerical features and categorical features provided by the dataset;

- Details of unique categorical features, such as the number of unit types and the class labelling;

- The measurement units for power generation and component temperatures;

- Expected output format: Python code.
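The items listed above can be assembled into a zero-shot prompt along the following lines. This is a hypothetical sketch and not the template in Appendix A.2: the function name, the wording of each line, the feature names, the feature count and the measurement units are all assumptions, while the turbine count, unit types, entry count and class labels are taken from Section 2.1.

```python
def build_zero_shot_prompt(meta: dict) -> str:
    """Assemble a zero-shot prompt from dataset metadata (no example outputs)."""
    lines = [
        "Task: propose a machine learning pipeline for binary classification "
        "of wind turbine gearbox failure from labelled SCADA data.",
        f"Source: {meta['n_turbines']} wind turbines of unit types "
        f"{', '.join(meta['unit_types'])}.",
        f"Size: {meta['n_entries']} entries, {meta['n_features']} features.",
        f"Numerical features: {', '.join(meta['numerical'])}.",
        f"Categorical features: {', '.join(meta['categorical'])}.",
        f"Class labels: {', '.join(meta['labels'])}.",
        f"Units: power in {meta['power_unit']}, "
        f"temperatures in {meta['temp_unit']}.",
        "Expected output format: Python code.",
    ]
    return "\n".join(lines)


metadata = {
    "n_turbines": 27,               # from Section 2.1
    "unit_types": ["A", "B"],       # from Section 2.1
    "n_entries": 99904,             # from Section 2.1
    "n_features": 4,                # hypothetical count
    "numerical": ["power", "gear_oil_temp", "gear_bearing_temp"],  # hypothetical names
    "categorical": ["unit_type"],   # hypothetical name
    "labels": ["Healthy", "3 months"],
    "power_unit": "kW",             # assumed unit
    "temp_unit": "degC",            # assumed unit
}

prompt = build_zero_shot_prompt(metadata)
print(prompt)
```

Because the prompt contains only the task description and dataset context, with no sample code or input–output pair, it corresponds to the zero-shot case of ICL.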

2.6. Model Performance Evaluation

The baseline model performances are evaluated after being optimised with hyperparameter tuning in this study. This section presents an overview of the evaluation metric adopted to evaluate the performance of the baseline models.

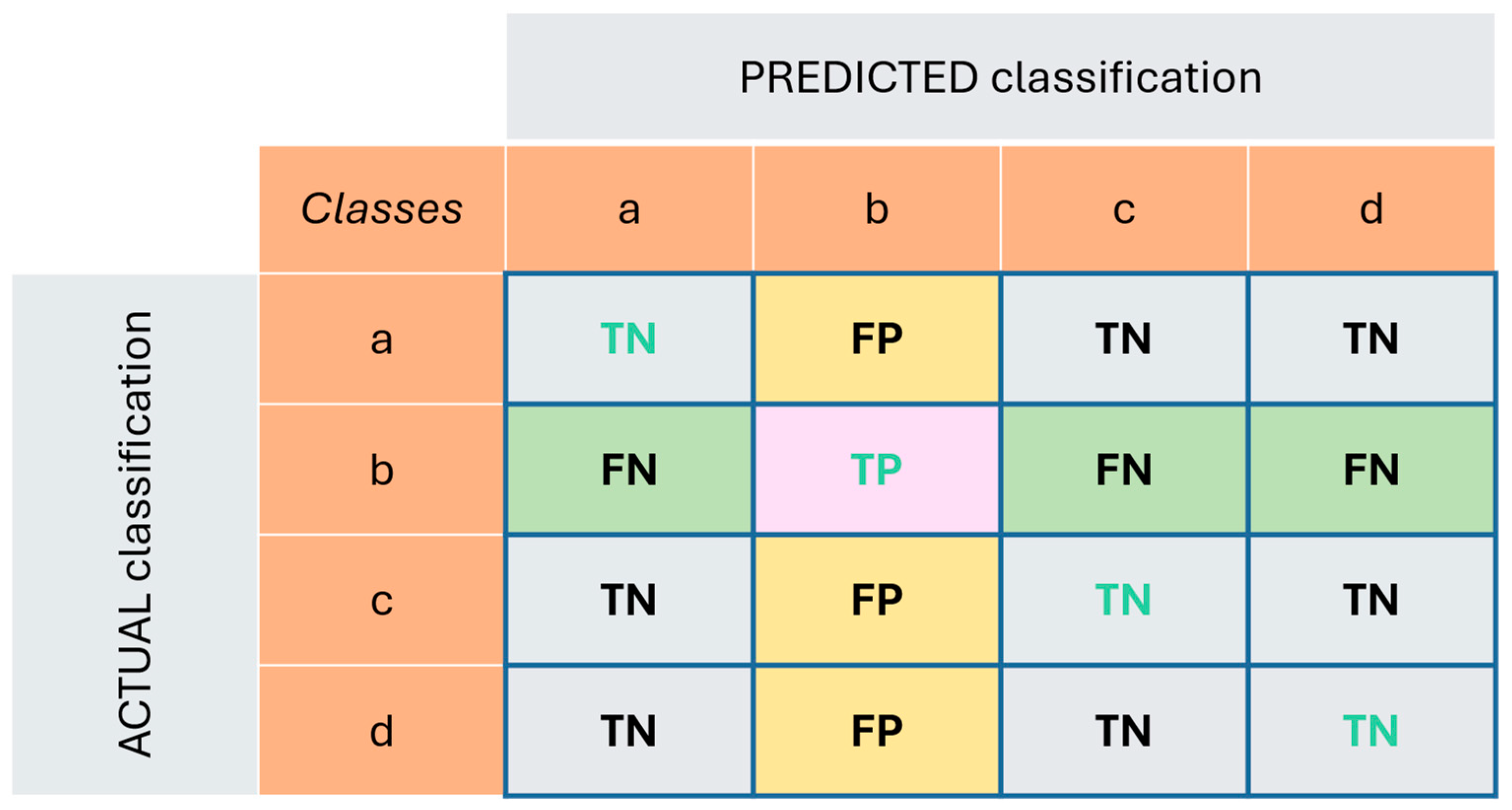

The most widely adopted evaluation tool for classification tasks (binary or multi-class) in the literature is the confusion matrix. An illustration of the technique for multiple classes is presented in Figure 1. The confusion matrix records the occurrences of the actual classification and the classification predicted by the models. For a selected class k, the occurrences are termed as follows: true positive (TP), elements assigned to class k by the model that actually belong to class k; false positive (FP), elements assigned to class k by the model that actually belong to another class; true negative (TN), elements assigned to other classes by the model that indeed do not belong to class k; and false negative (FN), elements assigned to other classes by the model that actually belong to class k. The confusion matrix in Figure 1 highlights the results of the selected class b, where the TP is highlighted in the pink cell, while the FN and FP are highlighted in green and yellow cells, respectively. The green text in the figure marks the cells where the predicted and actual classifications agree.

These counts serve as the building blocks for calculating other evaluation metrics, namely precision, recall, F1-score and area under the receiver operating characteristic curve (AUC). The formulas of these metrics for the generic class, k, are shown in Table 4. Precision measures the reliability of the model's positive predictions for a generic class, i.e., the fraction of elements predicted as class k that actually belong to it, while recall measures the fraction of elements actually belonging to class k that the model identifies. Together, these measurements characterise the reliability and completeness of the model's predictions for each class in the task. The F1-score combines both measures as the harmonic mean of precision and recall, resulting in a score which indicates the overall performance of the model, where the best value is 1 and the worst is 0.
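As a worked illustration, the sketch below computes precision, recall and F1-score for a class k from a confusion matrix using the standard definitions. The matrix values are made up, and the layout (rows as actual classes, columns as predicted classes) is an assumption that may differ from the orientation used in Figure 1.

```python
def per_class_metrics(cm, k):
    """Precision, recall and F1 for class k.

    Assumes cm[i][j] counts samples of actual class i predicted as class j.
    """
    n = len(cm)
    tp = cm[k][k]
    fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, actually another class
    fn = sum(cm[k][j] for j in range(n)) - tp  # actually k, predicted another class
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0  # harmonic mean
    return precision, recall, f1


# Made-up binary confusion matrix: class 0 = "Healthy", class 1 = "3 months".
cm = [
    [40, 10],
    [5, 45],
]
precision, recall, f1 = per_class_metrics(cm, k=1)
print(round(precision, 3), round(recall, 3), round(f1, 3))
```

For class 1 in this example, TP = 45, FP = 10 and FN = 5, so precision is 45/55, recall is 45/50 and the F1-score is 90/105.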

For this study, the models’ performances in binary classification were evaluated using metrics derived from the confusion matrix. To detect overfitting and guard against possible data leakage, five-fold cross-validation is applied to obtain the average F1-score of the models.
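A five-fold cross-validated F1-score can be obtained with scikit-learn as sketched below. The data are synthetic and the classifier settings are illustrative. Wrapping the scaler and classifier in a single pipeline, so that scaling is refitted on each training fold, is one common way to limit leakage; whether the study's pipelines were structured this way is not stated in the source.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic binary classification data (placeholder for the SCADA features).
X, y = make_classification(n_samples=300, n_features=6, random_state=0)

# The scaler is fitted inside each training fold only, which avoids
# leaking validation-fold statistics into training.
model = make_pipeline(
    StandardScaler(),
    RandomForestClassifier(n_estimators=50, random_state=0),
)

cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
scores = cross_val_score(model, X, y, cv=cv, scoring="f1")
cv_score = scores.mean()  # average F1-score over the five folds
print(scores.round(3), round(cv_score, 3))
```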

3. Results and Discussion

This section presents and discusses the results of each pipeline in the binary classification of wind turbine gearbox failure based on the labelled SCADA dataset. The baseline models were assessed using the confusion matrix and five-fold cross-validation to guard against overfitting. The precision, recall, F1-score and cross-validated average F1-scores of each model from the baseline, GPT-4o and DeepSeek-V3 pipelines are presented in Table 5, Table 6 and Table 7, respectively. For simplicity, the cross-validated (CV) average F1-score is referred to as the CV-score from here on. The ML model with the best CV-score in each pipeline is highlighted in the tables below.

Section 3.1 covers the results produced by our baseline models, while Section 3.2 covers the results of the LLM pipelines. The comparison between the three pipelines is discussed in Section 3.3.

3.1. Baseline Models

In the baseline pipeline, the linear models (logistic regression and SVM) performed weakly compared to the ensemble models (random forest and XGBoost) and the neural network model (MLP classifier). The logistic regression model had the lowest CV-score at 0.6878, while XGBoost achieved the highest CV-score at 0.933. This indicates that simple linear models are weaker in prediction tasks involving the non-linear behaviour observed in wind turbine operational data. Although logistic regression and SVM are both inherently linear models, the SVM results were significantly better than those of logistic regression in our setup. The performance gain of SVM can be attributed to the kernel function, which implicitly projects the input data into a higher-dimensional space where a linear hyperplane can separate patterns that are non-linear in the original space. Logistic regression lacks this capability and is restricted to a linear decision boundary in the input space, resulting in lower performance.

Notably, the MLP classifier scored lower than RF, while XGBoost achieved similar performance to RF despite having higher architectural complexity and undergoing fine-tuning. For the non-linear models, the strong performance of random forest despite the absence of fine-tuning can be attributed to the model’s strength in recognising average patterns in datasets. XGBoost and the MLP classifier are complex models that generally perform better on datasets with high complexity, where they can capture subtle feature interactions (e.g., continuous raw temperature signals at high frequency); their performances here were therefore relatively low considering the models’ complexity. In terms of scalability, XGBoost and the MLP classifier would be expected to outperform random forest with an increase in data samples and reduced failure examples. As this is not the focus of this work, a comparative study on datasets with higher sampling rates is not conducted.

Comparing individual model performance for each class, the overall performance of the models was slightly lower for the “Healthy” class than for the “3 months” class. This is likely due to the slight data imbalance between the two classes, as mentioned in Section 2.3.3, which results in a bias towards the class with more data samples.

3.2. LLM-Suggested ML Pipelines

The results of the ML models generated by the LLMs based on the prompt outlined in Section 2.5 are presented and discussed in this section. Due to the differences in LLM architecture, the resulting code differs in quality, length and suggested performance metrics. To enable consistent evaluation, the evaluation metrics introduced in Section 2.6 are applied for comparison. This section compares the results of the LLM-suggested models in their default settings without additional hyperparameter tuning, as no tuning step was included in the pipeline generated by GPT-4o. The key differences in pipeline functionality and design between the LLM-generated pipelines are further discussed in Section 3.3.

All ML pipelines defined in this work included SVM and RF as part of their classification tools. By comparing the results of SVM and RF models from all three pipelines, it is observed that DeepSeek-V3’s model exceeded both GPT-4o and baseline models in CV-score. Notably, the XGBoost model from the DeepSeek-V3 pipeline achieved similar results to the fine-tuned baseline model despite operating at default settings. The leading performance observed across multiple models of the DeepSeek-V3 pipeline is likely enabled by an additional preprocessing step that was absent in the other two pipelines.

In the preprocessing steps of ML models, data timestamps are commonly excluded from the training data because failure samples cluster within a specific time window of the dataset, which creates a risk of data leakage. While each pipeline addressed this risk by excluding timestamps from the training data, DeepSeek-V3 first leveraged the temporal information contained in the timestamps by extracting datetime features from them: “year”, “month”, “day”, “hour”, “day of week” and “is weekend”. These additional features provided the models with temporal information on data seasonality, consequently enabling better prediction accuracy. Although this technique is effective in uncovering seasonal patterns commonly observed in wind turbine operational data, the generated features in DeepSeek-V3’s ML pipeline might have resulted in data leakage from the timestamps. This is because the pipeline extracted timestamp information as detailed as the “hour” of each data sample, which may have revealed more temporal information than just seasonality. Taken together, “year”, “month”, “day” and “hour” locate each sample to within an hour, which largely reconstructs the timestamp that was removed.
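The datetime feature extraction described above can be illustrated with a minimal sketch. This is not the code generated by DeepSeek-V3; the function name `add_datetime_features`, the column names `timestamp` and `gearbox_oil_temp`, and the two-row sample are hypothetical and serve only to show how the six features can be derived with pandas before the raw timestamp is dropped.

```python
import pandas as pd


def add_datetime_features(df: pd.DataFrame, ts_col: str = "timestamp") -> pd.DataFrame:
    """Derive calendar features from a timestamp column, then drop the raw timestamp."""
    out = df.copy()
    ts = pd.to_datetime(out[ts_col])
    out["year"] = ts.dt.year
    out["month"] = ts.dt.month
    out["day"] = ts.dt.day
    out["hour"] = ts.dt.hour
    out["day_of_week"] = ts.dt.dayofweek  # Monday = 0, Sunday = 6
    out["is_weekend"] = (ts.dt.dayofweek >= 5).astype(int)
    # The raw timestamp is removed so that it cannot be used directly as a feature.
    return out.drop(columns=[ts_col])


# Hypothetical SCADA sample (illustrative values only).
scada = pd.DataFrame({
    "timestamp": ["2023-01-07 13:00:00", "2023-01-09 02:00:00"],
    "gearbox_oil_temp": [61.2, 58.7],
})
features = add_datetime_features(scada)
print(features)
```

A more conservative variant would keep only the coarse features (for example “month” and “day of week”), which still encode seasonality without identifying each sample to the hour.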

3.3. Comparative Analysis

In this work, both LLMs were given the same prompt to propose an ML pipeline aimed at performing binary classification on wind turbine gearbox failure based on SCADA datasets. As the ML pipelines proposed by the LLMs varied in code structures, the key components of all three pipelines will be compared and discussed in this section. The comparison will be made in terms of pipeline structure and design with reference to the baseline models. Notably, the same algorithms (SVM, RF, XGBoost) in the pipelines were sourced from the same ML libraries, and the processing time of each pipeline will be discussed in terms of overall time taken from code initiation to result generation instead of individual training times of the algorithms. The scope of data analysis and overall feasibility of the proposed pipelines will be discussed at the end of this section.

The pipeline structure and design can be divided into data preprocessing strategies, defined ML algorithms, defined evaluation metrics, fine-tuning techniques and overall processing time. Among the LLMs, GPT-4o provided the most streamlined ML pipeline, comprising three basic ML models: random forest, gradient boosting classifier and SVM. The pipeline presented results using a confusion matrix and performed cross-validation. This approach aligns with the evaluation metrics adopted for the baseline models. GPT-4o proposed a test–train split of 20:80, where an additional 10% of the data was allocated for training compared to the test–train split ratio that this work applied. The GPT-4o pipeline enabled baseline model functionality without additional input by including essential data preprocessing steps, namely applying a StandardScaler to scale numerical features and a OneHotEncoder to encode categorical features. However, the simplicity of the GPT-4o pipeline came at the expense of comprehensiveness. Notably, GPT-4o did not incorporate data cleaning processes, thereby overlooking critical concerns such as missing values. This issue can introduce biases during model training and reduce prediction accuracy. In terms of the overall processing time from code initiation to result generation, GPT-4o’s ML pipeline was much quicker than the other two pipelines due to the smaller number of ML algorithms investigated and the absence of fine-tuning steps in its code structure.
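A streamlined pipeline of the kind described above can be sketched as follows. This is an illustration under stated assumptions, not the code returned by GPT-4o: the synthetic data, the column names (`gearbox_oil_temp`, `rotor_speed`, `turbine_id`), the use of a stratified split and the fixed random seeds are all hypothetical choices. Only the StandardScaler, OneHotEncoder, 20:80 test–train split, confusion matrix and cross-validation steps are taken from the description in the text.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import confusion_matrix
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Synthetic stand-in for a SCADA dataset (hypothetical columns and values).
rng = np.random.default_rng(0)
n = 200
X = pd.DataFrame({
    "gearbox_oil_temp": rng.normal(60, 5, n),
    "rotor_speed": rng.normal(12, 2, n),
    "turbine_id": rng.choice(["T01", "T02", "T03"], n),
})
y = (X["gearbox_oil_temp"] + rng.normal(0, 2, n) > 62).astype(int)

# Scale numerical features and one-hot encode categorical features.
preprocess = ColumnTransformer([
    ("num", StandardScaler(), ["gearbox_oil_temp", "rotor_speed"]),
    ("cat", OneHotEncoder(handle_unknown="ignore"), ["turbine_id"]),
])
model = Pipeline([
    ("prep", preprocess),
    ("clf", RandomForestClassifier(random_state=0)),  # default hyperparameters
])

# 20:80 test-train split; stratification is an added assumption.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)
model.fit(X_train, y_train)

cm = confusion_matrix(y_test, model.predict(X_test))
cv_f1 = cross_val_score(model, X_train, y_train, cv=5, scoring="f1")
print(cm)
print(cv_f1.mean())
```

Because the scaler and encoder sit inside the `Pipeline`, they are refitted on each cross-validation training fold, so no statistics from the held-out fold leak into preprocessing. Note that this sketch, like the pipeline it imitates, contains no missing-value handling and would fail on data containing NaN values in the scaled or encoded columns unless the estimator tolerates them.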

In contrast, DeepSeek-V3 proposed a more elaborate ML pipeline that encompassed a wide range of data analysis techniques prior to classification. The pipeline began with an exploratory data analysis (EDA) phase, providing overviews of the dataset including feature types, missing value distribution and descriptive statistics with categorical counts. Furthermore, it introduced visualisations of numerical data distributions and feature correlations, which are often underemphasised in the early stages of ML pipelines. Although these steps do not affect the prediction accuracy of the ML models, the EDA techniques are important in improving developers’ understanding of the dataset. The pipeline also implemented SimpleImputer for handling missing data, which was absent in GPT-4o’s approach. DeepSeek-V3 defined seven ML algorithms: logistic regression, random forest, gradient boosting classifier, XGBoost classifier, SVM, K-nearest neighbours and naïve Bayes. The LLM utilised both confusion matrices and receiver operating characteristic (ROC) curves for performance evaluation. The same test–train split ratio was adopted by both LLM-proposed pipelines. Additionally, the pipeline selected the best model under default settings and then optimised it via the grid search method. However, the selection process was hard-coded to consider only three classifiers (RF, XGBoost and gradient boosting classifier), thereby limiting the flexibility and robustness of the fine-tuning approach. Notably, the grid search method is more time-consuming and computationally demanding than the random search method that was employed for fine-tuning the baseline models, because it evaluates every combination in the parameter grid rather than a fixed number of sampled combinations. This results in a longer processing time to fine-tune the selected model than the baseline model. While the random search method was adopted in the baseline models for fine-tuning XGBoost and the MLP classifier, which are more sensitive to hyperparameter tuning, it is also worth considering fine-tuning RF to achieve better prediction accuracy for the baseline model. Among the ML pipelines in this work, DeepSeek-V3’s pipeline had a significantly longer total processing time because it performed EDA on the dataset and adopted the grid search method for model fine-tuning.
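The difference in cost between the two fine-tuning methods can be made concrete with a small sketch. The data, the parameter grid, the median imputation strategy, the three-fold cross-validation and the choice of eight random-search iterations are all assumptions made for illustration; they are not the settings used by DeepSeek-V3 or by the baseline models in this work.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.impute import SimpleImputer
from sklearn.model_selection import GridSearchCV, ParameterGrid, RandomizedSearchCV
from sklearn.pipeline import Pipeline

# Synthetic data with roughly 5% missing values (illustrative only).
rng = np.random.default_rng(0)
X = rng.normal(size=(120, 4))
y = (X[:, 0] + X[:, 1] > 0).astype(int)
X[rng.random(X.shape) < 0.05] = np.nan

pipe = Pipeline([
    ("impute", SimpleImputer(strategy="median")),  # strategy is an assumption
    ("clf", RandomForestClassifier(random_state=0)),
])
param_grid = {
    "clf__n_estimators": [10, 25, 50],
    "clf__max_depth": [3, 5, None],
    "clf__min_samples_leaf": [1, 2, 4],
}
n_folds = 3

# Grid search evaluates every combination: 3 * 3 * 3 = 27 candidates.
n_grid = len(ParameterGrid(param_grid))
grid = GridSearchCV(pipe, param_grid, cv=n_folds, scoring="f1").fit(X, y)

# Random search evaluates only n_iter sampled candidates.
rand = RandomizedSearchCV(
    pipe, param_grid, n_iter=8, cv=n_folds, scoring="f1", random_state=0
).fit(X, y)

n_grid_fits = len(grid.cv_results_["params"]) * n_folds
n_rand_fits = len(rand.cv_results_["params"]) * n_folds
print(n_grid, n_grid_fits, n_rand_fits)
```

In this sketch the grid search requires 81 cross-validation fits against 24 for the random search, before the final refit of the best candidate in each case. The gap widens multiplicatively with every hyperparameter added to the grid, whereas the cost of random search stays fixed by `n_iter`.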

In terms of functionality, both LLMs generated functionally accurate solutions to the classification task. However, DeepSeek-V3’s ML pipeline addressed a wider scope of data analysis, covering data exploration steps. While GPT-4o delivered a concise and efficient pipeline, DeepSeek-V3 offered a more holistic design, accounting for crucial stages of data exploration, cleaning and model optimisation without being explicitly prompted. Of particular concern is GPT-4o’s omission of missing data handling, which reduces the reliability of its generated code. From an ML development standpoint, these findings suggest that DeepSeek-V3 may be more suitable for scenarios requiring comprehensive pipeline generation under zero-shot prompting, especially when developer input is limited or exploratory rigour is essential. However, although GPT-4o performed more weakly under zero-shot prompting, this does not imply that GPT-4o cannot achieve similar performance under CoT prompting, where feedback is provided by users based on the LLMs’ responses. Further investigation into the implementation of CoT prompting could be considered for future studies.

4. Conclusions

This study presents a comparative analysis of two state-of-the-art LLMs, GPT-4o and DeepSeek-V3, in generating ML methods for binary classification of gearbox failure. Using zero-shot prompts, both LLMs were provided with detailed descriptions of SCADA data characteristics and problem context. Traditional ML methods (logistic regression, SVM, RF, XGBoost and an MLP classifier, i.e., a neural network) were developed as a baseline for comparison. After fine-tuning, the baseline models were assessed using the confusion matrix, with the XGBoost and RF models achieving the highest classification performance on the SCADA dataset. In assessing the LLM-generated ML pipelines, the untuned RF model proposed by DeepSeek-V3 achieved the highest CV-score. Additionally, the untuned XGBoost model from the same pipeline delivered performance comparable to the best fine-tuned baseline models.

In the comparative analysis of the LLMs, the study found that DeepSeek-V3 outperformed GPT-4o in addressing the problem described, not only by generating task-appropriate solutions but also by including an unprompted EDA phase in its pipeline and addressing key preprocessing steps. Furthermore, DeepSeek-V3 proposed a feature extraction function in its pipeline that raised ML model prediction accuracy above that of both the GPT-4o and baseline models. Although the adopted feature extraction method might have contributed to data leakage, the strategy of extracting temporal information from timestamps is effective in increasing the prediction accuracy of ML algorithms through data processing. In contrast, although GPT-4o provided a technically correct solution, it omitted critical steps such as data cleaning and cross-validation steps, which compromised the reliability and the accuracy of its suggested ML pipeline. In conclusion, this work recommends the use of DeepSeek-V3 under zero-shot prompting to assist the development of ML pipelines for failure mode classification on datasets of a similar nature to wind turbine SCADA data.

One of the biggest limitations of off-the-shelf LLMs lies in the replicability of responses, even when a specific prompt is provided. LLMs, like humans, do not “reason” identically in response to the same prompt. Developer-specific optimisation can also result in different response quality. For instance, GPT-4o may have a limitation in the token window, which may limit response detail and exclude optimisations that were not prompted. As the evaluation metric adopted in this study was based on the cross-validated F1-scores of the ML models rather than direct LLM performance metrics, the results cannot serve as direct indicators of LLM quality. However, the metric was a good indicator of the quality of the pipelines designed by the LLMs in solving the given problem. The variation in response nuances necessitates a more robust and problem-specific metric, as well as the consideration of more LLMs, such as Gemini and Llama, in future work. While there are LLM benchmarking metrics in the literature that specifically evaluate the quality of code generated by LLMs, these evaluation metrics are only able to reflect the quality of code syntax and functionality. They are unable to reflect the rationale behind the design of the generated code. It must be highlighted that the results presented in this work do not definitively reflect the quality of the LLMs, given the differences in LLM architecture and varying design intentions.

Another limitation of this work is the prompt strategy adopted for the ML pipeline generation. It is important to acknowledge that the description of the SCADA dataset in the prompt does not provide a complete representation of the dataset. Notably, statistical information regarding our dataset was not included in the prompt due to confidentiality. As a result, discrepancies in how different users describe the data will affect the responses of the LLMs. Recent developments have enabled some state-of-the-art LLMs to accept documents and folders as input, and it is believed that providing a data file directly in the prompt would produce a more accurate pipeline design. However, due to confidentiality, this method was not adopted in this work.

As LLMs are a rapidly evolving technology, it is important to acknowledge that this study does not provide a complete representation of the technology’s capability in wind turbine gearbox failure prediction. While this study focused on utilising the generative capacity of LLMs in the development of ML techniques, an important consideration for future work is to leverage the predictive capabilities of the LLM architecture.

Prior studies have established the capacity of pretrained LLMs to perform next-token predictions beyond NLP applications. For future work, the adaptation of LLMs in the context of wind turbine gearbox failure prediction will be explored. As discussed in Section 1, refs. [11,12] proposed prompt engineering approaches to enable LLMs to process and predict numerical time-series data effectively. The success of these approaches in facilitating LLM-based analysis of purely numerical inputs motivates the exploration of similar strategies for classification and continuous data prediction on SCADA datasets of mixed data types. Furthermore, another feasible adaptation approach, adopted by [13], is to apply lightweight fine-tuning to small pretrained LLMs using domain-specific data, enabling the generation of responses that are better suited to SCADA datasets. However, this direction of future work might be unfavourable due to the large amounts of domain-specific data required to fine-tune LLMs for the application.