1. Introduction

With the accelerated transformation of the global energy structure, renewable energy represented by solar power generation has been continuously increasing its share in power systems. However, solar power generation is affected by multiple uncertainties, including meteorological conditions, environmental factors, and equipment status, and the randomness and volatility of its power output pose serious challenges to grid dispatch, electricity market trading, and system stability. To address these issues, many scholars have studied methods for solar power generation forecasting [1, 2, 3].

In recent years, solar power forecasting models have undergone extensive development. Mainstream power forecasting models primarily include physical models [4], statistical models [5], machine learning models, and deep learning models [6, 7]. Physical models integrate underlying physical mechanisms such as solar radiation, atmospheric transmission, and photovoltaic cell characteristics to establish mapping relationships between meteorological parameters (e.g., solar radiation) and output power. This approach begins with solar radiation modeling [8], progresses to clear-sky model development [9, 10], incorporates atmospheric effect calculations (e.g., Rayleigh scattering, aerosol extinction) [11], and finally performs photovoltaic panel photoelectric conversion calculations [12, 13]. One study proposed a physical model based on meteorological parameters and an improved maximum power point tracking (MPPT) algorithm. It predicts meteorological parameters, photovoltaic panel surface radiation, and current–voltage characteristics, and then executes the MPPT algorithm to forecast hourly photovoltaic power output [14]. However, physical models involve complex modeling processes, require high-quality data, and perform poorly under extreme weather conditions. Statistical models, on the other hand, are mostly based on historical power data and meteorological data, employing time-series analysis (e.g., ARIMA and exponential smoothing) [15], regression analysis [16, 17], and Kalman filtering [18] to achieve rapid photovoltaic power forecasting. One study evaluated the performance of ARIMA models in solar radiation forecasting under different climatic conditions, and the results show high accuracy in terms of the mean squared error (MSE), root mean squared error (RMSE), and coefficient of determination (R²) [19].

With the rise of machine learning algorithms, methods such as Random Forest [20], XGBoost [21], and Support Vector Regression [22] have been widely applied in the solar energy field by extracting key features to capture nonlinear relationships in data. Machine learning algorithms offer advantages in handling complex nonlinear relationships, adaptive capability, and big data mining potential. One study predicted solar irradiance based on meteorological parameters and subsequently forecasted power generation using the predicted irradiance. A comparison of three prediction methods (multiple regression, support vector machine, and neural network) revealed that the neural network model achieved the best accuracy [23]. This feature-based prediction framework combined with shallow networks exhibits strong learning capability for nonlinear weather–power relationships. With recent advancements in computing power, deep learning approaches employing Recurrent Neural Network (RNN) [24], Gated Recurrent Unit (GRU) [25], and Long Short-Term Memory (LSTM) [26] for time-series forecasting have further enhanced model learning capability. Based on three years of data from an Australian photovoltaic power station, a study combining LSTM and TCN models demonstrated high accuracy in both single-step and multi-step power prediction, effectively extracting features, capturing information, and learning data patterns [27]. The latest research trends indicate that Transformer architectures based on attention mechanisms show advantages in long-sequence dependency modeling. Research focused on day-ahead photovoltaic power forecasting using Transformer variants demonstrated significant advantages in RMSE metrics and competitive performance across different datasets and prediction horizons [28]. Another study proposed PVTransNet, a multi-step photovoltaic power forecasting model based on Transformer networks, which significantly improves prediction accuracy by integrating historical PV generation data, meteorological observations, weather forecasts, and solar geometry data [29].

These prediction methods primarily rely on single models while neglecting the complementarity between different models, with their performance being constrained by the inherent limitations of each model. For instance, physical models are highly sensitive to the accuracy of meteorological parameters, while statistical models struggle to capture complex nonlinear relationships. As for machine learning and deep learning models, their performance varies significantly when applied to different power plants with complex operating conditions due to variations in meteorological factors and data quality. To address these limitations, researchers have investigated ensemble learning-based prediction models. For example, one study proposed a hybrid deep learning framework for photovoltaic power forecasting that combines Maximum Overlap Discrete Wavelet Transform (MODWT) with Long Short-Term Memory (LSTM) networks, aggregating predictions from multiple LSTM models using a weighted averaging method [30]. Another study explored four different ensemble methods: simple averaging, linear weighted averaging, nonlinear weighted averaging, and inverse variance-based combination. The results demonstrated that the inverse variance-based combination method generally performs best, improving the accuracy of day-ahead predictions by 4.55–36.21% [31].
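As a rough illustration of the inverse variance-based combination mentioned above, the sketch below weights each model by the reciprocal of its validation error variance and compares the result with simple averaging. It is not the implementation from [31]: the function names, the synthetic data, and the three noise levels are all assumptions made for the example.

```python
import numpy as np

def inverse_variance_weights(val_errors):
    """val_errors: (n_models, n_samples) validation errors. Returns weights summing to 1."""
    variances = np.var(val_errors, axis=1)
    inv = 1.0 / np.maximum(variances, 1e-12)  # guard against a zero variance
    return inv / inv.sum()

def combine(predictions, weights):
    """predictions: (n_models, n_samples). Returns the weighted ensemble forecast."""
    return weights @ predictions

# Synthetic data: three hypothetical models with increasing error levels.
rng = np.random.default_rng(0)
noise_scales = np.array([1.0, 3.0, 6.0])
truth_val = rng.uniform(0.0, 50.0, size=500)
truth_test = rng.uniform(0.0, 50.0, size=500)
val_preds = truth_val + rng.normal(0.0, noise_scales[:, None], size=(3, 500))
test_preds = truth_test + rng.normal(0.0, noise_scales[:, None], size=(3, 500))

# Weights are estimated on validation errors and applied to unseen test forecasts.
weights = inverse_variance_weights(val_preds - truth_val)
mae_ensemble = np.mean(np.abs(combine(test_preds, weights) - truth_test))
mae_simple = np.mean(np.abs(test_preds.mean(axis=0) - truth_test))
```

In this toy setting the least noisy model receives the largest weight. Note that the weights stay fixed once estimated, which is exactly the static behaviour that a time-dependent scheme would relax.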

Ensemble learning-based forecasting frameworks can enhance accuracy through integration strategies; yet, these methods typically focus only on simple or weighted averaging of model outputs. They still rely on single models for feature selection and lack systematic screening criteria for sub-model pool selection, failing to consider the impact of model diversity on prediction accuracy. For result integration, they employ either simple averaging or static weighting, without accounting for temporal dimensions or proper model weighting under different weather conditions.

To address these limitations, this paper proposes a multi-stage ensemble learning framework that optimizes three key aspects: feature selection, model screening, and dynamic result integration. Scholars have utilized open data and machine learning algorithms to predict short-term (hourly) power generation at newly operational photovoltaic power plants, emphasizing the importance of multi-location evaluation [32]. Accordingly, the proposed method was tested at multiple sites within public datasets and at a real-world 75 MW power station in Inner Mongolia. Experimental results demonstrate that the proposed method outperforms baseline models across multiple sites.

The highlights of this paper are as follows:

At the feature selection level, a multi-model-weighted RFE-based evaluation method is proposed, which effectively enhances the discriminative power and robustness of input features by leveraging complementary advantages.

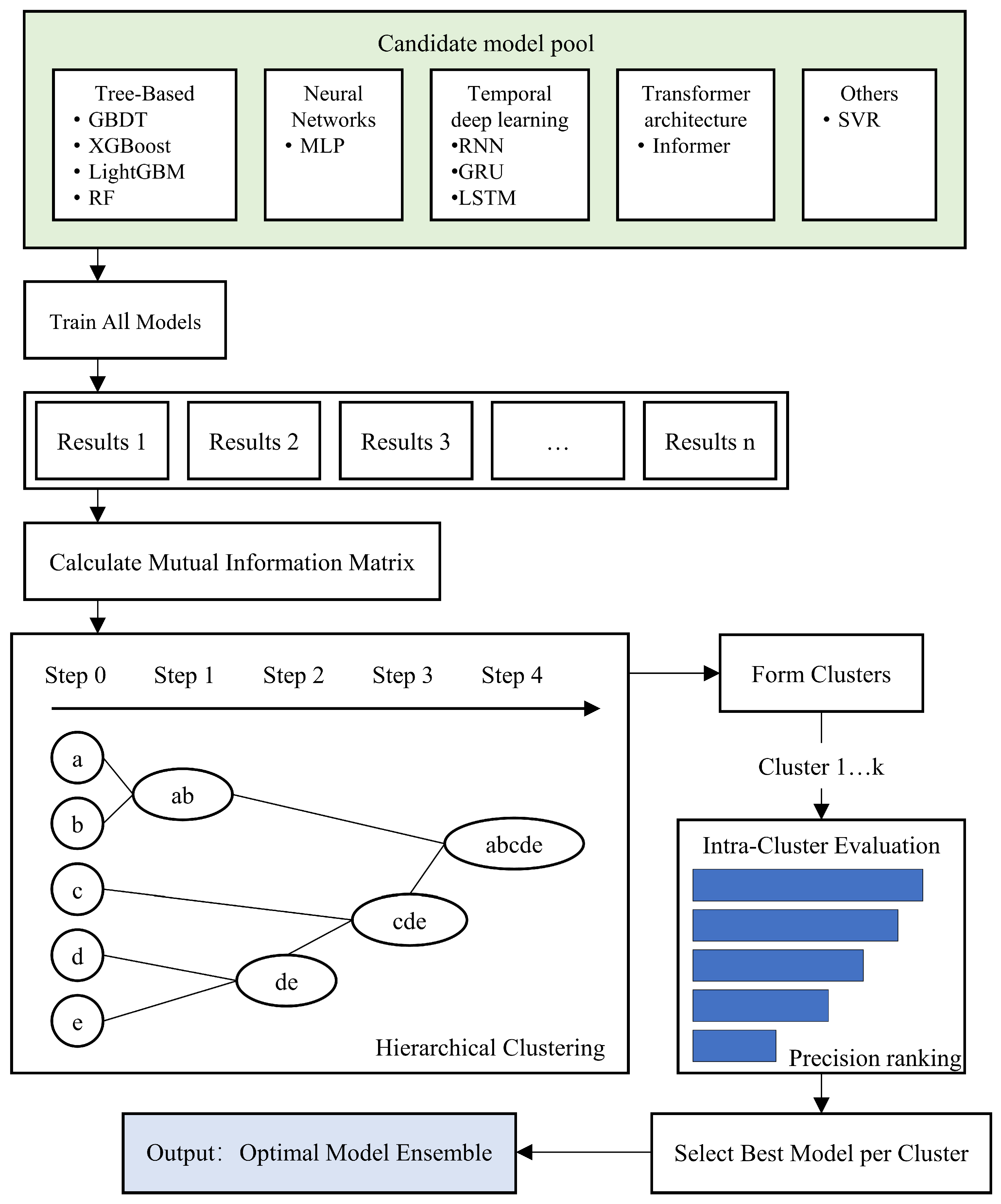

At the model construction level, an adaptive screening mechanism based on mutual information and hierarchical clustering is introduced to build a heterogeneous model pool, achieving intra-group competition and inter-group complementarity, thereby enhancing model diversity and generalization capability.

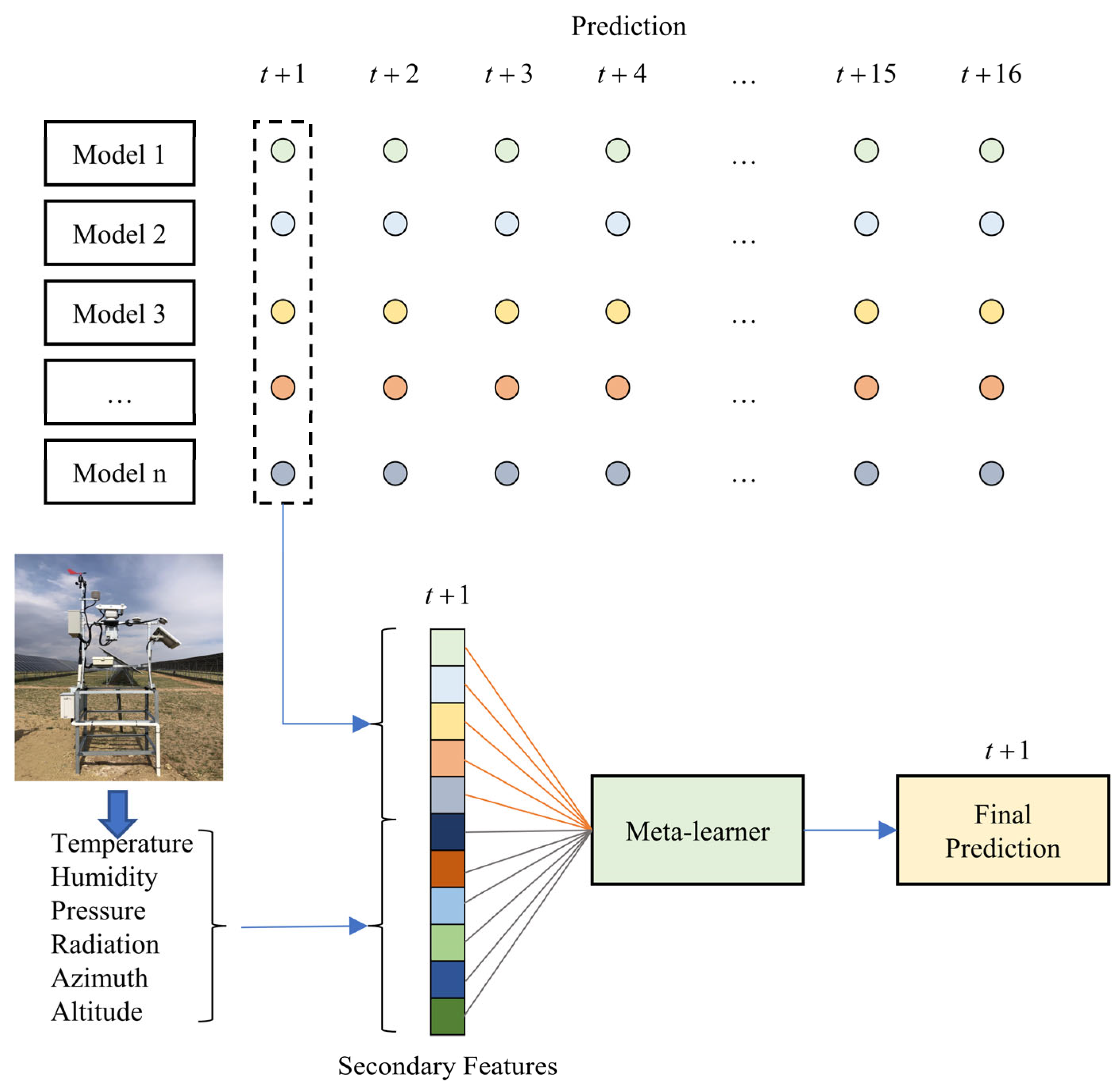

At the ensemble strategy level, traditional static weighting methods are improved by integrating multi-model outputs and real-time meteorological data to construct a time-dependent dynamic weight optimization module, boosting prediction accuracy across different time steps and weather conditions.

The performance of the proposed forecasting framework was validated across four dimensions—feature selection, model screening, ensemble strategy, and comprehensive performance—using data from a 75 MW power plant and the open-source PVOD dataset.

4. Conclusions

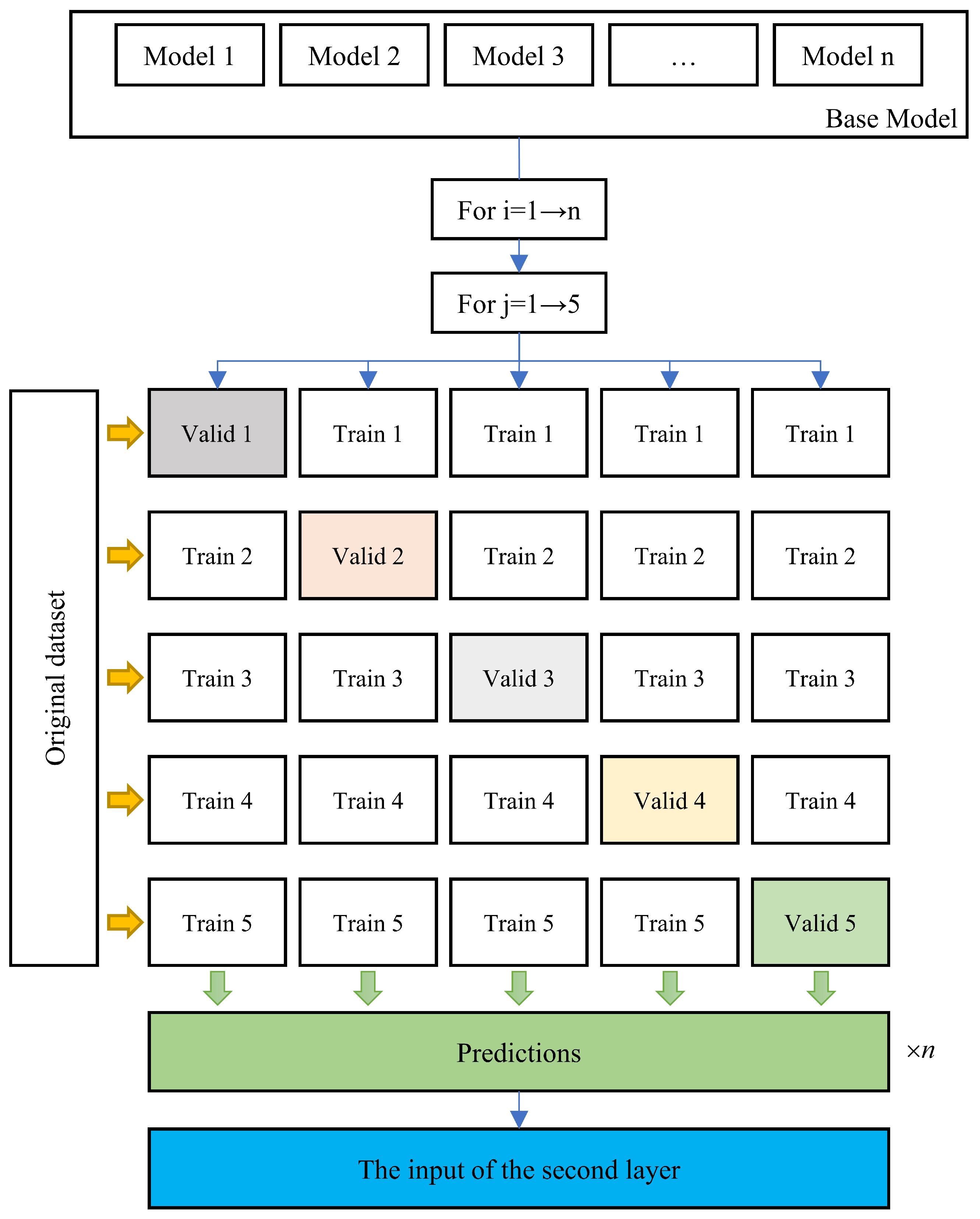

This paper proposes a multi-stage ensemble learning framework. The primary contributions lie in the strategies introduced for feature selection, model screening, and prediction result integration. For feature selection, a Recursive Feature Elimination (RFE) algorithm based on weighted scores from LightGBM, XGBoost, and MLP is employed to screen high-contribution features. In terms of model selection, mainstream power prediction models are utilized as a candidate pool. The similarity in predictive behavior among models is computed based on mutual information, and multiple categories of models with similar predictive behaviors are identified through hierarchical clustering. Guided by the principles of intra-group competition and inter-group complementarity, an optimal model combination is selected to enhance overall performance. For prediction integration, a secondary training process is applied to the predictions of the selected models, with separate modeling for different time steps to establish an adaptive weighting strategy.

In terms of feature selection, feature subsets are constructed by recursively eliminating the least important features. For each distinct feature subset, LightGBM, XGBoost, and MLP models are trained separately, with a weighted score serving as the performance metric for that particular subset. Experiments compared three cases: employing no feature selection strategy, utilizing the feature selection strategy from the open-source library sklearn, and applying the RFE selection strategy based on LightGBM–XGBoost–MLP weighted scoring. The evaluation was conducted using data from three sites, including a 75 MW power station and the open-source PVOD dataset. The proposed method achieved the best performance in both MAE and R² metrics. The MAE values for sites P1, P2, and P3 were 3.932 MW, 0.232 kW, and 0.951 kW, respectively, with corresponding R² values of 0.90, 0.92, and 0.90. The experimental results demonstrate that the proposed feature selection strategy has a positive impact on power forecasting.
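The recursive elimination loop described above might be sketched as follows. This is only a minimal illustration under several assumptions. scikit-learn's `GradientBoostingRegressor` and `RandomForestRegressor` stand in for LightGBM and XGBoost so the example has no extra dependencies. The score weights `(0.4, 0.4, 0.2)`, the use of validation R² as the per-model score, and the rule of dropping the feature with the lowest mean tree importance are all hypothetical choices, not values taken from the paper.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor, RandomForestRegressor
from sklearn.metrics import r2_score
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPRegressor

def weighted_score_rfe(X, y, score_weights=(0.4, 0.4, 0.2), seed=0):
    """Return (best_feature_indices, history) for a multi-model-scored RFE."""
    X_tr, X_va, y_tr, y_va = train_test_split(X, y, test_size=0.3, random_state=seed)
    remaining = list(range(X.shape[1]))
    history = []  # one (feature_subset, weighted_score) pair per subset size
    while remaining:
        # Stand-ins: two tree ensembles play the roles of LightGBM and XGBoost.
        models = [
            GradientBoostingRegressor(n_estimators=50, random_state=seed),
            RandomForestRegressor(n_estimators=50, random_state=seed),
            MLPRegressor(hidden_layer_sizes=(16,), max_iter=1000, random_state=seed),
        ]
        scores = []
        for model in models:
            model.fit(X_tr[:, remaining], y_tr)
            scores.append(r2_score(y_va, model.predict(X_va[:, remaining])))
        history.append((list(remaining), float(np.dot(score_weights, scores))))
        if len(remaining) == 1:
            break
        # Assumed ranking rule: drop the feature with the lowest mean tree importance.
        importance = (models[0].feature_importances_ + models[1].feature_importances_) / 2
        remaining.pop(int(np.argmin(importance)))
    best_subset, _ = max(history, key=lambda item: item[1])
    return best_subset, history

# Synthetic check: only features 0 and 1 carry signal, the other four are noise.
rng = np.random.default_rng(0)
X = rng.normal(size=(300, 6))
y = 3.0 * X[:, 0] + 2.0 * X[:, 1] + rng.normal(scale=0.1, size=300)
selected, history = weighted_score_rfe(X, y)
```

Scoring every subset with all three models, rather than with a single estimator, is what distinguishes this loop from a standard single-model RFE. The subset finally returned is the one with the highest weighted score across the whole elimination path.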

In terms of model selection, to address the lack of systematic criteria and the inherent randomness and arbitrariness in traditional methods, this paper proposes a model selection strategy based on the principles of intra-group competition and inter-group complementarity. The similarity in prediction behavior among different models is quantified using mutual information, followed by hierarchical clustering to group models with similar prediction behaviors. Experiments compared three scenarios: selecting k models based on hierarchical clustering, selecting the same number of models based on Top-k accuracy, and randomly selecting k models. For sites P4, P5, and P6, the proposed model selection strategy achieved the lowest MAE values of 0.716 kW, 0.818 kW, and 1.454 kW, respectively, and the highest R² values of 0.93, 0.92, and 0.85, respectively. It is noteworthy that selecting the same number of models based on Top-k accuracy did not yield the lowest MAE or the highest R². The experimental results demonstrate that quantifying and clustering prediction behaviors to select models can enhance model diversity while preserving model disparity, thereby exerting a positive influence on final performance.
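One way to picture the screening step is the sketch below. It estimates pairwise normalized mutual information between discretized model predictions, clusters the models on the distance 1 − NMI, and keeps the lowest-MAE model in each cluster. The quantile binning, the average-linkage choice, and the function names are assumptions for illustration; the paper does not specify these details here.

```python
import numpy as np
from scipy.cluster.hierarchy import fcluster, linkage
from scipy.spatial.distance import squareform
from sklearn.metrics import normalized_mutual_info_score

def quantile_bins(values, n_bins=10):
    """Discretize a 1-D array into quantile bins so MI can be estimated."""
    edges = np.quantile(values, np.linspace(0.0, 1.0, n_bins + 1)[1:-1])
    return np.digitize(values, edges)

def select_diverse_models(preds, y_true, n_groups, n_bins=10):
    """preds: (n_models, n_samples). Returns (selected_indices, cluster_labels)."""
    n_models = preds.shape[0]
    binned = [quantile_bins(p, n_bins) for p in preds]
    dist = np.zeros((n_models, n_models))
    for i in range(n_models):
        for j in range(i + 1, n_models):
            nmi = normalized_mutual_info_score(binned[i], binned[j])
            dist[i, j] = dist[j, i] = 1.0 - nmi  # similar behaviour -> small distance
    tree = linkage(squareform(dist, checks=False), method="average")
    labels = fcluster(tree, t=n_groups, criterion="maxclust")
    mae = np.mean(np.abs(preds - y_true), axis=1)
    selected = []
    for group in np.unique(labels):
        members = np.flatnonzero(labels == group)
        # Intra-group competition: keep the most accurate member of each cluster.
        selected.append(int(members[np.argmin(mae[members])]))
    return sorted(selected), labels

# Synthetic check: models 0/1 share one error pattern, models 2/3 share another.
rng = np.random.default_rng(0)
y_true = rng.uniform(0.0, 10.0, size=400)
err_a, err_b = rng.normal(0.0, 2.0, size=(2, 400))
preds = np.stack([
    y_true + err_a + rng.normal(0.0, 0.05, 400),
    y_true + err_a + rng.normal(0.0, 0.05, 400),
    y_true + err_b + rng.normal(0.0, 0.05, 400),
    y_true + err_b + rng.normal(0.0, 0.05, 400),
])
selected, labels = select_diverse_models(preds, y_true, n_groups=2)
```

Unlike a Top-k accuracy rule, which could pick two near-duplicate models from the same cluster, this procedure returns one representative per behavioural group.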

In terms of integrating model prediction outcomes, this study improves upon traditional static weighting schemes. Along the temporal dimension, separate models are constructed for each of the 16 prediction time steps to allow different models to perform optimally at appropriate time scales. For the inputs of the secondary training, initial meteorological parameters are incorporated to enable the model to learn how to make prediction decisions under varying weather conditions. Experiments compared two approaches: weighted averaging of different models and the proposed SVR-based secondary training method. For sites P7, P8, and P9, the proposed strategy achieved the lowest MAE values of 1.212 kW, 0.538 kW, and 0.774 kW, respectively, and the highest R² values of 0.87, 0.90, and 0.89, respectively. The experiments demonstrate that the proposed dynamic weighting scheme outperforms traditional static weighting strategies.
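The per-time-step secondary training can be sketched as one SVR per prediction step, each fed the base-model outputs for that step together with meteorological inputs. The code below is a minimal sketch under stated assumptions. The RBF kernel and the hyperparameters `C` and `epsilon` are illustrative, the binary `cloudy` flag is a hypothetical stand-in for real meteorological parameters, and the demo uses 4 steps rather than the paper's 16 to keep it small.

```python
import numpy as np
from sklearn.svm import SVR

def fit_stepwise_stackers(base_preds, weather, targets):
    """base_preds: (n_samples, n_models, n_steps); weather: (n_samples, n_weather);
    targets: (n_samples, n_steps). Fits one SVR per prediction step."""
    stackers = []
    for step in range(targets.shape[1]):
        features = np.hstack([base_preds[:, :, step], weather])
        model = SVR(kernel="rbf", C=10.0, epsilon=0.01)  # assumed hyperparameters
        stackers.append(model.fit(features, targets[:, step]))
    return stackers

def predict_stepwise(stackers, base_preds, weather):
    """Apply each step's SVR to that step's base predictions plus weather inputs."""
    columns = [
        model.predict(np.hstack([base_preds[:, :, step], weather]))
        for step, model in enumerate(stackers)
    ]
    return np.column_stack(columns)

# Synthetic demo: model A is biased on cloudy samples, model B on clear ones.
rng = np.random.default_rng(0)
n_samples, n_steps = 400, 4
targets = rng.uniform(0.0, 1.0, size=(n_samples, n_steps))
cloudy = rng.integers(0, 2, size=(n_samples, 1)).astype(float)
pred_a = targets + 0.3 * cloudy + rng.normal(0.0, 0.02, targets.shape)
pred_b = targets + 0.3 * (1.0 - cloudy) + rng.normal(0.0, 0.02, targets.shape)
base_preds = np.stack([pred_a, pred_b], axis=1)  # (n_samples, 2, n_steps)

train, test = slice(0, 300), slice(300, None)
stackers = fit_stepwise_stackers(base_preds[train], cloudy[train], targets[train])
stacked = predict_stepwise(stackers, base_preds[test], cloudy[test])
mae_stacked = np.mean(np.abs(stacked - targets[test]))
mae_average = np.mean(np.abs(base_preds[test].mean(axis=1) - targets[test]))
```

In this toy case a fixed average cannot remove the weather-dependent bias, while the second-stage learner can use the weather input to decide which base model to trust.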

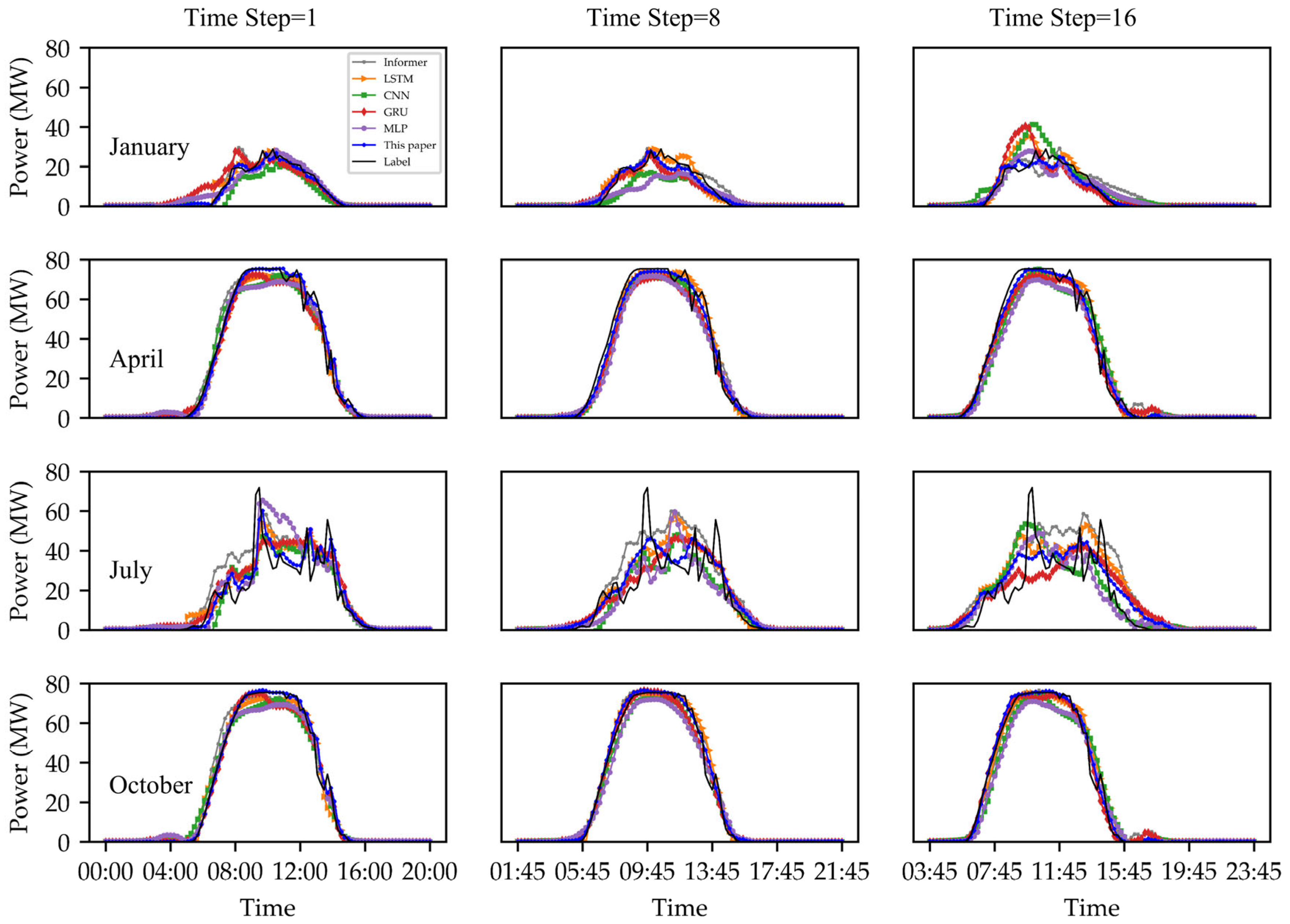

In terms of overall performance, this paper compares the proposed model with five mainstream power forecasting models, namely, Informer, LSTM, CNN, GRU, and MLP. Over a one-year dataset, the proposed model achieved the best performance in 10 of the 12 months. Across different prediction time steps, it exhibited higher correlation (0.94–0.98) and lower RMSE (5.74–9.86 MW) than the baseline models. The experiments demonstrate that the proposed multi-stage ensemble learning framework, which jointly optimizes feature selection, model screening, and dynamic weighted integration, enhances both the accuracy and robustness of power forecasting.
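For reference, the two per-time-step metrics quoted above can be computed as in the following sketch. It assumes that the reported correlation is the Pearson coefficient, which the text does not state explicitly. The function name and the small arrays are illustrative only.

```python
import numpy as np

def per_step_metrics(y_true, y_pred):
    """y_true, y_pred: (n_samples, n_steps). Returns RMSE and Pearson r per step."""
    rmse = np.sqrt(np.mean((y_pred - y_true) ** 2, axis=0))
    corr = np.array([
        np.corrcoef(y_true[:, k], y_pred[:, k])[0, 1]
        for k in range(y_true.shape[1])
    ])
    return rmse, corr

# Tiny illustrative example with two prediction steps (values in MW).
y_true = np.array([[10.0, 20.0], [30.0, 40.0], [50.0, 60.0]])
y_pred = np.array([[12.0, 20.0], [28.0, 43.0], [52.0, 57.0]])
rmse, corr = per_step_metrics(y_true, y_pred)
```

Here the first step has errors of 2, -2, and 2 MW, so its RMSE is 2 MW. The second step has errors of 0, 3, and -3 MW, giving an RMSE of √6 MW.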