1. Introduction

With the increasing severity of global warming, extreme weather events have become more frequent, inflicting substantial damage on the national economy. Among these adverse meteorological phenomena, icing of transmission lines is one of the most severe disasters in power systems, posing a significant threat to the safety and stability of electricity networks. The history of transmission line icing accidents dates back to 1932, and since then such disasters have struck countries and regions including Russia, Canada, the United States, the United Kingdom, and China [1,2,3,4]. Because power systems are complex lifeline projects in which the transmission link is of utmost importance, any problem in this link can severely disrupt production and daily life, leading to incalculable losses. As the demand for power continues to grow, the harm caused by the combination of ice and other loads on large-span high-voltage transmission lines is becoming increasingly serious. Therefore, research on transmission line icing is of great significance.

In recent years, domestic and international researchers have extensively studied transmission-line icing, and research content and methods have advanced in step with technological progress. Current icing-prediction methods fall into three main categories: physical models, statistical approaches, and machine-learning models. Physical models, such as the Imai, Lenhard, and Makkonen models [5,6], exhibit notable differences. The Imai model focuses on heat and mass transfer and on the energy balance at the ice–air interface; it is suitable for preliminary predictions under stable weather but tends to underestimate ice thickness in high winds. The Lenhard model emphasizes the dynamics of droplet impact and employs empirical adhesion coefficients; it accurately describes the accumulation of large droplets but requires site-specific parameter calibration. The Makkonen model integrates thermodynamic and dynamic processes into a multi-factor framework that offers broader applicability, though its complex parameters complicate implementation. All three models differ in how they calculate ice-growth rate, process heat exchange, and apply correction coefficients.

Nevertheless, accurately predicting ice thickness remains challenging because key parameters such as droplet radius and adhesion coefficients are difficult to measure during icing events, which limits practical application. To address these limitations, conventional statistical methods, including multiple linear regression, time-series analysis, and extreme-value theory [7,8,9], have been adopted. However, these approaches rely on numerous statistical assumptions, struggle to incorporate micro-meteorological factors, and consequently suffer from low accuracy and limited applicability.

Compared with traditional physical and statistical methods, machine learning has significantly improved prediction accuracy by capturing the complex nonlinear relationships between ice thickness and meteorological factors such as temperature, humidity, and wind speed, and has thus become a powerful tool for ice thickness prediction [10,11]. In the early stages of exploration, machine-learning techniques such as back propagation (BP) neural networks and support vector machines (SVMs) quickly became research hotspots owing to their strong data processing capabilities [12,13]. For instance, Reference [14] presented a framework that uses the adaptive relevance vector machine (ARVM) to predict icing fault probabilities, achieving enhanced predictive accuracy. Reference [15] explored a BP neural network architecture for short-term icing forecasting. Nevertheless, these methods have limitations: the BP network is susceptible to premature convergence to local optima, while SVMs can be computationally intensive. To address these issues, scholars have introduced optimization algorithms. Reference [16] proposed a prediction model based on the generalized regression neural network (GRNN) and the fruit fly optimization algorithm (FOA) to enhance the accuracy and stability of icing prediction. Reference [17] presented a galloping prediction model for iced transmission lines based on the particle swarm optimization-conditional generative adversarial network (PSO-CGAN). Reference [18] formulated weighted support vector machine regression (WSVR), a modification of conventional SVM regression, and tuned its parameters with a hybrid swarm intelligence approach that integrates particle swarm optimization (PSO) with ant colony optimization (ACO), notably improving predictive performance. Reference [19] put forth an ice accretion forecasting system that combines the fireworks algorithm with the weighted least squares support vector machine (W-LSSVM) to harness the complementary advantages of both methods. Reference [20] examined a hybrid approach in which a wavelet support vector machine (w-SVM) was coupled with the quantum fireworks algorithm (QFA) to improve predictive capability. Reference [21] proposed a BOA-VMD-LSTM hybrid model, which demonstrated superior performance.

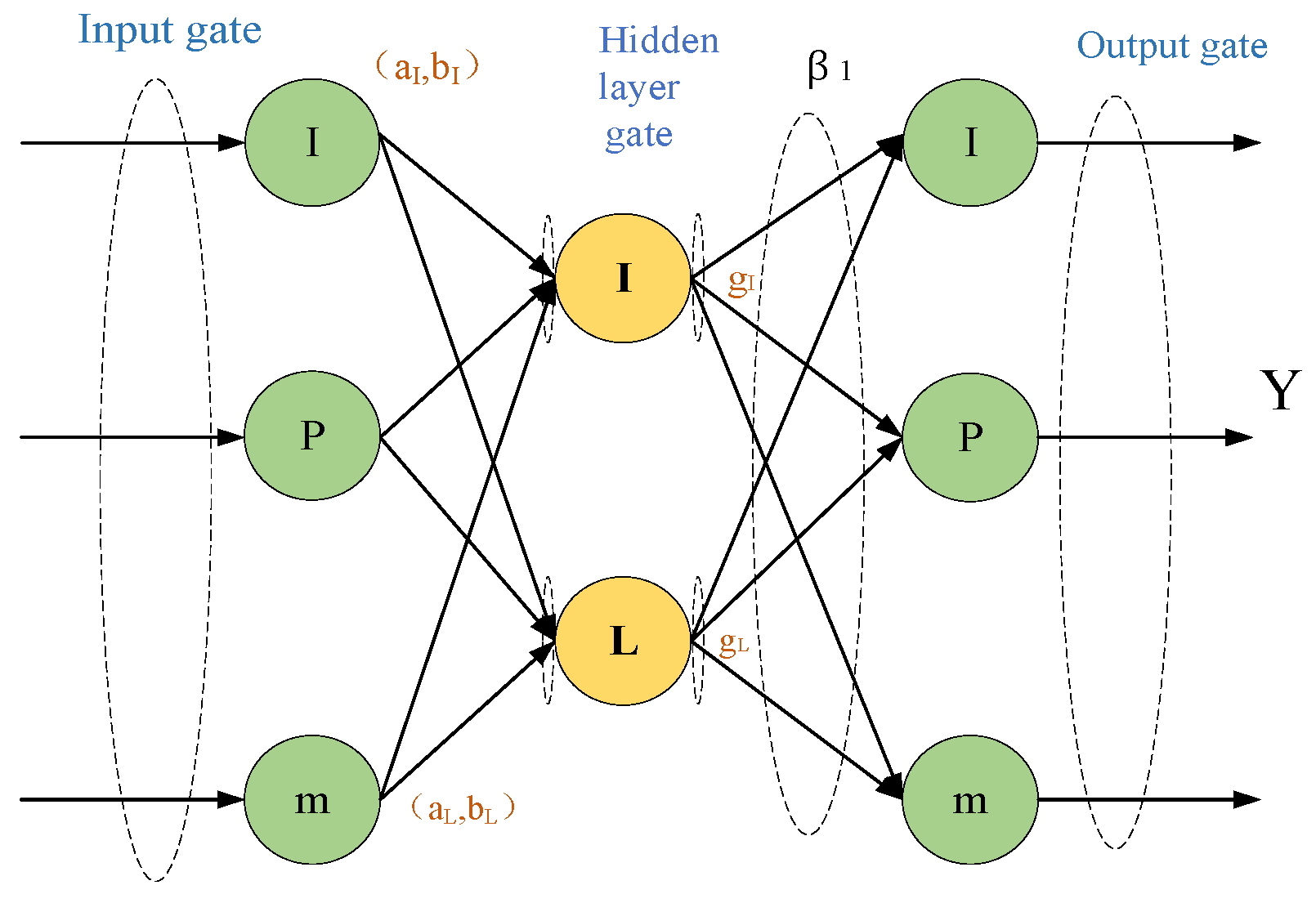

Compared with the aforementioned methods, the extreme learning machine (ELM) can overcome these shortcomings. It effectively reduces the risk of falling into local optima while significantly improving learning speed and generalization ability. Therefore, ELM has been widely applied in the field of prediction and has achieved satisfactory results in most cases [22,23]. However, the random initialization of its weights and biases limits its performance. To address this issue, scholars have adopted various improvements. Reference [24] used the kernel extreme learning machine (KELM) to address model instability and improve predictive precision.

Table 1 provides a detailed comparison of the machine-learning algorithms discussed above.

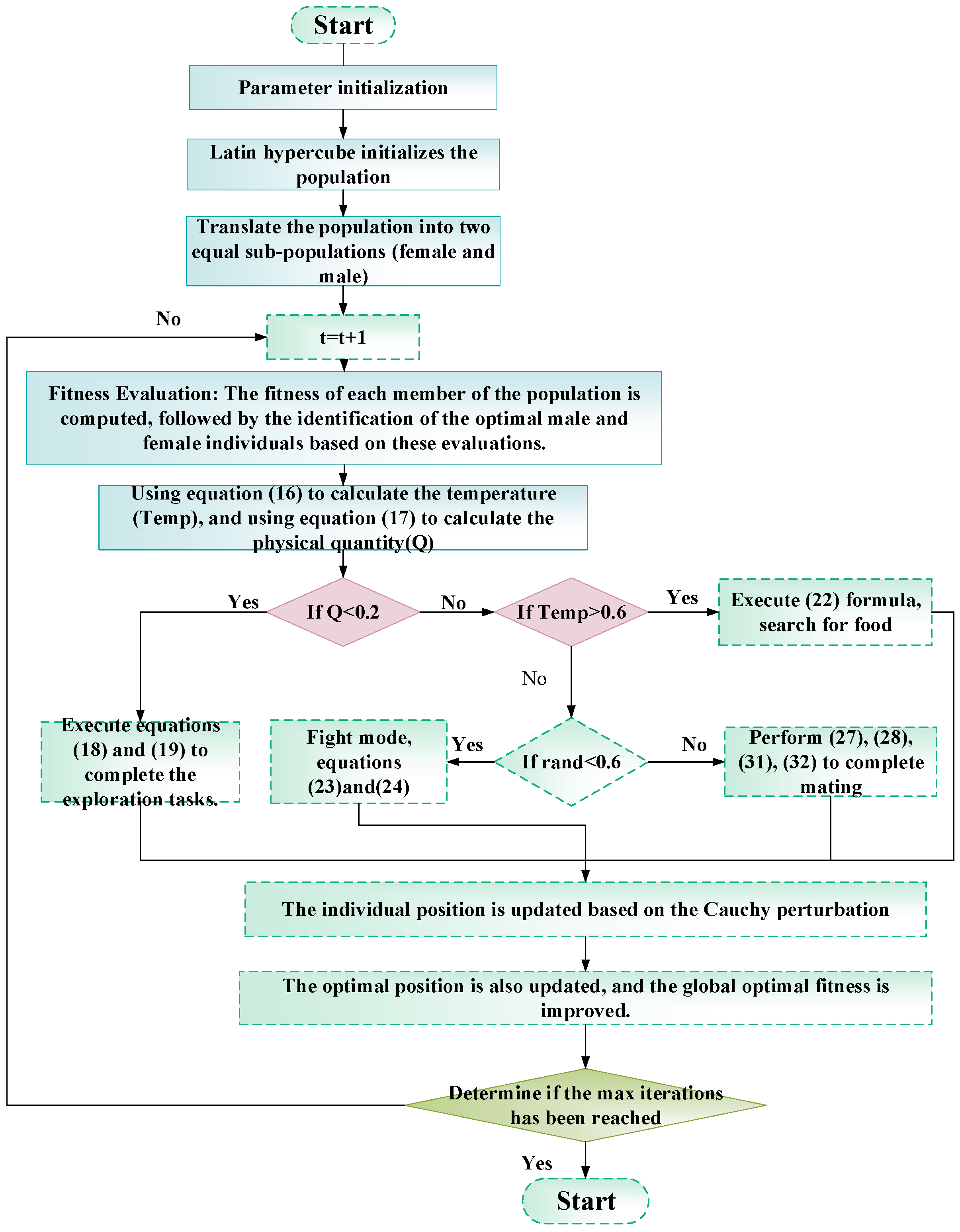

Intelligent optimization algorithms have provided new ideas for the parameter optimization of machine learning models. Among these algorithms, the snake optimization (SO) algorithm stands out. It models the feeding, mating, and combat behaviors of male and female snakes in different scenarios of food supply and temperature [

25]. Based on the living habits of snakes, the algorithm is divided into exploration and exploitation phases. Many scholars, both domestically and internationally, have investigated this algorithm and found that compared to other algorithms, it effectively balances local and global aspects of the problem. During the search process, these two aspects can transform into each other, thus avoiding local optima and achieving better convergence. Thanks to its excellent global search capability, SO has been successfully applied in various fields [

26,

27]. Additionally, from the perspective of combining a deep hidden layer kernel extreme learning machine (DHKELM) with optimization algorithms [

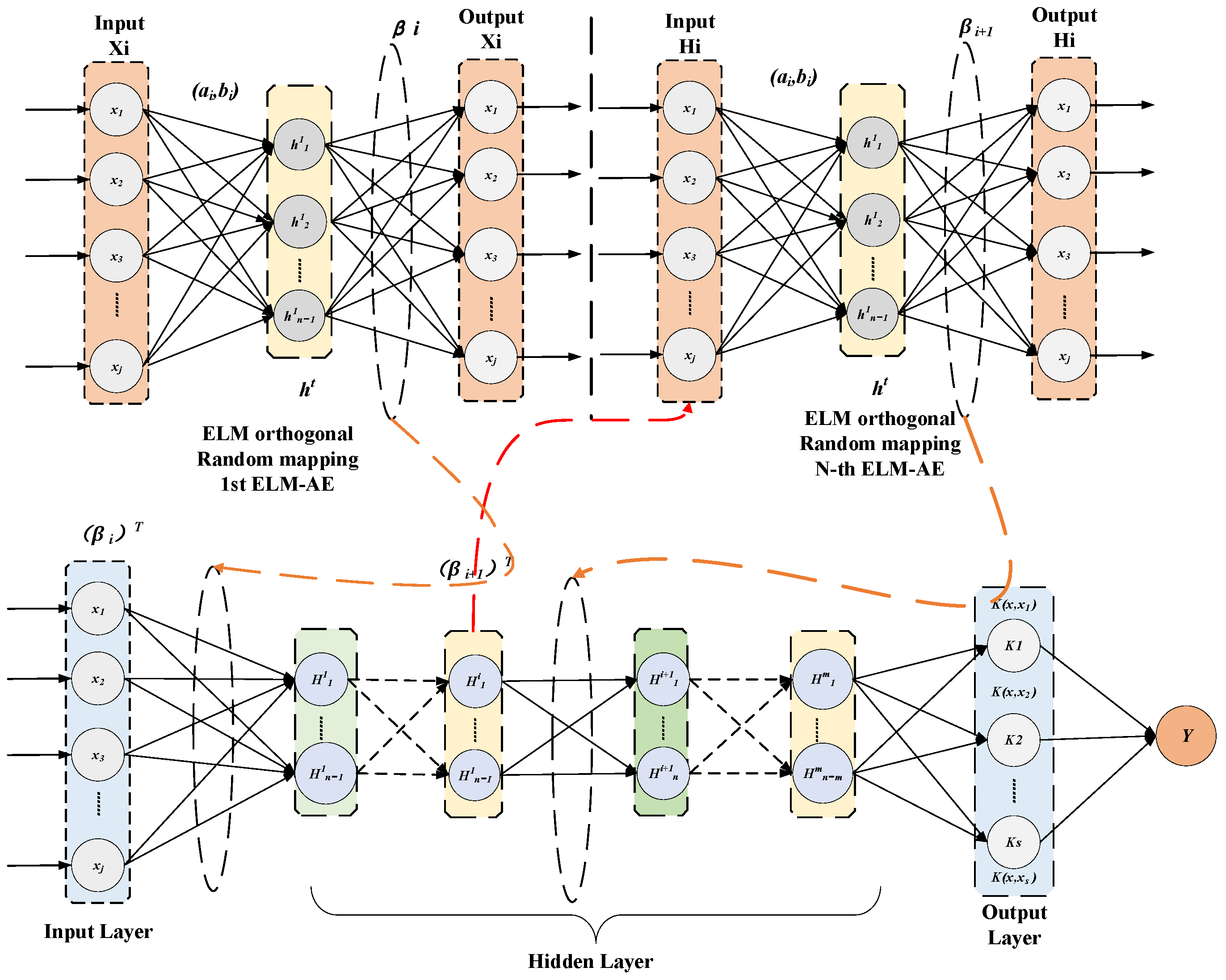

28], few researchers have applied it to conductor icing forecast. Therefore, this paper employs a hybrid model to predict transmission line icing.

The primary contributions of this study can be summarized as follows:

This study puts forth an improved snake optimization algorithm (ISO). The optimization capabilities of this algorithm are augmented through the integration of three distinct mechanisms: Latin hypercube sampling, a t-distribution mutation operator, and a Cauchy mutation strategy.

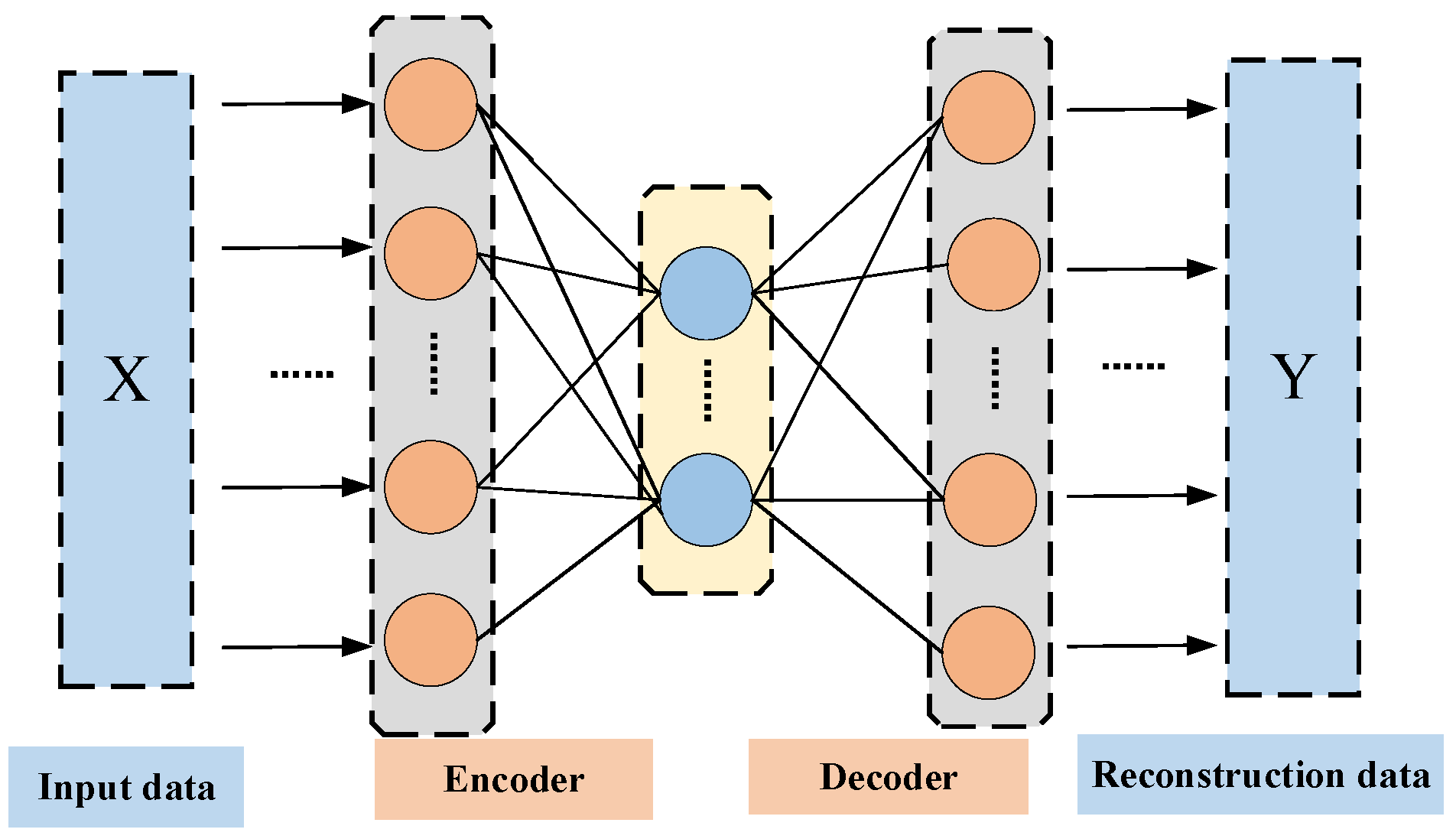

A DHKELM is constructed, combining the deep feature extraction of DELM and the kernel mapping advantages of HKELM to improve the model’s expressive ability.

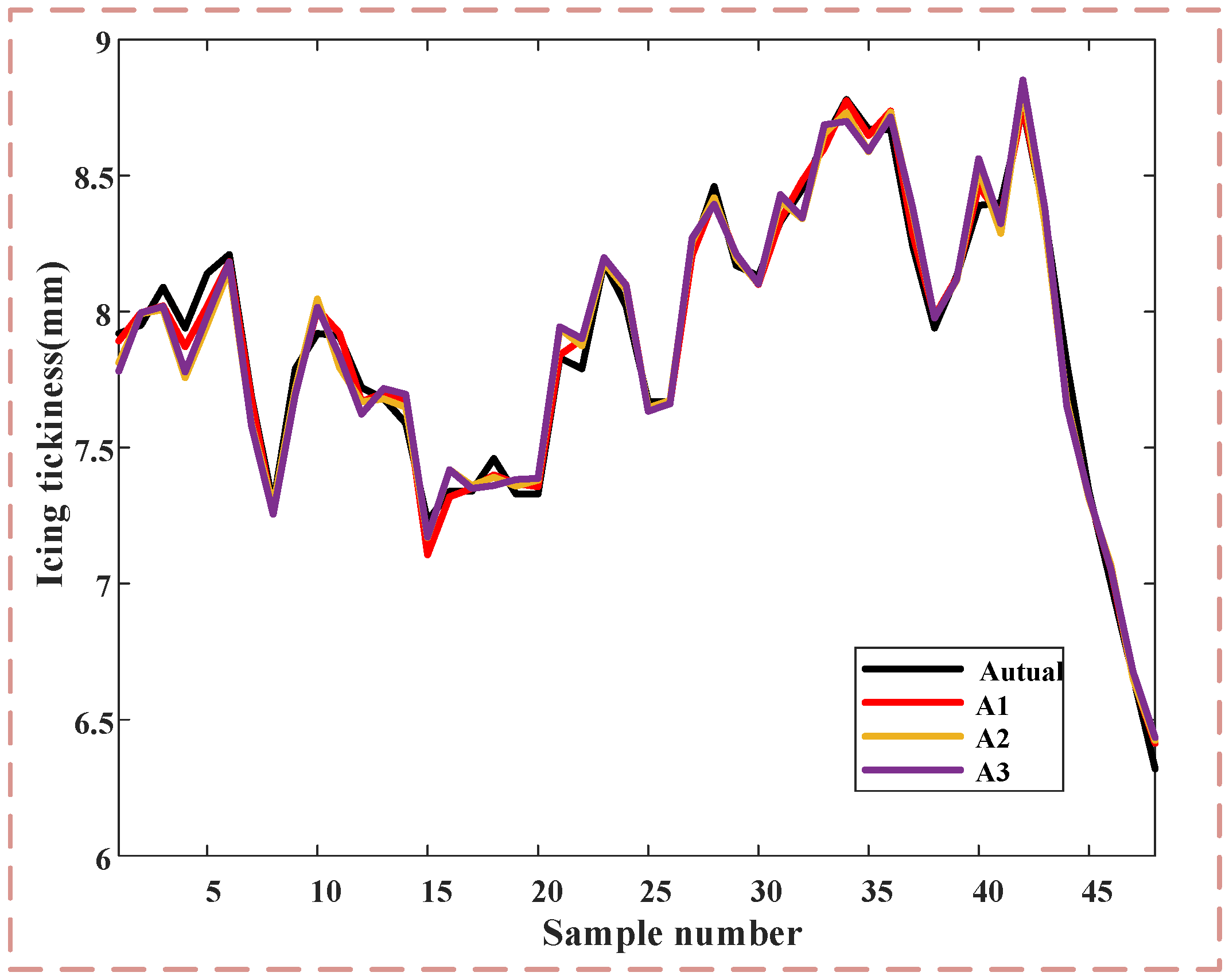

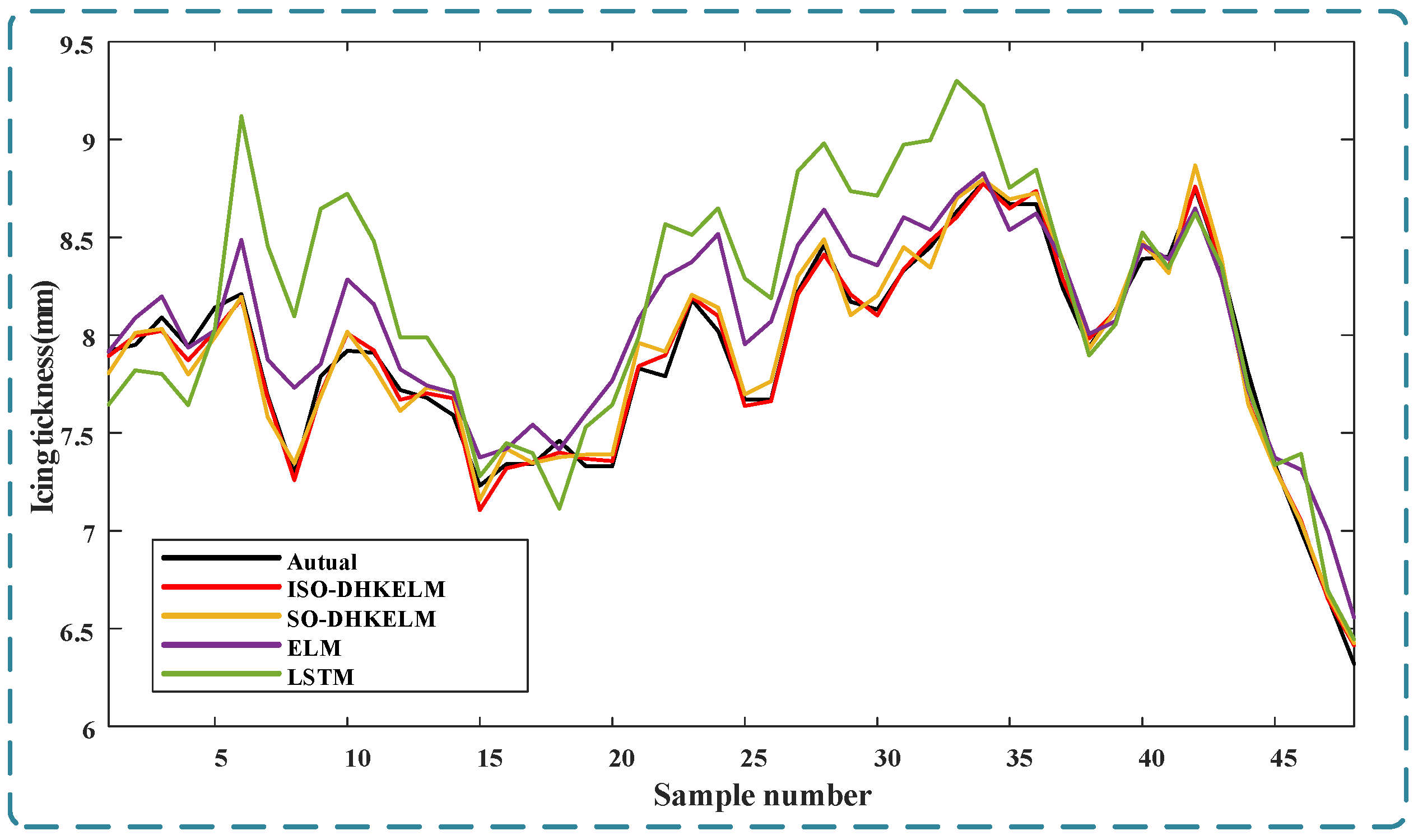

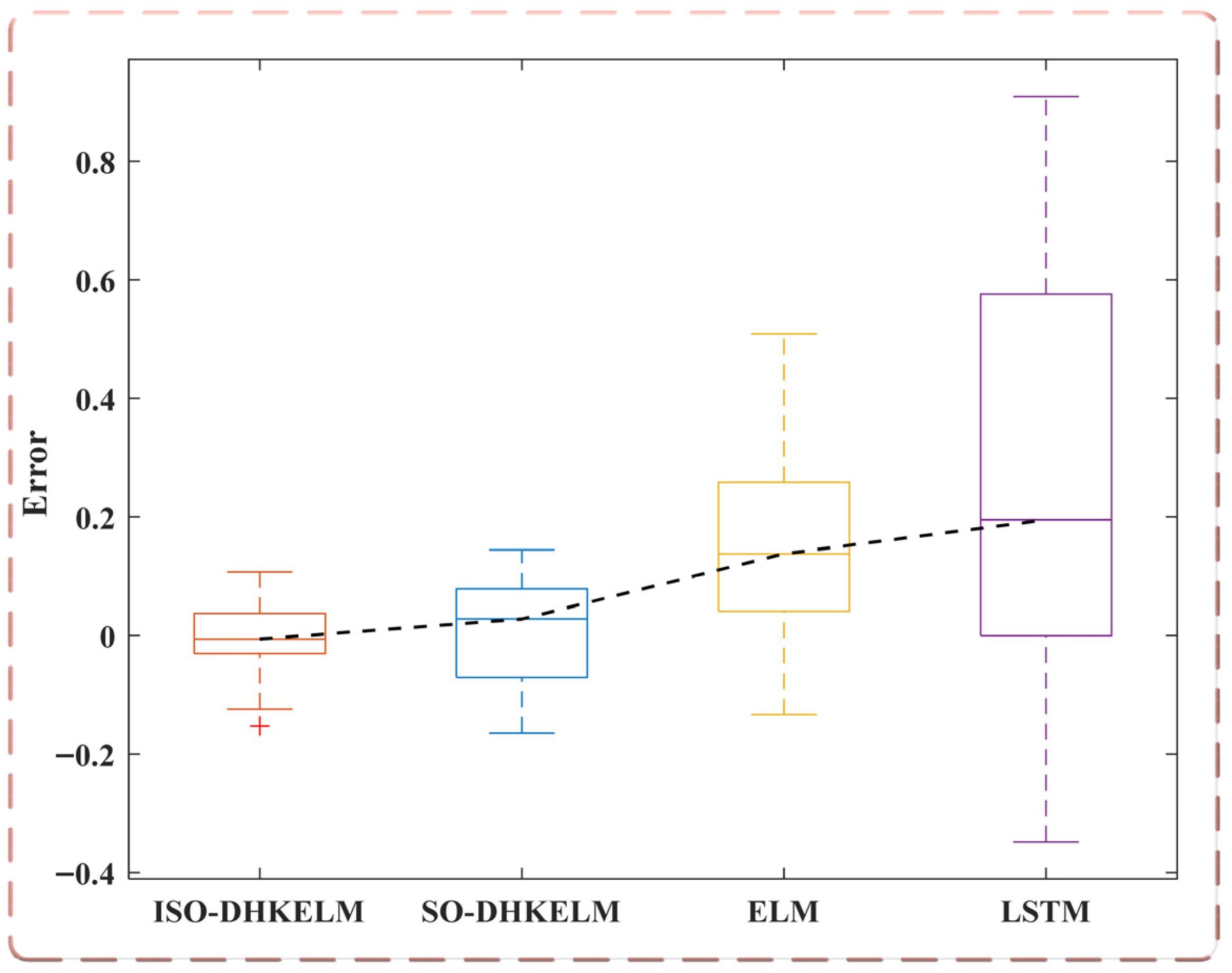

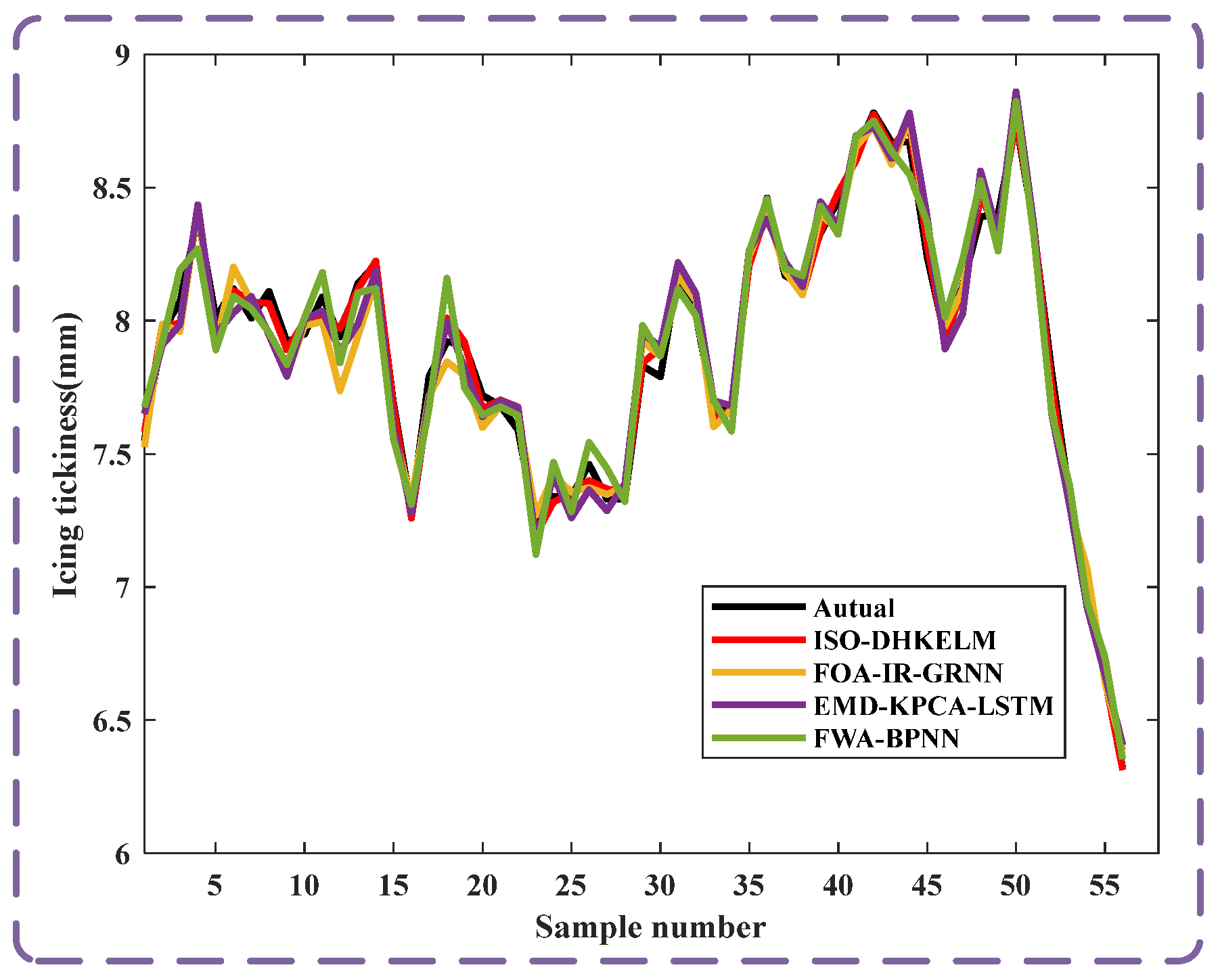

The ISO-DHKELM hybrid prediction model was constructed and demonstrated superior performance in icing prediction, achieving RMSE, MAE, and R² values of 0.057, 0.044, and 0.993, respectively. This provides a novel approach for power grid ice disaster prevention and mitigation.
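As a rough illustration of the three mechanisms named in the first contribution, the sketch below shows Latin hypercube initialization of a population together with two heavy-tailed mutation operators. It is a minimal sketch under stated assumptions, not the ISO implementation: the function names, the multiplicative update form `x + x * noise`, the `scale` and `dof` parameters, the clipping to bounds, and the example search space are all hypothetical choices made here for illustration.

```python
import math
import random
from typing import List, Sequence, Tuple

Bounds = Sequence[Tuple[float, float]]


def latin_hypercube(n_points: int, bounds: Bounds, rng: random.Random) -> List[List[float]]:
    """Draw n_points so that each dimension has exactly one sample per stratum."""
    columns = []
    for low, high in bounds:
        # Split [0, 1) into n_points equal strata and draw once inside each.
        unit = [(k + rng.random()) / n_points for k in range(n_points)]
        rng.shuffle(unit)  # pair strata randomly across dimensions
        columns.append([low + u * (high - low) for u in unit])
    return [list(point) for point in zip(*columns)]


def clip(point: Sequence[float], bounds: Bounds) -> List[float]:
    """Pull every coordinate back inside its [low, high] interval."""
    return [min(max(v, low), high) for v, (low, high) in zip(point, bounds)]


def cauchy_mutation(point: Sequence[float], bounds: Bounds,
                    rng: random.Random, scale: float = 1.0) -> List[float]:
    """Assumed form: x + scale * x * C, with C a standard Cauchy variate."""
    # Inverse-CDF sampling of a standard Cauchy variate: tan(pi * (u - 0.5)).
    mutated = [v + scale * v * math.tan(math.pi * (rng.random() - 0.5)) for v in point]
    return clip(mutated, bounds)


def t_mutation(point: Sequence[float], bounds: Bounds,
               rng: random.Random, dof: float) -> List[float]:
    """Assumed form: x + x * T, with T a Student's t variate with `dof` degrees of freedom."""

    def student_t() -> float:
        # T = Z / sqrt(V / dof), Z ~ N(0, 1), V ~ chi-square(dof) = Gamma(dof / 2, scale 2).
        z = rng.gauss(0.0, 1.0)
        v = rng.gammavariate(dof / 2.0, 2.0)
        return z / math.sqrt(v / dof)

    mutated = [v + v * student_t() for v in point]
    return clip(mutated, bounds)


rng = random.Random(42)
search_space = [(0.0, 1.0), (-5.0, 5.0)]  # hypothetical parameter bounds
population = latin_hypercube(10, search_space, rng)
candidate = cauchy_mutation(population[0], search_space, rng)
refined = t_mutation(population[0], search_space, rng, dof=5.0)
```

Latin hypercube sampling spreads the initial population evenly along every axis. The Cauchy distribution has heavier tails than the t-distribution at moderate degrees of freedom, so the former tends to produce larger jumps and the latter smaller refinements.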

The structure of this paper is organized as follows:

Section 2 systematically elaborates the theoretical foundations of the relevant models and algorithms;

Section 3 delves into the analysis of data characteristics and processing methods;

Section 4 validates the effectiveness of the model through experiments; and

Section 5 summarizes the main conclusions of the research and provides an outlook for future research directions.

3. Data Processing and Analysis

3.1. Principle of Grey Relational Analysis

Grey relational analysis is used to study systems with uncertain or incomplete information. It judges the degree of correlation between factors by comparing the similarity of their data curves. A typical workflow includes data collection, processing, modeling, validation, and application, and the method is widely used in many fields. The principle is to calculate the correlation coefficient between the parent (reference) sequence and each sub-sequence (comparison sequence); the higher the coefficient, the stronger the correlation.

The calculation process of the grey relational analysis method is as follows:

- (1) Determine the sequences for analysis: Based on the analysis object, the ice thickness of the power line is taken as the reference (parent) sequence for grey relational analysis, as shown in Equation (36):

$$X_0 = \{x_0(1), x_0(2), \ldots, x_0(n)\} \tag{36}$$

The various factors affecting the ice thickness are used as the comparison sub-sequences, as shown in Equation (37):

$$X_i = \{x_i(1), x_i(2), \ldots, x_i(n)\}, \quad i = 1, 2, \ldots, m \tag{37}$$

In the formula, $x_0(k)$ is the line icing thickness data after standardization, $x_i(k)$ represents the data of the other micro-meteorological factors after standardization, $n$ is the number of samples, and $m$ is the number of factors.

- (2) Normalize the data: Normalize the selected sequences to eliminate dimensional differences before correlation analysis, as shown in Equation (38):

$$x'(k) = \frac{x(k) - x_{\min}}{x_{\max} - x_{\min}} \tag{38}$$

In the formula, $x(k)$ represents the original value, and $x_{\min}$ and $x_{\max}$ represent the minimum and maximum values of the sequence, respectively.

- (3) Calculate the correlation coefficient between the parent sequence and each sub-sequence using the formula in Equation (39):

$$\xi_i(k) = \frac{\min_i \min_k |x_0(k) - x_i(k)| + \rho \max_i \max_k |x_0(k) - x_i(k)|}{|x_0(k) - x_i(k)| + \rho \max_i \max_k |x_0(k) - x_i(k)|} \tag{39}$$

In the formula, $\xi_i(k)$ shows the correlation strength between the parent sequence and the $i$-th sub-sequence at point $k$, and $\rho$ is the resolution coefficient. It is non-negative, with optimal discrimination when $\rho \in [0.321, 0.588]$. Usually, $\rho = 0.5$.

- (4) Calculate the degree of correlation: The correlation coefficient describes how a sub-sequence relates to the parent sequence at each point. Because these values are scattered over time, they are averaged into a single value per factor for easier comparison, which gives the grey relational degree. The formula is as follows:

$$r_i = \frac{1}{n} \sum_{k=1}^{n} \xi_i(k)$$

- (5) Rank the correlation degrees: Based on the calculated results, the relationships between the sequences are ranked. Higher values indicate closer relationships, while lower values indicate weaker relationships.
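The five steps above can be sketched in a few lines of Python. This is a minimal sketch that assumes the usual textbook form of grey relational analysis, with min-max normalization and a resolution coefficient of 0.5. The function names and the toy series are hypothetical and are not the paper's monitoring data.

```python
from typing import Dict, List, Sequence, Tuple


def min_max_normalize(series: Sequence[float]) -> List[float]:
    """Step (2): scale a series to [0, 1]; a constant series maps to all zeros."""
    low, high = min(series), max(series)
    if high == low:
        return [0.0 for _ in series]
    return [(x - low) / (high - low) for x in series]


def grey_relational_degrees(
    reference: Sequence[float],
    factors: Dict[str, Sequence[float]],
    rho: float = 0.5,
) -> Dict[str, float]:
    """Steps (1) to (4): return the grey relational degree of each factor."""
    x0 = min_max_normalize(reference)
    normalized = {name: min_max_normalize(s) for name, s in factors.items()}

    # Absolute differences between the reference and every comparison sequence.
    deltas = {
        name: [abs(a - b) for a, b in zip(x0, xi)]
        for name, xi in normalized.items()
    }
    all_deltas = [d for ds in deltas.values() for d in ds]
    d_min, d_max = min(all_deltas), max(all_deltas)
    if d_max == 0.0:
        # Every factor coincides with the reference after normalization.
        return {name: 1.0 for name in factors}

    degrees = {}
    for name, ds in deltas.items():
        # Step (3): point-wise correlation coefficients.
        coeffs = [(d_min + rho * d_max) / (d + rho * d_max) for d in ds]
        # Step (4): average them into one relational degree.
        degrees[name] = sum(coeffs) / len(coeffs)
    return degrees


def rank_factors(degrees: Dict[str, float]) -> List[Tuple[str, float]]:
    """Step (5): sort factors from most to least related."""
    return sorted(degrees.items(), key=lambda item: item[1], reverse=True)


# Toy data, invented for illustration only.
ice = [1.0, 2.0, 3.0, 4.0, 5.0]
toy_factors = {
    "tracks_ice": [10.0, 20.0, 30.0, 40.0, 50.0],
    "noisy": [3.0, 1.0, 4.0, 1.0, 5.0],
}
degrees = grey_relational_degrees(ice, toy_factors)
ranking = rank_factors(degrees)
```

In this toy case the factor that moves in lockstep with the reference gets a degree of 1, and the noisy factor gets a lower value, so it ranks second.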

3.2. Grey Correlation Analysis Example

The formation of transmission line icing is an inherently complex phenomenon. Selecting input variables solely from operational conditions can produce a high-dimensional feature space, which increases the model's computational burden, reduces training efficiency, and lengthens learning time. Conversely, reducing the number of input variables too far may compromise prediction accuracy. To address this, the grey correlation analysis method is employed to pinpoint the key factors that affect the ice thickness on conductors [33]. The analysis is based on historical ice monitoring data from the “Kangshu No. 2 line” transmission line in Sichuan. The icing growth period spanned from 11:00 on 22 January 2024 to 08:00 on 26 January 2024, with data collected at 30-min intervals, yielding a total of 188 data sets.

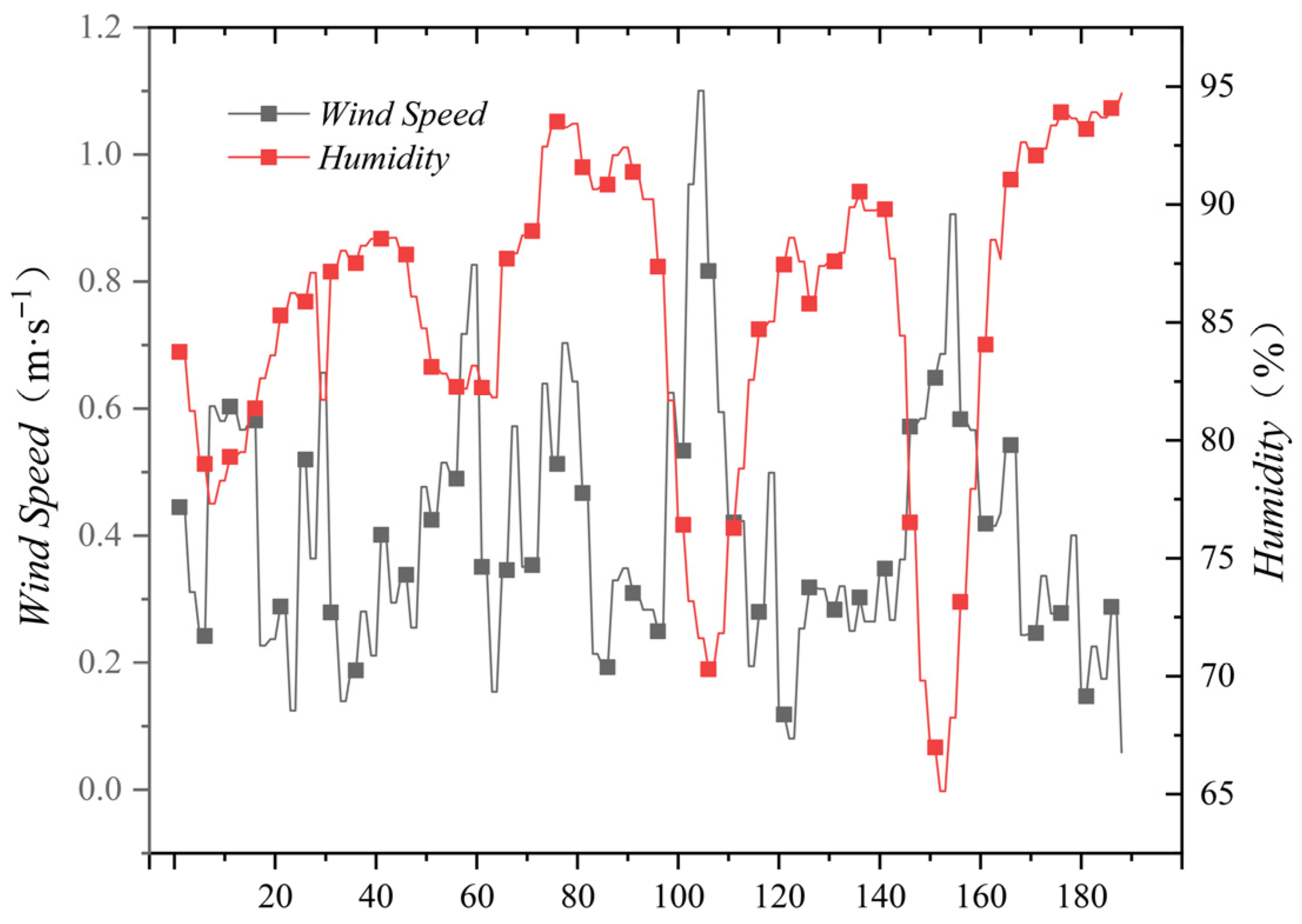

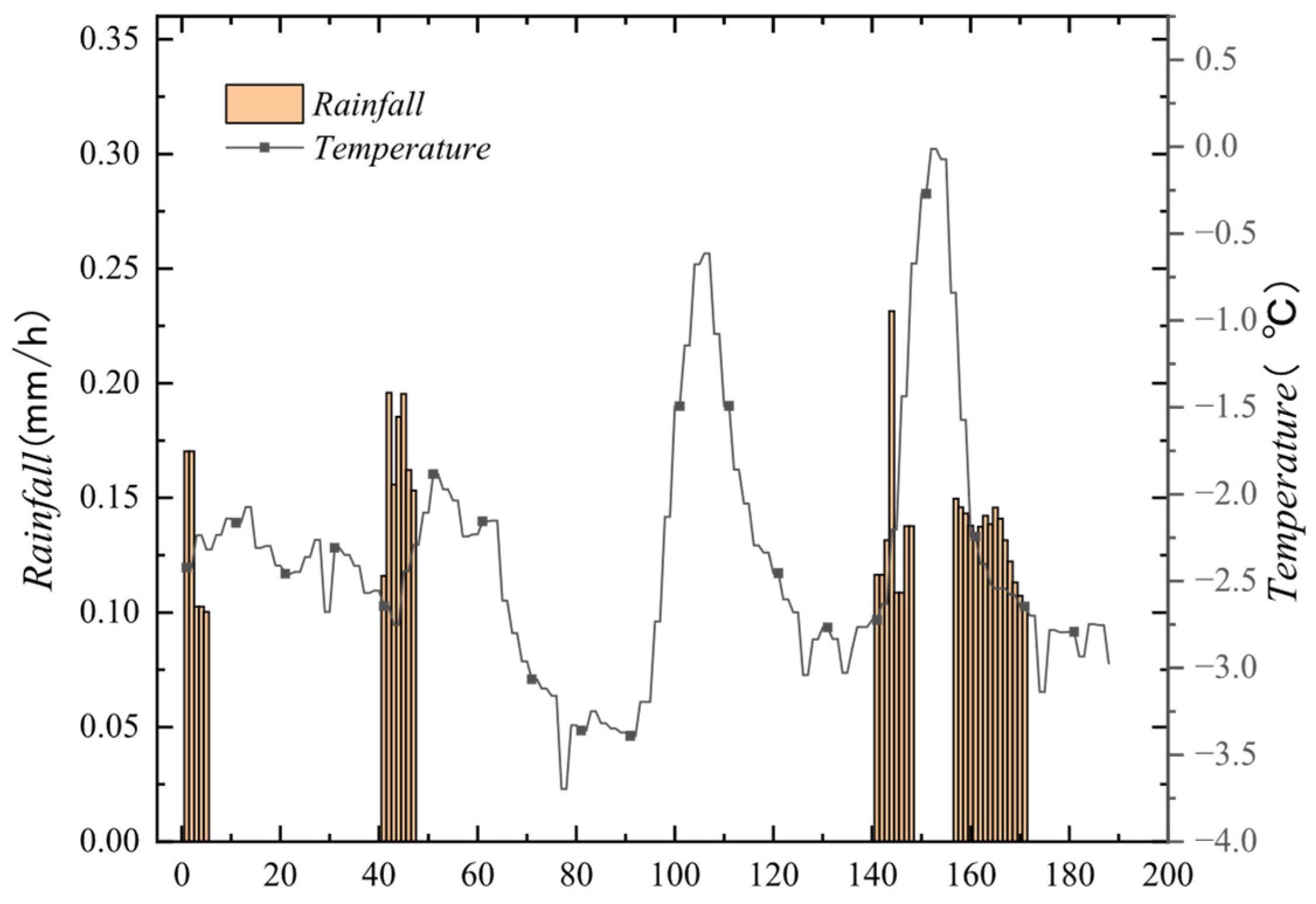

The development of ice thickness on transmission lines is closely related to the surrounding meteorological environment. The original data series for temperature, wind speed, air humidity, and rainfall are shown in Figure 6 and Figure 7. Based on these historical monitoring data, conductor icing typically forms when the temperature is below 0 °C, with a certain wind speed, atmospheric humidity above 80%, and a certain amount of rainfall. The melting and shedding of line icing usually occur as the ambient temperature and wind speed increase.

Because it is difficult to ensure that historical line icing data and environmental meteorological data are real-time and consistent, the exact relationship between the two cannot be determined by manual observation. Therefore, a grey relational analysis was conducted between them, and the correlation between line icing thickness and the other influencing factors is given in Table 2.

When the correlation coefficient falls below 0.5, the correlation is deemed weak; when it ranges from 0.5 to 1, the correlation is regarded as strong. As indicated in Table 2, the ranking of correlation strength is as follows: temperature > humidity > wind speed > wind direction > rainfall > air pressure. In particular, environmental temperature exhibits the highest correlation coefficient, suggesting a significant relationship between temperature and ice accretion. Air pressure, which ranks last, has a correlation coefficient below 0.5 and is therefore considered weakly correlated.

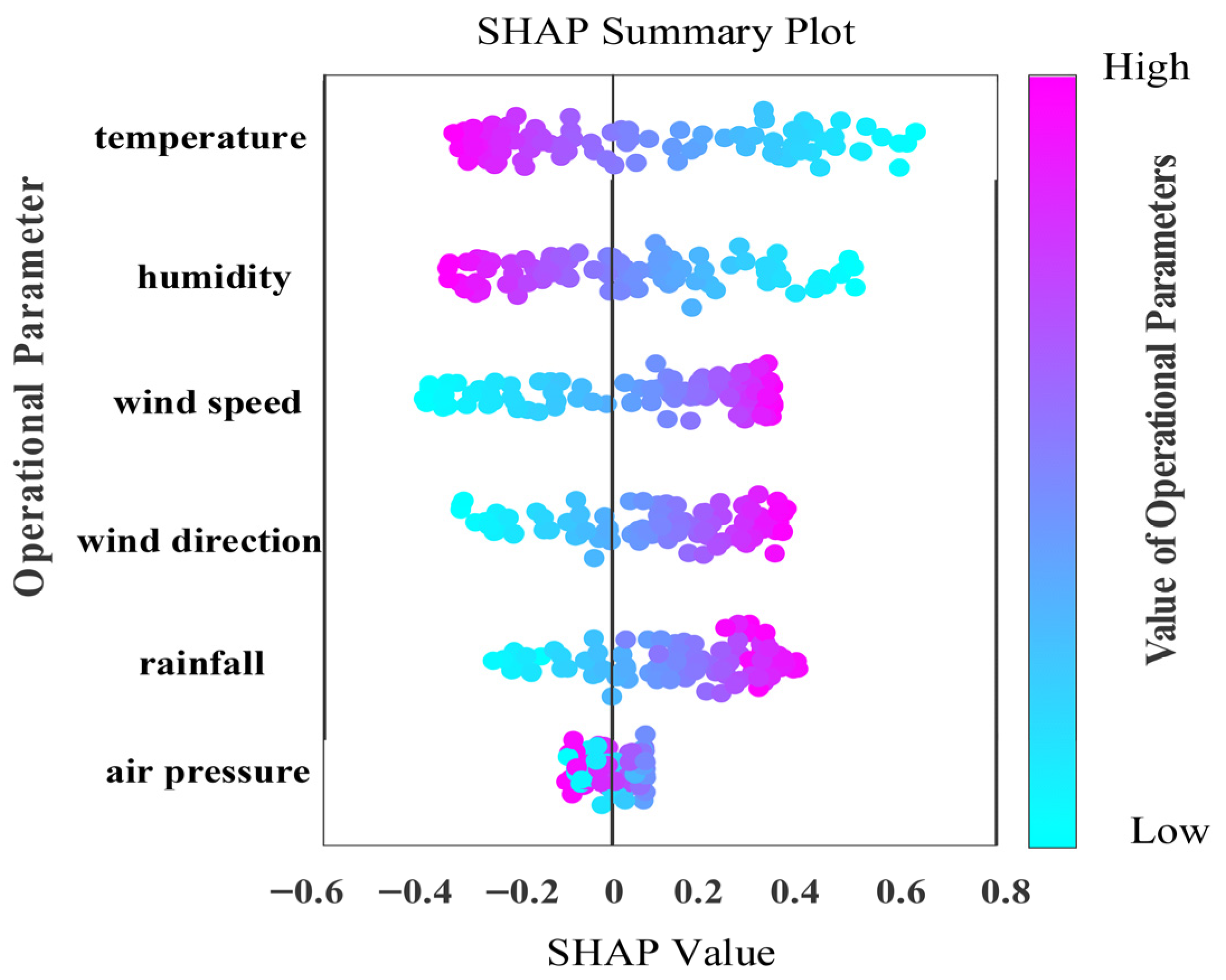

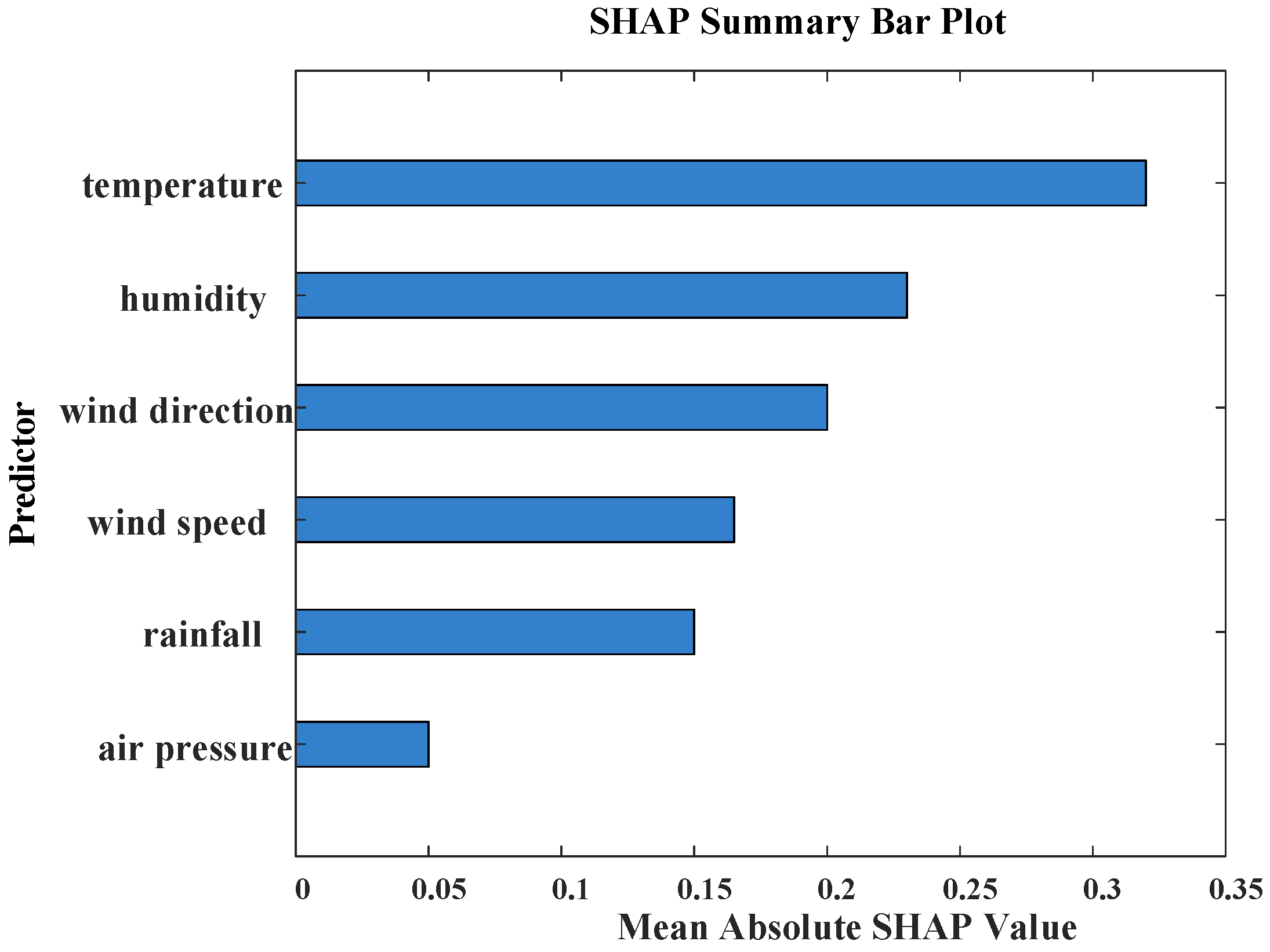

To further verify the validity of the input features, this study employs the Shapley additive explanation (SHAP) interpretability framework [34]. SHAP adopts an additive attribution strategy to compute each input variable's marginal contribution to the model's output, thereby quantifying the relative importance of all features at the global level. For any single sample, a feature's SHAP value quantifies that feature's contribution to the corresponding prediction. As shown in Figure 8 and Figure 9, SHAP is employed to interpret the input features of DHKELM, with features ranked in descending order of importance based on mean absolute SHAP values. Positive SHAP values correspond to positive effects on the prediction, while negative values correspond to negative effects. The color bar in Figure 8 illustrates that a color closer to the upper end represents a higher feature value, while a color nearer to the lower end corresponds to a smaller feature value. Furthermore, a wider color bar reflects a more significant feature impact, suggesting that such a feature is more critical.

As shown in Figure 8, the variables are ranked by contribution as follows: temperature, humidity, wind speed, wind direction, precipitation, and atmospheric pressure. Figure 9 further confirms that temperature, humidity, and wind speed have the largest mean absolute SHAP values. Integrating the results of grey relational analysis and SHAP, this paper ultimately selects temperature, humidity, wind speed, wind direction, and precipitation as the input variables for the predictive model.
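To make the additive attribution idea concrete, the following brute-force sketch computes exact Shapley values for a tiny stand-in model and then ranks features by mean absolute value, in the same way the global ranking described above is formed. Everything specific here is an assumption for illustration: `toy_model`, its coefficients, the two sample points, and the zero baseline are invented, and neither the paper's DHKELM nor the `shap` library is used. Absent features are represented by substituting a baseline value, which is only one of several possible conventions.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence


def exact_shapley(
    model: Callable[[Sequence[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> List[float]:
    """Exact Shapley values of `model` at `x`, by enumerating every feature subset."""
    n = len(x)

    def value(present: frozenset) -> float:
        # Features in `present` keep their real value; the rest take the baseline.
        z = [x[j] if j in present else baseline[j] for j in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def mean_abs_importance(shap_rows: Sequence[Sequence[float]]) -> List[float]:
    """Global importance: mean absolute Shapley value per feature."""
    n = len(shap_rows[0])
    return [sum(abs(row[j]) for row in shap_rows) / len(shap_rows) for j in range(n)]


def toy_model(z: Sequence[float]) -> float:
    """Hypothetical stand-in with one interaction term; not the paper's model."""
    temperature, humidity, wind_speed = z
    return -2.0 * temperature + 0.5 * humidity + 0.25 * temperature * wind_speed


feature_names = ["temperature", "humidity", "wind_speed"]
baseline = [0.0, 0.0, 0.0]
samples = [[-4.0, 2.0, 4.0], [-1.0, 4.0, 0.0]]  # invented sample points

shap_rows = [exact_shapley(toy_model, x, baseline) for x in samples]
importance = mean_abs_importance(shap_rows)
ranked = [
    name
    for name, _ in sorted(zip(feature_names, importance), key=lambda p: p[1], reverse=True)
]
```

For each sample, the Shapley values sum to the difference between the model output at that sample and at the baseline, which is the additivity property the method relies on. The enumeration grows exponentially with the number of features, so it is practical only for small inputs like this one.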