Energy Efficiency Measurement Method and Thermal Environment in Data Centers—A Literature Review

Abstract

1. Introduction

1.1. Growth of Data Centers and Energy Concerns

1.2. Importance of Power Metrics

1.3. Research Gaps

1.4. Research Objectives

- To categorize IT equipment and measure the PUE value of different data centers.

- To investigate how data center type influences energy distribution priorities and cooling methods.

- To evaluate data centers’ compliance with existing thermal standards and identify the deviations that indicate a need for improvement.

- To evaluate the likely thermal comfort of employees working in data center environments, based on exposure cycles and workplace conditions, with the aim of proposing occupational thermal comfort guidelines.

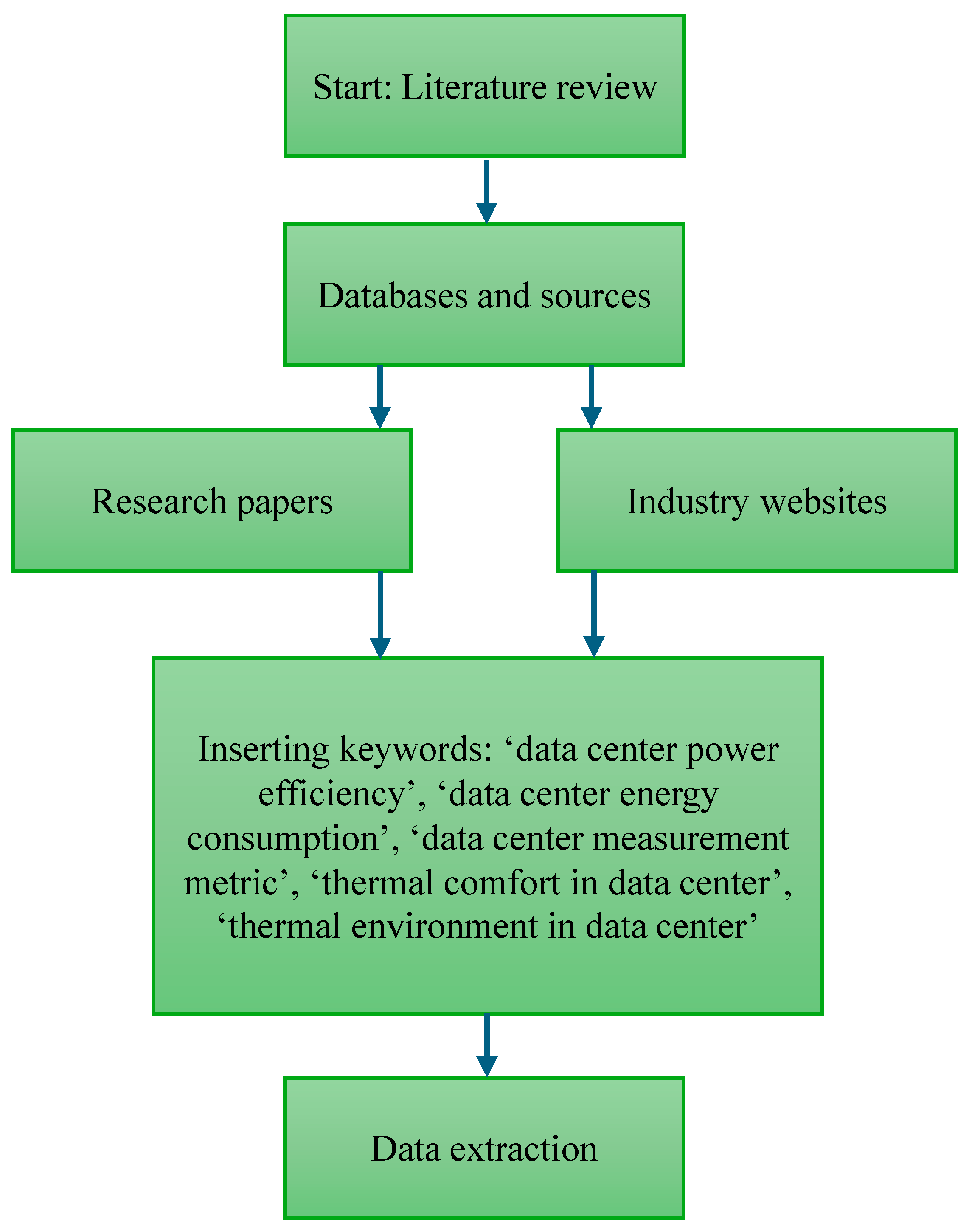

2. Research Methodology

2.1. Process of Literature Review

2.2. Power Efficiency Metric in Data Centers

2.3. Alternative Metrics to Measure Data Center Power Efficiency

3. Results and Discussion

3.1. Energy Usage and Efficiency Measurement in Data Centers

3.2. The Role of Data Center Classification in Energy Efficiency Measurement

3.2.1. Limitations of PUE Without Data Center Classification

3.2.2. Evaluating Passive Cooling Feasibility Through Data Center Classification

… due to their dense urban locations, higher ambient temperatures, polluted air and space limitations. These facilities are often located in mixed-use buildings or small offices, which restricts large-scale cooling infrastructure. Mechanical cooling, such as liquid-cooled racks or precision air cooling, is therefore recommended. Similarly, Enterprise Branch and Internal data centers face constraints that limit passive cooling feasibility, although waterside cooling (rated “Possible”) may be viable if wet-bulb temperatures remain stable and space for cooling towers is available. Larger-scale data centers, such as Colocation and Hyperscale, are generally well suited for passive cooling, as they are designed with efficiency in mind rather than being constrained by mixed-use buildings. These facilities are typically built in locations that support passive cooling, benefiting from lower urban heat influence and sufficient space for airflow management, which enables airside economization. Their waterside cooling feasibility depends on location: some facilities are built near natural water sources, while others ensure feasibility by allocating space for cooling towers as part of their infrastructure planning. This classification shows how strongly facility type and location influence cooling feasibility, reinforcing the need for strategic site selection and infrastructure planning in data center design.

3.3. Thermal Environment in Data Centers

3.4. Thermal Comfort in Computer Rooms

4. Conclusions

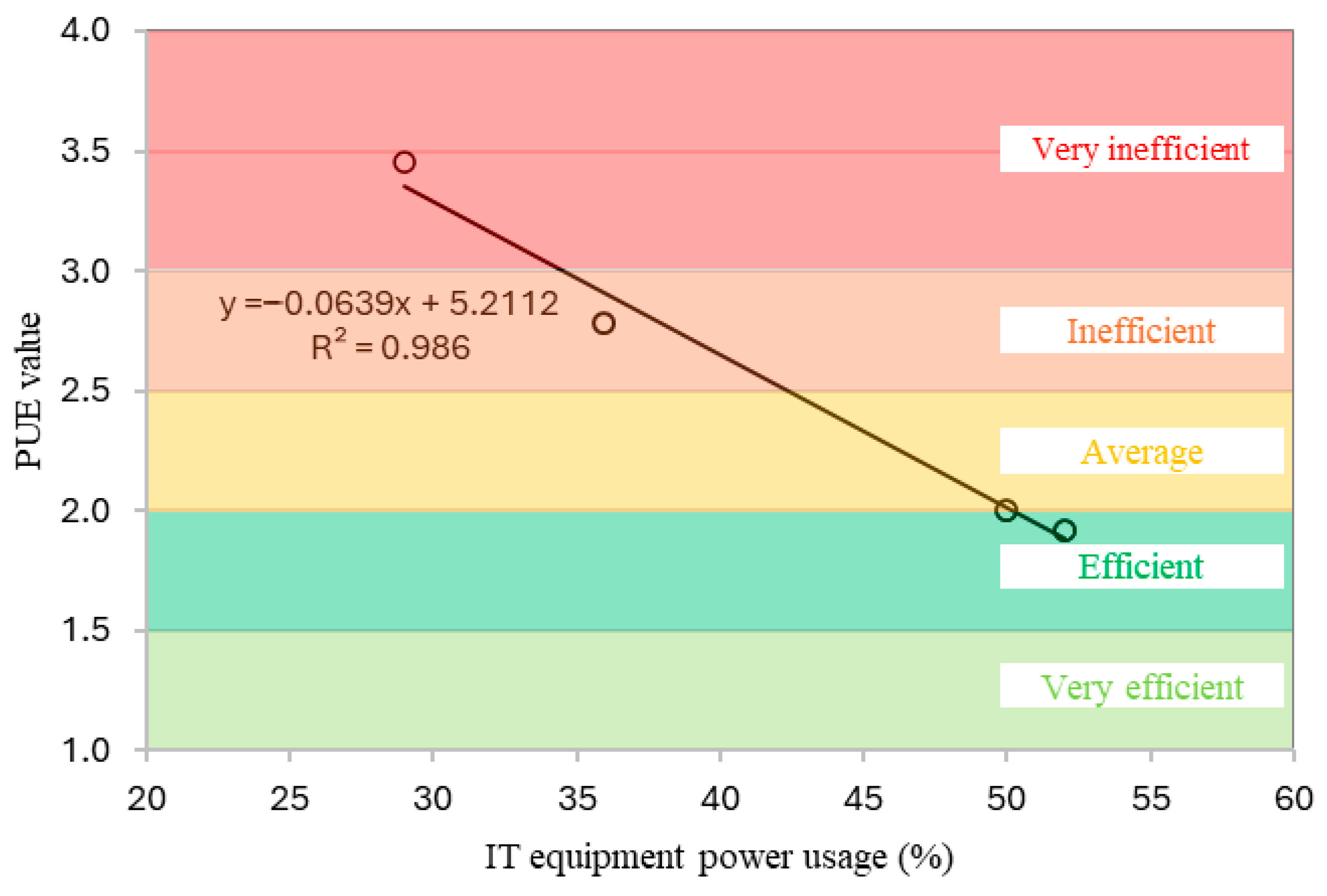

- The referenced data centers devoted an average of 44.8% of total power to IT equipment, corresponding to a PUE of 2.23, which falls in the “Average” efficiency category. These results indicate that there is room to make data center power usage more efficient.

- Larger-scale data center types, such as hyperscale, are more compatible with passive cooling than smaller-scale types, such as small and medium business data centers. This difference relates to data center size and location: smaller data centers are often built in or near offices, while larger ones are placed in areas suited for passive cooling.

- Most of the reviewed data centers already follow the ASHRAE thermal guidelines, although many operate in the “allowable range” rather than the “recommended range”. Some facilities even exceed the allowable limits, which may put equipment at risk.

- No thermal comfort research specific to data centers was found in this review; data from computer labs, which are also limited and not standardized, were therefore used instead. This gap reflects the lack of attention to the topic and highlights the need for further research. Even where data center operating conditions remain unchanged, occupational measures such as exposure duration limits or clothing requirements could be adopted.
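The PUE in the first conclusion follows from the definition of PUE as total facility power divided by IT equipment power. The symbols below are introduced here for illustration; power is expressed as shares of the total, and “facility power” in the comparison table is taken to be the non-IT share:

$$
\mathrm{PUE} = \frac{P_{\mathrm{total}}}{P_{\mathrm{IT}}} = \frac{P_{\mathrm{IT}} + P_{\mathrm{facility}}}{P_{\mathrm{IT}}} = \frac{100\%}{44.8\%} \approx 2.23
$$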

Funding

Acknowledgments

Conflicts of Interest

References

- Jones, N. The information factories: Data centres are chewing up vast amounts of energy—So researchers are trying to make them more efficient. Nature 2018, 561, 163–166.

- International Energy Agency (IEA). Energy and AI: World Energy Outlook Special Report; IEA: Paris, France, 2024; Available online: https://www.iea.org/reports/energy-and-ai (accessed on 3 July 2025).

- Dayarathna, M.; Wen, Y.; Fan, R. Data center energy consumption modeling: A survey. IEEE Commun. Surv. Tutor. 2015, 18, 732–794.

- US Environmental Protection Agency. Report to Congress on Server and Data Center Energy Efficiency: Public Law 109-431; U.S. Environmental Protection Agency: Washington, DC, USA, 2007.

- Thangam, D.; Muniraju, H.; Ramesh, R.R.; Narasimhaiah, R.; Ahamed Khan, N.M.; Booshan, S.; Booshan, B.; Manickam, T.; Sankar, G.R. Impact of data centers on power consumption, climate change, and sustainability. In Sustainability of Data Centers via Energy Mix, Energy Conservation, and Circular Energy; IGI Global: Hershey, PA, USA, 2024.

- Vemula, D.; Setz, B.; Rao, S.V.R.K.; Gangadharan, G.R.; Aiello, M. Metrics for sustainable data centers. IEEE Trans. Sustain. Comput. 2017, 2, 290–303.

- Kim, J.H.; Shin, D.U.; Kim, H. Data center energy evaluation tool development and analysis of power usage effectiveness with different economizer types in various climate zones. Buildings 2024, 14, 299.

- Shao, X.; Zhang, Z.; Song, P.; Feng, Y.; Wang, X. A review of energy efficiency evaluation metrics for data centers. Energy Build. 2022, 271, 112308.

- Jaureguialzo, E. PUE: The Green Grid metric for evaluating the energy efficiency in DC (Data Center). In Proceedings of the 2011 IEEE 33rd International Telecommunications Energy Conference (INTELEC), Amsterdam, The Netherlands, 1–8 October 2011.

- Beitelmal, A.H.; Fabris, D. Servers and data centers energy performance metrics. Energy Build. 2014, 80, 562–569.

- Lajevardi, B.; Haapala, K.R.; Junker, J.F. An energy efficiency metric for data center assessment. In Proceedings of the 2014 Industrial and Systems Engineering Research Conference; Guan, Y., Liao, H., Eds.; Institute of Industrial and Systems Engineers: Montréal, QC, Canada, 2014.

- Patterson, M.K. The effect of data center temperature on energy efficiency. In Proceedings of the 2008 11th Intersociety Conference on Thermal and Thermomechanical Phenomena in Electronic Systems (ITherm), Orlando, FL, USA, 28–31 May 2008; pp. 1167–1174.

- The Green Grid. PUE™: A Comprehensive Examination of the Metric (White Paper #49). The Green Grid Association. 2012. Available online: https://datacenters.lbl.gov/sites/default/files/WP49-PUE%20A%20Comprehensive%20Examination%20of%20the%20Metric_v6.pdf (accessed on 3 March 2025).

- Fiandrino, C.; Kliazovich, D.; Bouvry, P.; Zomaya, A.Y. Performance and energy efficiency metrics for communication systems of cloud computing data centers. IEEE Trans. Cloud Comput. 2017, 5, 738–750.

- Zhang, Q.; Meng, Z.; Hong, X.; Zhan, Y.; Liu, J.; Dong, J.; Bai, T.; Niu, J.; Deen, M.J. A survey on data center cooling systems: Technology, power consumption modeling and control strategy optimization. J. Syst. Archit. 2021, 119, 102253.

- Steinbrecher, R.A.; Schmidt, R. Data center environments: ASHRAE’s evolving thermal guidelines. ASHRAE J. 2011, 53, 42–49.

- Brown, R.; Masanet, E.; Nordman, B.; Tschudi, B.; Shehabi, A.; Stanley, J.; Koomey, J.; Sartor, D.; Chan, P.; Loper, J.; et al. Report to Congress on Server and Data Center Energy Efficiency: Public Law 109-431; U.S. Environmental Protection Agency: Washington, DC, USA, 2007. Available online: https://www.energystar.gov/ia/partners/prod_development/downloads/EPA_Datacenter_Report_Congress_Final1.pdf (accessed on 22 April 2025).

- Cho, J.; Lim, T.; Kim, B.S. Viability of datacenter cooling systems for energy efficiency in temperate or subtropical regions: Case study. Energy Build. 2012, 55, 189–197.

- Schaeppi, B.; Bogner, T.; Schloesser, A.; Stobbe, L.; Dias de Asuncao, M. Metrics for Energy Efficiency Assessment in Data Centers and Server Rooms. PrimeEnergyIT Consortium. 2012. Available online: https://www.researchgate.net/publication/261243130_Metrics_for_energy_efficiency_assessment_in_data_centers_and_server_rooms (accessed on 3 July 2025).

- Sharma, M.; Arunachalam, K.; Sharma, D. Analyzing the data center efficiency by using PUE to make data centers more energy efficient. Procedia Comput. Sci. 2015, 48, 142–148.

- Tong, T.; Yang, X.; Li, J. To boost waste heat harvesting and power generation through a portable heat pipe battery during high-efficient electronics cooling. Appl. Energy 2025, 377, 124397.

- Patterson, M. Water Usage Effectiveness (WUE): A Green Grid Data Center Sustainability Metric (White Paper No. 35). The Green Grid. 2011. Available online: https://airatwork.com/wp-content/uploads/The-Green-Grid-White-Paper-35-WUE-Usage-Guidelines.pdf (accessed on 3 July 2025).

- Yaqi, W.; Baochuan, F.; Zhengtian, W.; Shuang, G. A review on energy-efficient technology in large data center. In Proceedings of the 30th Chinese Control and Decision Conference (CCDC), Shenyang, China, 9–11 June 2018; pp. 5109–5114.

- Info-Tech Research Group. Top 10 Energy-Saving Tips for a Greener Data Center. Info-Tech. 2007. Available online: https://www.yumpu.com/en/document/read/40017537/top-10-energy-saving-tips-for-a-greener-data-center-info-tech- (accessed on 3 July 2025).

- Karlsson, J.F.; Moshfegh, B. Investigation of indoor climate and power usage in a data center. Energy Build. 2005, 37, 897–902.

- Ahuja, N.; Rego, C.W.; Ahuja, S.; Zhou, S.; Shrivastava, S. Real-time monitoring and availability of server airflow for efficient data center cooling. In Proceedings of the 29th IEEE SEMI-THERM Symposium, San Jose, CA, USA, 17–21 March 2013; pp. 1–5.

- Shehabi, A.; Smith, S.J.; Hubbard, A.; Newkirk, A.; Lei, N.; Siddik, M.A.B.; Holecek, B.; Koomey, J.; Masanet, E.; Sartor, D. United States Data Center Energy Usage Report; LBNL-2001637; Lawrence Berkeley National Laboratory: Berkeley, CA, USA, 2024.

- Rasmussen, N. Electrical Efficiency Modeling for Data Centers. American Power Conversion (White Paper 113, Rev. 1). 2007. Available online: https://www.biblioite.ethz.ch/downloads/nran-66ck3d_r1_en.pdf (accessed on 3 July 2025).

- Zhou, C.; Hu, Y.; Liu, R.; Liu, Y.; Wang, M.; Luo, H.; Tian, Z. Energy performance study of a data center combined cooling system integrated with heat storage and waste heat recovery system. Buildings 2025, 15, 326.

- Inoue, Y.; Hayama, H.; Mori, T.; Kikuta, K.; Toyohara, N. Analysis of cooling characteristics in datacenter using outdoor air cooling. In Proceedings of the IEEE 9th International Conference on Industrial Electronics and Applications (ICIEA), Hangzhou, China, 9–11 June 2014; Available online: https://www.researchgate.net/publication/281191844_Analysis_of_Cooling_Characteristics_in_Datacenter_Using_Outdoor_Air_Cooling (accessed on 3 July 2025).

- Mi, R.; Bai, X.; Xu, X.; Ren, F. Energy performance evaluation in a data center with water-side free cooling. Energy Build. 2023, 295, 113278.

- Khalaj, A.H.; Halgamuge, S.K. A review on efficient thermal management of air- and liquid-cooled data centers: From chip to the cooling system. Appl. Energy 2017, 205, 1165–1188.

- Time and Date. Past Weather in Bengkulu, Bengkulu, Indonesia—Yesterday or Further Back. Available online: https://www.timeanddate.com/weather/indonesia/bengkulu/historic (accessed on 1 May 2025).

- Weather Spark. Medan Weather in February 2018. Available online: https://weatherspark.com/h/y/112741/2018/Historical-Weather-during-2018-in-Medan-Indonesia (accessed on 3 July 2025).

- Weather Spark. Jakarta Weather in May 2018. Available online: https://weatherspark.com/h/m/116847/2018/5/Historical-Weather-in-May-2018-in-Jakarta-Indonesia (accessed on 3 July 2025).

- National Centers for Environmental Information (NCEI). Climate Data Online (CDO). National Oceanic and Atmospheric Administration (NOAA). Available online: https://www.ncei.noaa.gov/access/past-weather/oakland (accessed on 2 May 2025).

- Purwanto, F.H.; Utami, E.; Pramono, E. Implementation and optimization of server room temperature and humidity control system using fuzzy logic based on microcontroller. J. Phys. Conf. Ser. 2018, 1140, 012050.

- Nasution, T.; Muchtar, M.A.; Seniman, S.; Siregar, I. Monitoring temperature and humidity of server room using Lattepanda and ThingSpeak. J. Phys. Conf. Ser. 2019, 1235, 012068.

- Arifin, J.; Herryawan, P.; Gultom, B. Deteksi suhu ruang server dan penggerak kipas berbasis Arduino Uno dengan report SMS [Server room temperature detection and fan drive based on Arduino Uno with SMS report]. Electrician 2019, 12, 2079.

- Peng, W.Z.; Ning, S.; Li, S.; Sun, F.; Yang, K.C.; Westerdahl, D.; Louie, P.K.K. Impact analysis of temperature and humidity conditions on electrochemical sensor response in ambient air quality monitoring. Sensors 2018, 18, 59.

- Shehabi, A.; Tschudi, W.; Gadgil, A. Data Center Economizer Contamination and Humidity Study; Lawrence Berkeley National Laboratory: Berkeley, CA, USA, 2007. Available online: https://escholarship.org/uc/item/8fm831xf (accessed on 26 May 2025).

- Vertiv Co. Data Center Precision Cooling: The Need for a Higher Level of Service Expertise. White Paper SL-24642. 2017. Available online: https://www.vertiv.com/48ea67/globalassets/services/services/preventive-maintenance/thermal-preventive-maintenance/vertiv-data-center-precision-cooling-wp-en-na-sl-24642_n.pdf (accessed on 3 July 2025).

- Ismail, A.R.; Jusoh, N.; Ibrahim, M.H.M.; Panior, K.N.; Zin, M.Z.M.; Hussain, M.A.; Makhtar, N.K. Thermal comfort assessment in computer lab: A case study at Ungku Omar Polytechnic Malaysia. In Proceedings of the National Conference in Mechanical Engineering Research and Postgraduate Students (NCMER), Kuantan, Malaysia, 26–27 May 2010; pp. 408–416. Available online: https://www.researchgate.net/profile/Mm-Noor/publication/257025553_42_NCMER_056 (accessed on 3 July 2025).

- Aqilah, N.; Rijal, H.B.; Zaki, S.A. A review of thermal comfort in residential buildings: Comfort threads and energy saving potential. Energies 2022, 15, 9012.

- ISO 7730; Ergonomics of the Thermal Environment—Analytical Determination and Interpretation of Thermal Comfort Using Calculation of the PMV and PPD Indices and Local Thermal Comfort Criteria. ISO: Geneva, Switzerland, 2005.

- Fang, Z.; Feng, X.; Lin, Z. Investigation of PMV model for evaluation of the outdoor thermal comfort. Procedia Eng. 2017, 205, 2457–2462.

- Abanto, J.; Barrero, D.; Reggio, M.; Ozell, B. Airflow modelling in a computer room. Build. Environ. 2004, 39, 1393–1402.

- Pourshaghaghy, A.; Omidvari, M. Examination of thermal comfort in a hospital using PMV–PPD model. Appl. Ergon. 2012, 43, 1089–1095.

- Telejko, M. Attempt to improve indoor air quality in computer laboratories. Procedia Eng. 2017, 172, 1154–1160.

| References | Data Type | Equipment | Equipment Usage (%) | PUE Categorization | PUE Categorization Usage (%) |
|---|---|---|---|---|---|
| Cho et al. [18] | Identified as typical data center power consumption in their study | Processor | 15 | IT equipment power | 52 |
| | | Communication equipment | 4 | | |
| | | Storage | 4 | | |
| | | Server power supply | 14 | | |
| | | Other server | 15 | | |
| | | Power distribution units (PDUs) | 1 | Facility power | 48 |
| | | Cooling | 38 | | |
| | | Switchgear | 3 | | |
| | | Lighting system | 1 | | |
| | | Uninterruptible power supply (UPS) | 5 | | |
| Yaqi et al. [23] | Referred to as data from an EYP report (source not given) | IT equipment | 50 | IT equipment power | 50 |
| | | Power supply system | 10 | Facility power | 50 |
| | | Lighting system | 3 | | |
| | | Room cooling system | 25 | | |
| | | Ventilation and humidification system | 12 | | |
| Info-Tech [24] | Identified as typical data center power consumption in their study | Network hardware | 10 | IT equipment power | 36 |
| | | Server and storage | 26 | | |
| | | Power conversion | 11 | Facility power | 64 |
| | | Cooling | 50 | | |
| | | Lighting system | 3 | | |
| Karlsson and Moshfegh [25] | Measured data (Linköping, Sweden) | Computer equipment | 29 | IT equipment power | 29 |
| | | Central chiller plant | 34 | Facility power | 71 |
| | | Computer room air conditioner unit | 37 | | |
| Zhang et al. [15] | Identified as typical data center power consumption in their study | Information and communication technology (ICT) | 50 | IT equipment power | 50 |
| | | Lighting | 3 | Facility power | 50 |
| | | Uninterruptible power supply (UPS) and energy transformation | 10 | | |
| | | Chiller | 14 | | |
| | | Fans | 12 | | |
| | | Other equipment | 11 | | |
| Ahuja et al. [26] | Simulation result | IT equipment | 52 | IT equipment power | 52 |
| | | Chiller system | 22 | Facility power | 48 |
| | | Computer room air conditioner | 11 | | |
| | | Other | 15 | | |
| Average | | | | IT equipment power | 44.8 |
| | | | | Facility power | 55.2 |
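The average IT share and the resulting PUE of 2.23 quoted in the Conclusions can be recomputed from the rows above. This is a minimal sketch, assuming that each source's IT and facility shares sum to 100% of total power, so that PUE = 100 / IT share. The per-source PUE values it prints are derived here for illustration and are not reported in the table; the names `SHARES`, `pue_from_it_share` and `summarize` are hypothetical.

```python
from typing import Dict, Tuple

# (IT equipment %, facility %) per source, transcribed from the table above.
# "Facility power" here is the non-IT share, so each pair sums to 100.
SHARES: Dict[str, Tuple[float, float]] = {
    "Cho et al. [18]": (52, 48),
    "Yaqi et al. [23]": (50, 50),
    "Info-Tech [24]": (36, 64),
    "Karlsson and Moshfegh [25]": (29, 71),
    "Zhang et al. [15]": (50, 50),
    "Ahuja et al. [26]": (52, 48),
}


def pue_from_it_share(it_percent: float) -> float:
    """PUE = total power / IT power; with shares in %, total power is 100."""
    return 100.0 / it_percent


def summarize(shares: Dict[str, Tuple[float, float]]) -> Tuple[float, float, float]:
    """Return (mean IT share, mean facility share, PUE of the mean IT share)."""
    n = len(shares)
    mean_it = sum(it for it, _ in shares.values()) / n
    mean_facility = sum(fac for _, fac in shares.values()) / n
    return mean_it, mean_facility, pue_from_it_share(mean_it)


if __name__ == "__main__":
    for source, (it, _) in SHARES.items():
        print(f"{source}: PUE = {pue_from_it_share(it):.2f}")
    mean_it, mean_fac, pue = summarize(SHARES)
    print(f"Mean IT share {mean_it:.1f}%, facility share {mean_fac:.1f}%, PUE {pue:.2f}")
```

Under this assumption, the PUE of the averaged IT share (about 2.23) differs from the mean of the per-source PUE values (about 2.35 for these six sources); the review's figure corresponds to the former.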

| References | Location of Data Center | Cooling Method | Climate Suitability | Space Requirement | Geographic Constraints |
|---|---|---|---|---|---|
| Inoue et al. [30] | Hokkaido, Japan | Airside free cooling | Cold climates required (e.g., Hokkaido) | Large open spaces for airflow stabilization | Should not be near urban pollution sources |
| Mi et al. [31] | Chongqing, China | Waterside free cooling | Moderate-to-humid climates | Requires cooling towers | Needs access to a stable water source (e.g., cooling towers) |

| Data Center Type [27] | Available Space * | Typical Location * | Cooling Load/Density * | Airside Free Cooling + [30] | Waterside Free Cooling + [31] | Recommended Cooling Method * |
|---|---|---|---|---|---|---|
| Telco Edge | Very limited | Dense urban areas | High | Not feasible: small footprint and high IT density limit airflow cooling. | Not feasible: no space for cooling towers. | Use liquid-cooled racks or direct expansion (DX) cooling. |
| Commercial Edge | Small–medium | Office buildings, urban | High | Not feasible: restricted airflow makes passive cooling ineffective. | Not feasible: lacks the required water cooling infrastructure. | Adopt precision air cooling or closed-loop liquid cooling. |
| Small and Medium Business (SMB) | Limited | Urban/suburban | Moderate–high | Not feasible: limited space for airflow management. | Possible: can work if wet-bulb temperature remains stable. | Consider a small-scale waterside economizer with a backup chiller. |
| Enterprise Branch | Moderate | Mixed (urban/suburban) | Moderate | Not feasible: airflow constraints limit cooling efficiency. | Possible: needs dedicated space for cooling towers. | Install compact cooling towers with a hybrid chiller system. |
| Internal | Moderate | Office campuses | Moderate–high | Not feasible: typically located inside buildings, restricting outdoor air use. | Possible: works if wet-bulb temperature allows effective cooling tower placement. | Use high-efficiency air-cooled systems or waterside economizers. |
| Communications Service Providers (Comms SPs) | Moderate–large | Regional data hubs | High | Not feasible: high IT density requires active cooling methods. | Possible: requires reliable water resources and infrastructure. | Hybrid cooling with mechanical support for peak loads. |
| Colocation (Small/Med) | Available | Mixed (suburban/regional) | Moderate | Ideal: large space and moderate IT load allow effective free cooling. | Ideal: designed with infrastructure for water cooling. | Prioritize free cooling via airside and waterside economizers. |
| Colocation (Large) | Large | Regional/remote | High | Ideal: ample space and cooler regions support passive cooling. | Ideal: built with large-scale water cooling systems. | Use optimized airside cooling and large-scale waterside economizers. |
| Hyperscale | Very large | Remote, optimized for cooling | Very high | Ideal: maximized efficiency in cold regions with large airflow management. | Possible: requires strict water temperature control for efficiency. | Hybrid cooling strategy combining air and advanced liquid cooling. |
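Several “Possible” waterside ratings in the classification above hinge on the outdoor wet-bulb temperature. One common first screen for a cooling-tower-based waterside economizer compares the wet-bulb temperature plus the tower and heat-exchanger approach temperatures against the chilled-water setpoints. The sketch below assumes that simple screening rule; the class `EconomizerParams`, its setpoints and approach temperatures, and the hourly sample are hypothetical illustrations, not values from Mi et al. [31] or any other cited study.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional


@dataclass(frozen=True)
class EconomizerParams:
    """Hypothetical design values (°C); not taken from Mi et al. [31]."""
    chw_supply_c: float = 18.0      # chilled-water supply setpoint
    chw_return_c: float = 24.0      # chilled-water return temperature
    tower_approach_c: float = 4.0   # tower leaving water minus outdoor wet-bulb
    hx_approach_c: float = 1.5      # plate heat-exchanger approach


def free_cooling_mode(wet_bulb_c: float, p: EconomizerParams) -> str:
    """Classify one hour as 'full', 'partial' or 'none' waterside free cooling."""
    # Coldest chilled water the tower plus heat exchanger can deliver this hour.
    achievable_c = wet_bulb_c + p.tower_approach_c + p.hx_approach_c
    if achievable_c <= p.chw_supply_c:
        return "full"      # economizer alone meets the supply setpoint
    if achievable_c < p.chw_return_c:
        return "partial"   # pre-cools return water; a chiller trims the rest
    return "none"          # chiller carries the whole load


def count_modes(wet_bulbs_c: Iterable[float],
                p: Optional[EconomizerParams] = None) -> Dict[str, int]:
    """Count hours in each free-cooling mode for a series of wet-bulb readings."""
    params = p if p is not None else EconomizerParams()
    counts = {"full": 0, "partial": 0, "none": 0}
    for wb in wet_bulbs_c:
        counts[free_cooling_mode(wb, params)] += 1
    return counts


if __name__ == "__main__":
    sample_wet_bulb_c = [8.0, 12.5, 16.0, 19.0, 22.5, 25.0]  # hypothetical hours
    print(count_modes(sample_wet_bulb_c))
```

Fed a year of hourly wet-bulb data for a candidate site, such a screen gives only a rough count of free-cooling hours; a fuller assessment would also account for pump and fan energy and part-load chiller behavior.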

| References | Data Center Location | Room Air Temp. (°C) | | | Outside Air Temp. (°C) [33,34,35,36] | | | Room RH (%) | | | Outside RH (%) [33,34,35,36] | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | | Min. | Avg. | Max. | Min. | Avg. | Max. | Min. | Avg. | Max. | Min. | Avg. | Max. |
| Purwanto et al. [37] | Bengkulu, Indonesia | 23.0 | 28.0 * | 33.0 | 24.0 | 27.0 | 30.0 | 46 | 57 * | 68 | 70 | 77 * | 85 |
| Nasution et al. [38] | Medan, Indonesia | 15.0 | 16.5 * | 18.0 | 24.0 | 27.5 | 31.0 | 18 | 19 * | 20 | - | - | - |
| Arifin et al. [39] | Jakarta, Indonesia | 19.5 | 23.9 * | 28.4 | 25.0 | 28.3 * | 32.0 | - | - | - | 65 | 83 | 98 |
| Peng et al. [40] | Hong Kong, China | 15.0 | 24.5 * | 34.0 | 17.0 | 20.5 * | 24.0 | 17 | 32 * | 48 | 54 | 74 * | 95 |
| Shehabi et al. [41] | Oakland, USA | 18.3 | 19.7 * | 21.1 | 10.0 | 17.0 * | 24.0 | 40 | 47 * | 55 | - | - | - |
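The conclusion that many facilities sit in the allowable rather than the recommended range can be checked against the room temperature ranges above. This sketch assumes the ASHRAE TC 9.9 recommended dry-bulb envelope of 18–27 °C and the Class A1 allowable envelope of 15–32 °C; these limits are not stated in this section and are supplied here as assumptions. ASHRAE applies them to IT equipment inlet air, whereas the cited studies report room air temperature, so the result is only indicative, and humidity is not checked.

```python
from typing import Dict, Tuple

# Assumed ASHRAE TC 9.9 dry-bulb envelopes (°C); not taken from this review.
RECOMMENDED_C: Tuple[float, float] = (18.0, 27.0)
ALLOWABLE_A1_C: Tuple[float, float] = (15.0, 32.0)

# (min, max) room air temperature (°C) transcribed from the table above.
ROOM_TEMPS_C: Dict[str, Tuple[float, float]] = {
    "Purwanto et al. [37]": (23.0, 33.0),
    "Nasution et al. [38]": (15.0, 18.0),
    "Arifin et al. [39]": (19.5, 28.4),
    "Peng et al. [40]": (15.0, 34.0),
    "Shehabi et al. [41]": (18.3, 21.1),
}


def within(t_min: float, t_max: float, envelope: Tuple[float, float]) -> bool:
    """True if the whole measured range lies inside the envelope."""
    low, high = envelope
    return low <= t_min and t_max <= high


def classify(t_min: float, t_max: float) -> str:
    """Label a measured range against the assumed ASHRAE envelopes."""
    if within(t_min, t_max, RECOMMENDED_C):
        return "recommended"
    if within(t_min, t_max, ALLOWABLE_A1_C):
        return "allowable"
    return "outside allowable"


if __name__ == "__main__":
    for source, (t_min, t_max) in ROOM_TEMPS_C.items():
        print(f"{source}: {t_min}-{t_max} °C -> {classify(t_min, t_max)}")
```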

| Reference | Location Type | Air Temp. (°C) | | | Relative Humidity (%) | | | Tc (°C) | PMV | PPD (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| | | Min. | Avg. | Max. | Min. | Avg. | Max. | | | |
| Ismail et al. [43] | Computer lab | - | - | 30.0 | 40 | 50 * | 60 | 20–24 | 1.1–1.4 | - |
| Telejko [49] | Computer lab | 26.8 | 28.2 * | 29.6 | 49 | 52 * | 55 | Exceeding the recommended temperature when occupied | - | - |
| Abanto et al. [47] | Computer room | 16.8 | 18.3 * | 19.8 | - | - | 46 | In line with thermal standard | - | <10 |
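The row for Ismail et al. [43] reports PMV but leaves PPD blank. ISO 7730 [45] relates the two through PPD = 100 − 95·exp(−0.03353·PMV⁴ − 0.2179·PMV²), so an indicative PPD can be derived. This is a minimal sketch of that relation only; the function name `ppd_from_pmv` is hypothetical, and the values it yields are computed here, not reported by the study.

```python
import math


def ppd_from_pmv(pmv: float) -> float:
    """Predicted Percentage of Dissatisfied (%) from PMV, per the ISO 7730 relation."""
    return 100.0 - 95.0 * math.exp(-0.03353 * pmv**4 - 0.2179 * pmv**2)


if __name__ == "__main__":
    # PMV range reported by Ismail et al. [43] for a computer lab.
    for pmv in (1.1, 1.4):
        print(f"PMV {pmv:+.1f} -> PPD {ppd_from_pmv(pmv):.1f}%")
```

For the reported PMV of 1.1 to 1.4, the relation gives roughly 30.5% to 45.5% dissatisfied, well above the <10% reported by Abanto et al. [47]. The minimum of 5% at PMV = 0 reflects the model's assumption that some occupants are always dissatisfied.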

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Setyo, Z.G.M.; Rijal, H.B.; Aqilah, N.; Abdullah, N. Energy Efficiency Measurement Method and Thermal Environment in Data Centers—A Literature Review. Energies 2025, 18, 3689. https://doi.org/10.3390/en18143689
