Abstract

The worldwide drive towards low-carbon transportation has made Hybrid Electric Vehicles (HEVs) a crucial component of sustainable mobility, particularly in areas with limited charging infrastructure. The core of HEV efficiency lies in the Energy Management Strategy (EMS), which regulates the energy distribution between the internal combustion engine and the electric motor. While rule-based and optimization methods have formed the foundation of EMS, their performance constraints under dynamic conditions have prompted researchers to explore artificial intelligence (AI)-based solutions. This paper systematically reviews four main AI-based EMS approaches (knowledge-driven, data-driven, reinforcement learning, and hybrid methods), highlighting their theoretical foundations, core technologies, and key applications. The integration of AI has led to notable benefits, such as improved fuel efficiency, enhanced emission control, and greater system adaptability. However, several challenges remain, including generalization to diverse driving conditions, constraints on real-time implementation, and the limited interpretability of data-driven models. The review identifies emerging trends in hybrid methods, which combine AI and conventional optimization approaches to create more adaptive and effective HEV energy management systems. The paper concludes with a discussion of future research directions, focusing on safety, system resilience, and the role of AI in autonomous decision-making.

1. Introduction

Against the backdrop of the global “dual carbon” strategy and the accelerating transformation of the automotive industry, Hybrid Electric Vehicles (HEVs) are progressively cementing their pivotal role in the new energy vehicle market [1]. According to the Global EV Outlook 2025 published by the International Energy Agency (IEA), global electric vehicle sales, including hybrid models, exceeded 17 million units in 2024, representing a year-on-year increase of over 25% and accounting for more than 20% of total new vehicle sales [2]. Among these, Plug-in Hybrid Electric Vehicles (PHEVs) accounted for approximately 5.1 million units, making up about 30% of the total electric vehicle market [3]. Meanwhile, traditional automakers, most prominently Toyota, have performed well in the non-plug-in hybrid sector, with annual sales surpassing 4 million units [4]. Due to their excellent fuel economy, low emissions, and strong adaptability to complex driving conditions, HEVs are widely regarded as a transitional technology for achieving low-carbon transportation, particularly suitable for regions where battery costs remain high and charging infrastructure is still underdeveloped [5]. Consequently, improving HEV operational efficiency and extending the lifespan of power systems have become key focuses for both industry and academia.

Within HEV systems, the Energy Management Strategy (EMS) serves as the core control logic, responsible for coordinating energy distribution between the internal combustion engine and the electric motor. It directly affects fuel efficiency, emission control, and driving experience [6]. Early commercial HEVs predominantly adopted rule-based control strategies, wherein engineers predefined logical rules and triggering conditions (such as battery state of charge or motor power demand) based on empirical knowledge to manage engine start–stop and energy flow switching. Although intuitive and easy to implement, these methods struggle to achieve global optimality under dynamically changing driving conditions [7]. To address this limitation, researchers proposed optimization-based methods such as Dynamic Programming (DP) and Pontryagin’s Minimum Principle (PMP), which theoretically yield minimum fuel consumption paths and are often used as benchmarks to evaluate other strategies. However, their heavy computational burden and reliance on complete driving cycle information hinder real-time implementation [8].

To balance optimality and real-time responsiveness, online optimization strategies such as the Equivalent Consumption Minimization Strategy (ECMS) and Model Predictive Control (MPC) have been explored. These approaches perform rolling horizon optimization to achieve near-optimal control based on current system states and short-term predictions [9,10]. While these methods outperform traditional rule-based strategies in terms of efficiency, challenges such as complex parameter tuning and sensitivity to model inaccuracies persist. Overall, although conventional EMS techniques have achieved substantial success in both theoretical and practical domains, their limited adaptability, delayed response to complex scenarios, and lack of scalability across different vehicle types and driving conditions have become major obstacles to further performance improvement in HEVs. Furthermore, ensuring the safety and reliability of the entire HEV system, including critical aspects like wireless charging security (e.g., mitigating risks from foreign metal objects [11] and managing thermal hazards in ground assemblies during misaligned charging [12]), adds another layer of complexity that modern EMS must address.

In recent years, the rapid advancement of artificial intelligence (AI) technologies has provided new opportunities for breakthroughs in HEV energy management. With the increased computational power and widespread adoption of big data analytics, researchers have begun integrating AI algorithms into EMS frameworks, enabling environmental perception, autonomous learning, and intelligent decision-making—thus reducing the dependence on manual rules and predefined models [13]. In this context, this paper categorizes AI-driven EMS approaches into four types: knowledge-driven, data-driven, reinforcement learning-based, and hybrid strategies. Additionally, emerging AI technologies like deep learning and vehicle-to-vehicle communication are becoming central to modern EMS, providing enhanced adaptability and scalability in real-world conditions. These advancements mark a significant evolution in energy management, addressing the complex, dynamic scenarios encountered by modern HEVs.

While several previous studies have provided valuable insights into Energy Management Strategies (EMSs) for Hybrid Electric Vehicles (HEVs), notable gaps remain. Panday and Bansal [14] focused primarily on conventional rule-based and optimization methods, with limited discussion on recent advances in artificial intelligence (AI). Urooj et al. [1] proposed a classification into online and offline EMS approaches, but their review lacked a systematic comparison across AI paradigms and offered minimal discussion on performance metrics such as fuel economy or real-time feasibility. Lü et al. [15] conducted a detailed analysis of Model Predictive Control (MPC) in EMS but did not explore broader AI techniques or their integration with traditional strategies. Zhang et al. [16] introduced a structural classification of EMS; yet, their work did not fully account for the growing role of hybrid AI strategies or the need for Pareto-optimal trade-offs in multi-objective optimization. Additionally, reviews such as Khalatbarisoltani et al. [17], though informative for fuel cell vehicles, fall short in addressing the challenges specific to HEVs.

In contrast, the present review advances the field in several key aspects. First, it introduces a unified AI-based EMS classification framework encompassing four major paradigms—knowledge-driven, data-driven, reinforcement learning, and hybrid strategies—reflecting the most recent algorithmic trends. Second, it provides a detailed, quantitative comparison of each category’s strengths and limitations across critical dimensions, including fuel economy gains, computational cost, real-time performance, emissions reduction, and generalization ability. Third, it addresses emerging challenges such as interpretability, safety validation, and the lack of standardized benchmarking protocols, offering a structured analysis and future research perspectives. Finally, this work synthesizes recent interdisciplinary advances—such as digital twin technology, vehicle-to-everything (V2X) communication, and solid-state battery integration—into the EMS context, thereby offering a comprehensive and forward-looking roadmap for the development of intelligent, scalable, and robust energy management systems.

2. Overview of EMS for HEVs

The Energy Management Strategy (EMS) in Hybrid Electric Vehicles (HEVs) governs the power allocation between the internal combustion engine and the electric motor, playing a central role in determining fuel economy, emission levels, and overall vehicle performance. Over the past decades, researchers have developed a variety of EMS approaches, which can be broadly classified into two major categories based on their design principles: rule-based and optimization-based strategies. In addition to these, methods such as Model Predictive Control (MPC) and fuzzy control occupy important positions within the traditional EMS framework.

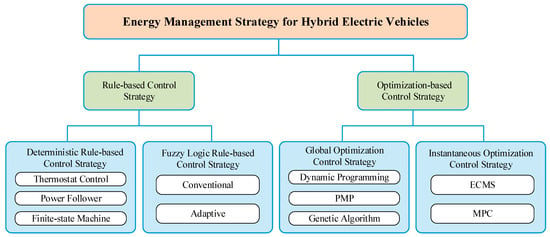

Figure 1 illustrates a commonly accepted classification framework for Energy Management Strategies in HEVs. Among them, rule-based control strategies and optimization-based control strategies constitute the two fundamental categories. The former relies on a set of predefined control rules, while the latter seeks optimal or sub-optimal control sequences through optimization algorithms.

Figure 1.

Classification of common EMSs for HEVs.

With technological advancements, additional strategies such as predictive control and intelligent control have emerged, extending and supplementing the traditional classification schemes. In the following sections, we will introduce the underlying principles and key characteristics of each strategy type, and analyze how the incorporation of artificial intelligence has driven the evolution of energy management paradigms.

2.1. Rule-Based Strategies

Rule-based EMSs determine the operating modes and power distribution between the engine and motor according to a set of predefined rules [18]. These strategies are generally guided by engineering experience, heuristic logic, or simple mathematical models, and make decisions based on vehicle states such as battery state of charge (SOC), vehicle speed, and power demand.

Typical rule-based control strategies can be divided into two main categories: deterministic rules and fuzzy logic strategies. Deterministic rule-based strategies rely on clearly defined thresholds and conditions, employing a strict “if–then” logic to control the engine and motor operation modes [19]. For example, a strategy may define charging and discharging thresholds based on SOC: when the SOC falls below a certain level, the engine starts charging the battery, whereas, when the SOC exceeds the threshold, the electric motor is prioritized for vehicle propulsion. These strategies are structurally simple and easy to implement, and respond quickly, making them well-suited for engineering applications. However, they lack flexibility and struggle to adapt to complex or rapidly changing driving conditions.

Fuzzy logic strategies, in contrast, utilize fuzzy sets and fuzzy inference rules to map input variables—such as SOC, vehicle speed, and power demand—into fuzzy values. A fuzzy inference system is then employed to derive output control decisions. This approach is more effective in handling system uncertainty and nonlinearities, thereby enhancing the smoothness and robustness of energy management. While fuzzy logic strategies offer greater adaptability than deterministic rules, they typically require more effort in design and parameter tuning [1].

An overview of commonly used rule-based strategies and their main characteristics is presented in Table 1.

Table 1.

Common rule-based EMSs and their characteristics [16,20].

2.2. Optimization-Based Strategies

Optimization-based Energy Management Strategies center on formulating and solving an optimization problem, typically aimed at minimizing a cost function—such as fuel consumption, emissions, or overall operational cost—while satisfying vehicle power demand and system constraints [21]. Unlike rule-based approaches that apply direct control based on predefined rules, optimization strategies define global performance objectives and derive real-time control decisions using vehicle models and driving conditions through dedicated optimization algorithms. These strategies are theoretically capable of significantly improving fuel economy and offer a more systematic and objective alternative to heuristic methods [22].

Optimization-based strategies can generally be classified into two subcategories: global optimization and instantaneous (real-time) optimization. Global optimization focuses on the entire driving cycle and seeks the optimal energy allocation trajectory using methods such as DP and optimal control theory, including Pontryagin’s Minimum Principle (PMP) [23]. The resulting control trajectories are typically regarded as benchmarks for evaluating the performance of other strategies. However, global optimization requires full knowledge of future driving conditions and involves intensive computation, which renders it impractical for real-time implementation. It is, therefore, mostly used in offline simulations or precomputed control maps [24].
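To make the global-optimization idea concrete, the following is a minimal sketch of backward dynamic programming on a toy problem. The integer power units, the five-level battery grid, the `fuel` cost curve, and the function name `dp_energy_split` are all assumptions chosen for illustration; they do not come from any cited study. The sketch also shows why the method needs the full demand sequence in advance.

```python
import math


def fuel(p_eng):
    """Hypothetical fuel cost per step: zero when off, convex when running."""
    return 0.0 if p_eng == 0 else 1.0 + p_eng + 0.1 * p_eng ** 2


def dp_energy_split(demand, levels=5, start=2, p_eng_max=4):
    """Backward DP over a fully known demand sequence (integer power units).

    State: battery energy level 0..levels-1. Decision: next battery level.
    Returns (minimum total fuel, [(battery_power, engine_power), ...]).
    """
    horizon = len(demand)
    # cost_to_go[t][e] = minimum fuel from step t onward, starting at level e.
    cost_to_go = [[math.inf] * levels for _ in range(horizon + 1)]
    policy = [[None] * levels for _ in range(horizon)]
    for e in range(levels):
        # Charge-sustaining terminal constraint: end at or above the start level.
        cost_to_go[horizon][e] = 0.0 if e >= start else math.inf

    for t in range(horizon - 1, -1, -1):
        for e in range(levels):
            for e_next in range(levels):
                p_batt = e - e_next            # > 0 discharges, < 0 charges
                p_eng = demand[t] - p_batt     # engine covers the remainder
                if not 0 <= p_eng <= p_eng_max:
                    continue
                cost = fuel(p_eng) + cost_to_go[t + 1][e_next]
                if cost < cost_to_go[t][e]:
                    cost_to_go[t][e] = cost
                    policy[t][e] = p_batt

    if math.isinf(cost_to_go[0][start]):
        raise ValueError("no feasible plan for this demand sequence")

    # Forward pass: follow the stored decisions from the initial level.
    e, plan = start, []
    for t in range(horizon):
        p_batt = policy[t][e]
        plan.append((p_batt, demand[t] - p_batt))
        e -= p_batt
    return cost_to_go[0][start], plan


total_fuel, plan = dp_energy_split([2, 0, 3, 1])
```

Even on this tiny grid the work grows with horizon × levels², which hints at why realistic state and time resolutions push DP into offline use.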

In contrast, instantaneous or real-time optimization aims to make locally optimal decisions at each time step or within a short prediction horizon. Through rolling horizon prediction and continuous updates, these strategies approximate global optimality over the entire trip. Representative methods include the Equivalent Consumption Minimization Strategy (ECMS) and MPC [25]. At each sampling instant, control inputs are computed to minimize the instantaneous cost function based on the current vehicle state and short-term predictions, thereby achieving near-optimal performance throughout the driving process. Since these methods do not require complete a priori knowledge of the driving cycle, they are well suited for real-time applications and have become a research and application hotspot [17].
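As an illustration of instantaneous optimization, the sketch below implements a bare-bones ECMS step: at one sampling instant it searches candidate battery powers and picks the one with the lowest equivalent fuel rate. The fuel-flow map `fuel_rate`, the power limits, and the grid step are hypothetical placeholders, and the heating value is an approximate figure for gasoline; a real implementation would use measured engine and battery maps.

```python
LHV_KJ_PER_G = 42.5     # approximate lower heating value of gasoline
P_ENG_MAX_KW = 60.0     # hypothetical engine power limit


def fuel_rate(p_eng_kw):
    """Hypothetical fuel-flow map in g/s (engine off at zero power)."""
    if p_eng_kw <= 0.0:
        return 0.0
    return 0.15 + 0.07 * p_eng_kw + 0.0005 * p_eng_kw ** 2


def ecms_split(p_demand_kw, s, p_batt_min=-20.0, p_batt_max=30.0, step=1.0):
    """Pick the battery power minimizing equivalent fuel at one instant.

    s is the equivalence factor weighting electrical energy against fuel.
    Returns (battery_kw, engine_kw), or None if no candidate is feasible.
    """
    n_steps = int(round((p_batt_max - p_batt_min) / step))
    best_cost, best_split = float("inf"), None
    for i in range(n_steps + 1):
        p_batt = p_batt_min + i * step
        p_eng = p_demand_kw - p_batt
        if not 0.0 <= p_eng <= P_ENG_MAX_KW:
            continue
        # Equivalent consumption: real fuel plus fuel-equivalent of battery power.
        cost = fuel_rate(p_eng) + s * p_batt / LHV_KJ_PER_G
        if cost < best_cost:
            best_cost, best_split = cost, (p_batt, p_eng)
    return best_split


cheap_electricity = ecms_split(20.0, s=1.0)
costly_electricity = ecms_split(20.0, s=4.0)
```

Raising the equivalence factor `s` makes battery energy more expensive and shifts load toward the engine, which is why tuning or adapting `s` is the central calibration task in ECMS.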

Common optimization-based EMS strategies and their characteristics are summarized in Table 2 below.

Table 2.

Common optimization-based EMSs and their characteristics [20,26].

2.3. Strategy Evolution and the Introduction of AI

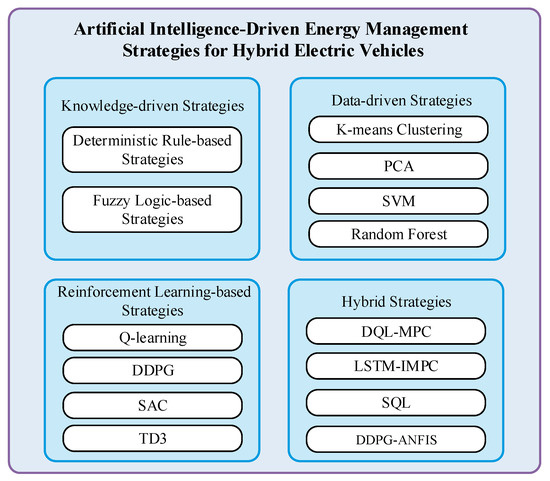

With the swift advancement in vehicle connectivity and artificial intelligence, EMSs for HEVs are also undergoing a notable transformation. Traditional systems, whether rule-based or optimization-driven, are increasingly being augmented by intelligent algorithms. In recent years, technologies like reinforcement learning and deep learning have started to reshape the EMS landscape, pushing energy management beyond rigid, predefined logic toward systems capable of adaptive learning. This shift marks a growing convergence between knowledge-based and data-centric approaches. As illustrated in Figure 2, the new generation of EMS methods can be grouped into four main types: knowledge-driven, data-driven, reinforcement learning, and hybrid strategies.

Figure 2.

Classification framework of EMSs for HEVs in the era of artificial intelligence.

Knowledge-driven approaches are grounded in expert understanding and physical system modeling. These methods embed domain expertise into the decision-making process, typically relying on established rules or mathematical models. To enhance flexibility under varying driving conditions, researchers have focused on refining control parameters or incorporating modules capable of recognizing driving patterns [27].

On the other hand, data-driven approaches harness large-scale data and machine learning techniques to build models that can make decisions based on vehicle data—either from past records or in real time. These strategies often involve using neural networks or comparable tools to approximate optimal policies, or to function directly as controllers that learn how to split power between the engine and the electric motor. Crucially, these systems bypass the need for manually crafted rules, instead extracting control logic autonomously from extensive simulation or real-world data [28,29]. That said, their effectiveness is closely tied to the depth and diversity of the training datasets, along with the model’s ability to generalize to new conditions [29].

Reinforcement learning, as a subset of data-driven methods, focuses on learning by interacting with the environment. EMS is reframed here as a sequential decision-making problem, tackled by agents—often employing deep reinforcement learning—that iteratively refine their control strategies to maximize long-term benefits like improved fuel efficiency or reduced emissions [30]. These methods do not rely on precise system models, which allows them to adapt in real time to a wide array of driving situations. However, issues such as stability during training, the speed of convergence, and safety during exploration still pose significant challenges [31].

Finally, hybrid strategies aim to draw on the strengths of all the above to create more balanced systems. In many hybrid EMS frameworks, conventional rule-based or model-driven strategies handle higher-level decision-making to maintain safety and ensure performance, while AI-driven algorithms are tasked with fine-tuning the power distribution in real time. This setup allows for both global optimization and real-time responsiveness [32]. Some hybrid approaches take it a step further by using AI to improve existing rule-based systems—for example, optimizing fuzzy logic rules with genetic algorithms or deep learning techniques—or by embedding expert insights into learning algorithms to speed up convergence [33]. By combining the reliability of knowledge-driven methods with the adaptability of data-driven ones, hybrid strategies manage to achieve a more robust and versatile performance profile.

2.4. Benchmark Driving Cycles and Open-Source Tools for EMS Evaluation

To ensure reproducibility and objective performance comparison, recent EMS research for HEVs has emphasized the importance of standardized driving cycles and open-source simulation platforms. These elements enable the consistent evaluation of energy consumption, emissions, and real-time control performance across various operating conditions and strategy types [16,32].

Table 3 summarizes commonly used benchmark driving cycles. These standardized tests represent a wide range of driving conditions—including urban stop-and-go traffic, high-speed highway cruising, and aggressive acceleration scenarios—and are widely adopted in EMS validation studies. For instance, WLTC is now a globally accepted benchmark, while US06 and UDDS are often used in the U.S. to simulate real-world driving behavior.

Table 3.

Commonly used driving cycles for EMS evaluation [34,35].

To complement physical benchmarks, various open-source tools have emerged to support simulation-based EMS development and testing. As listed in Table 4, these tools provide capabilities such as powertrain modeling, signal acquisition, control optimization, and vehicle behavior simulation. Platforms like OpenVIBE and PyEM enable modular testing of AI-driven EMS designs under flexible and repeatable virtual conditions, significantly improving research transparency and comparability.

Table 4.

Open-source tools for EMS development and testing [36,37].

By integrating these benchmark cycles and tools into EMS evaluation frameworks, researchers can enhance the standardization of experimental setups, facilitate fair cross-study comparisons, and accelerate the development of reliable, scalable Energy Management Strategies for real-world HEV applications.

3. Classification and Principles of AI-Based EMSs for HEVs

Building on the discussion of EMS evolution in Section 2, this section categorizes AI-driven Energy Management Strategies into four main types: knowledge-driven, data-driven, reinforcement learning, and hybrid methods. For each category, the fundamental principles, typical algorithms, and representative use cases in HEV control are presented. This framework clarifies how various AI paradigms address HEV energy management tasks and prepares the ground for the detailed review in Sections 3.1 to 3.4.

3.1. Knowledge-Driven Strategies

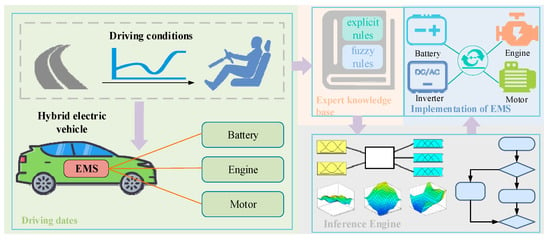

In the energy management of HEVs, knowledge-driven strategies primarily rely on engineering expertise and vehicle dynamics knowledge to establish control rules. These strategies do not require extensive data training, making them particularly suitable and robust in scenarios where system models are difficult to construct or where considerable uncertainties exist [38]. Such strategies typically collect driving condition inputs—such as road type and vehicle speed variations—alongside the operational states of key components including the battery, electric motor, and internal combustion engine. These inputs are processed by the EMS, which utilizes a rule base composed of deterministic and fuzzy logic rules. A reasoning engine then infers an optimal energy distribution plan, which is implemented by a controller to coordinate the operation of all power sources, thereby achieving intelligent energy regulation (as illustrated in Figure 3).

Figure 3.

Flow diagram of Energy Management Strategy for Hybrid Electric Vehicles based on knowledge-driven approach.

Although knowledge-driven strategies lack self-learning capabilities, their decision logic—derived from expert systems and rule-based frameworks—offers strong interpretability and real-time responsiveness. This approach reflects the core characteristics of early symbolic artificial intelligence. With the rapid evolution of AI technologies, EMS frameworks have increasingly embraced hybridized methodologies. Knowledge-driven strategies, due to their structural stability and engineering feasibility, are frequently integrated into data-driven or reinforcement-learning-based intelligent control schemes. As such, they continue to hold significant research and practical value under the current paradigm of AI-driven vehicle control.

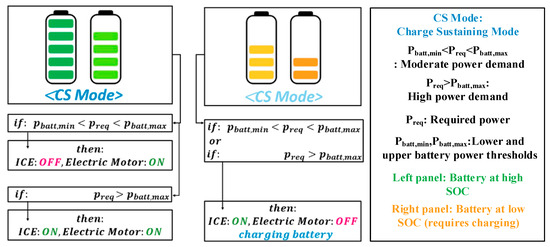

This category of strategy can be further divided into two main types: deterministic rule-based control and fuzzy logic control. Deterministic rule-based control, also known as logic threshold control, defines control logic by setting thresholds for key variables based on empirical knowledge. For instance, as shown in Figure 4, the engine’s start–stop behavior can be regulated by defining upper and lower bounds for the battery’s SOC. This approach was widely adopted in early HEV models—such as the SOC-hold strategy in the Toyota Prius—to balance energy consumption and power output. However, due to its tendency to cause abrupt control actions and result in poor system smoothness, its application is somewhat limited [39,40].

Figure 4.

Schematic diagram of Energy Management Strategy for Hybrid Electric Vehicles based on deterministic rules.
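The logic-threshold rule described above can be sketched as a small state machine with hysteresis. This is a minimal illustration only: the function name `engine_command` and the SOC bounds of 0.40 and 0.60 are assumed values, not the calibration of any production vehicle.

```python
def engine_command(soc, engine_on, soc_low=0.40, soc_high=0.60):
    """Thermostat-style rule: return True if the engine should run.

    soc_low and soc_high are illustrative thresholds, not real calibrations.
    """
    if soc <= soc_low:
        return True          # battery depleted: start the engine to recharge
    if soc >= soc_high:
        return False         # battery sufficiently charged: drive electrically
    return engine_on         # inside the band: keep the previous state


# Example: an SOC trace that crosses both thresholds.
state = False
trace = []
for soc in [0.55, 0.45, 0.39, 0.50, 0.61, 0.50]:
    state = engine_command(soc, state)
    trace.append(state)
```

The band between the two thresholds prevents rapid engine cycling, but the command still switches abruptly at each bound, which is the smoothness limitation noted in the text.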

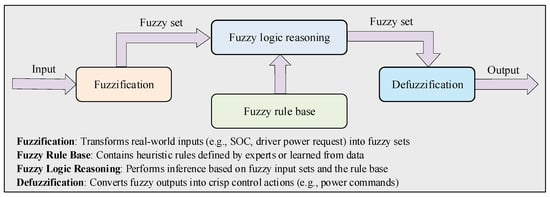

In contrast, fuzzy logic control is based on fuzzy set theory and aims to formalize expert knowledge to handle highly nonlinear systems. As shown in Figure 5, the typical fuzzy control process includes fuzzification, fuzzy inference, and defuzzification. Input variables are first transformed into linguistic variables (e.g., classifying SOC as “low,” “medium,” or “high”) and mapped through membership functions. Expert-defined IF–THEN rules are then applied for fuzzy inference—commonly using the Mamdani method, which determines the rule activation strength via the minimum operator and aggregates outputs via the maximum operator. Finally, defuzzification techniques such as the centroid method are used to derive concrete control commands [41,42].

Figure 5.

Schematic diagram of the energy management system based on fuzzy logic rules.
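The three stages of fuzzification, Mamdani inference, and centroid defuzzification can be illustrated with a compact sketch. Everything specific here is an assumption made for the example: inputs are normalized to the range 0 to 1, the membership functions are simple triangles, and the three rules are a hypothetical rule base far smaller than a practical one.

```python
def tri(x, left, peak, right):
    """Triangular membership function."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


def low(x):
    return tri(x, -0.5, 0.0, 0.5)


def medium(x):
    return tri(x, 0.0, 0.5, 1.0)


def high(x):
    return tri(x, 0.5, 1.0, 1.5)


def mamdani_engine_share(soc, demand, n_points=101):
    """Map normalized SOC and power demand (0..1) to an engine power share."""
    # Rule strength = minimum of the antecedent memberships (Mamdani AND).
    rules = [
        (min(low(soc), high(demand)), high),   # low SOC, high demand -> high share
        (min(high(soc), low(demand)), low),    # high SOC, low demand -> low share
        (medium(soc), medium),                 # medium SOC -> medium share
    ]
    ys = [i / (n_points - 1) for i in range(n_points)]
    # Clip each consequent at its rule strength, then aggregate with max.
    aggregated = [max(min(w, mf(y)) for w, mf in rules) for y in ys]
    area = sum(aggregated)
    if area == 0.0:
        return 0.5                             # no rule fired: neutral fallback
    # Centroid defuzzification on the sampled output universe.
    return sum(y * m for y, m in zip(ys, aggregated)) / area


depleted = mamdani_engine_share(soc=0.2, demand=0.9)
charged = mamdani_engine_share(soc=0.9, demand=0.1)
```

Because the output varies continuously with the inputs wherever at least one rule fires, the engine share changes gradually rather than jumping at a threshold. The neutral fallback for inputs that fire no rule is a symptom of the deliberately sparse rule base; a practical design would cover the whole input space.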

Compared with deterministic rule-based control, fuzzy logic control provides greater adaptability and flexibility, significantly improving system smoothness and reducing abrupt transitions. Nonetheless, the design of fuzzy systems is heavily reliant on expert experience. The development of rule sets and membership functions is inherently subjective and labor-intensive, and achieving global system optimality remains challenging. To address these limitations, recent studies have incorporated intelligent optimization algorithms—such as genetic algorithms and particle swarm optimization—to automatically fine-tune fuzzy rule parameters, thereby enhancing system performance [43]. Common input variables for fuzzy controllers include SOC, vehicle power demand, and vehicle speed, while outputs typically involve the distribution of power between the engine and electric motor. To better accommodate dynamic driving environments, multi-mode fuzzy control schemes have been proposed. These approaches dynamically switch between rule subsets tailored for urban, suburban, or highway conditions, thereby improving the generalization and real-world adaptability of the EMS.

3.2. Data-Driven Strategies

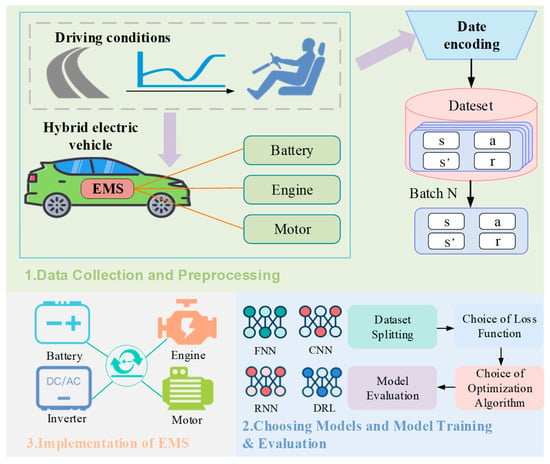

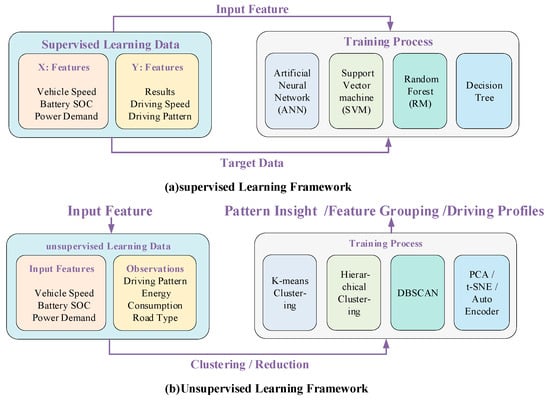

With the rapid development of artificial intelligence, data-driven strategies have become a central tool in energy management for HEVs. Unlike knowledge-driven approaches that rely on human expertise, data-driven methods—illustrated in Figure 6—leverage real-world driving data such as vehicle speed and road conditions to establish mappings between input features and optimal energy allocation strategies using supervised or unsupervised learning. This enables intelligent energy coordination and significantly enhances vehicle performance and energy efficiency [13]. As shown in Figure 7, these strategies can be broadly categorized into supervised and unsupervised learning approaches. By deeply modeling the vehicle and environmental states, data-driven methods drive energy allocation toward optimality and improve the synergy between fuel and electric power usage [44].

Figure 6.

Flow diagram of Energy Management Strategy for Hybrid Electric Vehicles based on data-driven approach.

Figure 7.

Application framework of supervised and unsupervised learning in Hybrid Electric Vehicle energy management.

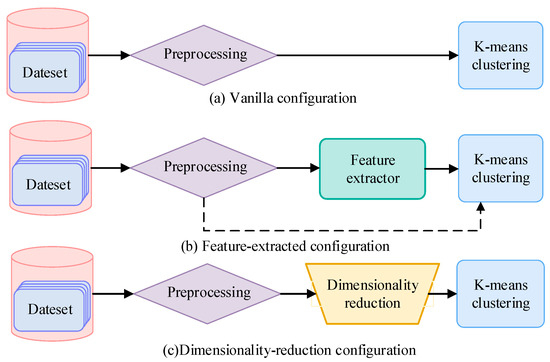

Unsupervised learning does not require labeled datasets and excels in identifying latent structures from raw data. It is widely applied in driving pattern recognition and dimensionality reduction. As depicted in Figure 8, K-means clustering is commonly used to differentiate various driving conditions, enabling the dynamic adjustment of energy allocation strategies to improve dual energy source efficiency [45,46]. However, the results are highly sensitive to the initial cluster centroids, which may lead to suboptimal outcomes. Using a genetic algorithm (GA) to optimize the initial centroids can significantly enhance the clustering quality and overall system performance [47]. Meanwhile, Principal Component Analysis (PCA) simplifies high-dimensional data by extracting key features through dimensionality reduction. When combined with GAs to optimize control parameters, PCA further strengthens the performance of HEV control systems [28,48].

Figure 8.

Flowchart of three typical data-processing configurations using K-means clustering.
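As a rough illustration of driving pattern recognition, the sketch below runs plain K-means (Lloyd's algorithm) on synthetic trip segments. The two features (mean speed in km/h and stops per km), the synthetic "urban" and "highway" distributions, and the random initialization are all assumptions for the example; the GA-based centroid initialization mentioned in the text is not implemented here.

```python
import random


def kmeans(points, k, iters=100, seed=0):
    """Plain Lloyd's algorithm; returns (centroids, labels)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)      # random initial centroids
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: nearest centroid by squared Euclidean distance.
        labels = [
            min(range(k), key=lambda c: sum((a - b) ** 2
                                            for a, b in zip(p, centroids[c])))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append(tuple(sum(col) / len(members)
                                           for col in zip(*members)))
            else:
                new_centroids.append(centroids[c])   # keep an empty cluster fixed
        if new_centroids == centroids:
            break
        centroids = new_centroids
    return centroids, labels


# Synthetic segments: (mean speed in km/h, stops per km).
data_rng = random.Random(1)
urban = [(data_rng.gauss(25, 5), data_rng.gauss(3.0, 0.5)) for _ in range(20)]
highway = [(data_rng.gauss(100, 5), data_rng.gauss(0.1, 0.05)) for _ in range(20)]
centroids, labels = kmeans(urban + highway, k=2)
```

In practice the features would be normalized first, since speed otherwise dominates the distance. With less clearly separated data, different seeds can yield different partitions, which is the initialization sensitivity that motivates GA-assisted variants.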

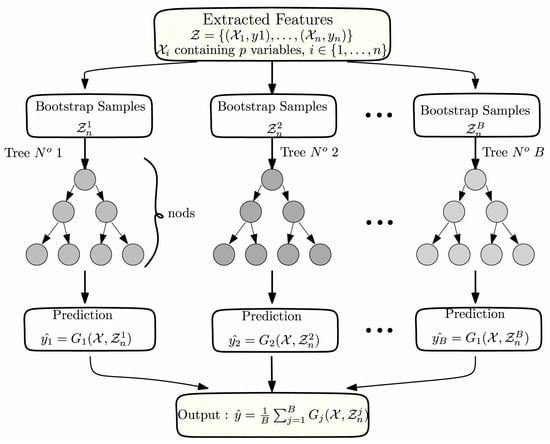

Supervised learning, by contrast, relies on labeled data to build predictive models and is well-suited for applications such as driving condition classification, fault detection, and energy forecasting. Support Vector Machines (SVMs), known for their excellent generalization ability in handling high-dimensional data, not only offer precise fault diagnosis but also assist in extracting critical conditions for the Equivalent Consumption Minimization Strategy (ECMS), thereby improving both fuel economy and driving comfort [45,49]. In addition, decision trees and ensemble methods like Random Forests have demonstrated strong performance in HEV control. By integrating multiple decision trees, Random Forests enhance input feature selection and construct more robust energy management models, effectively reducing energy waste [50].

In summary, both supervised and unsupervised learning approaches within data-driven strategies contribute distinct advantages to HEV energy management and collectively enhance system intelligence. From the synergistic integration of K-means and GA, to the efficient dimensionality reduction by PCA, and the precise applications of SVM and Random Forests in fault identification and energy control (see Figure 9), data-driven methods are progressively forming the technological foundation for intelligent energy management in HEVs [28,51].

Figure 9.

Flowchart of the Random Forest algorithm.

3.3. Reinforcement Learning Strategies

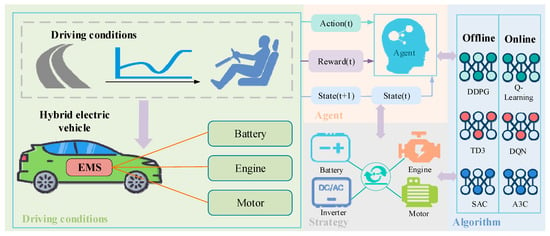

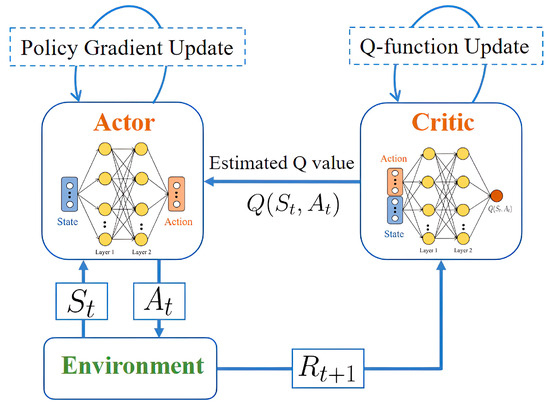

In reinforcement learning (RL), an agent interacts continuously with the environment and adjusts its strategy based on reward and penalty feedback, aiming to maximize long-term cumulative returns. As illustrated in Figure 10, RL plays a pivotal role in the energy management system of HEVs. It enables dynamic decision-making for power distribution between the internal combustion engine and electric motor while simultaneously considering multidimensional feedback such as fuel efficiency, emission impacts, and battery health, thereby continuously optimizing control strategies [52,53,54]. In contrast to conventional supervised learning methods, RL does not rely on predefined labeled datasets and exhibits a strong capacity for autonomous learning. This makes it particularly well-suited for complex driving scenarios characterized by high uncertainty and dynamic environmental changes [55].

Figure 10.

Flow diagram of Energy Management Strategy for Hybrid Electric Vehicles based on reinforcement learning.
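The multidimensional feedback mentioned above is usually collapsed into a single scalar reward. The following is one possible shape for such a reward, offered as a sketch: the function name `ems_reward`, the choice of NOx as the emission term, the SOC window used as a stand-in for battery health, and every weight are illustrative assumptions rather than values from the reviewed studies.

```python
def ems_reward(fuel_g, nox_mg, soc, soc_ref=0.6, soc_min=0.3, soc_max=0.8,
               w_fuel=1.0, w_nox=0.01, w_soc=50.0, limit_penalty=100.0):
    """Scalar reward for one control step; all weights are illustrative."""
    reward = -(w_fuel * fuel_g + w_nox * nox_mg)   # fuel and emission terms
    reward -= w_soc * (soc - soc_ref) ** 2         # keep SOC near its reference
    if not soc_min <= soc <= soc_max:              # crude proxy for battery health
        reward -= limit_penalty
    return reward


nominal = ems_reward(fuel_g=1.0, nox_mg=10.0, soc=0.6)
depleted = ems_reward(fuel_g=1.0, nox_mg=10.0, soc=0.2)
```

The relative weights determine which objective the learned policy favors, so they play a role similar to calibration parameters in conventional strategies.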

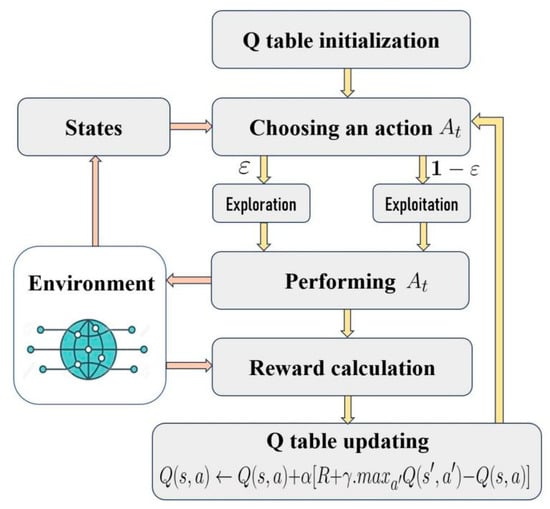

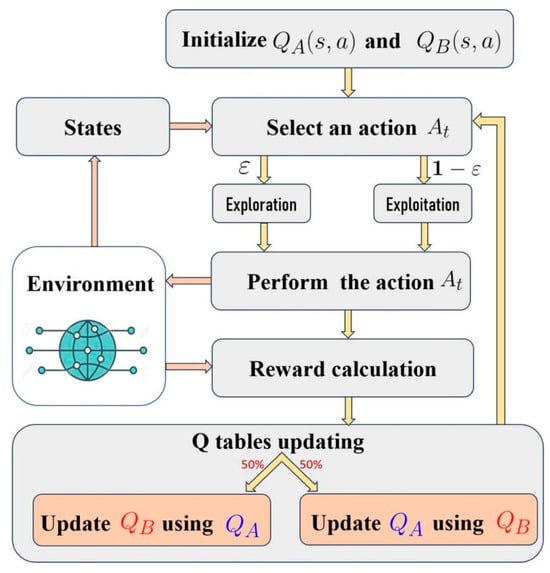

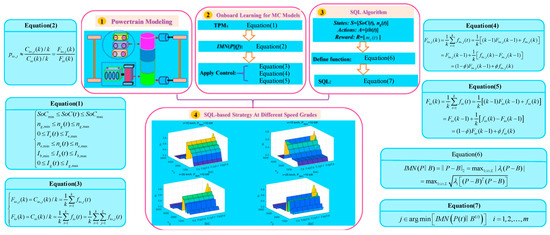

Among various RL algorithms, Q-Learning and SARSA are widely used representatives. As shown in Figure 11, Q-Learning is favored in HEV energy management due to its simplicity and deployment flexibility. It can gradually approximate optimal control policies even under conditions of incomplete information or inaccurate prediction, effectively improving fuel economy and extending battery lifespan [56,57]. SARSA, another value-based method, is on-policy: it updates the value of each state-action pair, Q(s, a), in its Q-table using the action the agent actually takes next, iteratively improving policy performance [58]. Given the high cost and safety risks associated with trial-and-error learning on physical vehicles, RL is typically trained offline within high-fidelity simulation environments. Once the policy has converged on extensive simulated driving data, the trained model can be deployed in real vehicles, with online learning and parameter fine-tuning incorporated to maintain performance even under battery degradation or fluctuating external conditions [59,60].

Figure 11.

Block diagram of the Q-Learning algorithm.
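The difference between the two tabular methods lies in the update target, which the sketch below places side by side before training a Q-Learning agent on a toy problem. The environment `toy_step` (five SOC bins, two actions, and a penalty for drawing on an empty battery) is a hypothetical stand-in for a vehicle simulator, and the learning rate, discount factor, and exploration rate are arbitrary illustrative choices.

```python
import random


def q_learning_update(Q, s, a, r, s_next, alpha, gamma):
    """Off-policy target: bootstrap from the best next action."""
    target = r + gamma * max(Q[s_next])
    Q[s][a] += alpha * (target - Q[s][a])


def sarsa_update(Q, s, a, r, s_next, a_next, alpha, gamma):
    """On-policy target: bootstrap from the action actually taken next."""
    target = r + gamma * Q[s_next][a_next]
    Q[s][a] += alpha * (target - Q[s][a])


def toy_step(soc_bin, action, n_bins=5):
    """Hypothetical HEV toy model. action 0 = engine, 1 = electric."""
    if action == 0:                       # engine drives and recharges one bin
        return min(soc_bin + 1, n_bins - 1), -1.0
    if soc_bin == 0:                      # empty battery: heavy penalty
        return 0, -5.0
    return soc_bin - 1, 0.0               # electric drive costs no fuel


def train(steps=20000, alpha=0.1, gamma=0.95, eps=0.1, seed=0):
    """Epsilon-greedy tabular Q-Learning on the toy model."""
    rng = random.Random(seed)
    Q = [[0.0, 0.0] for _ in range(5)]
    s = 2
    for _ in range(steps):
        if rng.random() < eps:
            a = rng.randrange(2)                      # explore
        else:
            a = max((0, 1), key=lambda x: Q[s][x])    # exploit
        s_next, r = toy_step(s, a)
        q_learning_update(Q, s, a, r, s_next, alpha, gamma)
        s = s_next
    return Q


Q = train()
```

After training, the agent prefers the engine when the battery bin is empty. Swapping in `sarsa_update` only requires selecting the next action before performing the update.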

In recent years, the continued advancement of deep learning has expanded the application of deep reinforcement learning (DRL) in this field, as shown in Figure 12. DRL leverages deep neural networks to approximate Q-value functions or policy functions, significantly improving the system’s ability to handle high-dimensional state spaces and continuous action spaces, and enhancing adaptability in dynamically complex environments [61]. Techniques such as Deep Q-Networks (DQNs), Deep Deterministic Policy Gradient (DDPG), Proximal Policy Optimization (PPO), and Soft Actor–Critic (SAC) have demonstrated great potential in HEV energy management [62,63]. DRL not only enables multi-objective optimization—covering fuel consumption, emissions, and battery longevity—but also facilitates flexible energy allocation strategies based on different driving contexts (e.g., urban traffic, highway cruising, or congestion), thereby exhibiting a superior generalization capability and practical applicability [64]. A comparative overview of representative reinforcement learning algorithms applied in HEV energy management is summarized in Table 5, highlighting their key characteristics, typical applications, and main advantages.

Figure 12.

Block diagram of the DQL algorithm.

Table 5.

Comparison of representative reinforcement learning algorithms in HEV energy management systems [57,61,62,64].

3.4. Hybrid Strategies

In light of the performance limitations of single control methods, the integration of intelligent algorithms with traditional optimization approaches—referred to as hybrid strategies—has emerged as an effective pathway to enhance the robustness and overall performance of HEV energy management systems.

To provide a structured overview of hybrid energy management approaches, Table 6 summarizes the five principal categories currently adopted in the literature. Each category reflects a specific integration paradigm between intelligent algorithms and conventional optimization/control methods, highlighting their key mechanisms, strengths, and application domains.

Table 6.

Summary of hybrid energy management strategy categories for HEVs [65,66,67].

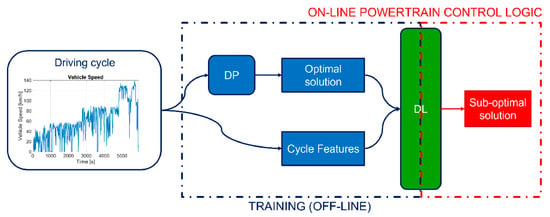

The first category involves a collaborative mechanism between offline optimization and online control. Although DP can generate globally optimal energy allocation strategies under predefined conditions, its computational complexity renders it unsuitable for real-time control applications [65]. In hybrid strategies, DP is therefore typically used to generate a large volume of high-quality samples to train learning models that approximate DP-level performance in online environments. For example, Zhuang et al. obtained the optimal driving condition data using DP and constructed a mode transition map with Support Vector Machines (SVMs) to optimize the Equivalent Consumption Minimization Strategy (ECMS), leading to notable improvements in fuel economy and driving smoothness [48,68].
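
As an illustration of how DP can serve as an offline sample generator, the sketch below solves a toy charge-sustaining power-split problem by backward recursion and then rolls out the optimal policy to obtain (state, action) pairs that a supervised learner could be trained on. The integer SOC grid, the quadratic fuel cost, and the function names are assumptions made for this example; they do not reproduce the setup of Zhuang et al.

```python
def fuel(eng):
    """Hypothetical convex fuel cost for an integer engine-energy level."""
    return float(eng ** 2)

def dp_power_split(demand, soc_levels, soc_init, actions=(-1, 0, 1)):
    """Backward DP over a discretized SOC grid.

    An action u > 0 discharges the battery by u units, u < 0 charges it.
    The terminal constraint forces the final SOC to equal soc_init.
    """
    inf = float("inf")
    horizon = len(demand)
    cost_to_go = [[inf] * soc_levels for _ in range(horizon + 1)]
    cost_to_go[horizon][soc_init] = 0.0
    policy = [[None] * soc_levels for _ in range(horizon)]
    for t in range(horizon - 1, -1, -1):
        for soc in range(soc_levels):
            for u in actions:
                nxt, eng = soc - u, demand[t] - u
                if not (0 <= nxt < soc_levels) or eng < 0:
                    continue  # infeasible SOC or negative engine energy
                cost = fuel(eng) + cost_to_go[t + 1][nxt]
                if cost < cost_to_go[t][soc]:
                    cost_to_go[t][soc] = cost
                    policy[t][soc] = u
    return cost_to_go, policy

def rollout(policy, demand, soc_init):
    """Follow the DP policy and collect ((soc, demand), action) training samples."""
    samples, soc = [], soc_init
    for t, d in enumerate(demand):
        u = policy[t][soc]
        samples.append(((soc, d), u))
        soc -= u
    return samples

demand_profile = [1, 3, 1, 3]          # toy demand sequence (arbitrary units)
costs, dp_policy = dp_power_split(demand_profile, soc_levels=3, soc_init=1)
training_samples = rollout(dp_policy, demand_profile, soc_init=1)
```

In this toy case the DP solution charges the battery during low-demand steps and discharges it during high-demand steps, which levels the engine load. The resulting samples play the role of the DP-labeled data mentioned above.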

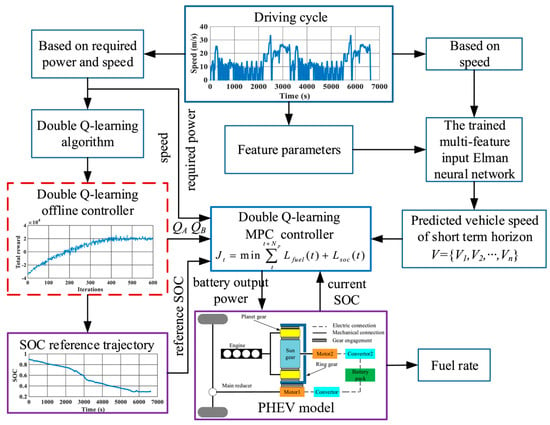

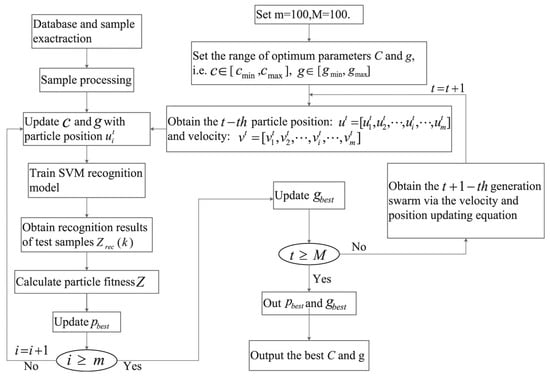

The second category embeds real-time optimization within a learning-based control framework. Model Predictive Control (MPC) is capable of dynamic optimization over short time horizons but incurs a high computational cost. To address this, Chen et al. [69] proposed a strategy combining Q-Learning with MPC, as shown in Figure 13. A Q-table is trained offline and subsequently used in MPC’s rolling horizon optimization to assist value evaluation, effectively reducing the computational load and enhancing the energy efficiency [66]. Shen et al. further incorporated Double Delayed Q-Learning (DDQL) and vehicle speed predictions via Convolutional Neural Networks (CNNs) to develop a more adaptive MPC control structure [70].

Figure 13.

Block diagram of energy management for plug-in Hybrid Electric Vehicles based on the DQL–MPC algorithm.

The third category emphasizes the dynamic adjustment of optimization parameters using intelligent algorithms. For instance, the control performance of ECMS depends on fixed equivalence factors, which struggle to adapt to dynamic real-world conditions. Recent studies have proposed feeding the state of charge (SOC) and predicted driving conditions into deep reinforcement learning models to achieve the real-time adaptive tuning of equivalence factors [71]. Shi et al., for example, applied a Deep Double Q-Network (DDQN) combined with periodic prediction data to dynamically refine equivalence factors, enabling the precise coordination between the engine torque and gear ratio under varying driving demands and, ultimately, reducing fuel consumption [66].
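
The role of the equivalence factor can be illustrated with a minimal ECMS sketch. At each instant, the controller selects the engine power that minimizes fuel power plus the equivalence factor times battery power. The fuel map, power limits, and the proportional SOC-feedback rule below are hypothetical stand-ins; in the studies cited above, the factor would instead be supplied by a trained DRL agent.

```python
def fuel_power_kw(p_eng_kw):
    """Hypothetical fuel-power map: fuel power (kW) needed for a given engine output."""
    if p_eng_kw <= 0.0:
        return 0.0  # engine off
    return 5.0 + 2.5 * p_eng_kw + 0.01 * p_eng_kw ** 2

def ecms_split(p_demand_kw, s, p_eng_max_kw=60.0, p_batt_max_kw=30.0, step_kw=1.0):
    """Grid search over engine power minimizing fuel power + s * battery power."""
    best = None
    for i in range(int(p_eng_max_kw / step_kw) + 1):
        p_eng = i * step_kw
        p_batt = p_demand_kw - p_eng      # negative values charge the battery
        if abs(p_batt) > p_batt_max_kw:
            continue                      # outside assumed battery limits
        cost = fuel_power_kw(p_eng) + s * p_batt
        if best is None or cost < best[0]:
            best = (cost, p_eng, p_batt)
    if best is None:
        raise ValueError("no feasible power split for this demand")
    return best[1], best[2]

def adapt_equivalence_factor(soc, soc_ref=0.6, s_nominal=2.8, gain=5.0):
    """Assumed proportional rule: a low SOC raises s, which favors the engine."""
    return s_nominal + gain * (soc_ref - soc)
```

With this toy map, a small factor makes battery energy look cheap and yields electric-only driving, while a large factor pushes the engine to cover the demand and recharge the battery. This sensitivity is why a fixed factor adapts poorly to changing conditions.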

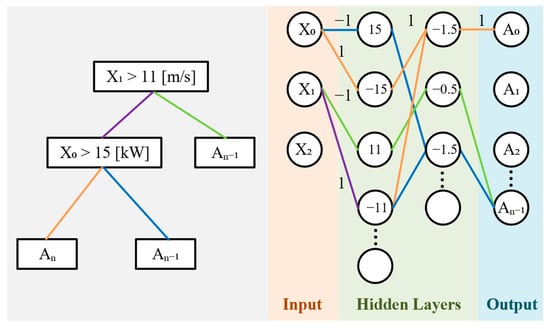

The fourth category focuses on the intelligent optimization of parameters within rule-based or fuzzy control systems. Algorithms such as the Genetic Algorithm (GA) and Particle Swarm Optimization (PSO) are frequently employed to fine-tune membership functions and control rules in fuzzy controllers. GA-based optimization has been shown to significantly improve fuel efficiency, achieving energy savings of up to 28% [72]. Fan et al. simultaneously optimized the fuzzy rule thresholds and ECMS equivalence factors using GA, achieving real-time capability while keeping fuel economy close to that of DP [73]. Additionally, adaptive Fuzzy Neural Network (FNN) structures have been proposed. Zhang et al. combined neural networks with driving cycle recognition to optimize fuzzy membership functions, improving the strategy’s adaptability across diverse conditions [74]. As shown in Figure 14, Wang et al. further developed the FlexNet network based on expert knowledge, integrating clustering techniques and hybrid learning to optimize FNN parameter configurations for cooperative load balancing between the battery and supercapacitor [67].

Figure 14.

Mapping from expert decision tree to FlexNet (left: decision tree; and right: FlexNet neural network).

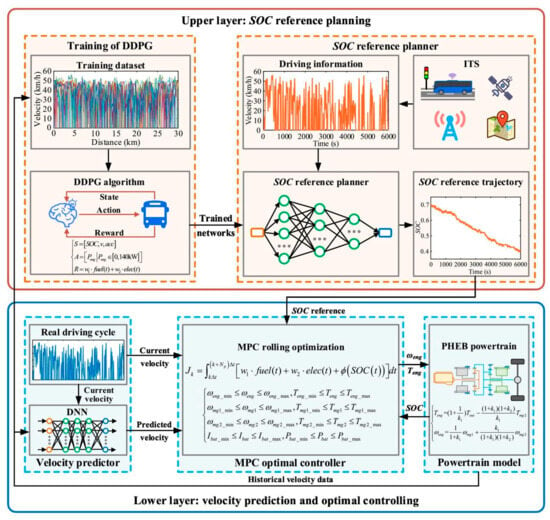

The fifth category of hybrid strategies implements hierarchical control architectures to coordinate multiple approaches. As illustrated in Figure 15, such architectures typically involve a high-level controller providing global or long-horizon control references—often based on reinforcement learning or global optimization—while low-level controllers (e.g., MPC or rule-based control) perform real-time tracking [75]. For example, Zhang et al. developed a dual-layer EMS, where the upper layer employs DDPG to generate control strategies, and the lower layer selects the appropriate control modes to achieve a balance between fuel economy and response time [74]. In intelligent and connected vehicle scenarios, this architecture can further scale to fleet-level and individual-vehicle-level control: the upper layer manages energy usage across the fleet, while the lower layer performs local optimization for each vehicle. This cooperative architecture enhances the overall system performance via inter-vehicle communication.

Figure 15.

Block diagram of hierarchical architecture for the energy management system of Hybrid Electric Vehicles.

In summary, hybrid strategies combine the global optimality and theoretical rigor of optimization algorithms with the self-learning and adaptability of intelligent algorithms, balancing real-time responsiveness with generalization capabilities. In practice, deep reinforcement learning algorithms such as Proximal Policy Optimization (PPO) have already demonstrated near-DP performance while being suitable for real-time applications [76]; GA-optimized fuzzy control strategies integrate expert knowledge with search capabilities to overcome the energy-saving limitations of traditional rule-based control [72]. With the continuous advancement of computational power and vehicular networking infrastructure, multi-strategy hybrid approaches for HEV energy management are becoming mainstream, driving the deeper integration between intelligent control and energy optimization.

4. Research Achievements and Advances in AI-Based Strategies

To provide a clear overview of the performance of various artificial intelligence-based Energy Management Strategies for Hybrid Electric Vehicles (HEVs), Table 7 presents a comparative analysis across key performance metrics. The table highlights the fuel economy improvements, computational costs (CPU time), real-time feasibility (latency), emissions reductions, generalization ability, and the validation methods used in the corresponding studies.

Table 7.

Comparative evaluation of AI-based energy management strategies for HEVs [30,73].

As shown in Table 7, each strategy type—knowledge-driven, data-driven, reinforcement learning, and hybrid strategies—exhibits distinct advantages and limitations. For example, knowledge-driven strategies such as FLC are extremely efficient in terms of computational cost and real-time performance, making them suitable for applications requiring fast response times. However, they tend to have a weaker generalization ability compared to data-driven and reinforcement learning approaches. On the other hand, reinforcement learning methods like PPO show higher fuel economy improvements and stronger generalization, but they come with higher computational costs and are typically validated in simulation environments. Hybrid strategies, which combine reinforcement learning with Model Predictive Control (MPC), provide a balanced solution, offering higher fuel economy improvements and better real-time feasibility, but at the cost of an increased computational complexity.

This comparative table helps underline the trade-offs and application scenarios for each approach, guiding the selection of the most suitable Energy Management Strategy based on specific HEV design goals and constraints.

4.1. Applications and Effectiveness of Knowledge-Driven Strategies

Knowledge-driven strategies exhibit outstanding adaptability and robustness in energy management for HEVs, as they integrate expert knowledge, engineering experience, and intelligent algorithms to construct efficient and reliable control frameworks. These strategies are grounded in an a priori understanding of vehicle dynamics, energy flow principles, and driving scenarios, demonstrating particular effectiveness in dynamic environments and multi-objective optimization [77].

Fuzzy logic control (FLC) emphasizes a multi-input/multi-output rule design, balancing fuel efficiency, battery longevity, and driving responsiveness. For series HEVs, ref. [78] proposed a three-input and two-output fuzzy controller using the state of charge (SOC), driver demand, and vehicle speed as inputs, and engine power and regenerative braking strength as outputs. This design effectively maintained the SOC within the target range and achieved an 8% reduction in fuel consumption. Based on this framework, ref. [79] streamlined the rule set and developed a controller based on the battery energy state and target SOC, improving the SOC tracking accuracy and reducing emissions by 12%. Higher-order fuzzy systems demonstrate enhanced performance in highly uncertain environments. The interval type-2 fuzzy logic controller (IT2-FLC) introduced in [80] reduced the dynamic response error by 15% compared to traditional type-1 fuzzy logic controllers (T1-FLCs), revealing its potential in autonomous driving applications.
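
A fuzzy rule base of this kind can be sketched in a few lines. The example below is a deliberately simplified zero-order Sugeno controller with two inputs (SOC and normalized power demand) and one output (the engine's share of the demand), rather than the three-input, two-output design of [78]. The membership breakpoints and rule consequents are assumed values chosen for illustration.

```python
def falling(x, lo, hi):
    """Shoulder membership: 1 below lo, 0 above hi, linear in between."""
    return min(1.0, max(0.0, (hi - x) / (hi - lo)))

def rising(x, lo, hi):
    """Shoulder membership: 0 below lo, 1 above hi, linear in between."""
    return min(1.0, max(0.0, (x - lo) / (hi - lo)))

def engine_share(soc, demand):
    """Return the engine's share of the power demand in [0, 1].

    soc and demand are both normalized to [0, 1]. AND is implemented as min,
    and the output is the firing-strength-weighted average of the consequents.
    """
    soc_low, soc_high = falling(soc, 0.4, 0.7), rising(soc, 0.4, 0.7)
    dem_low, dem_high = falling(demand, 0.2, 0.8), rising(demand, 0.2, 0.8)
    rules = [
        (min(soc_low, dem_high), 1.0),   # low SOC, high demand: engine only
        (min(soc_low, dem_low), 0.7),    # low SOC, low demand: mostly engine
        (min(soc_high, dem_high), 0.5),  # high SOC, high demand: shared load
        (min(soc_high, dem_low), 0.0),   # high SOC, low demand: electric only
    ]
    total = sum(w for w, _ in rules)
    return sum(w * out for w, out in rules) / total
```

Because the paired memberships are complementary, at least one rule always fires, so the weighted average is well defined for any input in the normalized range.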

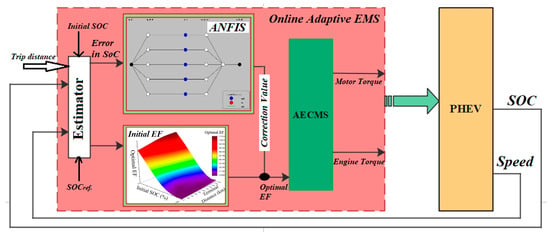

The adaptive neuro-fuzzy inference system (ANFIS) has further promoted the intelligence of knowledge-driven control strategies. In [81], an ANFIS-based energy management scheme was designed for vehicle-to-grid (V2G) microgrids, enabling the dynamic adjustment of electric vehicle (EV) charging and discharging power under conditions with large initial SOC disparities. This approach improved the power allocation efficiency by 20% compared to conventional FLC. As shown in Figure 16, ref. [82] integrated ANFIS with the equivalent consumption minimization strategy (ECMS) to dynamically calibrate the equivalent factor, resulting in a 23% reduction in the terminal SOC variance for a plug-in parallel HEV, while achieving fuel economy close to the DP benchmark. In a performance comparison between FLC and ANFIS, ref. [83] found that the latter, through data-driven rule base construction, not only enhanced the SOC maintenance capability by 15% but also significantly improved the smoothness of the control curve.

Figure 16.

Schematic diagram of online implementation of the designed ANFIS–AECMS.

In addition, Fu et al. [84] proposed a rule-based Energy Management Strategy for fuel cell electric vehicles (FCEVs) that optimizes the fuel cell’s participation, open-circuit voltage (OCV) operation, and start/stop actions based on predefined rules, which are dynamically adjusted according to different driving cycles and load conditions. This strategy improved the fuel cell lifespan by 9.47%, while reducing hydrogen consumption and system degradation rates. Similarly, Qin et al. [85] introduced a strategy for fuel cell hybrid mining trucks that combines dynamic programming (DP) with real-time optimization, embedding optimal rules derived from DP into a real-time model refined through sequential quadratic programming (SQP) for energy allocation. This strategy resulted in a reduction in operational costs by up to 15.90% in continuous operation conditions.

Knowledge-driven strategies also demonstrate distinct advantages in the coordination of multi-energy systems. Reference [86] proposed an online energy management framework based on game theory, which utilizes the real-time identification of power source characteristics and power allocation via game-based decision-making. This approach reduced the total operating cost of a multi-stack fuel cell HEV by 9.7% and decreased hydrogen consumption by 6.2%. In [87], a high-precision artificial neural network (ANN) fuel consumption model was developed to capture the transient characteristics of the engine. By incorporating this model into an improved adaptive equivalent consumption minimization strategy (ECMS), the additional fuel consumption caused by engine state fluctuations was reduced by 99.16%, and overall fuel economy improved by 3.37%.

Furthermore, Reference [88] developed a trip-energy-driven hybrid rule-based Energy Management Strategy, which integrates personalized control rules to reduce engine start–stop events by up to 30%. Compared to conventional rule-based strategies, this approach achieved a 5.8% improvement in fuel efficiency.

4.2. Technical Progress in Data-Driven Strategies

Data-driven approaches have been increasingly applied to energy management in HEVs, aiming to extract patterns from complex data through machine learning and to optimize control strategies accordingly. Based on differences in learning paradigms, existing methods can be categorized into unsupervised learning and supervised learning, both of which offer complementary advantages in terms of feature extraction, model training, and practical deployment scenarios.

In the EMS of HEVs, unsupervised learning techniques are primarily employed for driving pattern recognition and dimensionality reduction. These methods are advantageous due to their ability to explore working conditions without requiring labeled data. Common techniques include K-means clustering and PCA [15,89]. For example, Zhang et al. applied K-means to classify driving behaviors to guide energy allocation strategies, resulting in reduced fuel and electricity consumption [90]. Sun et al. combined K-means with Markov chains for vehicle speed prediction and the dynamic adjustment of the equivalent factor in the ECMS, thus improving energy utilization efficiency [89]. Furthermore, some studies have integrated PCA with GA to cluster PHEV driving conditions and optimize control parameters, yielding a control performance close to the global optimum achieved by DP [91,92]. Li et al. optimized K-means cluster centers via GA to enable driving state identification and efficient engine operation. Dimensionality reduction techniques such as autoencoders have also been used to reduce the model complexity and prevent overfitting [93].
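
For readers unfamiliar with the clustering step, the following sketch applies a plain K-means (Lloyd's algorithm) to hypothetical driving segments described by mean speed and mean absolute acceleration. The feature choice, the sample values, and the naive initialization from the first k points are assumptions for illustration; practical implementations would normalize the features and use a more robust initialization.

```python
def kmeans(points, k, iters=50):
    """Plain Lloyd's algorithm; returns (centers, labels)."""
    centers = [list(p) for p in points[:k]]   # naive init: first k points
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: nearest center by squared Euclidean distance.
        labels = [
            min(range(k),
                key=lambda j: sum((x - c) ** 2 for x, c in zip(p, centers[j])))
            for p in points
        ]
        # Update step: move each center to the mean of its members.
        new_centers = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                new_centers.append([sum(col) / len(members) for col in zip(*members)])
            else:
                new_centers.append(centers[j])  # keep an empty cluster's center
        if new_centers == centers:
            break  # converged
        centers = new_centers
    return centers, labels

# Hypothetical segments: (mean speed in km/h, mean |acceleration| in m/s^2).
segments = [(12, 0.9), (65, 0.3), (15, 1.1), (70, 0.2), (10, 1.0), (68, 0.25)]
centers, labels = kmeans(segments, k=2)
```

Here the two clusters separate low-speed, stop-and-go segments from high-speed cruising segments. An EMS could then select a different parameter set, such as an ECMS equivalent factor, for each recognized pattern.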

In contrast, supervised learning is widely utilized in EMS for tasks such as energy distribution, condition recognition, and fault diagnosis. SVMs, known for their strong classification capabilities on small, high-dimensional datasets, are frequently adopted for pattern recognition and anomaly detection. Zhuang et al. combined SVM with optimal reference points generated by DP to extract mode-switching information and enhance real-time control strategies, thereby improving system efficiency [68]. Ji et al. implemented a one-class SVM model for fault detection, demonstrating high accuracy in real vehicle validations [94]. As shown in Figure 17, Shi et al. integrated an SVM with PSO for driving condition recognition and ECMS parameter tuning, significantly extending battery life [66]. Other studies have employed SVMs to construct multi-class diagnostic systems or to achieve dynamic parameter adjustment, thereby realizing both a high identification accuracy and improved fuel savings [66].

Figure 17.

Flowchart of the PSO–SVM algorithm.

Tree-based learning methods such as decision trees and their ensemble extension, Random Forests, have also been used to build intelligent controllers. Wang et al. incorporated expert knowledge into a decision tree to initialize a neural network, thus enhancing the energy management performance [67]. Lu et al. applied Random Forests for input feature selection and trained controllers using data generated by DP, achieving better energy efficiency compared to conventional methods [95].

ANNs play a critical role in EMS as well. As illustrated in Figure 18, Millo et al. built a digital twin model and trained a bidirectional long short-term memory (Bi-LSTM) network with DP results to replicate the optimal control strategy, resulting in approximately a 4–5% improvement in fuel economy [96]. Xie et al. proposed a control method integrating PMP and ANN, taking battery aging into account to strike a balance between energy efficiency and battery life [97]. In recent years, hybrid approaches combining ensemble learning and ANN have gained attention. For example, Lu et al. used Random Forests for PHEV modeling and subsequently developed an ANN-based controller, significantly reducing the total system energy consumption [95].

Figure 18.

Flowchart of supervised learning algorithm trained by DP.

In addition, Bo et al. [98] introduced an optimization-based power-following Energy Management Strategy for hydrogen fuel cell vehicles, aiming to optimize the power distribution and reduce the hydrogen consumption. This strategy improved fuel efficiency by ensuring the fuel cell operated in its optimal efficiency range, and the optimized strategy resulted in a reduction in hydrogen consumption by 8.8% over a 100 km driving distance.

In summary, supervised and unsupervised learning methods each demonstrate strengths in HEV energy management. Supervised learning, with its reliance on labeled data, excels in accurate control and fault detection, whereas unsupervised learning enhances adaptability through working condition exploration. The integration of both paradigms has been shown to outperform traditional control strategies under various practical conditions. Coupled with classical optimization techniques such as DP, PMP, and GA, these intelligent methods offer robust technical support for the intelligent development of EMSs by balancing real-time control and global optimality [96].

4.3. Performance Evaluation of Reinforcement Learning Strategies

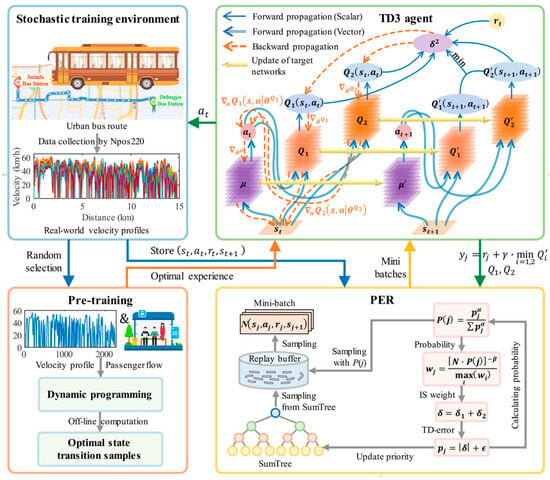

The application of reinforcement learning (RL) in HEV energy management has seen continuous advancement, covering key areas such as algorithm optimization, multi-objective policy integration, hierarchical control design, and adaptive capabilities under complex driving conditions. Overall, RL-based strategies have demonstrated significant performance improvements, with algorithmic refinement serving as a critical breakthrough point. For example, the Twin Delayed Deep Deterministic Policy Gradient (TD3) algorithm has garnered considerable attention due to its high stability and precision. As illustrated in Figure 19, Huang and He incorporated a prioritized experience replay mechanism into the TD3 framework to develop a data-driven Energy Management Strategy, which improved the economic performance of fuel cell buses by 8.03%, achieving 97.15% of the performance benchmarked by DP [99]. Zhang et al. further extended TD3 to manage thermal systems in multi-mode PHEVs, integrating traffic and topographic data to achieve a 93.7% fuel efficiency rate and up to 9.6% energy savings [100]. Simultaneously, other RL variants such as Deep Q-Learning (DQL) and Actor–Critic algorithms have shown strong performance in high-dimensional state spaces: Wu et al. achieved only a 2.76% cost deviation from DP with the Actor–Critic approach, alongside an 88.7% reduction in computation time [101]. Some studies have introduced integrated mechanisms such as Dyna-H and end-to-end (E2E) learning to further enhance real-time response and computational efficiency [102].

Figure 19.

Block diagram of the EMS integrating the TD3 algorithm with a prioritized experience replay mechanism.

To quantitatively compare the performance of representative RL algorithms across different driving scenarios, Table 8 summarizes the fuel consumption results of Q-Learning, DQN, DDPG, SAC, and PPO under standard driving cycles such as FTP-75 and WVUSUB. These values were obtained using compact HEV or plug-in hybrid electric bus (PHEB) models on platforms like Simulink and CarSim. It is evident that deep RL methods like SAC and PPO significantly outperform classical algorithms in terms of fuel economy, with PPO (Clip) achieving the lowest fuel consumption (18.960 L/100 km) in the 4 × WVUSUB cycle, and SAC achieving 3.258 L/100 km under FTP-75.

Table 8.

Quantitative comparison of RL algorithms for HEV energy management under standard driving cycles [37,76,103].

In the case of FCHEVs, Tang et al. [104] proposed a degradation-adaptive Energy Management Strategy using DDPG. This strategy dynamically adjusts the power distribution between the fuel cell and battery based on real-time state-of-health (SOH) estimates of the fuel cell stack, significantly improving the system durability while reducing the energy consumption.

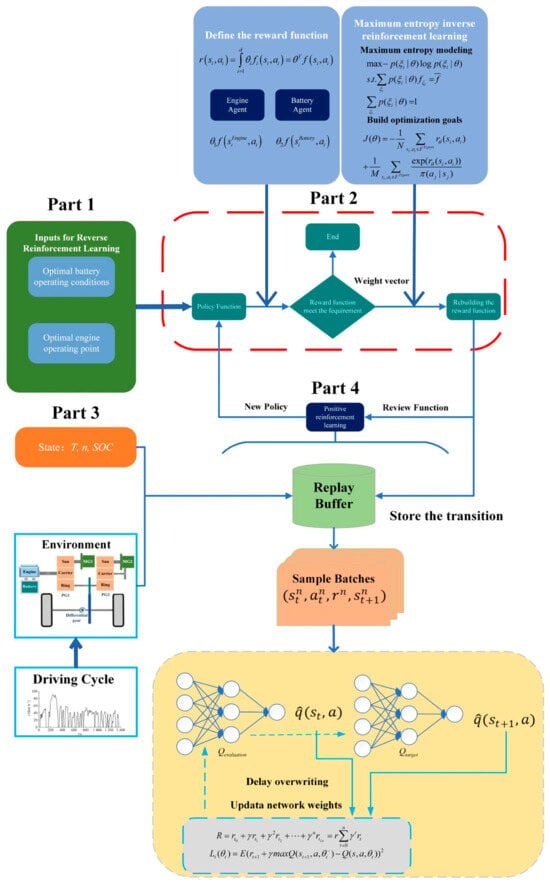

In the realm of multi-objective optimization and constraint handling, RL effectively balances fuel economy, battery longevity, and system safety by flexibly designing reward functions and incorporating dynamic models. Deng et al. combined TD3 with an online battery aging assessment model to achieve dual-objective optimization involving reduced hydrogen consumption and improved SOC control [105]. Inverse reinforcement learning (IRL), as shown in Figure 20, has also gained attention; for instance, Lv et al. extracted critical reward weights from expert trajectories to achieve fuel savings ranging from 5% to 10% [106]. Meanwhile, user comfort is increasingly integrated into the optimization objectives. Yavuz and Kivanc combined deep RL (DRL) with vehicle-to-everything (V2X) technology to manage the energy within vehicle fleets, reducing costs by 19.18% while meeting passenger requirements [107]. Similarly, Sun et al. [108] integrated deep RL with a behavior-aware EMS to optimize the power distribution, enhancing fuel efficiency by approximately 56% while significantly reducing battery degradation.

Figure 20.

Flowchart of the inverse reinforcement learning process.
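
The reward-design idea in the multi-objective discussion above can be made concrete with a weighted-sum reward. The sketch below is one common formulation rather than the reward used in any of the cited studies; the weights, SOC bounds, and penalty value are hypothetical and would normally require tuning (or, in IRL, would be inferred from expert trajectories).

```python
def step_reward(fuel_g, soc, delta_soh,
                w_fuel=1.0, w_soc=50.0, w_aging=1.0e4,
                soc_ref=0.6, soc_min=0.3, soc_max=0.9,
                violation_penalty=100.0):
    """Weighted-sum reward for one control step (all weights are assumptions).

    fuel_g    : fuel consumed during the step, in grams
    soc       : battery state of charge after the step, in [0, 1]
    delta_soh : battery state-of-health loss during the step (fraction)
    """
    cost = (w_fuel * fuel_g
            + w_soc * (soc - soc_ref) ** 2    # keep SOC near its reference
            + w_aging * delta_soh)            # penalize battery aging
    if not (soc_min <= soc <= soc_max):
        cost += violation_penalty             # soft constraint on the SOC window
    return -cost
```

Changing the weights moves the learned policy along the trade-off between fuel economy, SOC regulation, and battery longevity, which is why reward weighting is a central design decision in RL-based EMSs.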

To address complex driving scenarios and sparse reward challenges, hierarchical reinforcement learning (HRL) and multi-agent frameworks have emerged as research focal points. Qi et al. proposed a hierarchical DQL architecture that not only improved training efficiency but also reduced fuel consumption by 7.3–16.5% [109]. Yang et al. integrated Q-Learning with double Q-Learning to enable driver behavior prediction and regulation, enhancing system modularity and scalability [110].

In line with this, Lei et al. [111] proposed a hierarchical Energy Management Strategy based on HIO and MDDP–FSC, optimizing the power distribution and improving the hydrogen consumption efficiency by 95.20% for FCHEVs. This approach extended the lifespan of both the battery and fuel cell, while enhancing the overall system efficiency.

In terms of system integration and environmental adaptability, RL applications continue to expand. Pardhasaradhi et al. combined a deep reinforcement learning architecture (DRLA) with the global thermal optimal approach (GTOA) for a hybrid fuel cell–battery–supercapacitor system, significantly improving its dynamic performance [112]. Liu et al. introduced a reward function based on the remaining driving range, optimizing the SOC maintenance and control strategy, and validated its real-time performance and reliability through hardware-in-the-loop (HIL) testing [113].

Overall, RL has reached a near-mature level of control performance in HEV energy management, with fuel economy typically reaching 90–97% of the DP benchmark and computational efficiency gains ranging from 50% to 90%. Future research may focus on reducing the training data requirements, improving the cost estimation accuracy, and achieving vehicle-to-grid (V2G) integration. Lin et al. recommended combining distributed learning and transfer learning to enhance the generalization capability, and integrating physical modeling with multi-constraint frameworks to improve system safety [114,115]. Furthermore, the deep integration of RL with V2X communication and energy trading is expected to become a critical direction in the next generation of intelligent energy management systems.

4.4. Integrated Benefits of Hybrid Strategies

Research on Energy Management Strategies for HEVs is gradually evolving from single-algorithm approaches to hybrid strategies that integrate multiple methodologies. By combining AI technologies with conventional optimization techniques, researchers have achieved significant advancements in fuel efficiency, real-time control performance, and environmental adaptability.

In the fusion of reinforcement learning and optimization algorithms, Reference [116] proposed a DDPG–ANFIS network that integrates DDPG with an ANFIS, achieving a near-DP-level real-time control performance in real-world environments. This method outperformed standalone strategies in both the Worldwide Harmonized Light Vehicles Test Cycle (WLTC) and on-road experiments. Similarly, References [69,70] combined DQL with MPC, constructing an integrated framework that merges offline training and rolling optimization. This approach achieved a fuel economy comparable to DP while reducing the single-step computation time to 33.4 ms, indicating a high real-time capability. Further, References [102,117] incorporated the Dyna-H algorithm and an Actor–Critic architecture (as shown in Figure 21), which maintained the optimization effectiveness while significantly lowering the computational burden. Notably, the Actor–Critic-based method achieved training costs only 2.76% higher than DP, with a computation efficiency increase of up to 88.7%.

Figure 21.

Training diagram of the Actor–Critic framework.

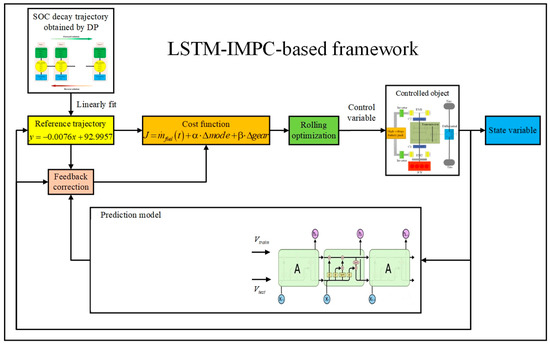

In the collaborative optimization of MPC and machine learning, Reference [118] embedded a long short-term memory (LSTM) network into an improved MPC framework (Figure 22), dynamically optimizing energy allocation and achieving an 18.71% improvement in fuel efficiency, approaching global optimality. References [119,120] employed convex optimization techniques such as the projected interior point method and the alternating direction method of multipliers (ADMM), which not only ensured computational efficiency but also outperformed DP in energy savings, demonstrating the potential of hybrid modeling in high-dimensional optimization problems.

Figure 22.

The overall control framework of LSTM–IMPC-based EMS.

The integration of fuzzy logic with intelligent optimization has also shown promising results in HEVs. Reference [121] proposed a Fuzzy Adaptive Equivalent Consumption Minimization Strategy (Fuzzy A-ECMS) that dynamically adjusts the equivalent factor, thereby improving both fuel economy and SOC stability under various standard driving cycles. Meanwhile, the fusion of data-driven techniques with simulation platforms has further extended the applicability of Energy Management Strategies. For instance, Reference [28] combined a TD3 algorithm with behavioral cloning regularization and simulation testing, achieving a 6.10% fuel saving rate in hardware-in-the-loop (HIL) experiments. Reference [122] employed a Gaussian Mixture Model (GMM)-based clustering of driving conditions to generate Stochastic Dynamic Programming (SDP) strategies, realizing the cooperative optimization of energy distribution efficiency and engine operation states.

To meet control demands under dynamic environments, the integration of reinforcement learning with Markov chains has emerged as a research hotspot. As shown in Figure 23, Reference [123] proposed a framework that combines online Markov chains with Speedy Q-Learning (SQL), allowing dynamic updates to state transition probabilities. This approach effectively accelerated policy convergence, enhanced fuel economy, and met real-time control requirements, showing a strong engineering adaptability in complex scenarios such as hybrid tracked vehicles.

Figure 23.

Flowchart of the control strategy based on SQL.

In addition, Tao et al. [124] proposed a hybrid strategy that combines Support Vector Machines (SVMs) and Artificial Neural Networks (ANNs) to optimize driving styles, achieving up to a 16.47% reduction in hydrogen consumption. This approach dynamically adapts the Energy Management Strategy based on the driving style, enhancing fuel efficiency under various driving conditions. Satheesh Kumar et al. [125] introduced a hybrid Energy Management Strategy by combining Finite Basis Physics-Informed Neural Networks (FBPINNs) with the Mountain Goat Optimizer (MGO). This method was highly effective in optimizing fuel economy, achieving a 95% improvement in efficiency by accurately predicting the fuel consumption and adjusting the Energy Management Strategies accordingly.

In conclusion, hybrid control strategies leverage the learning capability of AI and the theoretical strengths of traditional algorithms to achieve multi-objective optimization in HEV energy management. Future research may focus on the deep integration of reinforcement learning with deep models, while incorporating intelligent traffic information to improve the responsiveness of control strategies. In addition, optimization frameworks capable of handling multiple constraints should be developed, and real-world factors such as battery degradation should be embedded into control models to promote the widespread engineering application of hybrid strategies [70,102].

5. Limitations and Challenges of AI-Based Strategies

Balancing multiple conflicting objectives (fuel economy, emissions, battery health, and response time) inherently requires Pareto-efficient trade-offs. In practice, knowledge-driven controllers encode fixed trade-offs (e.g., rule- or fuzzy-based) to meet these goals, but they yield a single hand-crafted policy rather than a spectrum of Pareto-optimal solutions. Data-driven (supervised) models, likewise, learn one composite objective from data, so any multi-objective balance (e.g., including battery aging) still depends on manually weighted training targets. RL agents can, in principle, learn Pareto-efficient policies by reward design and have achieved near-optimal energy control in HEVs. However, as noted in [126], tuning multi-objective reward weights in rapidly changing driving scenarios is difficult, which typically limits RL to a narrow set of trade-offs. Hybrid EMS schemes may combine offline Pareto data (e.g., via DP) with online learning to cover more of the trade-off space, but this adds architectural complexity and, to date, unified Pareto-optimization remains largely unaddressed. Table 9 summarizes the relative capabilities of these AI strategies in handling multi-objective EMS tasks [127].

Table 9.

Comparative assessment of Pareto trade-off capabilities in AI-based EMSs [126,127,128,129].
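
To clarify what a "spectrum of Pareto-optimal solutions" means in practice, the sketch below filters a set of candidate policies down to its non-dominated subset. Each candidate is represented by a tuple of objective values to be minimized; the two objectives and the numbers used here (fuel consumption and battery capacity loss) are hypothetical.

```python
def dominates(a, b):
    """True if a is no worse than b in every objective and strictly better in one."""
    return (all(x <= y for x, y in zip(a, b))
            and any(x < y for x, y in zip(a, b)))

def pareto_front(candidates):
    """Return the candidates that no other candidate dominates (minimization)."""
    return [c for c in candidates
            if not any(dominates(other, c) for other in candidates)]

# Hypothetical policies: (fuel in L/100 km, battery capacity loss in %).
policies = [(4.0, 0.02), (3.5, 0.05), (4.5, 0.03), (3.5, 0.06)]
front = pareto_front(policies)
```

A single hand-tuned controller or a single reward weighting corresponds to one point on this front, whereas a unified Pareto-optimization approach would aim to recover the whole set.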

5.1. Adaptability Issues of Knowledge-Driven Strategies

As explained earlier, rule-based energy management approaches for HEVs are mainly based on expert experience and preset rules to perform decision-making and control. They are easy to implement, have a low computational cost, and generally satisfy real-time control requirements. Additionally, their rule-based reasoning is transparent, with a high interpretability and engineering robustness [130,131]. Nonetheless, they have some major constraints as well.

Fixed rules are usually tuned for particular vehicle powertrains and specific sets of operating conditions, and they lack dynamic adaptation capability. Their ability to generalize to realistic and varied driving conditions is therefore low, which makes them suboptimal under unseen conditions [132]. For example, an energy allocation strategy can be tuned on standard driving cycles yet perform poorly when it encounters new driving patterns or adverse conditions [27].

In addition, expertise-based approaches rely heavily on human expertise, which limits both how far they can be optimized and how close they can come to global optimality. Compared with optimization-based approaches, their control performance is suboptimal, so they do not fully exploit the energy-saving potential of hybrid powertrains [133]. Furthermore, because different developers adopt different rule bases and tuning procedures, standardized evaluation criteria and benchmarking procedures are missing, which hinders objective comparison between expertise-based approaches and, to some degree, slows the progress of the field.

In short, the key tasks facing knowledge-based energy management approaches are to improve their adaptability and generalizability, to unlock their optimization potential, and to build a unified evaluation framework. These remain open research problems.

5.2. Data Dependency and Explainability Limitations of Data-Driven Strategies

Data-driven energy management policies are based on either past vehicle operation data or offline optimization solutions and can employ machine learning methods to automatically learn control policies. With better flexibility than rule-based methods, data-driven policies can detect more intricate nonlinear relationships without human interference, thus contributing to some level of improved fuel efficiency. Nevertheless, the implementation of such policies in real-world applications is still confronted with several challenges and technical hurdles.

First, the need for high-quality data creates inherent data collection and annotation challenges. Supervised learning demands large quantities of training data covering a wide range of operating conditions, as well as trustworthy labels, which are normally extracted from time-consuming offline optimization runs. Without sufficient diversity and correct labeling, models tend to overfit to specific conditions and generalize poorly to unseen ones [134,135]. Although unsupervised learning reduces the need for labeled data, it still relies on a rich and diverse data distribution to capture common driving behaviors. In rare or boundary situations, data-driven approaches usually fail to learn adequate policies, leading to degraded control performance in long-tail conditions [136].

Second, real-time execution is still a challenge. Even though trained models with deep or complex architectures can run fairly efficiently on present-day embedded hardware accelerators, they may still fall short of the millisecond-level execution times required by onboard controllers [137]. Moreover, data-driven models are usually “black boxes” with low interpretability, so the reasons behind their decisions are hard to understand [138]. This lack of transparency raises concerns in safety-critical automotive applications and makes debugging and validation cumbersome. In terms of algorithmic stability, data-driven approaches can be unstable both in training and in operation. In training, small random perturbations of the initial weights can lead to dramatically different learned policies. In deployment, inputs that fall outside the training distribution can produce anomalous or untrustworthy outputs [139]. Lastly, the lack of standardized datasets and widely accepted evaluation metrics across studies makes it hard to benchmark data-driven approaches and compare their performance objectively. Without this standardization, clear comparisons are difficult to draw, which slows progress within the domain.
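The out-of-distribution problem described above can be illustrated with a minimal Python sketch: a simple per-feature z-score check decides whether the learned model's output is used or whether the controller falls back to a conservative command. The feature choice (vehicle speed and power demand), the threshold `z_max`, and the stand-in `learned_model` and `rule_fallback` functions are all assumptions made for illustration. Practical detectors are typically more elaborate, and this check does not by itself guarantee safe behavior.

```python
import statistics
from typing import Callable, List, Sequence

Features = Sequence[float]


class OODGuard:
    """Flags inputs whose per-feature z-score exceeds a threshold."""

    def __init__(self, train_rows: Sequence[Features], z_max: float = 3.0) -> None:
        columns = list(zip(*train_rows))
        self.means: List[float] = [statistics.fmean(c) for c in columns]
        # Replace a zero spread by a tiny value so the division is always defined.
        self.stds: List[float] = [statistics.pstdev(c) or 1e-9 for c in columns]
        self.z_max = z_max

    def is_in_distribution(self, x: Features) -> bool:
        return all(
            abs(v - m) / s <= self.z_max
            for v, m, s in zip(x, self.means, self.stds)
        )


def guarded_command(
    x: Features,
    model: Callable[[Features], float],
    fallback: Callable[[Features], float],
    guard: OODGuard,
) -> float:
    """Use the learned model only when the input resembles the training data."""
    return model(x) if guard.is_in_distribution(x) else fallback(x)


def learned_model(x: Features) -> float:
    return 0.5  # stand-in for a trained network (engine share of the demand)


def rule_fallback(x: Features) -> float:
    return 1.0  # stand-in for a conservative rule-based command


# Hypothetical training coverage: speed 0..120 km/h, power demand -20..60 kW.
train_rows = [(float(v), float(p)) for v in range(0, 121, 10) for p in range(-20, 61, 10)]
guard = OODGuard(train_rows)

familiar = guarded_command((80.0, 30.0), learned_model, rule_fallback, guard)
unfamiliar = guarded_command((250.0, 30.0), learned_model, rule_fallback, guard)
```

Here the input at 80 km/h is handled by the learned model, while the implausible 250 km/h input is routed to the fallback.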

Overall, the critical challenges are to strengthen the robustness and generalization of data-driven energy management approaches, reduce their reliance on large annotated datasets, increase interpretability, and develop standardized evaluation protocols. As connected and autonomous vehicles make multi-source data increasingly available, a primary emerging challenge is how to leverage this data effectively while maintaining confidence in the model.

5.3. Generalization and Safety Constraints in Reinforcement Learning Strategies

Reinforcement learning (RL)-based policies, which learn energy management strategies automatically through repeated agent-environment interaction and trial and error, have been a hot research topic in recent years [53]. In contrast to supervised learning, RL does not need optimal control sequences supplied as examples. Instead, it uses a reward function to guide strategy optimization, so the agent can explore near-optimal solutions in large decision spaces without human direction [140]. This gives RL policies the potential to outperform human-designed rules and approach optimal control performance. For instance, deep reinforcement learning algorithms have been used for energy allocation in parallel hybrid vehicles, achieving better fuel-saving performance than traditional rule-based policies in simulations [141]. Nevertheless, implementing RL policies in real automotive applications still faces various challenges.

First, sample efficiency and safe exploration are major concerns: large amounts of data are usually needed to train RL agents. Trial and error in real vehicles is slow and risky, so offline training often uses high-fidelity simulators (e.g., ADVISOR and AVL Cruise) [142]. However, a significant experimental validation gap exists, as most studies rely solely on these simulation platforms. Simulations cannot fully capture the complex dynamics and uncertainties of real conditions, and the discrepancy between the virtual and real worlds can cause dramatic performance degradation when policies that perform well in simulation are transferred to real vehicles [143]. The lack of reported validation on real-vehicle testing platforms remains a critical barrier to deployment. RL policies can also destabilize or fail in long-tail circumstances (e.g., unusual weather or infrequent driving styles) because such cases are underrepresented in training [144]. Generalization, robustness, and experimental validation are, therefore, still significant challenges for reinforcement learning in energy management. Addressing them requires more varied training scenarios, methods such as domain randomization, and, crucially, real-world testing [145].
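Domain randomization, mentioned above as one way to narrow the gap between simulation and reality, can be sketched in a few lines of Python: before each training episode, the simulator's vehicle parameters are perturbed around their nominal values so that the agent does not overfit to a single model. The parameter set, the nominal values, and the ±10% spread are hypothetical, and the sketch only generates the parameter variants. How they are passed to a particular simulator depends on that tool and is not shown.

```python
import random
from dataclasses import astuple, dataclass
from typing import List


@dataclass(frozen=True)
class VehicleParams:
    mass_kg: float
    drag_coefficient: float
    rolling_resistance: float
    battery_capacity_kwh: float


# Hypothetical nominal values for illustration only.
NOMINAL = VehicleParams(1500.0, 0.30, 0.010, 1.5)


def randomize(nominal: VehicleParams, rng: random.Random, spread: float = 0.10) -> VehicleParams:
    """Scale each parameter by an independent factor in [1 - spread, 1 + spread]."""
    scaled = [v * rng.uniform(1.0 - spread, 1.0 + spread) for v in astuple(nominal)]
    return VehicleParams(*scaled)


def sample_training_variants(n_episodes: int, seed: int = 0) -> List[VehicleParams]:
    """Draw one randomized parameter set per training episode (reproducible via seed)."""
    rng = random.Random(seed)
    return [randomize(NOMINAL, rng) for _ in range(n_episodes)]


variants = sample_training_variants(n_episodes=5)
```

Each element of `variants` would configure the simulated vehicle for one episode. Widening `spread` trades training difficulty for robustness to model mismatch.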

Second, instability and convergence difficulties occur: the training process of deep reinforcement learning agents is sensitive to hyperparameters and initial conditions and can end in non-convergence or suboptimal performance. Algorithms and network structures need careful tuning to overcome such difficulties [146]. Unlike static strategies, the policies of RL agents are not fixed rules but are updated iteratively, which makes it harder to guarantee stable performance [147].

Third, real-time performance and interpretability remain concerns. Although online decision-making with a learned RL policy typically involves only one forward computation, decision latency and computational resource requirements must still be managed when the state space is large and sensory data is plentiful in complex environments [148]. Moreover, RL policies are often based on neural networks, which makes their decision process hard to interpret, so they are classic “black-box” models. This creates new safety and interpretability concerns in automotive control: engineering practice must guarantee that safety constraints are enforced on the control decisions made by learned policies; otherwise, unacceptable control behaviors may occur [149]. Existing research seeks to embed safety considerations in the reward function and to introduce rule-based constraints during training, yet there is still no mature solution that ensures learned policies comply with safety and legal-ethical specifications in every circumstance [150]. Demonstrating safety on real-vehicle platforms under diverse operating conditions is essential. Lastly, differences among studies in simulation setups, vehicle models, rigor of experimental validation (or lack thereof), and operating conditions, together with the wide range of evaluation metrics (e.g., fuel consumption, emissions, and battery degradation), mean that there are no common benchmarks for comparing RL policies [151]. This benchmark gap is exacerbated by the scarcity of publicly available real-vehicle test results. In general, leveraging RL for energy management in HEVs still requires overcoming several challenges, including training efficiency, policy generalization, safety, experimental validation, and validation standards [152].

Recently proposed research directions for enhancing the performance and real-world applicability of RL strategies include training on mixed real-vehicle and simulation data, creating reliable safety-constrained algorithms, developing and using dedicated real-vehicle testing platforms, and exploiting predictive data from connected vehicles. These directions also point to new challenges in applying RL-based energy management in the age of AI [153].
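One simple form that rule-based safety constraints on a learned policy can take is a safety layer that projects the action requested by the RL agent onto an admissible range before it reaches the actuators. The Python sketch below illustrates the idea for a battery power command. The limit values and the sign convention (positive for discharge, negative for charge) are assumptions for illustration. As noted above, such a layer covers only the constraints that are explicitly encoded and does not amount to a complete safety guarantee.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BatteryLimits:
    """Hypothetical limits; real values come from the battery specification."""
    soc_min: float = 0.30
    soc_max: float = 0.80
    max_discharge_kw: float = 30.0
    max_charge_kw: float = 20.0


def safe_battery_power(
    requested_kw: float, soc: float, limits: BatteryLimits = BatteryLimits()
) -> float:
    """Clip a requested battery power to the range allowed at the current SOC.

    Positive values discharge the battery, negative values charge it.
    """
    # Charging is blocked once the SOC has reached its upper bound.
    lower = -limits.max_charge_kw if soc < limits.soc_max else 0.0
    # Discharging is blocked once the SOC has reached its lower bound.
    upper = limits.max_discharge_kw if soc > limits.soc_min else 0.0
    return min(max(requested_kw, lower), upper)


# The agent's raw action is filtered before being applied.
clipped = safe_battery_power(requested_kw=50.0, soc=0.50)       # above the power limit
blocked = safe_battery_power(requested_kw=10.0, soc=0.25)       # SOC too low to discharge
unchanged = safe_battery_power(requested_kw=10.0, soc=0.50)     # already admissible
```

Whatever power the filter removes from the battery command would have to be supplied by the engine or friction brakes. That reallocation is outside the scope of this sketch.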

5.4. Architectural Complexity and Validation Challenges of Hybrid Strategies

Hybrid energy management approaches integrate intelligent algorithms with classical rule-based control or optimization methods, combining the safety of rule-based methods with the superior performance of optimization methods to maximize the overall operating efficiency of HEVs [5,154]. Mainstream schemes fall into two groups. The first applies rule-based methods to discrete control operations such as engine start-stop and optimizes continuous power allocation with reinforcement learning or neural networks, striking a trade-off between safety and flexibility. The second uses algorithms such as DP and ECMS to generate large amounts of optimal data offline for training, which greatly speeds up the online response while approximating optimal control performance [155]. In addition, hierarchical control structures are widely adopted, in which the upper level identifies the driving conditions and selects the corresponding sub-strategy, and the lower level executes it in real time [156].
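The first scheme described above, with rules for the discrete start-stop decision and a learned model for the continuous power split, can be sketched as follows. This is a minimal illustration under stated assumptions: the hysteresis thresholds are hypothetical, and `split_policy` is a placeholder for a trained RL policy or neural network that returns the engine's share of the power demand.

```python
from typing import Callable, Tuple


def engine_should_run(
    engine_on: bool,
    demand_kw: float,
    soc: float,
    on_kw: float = 15.0,    # hypothetical start threshold
    off_kw: float = 8.0,    # hypothetical stop threshold (lower, for hysteresis)
    soc_min: float = 0.30,
) -> bool:
    """Rule-based start-stop decision with hysteresis to limit frequent switching."""
    if demand_kw <= 0.0:
        return False                 # braking or coasting: engine off
    if soc < soc_min:
        return True                  # battery low: engine must run
    return demand_kw > (off_kw if engine_on else on_kw)


def hybrid_step(
    engine_on: bool,
    demand_kw: float,
    soc: float,
    split_policy: Callable[[float, float], float],
) -> Tuple[bool, float, float]:
    """Return (engine_on, engine_kw, motor_kw) for one control step."""
    engine_on = engine_should_run(engine_on, demand_kw, soc)
    if not engine_on:
        return engine_on, 0.0, demand_kw
    # The learned share is clamped to [0, 1] before use.
    share = min(max(split_policy(demand_kw, soc), 0.0), 1.0)
    engine_kw = share * demand_kw
    return engine_on, engine_kw, demand_kw - engine_kw


def placeholder_policy(demand_kw: float, soc: float) -> float:
    return 0.75  # stand-in for a trained model's output


light_load = hybrid_step(False, 10.0, 0.60, placeholder_policy)   # engine stays off
heavy_load = hybrid_step(False, 20.0, 0.60, placeholder_policy)   # engine starts
hysteresis = hybrid_step(True, 10.0, 0.60, placeholder_policy)    # engine stays on
```

The same 10 kW demand yields different decisions depending on whether the engine is already running, which is the hysteresis behavior that reduces switching between the rule-based and learned modules.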

Although hybrid approaches are sophisticated, they face several technical difficulties in practice. The first is architectural complexity: the functional interface of each submodule must be delineated correctly to avoid energy loss or system instability caused by conflicting controls and frequent switching. Designing the mechanism by which strategies are fused is therefore key to success [157]. Second, strategy fusion increases the number of parameters, requiring both rule threshold tuning and frequent updates of the learning models, which greatly increases development and testing costs [72]. In terms of real-time performance, integrating online optimization algorithms (such as MPC) or deep-learning-based algorithms requires algorithm compression or upgraded computational resources to ensure timely control [158]. Interpretability is also a crucial issue. Some solutions introduce expert rules into the learning module to constrain output values and increase strategy transparency [159], but as soon as data-driven or RL algorithms are part of the overall architecture, their “black-box” nature persists. As a result, the validation of these strategies with respect to safety and stability remains insufficient. Lastly, unified evaluation metrics are still missing, and most hybrid approaches are shown to be effective only under particular test conditions. Their overall ability to jointly optimize fuel savings, emissions, and battery life as multiple objectives deserves further research [114].

Future research needs to concentrate on developing a hybrid control framework with a unified architecture that can dynamically select and intelligently integrate various methods to handle varied and complex operating conditions. At the same time, control structures with good interpretability, safety assurance mechanisms, and strong engineering implementability need to be developed and evaluated on combined simulation and real-vehicle experimental setups to assess their generalization capabilities in depth. Although hybrid approaches are still at an early stage of development, the direction of combining the stability of conventional control with the optimization capabilities of AI is gradually emerging. With advances in algorithms and computing capabilities, such approaches are expected to play a significant role in the evolution of next-generation HEV energy management systems [109].

5.5. Future Research Directions and Development Trends of Different Strategies

For knowledge-driven strategies, the academic community has proposed various methods for improving adaptability and overcoming rigid rule structures. These include adaptive rule tuning, multi-mode switching logic based on driving condition recognition, and the integration of meta-rule frameworks [132]. Furthermore, fuzzy logic systems have been enhanced using intelligent optimization techniques to dynamically adjust control parameters. Future research should emphasize the development of self-evolving control rules and lightweight expert-system fusion schemes to accommodate system degradation and variable user behavior over time [116].
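Multi-mode switching based on driving condition recognition can be illustrated with a minimal Python sketch: a window of recent vehicle speeds is classified into a driving mode, and the mode selects the rule parameters to apply. The classification features (mean speed and share of standstill samples), the mode boundaries, and the per-mode engine-on thresholds are all hypothetical. Published recognizers typically use richer features or learned classifiers.

```python
from statistics import fmean
from typing import Dict, Sequence

# Hypothetical engine-on power thresholds (kW) per driving mode.
ENGINE_ON_THRESHOLD_KW: Dict[str, float] = {
    "urban": 20.0,      # favor electric drive in stop-and-go traffic
    "suburban": 15.0,
    "highway": 10.0,    # engine runs efficiently at sustained load
}


def recognize_mode(speeds_kmh: Sequence[float]) -> str:
    """Classify a window of recent speeds into a coarse driving mode."""
    mean_speed = fmean(speeds_kmh)
    stop_ratio = sum(v < 1.0 for v in speeds_kmh) / len(speeds_kmh)
    if mean_speed < 30.0 or stop_ratio > 0.2:
        return "urban"
    if mean_speed < 70.0:
        return "suburban"
    return "highway"


def engine_on_threshold(speeds_kmh: Sequence[float]) -> float:
    """Select the rule parameter that matches the recognized driving mode."""
    return ENGINE_ON_THRESHOLD_KW[recognize_mode(speeds_kmh)]


urban_threshold = engine_on_threshold([0.0, 0.0, 10.0, 20.0, 15.0, 0.0])
highway_threshold = engine_on_threshold([100.0, 110.0, 95.0])
```

The rule structure itself stays fixed and transparent, and only its calibration changes with the recognized mode. This is one way such schemes retain interpretability while gaining some adaptability.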

In data-driven strategies, efforts have been made to improve generalization and robustness through the use of large-scale, diverse datasets and advanced feature extraction methods. Transfer learning and domain adaptation are increasingly adopted to extend the applicability across different vehicle types and driving conditions. In addition, hybrid models that embed physical constraints or expert knowledge into machine learning architectures have been introduced to enhance reliability and interpretability. Future research should focus on reducing the dependency on labeled data via self-supervised learning, improving model transparency, and ensuring long-term stability through continuous online adaptation [53].