Abstract

This research presents an advanced methodology for smart management of energy losses in electrical distribution networks by leveraging deep neural network architectures. The primary objective is to enhance the accuracy of short-term forecasting for nodal loads and corresponding energy losses, enabling more efficient and intelligent grid operation. Two predictive approaches were explored: the first involves separate forecasting of nodal loads followed by loss calculations, while the second directly estimates network-wide energy losses. For model implementation, Long Short-Term Memory (LSTM) networks and the enhanced Residual Network (eResNet) architecture, developed at the Institute of Electrodynamics of the National Academy of Sciences of Ukraine, were utilized. The models were validated using retrospective data from a Ukrainian Distribution System Operator (DSO) covering the period from 2017 to 2019 with 30 min sampling intervals. An adapted CIGRE benchmark medium-voltage network was employed to simulate real-world conditions. Given the presence of anomalies and missing values in the operational data, a two-stage preprocessing algorithm incorporating DBSCAN clustering was applied for data cleansing and imputation. The results indicate a Mean Absolute Percentage Error (MAPE) of just 3.29% for nodal load forecasts, which significantly outperforms conventional methods. These findings affirm the feasibility of integrating such models into Smart Grid infrastructures to improve decision-making, minimize operational losses, and reduce the costs associated with energy loss compensation. This study provides a practical framework for data-driven energy loss management, emphasizing the growing role of artificial intelligence in modern power systems.

1. Introduction

For the Ukrainian electricity market to function effectively, market participants, primarily Distribution System Operators (DSOs) and the Transmission System Operator (TSO), are obligated to purchase electricity to compensate for technical and non-technical losses within the power grid []. These purchases are necessary to maintain the reliability and balance of the electrical system, ensuring uninterrupted supply to end-users. The volume and cost of such energy losses are substantial, particularly in aging or overloaded infrastructure, which increases the financial burden on network operators []. Consequently, the expenses incurred from procuring electricity to cover these losses are embedded in the tariff structures regulated by national authorities []. As a result, these costs directly affect the final electricity prices paid by consumers, making energy loss management a key component of tariff policy and economic efficiency in the sector [,].

According to the latest publicly available reports of the energy regulator of Ukraine, covering three quarters of 2021, the average total losses in the distribution network were 10.02%. For some distribution network operators, this figure reaches 20%. This is significantly higher than in most European countries, where typical loss levels range between 4% and 6% [,]. Such high losses indicate inefficiencies in network operation, aging infrastructure, and insufficient implementation of modern monitoring and control systems []. According to market rules [], Distribution and Transmission System Operators purchase electricity to cover their own losses on the wholesale electricity market. The earlier the electricity is purchased before the delivery date, the cheaper it is; if the energy actually consumed does not match the energy purchased, an imbalance occurs, and imbalance energy is the most expensive []. According to the analysis conducted in [], the cost of forecast error for the New England power system market over 2004–2014 was estimated at an average of USD 300 thousand per year for each 1% increase in short-term forecast error, for a company with a peak capacity of 1000 MW. For European markets, the annual cost of forecast error in 2017 reached EUR 890 thousand on OTE (Czech Republic), EUR 340 thousand on EPEXSPOT (Western Europe), and EUR 400 thousand on NordPool (Northern Europe and the Baltic States). Calculations conducted for the Ukrainian market in 2019 showed that the cost of loss forecast error was approximately 8 EUR/MWh []; it is now much higher, because the day-ahead market price has increased fourfold and the UAH has devalued against the EUR by a factor of 1.7. During winter peak load periods, when energy consumption surges and grid stress intensifies, improving the accuracy of energy loss estimation and forecasting becomes especially critical for ensuring system reliability and economic efficiency []. Therefore, increasing the accuracy of loss forecasts reduces operators' costs by reducing imbalances, which, in turn, can reduce the cost of their services to end-users.

High levels of electrical energy losses in distribution networks not only lead to economic inefficiencies but also contribute significantly to environmental degradation []. In systems with outdated infrastructure, such as in parts of Ukraine, these losses require additional electricity to be generated to compensate for them, often by fossil fuel-based power plants []. This excess generation increases greenhouse gas emissions, accelerates resource depletion, and elevates the overall carbon footprint of the energy sector. Moreover, the inability to accurately forecast and manage losses may hinder the integration of renewable energy sources: loss compensation competes for the spinning (“rotating”) reserves of thermal power plants, which are needed to balance the stochasticity of renewable generation, and this prolongs dependency on non-renewable and environmentally harmful energy systems []. Addressing these environmental challenges through the application of smart forecasting methods, such as deep neural networks, supports a more sustainable energy transition by minimizing waste, improving grid efficiency, and enabling smarter, cleaner energy management [,].

Rapid changes in the topology of electrical networks can significantly impact the accuracy of loss forecasts, as these changes introduce more variability and complexity into the system [,]. When network topology undergoes sudden shifts, it disrupts the predictable patterns of energy flow, making it difficult to forecast energy losses as a single time series []. This increased unpredictability leads to larger forecast errors, as traditional models may not account for such dynamic alterations in network structure []. As a result, the efficiency of network management is compromised, since operators may not have accurate information to make informed decisions regarding load balancing, energy procurement, or loss mitigation strategies [,]. To address this challenge, more advanced forecasting techniques that can adapt to these topological changes are needed to enhance the reliability and effectiveness of network management practices [,].

Improving the accuracy of short-term loss forecasting, the goal of this work, involves solving the following tasks:

- The detection and replacement of anomalous values in data;

- The development of short-term forecasting models for aggregated values of electrical energy losses in the electrical network based on retrospective data, using deep learning artificial neural networks, in order to determine losses more accurately than existing methods without performing a loss calculation;

- The development of short-term forecasting models for node loads based on deep learning artificial neural networks, in order to increase the accuracy of the calculated losses in the electrical network by using forecasted node load values in the calculation. With this approach, changes in network topology can be taken into account at the loss calculation stage.

Considering the necessity of accurate load forecasting, the idea of using advanced forecasting methods to determine electrical energy losses in electrical networks has gained increasing attention []. Forecasting energy losses is crucial for efficient grid management, as it allows operators to anticipate and mitigate potential inefficiencies. In this regard, artificial neural networks (ANNs) have emerged as effective forecasting methods, offering significant advantages over traditional models []. These networks can identify complex patterns and relationships in large datasets, making them well-suited for predicting both load demands and associated energy losses []. As machine learning models, ANNs can continuously improve their accuracy through training with historical data, adapting to changing grid conditions over time. Given their ability to handle large volumes of data and predict nonlinear behaviors, artificial neural networks have become recognized as highly effective prediction models for forecasting electrical energy losses in modern distribution networks [,].

It is crucial that reliable load forecasting results are available at the nodes of the calculation scheme for various forecasting horizons, as they play a significant role in enhancing the efficiency of electricity network management. In addition to reducing electricity purchase costs, accurate forecasting enables better decision-making regarding load distribution, minimizing operational inefficiencies, and optimizing the overall performance of the network []. This becomes especially relevant in the context of modernizing the Ukrainian electrical grid, where the implementation of Smart Grid systems can further improve the management of network modes and ensure greater stability and reliability in the face of fluctuating demand and supply conditions [,].

Classical forecasting methods include autoregressive integrated moving average (ARIMA) time series methods, as well as Holt–Winters exponential smoothing methods. Methods based on artificial neural networks are considered modern forecasting methods and are more popular due to their accuracy, speed, and efficient learning. In the article [], a combined neural network based on a multilayer perceptron and an autoregression algorithm was constructed for single-factor forecasting, with the autoregression algorithm used for data preprocessing. The validation was carried out on data from the PJM power system in the USA, covering 96 nodes from 2014 to 2015 at hourly resolution. Another example of a combined neural network is described in [], consisting of multiple neural networks and a fuzzy logic module (PROTREN) for trend detection. The data for the study included information from the isolated power system of the island of Crete, as well as air temperature data.

For single factor forecasting of node loads, the Support Vector Machine (SVM) method can be employed, as described in [], where its application during the management of the energy system in the Shandong province of China is discussed. The model takes into account the relationship between the total active load of the system and the node, as well as the relationship between the active and reactive power of the node, and the connection among the average power values of all nodes. To assess the effectiveness, the Support Vector Machine method was compared with a nonlinear autoregressive neural network [] for forecasting the active load of the node and an adaptive Kalman filter for predicting node power coefficients.

Studies on energy management in electrical distribution networks have increasingly explored the use of machine learning techniques, particularly deep neural networks (DNNs), for load forecasting and fault detection. Research demonstrates the effectiveness of deep learning models, such as Long Short-Term Memory (LSTM) and convolutional neural networks (CNN), in capturing complex temporal dependencies in power consumption data [,]. These works primarily focus on predictive accuracy without addressing real-time control or energy loss management []. Other approaches, including optimization-based and rule-based systems, have been employed to reduce energy losses, but often lack adaptability and scalability when dealing with dynamic network conditions []. Furthermore, most existing studies treat load forecasting and loss mitigation as separate tasks, thereby missing the opportunity for synergistic improvement through integrated models [,,].

2. Methodology—Forecasting of Nodal Load

The methodology for forecasting nodal load in distribution networks using deep neural networks (DNNs) combines advanced machine learning techniques with domain-specific data to improve accuracy in energy loss prediction []. This study employs Long Short-Term Memory (LSTM) networks and the recently developed eResNet architecture, specifically designed for handling the complex dynamics of electrical networks [,,]. LSTM networks, known for their ability to capture temporal dependencies, are used to predict nodal load over short-term horizons from the historical load data of a Ukrainian distribution network. The eResNet architecture, a specialized model for energy systems, is used to forecast the aggregated loss time series [,]. The models are trained on historical DSO data covering the years 2017–2019 at 30 min intervals, which matches the granularity of grid operation, while the adapted CIGRE medium-voltage network serves as the test system for loss calculation [].

Data preparation follows a two-stage approach to handle missing or anomalous values within the dataset []. The first stage applies the DBSCAN clustering method to individual time slices to detect gross anomalies, which are replaced by linear interpolation; the second stage applies DBSCAN to the residual component of the decomposed time series to detect the remaining anomalies. After preprocessing, the trained models are tested and validated against real-world data provided by the Ukrainian Distribution System Operator, with an emphasis on minimizing forecasting errors [,,]. The methodology also explores the integration of these forecasting models within Smart Grid systems, focusing on how real-time, accurate load predictions can enable more efficient energy loss management and decision-making in energy procurement and energy saving [,]. This comprehensive approach not only enhances forecasting accuracy but also contributes to the development of smarter, more sustainable energy networks in Ukraine and beyond [,,].

To reduce the cost of energy losses, the utilization of modern methods for calculating and forecasting electrical energy losses using deep learning artificial neural networks is proposed. Various architectures of deep learning neural networks were employed to assess the effectiveness of forecasting electrical energy losses. Two approaches to forecasting losses were developed to test the accuracy of the prediction models:

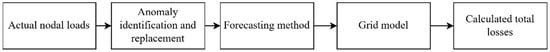

- Forecasting the load at each node simultaneously, followed by data consolidation and loss calculation based on the forecasted data (Figure 1);

Figure 1. Block diagram of the algorithm for calculating losses based on forecasted values.

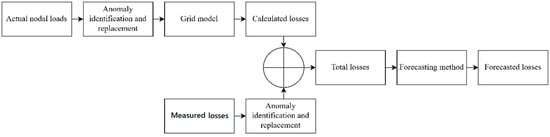

- Directly calculating and then forecasting the total electrical energy losses (Figure 2).

Figure 2. Block diagram of the algorithm for the calculation and forecasting of losses.

Previous versions of the algorithms for forecasting and calculating electricity losses, without the blocks for analyzing and replacing anomalous values, are given in the article [].
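The first approach can be illustrated with a deliberately simplified sketch. It does not reproduce the power-flow calculation on the test network; instead it swaps in the textbook approximation for active losses on a single line, ΔP = R(P² + Q²)/V². The function names, load values, and resistances are hypothetical. The point is only that the same load forecast can be reused when the network parameters change.

```python
def line_loss_mw(p_mw, q_mvar, r_ohm, v_kv):
    """Approximate active loss on one line: R * (P^2 + Q^2) / V^2.

    With P in MW, Q in Mvar, V in kV and R in ohm, the result is in MW.
    """
    return r_ohm * (p_mw ** 2 + q_mvar ** 2) / v_kv ** 2


def energy_loss_mwh(p_series, q_series, r_ohm, v_kv=20.0, step_h=0.5):
    """Sum the losses over a forecast horizon with a 30 min time step."""
    return sum(
        line_loss_mw(p, q, r_ohm, v_kv) * step_h
        for p, q in zip(p_series, q_series)
    )


# Hypothetical half-hourly load forecasts for one node (not data from the paper).
forecast_p_mw = [4.0, 5.0, 6.0]
forecast_q_mvar = [1.0, 1.5, 2.0]

# Same forecast, two assumed network states: the forecasting model is untouched,
# only the parameter used at the loss calculation stage changes.
loss_normal = energy_loss_mwh(forecast_p_mw, forecast_q_mvar, r_ohm=1.0)
loss_reconfigured = energy_loss_mwh(forecast_p_mw, forecast_q_mvar, r_ohm=1.6)

print(f"normal: {loss_normal:.4f} MWh, reconfigured: {loss_reconfigured:.4f} MWh")
```

In a full implementation the single-line formula would be replaced by a power-flow run on the whole network model, but the division of labor stays the same: forecast the loads first, then calculate losses for whichever topology is in effect.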

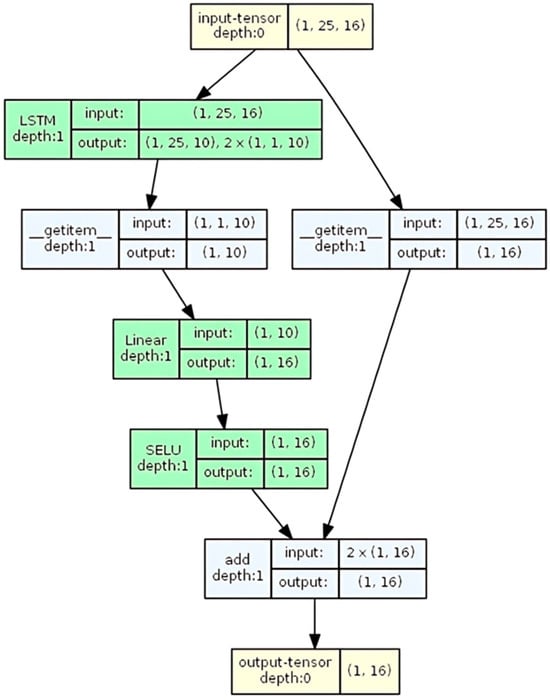

A recurrent neural network of the LSTM (Long Short-Term Memory) type, described in [], was used to forecast all load nodes simultaneously. It is a combined architecture based on a recurrent LSTM module and a multilayer perceptron with two hidden layers. The SELU (scaled exponential linear unit) function was used as the activation function, and a shortcut connection was used to mitigate the vanishing gradient problem (Figure 3).

Figure 3. Architecture of the LSTM-based network for simultaneous nodal load forecasting.


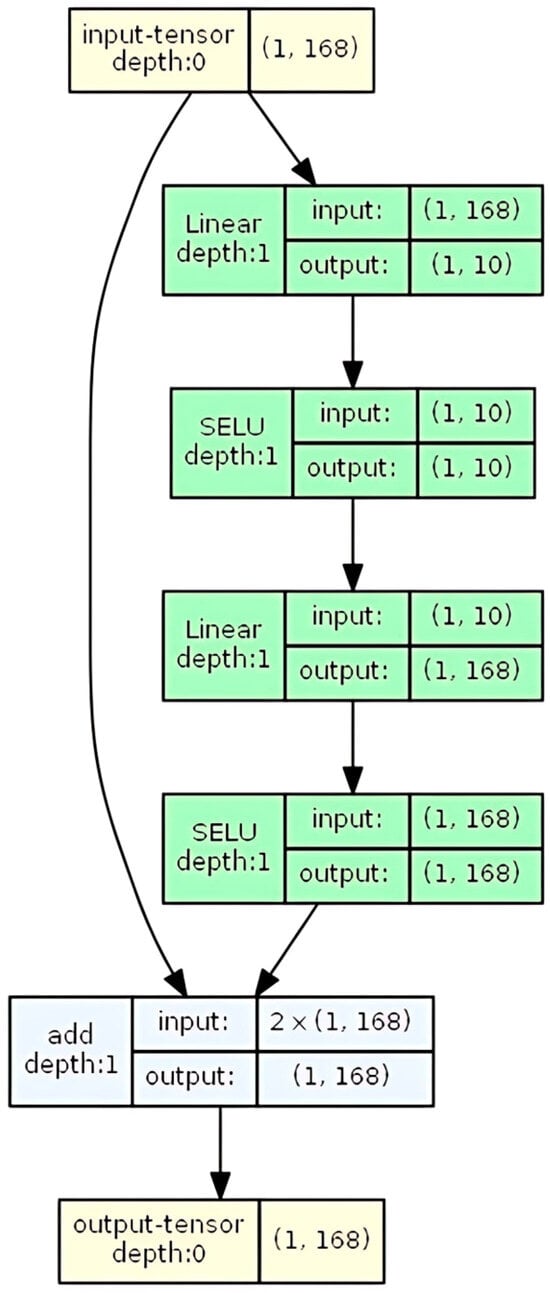

The neural network eResNet was used to forecast the time series of losses. This network was tested in the task of forecasting electrical load []. It includes 3 autoencoder blocks. Each block contains two dense layers with SELU activation and an identity shortcut. The output of the block is the element-wise sum of the output of the autoencoder and the shortcut (Figure 4).

Figure 4. The autoencoder block with a shortcut connection of eResNet.
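The forward pass of such a block can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not the eResNet implementation: the layer widths (8 inputs, a bottleneck of 4), the random weights, and all function names are hypothetical, and only the structure (two dense SELU layers plus an identity shortcut, stacked three times) follows the description above.

```python
import math
import random

# Standard SELU constants.
SELU_ALPHA = 1.6732632423543772
SELU_SCALE = 1.0507009873554805


def selu(x):
    """Scaled exponential linear unit."""
    return SELU_SCALE * (x if x > 0 else SELU_ALPHA * (math.exp(x) - 1.0))


def dense_selu(x, weights, bias):
    """Fully connected layer with SELU; `weights` holds one row per output unit."""
    return [
        selu(sum(w * xi for w, xi in zip(row, x)) + b)
        for row, b in zip(weights, bias)
    ]


def autoencoder_block(x, enc_w, enc_b, dec_w, dec_b):
    """Two dense SELU layers whose output is added element-wise to the input."""
    hidden = dense_selu(x, enc_w, enc_b)        # input width -> bottleneck (assumed)
    decoded = dense_selu(hidden, dec_w, dec_b)  # bottleneck -> input width
    return [d + xi for d, xi in zip(decoded, x)]  # identity shortcut


def random_matrix(rows, cols, rng):
    """Hypothetical weight initialisation, used only to make the sketch runnable."""
    return [[rng.gauss(0.0, 1.0 / math.sqrt(cols)) for _ in range(cols)]
            for _ in range(rows)]


rng = random.Random(0)
width, bottleneck = 8, 4  # assumed sizes, not taken from the paper
x = [rng.random() for _ in range(width)]

out = x
for _ in range(3):  # three stacked blocks, as in the description
    out = autoencoder_block(
        out,
        random_matrix(bottleneck, width, rng), [0.0] * bottleneck,
        random_matrix(width, bottleneck, rng), [0.0] * width,
    )
print(len(out))
```

Because the shortcut is an identity mapping, a block whose weights are all zero simply passes its input through, which is what makes deep stacks of such blocks easy to optimize.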

The stability of the proposed forecasting models was indirectly validated through the inclusion of perturbed data in the training and testing process. Specifically, the forecasting models were trained on datasets that included both verified and anomalous values, allowing them to learn from a variety of real-world scenarios, including load spikes, missing entries, and operational inconsistencies [,]. The use of the DBSCAN-based preprocessing framework further enhanced model robustness by cleansing the data of extreme outliers while preserving realistic fluctuations in load behavior. As shown in Table 1, the LSTM-based model maintained a low MAPE of 3.29% even after being tested on verified data derived from real network operations with inherent anomalies. Moreover, the architecture of the eResNet model, featuring residual connections and autoencoder blocks, enables stable learning and avoids gradient vanishing in deep networks, which is critical when processing abnormal or noisy inputs [,]. Importantly, the system’s ability to recalculate losses using forecasted nodal loads allows for the reflection of topological changes without requiring model retraining, which significantly contributes to operational stability under emergency conditions [].

Table 1. Errors in forecasting electrical energy losses.

In a recent study, an adapted version of eResNet was evaluated against several forecasting models, including a linear regression stack, a multilayer perceptron (MLP), ε-support vector regression (ε-SVR), and the seasonal autoregressive integrated moving average (SARIMA) model. The results showed that eResNet achieved significantly lower values of root mean square error (RMSE) and maximum absolute error compared to the other models. This confirms the strong potential of deep residual networks for short-term renewable energy forecasting tasks [].

Using a single network to forecast the load of all nodes of the power system simultaneously has several advantages. The complexity of the model in terms of the number of parameters increases more slowly with an increase in the number of nodes, which reduces the required number of computational resources. In addition, a unified model can learn cross-node dependencies implicitly, whereas isolated models treat each series as independent and therefore discard useful information embedded in the joint dynamics. However, the presence of significant correlation between nodes can lead to overfitting of the network, so special attention should be paid to the use of techniques to prevent overfitting. A single model implies one set of hyper-parameters, one retraining trigger, and one version to validate and deploy, lowering engineering overhead and the probability of configuration drift. In [], it is shown that the use of a network based on LSTM gives comparable accuracy indicators to the forecast of the load of each node by a separate model, but achieves a shorter training time, and this effect will be even more noticeable when predicting a larger number of nodes.
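The scaling argument can be made concrete with a rough parameter count. The sketch below rests on several assumptions that are not taken from the paper: a single LSTM layer followed by one linear output layer (the two-hidden-layer perceptron is omitted), the same hypothetical hidden size of 64 in both designs, and the common single-bias LSTM formulation with 4(h(h + d) + h) weights for input size d and hidden size h.

```python
def lstm_params(input_size, hidden_size):
    """Weights of one LSTM layer: four gates with input, recurrent and bias terms."""
    return 4 * (hidden_size * (input_size + hidden_size) + hidden_size)


def linear_params(in_features, out_features):
    """Weights and biases of a fully connected layer."""
    return in_features * out_features + out_features


def unified_model_params(n_nodes, hidden_size):
    """One network: all node loads in, all node forecasts out."""
    return lstm_params(n_nodes, hidden_size) + linear_params(hidden_size, n_nodes)


def per_node_models_params(n_nodes, hidden_size):
    """One univariate network per node."""
    single = lstm_params(1, hidden_size) + linear_params(hidden_size, 1)
    return n_nodes * single


for n_nodes in (16, 32, 64):
    print(n_nodes,
          unified_model_params(n_nodes, 64),
          per_node_models_params(n_nodes, 64))
```

Under these assumptions, each additional node adds 5h + 1 weights to the unified model, whereas the per-node design adds an entire extra network.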

Both forecasting methods were adapted to retrospective data from “Vinnytsiaoblenergo”, which provided a detailed dataset for load and energy loss calculations. Both networks were trained with the ADAM optimization algorithm, which is known for its efficiency on large datasets. ADAM adapts the step size of each parameter using running estimates of the first and second moments of the gradient, which typically speeds up convergence of the training error.
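For reference, the ADAM update rule can be written out for a single scalar parameter. This is a generic sketch of the published algorithm with its usual default decay rates; the learning rate, step count, and the quadratic test function are arbitrary choices for illustration and say nothing about the settings used to train the models in this study.

```python
import math


def adam_minimize(grad, x0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    """Minimise a scalar function given its gradient, using the ADAM update."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1.0 - beta1) * g        # running mean of the gradient
        v = beta2 * v + (1.0 - beta2) * g * g    # running mean of the squared gradient
        m_hat = m / (1.0 - beta1 ** t)           # bias correction for the zero start
        v_hat = v / (1.0 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)  # per-parameter scaled step
    return x


# Toy objective f(x) = (x - 3)^2 with gradient 2 * (x - 3).
x_min = adam_minimize(lambda x: 2.0 * (x - 3.0), x0=0.0)
print(round(x_min, 3))
```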

Training the LSTM-based neural network took 15 min on average (over 30 runs) on an Intel i3 10100 3.6 GHz processor with 16 GB of RAM. The inference time is a few milliseconds. Neither figure poses a problem, considering that the time series has a resolution of 30 min and the forecast horizon is 24 h. Training one eResNet network took 8 min on average (over 30 runs).

3. Results—Network Development and Retrospective Data Analysis

This research focused on developing a representative model of the electrical distribution network to enable accurate forecasting and loss estimation. A thorough analysis of retrospective load data was conducted to identify trends and anomalies that affect neural network efficiency. This data served as the foundation for training and validating forecasting models based on deep neural networks. The results demonstrated that integrating accurate modeling with historical data significantly improves the prediction of energy losses and overall network performance.

The calculation of losses and the forecasting of electrical energy were carried out using retrospective data provided by a Ukrainian Distribution System Operator (DSO). This dataset includes measurements from 16 load nodes, covering the period from 2017 to 2019, with a time resolution of 30 min. The total volume of processed data amounts to 48,048 individual values, ensuring a substantial foundation for model training and validation. Such detailed temporal and spatial data enable accurate modeling of load behavior and improve the reliability of loss prediction in electrical networks.

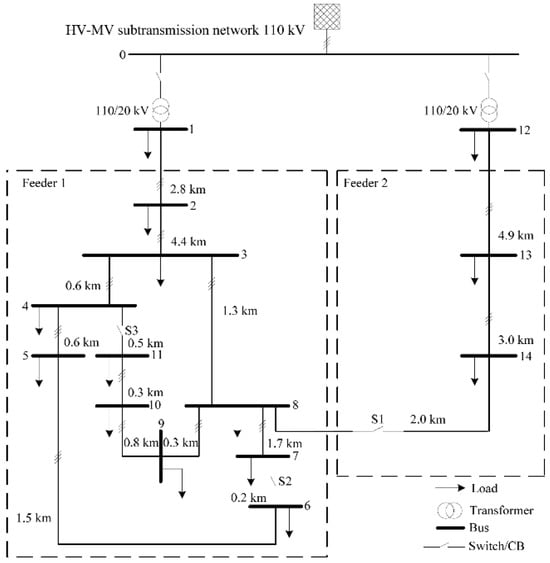

For modeling the electrical network used in loss calculation, the CIGRE [] benchmark medium-voltage level network was selected as the foundational structure for the test power system. This standardized model is widely recognized for its applicability in analyzing the integration of distributed energy resources and assessing network behavior under varying load conditions. It provides a well-documented topology and parameters that support accurate simulation and validation of forecasting algorithms. The schematic layout of the adopted test system is illustrated in Figure 5, highlighting key nodes and network components used in the analysis.

Figure 5. CIGRE network diagram.

The topology of the distribution network directly influences the pattern and level of electrical energy consumption at various nodes, as it determines the flow paths and voltage profiles across the system. Nodes located farther from the source or in branches with higher impedance tend to exhibit greater losses and fluctuating consumption behavior due to voltage drops and load concentration []. Analyzing energy consumption in relation to network topology allows for the identification of critical sections where optimization or reinforcement can lead to significant reductions in technical losses and improved energy efficiency.

The Python programming language (version 3.13.0) and the pandapower power system analysis library were used to construct and analyze the test power system. This library is a standalone set of tools for the development and analysis of electrical systems. It includes a wide range of electrical network models, among them numerous test systems and the CIGRE benchmark networks [].

The test power system consists of two 110/20 kV transformers rated at 40 MVA each, 15 nodes, 18 loads (two of which were removed to match the 16 load nodes of the retrospective data), 14 lines (12 cable lines and two overhead lines), and a switch. All elements used in this network are elements of the pandapower library.

This test network was developed to simulate the operating modes of the electrical network and determine electrical energy losses; this network is presented in the article []. Since this network is built based on a real European network, it differs significantly in terms of load composition and network parameters from the Ukrainian network. Therefore, to ensure the proper functioning of the loss calculation algorithm, the calculation scheme was adapted to use the available retrospective data.

Taking into account the differences in load magnitude between the CIGRE power system and DSO data, cable and overhead lines were replaced with lines of larger cross-sections for the proper functioning of the test power system. Additionally, the node load data from the DSO was distributed according to the load magnitudes of the nodes in the CIGRE power system.

Preliminary data analysis showed that the data contain missing values and anomalous values that deviate significantly from normal values. To identify and replace missing values and anomalous data [,], a two-stage data validation algorithm based on the DBSCAN [] clustering method was employed. The proposed validation algorithm for a single node is given in Algorithm 1.

| Algorithm 1. Two-stage data validation algorithm for a single node |
| --- |
| Input: Continuous load time series R^(n×1) |
| Output: Validated and corrected load time series |
| 1: Select time slices from the continuous load time series, R^(n×1) → R^((n/24)×24) |
| 2: for each time slice do |
| 3: Apply DBSCAN clustering method to detect rough anomalous values |
| 4: if value does not belong to the first cluster, then |
| 5: Mark value as abnormal |
| 6: end if |
| 7: Replace abnormal values using linear interpolation |
| 8: end for |
| 9: Merge corrected time slices back into the continuous load series, R^((n/24)×24) → R^(n×1) |
| 10: Decompose the time series into trend, seasonal, and residual components |
| 11: Apply DBSCAN to detect anomalous values in the residual component of the time series |
| 12: for each detected anomaly do |
| 13: Replace value using linear interpolation |
| 14: end for |

To estimate local data density, the algorithm relies on two hyper-parameters: ε, the radius of the neighborhood centered on a point p, and minPts, the minimum number of observations that must fall within that radius for p to be treated as a core point. In this study, the DBSCAN clustering routine was configured with minPts = 5 and ε = 0.5.
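The roles of ε and minPts, and the flag-then-interpolate step of the first stage, can be sketched as follows. This is a minimal scalar DBSCAN written for illustration, not the routine used in the study. It assumes that the values in a slice have been standardized so that ε = 0.5 is a meaningful radius, and it interprets the "first cluster" of Algorithm 1 as the most populated cluster. Both are assumptions, as are all names and the sample data.

```python
from collections import Counter


def dbscan_1d(values, eps=0.5, min_pts=5):
    """Minimal DBSCAN for scalar data; returns one label per value (-1 = noise)."""
    n = len(values)
    # A point's neighbourhood includes the point itself.
    neighbors = [[j for j in range(n) if abs(values[i] - values[j]) <= eps]
                 for i in range(n)]
    labels = [None] * n
    cluster = -1
    for i in range(n):
        if labels[i] is not None:
            continue
        if len(neighbors[i]) < min_pts:
            labels[i] = -1  # provisional noise; may later become a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = list(neighbors[i])
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # border point reached from a core point
            if labels[j] is not None:
                continue
            labels[j] = cluster
            if len(neighbors[j]) >= min_pts:  # j is a core point: keep expanding
                queue.extend(neighbors[j])
    return labels


def flag_outside_main_cluster(labels):
    """Flag every value that is not in the most populated cluster (assumed reading)."""
    main = Counter(lab for lab in labels if lab != -1).most_common(1)[0][0]
    return [lab != main for lab in labels]


def interpolate_flagged(values, flagged):
    """Replace flagged entries by linear interpolation between unflagged neighbours.

    Assumes at least one value is unflagged.
    """
    good = [i for i, f in enumerate(flagged) if not f]
    result = list(values)
    for i, is_flagged in enumerate(flagged):
        if not is_flagged:
            continue
        left = max((g for g in good if g < i), default=None)
        right = min((g for g in good if g > i), default=None)
        if left is None:
            result[i] = values[right]
        elif right is None:
            result[i] = values[left]
        else:
            weight = (i - left) / (right - left)
            result[i] = values[left] + weight * (values[right] - values[left])
    return result


# Hypothetical standardized slice with one gross anomaly at index 5.
slice_values = [0.1, 0.2, 0.0, -0.1, 0.3, 5.0, 0.15, -0.2, 0.05, 0.1]
labels = dbscan_1d(slice_values, eps=0.5, min_pts=5)
flagged = flag_outside_main_cluster(labels)
cleaned = interpolate_flagged(slice_values, flagged)
print(labels, cleaned[5])
```

The same three functions could be reused in the second stage by passing the residual component of the decomposed series instead of a raw time slice.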

In addition, a two-sided moving-average decomposition with variable window widths was applied to the load time series. This decomposition—implemented as an adaptive model with stable seasonality and a seasonal lag of seven periods—enhanced the detection of anomalous values while reducing false positives in nodes that exhibit strong daily and weekly cycles.
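A simplified version of such a decomposition is sketched below. It uses a fixed, centred moving-average window and an additive model with a seasonal period of seven, so it illustrates the idea rather than the adaptive, variable-window procedure described above. The function name and the synthetic series are hypothetical, and the series is assumed to span at least two full periods.

```python
def decompose_additive(series, period=7):
    """Split a series into trend, seasonal and residual parts (additive model)."""
    n = len(series)
    half = period // 2  # period is assumed to be odd

    # Two-sided (centred) moving average; undefined near the edges.
    trend = [None] * n
    for t in range(half, n - half):
        trend[t] = sum(series[t - half:t + half + 1]) / period

    # Stable seasonality: one mean detrended value per position in the cycle.
    sums = [0.0] * period
    counts = [0] * period
    for t in range(n):
        if trend[t] is not None:
            sums[t % period] += series[t] - trend[t]
            counts[t % period] += 1
    means = [s / c for s, c in zip(sums, counts)]
    offset = sum(means) / period  # centre the seasonal component on zero
    seasonal = [means[t % period] - offset for t in range(n)]

    residual = [
        None if trend[t] is None else series[t] - trend[t] - seasonal[t]
        for t in range(n)
    ]
    return trend, seasonal, residual


# Synthetic example: four identical cycles plus one injected spike at index 10.
pattern = [10.0, 12.0, 11.0, 13.0, 12.0, 8.0, 7.0]
series = pattern * 4
series[10] += 5.0

trend, seasonal, residual = decompose_additive(series, period=7)
defined = [t for t in range(len(series)) if residual[t] is not None]
spike_index = max(defined, key=lambda t: abs(residual[t]))
print(spike_index)
```

Removing the regular cycle first leaves the injected spike as the largest residual, which is why anomaly detection on the residual component produces fewer false positives than detection on the raw series.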

The retrospective nodal load data was divided into training and testing datasets for two categories: one containing anomalous values and another without anomalies (following validation and preprocessing). This categorization was implemented to evaluate the robustness and adaptability of the forecasting models under both normal and perturbed operating conditions. The training datasets included all nodal load values except for a subset of 336 values, which represented one full week (7 days) of data. These 336 values were extracted and designated as the testing set. This approach enabled a comprehensive assessment of the model’s forecasting accuracy and generalization capacity in realistic scenarios, including periods characterized by unexpected fluctuations or abnormal load behaviors.
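The size of the test set follows directly from the sampling interval: 7 days × 48 half-hour values = 336. A minimal sketch of such a hold-out split is given below; it assumes that the held-out week is the final week of each series, which the text does not state, and the function name and placeholder data are hypothetical.

```python
SAMPLES_PER_DAY = 48                   # 30 min resolution
TEST_SIZE = 7 * SAMPLES_PER_DAY        # one full week


def split_last_week(series, test_size=TEST_SIZE):
    """Hold out the final `test_size` values; everything before them is training data."""
    if len(series) <= test_size:
        raise ValueError("series is too short to hold out a full week")
    return series[:-test_size], series[-test_size:]


# Placeholder series with the same length as the processed data volume.
node_series = list(range(48_048))
train, test = split_last_week(node_series)
print(len(train), len(test))
```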

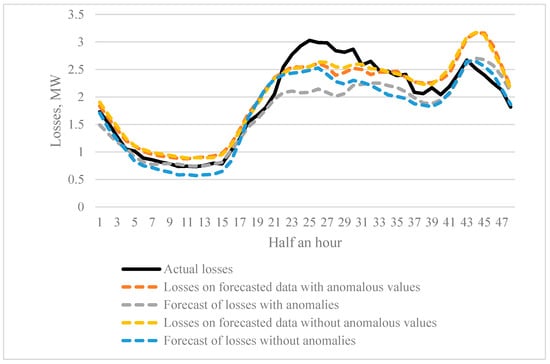

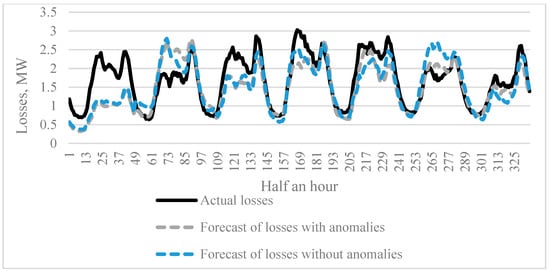

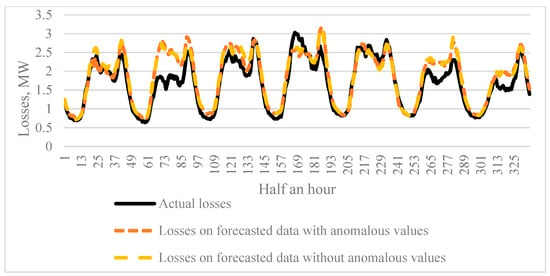

The results of forecasting electrical energy losses for both daily and weekly horizons are presented in Figure 6, Figure 7 and Figure 8. These visualizations illustrate the predictive performance of the neural network models under different temporal conditions. The comparative analysis of short-term (1 day) and medium-term (7 days) forecasts enables a deeper understanding of the model’s accuracy and adaptability across various load behavior patterns.

Figure 6. Half-hourly values of losses using different forecasting methods.

Figure 7. Half-hour losses for one week using loss forecasting methods.

Figure 8. Calculated half-hour losses over one week using forecast data.

To assess the accuracy of the presented approaches for forecasting electrical energy losses, the Mean Absolute Percentage Error (MAPE) metric was employed. This evaluation criterion is widely used due to its interpretability and effectiveness in measuring prediction accuracy in percentage terms. Table 1 provides a summary of the forecast errors for both daily and weekly loss prediction horizons. The results reflect the performance of two distinct forecasting approaches applied to retrospective nodal load data. Lower MAPE values indicate better model accuracy, helping to identify the more efficient forecasting method under varying network conditions.
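For clarity, MAPE is the mean of the absolute relative errors, expressed in percent: MAPE = (100/N) Σ |(yₜ − ŷₜ)/yₜ|. A minimal sketch is given below; the function name and the sample numbers are illustrative and are not results from this study.

```python
def mape(actual, forecast):
    """Mean Absolute Percentage Error in percent; actual values must be non-zero."""
    if len(actual) != len(forecast):
        raise ValueError("series must have the same length")
    errors = [abs((a - f) / a) for a, f in zip(actual, forecast)]
    return 100.0 * sum(errors) / len(errors)


# Illustrative values only: relative errors of 10%, 5% and 0% average to about 5%.
example_mape = mape([100.0, 200.0, 400.0], [110.0, 190.0, 400.0])
print(f"{example_mape:.2f}%")
```

Because each error is divided by the actual value, MAPE is undefined when an actual value is zero and inflates when actual values are close to zero, which is worth keeping in mind for lightly loaded periods.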

Having conducted forecasting and loss calculation using all approaches, it is evident that the method of load forecasting with subsequent loss calculation tends to yield a lower error in loss predictions compared to other methods. This approach has proven to be more accurate in estimating energy losses in the distribution network. Additionally, the application of advanced data analysis techniques, such as the detection and replacement of anomalous values, significantly enhances the accuracy of the forecasting results. This highlights the importance of data quality in improving the reliability and precision of the predictions.

The research findings are an essential part of the broader effort to develop a Smart Grid model, which aims to optimize the operational management of distribution networks. By incorporating artificial intelligence methods into the management processes, the results of this study contribute to the refinement of Smart Grid technologies, enabling more efficient and reliable energy distribution. These advancements are particularly relevant for enhancing grid management in modern energy systems.

4. Discussion

The results of this research reveal key insights into the modeling and forecasting of electrical energy losses within a distribution network. By using deep learning techniques, this study successfully identified how load forecasting, followed by loss calculation, could reduce forecasting errors [,,,]. Specifically, the use of a detailed historical dataset provided by a Ukrainian DSO, combined with advanced data validation methods, significantly improved the accuracy of energy loss predictions. The comparison of forecasting approaches, particularly those involving data with and without anomalies, showed that preprocessing and data quality directly impact the forecasting outcomes [,,]. This highlights the critical role of effective data handling, such as the identification and replacement of anomalous values, in enhancing the reliability of predictive models.

Furthermore, the integration of the CIGRE benchmark network model allowed for a standardized and robust platform to simulate loss calculation and evaluate different forecasting methods [,,]. The results of the daily and weekly forecasting demonstrated that including node-specific data and applying different modeling techniques could account for fluctuations and anomalies inherent in real-world scenarios. This is important for distribution network operators as it provides more accurate projections, which are essential for improving operational efficiency. In addition, the study’s findings contribute to the growing body of research supporting the development of Smart Grid systems, where AI-driven management strategies can be used to optimize network performance and reduce energy losses [,,]. These advancements will play a significant role in enhancing the overall stability and efficiency of modern electrical grids.

Recent advances in deep reinforcement learning (DRL) for energy systems have demonstrated promising results in enhancing the real-time efficiency of complex infrastructure. For instance, recent research has developed a real-time energy management strategy for a smart traction power supply system using Deep Q-Learning, achieving improved adaptability under dynamic operational conditions []. Similarly, another study proposed a multi-timescale reward-based DRL approach for managing energy in regenerative braking energy storage systems, highlighting the potential of reinforcement learning in multi-agent and time-sensitive scenarios []. While these studies demonstrate the applicability of DRL in transportation-related energy systems, their focus remains domain-specific and does not address broader challenges within electric distribution networks, particularly those involving the simultaneous tasks of load forecasting and real-time energy loss management. In contrast, our approach is situated within the distribution-level Smart Grid domain, integrating deep learning not only for predictive load modeling but also for the real-time identification of energy loss patterns. This positions our work as a complementary yet distinct advancement within the broader landscape of intelligent energy management solutions.

The design of the proposed neural network architecture incorporates several techniques to mitigate overfitting and support robust generalization to unseen data. First, both the LSTM-based and eResNet models use shortcut (residual) connections, which not only facilitate gradient flow but also act as implicit regularizers that keep the network from fitting noise in the training data []. Second, a unified model is used for simultaneous forecasting across multiple nodes, which inherently reduces the number of parameters compared to training separate models per node. This architectural decision lowers the risk of overfitting by decreasing model complexity while preserving the ability to capture cross-node dependencies []. Furthermore, DBSCAN-based preprocessing removes anomalous or extreme outliers, helping the network learn meaningful patterns rather than noise [,]. Additionally, model performance was validated on a hold-out test set representing one week of unseen data, which indicates its capacity to generalize beyond the training inputs. Training used the Adam optimizer with learning rate schedules chosen to avoid excessive parameter updates that could lead to memorization. Together, these design principles and validation strategies indicate that the proposed models are resistant to overfitting and capable of stable performance in real-world operational settings.
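To make the outlier-removal step more concrete, the following is a minimal pure-Python sketch of density-based anomaly flagging followed by imputation. It is not the two-stage algorithm used in this study, whose details are not reproduced here. It only applies the DBSCAN noise definition (a point is noise if it is neither a core point nor within `eps` of a core point) to one-dimensional load values and fills flagged samples by linear interpolation. The function names, the use of raw load values as the only feature, and the values of `eps` and `min_samples` are all assumptions made for illustration.

```python
def dbscan_noise_mask(values, eps, min_samples):
    """Return True for points that DBSCAN would label as noise (1-D data)."""
    n = len(values)
    # eps-neighbourhood of each point; a point counts as its own neighbour.
    neighbours = [[j for j in range(n) if abs(values[i] - values[j]) <= eps]
                  for i in range(n)]
    core = [len(nb) >= min_samples for nb in neighbours]
    # Noise: not a core point and not within eps of any core point.
    return [not core[i] and not any(core[j] for j in neighbours[i])
            for i in range(n)]


def replace_anomalies(values, eps, min_samples):
    """Replace flagged points by linear interpolation between valid samples."""
    noise = dbscan_noise_mask(values, eps, min_samples)
    good = [i for i, bad in enumerate(noise) if not bad]
    if not good:
        raise ValueError("every point was flagged as noise")
    cleaned = list(values)
    for i, bad in enumerate(noise):
        if not bad:
            continue
        left = max((g for g in good if g < i), default=None)
        right = min((g for g in good if g > i), default=None)
        if left is None:        # anomaly at the start: copy next valid value
            cleaned[i] = values[right]
        elif right is None:     # anomaly at the end: copy last valid value
            cleaned[i] = values[left]
        else:
            w = (i - left) / (right - left)
            cleaned[i] = values[left] + w * (values[right] - values[left])
    return cleaned


# Hypothetical half-hourly nodal load (MW) with a spike and a dropout.
load_mw = [10.0, 10.5, 11.0, 55.0, 11.5, 12.0, 0.0, 12.5]
print(replace_anomalies(load_mw, eps=1.0, min_samples=3))
```

The neighbour search here is quadratic in the number of samples, which is acceptable for a sketch but not for multi-year data at 30 min resolution. In practice, a library implementation and features that reflect the daily load cycle would likely be preferable.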

Classical forecasting techniques, including ARIMA and Holt–Winters exponential smoothing, have traditionally been employed for load and loss prediction due to their simplicity and interpretability []. However, these methods rely on linear assumptions and have limited capacity to model the complex, non-stationary behavior of modern distribution networks, especially under abrupt load fluctuations, topological reconfigurations, or incomplete datasets []. In contrast, the deep learning approaches proposed in this study, specifically LSTM-based networks and the eResNet architecture, demonstrate a superior ability to learn the temporal dependencies and nonlinear relationships inherent in power system data [,]. These models not only outperform classical approaches in predictive accuracy (achieving a MAPE as low as 3.29%), but also exhibit enhanced robustness to anomalous or missing values when combined with DBSCAN-based preprocessing [,]. Additionally, while classical methods often require model retraining when network conditions change, the proposed neural networks can accommodate such changes at the loss calculation stage without retraining the forecasting model []. Furthermore, the unified neural network architecture supports simultaneous forecasting across multiple nodes, improving scalability and the learning of cross-node dependencies, capabilities that are not feasible with conventional univariate models [,]. Therefore, from both theoretical and practical standpoints, the proposed AI-based methods provide a more flexible, adaptive, and accurate framework for energy loss forecasting in Smart Grid environments.
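Since the comparison above is expressed in terms of MAPE, a short sketch of how this metric is commonly computed may be useful. The function name and the sample values below are hypothetical and are not taken from the study's data; the definition assumes that no actual value is zero.

```python
def mape(actual, forecast):
    """Mean Absolute Percentage Error, in percent.

    Assumes equal-length, non-empty inputs and non-zero actual values.
    """
    if len(actual) != len(forecast) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    total = sum(abs((a - f) / a) for a, f in zip(actual, forecast))
    return 100.0 * total / len(actual)


# Hypothetical actual and forecast nodal loads (MW) for three time steps.
actual_mw = [100.0, 200.0, 400.0]
forecast_mw = [110.0, 190.0, 400.0]
print(round(mape(actual_mw, forecast_mw), 2))  # 5.0
```

Because each error is divided by the actual value, MAPE weights errors at low-load intervals more heavily, which is worth keeping in mind when comparing nodal load forecasts with forecasts of aggregated losses.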

By adopting these methods, grid operators can better anticipate load behavior and optimize resource allocation, leading to more sustainable energy consumption practices. Future research should further refine these models, exploring additional network configurations and forecast horizons to increase their generalizability. Additionally, integrating real-time data and accounting for additional environmental factors may further enhance the predictive accuracy and operational effectiveness of the system.

Although this approach significantly reduces the loss forecast error, its robustness under dynamic conditions still needs to be assessed. One of its advantages is that a change in network topology does not require retraining the load forecasting models, except in particularly extreme cases in which an emergency-driven topology change limits the load at the nodes.

The proposed approach also has a significant drawback that limits its practical application: it requires long-term load data for each node, and such data may be retained for a shorter period than data on total losses.
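The reason a topology change does not require retraining can be illustrated with a simplified sketch in which the topology enters only the loss calculation, not the load forecast. The code below is not the loss model used with the CIGRE benchmark network in this study. It assumes a radial feeder, a flat voltage profile, and branch flows equal to the sum of downstream loads, and it estimates the active losses of each branch as R(P² + Q²)/V². The feeder layout, resistances, loads, and voltage level are hypothetical.

```python
def feeder_losses_mw(parent, r_ohm, p_mw, q_mvar, v_kv):
    """Approximate active losses (MW) of a radial feeder for one time step.

    parent[n]      upstream node of n (the source node has no entry)
    r_ohm[n]       resistance of the branch parent[n] -> n, in ohms
    p_mw, q_mvar   forecast nodal loads in MW and Mvar
    v_kv           assumed flat line-to-line voltage in kV
    """
    flow_p = dict.fromkeys(parent, 0.0)
    flow_q = dict.fromkeys(parent, 0.0)
    for node in parent:
        p, q = p_mw.get(node, 0.0), q_mvar.get(node, 0.0)
        # Add this node's load to every branch on its path to the source.
        n = node
        while n in parent:
            flow_p[n] += p
            flow_q[n] += q
            n = parent[n]
    return sum(r_ohm[n] * (flow_p[n] ** 2 + flow_q[n] ** 2) / v_kv ** 2
               for n in parent)


# Hypothetical forecast loads; these stay the same in both topologies.
p = {1: 1.0, 2: 2.0, 3: 1.0}
q = {1: 0.0, 2: 0.0, 3: 0.0}
r = {1: 1.0, 2: 2.0, 3: 2.0}

base = {1: 0, 2: 1, 3: 1}     # node 3 fed from node 1
reconf = {1: 0, 2: 1, 3: 2}   # node 3 re-fed from node 2

print(round(feeder_losses_mw(base, r, p, q, v_kv=10.0), 2))    # 0.26
print(round(feeder_losses_mw(reconf, r, p, q, v_kv=10.0), 2))  # 0.36
```

Only the `parent` mapping changes between the two calls, while the forecast loads are reused unchanged. A full power flow calculation would also account for voltage drops and for losses in the branch flows.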

5. Conclusions

This research presents an effective method for short-term forecasting of aggregated electrical energy loss values in the power grid, utilizing deep learning artificial neural networks. This method significantly improves accuracy in predicting energy losses compared to traditional techniques that rely on direct loss calculations. By leveraging retrospective data, this approach eliminates the need for physical loss measurements, offering a more efficient and scalable solution for forecasting energy losses in distribution networks. The integration of advanced deep learning algorithms enhances the precision of these forecasts, ensuring more reliable predictions for grid management.

To forecast losses, this research also proposes methods for short-term forecasting of node loads based on deep learning artificial neural networks. By forecasting node loads, the model improves the accuracy of loss predictions by incorporating forecasted load values during the calculation process. This dual approach, forecasting both node loads and energy losses, proves highly effective in improving the reliability and accuracy of loss estimations. The incorporation of forecasted load data further refines the overall model, enabling more precise calculations of electrical energy losses in the power grid.

This study also emphasizes the importance of data verification and anomaly detection methods in improving the accuracy of forecasting models. By identifying and replacing anomalous values within the retrospective load data, this research demonstrates how additional data preprocessing can significantly reduce forecasting errors. The results show a marked improvement in loss predictions when using verified data, as opposed to forecasting based on raw data that includes anomalous values. This highlights the importance of maintaining high-quality, clean datasets for accurate predictive modeling in power grid management.

The forecast error analysis shows that, when artificial neural networks are used, the Mean Absolute Percentage Error (MAPE) for verified node load data used in loss calculations is as low as 3.29%. In contrast, forecasts built on loss data yield significantly higher errors, with a MAPE of 20.24% for verified loss data and 20.08% for unverified loss data. These findings underscore the benefit of calculating losses from forecasts of verified nodal loads rather than forecasting loss data directly. Overall, the proposed forecasting techniques offer a promising solution for optimizing power grid operations and improving energy loss management.

Author Contributions

Conceptualization, I.B., V.R., P.S., V.S. and V.M.; Methodology, I.B., V.R., P.S., V.S. and V.M.; Software, I.B., V.R., P.S., V.S. and V.M.; Formal analysis, I.B., V.R., P.S., V.S. and V.M.; Investigation, I.B., V.R., P.S., V.S. and V.M.; Resources, I.B., V.R., P.S., V.S. and V.M.; Data curation, A.D., K.S. and R.D.; Writing—original draft, A.D., K.S. and R.D.; Writing—review and editing, A.D., K.S. and R.D.; Visualization, A.D., K.S. and R.D.; Supervision, A.D., K.S. and R.D.; Project administration, A.D., K.S. and R.D.; Funding acquisition, A.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding authors.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Blinov, I.; Miroshnyk, V.; Shymaniuk, P. The cost of error of “day ahead” forecast of technological losses of electrical energy. Tekhnichna Elektrodynamika 2020, 5, 70. (In Ukrainian)

- Verkhovna Rada of Ukraine. On the Electricity Market: Law of Ukraine No. 2019-VIII. 13 April 2017. Available online: https://zakon.rada.gov.ua/laws/show/2019-19#Text (accessed on 11 April 2025).

- National Energy Utilities Regulatory Commission of Ukraine. Annual Report 2023; NEURC: Kyiv, Ukraine, 2023. Available online: https://www.nerc.gov.ua/ (accessed on 9 April 2025).

- International Energy Agency. Electricity Market Report 2023; IEA: Paris, France, 2023; Available online: https://www.iea.org/reports/electricity-market-report-2023 (accessed on 9 April 2025).

- European Commission. Benchmarking Smart Metering Deployment in the EU. Directorate-General for Energy. 2022. Available online: https://energy.ec.europa.eu (accessed on 10 April 2025).

- Market Operator of Ukraine. Rules of the Day-Ahead and Intraday Markets. 2021. Available online: https://www.oree.com.ua/?lang=english (accessed on 11 April 2025).

- Olabi, A.G.; Abdelkareem, M.A.; Wilberforce, T.; Elsaid, K. Smart energy grids and forecasting systems using AI: A review. Renew. Sustain. Energy Rev. 2021, 149, 111284.

- Hong, T. Crystal Ball. Lessons in Predictive Analytics. EnergyBiz. 2015, 12, 35–37.

- Liu, Z.; Singh, C. Impact of load forecasting uncertainty on reliability of power systems. IEEE Trans. Power Syst. 2018, 33, 243–252.

- Woźniak, G.; Bryś, W.; Dychkovskyi, R.; Dyczko, A.; Nowak, T.; Piekarska-Stachowiak, A.; Trząski, L.; Molenda, T.; Hutniczak, A. Modelling ecosystem services—A tool for assessing novel ecosystems functioning in the urban-industrial landscape. J. Water Land Dev. 2024, 63, 168–175.

- Beshta, O.; Albu, A.; Balakhontsev, A.; Fedoreyko, V. Universal model of the galvanic battery as a tool for calculations of electric vehicles. In Power Engineering, Control and Information Technologies in Geotechnical Systems; CRC Press: Boca Raton, FL, USA, 2015; pp. 7–11.

- Polyanska, A.; Pazynich, Y.; Petinova, O.; Nesterova, O.; Mykytiuk, N.; Bodnar, G. Formation of a Culture of Frugal Energy Consumption in the Context of Social Security. J. Int. Comm. Hist. Technol. 2024, 29, 60–87.

- Glisic, S.G.; Lorenzo, B. Artificial Neural Networks. In Artificial Intelligence and Quantum Computing for Advanced Wireless Networks; Wiley: Hoboken, NJ, USA, 2022; pp. 55–96.

- Seo, J.; Cha, H. A New Reactive Power Compensation method for Voltage regulation of Low Voltage Distribution Line. In Proceedings of the 2021 IEEE Applied Power Electronics Conference and Exposition (APEC), Phoenix, AZ, USA, 14–17 June 2021; pp. 2681–2685.

- European Network of Transmission System Operators for Electricity (ENTSO-E). ENTSO-E RDI Roadmap 2024–2034: Innovation Missions to Build the Power System for a Carbon-Neutral Europe; ENTSO-E: Brussels, Belgium, 2024; p. 38. Available online: https://www.entsoe.eu (accessed on 11 April 2025).

- Pazynich, Y.; Kolb, A.; Korcyl, A.; Buketov, V.; Petinova, O. Mathematical model and characteristics of dynamic modes for managing the asynchronous motors at voltage asymmetry. Polityka Energetyczna Energy Policy J. 2024, 27, 39–58.

- Díaz-Bello, D.; Vargas-Salgado, C.; Alcázar-Ortega, M.; Águila-León, J. Optimizing Photovoltaic Power Plant Forecasting with Dynamic Neural Network Structure Refinement. Sci. Rep. 2024, 15, 3337.

- Vladyko, O.; Maltsev, D.; Gliwiński, Ł.; Dychkovskyi, R.; Stecuła, K.; Dyczko, A. Enhancing Mining Enterprise Energy Resource Extraction Efficiency Through Technology Synthesis and Performance Indicator Development. Energies 2025, 18, 1641.

- Richert, M.; Dudek, M.; Sala, D. Surface Quality as a Factor Affecting the Functionality of Products Manufactured with Metal and 3D Printing Technologies. Materials 2024, 17, 5371.

- Mohsen, S.; Bajaj, M.; Kotb, H.; Pushkarna, M.; Alphonse, S.; Ghoneim, S.S.M.; Sun, Q. Efficient Artificial Neural Network for Smart Grid Stability Prediction. Int. Trans. Electr. Energy Syst. 2023, 2023, 9974409.

- Beshta, O.; Cichoń, D.; Beshta, O., Jr.; Khalaimov, T.; Cabana, E.C. Analysis of the Use of Rational Electric Vehicle Battery Design as an Example of the Introduction of the Fit for 55 Package in the Real Estate Market. Energies 2023, 16, 7927.

- Mohammad, F.; Kim, Y.-C. Energy load forecasting model based on deep neural networks for smart grids. Int. J. Syst. Assur. Eng. Manag. 2019, 11, 824–834.

- Muralitharan, K.; Sakthivel, R.; Vishnuvarthan, R. Neural network based optimization approach for energy demand prediction in smart grid. Neurocomputing 2018, 273, 199–208.

- Souhe, F.G.Y.; Mbey, C.F.; Boum, A.T.; Ele, P. Forecasting of electrical energy consumption of households in a smart grid. Int. J. Energy Econ. Policy 2021, 11, 221–233.

- Ford, V.; Siraj, A.; Eberle, W. Smart grid energy fraud detection using artificial neural networks. In Proceedings of the 2014 IEEE Symposium on Computational Intelligence Applications in Smart Grid (CIASG), Orlando, FL, USA, 9–12 December 2014; pp. 1–6.

- Hou, G.; Xu, K.; Yin, S.; Wang, Y.; Han, Y.; Wang, Z.; Mao, Y.; Lei, Z. A novel algorithm for multi-node load forecasting based on big data of distribution network. In Proceedings of the 2016 International Conference on Advanced Electronic Science and Technology (AEST 2016), Shenzhen, China, 19–21 August 2016; pp. 655–667.

- Hatziargyriou, N.; Wang, X.; Tsoukalas, L.H. A new methodology for nodal load forecasting in deregulated power systems. IEEE Power Eng. Rev. 2002, 22, 48–51.

- Gooi, H.B.; Han, X.S.; Han, L.; Pan, Z.Y. Ultra-short-term multi-node load forecasting—A composite approach. IET Gener. Transm. Distrib. 2012, 6, 436–444.

- Bezerra, U.H.; Falcao, D.M. Short-term forecasting of nodal active and reactive load in electric power systems. In Proceedings of the Second International Conference on Power System Monitoring and Control, Durham, UK, 8–11 July 1986; Volume 266, pp. 18–22.

- Wang, Y.; Ji, L.; Li, B.; Wang, L.; Bai, Y.; Chen, H.; Ding, Y. Investigation on the thermal energy storage characteristics in a spouted bed based on different nozzle numbers. Energy Rep. 2020, 6, 774–782.

- Planakis, N.; Papalambrou, G.; Kyrtatos, N. Ship energy management system development and experimental evaluation utilizing marine loading cycles based on machine learning techniques. Energy 2022, 306, 118085.

- Maresch, K.; Marchesan, G.; Cardoso, G.; Borges, A. An underfrequency load shedding scheme for high dependability and security tolerant to induction motors dynamics. Electr. Power Syst. Res. 2021, 196, 107217.

- Sievers, J.; Blank, T. Secure short-term load forecasting for smart grids with transformer-based federated learning. In Proceedings of the 2023 International Conference on Clean Electrical Power (ICCEP), Terrasini, Italy, 27–29 June 2023; pp. 229–236.

- Chernenko, P.; Miroshnyk, V.; Shymaniuk, P. Univariable short-term forecast of nodal electrical loads of energy systems. Tekhnichna Elektrodynamika 2020, 2, 67–73.

- Kolb, A.; Pazynich, Y.; Mirek, A.; Petinova, O. Influence of voltage reserve on the parameters of parallel power active compensators in mining. E3S Web Conf. 2020, 201, 01024.

- Seheda, M.S.; Beshta, O.S.; Gogolyuk, P.F.; Blyznak, Y.V.; Dychkovskyi, R.D.; Smoliński, A. Mathematical model for the management of the wave processes in three-winding transformers with consideration of the main magnetic flux in mining industry. J. Sustain. Min. 2024, 23, 20–39.

- Bashynska, I.; Niekrasova, L.; Ivliev, D.; Dudek, M.; Kosenkov, V.; Yakimets, A. Justification for Transitioning Equipment to Direct Current for Smart Small Enterprises with Sustainable Solar Power Autonomy. In Proceedings of the 2024 IEEE 5th KhPI Week on Advanced Technology (KhPIWeek), Kharkiv, Ukraine, 7–11 October 2024; pp. 1–6.

- Kulkarni, V.A.; Katti, P.K. Estimation of Distribution Transformer Losses in Feeder Circuit. Int. J. Comput. Electr. Eng. 2014, 3, 659–662.

- ELECTRA. Benchmark Systems for Network Integration of Renewable and Distributed Energy Resources. 2014. Available online: https://e-cigre.org/publication/ELT_273_8-benchmark-systems-for-network-integration-of-renewable-and-distributed-energy-resources (accessed on 11 April 2025).

- Beshta, O.S.; Beshta, O.O.; Khudolii, S.S.; Khalaimov, T.O.; Fedoreiko, V.S. Electric vehicle energy consumption taking into account the route topology. Nauk. Visnyk Natsionalnoho Hirnychoho Universytetu 2024, 2, 104–112.

- Styczynski, Z.A.; Rudion, K.; Orths, O.; Strunz, K. Design of benchmark of medium voltage distribution network for investigation of DG integration. In Proceedings of the IEEE Power Engineering Society General Meeting, Montreal, QC, Canada, 18–22 June 2006.

- Lepolesa, L.J.; Achari, S.; Cheng, L. Electricity theft detection in smart grids based on deep neural network. IEEE Access 2022, 10, 39638–39655.

- Shymaniuk, P.; Miroshnyk, V.; Blinov, I. Estimation of electrical losses in the distribution network based on nodal electrical load forecasts. In Proceedings of the 2022 IEEE 3rd KhPI Week on Advanced Technology (KhPIWeek), Kharkiv, Ukraine, 3–7 October 2022; pp. 1–4.

- Ester, M.; Kriegel, H.P.; Sander, J.; Xu, X. A density-based algorithm for discovering clusters in large spatial databases with noise. In Proceedings of the Second International Conference on Knowledge Discovery and Data Mining (KDD-96), Portland, OR, USA, 2–4 August 1996; pp. 226–231.

- Ying, Y.; Tian, Z.; Wu, M.; Liu, Q.; Tricoli, P. A Real-Time Energy Management Strategy of Flexible Smart Traction Power Supply System Based on Deep Q-Learning. IEEE Trans. Intell. Transp. Syst. 2024, 25, 8938–8948.

- Chen, J.; Zhao, Y.; Wang, M.; Yang, K.; Ge, Y.; Wang, K.; Lin, H.; Pan, P.; Hu, H.; He, Z.; et al. Multi-Timescale Reward-Based DRL Energy Management for Regenerative Braking Energy Storage System. IEEE Trans. Transp. Electrif. 2025, 11, 7488–7500.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).